Abstract

Localization has become an important aspect in a wide range of mobile services with the integration of the Internet of things and service on demand. Numerous mechanisms have been proposed for localization, most of which are based on the estimation of distances. Depending on the channel modeling, each mechanism has its advantages and limitations on deployment, exhibiting different performances in terms of error rates and implementation. With the development of technology, these limitations are rapidly overcome with hybrid systems and enhancement schemes. The successful approach depends on the achievement of a low error rate and its controllability by the integration of deployed products. In this study, we propose and analyze a new distance estimation technique employing photography and image sensor communications, also named optical camera communications (OCC). It represents one of the most important steps in the implemented trilateration localization scheme with real architectures and conditions of deployment which is the second our contribution for this article. With the advantages of the image sensor hardware integration in smart mobile devices, this technology has great potential in localization-based optical wireless communication

1. Introduction

In the last few decades, localization techniques that obtain the user’s location in a particular environment have been widely considered as a core technology for on-demand applications and services. This technology mainly supports industrial business solutions and includes robotic, sensor network, or human life actions such as navigation and tracking [1,2]. One of the well-known localization technologies is the Global Positioning System (GPS), which cross-references received signals from several satellites [3]. However, GPS faces many difficulties with lines-of-sight signal transmissions due to the building block propagation or signal clock. For indoor environments, this technique can be employed by re-using its simple existing infrastructure. These approaches use an additional outdoor antenna to amplify and communicate the satellite signal to an indoor antenna [4]. Various algorithms are applied for enhanced location calculation. However, these algorithms are computationally expensive and perform with low accuracy. In practice, GPS is not the optimal technique for indoor localization [5]. The development of on-demand localization services, applied both indoors and outdoors, has been an important task in wireless communication. Most localization techniques perform in two phases: distance detection and localization calculation with reference coordinators. The main factor affecting the cost and error performance of a localization technique is the distance estimation phase. When choosing calculation methods, the classification is mainly based on the signal measurement such as angle of arrival (AOA), time of arrival (ToA), received signal strength indication (RSSI), time of flight (TOF), or time difference of arrival (TDoA) [2,4,6,7,8,9,10]. Another is the signaling model base, where the classification is based on diverse designed technologies, such as RADAR, Wi-Fi, Bluetooth, UWB, visible light communications (VLC), or cellular for both indoor and outdoor scenarios.

As a new challenge in wireless communication, VLC demonstrated great achievement in its performance and in business development [11]. VLC can function at low rates, the high rate for both indoor and outdoor topologies. The main limitation of VLC is the hardware extension of the photodiode, which is a challenge for mobile integration systems, such as the smartphone. A new generation device, optical camera communication uses an image sensor from the camera for receiving, and it has an advanced hardware structure and configuration. In this study, a new approach for distance estimation and localization is proposed using OCC and photogrammetry. The proposed architecture takes advantage of the hardware on most commercial mobile devices to decode the reference coordinate. Subsequently, the position is estimated using photogrammetry on the captured image of the reference node. The article includes five sections for a detailed description of the architecture, calculations, processing steps, the state of the art with the overview of OCC, and a survey of distance estimation and localization mechanisms. Section 3 presents the architecture of the OCC system for proposed distance and localization estimation. This includes transmitter design with the communication protocol, receiver operation, and calculation process. Section 4 presents the evaluation of the performance along with the system analysis and experimental implementation. The conclusion and discussion are presented in Section 5.

2. State of the Art

Location information is the key component of many navigation and position services in indoor and outdoor topologies. The technical solutions for localization are well known, with different directions of approaches including active sensing (laser, IR, camera, ultrasonic, etc.) and passive sensing methods such as Wi-Fi, Bluetooth, UWB, and GPS. Table 1 summarizes the localization performance by different channel modeling. The location can be calculated using at least three distances from three reference points applying the triangle theorem. Therefore, the localization technique accuracies will depend on the accurate measurement of the distance from the device to the reference nodes. Many techniques have been proposed to address this issue, including angle of arrival, time of arrival, TDoA, RSSI, TOF, and structured light. The approach on the distance measurement based on OCC and photogrammetry is one of the promising technologies following the development of LED, image sensing, and OCC.

Table 1.

Summary of localization performances.

2.1. Optical Camera Communication

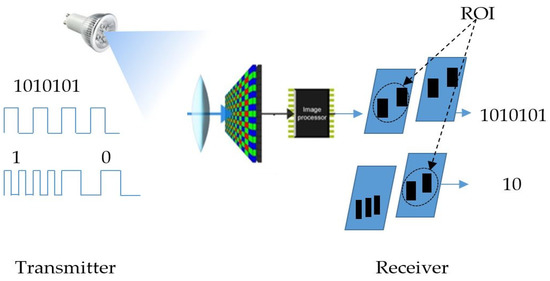

OCC is a new technology employed for communication following the development of VLC, which takes advantage of its optical channel, bandwidth, and security. In addition to the traditional functions, which are related to multimedia data, the communication purpose creates new challenges for the camera. As a great achievement of semiconductor and nanotechnology, the development of the image sensor in embedded systems is on the rise, and applications using the camera will be a major business trend in the near future. The camera-based communication technique has a long history, and is mainly applied using screen display topology, in particular, in vehicle communication systems in intelligent transportation. Following the development of smartphones, VLC using image sensors is a new form of optical wireless communication. It has been considered for standardization in IEEE 802.15.7m [12] with the amendment of photodiode based VLC. The current technology of the camera has some limitations regarding the frame rate, which are reflected on the data rate. However, with the development of semiconductors for new image sensor architecture and MIMO (multiple-input and multiple-output) topology, this issue will not pose a large problem for OCC in the near future. There are already some cameras currently in operation that can operate at hundreds frame per second. However, they are applied only for certain specific applications. The detailed architecture of OCC for both deployment modes is presented in Figure 1. It has the same operational concept as VLC, as the transmitter of the OCC is an LED, LED array, or a screen display device. The receiver is the camera application from the smartphone, webcam, or digital camera.

Figure 1.

OCC architecture.

The decoding operation comprises image processing functions of the captured color pixel information. Depending on the image sensor architecture, this could be in the form of spatial or temporal data from the captured image, depending on whether the global or rolling shutter image sensor is used. With the global shutter image sensor, the entire image is captured at once. This architecture has the advantage of spatial modulation with the MIMO scenario. In contrast, the rolling shutter image sensor mode includes multiple captured states at different times in a single captured image. This architecture has the advantage of an improved data rate compared with the global shutter mode. The OCC receiving signal in this architecture is color pixel rolls, which arise from the ON–OFF light signal of the optical transmitter. By applying image processing algorithms for color classification, the receiver can decode the embedded data following the color or pixel pattern. This can be in the form of color cell status in the global shutter image sensor or frequency separation from image rolls in the rolling shutter image sensor.

One of the well-known international specifications for VLC on PHY and MAC layer from the modulation technique and error correction to medium access control function is IEEE 802.15.7. This extends to IEEE 802.15.7 m with the issues on image sensor communications. The modulation techniques in this specification include OOK (On-Off keying), FSOOK (frequency shift On-Off keying), and screen modulation. These modulation mechanisms can operate with the global shutter and the rolling image sensor. The undersampled frequency shift OOK, namely, UFSOOK [13], applies two frequencies on the OOK light signal for data modulation and one frequency for the start frame synchronization. This modulation technique is based on the binary frequency shift subcarrier. The frequencies of OOK for encoding 1 and 0 bits are separated by a specific frequency distance. The sampling frequencies of 1- and 0-bit encoding are lower than the camera frame rate for the global shutter image sensor. With two frequencies for embedded data, the proposed scheme can modulate 1 bit per sample. For synchronization, more than one frequency other than the data bit is required. It is performed with a synchronization period at each data frame. The synchronization bits operate at high-frequency OOK (10 kHz), which is above the frequency response of the image sensor. This is defined by a resulting average light intensity value from the image sensor. The main advantage of the frequency shift OOK modulation is the high-embedding bit rate per sample of the OCC system. One option is to overcome the limitation of the data rate. UFSOOK can likewise operate with the rolling shutter image at low rates. For higher data rates, the modulation can be performed by OOK, which modulates the binary bit by the ON–OFF light source. This technique has a limitation with respect to distance variation due to the captured FOV in the image sensor.

2.2. Overview of Distance Estimation Techniques

2.2.1. Structured Light

This technique uses a single image sensor from the camera, which constructs the projected light pattern. Typically, it includes two components: the laser beams and the camera for laser triangulation. The laser beam projects a dot at a specified coordinate onto the image sensor. The position and depth of the object surface is determined by observation of the light patterns and calculation.

2.2.2. AOA

The AOA technique estimates the distance using angles of arrival of signals from reference nodes. After obtaining the AOA, the position of the mobile device is determined as the intersection of multiple bearings. AOA has an advantage with respect to synchronization, because there is no requirement regarding the clock between the reference node and the mobile device. However, it has the disadvantage of complexity and cost, with the scan technique or sensor array for the LOS detection being based on the received power distribution.

2.2.3. TOA

Time of arrival is the core technology for the GPS system. This technique estimates the separation distance based on the signal arrival time, which is indicated by the stamp time. This one-way propagation time is calculated by the speed of light and the carrier frequency. With the high accuracy of the time clock, TOA represents a challenge to the technology for distance estimation, because the error is 300 m at 1 μs variance. To apply this technique to location services in 2-D topology, at least three reference base station distances must be obtained.

2.2.4. ToF

The concept of ToF is related to the travel time measurement of the light signal from the sensor to object target, which is similar to the ToA technique. This is the round trip time of a modulated (waveforms or pulses subcarrier) light signal. Because it is necessary to measure at the speed of light, the response of the light-sensing hardware must be sufficiently fast, namely, in the nanosecond range. The decoding process takes several duty cycles. The separation distance is calculated based on light speed, signal duty cycle, and angular frequency, which is defined by the traveling time of the signal in modulated ToF or the proportion of pulse delay in pulsed ToF.

2.2.5. TDoA

The measurement is established by the time difference of the signal arriving between transmitters that simultaneously broadcast the reference signal. The TDOA technique determines the position of the mobile device based on multiple reference nodes with the distance variation. Similar to TOA, TDOA requires strict control of the signal propagation clock time, and additional communication hardware or time protocol is required to provide synchronization among the base stations and mobile devices.

2.2.6. RSSI

Distance estimation can be defined by an RSS measurement, knowing the attenuation characteristics of transmitted signals. The distance between the transmitter and receiver is estimated by the signal strength, which is reduced during traveling due to the propagation effect. This is a major mechanism for distance estimation in the development and research of wireless communication. The relation between transmission, receiving power, and link distance is shown in the channel modeling ratio, the distance between transmitter and receiver, the transmission power, and the receiving power.

2.2.7. Vision Photogrammetry

The main mechanism behind vision photogrammetry is based on image geometry, perspective projection, and planar holography obtained by the mono or stereo camera. Using image perspective information of the reference object and intrinsic characteristics of the camera, the distance between the camera and the object can be calculated by the initial object surface area or moving distance between two captured images. The stereo camera can provide accurate distance calculations between the camera and objects, containing information about the baseline, focal length, and corresponding disparity pixels of the left and right captured images.

2.3. Survey of Distance Estimation Research

Precise distance information is one of the most important requirements for driving assistance systems in the ITS system or localization for on-demand services. Most research regarding distance estimation is applied using RSSI techniques, due to their low cost and complexity. A study presented in Ref. [14] employs the TOA mechanism on UWB for high accuracy in a single base station positioning system by inverting the relations between the arriving signal amplitude and angle, which are obtained between the tag and the base stations. The distance is obtained by analyzing the flight time of the arrived signal based on the communication protocol. The ranging proposal from Ref. [7], which is based on a hybrid of TOA and RSS measurements, performs a theoretical analysis on cases of practical interest for wireless sensor network applications. Here, the inter-node separation overlaps with the region around the critical distance. The hybrid TOA/RSS maximum likelihood estimator is obtained by maximizing the joint pdf of the RSS and TOA observations. The enhanced RSS-based distance estimator [15,16] uses an iterative form of Newton’s method for maximum likelihood-based distance estimation due to the rain attenuation effect, achieving up to 90% error reduction rate. The proposed mechanism in Ref. [17] uses the Monte Carlo algorithm with variance determination by the visible light RSS-based. The calculation is built on the maximization of the signal-to-noise-ratio by means of matched filtering with intensive analytical elaborations. Likewise, working on this issue, schemes from Refs. [18,19] adopt the RSS between a base and a mobile station to obtain accuracy with particle filtering under mixed indoor LTE-A network line-of-sight and non-line-of-sight topologies. Employing another technique, the distance estimation from Ref. [20] utilizes a real-time monocular-vision-based mechanism for a vehicle scenario. The inter-vehicle distance estimation is defined by Haar-like features for vehicle detection, tail-light segmentation, and virtual symmetry detection. The detection process is based on a multi-feature fusion technique to enhance its accuracy and robustness. The distance is defined by the pixel information of the captured image with a reference angle and the distance of the camera position to the head/rear of the vehicle. This mechanism is expected to operate both day and night, and likewise for short-and long-range distances. Moreover, with the use of a single camera, the research from Ref. [21] determines the disparity map and the distance of the object from the camera system using two convex mirrors with a sufficiently long radius of curvature. The pair of images capturing the same scene from two different viewpoints is equivalent to two conventional cameras used in the stereo vision system. The study presented in Ref. [22] obtains distance based on the vanishing point, which is specified by the intersection points of straight lane lines in a vehicle scenario. Applying the Hough transform, the right and left edges are extracted and connected to the vanishing point to segment the road area and distance estimates. The solution using the infrastructure concept from Ref. [23], which represents an imaging mechanism with a blob-guided reference point, combines the pattern recognition of line laser image shapes at a certain angle. The estimation is based on the blobs–gaps relation of the position of the laser projector and the camera coordinates. The principle of the proposed scheme from Ref. [24] detects the width of the license plate as known information, and subsequently calculates the pixel size of the license plate appearing in the CCD image. From the distance–pixel relation between CCD images with known vehicle distances, the distance information can be obtained by a simple mathematical operation. Another study on the vehicle-to-vehicle topology presented in Ref. [25] uses a stereo camera for vehicle LED tracking and distance estimation. The calculation is the combination of a physical model and the Kalman filter for outdoor traveling on-road scenarios. The real-time approach for traveled distance estimation is based on a double integral of acceleration data using the built-in sensors on a smartphone during the cart movement [26]. A precise and robust distance measurement using frequency-domain analysis based on a stereo camera from the analysis of captured images was conducted in Ref. [27]. Here, distance information is calculated using the baseline of two monocular cameras, the focal length, and the pixel disparity that can be calculated directly through the phase of the frequency domain.

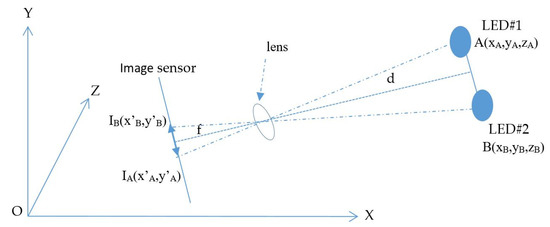

The distances between two LEDs in a real-world plane, AB, with the coordinate (x, y, z), and in the image plane, I1I2, with coordinate (x’, y’), are given by Equations (1) and (2).

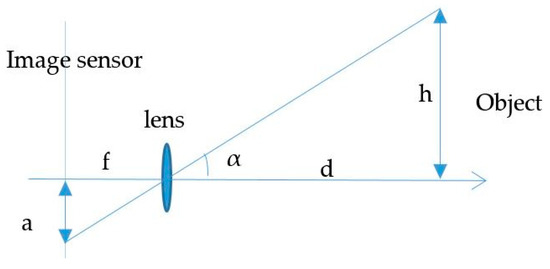

Using the pinhole camera approximation, the cross-height relation between the object and image is presented by Equation (3).

Therefore, the distance between the camera and LEDs plane, d, is given as Equation (4).

where f is the camera focal length.

2.4. Survey of VLC Localization Estimation

The research and implementation of localization has a long history involving different levels of accuracy and complexity. VLC localization techniques can be classified into proximity, fingerprinting, triangulation, and photography vision. In the proximity technique [28,29,30], the relative position is estimated on the basis of the unique identification code of the light source, which defines one specific coordinate in the database. The accuracy level depends on the field of view of the transmitter. The mobile node can retrieve reference location offline or online using wireless communication interfaces such as Wi-Fi, Bluetooth, or ZigBee. Although this technique has low accuracy, it is facile to implement and can be applied in proximity to localization applications. The medical tracking system used in hospitals, presented in Ref. [28], estimates the location of moving devices based on the nearest ID from the illuminating LED base station. The coordinate retrieval and mapping process between mobile devices and the database server is based on the ZigBee or Wi-Fi connection interface. The enhancement of the proximity method is considered with multi-reference light sources in the presence of LED combinations in Ref. [30]. The optical element was deployed with the full angular diversity illumination from multiple LEDs by shaping their emission patterns. A simple binary signal detection of LED transmitters was proposed to identify the overlapping regions and bound the receiver location. The intersection of the optical cone lighting sources bounds the receiver location to a specific pattern. The optical signal received from a single photodiode of the LED array defines the pattern of the overlapping region by the FFT of the modulated light signal, which has different frequencies ranging from 4 to 10 kHz. The accuracy depends on the density of LEDs and the FOV of the captured signal. The fingerprinting method is a pattern recognition technique. It operates by matching the measured data with pre-measured reference location data based on the distribution of LEDs and the variance of received power due to reflections and scattering of the light source. The estimated coordinate is calculated on the basis of the linear static data or using an AI training model such as a neural network. In comparison with the proximity, the fingerprinting technique is more complex and has a higher accuracy of estimation. The proposed system can achieve average distance error is 33.5 cm and the standard deviation is 16.6 cm with a single luminary, 12.9 cm with a deviation of 8.5 cm with 9 lights, and 26 cm of average error with 4 lights.

Another technique that can achieve a higher accuracy level using the triangles’ geometric properties is triangulation. The core calculation of this technique is based on distance estimation that includes the TOA, the time difference of arrival (TDOA), the RSS, and angulation estimation with the AOA method. The VLC positioning system from Ref. [31] uses the Cramer–Rao bound for TOA-based distance estimation. The LEDs are separated by different frequencies and perfectly synchronized with the PD receiver. The VLC TDOA-based indoor positioning system from Refs. [32,33] estimates the indoor localization with PD and multiple known LEDs. The LEDs’ separation for the reference node identification is based on the TDMA technique with a square pulse pilot signal. The pilot signal can also be a sinusoidal waveform, as in Ref. [34]. The position of the mobile node was estimated from the ID detection and signal collection from PD. The proposed system from Ref. [35] uses the PD array to detect the AOA of the source signal by comparison of the received power weighted sum of angles of PDs in the PD array, which is controlled by the distance, radiance angle, and incidence angle. The result of ToA-based 3D localization experiments is obtained the precision of 100 mm to 200 mm, which would be improved by compensating the phase estimation for time synchronization from Ref. [33] and 30 cm of AOA estimation schemes based on circular PD from Ref. [35]. Working with ADOAs, the VLC positioning framework from Ref. [36] defined the angle between reference LEDs and receiver by image sensors or PD array. The scheme can achieve 15 cm error with the least-squares method. The system from Ref. [37] works with RSSI and survey data for indoor localization. The signal strength of each LED transmitter was obtained from the photo diode receiver module. The approximate position of the mobile device which holds the receiver was estimated by comparing the received values with the captured dataset value. The system includes four transmitters with a 16-cell labels arrangement for RSSI survey data.

With photography vision, the geometric relation between the world coordinates and the image plane is obtained by lens transfer using image processing. The relative coordinates of the mobile node can be derived with a single camera using co-linearity, or a dual camera using geometric distance estimation. The simulation and implementation from Ref. [38] is based on distance estimation of four preferred LEDs. The position of the mobile device is calculated by the trilateration method. The enhancement of accuracy can be considered by augmenting image sensor resolution and improving the object detection method. The localization scheme from Ref. [39] converts the pixel coordinate system of the image sensor into a mesh coordinate system using collinearity equation. The indoor localization scheme based on the OCC and PDR systems in Ref. [40] combined the identification information of the transmitter and its orientation vector to obtain an approximate location of the mobile device. The former of the rotation vector can be obtained from accelerometers and gravity data, and the latter from magnetometer and gyroscope. This is a real-time localization with a recalculated scheme to update the position and direction information of the LED. The error rate varies from 0.2 to 1.0 m, with an average value of 35 cm. Another indoor localization proposal in Ref. [41] designed an LED-based beaconing infrastructure which could be integrated to the existing lighting infrastructure. The system estimates the unknown location based on the detection image and the a priori information on the beacon positions. Each LED blinks at a high frequency to avoid flickering. Localization can be determined on the basis of LED classification using beacon and distance estimation based on LED blob size. The measurement results with 4 beacons had an error rate of 17 cm.

3. Proposed Localization Estimation Scheme

3.1. Methodology

3.1.1. Distance Estimation

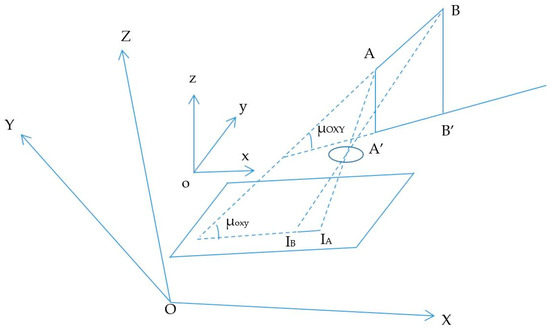

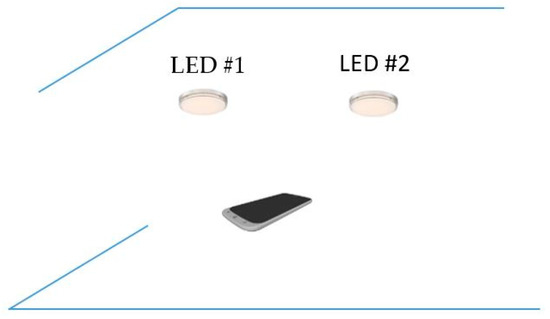

The proposed scenario of distance estimation based on the image sensor is shown in Figure 2. The scheme uses at least two LEDs, which continuously broadcast their coordinate information using VLC technology. From the captured coordinate information on the image sensor, the distance between the two LEDs is given by Equation (5).

where X, Y, and Z are coordinates of the LEDs in the real world, which are represented with A and B; f is the camera focal length. In addition, I1I2 is the distance between the two LEDs’ image in the image sensor with the x’ and y’ coordinates, which is given by Equation (2).

Figure 2.

Distance-estimation-based photography technique.

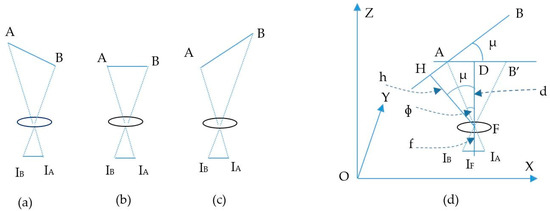

The estimation process includes two main calculations: the image sensor rotation distance and the center shift distance. First, the image sensor rotation distance defines the proportion distance between the two LEDs and their image in the image sensor by means of a rotation angle. It includes two processing steps, which define the image of LEDs on the OXY plane with a separation of the Z coordinator of the LEDs and the image sensor plane with a rotation angle. If the camera image sensor is parallel to the LED plane, as in Figure 2, the distance, calculated using Equation (4), is the proportion among the focal lens, the reference LEDs, and the pixel length of the image of the LEDs. However, with a general captured case, such as that shown in the scenario illustrations in Figure 3a, 3b and 3c, where the captured image sensor plane is not parallel to the LED plane, the distances between the LEDs on the image sensor are the same. For the general scenario, the calculation must consider the captured angle that comes from the lines between the two LEDs and the camera surface.

Figure 3.

Illustration of different captured angle scenarios. (a), (c) LEDs line not parallel with image sensor plane. (b) LEDs line parallel with image sensor plane. (d) Image of LEDs line in image sensor plane.

As shown in Figure 3d, with the assumption that A and B are known LEDs, the image of AB on the OXY plane is AB’, the image of AB on the image sensor is IAIB, the distance from AB to the image sensor can be defined by pinhole camera approximation, and is d. However, the desired value is h, which can be calculated as follows:

where is angle between AB and AB’, is also equal to the angle between HF and FD. Then, ɸ is the angle between AF and FD. Finally, IF is the center point of the image sensor.

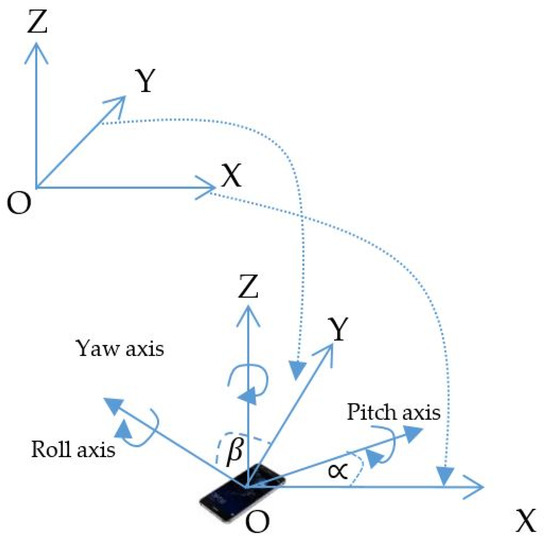

Considering the image sensor rotation status in Figure 4, the rotation angle with OXY plane is controlled by the pitch and roll axis information. These can be acquired from the variation detected by the Gyroscope Sensor with a reference coordinate or direction coordinate of the two reference LEDs. The calculation for distance estimation with the scenario in Figure 3d assumes that the image sensor plane and the OXY plane are parallel. For a general rotation of the image sensor to the OXY plane, the calculation of in Equation (6), which directly affects AB’ (as Figure 3d), is redefined by µoxy as in Figure 5, where µoxy is the angle between AB and the OXY plane; µOXY is the angle between AB and the OXY plane. The rotary image sensor plane has a normal vector, which is defined in Equation (7).

where are the rotation vectors of the x-world coordinate axis and the y-world coordinate axis, respectively, given by Equation (8). β and are the rotation angle of image sensor on Roll axis and Pitch axis.

Figure 4.

Image plane rotation scenario.

Figure 5.

Image of reference points in the image sensor plane.

The angle between the LED line and the camera image sensor plane in Equation (5) is as follows:

where = (XB-XA,YB-YA,ZB-ZA).

The second calculation is for the center shift distance, which defines the real distance between the plane of the two LEDs and the image sensor with a shift distance from the center of the image sensor, as shown in Figure 6. The expected distance, EF, is defined by Equation (12).

Figure 6.

Center shift scenario.

Here, IG is the center point of the image sensor, which is generated from the principal axis of a lens and the image sensor. FG, which depicts the distance between the LED plane and the lens in the center of the image sensor, can be calculated from the first step. Subsequently, the IGIE is the center shift of the LED image, which can be measured from image sensor pixels through the perpendicular line of the image of the two LEDs and the image sensor center point.

The equation of the line from the two points (IA, IB) with the slope, m, and the intercept of the line, b, is given by the following conditions (13).

In the image sensor plane (oxyz), the coordinates of IE are defined by the intersection point between IAIB and the perpendicular line to IAIB traveling through the image sensor center point, given by Equation (14).

Then, the coordinates of the intersection point, E, from the intersection between the expectation distance line and the line between the two LEDs in the LED plane are calculated by Equation (15).

where IAIE is calculated based on the coordination of detected IA in the image sensor and defined by the formula in Equation (14) for point IE.

3.1.2. Coordinate Estimation

In the general scenario shown in Figure 6, the intersection point, E, between the LED line, AB, and the distance of the line between LEDs plane to camera, EF, from the distance estimation step, includes the complete coordinates based on trilateration formulas. On the basis of this mechanism, every pair of transmitter LEDs can define one distance between the camera and the LED pair at the specified intersection point. With n LEDs, we have k combinations of n sets of distance (between the camera and the LED line) and full coordinate of intersection point.

In the general scenario, the localization of mobile devices can be estimated on the basis of reference nodes with known coordinates and measured distances. With n known anchor nodes and distances from the mobile node to anchor nodes, Li = (xi,yi), ri, where (i = 1, …, n), the estimated location of mobile node M = (x,y) in two dimensions can be calculated by Equation (16) [42]. The anchor nodes and distance are defined in Section 3.1.1, especially Equations (12) and (15).

The calculation can be represented in matrix form as Ax = b, which defines the relationships between positions and distances according to Equation (17).

The position of the mobile node with coordinates (x,y) can be calculated using the least-squares matrix by Equation (18).

x = (ATA)−1ATb

It can be seen that the accuracy of the coordinate calculation is mainly controlled by the distance estimation results. However, due to the Gaussian distributions, and inaccurate anchor positions and distance measurements, the least-squares formula can be reassigned with a weight of estimation variances as Equation (19). By applying more reference nodes which Gaussian distribution error for the distance estimation, the error with respect to the coordinates can be reduced.

Here, .

The calculations from Equations (16) to (19) for x, y, and z correlates with the least-squares system for three reference points in a 2D topology and four reference points for a 3D topology.

3.2. OCC Transmitter

With the limitations of OOK modulation on the distance variation due to the captured FOV of the image sensor, FSOOK demonstrates the advantages in terms of stripe width separation. At different distances of the communication link, the covered bits in the captured image will be inversely proportional to the distance. This means that the amount of covered bits at a greater communication distance will be smaller. The bit loss arises from the coverage problem of the camera FOV. This cannot be recovered by any technique due to the out of protocol control rule. This is one of the main limitations of OOK modulation for the OCC system. With the frequency subcarrier, where the modulated bit is represented by multiple cycles, the distance variation does not affect the decoding process. In this technique, the binary bit classification is defined on the basis of the waveform frequency of the subcarrier, which is represented by a group of rolling stripes at the image sensor. This demonstrates the advantage of bit encoding compared with OOK modulation. At different link distances, the subcarrier frequency can be classified by the Fourier transform or the stripe width pattern. The number of rolling stripes depends on the captured FOV of the image sensor and the link distance. However, if the width of rolling stripes is constant, then the subcarrier frequency will also be the same. This is the basic advantage of FSOOK modulation compared with the OOK technique with respect to bit decoding.

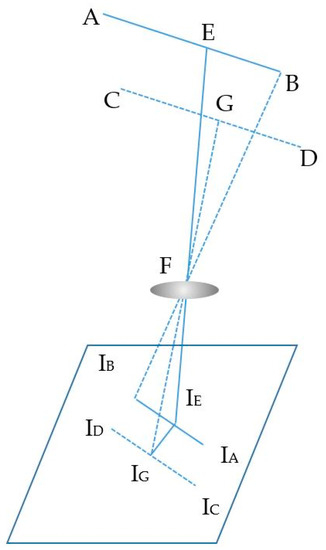

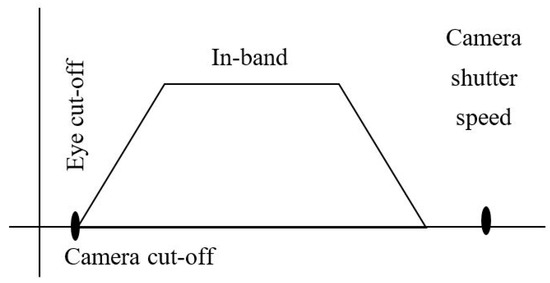

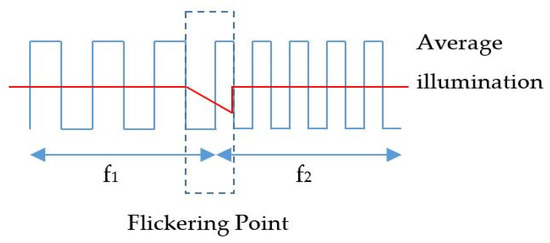

The frequency shift OOK modulation operates with multiple frequency shifts of the on-and-off LED light. The number of frequency shifts decides the number of embedded bits in one symbol. The frequency selection should consider eye safety and the threshold frequency of the shutter. Basically, these frequencies range from 100 Hz to 8 kHz for complete illumination with flickering mitigation, as shown in Figure 7. However, to reduce the down-rate of the coding bit due to the line coding mechanism, besides the frequencies for data coding, one more frequency should be allocated for the preamble signal. The preamble signal operates at the beginning of every super frame for synchronization. Most existing studies on the frequency shift OOK modulation define the preamble frequency above the image sensor shutter speed threshold. This is about 10 kHz, at which the captured image is at the average brightness level of the light source.

Figure 7.

OCC frequency band.

Flickering is the first consideration in VLC deployment, which is defined as one of the most important factors of the transmitter. The frequency separation is defined by the difference in width between the black and white stripes, which is created by the on-and-off signal duration of the LED, as shown in Figure 8. If there is a big variation in the average illumination, the flickering point will be recognized by the human eye, as shown in Figure 9. The higher the frequencies that are applied, the more flickering will occur because of the larger distance of the frequency switch. This is a tradeoff between the embedded rate and the flickering index of the flickering point.

Figure 8.

OOK signal at 2 kHz (a), 1 kHz (b), and 500 Hz (c).

Figure 9.

OCC flickering.

To minimize the flickering point, the proposed system applies two frequencies for the subcarrier on–off signal. The bit encoding mechanism and frequency selection are shown in Table 2. The line coding mechanism is defined with two sampling bits “00” for the synchronization signal. At the frequencies of 2 and 4 kHz, the flickering point is minimized to 10% with variation in illumination. The system can achieve a flickering index of 90% with the “VISO system flicker tester” application [43]. The frequency selection is based on two factors: the flickering point and the communication reliability.

Table 2.

Bit encoding.

With regard to communication, if low frequency is selected for subcarrier, there is a tradeoff relation between the numbers and the pixel width of the LED captured roll. The number of rolls is decreased when the captured distance is increased. This is reliable for decoding the signal. However, the flickering point is large because of the illumination variation between on and off light signals at the frequencies of f1 and f2. The optical clock rate of the transmitter should be the same as the camera frame rate of the receiver. The optical rate and camera sampling rate selection should consider the image processing for the bit decoding algorithm.

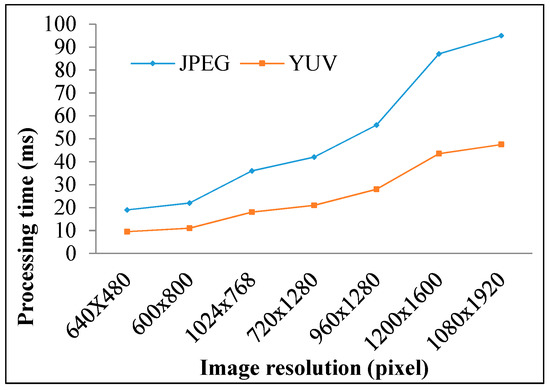

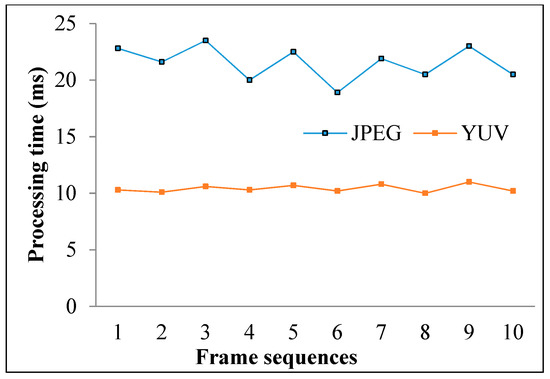

3.3. OCC Receiver

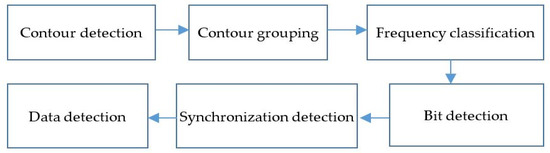

The proposed OCC receiver architecture is based on the rolling shutter mode of the image sensor. The modulated optical signal is captured and converted to a color pixel array by the camera. The out stream is a buffer at which the output image data are dumped at each sampling frame. The data decoding includes light conversion, memory access time, and data image processing. The total time must be less than 1/(frame rate). If this value is larger than the buffer, the memory will overflow. The memory access time is dependent on the image type format and resolution. To minimize the decoding time, we applied a non-compressing image method, based on the gray-scale YUV color space. Figure 10 presents the data decoding with image processing. The gray-scale image data from the buffer are dumped to bitmap data using a two-dimensional array to minimize the processing of contour detections that result from the color conversion.

Figure 10.

Data decoding processes.

The data decoding process includes two steps: detection of the regions of interest (ROIs) and subsequent extracting cell data. Among these, the first step isolates the image areas from the captured image. Subsequently, the second step decodes the frequency from detected ROIs. The ROI separating step is more important, because it can cause a fatal error in the next step. While detection of high-frequency changes in illumination is conducted, we exploit the fact that the rolling shutter of most modern CMOS cameras does not capture total image simultaneously. Alternately, the data transfer is pipelined by the sensor with the exposure of a pixel. This means that a light pulsing at a frequency, which is much higher than the capturing time of a frame, will light up only some rows, thus producing bands in the image. By detecting the frequency of these bands in the frequency domain of the image and inferring the pulsing light frequency, we can use the relationship between them to build up the demodulation for FSOOK.

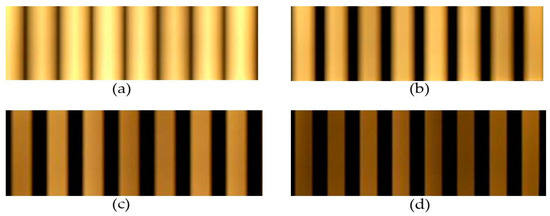

The main difference in the OCC receiver system configuration is the exposure time, which is defined as the pixel roll exposure duration. Under a different configuration of the exposure time, which follows the shutter speed, the light density is spread out onto the image sensor during absorption. This can likewise reduce the interference or the blur effect of being near the lighting source. Then it has a direct effect on the frequency separation in the decoding process. The large reduction configuration, however, has the disadvantage of low brightness, as the captured image is portrayed at a dark gray level. Therefore, the shutter speed configuration should consider the brightness of the scenario, which depends on LED power and the environment.

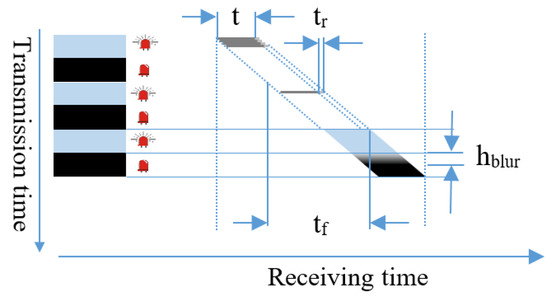

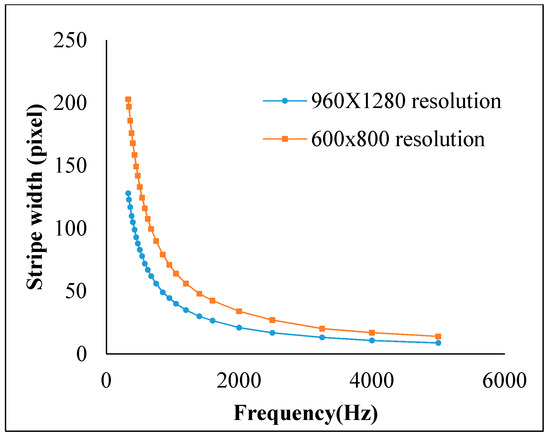

The on–off signal is presented by black and white stripes of occupied pixels in the captured image. The width of the stripes depends on modulation frequency. With a square waveform signal at a frequency fs, the duration of the on–off state, tf, will be 1/(2fs) seconds for a pair of black and white stripes. To generate one stripe in hs pixel rows with readout time, the width of the stripe pair ws, in millimeters, is defined by Equation (20).

The blur effect, which arises from the inter-symbol interference and the offset shifting time of exposure process, has a width given by Equation (21).

Here, dc is the pixel density, tr is the row read out time, and hs is the height of the image in pixels, as shown in Figure 11.

where t is the exposure time at which the sensor opens the shutter to receive photons.

Figure 11.

Effect of shutter speed in the received image. (a) 1000 Hz of shutter speed. (b) 2000 Hz of shutter speed. (c) 4000 Hz of shutter speed. (d) 8000 Hz of shutter speed.

If the transition time of the modulation optical signal is larger than the camera exposure time, the captured signal cannot be classified. The stripe width in Figure 12 shows the effect of different shutter speed configurations. At short shutter speeds, the stripe width is reduced to close to the optical clock rate.

Figure 12.

Rolling image sensor operation.

3.4. Topology and Operation

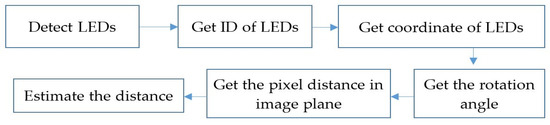

The flowchart for distance estimation based on OCC is shown in Figure 13. It includes five steps: OCC transmitter classification, coordinate query, device rotation matrix calculation, OCC LED image coordinate tracking, and distance estimation. In the first step, the OCC LEDs are extracted from among the illumination LEDs to be used for data communication in the second step. The OCC LEDs broadcast their coordinates through the optical channel. The coordinates of the two LEDs are queried from a server with the unique IDs, which are obtained by the OCC technique at the third step. The query can be executed with the index table or communication technology such as Wi-Fi or LTE. The distance between two LEDs is obtained from the coordinate information. Subsequently, the separation distance of two LED images on the image sensor plane can be calculated by pixel measurement. By combining the rotation angle between the LED lines and the image sensor plane at the fourth step, distance estimation can be finalized.

Figure 13.

Distance estimation processes.

3.5. Error Analysis

The proportions of h and a in the camera architecture shown in Figure 14 are defined by Equation (22). The accuracy of the object image height a will affect the error rate of d, where the focal length f and the object height h are defined values extracted from the camera information and OCC reference coordinate calculation.

Figure 14.

Pinhole camera.

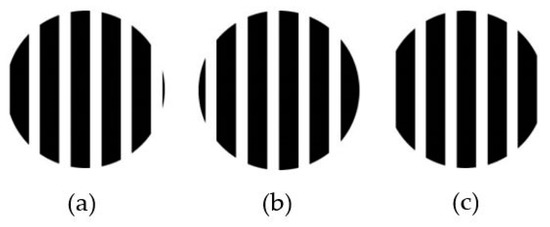

The estimation accuracy is directly dependent on the LED image landmark recognition. More specifically, it is strongly affected by the reference point for coordinate identification, which depends on the light conditions of the environment and the reference landmarks. The specified coordinates of the proposed scheme are defined on the basis of the center of the LED contours, as shown in Figure 15. However, because of the rolling effect portrayed in Figure 16, the captured stripes of LED are not at a fixed location in the image plane. This generates error with regard to the center position of LED contours.

Figure 15.

Reference coordinates on image sensor plane.

Figure 16.

Captured contour scenarios. (a) Stripe starts at ON state and finishes at OFF state. (b) Stripe starts at OFF state. (c) Stripe starts at ON state and finishes at ON state.

With the error of pixel amounts in the image line of the two LEDs, the distance calculation is proportional to h and f. A 1-pixel error at the image sensor plane can generate an error in the distance, according to Equations (23)–(25). With common camera parameters, e.g., f = 600 pixels, the distance is equal to ~3 cm for a 1-pixel error.

The clarity of the stripes in the captured image affects the data decoding error and distance estimation accuracy, which are based on the stripe width separation. Assuming that a white LED is used as a transmitter, the width of the stripe comes from the color band between the black and white regions of the two LED off-state stripes. The most important detection parameter is the on–off separation threshold value, which is controlled by the transition between black and white and the LED driver frequency response.

The absorbed photon intensity of the LED signal at the image sensor with the LOS path loss model is given by Equation (26).

where hi is the color channel DC gain, P(t) is the transmission power of the light source, and Pr is the received optical power.

The channel DC gain path loss can be defined with Equation (27).

where m is the order of the Lambertian emission, A is the image sensor pixel area, D is the distance between the transmitter and the receiver, Ɵ is the angle of irradiance, Ts(Ɵ) is the angle of incidence, g(Ɵ) is the signal transmission coefficient of an optical filter, cos(Ɵ) is the gain of an optical concentrator, and ƟFOV is the receiver field of view. The values of m and g are defined by Equation (28).

where n denotes the internal refractive index of the optical concentrator.

With the effect of the camera lens, the channel gain is defined by Equation (29).

where is the blur concentration ratio, which is quantified by its standard deviation by Equation (30).

where s, f, and l are the pixel edge length, camera focal length, and the diameter of the LED source.

To analyze the performance of the system’s SNR over signal propagation, the noise model plays an important role. Optical noise includes shot noise ∂, thermal noise, and multipath inter-symbol interference [29], defined by Equations (31)–(34), respectively.

where µ is the detector responsibility, q is the electronic charge, is the equivalent noise bandwidth, W is the sampling rate of the camera, Ibg is the background current, is the noise bandwidth factor, and is the affection noise from the neighbor channel. K is the Boltzmann constant, Tk is the absolute temperature, G is the open-loop voltage gain, δ is the fixed capacitance of the photo detector per unit area, E is the FET channel noise factor, gm is the FET trans-conductance, I3 = 0.868, and I2 = 0.562. denotes the received power, and is the received power resulting from the inter-symbol interference. T depicts the exposure time of the camera.

The SNR value is defined by Equations (35) and (36).

With the proposed 2FSOOK modulation at 2 and 4 kHz of the subcarrier, the BER is defined by Equation (37).

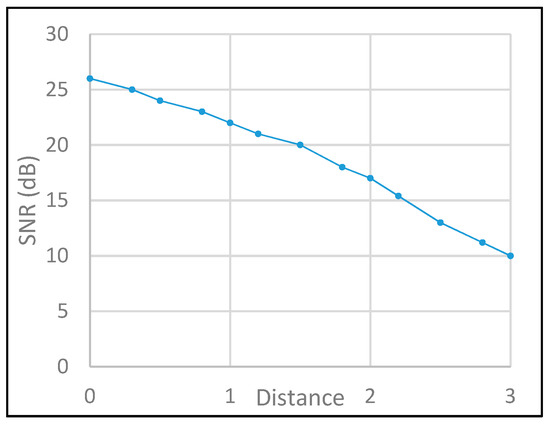

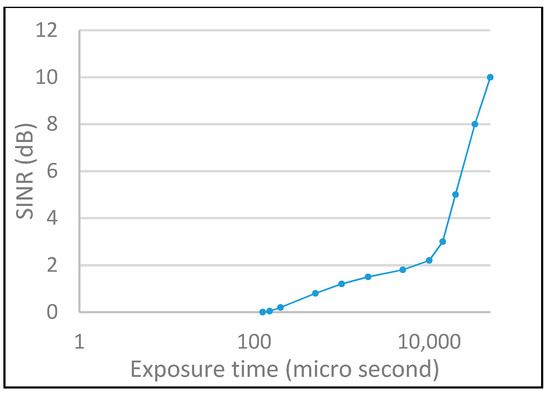

Figure 17 and Figure 18 show the performance of the SNR and SINR with different configurations of shutter speed and link distance. For the SNR, the fluctuation of the signal strength is controlled by the received power propagation and the lens blur effect. In contrast to SNR, the main factor of SINR is the exposure time, which avoids the interference of the optical source from neighbor modulated light pulses.

Figure 17.

SNR evaluation.

Figure 18.

SINR evaluation.

4. Performance Evaluation

4.1. Image Sensor Communication Performance

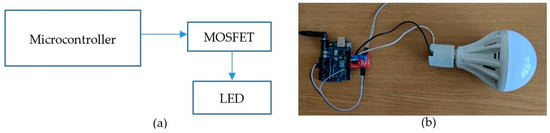

The distance and localization estimation performance of the proposed scheme was evaluated by applying the experiment implementation circuit for the transmitter, including the microcontroller LED driver and the LED, as shown in Figure 19. The receiver comprises a smartphone rolling shutter camera and an application that can configure the camera shutter speed.

Figure 19.

Transmitter implementation architecture. (a) Circuit diagram. (b) Devices demo.

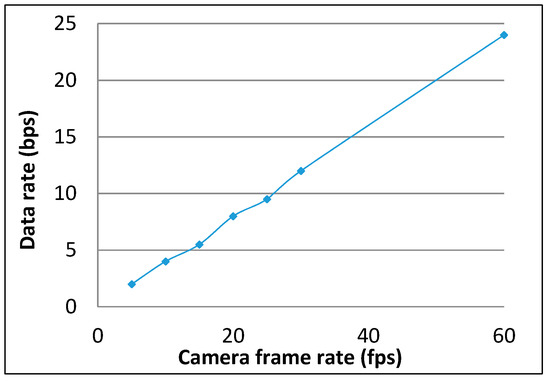

The data rate of the proposed 2FSOOK modulation scheme at different configured camera frame rates is shown in Figure 20. The system data rate is proportional to the camera frame rate. However, high camera frame rates create a problem with decoding processing. The experiment performed on the current commercial smartphone at more than 20 fps is not reliable with respect to the processing capability, due to the cache memory. The buffer is overloaded in some scenarios where the shot noise is high. Table 3 provides the configuration of the experiment that guarantees data encoding and decoding.

Figure 20.

Data rate performance of 2FSOOK.

Table 3.

Configuration of experimental implementation.

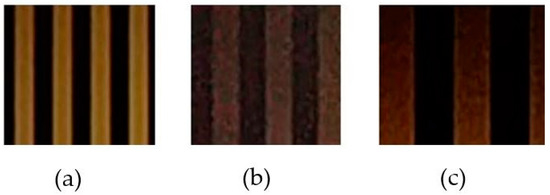

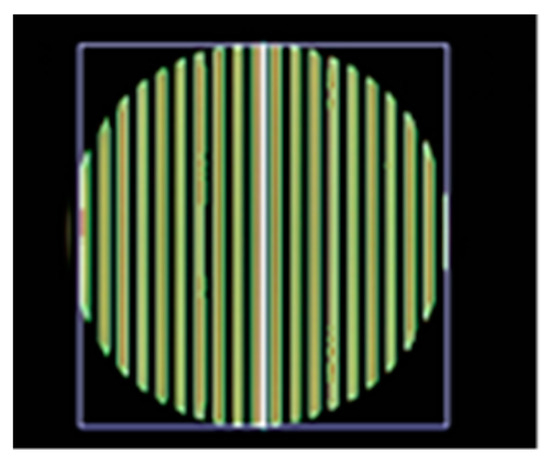

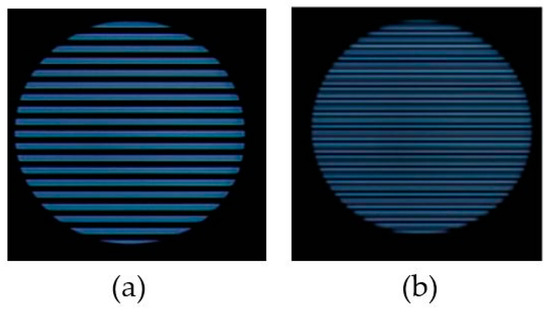

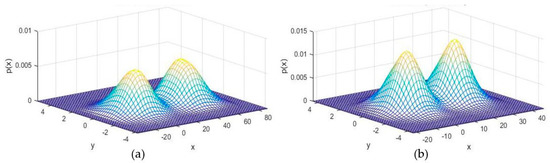

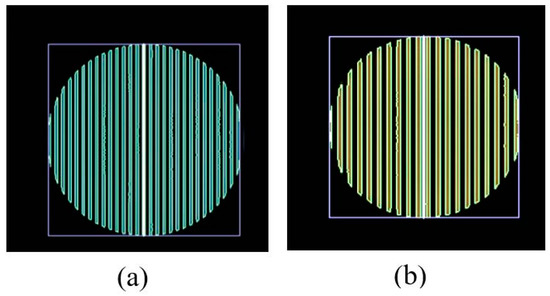

The roll stripes of subcarrier frequencies of 2FSOOK are shown in Figure 21, where the 2 and 4 kHz OOK frequencies are embedded. In this experiment, the implementation of a 17 cm diameter of LED and the power density distribution analysis of two neighbors LED optical pulse at 2 and 4 kHz OOK frequency are shown in Figure 22. The distance from the transmitter to the receiver can be at 2 m for reliable decoding data with identified stripes in the captured image. At a larger distance, the separation distance between the contours is not stable, and the system encounters an error resulting from missing stripe widths, which arise as a result of the rolling mechanism limitation. Therefore, to achieve high accuracy and minimize the complexity of error correction, the maximum number of rolling contours should be guaranteed for the demodulation of one frequency. The number of contours depicts the maximum communication range between the transmitter and the receiver. This means that with FSK modulation, the position of the receiver can change dynamically, whereas the maximum communication range will be recognized when the number of contours is more than one. In our implementation, we configure the image sensor with a resolution of 600 × 800 pixels and a maximum communication range of 2 m. The higher the resolution configuration, the longer the distance we can achieve for communication. However, this setup faces processing time issues on the device. The performance with regard to processing capability with respect to image formats, resolution configuration, and the rolling signal pixel width of 2FSOOK are shown in Figure 23, Figure 24 and Figure 25.

Figure 21.

Rolling shutter image of 2FSOOK. (a) 2 kHz signal. (b) 4 kHz signal.

Figure 22.

Power density of LED pulse at 2 kHz OOK subcarrier (a) and 4 kHz OOK subcarrier (b).

Figure 23.

Processing performance vs. image sensor resolution.

Figure 24.

Processing performance vs. image type configuration.

Figure 25.

Roll width feature with respect to signal frequency and image sensor resolution.

4.2. Distance Estimation Performance

The performance of the proposed scheme for distance estimation is measured using photography with the setup illustrated in Figure 26. As described in the analysis from the previous section, the estimation error arises from the calculation of the distance between the LEDs, which is defined by the line connecting the two center points of reference LEDs. The OCC LEDs can be separated into common LEDs by continuous rolling contours. The coordinates of the LED ROI are covered by the position of the first and last contour, which are varied at every captured frame due to the rolling effect with the on status of LED. In the experiment, Figure 27a,b represent the features of the 4 and 2 kHz LED signal, which correspond to the maximum of 4 and 6 pixels at 600 × 800-pixel resolution. The error of the coordinates of the LED contour on the image sensor ranges from 1 to 6 pixels under stable conditions.

Figure 26.

Distance estimation scenario.

Figure 27.

ROI contour feature. (a) Captured image of 4 kHz signal. (b) Captured image of 2 kHz signal.

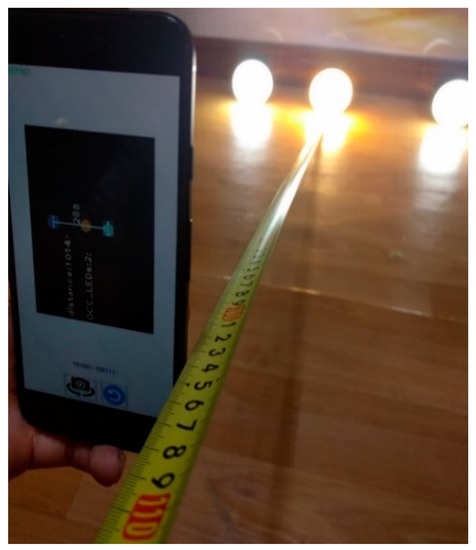

A photograph depicting the experimental implementation of the proposed scheme is presented in Figure 28, portraying two OCC LEDs among three illumination LEDs. The coordinates of the two OCC LEDs are mapped with the index table through LED-ID [44] or by receiving the coordinates directly from the LED signal. In this scenario, we applied the LED-ID architecture, where there is 5-bits ID. The data are broadcast by the LEDs, which are 17 cm apart, and received by the rolling shutter camera in the smartphone. The coordinates of the LEDs are queried by a server via a Wi-Fi connection. With regard to the camera focal length, this is one of the most important parameters for distance estimation using the pinhole camera approximation. It should be defined initially for the estimation processing. Its value can be obtained from the Camera API (for example, CameraIntrinsics or CameraCharacteristics library for Android devices). It can also be defined initially through manual configuration using a built-in database. The focal length of existing cameras will be surveyed and adapted for the application. By extracting the device model from the camera API, which most existing devices can support, we can match the device camera focal length from the database and then configure these values.

Figure 28.

Distance estimation experiment.

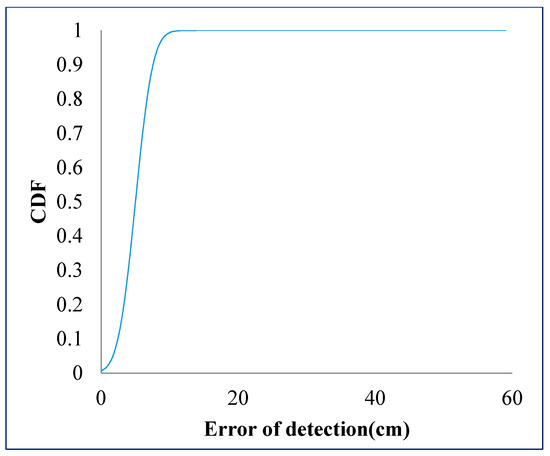

For the centralization error rate analysis of the image sensor photography technique, the experiment implementation only considered the optical camera communications and pinhole camera approximation calculation. The camera focal length value is calibrated by manual configuration to minimize the error value. In addition, then, the rotation angle between the mobile device and the LED plane, which can be extracted from the gyroscope sensor, is also considered with the fixed scenario. The rotation angle with the gyroscope sensor was expected to be a minimum of 2 degrees in Ref. [45]. We conduct the experiment by measuring 1,000 times in order to calculate the average across mean and variance. The performance of distance estimation is shown in terms of the cumulative distribution function (CDF) results in Figure 29. It can achieve an average estimation error of 10 cm, which arises from the error of the LED center point detection due to the projected contours.

Figure 29.

CDF of distance error performance.

4.3. Localization Estimation Performance

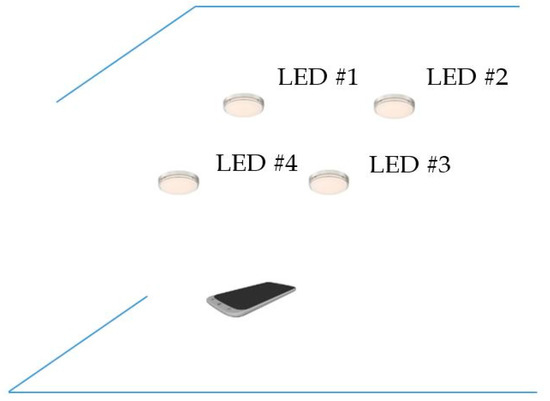

The performance evaluation of the localization estimation is based on the scenario portrayed in Figure 30. It includes three main steps, extracted from four processes: OCC detection, coordinator download, ranging estimation, and coordinator estimation. Firstly, the mobile terminal downloads the coordinates of the three reference LEDs using OCC. Secondly, it references the LED range calculation based on photography geometry. Finally, it performs the localization estimation using trilateration. Among these four steps, OCC detection plays an important role in all system functions that support data decoding, coordinator mapping, and OCC receiver classification.

Figure 30.

Localization estimation scenario.

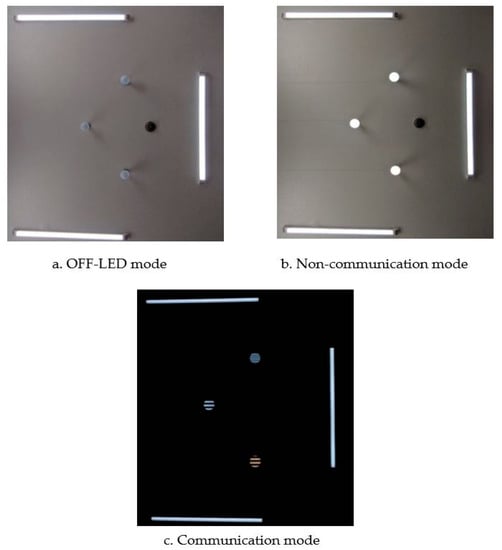

The experimental setup for localization estimation is shown in Figure 31a–c, which portray the off-status of reference LEDs, non-communicative mode of camera, and communication mode of camera, respectively. In this scenario, there are three data LEDs that coexist with other illumination lighting LEDs. In the non-communicative mode, the camera shutter speed is configured at 30 Hz, at which there is no classification between the illumination LEDs and communication LEDs. However, in the communication mode, the inner optical interference is removed by the high speed of the camera shutter. Using a computer vision algorithm, we can easily separate the OCC LEDs and illumination LEDs. As discussed in the previous section, the main consideration with respect to the OCC data rate is the processing time capacity at the receiver, which is affected by the image processing algorithm, resolution, and the number of sources.

Figure 31.

Evaluation experiment scenario. (a) Off state of LEDs scenario. (b) Illumination state of LEDs scenario. (c) Communication state of LEDs scenario.

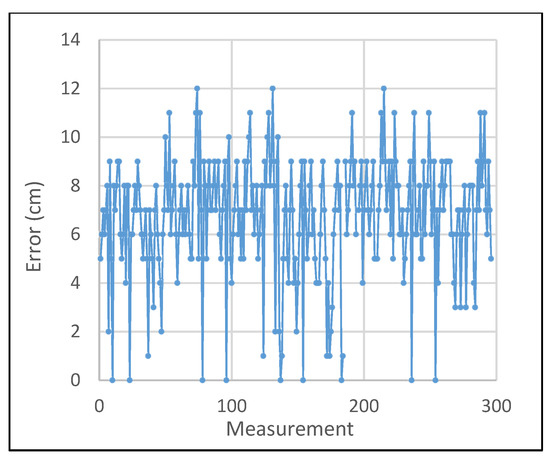

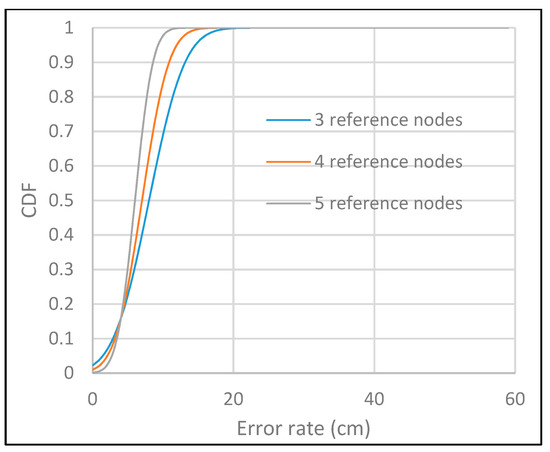

As a result of distance estimation in Section 4.2, which is controlled by camera focal length, rotation matrix angle and LED ROI, the performance evaluation of system localization, which is defined by trilateration formulas, has another factor, Gaussian distributions error of anchor distances. The performance of 300 localization estimation measurements with three reference nodes, as in the configuration scenario in Section 4.2, is shown in Figure 32. In this experiment, the vertical value, which is defined by the “z” coordinate, is calculated on the basis of the photography image sensor calculation of Section 3. The origin of the coordinate system and the direction of the axis are defined by the received reference coordinates and the image sensor coordinates. The performance accuracy of more reference coordinates with Gaussian weighing is shown in Figure 33.

Figure 32.

Localization error performance.

Figure 33.

CDF performance of localization.

5. Conclusions

Considering the importance of localization services in the business trends of on-demand applications, the proposed scheme for distance estimation based on OCC and photogrammetry shows the advantages of VLC in visible light optical channels and the development of image sensors in smart devices. The proposed system includes a non-flickering visible light system transmitter, an OCC rolling shutter receiver, and employs a photogrammetry mechanism for distance estimation. The main contributions of this article are the OCC system design and implementation, and the distance estimation and localization calculation mechanism using OCC and photography. The performance results, as well as advantages of the hardware and low complexity, imply a new promising technique for indoor localization services. In future work, the performance evaluation of the camera’s focal length calibration and rotation angle of image sensor will be considered with a thorough analysis.

Author Contributions

N.T.L. proposed the idea, performed the experiments and wrote paper; Y.M.J. supervised the work and provided funding support.

Funding

This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program(IITP-2018-0-01396) supervised by the IITP (Institute for Information & communications Technology Promotion).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yassin, A.; Nasser, Y.; Awad, M.; Al-Dubai, A.; Liu, R.; Yuen, C.; Raulefs, R.; Aboutanios, E. Recent Advances in Indoor Localization: A Survey on Theoretical Approaches and Applications. IEEE Commun. Surv. Tutor. 2017, 19, 1327–1346. [Google Scholar] [CrossRef]

- Do, T.H.; Yoo, M. An in-Depth Survey of Visible Light Communication Based Positioning Systems. Sensors 2016, 16, 678. [Google Scholar] [CrossRef] [PubMed]

- Ozsoy, K.; Bozkurt, A.; Tekin, I. Indoor Positioning Based on Global Positioning System Signals. Microw. Opt. Technol. Lett. 2013, 55, 1091–1097. [Google Scholar] [CrossRef]

- El Assaad, A.; Krug, M.; Fischer, G. Highly Accurate Distance Estimation Using Spatial Filtering and GNSS in Urban Environments. In Proceedings of the IEEE 84th Vehicular Technology Conference, Montréal, QC, Canada, 18–21 September 2016. [Google Scholar]

- Xu, R.; Chen, W.; Xu, Y.; Ji, S. A New Indoor Positioning System Architecture Using GPS Signals. Sensors 2015, 15, 10074–10087. [Google Scholar] [CrossRef] [PubMed]

- Saeed, N.; Ahmad, W.; Bhatti, D.M.S. Localization of Vehicular Ad-Hoc Networks with RSS Based Distance Estimation. In Proceedings of the International Conference on Computing, Mathematics and Engineering Technologies, Sukkur, Sindh, Pakistan, 3–4 March 2018. [Google Scholar]

- Coluccia, A.; Fascista, A. On the Hybrid TOA/RSS Range Estimation in Wireless Sensor Networks. IEEE Trans. Wirel. Commun. 2017, 17, 361–371. [Google Scholar] [CrossRef]

- Amini, C.; Taherpour, A.; Khattab, T.; Gazor, S. Theoretical Accuracy Analysis of Indoor Visible Light Communication Positioning System Based on Time-of-Arrival. In Proceedings of the IEEE Canadian Conference on Electrical and Computer Engineering, Vancouver, BC, Canada, 15–18 May 2016; pp. 1–5. [Google Scholar]

- Sung, Y. RSSI-Based Distance Estimation Framework Using a Kalman Filter for Sustainable Indoor Computing Environments. Sustainability 2016, 8, 1136. [Google Scholar] [CrossRef]

- Keskin, M.F.; Sezer, A.D.; Gezici, S. Localization via Visible Light Systems. Proc. IEEE 2018, 106, 1063–1088. [Google Scholar] [CrossRef]

- IEEE Standards Association. IEEE Standard for Local and Metropolitan Area Networks; Part 15.7: Short-range Wireless Optical Communication Using Visible Light; IEEE Standards Association: Piscataway, NJ, USA, 2011. [Google Scholar]

- IEEE Standards Association. IEEE Standard for Local and Metropolitan Area Networks; Part 15.7: Short-Range Optical Wireless Communications; Task Group IEEE 15.7m; IEEE Standards Association: Piscataway, NJ, USA, 18 May 2018. [Google Scholar]

- Richard, D.; Roberts, R.D. Undersampled Frequency Shift ONOFF Keying (UFSOOK) for Camera Communication (CamCom). In Proceedings of the Wireless and Optical Communication Conference, Newark, NJ, USA, 16–18 May 2013; pp. 645–648. [Google Scholar]

- Zhang, H.; Cui, X.; An, B.; Gulliver, T.A. A Distance and Angle Estimated Method Based on Single UWB Station. In Proceedings of the IEEE International Conference on Signal Processing, Communication and Computing, Shimla, India, 26–28 September 2013. [Google Scholar]

- Fang, S.H.; Cheng, Y.C.; Chien, Y.R. Exploiting Sensed Radio Strength and Precipitation for Improved Distance Estimation. IEEE Sens. J. 2018, 18, 6863–6873. [Google Scholar] [CrossRef]

- Stevens, N.; Plets, D.; De Strycker, L. Monte Carlo Algorithm for the Evaluation of the Distance Estimation Variance in RSS-Based Visible Light Positioning. In Proceedings of the 20th International Symposium on Wireless Personal Multimedia Communications, Bali, Indonesia, 17–20 December 2017; pp. 212–216. [Google Scholar]

- Sharifi, H.; Kumar, A.; Alam, F.; Arif, K.M. Indoor Localization of Mobile Robot with Visible Light Communication. In Proceedings of the 12th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications, Auckland, New Zealand, 29–31 August 2016; pp. 1–6. [Google Scholar]

- Chen, C.H.; Feng, K.T. Statistical Distance Estimation Algorithms with RSS Measurements for Indoor LTE-A Networks. IEEE Trans. Veh. Technol. 2017, 66, 1709–1722. [Google Scholar] [CrossRef]

- Chen, C.H.; Feng, K.T. Enhanced Distance and Location Estimation for Broadband Wireless Networks. IEEE Trans. Mob. Comput. 2015, 14, 2257–2271. [Google Scholar] [CrossRef]

- Rezaei, M.; Terauchi, M.; Klette, R. Robust Vehicle Detection and Distance Estimation Under Challenging Lighting Conditions. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2723–2743. [Google Scholar] [CrossRef]

- Murmu, N.; Nandi, D. Low Cost Distance Estimation System Using Low Resolution Single Camera and High Radius Convex Mirrors. In Proceedings of the International Conference on Advances in Computing, Communications and Informatics, Greater Noida, India, 24–27 September 2014; pp. 998–1003. [Google Scholar]

- Chen, C.H.; Chen, T.Y.; Huang, D.Y.; Feng, K.W. Front Vehicle Detection and Distance Estimation Using Single-Lens Video Camera. In Proceedings of the Third International Conference on Robot, Vision and Signal Processing, Kaohsiung, Taiwan, 18–20 November 2015; pp. 14–17. [Google Scholar]

- Utaminingrum, F.; Kurniawan, T.A.; Fauzi, M.A.; Maulana, R.; Syauqy, D.; Wihandika, R.C.; Sari, Y.A.; Adikara, P.P. A Laser-Vision based Obstacle Detection and Distance Estimation for Smart Wheelchair Navigation. In Proceedings of the IEEE International Conference on Signal and Image Processing, Beijing, China, 13–15 August 2016; pp. 123–127. [Google Scholar]

- Chen, S.H.; Chen, R.S. Vision-Based Distance Estimation for Multiple Vehicles Using Single Optical Camera Feature. In Proceedings of the International Conference on Innovations in Bio-inspired Computing and Applications, Shenzhen, Guangdong, China, 16–18 December 2011; pp. 9–12. [Google Scholar]

- Tram, V.T.B.; Yoo, M. Vehicle-to Vehicle Distance Estimation Using a Low-Resolution Camera Based on Visible Light Communications. IEEE Access 2018, 6, 4521–4527. [Google Scholar] [CrossRef]

- Truong, P.H.; Kim, S.I.; Jeong, G.M. Real-Time Estimation of Distance Traveled by Cart Using Smartphones. IEEE Sens. J. 2016, 16, 4149–4150. [Google Scholar] [CrossRef]

- Yoo, I.S.; Seo, S.W. Accurate Object Distance Estimation Based on Frequency-domain Analysis with a Stereo Camera. IET Intell. Transp. Syst. 2017, 11, 248–254. [Google Scholar] [CrossRef]

- Armstrong, J.; Yasar, S.; Neild, A. Visible Light Positioning: A Roadmap for International Standardization. IEEE Commun. Mag. 2013, 51, 68–73. [Google Scholar] [CrossRef]

- Le, N.T.; Jang, Y.M. Smart Color Channel Allocation for Visible Light Communication Cell ID. Opt. Switch. Netw. 2015, 15, 75–86. [Google Scholar] [CrossRef]

- Taylor, M.T.; Hranilovic, S. Angular Diversity Approach to Indoor Positioning Using Visible Light. In Proceedings of the IEEE Globecom Workshops, Atlanta, GA, USA, 9–13 December 2013; pp. 1093–1098. [Google Scholar]

- Wang, T.Q.; Sekercioglu, Y.A.; Neild, A.; Armstrong, J. Position Accuracy of Time-of-Arrival Based Ranging Using Visible Light with Application in Indoor Localization Systems. J. Lightwave Technol. 2013, 31, 3302–3308. [Google Scholar] [CrossRef]

- Do, T.H.; Yoo, M. TDOA-based Indoor Positioning Using Visible Light. Photonic Netw. Commun. 2014, 27, 80–88. [Google Scholar] [CrossRef]

- Akiyama, T.; Sugimoto, M.; Hashizume, H. Time-of-arrival-based Smartphone Localization Using Visible Light Communication. In Proceedings of the 2017 International Conference on Indoor Positioning and Indoor Navigation, Sapporo, Japan, 18–21 September 2017. [Google Scholar]

- Kim, Y.; Shin, Y.; Yoo, M. VLC-TDOA Using Sinusoidal Pilot Signal. In Proceedings of the IEEE International Conference on IT Convergence and Security, Macao, 16–18 December 2013; pp. 1–3. [Google Scholar]

- Lee, S.; Jung, S.Y. Location Awareness Using Angle-of Arrival Based Circular-PD-array for Visible Light Communication. In Proceedings of the 18th Asia-Pacific Conference on Communications, Jeju Island, Korea, 15–17 October 2012; pp. 480–485. [Google Scholar]

- Zhu, B.; Cheng, J.; Wang, Y.; Yan, J.; Wang, J. Three-Dimensional VLC Positioning Based on Angle Difference of Arrival with Arbitrary Tilting Angle of Receiver. IEEE J. Sel. Areas Commun. 2018, 36, 8–22. [Google Scholar] [CrossRef]

- Aswin, P.K.; Shyama, P.; Das, L.B. Indoor Localization Using Visible Light Communication and Image Processing. In Proceedings of the 2018 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 4–6 January 2018. [Google Scholar]

- Rahman, M.S.; Haque, M.M.; Kim, K.D. Indoor Positioning by LED Visible Light Communication and Image Sensors. Int. J. Electr. Comput. Eng. 2011, 1, 161–170. [Google Scholar] [CrossRef]

- Ifthekhar, M.S.; Mondal, R.K.; Le, N.-T.; Jang, Y.M. Simple Method for Indoor Localization in OCC Using Smart Phone Image Sensor. In Proceedings of the 2014 Sixth International Conference on Ubiquitous and Future Networks (ICUFN), Shanghai, China, 8–11 July 2014; pp. 55–58. [Google Scholar]

- Chae, Y.; Nguyen, D.T.; Park, S.; Park, Y. Indoor Localization Using Digital Auto Zoom of a Smart-phone Camera and Integrated Sensors. In Proceedings of the 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), Prague, Czech Republic, 3–6 July 2018; pp. 567–570. [Google Scholar]

- Zachár, G.; Vakulya, G.; Simon, G. Design of a VLC-based Beaconing Infrastructure for Indoor Localization Applications. In Proceedings of the 2017 IEEE International Instrumentation and Measurement Technology Conference, Torino, Italy, 22–25 May 2017. [Google Scholar]

- Dargie, W.; Poellabauer, C. Fundamentals of Wireless Sensor Networks: Theory and Practice; Wiley: Hoboken, NJ, USA, 2010. [Google Scholar]

- Viso Systems. Available online: http://www.visosystems.com (accessed on 11 July 2019).

- Xie, C.; Guan, W.; Wu, Y.; Fang, L.; Cai, Y. The LED-ID Detection and Recognition Method Based on Visible Light Positioning Using Proximity Method. IEEE Photonics J. 2018, 10, 1–6. [Google Scholar] [CrossRef]

- Mourcou, Q.; Fleury, A.; Franco, C.; Klopcic, F.; Vuillerme, N. Performance Evaluation of Smartphone Inertial Sensors Measurement for Range of Motion. Sensors 2015, 15, 23168–23187. [Google Scholar] [CrossRef] [PubMed]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).