Motion Segmentation Based on Model Selection in Permutation Space for RGB Sensors

Abstract

1. Introduction

- We propose a data grouping method that defines a similarity between data points and applies locality-sensitive hashing (LSH) to that similarity to group the points;

- We propose a model selection approach that combines energy minimization with the geometric robust information criterion (GRIC) to refine the model set produced by the data grouping;

- No prior knowledge, such as the number of motions, is required; the number of motions is estimated automatically during model selection.
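To make the LSH-based grouping in the first contribution more concrete, the following is a minimal sketch of bucket grouping with p-stable (Gaussian) hash functions of the form h(v) = floor((a · v + b) / w). It assumes each data point is already described by a numeric feature vector (for example, a row of a similarity matrix). The function names, the number of hash functions, and the bucket width `w` are illustrative assumptions and are not taken from the paper.

```python
import math
import random
from collections import defaultdict


def make_hash_functions(dim, num_hashes, bucket_width, seed=0):
    """Draw (a, b) pairs for h(v) = floor((a . v + b) / w)."""
    rng = random.Random(seed)
    functions = []
    for _ in range(num_hashes):
        a = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # Gaussian (2-stable) projection
        b = rng.uniform(0.0, bucket_width)             # random offset within one bucket
        functions.append((a, b))
    return functions


def lsh_key(vector, functions, bucket_width):
    """Concatenate the individual hash values into one bucket key."""
    key = []
    for a, b in functions:
        projection = sum(ai * vi for ai, vi in zip(a, vector))
        key.append(math.floor((projection + b) / bucket_width))
    return tuple(key)


def group_by_lsh(vectors, num_hashes=4, bucket_width=1.0, seed=0):
    """Return lists of point indices that share the same bucket key."""
    if not vectors:
        return []
    functions = make_hash_functions(len(vectors[0]), num_hashes, bucket_width, seed)
    buckets = defaultdict(list)
    for index, vector in enumerate(vectors):
        buckets[lsh_key(vector, functions, bucket_width)].append(index)
    return list(buckets.values())
```

Identical vectors always fall into the same bucket, while nearby vectors do so only with high probability, so a practical pipeline would typically treat these buckets as initial groups to be refined by later steps.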

2. Data Grouping in Permutation Space

2.1. Preference Analysis

2.2. Data Grouping by Locality-Sensitive Hashing (LSH)

3. Model Selection
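As an illustration of the criterion named in the contributions, the sketch below computes a GRIC score in the commonly cited form due to Torr: a truncated, noise-normalized residual term plus penalties on the model dimension and the parameter count. The constants (λ1 = ln r, λ2 = ln(rn), λ3 = 2) and the function signature are assumptions made for this example; the paper's exact settings may differ.

```python
import math


def gric(residuals, sigma, d, k, r=4):
    """GRIC score (lower is better), following the form given by Torr.

    residuals: per-point fitting errors e_i
    sigma:     assumed standard deviation of the measurement noise
    d:         dimension of the model manifold
    k:         number of model parameters
    r:         dimension of one data item (4 for a two-view correspondence)
    """
    n = len(residuals)
    lambda1 = math.log(r)
    lambda2 = math.log(r * n)
    lambda3 = 2.0
    cap = lambda3 * (r - d)  # upper bound on the cost of any single point
    data_term = sum(min((e / sigma) ** 2, cap) for e in residuals)
    return data_term + lambda1 * d * n + lambda2 * k
```

For two-view data, a fundamental matrix would typically be scored with `d=3, k=7` and a homography with `d=2, k=8`; the model with the lower score is preferred.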

4. Model Clustering

| Algorithm 1: Motion Segmentation Algorithm | |
|---|---|
| Input: | // dataset |
| Output: | // clusters of points belonging to the same model |
| 1: | = PermutationSpace () // get the similarity matrix |
| 2: | = LSH () // get the initial model set |
| 3: | Repeat |
| 4: | = RandomSampling () |
| 5: | = AscendSort () // sort in ascending order of the residuals and extract the top-10 hypotheses |
| 6: | = α-expansion () // select the best-quality hypothesis in each cluster |
| 7: | = GRIC () // select the model fitting the data best, where |
| 8: | Until is not changed. |
| 9: | = LinkageClustering (,) // n is the estimated number of motions, R is the residual information of the data points |
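Step 5 of the algorithm (sorting hypotheses by ascending residual and keeping the top 10) can be sketched as follows. The residual matrix layout, the function names, and the overlap-based similarity are illustrative assumptions; in particular, the overlap measure is a simple stand-in and not necessarily the similarity defined in the paper.

```python
def top_k_preferences(residuals, k=10):
    """For each point, return the indices of the k hypotheses with the smallest residuals.

    residuals[i][j] is the residual of point i with respect to hypothesis j.
    """
    preferences = []
    for row in residuals:
        order = sorted(range(len(row)), key=lambda j: row[j])  # ascending residual
        preferences.append(order[:k])
    return preferences


def preference_overlap(pref_a, pref_b):
    """Fraction of hypotheses shared by two top-k lists (illustrative similarity)."""
    if not pref_a or not pref_b:
        return 0.0
    shared = len(set(pref_a) & set(pref_b))
    return shared / min(len(pref_a), len(pref_b))


# Three points scored against four hypotheses.
residuals = [
    [0.1, 0.9, 0.5, 2.0],
    [0.2, 0.8, 0.4, 3.0],
    [1.5, 0.05, 2.5, 0.3],
]
prefs = top_k_preferences(residuals, k=2)
```

Here the first two points prefer the same hypotheses and so have full overlap, while the third point shares none with them, which is the kind of signal a grouping step can exploit.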

5. Experiments

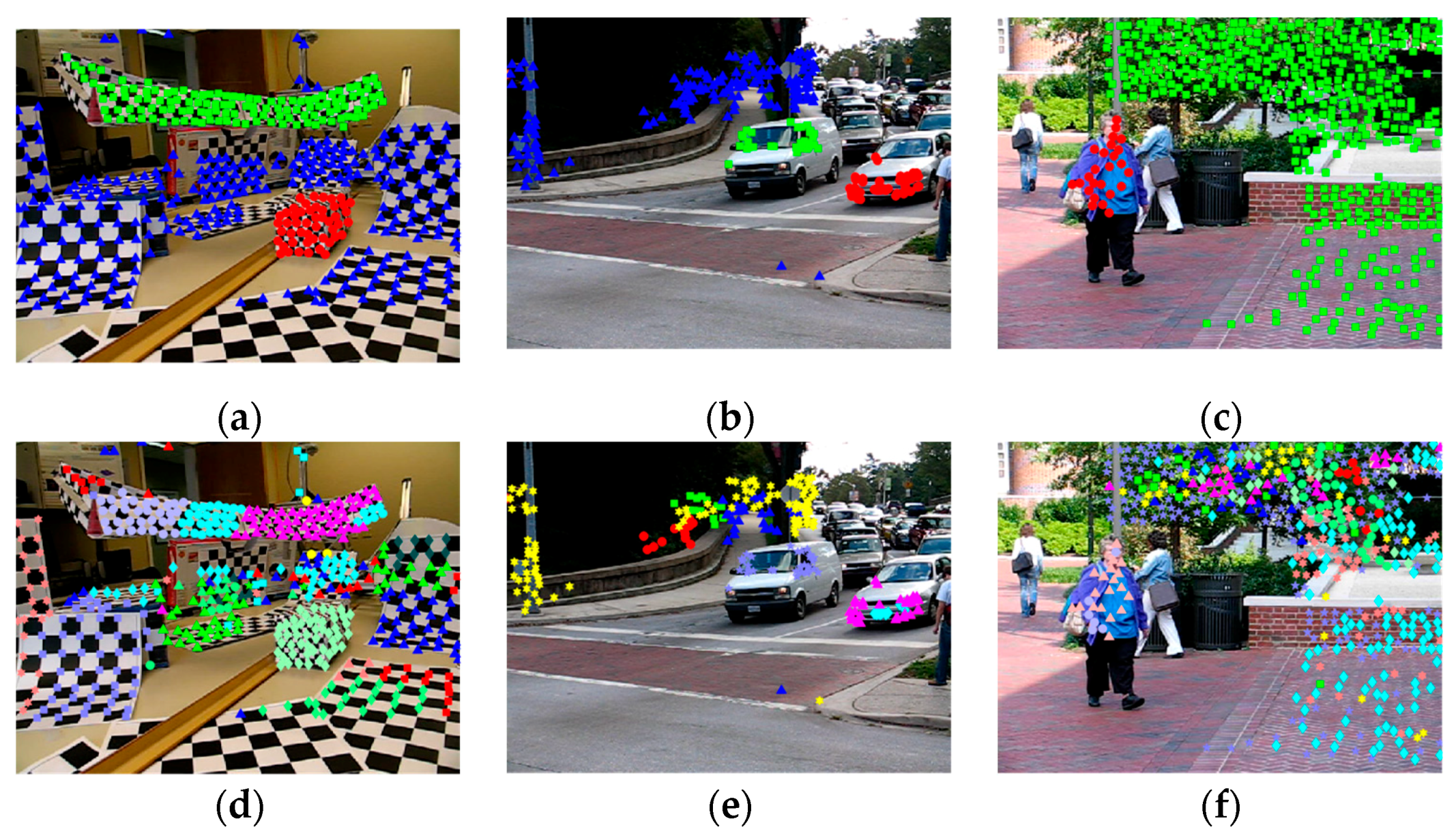

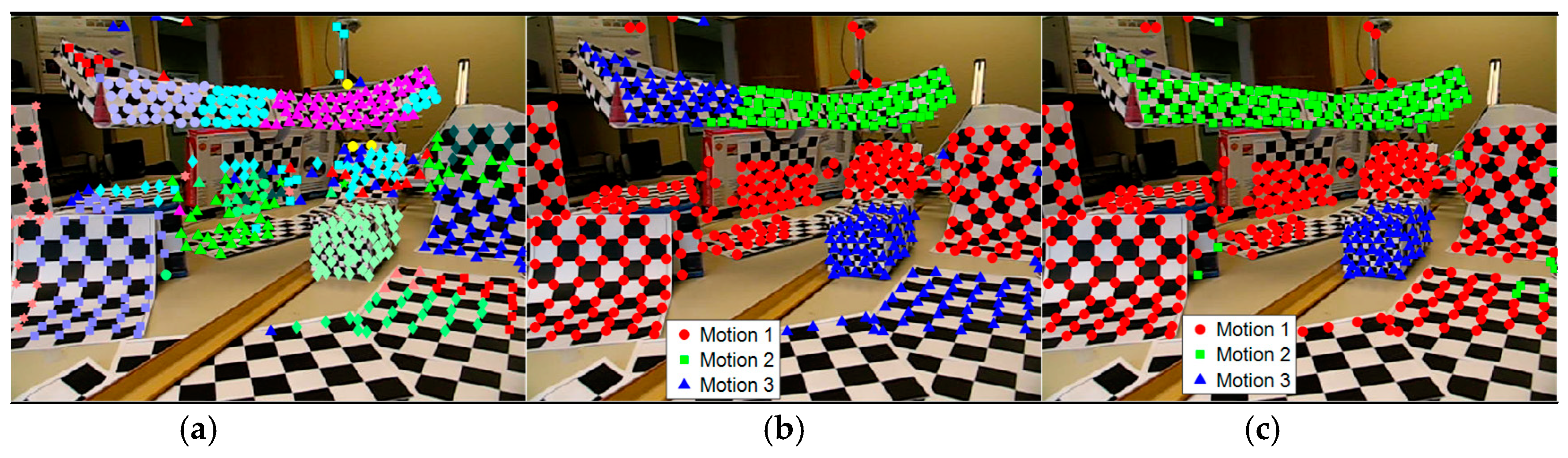

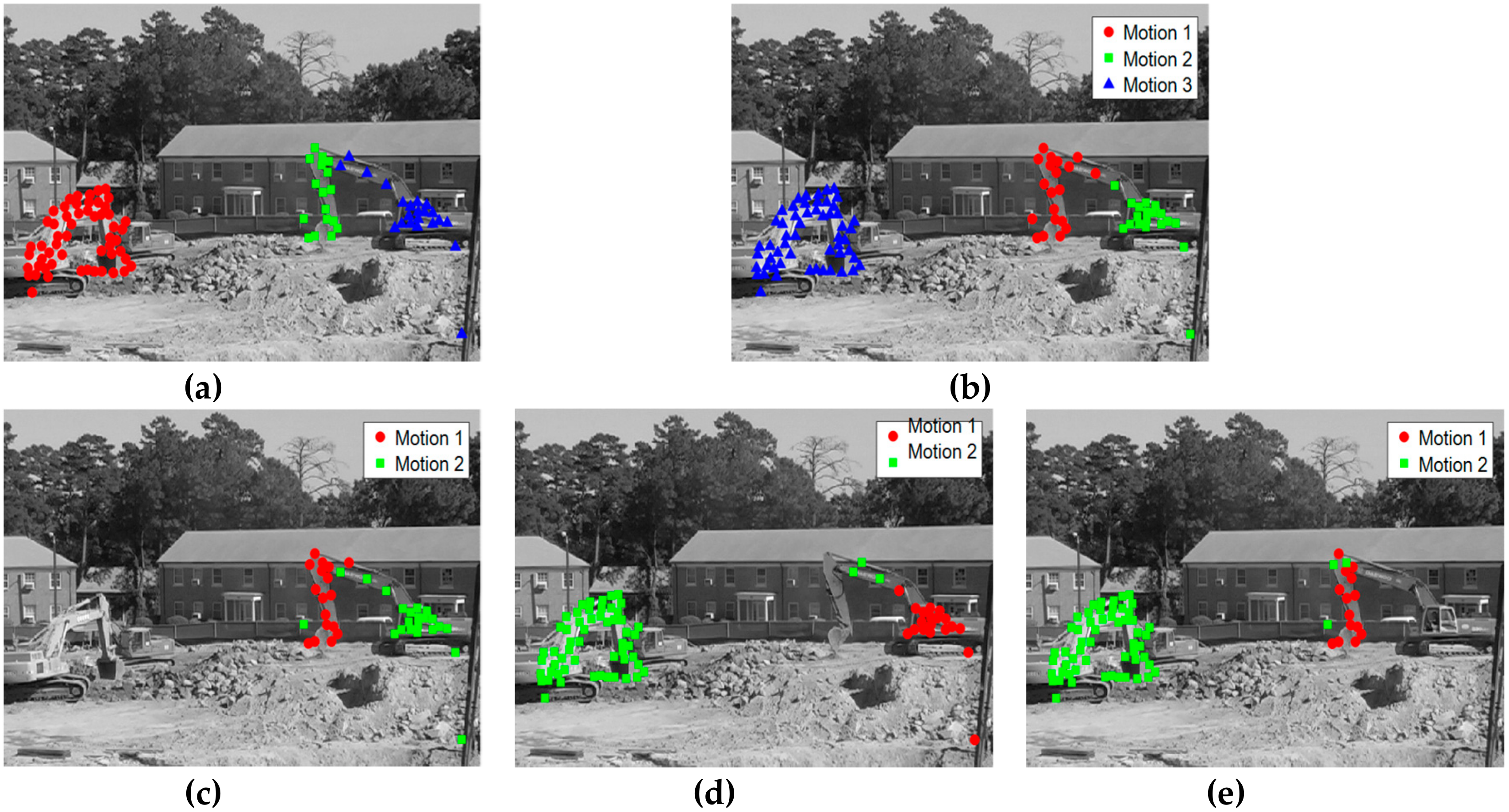

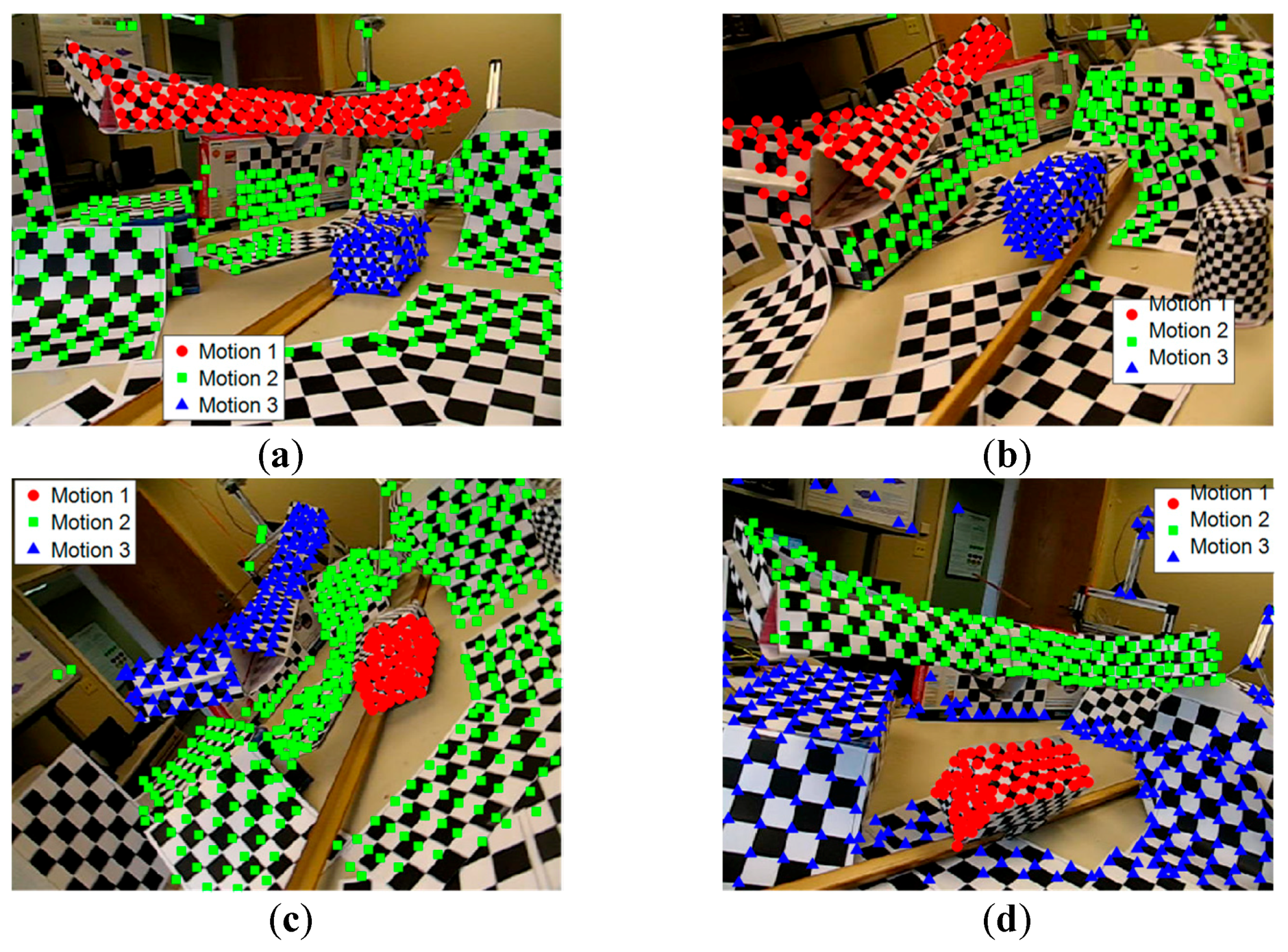

5.1. Results of the Hopkins 155 Dataset

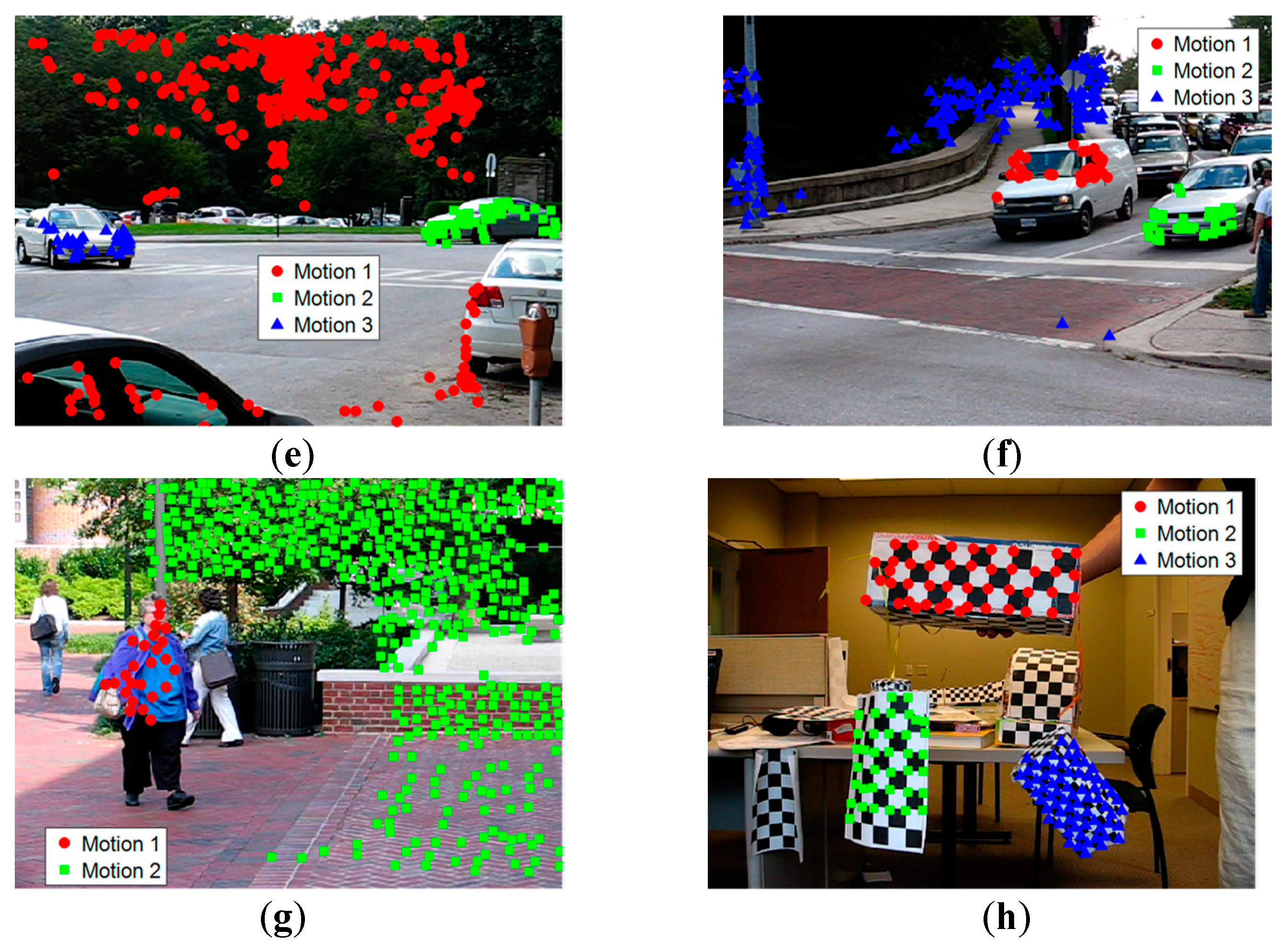

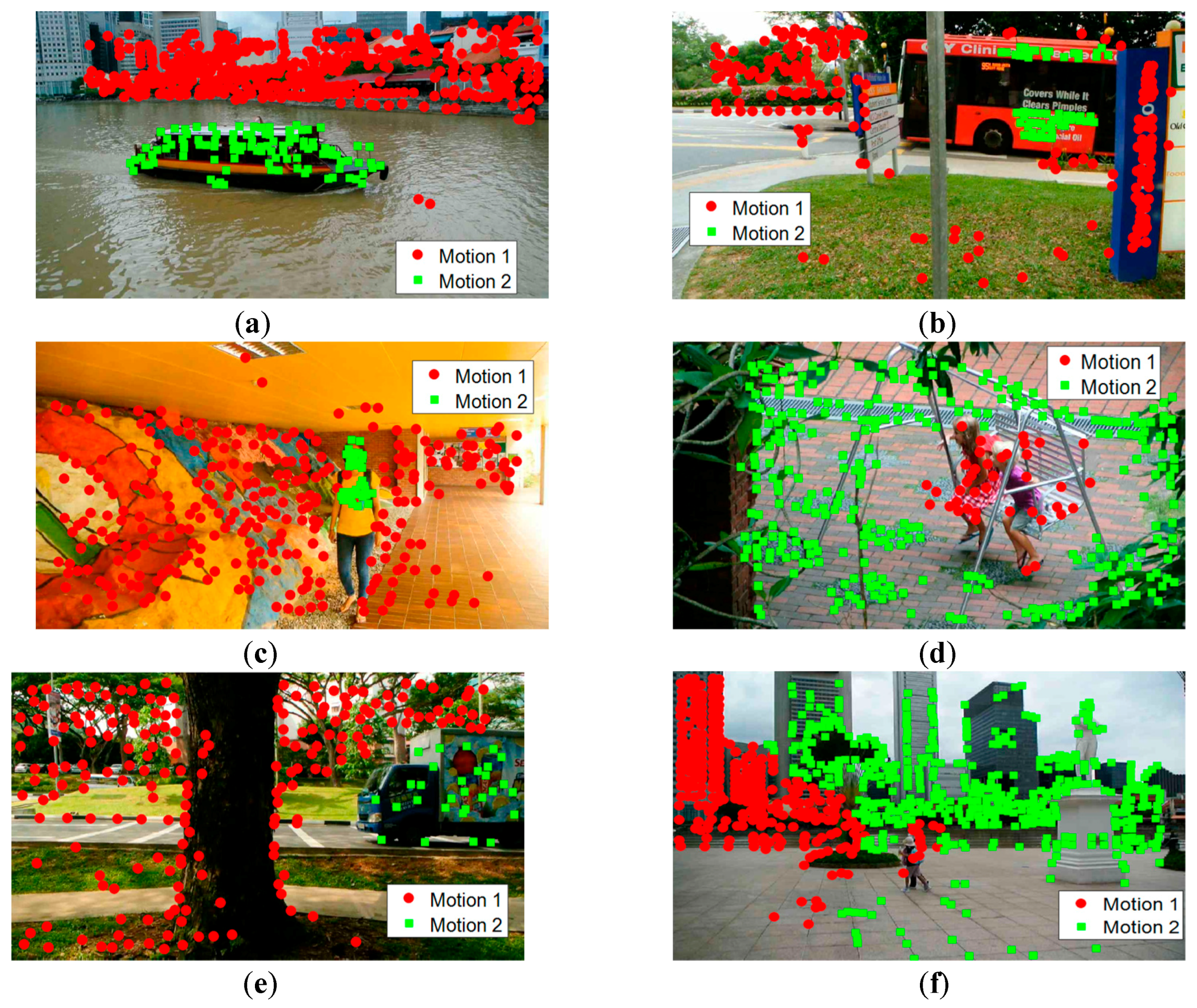

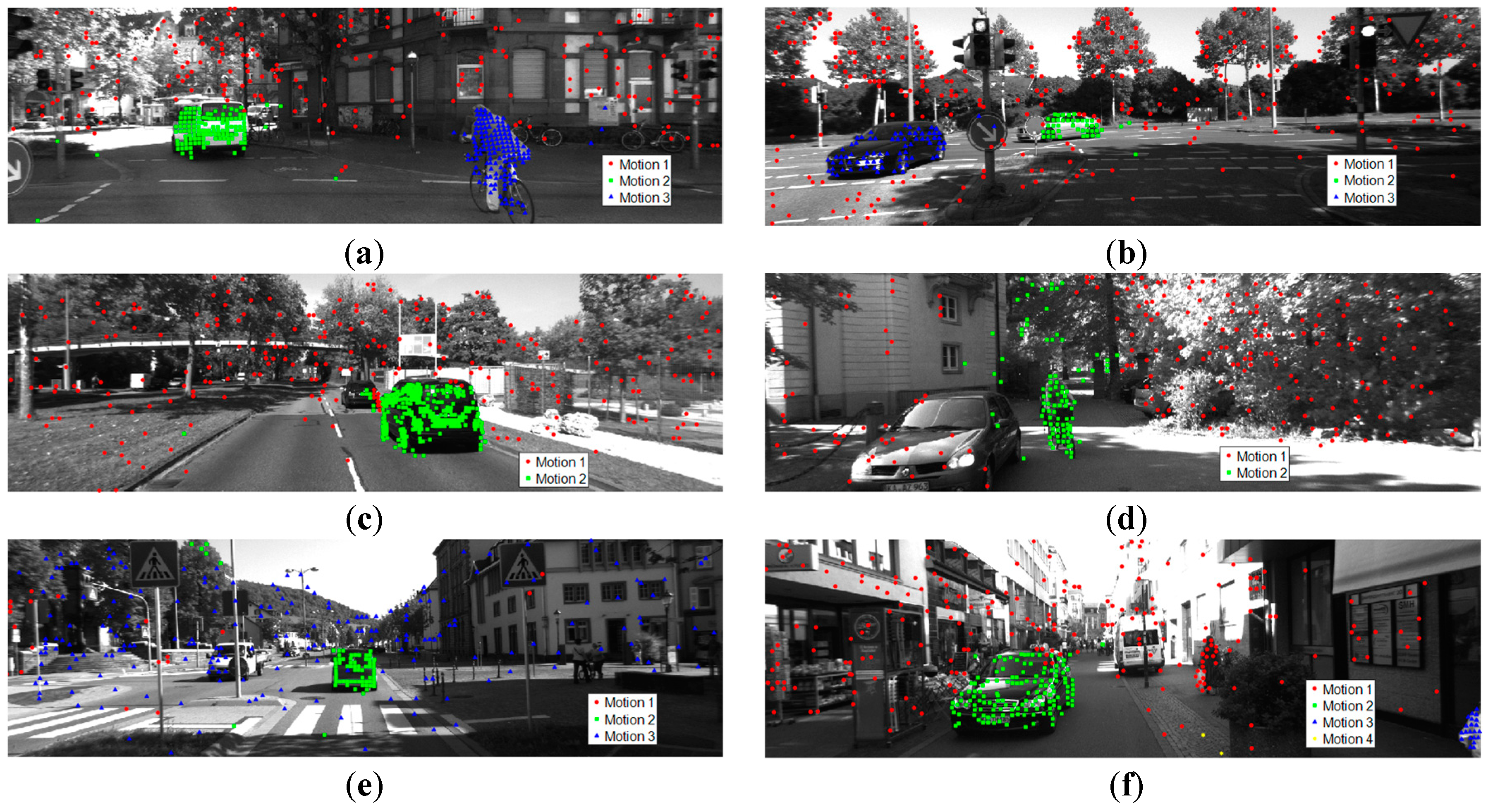

5.2. Results of the Real-World Dataset

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Methods | RANSAC | GPCA | LSA 4n | ALC 5 | SSC | J-Lnkg | T-Lnkg | Proposed |
|---|---|---|---|---|---|---|---|---|
| Checkerboard: 26 sequences | | | | | | | | |
| Mean | 25.78 | 31.95 | 5.80 | 6.78 | 2.97 | 8.55 | 7.05 | 0.17 |
| Median | 26.00 | 32.93 | 1.77 | 0.92 | 0.27 | 4.38 | 2.46 | 0.00 |
| Traffic: 7 sequences | | | | | | | | |
| Mean | 12.83 | 19.83 | 25.07 | 4.01 | 0.58 | 0.97 | 0.48 | 0.08 |
| Median | 11.54 | 19.55 | 23.79 | 1.35 | 0.00 | 0.00 | 0.00 | 0.00 |
| Articulated: 2 sequences | | | | | | | | |
| Mean | 21.38 | 16.85 | 7.25 | 7.25 | 1.42 | 9.04 | 7.97 | 1.65 |
| Median | 21.38 | 16.85 | 7.25 | 7.25 | 0.00 | 9.04 | 7.97 | 1.65 |
| All: 35 sequences | | | | | | | | |
| Mean | 22.94 | 28.66 | 9.73 | 6.26 | 2.45 | 7.06 | 5.78 | 0.24 |
| Median | 22.03 | 28.26 | 2.33 | 1.02 | 0.20 | 0.73 | 0.58 | 0.00 |

| Methods | RANSAC | GPCA | LSA 4n | ALC 5 | SSC | J-Lnkg | T-Lnkg | Proposed |
|---|---|---|---|---|---|---|---|---|
| Checkerboard: 78 sequences | | | | | | | | |
| Mean | 6.52 | 6.09 | 2.57 | 2.56 | 1.12 | 1.20 | 7.05 | 0.02 |
| Median | 1.75 | 1.03 | 0.27 | 0.00 | 0.00 | 0.00 | 2.46 | 0.00 |
| Traffic: 31 sequences | | | | | | | | |
| Mean | 2.55 | 1.41 | 5.43 | 2.83 | 0.02 | 0.70 | 0.02 | 0.00 |
| Median | 0.21 | 0.00 | 1.48 | 0.30 | 0.00 | 0.00 | 0.00 | 0.00 |
| Articulated: 11 sequences | | | | | | | | |
| Mean | 7.25 | 2.88 | 4.10 | 6.90 | 0.62 | 0.82 | 7.97 | 0.82 |
| Median | 2.64 | 0.00 | 0.22 | 0.89 | 0.00 | 0.00 | 7.97 | 0.00 |
| All: 120 sequences | | | | | | | | |
| Mean | 5.56 | 4.59 | 3.45 | 3.03 | 0.82 | 1.62 | 0.86 | 0.09 |
| Median | 1.18 | 0.38 | 0.59 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| Methods | MTPV62: Perspective (9 clips) | MTPV62: Missing Data (12 clips) | MTPV62: Hopkins (50 clips) | MTPV62: All (62 clips) | KT3DMoSeg: Average | KT3DMoSeg: Median |
|---|---|---|---|---|---|---|
| LSA | - | - | - | - | 38.30 | 38.58 |
| GPCA | 40.83 | 28.77 | 16.20 | 16.58 | 34.60 | 33.95 |
| ALC | 0.35 | 0.43 | 18.28 | 14.88 | 24.31 | 19.04 |
| SSC | 9.68 | 17.22 | 2.01 | 5.17 | 33.88 | 33.54 |
| TPV | 0.46 | 0.91 | 2.78 | 2.37 | - | - |
| LRR | - | - | - | - | 33.67 | 36.01 |
| MSSC | - | 0.65 | 0.65 | 0.65 | - | - |
| Proposed | - | 3.36 | 0.16 | 0.78 | 23.69 | 23.97 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhao, X.; Qin, Q.; Luo, B. Motion Segmentation Based on Model Selection in Permutation Space for RGB Sensors. Sensors 2019, 19, 2936. https://doi.org/10.3390/s19132936
