Learning the Orientation of a Loosely-Fixed Wearable IMU Relative to the Body Improves the Recognition Rate of Human Postures and Activities

Abstract

1. Introduction

1.1. Multiple Sensors or a Single Sensor?

1.2. Smartphone-Based Human Activity Recognition

1.2.1. Fixed-to-the-Body

1.2.2. Body-Position-Dependent

1.2.3. Body-Position-Independent

1.3. Extracting Information for Activity Recognition

1.3.1. Accounting for Variability in Device Orientation and Position

1.3.2. Feature Engineering and Classification

1.3.3. Deep Learning/Deep Neural Networks

1.4. Contribution

2. Materials

3. Methods

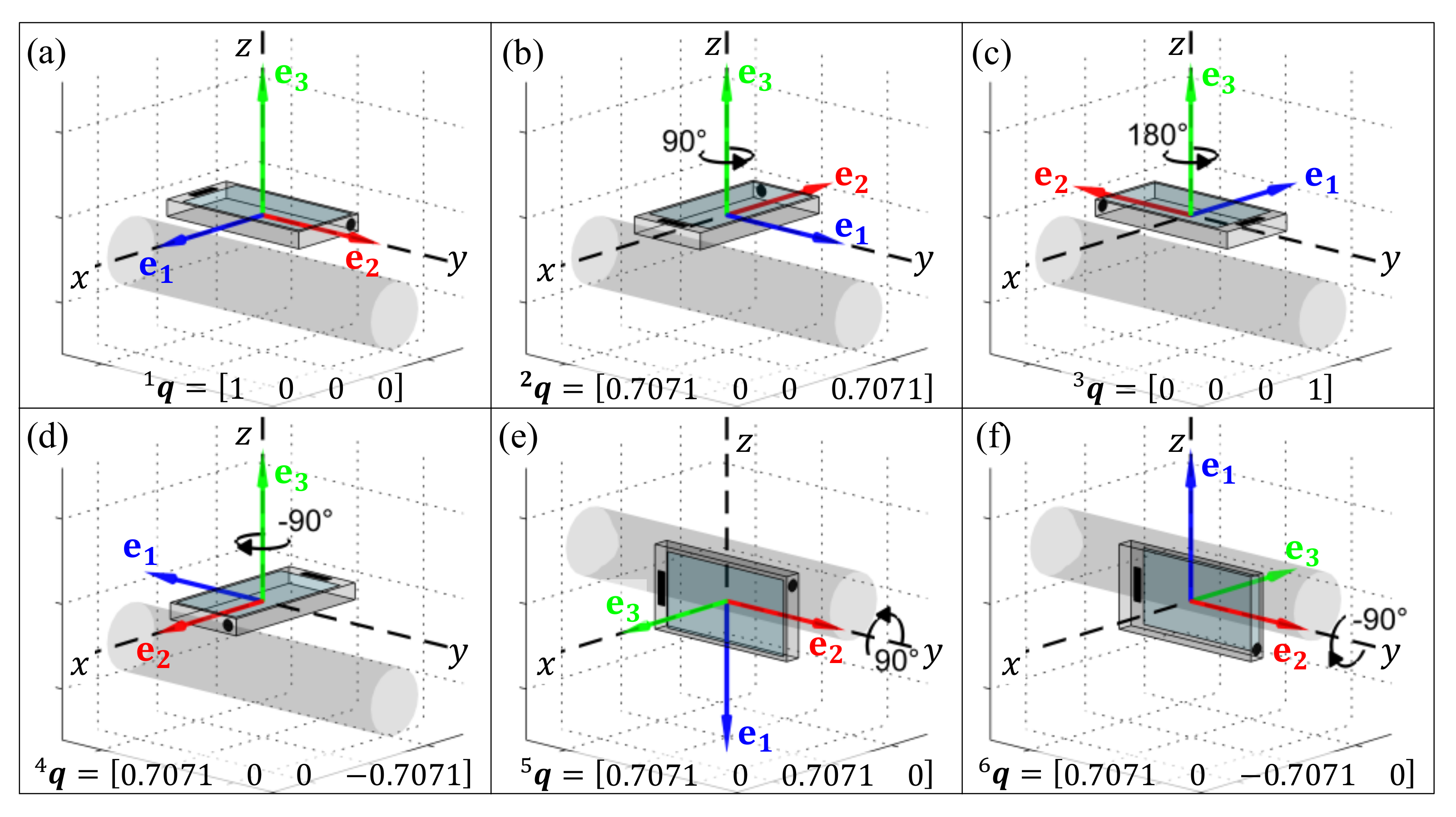

3.1. Generating Data Representative of Different Orientations

3.2. Estimating the Orientation of the IMU

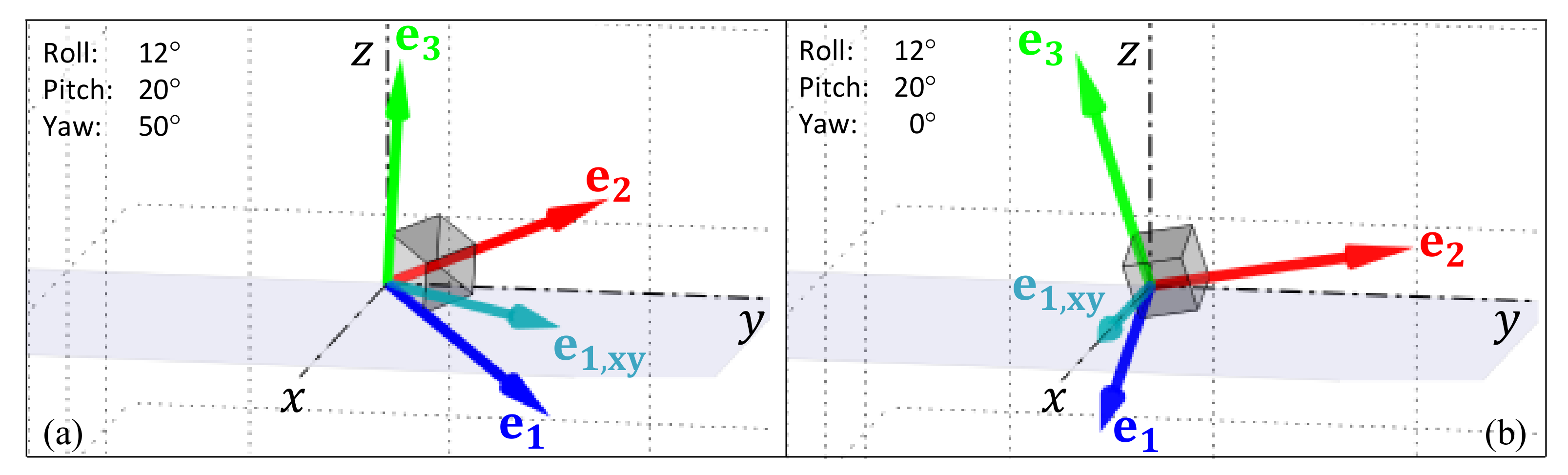

3.2.1. Removing the Heading from the Estimated Orientation

3.2.2. Smoothing the Estimated Orientation

4. Feature Extraction

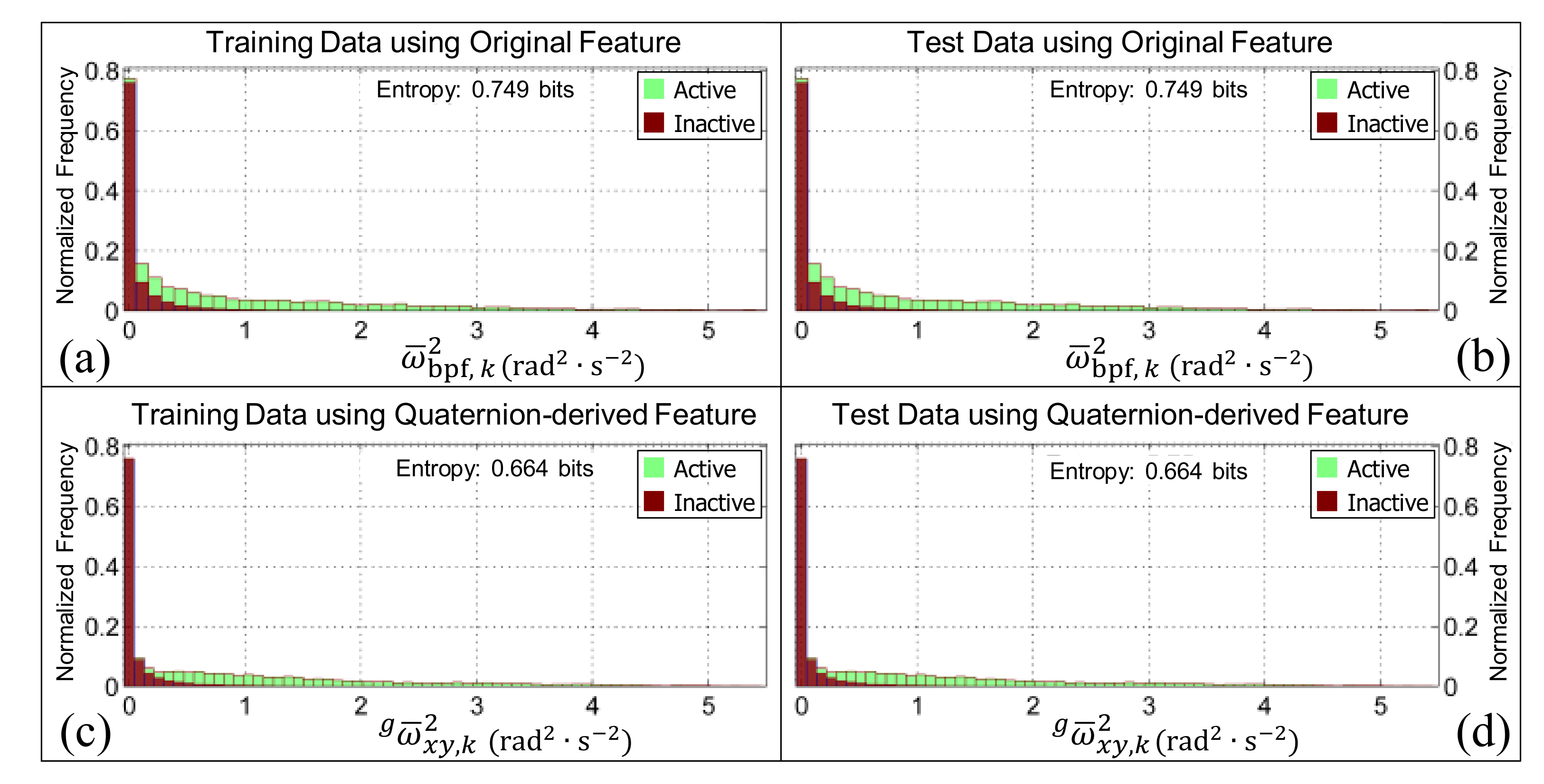

4.1. Squared Magnitude of Pitch/Roll Angular Velocity

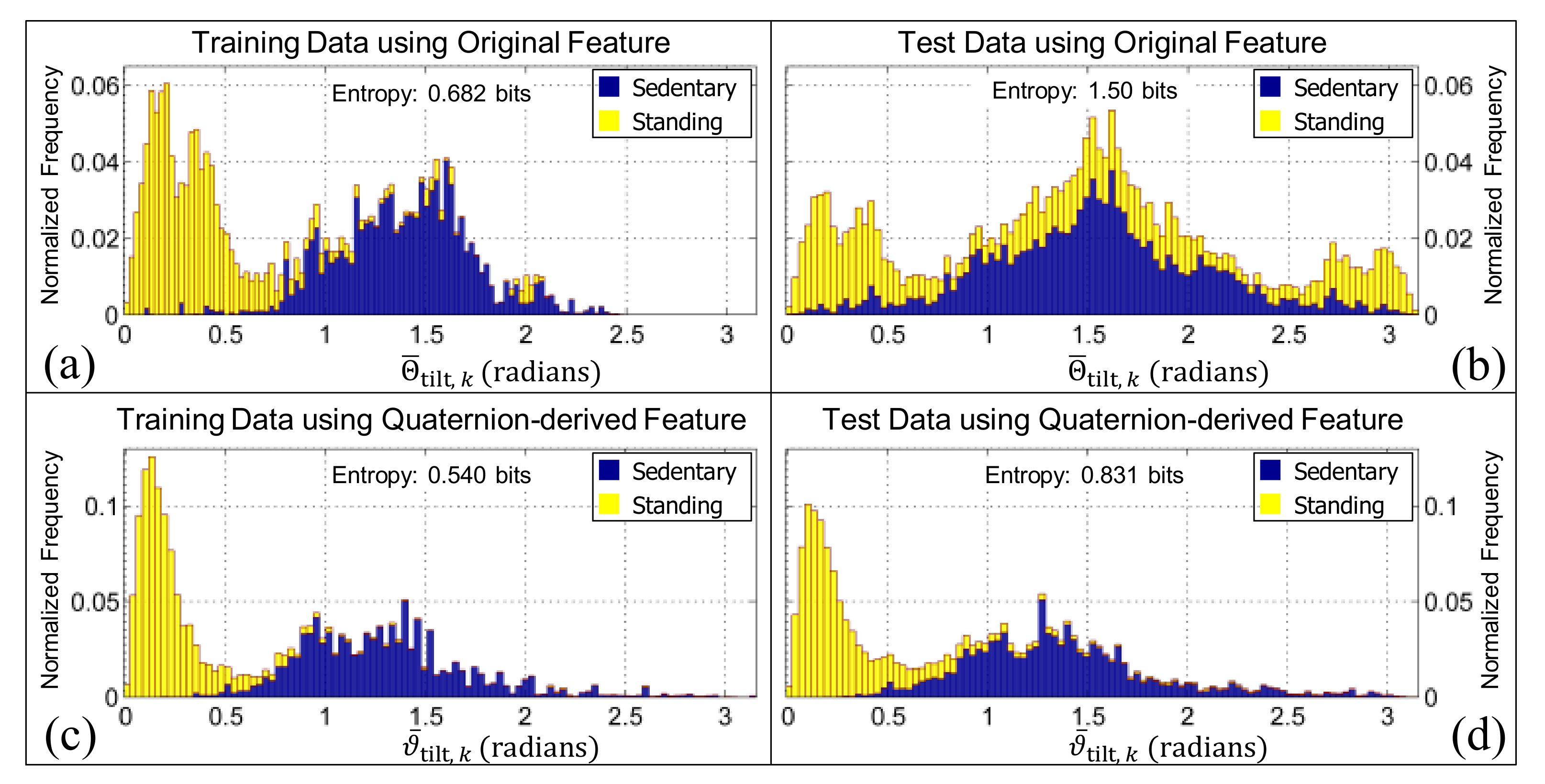

4.2. Detecting Sedentary Periods

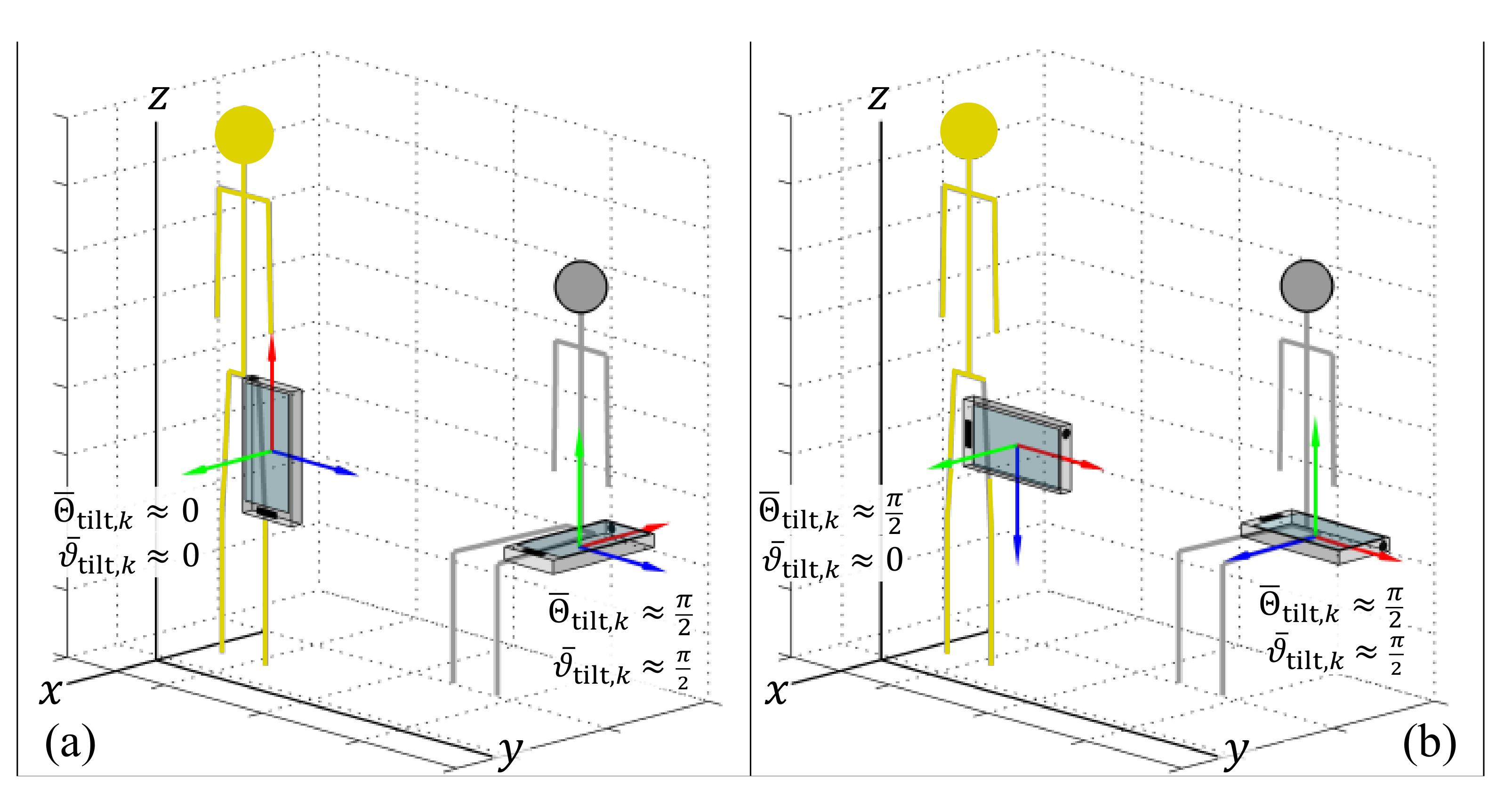

4.2.1. Estimate the Upright Orientation using the Orientation during Walking Periods

4.2.2. Calculate the Shortest Rotation between the Upright Orientation and the Average Recent Orientation

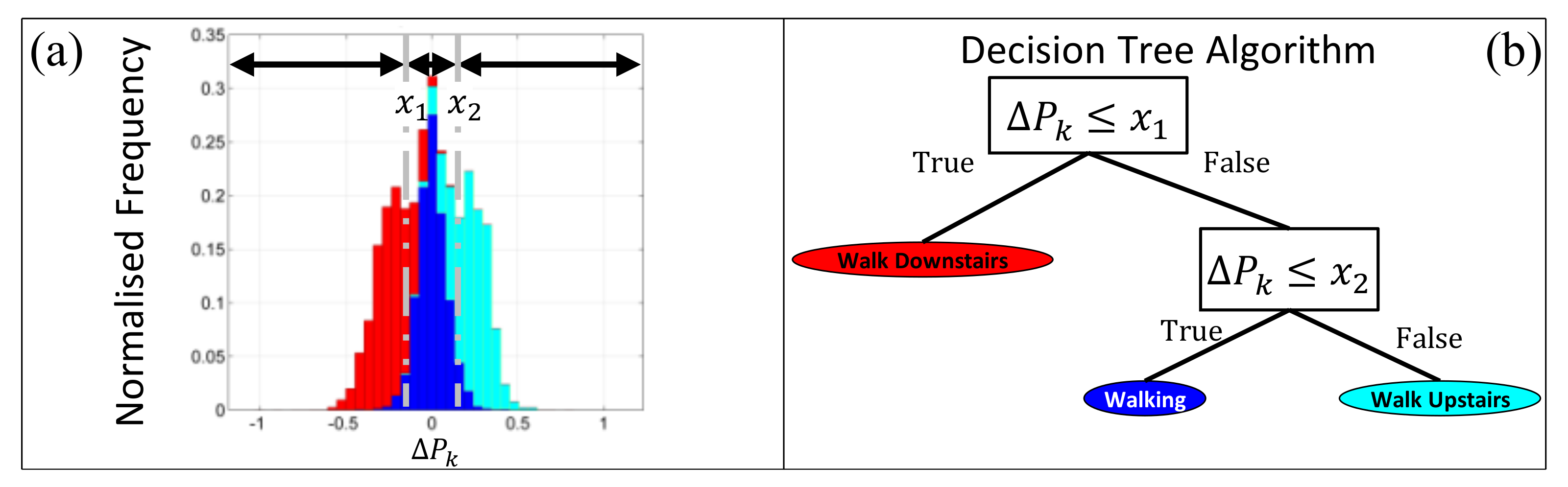

4.3. Estimating Velocity in the Vertical Direction of the GFR

4.3.1. Process Model

4.3.2. Observation Model

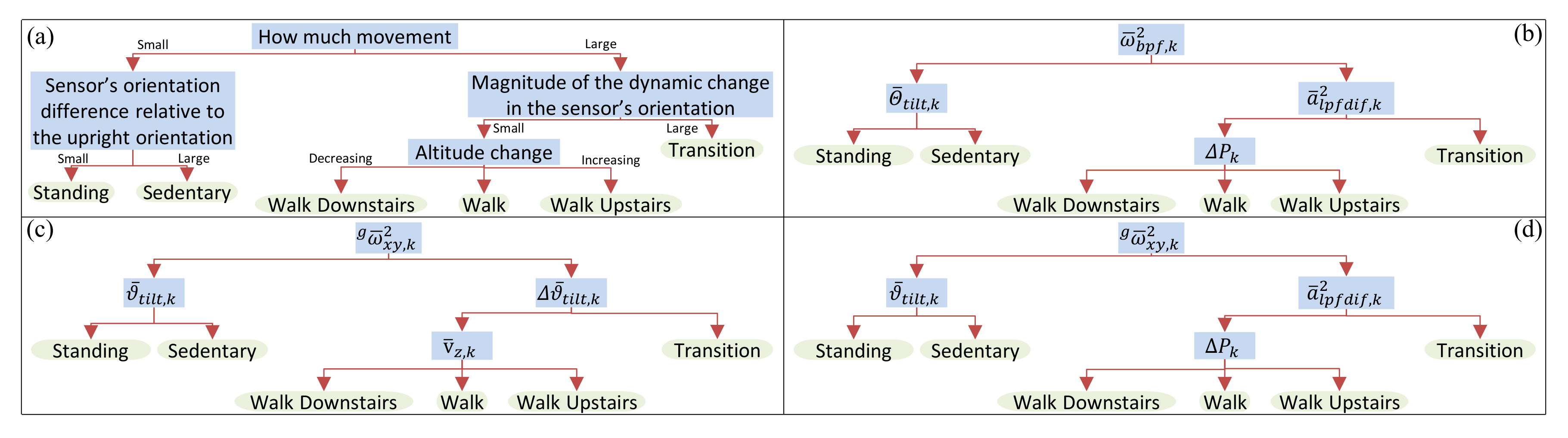

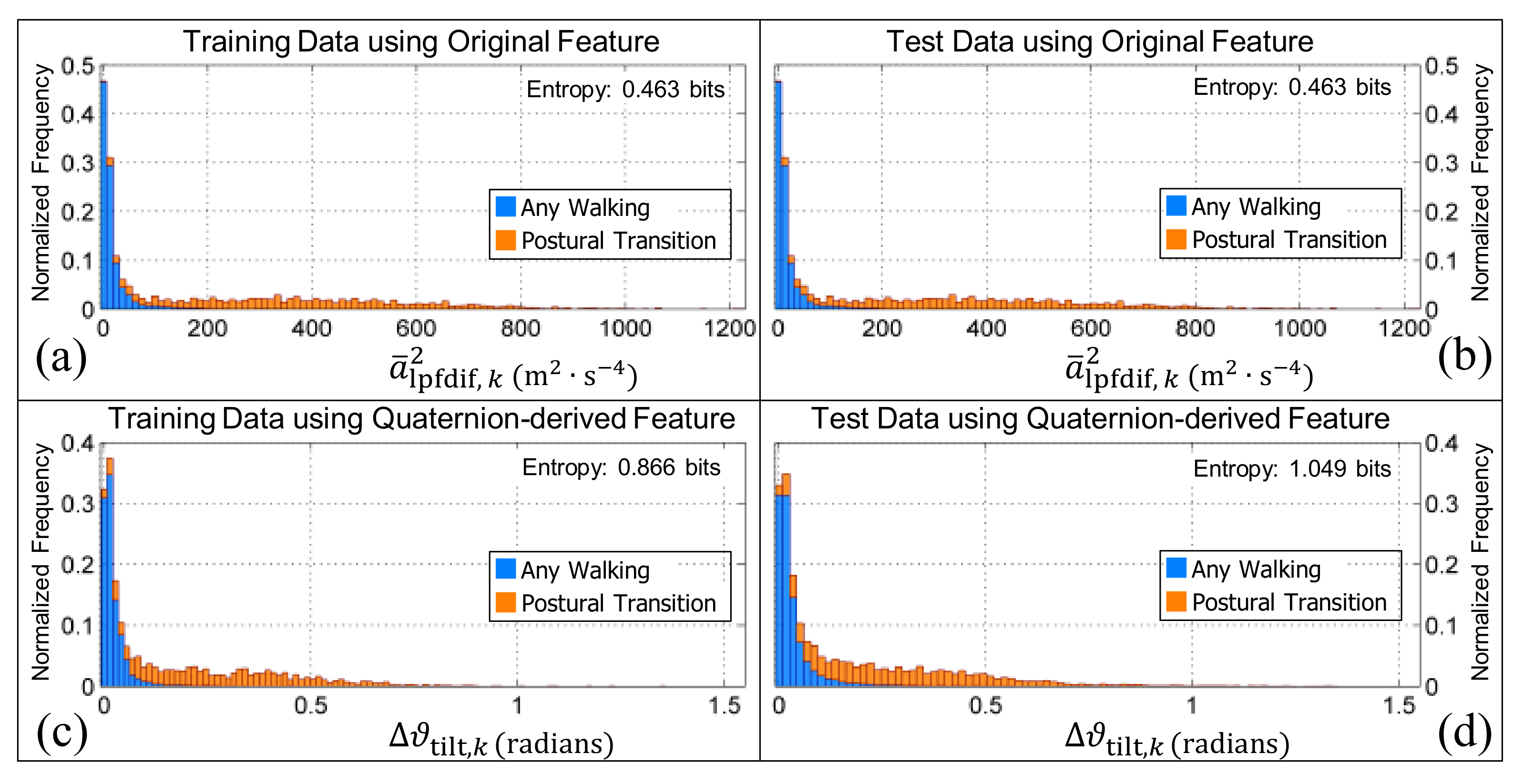

5. Hierarchical Description of Human Activity

6. Models and Performance Metrics

6.1. Performance at a High Sampling Rate

6.2. Translating Performance to Different Sampling Rates

7. Results and Discussion

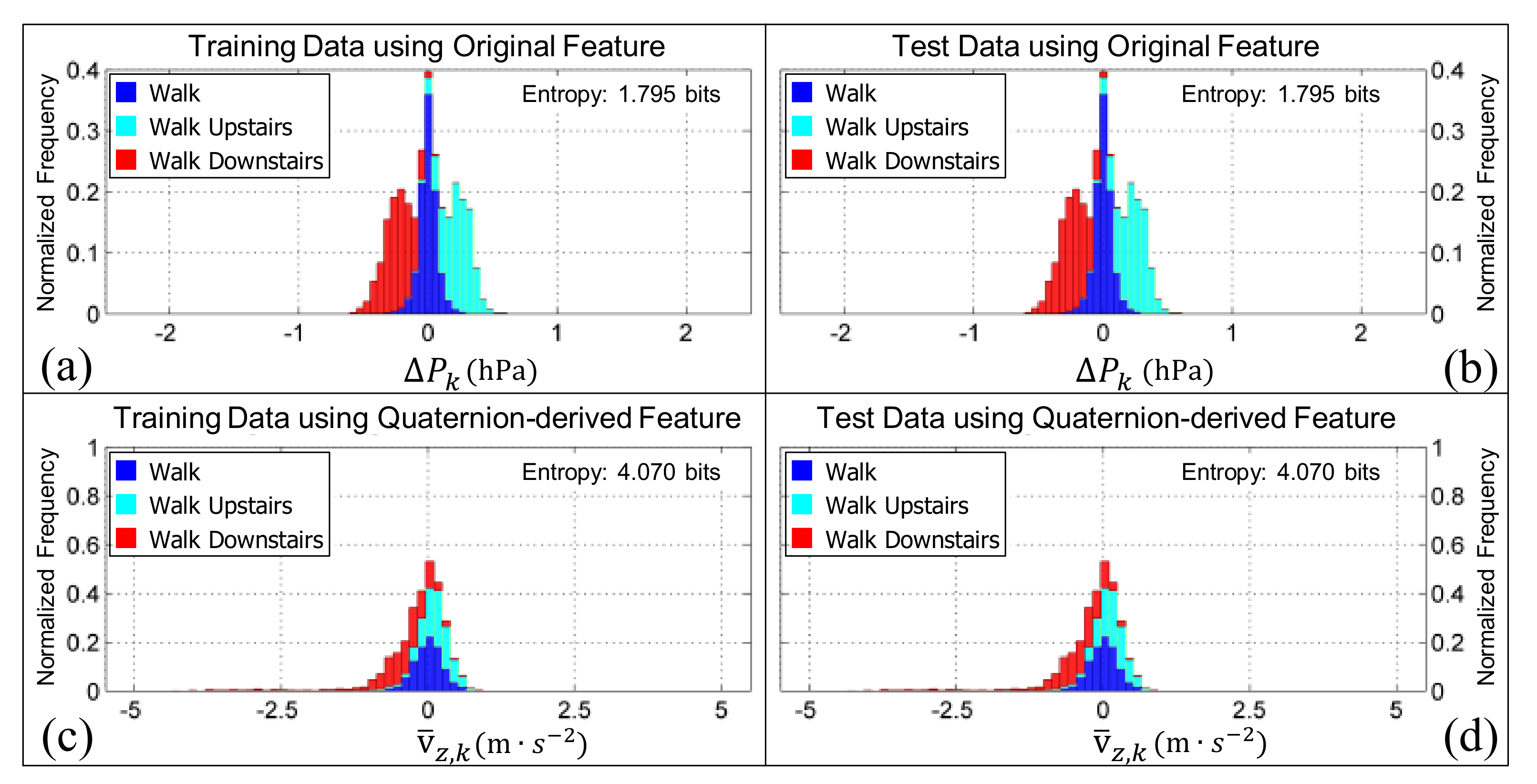

7.1. Comparing Features Using Shannon Entropy

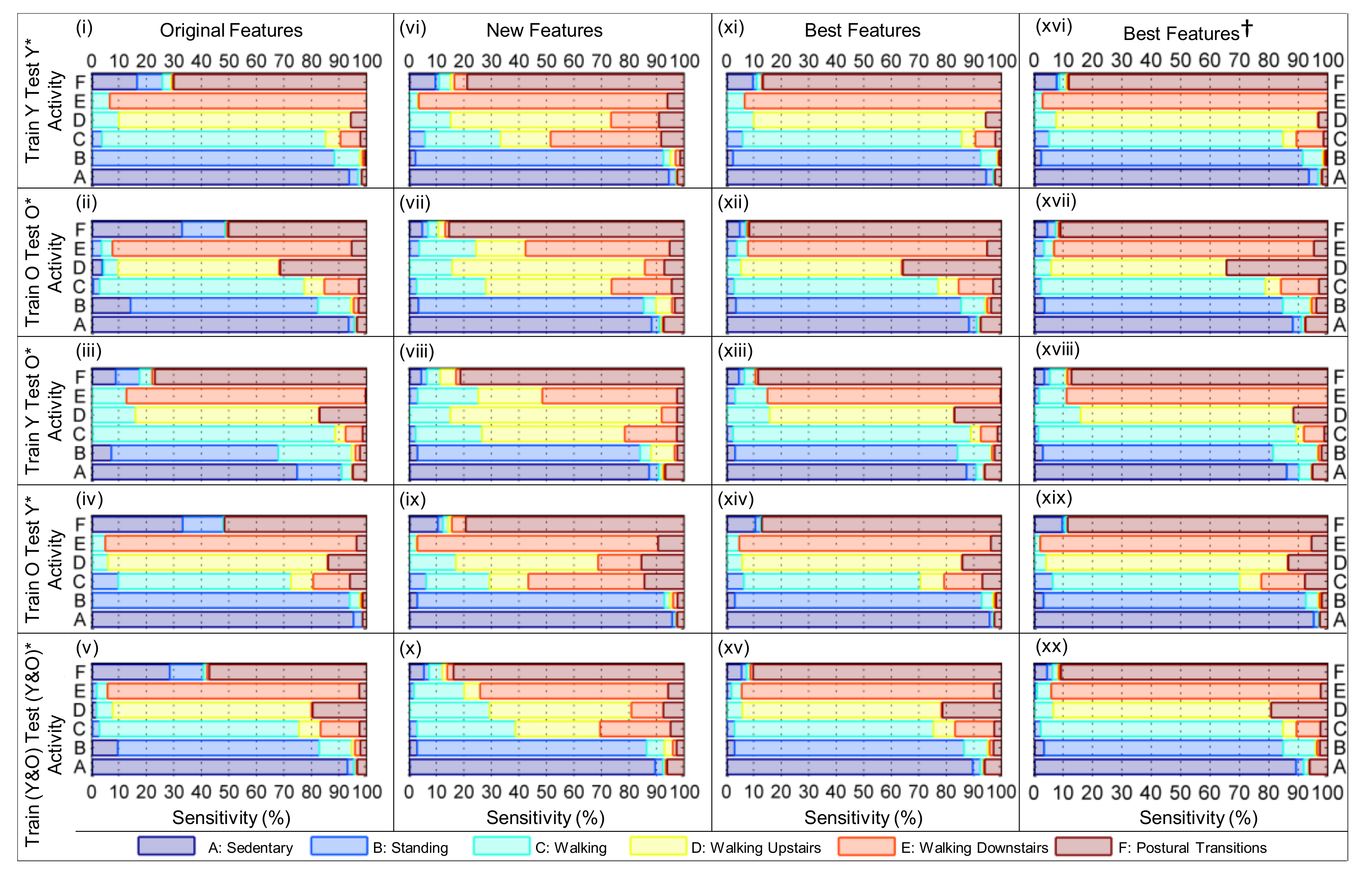

7.2. Comparing the Overall Performance of Models for HAR

7.3. Identifying Which Features Drive Model Performance

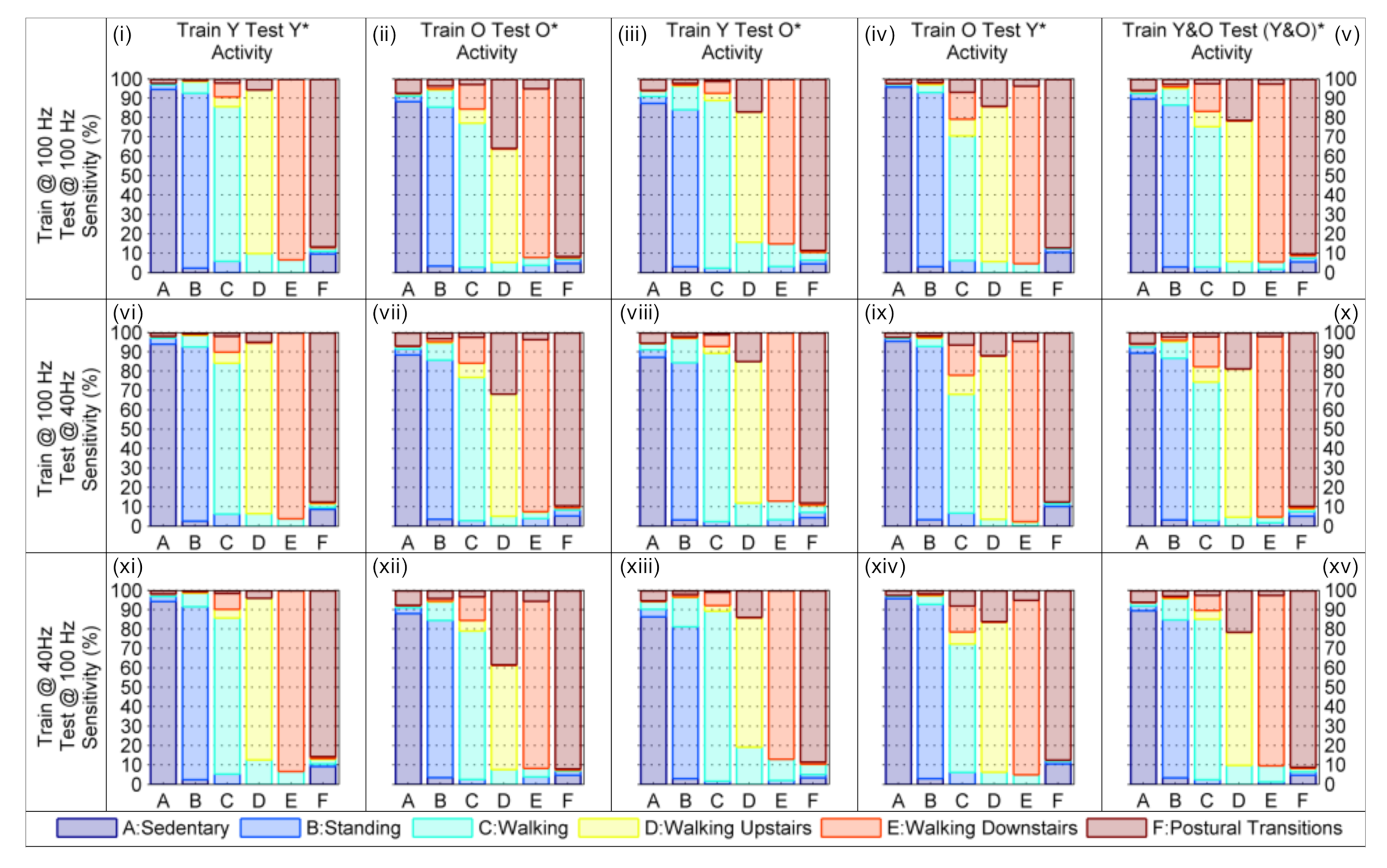

7.4. Comparing Model Performance at Different Sampling Rates

7.5. Comparison to the State-of-the-Art

8. Limitations

9. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Average of Multiple Quaternions
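
As a point of reference for this appendix, the following is a minimal sketch of one widely used way to average unit quaternions: take the eigenvector associated with the largest eigenvalue of the summed outer products. It is an assumption that this matches the procedure used here; the function name `average_quaternions` and the `[w, x, y, z]` component ordering are illustrative choices, not taken from the article.

```python
import numpy as np

def average_quaternions(quats):
    """Average unit quaternions given as rows of [w, x, y, z].

    Illustrative eigenvector-based average (an assumption, not necessarily
    the method of this appendix).
    """
    q = np.asarray(quats, dtype=float)
    q = q / np.linalg.norm(q, axis=1, keepdims=True)  # enforce unit norm
    # Sum of outer products q q^T; q and -q contribute identically, so the
    # sign ambiguity of quaternions does not affect the result.
    m = q.T @ q
    eigvals, eigvecs = np.linalg.eigh(m)  # eigenvalues in ascending order
    avg = eigvecs[:, -1]                  # eigenvector of the largest eigenvalue
    return avg if avg[0] >= 0 else -avg   # choose the representative with w >= 0

# Example: averaging the identity and a 90-degree rotation about z
# should give a 45-degree rotation about z.
q_identity = [1.0, 0.0, 0.0, 0.0]
q_z90 = [np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)]
q_mean = average_quaternions([q_identity, q_z90])
```

Working on the outer-product matrix avoids having to flip signs so that all quaternions lie in the same hemisphere before averaging.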

Appendix B. Shortest Rotation Between Two Quaternions
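
The idea named in this appendix can be sketched as follows, assuming Hamilton-convention unit quaternions stored as `[w, x, y, z]`. The relative rotation is formed from the conjugate of one quaternion and the other, and the sign is chosen so that the scalar part is non-negative, which bounds the rotation angle to [0, π]. The helper names (`quat_conj`, `quat_mul`, `shortest_rotation`) and the frame convention are assumptions for illustration only.

```python
import numpy as np

def quat_conj(q):
    """Conjugate of a quaternion [w, x, y, z]."""
    w, x, y, z = q
    return np.array([w, -x, -y, -z], dtype=float)

def quat_mul(a, b):
    """Hamilton product a ⊗ b of two quaternions [w, x, y, z]."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return np.array([
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    ])

def shortest_rotation(q_from, q_to):
    """Return (q_rel, angle): the relative rotation with angle in [0, pi]."""
    q_rel = quat_mul(quat_conj(q_from), q_to)
    q_rel = q_rel / np.linalg.norm(q_rel)
    if q_rel[0] < 0:          # q and -q encode the same rotation;
        q_rel = -q_rel        # w >= 0 selects the shorter arc
    angle = 2.0 * np.arccos(np.clip(q_rel[0], -1.0, 1.0))
    return q_rel, angle

# Example: a 270-degree rotation about z is reported as 90 degrees.
q_a = np.array([1.0, 0.0, 0.0, 0.0])
q_b = np.array([np.cos(3 * np.pi / 4), 0.0, 0.0, np.sin(3 * np.pi / 4)])
q_rel, angle = shortest_rotation(q_a, q_b)
```

The angle does not depend on which frame convention is used for the relative quaternion, which is why it is a convenient scalar feature.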

Appendix C. Ninety-five Percent Confidence Intervals for the Class Sensitivity and Class Specificity of the Hierarchical Models of Human Activity

| Train/Test | Activity | Sensitivity (%) | | | Specificity (%) | | |
|---|---|---|---|---|---|---|---|
| | | Original Features | New Features | Best Features | Original Features | New Features | Best Features |
| Train Y, Test Y * | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transitions | | | | | | |
| Train O, Test O * | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transitions | | | | | | |
| Train Y, Test O * | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transitions | | | | | | |
| Train O, Test Y * | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transitions | | | | | | |
| Train Y&O, Test (Y&O) * | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transitions | | | | | | |

| Train/Test | Activity | Sensitivity (%) | | | Specificity (%) | | |
|---|---|---|---|---|---|---|---|
| | Train sampling rate | 100 Hz | 100 Hz | 40 Hz | 100 Hz | 100 Hz | 40 Hz |
| | Test sampling rate | 100 Hz | 40 Hz | 100 Hz | 100 Hz | 40 Hz | 100 Hz |
| Train Y, Test Y | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transition | | | | | | |
| Train O, Test O | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transition | | | | | | |
| Train Y, Test O | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transition | | | | | | |
| Train O, Test Y | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transition | | | | | | |
| Train Y&O, Test (Y&O) | Sedentary | | | | | | |
| | Standing | | | | | | |
| | Walking | | | | | | |
| | Walking Upstairs | | | | | | |
| | Walking Downstairs | | | | | | |
| | Postural Transition | | | | | | |

| Sampling Rate | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 100 Hz | 0.01 | 0.1 | 0.99 | 7 | 49 | 1 | 7 | 5 |
| 40 Hz | 0.01 | 0.1 | 0.99 | 3 | 19 | 1 | 3 | 5 |

| No. | Feature | Description |
|---|---|---|
| (1) | | average squared band-pass-filtered angular velocity |
| (2) | | average inclination angle |
| (3) | | average squared band-pass-filtered acceleration |
| (4) | | average differential pressure |
| (5) | | average squared pitch/roll angular velocity |
| (6) | | average of the shortest rotation between the upright and average orientations |
| (7) | | change in the shortest rotation between the upright and average orientations |
| (8) | | average velocity in the vertical direction of the estimated GFR |

| Cohen’s Kappa (κ) | | | | | |
|---|---|---|---|---|---|
| Train | Test | Original Features | New Features | Best Features | Best Features |
| Y | Y | | | | |
| O | O | | | | |
| Y | O | | | | |
| O | Y | | | | |
| Y&O | (Y&O) | | | | |
| Total Class Sensitivity (%) | | | | | |
| Train | Test | Original Features | New Features | Best Features | Best Features |
| Y | Y | | | | |
| O | O | | | | |
| Y | O | | | | |
| O | Y | | | | |
| Y&O | (Y&O) | | | | |

| Train | Test | Cohen’s Kappa (κ) | | | Total Class Sensitivity (%) | | |
|---|---|---|---|---|---|---|---|
| | Train sampling rate | 100 Hz | 100 Hz | 40 Hz | 100 Hz | 100 Hz | 40 Hz |
| | Test sampling rate | 100 Hz | 40 Hz | 100 Hz | 100 Hz | 40 Hz | 100 Hz |
| Y | Y | | | | | | |
| O | O | | | | | | |
| Y | O | | | | | | |
| O | Y | | | | | | |
| Y&O | (Y&O) | | | | | | |
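
For readers who want to reproduce the kind of metrics these tables report, the sketch below computes Cohen's kappa and per-class sensitivity and specificity from a confusion matrix using their standard definitions. The row/column convention (rows are true classes, columns are predicted classes), the function names, and the example counts are assumptions for illustration; the numbers are not results from this study.

```python
import numpy as np

def cohens_kappa(cm):
    """Cohen's kappa from a square confusion matrix (rows: true, cols: predicted)."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    p_observed = np.trace(cm) / n
    # Chance agreement from the row and column marginals.
    p_expected = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n**2
    return (p_observed - p_expected) / (1.0 - p_expected)

def sensitivity_specificity(cm):
    """Per-class sensitivity and specificity (one-vs-rest)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp      # true class missed
    fp = cm.sum(axis=0) - tp      # other classes predicted as this class
    tn = cm.sum() - tp - fn - fp
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical two-class confusion matrix, purely for illustration.
example_cm = [[45, 5],
              [10, 40]]
kappa = cohens_kappa(example_cm)
sens, spec = sensitivity_specificity(example_cm)
```

Kappa discounts the agreement expected by chance, which matters when some activities are much more frequent than others.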

| Training Data | Feature | Threshold (100 Hz) | Threshold (40 Hz) | Rule * |
|---|---|---|---|---|
| Y | | 0.232 | 0.195 | Inactive if ≤ threshold, else Active |
| O | | 0.249 | 0.237 | |
| Y&O | (rad·s) | 0.230 | 0.202 | |
| Y | | 0.668 | 0.689 | Standing if ≤ threshold, else Sedentary (i.e., sitting or lying) |
| O | | 0.616 | 0.617 | |
| Y&O | (radians) | 0.640 | 0.617 | |
| Y | | 75.6 | 93.4 | Any Walking if ≤ threshold, else Postural Transition |
| O | | 36.4 | 32.8 | |
| Y&O | (m·s) | 46.7 | 46.4 | |
| Y | | −0.107 | −0.101 | Walking Downstairs if ≤ threshold, else Walking |
| O | | −0.067 | −0.068 | |
| Y&O | (hPa·s) | −0.062 | −0.094 | |
| Y | | 0.128 | 0.142 | Walking if ≤ threshold, else Walking Upstairs |
| O | | 0.092 | 0.105 | |
| Y&O | (hPa·s) | 0.092 | 0.119 | |
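
The threshold rules listed above can be read as a cascade of binary decisions. The sketch below wires them together using the Y&O thresholds from the 100 Hz column. Several things are assumptions rather than statements from the table: the order in which the rules are chained, the argument names (`f_motion`, `f_rotation`, `f_transition`, `f_pressure`), and the mapping of each argument to a specific feature, since the feature symbols are not legible here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Thresholds:
    """Y&O thresholds from the 100 Hz column of the table above."""
    active: float = 0.230        # Inactive if <= threshold, else Active
    sedentary: float = 0.640     # Standing if <= threshold, else Sedentary
    transition: float = 46.7     # Any Walking if <= threshold, else Postural Transition
    downstairs: float = -0.062   # Walking Downstairs if <= threshold, else Walking
    upstairs: float = 0.092      # Walking if <= threshold, else Walking Upstairs

def classify(f_motion, f_rotation, f_transition, f_pressure, t=Thresholds()):
    """Apply the rules as a hierarchy (the chaining order is an assumption)."""
    if f_motion <= t.active:
        # Inactive branch: separate standing from sitting or lying.
        return "Standing" if f_rotation <= t.sedentary else "Sedentary"
    if f_transition > t.transition:
        return "Postural Transition"
    # Walking branch: split by the pressure-based feature.
    if f_pressure <= t.downstairs:
        return "Walking Downstairs"
    return "Walking" if f_pressure <= t.upstairs else "Walking Upstairs"

# Example call with made-up feature values.
label = classify(f_motion=1.0, f_rotation=0.1, f_transition=10.0, f_pressure=0.2)
```

Keeping the thresholds in a small immutable record makes it straightforward to swap in the Y-only, O-only, or 40 Hz values from the same table.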

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Del Rosario, M.B.; Lovell, N.H.; Redmond, S.J. Learning the Orientation of a Loosely-Fixed Wearable IMU Relative to the Body Improves the Recognition Rate of Human Postures and Activities. Sensors 2019, 19, 2845. https://doi.org/10.3390/s19132845
