Dynamic Spatio-Temporal Bag of Expressions (D-STBoE) Model for Human Action Recognition

Abstract

1. Introduction

2. Related Work

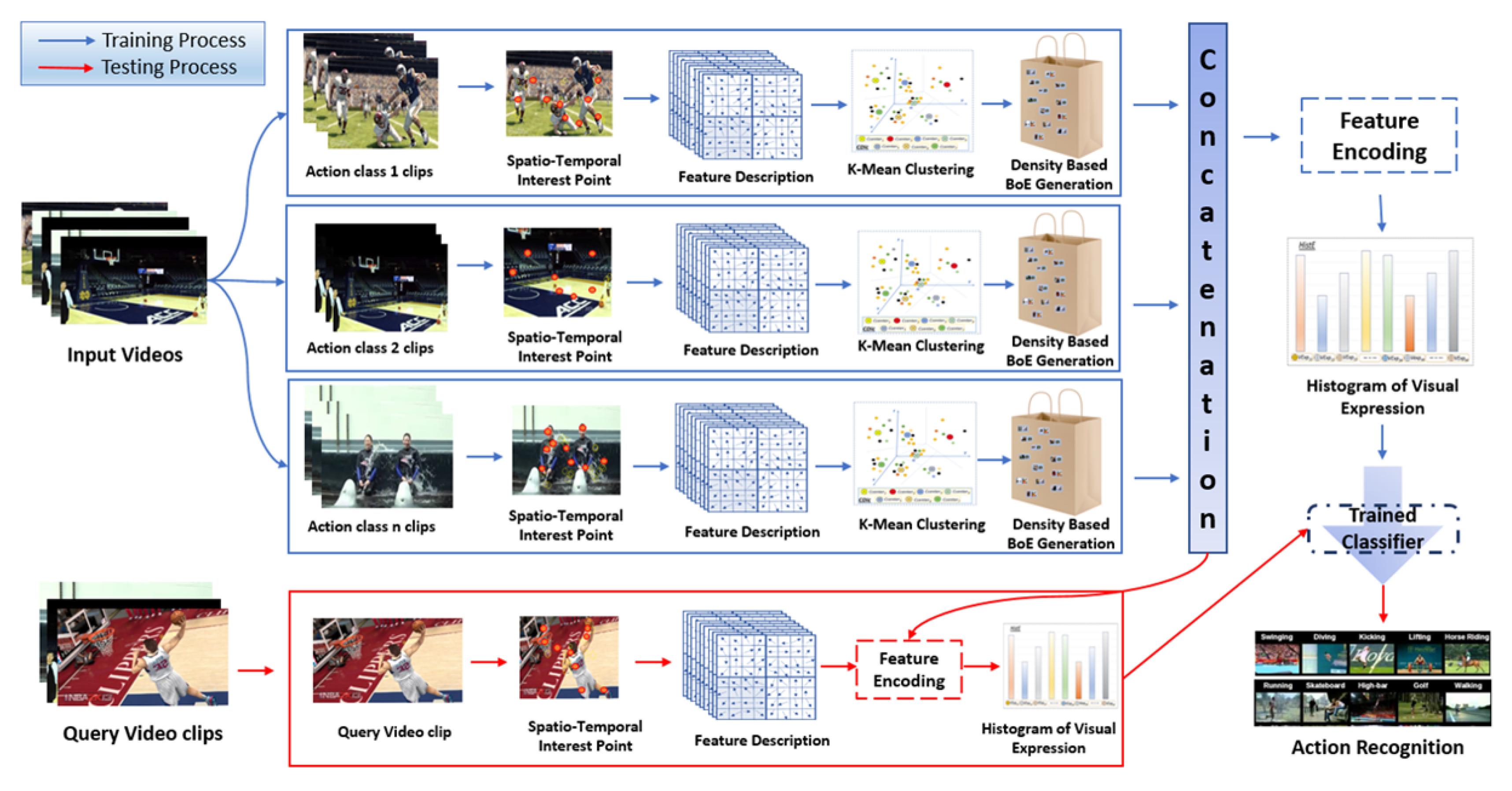

3. Dynamic Spatio-Temporal Bag of Expressions (D-STBoE) Model

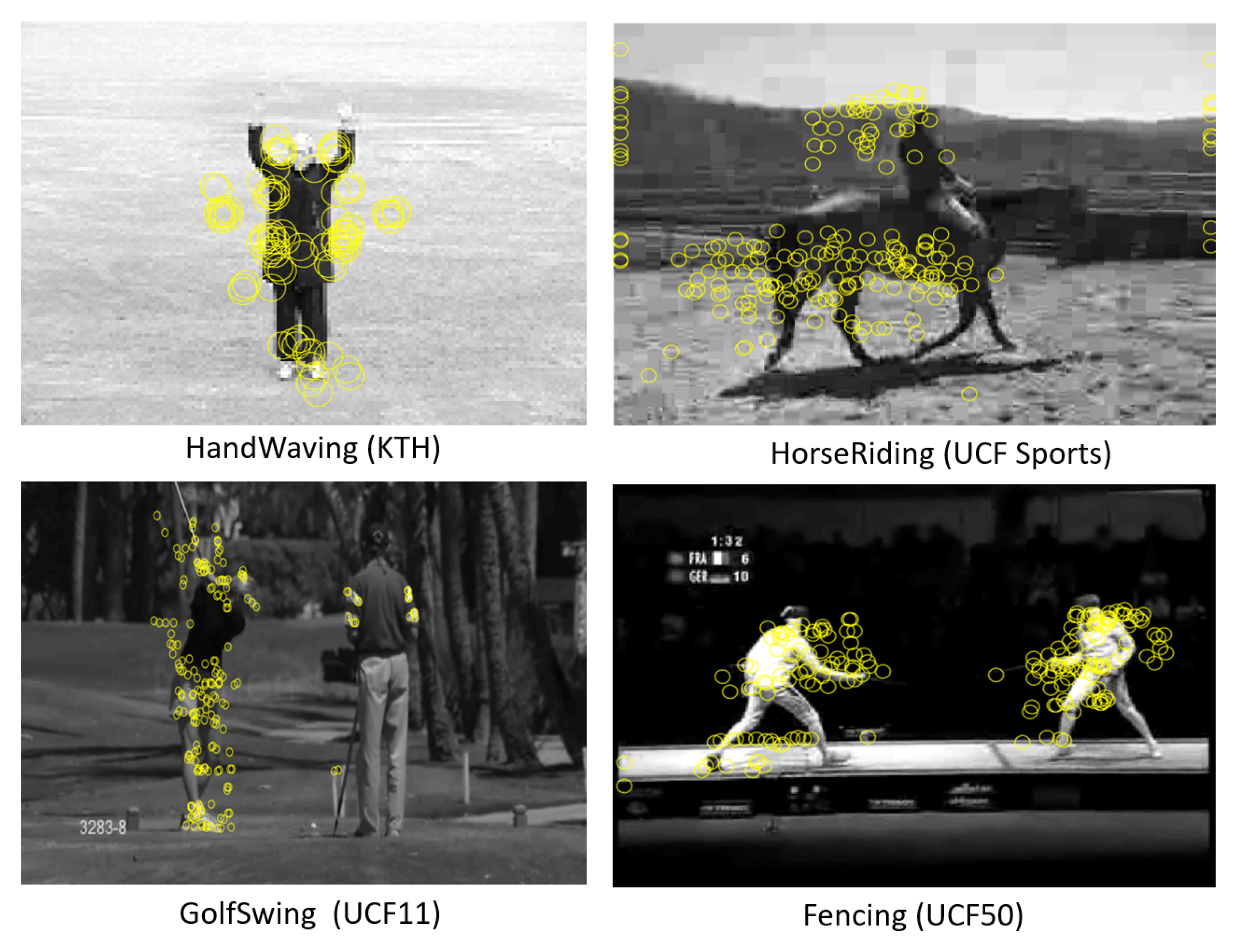

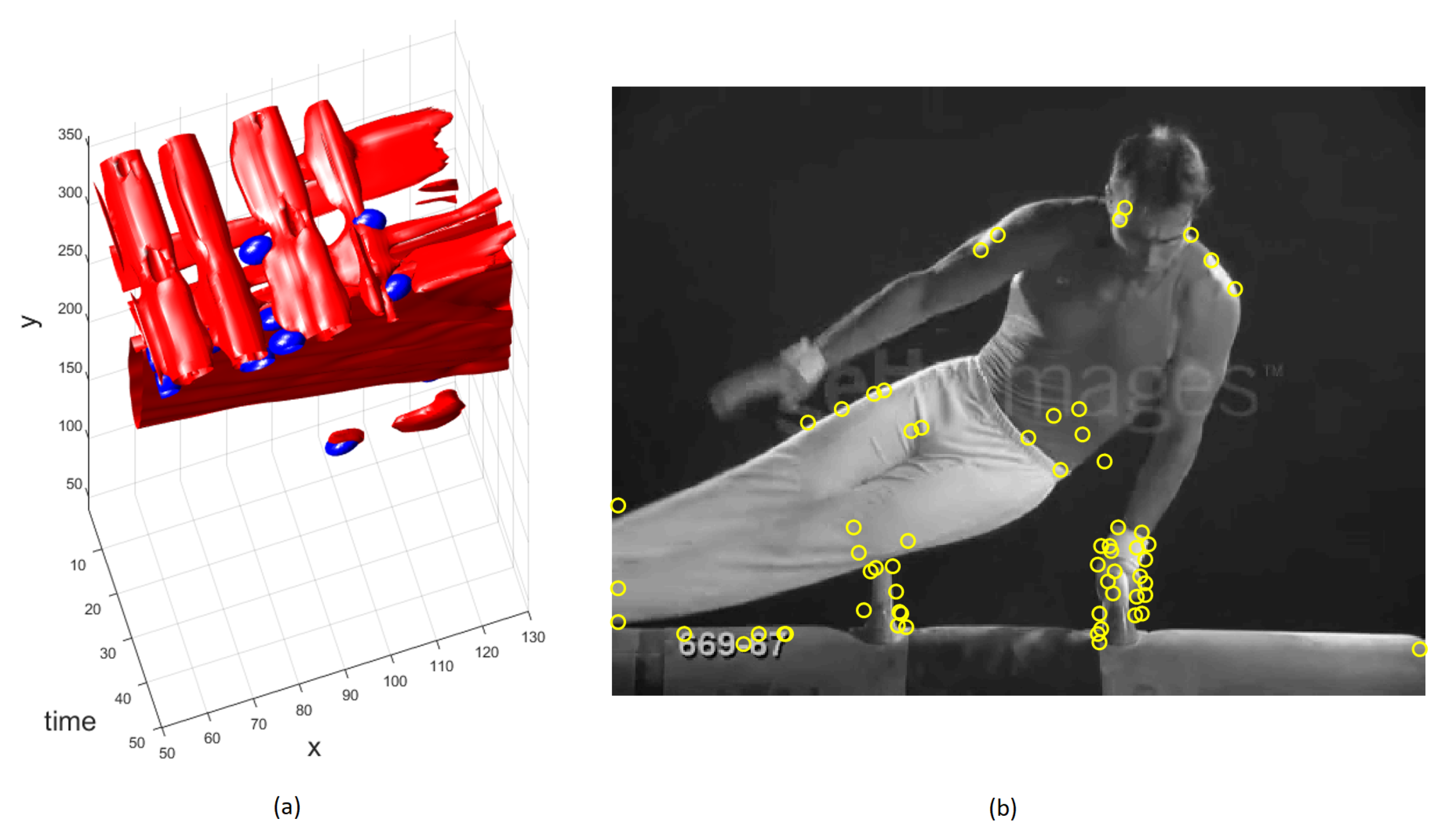

3.1. Spatio-Temporal Interest Points Detection

3.2. STIP Description using 3D SIFT

3.3. Class Specific Visual Word Dictionary

3.4. Bag of Expressions Generation

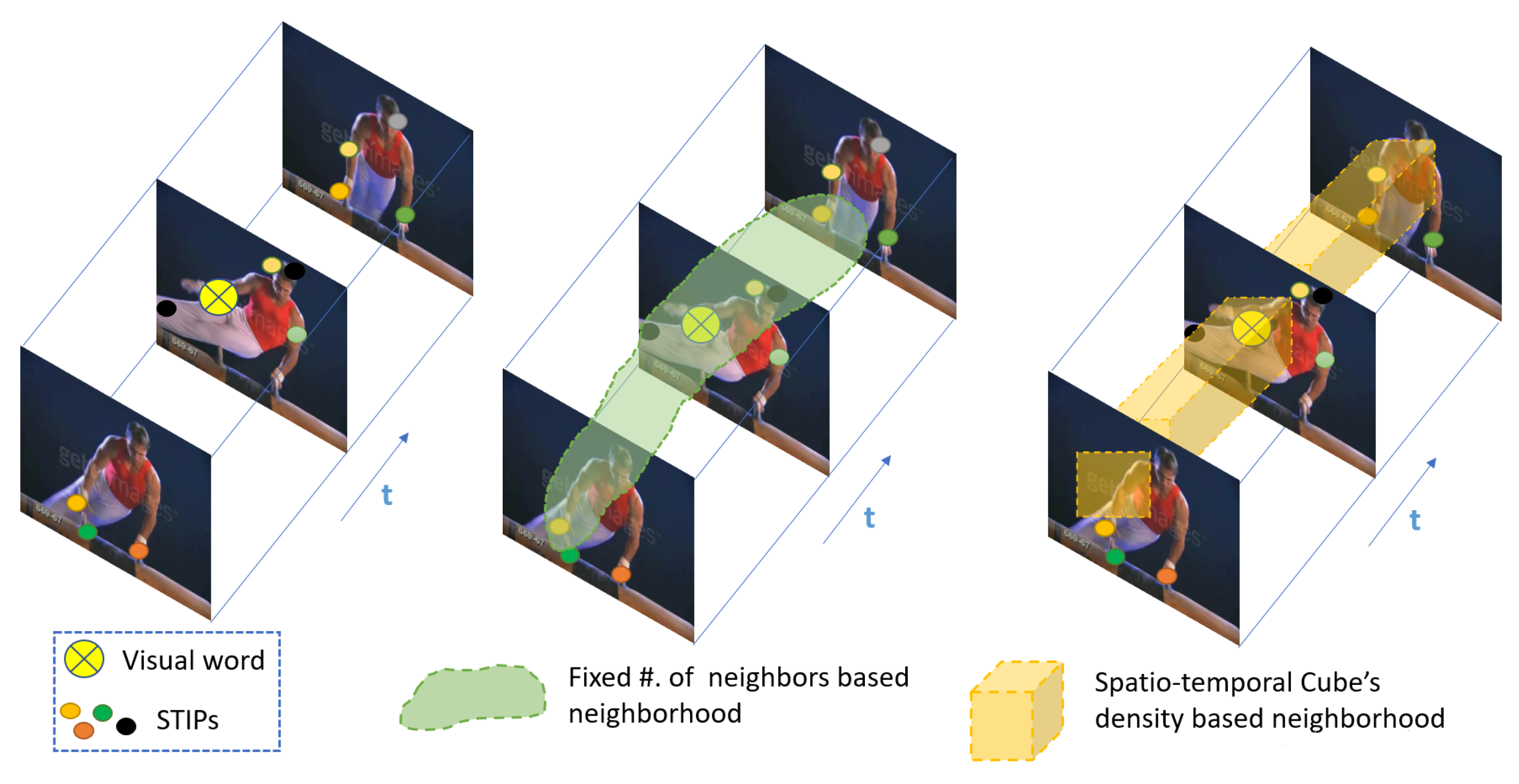

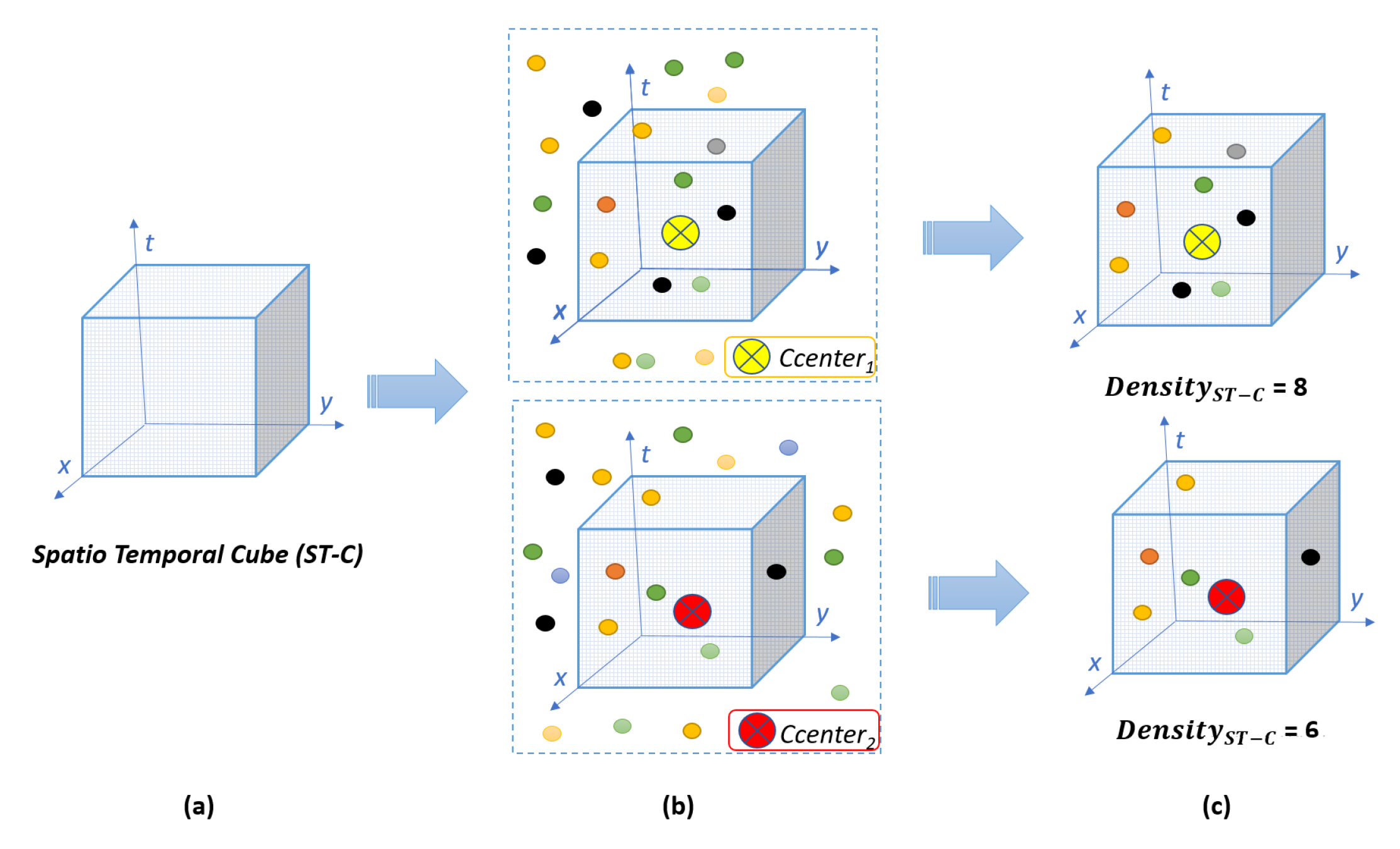

3.4.1. Spatio-Temporal Cube

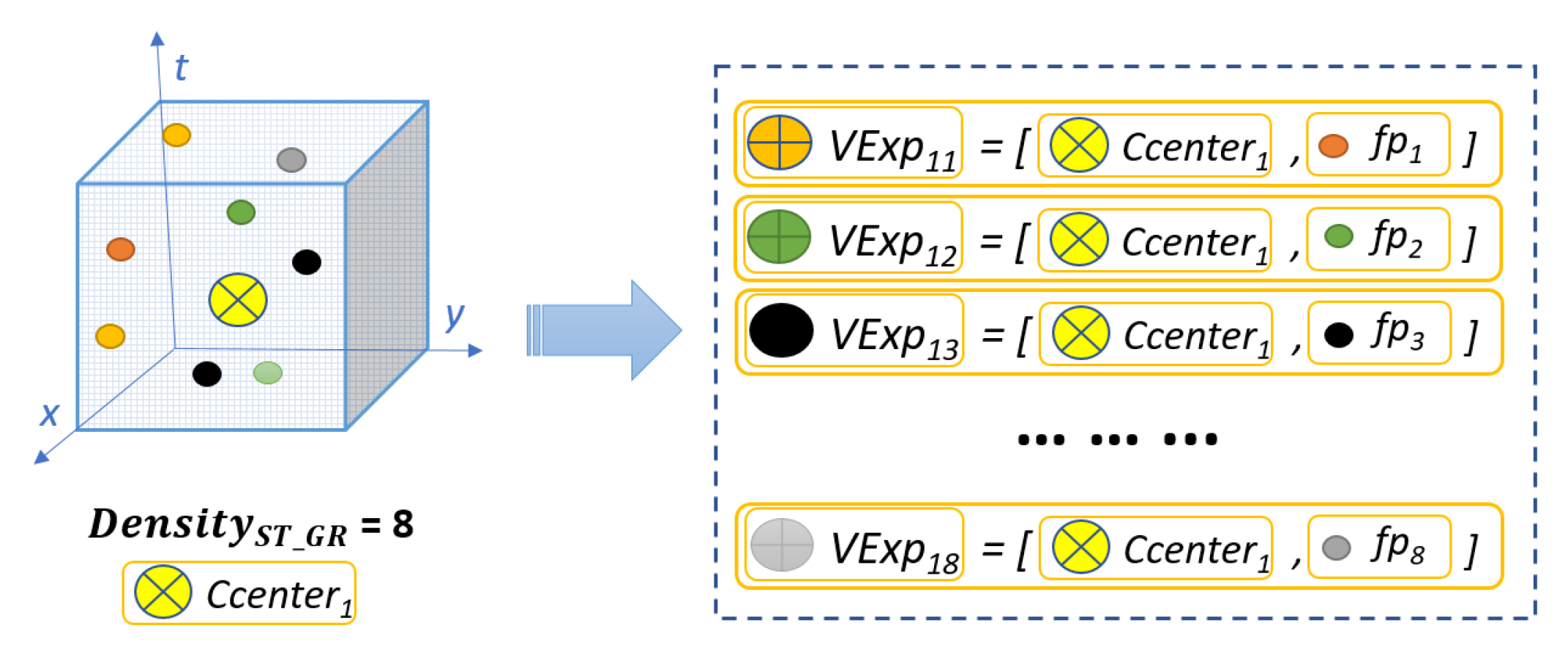

3.4.2. Visual Expression Formation

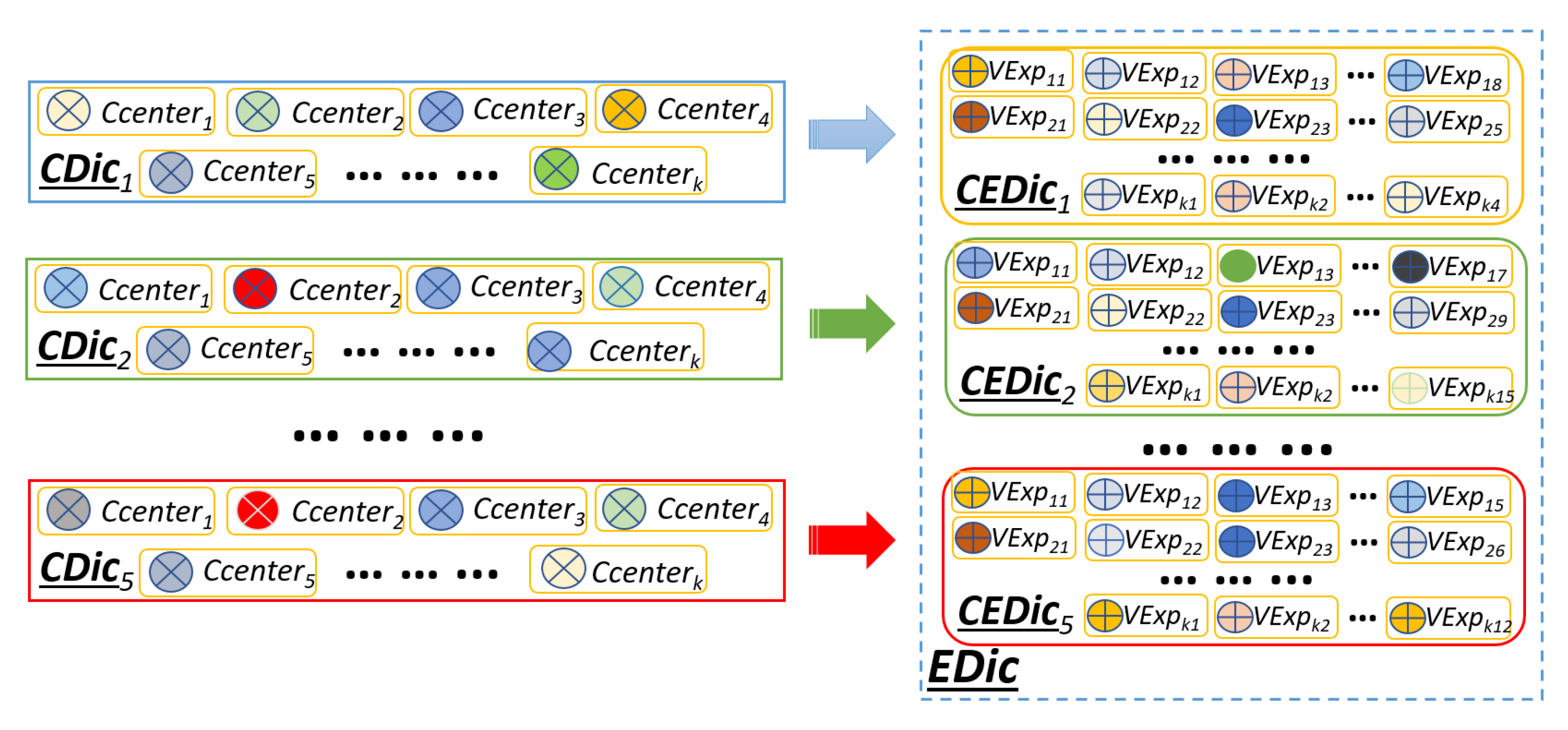

3.4.3. Visual Expression Dictionary

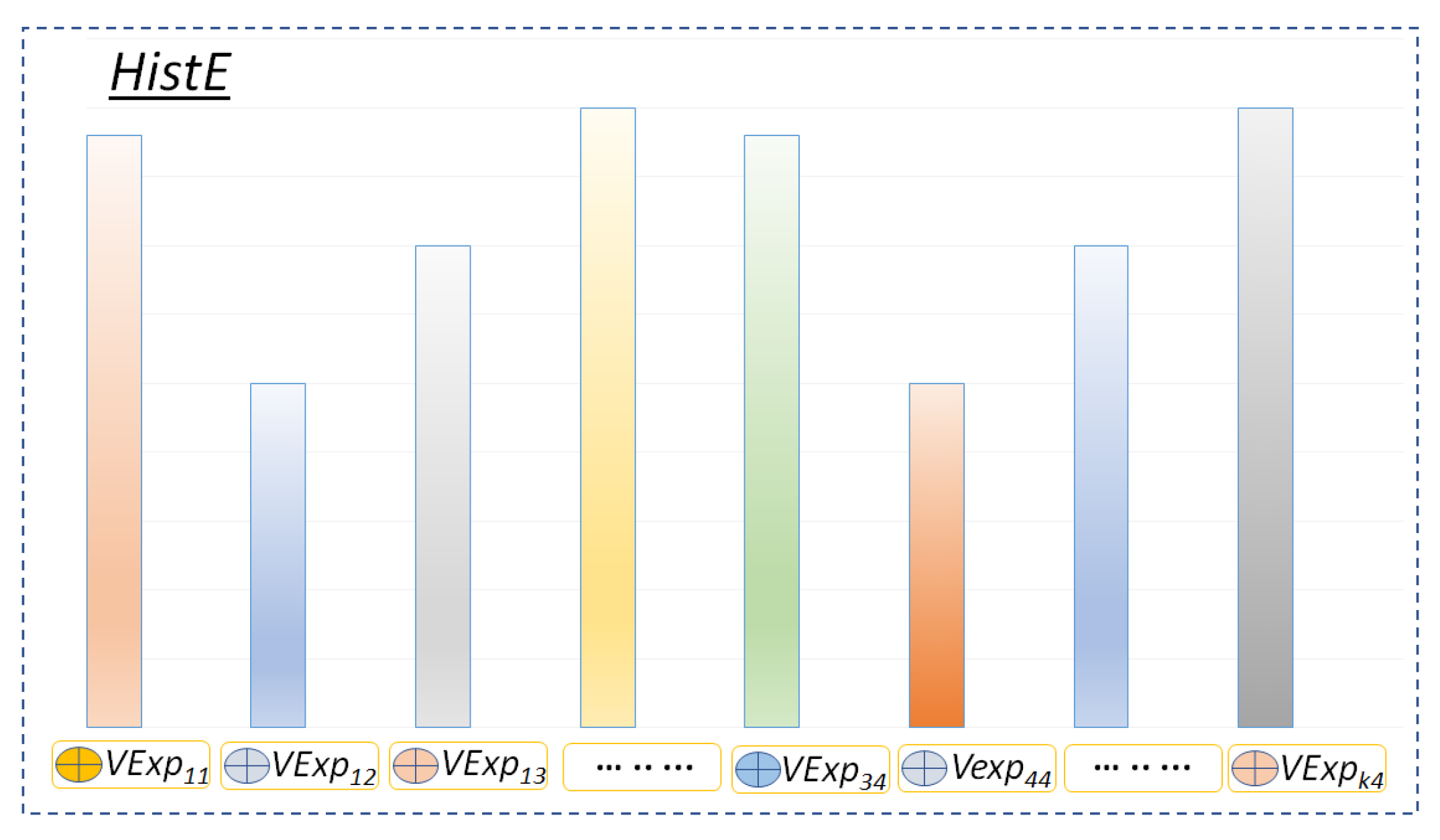

3.5. Histogram of Visual Expressions Encoding

3.6. Action Recognition

- One vs. one: The multiclass model learns C(C − 1)/2 binary learners, one for each pair of classes. For each binary learner, one class is positive, another is negative, and the rest are ignored.

- One vs. All: For each of the C binary learners, one class is positive and the rest are negative.

- Ordinal: Matrix elements are assigned such that, for the first binary learner, the first class is positive and the others are negative; for the second binary learner, the first two classes are positive and the rest are negative, and so on. It has C − 1 binary learners.

- Binary Complete: The multiclass SVM model learns 2^(C − 1) − 1 binary learners, assigning 1 or −1 to each class such that at least one class is positive and at least one class is negative.

- Dense Random: Each element of the design matrix is assigned 1 or −1 with equal probability. It has approximately 10 log₂ C binary learners.

- Sparse Random: Each element is assigned 1 or −1 with probability 0.25 each, and 0 (class ignored) with probability 0.5. It has approximately 15 log₂ C binary learners.
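The six coding designs above can be illustrated with a short Python sketch that builds each design matrix as a list of rows (one row per class, one column per binary learner) with entries 1, −1 and 0 (ignored). This is a sketch under stated assumptions, not the authors' implementation: all function names are hypothetical, the default sizes of the random designs (about 10 log₂ C and 15 log₂ C learners) are modelled on the defaults documented for MATLAB's `fitcecoc`, and the random designs use a single draw with per-column rejection, which is a simplification rather than the procedure of any particular library.

```python
import itertools
import math
import random

# Each design matrix is a list of C rows (one per class) and L columns (one per
# binary learner). Entry +1: class is positive, -1: negative, 0: ignored.


def _to_rows(columns, num_classes):
    """Transpose a list of learner columns into a C x L list of rows."""
    return [[col[k] for col in columns] for k in range(num_classes)]


def one_vs_one(c):
    """One column per class pair: C(C - 1)/2 learners."""
    cols = []
    for i, j in itertools.combinations(range(c), 2):
        col = [0] * c
        col[i], col[j] = 1, -1
        cols.append(col)
    return _to_rows(cols, c)


def one_vs_all(c):
    """One column per class, positive for that class only: C learners."""
    cols = [[1 if k == l else -1 for k in range(c)] for l in range(c)]
    return _to_rows(cols, c)


def ordinal(c):
    """Learner l treats the first l + 1 classes as positive: C - 1 learners."""
    cols = [[1 if k <= l else -1 for k in range(c)] for l in range(c - 1)]
    return _to_rows(cols, c)


def binary_complete(c):
    """All +/-1 splits with both signs present, each split counted once
    (class 0 is fixed as positive so complements are not repeated):
    2^(C - 1) - 1 learners."""
    cols = []
    for rest in itertools.product((1, -1), repeat=c - 1):
        col = [1, *rest]
        if -1 in col:  # drop the all-positive column
            cols.append(col)
    return _to_rows(cols, c)


def _random_design(c, num_learners, values, weights, rng):
    """Draw columns independently, rejecting any column that lacks a
    positive or a negative class (such a learner could not be trained)."""
    cols = []
    while len(cols) < num_learners:
        col = rng.choices(values, weights=weights, k=c)
        if 1 in col and -1 in col:
            cols.append(col)
    return _to_rows(cols, c)


def dense_random(c, num_learners=None, seed=0):
    """Entries are +1 or -1 with equal probability (~10 log2 C learners assumed)."""
    if num_learners is None:
        num_learners = round(10 * math.log2(c))
    return _random_design(c, num_learners, (1, -1), (0.5, 0.5), random.Random(seed))


def sparse_random(c, num_learners=None, seed=0):
    """Entries are +1/-1 with probability 0.25 each and 0 with probability 0.5
    (~15 log2 C learners assumed)."""
    if num_learners is None:
        num_learners = round(15 * math.log2(c))
    return _random_design(
        c, num_learners, (1, -1, 0), (0.25, 0.25, 0.5), random.Random(seed)
    )


if __name__ == "__main__":
    c = 6  # e.g. the six action classes of the KTH dataset
    designs = {
        "One vs. One": one_vs_one(c),
        "One vs. All": one_vs_all(c),
        "Ordinal": ordinal(c),
        "Binary Complete": binary_complete(c),
        "Dense Random": dense_random(c),
        "Sparse Random": sparse_random(c),
    }
    for name, matrix in designs.items():
        print(f"{name}: {len(matrix[0])} binary learners")
```

With C = 6, the deterministic designs yield 15, 6, 5 and 31 binary learners, and the assumed default random designs yield 26 and 39. The binary complete count grows exponentially in C, which is why the random designs cap the number of learners at a multiple of log₂ C.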

Algorithm 1. D-STBoE algorithm for Human Action Recognition

Require: Training video dataset where C is the number of action classes,

4. Experimentation Results and Discussion

4.1. Datasets

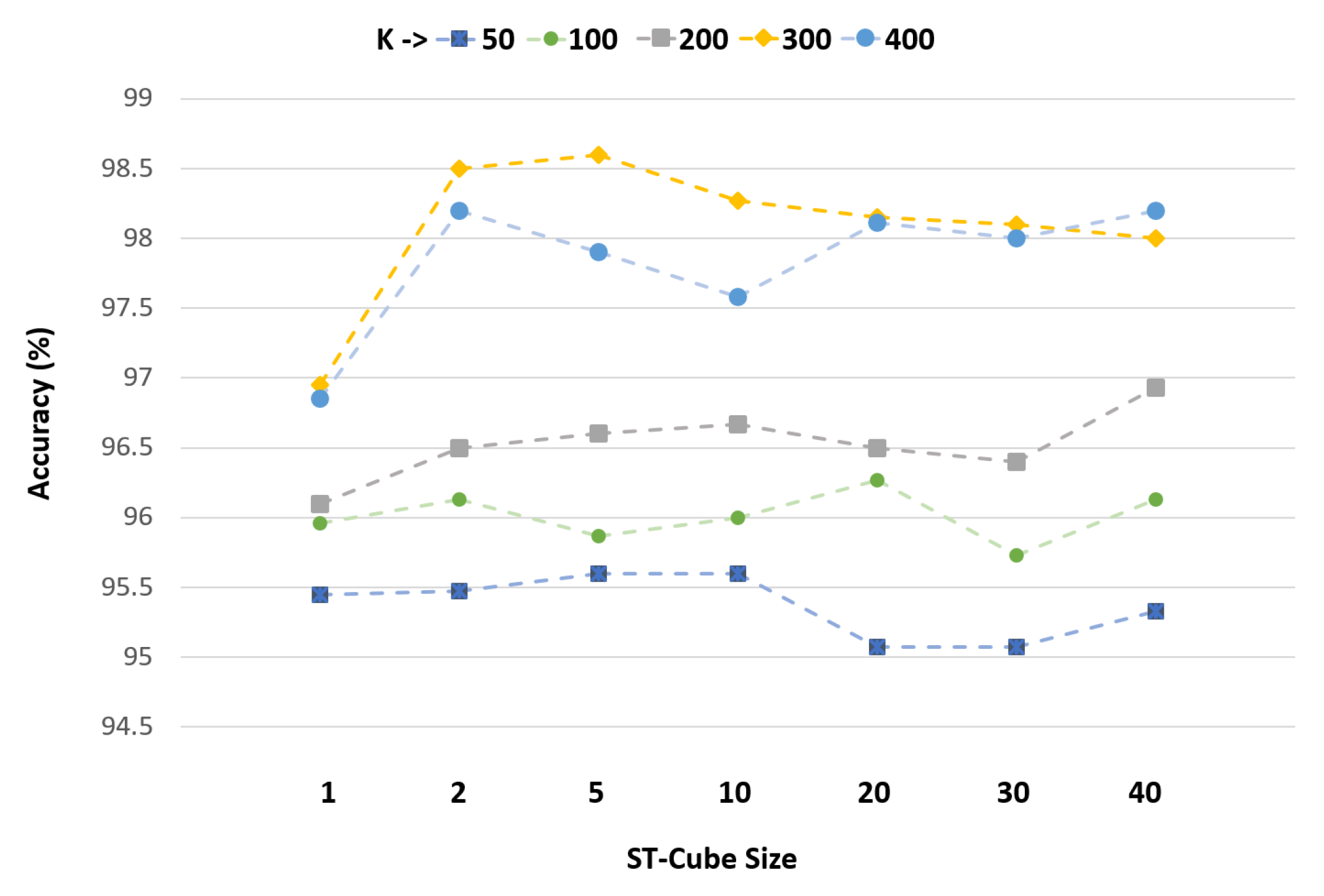

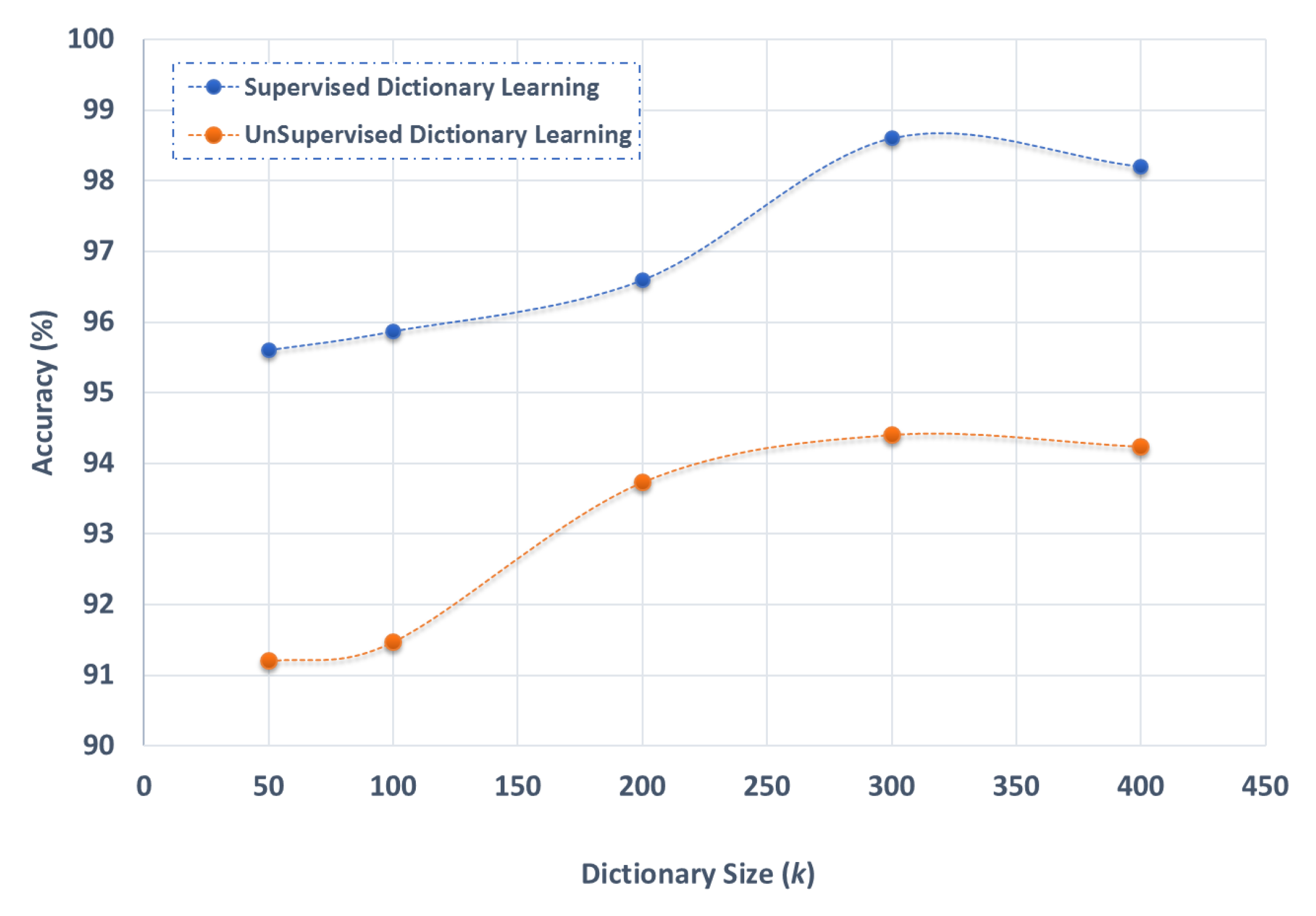

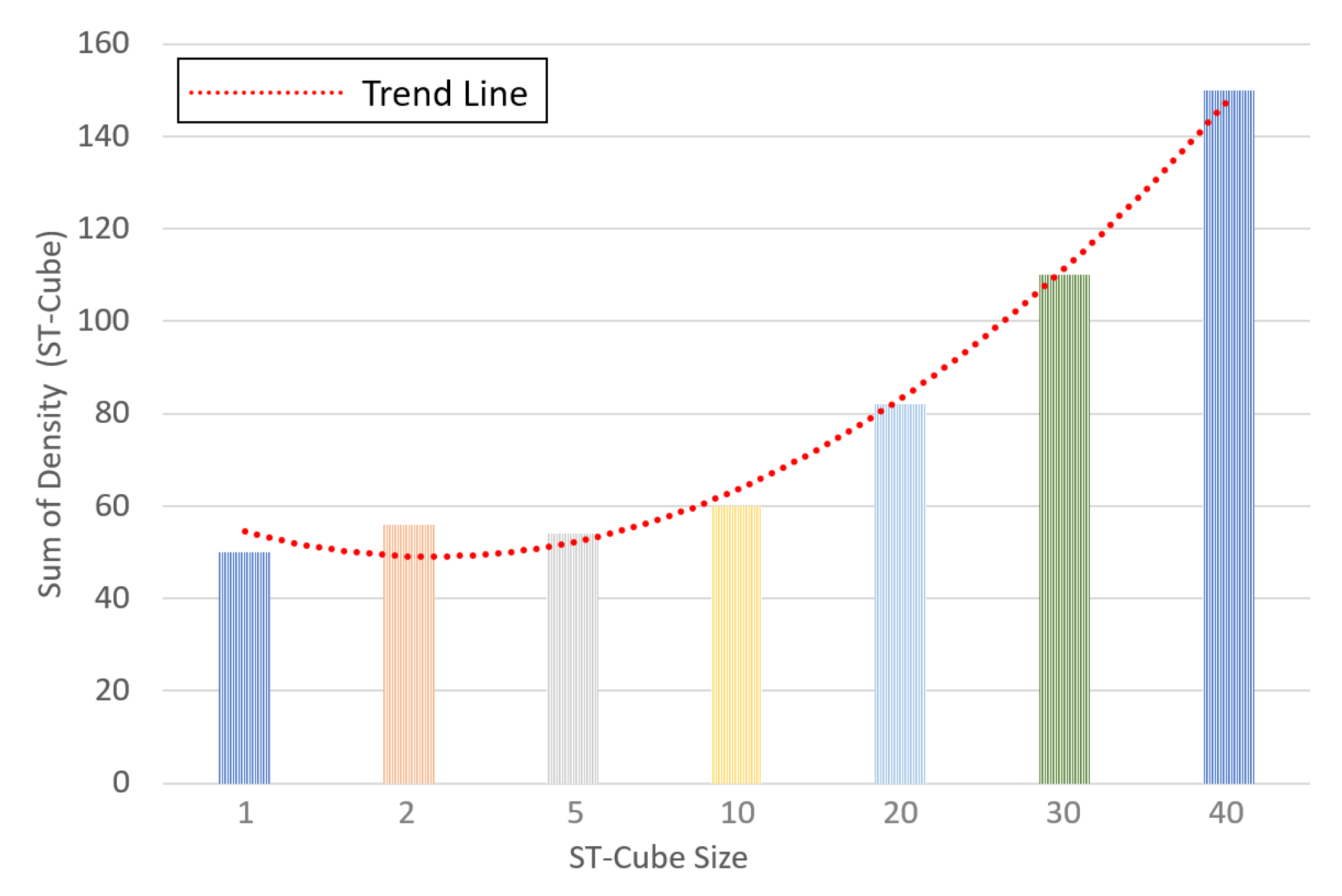

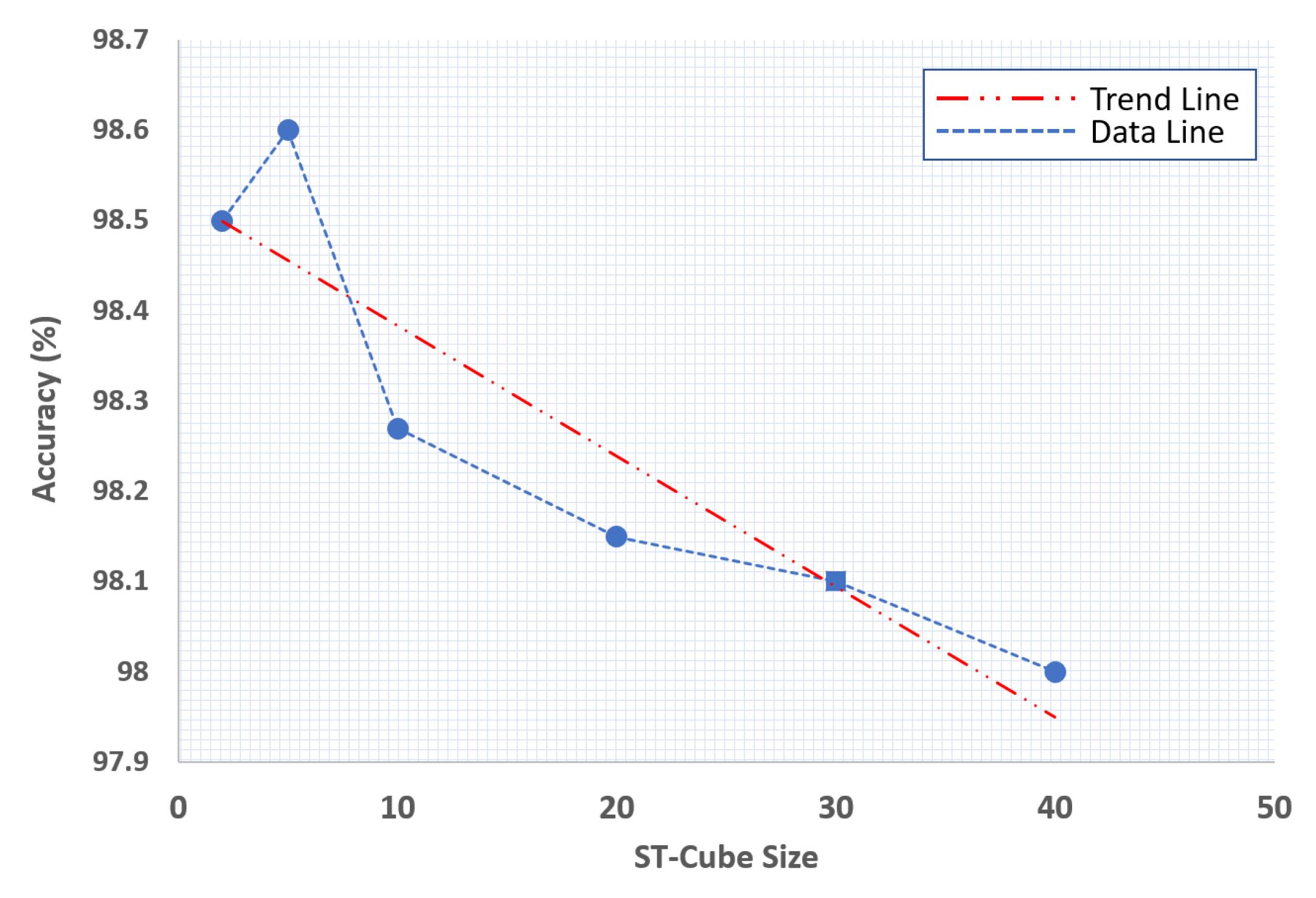

4.2. Feature Extraction and Parameter Tuning

4.3. Contribution of Each Stage

4.4. Comparison with the State-of-the-Art

4.4.1. Evaluation on the KTH Dataset

4.4.2. Evaluation on the UCF Sports Dataset

4.4.3. Evaluation on the UCF11 and UCF50 Datasets

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Poppe, R. A survey on vision-based human action recognition. Image Vis. Comput. 2010, 28, 976–990.

- Xia, L.; Chen, C.C.; Aggarwal, J. View invariant human action recognition using histograms of 3d joints. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Providence, RI, USA, 16–21 June 2012; pp. 20–27.

- Nazir, S.; Yousaf, M.H.; Velastin, S.A. Feature Similarity and Frequency-Based Weighted Visual Words Codebook Learning Scheme for Human Action Recognition. In Pacific-Rim Symposium on Image and Video Technology; Springer: Berlin/Heidelberg, Germany, 2017; pp. 326–336.

- Lopes, A.P.B.; Oliveira, R.S.; de Almeida, J.M.; de Araújo, A.A. Spatio-temporal frames in a bag-of-visual-features approach for human actions recognition. In Proceedings of the 2009 XXII Brazilian Symposium on Computer Graphics and Image Processing (SIBGRAPI), Rio de Janeiro, Brazil, 11–15 October 2009; pp. 315–321.

- Wang, H.; Oneata, D.; Verbeek, J.; Schmid, C. A robust and efficient video representation for action recognition. Int. J. Comput. Vis. 2016, 119, 219–238.

- Shao, L.; Mattivi, R. Feature detector and descriptor evaluation in human action recognition. In Proceedings of the ACM International Conference on Image and Video Retrieval, Xi’an, China, 5–7 July 2010; pp. 477–484.

- Nazir, S.; Yousaf, M.H.; Nebel, J.C.; Velastin, S.A. A Bag of Expression framework for improved human action recognition. Pattern Recognit. Lett. 2018, 103, 39–45.

- Kovashka, A.; Grauman, K. Learning a hierarchy of discriminative space-time neighborhood features for human action recognition. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2046–2053.

- Gilbert, A.; Illingworth, J.; Bowden, R. Action recognition using mined hierarchical compound features. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 883–897.

- Bobick, A.F.; Davis, J.W. The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 257–267.

- Tian, Y.; Cao, L.; Liu, Z.; Zhang, Z. Hierarchical filtered motion for action recognition in crowded videos. IEEE Trans. Syst. Man Cybern. Part C 2012, 42, 313–323.

- Murtaza, F.; Yousaf, M.H.; Velastin, S.A. Multi-view Human Action Recognition Using Histograms of Oriented Gradients (HOG) Description of Motion History Images (MHIs). In Proceedings of the 2015 13th International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 14–16 December 2015; pp. 297–302.

- Nazir, S.; Yousaf, M.H.; Velastin, S.A. Evaluating a bag-of-visual features approach using spatio-temporal features for action recognition. Comput. Electr. Eng. 2018, 72, 660–669.

- Bregonzio, M.; Gong, S.; Xiang, T. Recognising action as clouds of space-time interest points. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 20–25 June 2009; pp. 1948–1955.

- Nazir, S.; Yousaf, M.H.; Velastin, S.A. Inter and intra class correlation analysis (IICCA) for human action recognition in realistic scenarios. In Proceedings of the 8th International Conference of Pattern Recognition Systems (ICPRS 2017), Madrid, Spain, 11–13 July 2017.

- Laptev, I. On space-time interest points. Int. J. Comput. Vis. 2005, 64, 107–123.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August 1988; pp. 147–151.

- Dollár, P.; Rabaud, V.; Cottrell, G.; Belongie, S. Behavior recognition via sparse spatio-temporal features. In Proceedings of the 2nd Joint IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 65–72.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

- Laptev, I.; Caputo, B. Recognition of Human Actions. 2005. Available online: http://www.nada.kth.se/cvap/actions/ (accessed on 15 June 2019).

- Schuldt, C.; Laptev, I.; Caputo, B. Recognizing human actions: A local SVM approach. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 23–26 August 2004; pp. 32–36.

- Laptev, I. Local Spatio-Temporal Image Features for Motion Interpretation. Ph.D. Thesis, Numerisk Analys Och Datalogi, KTH, Stockholm, Sweden, 2004.

- Laptev, I.; Lindeberg, T. Velocity adaptation of space-time interest points. In Proceedings of the 17th International Conference on Pattern Recognition (ICPR), British Machine Vis Assoc, Cambridge, UK, 23–26 August 2004; pp. 52–56.

- Rodriguez, M.D.; Ahmed, J.; Shah, M. UCF Sports Action Data Set. 2011. Available online: https://www.crcv.ucf.edu/data/UCF_Sports_Action.php (accessed on 15 June 2019).

- Rodriguez, M.D.; Ahmed, J.; Shah, M. Action MACH: A spatio-temporal maximum average correlation height filter for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Soomro, K.; Zamir, A.R. Action recognition in realistic sports videos. In Computer Vision in Sports; Springer: Berlin/Heidelberg, Germany, 2014; pp. 181–208.

- Liu, J.; Shah, M. UCF YouTube Action Data Set. 2011. Available online: https://www.crcv.ucf.edu/data/UCF_YouTube_Action.php (accessed on 15 June 2019).

- Liu, J.; Luo, J.; Shah, M. Recognizing realistic actions from videos “in the wild”. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1996–2003.

- Reddy, K.; Shah, M. UCF50—Action Recognition Data Set. 2012. Available online: https://www.crcv.ucf.edu/data/UCF50.php (accessed on 15 June 2019).

- Reddy, K.K.; Shah, M. Recognizing 50 human action categories of web videos. Mach. Vis. Appl. 2013, 24, 971–981.

- Kläser, A.; Marszałek, M.; Schmid, C. A spatio-temporal descriptor based on 3d-gradients. In Proceedings of the BMVC 2008-19th British Machine Vision Conference, British Machine Vision Association, Leeds, UK, 1–4 September 2008; pp. 1–275.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Scovanner, P.; Ali, S.; Shah, M. A 3-dimensional SIFT descriptor and its application to action recognition. In Proceedings of the 15th ACM International Conference on Multimedia, Augsburg, Germany, 25–29 September 2007; pp. 357–360.

- Yuan, J.; Wu, Y.; Yang, M. Discovery of collocation patterns: From visual words to visual phrases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Meng, J.; Yuan, J.; Yang, J.; Wang, G.; Tan, Y.P. Object instance search in videos via spatio-temporal trajectory discovery. IEEE Trans. Multimed. 2016, 18, 116–127.

- Zhao, S.; Liu, Y.; Han, Y.; Hong, R.; Hu, Q.; Tian, Q. Pooling the convolutional layers in deep convnets for video action recognition. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1839–1849.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Advances in Neural Information Processing Systems 27 (NIPS Proceedings); MIT Press: Cambridge, MA, USA, 2014; pp. 568–576.

- Xu, Y.; Han, Y.; Hong, R.; Tian, Q. Sequential video VLAD: Training the aggregation locally and temporally. IEEE Trans. Image Process. 2018, 27, 4933–4944.

- Murtaza, F.; Yousaf, M.H.; Velastin, S.A. DA-VLAD: Discriminative Action Vector of Locally Aggregated Descriptors for Action Recognition. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3993–3997.

- Rahmani, H.; Mian, A.; Shah, M. Learning a deep model for human action recognition from novel viewpoints. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 667–681.

- Wang, L.; Xu, Y.; Cheng, J.; Xia, H.; Yin, J.; Wu, J. Human Action Recognition by Learning Spatio-Temporal Features With Deep Neural Networks. IEEE Access 2018, 6, 17913–17922.

- Khan, M.A.; Akram, T.; Sharif, M.; Javed, M.Y.; Muhammad, N.; Yasmin, M. An implementation of optimized framework for action classification using multilayers neural network on selected fused features. In Pattern Analysis and Applications; Springer: London, UK, February 2018; pp. 1–21.

- Hara, K.; Kataoka, H.; Satoh, Y. Learning spatio-temporal features with 3D residual networks for action recognition. In Proceedings of the ICCV Workshop on Action, Gesture, and Emotion Recognition, Venice, Italy, 29 October 2017; p. 4.

- Yue-Hei Ng, J.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 4694–4702.

- Feichtenhofer, C.; Pinz, A.; Wildes, R. Spatiotemporal residual networks for video action recognition. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2016; pp. 3468–3476.

- Feichtenhofer, C.; Pinz, A.; Wildes, R.P. Temporal residual networks for dynamic scene recognition. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7435–7444.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Sun, X.; Chen, M.; Hauptmann, A. Action recognition via local descriptors and holistic features. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Miami, FL, USA, 20–25 June 2009; pp. 58–65.

- Wagstaff, K.; Cardie, C.; Rogers, S.; Schrödl, S. Constrained k-means clustering with background knowledge. In Proceedings of the Eighteenth International Conference on Machine Learning, Williamstown, MA, USA, 28 June–1 July 2001; pp. 577–584.

- Chatfield, K.; Lempitsky, V.S.; Vedaldi, A.; Zisserman, A. The devil is in the details: An evaluation of recent feature encoding methods. In Proceedings of the British Machine Vision Conference, Dundee, UK, 29 August–2 September 2011; p. 8.

- Escalera, S.; Pujol, O.; Radeva, P. Separability of ternary codes for sparse designs of error-correcting output codes. Pattern Recognit. Lett. 2009, 30, 285–297.

- Chapelle, O.; Vapnik, V.; Bousquet, O.; Mukherjee, S. Choosing multiple parameters for support vector machines. Mach. Learn. 2002, 46, 131–159.

- Yi, Y.; Zheng, Z.; Lin, M. Realistic action recognition with salient foreground trajectories. Expert Syst. Appl. 2017, 75, 44–55.

- Wang, L.; Li, R.; Fang, Y. Power difference template for action recognition. Mach. Vis. Appl. 2017, 28, 1–11.

- Sheng, B.; Yang, W.; Sun, C. Action recognition using direction-dependent feature pairs and non-negative low rank sparse model. Neurocomputing 2015, 158, 73–80.

- Ballas, N.; Yang, Y.; Lan, Z.Z.; Delezoide, B.; Prêteux, F.; Hauptmann, A. Space-time robust representation for action recognition. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2704–2711.

- Yao, T.; Wang, Z.; Xie, Z.; Gao, J.; Feng, D.D. Learning universal multiview dictionary for human action recognition. Pattern Recognit. 2017, 64, 236–244.

- Ullah, J.; Jaffar, M.A. Object and motion cues based collaborative approach for human activity localization and recognition in unconstrained videos. Clust. Comput. 2017, 21, 1–12.

- Tong, M.; Wang, H.; Tian, W.; Yang, S. Action recognition new framework with robust 3D-TCCHOGAC and 3D-HOOFGAC. Multimed. Tools Appl. 2017, 76, 3011–3030.

- Hsieh, C.Y.; Lin, W.Y. Video-based human action and hand gesture recognition by fusing factored matrices of dual tensors. Multimed. Tools Appl. 2017, 76, 7575–7594.

- Peng, X.; Schmid, C. Multi-region two-stream R-CNN for action detection. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 744–759.

- Wang, T.; Wang, S.; Ding, X. Detecting human action as the spatio-temporal tube of maximum mutual information. IEEE Trans. Circuits Syst. Video Technol. 2014, 24, 277–290.

- Cho, J.; Lee, M.; Chang, H.J.; Oh, S. Robust action recognition using local motion and group sparsity. Pattern Recognit. 2014, 47, 1813–1825.

- Wang, H.; Kläser, A.; Schmid, C.; Liu, C.L. Dense trajectories and motion boundary descriptors for action recognition. Int. J. Comput. Vis. 2013, 103, 60–79.

- Wang, H.; Yuan, C.; Hu, W.; Sun, C. Supervised class-specific dictionary learning for sparse modeling in action recognition. Pattern Recognit. 2012, 45, 3902–3911.

- Le, Q.V.; Zou, W.Y.; Yeung, S.Y.; Ng, A.Y. Learning hierarchical invariant spatio-temporal features for action recognition with independent subspace analysis. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 20–25 June 2011; pp. 3361–3368.

- Kläser, A.; Marszałek, M.; Laptev, I.; Schmid, C. Will Person Detection Help Bag-of-Features Action Recognition? INRIA: Rocquencourt, France, 2010.

- Yi, Y.; Lin, M. Human action recognition with graph-based multiple-instance learning. Pattern Recognit. 2016, 53, 148–162.

- Duta, I.C.; Uijlings, J.R.; Ionescu, B.; Aizawa, K.; Hauptmann, A.G.; Sebe, N. Efficient human action recognition using histograms of motion gradients and VLAD with descriptor shape information. Multimed. Tools Appl. 2017, 76, 22445–22472.

- Peng, X.; Wang, L.; Wang, X.; Qiao, Y. Bag of visual words and fusion methods for action recognition: Comprehensive study and good practice. Comput. Vis. Image Underst. 2016, 150, 109–125.

| Class-Specific Dictionary Size (CDic) | Time (s) |
|---|---|
| K = 50 | 92.20 |
| K = 100 | 198.35 |
| K = 200 | 369.45 |
| K = 300 | 524.12 |

| Coding Design Matrix / Kernel Function | Gaussian Kernel | Linear Kernel | Polynomial Kernel |
|---|---|---|---|
| One vs. One | 96.7 | 95.5 | 94.5 |
| One vs. All | 98.6 | 97.1 | 96.7 |
| Ordinal | 95.7 | 94.7 | 94.0 |
| Binary Complete | 97.7 | 96.4 | 95.8 |
| Dense Random | 98.5 | 97.2 | 96.6 |
| Sparse Random | 98.5 | 97.7 | 96.7 |

| Author | Method | Accuracy (%) |
|---|---|---|
| Proposed | Dynamic Spatio-temporal Bag of Expressions (D-STBoE) Model | 99.21 |
| [7] | Bag of Expression (BoE) | 99.51 |
| [44] | Multilayer neural network | 99.80 |
| [55] | Foreground Trajectory extraction method | 97.50 |
| [56] | Power difference template representation | 95.18 |
| [57] | Direction-dependent feature pairs and non-negative low-rank sparse model | 94.99 |
| [58] | Space-time robust representation | 94.60 |

| Author | Method | Accuracy (%) |
|---|---|---|
| Proposed | Dynamic Spatio-temporal Bag of Expressions (D-STBoE) Model | 98.60 |
| [7] | Bag of Expression (BoE) | 97.33 |
| [43] | Spatio-temporal features with deep neural network | 91.80 |
| [42] | Robust non-linear knowledge transfer model (R-NKTM) | 90.00 |
| [59] | Universal multi-view dictionary | 91.00 |
| [55] | Foreground Trajectory extraction method | 91.40 |
| [60] | Holistic and motion cues based approach | 95.60 |
| [61] | 3D-TCCHOGAC and 3D-HOOFGAC feature descriptors | 96.00 |
| [56] | Power difference template representation | 88.60 |
| [62] | Factored matrices of dual tensors | 92.70 |
| [63] | Multi-region two-stream R-CNN | 95.50 |
| [64] | Spatio-temporal tube of maximum mutual information | 90.70 |
| [65] | Local motion and group sparsity-based approach | 89.70 |
| [66] | Dense trajectories and motion boundary descriptors | 88.00 |
| [67] | Sparse representation and supervised class specific dictionary learning | 86.60 |
| [68] | Invariant spatio-temporal features with independent subspace analysis | 86.50 |
| [69] | Person detector along with BOF approach | 86.70 |
| [8] | Hierarchy of discriminative space-time neighborhood features | 87.30 |

| Author | Method | Accuracy (%) |
|---|---|---|
| Proposed | Dynamic Spatio-temporal Bag of Expressions (D-STBoE) Model | 96.94 |
| [43] | Spatio-temporal features with deep neural network | 98.76 |
| [59] | Universal multi-view dictionary | 85.90 |
| [55] | Foreground Trajectory extraction method | 91.37 |
| [70] | Graph-based multiple-instance learning | 84.60 |
| [65] | Local motion and group sparsity-based approach | 86.10 |
| [66] | Dense trajectories and motion boundary descriptors | 84.10 |
| [68] | Invariant spatio-temporal features with independent subspace analysis | 75.80 |

| Author | Method | Accuracy (%) |
|---|---|---|
| Proposed | Dynamic Spatio-temporal Bag of Expressions (D-STBoE) Model | 94.10 |
| [71] | HMG + iDT Descriptor | 93.00 |
| [72] | Bag of Words and Fusion Methods | 92.30 |
| [5] | Dense Trajectories | 91.70 |
| [66] | Dense Trajectories and motion boundary descriptor | 91.20 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nazir, S.; Yousaf, M.H.; Nebel, J.-C.; Velastin, S.A. Dynamic Spatio-Temporal Bag of Expressions (D-STBoE) Model for Human Action Recognition. Sensors 2019, 19, 2790. https://doi.org/10.3390/s19122790