Dual-Channel Reconstruction Network for Image Compressive Sensing

Abstract

1. Introduction

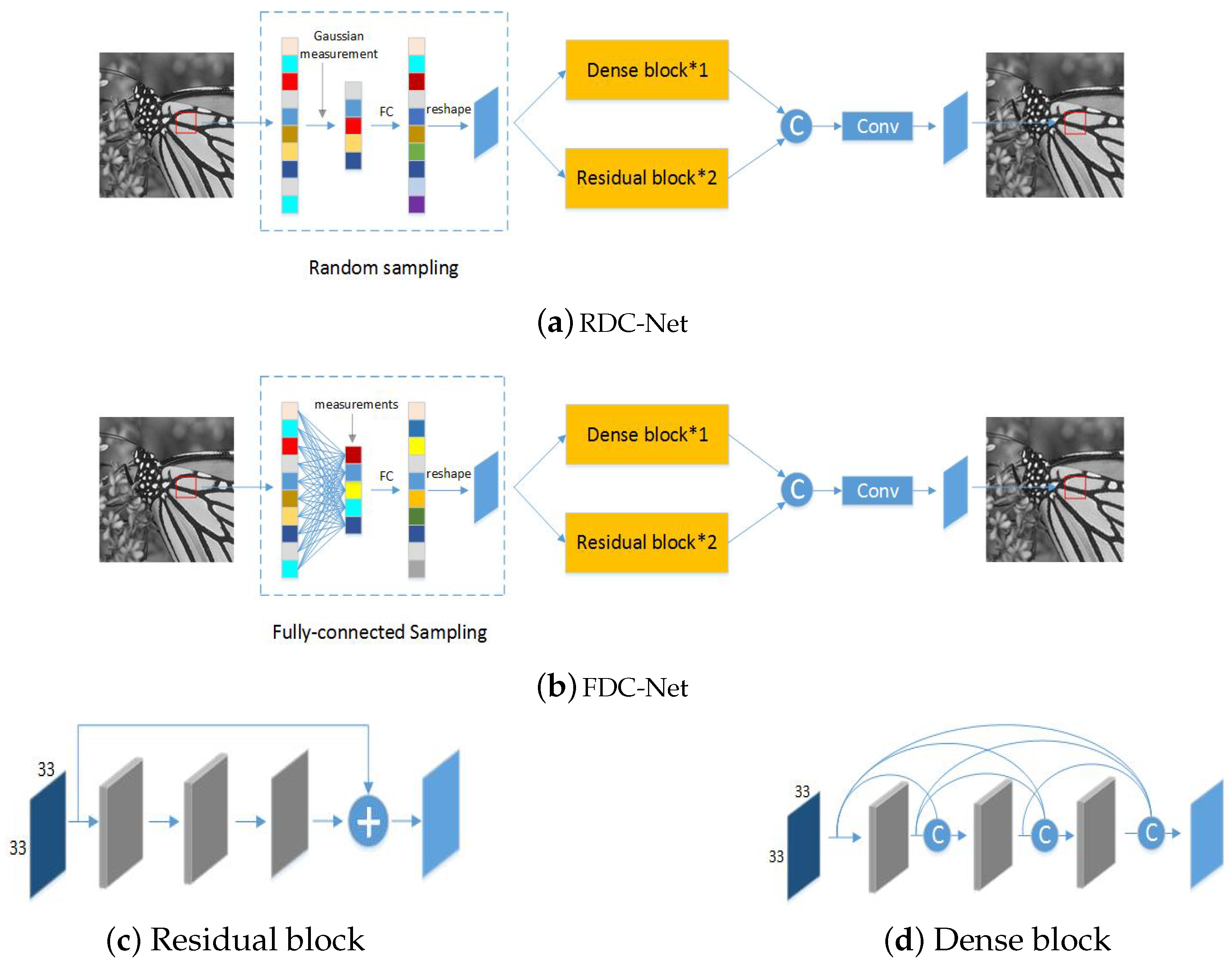

- Unlike deep-learning networks built on a single, very deep channel, we propose a novel shallow dual-channel reconstruction module for image compressive sensing, in which each channel extracts features at a different level. This yields better reconstruction quality.

- The proposed DC-Net module has two residual blocks and one dense block. Because the dense block has fewer parameters than a residual block, the time complexity of the proposed method is lower than that of DR-Net, which uses four residual blocks.

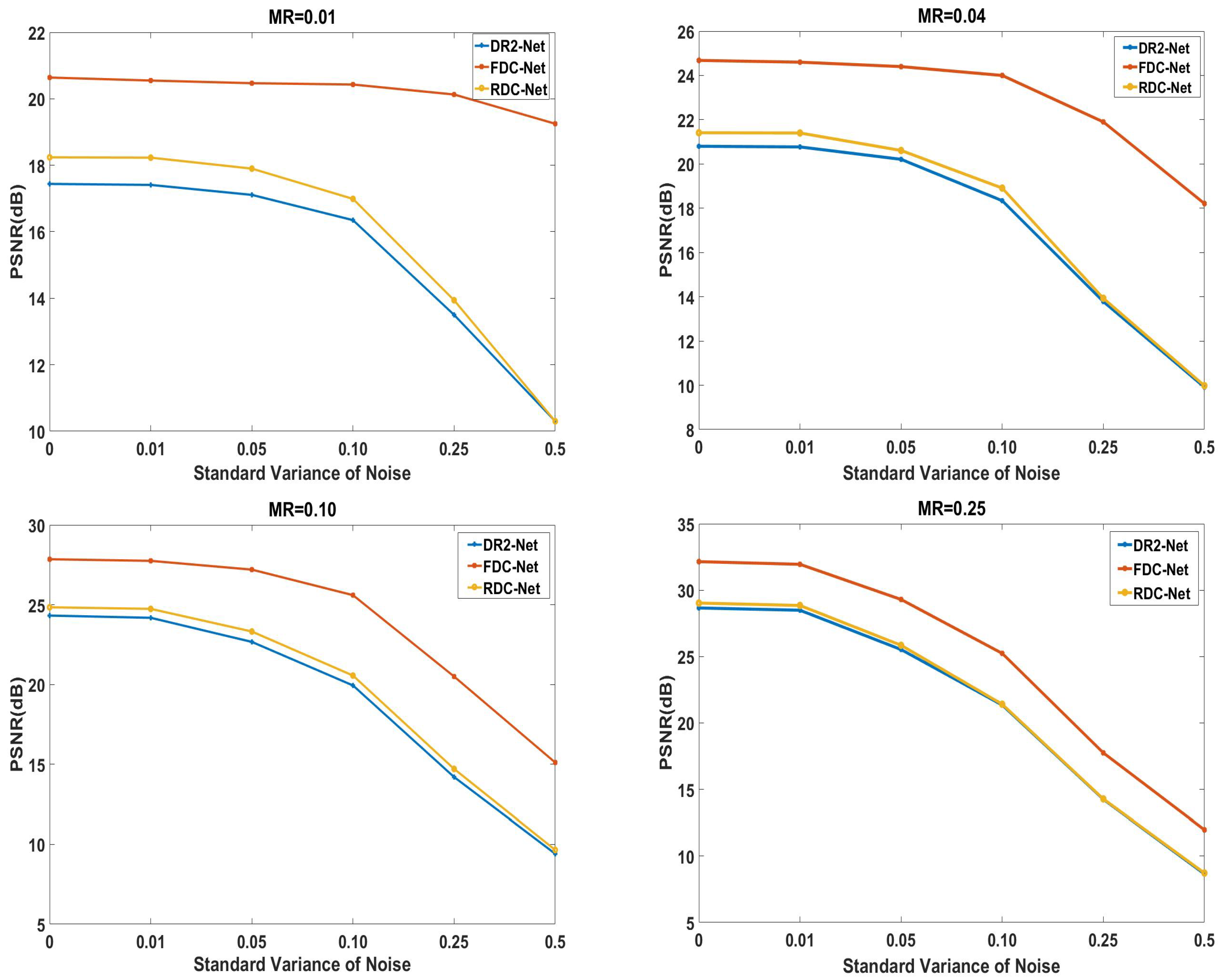

- In our method, the two residual blocks in one channel capture high-level features, while the single dense block in the other channel captures low-level features. Experimental results show that both RDC-Net and FDC-Net are more robust than DR-Net.
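The dual-channel idea in the contributions above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' implementation: the 33×33 block size, the 3×3 kernels, the feature width, the growth rate, the number of dense layers, and the 1×1 fusion convolution are all assumptions, and the helper names (`conv2d_same`, `residual_block`, `dense_block`, `dual_channel_module`, `init_weights`) are hypothetical. It shows one channel with two residual blocks running in parallel with a second channel holding one dense block, with the two outputs fused into a single reconstruction.

```python
import numpy as np


def conv2d_same(x, w):
    """'Same'-padded 2-D cross-correlation.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k.
    """
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    # windows has shape (C_in, H, W, k, k)
    windows = np.lib.stride_tricks.sliding_window_view(xp, (k, k), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w)


def relu(x):
    return np.maximum(x, 0.0)


def residual_block(x, w1, w2):
    """x + conv(relu(conv(x))): the block learns a correction to its input."""
    return x + conv2d_same(relu(conv2d_same(x, w1)), w2)


def dense_block(x, layer_weights, w_out):
    """Each layer sees the input concatenated with all earlier layer outputs."""
    features = [x]
    for w in layer_weights:
        stacked = np.concatenate(features, axis=0)
        features.append(relu(conv2d_same(stacked, w)))
    return conv2d_same(np.concatenate(features, axis=0), w_out)


def dual_channel_module(x, res_weights, dense_weights, w_fuse):
    a = x
    for w1, w2 in res_weights:  # channel A: two residual blocks
        a = residual_block(a, w1, w2)
    b = dense_block(x, *dense_weights)  # channel B: one dense block
    # Fusion by concatenation and a 1x1 convolution (an assumption).
    return conv2d_same(np.concatenate([a, b], axis=0), w_fuse)


def init_weights(rng, width=8, growth=4, n_dense_layers=3, k=3, scale=0.1):
    """Random weights with hypothetical sizes, for shape-checking only."""
    res = [
        (rng.normal(0, scale, (width, 1, k, k)),
         rng.normal(0, scale, (1, width, k, k)))
        for _ in range(2)
    ]
    layers = [
        rng.normal(0, scale, (growth, 1 + i * growth, k, k))
        for i in range(n_dense_layers)
    ]
    w_out = rng.normal(0, scale, (1, 1 + n_dense_layers * growth, 1, 1))
    w_fuse = rng.normal(0, scale, (1, 2, 1, 1))
    return res, (layers, w_out), w_fuse


rng = np.random.default_rng(0)
x = rng.random((1, 33, 33))  # stand-in for a preliminary reconstruction
res_w, dense_w, w_fuse = init_weights(rng)
y = dual_channel_module(x, res_w, dense_w, w_fuse)
print(y.shape)  # (1, 33, 33)
```

Both channels read the same input and keep its spatial size, so the module can refine a preliminary reconstruction without changing its shape.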

2. Related Work

3. Network Architecture

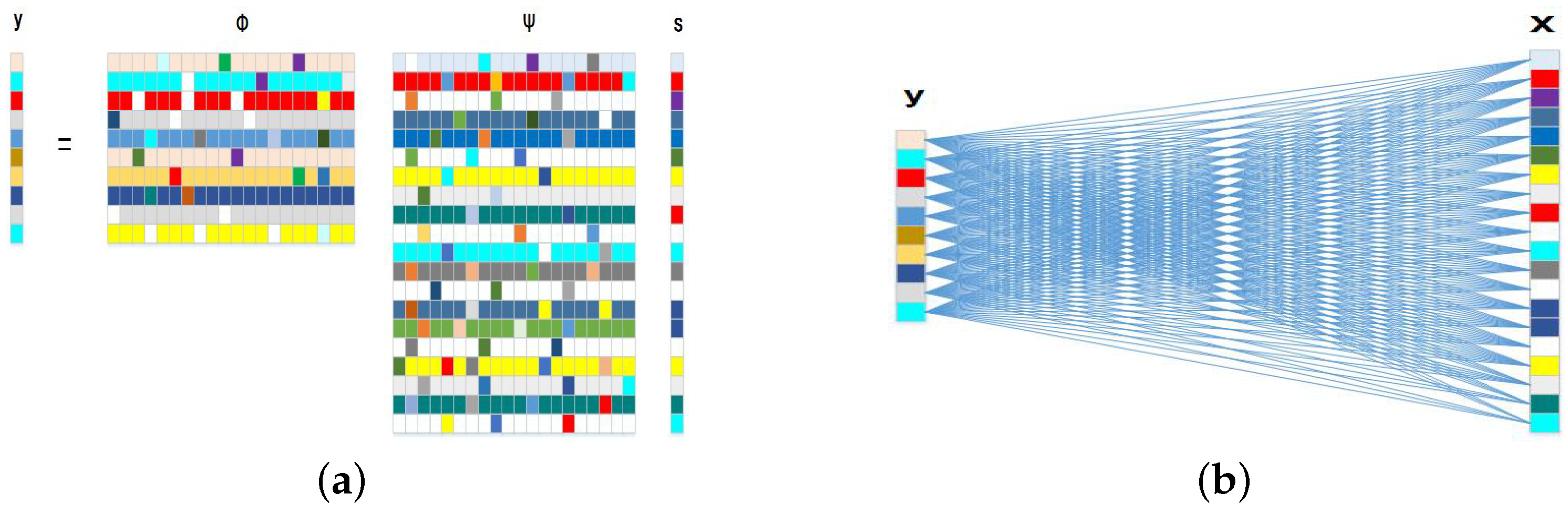

3.1. Under-Sampling and Preliminary Reconstruction

3.2. Dual-Channel Network Module

3.3. Architecture

4. Experiments

4.1. Training Data

4.2. Training Strategy

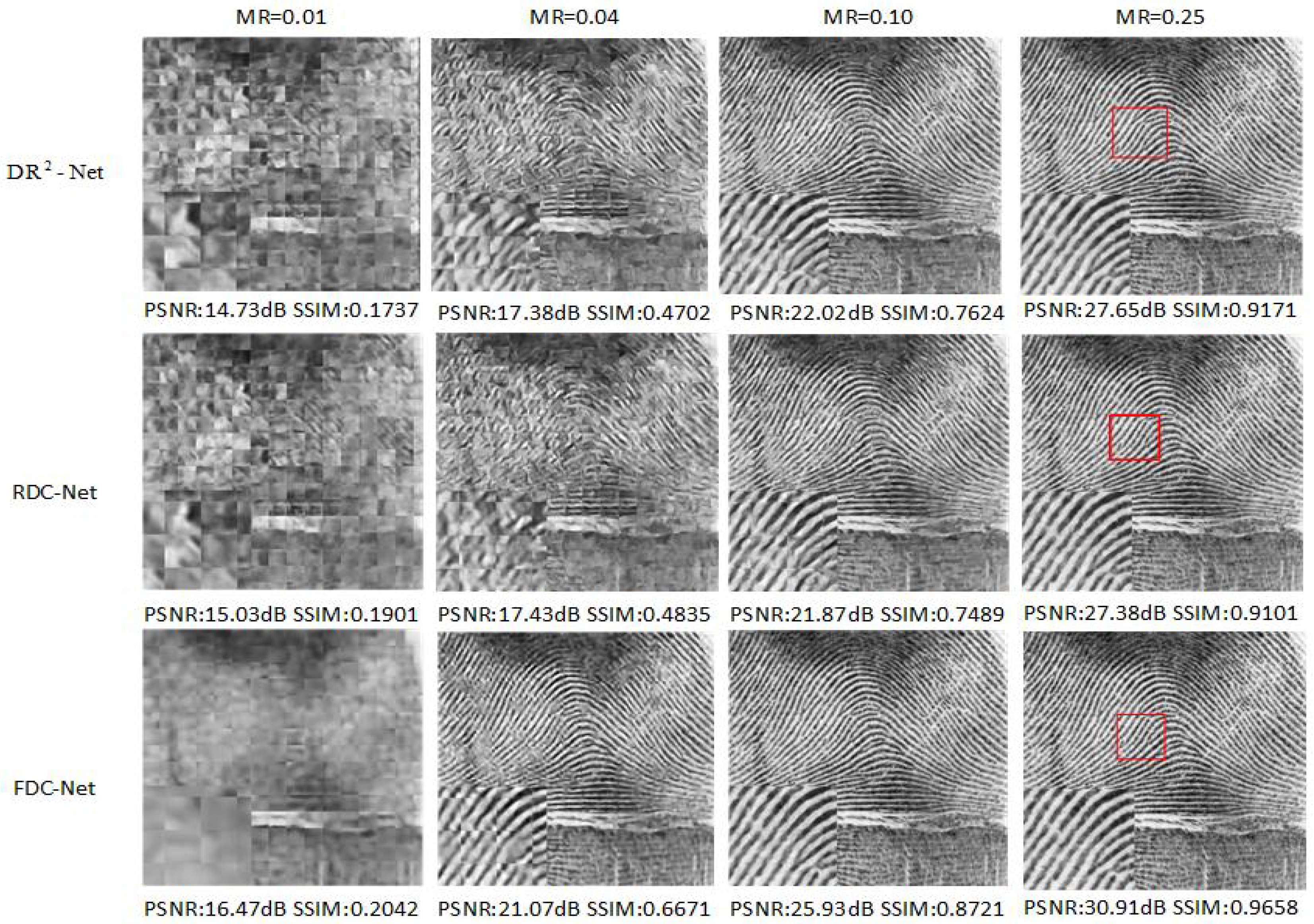

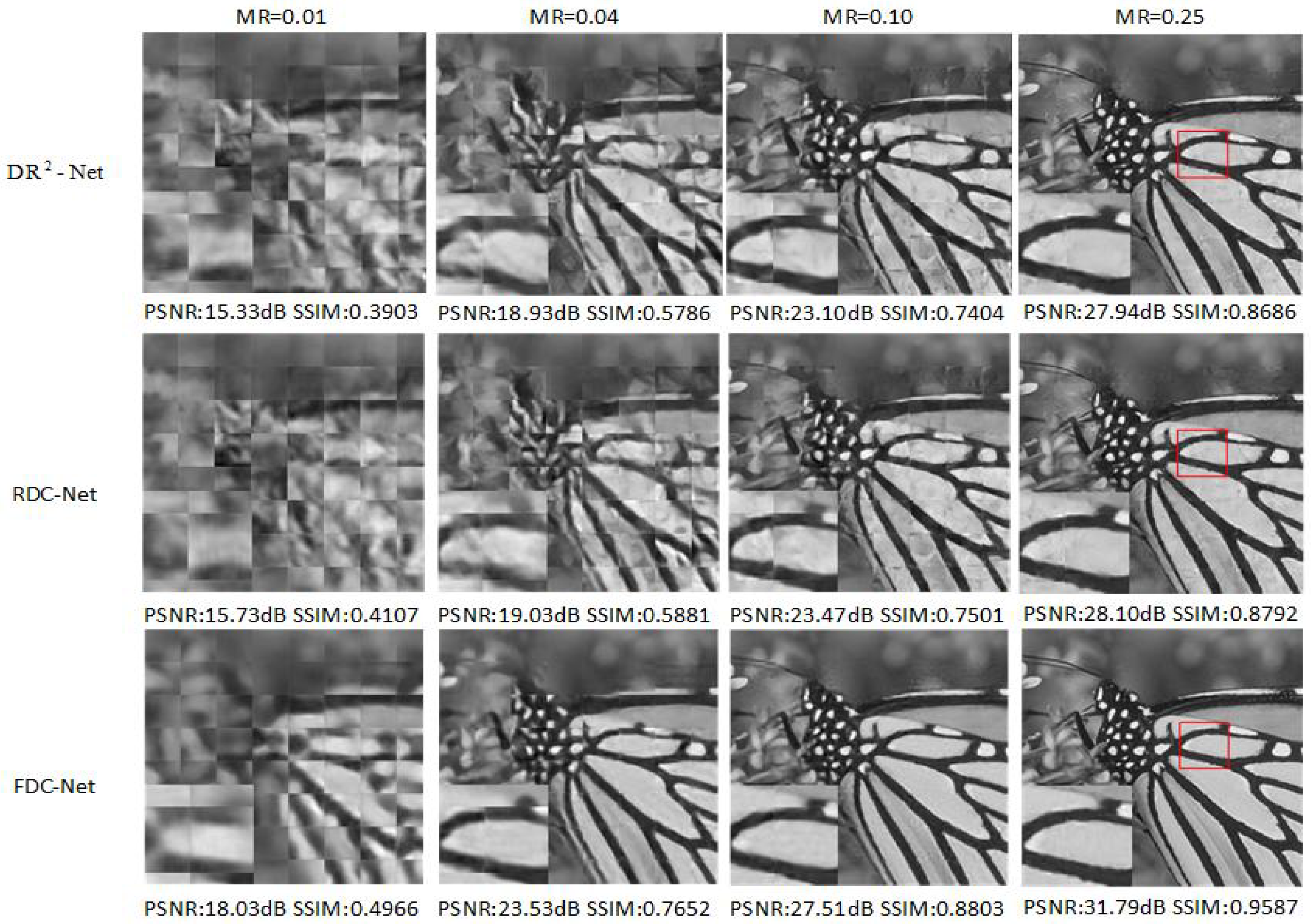

4.3. Comparison with Other Methods

4.4. Evaluation on Different Network Architectures

4.5. Robustness to Noise

4.6. Evaluation on ImageNet Val Dataset

4.7. Time Complexity and Network Convergence

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


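| Image Name | Methods | MR = 0.01, PSNR (dB) without BM3D | MR = 0.01, PSNR (dB) with BM3D | MR = 0.04, PSNR (dB) without BM3D | MR = 0.04, PSNR (dB) with BM3D | MR = 0.10, PSNR (dB) without BM3D | MR = 0.10, PSNR (dB) with BM3D | MR = 0.25, PSNR (dB) without BM3D | MR = 0.25, PSNR (dB) with BM3D |
|---|---|---|---|---|---|---|---|---|---|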

| Barbara | TVAL3 | 11.94 | 11.96 | 18.97 | 18.99 | 21.85 | 22.23 | 24.21 | 24.26 |

| NLR-CS | 5.50 | 5.86 | 11.08 | 11.56 | 14.80 | 14.84 | |||

| D-AMP | 5.48 | 5.51 | 16.37 | 16.37 | 21.23 | 21.24 | 25.08 | 25.96 | |

| ReconNet | 18.61 | 19.07 | 20.38 | 21.20 | 21.90 | 22.51 | 23.20 | 23.55 | |

| DR-Net | 18.65 | 19.10 | 20.69 | 21.31 | 22.69 | 22.84 | 25.77 | 25.99 | |

| CSRNet | 19.10 | 19.21 | 22.94 | 22.95 | 26.17 | 26.34 | |||

| RDC-Net | 21.07 | 21.18 | 25.80 | 25.91 | |||||

| Fingerprint | TVAL3 | 10.35 | 10.37 | 16.03 | 16.07 | 18.68 | 18.71 | 22.71 | 22.68 |

| NLR-CS | 4.85 | 5.19 | 9.67 | 10.10 | 12.80 | 12.84 | 23.51 | 23.52 | |

| D-AMP | 4.66 | 4.74 | 13.83 | 14.00 | 17.13 | 17.14 | 25.18 | 24.15 | |

| ReconNet | 14.82 | 14.88 | 16.91 | 16.96 | 20.75 | 20.96 | 25.57 | 25.14 | |

| DR-Net | 14.73 | 14.95 | 17.38 | 17.47 | 27.76 | ||||

| CSRNet | 21.64 | 21.91 | 27.22 | 27.49 | |||||

| RDC-Net | 15.03 | 15.05 | 17.43 | 17.45 | 21.87 | 21.89 | 27.38 | ||

| Flinstones | TVAL3 | 9.75 | 9.78 | 14.87 | 14.91 | 18.89 | 18.93 | 24.06 | 24.08 |

| NLR-CS | 4.45 | 4.76 | 8.98 | 9.26 | 12.15 | 12.24 | 22.41 | 22.66 | |

| D-AMP | 4.33 | 4.35 | 12.94 | 13.07 | 16.94 | 16.86 | 25.02 | 24.46 | |

| ReconNet | 13.96 | 14.07 | 16.31 | 16.56 | 18.92 | 19.20 | 22.46 | 22.60 | |

| DR-Net | 14.00 | 14.18 | 16.94 | 17.06 | 21.45 | ||||

| CSRNet | 14.32 | 14.39 | 20.52 | 20.82 | 25.46 | 25.47 | |||

| RDC-Net | 17.15 | 21.00 | 25.94 | 26.08 | |||||

| Lena | TVAL3 | 11.87 | 11.91 | 19.47 | 19.53 | 24.17 | 24.21 | 28.68 | 28.72 |

| NLR-CS | 5.96 | 6.26 | 11.62 | 11.98 | 15.31 | 15.34 | 29.39 | 29.67 | |

| D-AMP | 5.73 | 5.96 | 16.53 | 16.87 | 22.53 | 22.54 | 28.00 | 27.46 | |

| ReconNet | 17.87 | 18.07 | 21.28 | 21.83 | 23.83 | 24.51 | 26.52 | 26.55 | |

| DR-Net | 17.97 | 18.43 | 22.13 | 22.73 | 25.38 | 25.77 | 29.42 | 29.64 | |

| CSRNet | 22.89 | 25.72 | 25.97 | 29.55 | 29.70 | ||||

| RDC-Net | 18.69 | 18.96 | |||||||

| Monarch | TVAL3 | 11.09 | 11.12 | 16.74 | 16.75 | 21.16 | 21.16 | 27.75 | 27.77 |

| NLR-CS | 6.38 | 6.76 | 11.62 | 11.98 | 14.60 | 14.67 | 25.91 | 26.10 | |

| D-AMP | 6.21 | 6.21 | 14.57 | 14.57 | 19.00 | 19.00 | 26.39 | 26.56 | |

| ReconNet | 15.39 | 15.47 | 18.18 | 18.33 | 21.10 | 22.51 | 24.32 | 25.05 | |

| DR-Net | 15.33 | 15.50 | 18.93 | 19.23 | 23.10 | 23.54 | 27.94 | 28.30 | |

| CSRNet | 15.42 | 15.46 | 22.99 | 23.25 | 27.98 | 28.37 | |||

| RDC-Net | 19.03 | ||||||||

| Parrot | TVAL3 | 11.44 | 11.46 | 18.88 | 18.91 | 23.13 | 23.15 | 27.18 | 27.24 |

| NLR-CS | 5.12 | 5.44 | 10.60 | 10.92 | 14.14 | 14.18 | 26.53 | 26.72 | |

| D-AMP | 5.08 | 5.08 | 15.78 | 15.78 | 21.63 | 21.63 | 26.88 | 26.99 | |

| ReconNet | 17.61 | 18.31 | 20.27 | 21.06 | 22.63 | 23.25 | 25.59 | 26.22 | |

| DR-Net | 18.01 | 18.41 | 21.16 | 21.86 | 23.95 | 24.32 | 28.72 | ||

| CSRNet | 19.61 | 28.86 | 29.05 | ||||||

| RDC-Net | 19.27 | 21.86 | 21.98 | 24.45 | 24.98 | 29.01 | |||

| Boats | TVAL3 | 11.86 | 11.87 | 19.21 | 19.21 | 23.85 | 23.86 | 28.81 | 28.81 |

| NLR-CS | 5.38 | 5.73 | 10.77 | 11.22 | 14.83 | 14.86 | 29.11 | 29.25 | |

| D-AMP | 5.34 | 5.35 | 16.01 | 16.01 | 21.95 | 21.95 | 29.26 | 29.26 | |

| ReconNet | 18.49 | 18.87 | 21.38 | 21.62 | 24.15 | 24.21 | 27.30 | 27.35 | |

| DR-Net | 18.67 | 18.96 | 22.11 | 25.58 | 25.91 | 30.09 | 30.30 | ||

| CSRNet | 18.99 | 19.09 | 22.55 | 25.65 | 25.80 | 30.14 | |||

| RDC-Net | 22.20 | 22.35 | 30.35 | ||||||

| Cameraman | TVAL3 | 11.97 | 11.98 | 18.30 | 18.33 | 21.91 | 21.92 | 25.69 | 25.70 |

| NLR-CS | 5.98 | 6.36 | 11.04 | 11.46 | 14.18 | 14.22 | 24.88 | 24.97 | |

| D-AMP | 5.64 | 5.65 | 15.12 | 15.12 | 20.35 | 20.35 | 24.42 | 24.56 | |

| ReconNet | 17.11 | 17.49 | 19.28 | 19.73 | 21.29 | 21.67 | 23.16 | 23.61 | |

| DR-Net | 17.08 | 17.34 | 19.84 | 20.31 | 22.46 | 22.76 | 25.61 | 25.91 | |

| CSRNet | 17.75 | 17.90 | 20.23 | 20.38 | 22.29 | 22.53 | 25.85 | 26.15 | |

| RDC-Net | |||||||||

| Foreman | TVAL3 | 10.98 | 11.02 | 20.64 | 20.65 | 28.69 | 28.74 | 35.41 | 35.55 |

| NLR-CS | 3.92 | 4.26 | 9.08 | 9.46 | 13.53 | 13.54 | |||

| D-AMP | 3.84 | 3.84 | 16.27 | 16.31 | 25.50 | 25.53 | 35.45 | 35.06 | |

| ReconNet | 20.04 | 20.33 | 23.72 | 24.61 | 27.10 | 28.58 | 29.47 | 30.79 | |

| DR-Net | 20.59 | 21.08 | 25.34 | 26.32 | 29.20 | 30.18 | 33.53 | 34.28 | |

| CSRNet | 23.32 | 30.96 | 31.35 | 34.89 | 35.10 | ||||

| RDC-Net | 22.98 | 27.27 | 27.29 | 35.11 | 35.31 | ||||

| House | TVAL3 | 11.86 | 11.90 | 20.94 | 20.96 | 26.29 | 26.33 | 32.09 | 32.14 |

| NLR-CS | 4.96 | 5.26 | 10.66 | 11.08 | 14.77 | 14.80 | |||

| D-AMP | 5.00 | 5.01 | 16.91 | 16.37 | 24.83 | 24.73 | 33.64 | 32.96 | |

| ReconNet | 19.31 | 19.52 | 22.57 | 23.20 | 26.69 | 26.70 | 28.47 | 29.20 | |

| DR-Net | 19.61 | 19.99 | 23.91 | 24.70 | 27.52 | 28.42 | 31.82 | 32.52 | |

| CSRNet | 20.67 | 20.79 | 24.55 | 24.85 | 28.24 | 28.68 | 32.46 | 33.05 | |

| RDC-Net | 32.87 | 33.07 | |||||||

| Peppers | TVAL3 | 11.35 | 11.37 | 18.21 | 18.23 | 22.64 | 22.65 | 29.65 | |

| NLR-CS | 5.76 | 6.11 | 11.38 | 11.81 | 14.94 | 14.99 | 28.89 | 29.24 | |

| D-AMP | 5.79 | 5.84 | 16.17 | 16.46 | 21.33 | 21.38 | 29.88 | 28.96 | |

| ReconNet | 16.83 | 16.98 | 19.57 | 20.00 | 22.15 | 22.68 | 24.77 | 25.15 | |

| DR-Net | 16.90 | 17.11 | 20.32 | 20.75 | 23.72 | 24.26 | 28.48 | 29.11 | |

| CSRNet | 17.61 | 17.67 | 24.35 | 28.58 | 29.19 | ||||

| RDC-Net | 21.03 | 21.21 | 24.64 | 29.27 | |||||

| Mean | TVAL3 | 11.31 | 11.34 | 18.39 | 18.41 | 22.84 | 22.90 | 27.84 | 27.87 |

| NLR-CS | 5.30 | 5.64 | 10.59 | 10.98 | 14.19 | 14.23 | 28.05 | 28.20 | |

| D-AMP | 5.19 | 5.23 | 15.50 | 15.54 | 21.13 | 21.12 | 28.11 | 27.85 | |

| ReconNet | 17.28 | 17.55 | 19.99 | 20.46 | 22.77 | 23.34 | 25.53 | 25.93 | |

| DR-Net | 17.41 | 17.73 | 20.80 | 21.29 | 24.25 | 24.72 | 28.66 | 29.06 | |

| CSRNet | 18.35 | 24.55 | 24.81 | 28.83 | 29.11 | ||||

| RDC-Net | 18.24 | 21.41 | 21.55 | ||||||
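The PSNR values reported in these tables can be related to pixel error with a short calculation. The sketch below uses the standard definition, PSNR = 10·log10(peak² / MSE), and assumes 8-bit images with a peak value of 255; the source does not state the peak value or the exact evaluation script, so treat the function name `psnr` and its defaults as illustrative.

```python
import numpy as np


def psnr(reference, reconstruction, peak=255.0):
    """Peak signal-to-noise ratio in dB (peak=255 assumes 8-bit images)."""
    reference = np.asarray(reference, dtype=np.float64)
    reconstruction = np.asarray(reconstruction, dtype=np.float64)
    mse = np.mean((reference - reconstruction) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


# A uniform error of 10 grey levels gives MSE = 100.
ref = np.full((33, 33), 100.0)
rec = np.full((33, 33), 110.0)
print(f"{psnr(ref, rec):.2f} dB")  # 28.13 dB
```

Because the scale is logarithmic, a 1 dB gain corresponds to roughly a 21% reduction in mean squared error.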

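| Image Name | Methods | MR = 0.01, PSNR (dB) without BM3D | MR = 0.01, PSNR (dB) with BM3D | MR = 0.04, PSNR (dB) without BM3D | MR = 0.04, PSNR (dB) with BM3D | MR = 0.10, PSNR (dB) without BM3D | MR = 0.10, PSNR (dB) with BM3D | MR = 0.25, PSNR (dB) without BM3D | MR = 0.25, PSNR (dB) with BM3D |
|---|---|---|---|---|---|---|---|---|---|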

| Barbara | SDA | 18.59 | 18.76 | 20.49 | 20.86 | 22.17 | 22.39 | 23.19 | 23.21 |

| ConvCSNet | 18.14 | 18.35 | 20.85 | 21.00 | 22.95 | 23.01 | 25.85 | 25.98 | |

| ASRNet | 21.40 | 21.52 | 23.48 | 23.54 | 24.34 | 24.35 | 26.30 | 26.43 | |

| FDC-Net | |||||||||

| Fingerprint | SDA | 14.81 | 14.82 | 16.85 | 16.87 | 20.29 | 20.32 | 24.29 | 24.21 |

| ConvCSNet | 14.54 | 14.82 | 18.44 | 18.71 | 19.76 | 20.11 | 28.00 | 28.11 | |

| ASRNet | 16.20 | 16.21 | 20.98 | 28.82 | 29.23 | ||||

| FDC-Net | 21.11 | 25.93 | 26.11 | ||||||

| Flinstones | SDA | 13.91 | 13.96 | 16.21 | 16.10 | 18.40 | 18.21 | 20.88 | 20.21 |

| ConvCSNet | 15.04 | 15.32 | 17.22 | 17.58 | 19.49 | 19.82 | 26.42 | 26.53 | |

| ASRNet | 16.30 | 16.39 | 19.78 | 20.08 | 24.01 | 26.93 | 27.40 | ||

| FDC-Net | 24.55 | ||||||||

| Lena | SDA | 17.84 | 17.95 | 21.17 | 21.56 | 23.81 | 24.16 | 25.87 | 25.70 |

| ConvCSNet | 17.97 | 18.16 | 21.78 | 22.08 | 25.27 | 25.61 | 27.11 | 27.32 | |

| ASRNet | 25.74 | 25.93 | 28.54 | 28.78 | 30.65 | 30.89 | |||

| FDC-Net | 21.67 | 21.71 | |||||||

| Monarch | SDA | 15.31 | 15.38 | 18.11 | 18.19 | 20.95 | 21.04 | 23.54 | 23.32 |

| ConvCSNet | 16.31 | 16.81 | 18.92 | 19.18 | 21.76 | 22.01 | 26.59 | 26.71 | |

| ASRNet | 17.74 | 17.85 | 23.23 | 23.49 | 27.17 | 27.50 | 29.29 | 29.60 | |

| FDC-Net | |||||||||

| Parrot | SDA | 17.71 | 17.89 | 20.37 | 20.67 | 22.14 | 22.35 | 24.48 | 24.37 |

| ConvCSNet | 17.86 | 18.15 | 20.55 | 21.18 | 24.41 | 24.85 | 26.26 | 26.38 | |

| ASRNet | 21.87 | 22.01 | 24.67 | 27.68 | 29.61 | 29.80 | |||

| FDC-Net | 24.50 | 27.84 | |||||||

| Boats | SDA | 18.55 | 18.68 | 21.29 | 21.54 | 24.01 | 24.18 | 26.56 | 26.24 |

| ConvCSNet | 18.11 | 18.39 | 21.81 | 22.08 | 24.82 | 25.31 | 27.86 | 27.98 | |

| ASRNet | 25.52 | 25.72 | 28.86 | 29.17 | 31.28 | 31.64 | |||

| FDC-Net | 21.39 | 21.50 | |||||||

| Cameraman | SDA | 17.06 | 17.19 | 19.31 | 19.56 | 21.15 | 21.30 | 22.77 | 22.64 |

| ConvCSNet | 17.61 | 17.92 | 19.40 | 20.01 | 22.31 | 22.69 | 25.15 | 25.26 | |

| ASRNet | 19.77 | 19.89 | 22.74 | 22.88 | 25.00 | 25.13 | 26.46 | 26.66 | |

| FDC-Net | |||||||||

| Foreman | SDA | 20.08 | 20.24 | 23.62 | 24.09 | 26.43 | 27.16 | 28.40 | 28.91 |

| ConvCSNet | 19.09 | 19.54 | 22.46 | 22.81 | 25.97 | 26.11 | 30.39 | 30.81 | |

| ASRNet | 25.77 | 30.56 | 30.78 | 33.79 | 34.09 | 35.85 | 36.19 | ||

| FDC-Net | 25.92 | ||||||||

| House | SDA | 19.45 | 19.59 | 22.51 | 22.94 | 25.41 | 26.07 | 27.65 | 27.86 |

| ConvCSNet | 18.40 | 18.82 | 22.22 | 22.71 | 26.46 | 26.51 | 26.76 | 26.98 | |

| ASRNet | 27.82 | 28.21 | 31.47 | 33.44 | 33.84 | ||||

| FDC-Net | 23.08 | 23.14 | 31.79 | ||||||

| Peppers | SDA | 16.93 | 17.04 | 19.63 | 19.89 | 22.10 | 22.35 | 24.31 | 24.15 |

| ConvCSNet | 17.69 | 18.01 | 20.76 | 21.08 | 23.12 | 23.66 | 26.26 | 26.51 | |

| ASRNet | 20.17 | 20.33 | 24.03 | 24.32 | 27.03 | 29.72 | 30.18 | ||

| FDC-Net | 27.21 | ||||||||

| Mean | SDA | 17.29 | 17.41 | 19.96 | 20.21 | 22.43 | 22.68 | 24.72 | 24.55 |

| ConvCSNet | 17.34 | 17.66 | 20.40 | 20.77 | 23.30 | 23.61 | 26.97 | 27.14 | |

| ASRNet | 20.51 | 20.66 | 24.40 | 24.65 | 27.65 | 27.96 | 29.85 | 30.17 | |

| FDC-Net | |||||||||

| Models | MR = 0.01, PSNR (dB) without BM3D | MR = 0.01, PSNR (dB) with BM3D | MR = 0.04, PSNR (dB) without BM3D | MR = 0.04, PSNR (dB) with BM3D | MR = 0.10, PSNR (dB) without BM3D | MR = 0.10, PSNR (dB) with BM3D | MR = 0.25, PSNR (dB) without BM3D | MR = 0.25, PSNR (dB) with BM3D |
|---|---|---|---|---|---|---|---|---|
| One-densblock | 20.41 | 20.52 | 24.17 | 24.82 | 27.29 | 27.55 | 30.29 | 30.85 |
| Two-resblocks | 20.37 | 20.50 | 24.41 | 24.72 | 27.52 | 27.83 | 30.57 | 30.99 |
| one-resblock+one-densblock | 20.43 | 20.48 | 24.43 | 24.75 | 27.50 | 27.78 | 30.47 | 30.98 |
| two-resblocks+two-densblock | 20.60 | 20.78 | 24.57 | 24.80 | 27.54 | 27.76 | 31.64 | 32.06 |
| three-resblocks+one-densblock | | | 24.60 | 24.89 | 27.49 | 27.68 | 31.55 | 31.81 |
| FDC-Net | 20.64 | 20.82 | | | | | | |

| Models | MR = 0.01 | MR = 0.04 | MR = 0.10 | MR = 0.25 |
|---|---|---|---|---|
| DR-Net | 23.27 | 25.90 | 27.78 | 29.10 |
| RDC-Net | 23.87 | 26.92 | 29.76 | 32.07 |
| FDC-Net | | | | |

| Models | MR = 0.01 | MR = 0.04 | MR = 0.10 | MR = 0.25 |
|---|---|---|---|---|
| DR-Net | 0.0686 | 0.0676 | 0.0680 | 0.0678 |
| RDC-Net | 0.0590 | 0.0591 | 0.0595 | 0.0591 |
| FDC-Net | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Zhongqiang, Dahua Gao, Xuemei Xie, and Guangming Shi. 2019. "Dual-Channel Reconstruction Network for Image Compressive Sensing." Sensors 19, no. 11: 2549. https://doi.org/10.3390/s19112549