1. Introduction

The classic Kalman filter (KF) [1] requires an accurate model of the dynamic system. In many realistic situations, however, the model may contain unknown inputs in the process or measurement equations. State estimation for linear time-varying discrete-time systems with unknown inputs has been widely studied. One widely adopted approach treats the unknown inputs as part of the system state and estimates both together. This leads to the augmented state Kalman filter (ASKF), whose computational cost grows with the augmented state dimension. In 1969, Friedland [2] proposed a two-stage Kalman filter (TSKF) to reduce the computational complexity of the ASKF; it is optimal when the unknown input is constant. Building on [2], Hsieh et al. proposed an optimal two-stage Kalman filter (OTSKF) for dynamic systems with random bias in 1999 [3] and a robust two-stage algorithm for dynamic systems with unknown inputs in 2000 [4]. In [3,4,5], the unknown inputs are assumed to follow a first-order autoregressive (AR(1)) process, and the two-stage algorithms are optimal in the mean square error (MSE) sense. However, the optimality of the ASKF and OTSKF rests on the premise that the initial value of the unknown input is known correctly. With an incorrect initial value, the ASKF and OTSKF perform poorly (see Examples 1 and 2 in Section 5), especially when the unknown input is non-stationary, as considered in [4,5]. Because the exact initial value of the unknown input is difficult to know, these approaches need to be improved. Many other researchers have also studied the problem of unknown inputs in recent years [6,7,8].

Many advanced systems now use a large number of sensors. When the processing center receives all measurements from the local sensors in time, centralized Kalman filtering (CKF) can be carried out, and the resulting state estimates are optimal in the MSE sense. However, communication bandwidth is limited, and the system has relatively low survivability in unfavorable conditions such as military environments. For these reasons, each local sensor is often required to first run a Kalman filter on its own observations for local use. It then transmits the processed data, i.e., its local state estimate, to a fusion center. The fusion center must then fuse all the received local estimates into a globally optimal or suboptimal state estimate. Distributed Kalman filtering (DKF) has been studied extensively. Under common conditions, in particular cross-independent sensor noises, optimal DKF fusion methods were proposed in [9,10,11]. These methods were proved to be identical to the CKF using all sensor measurements, and hence globally optimal. A Kalman filtering fusion with feedback was also proposed there, and a rigorous performance analysis of it was presented in [12]. These results hold only when the observation noises are uncorrelated across sensors. Song et al. [13] showed that, under a mild condition, the fused state estimate still equals the CKF when the sensor noises are cross-correlated. Similar to [13], Luo et al. [14] proposed in 2008 a distributed Kalman filtering fusion with random state transition and measurement matrices, i.e., random parameter matrices Kalman filtering. They also gave a mild condition under which the distributed fused state estimate is equivalent to the centralized random parameter matrices Kalman filter using all sensor measurements: the expectations of all sensor measurement matrices must have full row rank. To the best of our knowledge, few studies have applied the augmented methods mentioned above to multisensor systems with unknown inputs. The main reason is that these methods greatly increase the state dimension and computational complexity of a multisensor system.

In this paper, we propose an estimator for dynamic systems with unknown inputs that is optimal in the MSE sense. Unlike the work in [2,3,4,5], the unknown inputs are eliminated rather than estimated. The unknown inputs are assumed to follow an AR(1) process and are eliminated by the measurement difference method. This converts the original dynamic system into a remodeled system with correlated process and measurement noises. The new measurement noise of the remodeled system is not only one-step correlated in time but also correlated with the process noise. We propose a globally optimal recursive state estimation algorithm for this remodeled system. Compared with the ASKF and OTSKF, the new algorithm is still optimal in the MSE sense but has a lower computational burden. Additionally, we show that its performance does not depend on the initial value of the unknown input. For multisensor systems with unknown inputs, we show that the centralized filter can still be expressed as a linear combination of the local estimates. The distributed fusion therefore performs exactly as well as the centralized fusion, with low computational complexity. Numerical examples are given to support our analysis.

The remainder of this paper is organized as follows. Section 2 formulates the problem. Section 3 presents an optimal estimation algorithm for dynamic systems with unknown inputs. Section 4 gives a distributed algorithm for multisensor systems with unknown inputs and shows that the fused state estimate equals the centralized Kalman filter using all sensor measurements. Section 5 provides several simulation examples, and Section 6 summarizes our analysis and discusses possible future work.

2. Problem Formulation

Consider a discrete time dynamic system:

where

is the system state,

is the measurement vector, the process noise and measurement noise

are zero-mean white noise sequences with the following covariances:

where:

is the unknown input. Matrices

and

are of appropriate dimensions, and it is assumed that

is of full column rank, i.e.,

. Therefore, we have

, where the superscript “

” denotes the Moore-Penrose pseudoinverse. It is assumed that

follows a first-order autoregressive (AR(1)) process, i.e.,

where

is nonsingular and

is a zero-mean white noise sequence with covariance:

This model is widely considered in [2,3,4,5]. For example, in radar systems the measurement often contains a fixed unknown bias, or an unknown bias that gradually increases as the target range increases. Such biases can be described by Equation (6).
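The displayed equations of this section are missing from this version of the text. For reference, the following model is consistent with the description above. The symbols are our assumed notation and may differ from the paper's, and the mutual uncorrelatedness of the three noise sequences is our assumption.

$$
\begin{aligned}
x_{k+1} &= F_k x_k + v_k, \\
y_k &= H_k x_k + A_k d_k + w_k, \\
d_{k+1} &= B_k d_k + \omega_k,
\end{aligned}
\qquad
\begin{aligned}
&\mathbb{E}[v_k v_j^{\mathsf T}] = Q_k\,\delta_{kj},\\
&\mathbb{E}[w_k w_j^{\mathsf T}] = R_k\,\delta_{kj},\\
&\mathbb{E}[\omega_k \omega_j^{\mathsf T}] = Q^{\omega}_k\,\delta_{kj}.
\end{aligned}
$$

Here $B_k$ is nonsingular, and $A_k$ has full column rank, so $A_k^{+}A_k = I$. The noises $v_k$, $w_k$, $\omega_k$ are zero-mean and, in this sketch, uncorrelated with each other and with the initial state and input.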

The ASKF and OTSKF are two classic algorithms for this problem. The ASKF treats

and

as an augmented state and estimates them together, while the OTSKF estimates

and

separately and then fuses the two estimates to obtain the optimal estimate. In fact, the unknown inputs can easily be eliminated by the difference method. Denote:

Equations (1) and (2) can be represented as:

where:

From Equation (12), it is easy to see that the new measurement noise

is one-step correlated in time and is also correlated with the process noise, i.e.,
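The difference step can be sketched in NumPy under assumed, time-invariant notation that is not necessarily the paper's. The model is $x_{k+1}=Fx_k+v_k$, $y_k=Hx_k+Ad_k+w_k$, $d_{k+1}=Bd_k+\omega_k$, with $A$ of full column rank. Multiplying $y_k$ by $M=ABA^{+}$ and subtracting it from $y_{k+1}$ cancels $d_k$, because $A^{+}A=I$. What remains is $z_k = y_{k+1}-My_k = \bar H x_k + \eta_k$, where $\bar H = HF - MH$ and $\eta_k = Hv_k + A\omega_k + w_{k+1} - Mw_k$. The noise $\eta_k$ shares $w_{k+1}$ with $\eta_{k+1}$, which makes it one-step correlated, and its term $Hv_k$ correlates it with the process noise. The function names and random values are hypothetical, and the paper's Equations (9)–(12) may arrange the terms differently.

```python
import numpy as np

def difference_model(F, H, A, B):
    """Differenced measurement z_k = y_{k+1} - M y_k = H_bar x_k + eta_k.

    Assumed time-invariant notation: x_{k+1} = F x_k + v_k,
    y_k = H x_k + A d_k + w_k, d_{k+1} = B d_k + omega_k, A full column rank.
    """
    M = A @ B @ np.linalg.pinv(A)     # A B A^+, cancels A B d_k inside y_{k+1}
    H_bar = H @ F - M @ H             # new measurement matrix acting on x_k
    return M, H_bar

def difference_noise_moments(H, A, M, Q, R, Qw):
    """Second moments of eta_k = H v_k + A omega_k + w_{k+1} - M w_k."""
    R_eta = H @ Q @ H.T + A @ Qw @ A.T + R + M @ R @ M.T   # E[eta_k eta_k^T]
    C_one = -R @ M.T                  # E[eta_k eta_{k+1}^T]: both contain w_{k+1}
    S = Q @ H.T                       # E[v_k eta_k^T]: correlation with process noise
    return R_eta, C_one, S

# One-step check on random data (hypothetical sizes n=4, m=3, p=2).
rng = np.random.default_rng(0)
n, m, p = 4, 3, 2
F = np.eye(n) + 0.1 * np.diag(np.ones(n - 1), 1)
H = rng.standard_normal((m, n))
A = rng.standard_normal((m, p))       # full column rank with probability 1
B = np.array([[0.9, 0.1], [0.0, 0.8]])
x_k, d_k = rng.standard_normal(n), rng.standard_normal(p)
v_k, om_k = rng.standard_normal(n), rng.standard_normal(p)
w_k, w_k1 = rng.standard_normal(m), rng.standard_normal(m)

y_k = H @ x_k + A @ d_k + w_k
y_k1 = H @ (F @ x_k + v_k) + A @ (B @ d_k + om_k) + w_k1
M, H_bar = difference_model(F, H, A, B)
z_k = y_k1 - M @ y_k                  # the unknown input d_k has cancelled
eta_k = H @ v_k + A @ om_k + w_k1 - M @ w_k
```

Because $z_k$ needs $y_{k+1}$, an estimate of $x_k$ built from it becomes available one step later than a standard KF estimate. This is why the comparisons in Section 5 align time indices.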

4. Multisensor Fusion

The

-sensor dynamic system is given by:

where

is the system state,

is the measurement vector in the

-th sensor,

is the process noise and

is the measurement noise,

is the unknown input in the

-th sensor. Matrices

and

are of appropriate dimensions.

We assume the system has the following statistical properties:

- (1)

Each sensor satisfies the assumptions in Section 2.

- (2)

is of full column rank, and hence .

- (3)

is a sequence of independent variables.
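Assumption (3) makes the original sensor noises independent, but the differenced noises are not, as Remark 3 below points out. Each sensor's differenced noise contains the same process noise $v_k$. The sketch below computes the stacked one-step covariance under assumed notation: $\eta^{(i)}_k = H_i v_k + A_i\omega^{(i)}_k + w^{(i)}_{k+1} - M_i w^{(i)}_k$ with $M_i = A_iB_iA_i^{+}$. It assumes time-invariant matrices, with $w^{(i)}$ and $\omega^{(i)}$ independent across sensors, and checks the result by Monte Carlo. The helper name and the numbers are hypothetical and are not the paper's Example 3.

```python
import numpy as np

def stacked_eta_cov(H_list, A_list, M_list, Q, R_list, Qw_list):
    """Covariance of the stacked differenced noises [eta^(1); ...; eta^(L)]."""
    L = len(H_list)
    rows = []
    for i in range(L):
        row = []
        for j in range(L):
            blk = H_list[i] @ Q @ H_list[j].T       # shared v_k couples all sensors
            if i == j:                              # sensor-specific contributions
                blk = (blk + A_list[i] @ Qw_list[i] @ A_list[i].T
                       + R_list[i] + M_list[i] @ R_list[i] @ M_list[i].T)
            row.append(blk)
        rows.append(row)
    return np.block(rows)

rng = np.random.default_rng(3)
n, N = 4, 200_000
H1 = np.array([[1.0, 0, 0, 0], [0, 0, 1.0, 0]])
H2 = np.array([[1.0, 0.5, 0, 0], [0, 0, 1.0, 0.5]])
A1 = A2 = np.eye(2)
B1, B2 = 0.9 * np.eye(2), 0.5 * np.eye(2)
M1, M2 = A1 @ B1 @ np.linalg.pinv(A1), A2 @ B2 @ np.linalg.pinv(A2)
Q, R1, R2, Qw = 0.1 * np.eye(n), np.eye(2), 2.0 * np.eye(2), 0.05 * np.eye(2)
Sigma = stacked_eta_cov([H1, H2], [A1, A2], [M1, M2], Q, [R1, R2], [Qw, Qw])

# Monte Carlo: independent w and omega per sensor, one common v for both.
v = rng.multivariate_normal(np.zeros(n), Q, N)

def sample_eta(H, A, M, R):
    w0 = rng.multivariate_normal(np.zeros(2), R, N)   # w_k
    w1 = rng.multivariate_normal(np.zeros(2), R, N)   # w_{k+1}
    om = rng.multivariate_normal(np.zeros(2), Qw, N)  # omega_k
    return v @ H.T + om @ A.T + w1 - w0 @ M.T

eta = np.hstack([sample_eta(H1, A1, M1, R1), sample_eta(H2, A2, M2, R2)])
Sigma_mc = eta.T @ eta / N            # sample covariance (known zero mean)
```

The off-diagonal block `Sigma[:2, 2:]` equals $H_1QH_2^{\mathsf T}$, which is nonzero here even though no sensor noise is shared.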

As in Equations (9) and (10), Equation (21) can be converted to:

where:

The stacked measurement equation is written as:

where:

According to Theorem 1, the local Kalman filter at the

-th sensor is:

with covariances of filtering error given by:

where:

According to Theorem 1, the centralized Kalman filter using all sensor data is given by:

The covariance of the filtering error is given by:

where:

denotes the block-diagonal matrix formed from its arguments.
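Theorem 1 is not restated in this section, so the sketch below is a stand-in reference rather than the paper's algorithm. It computes the MMSE estimate of $x_k$ from $z_0,\dots,z_k$ for a single differenced model. It uses a textbook route that the paper deliberately avoids: appending the white noise $w_k$ to the state. This turns the one-step-correlated measurement noise into a Kalman filter whose process and measurement noises are correlated at the same time step. The notation is assumed and time-invariant: $x_{k+1}=Fx_k+v_k$, $y_k=Hx_k+Ad_k+w_k$, $d_{k+1}=Bd_k+\omega_k$, $M=ABA^{+}$, $z_k=y_{k+1}-My_k$. Any filter that is optimal for the same differenced measurements and the same prior on $x_0$ should produce the same estimates, so this sketch could serve as a check for the local and centralized filters above. `difference_kf`, `simulate`, and all numbers are hypothetical.

```python
import numpy as np

def difference_kf(Z, F, H, A, B, Q, R, Qw, x0, P0):
    """Estimates of x_k from z_0..z_k (i.e. from y_0..y_{k+1}).

    Reference sketch, not Theorem 1: the state is augmented with w_k.
    No prior on the unknown input d_0 is needed because z_k does not contain d.
    """
    n, m, p = F.shape[0], H.shape[0], A.shape[1]
    M = A @ B @ np.linalg.pinv(A)
    H_bar = H @ F - M @ H
    # Augmented state s_k = [x_k; w_k]; driving noise u_k = [v_k; w_{k+1}; omega_k].
    Phi = np.zeros((n + m, n + m)); Phi[:n, :n] = F
    G = np.zeros((n + m, n + m + p)); G[:, :n + m] = np.eye(n + m)
    C = np.hstack([H_bar, -M])              # z_k = C s_k + D u_k
    D = np.hstack([H, np.eye(m), A])
    U = np.zeros((n + m + p, n + m + p))
    U[:n, :n], U[n:n + m, n:n + m], U[n + m:, n + m:] = Q, R, Qw
    s = np.concatenate([x0, np.zeros(m)])
    P = np.zeros((n + m, n + m)); P[:n, :n], P[n:, n:] = P0, R
    xs, Ps = [], []
    for z in Z:
        S = C @ P @ C.T + D @ U @ D.T       # innovation covariance
        S_inv = np.linalg.inv(S)
        K = P @ C.T @ S_inv                 # gain for s_k
        nu = z - C @ s                      # innovation
        s_f, P_f = s + K @ nu, P - K @ C @ P
        xs.append(s_f[:n]); Ps.append(P_f[:n, :n])
        # u_k also drives s_{k+1}, so predict with its conditional mean given z_k.
        K_u = U @ D.T @ S_inv
        X = -K @ D @ U                      # cross-covariance of s- and u-errors
        U_f = U - K_u @ D @ U
        s = Phi @ s_f + G @ (K_u @ nu)
        P = Phi @ P_f @ Phi.T + G @ U_f @ G.T + Phi @ X @ G.T + G @ X.T @ Phi.T
    return np.array(xs), np.array(Ps)

def simulate(T, F, H, A, B, Q, R, Qw, x0, d0, rng):
    """Draw x_0..x_T and y_0..y_T from the assumed model."""
    x, d, xs, ys = x0.copy(), d0.copy(), [], []
    for _ in range(T + 1):
        xs.append(x)
        ys.append(H @ x + A @ d + rng.multivariate_normal(np.zeros(len(R)), R))
        x = F @ x + rng.multivariate_normal(np.zeros(len(Q)), Q)
        d = B @ d + rng.multivariate_normal(np.zeros(len(Qw)), Qw)
    return np.array(xs), np.array(ys)

# Hypothetical constant-velocity example with a stationary AR(1) bias.
rng = np.random.default_rng(1)
F = np.kron(np.eye(2), np.array([[1.0, 1.0], [0.0, 1.0]]))
H = np.array([[1.0, 0, 0, 0], [0, 0, 1.0, 0]])
A, B = np.eye(2), 0.95 * np.eye(2)
Q, R, Qw = 0.01 * np.eye(4), np.eye(2), 0.01 * np.eye(2)
x0, P0 = np.array([0.0, 1.0, 0.0, 1.0]), np.eye(4)
X, Y = simulate(50, F, H, A, B, Q, R, Qw, x0, np.array([20.0, -20.0]), rng)
M = A @ B @ np.linalg.pinv(A)
Z = Y[1:] - Y[:-1] @ M.T                   # z_k = y_{k+1} - M y_k, k = 0..49
x_hat, P_hat = difference_kf(Z, F, H, A, B, Q, R, Qw, x0, P0)
```

Appending $w_k$ keeps every step a standard Kalman update, at the cost of $m$ extra state components. By the paper's account, Theorem 1 works with the $n$-dimensional state directly.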

Remark 3. There are two key points in expressing the centralized filtering Equations (27) and (28) in terms of the local filtering:

(1) From the single-sensor measurement noise of the converted system in Equations (22) and (23), it can be seen that the sensor noises of the converted system are cross-correlated even if the original sensor noises are mutually independent.

(2) in Equation (27) is not directly stacked from the local in Equation (26), and it includes in its expression. This makes our problem more complicated than the previous distributed problems in [9,10,11,12,13,14,21]. Next, we solve these two problems to express the centralized filtering Equation (28) in terms of the local filtering. We assume that

is of full column rank. Thus, we have

. Using (28), we obtain:

Substituting (29) and (30) into (27), we have:

We assume that

is of full column rank, i.e.,

. Thus, we have

. Then, using (24), we obtain:

To express the centralized filtering

in terms of the local filtering, by (25), (32) and (33), we have:

where

is the

i-th column block of

.

Thus, substituting (34) into (31) yields:

Following the derivation of Equation (35), and using Equations (24), (26), (29) and (32), we have:

Thus, the centralized filter is expressed in terms of the local filters. Therefore, the distributed fused state estimate equals the centralized Kalman filter using all sensor measurements, i.e., the distributed fusion achieves the best (globally optimal) performance.
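The derivation above is specific to the differenced model and its cross-correlated noises. The underlying idea is that a centralized update can be rebuilt from quantities each sensor computes locally. That idea is easiest to see in the classical setting of [9,10,11], with cross-independent sensor noises. The sketch below shows only that simpler case, for one measurement update in information form. Each local filter may use its own prediction, as in fusion without feedback. It does not implement Equations (35) and (36). `info_update`, `fuse`, and the random data are hypothetical.

```python
import numpy as np

def info_update(x_pred, P_pred, H, R, y):
    """Standard KF measurement update, written in information form."""
    P = np.linalg.inv(np.linalg.inv(P_pred) + H.T @ np.linalg.solve(R, H))
    x = P @ (np.linalg.solve(P_pred, x_pred) + H.T @ np.linalg.solve(R, y))
    return x, P

def fuse(x_pred, P_pred, local):
    """Rebuild the centralized update from local (x_i, P_i, x_i_pred, P_i_pred)."""
    info, vec = np.linalg.inv(P_pred), np.linalg.solve(P_pred, x_pred)
    for x_i, P_i, xp_i, Pp_i in local:
        # Differences of local information recover H_i^T R_i^{-1} H_i and H_i^T R_i^{-1} y_i.
        info = info + np.linalg.inv(P_i) - np.linalg.inv(Pp_i)
        vec = vec + np.linalg.solve(P_i, x_i) - np.linalg.solve(Pp_i, xp_i)
    P = np.linalg.inv(info)
    return P @ vec, P

rng = np.random.default_rng(2)
n = 4
x_pred, P_pred = rng.standard_normal(n), 2.0 * np.eye(n)   # fusion-center prediction
Hs = [rng.standard_normal((2, n)) for _ in range(2)]
Rs = [0.5 * np.eye(2), 1.5 * np.eye(2)]
ys = [rng.standard_normal(2) for _ in range(2)]
local = []
for H_i, R_i, y_i in zip(Hs, Rs, ys):
    xp_i, Pp_i = rng.standard_normal(n), 3.0 * np.eye(n)   # each sensor's own prediction
    x_i, P_i = info_update(xp_i, Pp_i, H_i, R_i, y_i)
    local.append((x_i, P_i, xp_i, Pp_i))
# Centralized update with stacked measurements; independent noises give block-diagonal R.
R_all = np.block([[Rs[0], np.zeros((2, 2))], [np.zeros((2, 2)), Rs[1]]])
x_c, P_c = info_update(x_pred, P_pred, np.vstack(Hs), R_all, np.concatenate(ys))
x_f, P_f = fuse(x_pred, P_pred, local)
```

With cross-correlated noises, as in the converted system here, the block-diagonal $R$ in the centralized update no longer holds. This is why the extra steps above are needed.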

Remark 4. From this new algorithm, it is easy to see that the local sensors should transmit , , and to the fusion center to obtain the global fusion result. The augmented methods greatly increase the state dimension and computational complexity of a multisensor system. The difference method does not increase the state dimension, so its computational complexity for a multisensor system is lower than that of the augmented methods.
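The sketch below puts the dimension argument of Remark 4 in numbers. It builds the ASKF augmented model in assumed notation, with state $[x_k; d_k]$. It also counts filter state dimensions when several sensors each carry their own unknown input. The cubic ratio is only a rough proxy for covariance-propagation cost, not a timing result, and it ignores measurement-update cost. The helper names and matrices are hypothetical.

```python
import numpy as np

def askf_model(F, H, A, B, Q, Qw):
    """Augmented ASKF model with state [x_k; d_k] (assumed notation)."""
    n, p = F.shape[0], B.shape[0]
    Z = np.zeros((n, p))
    F_a = np.block([[F, Z], [Z.T, B]])          # x and d evolve independently
    H_a = np.hstack([H, A])                     # y_k = H x_k + A d_k + w_k
    Q_a = np.block([[Q, Z], [Z.T, Qw]])         # process noises v_k and omega_k
    return F_a, H_a, Q_a

def filter_state_dims(n, p_per_sensor):
    """State dimension: augmented (one d^(i) per sensor) vs. difference method."""
    return n + sum(p_per_sensor), n

def cubic_cost_ratio(n, p_per_sensor):
    """Rough O(dim^3) covariance-propagation cost ratio, augmented / difference."""
    aug, diff = filter_state_dims(n, p_per_sensor)
    return (aug / diff) ** 3

F = np.kron(np.eye(2), np.array([[1.0, 1.0], [0.0, 1.0]]))  # hypothetical CV model
H = np.array([[1.0, 0, 0, 0], [0, 0, 1.0, 0]])
F_a, H_a, Q_a = askf_model(F, H, np.eye(2), 0.9 * np.eye(2),
                           0.01 * np.eye(4), 0.01 * np.eye(2))
print(filter_state_dims(4, [2, 2]))   # (8, 4)
print(cubic_cost_ratio(4, [2, 2]))    # 8.0
```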

5. Numerical Examples

In this section, several simulations are carried out for dynamic systems with unknown inputs. It is assumed that the unknown input in this paper. The unknown input is a stationary time series if all eigenvalues of have modulus less than 1; otherwise, it is a non-stationary time series. The performance of the new algorithm (denoted as Difference KF) in these two cases is discussed in Examples 1 and 2, respectively.
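The stationarity criterion can be checked numerically. The hypothetical helpers below test whether the spectral radius of an AR(1) matrix is below 1. They also track the norm of $B^k d_0$, the part of the unknown input that its initial value keeps contributing. This part dies out in the stationary case and does not for, e.g., $B=I$. This mirrors the reasoning used in Examples 1 and 2; it is not a simulation of the filters.

```python
import numpy as np

def is_stationary(B):
    """AR(1) d_{k+1} = B d_k + omega_k is stationary iff all |eig(B)| < 1."""
    return bool(np.max(np.abs(np.linalg.eigvals(B))) < 1.0)

def deterministic_part_norms(B, d0, steps):
    """Norms ||B^k d0|| for k = 0..steps-1: the initial value's lasting influence."""
    e, out = np.asarray(d0, dtype=float), []
    for _ in range(steps):
        out.append(float(np.linalg.norm(e)))
        e = B @ e
    return out

print(is_stationary(np.array([[0.5, -0.5], [0.5, 0.5]])))    # True: |eig| ~ 0.707
print(deterministic_part_norms(0.5 * np.eye(2), [1.0, 0.0], 3))  # [1.0, 0.5, 0.25]
print(deterministic_part_norms(np.eye(2), [3.0, 4.0], 2))        # [5.0, 5.0]
```

The complex-eigenvalue case is why the criterion is stated in terms of the modulus of the eigenvalues.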

Example 1. A two-dimensional target tracking problem is considered. The target dynamic models are given by Equations (1)–(7). The state transition matrices are given by: , and the measurement matrix is given by: . Suppose is an identity matrix with appropriate dimensions and . In this case, is a stationary time series. The target starts at with the initial value . The covariance matrices of the noises are given by: . In the following, the computing time and performance of the ASKF, OTSKF and Difference KF are compared.

Remark 2 shows that the computational complexities of the three algorithms are polynomials of the same order. We now compare their computing times in this example.

Table 1 lists the computing times of the three algorithms over 1000 Monte Carlo runs; the new algorithm is the fastest in this example.

Hsieh et al. [3] proved that the OTSKF is equivalent to the ASKF, so the two algorithms give the same tracking results. To keep the figure clear, we compare only the following six algorithms:

Algorithm 1: KF without considering the unknown input.

Algorithm 2: ASKF with an accurate initial value of the unknown input .

Algorithm 3: OTSKF with an accurate initial value of the unknown input .

Algorithm 4: ASKF with a wrong initial value of the unknown input .

Algorithm 5: ASKF with an inaccurate initial value of the unknown input .

Algorithm 6: Difference KF without any information about the initial value of the unknown input.

The initial states of the six algorithms are set at

, the initial

,

. Using 100 Monte Carlo runs, the estimation performance of each algorithm is evaluated by the second moment of the tracking error:
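The formula for this second moment is missing from this version of the text. The sketch below assumes a common Monte Carlo form that averages the squared Euclidean error over runs at each step, $\frac{1}{N}\sum_{j=1}^{N}\lVert x_k^{(j)}-\hat x_k^{(j)}\rVert^2$. This section does not say whether all state components or only positions are used, so the hypothetical helper takes an optional component index.

```python
import numpy as np

def tracking_mse(x_true, x_est, idx=None):
    """Per-step Monte Carlo estimate of E||x_k - xhat_k||^2.

    x_true, x_est: arrays of shape (runs, steps, n). idx optionally restricts the
    error to some state components (e.g. positions); this choice is an assumption.
    """
    err = np.asarray(x_true, dtype=float) - np.asarray(x_est, dtype=float)
    if idx is not None:
        err = err[..., idx]
    return np.mean(np.sum(err ** 2, axis=-1), axis=0)

# Toy check with made-up arrays: 2 runs, 3 steps, 2 state components.
truth = np.zeros((2, 3, 2))
est = np.ones((2, 3, 2))
mse_all = tracking_mse(truth, est)          # each step: 1 + 1 = 2
mse_first = tracking_mse(truth, est, [0])   # each step: 1
```

The helper does not shift time indices. For the Difference KF, `x_est` should already hold the estimates chosen by the comparison rule described next.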

Note that the Difference KF uses

to estimate

at step

, whereas the KF, ASKF and OTSKF use only

to estimate

at step

. For a fair comparison, the estimate

of the Difference KF is compared with the estimate

of the other five algorithms. As

,

is almost equal to a random white noise with small covariance after several steps and the influence of the initial value

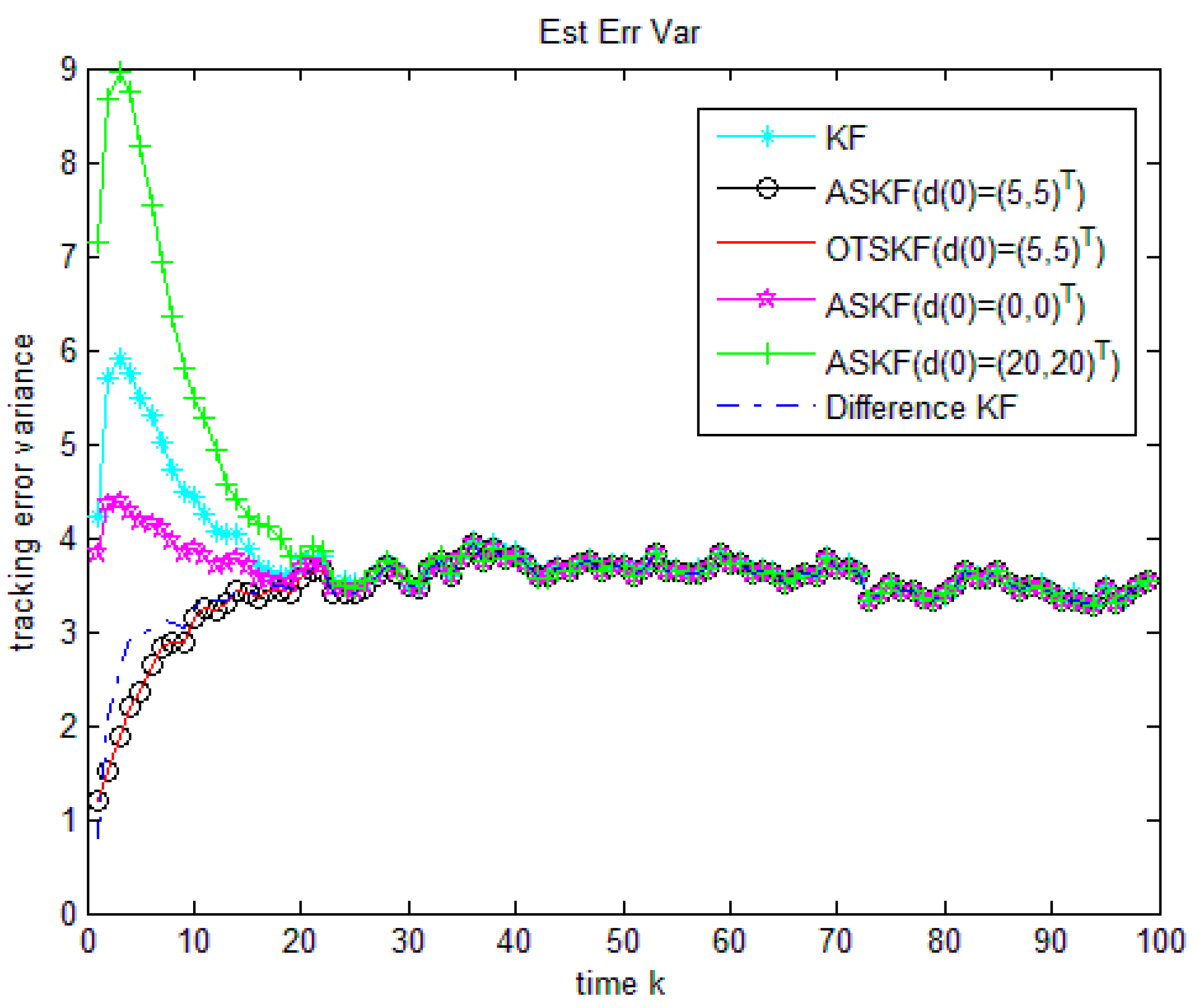

will be gradually weakened. The tracking errors of the six methods are compared in

Figure 1 and

Table 2. It can be noticed that no matter whether the initial values of the unknown input in ASKF and OTSKF are accurate or wrong, the tracking results of the six algorithms are almost the same after about 25 steps. However, it should be noticed that the Difference KF performs better than the ASKF with inaccurate initial value of unknown measurement in the first stage, which is important for some practical conditions, for instance, in multi-target tracking problems, due to data association errors and heavy clutters, tracking has to restart very often. Therefore, in order to derive an entirely good tracking, initial work status at each tracking restarting should be as good as possible.

Example 2. The dynamic equations are the same as in Example 1. Assume . This model has been considered in [4,5]. Here, is a non-stationary time series. Non-stationary unknown inputs are common in practice; for instance, for an air target, the unknown radar bias often increases as the distance between the target and the radar changes. The target starts at with the initial value . The following six algorithms are compared:

Algorithm 1: KF without considering the unknown input.

Algorithm 2: ASKF with an accurate initial value of the unknown input .

Algorithm 3: OTSKF with an accurate initial value of the unknown input .

Algorithm 4: ASKF with a wrong initial value of the unknown input .

Algorithm 5: ASKF with an inaccurate initial value of the unknown input .

Algorithm 6: Difference KF without any information about the initial value of the unknown input.

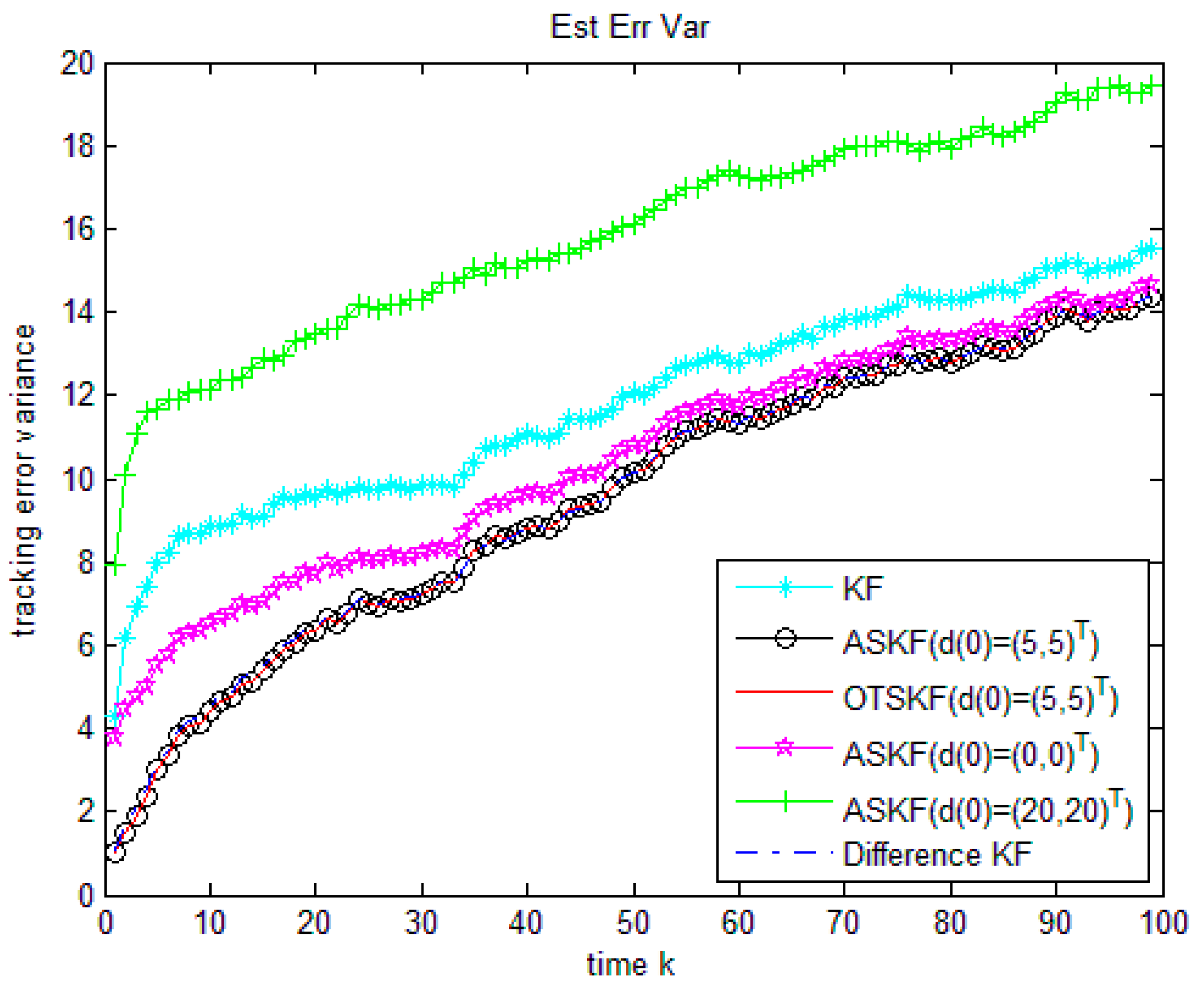

Figure 2 and Table 3 compare the tracking errors of the six methods. The new algorithm and the ASKF and OTSKF with an accurate initial value of the unknown input are all optimal in the MSE sense, and their performances are almost identical. The KF without considering the unknown input performs worse because it does not use any information about the unknown input. The numerical examples also show that the performance of the ASKF degrades once the initial value of the unknown input is inaccurate. If the initial value of the unknown input is largely biased, the ASKF performs even worse than the KF that ignores the unknown input. This is because

in this example, the influence of the incorrect initial value

always persists. In contrast, the new algorithm does not depend on the initial value of the unknown input and still performs well.

Examples 1 and 2 show that the performance of the Difference KF is almost the same as that of the ASKF and OTSKF with an accurate initial value of the unknown input. If the initial value of the unknown input is largely biased, the performance of the ASKF and OTSKF is badly affected. Because the exact initial value of the unknown input is not easy to obtain, the Difference KF is a better option than the ASKF and OTSKF in practice.

Example 3. A two-sensor Kalman filtering fusion problem with unknown inputs is considered. The object dynamics and measurement equations are modeled as follows: . The state transition matrix and the measurement matrices are the same as in Example 1, and and are identity matrices with appropriate dimensions. The target starts at with the initial value .

The covariance matrix of the process noise is given by: . The covariance matrices of the measurement noises and the unknown inputs are diagonal, given by .

The following three algorithms are compared:

Algorithm 1: Centralized KF without considering unknown input.

Algorithm 2: The centralized fusion of the Difference KF.

Algorithm 3: The distributed fusion of the Difference KF.

The initial states of the three algorithms are set at , and the initial . The estimation performance of each algorithm is evaluated by the second moment of the tracking error over 100 Monte Carlo runs.

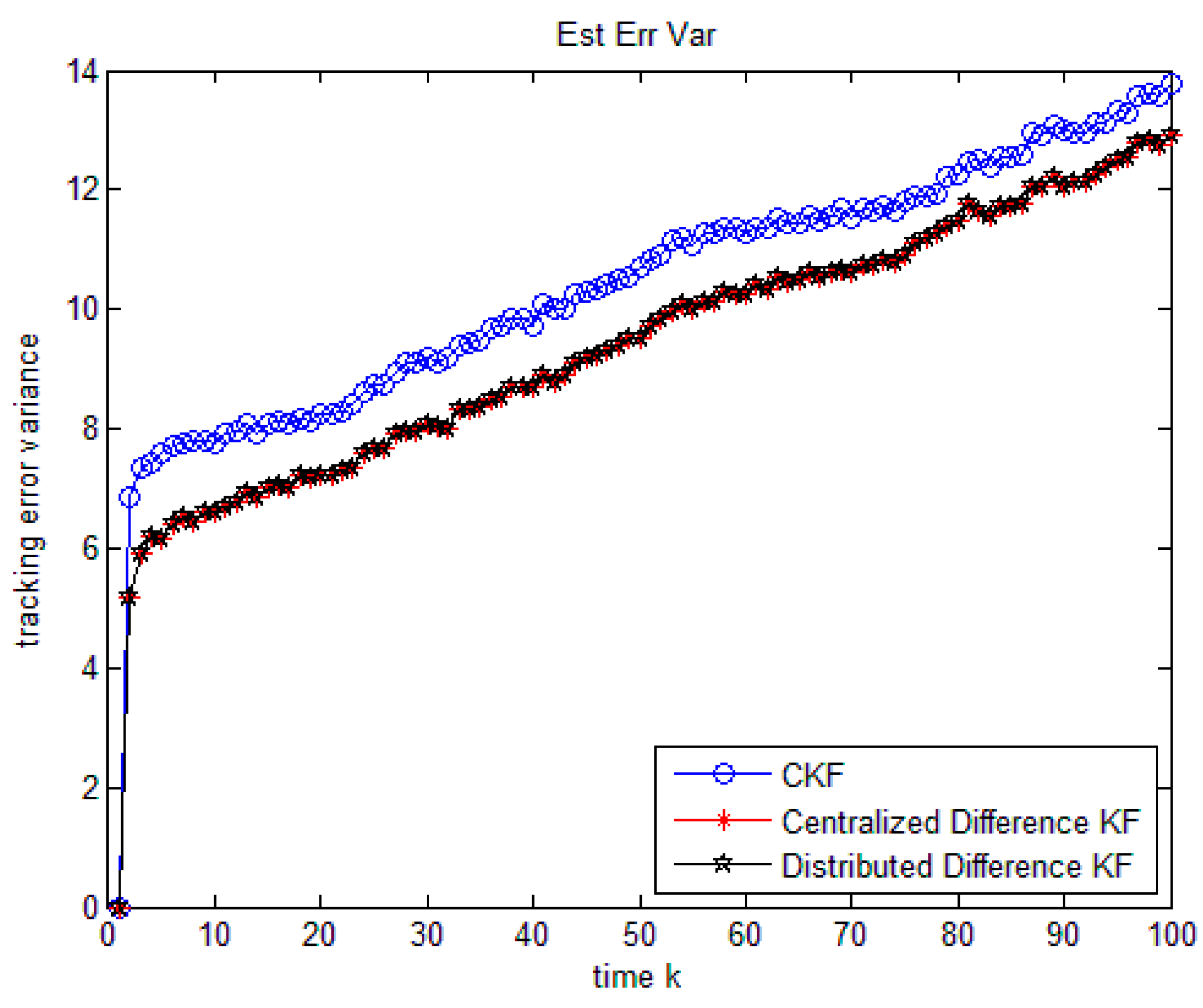

Figure 3 and Table 4 show that the distributed fusion and the centralized fusion of the new algorithm give exactly the same results. In addition, the fusion of the new algorithm performs better than the centralized KF that ignores the unknown input. Thus, the distributed algorithm offers not only global optimality but also good survivability in adverse conditions.