1. Introduction

Synthetic aperture radar (SAR) operates regardless of light and weather conditions and can observe the Earth’s surface around the clock [1,2]. SAR is widely applied in civil and military fields such as resource exploration, ecological environment monitoring, climate change research, military mapping, and military reconnaissance [3,4,5]. With the continuous development of SAR technology, automatic target recognition (ATR) [6,7,8] of SAR images has attracted great attention over the years. SAR images are formed by electromagnetic scattering, so they are not visually intuitive and are hard to interpret directly; they are also sensitive to aspect and depression angles [9]. Even small variations in these angles cause significant changes in target images, which increases the difficulty of classification.

In general, an integrated SAR ATR system may consist of three stages: detection, discrimination, and classification. Detection and discrimination reject clutter false alarms and select the image chips, that is, regions of interest (ROIs), that contain candidate targets. The ROIs are then sent to the classifier, which decides the target class [10,11]. In the literature, SAR ATR techniques generally include the traditional template matching method, model-based algorithms, and feature-based methods using, for example, principal component analysis (PCA) [12,13], the wavelet transform [14,15], or the Radon transform [16]. In addition, considering the scattering characteristics of SAR images, there are two typical models: the conditionally Gaussian model [11] and the scattering center model [17]. The conditionally Gaussian model is a stochastic signal model that treats a SAR image as a column vector and models it as a complex Gaussian random process. The scattering center model provides a concise and physically relevant description of the target radar signature. For ground targets, a global scattering center model is proposed in [18], which is established offline using range profiles at multiple viewing angles. In this paper, we focus on model-based machine learning and treat each SAR image as a two-dimensional data matrix.

With the development of machine learning, learning-based methods have also been successfully applied to SAR target image classification. Recently, sparse representation has become a useful technique for representing a signal as a linear combination of known signals in which the representation coefficients are sparse [19,20]. Classification models based on sparse representation offer a high recognition rate and robustness to strong noise. In particular, Zhang et al. [21] proposed a multi-view joint sparse representation method for SAR ATR, which exploits the correlation among multiple views of the same target at different aspects without knowing the pose and achieves better recognition results. Dong et al. [22,23] studied SAR target recognition based on joint sparse representation with monogenic features, and then extended the approach to the Grassmann manifold, exploiting the similarity between sets of monogenic components on Grassmann manifolds for target recognition while avoiding high dimensionality and redundancy. Liu et al. [24] investigated the Dempster-Shafer fusion of multiple sparse representations for SAR target image recognition, which can describe both the detail and the global features of targets. This method makes use of prior information: dictionaries are constructed from the samples of each configuration to better capture the detail information of the SAR images.

Although sparse representation has obtained promising results, some problems remain in practice. For example, the dictionaries used in sparse representation are usually composed of global aspect training samples, leading to high storage and computation costs. Dictionary learning can be used to address this issue. For natural image recognition, Jiang et al. [25] proposed a label consistent K-singular value decomposition (LC-KSVD) algorithm, which introduces a binary class label sparse code matrix to encourage samples from the same class to have similar sparse codes and obtained the optimal solution efficiently in experiments. In 2014, Gu et al. [26] developed a projective dictionary pair learning (DPL) framework that jointly learns a synthesis dictionary and an analysis dictionary for signal representation and discrimination; the dictionary pair learning process avoids $l_0$-norm or $l_1$-norm optimization and reduces the time complexity of the training and testing phases. Projection transform methods are also very effective in computer vision. Kahaki [27] proposed the mean projection transform (MPT) as a corner classifier, which produced fewer false-positive (FP) and false-negative (FN) points. Moreover, the detected points showed better repeatability, localization, and accuracy of repeatability across original and transformed images.

For SAR target image recognition, Sun et al. [28] proposed a method based on dynamic sparse representation and dictionary learning. The learned dictionaries are smaller and more distinctive among different classes, which speeds up recognition and improves accuracy. Song et al. [29] reported a sparse representation SAR target recognition algorithm with supervised discriminative dictionary learning based on histogram of oriented gradients (HOG) features. The method can reliably capture the structures of targets in SAR images and achieves state-of-the-art performance. Liu et al. [30] introduced scattering center feature extraction and target recognition methods based on sparse representation and dictionary refinement to decrease the cost of computation and storage. It is worth noting that, in the above dictionary learning approaches to SAR target recognition, training samples from all aspects are used as atoms, so the aspect characteristics of SAR targets are neglected. Of course, there are other methods that use local information. For instance, Liu et al. [31] proposed a method based on a deep belief network and local spatial information for polarimetric SAR (POL-SAR) image classification, which makes full use of the prior knowledge of POL-SAR data and overcomes the sensitivity to extracted features and the slow execution of traditional methods. Cao et al. [32] developed a method for joint sparse representation of heterogeneous multi-view SAR images over a locally adaptive dictionary, which achieves high recognition accuracy by combining more target information and adjusting the inter-correlation information.

This paper aims to exploit the local aspect characteristics of SAR targets. Based on the projective dictionary pair learning algorithm, a new approach to SAR target image classification is proposed.

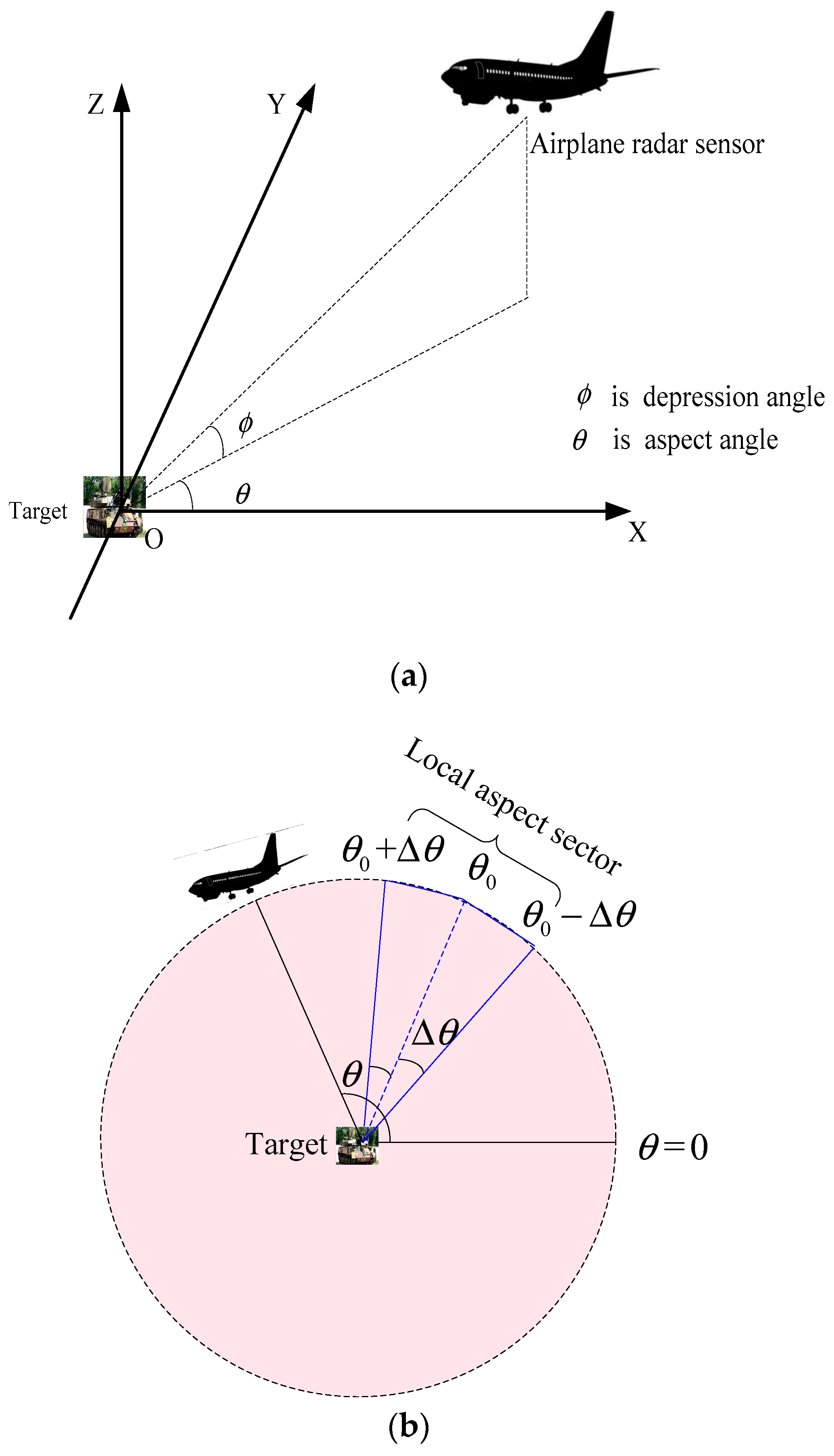

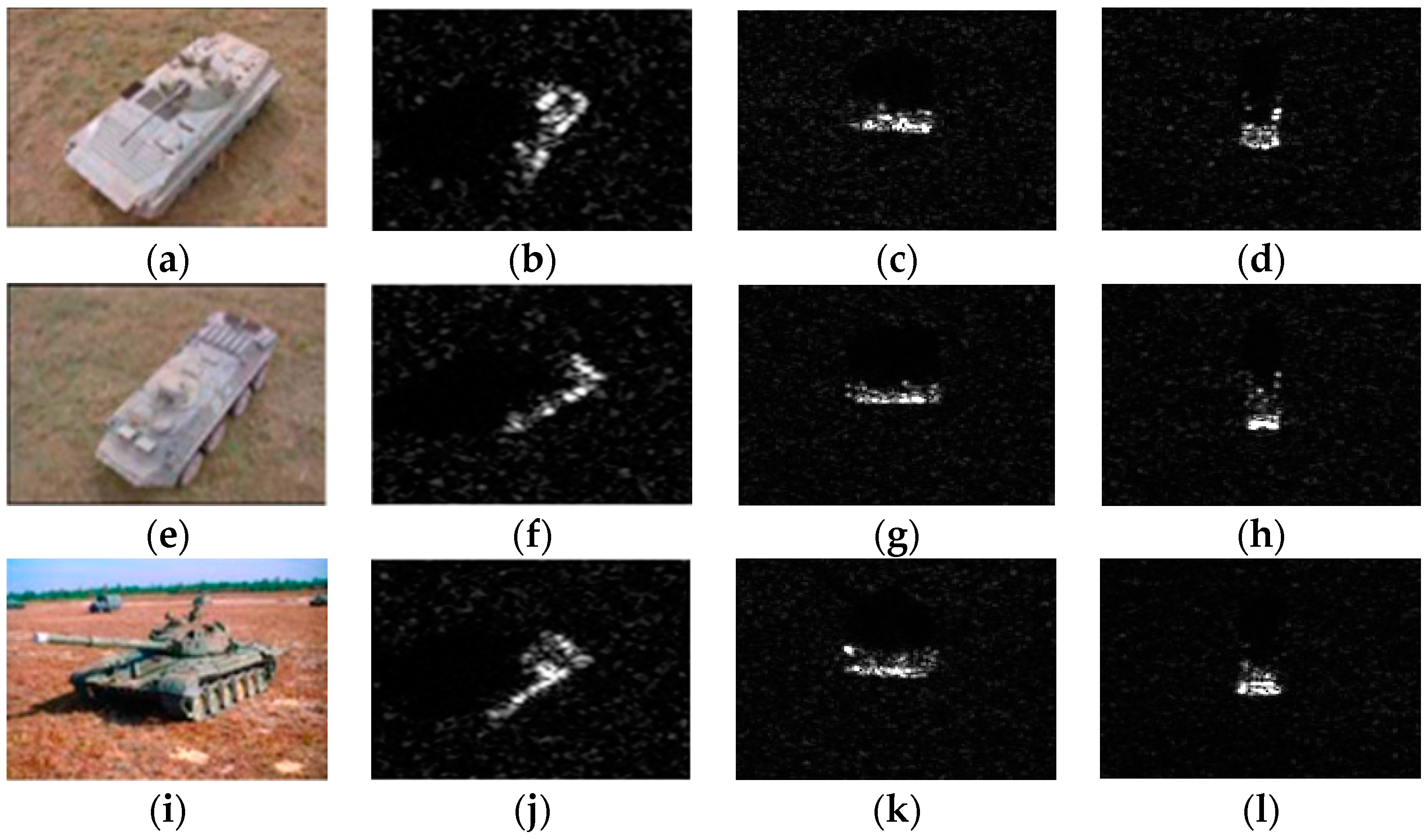

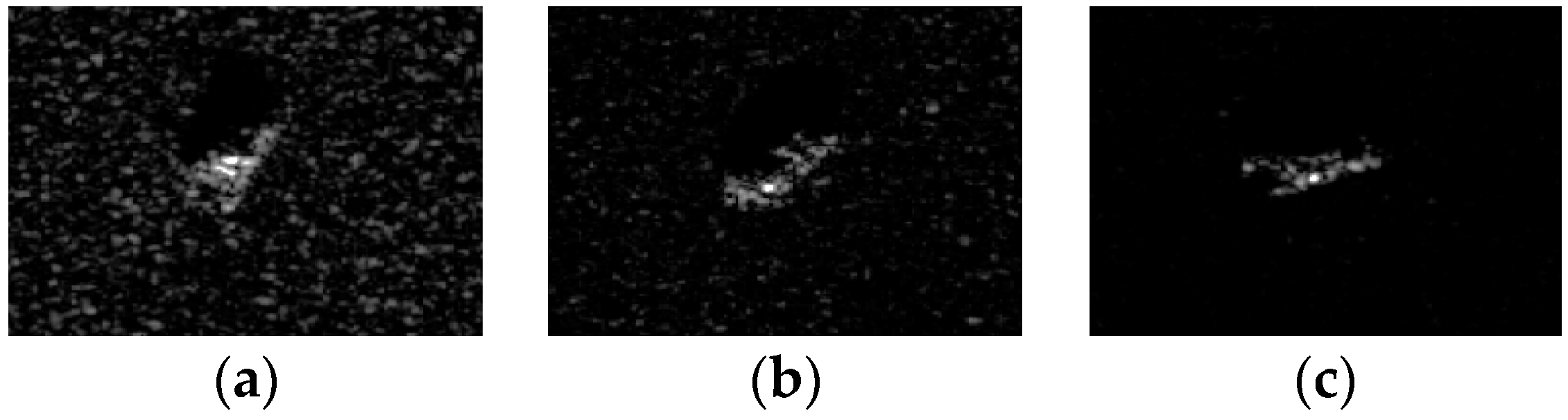

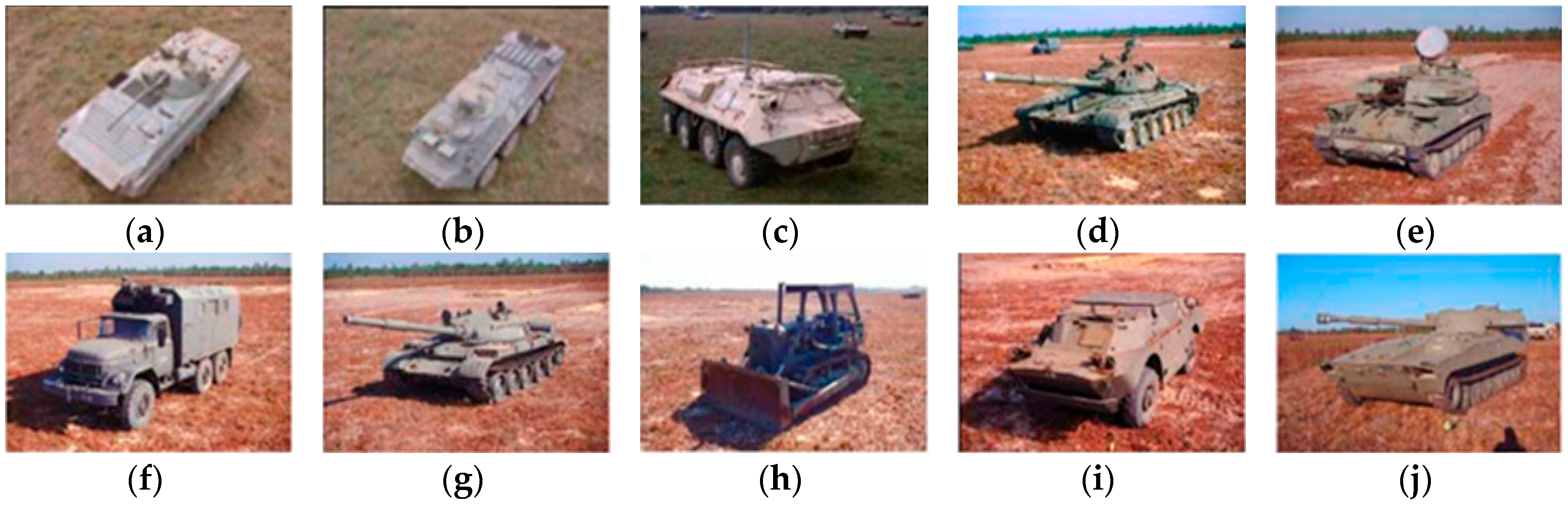

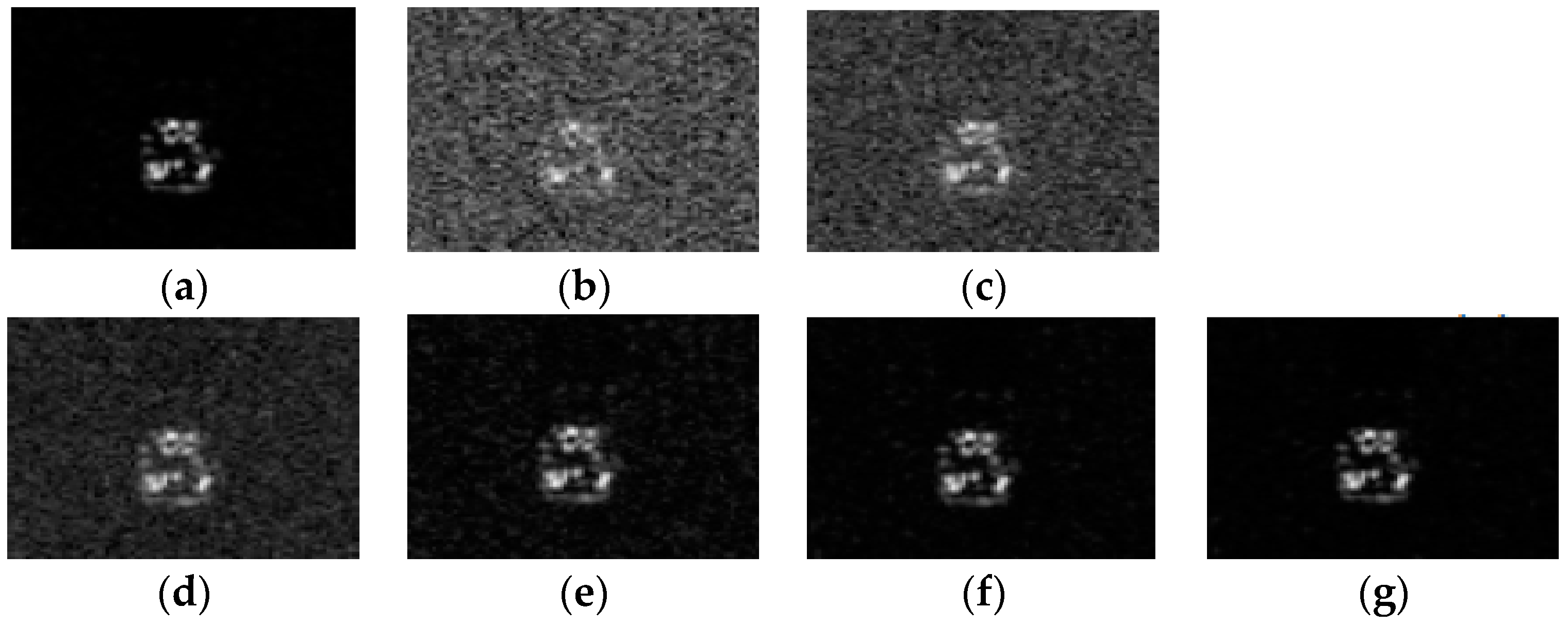

Figure 1 briefly illustrates the concept of local aspect. Figure 1a shows the aspect angle and depression angle during radar imaging. Figure 1b depicts the local aspect sector and the global aspect. A local aspect sector is a small range of aspect variation within which the target scattering characteristics do not change significantly, leading to similar images, as shown in Figure 1c. In contrast, SAR images with large aspect differences, from 0.5° to 359.5°, are distinct from each other, as shown in Figure 1d. As discussed earlier, most previous SAR target image recognition methods treat images of the same target acquired at all aspect angles from 0° to 360° as equally correlated. In other words, they do not consider how the similarity between the test sample and the training samples varies with aspect.

The aforementioned sparse representation-based recognition models assume that a test sample can be expressed as a linear combination of global aspect training samples. However, this assumption is not well justified. For the same target, when its position relative to the radar varies, changes in its scattering structure alter the positions and intensities of the strong scattering points, which changes the echoes. When the aspect changes greatly, the echoes of the target are clearly different. Therefore, SAR target images are closely tied to the aspect at which the target is imaged, and two images at widely separated aspects carry very different information. Given these characteristics, the global aspect training samples actually lie on a nonlinear manifold. However, because the structural scattering of the target is stable over a small range of local aspects, the test sample can be represented linearly by the training samples near the corresponding local aspect sector.
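The local linearity argument can be illustrated numerically with a toy example. The sketch below does not use SAR data: the function `signature` is a hypothetical stand-in that maps an aspect angle to a point on a smooth closed curve, and the chosen angles are arbitrary. It only shows that a point on a nonlinear curve is well approximated by a linear combination of nearby points, but not by points far away on the curve.

```python
import numpy as np


def signature(theta_deg, harmonics=4):
    """Toy aspect-dependent 'image': a point on a smooth closed curve in R^(2*harmonics)."""
    t = np.deg2rad(theta_deg)
    k = np.arange(1, harmonics + 1)
    return np.concatenate([np.cos(k * t), np.sin(k * t)])


def residual(test_angle, train_angles):
    """Least-squares residual of the test signature over the training signatures."""
    A = np.column_stack([signature(a) for a in train_angles])
    b = signature(test_angle)
    coef, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(np.linalg.norm(A @ coef - b))


near = residual(68.5, [64.0, 67.0, 71.0])     # training aspects close to the test aspect
far = residual(68.5, [150.0, 220.0, 300.0])   # training aspects far from the test aspect
print(f"residual with nearby aspects:  {near:.4f}")
print(f"residual with distant aspects: {far:.4f}")
```

In this toy setting the residual over nearby aspects is much smaller than the residual over distant ones, which mirrors the motivation for restricting the representation to a local aspect sector.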

The main contribution of this paper is a new SAR target image recognition method based on adaptive local aspect dictionary pair learning.

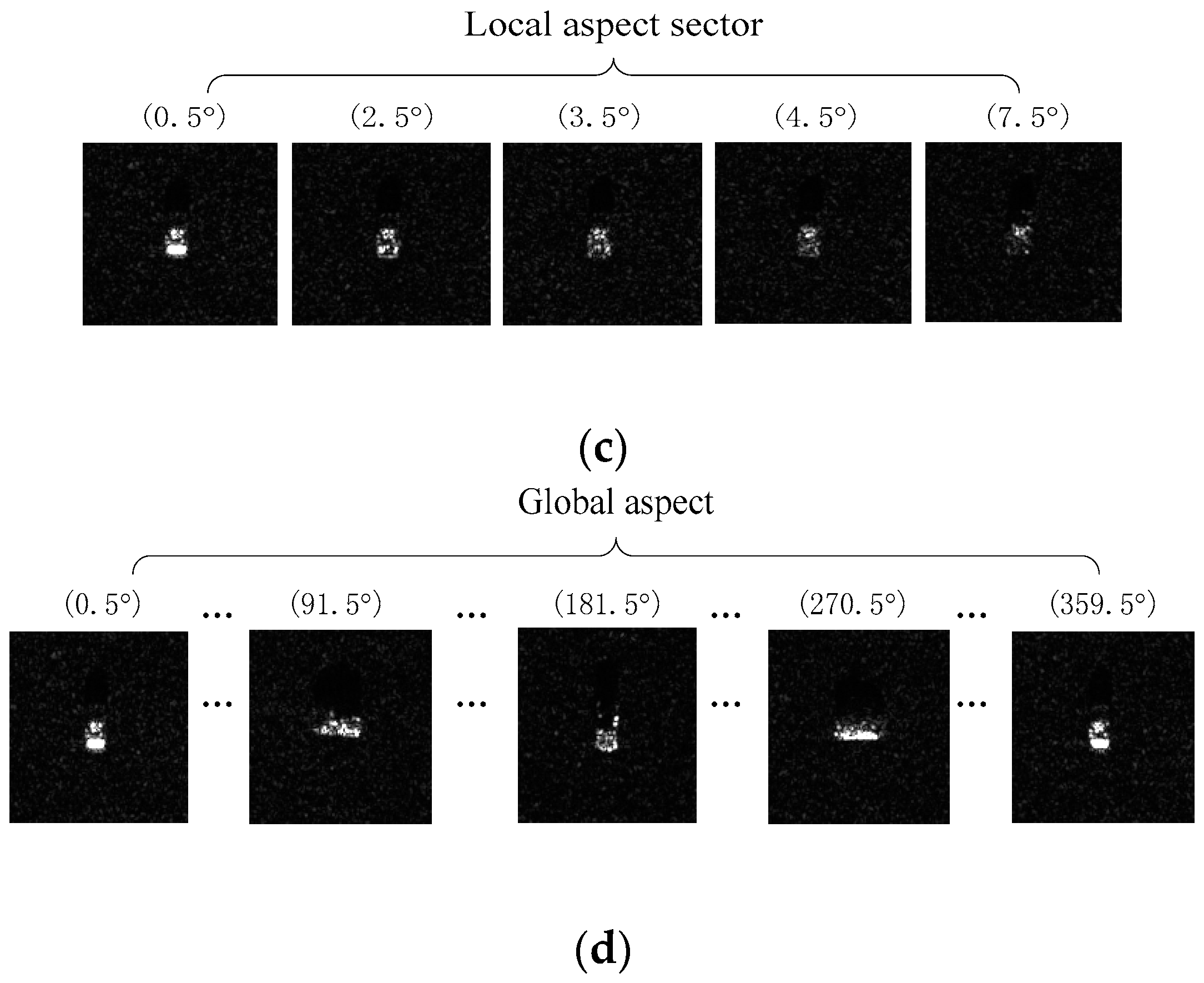

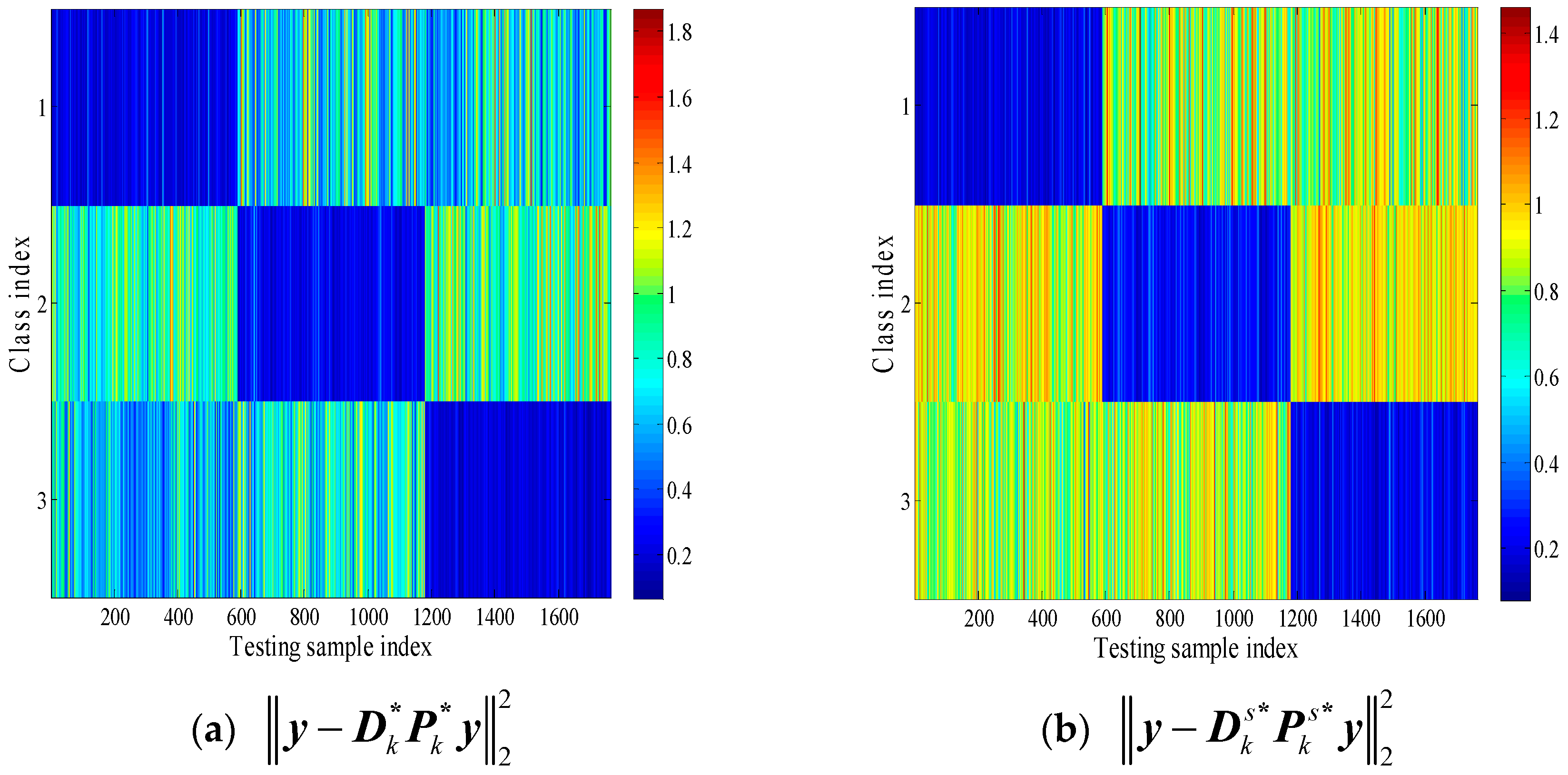

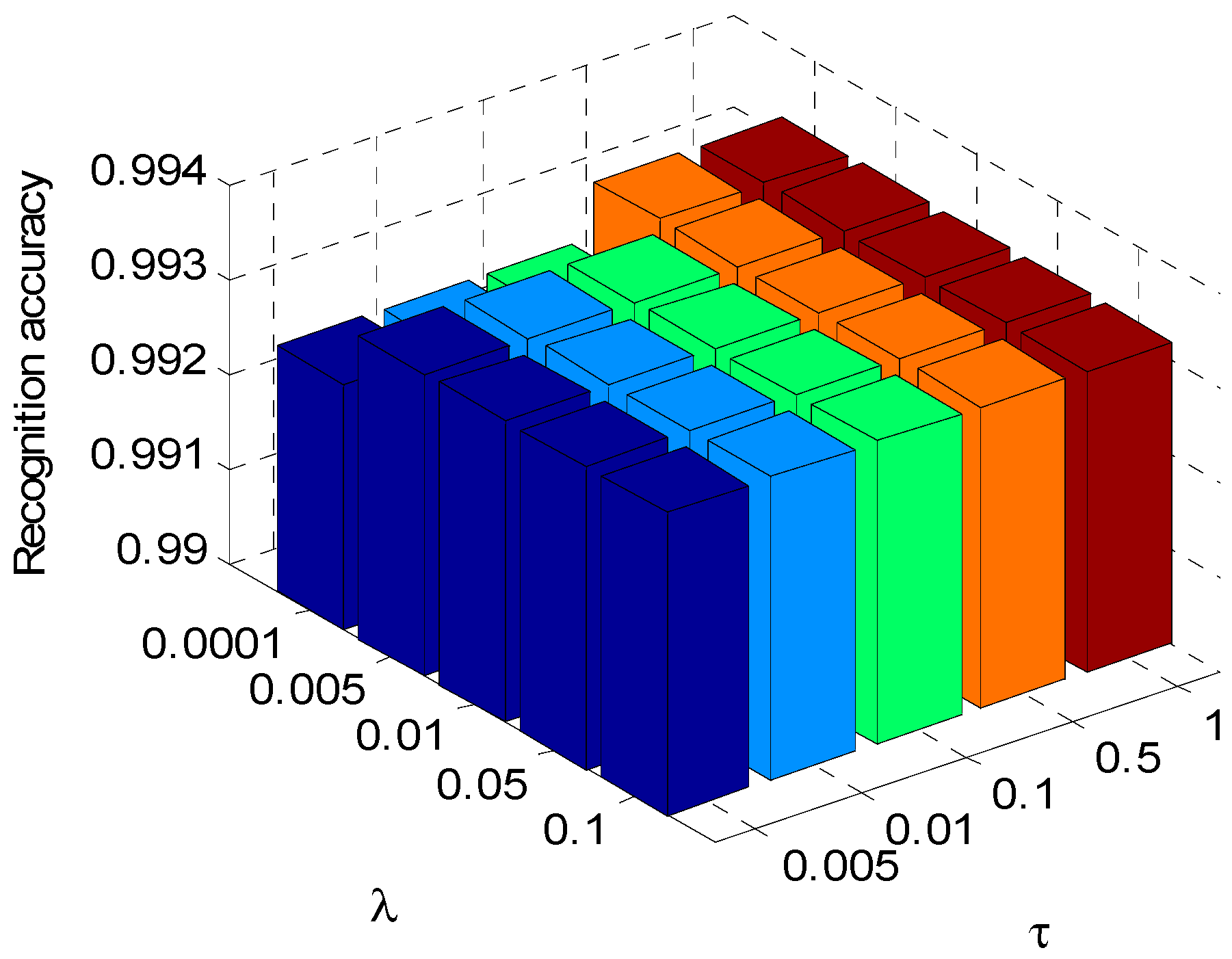

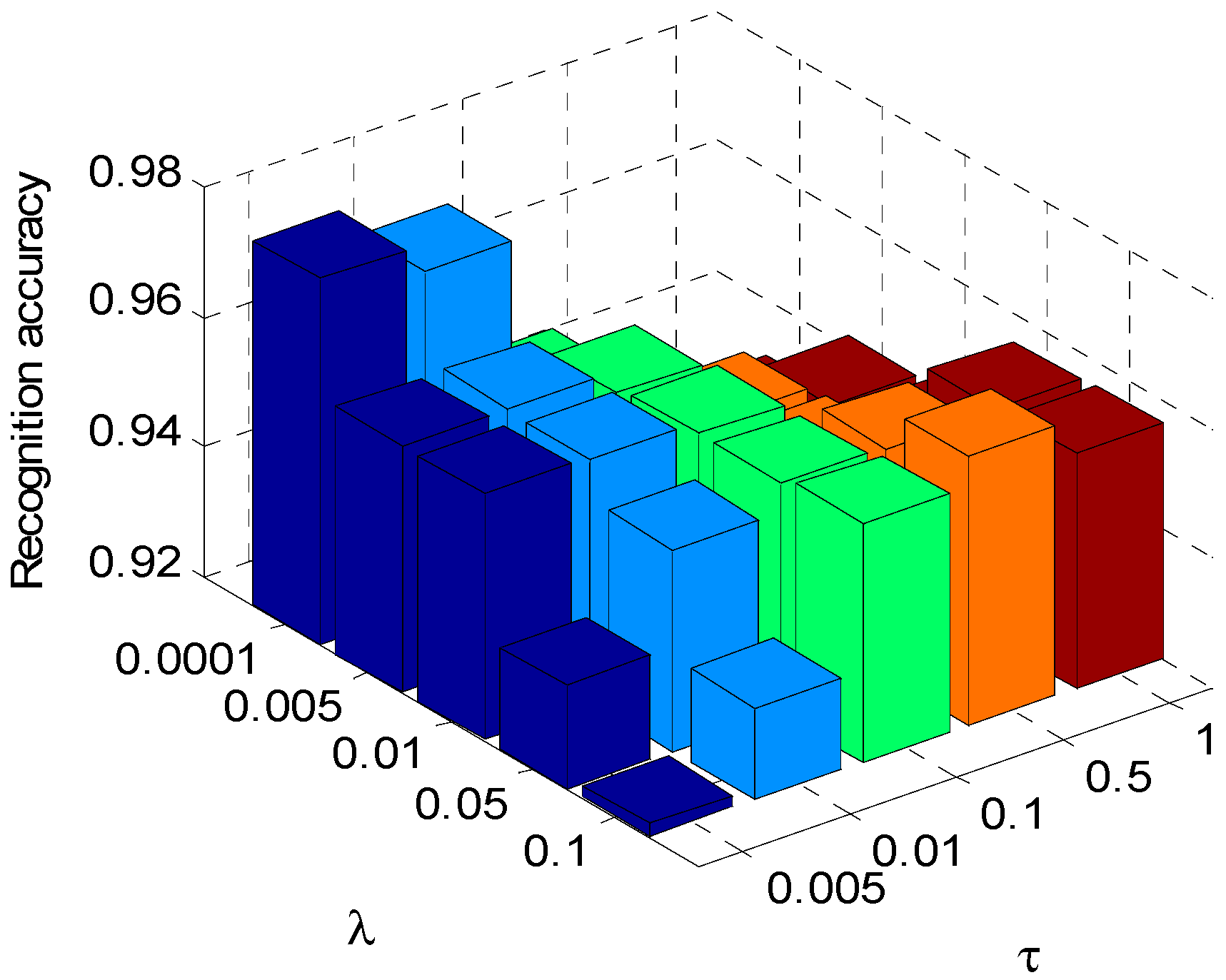

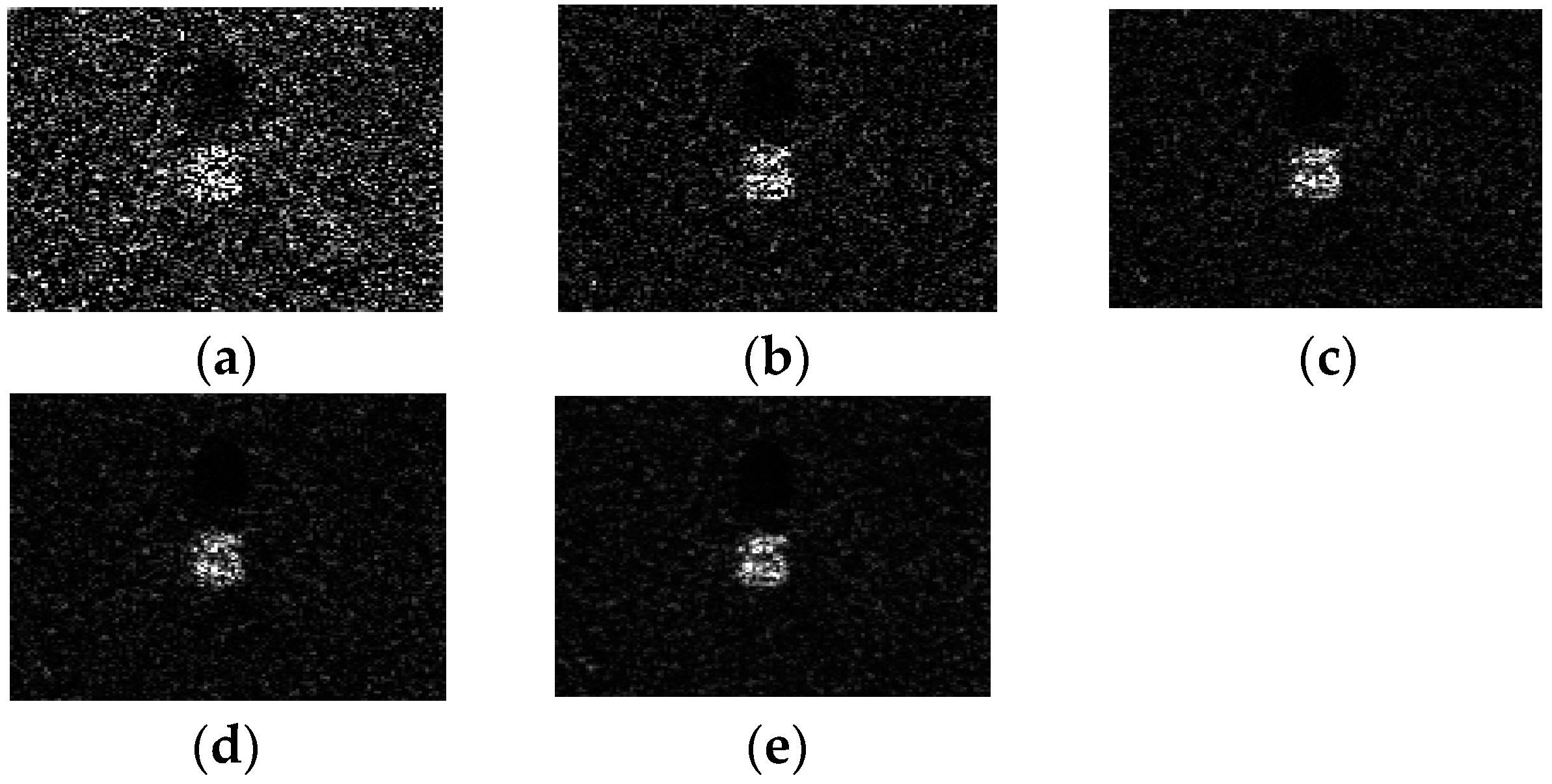

Figure 2 shows a scheme of the proposed method. As seen in

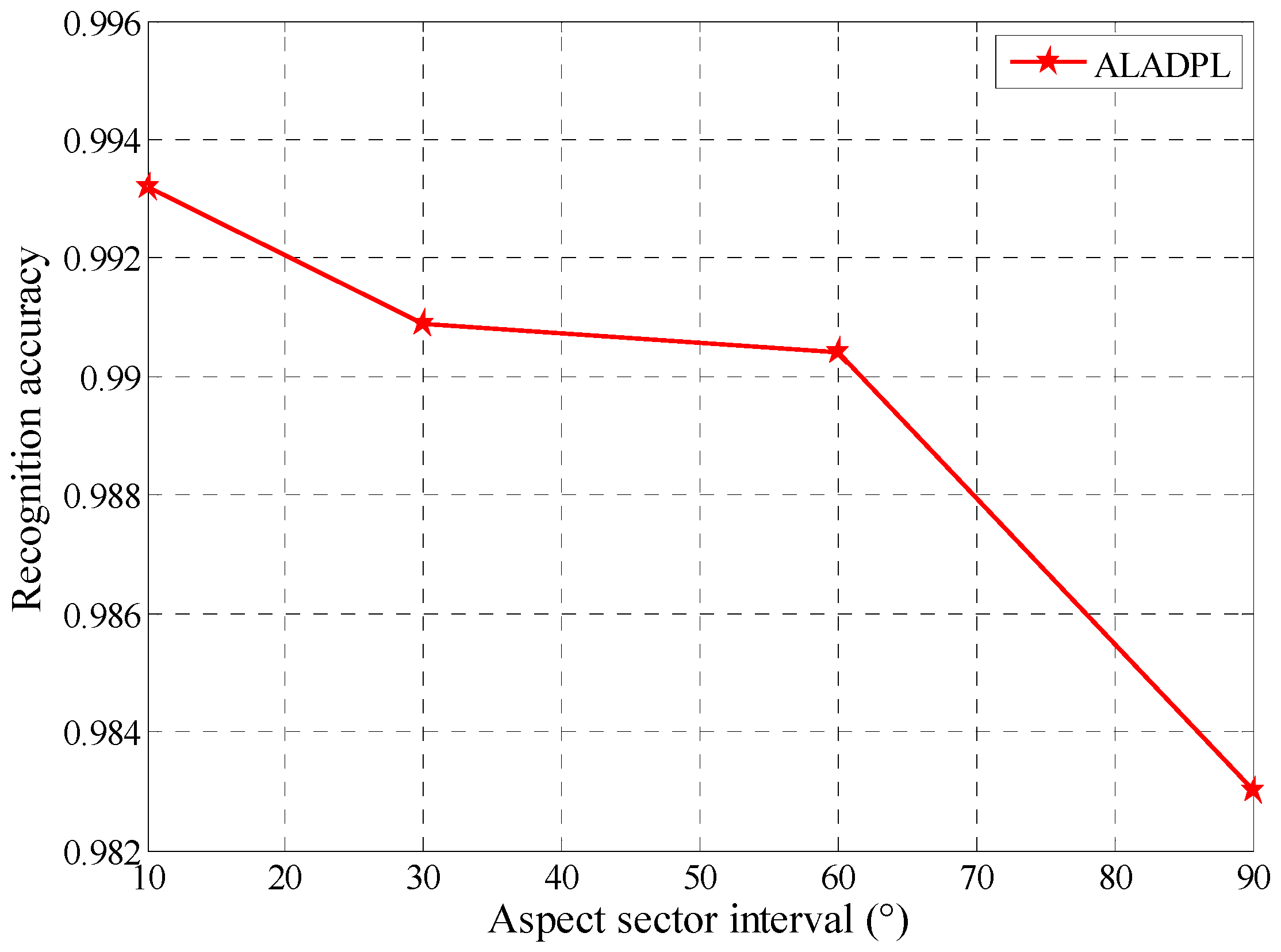

Figure 2, first, the global aspect range is divided into multiple local aspect sectors. For the current testing sample, the local aspect sector is adaptively determined based on regularized non-negative sparse learning according to its representation coefficient in the middle graph. Then, a dictionary pair including a synthesis dictionary and an analysis dictionary is learned from the training subset in the local aspect sector. Finally, under the local discriminate dictionary pair obtained from the training phase, the class label of the test sample is determined with the minimum reconstruction error. The mechanism behind this method is that the training subset in the local aspect sector satisfies the local linear representation qualification. The learned dictionary pair has better interclass discrimination ability. In addition, the interference of training samples outside the local aspect sector is excluded, which further improves the recognition performance. The experiments based on Moving and Stationary Target Acquisition and Recognition (MSTAR) dataset are conducted and the results verify the effectiveness and superiority of the proposed method.
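The final step, assigning the class with the minimum reconstruction error, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the error for class k takes the form ||y - D_k P_k y||_2, with D_k the synthesis dictionary and P_k the analysis dictionary, and the function name `classify_with_dictionary_pairs` is hypothetical. The dictionary pairs in the toy example are built from random orthonormal bases rather than learned.

```python
import numpy as np


def classify_with_dictionary_pairs(y, synthesis, analysis):
    """Return the class whose dictionary pair reconstructs y with the least error.

    synthesis[k] : (d, m) synthesis dictionary D_k of class k
    analysis[k]  : (m, d) analysis dictionary P_k of class k
    """
    errors = [float(np.linalg.norm(y - D @ (P @ y)))
              for D, P in zip(synthesis, analysis)]
    return int(np.argmin(errors)), errors


# Toy stand-in for learned pairs: each class spans a random 3-D subspace of R^16,
# and P_k = D_k^T, so that D_k P_k is the orthogonal projector onto that subspace.
rng = np.random.default_rng(0)
d, m, num_classes = 16, 3, 3
synthesis = [np.linalg.qr(rng.standard_normal((d, m)))[0] for _ in range(num_classes)]
analysis = [D.T for D in synthesis]

y = synthesis[1] @ np.array([1.0, -0.5, 2.0])   # test vector lying in the class-1 subspace
label, errors = classify_with_dictionary_pairs(y, synthesis, analysis)
print(label, np.round(errors, 3))
```

In the proposed method the pairs would be learned only from the training subset of the selected local aspect sector, which is not shown in this sketch.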

This paper is organized as follows. In Section 2, the selection of the adaptive local aspect sector based on regularized non-negative sparse learning is introduced. In Section 3, the dictionary pair learning and recognition method based on the adaptive local aspect is proposed. The experimental results and analysis of the proposed method are provided in Section 4. The conclusions are drawn in Section 5.

2. Adaptive Local Aspect Sector Selection

As mentioned in the introduction, the global aspect training samples of a SAR target actually lie on a nonlinear manifold. For a test sample with aspect angle $\theta_0$, only the training samples in the local aspect sector near $\theta_0$ can linearly represent it. The key issue is therefore to find the correct local aspect sector of the current test sample. In this paper, we propose a regularized non-negative sparse learning approach to solve this problem. Assuming that the aspect of the current test sample $y_0$ is $\theta_0$, it can be represented linearly by $n$ training samples $X_{\theta_0} = [x_1, x_2, \ldots, x_n]$ located in the local aspect sector $\Theta_0$. The representation is:

$$y_0 = X_{\theta_0}\,\alpha_{\theta_0} \tag{1}$$

where $\alpha_{\theta_0}$ is the coefficient vector of $y_0$ over $X_{\theta_0}$. Since the true aspect of the test sample is unknown, it should be represented by the training samples at all aspects from 0° to 360°. To obtain the sparsest solution, we can turn the problem into an $l_0$-norm optimization problem, given by:

$$\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y_0 - X\alpha\|_2 \le \varepsilon \tag{2}$$

where $X$ denotes the entire training set, $\alpha$ represents the coefficient vector, and $\varepsilon$ indicates the tolerance. $\|\cdot\|_0$ represents the $l_0$-norm, and $\|\cdot\|_2$ denotes the $l_2$-norm.

According to the sparse learning theory, if we sparsely regularize and constrain with all aspect sectors training samples, the elements in the coefficient vector corresponding to atoms with the same aspect sector as the test sample should be non-zero values, while other coefficient elements are zeros [

19,

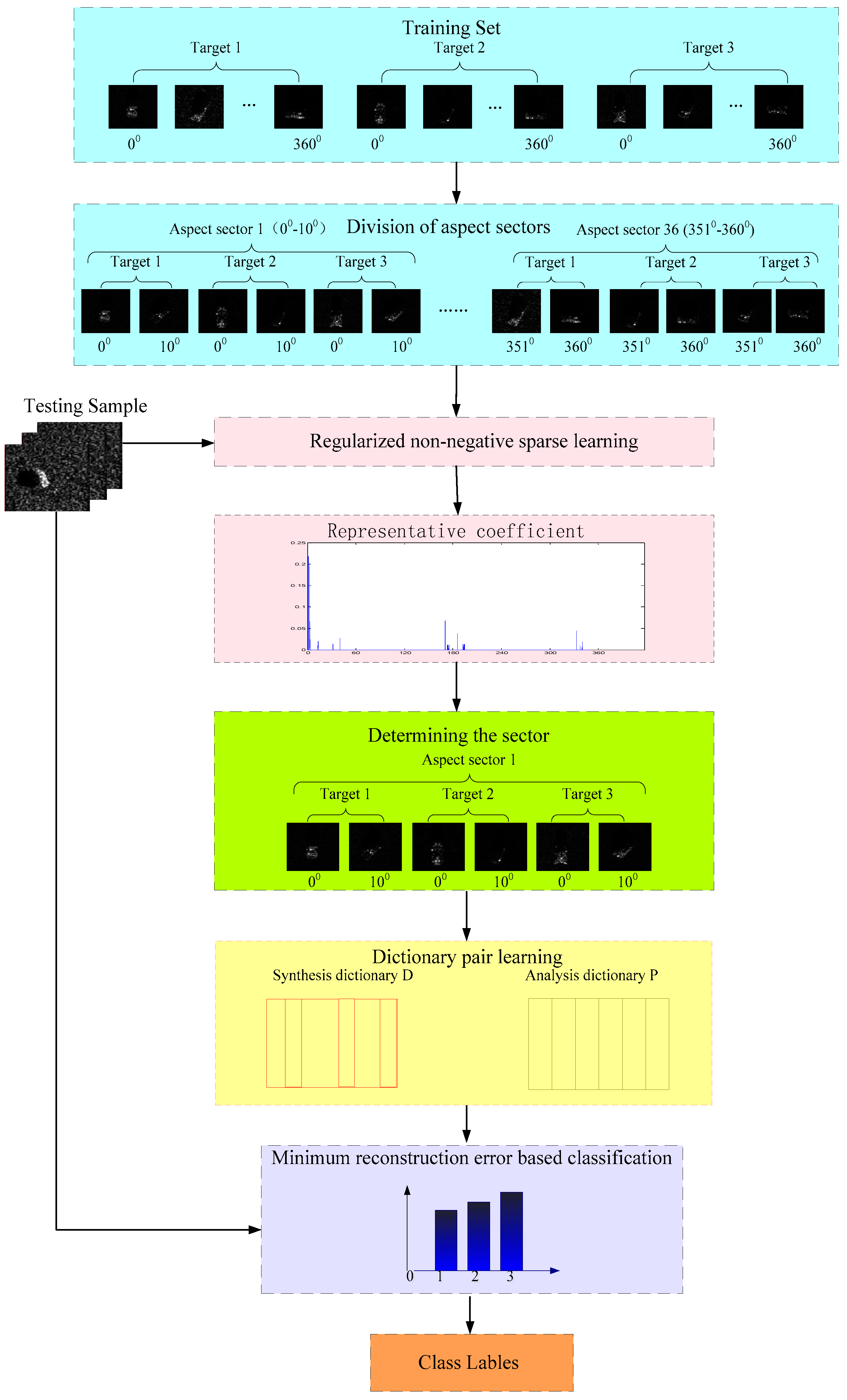

20]. To effectively represent the training samples, a new dictionary is constructed based on the aspect angles instead of the traditional class labels. In traditional dictionaries, the order of atoms is arranged according to their class labels, and the order of the same class has no relevance with the aspect angle and is arranged randomly. For instance, the aspect sector interval is set to 10°. In this work, the dictionary atoms are arranged according to aspect angles as Target1 (0°–10°); Target2 (0°–10°); Target3 (0°–10°); Target1 (11°–20°); Target2 (11°–20°); Target3 (11°–20°); …; Target1 (351°–360°); Target2 (351°–360°); Target3 (351°–360°). The difference between the traditional dictionary construction and the dictionary constructed with local aspect sectors are displayed in

Figure 3.
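The aspect-ordered arrangement of atoms can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `sector_index` and `order_atoms_by_aspect`, the `(class_label, aspect_deg)` sample format, and the half-open binning [0°, 10°), [10°, 20°), and so on are assumptions made for the example.

```python
def sector_index(aspect_deg, width_deg=10.0):
    """Map an aspect angle in degrees to the index of its local aspect sector."""
    return int((aspect_deg % 360.0) // width_deg)


def order_atoms_by_aspect(samples, width_deg=10.0):
    """Return atom indices sorted by (sector, class label, aspect angle).

    `samples` is a list of (class_label, aspect_deg) pairs, one per training image.
    """
    def key(i):
        label, aspect = samples[i]
        return (sector_index(aspect, width_deg), label, aspect)

    return sorted(range(len(samples)), key=key)


# Toy training set: three targets observed at a few aspect angles.
samples = [
    ("Target1", 3.0), ("Target1", 14.5), ("Target2", 7.2),
    ("Target2", 12.0), ("Target3", 1.1), ("Target3", 18.9),
]
order = order_atoms_by_aspect(samples)
for i in order:
    label, aspect = samples[i]
    print(sector_index(aspect), label, aspect)
```

With this ordering, all atoms of the first sector (one group per target) come first, followed by the atoms of the second sector, so the coefficients belonging to one sector occupy a contiguous block of the coefficient vector.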

Moreover, to comply with the physical meaning of representation learning, non-negative constraints are imposed on the representation coefficient vector. Since $l_0$-norm minimization is NP-hard, the problem is usually solved by minimizing the $l_1$-norm instead. In addition, to give the elements of the coefficient vector a probabilistic interpretation, we constrain the elements of each representation coefficient vector to sum to one, that is, $\sum_{i=1}^{N} \alpha_i = 1$. With this constraint, the value of each element of the representation coefficient vector measures the contribution of the corresponding training sample to representing $y_0$. Therefore, the final model for adaptively selecting local aspect sectors based on regularized non-negative sparse learning is:

$$\min_{\alpha} \|\alpha\|_1 \quad \text{s.t.} \quad \|y_0 - D\alpha\|_2 \le \varepsilon, \quad \alpha \ge 0, \quad \sum_{i=1}^{N} \alpha_i = 1 \tag{3}$$

where $D$ denotes the dictionary arranged according to aspect angles, $\alpha$ is the coefficient vector, $\varepsilon$ is the tolerance, $N$ is the total number of training samples, and $\|\cdot\|_1$ denotes the $l_1$-norm.

In this way, for each test sample, once the coefficient vector is obtained, the sum of the coefficient elements in each local aspect sector can be calculated; this sum can be seen as the contribution of that sector. According to the aforementioned analysis, it is reasonable to infer that the current test sample corresponds to the local sector $k^{*}$ with the maximum sum, so the aspect sector of a test sample can be determined adaptively. The formula is written as follows:

$$k^{*} = \arg\max_{k} \sum_{i=1}^{n_k} \alpha_{k,i} \tag{4}$$

where $\alpha_{k,i}$ is the coefficient of the $i$-th atom in the $k$-th sector and $n_k$ is the number of samples in that sector.
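The sector selection rule (sum the coefficients in each sector, then take the sector with the largest sum) can be sketched as follows. The function name `select_sector` and the array `atom_sectors`, which stores the sector index of each dictionary atom, are assumptions made for this illustration, and the coefficient values are made up.

```python
import numpy as np


def select_sector(alpha, atom_sectors, num_sectors):
    """Return the sector with the largest coefficient sum, plus all sector sums.

    alpha        : (N,) non-negative coefficient vector from the sparse model
    atom_sectors : (N,) integer sector index of each dictionary atom
    """
    sums = np.bincount(atom_sectors, weights=alpha, minlength=num_sectors)
    return int(np.argmax(sums)), sums


# Toy example: 6 atoms spread over 3 sectors (two atoms per sector).
alpha = np.array([0.05, 0.00, 0.40, 0.45, 0.10, 0.00])
atom_sectors = np.array([0, 0, 1, 1, 2, 2])
best, sums = select_sector(alpha, atom_sectors, num_sectors=3)
print(best, sums)
```

Because the sector index is stored per atom, the sketch also works when sectors contain different numbers of training samples.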

To obtain the solution efficiently, the accelerated projected gradient method [33] is employed to optimize model (3). It should be noted that the purpose of the regularized non-negative sparse learning is not to obtain the class label of the test sample, but to adaptively choose the local aspect sector in which the test sample is located.
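An accelerated projected gradient scheme of this kind can be sketched as follows. This is a simplified illustration and not the solver of [33]: it assumes a least-squares data-fit objective minimized over the set of non-negative coefficients that sum to one, uses a fixed step size and FISTA-style momentum, and the function names `project_simplex` and `simplex_sparse_code` are hypothetical. The exact objective and settings used by the authors may differ.

```python
import numpy as np


def project_simplex(v):
    """Euclidean projection of v onto {a : a >= 0, sum(a) = 1} (sort-based)."""
    u = np.sort(v)[::-1]
    css = np.cumsum(u) - 1.0
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u - css / k > 0)[0][-1]
    tau = css[rho] / (rho + 1.0)
    return np.maximum(v - tau, 0.0)


def simplex_sparse_code(D, y, n_iter=500):
    """Approximately minimize 0.5 * ||y - D a||^2 over the probability simplex."""
    n_atoms = D.shape[1]
    step = 1.0 / (np.linalg.norm(D, 2) ** 2)   # 1 / Lipschitz constant of the gradient
    a = np.full(n_atoms, 1.0 / n_atoms)        # start from the uniform vector
    z, t = a.copy(), 1.0
    for _ in range(n_iter):
        grad = D.T @ (D @ z - y)
        a_next = project_simplex(z - step * grad)           # projected gradient step
        t_next = 0.5 * (1.0 + np.sqrt(1.0 + 4.0 * t * t))
        z = a_next + ((t - 1.0) / t_next) * (a_next - a)    # momentum extrapolation
        a, t = a_next, t_next
    return a


# Toy problem: 8 unit-norm atoms in R^20; the test vector mixes atoms 2 and 3.
rng = np.random.default_rng(0)
D = rng.standard_normal((20, 8))
D /= np.linalg.norm(D, axis=0)
alpha_true = np.array([0.0, 0.0, 0.7, 0.3, 0.0, 0.0, 0.0, 0.0])
y = D @ alpha_true
alpha = simplex_sparse_code(D, y)
print(np.round(alpha, 3))
```

Projecting onto the simplex enforces the non-negativity and sum-to-one constraints at every iteration, so the returned coefficients can be read directly as per-atom contributions.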

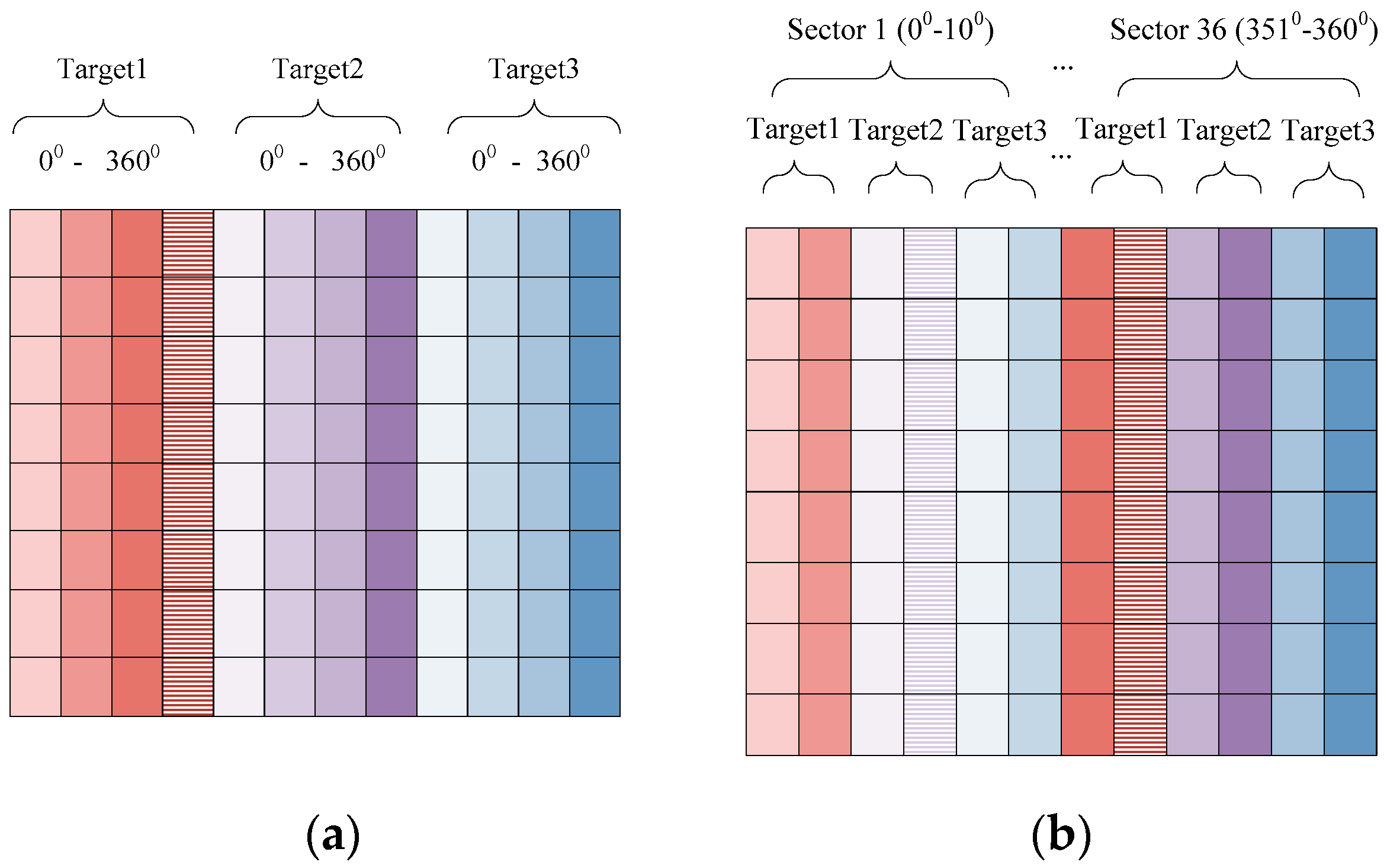

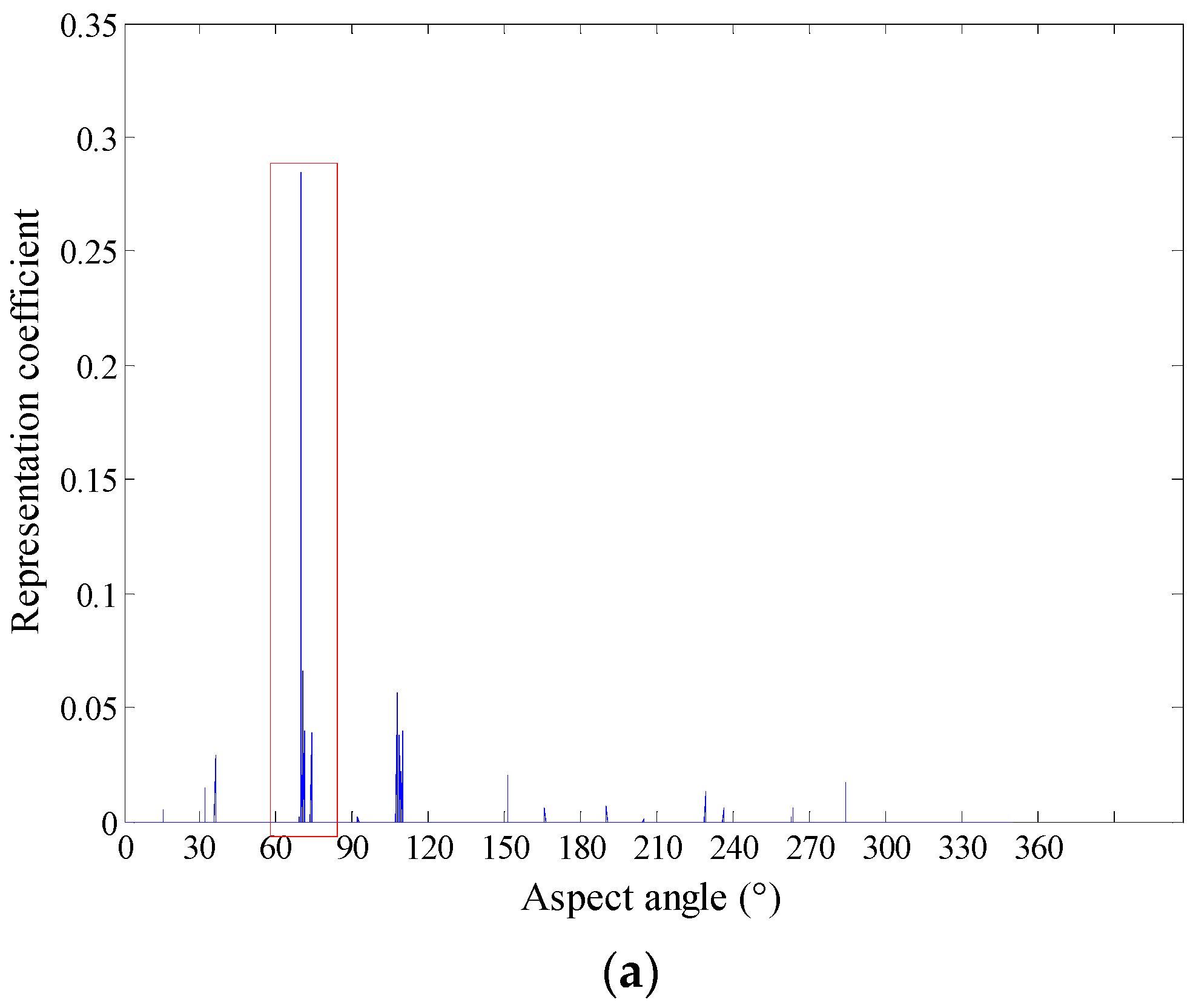

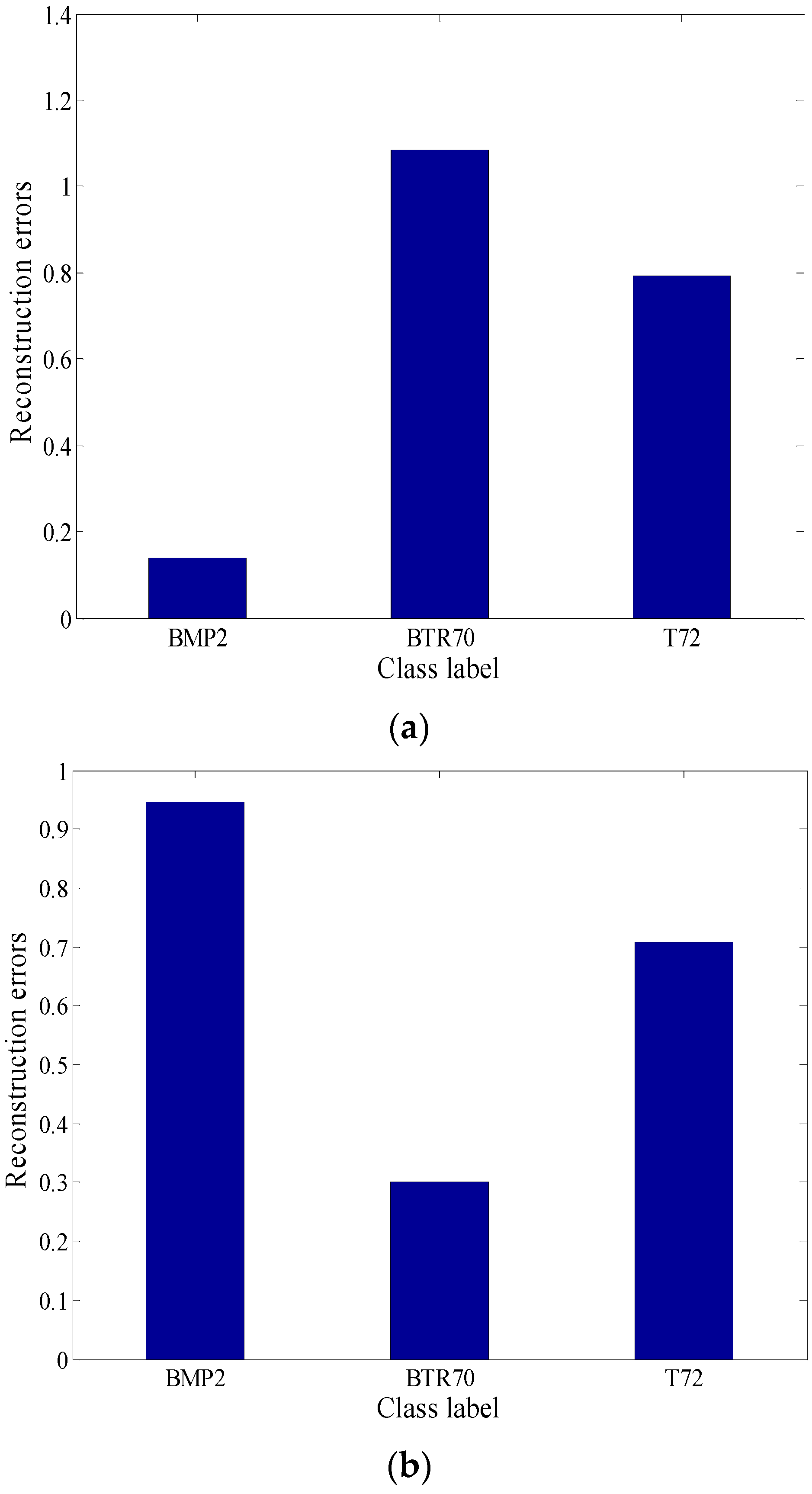

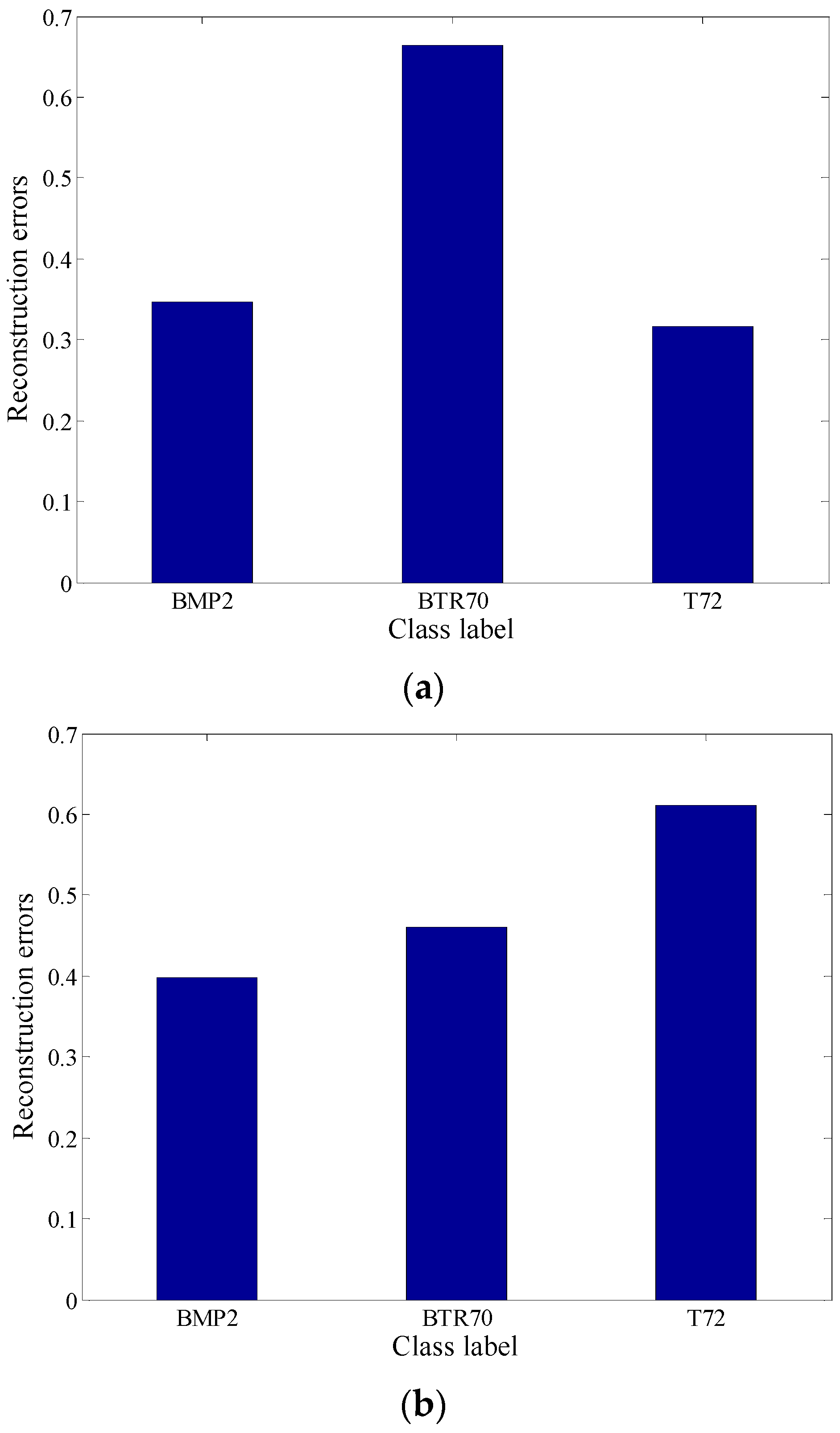

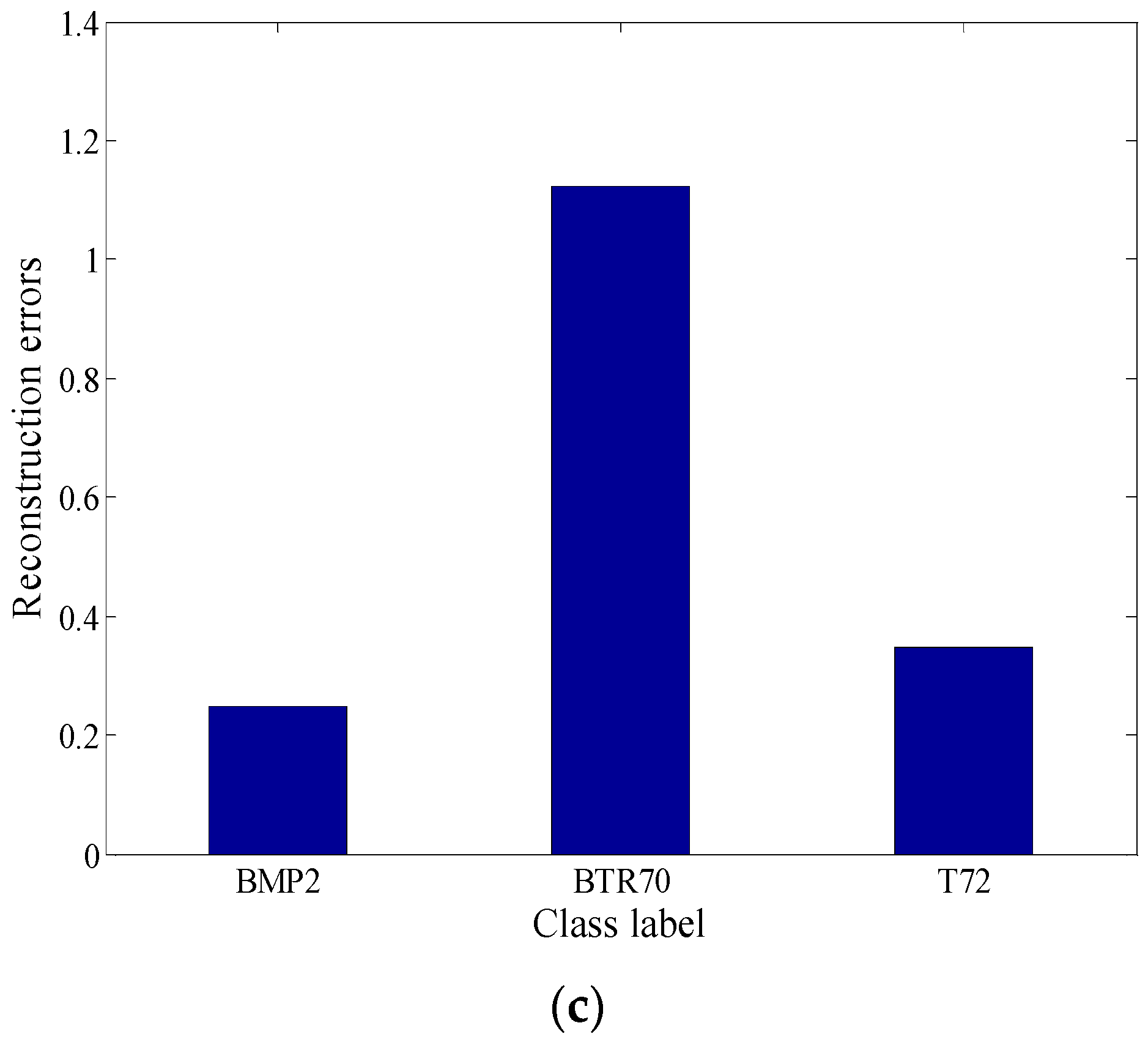

To clearly illustrate our idea, the following experiment is conducted to show the local aspect sector selection process. For a test sample of Target1, its real aspect angle is 68.5°. Because we divide the global aspect to local aspect sector by 10°, the sample should be located in the (60°, 70°) sector. The coefficient vector obtained by the regularized non-negative sparse learning method is shown in

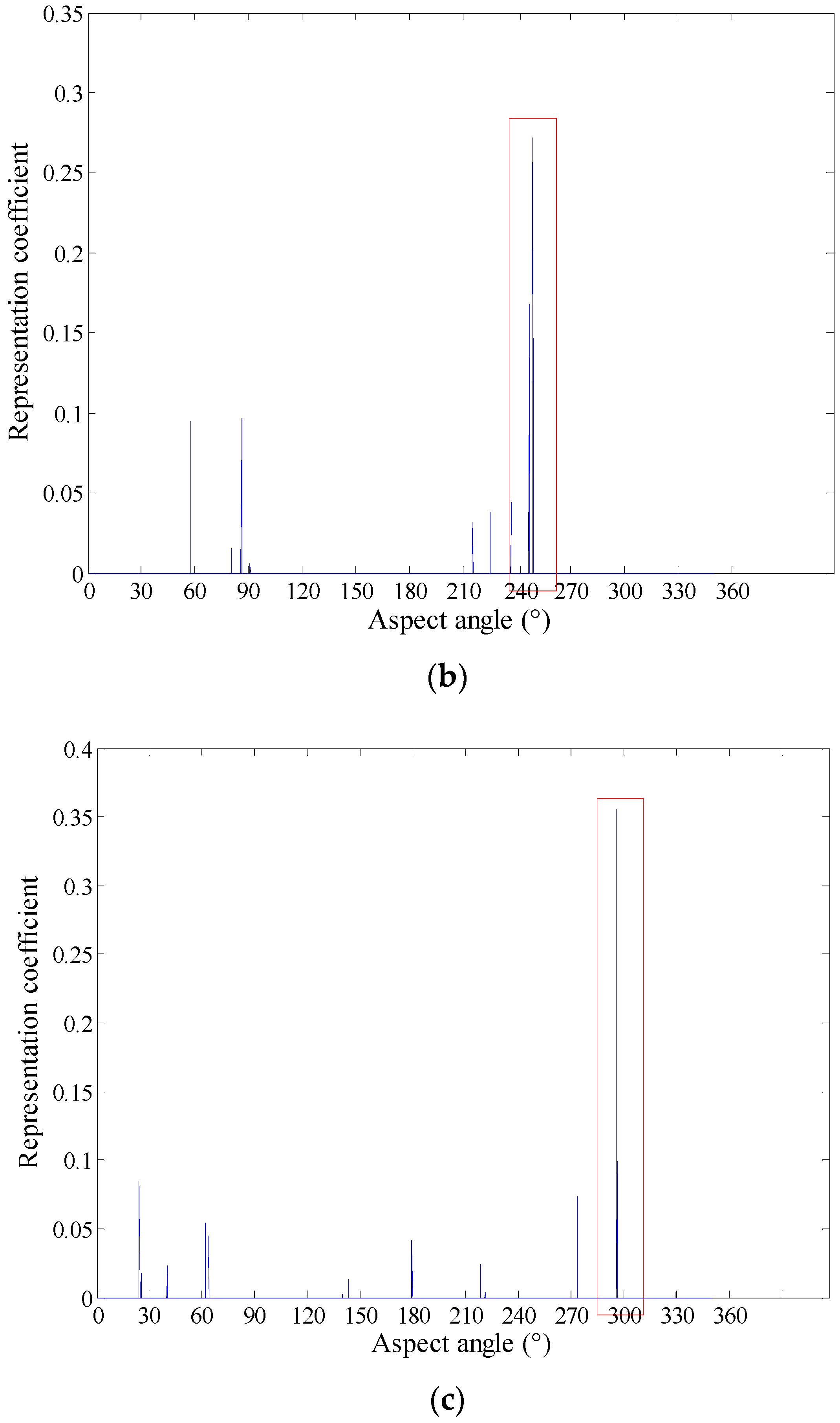

Figure 4a. It is seen that the elements corresponding to the (60°, 70°) sector is obviously larger than others, which indicates the local aspect sector of the test sample is correctly determined.

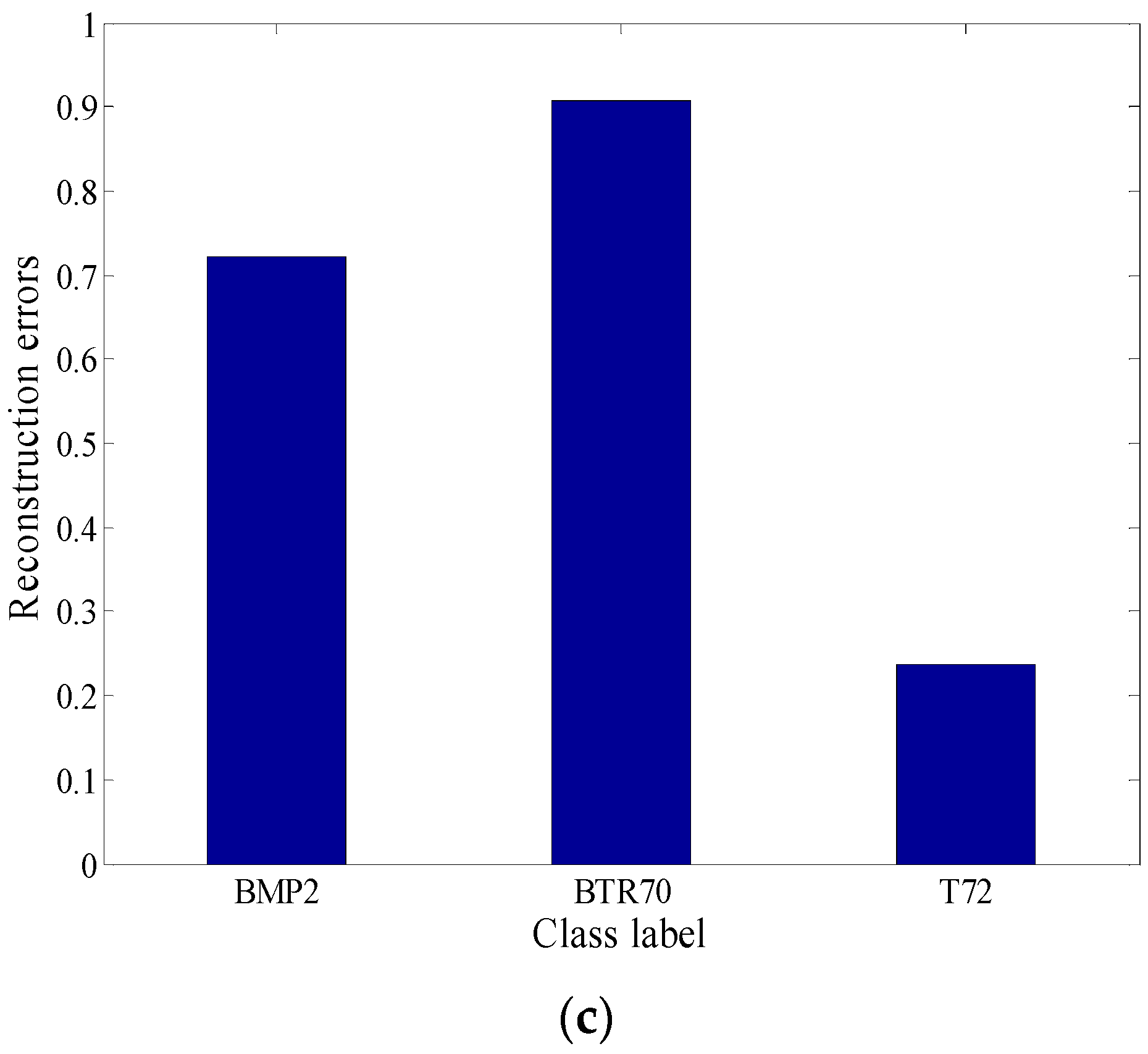

Figure 4b,c show the experimental results of a Target2 test sample (240.0°) and Target3 test sample (293.8°) respectively. As shown in these figures, although the test samples are from different targets, the local aspect sector of them can be inferred exactly and adaptively.