Non-Contact Measurement of the Surface Displacement of a Slope Based on a Smart Binocular Vision System

Abstract

1. Introduction

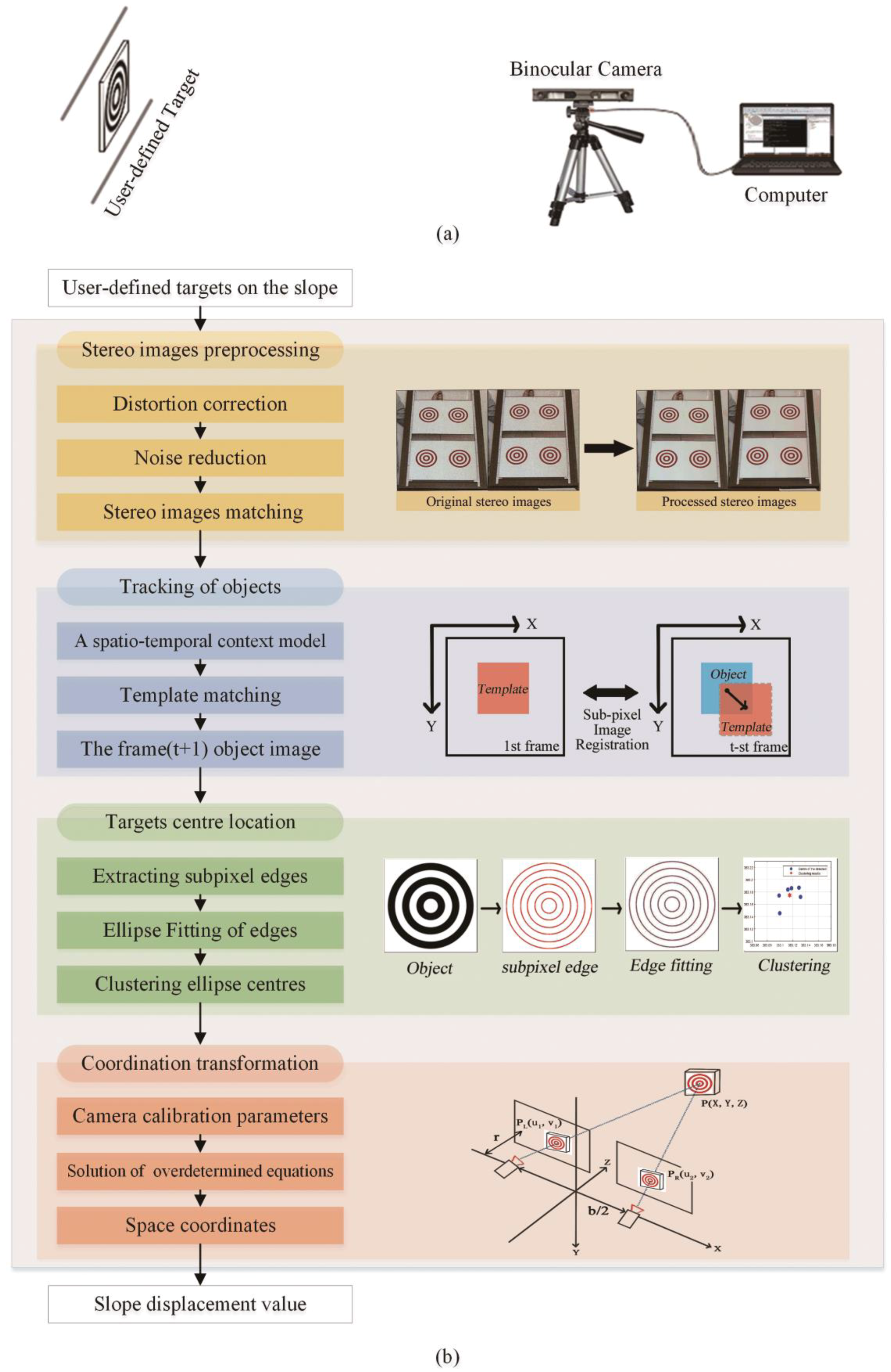

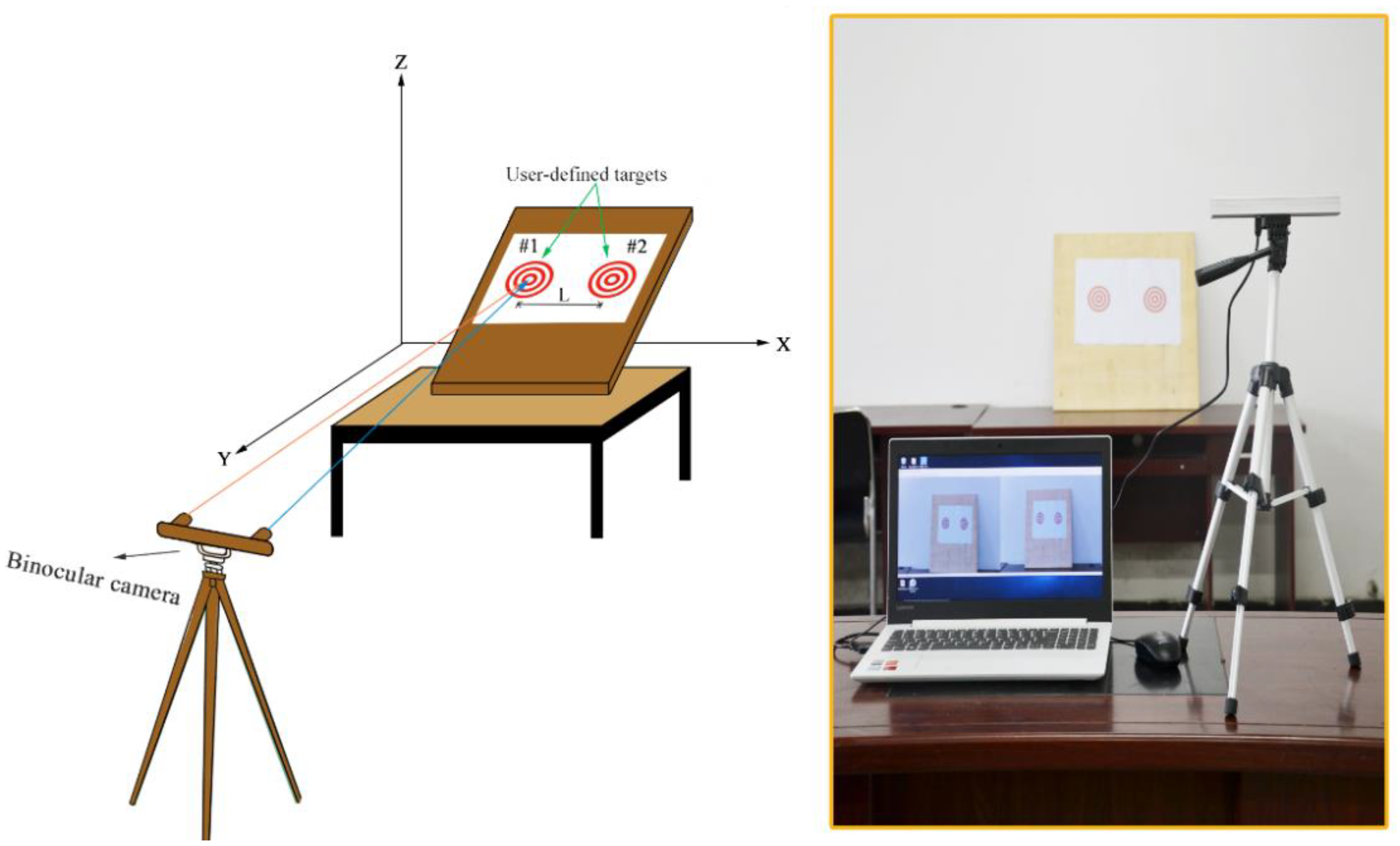

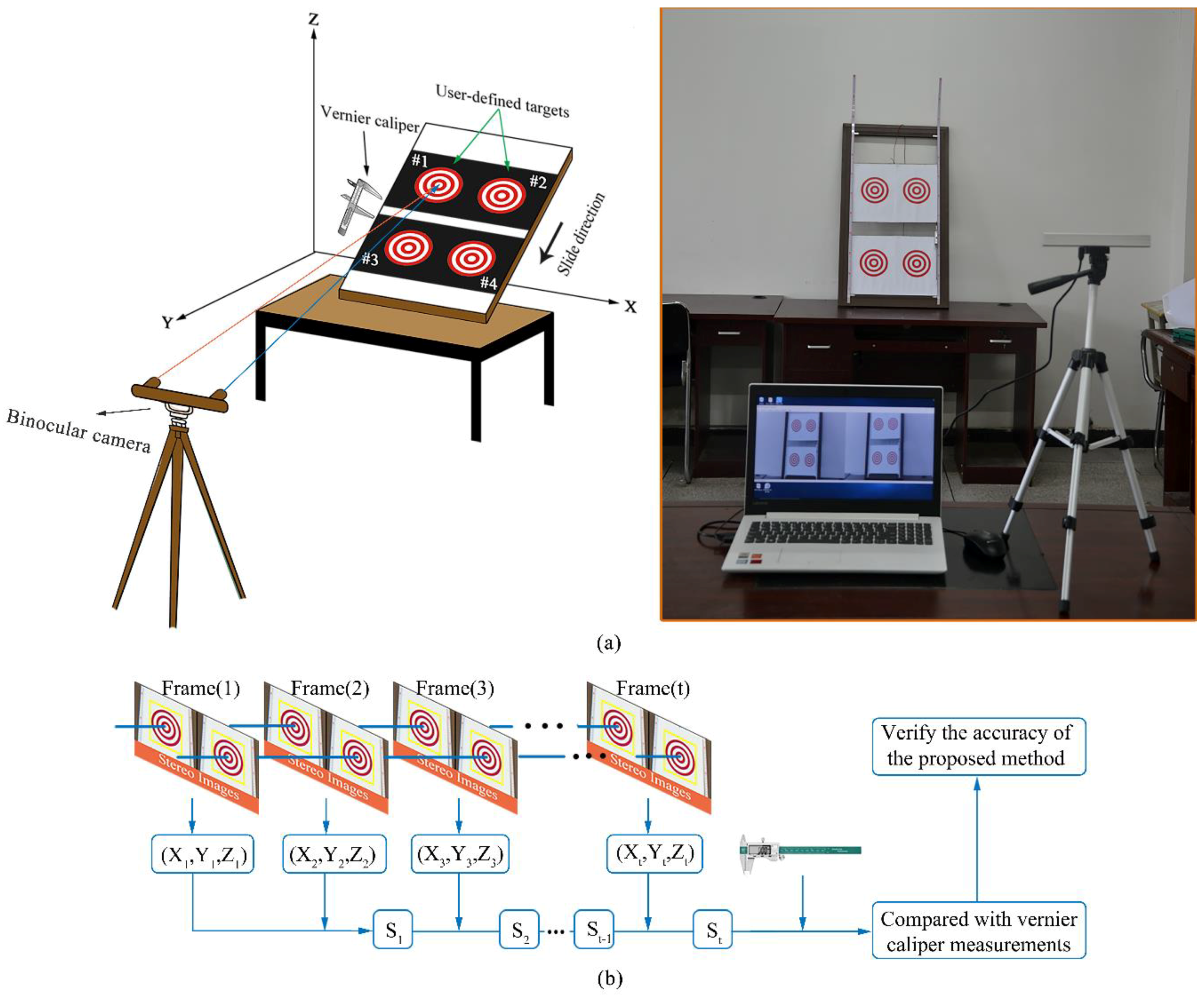

2. Proposed Smart Binocular Vision System

2.1. Overview

2.2. Target Design

2.2.1. Design Scheme

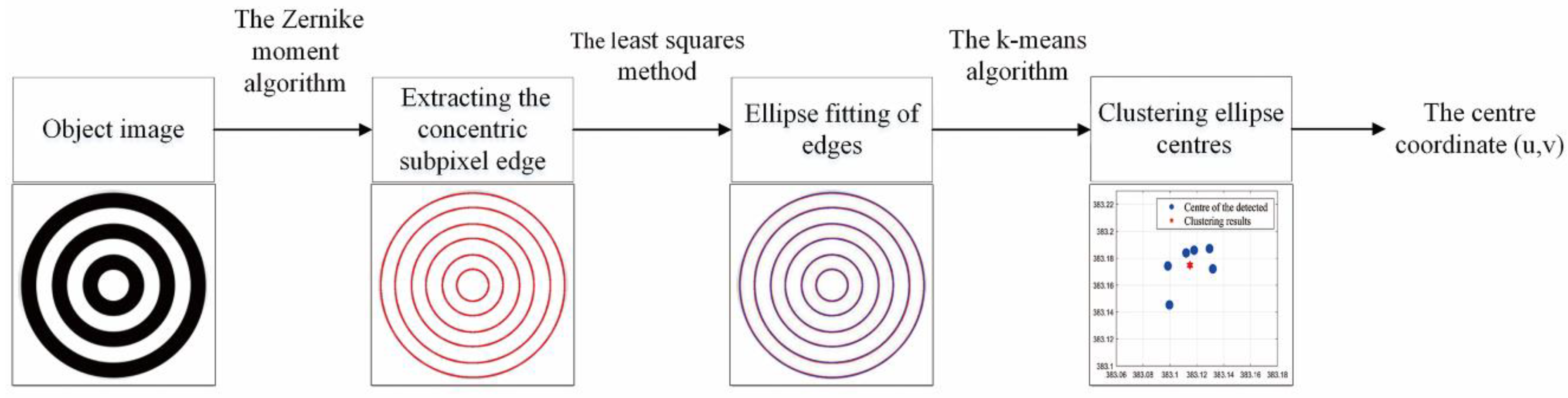

2.2.2. Object Positioning Method

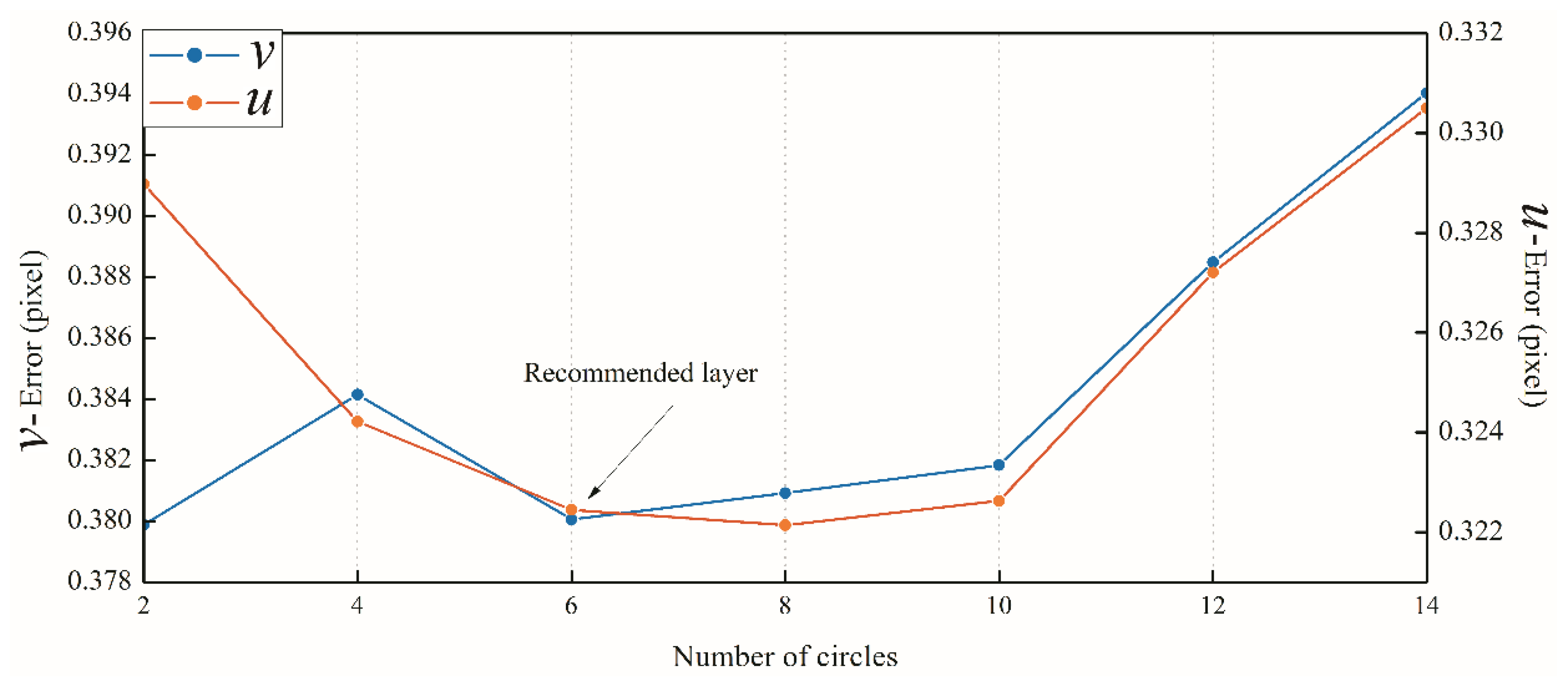

2.2.3. Target Parameter Design

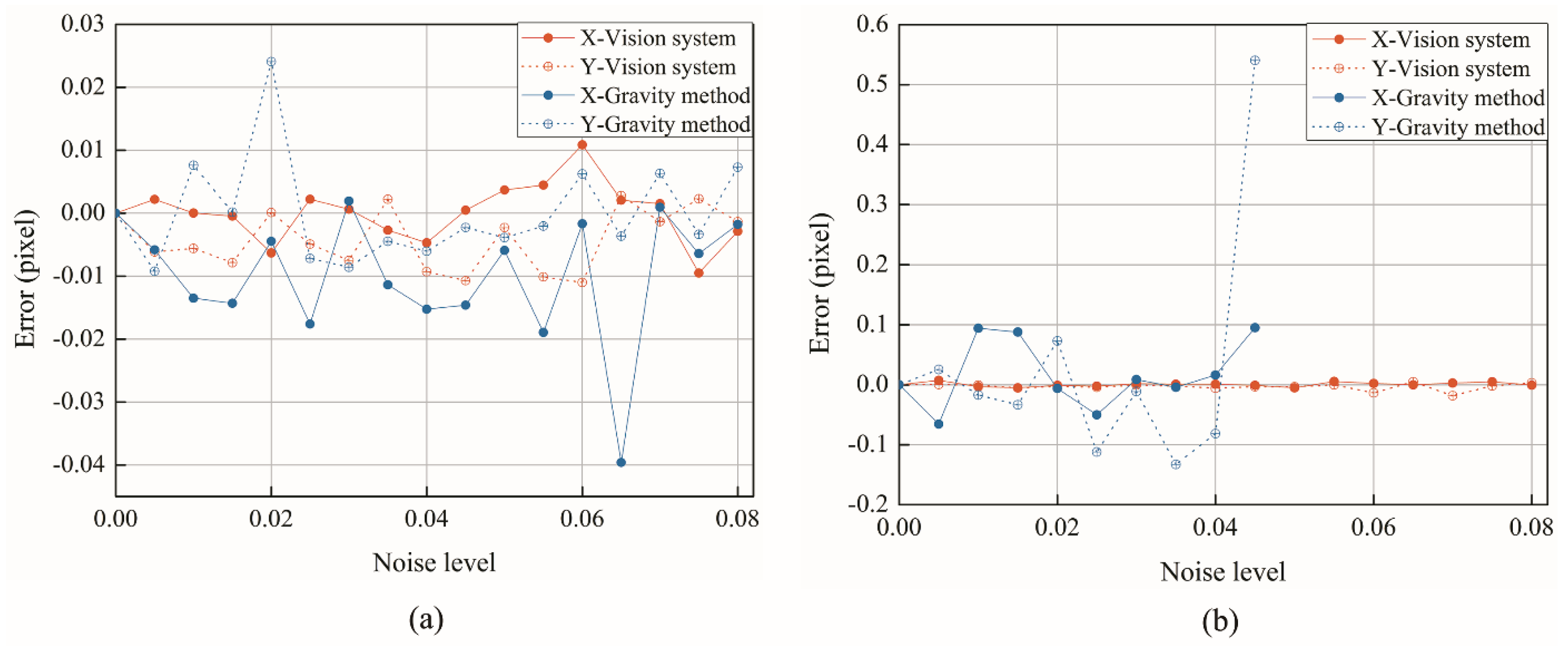

2.2.4. Noise Robustness

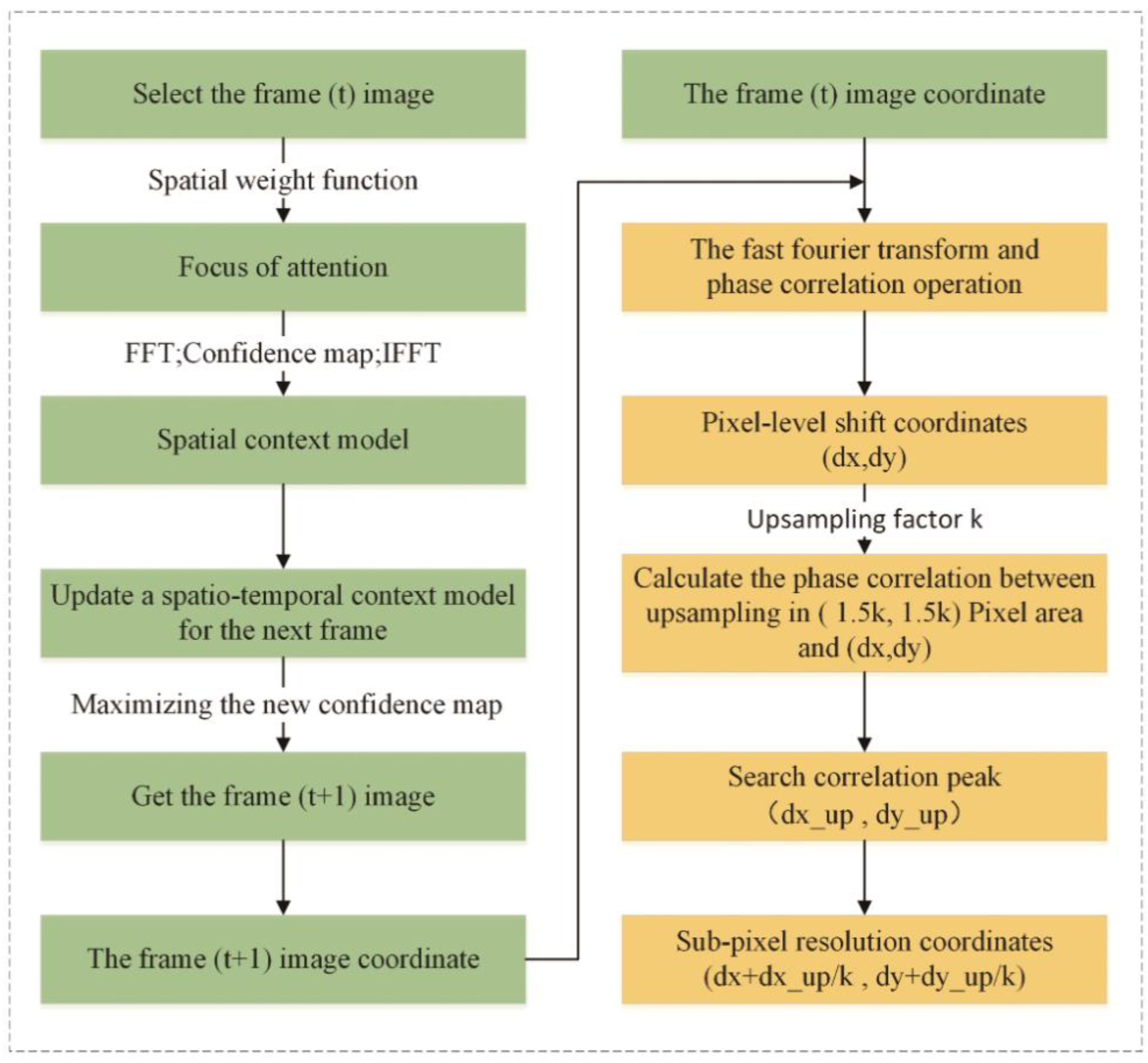

2.3. Target Tracking

2.3.1. Theory

2.3.2. Performance Evaluation

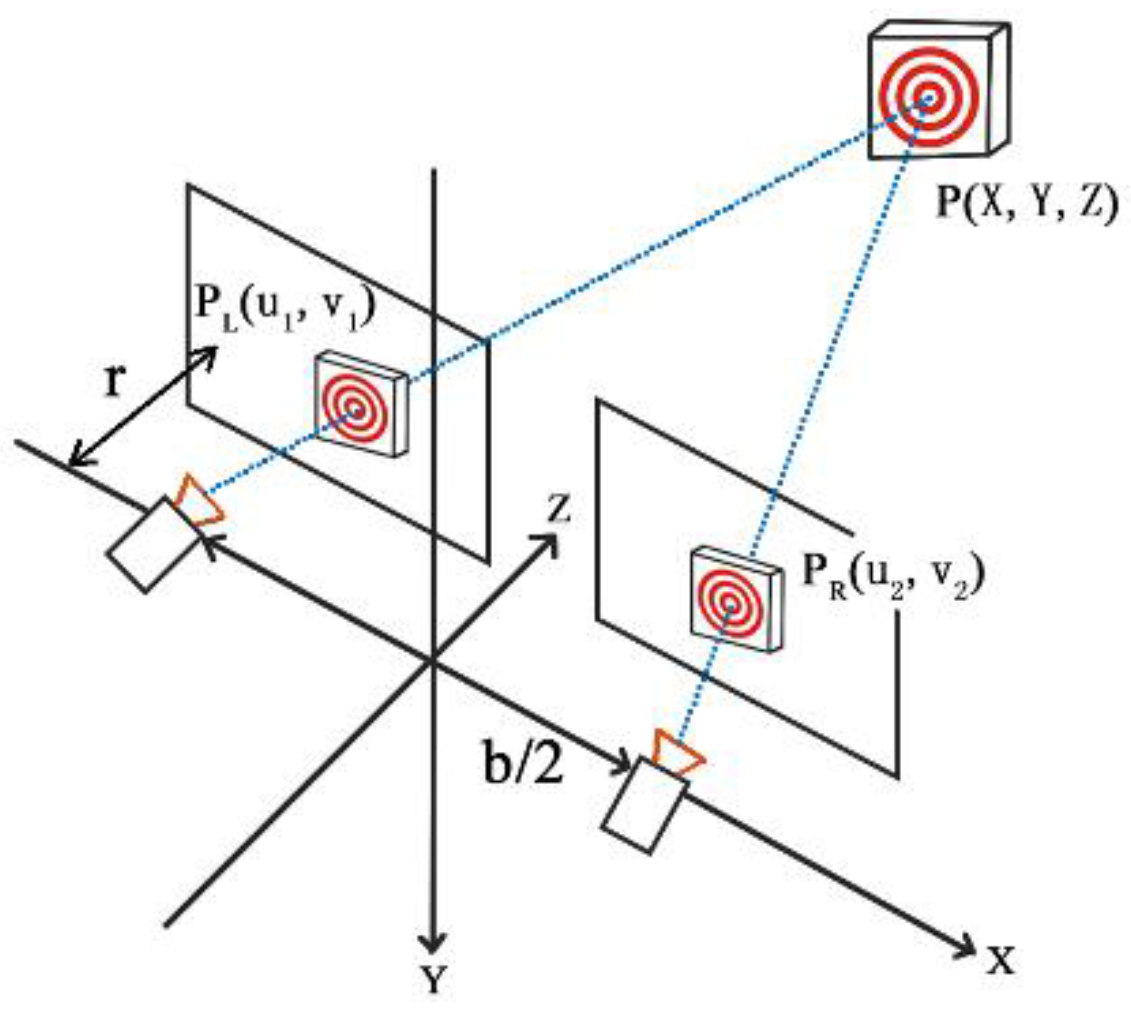

2.4. Coordinate Transformation

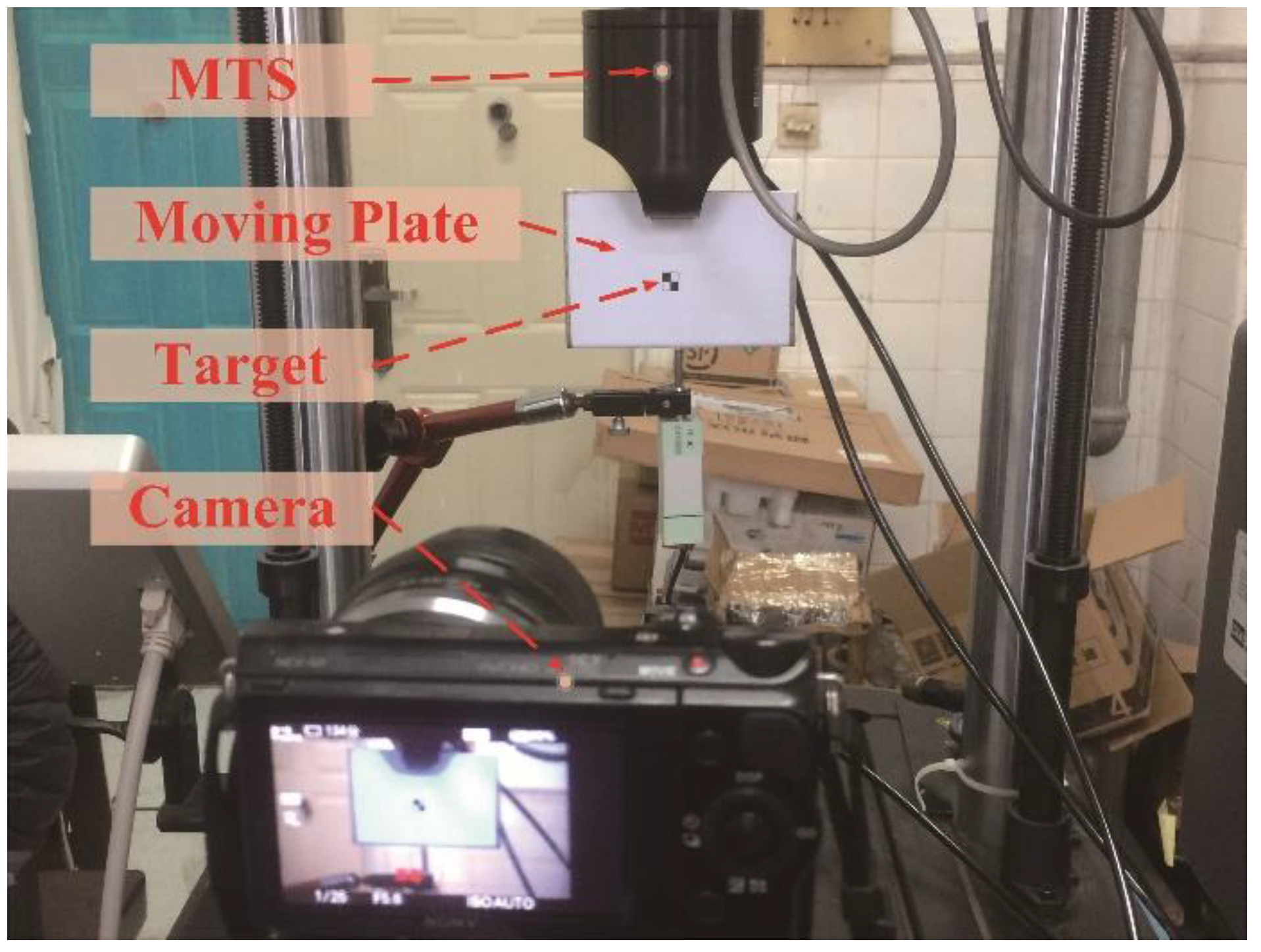

3. In-Laboratory Validation Test

3.1. Static Distance Measurement Test

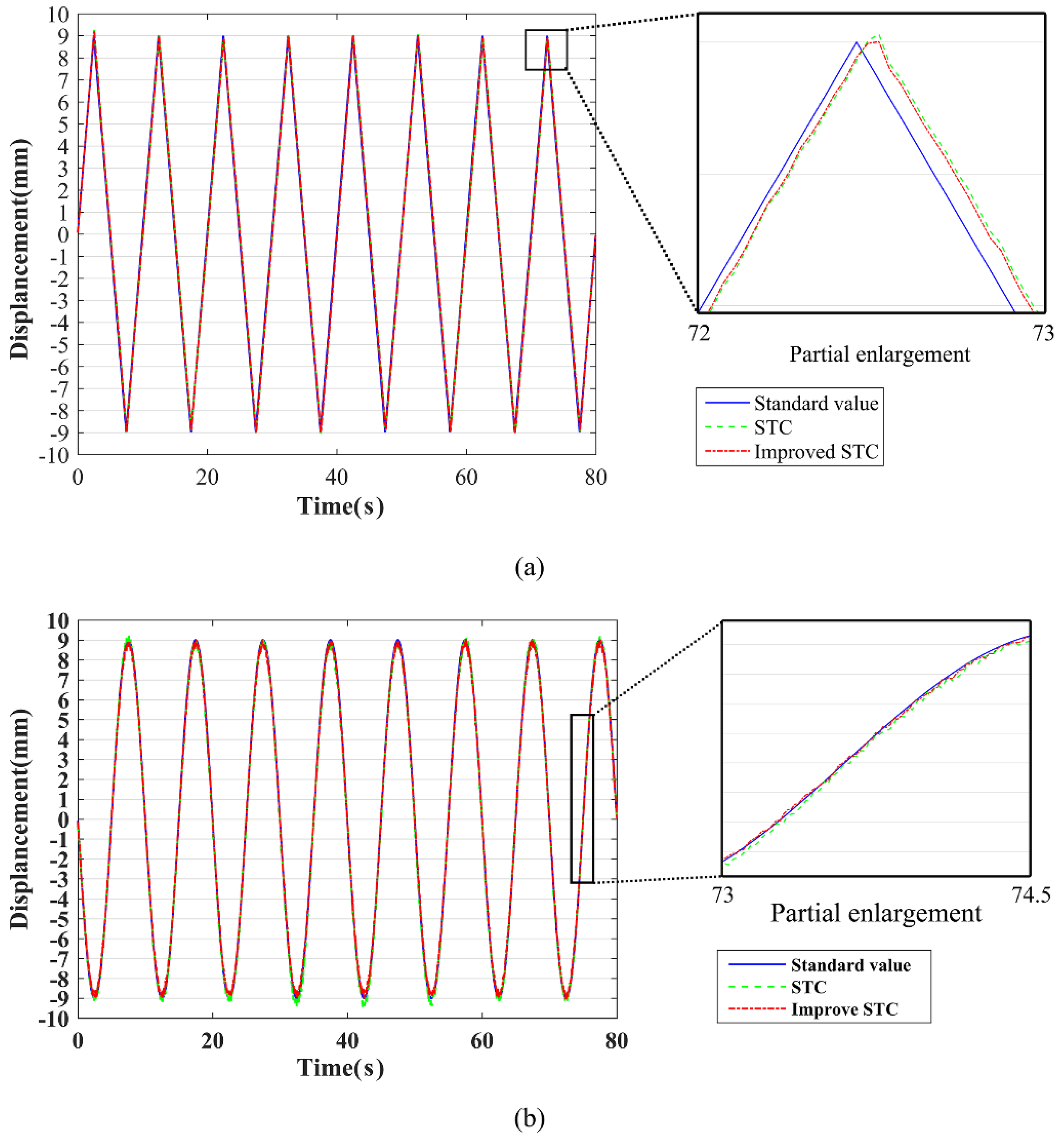

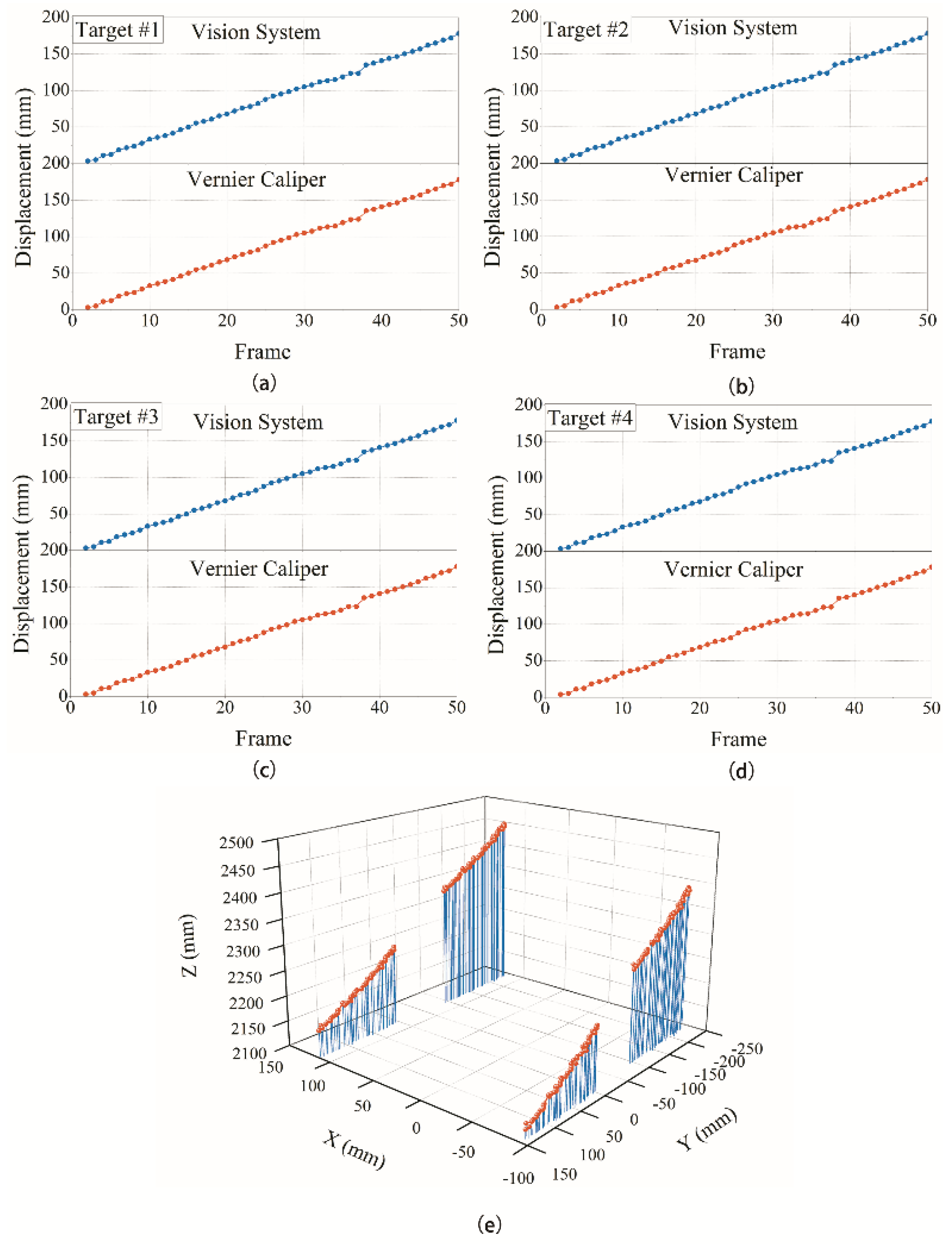

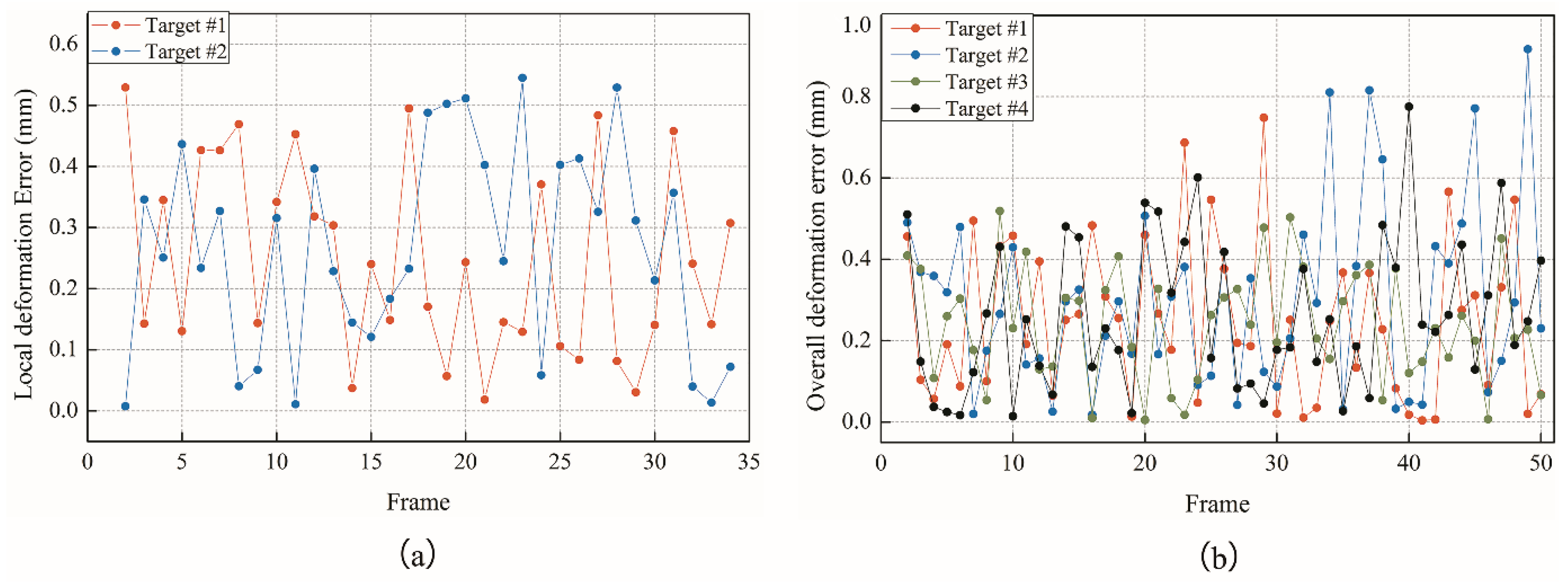

3.2. Moving Platform Experiment

4. Conclusions

- (1) The target markers for the monitoring system are designed as concentric circles. Weighing positioning error, marker size, and computation time, this study recommends six concentric layers and a marker image no smaller than 28 × 28 pixels. With this target design, the noise robustness test shows that the proposed positioning method is more accurate and more stable than the center of gravity method under different levels of Gaussian noise and impulse noise.

- (2) This study introduces target tracking into slope deformation monitoring, which makes the monitoring more intelligent. The tracking performance evaluation shows that refining the STC tracking result with UCC sub-pixel template matching effectively reduces the measurement error.

- (3) Finally, slope movement is simulated with an indoor sliding plate, and the deformation is monitored with the proposed method. The results show that the deformation measurement reaches millimeter-level accuracy, which supports the potential of the stereo vision displacement sensor for cost-effective slope health monitoring. Application to real slopes, however, still needs to be explored under field conditions.

Author Contributions

Funding

Conflicts of Interest


| Number of Circles | Measured x (pixel) | Measured y (pixel) | True x (pixel) | True y (pixel) | Error x (pixel) | Error y (pixel) | Time (ms) |
|---|---|---|---|---|---|---|---|
| 2 | 855.620117 | 855.671021 | 856 | 856 | 0.379883 | 0.328979 | 3707 |
| 4 | 855.615845 | 855.675781 | 856 | 856 | 0.384155 | 0.324219 | 3885 |
| 6 | 855.619934 | 855.677551 | 856 | 856 | 0.380066 | 0.322449 | 4134 |
| 8 | 855.619080 | 855.677856 | 856 | 856 | 0.380920 | 0.322144 | 4337 |
| 10 | 855.618164 | 855.677368 | 856 | 856 | 0.381836 | 0.322632 | 4477 |
| 12 | 855.611511 | 855.672791 | 856 | 856 | 0.388489 | 0.327209 | 4849 |
| 14 | 855.605957 | 855.669495 | 856 | 856 | 0.394043 | 0.330505 | 5184 |

| Size (pixels) | Classification | Number of Circles Detected | Clustering x (pixels) | Clustering y (pixels) | True x (pixels) | True y (pixels) | Error x (pixels) | Error y (pixels) |
|---|---|---|---|---|---|---|---|---|
| 41 × 41 | circle | 6 | 20.154753 | 20.214046 | 20.5 | 20.5 | 0.345247 | 0.285954 |
| 41 × 41 | ellipse | 6 | 20.08853 | 20.192606 | 20.5 | 20.5 | 0.41147 | 0.307394 |
| 36 × 36 | circle | 6 | 17.625174 | 17.636324 | 18 | 18 | 0.374826 | 0.363676 |
| 36 × 36 | ellipse | 6 | 17.594893 | 17.673111 | 18 | 18 | 0.405107 | 0.326889 |
| 30 × 30 | circle | 6 | 14.616336 | 14.700969 | 15 | 15 | 0.383664 | 0.299031 |
| 30 × 30 | ellipse | 6 | 14.589076 | 14.743123 | 15 | 15 | 0.410924 | 0.256877 |
| 28 × 28 | circle | 6 | 13.625415 | 13.72232 | 14 | 14 | 0.374585 | 0.27768 |
| 28 × 28 | ellipse | 6 | 13.612086 | 13.60793 | 14 | 14 | 0.387914 | 0.39207 |
| 25 × 25 | circle | 5 | 13.420197 | 11.999551 | 12.5 | 12.5 | −0.9202 | 0.500449 |
| 25 × 25 | ellipse | 6 | 12.436928 | 13.601745 | 12.5 | 12.5 | 0.063072 | −1.10175 |

| Number | Noise Level | Gaussian Noise Error, Vision System x (pixels) | Gaussian Noise Error, Vision System y (pixels) | Gaussian Noise Error, Gravity Method x (pixels) | Gaussian Noise Error, Gravity Method y (pixels) | Impulse Noise Error, Vision System x (pixels) | Impulse Noise Error, Vision System y (pixels) | Impulse Noise Error, Gravity Method x (pixels) | Impulse Noise Error, Gravity Method y (pixels) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 0.005 | 0.002198 | −0.00613 | −0.00582 | −0.00922 | 0.007202 | 0.000458 | −0.06564 | 0.025549 |
| 3 | 0.01 | 0.000031 | −0.00558 | −0.01349 | 0.007616 | −0.00336 | −0.00061 | 0.094164 | −0.01722 |
| 4 | 0.015 | −0.000457 | −0.00784 | −0.0143 | 0.000161 | −0.00513 | −0.00552 | 0.087803 | −0.0336 |
| 5 | 0.02 | −0.006286 | 0.000122 | −0.00445 | 0.024084 | −0.00107 | −0.00293 | −0.00639 | 0.072873 |
| 6 | 0.025 | 0.002228 | −0.00488 | −0.01759 | −0.00717 | −0.00241 | −0.00424 | −0.05005 | −0.11229 |
| 7 | 0.03 | 0.000641 | −0.00748 | 0.001939 | −0.00858 | 0.001587 | −0.00052 | 0.008688 | −0.01136 |
| 8 | 0.035 | −0.002685 | 0.002228 | −0.01136 | −0.00445 | 0.000977 | −0.00198 | −0.00427 | −0.13275 |
| 9 | 0.04 | −0.004699 | −0.00928 | −0.01524 | −0.00603 | 0.000977 | −0.00598 | 0.016176 | −0.08148 |
| 10 | 0.045 | 0.000489 | −0.01071 | −0.01459 | −0.00226 | −0.00095 | −0.00345 | 0.09499 | 0.540542 |
| 11 | 0.05 | 0.003693 | −0.00229 | −0.0059 | −0.00388 | −0.00513 | −0.00339 | — | — |
| 12 | 0.055 | 0.004456 | −0.0101 | −0.01893 | −0.00204 | 0.005402 | −0.00037 | — | — |
| 13 | 0.06 | 0.010865 | −0.01099 | −0.00166 | 0.006228 | 0.001984 | −0.01364 | — | — |
| 14 | 0.065 | 0.002076 | 0.002808 | −0.03957 | −0.00365 | −0.00018 | 0.004792 | — | — |
| 15 | 0.07 | 0.001526 | −0.00134 | 0.000937 | 0.006315 | 0.002747 | −0.01834 | — | — |
| 16 | 0.075 | −0.009491 | 0.002289 | −0.0064 | −0.00334 | 0.004792 | −0.00192 | — | — |
| 17 | 0.08 | −0.002868 | −0.00134 | −0.00178 | 0.007308 | −0.00082 | 0.002961 | — | — |
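The "Gravity Method" columns above refer to the center of gravity (intensity-weighted centroid) baseline. As a rough illustration only, the sketch below computes such a centroid on a synthetic concentric-ring image in plain Python. The function names `center_of_gravity` and `make_ring_target`, the image size, and the ring pattern are assumptions made for this example; they are not taken from the authors' implementation.

```python
import math


def center_of_gravity(image):
    """Return the intensity-weighted centroid (x, y) of a 2D list of gray values."""
    total = 0.0
    sum_x = 0.0
    sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            total += value
            sum_x += x * value
            sum_y += y * value
    if total == 0:
        raise ValueError("image has no intensity; centroid is undefined")
    return sum_x / total, sum_y / total


def make_ring_target(size=41, ring_width=3.0):
    """Hypothetical synthetic target: alternating bright and dark rings
    centered on the middle pixel of a size x size image."""
    c = (size - 1) / 2.0
    image = []
    for y in range(size):
        row = []
        for x in range(size):
            r = math.hypot(x - c, y - c)
            # Bright where the ring index is even and the pixel lies inside the target.
            bright = r <= c and int(r // ring_width) % 2 == 0
            row.append(1.0 if bright else 0.0)
        image.append(row)
    return image


target = make_ring_target()
cx, cy = center_of_gravity(target)
print(f"centroid = ({cx:.3f}, {cy:.3f})")
```

On a noise-free, symmetric target the centroid coincides with the geometric center (pixel index 20 in a 41 × 41 image when indices start at 0). Noise adds or removes intensity unevenly and can pull the centroid away from that center.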

| Number | Minimum Diameter | Pixel Size | Target Space Coordinate X | Target Space Coordinate Y | Target Space Coordinate Z | Measurement (mm) | Error (mm) |
|---|---|---|---|---|---|---|---|
| I-1 | 5 | 66 × 66 | −47.2496 | 60.7972 | 1540.49 | 149.7765 | 0.2235 |
| | | | 101.466 | 64.0262 | 1522.99 | | |
| I-2 | 7.5 | 99 × 99 | −45.7566 | 48.5814 | 1526.84 | 149.8464 | 0.1536 |
| | | | 102.9 | 55.1874 | 1544.49 | | |
| I-3 | 10 | 132 × 132 | −72.0315 | 72.8357 | 1535.54 | 150.2367 | 0.2367 |
| | | | 76.8983 | 78.3795 | 1554.52 | | |
| I-4 | 12.5 | 165 × 165 | −45.524 | 70.3383 | 1534.15 | 149.7905 | 0.2095 |
| | | | 101.925 | 71.9863 | 1507.82 | | |
| I-5 | 15 | 198 × 198 | −33.9774 | 64.1482 | 1523.63 | 149.8362 | 0.1638 |
| | | | 115.1 | 67.5548 | 1538.3 | | |
| I-6 | 17.5 | 231 × 231 | −84.4728 | 90.9581 | 1536.39 | 149.7684 | 0.2316 |
| | | | 63.3845 | 91.6196 | 1512.55 | | |
| I-7 | 20 | 264 × 264 | −37.0946 | 87.539 | 1517.28 | 150.1354 | 0.1354 |
| | | | 108.986 | 84.4612 | 1482.76 | | |
| I-8 | 22.5 | 297 × 297 | −101.026 | 85.8391 | 1518.59 | 149.8158 | 0.1842 |
| | | | 46.4737 | 86.6175 | 1492.36 | | |
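Each test in the table above lists the space coordinates of two targets, and the Measurement column matches the Euclidean distance between them. The following sketch reproduces row I-1 under that reading. The helper name `distance_3d` is hypothetical, and the 150 mm reference distance is an assumption inferred from the Error column rather than a value stated in the table.

```python
import math


def distance_3d(p, q):
    """Euclidean distance between two 3D points given as (X, Y, Z) tuples."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# Coordinates of the two targets in row I-1 of the table above.
p1 = (-47.2496, 60.7972, 1540.49)
p2 = (101.466, 64.0262, 1522.99)

# Assumption: reference distance inferred from the Error column (|150 - measurement|).
REFERENCE_MM = 150.0

measured = distance_3d(p1, p2)
error = abs(measured - REFERENCE_MM)
print(f"measured = {measured:.4f} mm, error = {error:.4f} mm")
```

The same two lines of arithmetic apply to every row; only the coordinate pairs change.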

| Number | Real (mm) | Target Space Coordinate X | Target Space Coordinate Y | Target Space Coordinate Z | Measurement (mm) | Error (mm) |
|---|---|---|---|---|---|---|
| II-1 | 100 | −31.187 | 95.2045 | 1529.29 | 100.1681 | 0.1681 |
| | | 66.6902 | 95.3143 | 1507.99 | | |
| II-2 | 125 | 15.1994 | 81.9168 | 1526.35 | 124.7468 | 0.2532 |
| | | 139.581 | 85.3977 | 1535.23 | | |
| II-3 | 150 | −33.9774 | 64.1482 | 1523.63 | 149.8362 | 0.1638 |
| | | 115.1 | 67.5548 | 1538.3 | | |
| II-4 | 175 | −84.989 | 109.588 | 1543.95 | 174.8049 | 0.1951 |
| | | 87.5032 | 109.406 | 1515.61 | | |
| II-5 | 200 | −36.0215 | 65.7006 | 1538.77 | 200.1552 | 0.1552 |
| | | 163.315 | 67.5481 | 1556.76 | | |
| II-6 | 225 | −86.3799 | 60.4379 | 1544.6 | 225.26 | 0.26 |
| | | 137 | 61.2969 | 1573.63 | | |
| II-7 | 250 | −65.7222 | 107.195 | 1540.87 | 250.1702 | 0.1702 |
| | | 183.541 | 113.807 | 1561.1 | | |
| II-8 | 275 | −52.424 | 74.3521 | 1516.97 | 275.2898 | 0.2898 |
| | | 221.944 | 80.5141 | 1538.62 | | |
| II-9 | 300 | −144.008 | 67.8541 | 1520.25 | 300.2821 | 0.2821 |
| | | 154.401 | 74.3054 | 1553.11 | | |
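To summarize the errors in the table above, one option is to compute the mean and maximum absolute error and the largest error relative to the real distance. The sketch below does this for rows II-1 to II-9. The variable names are illustrative, and these summary statistics are a convenience for the reader, not figures reported by the authors.

```python
# (real distance, measured distance) in mm, copied from rows II-1 to II-9 above.
rows = [
    (100, 100.1681),
    (125, 124.7468),
    (150, 149.8362),
    (175, 174.8049),
    (200, 200.1552),
    (225, 225.26),
    (250, 250.1702),
    (275, 275.2898),
    (300, 300.2821),
]

# Absolute error per row, matching the table's Error column.
abs_errors = [abs(measured - real) for real, measured in rows]

mean_abs_error = sum(abs_errors) / len(abs_errors)
max_abs_error = max(abs_errors)
# Largest error as a fraction of the real distance.
max_rel_error = max(err / real for err, (real, _) in zip(abs_errors, rows))

print(f"mean absolute error: {mean_abs_error:.4f} mm")
print(f"max absolute error:  {max_abs_error:.4f} mm")
print(f"max relative error:  {100 * max_rel_error:.3f} %")
```

Reporting the relative error alongside the absolute error helps show whether the error grows with the measured distance.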

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

He, L.; Tan, J.; Hu, Q.; He, S.; Cai, Q.; Fu, Y.; Tang, S. Non-Contact Measurement of the Surface Displacement of a Slope Based on a Smart Binocular Vision System. Sensors 2018, 18, 2890. https://doi.org/10.3390/s18092890
