A Novel Coordinated Motion Fusion-Based Walking-Aid Robot System

Abstract

1. Introduction

- (1)

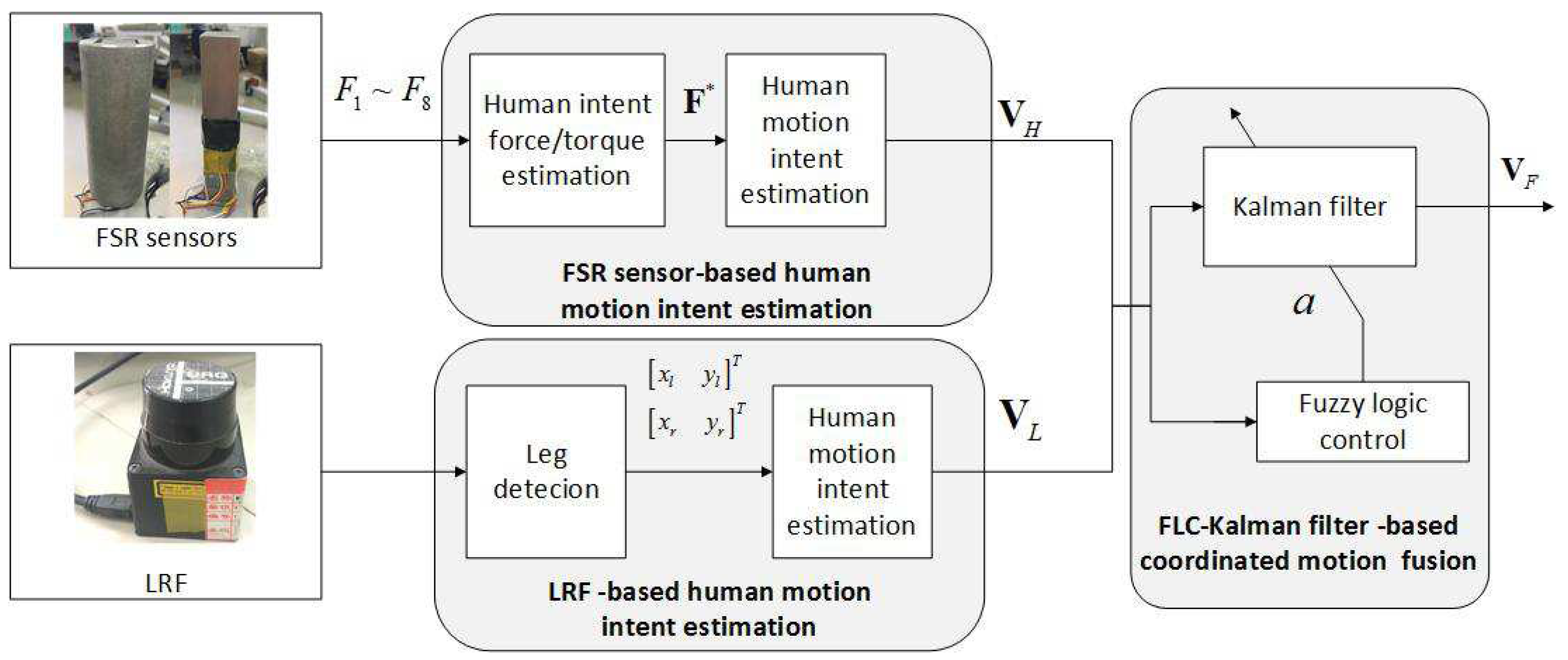

- We aim to investigate how to utilize the synergetic movements of the arms and legs to perceive more accurate HMI and a more compliant human-robot interface method in the normal walking state. According to the coordinated motion of the human-robot system, force sensors and an laser range finder (LRF) were used to detect the velocities of the human’s upper and lower limbs to estimate HMIs. The synergy of arm and leg movements will help the robot to perceive more accurate HMI and release a part of the user’s hand strength. Consequently, the user will feel that the robot is following rather than pushing him/her. Thus, the robot will better understand the user’s intention, and more effective guidance commands will be generated so that the walking-aid robot operates in a safe manner.

- (2)

- Compared with the conventional force control methods (such as admittance control), the proposed coordinated motion-based motion control algorithm can detect the user’s abnormal gait in abnormal walking state, then remind the robot to react to prevent the user from falling.

2. Related Work

3. Multi-Sensor-Based HMI Estimation Algorithms

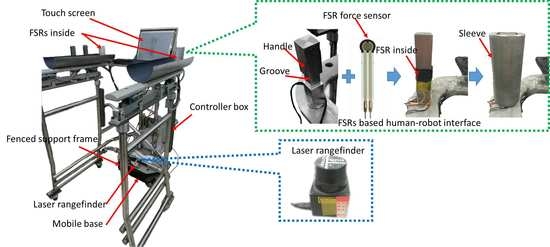

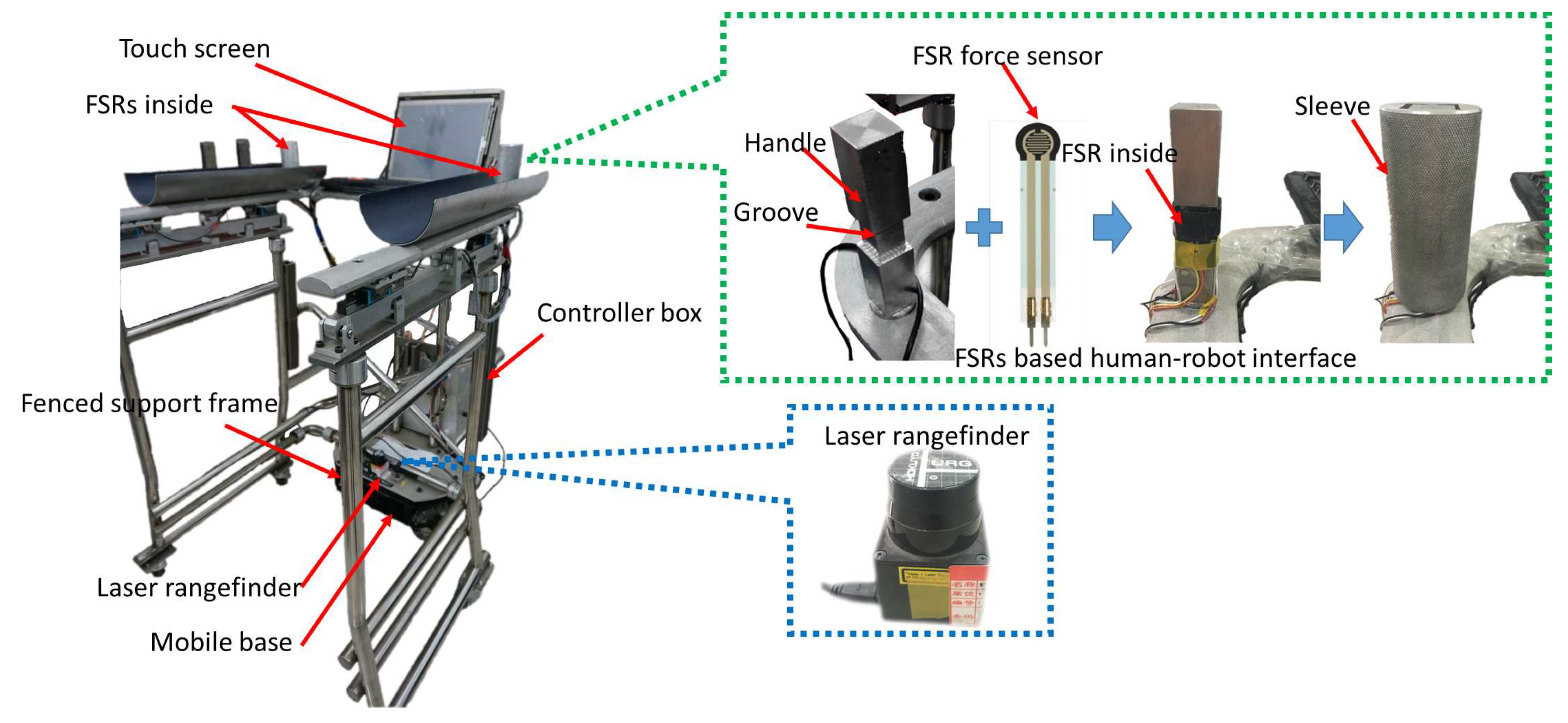

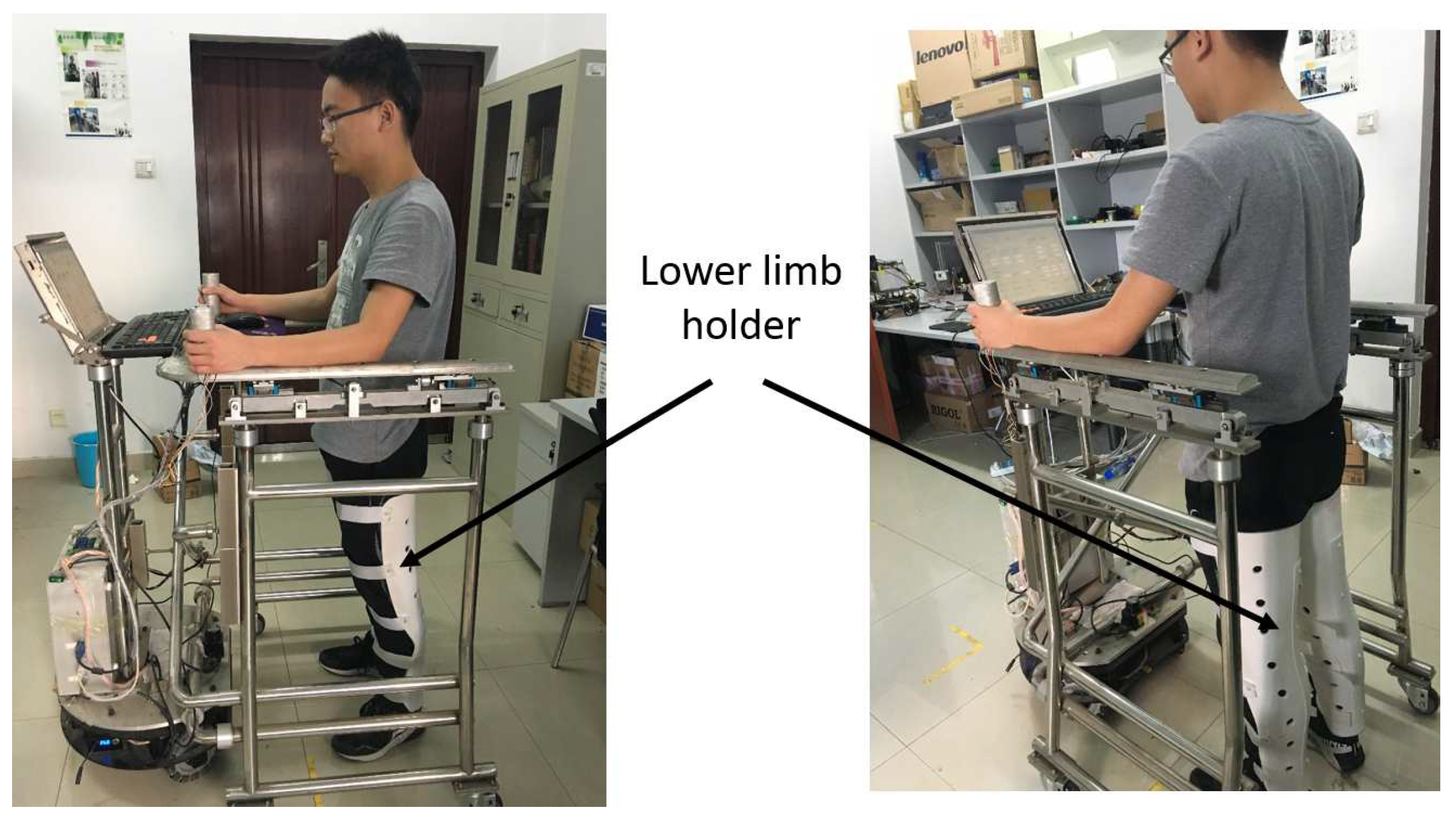

3.1. Mechanism for the Walking-Aid Robot

3.2. FSR Sensor-Based HMI Estimation Algorithm

3.3. LRF-Based HMI Algorithm

- Range segmentation: Consecutive scan points that lie close together probably belong to the same object, so range segmentation groups them into a cluster. The method calculates the distance between each pair of consecutive points and assigns both points to the same cluster if that distance is less than a given threshold. Isolated scan points are rejected.

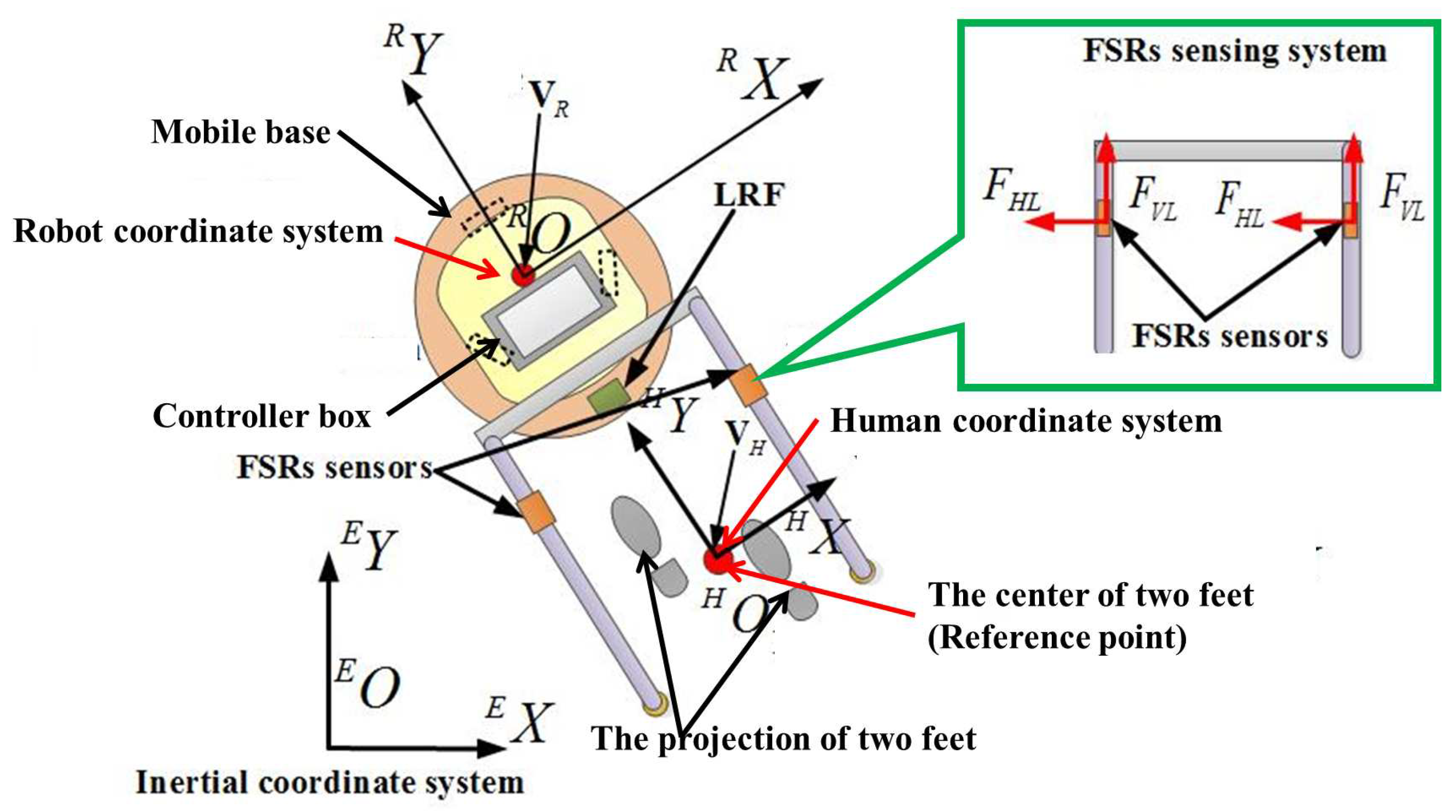

- Circle identification: We used the method in [28] to identify the circle. When a circle is identified, its center and radius need to be estimated. From analytic geometry, three points P1, P2 and P3 on a circle determine it uniquely and form two secant lines (as shown in Figure 3). The first line, denoted a, passes through points P1 and P2, and the second line, denoted b, passes through points P2 and P3; each line is characterized by its slope. The center of the circle is the intersection of the two lines that are perpendicular to the secant segments P1P2 and P2P3 and pass through their midpoints, as shown in Figure 3. Since some circles are not human legs, a precondition is used to remove the segments that are not circles: the middle point of the segment must be inside an area delimited by two lines parallel to the line joining the extremes of the same segment, as shown in Figure 3.

- Leg detection: According to the inscribed angle theorem, if four consecutive points lie on the same circle, the angles they inscribe over the same chord are equal. After the inscribed angles of all points in a segment are calculated, the segment is classified as a circle if the standard deviation of the angles is below a threshold and their average lies within a specified interval. The procedure for detecting legs is an extension of circle detection. To identify a leg, two extra constraints are imposed: the distance between the end-points must fall within the range of expected leg diameters (0.1–0.25 m), and the farthest distance between the LRF and the leg must lie within 0.3–1.2 m. Table 2 confirms the validity of the leg detection method when the user wears different clothes in different seasons. In the leg detection experiment, seven subjects wore their daily clothes and trousers in different seasons. The success rate of the leg detection method was 100% in all seasons.

- LRF-based HMI estimation: Walking is a process in which the two legs move alternately, so the velocity of a single leg does not adequately express a person’s moving velocity and direction. Therefore, after detecting the positions of both legs relative to the LRF, we used the midpoint of the line segment joining the two legs, together with the actual velocities of the robot, to estimate the HMI. Because it is difficult to obtain the intended angular velocity from the midpoint of the two feet, the angular velocity in this design is set equal to a known quantity rather than derived from the leg positions. The result is the desired velocities of the robot as detected by the LRF.
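The first two steps above, range segmentation and circle-center estimation, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names `segment_scan` and `circle_from_three_points` and the threshold value are hypothetical, and the center is obtained by solving the two perpendicular-bisector equations as a linear system instead of through the slopes of lines a and b, which avoids undefined slopes for vertical secants while yielding the same geometric point.

```python
import math


def segment_scan(points, threshold):
    """Group consecutive scan points into clusters.

    Two consecutive points share a cluster when their distance is below
    `threshold` (an assumed, user-chosen value). Isolated points are rejected.
    """
    clusters, current = [], []
    for p in points:
        if current and math.dist(current[-1], p) >= threshold:
            if len(current) > 1:  # keep only clusters with at least two points
                clusters.append(current)
            current = []
        current.append(p)
    if len(current) > 1:
        clusters.append(current)
    return clusters


def circle_from_three_points(p1, p2, p3):
    """Return ((cx, cy), r) of the circle through three points.

    The center is the intersection of the perpendicular bisectors of the
    secant segments P1P2 and P2P3.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Bisector of P1P2: 2(x2-x1)x + 2(y2-y1)y = x2^2 + y2^2 - x1^2 - y1^2
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = x2**2 + y2**2 - x1**2 - y1**2
    # Bisector of P2P3, built the same way
    a2, b2 = 2 * (x3 - x2), 2 * (y3 - y2)
    c2 = x3**2 + y3**2 - x2**2 - y2**2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("points are collinear; no unique circle")
    cx = (c1 * b2 - c2 * b1) / det
    cy = (a1 * c2 - a2 * c1) / det
    return (cx, cy), math.hypot(x1 - cx, y1 - cy)
```

In use, each cluster returned by `segment_scan` would supply the three points (for example its first, middle and last point) passed to `circle_from_three_points`.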

| Algorithm 1 |
|---|
| Input: the scan point positions measured by the LRF. Output: the LRF-based HMI. |
| 1. Get each scan point position from the LRF. |
| 2. Divide consecutive scan points into clusters by range segmentation. |
| 3. Identify the detected circles. |
| 4. Calculate the centers of the circles by Equations (4)–(6). |
| 5. Identify the user’s legs from the detected circles. |
| 6. Calculate the LRF-based HMI by Equations (8)–(10). |
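Steps 5 and 6 of Algorithm 1 can be illustrated with the sketch below. It is a hedged example rather than the published method: the function names are hypothetical, the LRF is assumed to sit at the origin of the scan frame, and the angle thresholds `max_std` and `mean_range` are left as parameters because their values are not given in this text. Only the diameter range (0.1–0.25 m) and the distance range (0.3–1.2 m) come from the description above.

```python
import math
import statistics


def inscribed_angles(cluster):
    """Angle (radians) at each interior point subtended by the two end-points."""
    first, last = cluster[0], cluster[-1]
    angles = []
    for p in cluster[1:-1]:
        a1 = math.atan2(first[1] - p[1], first[0] - p[0])
        a2 = math.atan2(last[1] - p[1], last[0] - p[0])
        d = abs(a1 - a2)
        angles.append(min(d, 2 * math.pi - d))  # wrap into [0, pi]
    return angles


def is_leg(cluster, max_std, mean_range,
           diameter_range=(0.10, 0.25), distance_range=(0.3, 1.2)):
    """Classify a cluster as a leg: circle test plus the two size constraints."""
    angles = inscribed_angles(cluster)
    if len(angles) < 2:
        return False
    # Circle test: inscribed angles nearly equal and within the allowed interval
    if statistics.pstdev(angles) >= max_std:
        return False
    if not (mean_range[0] <= statistics.mean(angles) <= mean_range[1]):
        return False
    # Leg constraints: end-point distance and farthest range from the LRF (origin)
    width = math.dist(cluster[0], cluster[-1])
    farthest = max(math.hypot(x, y) for x, y in cluster)
    return (diameter_range[0] <= width <= diameter_range[1]
            and distance_range[0] <= farthest <= distance_range[1])


def leg_midpoint(left_leg, right_leg):
    """Midpoint of the segment joining the two detected leg centers."""
    return ((left_leg[0] + right_leg[0]) / 2, (left_leg[1] + right_leg[1]) / 2)
```

A call such as `is_leg(cluster, max_std=0.15, mean_range=(math.radians(90), math.radians(135)))` uses illustrative thresholds only; they would need tuning on real scans. Tracking `leg_midpoint` over successive scans is one way to obtain the motion estimate that the HMI equations consume.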

4. Coordinated Motion Fusion-Based Walking-Aid Robot System

4.1. Coordinated Motion Fusion-Based Compliance Control Algorithm in the Normal Walking State

4.1.1. Kalman Filter-Based Coordinated Motion Fusion Algorithm

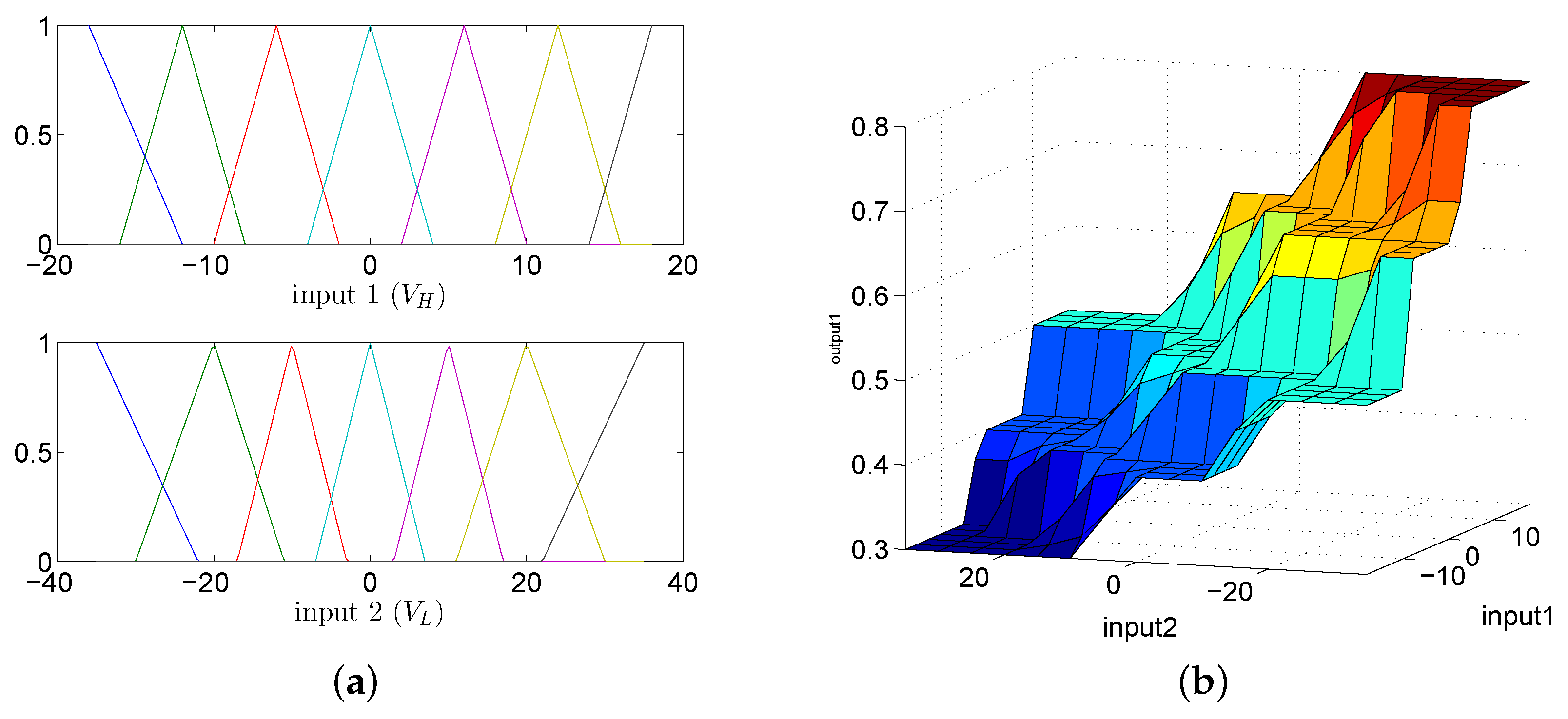

4.1.2. Fuzzy Logic Adaptive System

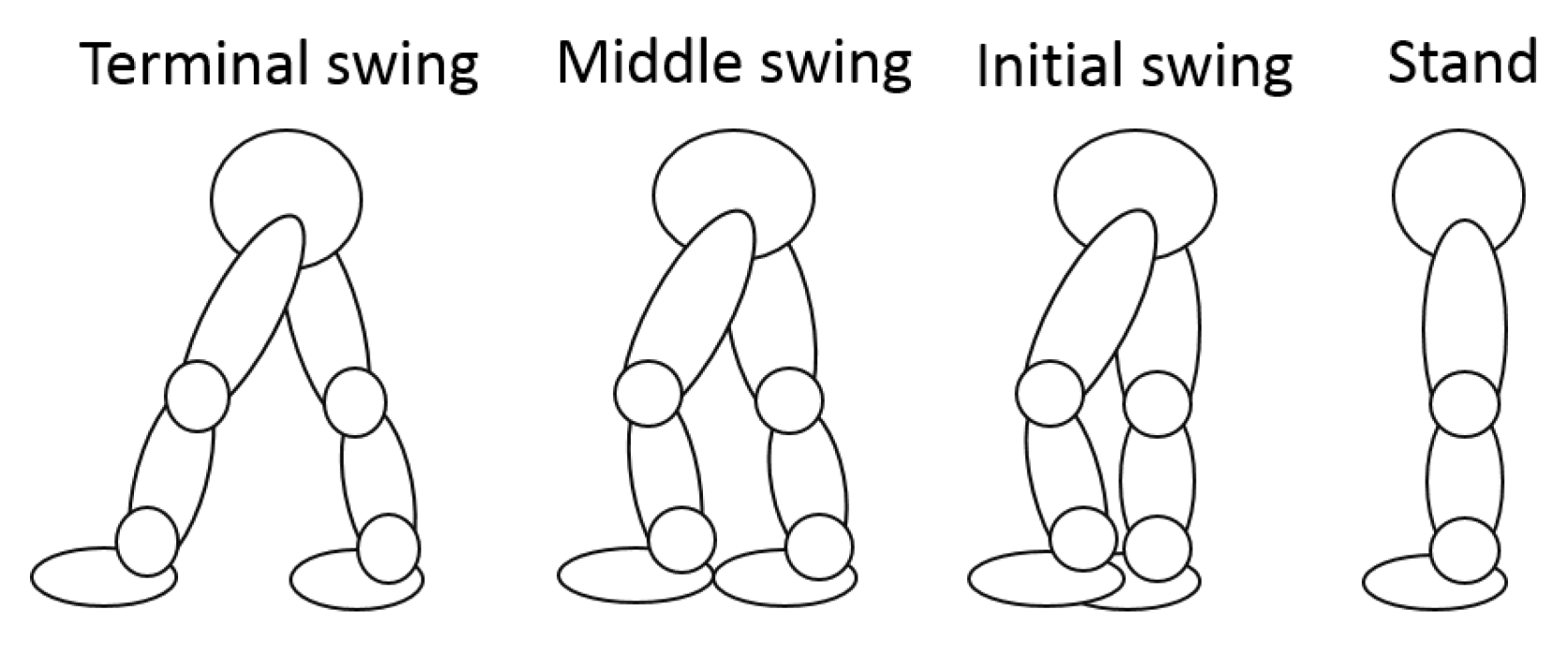

- Initial swing: At the beginning of a stride, FSR-based HMI velocities are smaller than LRF-based HMI velocities. When an individual uses a robot, he/she will sense that the robot is heavy and must exert effort to push the robot. If the robot assigns more trust to the LRF-based HMI velocities, it will have a faster starting speed. Therefore, the user can apply less strength to manipulate the robot and feel more comfortable.

- Middle swing: When the user is in the middle swing phase, both of the HMI velocities have reached their peak values and the robot trusts both of them.

- Terminal swing: In the terminal swing phase, the LRF-based HMI velocities will decrease rapidly, and the FSR-based HMI velocities will remain unchanged. At this time, due to safety requirements, the robot should assign more trust to the FSR-based HMI velocities.

| Algorithm 2 |
|---|
| Input: the FSR-based and LRF-based HMI velocities. Output: the fused HMI motion velocity. |
| 1. Get the FSR-based and LRF-based HMI velocities. |
| 2. Calculate the membership functions of the two fuzzy inputs. |
| 3. Calculate the output a (the confidence variable of the HMI algorithms) by the fuzzy logic rules in Table 3. |
| 4. Get the state variables by Equations (11) and (12). |
| 5. Calculate the “predict” stage of the Kalman filter. |
| 6. Calculate the “correct” stage of the Kalman filter according to the confidence variable a. |
| 7. Get the output of the Kalman filter. |
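To make steps 5 to 7 of Algorithm 2 concrete, the sketch below runs one predict/correct cycle of a scalar Kalman filter that fuses the two HMI velocities. Everything specific here is an assumption made for illustration: the random-walk velocity model, the noise values `q` and `r`, the function name `fuse_step`, and in particular the way the confidence variable a enters, namely as a value in (0, 1) that scales the two measurement-noise variances so that a larger a means more trust in the LRF-based velocity. The state model of Equations (11) and (12) and the fuzzy inference that produces a are not reproduced.

```python
def fuse_step(v, p, z_fsr, z_lrf, a, q=0.01, r=0.1):
    """One predict/correct cycle of a scalar Kalman filter.

    v, p   : previous fused velocity estimate and its variance
    z_fsr  : FSR-based HMI velocity measurement
    z_lrf  : LRF-based HMI velocity measurement
    a      : confidence variable, assumed in (0, 1); larger favors the LRF
    q, r   : assumed process and base measurement noise variances
    """
    a = min(max(a, 1e-3), 1 - 1e-3)  # keep both noise terms finite

    # Predict: random-walk model, so the estimate carries over and variance grows
    p = p + q

    # Correct: sequential scalar updates, one per sensor.
    # A low confidence inflates that sensor's measurement noise.
    for z, noise in ((z_fsr, r / (1 - a)), (z_lrf, r / a)):
        k = p / (p + noise)      # Kalman gain
        v = v + k * (z - v)      # pull the estimate toward the measurement
        p = (1 - k) * p          # variance shrinks after each update
    return v, p
```

With `a` close to 1 the fused velocity follows the LRF-based measurement, which matches the initial swing behavior described above; with `a` close to 0 it follows the FSR-based measurement, as in the terminal swing phase.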

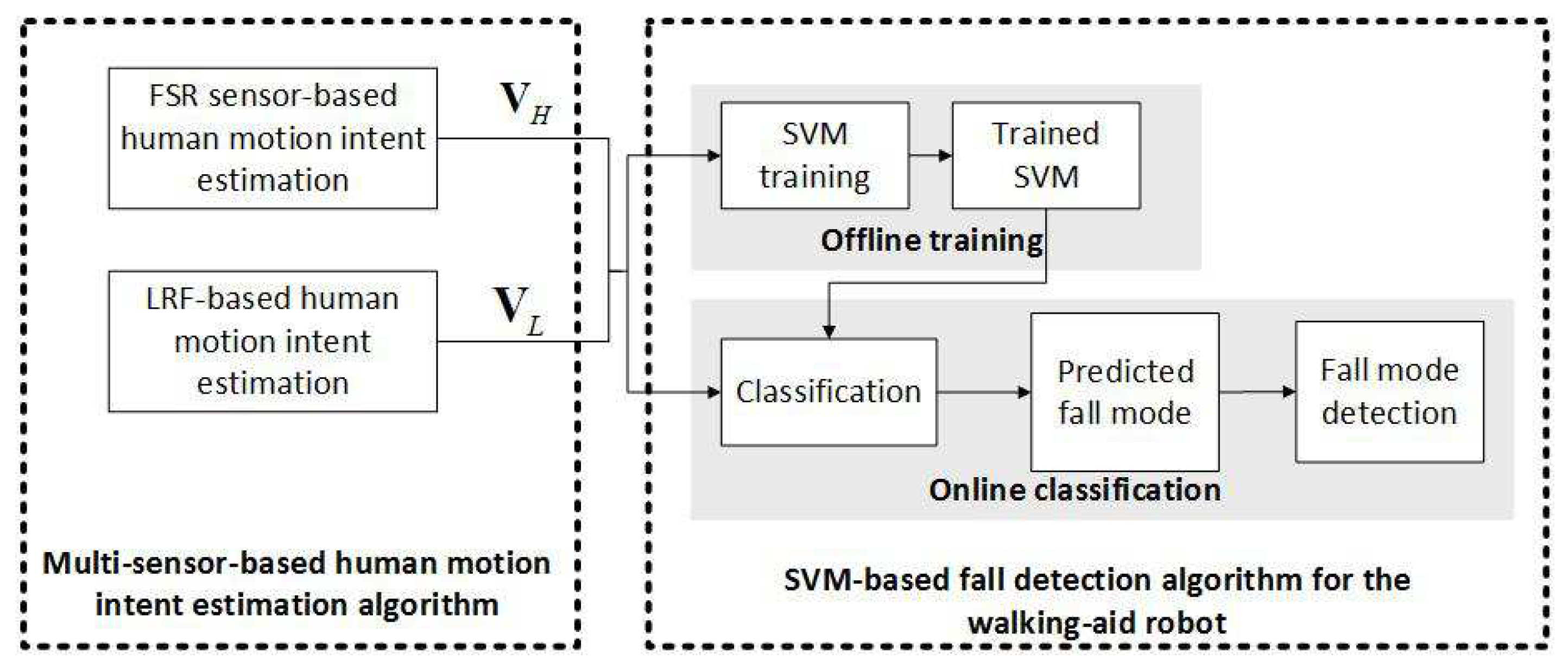

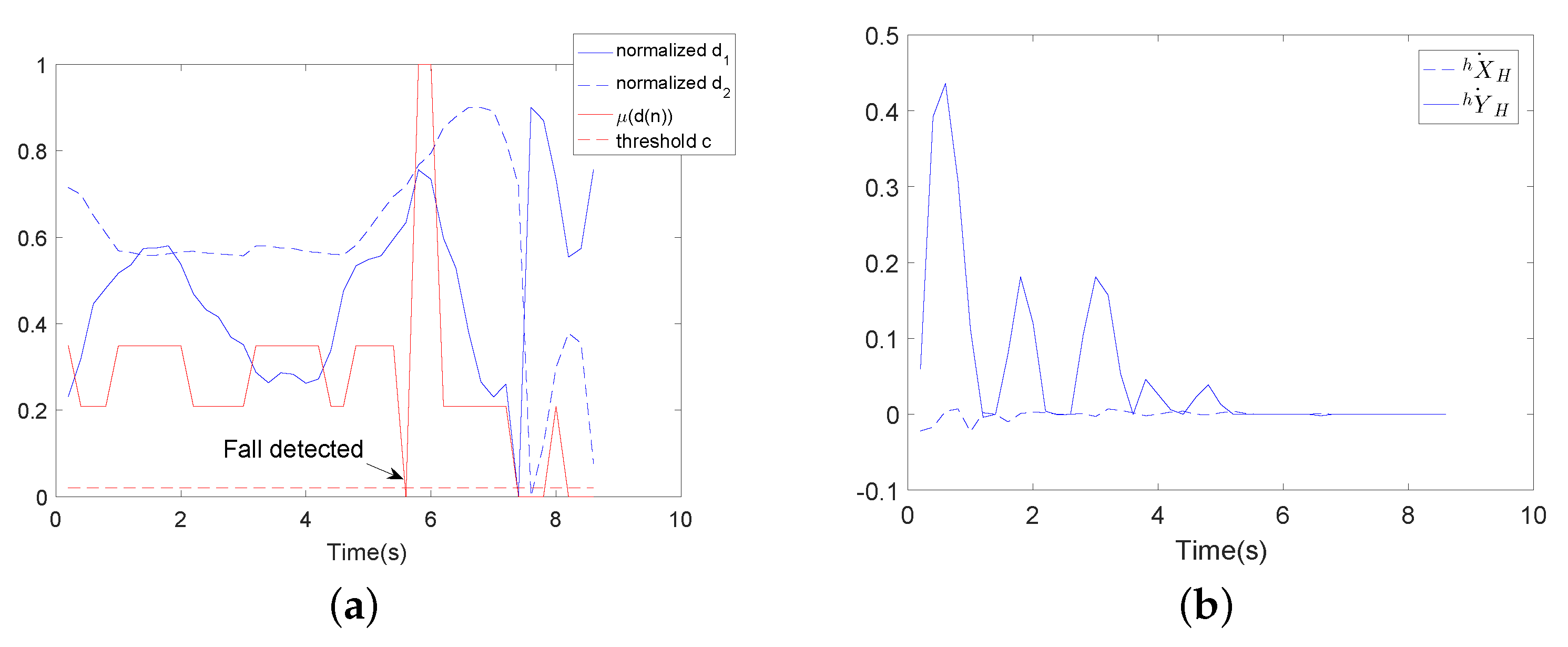

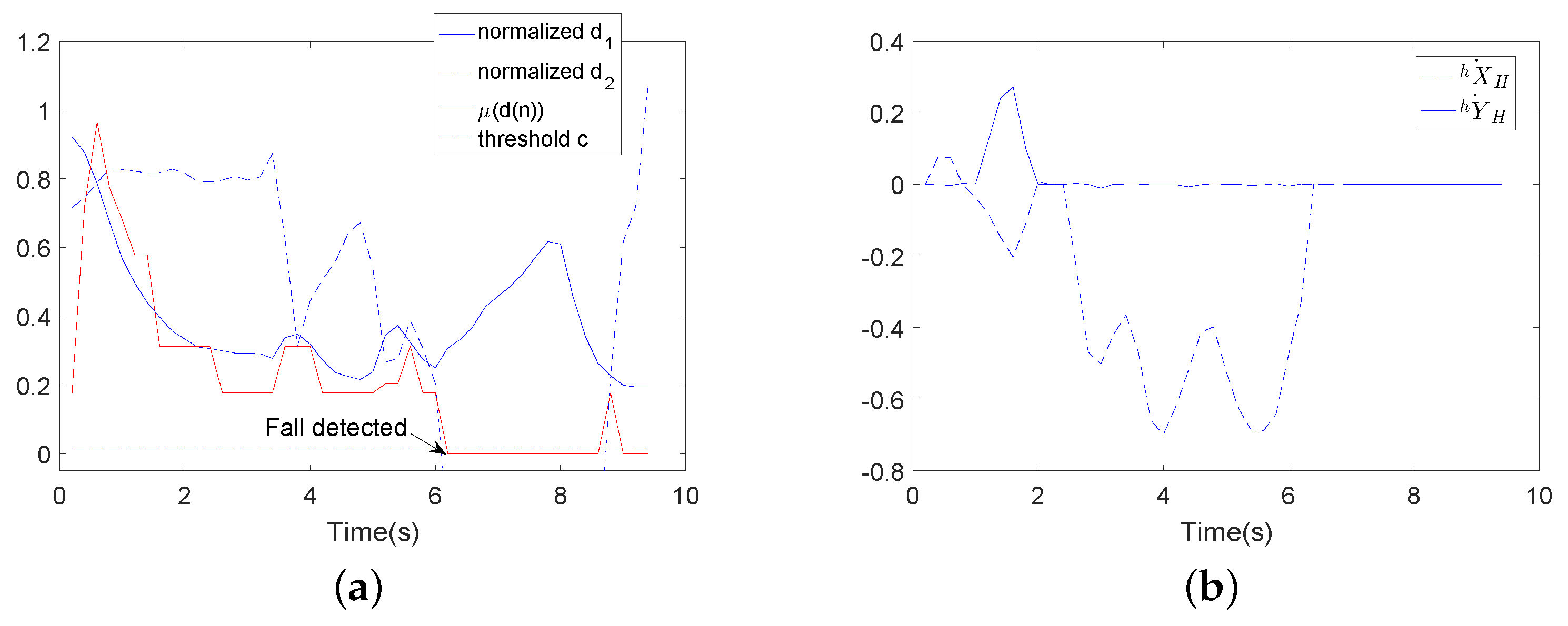

4.2. Coordinated Motion-Based Fall Detection Algorithm in the Abnormal Walking State

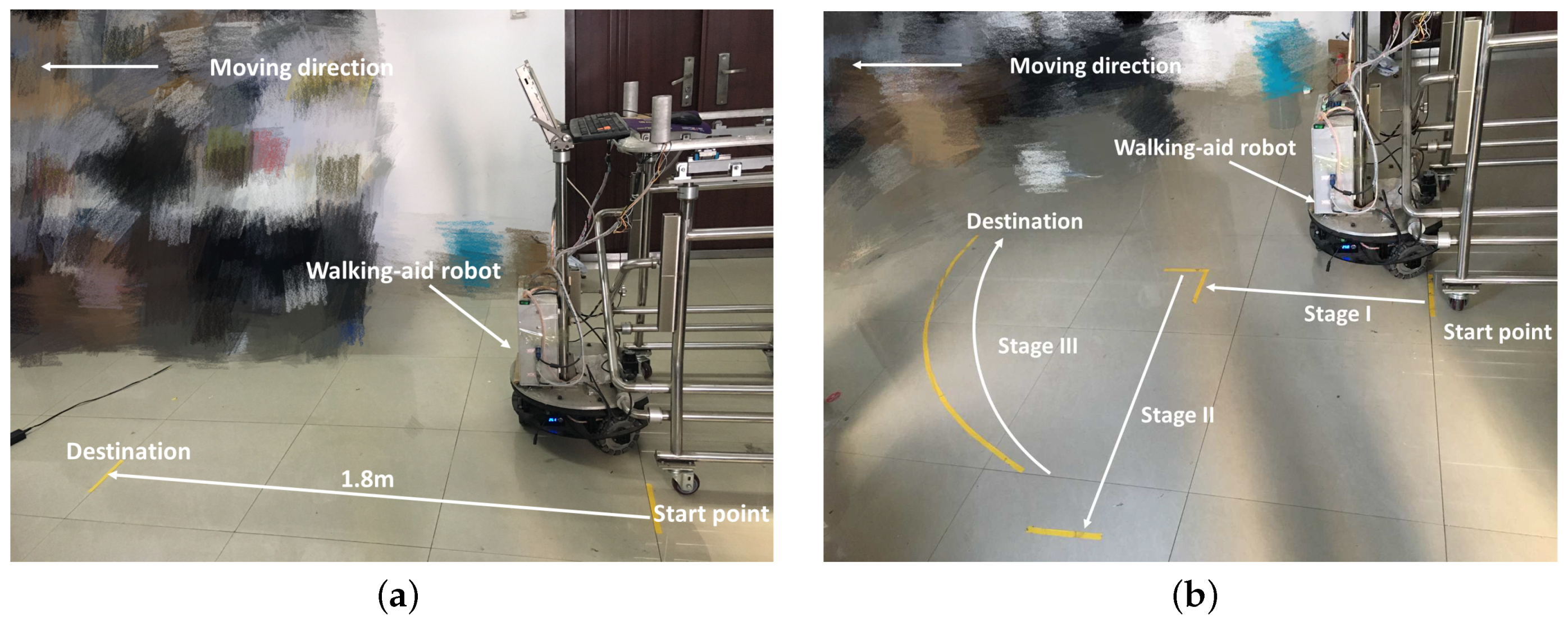

5. Experiment

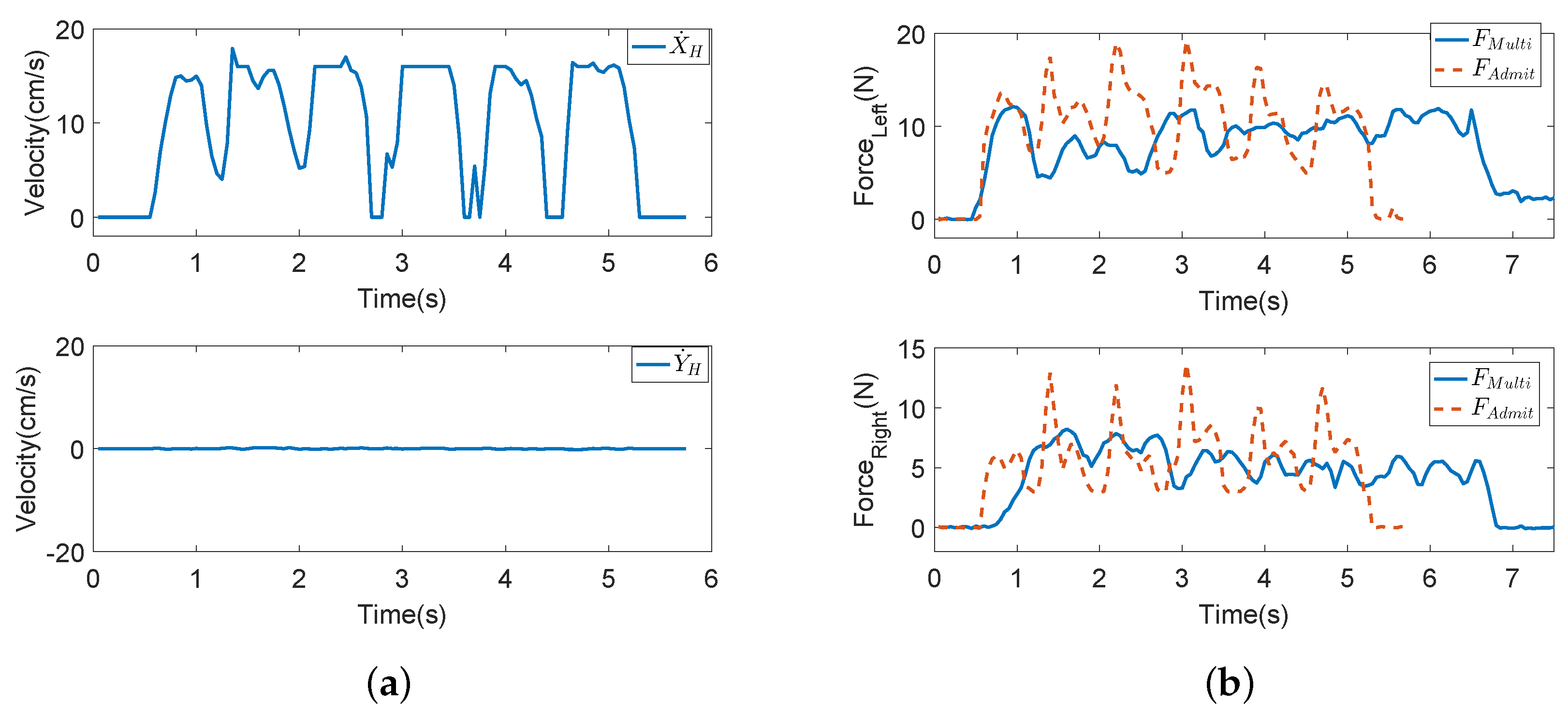

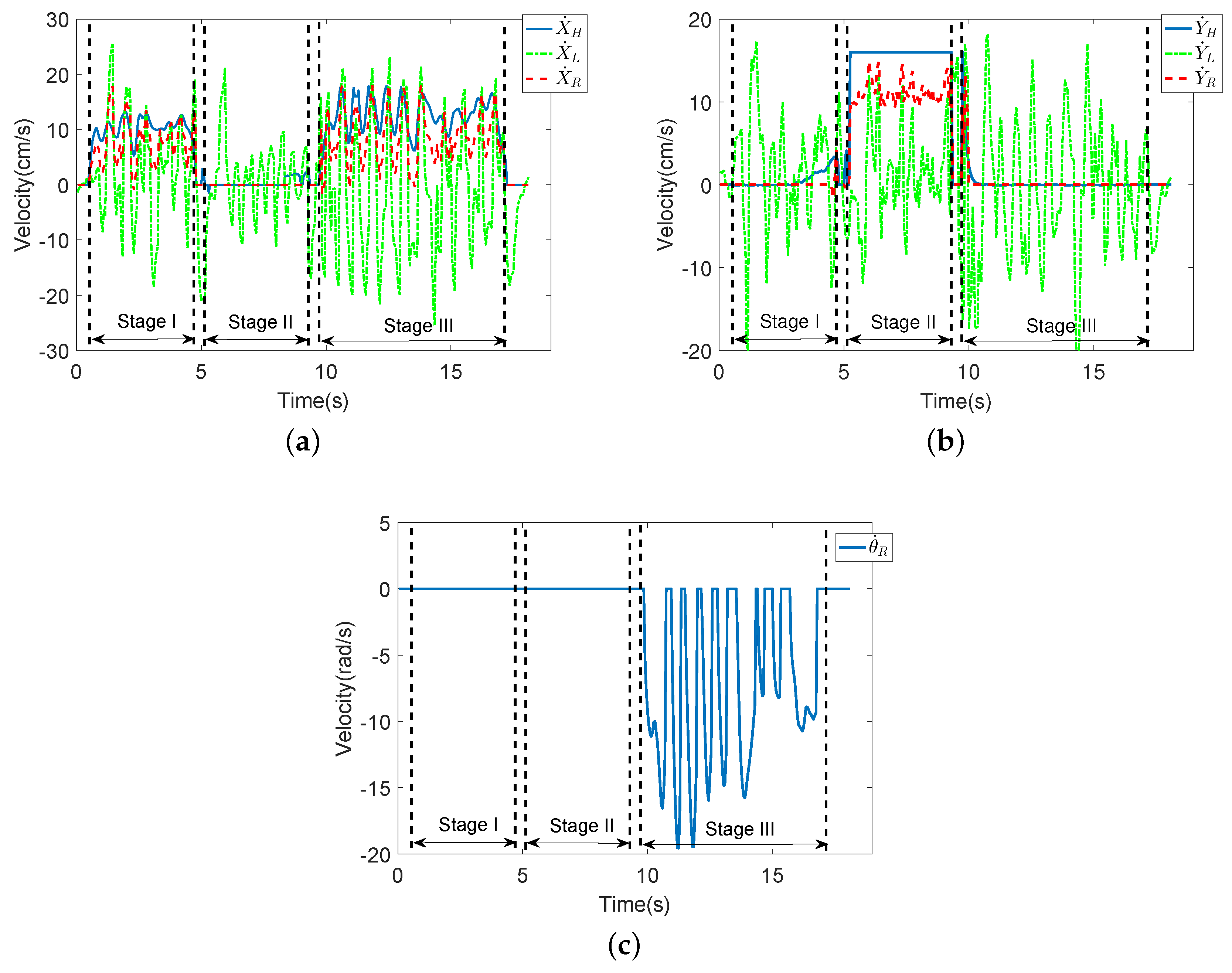

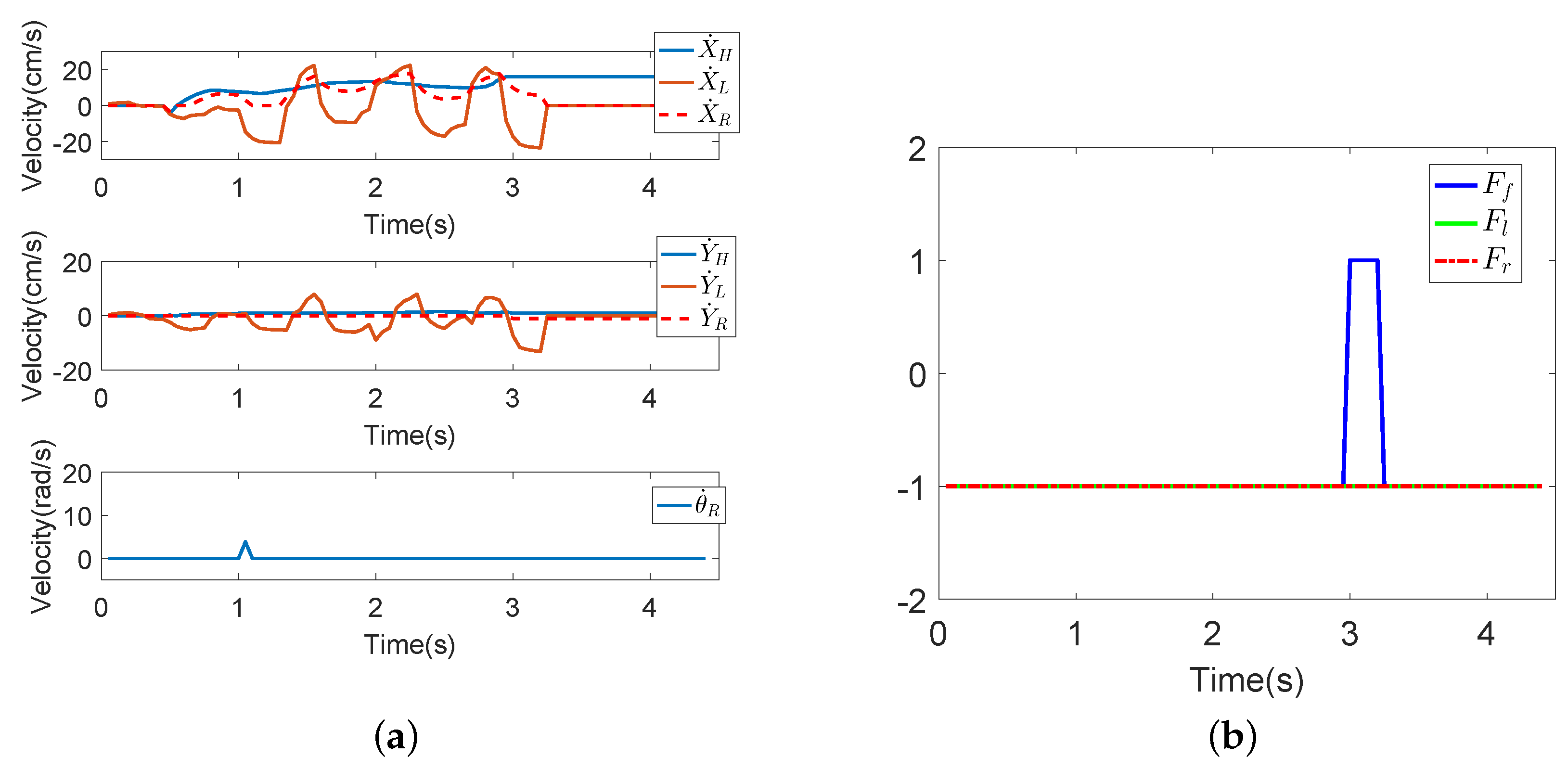

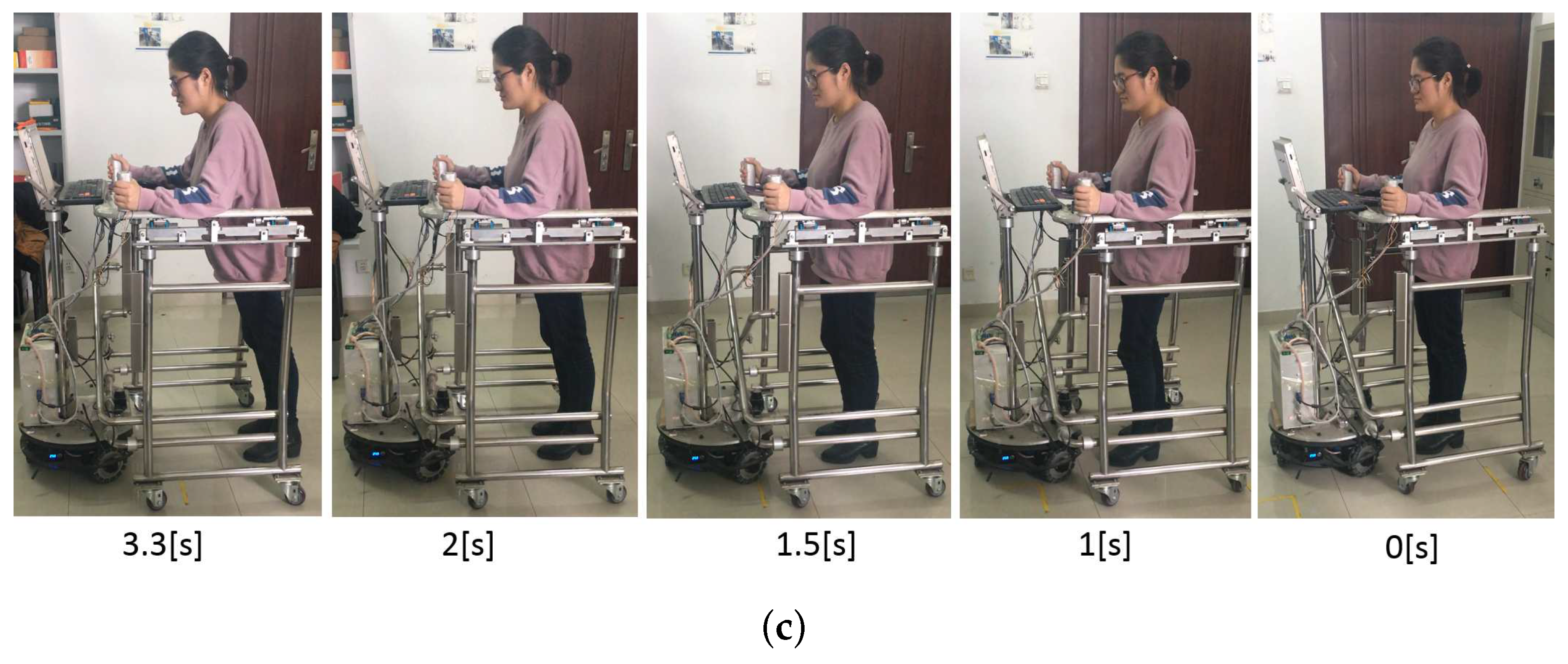

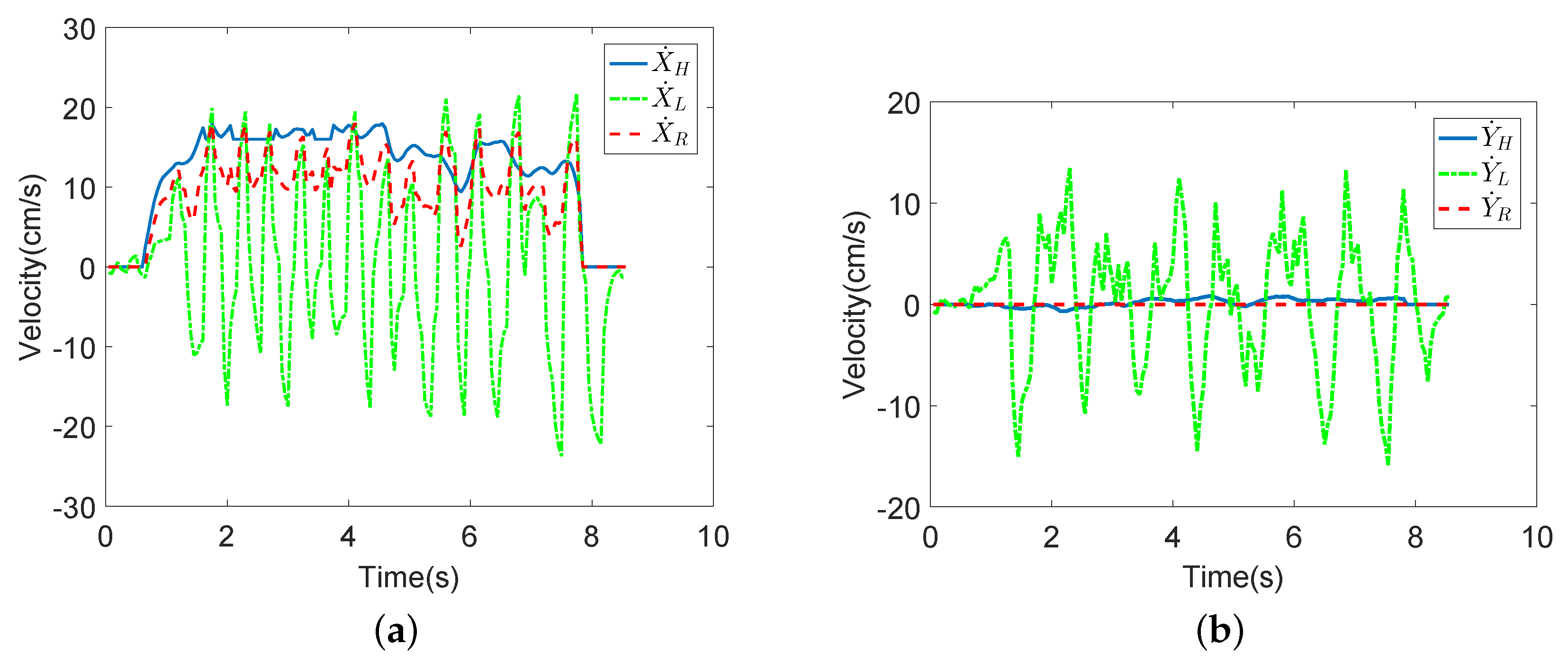

5.1. Coordinated Motion Fusion-Based Compliance Control Experiments in the Normal Walking State

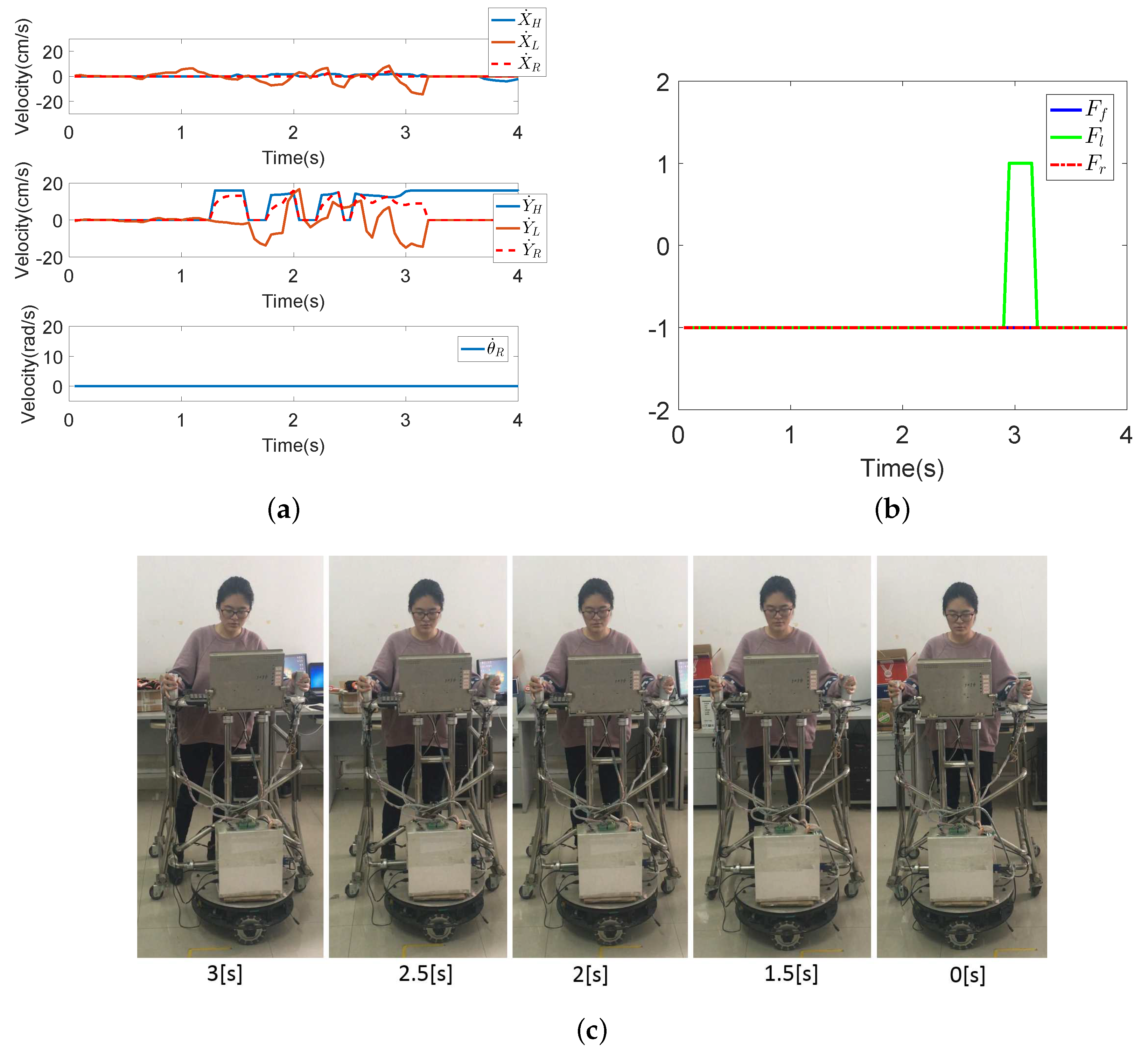

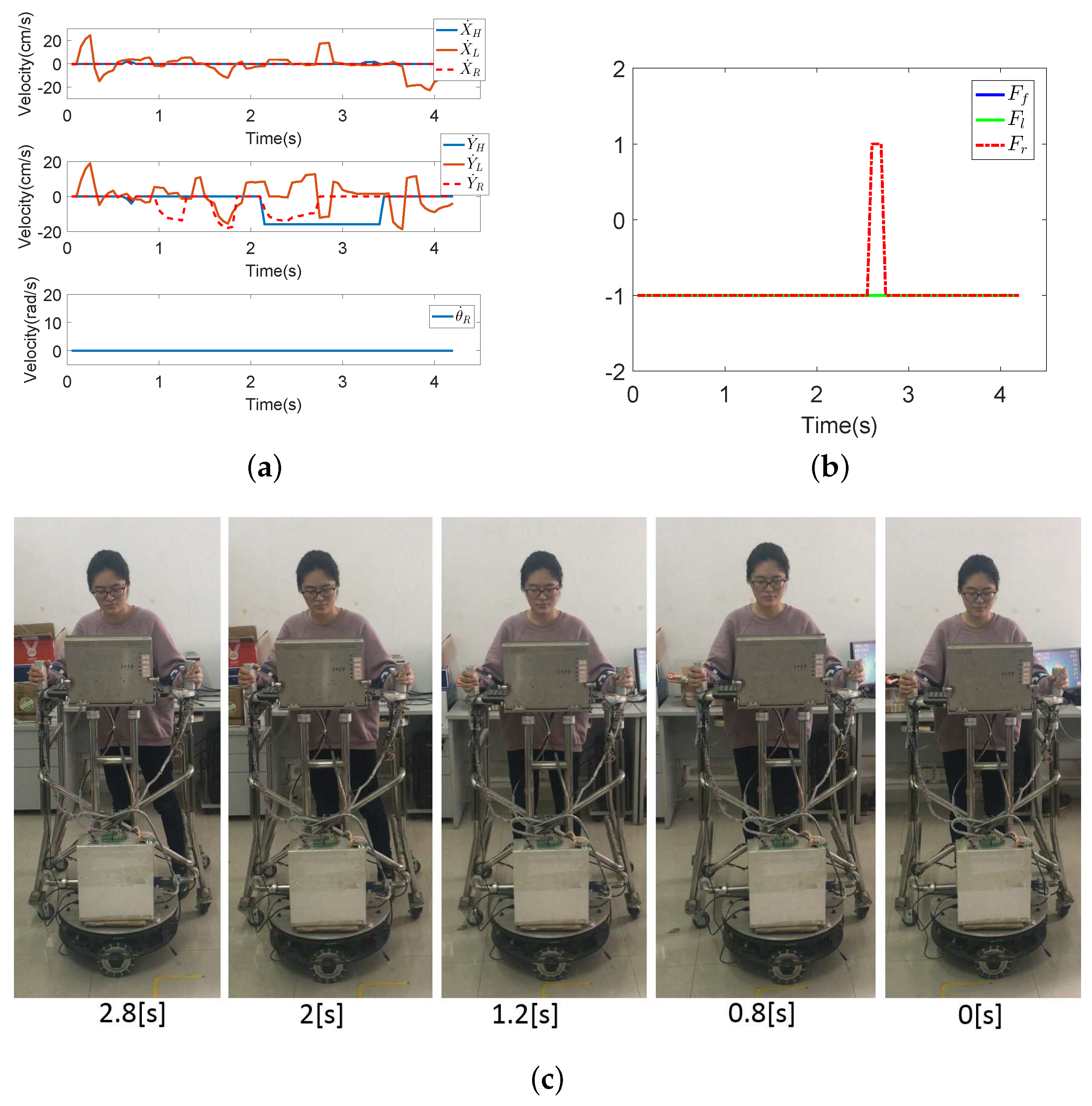

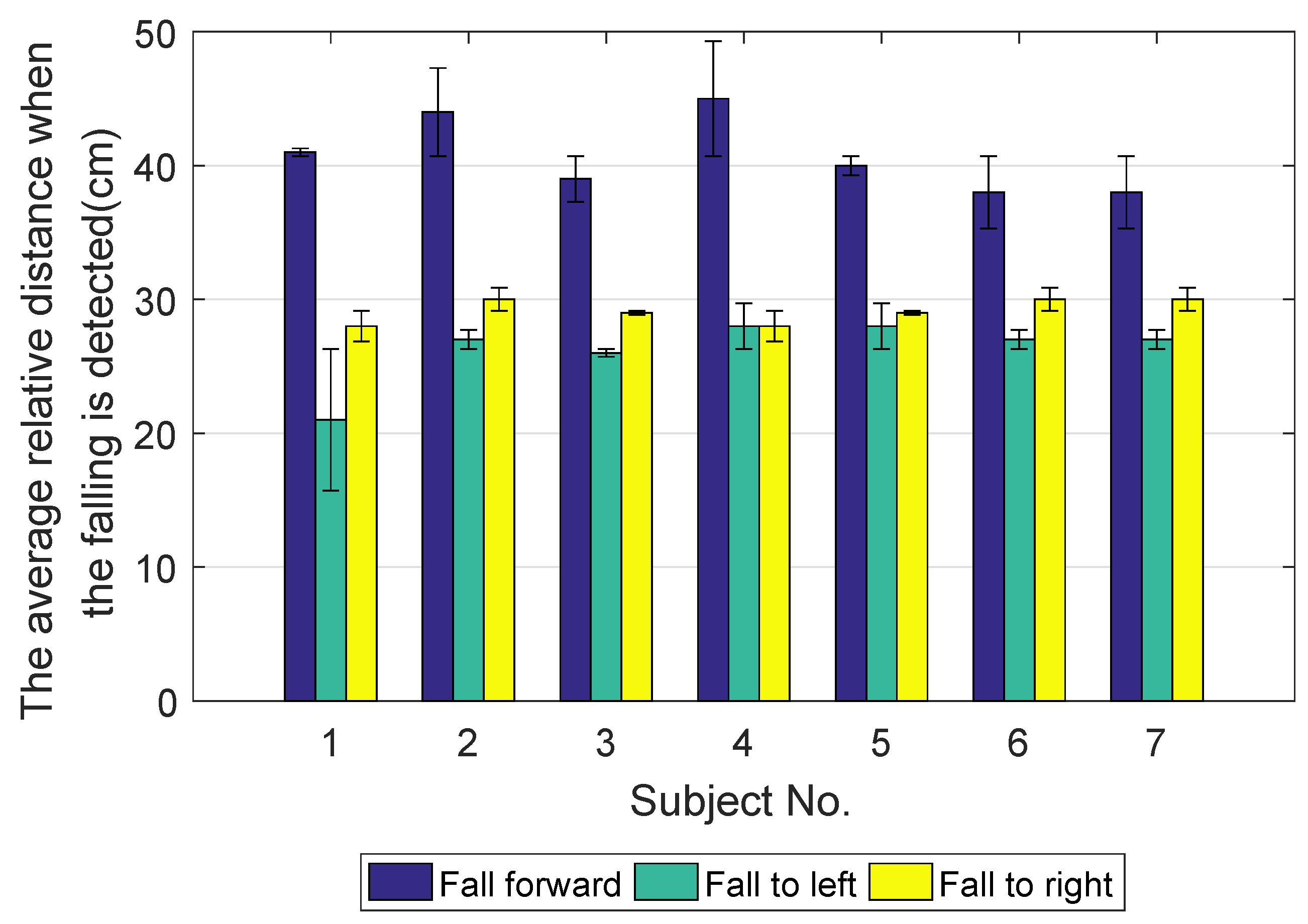

5.2. Coordinated Motion-Based Fall Detection Experiments in the Abnormal Walking State

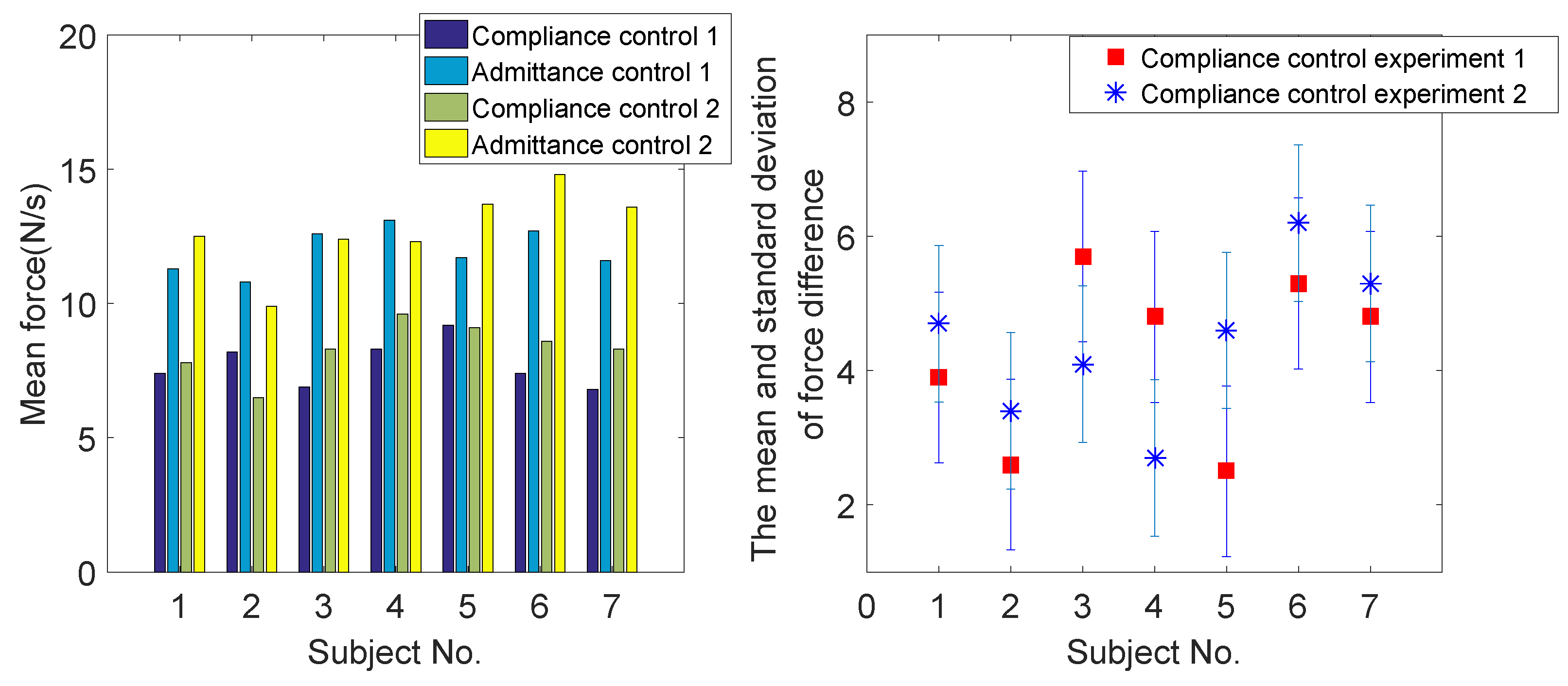

6. Comparative Experiment

6.1. Comparative Compliance Control Experiment in Normal Walking State

6.2. Comparative Fall Detection Experiments in the Abnormal Walking State

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Taghvaei, S.; Hirata, Y.; Kosuge, K. Vision-based human state estimation to control an intelligent passive walker. In Proceedings of the 2010 IEEE/SICE International Symposium on System Integration (SII), Sendai, Japan, 21–22 December 2010.

- Chugo, D.; Mastuoka, W.; Jia, S.; Takase, K. The wheel control of a robotic walker for standing and walking assistance with stability. In Proceedings of the 17th IEEE International Symposium on Robot Human Interactive Communication, Munich, Germany, 1–3 August 2008; pp. 297–302.

- Dubowsky, S.; Genot, F.; Godding, S.; Kozono, H.; Skwersky, A.; Yu, H.; Yu, L.S. PAMM-a robotic aid to the elderly for mobility assistance and monitoring: A ‘helping-hand’ for the elderly. In Proceedings of the IEEE International Conference on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000; pp. 570–576.

- Hirata, Y.; Muraki, A.; Kosuge, K. Motion control of intelligent passive-type walker for fall-prevention function based on estimation of user state. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 3498–3503.

- Parlitz, C.; Hägele, M.; Klein, P.; Dautenhahn, K. Care-O-bot 3-rationale for human-robot interaction design. In Proceedings of the 39th International Symposium on Robotics, Seoul, Korea, 15–17 October 2008; pp. 275–280.

- Wakita, K.; Huang, J.; Di, P.; Sekiyama, K.; Fukuda, T. Human Walking Intention Based Motion Control of an Omnidirectional Type Cane Robot. IEEE/ASME Trans. Mechatron. 2013, 18, 285–296.

- Rodriguez-Losada, D.; Matia, F.; Jimenez, A.; Galan, R.; Lacey, G. Implementing map based navigation in Guido, the Robotic Smart Walker. In Proceedings of the IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 3401–3406.

- Hirata, Y.; Muraki, A.; Kosuge, K. Motion control of intelligent walker based on renew of estimation parameters for user state. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006; pp. 1050–1055.

- Hirata, Y.; Komatsuda, S.; Kosuge, K. Fall prevention control of passive intelligent walker based on human model. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 1222–1228.

- Huang, J.; Di, P.; Wakita, K.; Fukuda, T.; Sekiyama, K. Study of fall detection using intelligent cane based on sensor fusion. In Proceedings of the IEEE Symposium on Micro-Nano Mechatronics and Human Science, Nagoya, Japan, 6–9 November 2008; pp. 495–500.

- Hans, M.; Graf, B.; Schraft, R. Robotic home assistant Care-o-bot: Past-present-future. In Proceedings of the 11th IEEE International Workshop Robot Human Interactive Communication, Berlin, Germany, 27 September 2002; pp. 380–385.

- Kulyukin, V. Human-robot interaction through gesture-free spoken dialogue. Auton. Robot. 2004, 16, 239–257.

- Yu, Z.B.; Lee, M. Human motion based intent recognition using a deep dynamic neural model. Robot. Auton. Syst. 2015, 71, 134–149.

- Carlson, T.; Leeb, R.; Chavarriaga, R.; Millán, J.R. The birth of the brain-controlled wheelchair. In Proceedings of the IEEE/RSJ International Conference Intelligent Robots System, Vilamoura, Portugal, 7–12 October 2012; pp. 5444–5445.

- Cifuentes, C.A.; Rodriguez, C.; Frizera, N.A.; Bastos-Filho, T.F.; Carelli, R. Multimodal Human Robot Interaction for Walker Assisted Gait. IEEE Syst. J. 2014, 10, 933–943.

- Rodriguez, R.V.; Lewis, R.P.; Mason, J.S.D. Footstep recognition for a smart home environment. Int. J. Smart Home 2008, 2, 95–110.

- Stephenson, J.L.; Lamontagne, A.; Serres, S.J.D. The coordination of upper and lower limb movements during gait in healthy and stroke individuals. Gait Posture 2009, 29, 11–16.

- Lu, C.K.; Huang, Y.C.; Lee, C.J. Adaptive guidance system design for the assistive robotic walker. Neurocomput. J. 2015, 170, 152–160.

- Valado, C.; Caldeira, E.; Bastos-Filho, T.; Frizera-Neto, A.; Carelli, R. A new controller for a smart walker based on human-robot formation. Sensors 2016, 16, 1116.

- Huang, J.; Wang, Y.; Fukuda, T. Set-Membership-Based Fault Detection and Isolation for Robotic Assembly of Electrical Connectors. IEEE Trans. Autom. Sci. Eng. 2018, 15, 160–171.

- Xu, W.; Huang, J.; Wang, Y.; Tao, C.; Cheng, L. Reinforcement learning-based shared control for walking-aid robot and its experimental verification. Adv. Robot. 2015, 29, 1463–1481.

- Meng, Q.; Tholley, I.; Chung, P. Robots learn to dance through interaction with humans. Neural Comput. Appl. 2014, 24, 117–124.

- He, X.; Kojima, R.; Hasegawa, O. Developmental word grounding through a growing neural network with a humanoid robot. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 2007, 37, 451–462.

- Choi, J.; Lee, S.; Won, M. Self-learning navigation algorithm for vision-based mobile robots using machine learning algorithms. J. Mech. Sci. Technol. 2011, 25, 247–254.

- Abdessemed, F. SVM-based control system for a robot manipulator. Int. J. Adv. Robot. Syst. 2012, 9, 247.

- Mathur, A.; Foody, G.M. Multiclass and binary SVM classification: Implications for training and classification users. IEEE Geosci. Remote Sens. Lett. 2008, 5, 241–245.

- Han, R.; Tao, C.; Huang, J.; Wang, Y.; Yan, H.; Ma, L. Design and control of an intelligent walking-aid robot. In Proceedings of the IEEE 6th International Conference on Modelling, Identification and Control, Melbourne, VIC, Australia, 3–5 December 2014; pp. 53–58.

- Li, P.; Kadirkamanathan, V. Fault detection and isolation in non-linear stochastic systems: a combined adaptive Monte Carlo filtering and likelihood ratio approach. Int. J. Control 2004, 77, 1101–1114.

- Yan, Q.Y.; Huang, J.; Xiong, C.H.; Yang, Z.; Yang, Z.H. Data-Driven Human-Robot Coordination Based Walking State Monitoring with Cane-Type Robot. IEEE Access 2018, 6, 8896–8908.

- Lefebvre, T.; Xiao, J.; Bruyninckx, H.; De Gersem, G. Active compliant motion: A survey. Adv. Robot. 2005, 19, 479–499.

- Marsland, S. Machine Learning: An Algorithmic Perspective; Chapman and Hall/CRC: Boca Raton, FL, USA, 2009; pp. 356–359.

- Huang, J.; Ri, M.H.; Wu, D.; Ri, S. Interval Type-2 Fuzzy Logic Modeling and Control of a Mobile Two-Wheeled Inverted Pendulum. IEEE Trans. Fuzzy Syst. 2018, 26, 2030–2038.

- Scholkopf, B.; Smola, A.J. Learning with Kernels; MIT Press: Cambridge, MA, USA, 2002.

- Ceseracciu, E.; Reggiani, M.; Sawacha, Z.; Sartori, M.; Spolaor, F.; Cobelli, C.; Pagello, E. SVM classification of locomotion modes using surface electromyography for applications in rehabilitation robotics. In Proceedings of the 19th IEEE International Symposium on Robot and Human Interactive Communication, Principe di Piemonte, Viareggio, Italy, 12–15 September 2010.

- Van Dorp, P.; Groen, F.C.A. Feature-based human motion parameter estimation with radar. Radar Sonar Navig. 2008, 2, 135–145.

- Huang, J.; Xu, W.X.; Mohammed, S.; Shu, Z. Posture estimation and human support using wearable sensors and walking-aid robot. Robot. Auton. Syst. 2014, 73, 24–43.

| Description | Symbol |
|---|---|
| The force values of the eight FSRs | |
| The human intent force and torque | |
| The desired walking velocity of the user | |
| The threshold values of the intention force/torque | |
| The proportionality constants of the robot velocity | |
| A switching value to restrain the relative distance between the user and the robot | |
| The threshold values of the maximum relative distance between the human and the robot | |
| The center of a circle | |
| The slopes of lines a and b | |
| The positions of the left leg and right leg | |
| Each scan point position of the LRF | |
| The desired velocities of the robot as detected by the LRF | |
| The fused HMI motion velocity | |

| Subject No. | Male/Female | Summer: Shorts/Shirt | Summer: Success Rate | Winter: Tight Trousers/Loose Trousers | Winter: Success Rate |
|---|---|---|---|---|---|
| 1 | Female | Shirt | 100% | Tight trousers | 100% |
| 2 | Female | Shirt | 100% | Loose trousers | 100% |
| 3 | Male | Shorts | 100% | Tight trousers | 100% |
| 4 | Male | Shorts | 100% | Loose trousers | 100% |
| 5 | Male | Shorts | 100% | Loose trousers | 100% |
| 6 | Female | Shirt | 100% | Tight trousers | 100% |
| 7 | Female | Shirt | 100% | Loose trousers | 100% |

| a | NB | NM | NS | Z | PS | PM | PB |
|---|---|---|---|---|---|---|---|
| NB | M | M | S | M | SB | SB | VB |
| NM | S | M | S | S | M | SB | SB |
| NS | VS | S | M | S | S | M | M |
| Z | SB | M | S | VS | S | S | M |
| PS | SB | SB | M | M | VS | S | S |
| PM | VB | SB | SB | SB | M | M | SB |
| PB | VB | VB | VB | VB | SB | SB | M |

| Subject No. | Age | Gender | Height | Type of Disability |
|---|---|---|---|---|
| 1 | 30 | Female | 160 cm | None |
| 2 | 24 | Female | 160 cm | None |
| 3 | 21 | Male | 170 cm | None |
| 4 | 24 | Male | 170 cm | Left leg |
| 5 | 24 | Male | 174 cm | Right leg |
| 6 | 22 | Female | 158 cm | Left leg |
| 7 | 23 | Female | 155 cm | Left and right leg |

| Algorithm | Fall Forward | Fall to Left | Fall to Right |
|---|---|---|---|
| The proposed fall detection algorithm | 36 cm | 26 cm | 29 cm |
| Comparative fall detection algorithm | 52 cm | 37 cm | 38 cm |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, W.; Huang, J.; Cheng, L. A Novel Coordinated Motion Fusion-Based Walking-Aid Robot System. Sensors 2018, 18, 2761. https://doi.org/10.3390/s18092761
