Deep Learning-Based Enhanced Presentation Attack Detection for Iris Recognition by Combining Features from Local and Global Regions Based on NIR Camera Sensor

Abstract

1. Introduction

2. Related Work

3. Contributions

- First, to the best of our knowledge, this is the first study to use image features extracted from both local and global regions of an iris image for an iPAD system. Previous studies used features from only local regions or only the global (entire) iris image. To overcome this limitation, we extract features from both kinds of region with a deep CNN, which strengthens the resulting feature representation.

- Second, we adaptively defined the local regions based on the detected boundaries of the pupil and iris, so that the features extracted from these regions are robust to changes in pupil and iris size caused by illumination variation and by changes in the distance between the camera and the user's eyes.

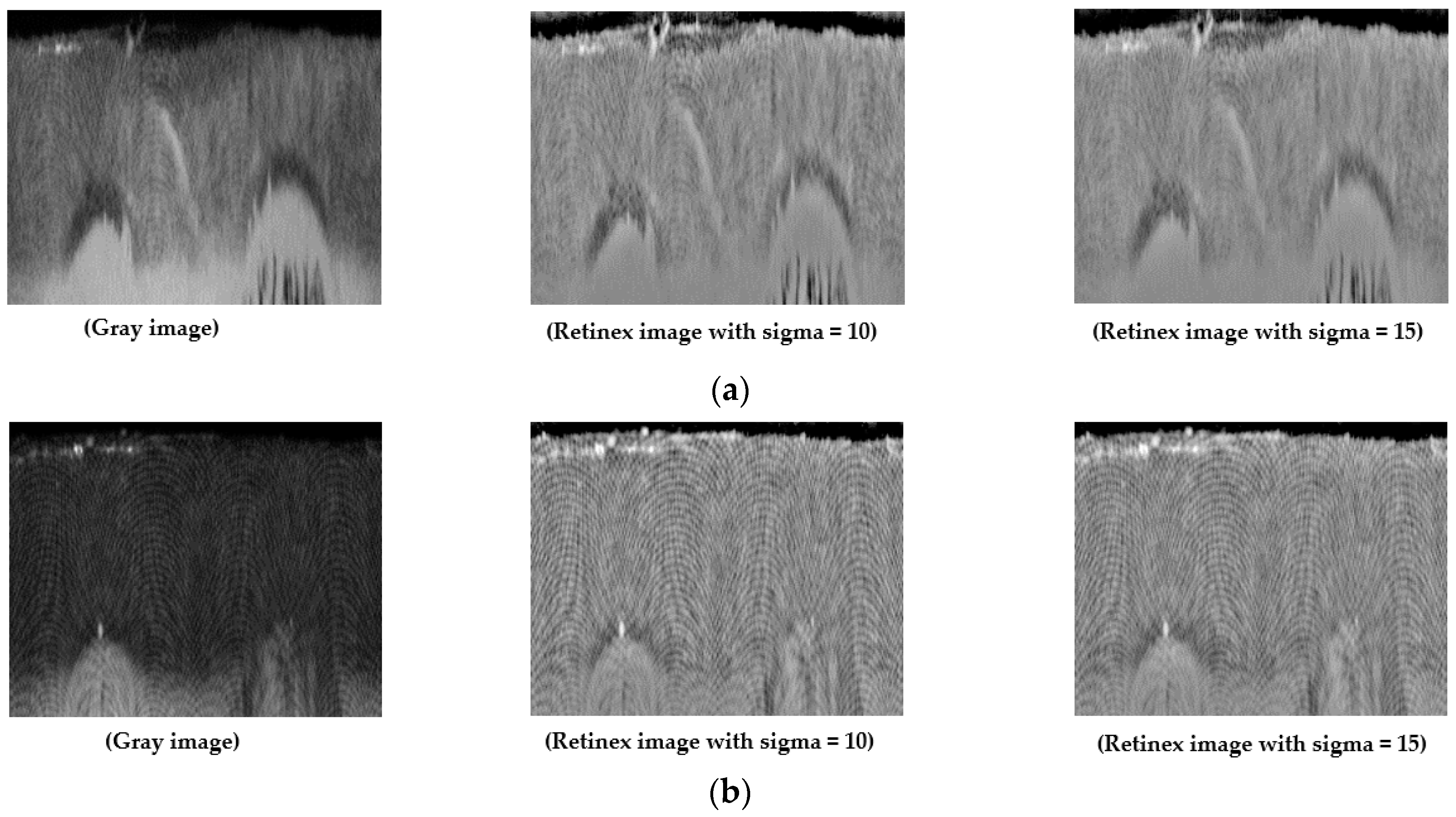

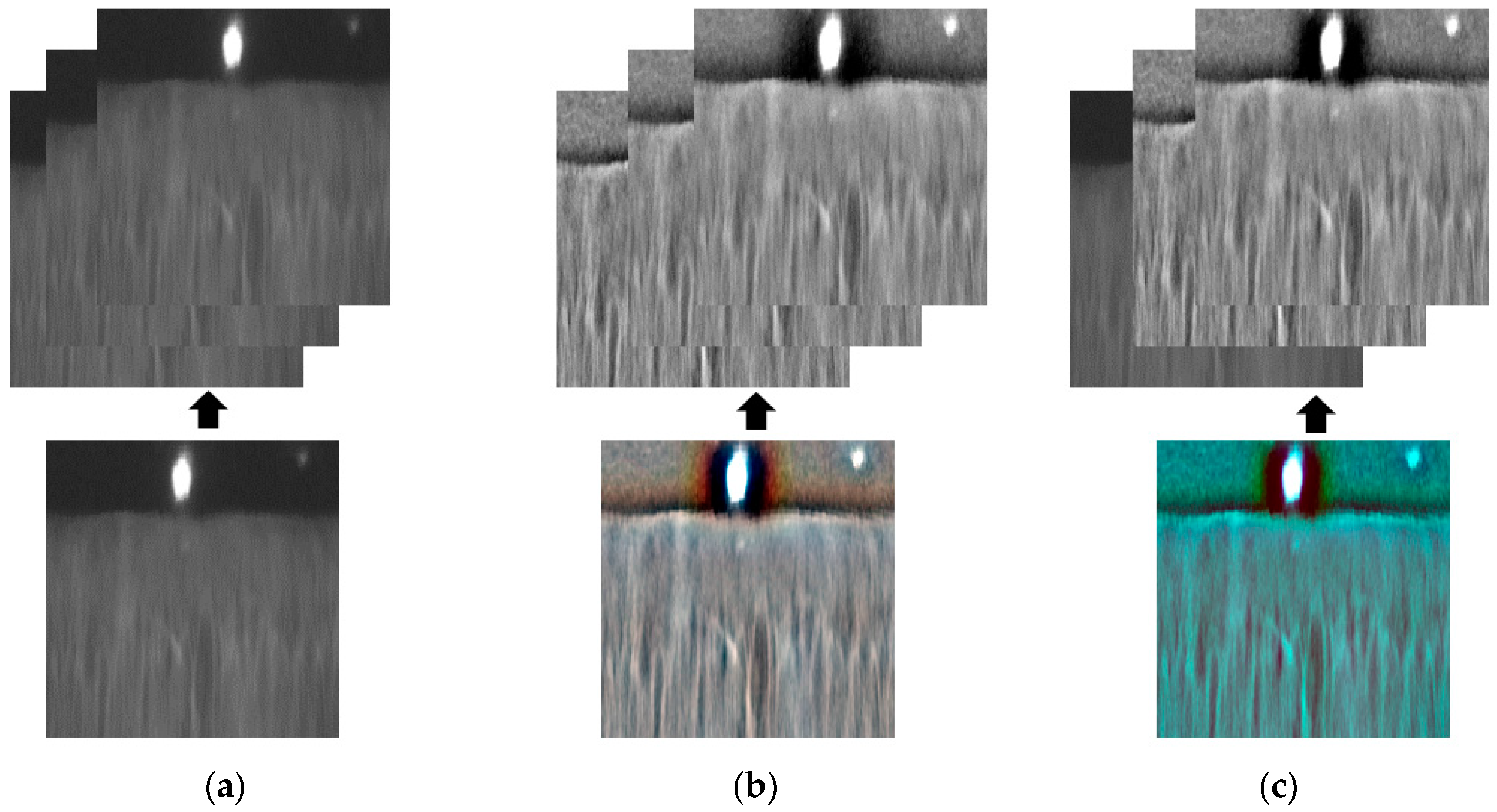

- Third, instead of using the gray image directly as in previous iPAD studies, we used three kinds of input images for each local and global region: a three-channel gray-level image, a three-channel Retinex image, and a three-channel image that fuses the gray and Retinex images. Through extensive experiments, we demonstrate the effectiveness of the fusion images for the detection task.

- Fourth, we trained deep CNNs to extract deep image features from each local and global iris region image. We enhanced the detection performance by combining the features extracted from the local and global regions using two SVM-based combination rules: feature-level fusion and score-level fusion. Finally, we made our trained CNN and SVM models, together with all the algorithms, available to other researchers through [36].
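The two combination rules named in the fourth contribution can be illustrated with a minimal sketch. It only shows how the classifier inputs might be assembled. The region names, the helper functions, and the toy numbers are hypothetical, and the SVM training itself is left out, so this is not the authors' implementation.

```python
from typing import Dict, List, Sequence

# Hypothetical region names: two local regions plus the global (entire) region.
REGIONS = ("inner", "outer", "entire")


def feature_level_fusion(features: Dict[str, Sequence[float]]) -> List[float]:
    """Concatenate the per-region feature vectors into one fused vector.

    The fused vector would then be passed to a single classifier.
    """
    fused: List[float] = []
    for region in REGIONS:
        fused.extend(features[region])
    return fused


def score_level_fusion(scores: Dict[str, float]) -> List[float]:
    """Collect one detection score per region into a short score vector.

    Each score is assumed to come from a region-specific classifier; the
    score vector would then be passed to a second-stage classifier.
    """
    return [scores[region] for region in REGIONS]


# Toy values for illustration only (not data from the paper).
features = {"inner": [0.1, 0.2], "outer": [0.3], "entire": [0.4, 0.5, 0.6]}
scores = {"inner": -0.7, "outer": 0.2, "entire": 1.3}

print(feature_level_fusion(features))
print(score_level_fusion(scores))
```

Under these assumptions, feature-level fusion gives the classifier every feature from every region at once, whereas score-level fusion reduces each region to a single number before the final decision.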

4. Proposed Method

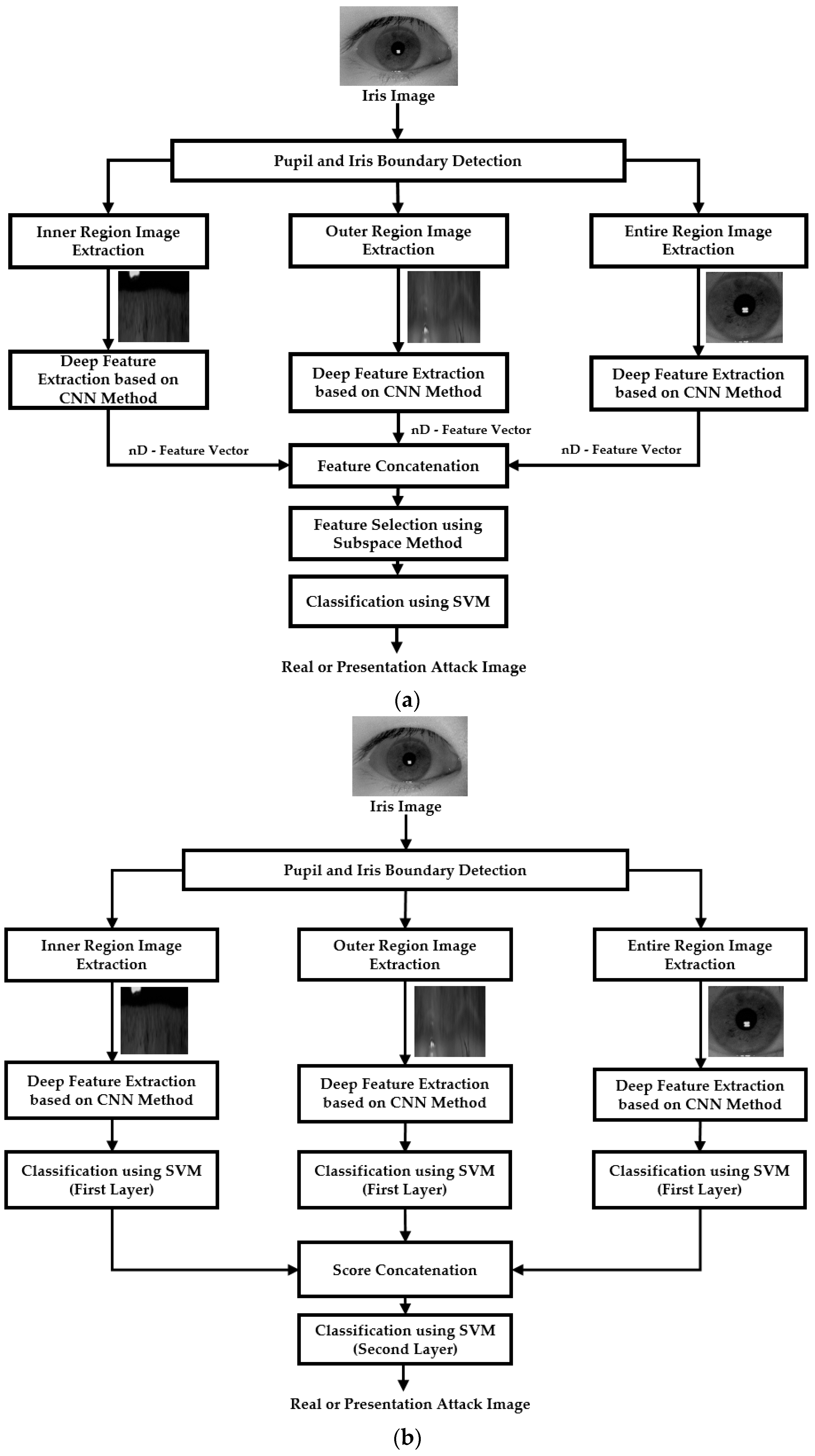

4.1. Overview of Proposed Method

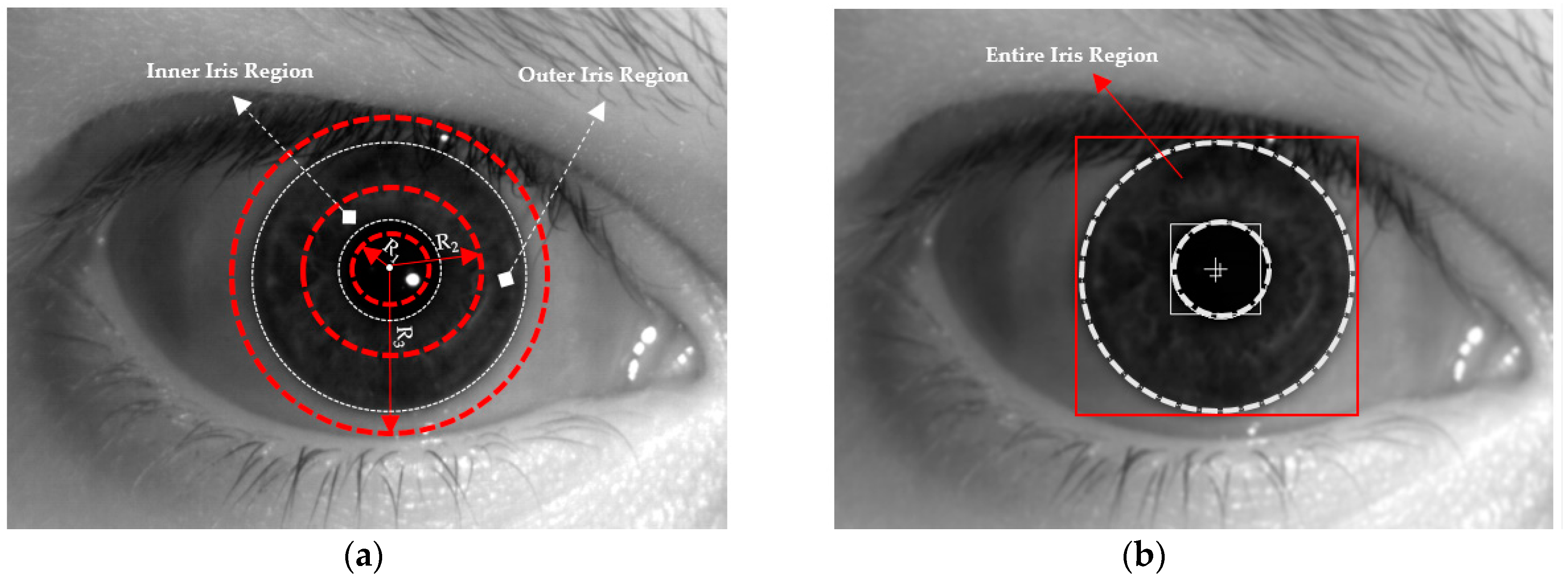

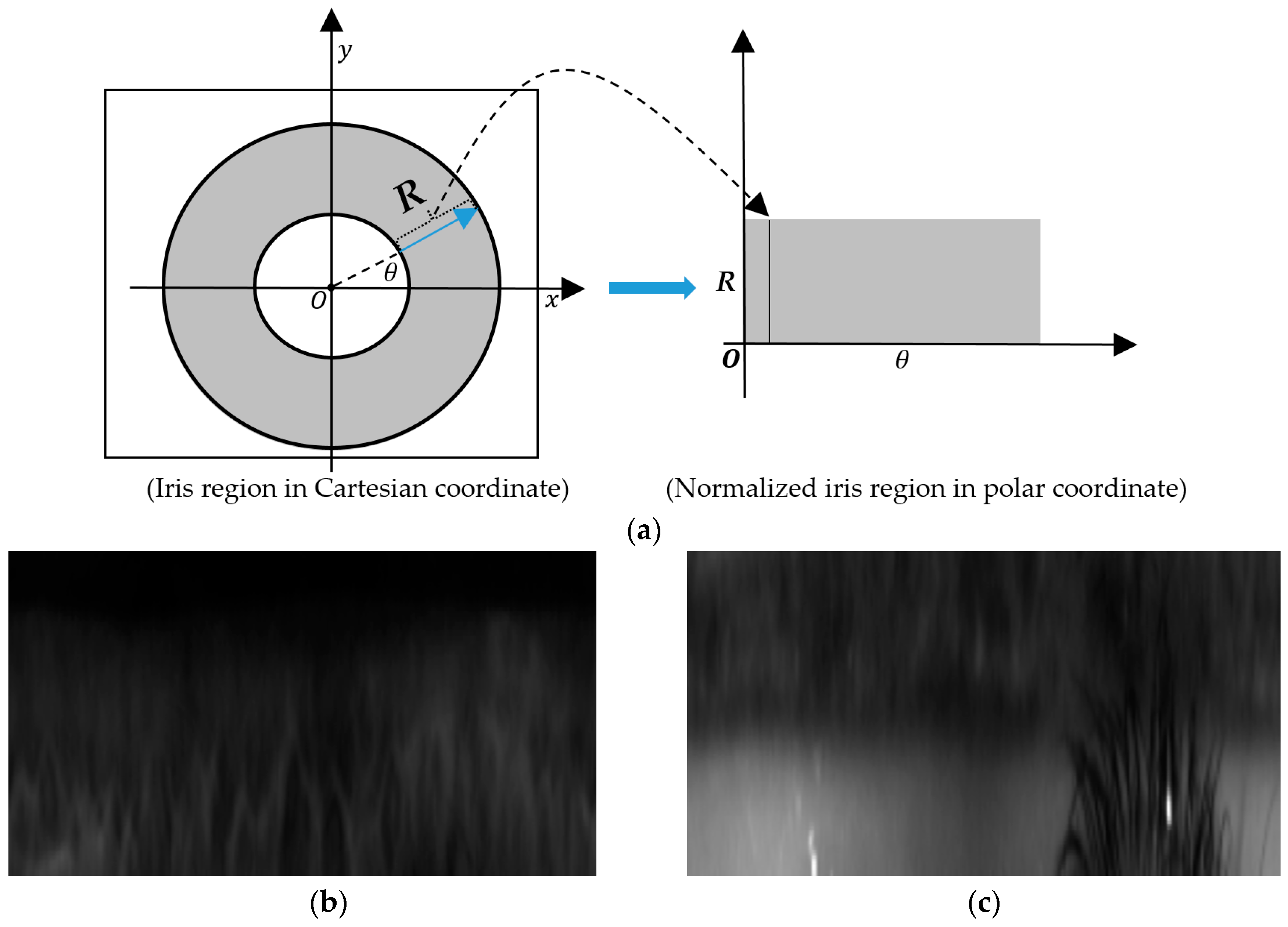

4.2. Iris Detection and Adaptive Definition of Inner and Outer Iris Regions

4.3. Retinex Filtering for Illumination Compensation

4.4. Feature Extraction by CNN Method

4.5. Fusion of Detection Results by Global and Local Regions

5. Experimental Results and Discussions

5.1. Experimental Datasets and Criteria for Detection Performance Measurement

5.2. Performance Evaluation of Individual Attack Method

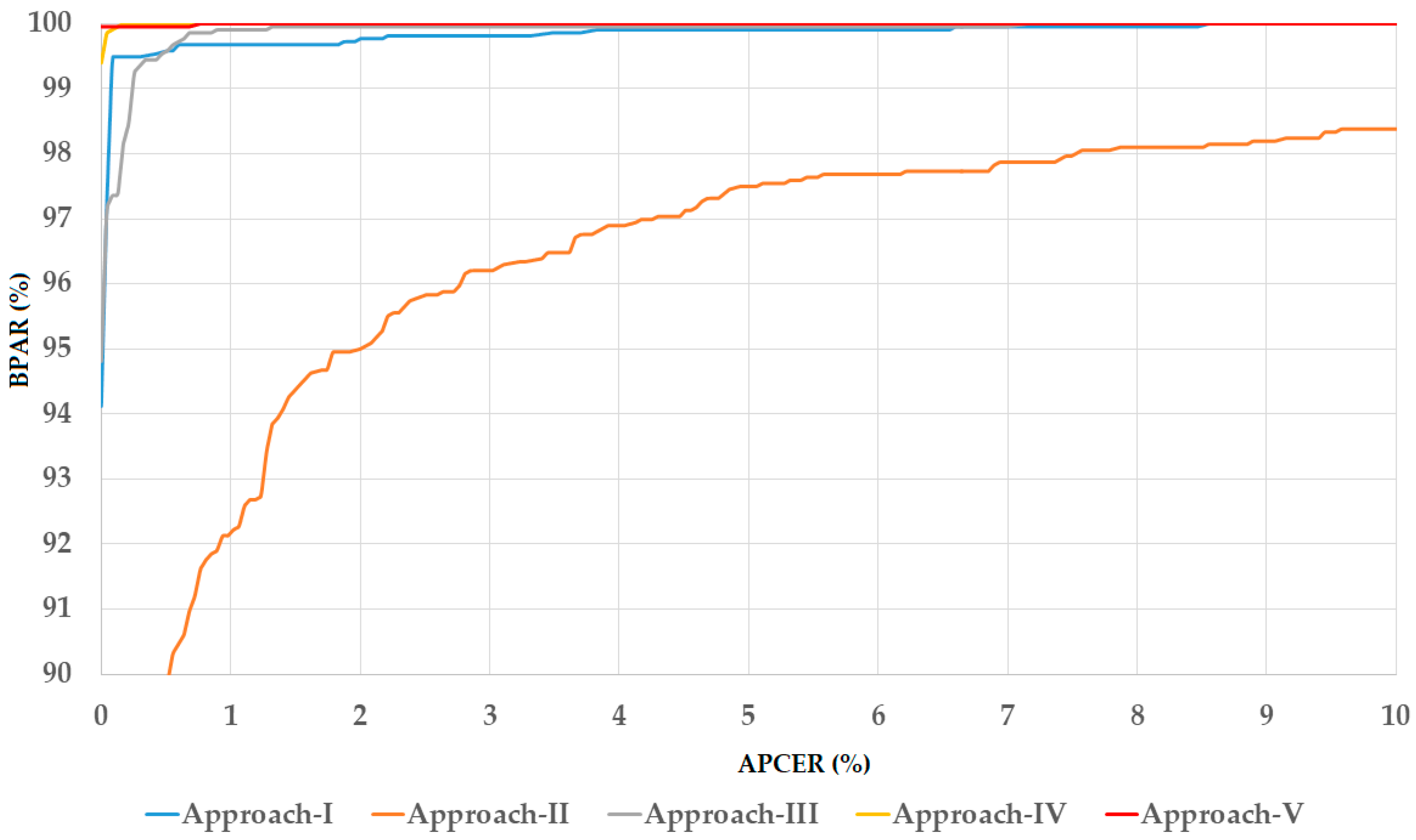

5.2.1. Detection Performance of Attack Method Based on Iris Image Printed on Paper

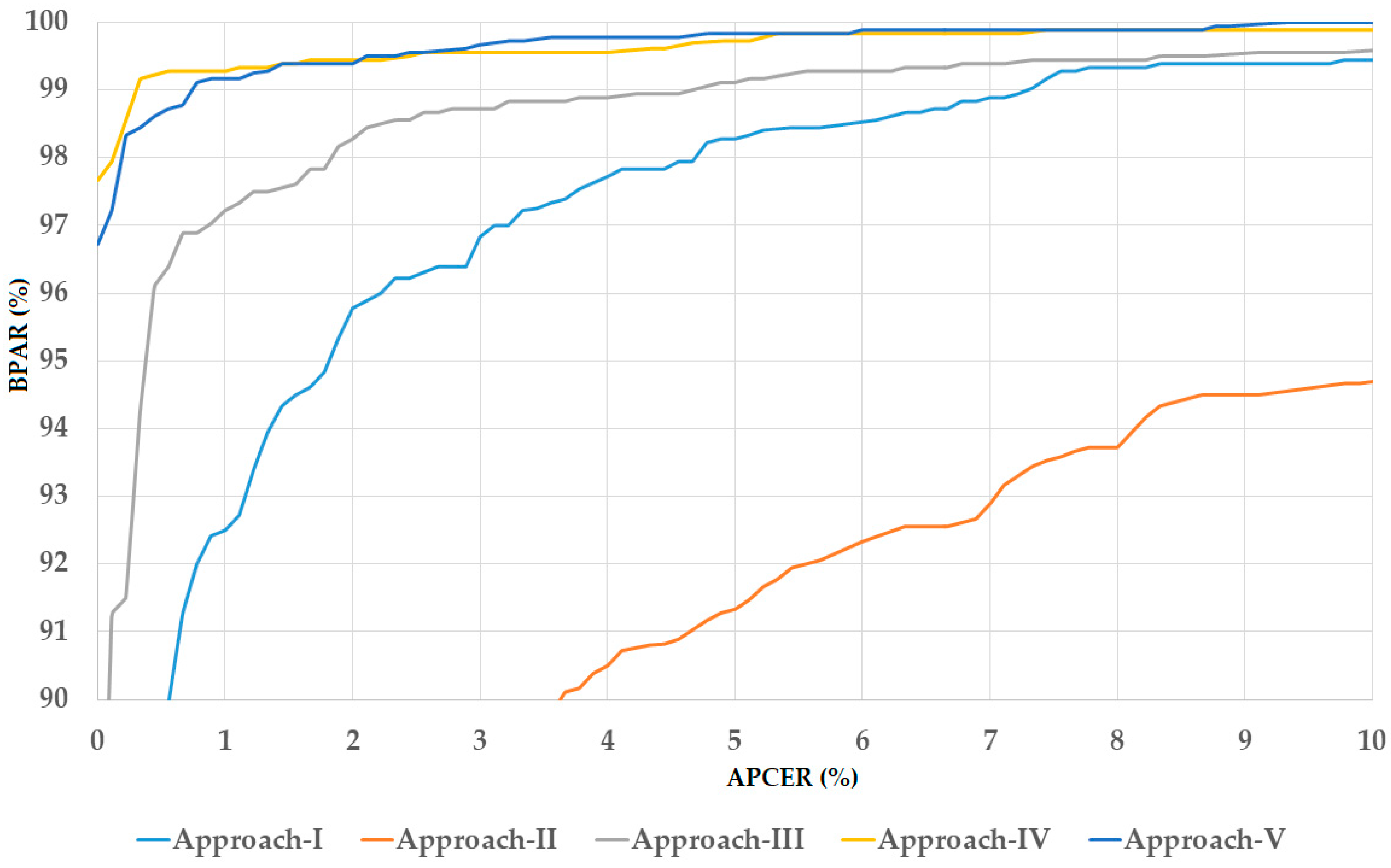

5.2.2. Detection Performance of Attack Method Based on Use of Contact Lens Using the LivDet-Iris-2017 Division Method

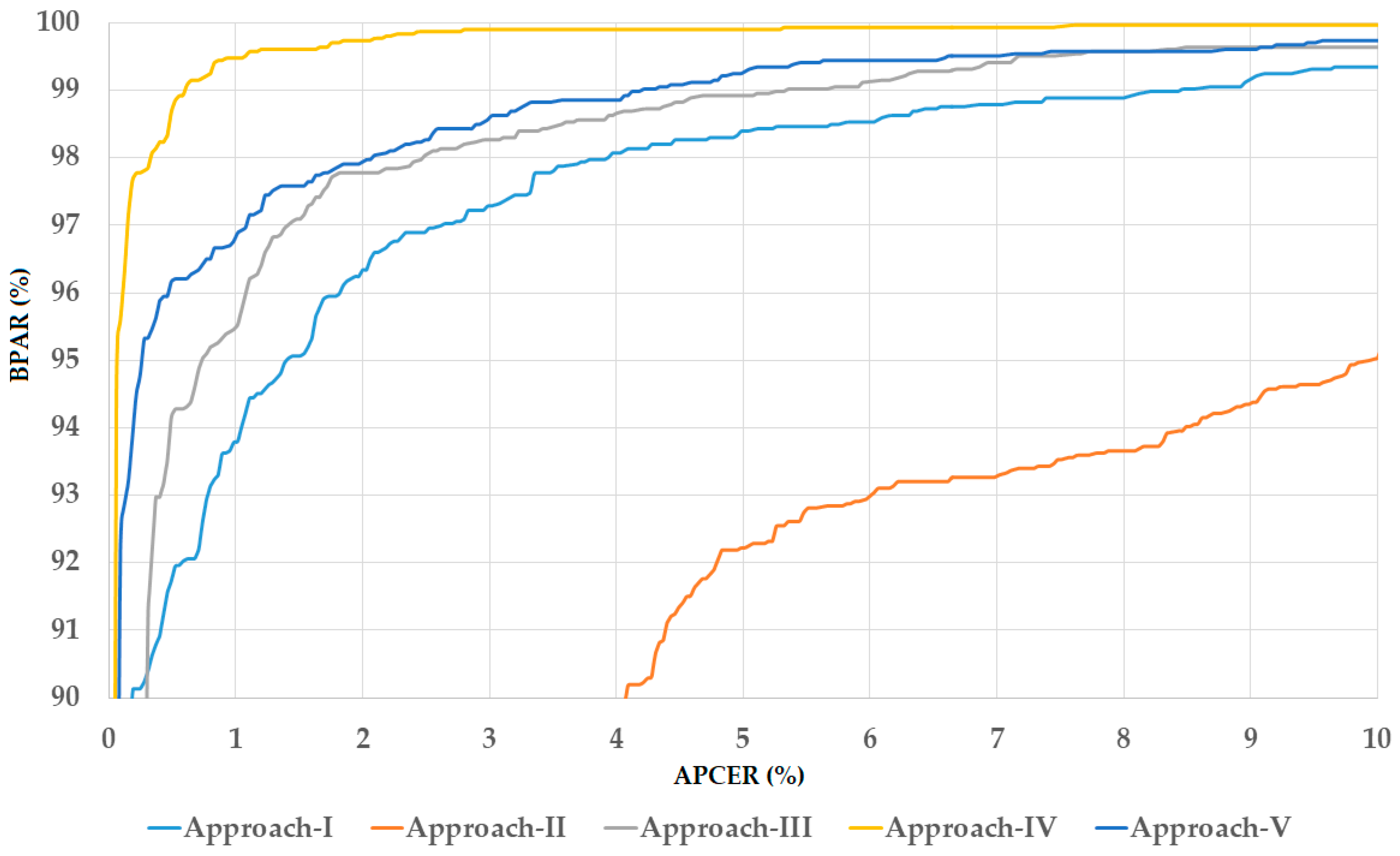

5.2.3. Detection Performance of Attack Method Based on Use of Contact Lens Using Our Division Method

5.3. Performance Evaluation of Combined Datasets for Considering General Attack Method

5.4. Comparative Experiments with Previous Methods and Discussions

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Nguyen, D.T.; Yoon, H.S.; Pham, D.T.; Park, K.R. Spoof detection for finger-vein recognition system using NIR camera. Sensors 2017, 17, 2261.

- Jain, A.K.; Ross, A.; Prabhakar, S. An introduction to biometric recognition. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 4–20.

- Shin, K.Y.; Park, Y.H.; Nguyen, D.T.; Park, K.R. Finger-vein image enhancement using a fuzzy-based fusion method with Gabor and Retinex filtering. Sensors 2014, 14, 3095–3129.

- Daugman, J. How iris recognition works. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 21–30.

- Givens, G.H.; Beveridge, J.R.; Phillips, P.J.; Draper, B.; Lui, Y.M.; Bolme, D. Introduction to face recognition and evaluation of algorithm performance. Comput. Stat. Data. Anal. 2013, 67, 236–247.

- Gu, J.; Zhou, J.; Yang, C. Fingerprint recognition by combining global structure and local cues. IEEE Trans. Image Process. 2006, 15, 1952–1964.

- De Souza, G.B.; Da Silva Santos, D.F.; Pires, R.G.; Marana, A.N.; Papa, J.P. Deep texture features for robust face spoofing detection. IEEE Trans. Circuits Syst. II Express Briefs 2017, 64, 1397–1401.

- Kim, S.; Ban, Y.; Lee, S. Face liveness detection using defocus. Sensors 2015, 15, 1537–1563.

- Menotti, D.; Chiachia, G.; Pinto, A.; Schwartz, W.R.; Pedrini, H.; Falcao, A.X.; Rocha, A. Deep representation for iris, face and fingerprint spoofing detection. IEEE Trans. Inf. Forensic Secur. 2015, 10, 864–879.

- Galbally, J.; Marcel, S.; Fierrez, J. Image quality assessment for fake biometric detection: Application to iris, fingerprint and face recognition. IEEE Trans. Image Process. 2014, 23, 710–724.

- Raja, K.B.; Raghavendra, R.; Vemuri, V.K.; Busch, C. Smartphone based visible iris recognition using deep sparse filtering. Pattern Recognit. Lett. 2015, 57, 33–42.

- Nguyen, K.; Fookes, C.; Jillela, R.; Sridharan, S.; Ross, A. Long range iris recognition: A survey. Pattern Recognit. 2017, 72, 123–143.

- Li, P.; Ma, H. Iris recognition in non-ideal imaging conditions. Pattern Recognit. Lett. 2012, 33, 1012–1018.

- Shin, K.Y.; Nam, G.P.; Jeong, D.S.; Cho, D.H.; Kang, B.J.; Park, K.R.; Kim, J. New iris recognition method for noisy iris images. Pattern Recognit. Lett. 2012, 33, 991–999.

- Nguyen, K.; Fookes, C.; Ross, A.; Sridharan, S. Iris recognition with off-the-shelf CNN features: A deep learning perspective. IEEE Access 2018, 6, 18848–18855.

- Lee, M.B.; Hong, H.G.; Park, K.R. Noisy ocular recognition based on three convolutional neural networks. Sensors 2017, 17, 2933.

- Liu, N.; Zhang, M.; Li, H.; Sun, Z.; Tan, T. Deepiris: Learning pairwise filter bank for heterogeneous iris verification. Pattern Recognit. Lett. 2016, 82, 154–161.

- Gangwar, A.; Joshi, A. DeepIrisNet: Deep iris representation with application in iris recognition and cross-sensor iris recognition. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 2301–2305.

- Al-Waisy, A.S.; Qahwaji, R.; Ipson, S.; Al-Fahdiwi, S.; Nagem, T.A.M. A multi-biometric iris recognition system based on a deep learning approach. Pattern Anal. Appl. 2018, 21, 783–802.

- Arsalan, M.; Hong, H.G.; Naqvi, R.A.; Lee, M.B.; Kim, M.C.; Kim, D.S.; Kim, C.S.; Park, K.R. Deep learning-based iris segmentation for iris recognition in visible light environment. Symmetry 2017, 9, 263.

- Arsalan, M.; Naqvi, R.A.; Kim, D.S.; Nguyen, P.H.; Owais, M.; Park, K.R. IrisDenseNet: Robust iris segmentation using densely connected fully convolutional networks in the images by visible light and near-infrared light camera sensors. Sensors 2018, 18, 1501.

- Erdogmus, N.; Marcel, S. Spoofing face recognition with 3D masks. IEEE Trans. Inf. Forensic Secur. 2014, 9, 1084–1097.

- Nguyen, D.T.; Pham, T.D.; Baek, N.R.; Park, K.R. Combining deep and handcrafted image features for presentation attack detection in face recognition using visible-light camera sensors. Sensors 2018, 18, 699.

- Nguyen, D.T.; Park, Y.H.; Shin, K.Y.; Kwon, S.Y.; Lee, H.C.; Park, K.R. Fake finger-vein image detection based on Fourier and wavelet transforms. Digit. Signal Process. 2013, 23, 1401–1413.

- Nguyen, D.T.; Baek, N.R.; Pham, D.T.; Park, K.R. Presentation attack detection for iris recognition system using NIR camera sensor. Sensors 2018, 18, 1315.

- Gragnaniello, D.; Poggi, G.; Sansone, C.; Verdoliva, L. An investigation of local descriptors for biometric spoofing detection. IEEE Trans. Inf. Forensic Secur. 2015, 10, 849–863.

- Doyle, J.S.; Bowyer, K.W. Robust detection of textured contact lens in iris recognition using BSIF. IEEE Access 2015, 3, 1672–1683.

- Hu, Y.; Sirlantzis, K.; Howells, G. Iris liveness detection using regional features. Pattern Recognit. Lett. 2016, 82, 242–250.

- Komogortsev, O.V.; Karpov, A.; Holland, C.D. Attack of mechanical replicas: Liveness detection with eye movement. IEEE Trans. Inf. Forensic Secur. 2015, 10, 716–725.

- Raja, K.B.; Raghavendra, R.; Busch, C. Color adaptive quantized pattern for presentation attack detection in ocular biometric systems. In Proceedings of the ACM International Conference on Security of Information and Networks, Newark, NJ, USA, 20–22 July 2016; pp. 9–15.

- Silva, P.; Luz, E.; Baeta, R.; Pedrini, H.; Falcao, A.X.; Menotti, D. An approach to iris contact lens detection based on deep image representation. In Proceedings of the IEEE Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 157–164.

- Yambay, D.; Doyle, J.S.; Bowyer, K.W.; Czajka, A.; Schuckers, S. LivDet-iris 2013 - Iris liveness detection competition 2013. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–8.

- Yambay, D.; Walczak, B.; Schuckers, S.; Czajka, A. LivDet-iris 2015 - Iris liveness detection. In Proceedings of the IEEE International Conference on Identity, Security and Behavior Analysis, New Delhi, India, 22–24 February 2017; pp. 1–6.

- Yambay, D.; Becker, B.; Kohli, N.; Yadav, D.; Czajka, A.; Bowyer, K.W.; Schuckers, S.; Singh, R.; Vatsa, M.; Noore, A.; et al. LivDet iris 2017 - Iris liveness detection competition 2017. In Proceedings of the International Conference on Biometrics, Denver, CO, USA, 1–4 October 2017; pp. 733–741.

- He, L.; Li, H.; Liu, F.; Liu, N.; Sun, Z.; He, Z. Multi-patch convolution neural network for iris liveness detection. In Proceedings of the IEEE 8th International Conference on Biometrics Theory, Applications and Systems, Buffalo, NY, USA, 6–9 September 2016; pp. 1–7.

- Dongguk Iris Spoof Detection CNN Model Version 2 (DFSD-CNN-2) with Algorithm. Available online: http://dm.dgu.edu/link.html (accessed on 9 July 2018).

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; Available online: https://arxiv.org/abs/1409.1556 (accessed on 9 June 2018).

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Pham, D.T.; Nguyen, D.T.; Kim, W.; Park, S.H.; Park, K.R. Deep learning-based banknote fitness classification using the reflection images by a visible-light one-dimensional line image sensor. Sensors 2018, 18, 472.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2016, arXiv:1506.01497.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. arXiv 2016, arXiv:1506.02640.

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. Deepface: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 34–42.

- Li, J.; Qiu, T.; Wen, C.; Xie, K.; Wen, F.Q. Robust face recognition using the deep C2D-CNN model based on decision-level fusion. Sensors 2018, 18, 2080.

- Cheng, C.; Ross, A. A multi-task convolutional neural network for joint iris detection and presentation attack detection. In Proceedings of the IEEE Winter Applications of Computer Vision Workshops, Lake Tahoe, NV, USA, 15 March 2018; pp. 44–51.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Nguyen, D.T.; Kim, K.W.; Hong, H.G.; Koo, J.H.; Kim, M.C.; Park, K.R. Gender recognition from human-body images using visible-light and thermal camera videos based on a convolutional neural network for image feature extraction. Sensors 2017, 17, 637.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 27.

- LIBSVM Tools for SVM Classification. Available online: https://www.csie.ntu.edu.tw/~cjlin/libsvm/ (accessed on 10 July 2018).

- Park, Y.H.; Kwon, S.Y.; Pham, D.T.; Park, K.R.; Jeong, D.S.; Yoon, S. A high performance banknote recognition system based on a one-dimensional visible light line sensor. Sensors 2015, 15, 14093–14115.

- Presentation Attack Video Iris Dataset (PAVID). Available online: http://nislab.no/biometrics_lab/pavid_db (accessed on 10 July 2018).

- International Organization for Standardization. ISO/IEC JTC1 SC37 Biometrics. In ISO/IEC WD 30107–3: 2014 Information Technology - Presentation Attack Detection - Part 3: Testing and Reporting and Classification of Attacks; International Organization for Standardization: Geneva, Switzerland, 2014.

- Raghavendra, R.; Busch, C. Presentation attack detection algorithms for finger vein biometrics: A comprehensive study. In Proceedings of the 11th International Conference on Signal-Image Technology and Internet-based Systems, Bangkok, Thailand, 23–27 November 2015; pp. 628–632.

- NVIDIA TitanX. Available online: https://www.nvidia.com/en-us/titan/titan-xp/ (accessed on 30 July 2018).

- Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- Nanni, L.; Ghidoni, S.; Brahnam, S. Handcrafted vs. non-handcrafted features for computer vision classification. Pattern Recognit. 2017, 71, 158–172.

- Cho, D.H.; Park, K.R.; Rhee, D.W.; Kim, Y.; Yang, J. Pupil and iris localization for iris recognition in mobile phones. In Proceedings of the 7th ACIS International Conference on Software Engineering, Artificial Intelligence, Networking, and Parallel/Distributed Computing, Las Vegas, NV, USA, 19–20 June 2006; pp. 197–201.

| Category | Method | Strength | Weakness |
|---|---|---|---|
| Using image features extracted from entire (global) iris region image | Uses handcrafted image features extracted from entire iris region image [26,27,28,29,30] | | Detection accuracy is fair because of predesigned image feature extraction method |
| | Uses learning-based method, i.e., CNN method [9,31,34] | Extracts efficient image features by a learning-based method using a large amount of training samples | |
| | Uses combination of deep and handcrafted image features [25] | Enhances the detection performance by using both handcrafted and deep image features | |
| Using image features extracted from multiple local patches of normalized iris image | | | |
| Combining features extracted from both local and global iris regions for detection task (Proposed method) | | | Processing time is longer than when using only image features extracted from global iris region |

| Operation Layer (Group) | Operation Layer (Type) | Number of Filters | Size of Each Filter | Stride Value | Padding Value | Size of Output Image |
|---|---|---|---|---|---|---|
| Input image | - | - | - | - | - | 224 × 224 × 3 |
| Convolution Layer (two times) | Convolution | 64 | 3 × 3 × 3 | 1 × 1 | 1 × 1 | 224 × 224 × 64 |
| | ReLU | - | - | - | - | 224 × 224 × 64 |
| Pooling Layer | Max pooling | 1 | 2 × 2 | 2 × 2 | 0 | 112 × 112 × 64 |
| Convolution Layer (two times) | Convolution | 128 | 3 × 3 × 64 | 1 × 1 | 1 × 1 | 112 × 112 × 128 |
| | ReLU | - | - | - | - | 112 × 112 × 128 |
| Pooling Layer | Max pooling | 1 | 2 × 2 | 2 × 2 | 0 | 56 × 56 × 128 |
| Convolution Layer (four times) | Convolution | 256 | 3 × 3 × 128 | 1 × 1 | 1 × 1 | 56 × 56 × 256 |
| | ReLU | - | - | - | - | 56 × 56 × 256 |
| Pooling Layer | Max pooling | 1 | 2 × 2 | 2 × 2 | 0 | 28 × 28 × 256 |
| Convolution Layer (four times) | Convolution | 512 | 3 × 3 × 256 | 1 × 1 | 1 × 1 | 28 × 28 × 512 |
| | ReLU | - | - | - | - | 28 × 28 × 512 |
| Pooling Layer | Max pooling | 1 | 2 × 2 | 2 × 2 | 0 | 14 × 14 × 512 |
| Convolution Layer (four times) | Convolution | 512 | 3 × 3 × 512 | 1 × 1 | 1 × 1 | 14 × 14 × 512 |
| | ReLU | - | - | - | - | 14 × 14 × 512 |
| Pooling Layer | Max pooling | 1 | 2 × 2 | 2 × 2 | 0 | 7 × 7 × 512 |
| Inner Product Layer | Fully connected | - | - | - | - | 4096 |
| | ReLU | - | - | - | - | 4096 |
| Dropout Layer | Dropout (dropout = 0.5) | - | - | - | - | 4096 |
| Inner Product Layer | Fully connected | - | - | - | - | 4096 |
| | ReLU | - | - | - | - | 4096 |
| Dropout Layer | Dropout (dropout = 0.5) | - | - | - | - | 4096 |
| Inner Product Layer | Fully connected | - | - | - | - | 2 |
| Softmax Layer | Softmax | - | - | - | - | 2 |
| Classification Layer | Classification | - | - | - | - | 2 (Real/Presentation Attack) |
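The output sizes listed in the architecture table can be checked with the standard relation for convolution and pooling layers, output = floor((input + 2 × padding − kernel) / stride) + 1. The following is a minimal sketch of that check. The function names and the `BLOCKS` list are illustrative and simply restate the filter counts, kernel sizes, strides, and padding values from the table.

```python
from typing import List, Tuple


def conv_output_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (number of 3 x 3 convolutions, number of filters) per block, as in the table.
BLOCKS = [(2, 64), (2, 128), (4, 256), (4, 512), (4, 512)]


def trace_shapes(input_size: int = 224) -> List[Tuple[int, int, int]]:
    """Return the (height, width, channels) shape after each pooling layer."""
    size = input_size
    shapes = []
    for num_convs, filters in BLOCKS:
        for _ in range(num_convs):
            # 3 x 3 kernel, stride 1, padding 1 keeps the spatial size unchanged.
            size = conv_output_size(size, kernel=3, stride=1, padding=1)
        # 2 x 2 max pooling with stride 2 and no padding halves the spatial size.
        size = conv_output_size(size, kernel=2, stride=2, padding=0)
        shapes.append((size, size, filters))
    return shapes


for shape in trace_shapes():
    print(shape)
```

Starting from a 224 × 224 input, the five pooling stages give 112, 56, 28, 14, and 7 pixels per side, which agrees with the table. The final 7 × 7 × 512 volume is what feeds the first fully connected layer.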

| Dataset | Number of Real Images | Number of Attack Images | Total | Image Data Collection Method |
|---|---|---|---|---|
| Warsaw-2017 | 5168 | 6845 | 12,013 | Recaptured printed iris patterns on paper |
| NDCLD-2015 | 4875 | 2425 | 7300 | Recaptured printed iris patterns on contact lens |

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Test-Known: Real Image | Test-Known: Attack Image | Test-Known: Total | Test-Unknown: Real Image | Test-Unknown: Attack Image | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|---|
| Original dataset | 1844 | 2669 | 4513 | 974 | 2016 | 2990 | 2350 | 2160 | 4510 |
| Augmented dataset | 27,660 (1844 × 15) | 24,021 (2669 × 9) | 51,681 | 974 | 2016 | 2990 | 2350 | 2160 | 4510 |
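The augmented training counts in the table follow from multiplying each class by its own augmentation factor. The short sketch below reproduces that arithmetic. The helper name is hypothetical, and reading the different per-class factors as a way to bring the two classes closer to balance is an assumption, not something stated in the table.

```python
def augmented_count(original: int, factor: int) -> int:
    """Number of training images after augmenting each original image `factor` times."""
    return original * factor


# Original training counts and per-class factors taken from the table.
real = augmented_count(1844, 15)
attack = augmented_count(2669, 9)

print(real, attack, real + attack)

# Attack-to-real ratio before and after augmentation.
print(2669 / 1844, attack / real)
```

Before augmentation there are more attack images than real images. After augmentation the two classes are closer in size, with slightly more real images.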

| Test Dataset | Approach | Three-Channel Gray: APCER | Three-Channel Gray: BPCER | Three-Channel Gray: ACER | Three-Channel Retinex: APCER | Three-Channel Retinex: BPCER | Three-Channel Retinex: ACER | Three-Channel Gray-Retinex Fusion: APCER | Three-Channel Gray-Retinex Fusion: BPCER | Three-Channel Gray-Retinex Fusion: ACER |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.103 | 0.099 | 0.101 | 0.103 | 0.000 | 0.051 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.050 | 0.025 | 0.000 | 0.100 | 0.050 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.050 | 0.025 | 0.000 | 0.100 | 0.050 | 0.000 | 0.148 | 0.074 |
| Test-unknown dataset | Using Inner Iris Region | 0.170 | 0.278 | 0.224 | 1.021 | 1.482 | 1.251 | 2.128 | 0.092 | 1.110 |
| Test-unknown dataset | Using Outer Iris Region | 5.617 | 0.046 | 2.832 | 1.830 | 3.750 | 2.790 | 15.106 | 0.694 | 7.900 |
| Test-unknown dataset | Using Entire Iris Region | 0.298 | 0.324 | 0.311 | 0.894 | 0.556 | 0.725 | 0.638 | 0.602 | 0.620 |
| (b) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.103 | 0.198 | 0.151 | 0.103 | 0.000 | 0.051 | 0.000 | 0.050 | 0.025 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.000 | 0.000 | 0.000 | 0.010 | 0.050 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Feature Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Score Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-unknown dataset | Using Inner Iris Region | 0.213 | 0.324 | 0.268 | 4.596 | 2.130 | 3.363 | 0.085 | 0.509 | 0.297 |
| Test-unknown dataset | Using Outer Iris Region | 0.638 | 0.787 | 0.713 | 0.383 | 4.444 | 2.414 | 2.383 | 4.259 | 3.321 |
| Test-unknown dataset | Using Entire Iris Region | 0.809 | 0.370 | 0.589 | 0.809 | 0.833 | 0.821 | 0.681 | 0.139 | 0.410 |
| Test-unknown dataset | Using Feature Level Fusion Approach | 0.213 | 0.093 | 0.153 | 0.383 | 0.278 | 0.330 | 0.170 | 0.000 | 0.085 |
| Test-unknown dataset | Using Score Level Fusion Approach | 0.128 | 0.046 | 0.087 | 0.213 | 0.232 | 0.222 | 0.000 | 0.046 | 0.023 |
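The three error measures reported in these tables are related by a simple average: APCER is the share of attack presentations accepted as real, BPCER is the share of real (bona fide) presentations rejected as attacks, and ACER is the mean of the two. For example, the first row above gives (0.103 + 0.099) / 2 = 0.101. A minimal sketch of the computation follows. The function name and the toy labels are hypothetical, and returning the rates in percent is an assumption about the units used in the tables.

```python
from typing import Sequence, Tuple


def pad_error_rates(
    is_attack: Sequence[bool], predicted_attack: Sequence[bool]
) -> Tuple[float, float, float]:
    """Return (APCER, BPCER, ACER) in percent for paired labels and predictions."""
    attacks = sum(1 for a in is_attack if a)
    bona_fide = len(is_attack) - attacks
    if attacks == 0 or bona_fide == 0:
        raise ValueError("need at least one attack and one bona fide sample")
    # Attack presentations that were classified as real.
    missed_attacks = sum(
        1 for a, p in zip(is_attack, predicted_attack) if a and not p
    )
    # Real presentations that were classified as attacks.
    false_alarms = sum(
        1 for a, p in zip(is_attack, predicted_attack) if not a and p
    )
    apcer = 100.0 * missed_attacks / attacks
    bpcer = 100.0 * false_alarms / bona_fide
    return apcer, bpcer, (apcer + bpcer) / 2.0


# Toy labels for illustration only: 4 attack and 5 bona fide presentations.
labels = [True, True, True, True, False, False, False, False, False]
preds = [True, True, True, False, False, False, False, False, True]
print(pad_error_rates(labels, preds))  # (25.0, 20.0, 22.5)
```

Because ACER weights the two error types equally, a method with a very low APCER can still have a high ACER if its BPCER is large, which is the pattern seen in several of the test-unknown rows.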

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Test-Known: Real Image | Test-Known: Attack Image | Test-Known: Total | Test-Unknown: Real Image | Test-Unknown: Attack Image | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|---|
| Original NDCLD-2015 dataset | 600 | 600 | 1200 | 900 | 900 | 1800 | 900 | 900 | 1800 |
| Augmented dataset | 29,400 (600 × 49) | 29,400 (600 × 49) | 58,800 | 900 | 900 | 1800 | 900 | 900 | 1800 |

| Test Dataset | Approach | Three-Channel Gray: APCER | Three-Channel Gray: BPCER | Three-Channel Gray: ACER | Three-Channel Retinex: APCER | Three-Channel Retinex: BPCER | Three-Channel Retinex: ACER | Three-Channel Gray-Retinex Fusion: APCER | Three-Channel Gray-Retinex Fusion: BPCER | Three-Channel Gray-Retinex Fusion: ACER |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.056 | 0.389 | 0.222 | 0.167 | 0.333 | 0.250 | 0.167 | 0.278 | 0.222 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.278 | 0.139 | 0.056 | 0.111 | 0.083 | 0.000 | 0.222 | 0.111 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.278 | 0.139 | 0.000 | 0.167 | 0.083 | 0.056 | 0.056 | 0.056 |
| Test-unknown dataset | Using Inner Iris Region | 1.278 | 11.889 | 6.583 | 0.444 | 11.722 | 6.083 | 0.333 | 13.278 | 6.806 |
| Test-unknown dataset | Using Outer Iris Region | 0.056 | 32.222 | 16.139 | 0.278 | 24.944 | 12.611 | 0.222 | 23.889 | 12.056 |
| Test-unknown dataset | Using Entire Iris Region | 0.389 | 11.722 | 6.056 | 0.222 | 10.556 | 5.389 | 0.222 | 13.611 | 6.917 |
| (b) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.167 | 0.111 | 0.139 | 0.056 | 0.389 | 0.222 | 0.167 | 0.111 | 0.139 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.278 | 0.139 | 0.222 | 0.000 | 0.111 | 0.000 | 0.167 | 0.083 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.278 | 0.139 | 0.111 | 0.000 | 0.056 | 0.000 | 0.111 | 0.056 |
| Test-known dataset | Using Feature Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Score Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-unknown dataset | Using Inner Iris Region | 2.167 | 8.556 | 5.361 | 2.278 | 3.500 | 2.889 | 2.722 | 3.278 | 3.000 |
| Test-unknown dataset | Using Outer Iris Region | 3.611 | 10.389 | 7.000 | 5.167 | 5.500 | 5.333 | 5.611 | 7.667 | 6.639 |
| Test-unknown dataset | Using Entire Iris Region | 1.333 | 2.389 | 1.861 | 1.556 | 2.833 | 2.194 | 1.389 | 2.111 | 1.750 |
| Test-unknown dataset | Using Feature Level Fusion Approach | 0.778 | 2.667 | 1.722 | 0.333 | 0.889 | 0.611 | 0.333 | 0.833 | 0.583 |
| Test-unknown dataset | Using Score Level Fusion Approach | 1.722 | 1.833 | 1.778 | 0.944 | 0.833 | 0.889 | 0.556 | 1.000 | 0.778 |

| Test Dataset | Approach | Three-Channel Gray: APCER | Three-Channel Gray: BPCER | Three-Channel Gray: ACER | Three-Channel Retinex: APCER | Three-Channel Retinex: BPCER | Three-Channel Retinex: ACER | Three-Channel Gray-Retinex Fusion: APCER | Three-Channel Gray-Retinex Fusion: BPCER | Three-Channel Gray-Retinex Fusion: ACER |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.389 | 0.056 | 0.222 | 0.111 | 0.389 | 0.250 | 0.278 | 0.389 | 0.333 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.167 | 0.083 | 0.000 | 0.056 | 0.028 | 0.000 | 0.056 | 0.028 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.056 | 0.028 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-unknown dataset | Using Inner Iris Region | 1.278 | 9.778 | 5.528 | 0.389 | 10.778 | 5.583 | 0.889 | 10.667 | 5.778 |
| Test-unknown dataset | Using Outer Iris Region | 0.111 | 36.611 | 18.361 | 0.111 | 24.944 | 12.528 | 0.278 | 31.389 | 15.833 |
| Test-unknown dataset | Using Entire Iris Region | 0.111 | 24.667 | 12.389 | 0.278 | 19.444 | 9.861 | 0.556 | 12.944 | 6.750 |
| (b) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.111 | 0.444 | 0.278 | 0.000 | 0.556 | 0.028 | 0.222 | 0.278 | 0.250 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.167 | 0.083 | 0.000 | 0.000 | 0.000 | 0.056 | 0.000 | 0.028 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.000 | 0.000 | 0.000 | 0.056 | 0.028 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Feature Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Score Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-unknown dataset | Using Inner Iris Region | 3.167 | 4.944 | 4.056 | 2.667 | 2.833 | 2.750 | 2.278 | 3.889 | 3.083 |
| Test-unknown dataset | Using Outer Iris Region | 2.778 | 14.000 | 8.389 | 2.444 | 7.333 | 4.889 | 3.833 | 7.556 | 5.694 |
| Test-unknown dataset | Using Entire Iris Region | 1.944 | 3.389 | 2.667 | 2.000 | 4.333 | 3.167 | 1.333 | 2.278 | 1.806 |
| Test-unknown dataset | Using Feature Level Fusion Approach | 1.222 | 1.778 | 1.500 | 0.389 | 0.611 | 0.500 | 1.056 | 0.833 | 0.944 |
| Test-unknown dataset | Using Score Level Fusion Approach | 1.556 | 2.167 | 1.861 | 1.167 | 0.833 | 1.000 | 0.722 | 0.778 | 0.750 |

| Dataset | Training: Real Image | Training: Attack Image | Training: Total | Testing: Real Image | Testing: Attack Image | Testing: Total |
|---|---|---|---|---|---|---|
| Original entire NDCLD-2015 (1st Fold) | 2340 | 1068 | 3408 | 2535 | 1357 | 3892 |
| Augmented dataset (1st Fold) | 28,080 (2340 × 12) | 26,700 (1068 × 25) | 54,780 | 2535 | 1357 | 3892 |
| Original entire NDCLD-2015 (2nd Fold) | 2535 | 1357 | 3892 | 2340 | 1068 | 3408 |
| Augmented dataset (2nd Fold) | 30,420 (2535 × 12) | 33,925 (1357 × 25) | 64,345 | 2340 | 1068 | 3408 |

| Approach | Three-Channel Gray: APCER | Three-Channel Gray: BPCER | Three-Channel Gray: ACER | Three-Channel Retinex: APCER | Three-Channel Retinex: BPCER | Three-Channel Retinex: ACER | Three-Channel Gray-Retinex Fusion: APCER | Three-Channel Gray-Retinex Fusion: BPCER | Three-Channel Gray-Retinex Fusion: ACER |
|---|---|---|---|---|---|---|---|---|---|
| (a) | | | | | | | | | |
| Using Inner Iris Region | 4.088 | 31.212 | 17.650 | 3.322 | 35.895 | 19.608 | 3.831 | 34.090 | 18.961 |
| Using Outer Iris Region | 1.851 | 3.502 | 2.676 | 1.921 | 2.766 | 2.344 | 1.767 | 3.461 | 2.614 |
| Using Entire Iris Region | 1.606 | 6.120 | 3.863 | 1.501 | 7.845 | 4.673 | 1.522 | 4.418 | 2.970 |
| (b) | | | | | | | | | |
| Using Inner Iris Region | 6.581 | 13.810 | 10.195 | 6.003 | 25.649 | 15.826 | 5.360 | 19.749 | 12.555 |
| Using Outer Iris Region | 2.581 | 1.666 | 2.123 | 2.175 | 0.883 | 1.529 | 2.180 | 1.706 | 1.943 |
| Using Entire Iris Region | 1.907 | 1.204 | 1.555 | 1.898 | 1.646 | 1.772 | 2.079 | 0.596 | 1.337 |
| Using Feature Level Fusion Approach | 1.481 | 0.823 | 1.152 | 1.777 | 0.140 | 0.959 | 1.649 | 0.281 | 0.965 |
| Using Score Level Fusion Approach | 1.731 | 0.599 | 1.165 | 1.884 | 0.094 | 0.989 | 1.800 | 0.214 | 1.007 |

| Training: Images from Warsaw-2017 Dataset | Training: Images from NDCLD-2015 Dataset | Training: Total | Test-Known: Images from Warsaw-2017 Dataset | Test-Known: Images from NDCLD-2015 Dataset | Test-Known: Total | Test-Unknown: Images from Warsaw-2017 Dataset | Test-Unknown: Images from NDCLD-2015 Dataset | Test-Unknown: Total |
|---|---|---|---|---|---|---|---|---|
| 51,681 | 58,800 | 110,481 | 2990 | 1800 | 4790 | 4510 | 1800 | 6310 |

| Test Dataset | Approach | Three-Channel Gray: APCER | Three-Channel Gray: BPCER | Three-Channel Gray: ACER | Three-Channel Retinex: APCER | Three-Channel Retinex: BPCER | Three-Channel Retinex: ACER | Three-Channel Gray-Retinex Fusion: APCER | Three-Channel Gray-Retinex Fusion: BPCER | Three-Channel Gray-Retinex Fusion: ACER |
|---|---|---|---|---|---|---|---|---|---|---|
| (a) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.160 | 0.034 | 0.097 | 0.053 | 0.206 | 0.130 | 0.000 | 0.171 | 0.085 |
| Test-known dataset | Using Outer Iris Region | 0.053 | 0.034 | 0.044 | 0.053 | 0.069 | 0.061 | 0.053 | 0.034 | 0.044 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.034 | 0.017 | 0.107 | 0.034 | 0.071 | 0.053 | 0.034 | 0.044 |
| Test-unknown dataset | Using Inner Iris Region | 0.585 | 4.020 | 2.302 | 2.062 | 4.575 | 3.318 | 1.292 | 4.412 | 2.852 |
| Test-unknown dataset | Using Outer Iris Region | 3.692 | 14.183 | 8.934 | 3.292 | 10.458 | 6.875 | 5.108 | 11.765 | 8.436 |
| Test-unknown dataset | Using Entire Iris Region | 0.923 | 2.386 | 1.654 | 0.800 | 3.726 | 2.263 | 0.431 | 5.621 | 3.026 |
| (b) | | | | | | | | | | |
| Test-known dataset | Using Inner Iris Region | 0.053 | 0.034 | 0.044 | 0.267 | 0.343 | 0.305 | 0.000 | 0.172 | 0.086 |
| Test-known dataset | Using Outer Iris Region | 0.000 | 0.069 | 0.034 | 0.053 | 0.000 | 0.027 | 0.053 | 0.000 | 0.027 |
| Test-known dataset | Using Entire Iris Region | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Feature Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-known dataset | Using Score Level Fusion Approach | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| Test-unknown dataset | Using Inner Iris Region | 0.339 | 4.935 | 2.637 | 3.877 | 3.595 | 3.736 | 2.339 | 3.105 | 2.722 |
| Test-unknown dataset | Using Outer Iris Region | 4.246 | 9.510 | 6.878 | 4.246 | 7.353 | 5.800 | 4.831 | 7.811 | 6.321 |
| Test-unknown dataset | Using Entire Iris Region | 1.662 | 1.536 | 1.599 | 2.154 | 1.144 | 1.649 | 1.815 | 2.222 | 2.019 |
| Test-unknown dataset | Using Feature Level Fusion Approach | 1.231 | 1.438 | 1.334 | 1.200 | 1.111 | 1.156 | 0.862 | 0.556 | 0.709 |
| Test-unknown dataset | Using Score Level Fusion Approach | 0.400 | 2.386 | 1.393 | 1.015 | 2.712 | 1.864 | 1.354 | 2.418 | 1.886 |

| Pupil and Iris Boundary Detection | Inner and Outer Region Image Extraction | Retinex Filtering | Deep Feature Extraction | Feature Selection by PCA | Classification by SVM | Total |
|---|---|---|---|---|---|---|
| 22.500 | 3.776 | 0.011 | 58.615 | 0.0001 | 0.00002 | 84.90212 |

| Method | Warsaw-2017: APCER | Warsaw-2017: BPCER | Warsaw-2017: ACER | NDCLD-2015: APCER | NDCLD-2015: BPCER | NDCLD-2015: ACER |
|---|---|---|---|---|---|---|
| CASIA method [34] | 3.40 | 8.60 | 6.00 | 11.33 | 7.56 | 9.45 |
| Anon1 method [34] | 6.11 | 5.51 | 5.81 | 7.78 | 0.28 | 4.03 |
| UNINA method [34] | 0.05 | 14.77 | 7.41 | 25.44 | 0.33 | 12.89 |
| CNN-based method [25,38] | 0.198 | 0.327 | 0.263 | 1.250 | 5.945 | 3.598 |
| MLBP-based method [57] | 0.154 | 0.285 | 0.224 | 4.056 | 7.806 | 5.931 |
| Feature Level Fusion of CNN and MLBP Features [25] | 0.154 | 0.131 | 0.142 | 1.167 | 3.028 | 2.098 |
| Score Level Fusion of CNN and MLBP Features [25] | 0.000 | 0.032 | 0.016 | 1.389 | 4.500 | 2.945 |
| Our proposed method | 0.000 | 0.032 | 0.016 | 0.167 | 0.417 | 0.292 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Nguyen, D.T.; Pham, T.D.; Lee, Y.W.; Park, K.R. Deep Learning-Based Enhanced Presentation Attack Detection for Iris Recognition by Combining Features from Local and Global Regions Based on NIR Camera Sensor. Sensors 2018, 18, 2601. https://doi.org/10.3390/s18082601
