Modeling and Control of a Micro AUV: Objects Follower Approach

Abstract

1. Introduction

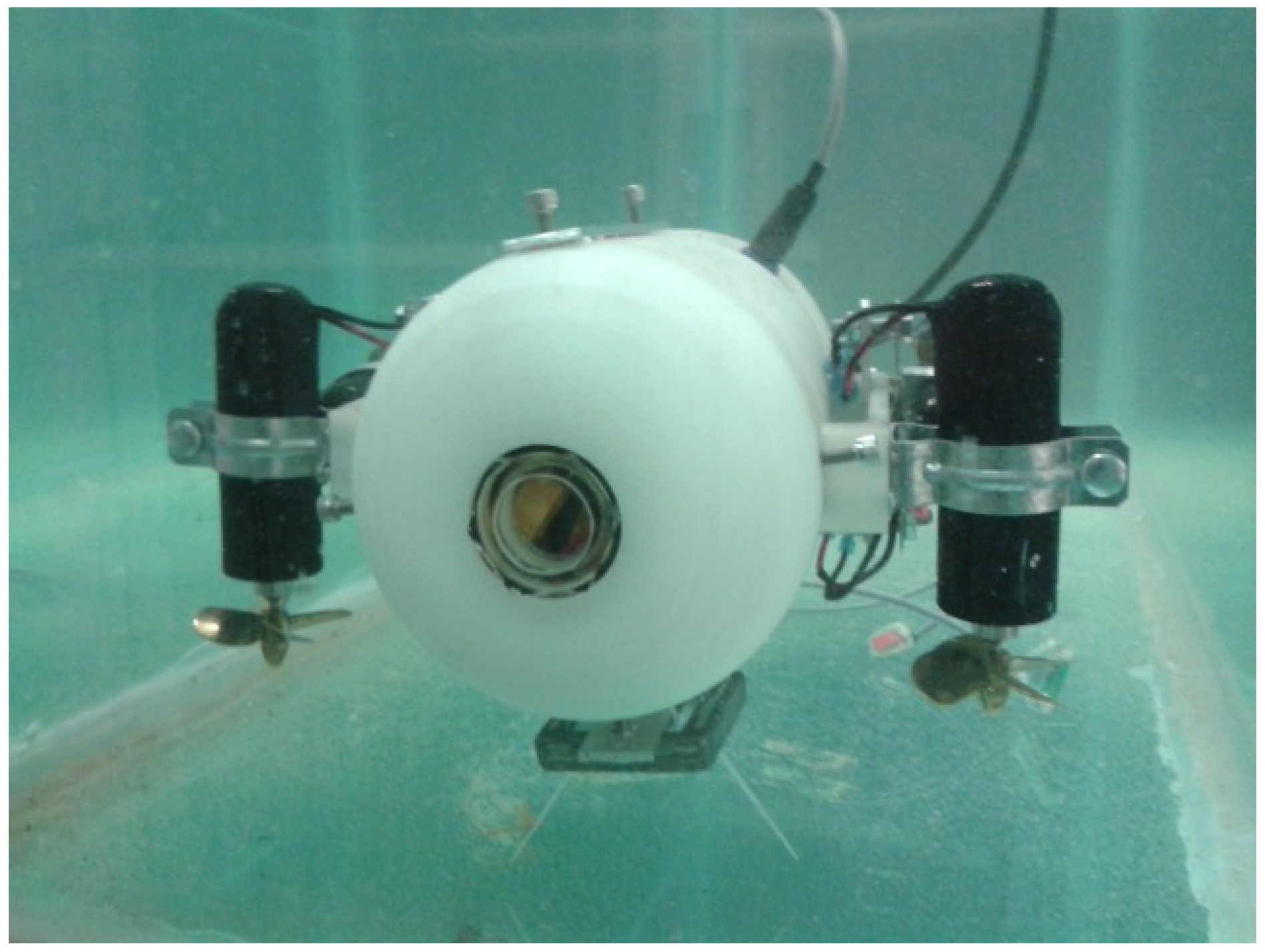

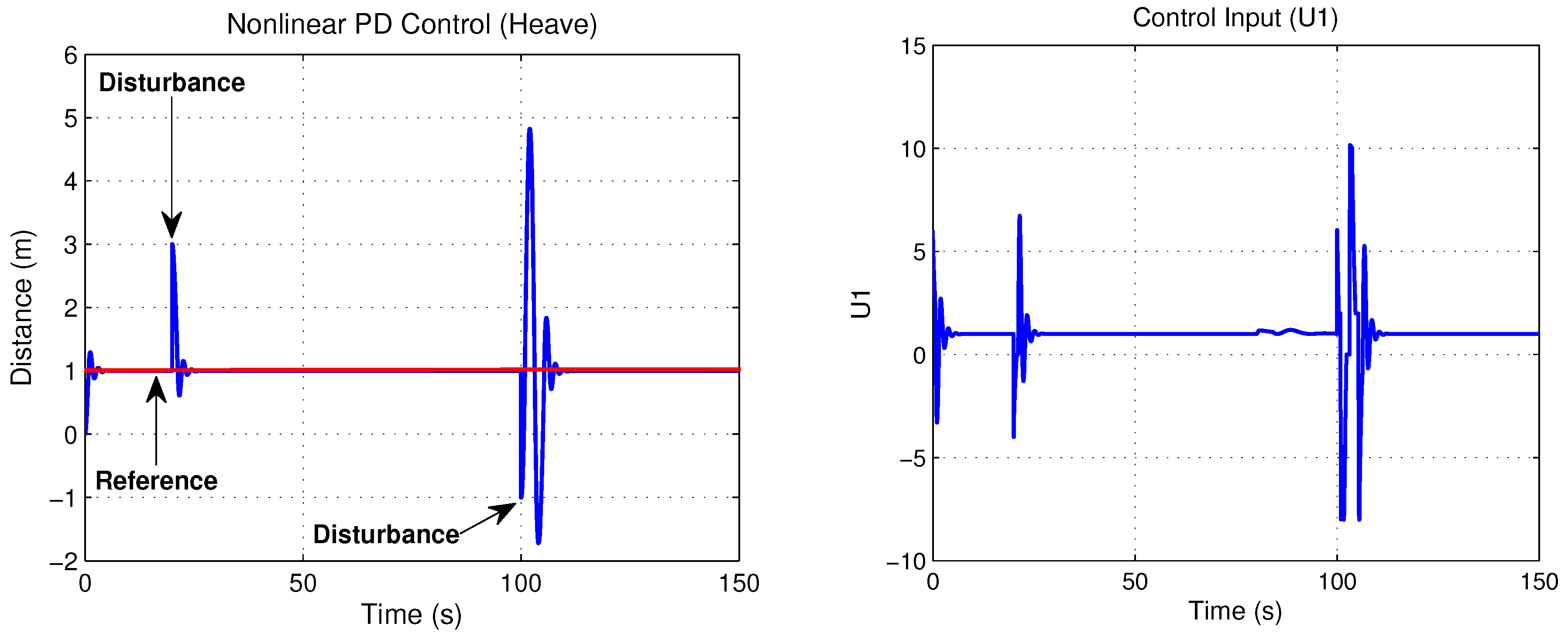

2. Prototype Description

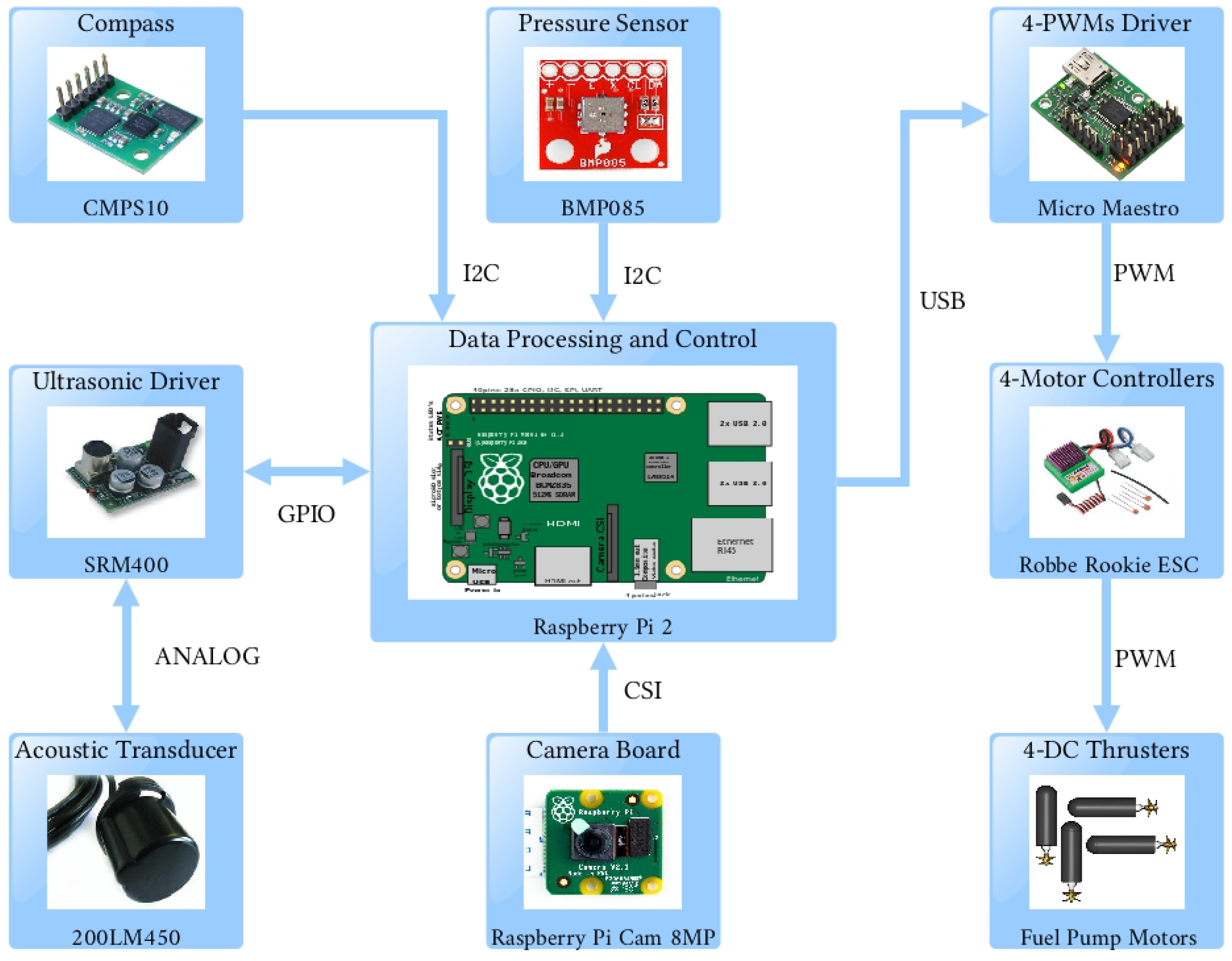

2.1. Embedded System

2.2. Computer Vision

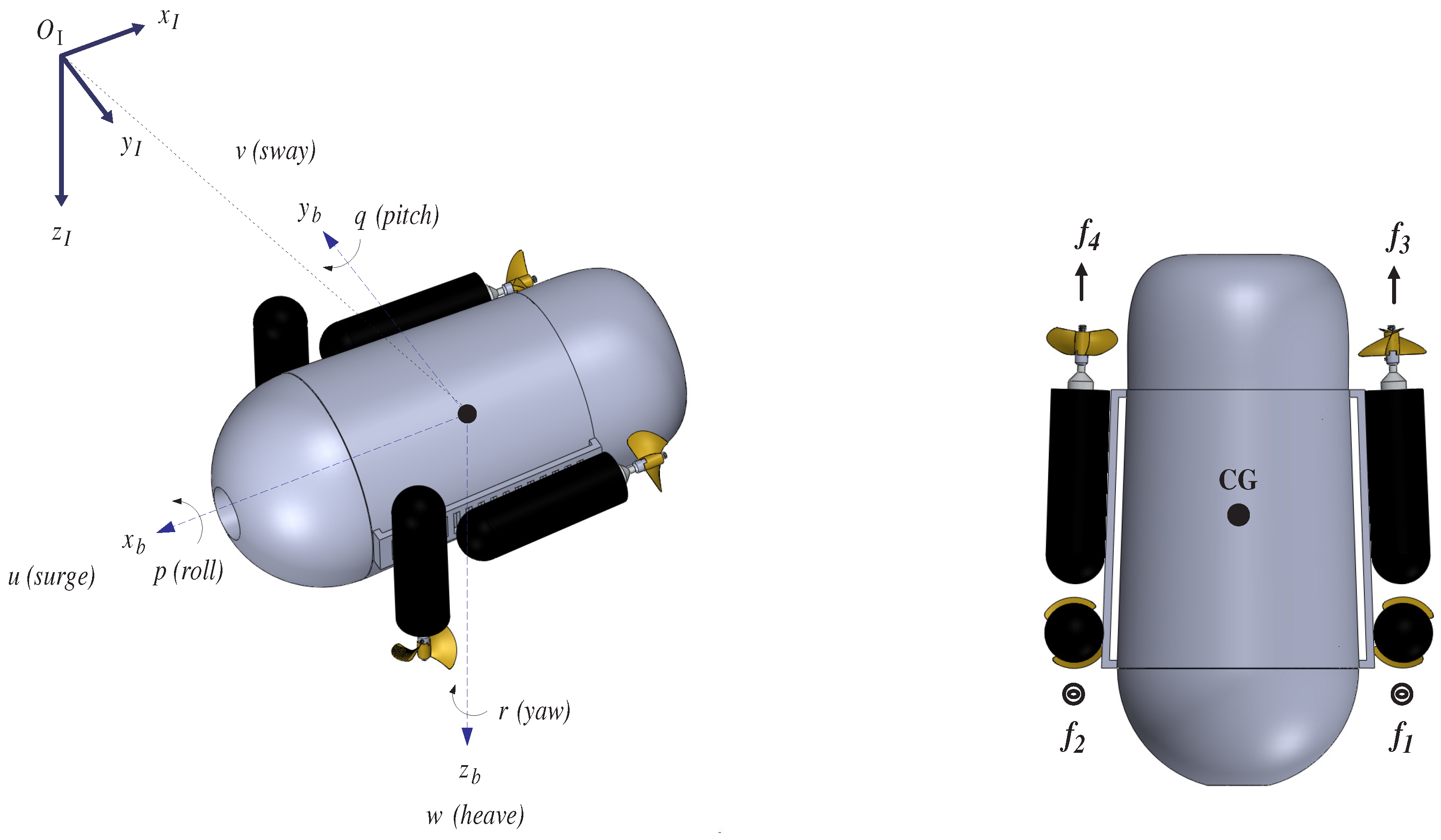

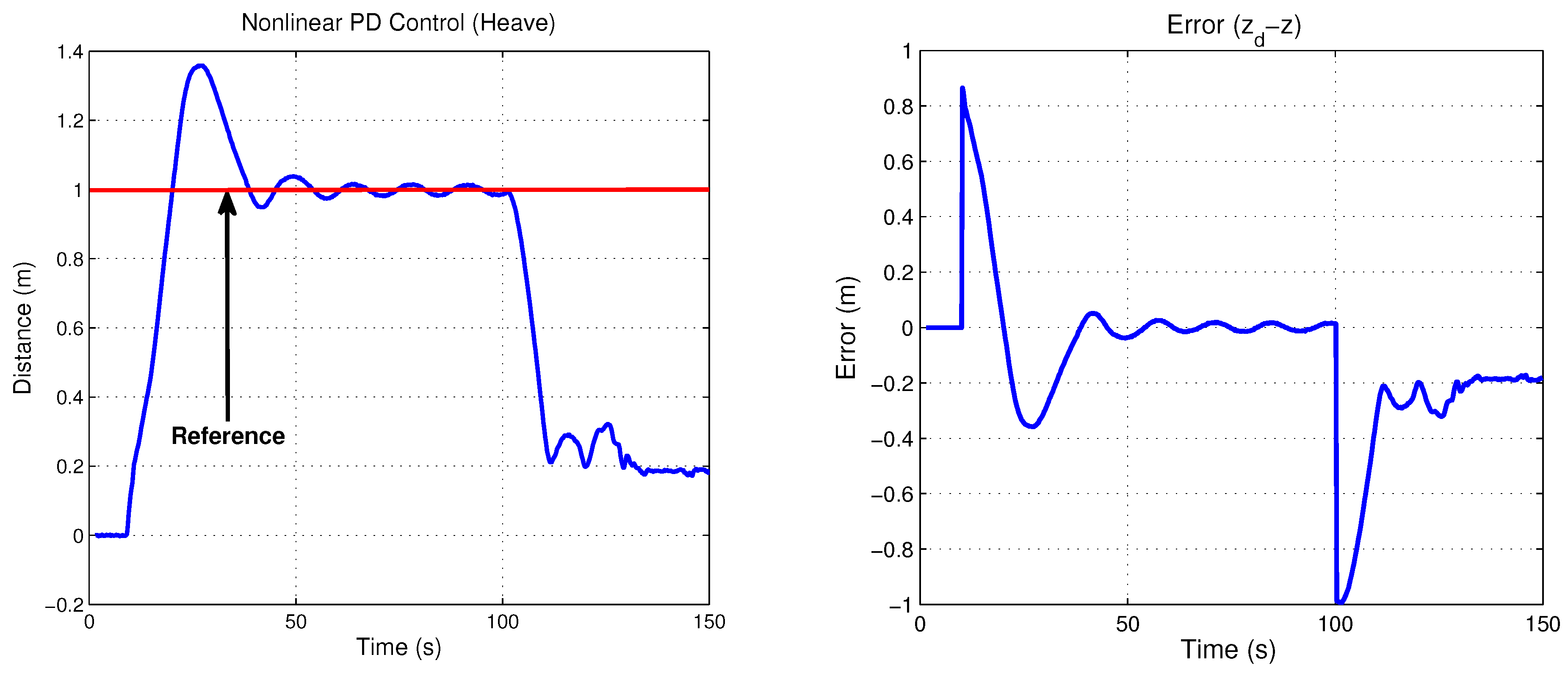

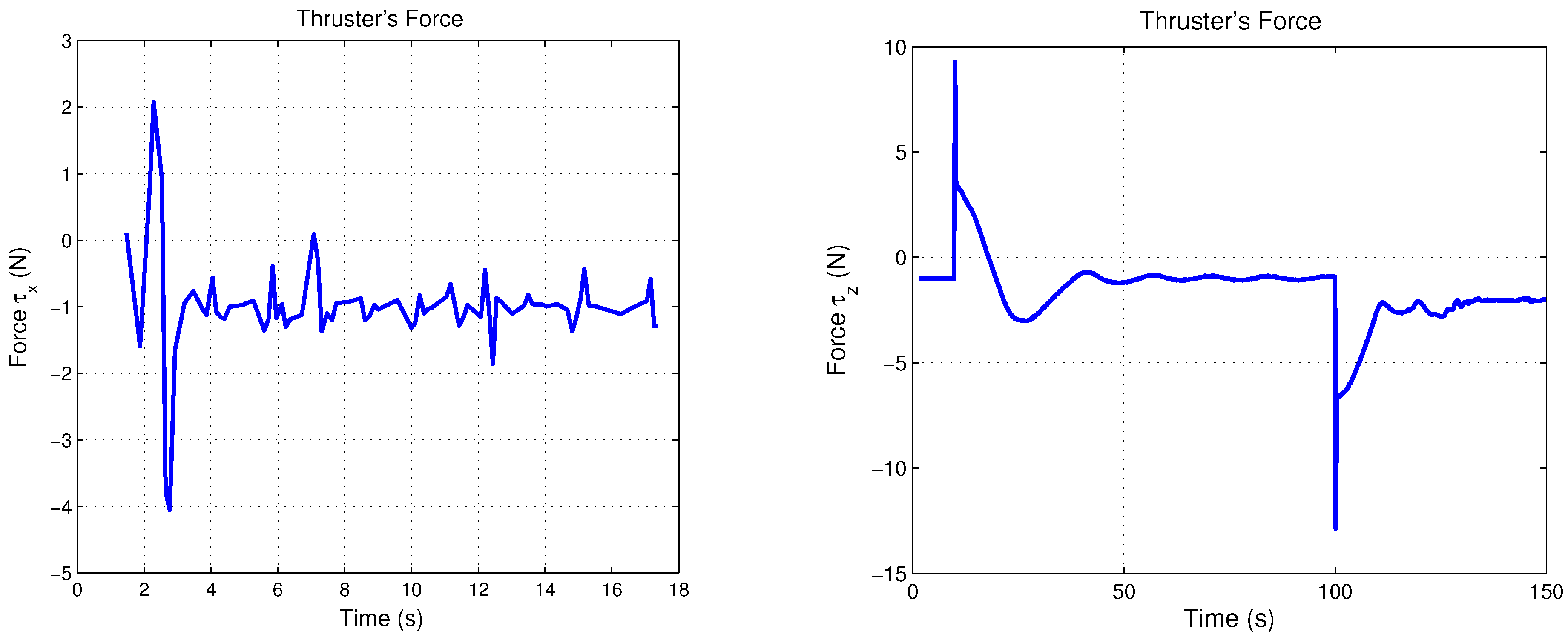

2.3. Prototype’s Movement Description

3. Dynamic Model

3.1. Gravity/Buoyancy Forces and Torques

3.2. Forces and Torques Generated by the Thrusters

4. Control Strategy

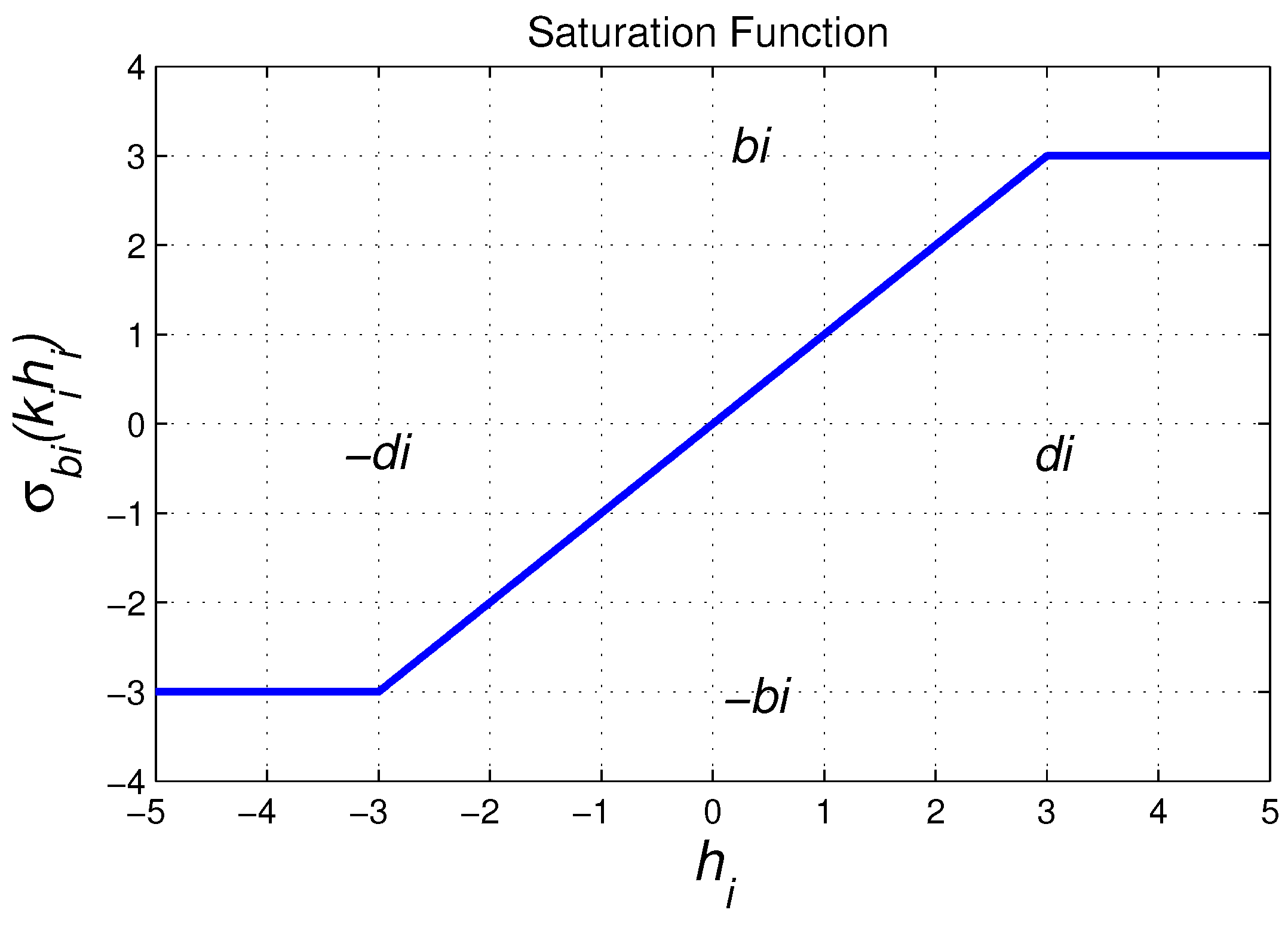

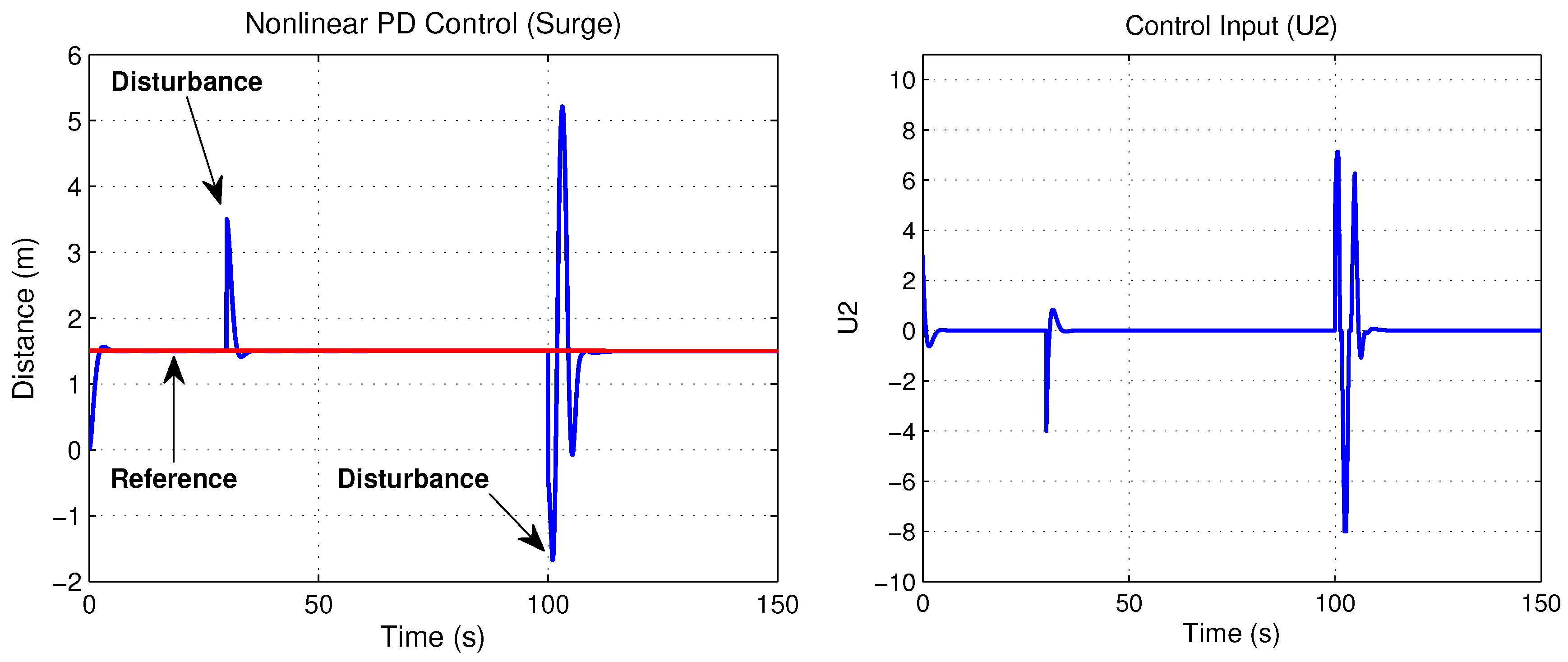

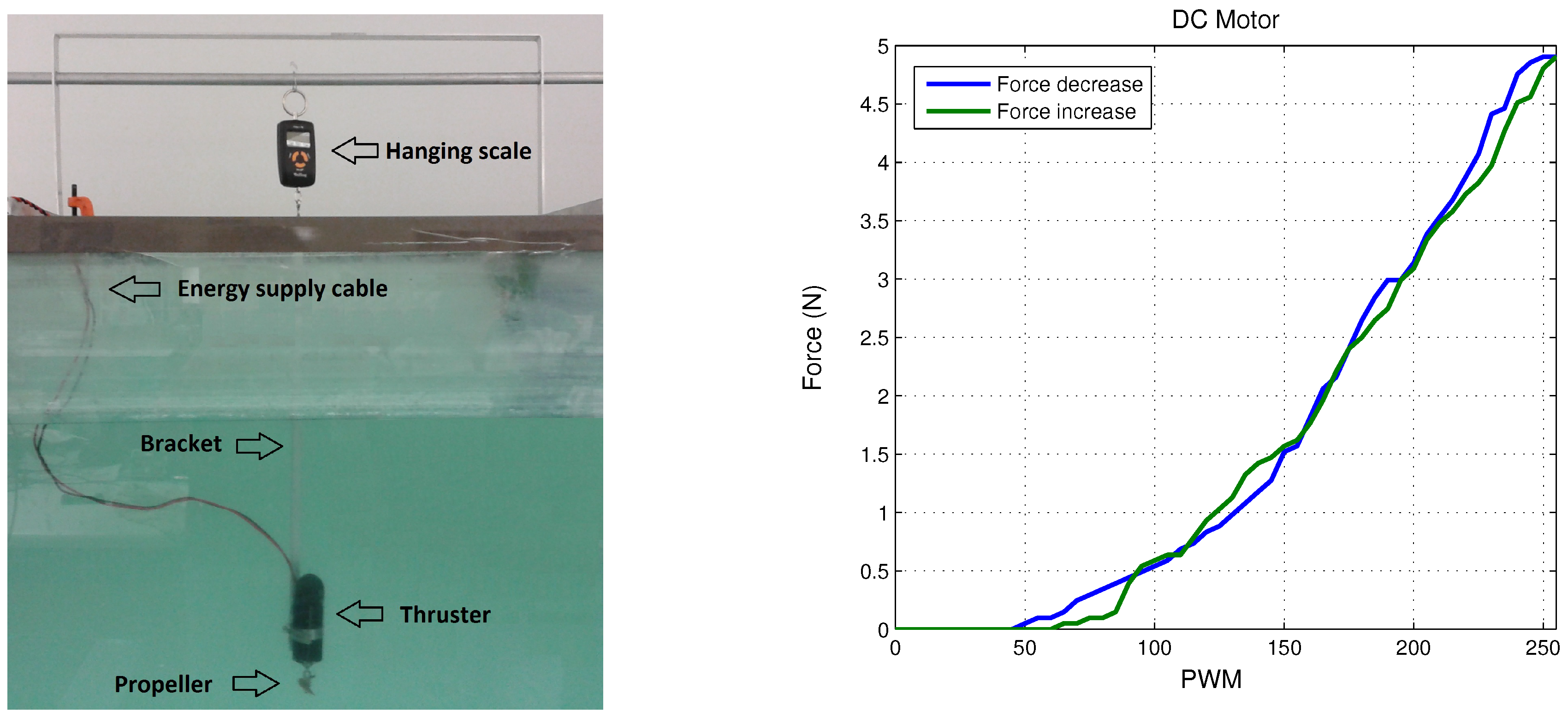

4.1. A Nonlinear PD Controller Based on Saturation Functions

4.2. Stability Proof

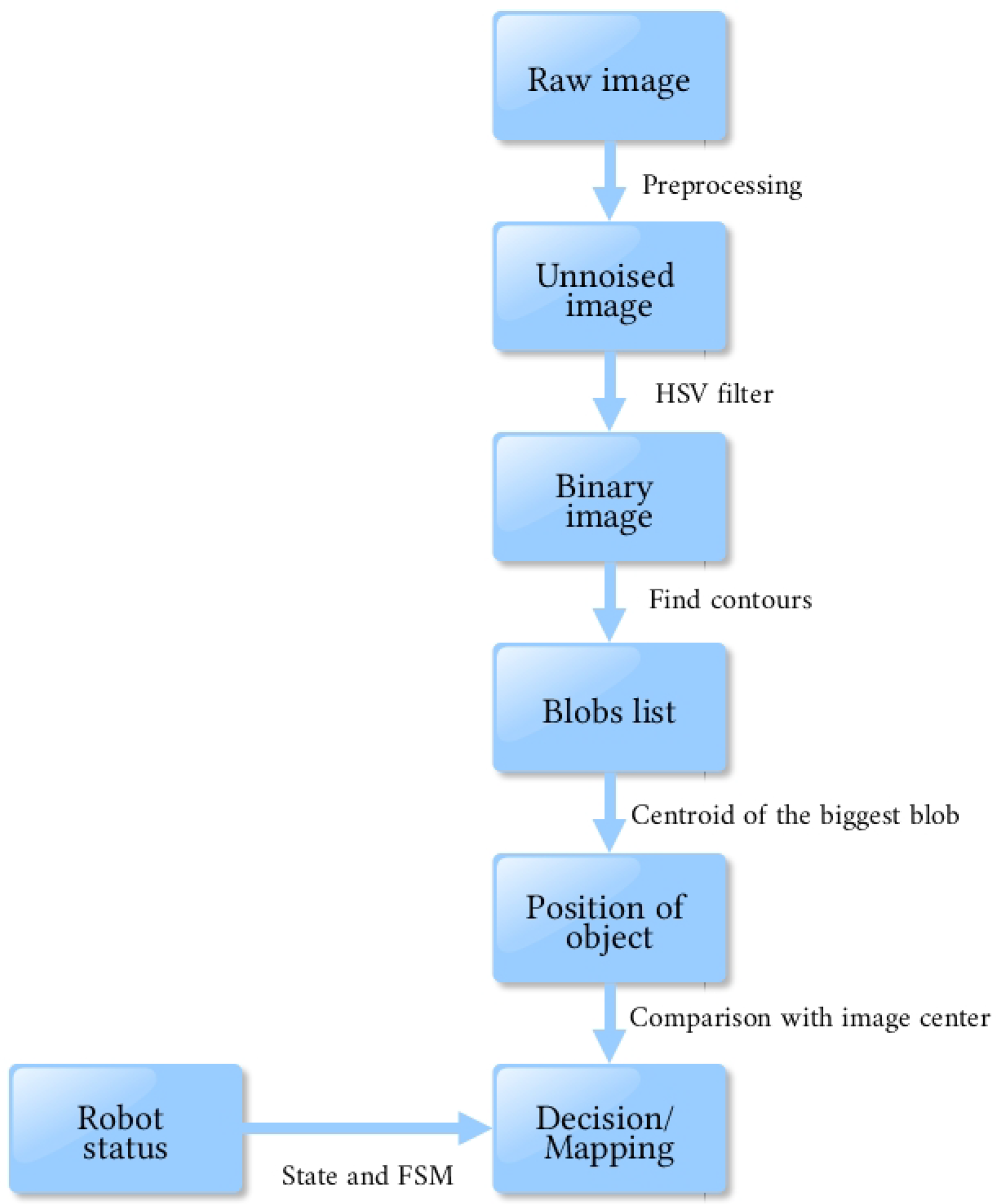

5. Computer Vision Algorithm

5.1. Data Processing Chain

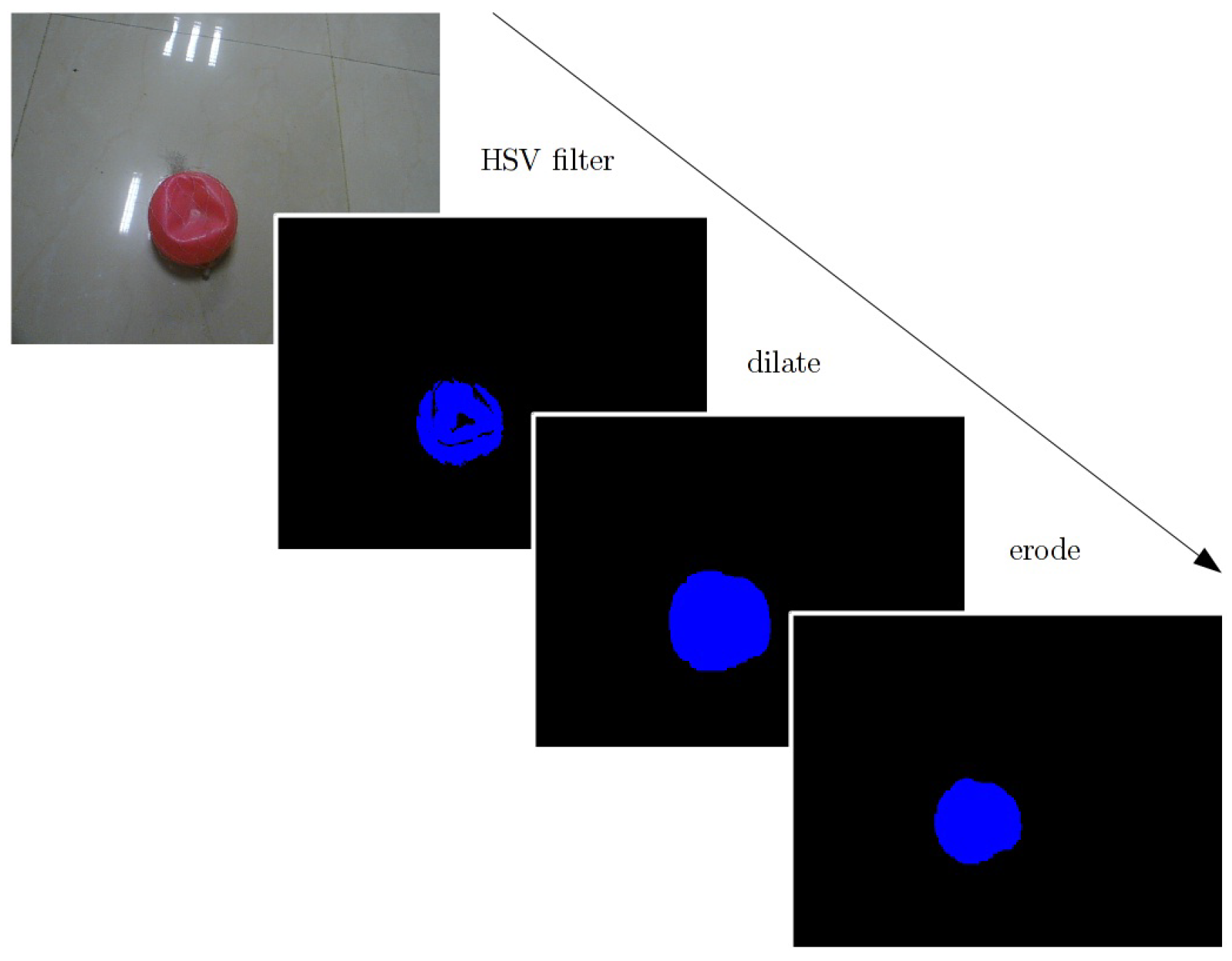

5.2. Image Preprocessing

5.3. Extracting Interesting Data

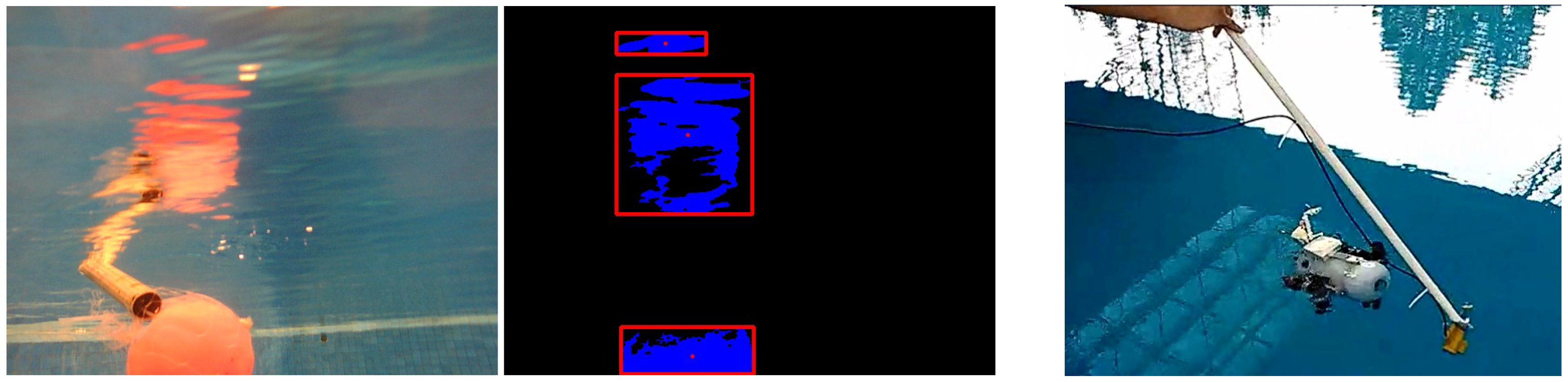

5.3.1. HSV Filtering

5.3.2. Blob Detection

5.4. Decision Making

- Remote control (not autonomous; the user sends commands).

- Stabilize (keep the same position and posture).

- Go up and go down (change only the depth, then stabilize).

- Explore (follow a planned path).

- Follow an object detected by the Raspberry Pi camera.
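The section lists the operating modes but does not show how the decision step is implemented. The following is a hypothetical sketch only, not the authors' code. It treats the decision step as a dispatcher that maps the active mode to a setpoint for the low-level controller of Section 4. The `Mode` and `Setpoint` names, the depth/yaw/surge setpoint, the normalized image offset of the tracked object, and all numeric gains are assumptions made for illustration.

```python
from dataclasses import dataclass, replace
from enum import Enum, auto
from typing import Optional, Tuple

CRUISE_SURGE = 0.3  # hypothetical normalized forward command while exploring/following


class Mode(Enum):
    REMOTE_CONTROL = auto()  # not autonomous: the user sends commands
    STABILIZE = auto()       # keep the same position and posture
    GO_UP = auto()           # change only the depth (shallower), then stabilize
    GO_DOWN = auto()         # change only the depth (deeper), then stabilize
    EXPLORE = auto()         # follow a planned path
    FOLLOW_OBJECT = auto()   # track an object seen by the Raspberry Pi camera


@dataclass(frozen=True)
class Setpoint:
    depth: float  # desired depth in meters, positive downward (assumption)
    yaw: float    # desired heading in radians
    surge: float  # normalized forward command in [-1, 1]


def decide(mode: Mode, previous: Setpoint,
           user_cmd: Optional[Setpoint] = None,
           path_yaw: Optional[float] = None,
           target_offset: Optional[Tuple[float, float]] = None,
           depth_step: float = 0.1, yaw_gain: float = 0.5) -> Setpoint:
    """Map the active mode to a new setpoint (hypothetical decision rule)."""
    hold = replace(previous, surge=0.0)  # keep depth and yaw, stop advancing
    if mode is Mode.REMOTE_CONTROL:
        if user_cmd is None:
            raise ValueError("remote control requires a user command")
        return user_cmd
    if mode is Mode.STABILIZE:
        return hold
    if mode is Mode.GO_UP:
        # Depth is positive downward, so going up reduces it (never above the surface).
        return replace(hold, depth=max(0.0, previous.depth - depth_step))
    if mode is Mode.GO_DOWN:
        return replace(hold, depth=previous.depth + depth_step)
    if mode is Mode.EXPLORE:
        if path_yaw is None:
            raise ValueError("explore mode requires a heading from the planned path")
        return replace(previous, yaw=path_yaw, surge=CRUISE_SURGE)
    if mode is Mode.FOLLOW_OBJECT:
        if target_offset is None:
            return hold  # object lost: stabilize instead of wandering
        # dx, dy: object offset from the image center, normalized to [-1, 1];
        # image y grows downward, so a positive dy means "go deeper".
        dx, dy = target_offset
        return Setpoint(depth=max(0.0, previous.depth + depth_step * dy),
                        yaw=previous.yaw + yaw_gain * dx,
                        surge=CRUISE_SURGE)
    raise ValueError(f"unknown mode: {mode}")
```

For example, `decide(Mode.FOLLOW_OBJECT, Setpoint(1.0, 0.0, 0.0), target_offset=(0.2, 0.0))` keeps the depth at 1.0 m, turns the yaw setpoint slightly toward the object and commands forward motion. Falling back to the stabilize behavior when the object is lost is a design choice of this sketch. It keeps the vehicle in place until the vision algorithm reacquires the target.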

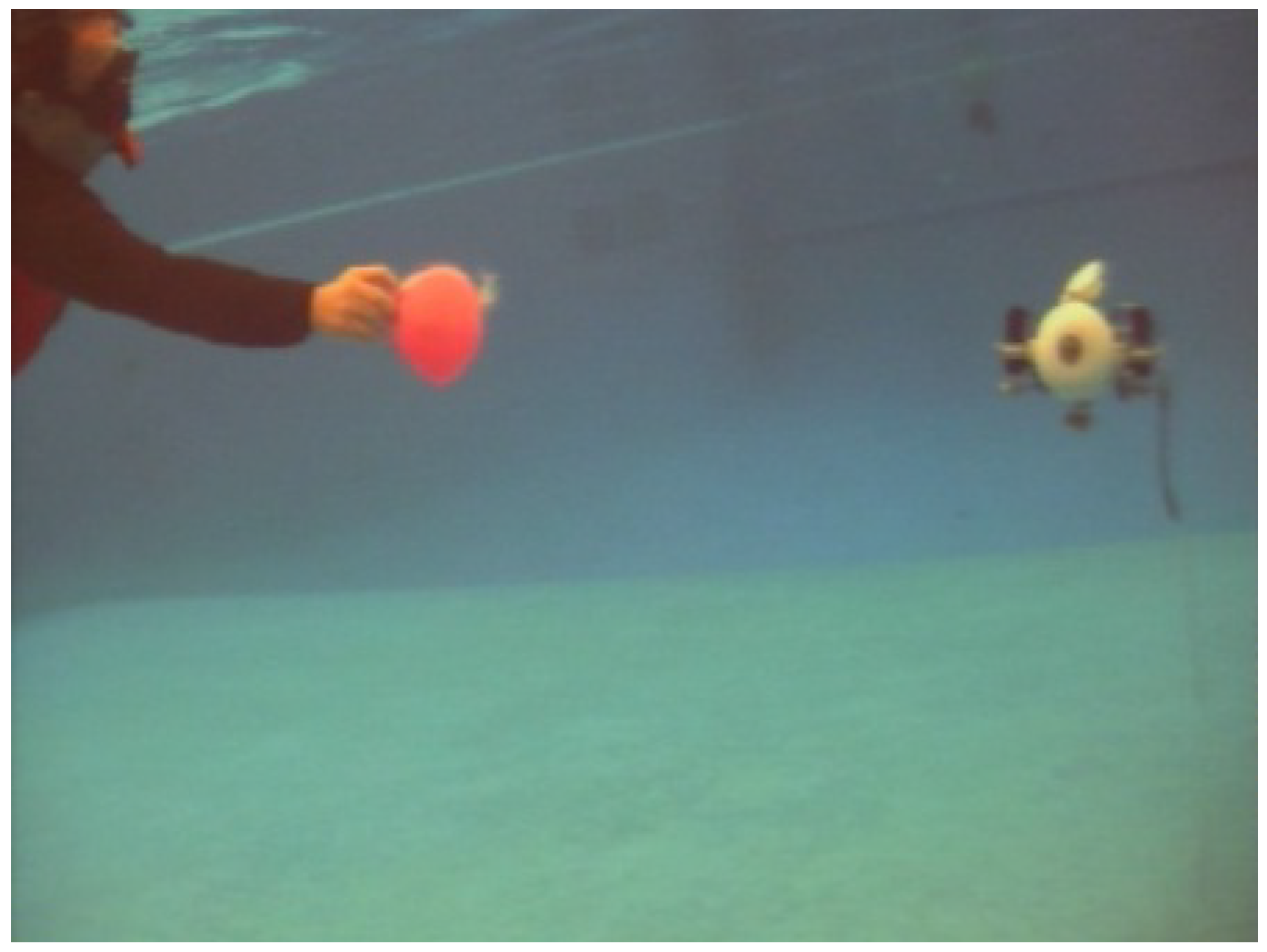

Ball Following through the Vision Algorithm

6. Simulation and Results

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Monroy-Anieva, J.A.; Rouviere, C.; Campos-Mercado, E.; Salgado-Jimenez, T.; Garcia-Valdovinos, L.G. Modeling and Control of a Micro AUV: Objects Follower Approach. Sensors 2018, 18, 2574. https://doi.org/10.3390/s18082574