Attributes’ Importance for Zero-Shot Pose-Classification Based on Wearable Sensors

Abstract

1. Introduction

2. Related Work

2.1. Zero-Shot Learning

2.2. Wearable-Based Action and Pose Recognition

2.3. Wearable-Based Zero-Shot Action and Pose Recognition

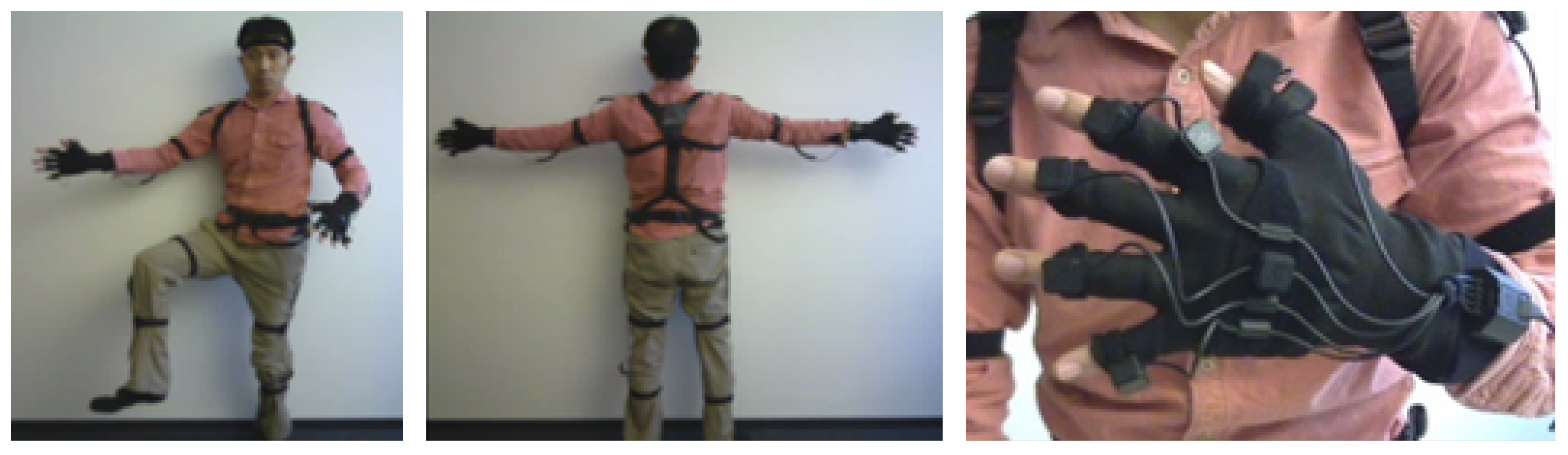

3. Dataset: HDPoseDS

3.1. Sensor

3.2. Target Poses

4. Proposed Method

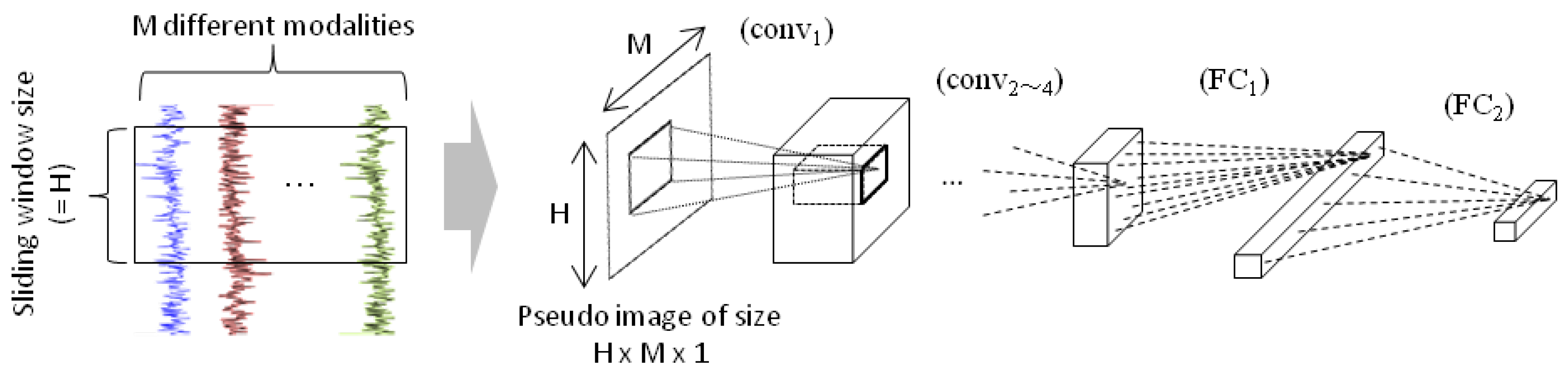

4.1. Attribute Estimation

4.2. Pose Classification with Attributes’ Importance

4.2.1. Naive Formulation

4.2.2. Incorporating Attributes’ Importance

5. Experiment

5.1. Evaluation Scheme

- (1) All the input data are converted to attribute vectors using the neural networks explained in Section 4.1. The sliding window size is 60 samples, which corresponds to 1 s, and the window is shifted by 30 samples (0.5 s). This yields roughly 590 attribute vectors per pose (about 59 windows per 30 s recording × 10 subjects), since HDPoseDS contains data from 10 subjects and each subject held each pose for roughly 30 s.

- (2) For each class c, we construct a set of training data by combining the data from all classes other than c with the attribute-based pose definition of c (Table 3). We use class c’s data as test data.

- (3) The labels of the test data are estimated using the method explained in Section 4.2.

- (4) We repeat this for all 22 classes.

- (5) We calculate the F-measure for each class from its precision and recall.
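The windowing arithmetic in step (1) and the leave-one-class-out loop in steps (2) to (5) can be sketched as follows. This is a minimal illustration rather than the authors' code: `count_windows`, `leave_one_class_out`, and the `classify` callback are hypothetical names, and the classifier of Section 4.2 is treated as a black box.

```python
from typing import Callable, Dict, List, Sequence


def count_windows(n_frames: int, size: int = 60, shift: int = 30) -> int:
    """Number of full sliding windows that fit into n_frames samples."""
    if n_frames < size:
        return 0
    return (n_frames - size) // shift + 1


def leave_one_class_out(
    data: Dict[int, List[Sequence[float]]],
    classify: Callable[[Sequence[float], int], int],
) -> Dict[int, List[int]]:
    """Treat each class c in turn as unseen and predict labels for its data.

    classify(vector, unseen_class) is a hypothetical stand-in for the method
    of Section 4.2. It is assumed to rely only on data from the other classes
    plus the attribute-based definition of the unseen class.
    """
    predictions: Dict[int, List[int]] = {}
    for c, vectors in data.items():
        predictions[c] = [classify(v, c) for v in vectors]
    return predictions


# 30 s at 60 samples per second gives 1800 frames per subject and pose.
windows_per_subject = count_windows(30 * 60)
windows_per_pose = windows_per_subject * 10  # 10 subjects in HDPoseDS
```

With these numbers, `windows_per_subject` is 59 and `windows_per_pose` is 590, which matches the "roughly 590" figure in step (1).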

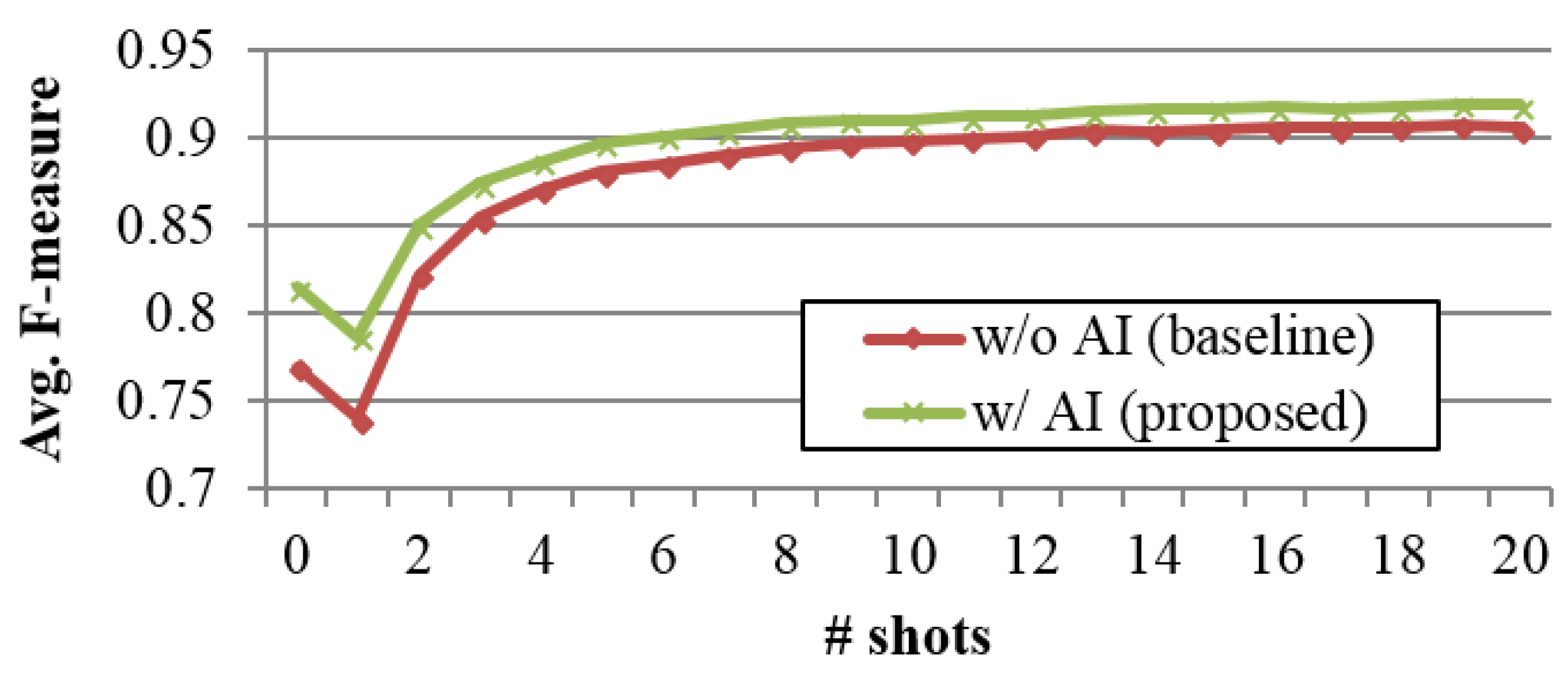

5.2. Results and Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Detailed Evaluation Results

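| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | T | R |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|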

| 1 | 584 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 584 | 1.00 |

| 2 | 48 | 135 | 126 | 0 | 0 | 0 | 0 | 71 | 13 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 19 | 6 | 110 | 528 | 0.26 |

| 3 | 0 | 0 | 538 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 3 | 542 | 0.99 |

| 4 | 0 | 0 | 0 | 540 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 540 | 1.00 |

| 5 | 0 | 0 | 0 | 0 | 548 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 548 | 1.00 |

| 6 | 0 | 0 | 0 | 0 | 0 | 553 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 553 | 1.00 |

| 7 | 0 | 0 | 0 | 0 | 0 | 0 | 571 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 571 | 1.00 |

| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 257 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 154 | 0 | 169 | 0 | 0 | 0 | 580 | 0.44 |

| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 531 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 531 | 1.00 |

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 1.00 |

| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 10 | 127 | 0 | 0 | 0 | 0 | 0 | 419 | 0 | 0 | 0 | 0 | 0 | 556 | 0.23 |

| 12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 522 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 522 | 1.00 |

| 13 | 197 | 0 | 0 | 0 | 3 | 0 | 44 | 77 | 11 | 0 | 3 | 0 | 148 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 31 | 0 | 541 | 0.27 |

| 14 | 221 | 0 | 0 | 0 | 0 | 0 | 0 | 88 | 86 | 0 | 0 | 0 | 9 | 121 | 0 | 0 | 0 | 0 | 19 | 0 | 0 | 0 | 544 | 0.22 |

| 15 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 527 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 527 | 1.00 |

| 16 | 0 | 0 | 0 | 0 | 0 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 505 | 0 | 0 | 0 | 0 | 0 | 0 | 512 | 0.99 |

| 17 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 568 | 0 | 0 | 0 | 0 | 0 | 568 | 1.00 |

| 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 568 | 0 | 0 | 0 | 0 | 568 | 1.00 |

| 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 539 | 0 | 0 | 0 | 539 | 1.00 |

| 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 51 | 110 | 219 | 127 | 0 | 507 | 0.43 |

| 21 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 243 | 3 | 291 | 0 | 541 | 0.54 |

| 22 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 106 | 513 | 619 | 0.83 |

| T | 1050 | 135 | 664 | 540 | 551 | 560 | 615 | 497 | 641 | 579 | 130 | 522 | 157 | 121 | 527 | 505 | 1141 | 619 | 1107 | 241 | 562 | 626 | 12,090 | |

| P | 0.56 | 1.00 | 0.81 | 1.00 | 0.99 | 0.99 | 0.93 | 0.52 | 0.83 | 0.98 | 0.98 | 1.00 | 0.94 | 1.00 | 1.00 | 1.00 | 0.50 | 0.92 | 0.49 | 0.91 | 0.52 | 0.82 | 0.78 |

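| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | T | R |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|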

| 1 | 543 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 41 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 584 | 0.93 |

| 2 | 0 | 316 | 22 | 0 | 0 | 0 | 0 | 57 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 72 | 60 | 528 | 0.60 |

| 3 | 0 | 1 | 536 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 542 | 0.99 |

| 4 | 0 | 0 | 0 | 540 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 540 | 1.00 |

| 5 | 9 | 0 | 0 | 0 | 515 | 0 | 0 | 0 | 0 | 8 | 11 | 0 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 548 | 0.94 |

| 6 | 0 | 0 | 0 | 2 | 0 | 551 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 553 | 1.00 |

| 7 | 0 | 0 | 0 | 0 | 1 | 0 | 570 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 571 | 1.00 |

| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 306 | 0 | 0 | 33 | 0 | 65 | 0 | 0 | 0 | 0 | 0 | 176 | 0 | 0 | 0 | 580 | 0.53 |

| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 531 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 531 | 1.00 |

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 1.00 |

| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 8 | 535 | 0 | 0 | 0 | 0 | 0 | 13 | 0 | 0 | 0 | 0 | 0 | 556 | 0.96 |

| 12 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 495 | 0 | 3 | 0 | 0 | 9 | 12 | 0 | 0 | 3 | 0 | 522 | 0.95 |

| 13 | 195 | 0 | 0 | 11 | 2 | 0 | 0 | 60 | 17 | 0 | 0 | 0 | 108 | 121 | 0 | 0 | 0 | 0 | 27 | 0 | 0 | 0 | 541 | 0.20 |

| 14 | 165 | 0 | 0 | 10 | 0 | 0 | 0 | 51 | 12 | 0 | 4 | 0 | 190 | 56 | 0 | 0 | 0 | 0 | 56 | 0 | 0 | 0 | 544 | 0.10 |

| 15 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 527 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 527 | 1.00 |

| 16 | 66 | 0 | 0 | 0 | 0 | 51 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 395 | 0 | 0 | 0 | 0 | 0 | 0 | 512 | 0.77 |

| 17 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 53 | 0 | 0 | 0 | 0 | 0 | 298 | 217 | 0 | 0 | 0 | 0 | 568 | 0.52 |

| 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 27 | 0 | 0 | 0 | 0 | 0 | 203 | 338 | 0 | 0 | 0 | 0 | 568 | 0.60 |

| 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 43 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 496 | 0 | 0 | 0 | 539 | 0.92 |

| 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 48 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 170 | 272 | 16 | 1 | 507 | 0.54 |

| 21 | 0 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 45 | 0 | 0 | 0 | 0 | 0 | 6 | 1 | 485 | 0 | 541 | 0.90 |

| 22 | 0 | 81 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 99 | 438 | 619 | 0.71 |

| T | 978 | 402 | 558 | 563 | 518 | 602 | 570 | 565 | 560 | 585 | 663 | 495 | 454 | 180 | 527 | 395 | 523 | 567 | 931 | 275 | 675 | 504 | 12,090 | |

| P | 0.56 | 0.79 | 0.96 | 0.96 | 0.99 | 0.92 | 1.00 | 0.54 | 0.95 | 0.97 | 0.81 | 1.00 | 0.24 | 0.31 | 1.00 | 1.00 | 0.57 | 0.60 | 0.53 | 0.99 | 0.72 | 0.87 | 0.78 |

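| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | T | R |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|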

| 1 | 331 | 0 | 0 | 42 | 49 | 30 | 40 | 6 | 14 | 7 | 5 | 13 | 12 | 4 | 12 | 11 | 2 | 1 | 5 | 0 | 0 | 0 | 584 | 0.57 |

| 2 | 17 | 206 | 78 | 31 | 8 | 13 | 4 | 28 | 3 | 1 | 1 | 5 | 5 | 4 | 7 | 4 | 1 | 4 | 8 | 8 | 42 | 48 | 528 | 0.39 |

| 3 | 8 | 35 | 437 | 6 | 2 | 2 | 2 | 3 | 1 | 0 | 0 | 1 | 1 | 0 | 8 | 5 | 0 | 0 | 1 | 1 | 5 | 24 | 542 | 0.81 |

| 4 | 56 | 0 | 1 | 303 | 24 | 41 | 17 | 9 | 15 | 27 | 11 | 4 | 8 | 3 | 3 | 8 | 3 | 2 | 4 | 0 | 0 | 0 | 540 | 0.56 |

| 5 | 66 | 0 | 0 | 26 | 293 | 19 | 39 | 5 | 11 | 25 | 21 | 14 | 7 | 3 | 8 | 2 | 5 | 3 | 1 | 0 | 0 | 0 | 548 | 0.53 |

| 6 | 69 | 1 | 1 | 77 | 26 | 245 | 18 | 16 | 22 | 15 | 14 | 5 | 11 | 6 | 8 | 8 | 2 | 3 | 7 | 1 | 0 | 0 | 553 | 0.44 |

| 7 | 69 | 0 | 0 | 25 | 57 | 21 | 291 | 9 | 23 | 6 | 20 | 10 | 12 | 5 | 6 | 7 | 5 | 3 | 3 | 1 | 0 | 0 | 571 | 0.51 |

| 8 | 42 | 2 | 2 | 46 | 28 | 33 | 20 | 109 | 19 | 13 | 43 | 4 | 38 | 19 | 7 | 8 | 13 | 7 | 103 | 11 | 13 | 1 | 580 | 0.19 |

| 9 | 45 | 0 | 0 | 50 | 45 | 32 | 36 | 12 | 225 | 12 | 16 | 9 | 16 | 8 | 5 | 6 | 5 | 4 | 3 | 2 | 0 | 0 | 531 | 0.42 |

| 10 | 14 | 0 | 0 | 46 | 29 | 13 | 7 | 3 | 5 | 354 | 53 | 4 | 1 | 1 | 5 | 2 | 18 | 11 | 0 | 0 | 0 | 0 | 569 | 0.62 |

| 11 | 21 | 0 | 0 | 23 | 35 | 17 | 28 | 7 | 9 | 54 | 233 | 6 | 3 | 1 | 3 | 2 | 74 | 40 | 0 | 1 | 0 | 0 | 556 | 0.42 |

| 12 | 78 | 1 | 0 | 27 | 55 | 16 | 29 | 4 | 14 | 9 | 12 | 168 | 20 | 12 | 25 | 10 | 20 | 14 | 3 | 1 | 3 | 0 | 522 | 0.32 |

| 13 | 118 | 1 | 1 | 44 | 44 | 30 | 36 | 37 | 32 | 8 | 14 | 26 | 41 | 43 | 12 | 15 | 4 | 4 | 24 | 4 | 4 | 0 | 541 | 0.08 |

| 14 | 83 | 0 | 1 | 38 | 43 | 36 | 31 | 41 | 33 | 11 | 17 | 26 | 62 | 42 | 15 | 16 | 7 | 4 | 26 | 8 | 4 | 1 | 544 | 0.08 |

| 15 | 72 | 2 | 6 | 32 | 28 | 24 | 13 | 5 | 9 | 11 | 6 | 23 | 8 | 3 | 263 | 6 | 3 | 4 | 6 | 3 | 1 | 0 | 527 | 0.50 |

| 16 | 92 | 2 | 7 | 40 | 29 | 37 | 26 | 9 | 27 | 9 | 11 | 17 | 11 | 3 | 11 | 162 | 8 | 4 | 6 | 0 | 1 | 0 | 512 | 0.32 |

| 17 | 8 | 0 | 0 | 7 | 11 | 5 | 9 | 1 | 4 | 27 | 134 | 8 | 1 | 1 | 2 | 2 | 232 | 116 | 0 | 0 | 0 | 0 | 568 | 0.41 |

| 18 | 6 | 0 | 0 | 8 | 9 | 4 | 6 | 2 | 5 | 26 | 109 | 7 | 2 | 1 | 2 | 1 | 143 | 234 | 0 | 1 | 0 | 0 | 568 | 0.41 |

| 19 | 16 | 1 | 1 | 11 | 5 | 13 | 8 | 51 | 6 | 2 | 1 | 3 | 12 | 7 | 5 | 3 | 0 | 0 | 360 | 17 | 17 | 1 | 539 | 0.67 |

| 20 | 7 | 5 | 3 | 7 | 3 | 6 | 4 | 34 | 3 | 4 | 5 | 3 | 7 | 8 | 21 | 1 | 2 | 10 | 138 | 165 | 57 | 15 | 507 | 0.32 |

| 21 | 10 | 29 | 4 | 6 | 4 | 5 | 4 | 12 | 1 | 1 | 1 | 7 | 9 | 4 | 7 | 4 | 1 | 0 | 64 | 15 | 343 | 9 | 541 | 0.63 |

| 22 | 7 | 87 | 61 | 5 | 2 | 2 | 4 | 6 | 1 | 0 | 1 | 2 | 2 | 1 | 3 | 5 | 0 | 2 | 24 | 16 | 50 | 340 | 619 | 0.55 |

| T | 1236 | 373 | 603 | 900 | 829 | 643 | 669 | 407 | 480 | 622 | 728 | 365 | 290 | 178 | 437 | 288 | 548 | 473 | 787 | 254 | 541 | 439 | 12,090 | |

| P | 0.27 | 0.55 | 0.72 | 0.34 | 0.35 | 0.38 | 0.43 | 0.27 | 0.47 | 0.57 | 0.32 | 0.46 | 0.14 | 0.23 | 0.60 | 0.56 | 0.42 | 0.50 | 0.46 | 0.65 | 0.63 | 0.77 | 0.44 |

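| | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | T | R |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|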

| 1 | 526 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 58 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 584 | 0.90 |

| 2 | 8 | 421 | 0 | 0 | 0 | 0 | 0 | 46 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 4 | 12 | 36 | 528 | 0.80 |

| 3 | 0 | 0 | 542 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 542 | 1.00 |

| 4 | 0 | 0 | 0 | 540 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 540 | 1.00 |

| 5 | 16 | 0 | 0 | 0 | 498 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 34 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 548 | 0.91 |

| 6 | 0 | 0 | 0 | 1 | 0 | 523 | 0 | 0 | 0 | 0 | 0 | 0 | 28 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 553 | 0.95 |

| 7 | 0 | 0 | 0 | 0 | 0 | 0 | 565 | 0 | 3 | 0 | 0 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 571 | 0.99 |

| 8 | 0 | 0 | 0 | 5 | 0 | 0 | 0 | 188 | 0 | 0 | 0 | 0 | 179 | 120 | 0 | 0 | 0 | 0 | 88 | 0 | 0 | 0 | 580 | 0.32 |

| 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 525 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 531 | 0.99 |

| 10 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 569 | 1.00 |

| 11 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 478 | 0 | 2 | 0 | 0 | 0 | 72 | 4 | 0 | 0 | 0 | 0 | 556 | 0.86 |

| 12 | 36 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 483 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 522 | 0.93 |

| 13 | 193 | 0 | 0 | 10 | 0 | 0 | 0 | 10 | 9 | 0 | 0 | 0 | 191 | 191 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 605 | 0.32 |

| 14 | 103 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 3 | 0 | 6 | 0 | 291 | 136 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 544 | 0.25 |

| 15 | 22 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 505 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 527 | 0.96 |

| 16 | 27 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 485 | 0 | 0 | 0 | 0 | 0 | 0 | 512 | 0.95 |

| 17 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 51 | 0 | 2 | 0 | 0 | 0 | 449 | 66 | 0 | 0 | 0 | 0 | 568 | 0.79 |

| 18 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 7 | 0 | 24 | 1 | 0 | 0 | 24 | 512 | 0 | 0 | 0 | 0 | 568 | 0.90 |

| 19 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 28 | 0 | 0 | 0 | 0 | 37 | 0 | 0 | 0 | 0 | 0 | 474 | 0 | 0 | 0 | 539 | 0.88 |

| 20 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 163 | 344 | 0 | 0 | 507 | 0.68 |

| 21 | 0 | 69 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 50 | 0 | 0 | 0 | 0 | 0 | 0 | 34 | 388 | 0 | 541 | 0.72 |

| 22 | 0 | 114 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 47 | 458 | 619 | 0.74 |

| T | 931 | 604 | 542 | 556 | 498 | 523 | 565 | 277 | 540 | 569 | 542 | 483 | 905 | 449 | 505 | 485 | 545 | 583 | 726 | 382 | 450 | 494 | 12,154 | |

| P | 0.56 | 0.70 | 1.00 | 0.97 | 1.00 | 1.00 | 1.00 | 0.68 | 0.97 | 1.00 | 0.88 | 1.00 | 0.21 | 0.30 | 1.00 | 1.00 | 0.82 | 0.88 | 0.65 | 0.90 | 0.86 | 0.93 | 0.81 |
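The R (recall) and P (precision) margins of the matrices above, and the per-class F-measure of step (5) in Section 5.1, follow from the row and column totals. A minimal sketch is given below, assuming rows hold the true class and columns the predicted class; `per_class_scores` is a hypothetical helper name, and the 2-class matrix is a toy example that only reuses the class 1 margins of the first matrix.

```python
from typing import List, Tuple


def per_class_scores(cm: List[List[int]]) -> List[Tuple[float, float, float]]:
    """Return (precision, recall, F-measure) for every class.

    cm[i][j] counts samples of true class i that were predicted as class j.
    """
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        row_total = sum(cm[k])                       # margin T at the end of row k
        col_total = sum(cm[i][k] for i in range(n))  # margin T below column k
        recall = tp / row_total if row_total else 0.0
        precision = tp / col_total if col_total else 0.0
        denom = precision + recall
        f_measure = 2 * precision * recall / denom if denom else 0.0
        scores.append((precision, recall, f_measure))
    return scores


# Toy matrix: class 0 mimics class 1 of the first matrix above
# (584 correct, row total 584, column total 1050).
toy = [[584, 0], [466, 100]]
precision, recall, f_measure = per_class_scores(toy)[0]
```

For these margins the sketch gives a precision of about 0.56, a recall of 1.00, and an F-measure of about 0.7148, which agrees with the Standing entry in the DAP [7] column of the results table.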

References

- Herath, S.; Harandi, M.; Porikli, F. Going deeper into action recognition: A survey. Image Vis. Comput. 2017, 60, 4–21.

- Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. arXiv, 2017; arXiv:1707.03502.

- Larochelle, H.; Erhan, D.; Bengio, Y. Zero-data Learning of New Tasks. In Proceedings of the National Conference on Artificial Intelligence (AAAI), Chicago, IL, USA, 13–17 July 2008.

- Frome, A.; Corrado, G.S.; Shlens, J.; Bengio, S.; Dean, J.; Ranzato, M.A.; Mikolov, T. DeViSE: A Deep Visual-Semantic Embedding Model. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 5–10 December 2013.

- Fu, Y.; Xiang, T.; Jiang, Y.G.; Xue, X.; Sigal, L.; Gong, S. Recent Advances in Zero-Shot Recognition: Toward Data-Efficient Understanding of Visual Content. IEEE Signal Process. Mag. 2018, 35, 112–125.

- Lampert, C.H.; Nickisch, H.; Harmeling, S. Learning to detect unseen object classes by between-class attribute transfer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, USA, 20–25 June 2009.

- Lampert, C.H.; Nickisch, H.; Harmeling, S. Attribute-based classification for zero-shot visual object categorization. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 453–465.

- Liu, J.; Kuipers, B.; Savarese, S. Recognizing human actions by attributes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011.

- Cheng, H.T.; Griss, M.; Davis, P.; Li, J.; You, D. Towards zero-shot learning for human activity recognition using semantic attribute sequence model. In Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp), Zurich, Switzerland, 8–12 September 2013.

- Xu, X.; Hospedales, T.M.; Gong, S. Multi-Task Zero-Shot Action Recognition with Prioritised Data Augmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Li, Y.; Hu, S.H.; Li, B. Recognizing unseen actions in a domain-adapted embedding space. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.

- Qin, J.; Liu, L.; Shao, L.; Shen, F.; Ni, B.; Chen, J.; Wang, Y. Zero-shot action recognition with error-correcting output codes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Iqbal, U.; Milan, A.; Gall, J. PoseTrack: Joint Multi-Person Pose Estimation and Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Chen, Y.; Shen, C.; Wei, X.S.; Liu, L.; Yang, J. Adversarial PoseNet: A Structure-aware Convolutional Network for Human Pose Estimation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense human pose estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Palatucci, M.; Pomerleau, D.; Hinton, G.E.; Mitchell, T.M. Zero-shot learning with semantic output codes. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Vancouver, BC, Canada, 6–11 December 2009.

- Cheng, H.T.; Sun, F.T.; Griss, M.; Davis, P.; Li, J.; You, D. NuActiv: Recognizing Unseen New Activities Using Semantic Attribute-Based Learning. In Proceedings of the International Conference on Mobile Systems, Applications, and Services (MobiSys), Taipei, Taiwan, 25–28 June 2013.

- Xu, X.; Hospedales, T.; Gong, S. Semantic embedding space for zero-shot action recognition. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015.

- Socher, R.; Ganjoo, M.; Manning, C.D.; Ng, A. Zero-shot learning through cross-modal transfer. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 5–10 December 2013.

- Jayaraman, D.; Grauman, K. Zero-shot recognition with unreliable attributes. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montréal, QC, Canada, 8–13 December 2014.

- Alexiou, I.; Xiang, T.; Gong, S. Exploring synonyms as context in zero-shot action recognition. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016.

- Wang, Q.; Chen, K. Alternative semantic representations for zero-shot human action recognition. In Proceedings of the Joint European Conference on Machine Learning & Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Skopje, Macedonia, 18–22 September 2017.

- Qin, J.; Wang, Y.; Liu, L.; Chen, J.; Shao, L. Beyond Semantic Attributes: Discrete Latent Attributes Learning for Zero-Shot Recognition. IEEE Signal Process. Lett. 2016, 23, 1667–1671.

- Tong, B.; Klinkigt, M.; Chen, J.; Cui, X.; Kong, Q.; Murakami, T.; Kobayashi, Y. Adversarial Zero-Shot Learning with Semantic Augmentation. In Proceedings of the National Conference on Artificial Intelligence (AAAI), San Francisco, CA, USA, 4–9 February 2017.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Montréal, QC, Canada, 8–13 December 2014.

- Liu, H.; Sun, F.; Fang, B.; Guo, D. Cross-Modal Zero-Shot-Learning for Tactile Object Recognition. IEEE Trans. Syst. Man Cybern. Syst. 2018.

- Lara, O.D.; Labrador, M.A. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

- Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. 2014, 46, 33.

- Mukhopadhyay, S.C. Wearable sensors for human activity monitoring: A review. IEEE Sens. J. 2015, 15, 1321–1330.

- Guan, X.; Raich, R.; Wong, W.K. Efficient Multi-Instance Learning for Activity Recognition from Time Series Data Using an Auto-Regressive Hidden Markov Model. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016.

- Bulling, A.; Ward, J.A.; Gellersen, H. Multimodal recognition of reading activity in transit using body-worn sensors. ACM Trans. Appl. Percept. 2012, 9, 2.

- Adams, R.J.; Parate, A.; Marlin, B.M. Hierarchical Span-Based Conditional Random Fields for Labeling and Segmenting Events in Wearable Sensor Data Streams. In Proceedings of the International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016.

- Zheng, Y.; Wong, W.K.; Guan, X.; Trost, S. Physical Activity Recognition from Accelerometer Data Using a Multi-Scale Ensemble Method. In Proceedings of the Innovative Applications of Artificial Intelligence Conference (IAAI), Bellevue, WA, USA, 14–18 July 2013.

- Yang, J.; Nguyen, M.N.; San, P.P.; Li, X.L.; Krishnaswamy, S. Deep convolutional neural networks on multichannel time series for human activity recognition. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Buenos Aires, Argentina, 25–31 July 2015.

- Jiang, W.; Yin, Z. Human activity recognition using wearable sensors by deep convolutional neural networks. In Proceedings of the ACM International Conference on Multimedia (MM), Brisbane, Australia, 26–30 October 2015.

- Ronao, C.A.; Cho, S.B. Deep convolutional neural networks for human activity recognition with smartphone sensors. In Proceedings of the International Conference on Neural Information Processing (ICONIP), Istanbul, Turkey, 9–12 November 2015.

- Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

- Hammerla, N.Y.; Halloran, S.; Ploetz, T. Deep, convolutional, and recurrent models for human activity recognition using wearables. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), New York, NY, USA, 9–15 July 2016.

- Wang, W.; Miao, C.; Hao, S. Zero-shot human activity recognition via nonlinear compatibility based method. In Proceedings of the International Conference on Web Intelligence (WI), Leipzig, Germany, 23–26 August 2017.

- Al-Naser, M.; Ohashi, H.; Ahmed, S.; Nakamura, K.; Akiyama, T.; Sato, T.; Nguyen, P.; Dengel, A. Hierarchical Model for Zero-shot Activity Recognition using Wearable Sensors. In Proceedings of the International Conference on Agents and Artificial Intelligence (ICAART), Madeira, Portugal, 16–18 January 2018.

- Xian, Y.; Schiele, B.; Akata, Z. Zero-Shot Learning—The Good, the Bad and the Ugly. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Kumar Verma, V.; Arora, G.; Mishra, A.; Rai, P. Generalized Zero-Shot Learning via Synthesized Examples. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Snell, J.; Swersky, K.; Zemel, R. Prototypical networks for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017.

Sample Availability: The experimental data used in this study are available from the authors at http://projects.dfki.uni-kl.de/zsl/data/.

| ID | Pose | Variation | Involved Body Joint |

|---|---|---|---|

| 1 | Standing | no big variation | - |

| 2 | Sitting | hands on a table, on knees, or straight down | elbows, hands |

| 3 | Squatting | hands holding on to something, on knees, or straight down | elbows, hands |

| 4, 5 | Raising arm (L, R) | a hand on hip, or straight down | elbow, hand |

| 6, 7 | Pointing (L, R) | a hand on hip, or straight down | elbow, hand |

| 8 | Folding arm | wrist curled or straight, hands clenched or normal | wrist, hands |

| 9 | Deep breathing | head up or front | head |

| 10 | Stretching up | head up or front | head |

| 11 | Stretching forward | waist straight or half-bent | waist |

| 12 | Waist bending | no big variation | - |

| 13, 14 | Waist twisting (L, R) | head left (right) or front, arms down or left (right) | head, shoulders, elbows |

| 15, 16 | Heel to back (L, R) | a hand holding on to something, straight down, or stretched horizontally | shoulder, elbow, hand |

| 17, 18 | Stretching calf (L, R) | head front or down | head |

| 19 | Boxing | head front or down | head |

| 20 | Baseball hitting | head left or front | head |

| 21 | Skiing | head front or down | head |

| 22 | Thinking | head front or down, wrist reverse curled or normal, hand clenched or normal | head, wrist, hands |

| Joint | Type | Value |

|---|---|---|

| head | classification | up, down, left, right, front |

| shoulder | classification | up, down, left, right, front |

| elbow | regression | 0 (straight)–1 (bend) |

| wrist | regression | 0 (reverse curl)–1 (curl) |

| hand | classification | normal, grasp, pointing |

| waist | classification | straight, bend, twist-L, twist-R |

| hip joint | regression | 0 (straight)–1 (bend) |

| knee | regression | 0 (straight)–1 (bend) |
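To make the table above concrete, the sketch below turns one joint attribute into a numeric vector: classification attributes become one-hot vectors and regression attributes stay scalars in [0, 1]. The one-hot layout and the name `encode_attribute` are assumptions for illustration; the source does not state how the networks of Section 4.1 encode their outputs.

```python
from typing import Dict, List, Union

# Value sets copied from the table above; the one-hot layout is an assumption.
CATEGORICAL: Dict[str, List[str]] = {
    "head": ["up", "down", "left", "right", "front"],
    "shoulder": ["up", "down", "left", "right", "front"],
    "hand": ["normal", "grasp", "pointing"],
    "waist": ["straight", "bend", "twist-L", "twist-R"],
}
REGRESSION = {"elbow", "wrist", "hip joint", "knee"}


def encode_attribute(joint: str, value: Union[str, float]) -> List[float]:
    """Encode one joint attribute as a list of floats."""
    if joint in CATEGORICAL:
        choices = CATEGORICAL[joint]
        if value not in choices:
            raise ValueError(f"{value!r} is not a valid value for {joint}")
        # One-hot: 1.0 at the position of the chosen value, 0.0 elsewhere.
        return [1.0 if choice == value else 0.0 for choice in choices]
    if joint in REGRESSION:
        x = float(value)
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"{joint} must lie in [0, 1], got {x}")
        return [x]
    raise KeyError(f"unknown joint: {joint}")


head_front = encode_attribute("head", "front")
knee_half_bent = encode_attribute("knee", 0.5)
```

Here `head_front` is `[0.0, 0.0, 0.0, 0.0, 1.0]` and `knee_half_bent` is `[0.5]`. Concatenating such pieces over all joints would give one fixed-length attribute vector per pose.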

| ID | Pose\Joint | He | S(L) | S(R) | E(L) | E(R) | Wr(L) | Wr(R) | Ha(L) | Ha(R) | Wa | HJ(L) | HJ(R) | K(L) | K(R) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Standing | F | D | D | 0 | 0 | 0.5 | 0.5 | N | N | S | 0 | 0 | 0 | 0 |

| 2 | Sitting | F | D | D | 0 | 0 | 0.5 | 0.5 | N | N | S | 0.5 | 0.5 | 0.5 | 0.5 |

| 3 | Squatting | F | D | D | 0 | 0 | 0.5 | 0.5 | N | N | S | 1 | 1 | 1 | 1 |

| 4 | Raising arm (L) | F | U | D | 0 | 0 | 0.5 | 0.5 | N | N | S | 0 | 0 | 0 | 0 |

| 5 | Raising arm (R) | F | D | U | 0 | 0 | 0.5 | 0.5 | N | N | S | 0 | 0 | 0 | 0 |

| 6 | Pointing (L) | F | F | D | 0 | 0 | 0.5 | 0.5 | P | N | S | 0 | 0 | 0 | 0 |

| 7 | Pointing (R) | F | D | F | 0 | 0 | 0.5 | 0.5 | N | P | S | 0 | 0 | 0 | 0 |

| 8 | Folding arm | F | D | D | 0.5 | 0.5 | 0.5 | 0.5 | N | N | S | 0 | 0 | 0 | 0 |

| 9 | Deep breathing | F | L | R | 0 | 0 | 0.5 | 0.5 | N | N | S | 0 | 0 | 0 | 0 |

| 10 | Stretching up | F | U | U | 0 | 0 | 0 | 0 | N | N | S | 0 | 0 | 0 | 0 |

| 11 | Stretching forward | F | F | F | 0 | 0 | 0 | 0 | N | N | S | 0 | 0 | 0 | 0 |

| 12 | Waist bending | F | D | D | 0 | 0 | 0.5 | 0.5 | N | N | B | 0 | 0 | 0 | 0 |

| 13 | Waist twisting (L) | L | L | L | 0 | 0.5 | 0.5 | 0.5 | N | N | TwL | 0 | 0 | 0 | 0 |

| 14 | Waist twisting (R) | R | R | R | 0.5 | 0 | 0.5 | 0.5 | N | N | TwR | 0 | 0 | 0 | 0 |

| 15 | Heel to back (L) | F | D | D | 0 | 0 | 0.5 | 0.5 | G | N | S | 0 | 0 | 1 | 0 |

| 16 | Heel to back (R) | F | D | D | 0 | 0 | 0.5 | 0.5 | N | G | S | 0 | 0 | 0 | 1 |

| 17 | Stretching calf (L) | F | F | F | 0 | 0 | 0 | 0 | N | N | S | 0 | 0.3 | 0 | 0.3 |

| 18 | Stretching calf (R) | F | F | F | 0 | 0 | 0 | 0 | N | N | S | 0.3 | 0 | 0.3 | 0 |

| 19 | Boxing | F | D | D | 1 | 1 | 0.5 | 0.5 | G | G | S | 0 | 0 | 0 | 0 |

| 20 | Baseball hitting | L | D | D | 0.5 | 0.5 | 0.5 | 0.5 | G | G | S | 0.5 | 0 | 0.5 | 0 |

| 21 | Skiing | F | D | D | 0.5 | 0.5 | 0.5 | 0.5 | G | G | S | 0.3 | 0.3 | 0.3 | 0.3 |

| 22 | Thinking | F | D | D | 0.5 | 1 | 0.5 | 0 | N | N | S | 1 | 1 | 0.5 | 0.5 |

| ID | Pose | He | S(L) | S(R) | E(L) | E(R) | Wr(L) | Wr(R) | Ha(L) | Ha(R) | Wa | HJ(L) | HJ(R) | K(L) | K(R) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Standing | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | Sitting | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 3 | Squatting | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 4 | Raising arm (L) | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 |

| 5 | Raising arm (R) | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 |

| 6 | Pointing (L) | 1 | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 |

| 7 | Pointing (R) | 1 | 1 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 1 |

| 8 | Folding arm | 1 | 1 | 1 | 1 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 9 | Deep breathing | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 10 | Stretching up | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 11 | Stretching forward | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| 12 | Waist bending | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 13 | Waist twisting (L) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 14 | Waist twisting (R) | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 15 | Heel to back (L) | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 16 | Heel to back (R) | 1 | 0 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 | 1 |

| 17 | Stretching calf (L) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 18 | Stretching calf (R) | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 19 | Boxing | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 20 | Baseball hitting | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 21 | Skiing | 0 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 1 | 1 |

| 22 | Thinking | 0 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 | 1 |
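One way a 0/1 importance table like the one above could enter a nearest-neighbour classifier is as per-attribute weights in the distance. The sketch below is an illustrative assumption, not the formulation of Section 4.2.2: it covers only four numeric attributes (E(L), E(R), K(L), K(R)), takes the Sitting and Folding arm values from the two tables above, and uses the hypothetical names `weighted_distance` and `nearest_pose`.

```python
from typing import Dict, Sequence


def weighted_distance(
    x: Sequence[float], prototype: Sequence[float], importance: Sequence[float]
) -> float:
    """Squared Euclidean distance with one weight per attribute."""
    return sum(w * (a - b) ** 2 for a, b, w in zip(x, prototype, importance))


def nearest_pose(
    x: Sequence[float],
    prototypes: Dict[str, Sequence[float]],
    importances: Dict[str, Sequence[float]],
) -> str:
    """Return the pose whose definition is closest under its own weights."""
    return min(
        prototypes,
        key=lambda name: weighted_distance(x, prototypes[name], importances[name]),
    )


# Attribute order: E(L), E(R), K(L), K(R).
prototypes = {"Sitting": [0.0, 0.0, 0.5, 0.5], "FoldingArm": [0.5, 0.5, 0.0, 0.0]}
with_importance = {"Sitting": [0, 0, 1, 1], "FoldingArm": [1, 1, 1, 1]}
all_equal = {"Sitting": [1, 1, 1, 1], "FoldingArm": [1, 1, 1, 1]}

# Hypothetical observation: seated with bent elbows (e.g., hands on a table).
observation = [0.6, 0.6, 0.3, 0.3]
label_weighted = nearest_pose(observation, prototypes, with_importance)
label_unweighted = nearest_pose(observation, prototypes, all_equal)
```

With equal weights the bent elbows pull the observation towards Folding arm, whereas ignoring the elbows for Sitting (importance 0) lets the knee angles decide and yields Sitting. A pose with fewer important attributes accumulates fewer distance terms, so a real implementation would likely need some normalisation; that choice is left open here.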

| Pose | DAP [7] | NN w/o AI | NN w/random AI | NN w/AI (Proposed) |

|---|---|---|---|---|

| Standing | 0.7148 | 0.6953 | 0.3639 | 0.6944 |

| Sitting | 0.4072 | 0.6796 | 0.4567 | 0.7438 |

| Squatting | 0.8922 | 0.9745 | 0.7637 | 1.0000 |

| RaiseArmL | 1.0000 | 0.9791 | 0.4212 | 0.9854 |

| RaiseArmR | 0.9973 | 0.9662 | 0.4250 | 0.9522 |

| PointingL | 0.9937 | 0.9541 | 0.4090 | 0.9721 |

| PointingR | 0.9629 | 0.9991 | 0.4688 | 0.9947 |

| FoldingArm | 0.4773 | 0.5345 | 0.2206 | 0.4387 |

| DeepBreathing | 0.9061 | 0.9734 | 0.4457 | 0.9804 |

| StretchingUp | 0.9913 | 0.9861 | 0.5947 | 1.0000 |

| StretchingForward | 0.3703 | 0.8778 | 0.3625 | 0.8707 |

| WaistBending | 1.0000 | 0.9735 | 0.3795 | 0.9612 |

| WaistTwistingL | 0.4241 | 0.2171 | 0.0995 | 0.2642 |

| WaistTwistingR | 0.3639 | 0.1547 | 0.1150 | 0.2928 |

| HeelToBackL | 1.0000 | 1.0000 | 0.5458 | 0.9787 |

| HeelToBackR | 0.9931 | 0.8710 | 0.4042 | 0.9729 |

| StretchingCalfL | 0.6647 | 0.5463 | 0.4160 | 0.8068 |

| StretchingCalfR | 0.9570 | 0.5956 | 0.4498 | 0.8897 |

| Boxing | 0.6549 | 0.6748 | 0.5434 | 0.7494 |

| BaseballHitting | 0.5856 | 0.6957 | 0.4328 | 0.7739 |

| Skiing | 0.5277 | 0.7977 | 0.6334 | 0.7830 |

| Thinking | 0.8241 | 0.7801 | 0.6420 | 0.8230 |

| avg. | 0.7595 | 0.7694 | 0.4361 | 0.8149 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ohashi, H.; Al-Naser, M.; Ahmed, S.; Nakamura, K.; Sato, T.; Dengel, A. Attributes’ Importance for Zero-Shot Pose-Classification Based on Wearable Sensors. Sensors 2018, 18, 2485. https://doi.org/10.3390/s18082485