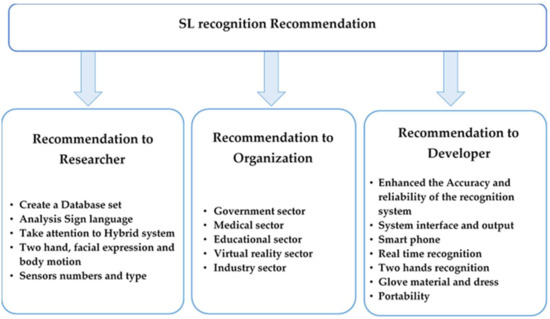

Abstract

Loss of the ability to speak or hear exerts psychological and social impacts on the affected persons due to the lack of proper communication. Multiple and systematic scholarly interventions that vary according to context have been implemented to overcome disability-related difficulties. Sign language recognition (SLR) systems based on sensory gloves are significant innovations that aim to procure data on the shape or movement of the human hand. Innovative technology for this matter is mainly restricted and dispersed. The available trends and gaps should be explored in this research approach to provide valuable insights into technological environments. Thus, a review is conducted to create a coherent taxonomy to describe the latest research divided into four main categories: development, framework, other hand gesture recognition, and reviews and surveys. Then, we conduct analyses of the glove systems for SLR device characteristics, develop a roadmap for technology evolution, discuss its limitations, and provide valuable insights into technological environments. This will help researchers to understand the current options and gaps in this area, thus contributing to this line of research.

1. Introduction

According to the statistics of the World Federation of the Deaf and the World Health Organization, approximately 70 million people in the world are deaf–mute. A total of 360 million people are deaf, and 32 million of these individuals are children [1]. The majority of speech- and hearing-impaired people cannot read or write in regular languages. Sign language (SL) is the native language used by the deaf and mute to communicate with others. SL relies primarily on gestures rather than voice to convey meaning, and it combines the use of finger shapes, hand movements, and facial expressions [2]. This language has the following main defects: a lot of hand movements, a limited vocabulary, and learning difficulties. Furthermore, SL is unfamiliar to those who are not deaf and mute, and disabled people face serious difficulties in communicating with able individuals. This communication barrier adversely affects the lives and social relationships of deaf people [3]. Thus, dumb people need to use a translator device to communicate with able individuals. This can be achieved by developing a glove equipped with sensors and an electronic circuit. Several benefits of using this device are that no complex data processing is needed [4]; there are no limitations on movements such as sitting behind a desk or chair; hand shape recognition is not affected by the background condition [5,6]; it is a lightweight, SLR-based glove device that can be carried to make mobility easy and comfortable [7,8]; and it is a recognition system that can be employed for learning SL for both dumb and able people [9]. Furthermore, numerous kinds of applications are currently involved in gesture recognition systems such as SLR, substitutional computer interfaces, socially assistive robotics, immersive gaming, virtual objects, remote control, medicine-health care, gesture recognition of hand/body language, etc. [10,11,12,13,14,15]. These benefits are described in detail in Section 5.1. The goal of this study is to survey glove systems used for SLR to obtain knowledge on current systems in this field. In addition, a roadmap is presented for technology evolution; it exhibits features of SLR systems and discusses the limitations of current technology. This objective can be achieved by answering the following six research questions: (1) How many types of relevant studies are published on glove-based SLR? (2) To what extent have SLR systems been elaborated on in the preliminary studies in terms of detail and technologies? (3) Which types of movements have been recognized in previous studies? (4) What types of sensors have been used in the primary publications? (5) What languages did the glove systems develop for SLR in the preliminary studies? (6) How were the effectiveness and efficiency of the SLR systems evaluated in the initial studies? The aim was to understand the options and gaps available in this field to support researchers by providing valuable insights into technological environments. The steps taken in this study to provide Systematic Literature Review and Systematic Mapping Study are in accordance with the steps explained in the David Moher paper [16] and other research papers [11,17,18,19,20,21].

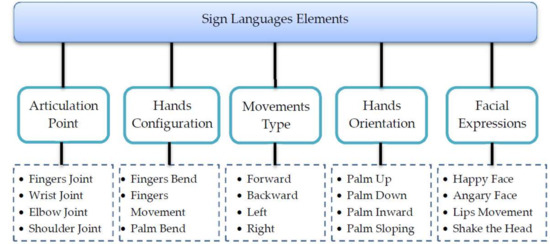

SL is a visual–spatial language based on positional and visual components, such as the shape of fingers and hands, the location and orientation of the hands, and arm and body movements. These components are used together to convey the meaning of an idea. The phonological structure of SL generally has five elements (Figure 1). Each gesture in SL is a combination of five building blocks. These five blocks represent the valuable elements of SL and can be exploited by automated intelligent systems for SL recognition (SLR) [22].

Figure 1.

The essential elements related to sign language gesture formation.

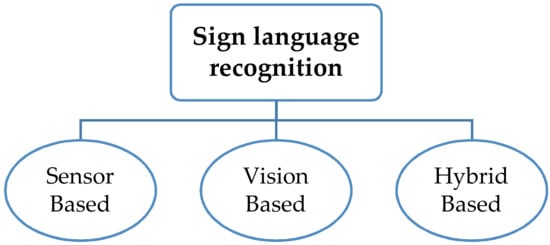

Scholarly interventions to overcome disability-related difficulties are multiple and systematic and vary according to the context. One of the important interventions is SLR systems that are utilized to translate the signs of SL into text or speech to establish communication with individuals who do not know these signs [18]. SLR systems based on the sensory glove are among the most significant endeavors aimed at procuring data for the motion of human hands. Three approaches (Figure 2), namely, vision based, sensor-based, and a combination of the two, are adopted to capture hand configurations and recognize the corresponding meanings of gestures [23].

Figure 2.

Sign language recognition approaches.

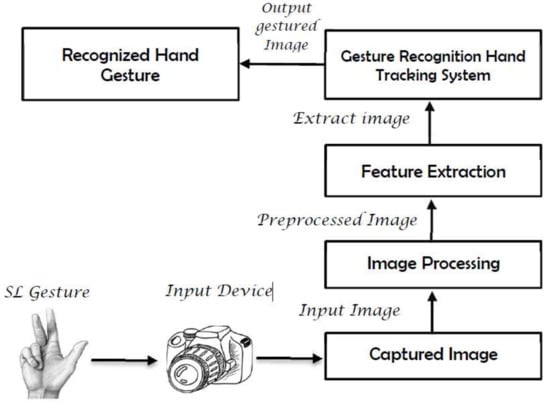

Vision-based systems use cameras as primary tools to obtain the necessary input data (Figure 3). The main advantage of using a camera is that it removes the need for sensors in sensory gloves and reduces the building costs of the system. Cameras are quite cheap, and most laptops use a high specification camera because of the blur caused by a web camera. However, despite the high specification camera, which most smartphones possess [18], there are various problems such as the limited field of view of the capturing device, high computational costs [24,25], and the need for multiple cameras to obtain robust results (due to problems of depth and occlusion [26,27]); these issues are inherent to this system and render the entire system futile for the development of real-time recognition applications. In [28], two new feature extraction techniques of Combined Orientation Histogram and Statistical (COHST) Features and Wavelet Features are presented for the recognition of static signs of numbers 0 to 9, of American Sign Language (ASL). System performance is measured by extracting four different features—Orientation Histogram, Statistical Measures, COHST Features, and Wavelet Features—using a neural network. The best performance of the system reaches 98.17% accuracy with Wavelet features. In [29], a system using Wavelet transform and neural networks (NN) is presented to recognize the static gestures of alphabets in Persian sign language (PSL). It is able to recognize 32 selected PSL alphabets with an average recognition rate of 94.06%. In [30], ASL recognition is performed using Hough transform and neural NN. Here, only 20 different signs of alphabets and numbers were used. The performance of the system was measured by varying the threshold level for Canny edge detection and the number of samples for each sign used. The average recognition rate obtained was 92.3% for the threshold value of 0.25. In [31], a vision-based system with B-Spline Approximation and a support vector machines (SVM) classifier were used for the recognition of Indian Sign Language Alphabets and Numerals. Fifty samples of each alphabet from A–Z and numbers from 0–5 were used, and the system achieved an average accuracy of 90% and 92% for alphabets and numbers, respectively. In [32], a 3D model of the hand posture is generated from two 2D images from two perspectives that are weighted and linearly combined to produce single 3D features aimed at classifying 50 isolated ArsL words using a Hybrid pulse-coupled neural network (PCNN) as the feature generator technique, followed by nondeterministic finite automaton (NFA). Then, the ‘‘best-match” algorithm is used to find the most probable meaning of a gesture. The recognition accuracy reaches 96%. In [33], a combination of local binary patterns (LBP) and principal component analysis (PCA) is used to extract features that are fed into a Hidden Markov Model (HMM) to recognize a lexicon of 23 isolated ArSL words. Occlusion is not resolved, as any occlusion states are handled as one object, and recognition is carried out. The system achieves a recognition rate of 99.97% in signer-dependent mode. A dynamic skin detector based on the face color tone is used in [34] for hand segmentation. Then, a skin-blob tracking technique is used to identify and track the hands. A dataset of 30 isolated words is used. The proposed system has a recognition rate of 97%. Different transformation techniques (viz. Fourier, Hartley, and Log-Gabor transforms) were used in [27], for the extraction and description of features from an accumulation of sign frames into a single image of an Arabic Sign language dataset. Three transformation techniques were applied in total, as well as slices from an accumulation of sign frames. The system was tested using three classifiers: k-nearest neighbor (KNN), SVM, and. Overall, the system’s accuracy reached over 98% for Hartley transform, which is comparable with other works using the same dataset.

Figure 3.

A flow chart of the processing steps used in the vision-based system for SLR.

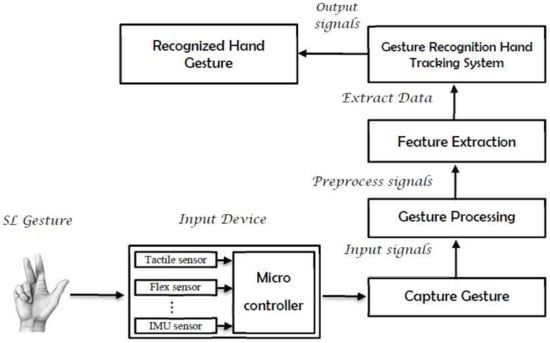

The use of a certain type of instrumented gloves that are fitted with various sensors, namely, flexion (or bend) sensors, accelerometers (ACCs), proximity sensors, and abduction sensors, is an alternative approach with which to acquire gesture-related data (Figure 4). These sensors are used to measure the bend angles for fingers, the abduction between fingers, and the orientation (roll, pitch, and yaw) of the wrist. Degrees of freedom (DoF) that can be realized using such gloves vary from 5 to 22, depending on the number of sensors embedded in the glove. A major advantage of glove-based systems over vision-based systems is that gloves can directly report relevant and required data (degree of bend, pitch, etc.) in terms of voltage values to the computing device [35], thus eliminating the need to process raw data into meaningful values. By contrast, vision-based systems need to apply specific tracking and feature extraction algorithms to raw video streams, thereby increasing the computational overhead [10,36]. Later, we will review the articles related to this approach in detail.

Figure 4.

The main phases with regard to collecting and recognizing SL gestures data using the glove-based system.

The third method of collecting raw gesture data employs a hybrid approach that combines glove- and camera-based systems. This approach uses mutual error elimination to enhance the overall accuracy and precision. However, not much work has been carried out in this direction due to the cost and computational overheads of the entire setup. Nevertheless, augmented reality systems produce promising results when used with hybrid tracking methodology [3].

2. Materials and Methods

Painstaking and intensive endeavors to find realistic and feasible solutions to overcome communication obstacles are major challenges encountered by the deaf and mute. Therefore, the implementation of a system that identifies SL in its software and hardware branches has been emphasized.

2.1. Pertaining to System Materials

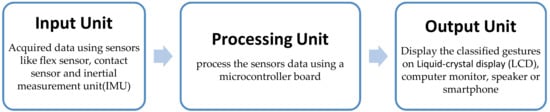

With regard to hardware components, the glove-based recognition system is composed of three main units (Figure 5): input, processing, and output.

Figure 5.

The main hardware components of the glove-based system.

2.1.1. Input Unit

Owing to scientific and technical developments in the field of electronic circuits, sensors have attracted attention, which have resulted in investments in sensor features in many small and large applications. The sensor is the main player in measuring hand data in terms of bending (shape), movement, rotation, and position of the hand.

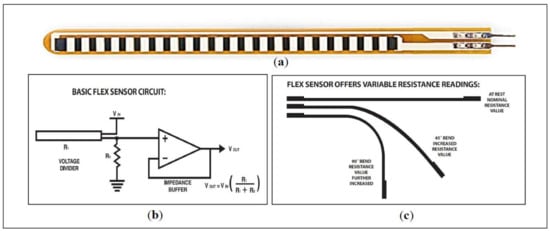

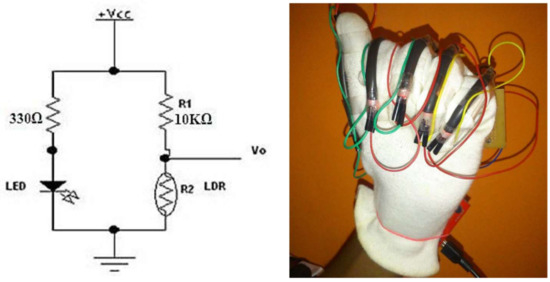

a. Sensors Used to Detect Finger Bending

The most prominent movement that can be performed by the four fingers (pinkie, ring, middle, and index) is bending towards the palm and then returning to the initial position. The thumb has unique advantages over the other fingers, thus enabling it to move freely in six degrees of freedom (DOF). In general, the predominant movement in SL related to fingers is bending. Finger tilt can be detected by using different methods, as shown in the literature. Flex sensor (Figure 6), which determines the amount of finger curvature used by a wide number of researchers and developers, is the most common of these kinds of sensors [21,37,38,39,40]. The Flex sensor technology is based on resistive carbon elements. When the substrate is bent, the sensor produces a resistance output correlated to the bend radius—the smaller the radius, the higher the resistance value. Thus, the resistance of the flex sensor increases as the body of the component bends. The flex sensor is very thin and light weight, so it is also very comfortable, and the most common available sizes are 2.2 inches and 4.5 inches. The price of the flex sensor is between $9 and $15, depending on the size. The optical sensor used to measure the angle of the finger curvature—in order to determine its shape by the amount of light passing through the channel—depends on the optic technology [41,42,43]. Optical sensors are electronic detectors that convert light, or a change in light, into an electronic signal. Furthermore, the combined pair of light-emitting diode-light dependent resistor (LED-LDR) is used (Figure 7), but not frequently, to detect bend of the finger. LDR is a component that has a (variable) resistance that changes with the light intensity that falls upon it [44]. Both of these optic technologies work on the measure of light intensity so that when the finger is straight, the density of received light will be very significant, and the opposite will be true when the finger bends. Both are suitable for handicapped individuals whose fingers can barely perform even very small motions.

Figure 6.

(a) Flex sensor, (b) flex bend levels, and (c)voltage divider circuit [2].

Figure 7.

A circuit diagram of LED-LDR and the sensors’ positions on the glove [37].

A tactile sensor (also known as a force sensitive resistor) is a robust polymer-thick film device whose resistance changes when a force is applied (Figure 8). This sensor can measure force between 1 kN to 100 kN. The tactile sensor resistance changes as more pressure or force is applied. When there is no pressure applied, the sensor looks like an open circuit, and as the pressure increases, the resistance decreases [35]. Thus, the tactile sensor is employed to calculate the amount of force placed on the finger, by which it can be determined whether the finger is either curved or straight [39,45]. A tactile sensor has a round, 0.16 inch, 0.5 inch, and 1 inch area diameter. The average cost of a tactile sensor is between $6 and $25.

Figure 8.

Tactile Sensor of 0.5 inch in size [8].

Another principle was exploited for the purpose of figuring out the shape of the finger by an applied magnetic field and to determine the voltage variation over an electrical conductor. Accordingly, the above could be achieved through the use of Hall Effect Magnetic Sensor (HEMS). The unipolar Hall Effect sensor (MH183) used can detect the south poles of the magnet and is readily available. Effect sensors are placed on the tips of the fingers, and the magnet is placed on the palm with the south pole facing the top, as shown in Figure 9. When the South Pole is brought to the front face of the Hall sensor, it generates a 0.1–0.4V output. For the same purpose, other articles relied on the use of the ACC sensor technique to ascertain the shape of the fingers [4,11,14,46]. The sensor is lightweight with a high level of recognition accuracy, and has a low manufacturing cost.

Figure 9.

The sensory glove consists of Four Hall sensors on the tip of the four fingers [36].

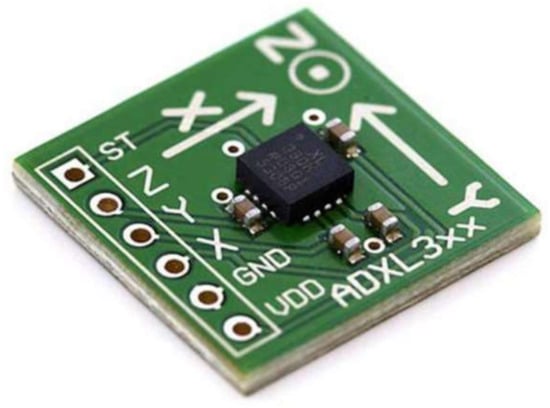

b. Sensors Used to Detect Movement and the Orientation of the Hand

Considering that SL postures are made up of hand and wrist movements, they should be considered. Despite the benefits of using sensors to determine the shape of the finger, hand movements cannot be distinguished by these sensors. The features of the ACC sensor enable it to distinguish the movement and rotation of the wrist as another factor in addition to its capability to determine the shape of the finger. Therefore, the three-axis ACC that supplies the differences in acceleration along every axis is used to capture the orientation and movement of the wrist to correctly represent the important function of a sensor glove. The ADXL335 (Adafruit Industries, New York, NY, USA) (Figure 10) is a thin, small, low power, complete 3-axis accelerometer with signal conditioned voltage outputs. It measures acceleration with a minimum full-scale range 3g. This device measures the static acceleration of gravity in tilt-sensing applications and dynamic acceleration resulting from motion, shock, or vibration. The ADXL335 contains a polysilicon surface micro machined structure built on top of a silicon wafer. Polysilicon springs suspend the structure over the surface of the wafer and provide resistance against acceleration forces. A differential capacitor, consisting of independent fixed plates attached to the moving mass, measures the deflection of the structure. Acceleration unbalances the capacitor, which, in turn, results in a sensor output with amplitude proportional to the acceleration experienced. The price varies from $9 to $24 depending on ACC version.

Figure 10.

The ADXL335 3-axis ACC with a three-output analog pin x, y, and z [47].

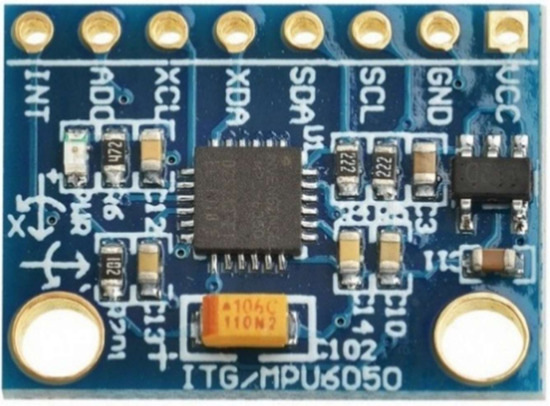

Combining a 3-axis ACC and a 3-axis gyroscope on the same board makes it suited for measuring tracked motion; the device presents data regarding accelerations in all three directions plus rotations around each axis [42,48,49]. Gyroscopes measure angular velocity and how fast something is spinning about an axis. If one is trying to monitor the orientation of an object in motion, an accelerometer may not provide enough information to know exactly how it is oriented. Unlike accelerometers, gyros are not affected by gravity, so they make a great complement to each other. One usually sees angular velocity represented in units of rotations per minute (RPM), or degrees per second (°/s). The three axes of rotation are either referenced as x, y, and z, or roll, pitch, and yaw. The IMU namely MPU6050 (InvenSense, San Jose, CA, USA) (Figure 11) is a 6 degree of freedom chip from Invensense company, containing a tri axis accelerometer and a tri axis gyroscope. It is operated off a 3.3V supply and communicates via I2C serial protocol at a maximum speed of 400 kHz. The accelerometer measurement is read by the microcontroller unit (MCU), using 16 -bitAnalog to Digital Conversion (ADC). The price of MPU6050 is about $30.

Figure 11.

The six DoF IMU, MPU6050 chip consists of a 3-axis ACC and 3-axis gyroscope [50].

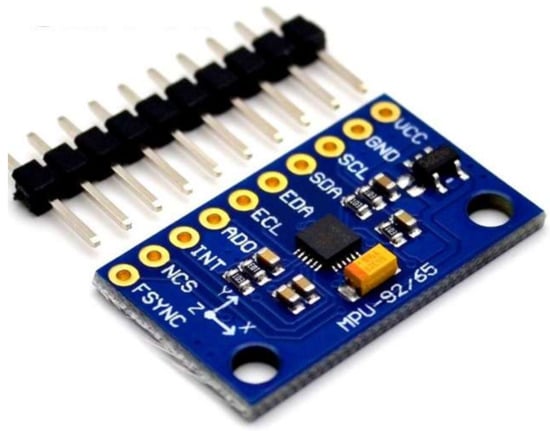

Furthermore, adding a 3-axis magnetometer to a 3-axis accelerometer and a 3-axis gyroscope will obtain a 9-axis inertial measurement unit (IMU) sensor which provides 9DoF plus roll, yaw, and pitch information during motion and orientation of the hand. Amagnetic field sensor is a small-scale microelectromechanical systems(MEMS) device for detecting and measuring magnetic fields (Magnetometer) [46,51,52]. The IMU version MPU-9250 (Figure 12) replaces the popular end of Life (EOL) MPU-9150 and decreases power consumption by 44 percent. According to InvenSense, “Gyro noise performance is 3× better, and compass full-scale range is over 4× better than competitive offerings.” The MPU-9250 uses 16-bit Analog-to-Digital Converters (ADCs) for digitizing all nine axes, making it a very stable 9 Degrees of Freedom board. The price of the MPU-9250 is about $15.

Figure 12.

The 9 DoF IMU, MPU-9250 breakouts [53].

2.1.2. Processing Unit

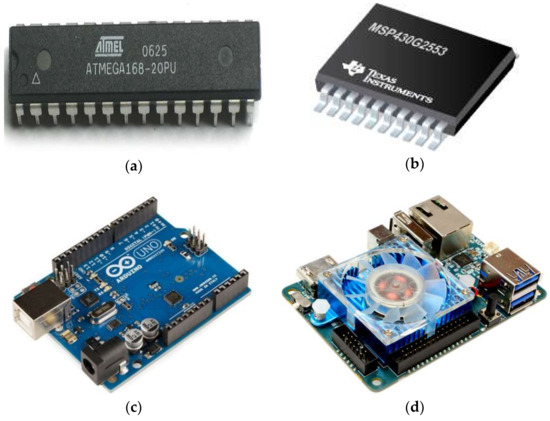

The microcontroller is the system’s mind that is accountable for gathering the data from the sensors provided by the glove and performing the required processing of these data to recognize and transfer the sign to the output port to be presented in the final stage. A high-performance microcontroller with a microchip with 8-bit AVR microcontrollers based on reduced instruction set computer (RISC), which combines 32KB in-system programming (ISP) flash memory with read–write capabilities called ATmega (Figure 13a), was used in [8,9,35,50]. A micro-controller MSP430G2553 (TEXAS INSTRUMENTS, Dallas, TX, USA), (Figure 13b) with an 8-channel, 10-bit (analogue to digital converter) ADC was used [37]. The central processor modules ARM7 and ARM9 are used by [54,55], respectively. Furthermore, as found in the literature, an open-source electronics platform called Arduino has been used. Several Arduino boards are available on the market such as Arduino Nano, Uno, Mega, etc.; for instance, Arduino Uno (Arduino, Italy), (Figure 13c) is based on the ATmega328P microcontroller and has 14 digital inputs/outputs, 6 analog inputs, a 16 MHz quartz crystal, and USB connection [6,8,21,37,56,57,58,59]. Odroid XU4 (Hardkernel2, Anyang, South Korea) (Figure 13d), produced by Hardkernel2, uses a Samsung Exynos5 Octa 5410 processor that consists of 4 CPU cores each with Cortex™-A15 cores; it was used for the development of the data glove [59].

Figure 13.

(a) ATmega microcontroller, (b) MSP430G2553 microcontroller, (c) Arduino Uno board, and (d) Odroid XU4 minicomputer.

2.1.3. Output Unit

Customarily, a user interacts with the device through output devices, so the output devices play an important role in achieving the best performance of devices implemented in the field of SLR. The main device adopted by researchers as the output was the screen of computer [3,4,35,40,48,60,61,62]. Other devices attracting the attention of researchers are the liquid-crystal display (LCD) [12,35,57,63], speaker [38,46,54,64], or both [6,51,55,65,66,67,68]. Ultimately, the smartphone is another alternative chosen for system output [9,42,48,59,69].

2.2. Gesture Learning Methods

Software, which is an essential component of every system, plays an important role in data processing in addition to the possibility of improving system outputs. The development of software for SLR systems is related to the methods used in the classification process to recognize gestures. One of the common direct methods to perform static posture recognition is prototype matching (also known as statistical template matching), which operates on the basis of statistics to determine closest match of acquired information values with pre-defined training samples called ‘templates’ [3]. In fact, this method is characterized by the lack of a need for complicated training processes or wide calibration, thus increasing its speed. From a pattern recognition standpoint, the artificial neural network (ANN) is the most popular method used for machine learning in the recognition field [67,70]. Therefore, it is possible to train this technique to distinguish both static and dynamic gestures, as well as posture classification, based on the data obtained from the data glove [41,43,54,70,71]. Long-term Fuzzy Logic has been used in many fields that need human decision-making; one of these areas is recognition of sign language [4,21,42,72]. Likewise, there is another useful algorithm; in fact, it falls within the scope of machine learning employed to provide accurate and less complicated classification through dimensionality reduction with improved clustering, which is known as Linear Discriminant Analysis (LDA) [46,61]. Hidden Markov Models (HMMs) is a popular technique that has shown its potential in numerous applications such as computer vision, speech recognition, molecular biology, and SLR [53,73,74,75,76]. Besides the HMM, the KNN is also used to classify hand gestures [63], and the KNN classifier with support vector machines (SVM) has been applied in posture classification in [77]. The KNN is applied in work on the recognition of ASL signs [40].

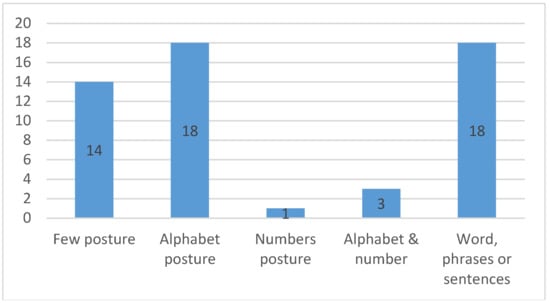

2.3. Training Datasets

The attention given to the sensory glove is due to its capability to capture the data required to photograph the shape and movement of the hand for the purpose of recognizing the gestures of SL. Despite the fact that SL has plenty of postures, many studies have concentrated on a selective set of postures that may be as small as some letters of the alphabet [21,44,47,51,78]; or the postures of the most frequently used words [37,41,45,55,56,72,77,79]; or a combination of the alphabet, numbers, and words [6] to perform their experiments and develop an SLR system. Others have contributed to the expansion of the database of gestures allocated for the purpose of developing a system to distinguish them and make them include either whole alphabets [4,11,35,46,50,57,64,65,70], numbers within the range 0 to 9 [10], or both, in the same system [39,42,69]. Furthermore, others contributed to their endeavor to distinguish certain words and phrases, even sentences, chosen to cover a wide range of real-life circumstances, such as family, shopping, education, sports, etc. [54,73,74,75,78,80,81,82,83]. Figure 14 illustrates the number of articles in each variety of the aforementioned gestures.

Figure 14.

Number of articles on each variety of gestures.

Table 1 shows further details regarding the database used in most of the previous studies, such as the type of gestures used with the frequency of conducting these gestures. In addition, the table clarifies who created the signs alongside the number of performers. The total numbers of samples used in the experiments are also given.

Table 1.

The most important details with regard to training datasets used in previous work.

3. The Analysis Results

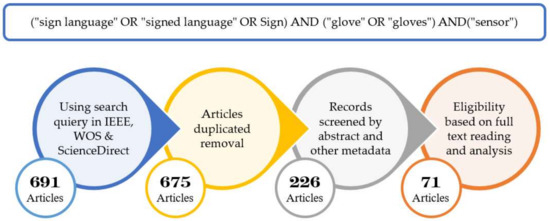

The databases, namely, Institute of Electrical and Electronics Engineers (IEEE), Web of Science (WOS), and ScienceDirect, have been adopted in this study, as they cover high-quality scientific publications (e.g., peer-reviewed journals, articles, conference proceedings, and workshop papers) related to computer science. Likewise, as suggested by [66], 691 articles, including 590 from ScienceDirect, 53 from IEEE Xplore, and 48 from Web of Science, were obtained as primary research findings. Sixteen duplicate records were eliminated through manual removal, thus yielding 675 unique papers for consideration. Screening of abstracts and metadata led to the further exclusion of 449 papers because they did not match any of our search queries. A total of 226 studies remained for full-text analysis. The analysis excluded 155 other records because of the failure to meet the inclusion criteria. Studies should be relevant to the development of an SLR system using a sensory glove, and should have accessible, online, full-text versions. Moreover, only publications written entirely in English have been accepted. Figure 15 shows the article selection process adopted in this study.

Figure 15.

The searches query and article selection processes adopted in this study.

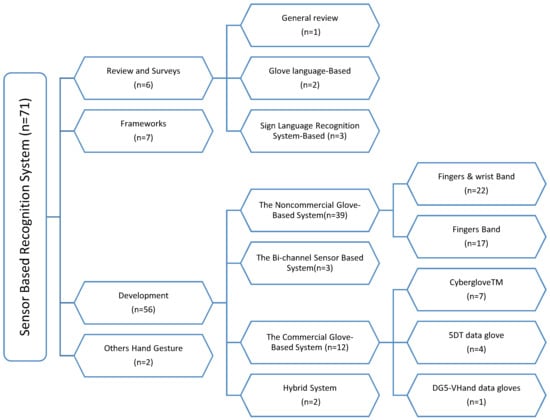

In the final screening, 71 studies that satisfy the inclusion criteria were identified. The papers included in the identification of important items for each study were read numerous times, and all of this information was listed in a single Excel file. This information concerned the type of article, motivation, problems, obstacles, and challenges. Also identified was the deaf language targeted by the study with a focus on the types of sensors and tools used, as well as the methods and algorithms that were adopted in these studies. Most of the records (78.87%; 56/71) are development papers that refer to genuine endeavors to develop a recognition system or share expertise in doing so. Other papers (9.85%; 7/71) are proposals for models or frameworks to resolve the predicament of deaf and dumb people. A few articles (8.45%; 6/71) demonstrate existing work on recognizing a specific SL or general recognition system to investigate the desired features and disadvantages and the applications of new assistance tools. The smallest portion of studies (2.81%; 2/71) includes articles that focus on hand gesture detection and its applications in SLR. These patterns were observed to create four general categories of articles (review and survey, development, framework, and other hand gestures) and answer the questions that were initially posted. Thereafter, the groups were refined into the literature taxonomy (Figure 16). The main category was also divided into subcategories while ensuring that no overlap occurred. The observed categories are listed in the following sections.

Figure 16.

A taxonomy of literature concerning sensor-based sign language recognition.

3.1. Review and Survey Articles

The importance of review articles is well recognized among those who work in research that aims to absorb new phenomena, introduce them to the research community, and extract meta-statistics to understand the possibilities and effects of phenomena on instituting change. Despite the importance of these type of articles, among the 71 studies, only six belonged to the review and survey category, which consisted of three groups, namely, general review [84], SLR system-based [17,84,85], and glove-based [11,21]. General review papers provide a complete picture of the glove system used with an explanation of its applications. Moreover, this type of paper analyzes device characteristics, provides a roadmap for technology development, and addresses the limitations of existing technologies and trends in research boundaries. Articles related to the glove-based system review the development issues of several current systems that are used as an interpreter ofspecific SL to communicate with the deaf and dumb. Through these papers, a definite comparative approach is achieved for the selection of suitable technology and for the translator systems of a particular SL. A recognition-based system provides an overview of the research onSLR, analyzes the essential components of SL, captures several methods and the recognition techniques that may help to develop a sign language recognition system with a wide vocabulary, and highlights the strengths and weaknesses of the developed systems.

3.2. Development System for SL

The majority of studies (56/71 papers) obtained through the research process belonged to the development category. These articles were classified into different topics and applications. The selected studies were categorized into subcategories based on the types of gloves and sensors used.

3.2.1. Non-Commercial Glove-Based System

Broadly defined, a non-commercial glove sensor is an electronic component, unit, or subsystem that distinguishes hand movements or changes in finger bending and sends data to other electronic devices, often to a computer processor. This sensor is developed and manufactured by self-effort. Most of the papers belonged to this category (39/71). The articles in this category can be distributed into the following two subcategories depending on the portion of the upper limb of the body that needs to be distinguished.

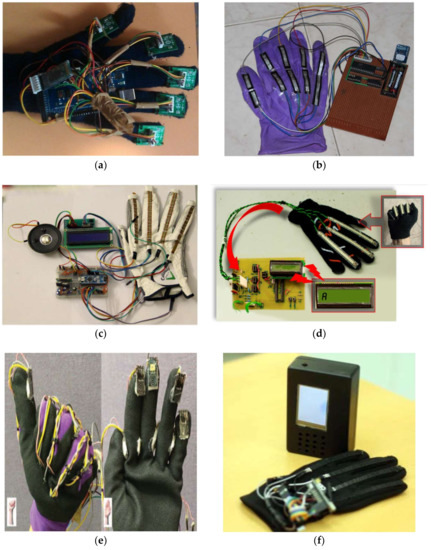

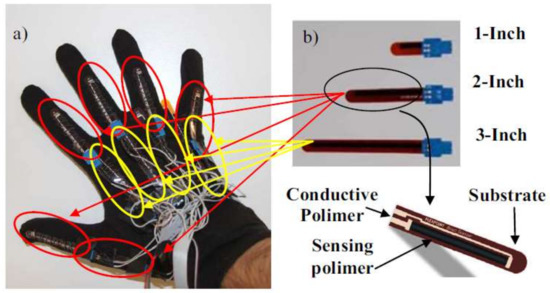

First subcategory, finger band (17/39): All articles that are listed in this category are relevant to the development of a model, system, or device for gesture recognition via finger-bending detection. These articles reported the potential types of sensors that can be employed to determine the amount of finger curvature by identifying advantages and drawbacks. They also elucidate the dataset size that can be distinguished in addition to mentioning the accuracy achieved whilst implementing the system. Figure 17 lists samples of the developed glove, which can capture finger bend. Ten flex sensors were used to acquire finger bending data. Two sensors were attached to each of two joints over each finger. The high-speed, low-power analog Multiplexer (MPC506A, TEXAS INSTRUMENTS, Dallas, TX, USA) was used to collect 10 analog input signals from flex sensors in order to process data using the MSP430G2231 microcontroller (TEXAS INSTRUMENTS, Dallas, TX, USA). The code in microcontroller-received data from the Multiplexer was then arranged in the form of a packet with a marker character, and this packet was sent using an EZ430-RF2560 Bluetooth transceiver chipset (TEXAS INSTRUMENTS, Dallas, TX, USA) to a cellphone. The Bluetooth module is transmitting at a 11520 baud rate. Once the angles have been found using software installed on mobile, they are compared to the known poses from the database. Then, an error parameter is computed for each of these poses. Finally, a text to speech synthesizer is used to convert the gathered information to voice [44]. In [9], a gesture recognition glove was developed with 5 three-axis ADXL 335 ACC sensors. The ATmega 2560 microcontroller (Microchip Technology, Chandler, AZ, USA) decodes American Sign Language (ASL) gestures by considering the axis orientation with respect to gravity and their corresponding voltage. Via a Bluetooth module (SPP-TTL INTERFACE), the alphabet/word is sent to an android application to convert them into text and voice. A finger recognition system was presented to help handicapped persons express their intents by using a finger only. Five optical fiber sensors surrounded each finger. In order to represent the strength of the optical signals, 8 digits were used to measure each finger bend. The system used a 3-layered neural network module to learn the input gestures with the MATLAB program. The input nodes represent the chosen feature values; the hidden nodes use TANSIG (Tan-Sigmoid) as the transfer function. The Purelin (Linear) transfer function was used for one output node. The six different values served as outputs for the six predefined hand gestures. The Back-Propagation algorithm is used for training, and the tenfold validation method is applied to 792 records [7]. In [2], five flex sensors were mounted on the glove to recognize British and Indian sign language. The size of the flex sensors used was about 112 mm and about 0.43mm in thickness. The MSP430F149 microcontroller (TEXAS INSTRUMENTS, Dallas, TX, USA) compared gestures to equivalent electrical signals corresponding to memory storage. The valid word was displayed on the LCD and spoken out of the speaker. The sign to Letter Translator (S2L) system was developed as in [12]. The system consists of a glove, six flex sensors, discrete components, a microcontroller, and an LCD. Five flex sensors were mounted on each finger and one on the wrist. The analog inputs of six sensors are converted to two-bit zeros, one corresponding to each letter for the five flex sensors. The final output was produced via some ‘if’ conditions. An LED-LDR pair was used in [37] to detect finger bending (one pair for each finger). The MSP430G2553 microcontroller (TEXAS INSTRUMENTS, Dallas, TX, USA) converts the analog voltage values to digital samples and the ASCII code of the 10 English alphabet. The ZigBee Bluetooth was used to transmit recognized gestures. The received ASCII code is displayed on the computer, and the corresponding audio is played. The ELECTRONIC SPEAKING GLOVE was presented in [38]. The flex sensor was very thin and light weight (4.5 inches in size). The high-performance, low-power AVR® 8-bit TMEGA32L Micro-controller with 10-bit ADC, RISC Architecture was used to recognize ASL alphabets via the template matching algorithm. The SpeakJet IC introduced the preconfigured 72 speech elements (allophones). In [41], the reliability of resistance sensors on the ASL translation via virtual image/interaction was investigated. Two flex sensors were used to carry out the experiment. The movement signal of the index finger and middle finger was sent to the Arduino Uno microcontroller. The system tested the six letters “A, B, C, D, F, and K”, as well as the number “8” using Fuzzy Logic via MATLAB software. The data of four gestures were acquired using a data glove equipped with five flex sensors. The change in resistance of flex sensors was fed into the Arduino Nano to implement the template matching algorithm in order to recognize the specific gesture and prerecorded audio command that was stored in the Secure Digital (SD) memory card; this was then transmitted through a speaker and display the text through a 16 × 2 LCD display [68]. The hardware structure of [63] consists of three flex sensors, an INA 126 instrumentation amplifier, an analog-to-digital converter (ADC), a 16F877A microcontroller, and an LCD. The sensors were placed on three fingers, namely, the ring, middle, and index fingers, to identify the letters of the alphabet from the finger spelling of ASL. Twenty-six characters of ASL were stored in the Programmable Intelligent Computer (PIC) microcontroller library. The obtained data of gestures were compared with 26 characters of ASL that were stored in the PIC’s micro-controller library, and the right letter was displayed on the LCD. The data glove successfully recognized up to 70% of ASL characters. The system proposed in [57] contained a pair of sensory gloves embedded with flex and contact sensors. Each glove consists of nine flex sensor, eight contact sensors, ATmega328P microcontrollers (Microchip Technology, Chandler, AZ, USA) and an XBee Bluetooth module (Digi International, Minnetonka, MN, USA). The flex sensors F1 to F5, namely, ‘outer flex sensors’, were used to calculate the bending changes on top of the five fingers, and the flex sensors F6 to F9, namely, ‘inner flex sensors’, were employed to detect the changes in orientation beneath the finger (excluding the thumb). A data set of 36 unique ASL gestures was used to evaluate the system; the recognition engine based on template matching resides in the master microcontroller, which processes the input and identifies the gesture. The system accuracy was from 83.1% to 94.5% with a cost of about $30. The ASL glove-based gesture recognition system is presented in [60]. The variation in electrical resistance of five custom-designed flex sensors was processed as the input to an Arduino ATMega328 microcontroller; then, it was compared with stored values corresponding to gestures. The system performance achieves accuracy of up to 80%. The cost of the system is approximately less than USD 5 in laboratory conditions using off-the-shelf components.

Figure 17.

Finger bend detection glove used in the literature. (a) The glove consists of five 3-axis ACCs and (b) the ten custom-made flex sensors; (c) the translator system consists of five flex sensors mounted on glove, LCD and speaker;(d) the glove consists of three flex sensors used to detect few gestures; (e) the glove is equipped with five contact (pressure) sensors; (f) the wireless translator system is embedded with the five flex sensors and LCD.

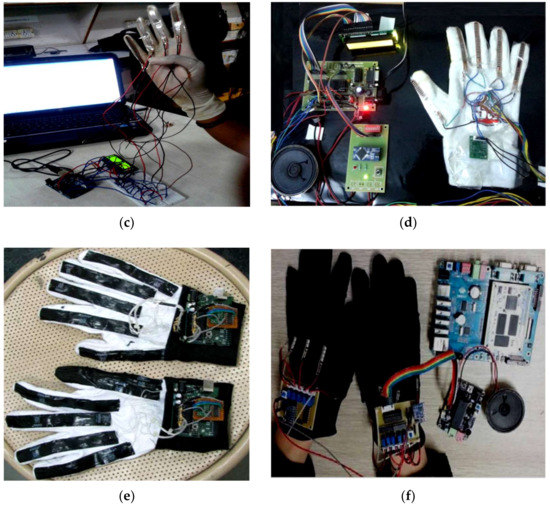

Second subcategory, fingers and wrist band (22/39): This category encompasses every study conducted to develop a model, system, or device for gesture recognition by detecting finger tilts and wrist orientation. These articles reviewed several types of sensors for detecting the movements of fingers and wrists. The advantages and disadvantages of recognition systems were also presented. The datasets and samples that were used were described, and the accuracy achieved whilst the system was implemented was stated. Figure 18 lists samples of the developed glove that can capture the flexion and abduction of fingers, as well as measuring hand motion. GesTALK, a standalone system, was presented to convert static gestures into speech [66]. The system can vocalize letters based on static gestures and can utter a string of words by concatenating words. The system vocalizes ASL and Pakistan Sign Language (PSL) letters expressed through static gestures made by the deaf individual. The glove consisted of 11 resistive elements, one for each finger and one for the abduction. Furthermore, the pitch and roll of the wrist were measured using two sensors. The system could recognize 24 out of 26 letters with an accuracy of 90%. A wireless sensory glove was developed in [4], as a new design of American SL to recognize the fingerspelling gesture. In addition to a 3D ACC, the glove had five 4.5-inch flex sensors and five contact sensors. The training data were collected from six speech-impaired subjects through ASL fingerspelling gestures. The system extracted 21 features from the sensors, and the experiment showed that the data glove can be enhanced by 12.3% using a simple multivariate Gaussian model. The glove-based system was proposed to track hand gestures. The system detects hand motions by using attitude and inertial measurements [59]. The prototype system consisted of six inertial measurement units (IMUs); five were at the upper-part of each finger, and one was on the wrist. Each IMU module provides 9-D ACC, gyroscope, and magnetometer measurements at a rate of 100 Hz, as well as attitude (orientation) estimated using an Extended Kalman Filter. The recognition process was carried out through Appling Linear Discriminant Analysis (LDA) on input signals sent to an embedded Odroid XU4 computer using a USB hub. The system achieved 85% accuracy. AcceleGlove, which comprises six ADXL202, ACC sensors was developed for Vietnamese SL posture recognition [58]. The system was modeled for the 23 Vietnamese alphabets, as well as two postures for “space” and “punctuation”. The data collected from the IMUs related to wrist and fingers joints. The fuzzy rule-based system was used to perform the gesture classification process. Twenty out of the 23 letters achieved 100% recognition, and the average system accuracy reached 92%. An intelligent glove was developed to capture the postures of the hand and translate these postures into simple text depending on the data acquisition and control system [50]. Three types of sensors were used in this data glove prototype: five flex sensors, five force sensors, one mounted on a finger, six DoF MPU6050 chip, tri axis ACC, and tri axis gyroscope sensors. All of these sensors were connected to Arduino mega, and the result was displayed on an LCD or smart phone. The electronic system could recognize the ASL alphabet (20 out of 26 letters) and achieve an average accuracy of 96%. A holistic approach was also presented to develop a real-time smart glove system to convert static SL gestures into speech by using statistical template matching [3]. The “Sign Language Trainer & Voice Convertor” software acquired five custom-made flex sensors and one 3-axis ACC along with an Arduino Duemilanove Microcontroller Board.

Figure 18.

The prototype sensory glove for measuring finger bend and hand movement used in literature. (a) Six 9-DoF IMUs mounted on the glove for ArSL recognition; (b) a pair of optical sensors located on each finger and one ACC on the palm; (c) the translator system consists of five flex sensors placed on the back of each finger and ACC; (d) the data glove is embedded with five flex sensors, ACC, LCD, and speaker; (e) the system consists of two gloves equipped with ten custom-made flexion sensors and two ACC sensors; (f) the system consists of two gloves equipped with ten custom-made flexion sensors, two ACC sensors, and a speaker.

A haptic glove was presented as a vital means to acquire data from different hand signs, and the LabVIEW program served as the user interface with the aid of Arduino platform [47]. Arduino Mega was employed to collect gesture signals from the five custom-made flex sensors, contact sensors, and one ADXL335 ACC, placed on the glove. The flex sensors were mounted on each finger, and the contact sensors were placed between each adjacent finger. An electronic glove was equipped with five flex sensors, an ACC, and a contact sensor to recognize static and dynamic gestures and convert these gestures into visual information on an LCD, voice through a speaker, or both [67]. The embedded system is composed of data gloves with flex sensors and an ACC to detect finger tilt and hand rotation; thereafter, these signs are processed by a microcontroller, and the playback voice is the output of the system [79]. The glove maps the hand orientation and bending of fingers with the help of an ACC and Hall Effect sensors. The device is modelled to transform SL gestures to textual messages [36]. Four Hall Effect sensors (MH183), which were placed on all fingers except the thumbs, were used to detect the south poles of the magnet placed on the palm in order to measure finger tilt. The ADXL535 was also included to record the hand orientation via the triplet of x, y, z axes (voltages) information. The data acquired from the glove was passed to MATLAB script to detect the number of gestures (0–9) performed by the user and the accuracy of the recognition (about 96%). In another study, two elements, namely, the 3D positioning method and optical detection technique, were used to create a low-cost glove to interpret gestures [56]. A set of Light-emitting diode (LED) had an emission peak of 940 nm (model LTE-4602), a compatible photodiode (same model), and a polymeric fiber (PMMA). These optical sensors were used to capture finger tilt and the LSM330DLC chip; 3-axis ACC and 3-axis gyroscope were used to determine hand motion. A translator system was presented to interpret Urdu SL gestures by using a wearable data glove [6]. Five flex sensors of 4.5-inches in length were placed on each finger, and an accelerometer MMA 7361 (SparkFun Electronics, Niwot, CO, USA), was placed on the palm. The glove also contained Arduino Mega 2560, drive Graphical Liquid Crystal Display (GLCD), and playback module WTV020 SD. The Principal Component Analysis (PCA) was selected to obtain coefficients of the training set and create a 300 × 8 (m × n) data matrix. These matrices of coefficients were used to transform both the saved mean of sensor values for each gesture and real-time input components. The PCA was performed using MATLAB 7. The system performance reached 90% accuracy. The glove-based approach is used for hand movement recognition systems. Thus, the approach can recognize several words, numbers, and the alphabet of the Malaysian SL when the speaker wears the glove with 10 tilt sensors (two sensors for each finger); a 3-axis ACC to determine the motion of the hand via roll, pitch, and yaw values; and a microcontroller and Bluetooth module to send the converted data to a cellphone. The system was tested on a few gestures of Malaysian Sign Language using a matching template, and the accuracies ranged from 78.33% to 95% [69]. Twenty-six hand gestures correspond to the letters of the alphabets, and 10 other gestures represent numbers, which can be recognized by the data glove model presented in this work [51]. Five flex sensors were used, one on each finger and thumb fed to the Arduino uno via an analog pin (A0 to A4). In order to determine the palm tilt, a ADXL335 ACC was used, and 28 (256) gestures were the system’s capacity to store learned gestures for the matching process. Combining flex sensors with nine degrees of freedom, ACC sensor and ARM9 allowed the degree of finger bending and hand orientation to be measured [54]. Therefore, this combination can distinguish several SL postures with the assistance of the matching method. The system consists of two gloves; each glove contained five 4.5 flex sensors of 95 millimeters in length. Additionally, one 9-DoF IMU was used to detect the orientation and movement of the palm. The system was tested with the phonetic alphabet such as a, b, c, zh, ch, and other phonetic letters with an average accuracy of more than 88%.

3.2.2. Commercial Glove-Based System

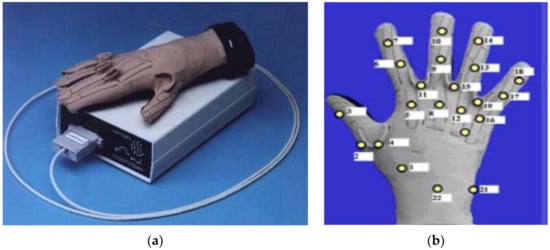

The commercial glove-based system is a means to handle the quandary of communication for deaf and mute individuals. The system uses bend-sensing technology to capture hand and finger actions into digital joint-angle measurements. Several studies (12/71 papers) used different commercial gloves to recognize different SLs. Some of them (7/12) used a popular commercial glove called CyberGlove (GLV) (Figure 19). The lightweight elastic glove is equipped with 22 thin and flexible sensors that are virtually undetectable. The glove consists of three sensors attached to each finger, four abduction sensors between fingers, a palm-arch sensor, and sensors to measure wrist flexion and abduction. The price is approximately $40,000 per pair for 22-sensor Cybergloves. An ASL translator (into written English words) was developed using artificial neural networks (ANN). The systems, based on the CybergloveTM glove and a Flock of Birds 3-D motion tracker, were used to capture the flexion of fingers and trajectory of the hand. The sensors data were processed by two neural networks, namely, a word recognition network and velocity network, with 60 ASL words. The recognition accuracy of these two systems is 92% and 95%, respectively [81]. A 3D hand model was designed to display posture related to reconditioned words. A 3D hand model was constructed using java 3D. The system segmented and recognized the continuous words, which were recorded using CyberGlove in real-time. The recognition efficiency was tested using the index tree [43]. A CybergloveTM and a FlockofBirdss 3-D motion tracker are used to collect hand motion and bending. The 15 bending angles, three positions, and three orientation readings from the Flock of Birds were classified using a multi-layer neural network. The ANN consists of 151 input variables, 100 hidden neurons, and 50 output neurons. A Levenberg–Marquardt back propagation algorithm was used for training 50 words in ASL, and the system reached about 90% recognition accuracy [74]. A segment-based probabilistic was presented to detect and recognize the continuous gestures of ASL. A Bayesian network (BN) was used to segment the continuous signs. A two-layer conditional random field (CRF), along with support vector machine (SVM) classifiers, were applied for the purpose of recognition. The system performance had average accuracy of 89% [61]. A new approach was proposed by fusing the data acquired from sensor gloves, and the hand-tracking system used the Dempster–Shafer theory for evidence. The recognition performance of 100 two-handed ArSL achieved an accuracy of about 96.2% [86]. The CyberGlove was used to collected finger tilt and hand motion. A linear decision tree with Fisher’s linear discriminant (FLD) was used to classify 27 Signing Exact English (SEE) handshapes. Hand movement trajectory was classified using vector quantization principal component analysis (VQPCA). The system yielded recognition accuracy up to 96.1% [87]. Moreover, a few (4/12) experimented with another commercial data glove known as the 5DT data glove to develop their SLR systems. The 5DT Data Glove Ultra is available in two variants, with five or 14 sensors per glove. The 5DT Data Glove 5 Ultra (Figure 20) is equipped with five fiber optic sensors to measure the flexion and one extra to measure the orientation (pitch and roll) hand. The 5DT Data Glove 14 Ultra consists of 14 fiber optic sensors (two sensors per finger), as well as the abduction between fingers. The glove is also equipped with two tilt sensors to measure the pitch and roll of the hand. The cost of one glove is approximately $995. A 3D model for finger and hand motion detection was developed to motivate the creation of a learning system based on gloves. The system used 5DT data glove 14 ultra-motion to capture Japanese fingerspelling gestures. This system helps a learner to recognize motion errors intuitively [70]. The manuscript presented software development to assist individuals suffering from speech and hearing impairment depending on the tuning location of the fingers with 5DT gloves. The 5DT Glove with five sensors was used to translate hand signs into words and phrases. Gesture recognition was performed using a multi-layered neural network. The NN configuration consists of one input layer (five input neurons), three hidden layers, and one output layer (twenty-six output neurons). Different algorithms, namely, back propagation, resilient propagation, quick propagation, scaled conjugated gradient, and Manhattan propagation, were used to train the network using the Matlab program [82]. The real-time architecture was presented to recognize Spanish SL in terms of gesture and motion recognition. A 5DT Data Glove 14 Ultra was used for real-time sign recognition. A distance-based hierarchical classifier has been proposed to recognize 30 signs of the Spanish alphabet [78].

Figure 19.

Glove systems. (a) Virtual Technologies’ CyberGlove and control box and (b) the location of 22 bend sensors on the glove [43].

Figure 20.

The 5DT Data GloveTM developed by Fifth Dimension Technologies; the glove measures seven DOF [74].

Only one work (1/12) in this literature review adopted the DG5 VHand data glove in the task implementation. Two real-time translators were developed for American SL. A DG5-VHand glove (Figure 21) was equipped with five bend sensors and a 3-axis ACC, which allows for sensing both the hand movements and the hand orientation. The gloves are suitable for wireless operations and are powered with a battery. The device costs about $750 for a wireless left-hand glove. A novel technique classified sequential data for Arabic SL using a pair of DG5 VHand gloves. The Modified k-Nearest Neighbor (MKNN) was used for the classification of a sensor-based dataset. The dataset consisted of 40 sentences, which were captured using two DG5-VHand gloves. The performance of the proposed solution reached 98.9% recognition accuracy [40].

Figure 21.

The A DG5-VHand glove equipped with five flex sensors and one 3-axis ACC [49].

3.2.3. Bi-Channel Sensor-Based System

Transacting with multimodal sensors by fusing data from various channels is not required to fully understand the connotations of sign posture. Therefore, hand posture recognition based on data fusion of multi-channel electromyography (EMG) and inertial sensors, such as a three-axis ACC, was proposed. The possible benefits of the combination of ACC and EMG signals are utilized to achieve multiple degrees of hand freedom [77]. A decision tree and multi stream hidden Markov were used to classify 72 Chinese Sign Language (CSL) words. The MMA7361, 3-axis ACC was mounted on the back of the forearm near the wrist to capture information about hand orientations and trajectories. The EMG sensors were located over five sites on the surface of the forearm muscles. The overall recognition rate of the system was up to 72.5%. The sensing system for German SL with a small database was investigated by using a single channel of single electromagnetism (EMG) and single acceleration (ACC) to recognize signs for seven-word level vocabularies [83]. The experiment was conducted on seven sign gestures (70 samples from each subject), with the k-Nearest Neighbor (k-NN, with k = 5) classifier and SVM. The system achieved an average accuracy of 99.82% for subject-dependent recognition and 88.75% for the general condition. Information from the three-axis ACC and five-channel electromyogram of the signer’s hand was analyzed using intrinsic-mode entropy towards automatic Greek SLR. An experiment was conducted on a 60-word lexicon with three native signers and repeated 10 times. The system used intrinsic-mode entropy (IMEn) by using a PC along with MATLAB R2007a. The experiment showed that the recognition rate reached 93% classification accuracy [76].

3.2.4. Hybrid System for SLR

A small category includes papers associated with combining vision- and glove-based approaches for acquiring SLR data. The hybrid system was proposed to take advantage of the use of inertial sensor measurements and contribute to enhancing the value of acquired vision data. The system based on RGB color model (Red, Green and Blue) cameras or depth sensors and the Accelerometer Glove is presented in [53]. This issue needs further investigation. The Accelerometer Glove contained seven IMU sensors, five located on the fingers (one sensor on each finger), one on the wrist, and one on the arm. The database was created with 10 repetitions of each of the 40 gestures registered for the five signers. This results in a total of 2000 recordings used to validate the solution. The recognition engine was based on sign language gestures by using Parallel Hidden Markov Models (PaHMM). The system accuracy reached 99.75%. The prototype for a one-way SL translator consisted of AcceleGlove and a camera mounted on a hat. Acceleglove was equipped with five 2-axis ACCs located on rings to capture finger flexion. Two more on the back of the palm read the hand orientation. Another two ACCs were used to detect the bend angles of the shoulder and elbow along with the upper arm. The users perform 665 sentences of ASL, with a camera filming the process to aid in the identification of incorrect signs. In order to train the system, a HMM-based recognizer carried out phrase-level sign recognition with a per sign accuracy of 94% [52].

3.3. Frameworks for SLR

Several of the obtained articles (7/71) fit the developed category concerning our taxonomy, because they did not develop a new system. Rather, they (3/7) served as an eligible framework of the template [48,49,52]. Other articles (3/7) addressed the system design [42,73,75], whereas one article presented the development and use of a method or technique for the SL system [72]. In [42], a framework was proposed for wireless glove-based two hands gesture recognition. The framework was established for the real-time translation of Taiwanese sign language. Flex and inertial sensors were embedded into each glove. The bending of fingers was acquired by flex sensors, the palm orientation was acquired by G-sensor, and the gyroscope was used to obtain the motion trajectory of the hand. The sampled signal will be sent to a cell phone via Bluetooth. In the cellphone, the encoded digital signal is compared with the SL database using a lookup table, and the meaning of a valid gesture is displayed. Using Google translator, the cell phone produces a voice. Through the proposed architecture and algorithm, the recognition accuracy will be acceptable. In [49], the framework for a sensory glove for Arabic sign language signs (ArSL) is presented. The glove design is based on statistical analysis for all words of ArSL in terms of a single hand via as few sensors as possible. The proposed design was implemented using the PROTEUS simulation program. The system consists of two gloves; each glove contains six flex sensors (length of sensor = 5 cm), one for each finger plus one for the palm, four contact sensors for fingers (index, ring, middle, and pinky) and one MPU 6050 for hand orientation and motion. In [48], the framework consists of two algorithms: sign descriptor stream segmentation and text auto-correction; it also presents a hand gesture descriptor to develop the software architecture of this time-sensitive complex application. The motion-capture gloves produce text and audible speech. A framework of the Interpreter Glove system was proposed in [48]. The framework consists of four main blocks, namely, the glove, the training environment, the mobile applications, and the backend server. The system produced a gesture descriptor, namely, “Hagdil”, based on the structure and kinematics of the human hand. A Hagdil descriptor stores all the substantial characteristics of the human hand: parallel and perpendicular positions of the fingers relative to the palm, wrist position, and absolute position of the hand. Then, it encodes the Hagdil gestures descriptor (18 hand parameters) before transmitting it to the cellphone. Finally, the Levenshtein distance calculation algorithm is employed for auto-correction for text that corresponds to the gesture.

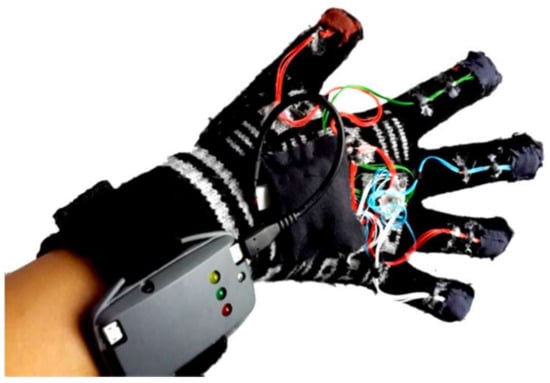

3.4. Other Hand Gesture Recognition

The progress of science and technology has facilitated the development of hand movement detection devices. Gesture recognition is a system that translates hand movement through software, hardware, or a mixture of both to help hearing- and speech-impaired individuals communicate with a machine. The recognition system can support disabled people, medical staff, scientists, and others. One study [14] presented a simple, low-power, low-cost system that could detect finger flexion–extension movements. The glove consists of ten resistors (two for each finger) connected to a front-end microcontroller, which send the acquired data to the personal computer (PC)through the Bluetooth module (Figure 22).

Figure 22.

Adopted bent sensors and positions. (a) the image of the sensorized glove and (b) the adopted bent sensors [14].

Five gestures, namely, rock, paper, scissor, rest, and thumb-up, were recognized using the glove equipped with six 3-axis ACCs. One ACC was placed on each finger to measure fingers bend, and one was placed on the back of the palm to capture hand motions and positions (Figure 23). The six ACCs were connected to a microcontroller, and the received raw data are mapped and arranged in an array before it is transferred serially to a Bluetooth module [88].

Figure 23.

Six sensor-type 3-axis ACC mounted on a data glove for gesture recognition [88].

4. Distribution Results

Three digital databases were chosen to obtain information from works related to SLR based on a sensory glove. The outcomes of the review are classified in four general categories, namely, development, framework, review, and survey, and the last category is other hand gesture. The final number of articles gathered from IEEE Xplore is 35, composed of 24 articles for development, 4 for framework, 5 for review and survey, and 2 for other hand gesture. The closing number of articles gathered from the WoS database is 26, composed of 23 articles for development, 2 for framework, 1 for review and survey, and 0 for other hand gesture. Finally, the closing number of articles gathered from ScienceDirect is 10, composed of 9 articles for development, 1 for framework, 0 for review and survey, and 0 for other hand gesture. Table 2 lists the citations and Impact Factor of the cited papers in this study ordered by number of citations. The citation number of articles was found using Google Scholar, and the impact factor of the journals was extracted from the official website of each journal.

Table 2.

The metric information of the articles in the previous study.

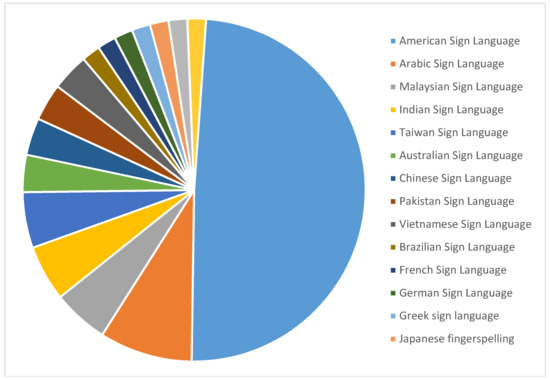

4.1. Distribution by Sign Language Nationality

Figure 24 illustrates the number of articles on SL (16) that were included in this study. The most prominent SLs that have been mentioned are ASL (28 articles), ArSl (5 articles), MSL (3 articles), Indian sign language (ISL) (3 articles), and Taiwan sign language (TSL) (3 articles). However, in this study, only one or two articles were published for other SLs. ASL gained the most attention from researchers conducting their scientific studies in the field of gesture recognition. The number of research articles submitted in this area during the previous ten years is 28, which equates to 48%.

Figure 24.

Number of articles published for each form of SL.

4.2. Distribution by Gesture Type

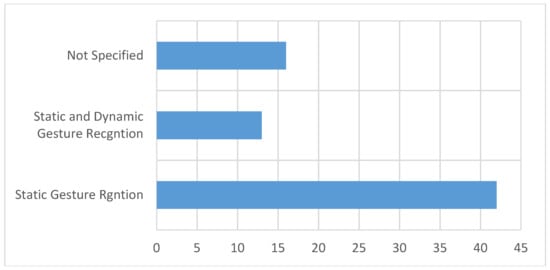

The SL similar to vocal language is composed of alphabets, words, and sentences, all of which are gesture expressions that may be static or dynamic. Somehow, the static gesture is less complicated than the dynamic one. Figure 25 shows that a large number of researchers (42/71) have developed a mechanism to distinguish static gestures, whereas few researchers (13/71) have developed a system to recognize dynamic gestures. Our review of the research work found that a number of other studies (16/71) did not distinguish the type of gesture targeted in their work.

Figure 25.

Number of articles that recognize static and dynamic gestures.

4.3. Distribution by Number of Hands

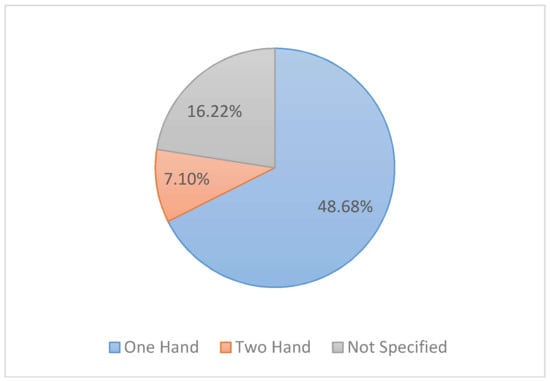

Figure 26 illustrates the number of articles that executed gestures with one or both hands. It is easy to note the significant difference between the number of studies (48/71 papers) on the recognition of one hand and the number of studies (7/71 papers) on the recognition of two hands.

Figure 26.

Number of articles for gesture recognition based on the number of hands.

5. Discussion

This review provides a research-oriented overview of relevant studies on the latest achievements in the recognition of SL based on sensory gloves. The aim of this study is to clarify and highlight the potential research trends in this field. To achieve this goal, a taxonomy of relevant articles is proposed. Developing a taxonomy of the literature in the field of SLR offers many advantages. One of these benefits is to organize a variety of published work in a taxonomy to serve new SLR researchers who may feel confused with the large number of papers written on the subject without any kind of structure. Various studies discussed the topic from an introductory perspective, whereas other studies developed recognition techniques to obtain improved performance using existing commercial gloves. Several articles developed an actual data glove for SLR. The taxonomy of relevant articles sorts out various studies into a meaningful, manageable, and cohesive structure. Moreover, several important insights into the topic can be presented to researchers through the structure introduced by the taxonomy. One of these insights outlines possible research trends in this area. For example, the taxonomy in the current work on SLR based on sensory gloves shows that researchers tend to develop their own gloves and conduct experiments to offer a possible way of contributing to this area. Other areas include developing commercial gloves and techniques to procure the desired accuracy in the recognition process. A taxonomy may also reveal gaps in the literature. Organizing studies in literature into distinct classes highlights the weak and strong features of SLR in terms of research coverage. For example, the taxonomy in this study shows how groups of individuals worked to develop a glove using different types of sensors or investigated the recognition method using existing gloves and received notable attention in the development category (this phenomenon is evident as the bulk of the work falls in this category). Furthermore, the taxonomy shows the scarcity of studies on the development of a hybrid system to obtain data. The taxonomy also elucidates that studies in the development category focused on obtaining data on finger and wrist movements and did not attempt to include data on arm and shoulder movements, which are also related to SL signals. In addition, the subcategories of the taxonomy that belong to the survey and review branches have not received considerable attention in the literature. Three aspects are revealed in the literature review of this survey: the motivations behind SLR based on the sensory glove, the challenges in the appropriate use of these technologies, and the recommendations for alleviating these difficulties.

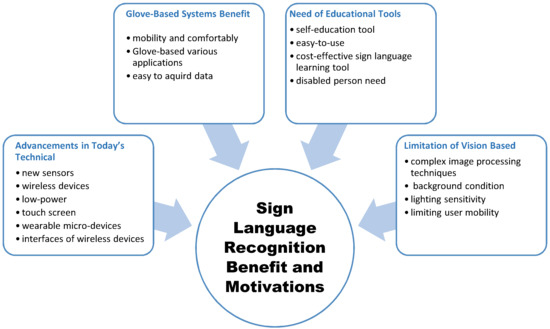

5.1. Motivations and Benefits of SLR Using Gloves

When humans talk to one another, they communicate through speech and gestures. Gestures may either supplement one’s speech or completely change it, especially in the case of hearing- and speech-impaired individuals. However, these disabled people have overwhelming difficulty in communicating with others and find themselves in awkward situations, because various gestures may be used by several people to convey the same message.The following are some of the benefits and motivations reported in the references that have been categorized according to the similar motives (Figure 27).

Figure 27.

Categories of benefits of SLR based on the sensor approach.

5.1.1. Advancements in Today’s Technology

The devices and tools that help disabled people live a normal and comfortable life have always been a domain that has attracted innovation. Various developments in the technological world today have helped diverse groups of people with disabilities in terms of research and product system development in assistive technologies that aid various disabled people to carry out their daily activities [3]. Such examples include wireless devices, low-power electronics, and the capability to design both the analogue front-end and digital processing back-end. Furthermore, integrated circuits have also inspired the production of a new range of micro wearable devices [44,58]. The interfaces of wireless devices available to us have become easier to use. Actually, most new wireless devices use a touchscreen instead of buttons [56]. This innovative interface can be more sophisticated with the integration of a system that is constantly aware of hand movements in space [56]. The interfaces of wireless devices available to us have become easy to use. This is also true in the development in the field of electronics in which many sensors can address bending measurement and recognize many degrees of freedom (DOF) of the human hand and its wide scope of movement. Similar new technologies can be used for various areas, such as SL translation [7,9]. Appropriate technology can be used to give a tremendous boost to the everyday life of deaf and dumb people and enhance the quality of their lives.

5.1.2. Educational Tools for SL

In developing countries, deaf and dumb children rarely receive education. In addition, adults with hearing and speech loss have a significant unemployment rate. The availability of an easy-to-use and cost-effective SL learning tool will help these individuals learn through natural means. Therefore, improving access to education and vocational rehabilitation services will reduce unemployment rates for people with hearing or speech disabilities [81]. On the other hand, SL is the main tool to communicate with the deaf and dumb. Thus, many normal people are encouraged to begin to learn SL through web sites, video clips, and mobile applications. With respect to gesture (arms, hands, and fingers) skill learning, which is used in SL, a specialist should provide objective advice to the learner [9]. In other words, the learner requires a self-education tool to instruct her/him on how to do the correct gesture, follow up by implementing that movement, and identify errors that may occur during implementation [70]. The SL glove appears to be very beneficial in assisting sign language education.

5.1.3. Advantages of Glove-Based Systems

Our hands are used to accomplish a large number of basic tasks in our daily lives. Thus, many studies have examined the development of techniques to investigate hand movements and increase the capability to simulate hand functions for the completion of basic tasks. Data acquired from hand movements are used in several engineering applications ranging from motion analysis to biomedical science [89]. Glove-based systems are important techniques that are used to obtain hand movement data [57]. Despite them having been around for more than three decades, this field of research is still extremely active [87]. It is also obvious that technological advancements in computing, materials, sensors, and processing-classification methods will contribute to making the new generation of glove systems more powerful, highly accurate, comfortable, cheap, and possible to use in many applications [36,66]. A glove can be an assistive interpreter tool for hearing- and speech-impaired persons to communicate with non-disabled individuals who do not understand SL [39]. Gloves have other benefits, such as mobility and comfort. Modern technologies and sophisticated electronic circuits, which are harnessed to recognize SL using gloves, have enabled gloves to overcome the need to be connected with a computer. Furthermore, these devices being lightweight makes it possible to carry them with ease and comfort [7,8]. Glove-based systems are also beneficial to various applications. New technologies for the man–machine interface (MMI) present natural ways to operate, control, and interact with machines. The MMI term refers to the capture and conversion of signals related to the appearance, behavior, or physiology of a human via a computer system [15]. All interface techniques that rely on sound or vision have contributed to a radical change in the mechanism of operating a computer [78]. Gesture recognition is an interaction method that plays an important role because of its common use of gestures and signs for human communication [4]. This fact increases the importance of recognizing a gesture, indicating that it is a growing field of research with incalculable application areas [82]. Numerous kinds of applications are currently involved in gesture recognition systems, such as SLR, substitutional computer interfaces, socially assistive robotics, immersive gaming, virtual objects, remote control, medicine-health care, etc. [10,11,12,13,14].

5.1.4. Limitation of the Vision-Based Method

Gesture recognition systems rely on two means to capture and record the shape and movement of the hand: they use a camera or a sensor. In gesture recognition systems, the use of a vision approach requires the adoption of at least one camera to capture hand images and interpret the suitable gesture. Sometimes, several devices, such as Kinect, leap motion, or even a colored glove, are appended to increase the capability to detect hand shape or movements [85,90]. The advantage of this approach is that the deaf person is not required to wear an uncomfortable device. Additionally, facial expressions can be included. Useful data can be easily obtained such as the depth and the color of images [2]. However, acquiring information related to the hand is more difficult when using the vision-based approach. Complex image processing is needed [4], and hand shape recognition is affected by the background condition. In addition, the lighting sensitivity may influence the accuracy of finger tracking [5,6]. Processes are performed using a computer, and the user always needs the camera, which makes it unusable for people with hearing impairment to use in their daily lives [17].

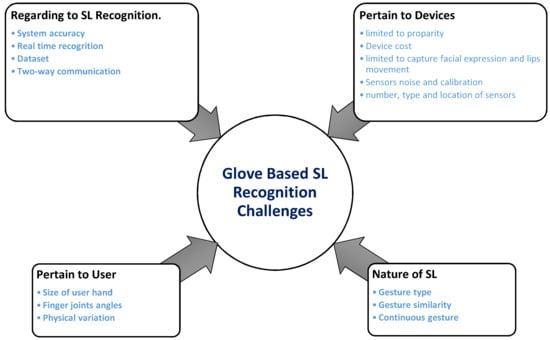

5.2. Challenges in SLR Using Gloves

A complex modelling framework is required to handle the features of the recognition system. After reviewing each study, we determined all the difficulties and problems that were addressed or encountered by previous researchers in SLR. The following are the most important obstacles classified into groups (Figure 28).

Figure 28.

Categories of challenges for glove-based SL recognition.

5.2.1. Nature of SL

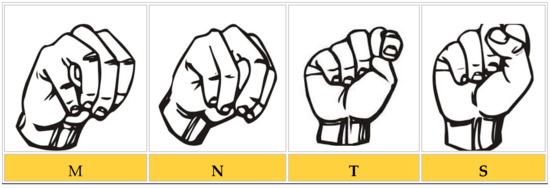

SL postures can either be static or dynamic. The classification of static hand postures is easier than that of dynamic hand postures, because movement history need not be considered [84]. Thus, most of the current recognition systems work well with static postures, because the error rates for dynamic postures are high due to insufficient training [18]. The similarity is another problem in SL; the movement or shape of a particular sign may be similar to another sign, so they may look similar in motion. For example, in the ASL alphabet, the letters “N”, “M”, “T”, and “S” are signed with a closed fist, as shown in Figure 29. At first glance, the posture of these five letters looks to be the same as [58]. This ambiguity may cause inaccurately classification, leading to low accuracy. The errors are mainly caused by letters equivalent to similar gestures such as V and U [59]. Continuous sign problem: In reality, signers perform signs in a continuous mode at a specified frequency and transition delay. Continuous gesture points denote sentences or phrases. The complexity of these points originates from a stream of signs being conducted that are not actually separated by discontinuity, such as speech [40].

Figure 29.

Similar postures in ASL, in terms of finger bending.

There is an additional challenge regarding the meaningless movements between continuous gestures. Such transition periods between gestures are transitional frames known as Movement Epenthesis (ME). Many studies label these movements as a classification problem. They are not a universal system problem: different SLs exist worldwide, and each one has its own vocabulary and gestures. These languages are not familiar to people outside this certain community [56]. There is no universal system that provides an objective means of communicating for deaf people around the world [45,72].

5.2.2. Pertain to User

In terms of the physical differentiation of each apprentice, the beginner cannot accurately synchronize his/her movement with that of a proficient person [40]. As a result, even if the beginner carries out the motion correctly according to his/her convictions, the listener may still have difficulties interpreting this motion; hence, the apprentice receives a low score because of the great variance from the skilled person [87]. Differences in several angular values of the finger joint should also be observed during gesture generation. These differences occur between different people, as well as within the same individual during perform gestures at different times [81]. Another issue is associated with signer dependency. A slight difference exists in the values, because the person cannot keep all his hands/fingers exactly the same [4]. One of the acquiesced facts is the variations among people. One of these differences, which has an effect on the action of the glove, is a difference in the size of the user’s hand [84]. The sensors are placed on gloves made of leather or cloth with several sizes; hence, the inappropriate use of the glove might adversely affect the accuracy of the performance [14].

5.2.3. Pertain to Devices