Digital Holography as Computer Vision Position Sensor with an Extended Range of Working Distances

Abstract

1. Introduction

2. Digital Holography as a Lens-Less Vision System

- Holography does not record an image of the object; instead, it records the propagating wavefront diffracted by the object onto the hologram plate.

- Holography uses interference with a reference laser beam to record both the amplitude and the phase of the propagating wavefront, rather than only its intensity as in conventional imaging methods.

- The 2D distribution of light intensity received by the hologram plate produces, after development, thickness (or surface height) variations that behave as a diffraction grating.

- Then, when illuminated by a laser beam similar to the reference beam used at the recording stage, the hologram diffracts the same wavefront as the one diffracted by the object during recording. To an observer’s eye, or to any other imaging device, the light received is distributed as if the recorded object were still in place.
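The recording and reconstruction principle above can be illustrated numerically. The sketch below is a minimal 1D model, not the authors' processing chain: it assumes an off-axis geometry with a unit-amplitude tilted plane reference wave R and a hypothetical object wave O of constant amplitude and smooth phase; the function names `record_hologram` and `reconstruct_off_axis` are illustrative only. The sensor measures only |O + R|^2, yet the phase of O survives in the fringes. It is recovered here by isolating the O·R* interference term in the spectrum and multiplying it by R, which is the digital counterpart of re-illuminating the hologram with the reference beam.

```python
import numpy as np


def record_hologram(obj, ref):
    """Intensity recorded by the sensor: only |O + R|^2 is measured, not O itself."""
    return np.abs(obj + ref) ** 2


def reconstruct_off_axis(hologram, ref, carrier, half_width):
    """Recover the object wave O from a 1D off-axis hologram (illustrative sketch).

    The recorded intensity is |O|^2 + |R|^2 + O R* + O* R. With
    R = exp(2j*pi*carrier*x/n), the O R* term is centred on spatial frequency
    -carrier, so a band-pass mask isolates it from the other terms.
    """
    n = hologram.size
    spectrum = np.fft.fft(hologram)
    k = np.fft.fftfreq(n) * n                   # frequency of each FFT bin, in cycles per record
    mask = np.abs(k + carrier) <= half_width    # keep only the band centred on -carrier
    cross_term = np.fft.ifft(spectrum * mask)   # approximately O * conj(R)
    return cross_term * ref / np.abs(ref) ** 2  # "re-illuminate" with R: O R* R / |R|^2 = O


# Usage with hypothetical values.
n, carrier = 256, 64
x = np.arange(n)
phase = 0.5 * np.sin(2 * np.pi * 3 * x / n)     # hypothetical smooth phase of the object wave
obj = 0.3 * np.exp(1j * phase)                  # object wave, amplitude 0.3
ref = np.exp(2j * np.pi * carrier * x / n)      # tilted plane reference wave, |R| = 1
holo = record_hologram(obj, ref)                # real, non-negative fringe pattern
rec = reconstruct_off_axis(holo, ref, carrier, half_width=32)
print(np.allclose(rec, obj), np.allclose(np.angle(rec), phase))
```

Spectral filtering is only one way to separate the interference terms. It works here because the carrier frequency keeps the three spectral bands apart while still being sampled correctly, which is the same trade-off that limits the angle between the interfering beams in an off-axis setup.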

2.1. Specificities of Digital Holography

- The object size is limited to roughly the size of the image sensor itself.

- When an off-axis setup is used, the maximal angle between the interfering beams must remain small enough for the fringes to be sampled with at least two pixels per fringe.

- At the reconstruction stage, the resolution of the reconstructed object degrades in proportion to the reconstruction distance. The achievable lateral resolution is given by the speckle grain size: $\delta_{xy} = \lambda d / h$, where $\lambda$ is the laser wavelength, $d$ the distance from the image sensor to the reconstruction plane and $h$ the lateral extension of the image sensor, assuming $d \gg h$. Similarly, the axial resolution of the reconstruction, $\delta_z$, is given by the following equation:
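The sampling constraint on the beam angle and the lateral speckle-size limit can be evaluated with a few lines of Python. This is a sketch under stated assumptions: the angle formula assumes the object wave reaches the sensor at normal incidence and the reference wave at angle θ, so that the fringe period is λ / sin θ; the laser wavelength, pixel pitch and sensor width below are hypothetical values chosen for illustration, not the parameters of the experimental setup. The two distances are taken from the reconstruction-distance column of the results table.

```python
import math


def max_off_axis_angle(wavelength, pixel_pitch):
    """Largest beam angle (rad) that still gives at least two pixels per fringe.

    Assumes the object wave arrives at normal incidence and the reference wave at
    angle theta, so the fringe period is wavelength / sin(theta). The sampling
    condition period >= 2 * pixel_pitch gives sin(theta) <= wavelength / (2 * pixel_pitch).
    """
    return math.asin(wavelength / (2.0 * pixel_pitch))


def lateral_resolution(wavelength, distance, sensor_width):
    """Speckle grain size lambda * d / h, valid for d >> h (all lengths in metres)."""
    return wavelength * distance / sensor_width


# Hypothetical laser and sensor values, for illustration only.
WAVELENGTH = 632.8e-9               # He-Ne laser wavelength, m
PIXEL_PITCH = 5.2e-6                # m
SENSOR_WIDTH = 1280 * PIXEL_PITCH   # h for a 1280-pixel-wide sensor, m

theta = max_off_axis_angle(WAVELENGTH, PIXEL_PITCH)
print(f"max angle between beams: {math.degrees(theta):.2f} deg")
for d in (0.0825, 0.183):           # reconstruction distances from the results table, m
    res_um = lateral_resolution(WAVELENGTH, d, SENSOR_WIDTH) * 1e6
    print(f"d = {d * 1e3:.1f} mm -> lateral resolution {res_um:.1f} um")
```

With these assumed values the angle limit is about 3.5 deg, and the lateral resolution grows from roughly 7.8 µm to 17.4 µm as the reconstruction distance increases from 82.5 mm to 183 mm, illustrating the proportionality stated above.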

2.2. Numerical Object Reconstruction

2.3. Working Distance and Focus Determination

3. Application to In-Plane Position Sensing

3.1. Position Sensing

3.2. Experimental Setup and Actuators Used

- M-037.DG rotation motor; range of 360 deg., deg. minimum incremental motion and deg. backlash.

- Two crossed M-111 micro-translation stages with range, ultimate incremental motion, incremental repeatability and backlash.

- P-615.3CD piezo-controlled nanocube with XYZ range with resolution used along the YZ axis.

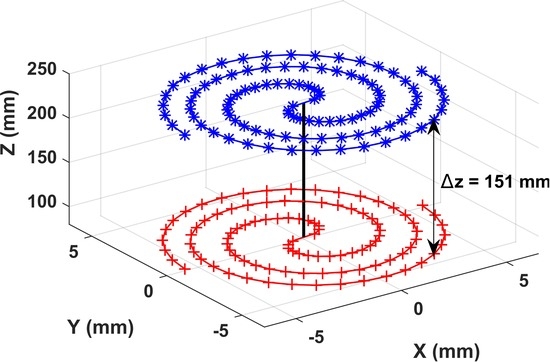

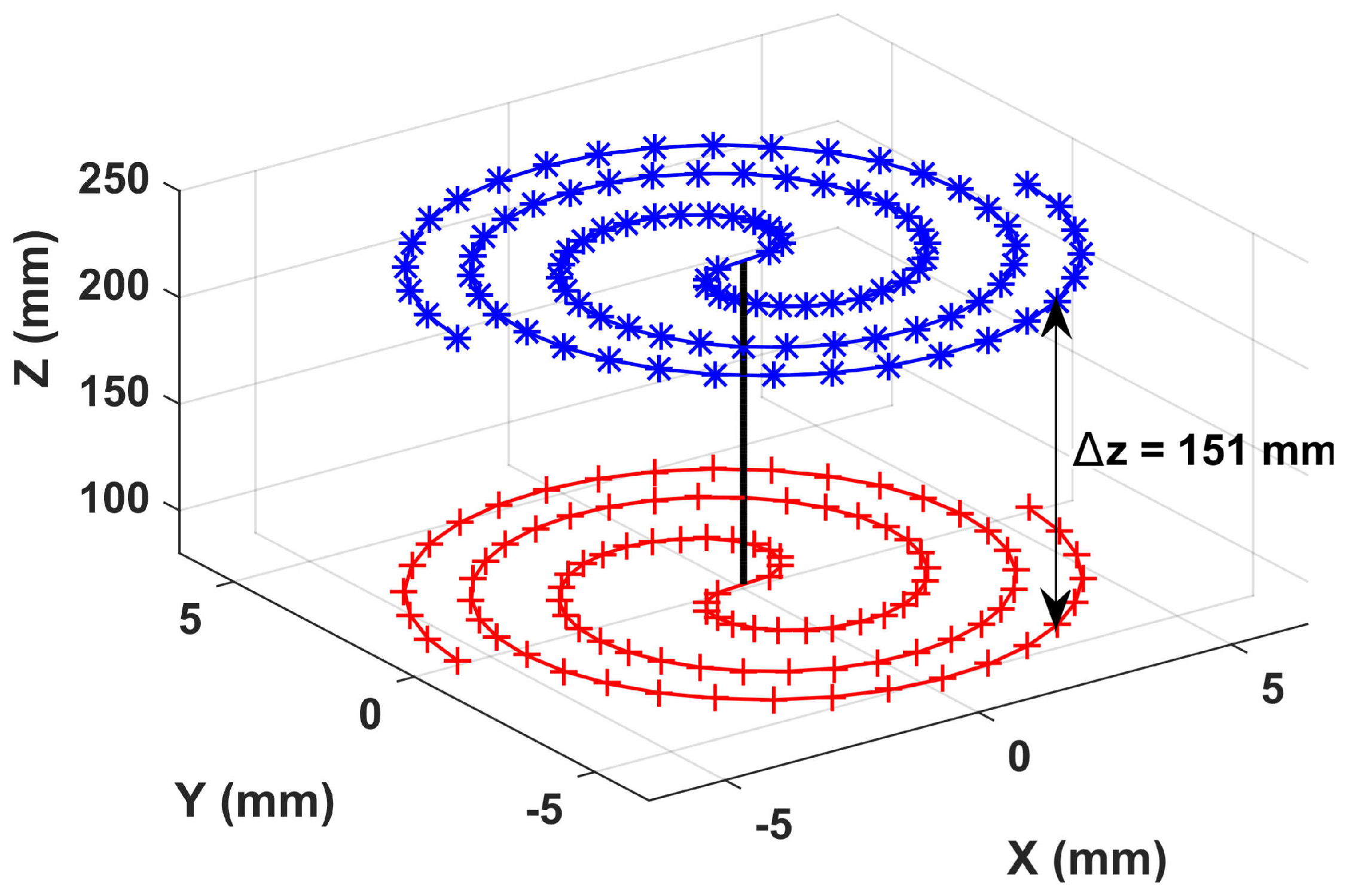

4. Results and Working Distance Range

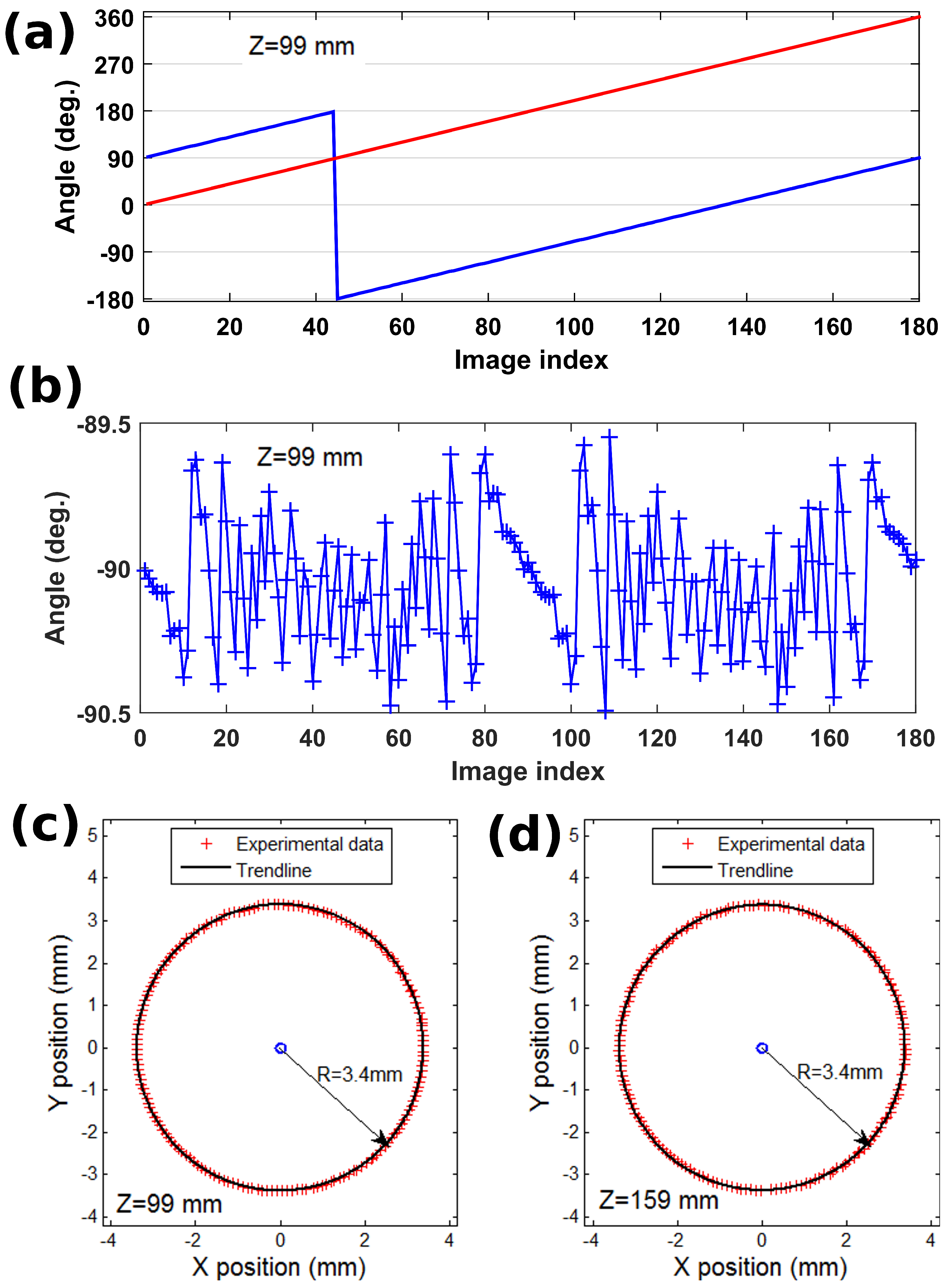

4.1. In-Plane Rotation

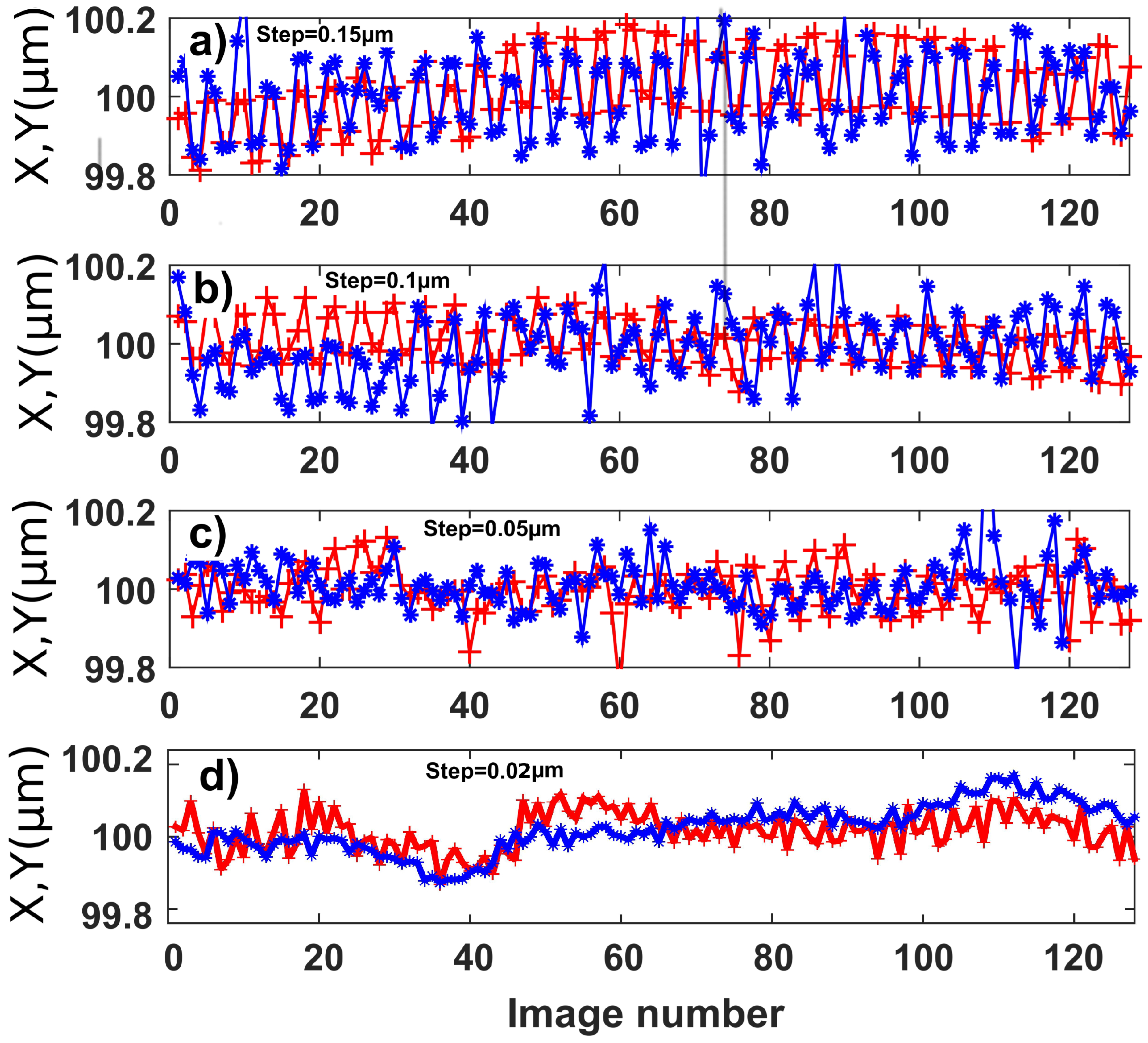

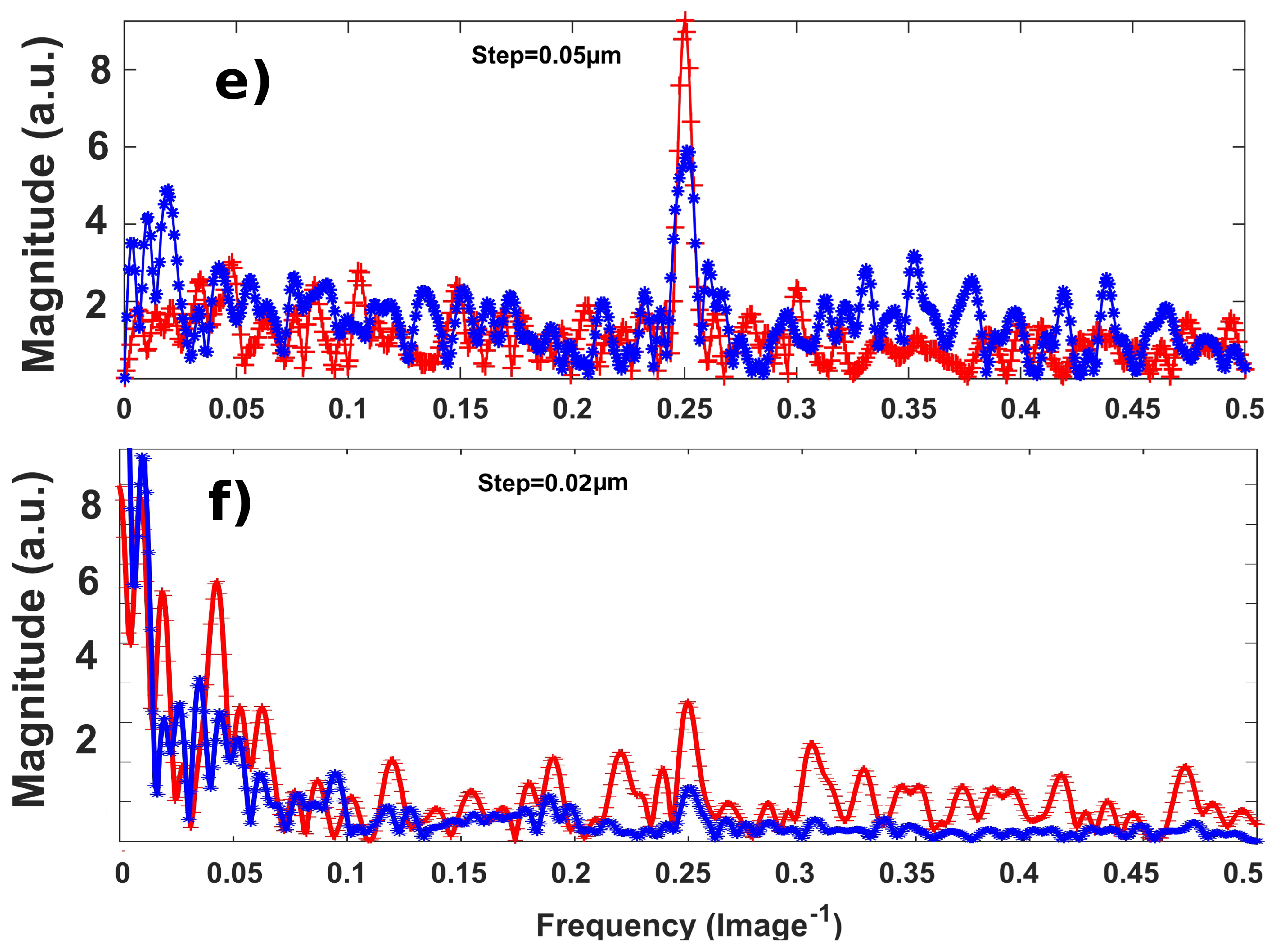

4.2. In-Plane Displacements

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Experimental WD (mm) | Experimental Z (mm) | Reconstruction Distance (mm) | Standard Deviation (deg.) | Linearity (%) |
|---|---|---|---|---|
| 20 | 82 | 82.5 | 0.201 | 0.2 |
| 37 | 99 | 97.8 | 0.219 | 0.3 |
| 97 | 159 | 156.2 | 0.267 | 0.3 |
| 121 | 183 | 183.0 | 0.229 | 0.3 |
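The distance columns of the table can be cross-checked with simple arithmetic. The short script below (the names `ROWS` and `compare_distances` are illustrative) copies the three distance columns and computes, for each row, the gap between Z and WD and the deviation of the reconstruction distance from Z. It shows that Z exceeds WD by 62 mm in every row and that the reconstruction distance stays within 2 % of Z.

```python
# Rows copied from the table: (WD, Z, reconstruction distance), all in mm.
ROWS = [(20, 82, 82.5), (37, 99, 97.8), (97, 159, 156.2), (121, 183, 183.0)]


def compare_distances(rows):
    """Return (WD, Z - WD, reconstruction distance - Z, relative deviation) for each row."""
    out = []
    for wd, z, d_rec in rows:
        deviation = d_rec - z
        out.append((wd, z - wd, deviation, deviation / z))
    return out


for wd, offset, dev, rel in compare_distances(ROWS):
    print(f"WD = {wd:>3} mm  Z - WD = {offset} mm  d_rec - Z = {dev:+.1f} mm ({100 * rel:+.1f} %)")
```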

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Asmad Vergara, M.; Jacquot, M.; Laurent, G.J.; Sandoz, P. Digital Holography as Computer Vision Position Sensor with an Extended Range of Working Distances. Sensors 2018, 18, 2005. https://doi.org/10.3390/s18072005