Drone Mission Definition and Implementation for Automated Infrastructure Inspection Using Airborne Sensors

Abstract

1. Introduction

2. Background

2.1. UAV Fields of Application

2.2. Review of Tools for UAV Mission Definition

- They allow the user to fly the drone manually or to define a set of waypoints forming a path to be followed by the UAV.

- They rely on standard map technologies such as Google Maps and offer a 2D view.

- In both cases, the application allows the user to create a flight plan and upload it automatically to a drone for autonomous flight. Parrot uses the MAVLink standard, a protocol currently used by many drones [45,46] to automate not only flight but also the measurement process.

- They are intended for use with the manufacturer's own drones and cannot be used with an arbitrary drone.

- The interface is oriented towards simple flight plans defined as a time-ordered sequence of waypoints, with other data such as altitude or heading defined manually.

- DJI's most advanced automatic flight creation and execution features are only available in the professional version of the application and for top-tier aircraft.

3. Materials and Methods

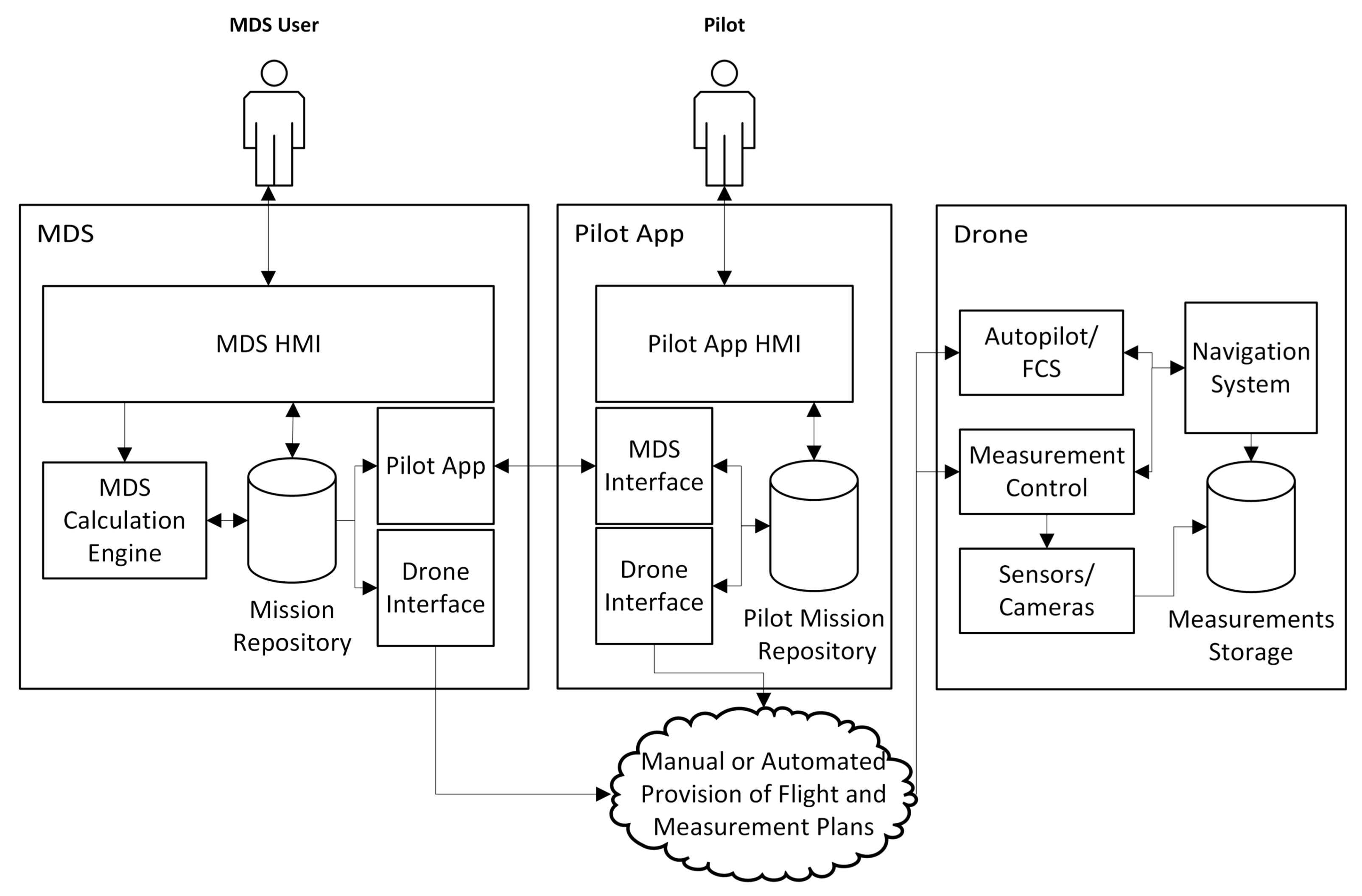

3.1. High Level Requirements and System Interactions

- The MDS users, assumed to be the clients of the MDS platform, who are in charge of designing an inspection operation, previewing it and, once it is judged satisfactory, forwarding it to the pilot assigned by the platform. They interact with the cloud platform through an MDS web-based interface.

- The drone autopilots or flight control systems, which receive the calculated flight plan to be executed automatically in a suitable format (e.g., MAVLink). To facilitate flight plan provision, the MDS cloud platform contains a connector to autopilot systems, which uses either automated or manual procedures to distribute the flight plans in the relevant format.

- The human pilots, who receive the designed operation and, if they accept it, are responsible for executing it (with automated flight support from the autopilot). They interact with the cloud platform through the pilot App, a mobile/web App used to distribute and visualise missions in the field.

- Define a Mission: log into the system; define the mission objectives; set the initial (take-off) and final (landing) waypoints; request the MDS system to generate the flight plan and trajectory for the mission; the mission is saved in the MDS User Mission list.

- Modify a Mission: very similar to the previous use case, but starting by selecting the mission to be edited. The objectives and the initial and final waypoints may be edited.

- Clone a Mission.

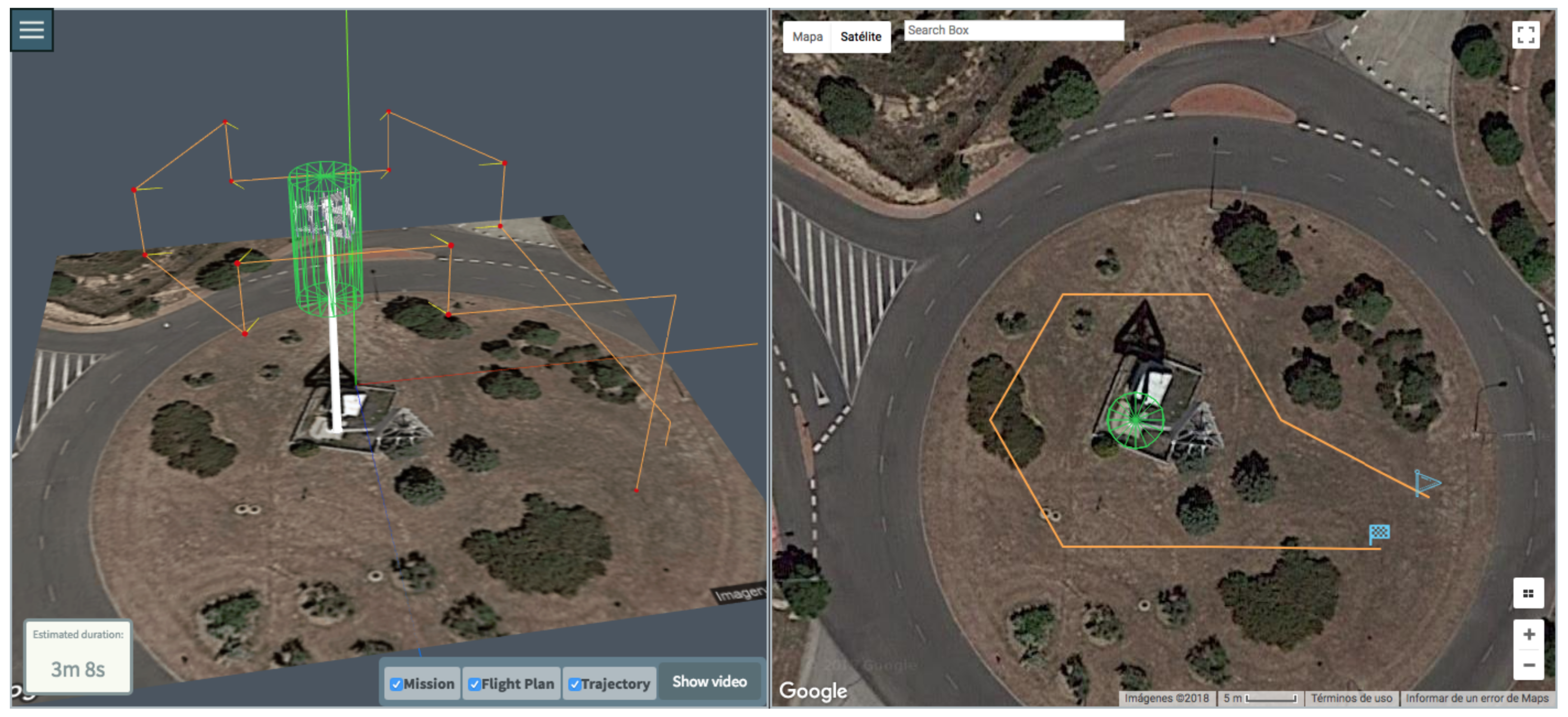

- Visualise a Mission: log into the system; select a mission from the MDS User Mission list; select a visualisation view; and request the MDS system to produce and export the visualisation results (video, text, …). The visualisation view can be: Mission in 2D or 3D (or textual description), Flight Plan in 2D or 3D (or textual description), Flight Plan in a suitable autopilot format (e.g., MAVLink), Trajectory in 2D or 3D, and Simulated Flight Video.

- Approve a Mission: select a pilot from the list of available pilots; select a previously defined mission from the MDS User Mission list; send a notification to the pilot so that he or she can decide whether to accept it; finally, the MDS system changes the mission state to APPROVED.

- Receive a Mission Notification: receive and preview it (the notification should include some key information about the mission).

- Accept/Decline a Mission: log into the system; select a mission that is not DECLINED from the Pilot Mission list; and accept or decline it. As a result, the MDS system changes the mission state to ACCEPTED or DECLINED.

- Visualise a Mission: equivalent to the MDS user's Visualise a Mission use case, but implemented in the pilot App. Only missions that are not DECLINED should be visible.

- End Mission Execution: log into the system; select an ACCEPTED mission from the Pilot Mission list; and terminate it. As a result, the MDS system changes the mission state to END.
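The mission states named in these use cases (APPROVED, ACCEPTED, DECLINED, END) imply a simple mission lifecycle. The Python sketch below encodes it as a transition table. The initial DEFINED state, the event names and the `advance` helper are hypothetical, since the text does not name the state of a freshly saved mission, and the sketch assumes that a pilot can only accept or decline an APPROVED mission.

```python
from enum import Enum


class MissionState(Enum):
    DEFINED = "DEFINED"    # hypothetical: mission saved in the MDS User Mission list
    APPROVED = "APPROVED"  # MDS user approved it and notified a pilot
    ACCEPTED = "ACCEPTED"  # pilot accepted it
    DECLINED = "DECLINED"  # pilot declined it
    END = "END"            # pilot terminated the mission execution


# Allowed (state, event) -> next state; the event names are illustrative only.
TRANSITIONS = {
    (MissionState.DEFINED, "approve"): MissionState.APPROVED,
    (MissionState.APPROVED, "accept"): MissionState.ACCEPTED,
    (MissionState.APPROVED, "decline"): MissionState.DECLINED,
    (MissionState.ACCEPTED, "end"): MissionState.END,
}


def advance(state: MissionState, event: str) -> MissionState:
    """Return the next mission state, rejecting transitions the use cases do not allow."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"event {event!r} not allowed in state {state.value}") from None


def visible_to_pilot(state: MissionState) -> bool:
    """Within the Pilot Mission list, only missions that are not DECLINED are shown."""
    return state is not MissionState.DECLINED
```

Keeping all transitions in one table makes it straightforward for the cloud platform to validate every state change requested by either the web interface or the pilot App against the same rules.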

3.2. MDS Functional Architecture and Context

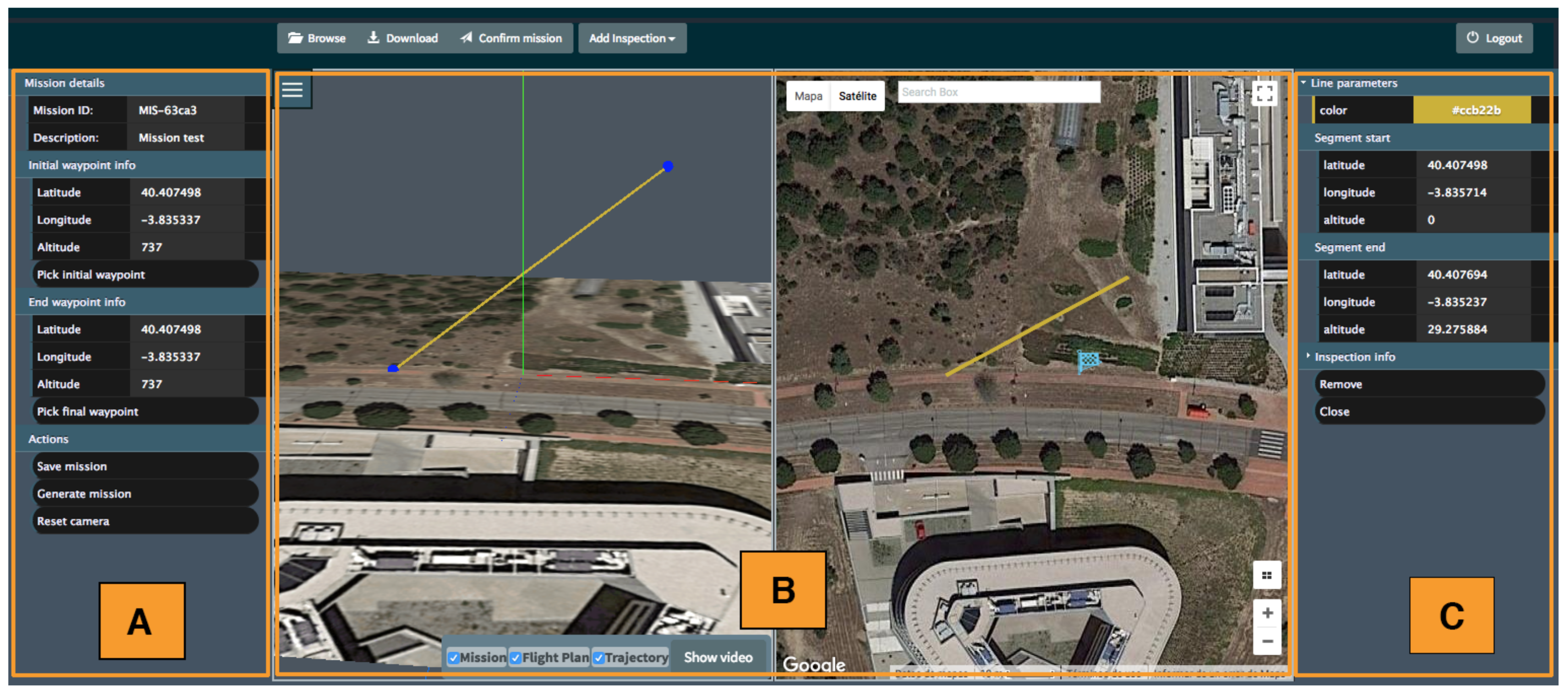

3.3. MDS Input Data Models and Associated Input HMI

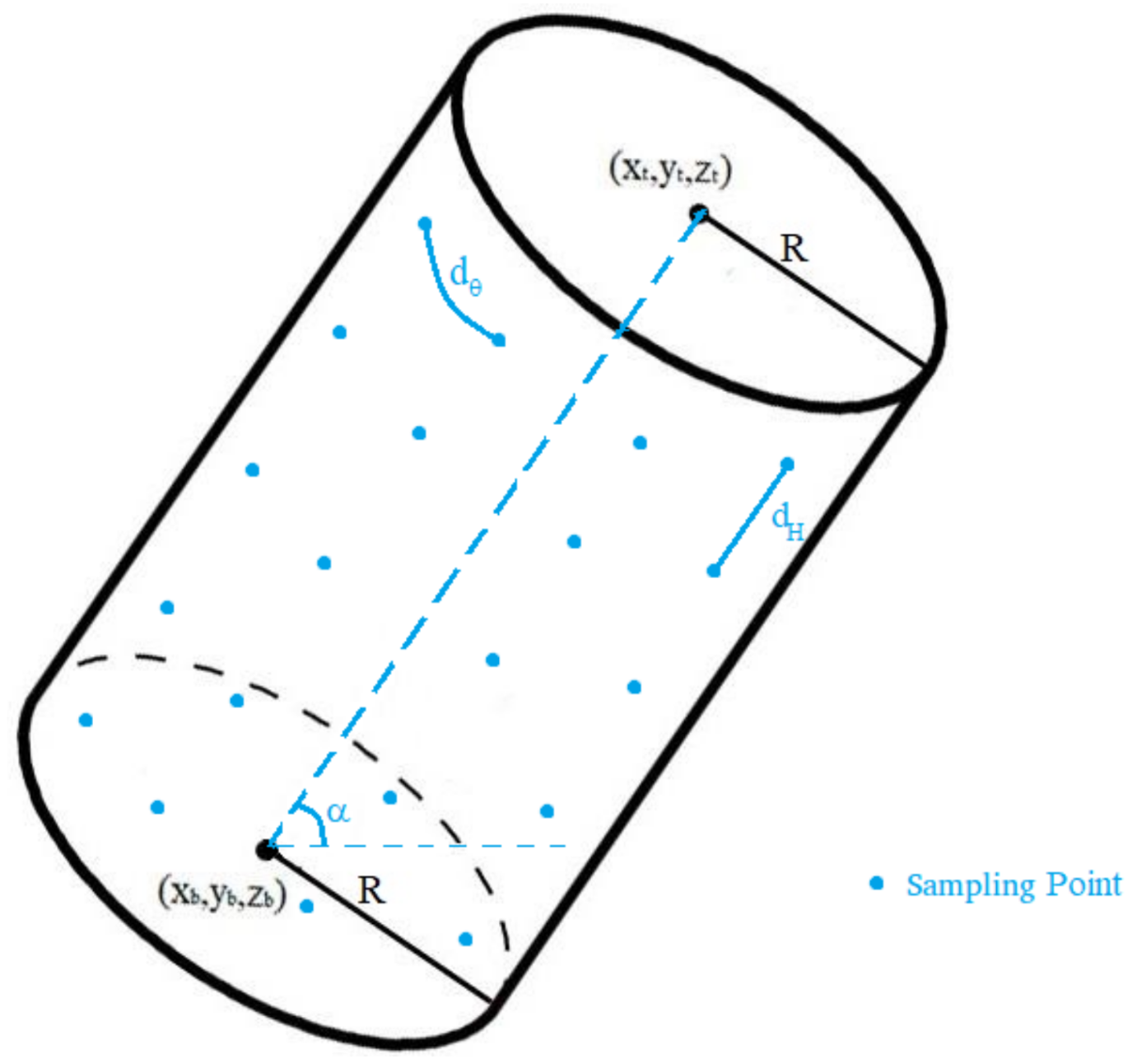

- Sensor name: ID of the sensor to be used.

- Measurement duration: Time needed to complete all the measurements at each sampling position.

- Measurement period: Time between two consecutive measurements at each sampling position.

- Linear sampling distance: Linear distance between two sampling positions.

- Angular sampling distance: Angular distance between two sampling positions.
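These sensor parameters map naturally onto a small record type. The following Python sketch is illustrative only: the class name, the units (seconds, metres, degrees) and the rule for counting measurements per position are assumptions, not the MDS data model.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorMeasurementSpec:
    """Hypothetical record mirroring the sensor fields listed above (units assumed)."""
    sensor_name: str                      # ID of the sensor to be used
    measurement_duration_s: float         # time to complete all measurements at one position
    measurement_period_s: float           # time between two consecutive measurements
    linear_sampling_distance_m: float     # distance between two sampling positions
    angular_sampling_distance_deg: float  # angle between two sampling positions

    def __post_init__(self) -> None:
        # Reject values that would make the timing or the sampling grid undefined.
        if self.measurement_period_s <= 0 or self.measurement_duration_s < 0:
            raise ValueError("period must be positive and duration non-negative")
        if self.linear_sampling_distance_m <= 0 or self.angular_sampling_distance_deg <= 0:
            raise ValueError("sampling distances must be positive")

    def measurements_per_position(self) -> int:
        # Assumption: one measurement at t = 0 and one more every period until the
        # duration has elapsed; the small epsilon absorbs floating-point round-off.
        return math.floor(self.measurement_duration_s / self.measurement_period_s + 1e-9) + 1
```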

- A. Mission details: This panel contains the general information associated with the current mission: the mission ID, a brief text description of the mission, and the initial and final waypoints. It also contains buttons to manage the mission status (save it, generate the flight plan and trajectories, etc.).

- B.

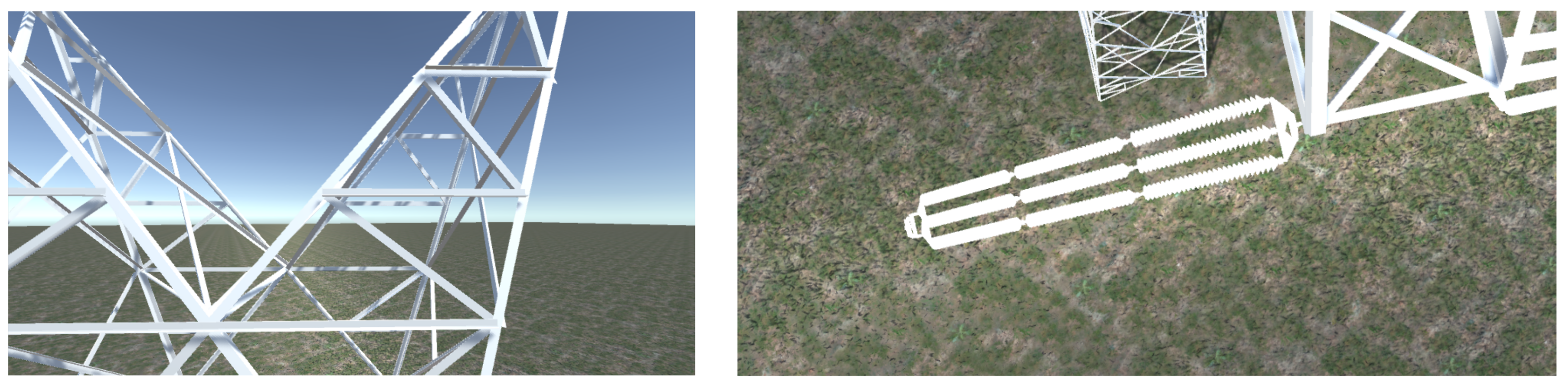

- 3D View and Map View: This central section is divided into two subsections arranged horizontally. The left one corresponds to the generated 3D View, using WebGL technology to generate/manipulate 3D views of the infrastructures, missions, flight plans and trajectories. This 3D view changes according to the center and scale of the 2D map from Google Maps, located at the right of the panel.

- C. Inspection details: This panel holds the details of the inspection selected within the mission specification. The information and parameters it contains depend on the inspection type.
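The text does not specify how the 3D view follows the center and scale of the 2D map. One plausible approach, sketched below in Python under that assumption, derives the ground resolution from the Google Maps zoom level with the standard Web Mercator relation for 256-pixel tiles, cos(lat) · 2πR / (256 · 2^zoom) metres per pixel, and from it the ground area the 3D camera should frame. The helper names and the idea of matching extents are hypothetical.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis used by Web Mercator
TILE_SIZE_PX = 256          # Google Maps base tile size in pixels


def metres_per_pixel(lat_deg: float, zoom: float) -> float:
    """Ground resolution of a Web Mercator map at a given latitude and zoom level."""
    return (math.cos(math.radians(lat_deg)) * 2 * math.pi * EARTH_RADIUS_M
            / (TILE_SIZE_PX * 2 ** zoom))


def view_extent_m(lat_deg: float, zoom: float, width_px: int, height_px: int) -> tuple[float, float]:
    """Approximate ground width and height (metres) shown by a map viewport.

    Hypothetical helper: the 3D view could frame this extent around the map center so
    that both views cover the same area. Valid for small areas where the scale is ~constant.
    """
    res = metres_per_pixel(lat_deg, zoom)
    return width_px * res, height_px * res
```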

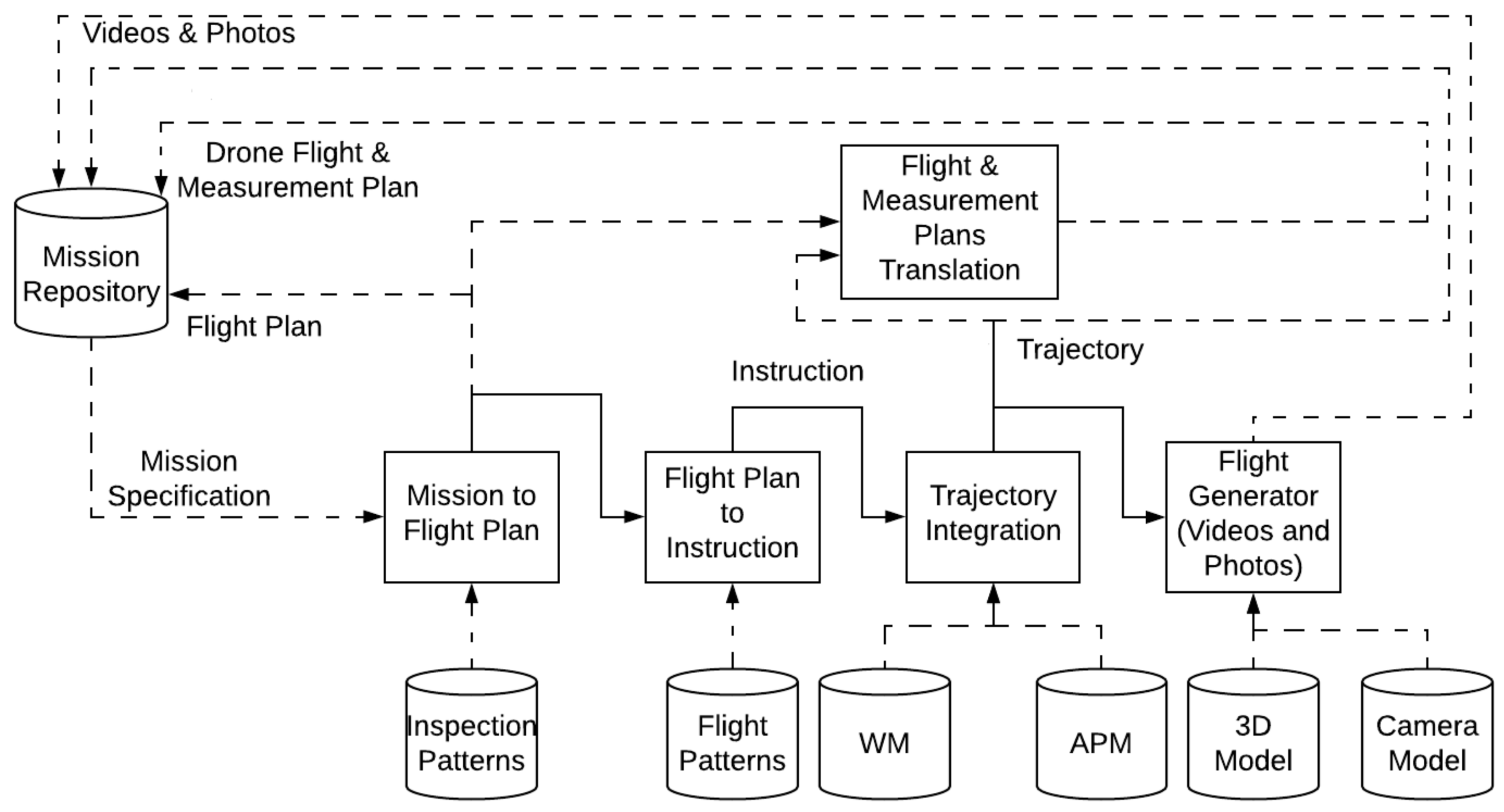

3.4. Mission Calculation and Associated Data Models

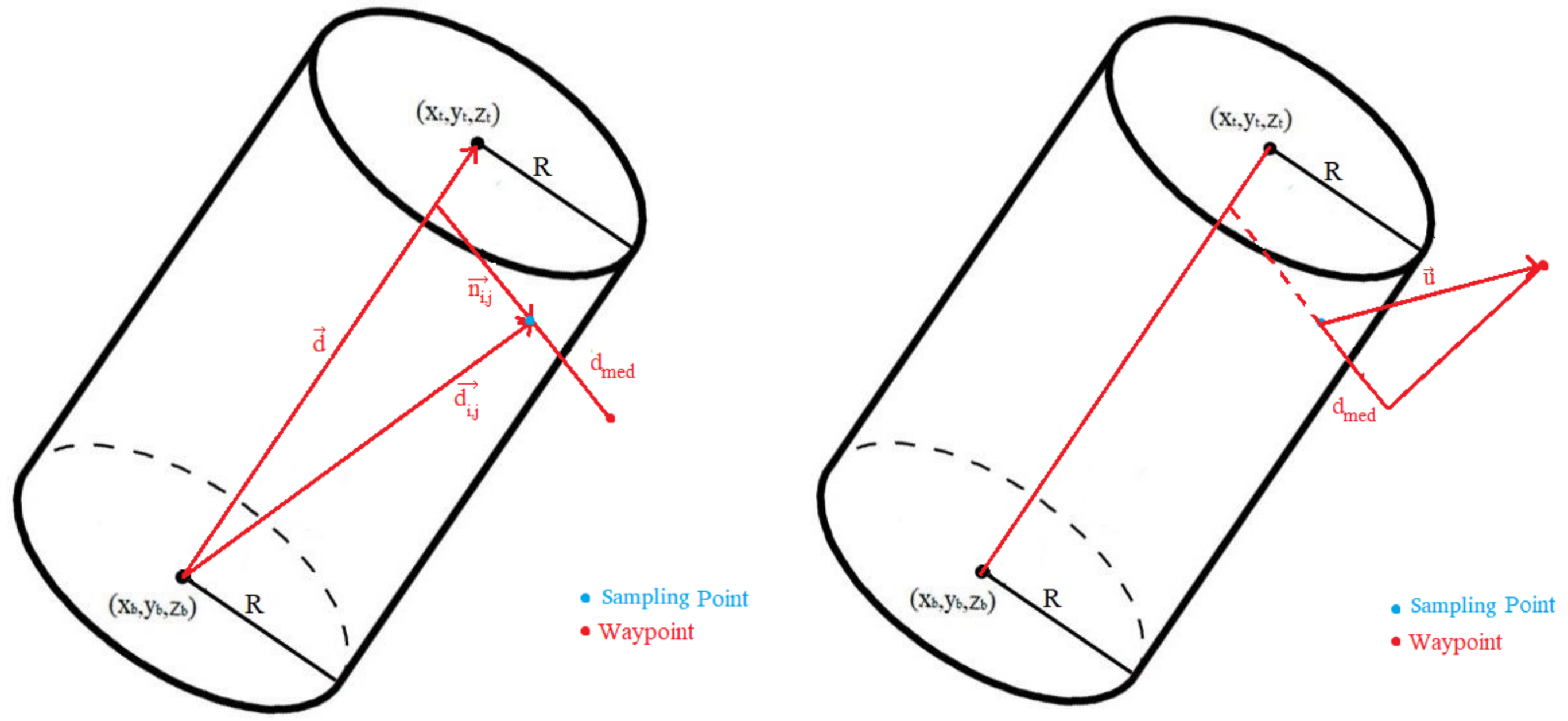

3.4.1. Flight Plan Calculation

- Bottom Base Circumference and Top Base Circumference, sampled at constant angular distance.

- Bottom Base Circle and Top Base Circle, sampled using polar coordinates at constant angular and linear sampling distances.

- Cylinder Wall, sampled using constant angular and height-aligned linear sampling distances. This is the sampling process to be detailed next.
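The three sampling patterns above can be illustrated for a vertical cylinder (e.g., a tank or chimney) in a local East-North-Up frame centred on its base. This Python sketch assumes the sampled points lie on the surface itself (drone positions would later be offset along the surface normal) and that each requested step is reduced so that an integer number of equal intervals covers the circle, radius or height; the function names and this rounding rule are hypothetical, not the MDS implementation.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # local East, North, Up coordinates in metres


def _steps(total: float, step: float) -> int:
    # Number of equal intervals, each no longer than `step`, covering `total` (at least one).
    return max(1, math.ceil(total / step - 1e-9))


def circumference_points(radius: float, z: float, dtheta_deg: float) -> List[Point]:
    """Base circumference sampled at constant angular distance."""
    n = _steps(360.0, dtheta_deg)
    return [(radius * math.cos(2 * math.pi * k / n), radius * math.sin(2 * math.pi * k / n), z)
            for k in range(n)]


def circle_points(radius: float, z: float, dtheta_deg: float, dr: float) -> List[Point]:
    """Base disc in polar coordinates: concentric rings every dr, each sampled every dtheta."""
    points: List[Point] = [(0.0, 0.0, z)]  # the centre of the disc
    rings = _steps(radius, dr)
    for i in range(1, rings + 1):
        points += circumference_points(radius * i / rings, z, dtheta_deg)
    return points


def wall_points(radius: float, height: float, dtheta_deg: float, dz: float) -> List[Point]:
    """Cylinder wall: one circumference per height level, with levels aligned every dz."""
    levels = _steps(height, dz)
    points: List[Point] = []
    for j in range(levels + 1):
        points += circumference_points(radius, height * j / levels, dtheta_deg)
    return points
```

Because every level reuses the same angular positions, the wall samples form vertical columns, which is one reading of "height-aligned" sampling.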

- Normal-to-surface illumination.

- Defined constant camera tilt and drone yaw angles.

- Defined constant camera tilt and normal-to-surface drone yaw angle.
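The three orientation strategies can be expressed as a function returning the drone yaw and camera tilt for a sampling position. This Python sketch assumes an East-North-Up frame, yaw measured clockwise from North, tilt measured from the horizontal (negative when looking down), and that "normal to surface" means pointing the camera along the inward surface normal; the enum and function names are hypothetical.

```python
import math
from enum import Enum, auto
from typing import Tuple

Vector = Tuple[float, float, float]  # East, North, Up components


class Strategy(Enum):
    NORMAL_TO_SURFACE = auto()         # camera boresight along the inward surface normal
    CONSTANT_TILT_AND_YAW = auto()     # both angles fixed by the user
    CONSTANT_TILT_NORMAL_YAW = auto()  # fixed camera tilt, drone yaw facing the surface


def normal_angles(normal: Vector) -> Tuple[float, float]:
    """Yaw and tilt (degrees) of the direction opposite to an outward surface normal."""
    e, n, u = (-c for c in normal)  # look into the surface
    yaw = math.degrees(math.atan2(e, n)) % 360.0           # clockwise from North
    tilt = math.degrees(math.atan2(u, math.hypot(e, n)))   # negative = looking down
    return yaw, tilt


def camera_attitude(strategy: Strategy, normal: Vector,
                    fixed_yaw: float = 0.0, fixed_tilt: float = 0.0) -> Tuple[float, float]:
    """Return (drone yaw, camera tilt) in degrees for one sampling position."""
    if strategy is Strategy.CONSTANT_TILT_AND_YAW:
        return fixed_yaw % 360.0, fixed_tilt
    yaw, tilt = normal_angles(normal)
    if math.hypot(normal[0], normal[1]) < 1e-9:
        # Vertical normal (e.g. a top base): yaw is undefined, so fall back to the fixed yaw.
        yaw = fixed_yaw % 360.0
    if strategy is Strategy.CONSTANT_TILT_NORMAL_YAW:
        return yaw, fixed_tilt
    return yaw, tilt
```

For a point on the cylinder wall the outward normal is horizontal, so the normal-to-surface strategy yields zero tilt and a yaw facing the cylinder axis; for the top base it yields a camera pointing straight down.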

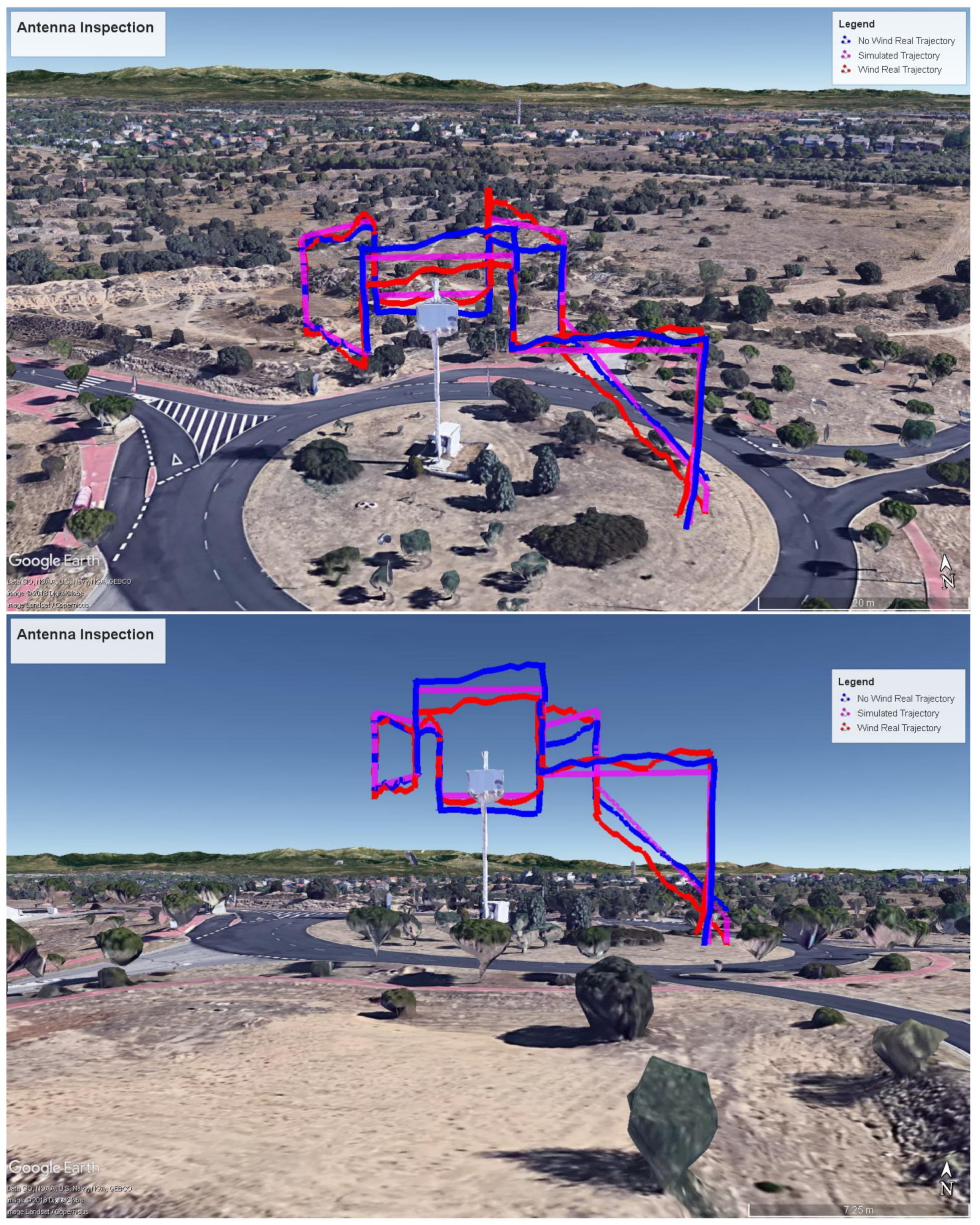

3.4.2. Trajectory Calculation

3.4.3. Flight Generator: Simulated Video/Sensing Generation

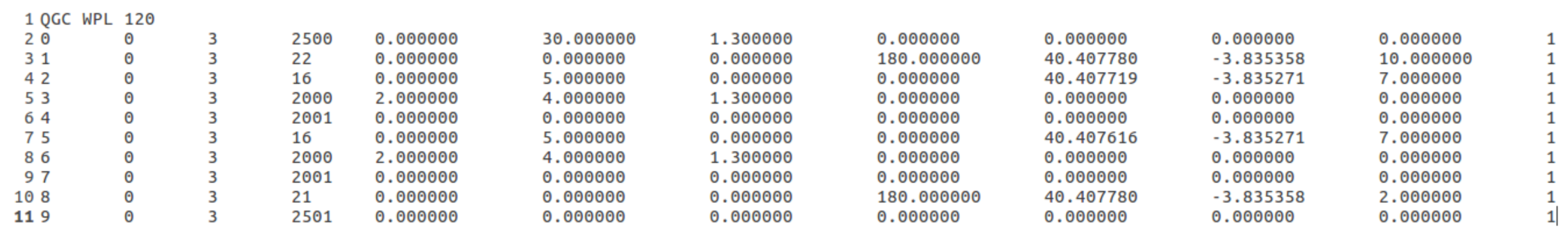

3.4.4. Flight and Measurement Plan Translations

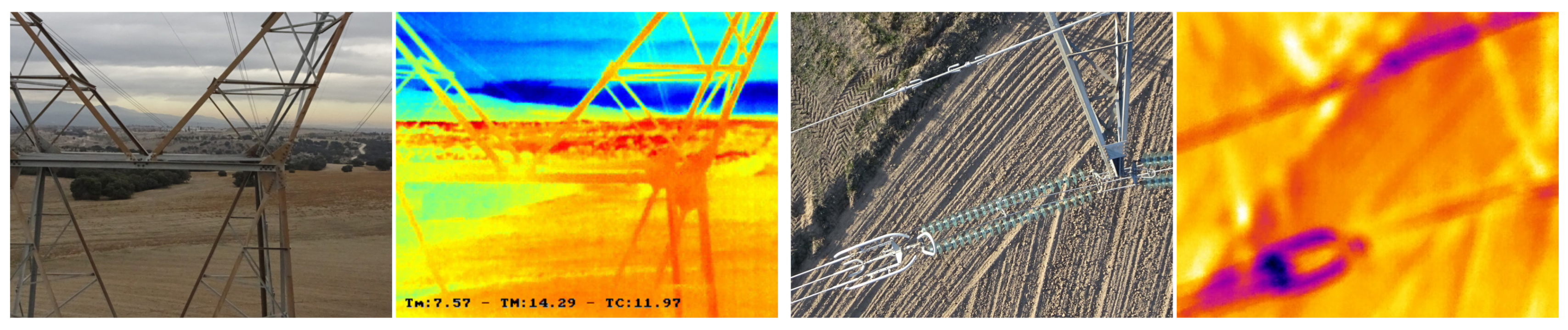
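As an illustration of the translation step, the Python sketch below writes a waypoint list as a MAVLink mission in the plain-text QGroundControl WPL 110 format (tab-separated fields: index, current flag, frame, command, param1 to param4, latitude, longitude, altitude, autocontinue). The command and frame numbers are the standard MAVLink values (MAV_CMD_NAV_WAYPOINT = 16, MAV_CMD_NAV_LAND = 21, MAV_CMD_NAV_TAKEOFF = 22, MAV_CMD_IMAGE_START_CAPTURE = 2000, MAV_FRAME_GLOBAL_RELATIVE_ALT = 3). The `Waypoint` type, the use of the waypoint hold time for the measurement duration and the single capture per inspection waypoint are assumptions, not the MDS translator; support for camera commands, and when they fire relative to waypoint arrival, differs between autopilots.

```python
from dataclasses import dataclass
from typing import List

MAV_FRAME_GLOBAL = 0
MAV_FRAME_GLOBAL_RELATIVE_ALT = 3
MAV_CMD_NAV_WAYPOINT = 16
MAV_CMD_NAV_LAND = 21
MAV_CMD_NAV_TAKEOFF = 22
MAV_CMD_IMAGE_START_CAPTURE = 2000


@dataclass
class Waypoint:  # hypothetical flight-plan element
    lat: float
    lon: float
    alt_m: float           # altitude relative to take-off
    yaw_deg: float
    hold_s: float = 0.0    # e.g. the measurement duration at an inspection waypoint
    inspect: bool = False  # request one picture once this waypoint is reached


def _item(seq: int, frame: int, cmd: int, p1: float = 0.0, p2: float = 0.0, p3: float = 0.0,
          p4: float = 0.0, lat: float = 0.0, lon: float = 0.0, alt: float = 0.0,
          current: int = 0) -> str:
    fields = [seq, current, frame, cmd, p1, p2, p3, p4, lat, lon, alt, 1]  # last: autocontinue
    return "\t".join(str(f) for f in fields)


def to_qgc_wpl(home: Waypoint, plan: List[Waypoint]) -> str:
    """Serialise take-off, waypoints (with optional capture) and landing as QGC WPL 110 text."""
    lines = ["QGC WPL 110",
             _item(0, MAV_FRAME_GLOBAL, MAV_CMD_NAV_WAYPOINT, lat=home.lat, lon=home.lon,
                   alt=home.alt_m, current=1)]  # item 0: home position
    seq = 1
    lines.append(_item(seq, MAV_FRAME_GLOBAL_RELATIVE_ALT, MAV_CMD_NAV_TAKEOFF,
                       p4=plan[0].yaw_deg, lat=home.lat, lon=home.lon, alt=plan[0].alt_m))
    for wp in plan:
        seq += 1
        # NAV_WAYPOINT: param1 = hold time (s), param4 = yaw (deg)
        lines.append(_item(seq, MAV_FRAME_GLOBAL_RELATIVE_ALT, MAV_CMD_NAV_WAYPOINT,
                           p1=wp.hold_s, p4=wp.yaw_deg, lat=wp.lat, lon=wp.lon, alt=wp.alt_m))
        if wp.inspect:
            seq += 1
            # IMAGE_START_CAPTURE: param2 = interval (0), param3 = number of images (1)
            lines.append(_item(seq, MAV_FRAME_GLOBAL_RELATIVE_ALT,
                               MAV_CMD_IMAGE_START_CAPTURE, p3=1))
    seq += 1
    lines.append(_item(seq, MAV_FRAME_GLOBAL_RELATIVE_ALT, MAV_CMD_NAV_LAND,
                       lat=home.lat, lon=home.lon))
    return "\n".join(lines) + "\n"
```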

3.5. Drone Flight and Measurement Plan Execution

- For the Bebop 2 drone, the autopilot/FCS and measurement control systems are integrated: the system simply executes the aforementioned instructions in the order in which they are provided, controlling the drone's hardware components (mainly motors and camera) and georeferencing the trajectory with the on-board GPS.

- For the DJI drone the procedure is more complex. The flight plan is executed using the DJI drone SDK, while the measurements are taken by the cameras when triggered by the NUC. The NUC triggers image capture, according to the measurement plan, once it determines that an inspection waypoint in the flight plan has been reached and that the aircraft yaw is consistent with the yaw constraints at that waypoint. To reduce GPS and IMU measurement errors, a Kalman filter is used, along with integrity tests that discard inertial and GPS measurement outliers. Gimbal tilt cannot be controlled automatically on this drone, which limits the usable illumination strategies.
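The triggering rule described for the DJI drone can be sketched as a predicate evaluated on each filtered position and attitude estimate: take a picture when the aircraft is close enough to the inspection waypoint and its yaw error, wrapped to [-180°, 180°), is within a tolerance. The thresholds, the flat-Earth (equirectangular) distance approximation and the function names in this Python sketch are hypothetical; the text does not give the actual NUC logic or values.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6371000.0  # mean Earth radius, adequate for short distances

State = Tuple[float, float, float, float]  # (lat deg, lon deg, altitude m, yaw deg)


def horizontal_distance_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Equirectangular approximation of ground distance, fine over a few hundred metres."""
    phi = math.radians((lat1 + lat2) / 2)
    dx = math.radians(lon2 - lon1) * math.cos(phi) * EARTH_RADIUS_M
    dy = math.radians(lat2 - lat1) * EARTH_RADIUS_M
    return math.hypot(dx, dy)


def yaw_error_deg(actual: float, target: float) -> float:
    """Signed smallest difference between two headings, in [-180, 180)."""
    return (actual - target + 180.0) % 360.0 - 180.0


def should_trigger(est: State, wp: State, pos_tol_m: float = 1.0,
                   alt_tol_m: float = 1.0, yaw_tol_deg: float = 5.0) -> bool:
    """True when the estimated state has reached the inspection waypoint wp."""
    lat, lon, alt, yaw = est
    wlat, wlon, walt, wyaw = wp
    return (horizontal_distance_m(lat, lon, wlat, wlon) <= pos_tol_m
            and abs(alt - walt) <= alt_tol_m
            and abs(yaw_error_deg(yaw, wyaw)) <= yaw_tol_deg)
```

Wrapping the yaw difference avoids a spurious 358° error when the aircraft heading is 359° and the required heading is 1°.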

4. Results

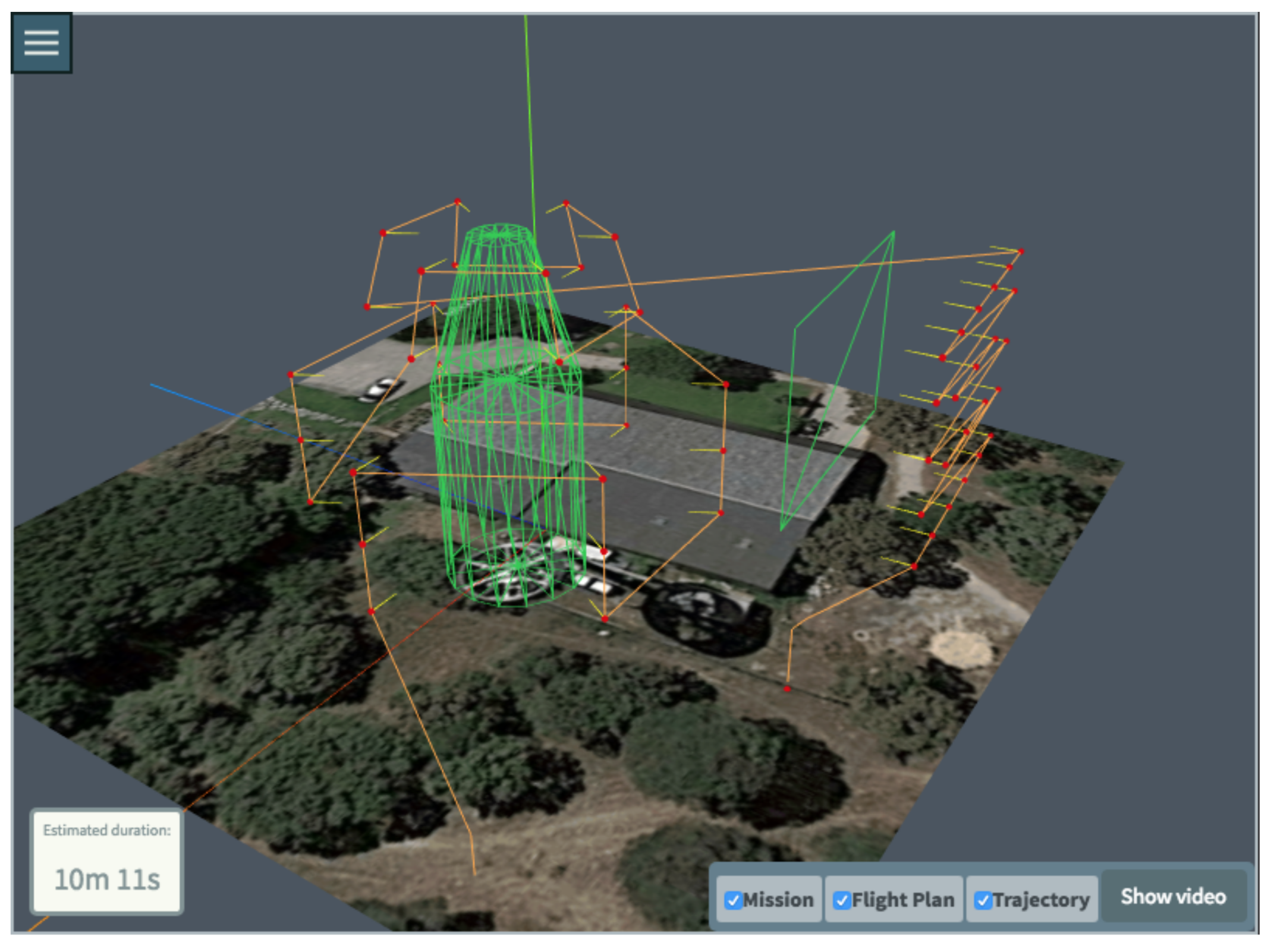

4.1. Mission Creation Process Results

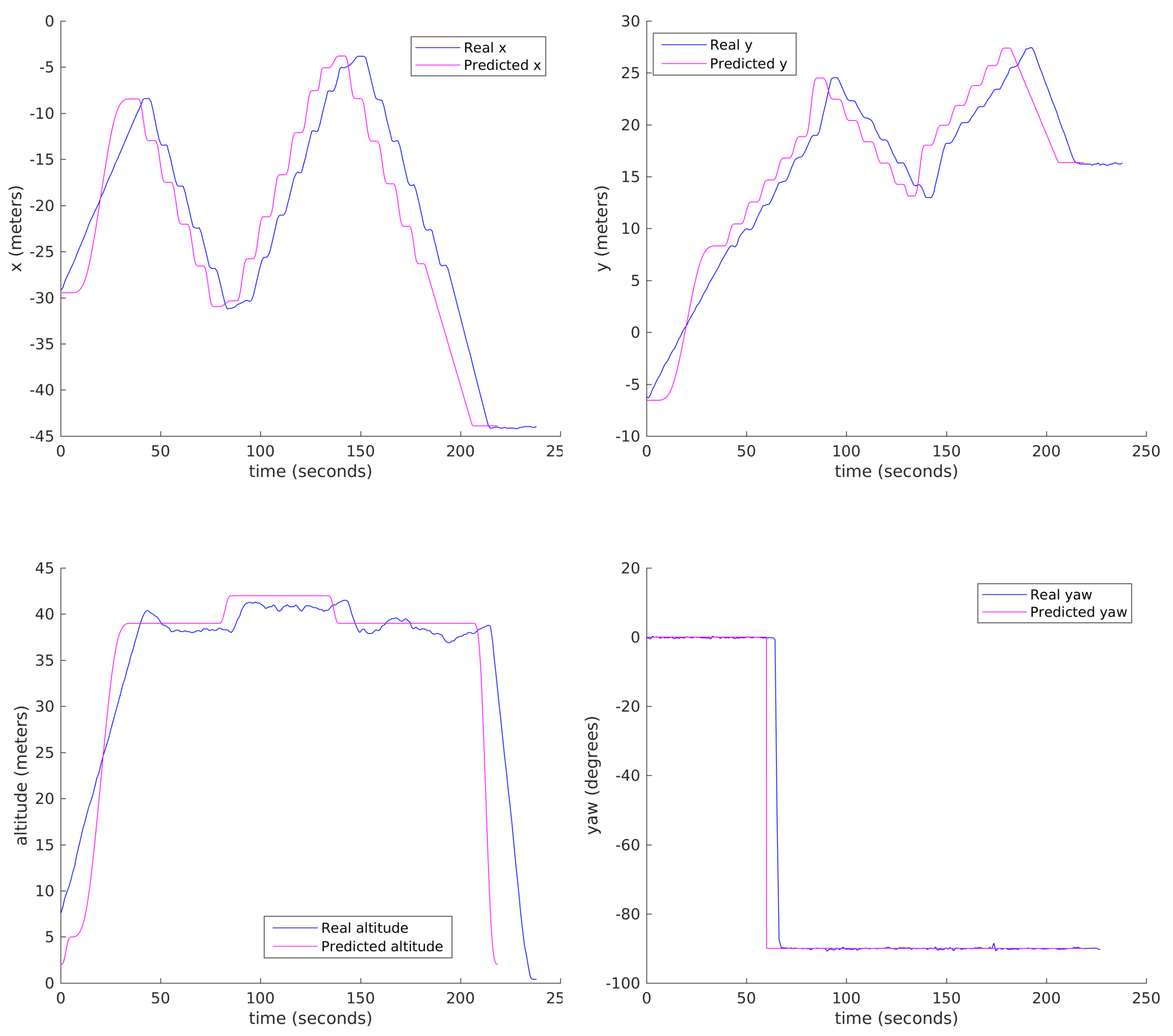

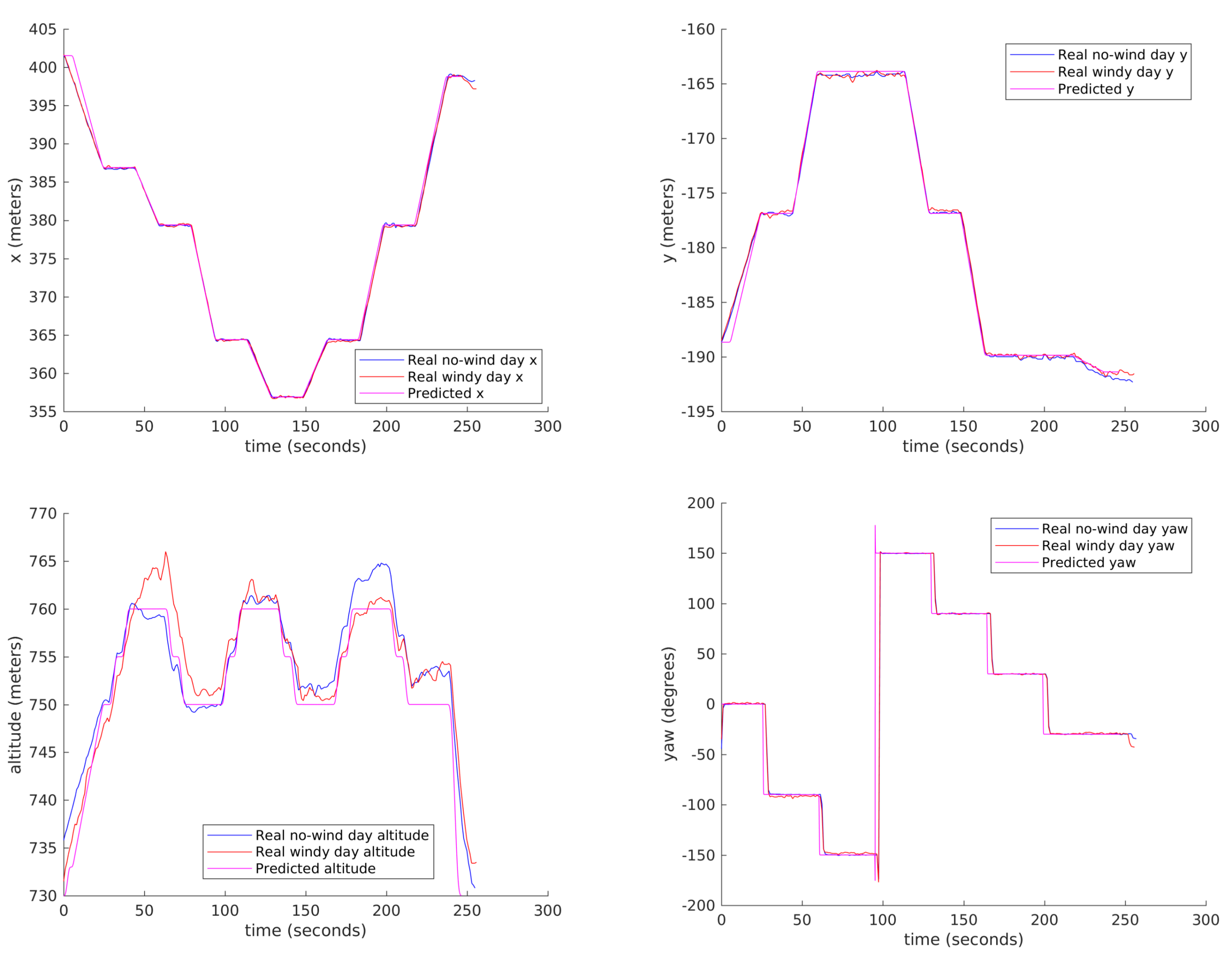

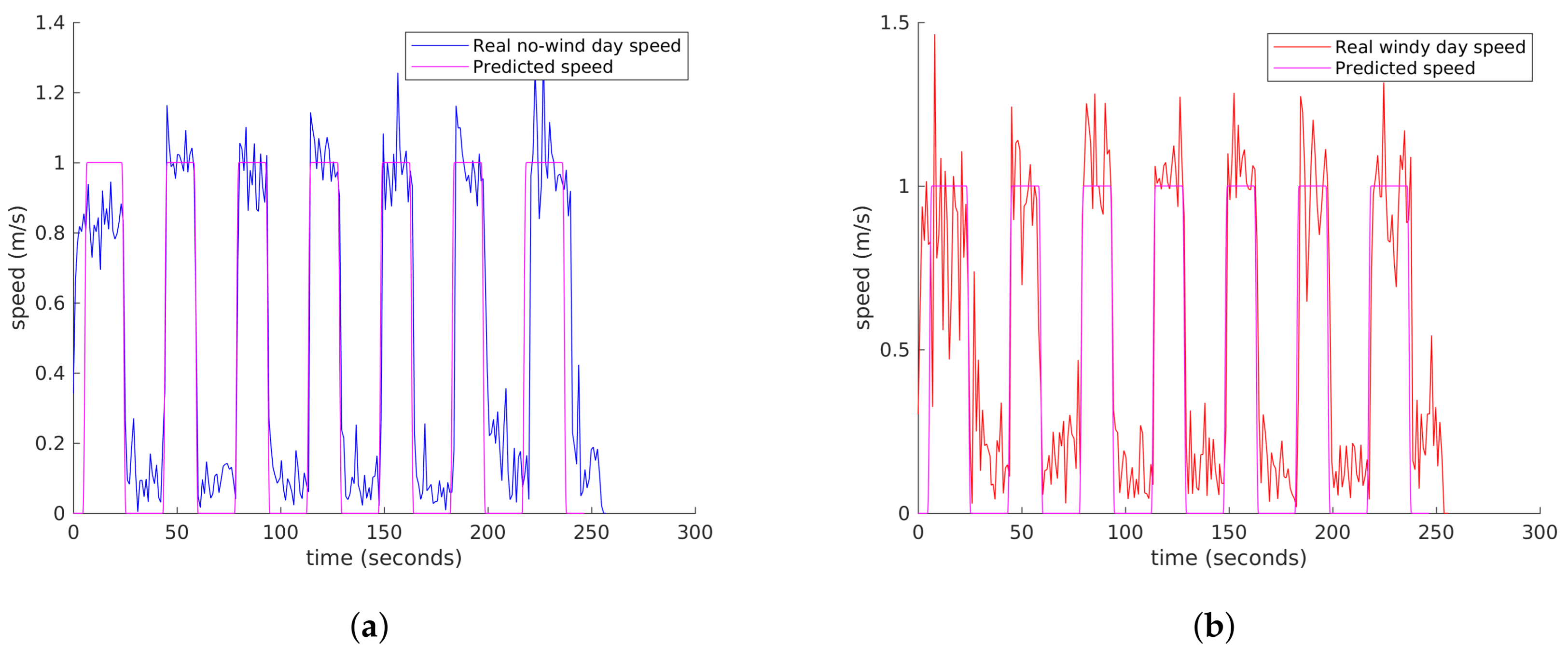

4.2. Mission Calculation and Comparison with Real Flights Execution

5. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- SESAR Joint Undertaking. European Drones Outlook Study. Unlocking the Value for Europe; Technical Report; SESAR Joint Undertaking: Brussels, 2016.

- Lum, C.; Mackenzie, M.; Shaw-Feather, C.; Luker, E.; Dunbabin, M. Multispectral Imaging and Elevation Mapping from an Unmanned Aerial System for Precision Agriculture Applications. In Proceedings of the 13th International Conference on Precision Agriculture, St. Louis, MO, USA, 31 July–4 August 2016.

- Hamza, M.; Jehangir, A.; Ahmad, T.; Sohail, A.; Naeem, M. Design of surveillance drone with X-ray camera, IR camera and metal detector. In Proceedings of the 2017 Ninth International Conference on Ubiquitous and Future Networks (ICUFN), Milan, Italy, 4–7 July 2017; pp. 111–114.

- Zipline. Zipline. 2017. Available online: http://www.flyzipline.com (accessed on 11 April 2018).

- Amazon. Amazon Prime Air. 2016. Available online: https://www.amazon.com/Amazon-Prime-Air/b?node=8037720011 (accessed on 11 April 2018).

- Stimpson, A.; Cummings, M.; Nneji, V.C.; Goodrich, K.H. Exploring Concepts of Operations for On-Demand Passenger Air Transportation. In Proceedings of the 17th AIAA Aviation Technology, Integration, and Operations Conference, Denver, CO, USA, 5–9 June 2017.

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Ariff, O.K. Introduction to Unmanned Aircraft Systems; Marshall, D.M., Barnhart, R.K., Hottman, S.B., Shappee, E., Thomas Most, M., Eds.; CRC Press: Boca Raton, FL, USA, 2017.

- Moore, A.; Schubert, M.; Rymer, N. Autonomous Inspection of Electrical Transmission Structures with Airborne UV Sensors and Automated Air Traffic Management. In Proceedings of the AIAA Information Systems-AIAA Infotech-Aerospace, Kissimmee, FL, USA, 8–12 January 2018.

- La Cour-Harbo, A. Quantifying risk of ground impact fatalities of power line inspection BVLOS flight with small unmanned aircraft. In Proceedings of the 2017 International Conference on Unmanned Aircraft Systems (ICUAS), Miami, FL, USA, 13–16 June 2017.

- Carvajal, F.; Aguera, F.; Perez, M. Surveying a landslide in a road embankment using unmanned aerial vehicle photogrammetry. In Proceedings of the International Conference on Unmanned Aerial Vehicle in Geomatics (UAV-g), Zurich, Switzerland, 14–16 September 2011; pp. 14–16.

- Fiori, L.; Doshi, A.; Martinez, E.; Orams, M.B.; Bollard-Breen, B. The use of unmanned aerial systems in marine mammal research. Remote Sens. 2017, 9, 543.

- Hodgson, J.C.; Mott, R.; Baylis, S.M.; Pham, T.T.; Wotherspoon, S.; Kilpatrick, A.D.; Segaran, R.R.; Reid, I.; Terauds, A.; Koh, L.P. Drones count wildlife more accurately and precisely than humans. Methods Ecol. Evol. 2017.

- Belmonte, N.; Staulo, S.; Fiorot, S.; Luetto, C.; Rizzi, P.; Baricco, M. Fuel cell powered octocopter for inspection of mobile cranes: Design, cost analysis and environmental impacts. Appl. Energy 2018, 215, 556–565.

- Barkham, R.; Bokhari, S.; Saiz, A. Urban Big Data: City Management and Real Estate Markets; GovLab Digest: New York, NY, USA, 2018.

- Matsuoka, R.; Nagusa, I.; Yasuhara, H.; Mori, M.; Katayama, T.; Yachi, N.; Hasui, A.; Katakuse, M.; Atagi, T. Measurement of large-scale solar power plant by using images acquired by non-metric digital camera on board UAV. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 39, 435–440.

- Arenella, A.; Greco, A.; Saggese, A.; Vento, M. Real Time Fault Detection in Photovoltaic Cells by Cameras on Drones. In Image Analysis and Recognition, Proceedings of the 14th International Conference, ICIAR 2017, Montreal, QC, Canada, 5–7 July 2017; Karray, F., Campilho, A., Cheriet, F., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 617–625.

- Quater, P.B.; Grimaccia, F.; Leva, S.; Mussetta, M.; Aghaei, M. Light Unmanned Aerial Vehicles (UAVs) for Cooperative Inspection of PV Plants. IEEE J. Photovolt. 2014, 4, 1107–1113.

- Addabbo, P.; Angrisano, A.; Bernardi, M.L.; Gagliarde, G.; Mennella, A.; Nisi, M.; Ullo, S. A UAV infrared measurement approach for defect detection in photovoltaic plants. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21–23 June 2017; pp. 345–350.

- Rossi, M.; Brunelli, D.; Adami, A.; Lorenzelli, L.; Menna, F.; Remondino, F. Gas-Drone: Portable gas sensing system on UAVs for gas leakage localization. In Proceedings of the 2014 IEEE SENSORS, Valencia, Spain, 2–5 November 2014; pp. 1431–1434.

- Kersnovski, T.; Gonzalez, F.; Morton, K. A UAV system for autonomous target detection and gas sensing. In Proceedings of the 2017 IEEE Aerospace Conference, Big Sky, MT, USA, 4–11 March 2017; pp. 1–12.

- Capolupo, A.; Pindozzi, S.; Okello, C.; Fiorentino, N.; Boccia, L. Photogrammetry for environmental monitoring: The use of drones and hydrological models for detection of soil contaminated by copper. Sci. Total Environ. 2015, 514, 298–306.

- Vale, A.; Ventura, R.; Carvalho, P. Application of unmanned aerial vehicles for radiological inspection. Fusion Eng. Des. 2017, 124, 492–495.

- Torii, T.; Sanada, Y. Radiation measurement by unmanned aircraft after Fukushima Daiichi nuclear power plant accident. In Proceedings of the Symposium ICAO, Montreal, QC, Canada, 23–25 March 2015.

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

- Ventura, D.; Bruno, M.; Lasinio, G.J.; Belluscio, A.; Ardizzone, G. A low-cost drone based application for identifying and mapping of coastal fish nursery grounds. Estuar. Coast. Shelf Sci. 2016, 171, 85–98.

- Rau, J.; Jhan, J.; Lo, C.; Lin, Y. Landslide mapping using imagery acquired by a fixed-wing UAV. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 1-C22.

- Niethammer, U.; Rothmund, S.; Schwaderer, U.; Zeman, J.; Joswig, M. Open source image-processing tools for low-cost UAV-based landslide investigations. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, C22.

- Branco, L.H.C.; Segantine, P.C.L. MaNIAC-UAV - A methodology for automatic pavement defects detection using images obtained by Unmanned Aerial Vehicles. J. Phys. Conf. Ser. 2015, 633, 012122.

- Máthé, K.; Buşoniu, L. Vision and Control for UAVs: A Survey of General Methods and of Inexpensive Platforms for Infrastructure Inspection. Sensors 2015, 15, 14887–14916.

- Santos, T.; Moreira, M.; Almeida, J.; Dias, A.; Martins, A.; Dinis, J.; Formiga, J.; Silva, E. PLineD: Vision-based power lines detection for Unmanned Aerial Vehicles. In Proceedings of the 2017 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Coimbra, Portugal, 26–28 April 2017; pp. 253–259.

- Zhang, F.; Wang, W.; Zhao, Y.; Li, P.; Lin, Q.; Jiang, L. Automatic diagnosis system of transmission line abnormalities and defects based on UAV. In Proceedings of the 2016 4th International Conference on Applied Robotics for the Power Industry (CARPI), Jinan, China, 11–13 October 2016; pp. 1–5.

- Martinez, C.; Sampedro, C.; Chauhan, A.; Campoy, P. Towards autonomous detection and tracking of electric towers for aerial power line inspection. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 284–295.

- Priest, L. Detecting Changes at Cell Sites and Surrounding Areas Using Unmanned Aerial Vehicles. U.S. Patent 20170318477A1, 2 November 2017.

- Zahariadis, T.; Voulkidis, A.; Karkazis, P.; Trakadas, P. Preventive maintenance of critical infrastructures using 5G networks drones. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–4.

- De Melo, R.R.S.; Costa, D.B.; Alvares, J.S.; Irizarry, J. Applicability of unmanned aerial system (UAS) for safety inspection on construction sites. Saf. Sci. 2017, 98, 174–185.

- Richman, B.; Bauer, M.P.; Michini, B.J.; Poole, A.J. Unmanned Aerial Vehicle Rooftop Inspection System. U.S. Patent 9,609,288, 28 March 2017.

- Benecke, N. iDeepMon: Intelligent Deep Mine Shaft Inspection and Monitoring. Available online: https://eitrawmaterials.eu/project/ideepmon/ (accessed on 11 April 2018).

- Freire, G.; Cota, R. Capture of images in inaccessible areas in an underground mine using an unmanned aerial vehicle. In Proceedings of the First International Conference on Underground Mining Technology, Sudbury, ON, Canada, 11–13 October 2017; Australian Centre for Geomechanics: Crawley, Australia, 2017.

- Saleri, R.; Pierrot-Deseilligny, M.; Bardiere, E.; Cappellini, V.; Nony, N.; De Luca, L.; Campi, M. UAV photogrammetry for archaeological survey: The Theaters area of Pompeii. In Proceedings of the Digital Heritage International Congress (DigitalHeritage), Marseille, France, 28 October–1 November 2013; Volume 2, pp. 497–502.

- Cefalu, A.; Haala, N.; Schmohl, S.; Neumann, I.; Genz, T. A Mobile Multi-Sensor Platform for Building Reconstruction Integrating Terrestrial and Autonomous UAV-Based Close Range Data Acquisition. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 63–70.

- González-Jorge, H.; Martínez-Sánchez, J.; Bueno, M.; Arias, P. Unmanned aerial systems for civil applications: A review. Drones 2017, 1, 2.

- Bebop drone. Standard, Parrot. Available online: http://developer.parrot.com/docs/bebop/ (accessed on 11 April 2018).

- Dà-Jiāng Innovations Science and Technology Co., Ltd. DJI. 2017. Available online: https://www.dji.com (accessed on 11 April 2018).

- Dietrich, T.; Andryeyev, O.; Zimmermann, A.; Mitschele-Thiel, A. Towards a unified decentralized swarm management and maintenance coordination based on mavlink. In Proceedings of the 2016 International Conference on Autonomous Robot Systems and Competitions (ICARSC), Braganca, Portugal, 4–6 May 2016.

- Atoev, S.; Kwon, K.R.; Lee, S.H.; Moon, K.S. Data analysis of the MAVLink communication protocol. In Proceedings of the 2017 International Conference on Information Science and Communications Technologies (ICISCT), Tashkent, Uzbekistan, 2–4 November 2017; pp. 1–3.

- DroneDeploy. Available online: https://www.dronedeploy.com (accessed on 11 April 2018).

- DroneUp. Available online: https://www.droneup.com (accessed on 11 April 2018).

- Drone Base, Inc. Drone Base. Available online: https://www.dronebase.com (accessed on 11 April 2018).

- Lopez-Leones, J.; Vilaplana, M.A.; Gallo, E.; Navarro, F.A.; Querejeta, C. The aircraft intent description language: A key enabler for air-ground synchronization in trajectory-based operations. In Proceedings of the IEEE/AIAA 26th Digital Avionics Systems Conference, Dallas, TX, USA, 21–25 October 2007.

- Lopez Leones, J. Definition of an aircraft intent description language for air traffic management applications. Ph.D. Thesis, University of Glasgow, Glasgow, UK, 2008.

- Besada, J.; Frontera, G.; Crespo, J.; Casado, E.; Lopez-Leones, J. Automated Aircraft Trajectory Prediction Based on Formal Intent-Related Language Processing. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1067–1082.

- Frontera, G.; Besada, J.A.; Bernardos, A.M.; Casado, E.; Lopez-Leones, J. Formal Intent-Based Trajectory Description Languages. IEEE Trans. Intell. Transp. Syst. 2014, 15, 1550–1566.

- Waller, M.; Rigopoulos, J.; Blackman, D.; Berreen, T. Considerations in the application of dynamic programming to optimal aircraft trajectory generation. In Proceedings of the IEEE 1990 National Aerospace and Electronics Conference, Dayton, OH, USA, 21–25 May 1990; pp. 574–579.

- Hu, X.B.; Wu, S.F.; Jiang, J. On-line free-flight path optimization based on improved genetic algorithms. Eng. Appl. Artif. Intell. 2004, 17, 897–907.

- Grabbe, S.; Sridhar, B.; Mukherjee, A. Sequential traffic flow optimization with tactical flight control heuristics. J. Guid. Control Dyn. 2009, 32, 810–820.

- Frontera, G.; Besada, J.A.; Lopez-Leones, J. Generation of Aircraft Intent Based on a Microstrategy Search Tree. IEEE Trans. Intell. Transp. Syst. 2017, 18, 1405–1421.

- Qu, Y.H.; Pan, Q.; Yan, J.G. Flight path planning of UAV based on heuristically search and genetic algorithms. In Proceedings of the 31st Annual Conference of IEEE Industrial Electronics Society, Raleigh, NC, USA, 6–10 November 2005.

- Santana, L.V.; Brandao, A.S.; Sarcinelli-Filho, M.; Carelli, R. A trajectory tracking and 3D positioning controller for the AR.Drone quadrotor. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 756–767.

- Pastor, E.; Santamaria, E.; Royo, P.; Lopez, J.; Barrado, C. On the design of a UAS flight plan monitoring and edition system. In Proceedings of the 2010 IEEE Aerospace Conference, Big Sky, MT, USA, 6–13 March 2010; pp. 1–20.

- Ruscio, D.D.; Malavolta, I.; Pelliccione, P.; Tivoli, M. Automatic generation of detailed flight plans from high-level mission descriptions. In Proceedings of the ACM/IEEE 19th International Conference on Model Driven Engineering Languages and Systems, Saint-Malo, France, 2–7 October 2016; pp. 45–55.

- Bozhinoski, D.; Di Ruscio, D.; Malavolta, I.; Pelliccione, P.; Tivoli, M. Flyaq: Enabling non-expert users to specify and generate missions of autonomous multicopters. In Proceedings of the 2015 30th IEEE/ACM International Conference on Automated Software Engineering (ASE), Lincoln, NE, USA, 9–13 November 2015; pp. 801–806.

- Thomason, J.; Ratsamee, P.; Kiyokawa, K.; Kriangkomol, P.; Orlosky, J.; Mashita, T.; Uranishi, Y.; Takemura, H. Adaptive View Management for Drone Teleoperation in Complex 3D Structures. In Proceedings of the 22nd International Conference on Intelligent User Interfaces, Limassol, Cyprus, 13–16 March 2017; ACM: New York, NY, USA, 2017; pp. 419–426.

- Dias, P.S.; Gomes, R.M.; Pinto, J. Mission planning and specification in the Neptus framework. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA), Orlando, FL, USA, 15–19 May 2006; pp. 3220–3225.

- Valenti, M.; Schouwenaars, T.; Kuwata, Y.; Feron, E.; How, J.; Paunicka, J. Implementation of a manned vehicle-UAV mission system. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Providence, RI, USA, 16–19 August 2004; pp. 16–19.

- Gentilini, D.; Farina, N.; Franco, E.; Tirri, A.E.; Accardo, D.; Moriello, R.S.L.; Angrisani, L. Multi agent path planning strategies based on Kalman Filter for surveillance missions. In Proceedings of the 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a better tomorrow (RTSI), Bologna, Italy, 7–9 September 2016; pp. 1–6.

- Sun, X.; Liu, Y.; Yao, W.; Qi, N. Triple-stage path prediction algorithm for real-time mission planning of multi-UAV. Electron. Lett. 2015, 51, 1490–1492.

- Cavalcante, T.R.F.; de Bessa, I.V.; Cordeiro, L.C. Planning and Evaluation of UAV Mission Planner for Intralogistics Problems. In Proceedings of the 2017 VII Brazilian Symposium on Computing Systems Engineering (SBESC), Curitiba, Brazil, 6–10 November 2017; pp. 9–16.

- Frontera Sánchez, G. Applications of Formal Languages to Management of Manned and Unmanned Aircraft. Ph.D. Thesis, Universidad Politécnica de Madrid, Signal, Systems and Radiocommunications Department, Madrid, Spain, 2016.

- Nelson, M. UAV Mission Planning; Technical Report; Australia Department of Defense: Canberra, Australia, 1995.

- Tulum, K.; Durak, U.; Yder, S.K. Situation aware UAV mission route planning. In Proceedings of the 2009 IEEE Aerospace Conference, Big Sky, MT, USA, 7–14 March 2009; pp. 1–12.

- Zorbas, D.; Pugliese, L.D.P.; Razafindralambo, T.; Guerriero, F. Optimal drone placement and cost-efficient target coverage. J. Netw. Comput. Appl. 2016, 75, 16–31.

- Li, J.; Besada, J.A.; Bernardos, A.M.; Tarrío, P.; Casar, J.R. A novel system for object pose estimation using fused vision and inertial data. Inf. Fusion 2017, 33, 15–28.

- Mavlink. Standard, QgroundControl. Available online: http://qgroundcontrol.org/mavlink/start (accessed on 11 April 2017).

- Crespo, G.; Glez-de Rivera, G.; Garrido, J.; Ponticelli, R. Setup of a communication and control systems of a quadrotor type Unmanned Aerial Vehicle. In Proceedings of the 2014 Conference on Design of Circuits and Integrated Circuits (DCIS), Madrid, Spain, 26–28 November 2014; pp. 1–6.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Besada, J.A.; Bergesio, L.; Campaña, I.; Vaquero-Melchor, D.; López-Araquistain, J.; Bernardos, A.M.; Casar, J.R. Drone Mission Definition and Implementation for Automated Infrastructure Inspection Using Airborne Sensors. Sensors 2018, 18, 1170. https://doi.org/10.3390/s18041170
