Edge-Aware Unidirectional Total Variation Model for Stripe Non-Uniformity Correction

Abstract

1. Introduction

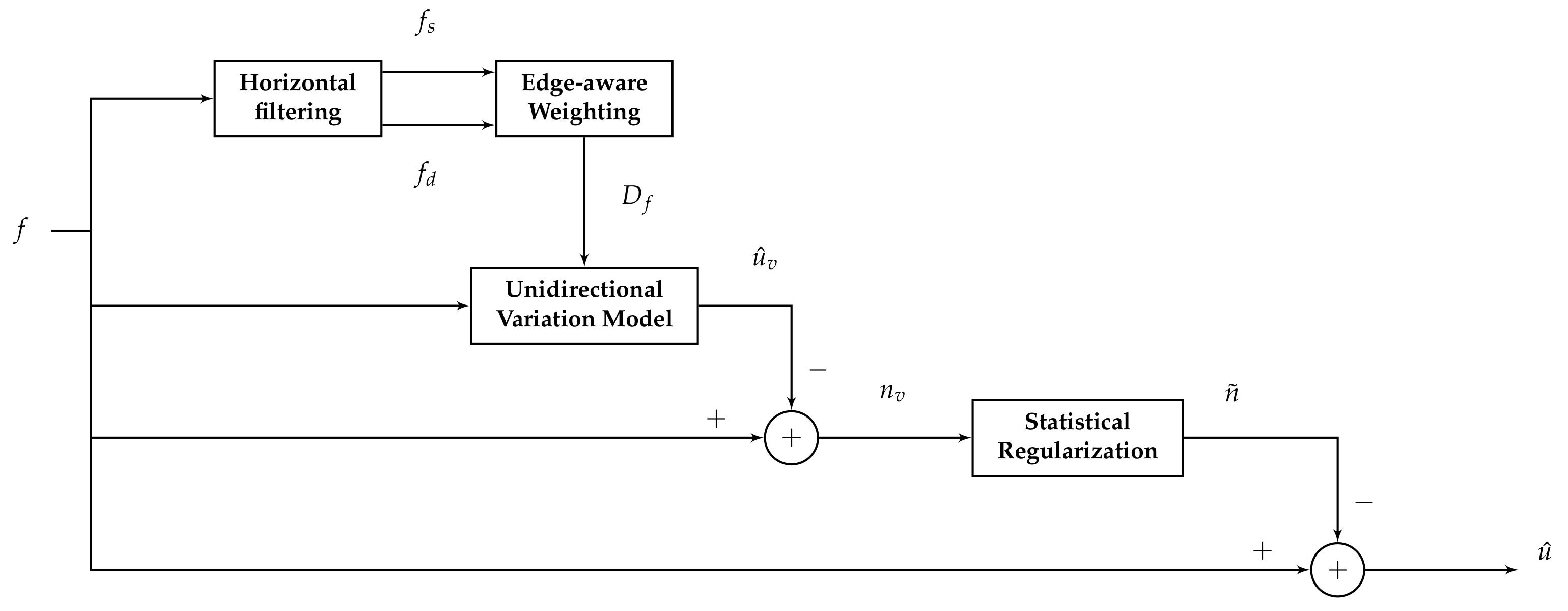

2. Experimental Details

2.1. Total Variation Optimization

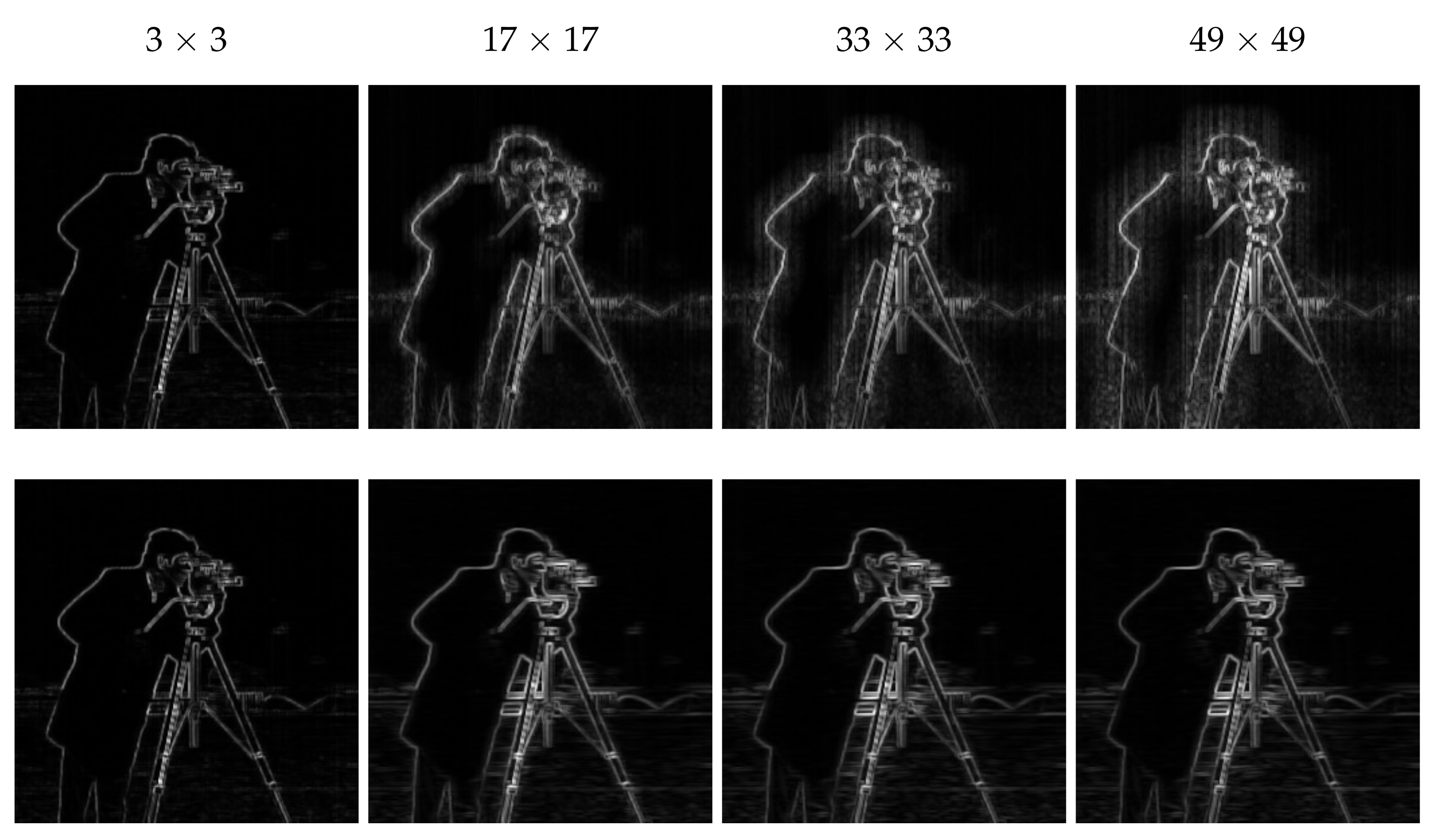

2.2. Edge-Aware Weighting

2.3. Horizontal Filtering

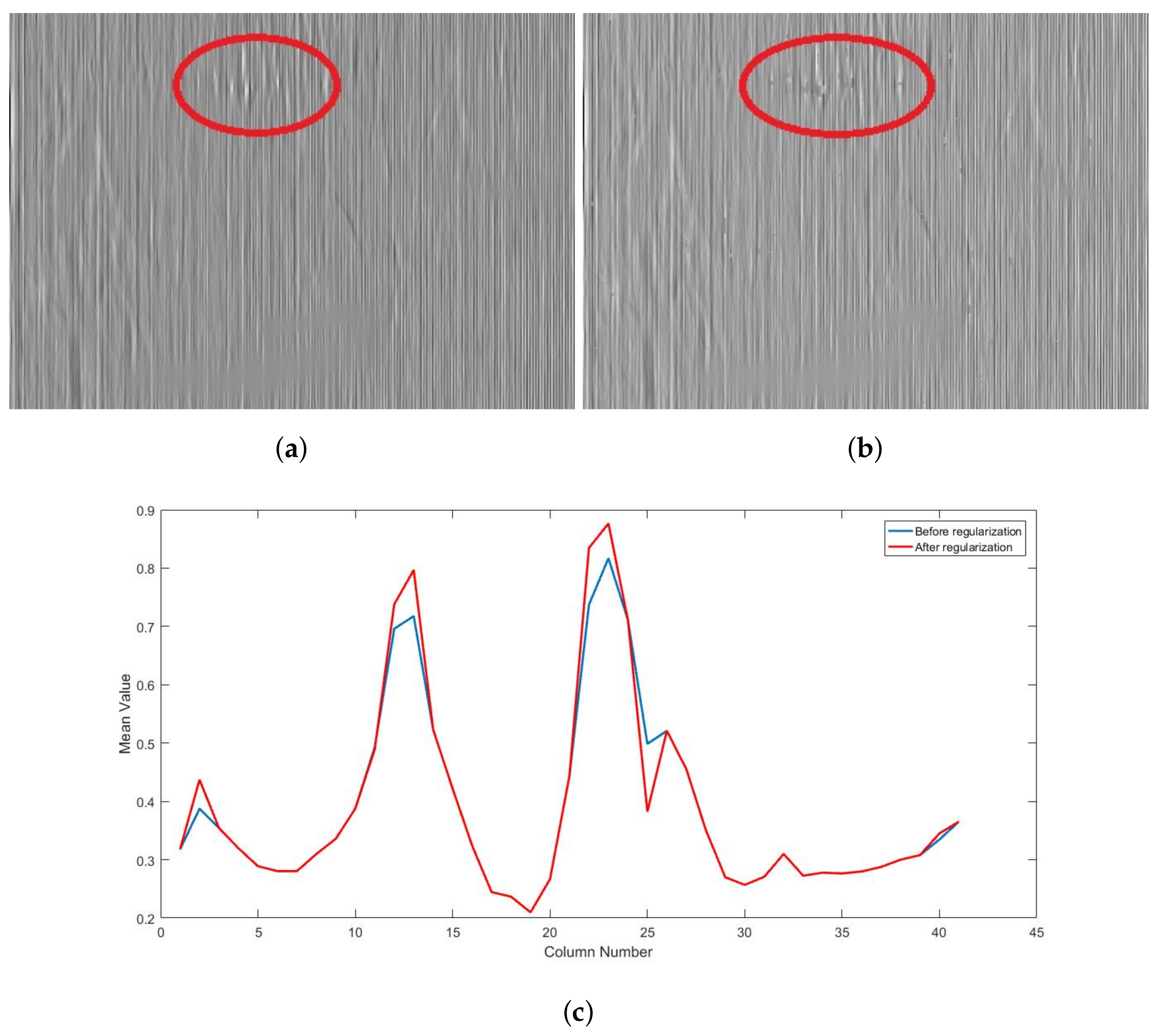

2.4. Statistical Regularization

- The non-uniformity within a given column is modeled as an unknown random variable following a Gaussian distribution with a mean and a standard deviation;

- The non-uniformity noise in different columns of the array is considered to be mutually independent.
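The two modeling assumptions above can be illustrated with a small simulation. This is a sketch under stated assumptions, not the authors' implementation: the helper names (`simulate_column_noise`, `column_statistics`, `add_noise`) are hypothetical, and drawing every pixel of column j independently from N(μ_j, σ_j²) is one reading of the model. The excerpt does not say whether pixels inside a column are independent. Each column gets its own parameters and its own draws, which is what makes different columns independent.

```python
import random
import statistics


def simulate_column_noise(n_rows, col_means, col_stds, seed=None):
    """Draw an n_rows x len(col_means) stripe non-uniformity field.

    Hypothetical illustration: pixel (i, j) is sampled from
    N(col_means[j], col_stds[j] ** 2). Columns use separate parameters and
    separate draws, so they are independent of one another. Independence of
    pixels *within* a column is an extra assumption of this sketch.
    """
    if len(col_means) != len(col_stds):
        raise ValueError("col_means and col_stds must have the same length")
    rng = random.Random(seed)
    return [
        [rng.gauss(m, s) for m, s in zip(col_means, col_stds)]
        for _ in range(n_rows)
    ]


def column_statistics(field):
    """Return (mean, std) of every column of a 2-D list; pstdev divides by n."""
    columns = zip(*field)  # transpose rows into columns
    return [(statistics.fmean(c), statistics.pstdev(c)) for c in columns]


def add_noise(image, field):
    """Add a noise field to a clean image of the same shape (lists of rows)."""
    return [
        [p + n for p, n in zip(img_row, noise_row)]
        for img_row, noise_row in zip(image, field)
    ]


if __name__ == "__main__":
    # Flat 2000 x 4 scene; column offsets make the stripes visible.
    clean = [[100.0] * 4 for _ in range(2000)]
    field = simulate_column_noise(2000, [0.0, 5.0, -3.0, 2.0],
                                  [1.0, 1.0, 0.5, 2.0], seed=42)
    noisy = add_noise(clean, field)
    for j, (mu_hat, sd_hat) in enumerate(column_statistics(field)):
        print(f"column {j}: mean {mu_hat:6.2f}, std {sd_hat:5.2f}")
```

With enough rows, the estimated per-column mean and standard deviation approach the parameters used to generate each column, which is the kind of column-wise statistic a regularizer built on this model can exploit.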

3. Results and Discussion

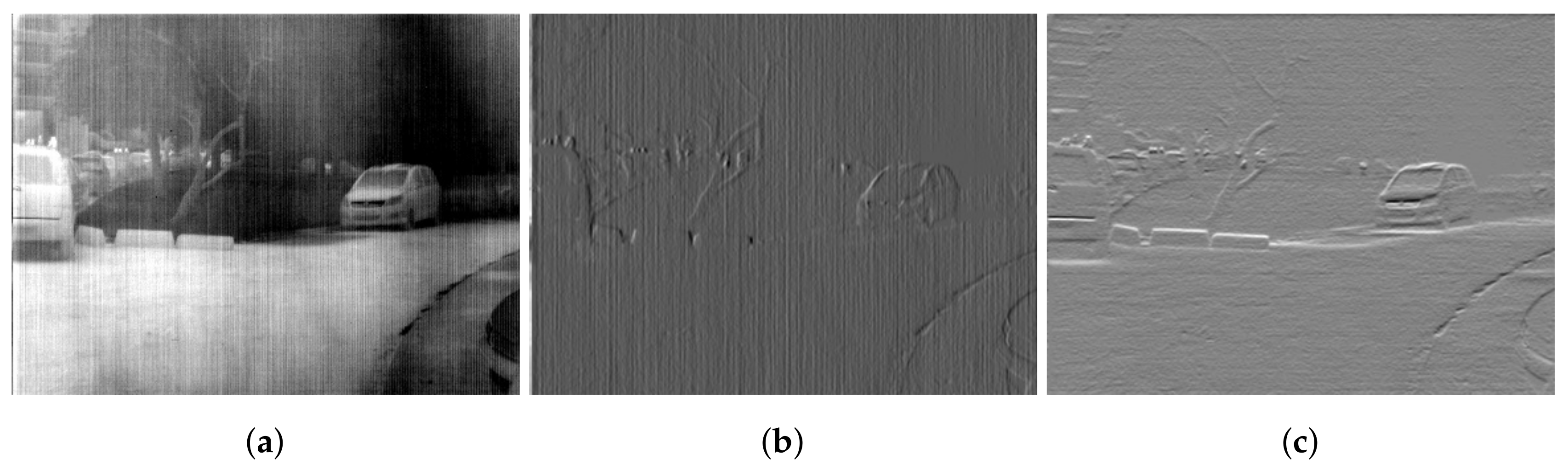

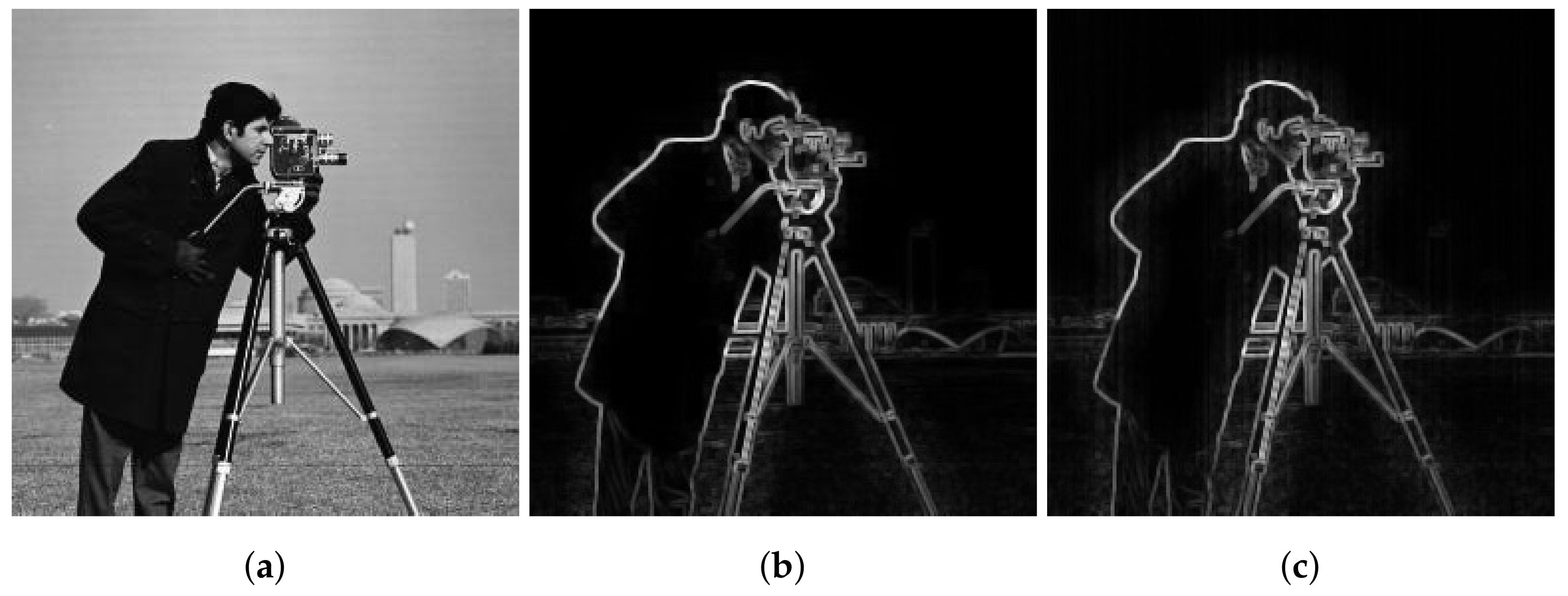

3.1. Edge Preserving Performance

3.2. Validation of the Statistical Regularization

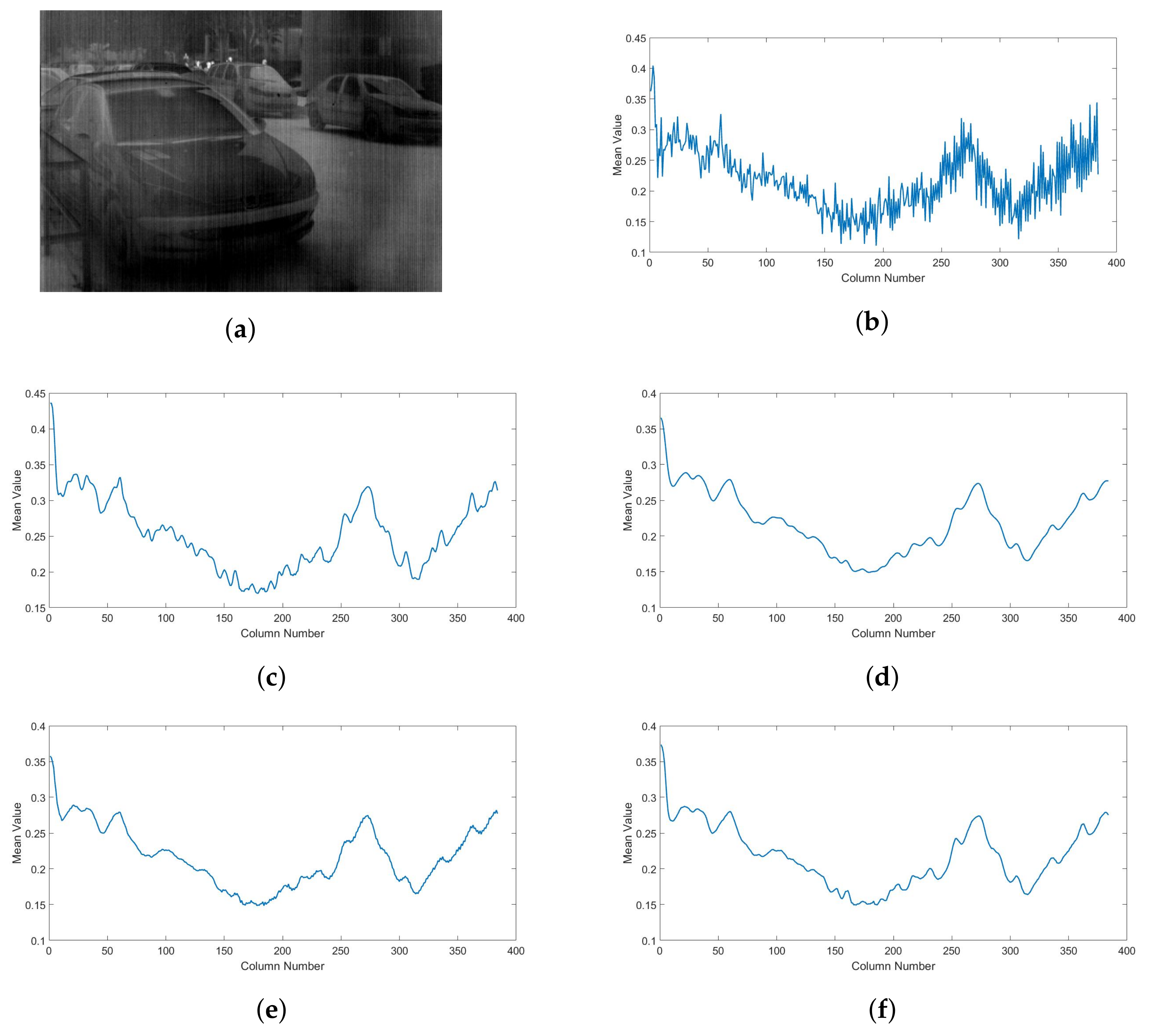

3.3. Real Experiments

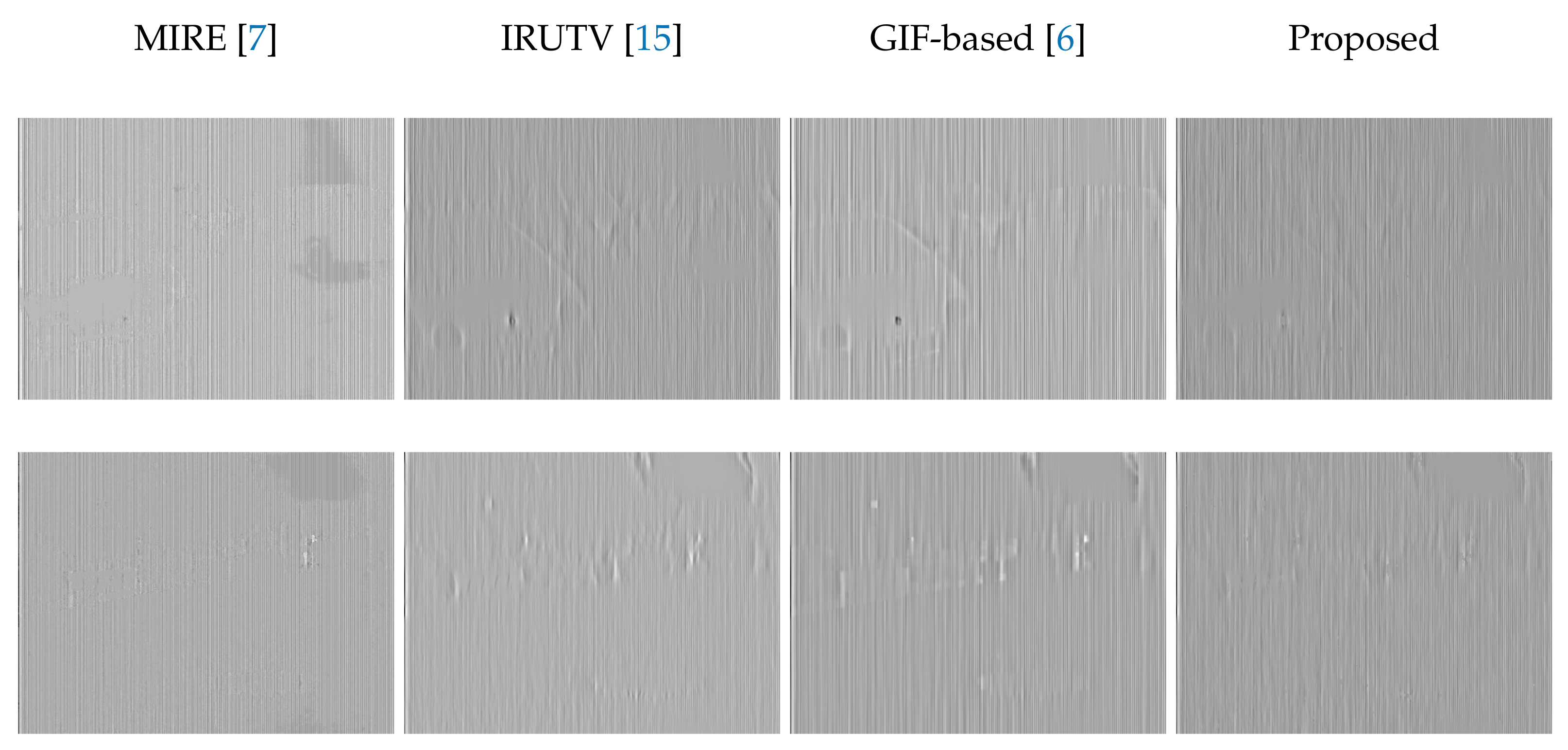

3.3.1. Qualitative Study

3.3.2. Quantitative Study

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Images | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| noisy image | 26.07 | 24.23 | 25.85 | 26.03 | 26.28 | 26.09 | 25.91 | 25.18 | 26.24 |
| BM3D | 29.86 | 27.45 | 27.47 | 29.87 | 30.61 | 31.11 | 29.23 | 29.12 | 30.31 |
| MIRE | 30.45 | 29.42 | 29.43 | 31.23 | 32.58 | 31.11 | 30.80 | 30.94 | 31.12 |
| IRUTV | 31.85 | 26.85 | 31.08 | 31.95 | 30.81 | 28.61 | 29.12 | 26.68 | 28.51 |
| GIF_based | 34.16 | 30.50 | 33.38 | 33.75 | 33.14 | 31.56 | 30.66 | 30.66 | 32.00 |
| Proposed | 35.82 | 33.74 | 34.85 | 35.75 | 36.25 | 33.43 | 32.41 | 32.41 | 33.17 |
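The table gives one score per test image for each method, which makes per-method summaries easy to compute. The sketch below copies the nine values per row verbatim; the metric and its unit are not named in this excerpt, so treating larger values as better is an assumption, and the helper names (`mean_scores`, `mean_gain_over`, `best_per_image`) are hypothetical.

```python
from statistics import fmean

# Scores copied verbatim from the comparison table (images 1-9).
# Assumption of this sketch: larger values indicate better correction.
SCORES = {
    "noisy image": [26.07, 24.23, 25.85, 26.03, 26.28, 26.09, 25.91, 25.18, 26.24],
    "BM3D":        [29.86, 27.45, 27.47, 29.87, 30.61, 31.11, 29.23, 29.12, 30.31],
    "MIRE":        [30.45, 29.42, 29.43, 31.23, 32.58, 31.11, 30.80, 30.94, 31.12],
    "IRUTV":       [31.85, 26.85, 31.08, 31.95, 30.81, 28.61, 29.12, 26.68, 28.51],
    "GIF_based":   [34.16, 30.50, 33.38, 33.75, 33.14, 31.56, 30.66, 30.66, 32.00],
    "Proposed":    [35.82, 33.74, 34.85, 35.75, 36.25, 33.43, 32.41, 32.41, 33.17],
}


def mean_scores(scores):
    """Average score of each row over all images, rounded to 2 decimals."""
    return {name: round(fmean(vals), 2) for name, vals in scores.items()}


def mean_gain_over(scores, baseline="noisy image"):
    """Average per-image difference between each method and the baseline row."""
    base = scores[baseline]
    return {
        name: round(fmean(v - b for v, b in zip(vals, base)), 2)
        for name, vals in scores.items()
        if name != baseline
    }


def best_per_image(scores, exclude=("noisy image",)):
    """Highest-scoring method for each image (ties go to the first listed)."""
    methods = [m for m in scores if m not in exclude]
    n_images = len(next(iter(scores.values())))
    return [max(methods, key=lambda m: scores[m][i]) for i in range(n_images)]


if __name__ == "__main__":
    gains = mean_gain_over(SCORES)
    for name, avg in mean_scores(SCORES).items():
        print(f"{name:12s} mean {avg:6.2f}  gain {gains.get(name, 0.0):5.2f}")
    print(best_per_image(SCORES))
```

Averaging hides per-image variation (for example, IRUTV ranks differently on images 1 and 2), so the per-image ranking is reported alongside the means rather than replacing them.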

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Boutemedjet, A.; Deng, C.; Zhao, B. Edge-Aware Unidirectional Total Variation Model for Stripe Non-Uniformity Correction. Sensors 2018, 18, 1164. https://doi.org/10.3390/s18041164
