Accurate Decoding of Short, Phase-Encoded SSVEPs

Abstract

1. Introduction

2. Methods

2.1. Experimental Data

2.2. Signal Preprocessing

2.3. Feature Extraction

- Method I:

- Estimated phase based on the maximum likelihood condition: The intuition behind this feature is to treat the SSVEP BCI set-up as a telecommunication system that relies on phase shift keying (PSK), a coding technique based on modulating the carrier signal’s phase. The phase of epoch $x(n)$, where $n$ represents the samples in time, was estimated by maximizing the likelihood of the epoch, given the flickering stimulus, as follows [27]:

  $$\hat{\phi}_{ML} = -\tan^{-1}\left(\frac{\sum_{n \in T_0} x(n)\,\sin(2\pi f n)}{\sum_{n \in T_0} x(n)\,\cos(2\pi f n)}\right) \qquad (1)$$

  where $\hat{\phi}_{ML}$ represents the maximum likelihood phase estimate, $f$ is the stimulation frequency (12 or 15 Hz), and $T_0$ is the time period of the epochs (i.e., 0.5 s).
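The estimator described above can be sketched in a few lines of NumPy. This is a minimal illustration and not the authors' code: the function name `ml_phase`, the sampling rate `fs_hz`, and the synthetic cosine epochs are all assumptions made for the example. It also uses `arctan2` rather than a plain arctangent, an assumption that keeps the two phases 0 and π distinguishable.

```python
import numpy as np

def ml_phase(epoch, freq_hz, fs_hz):
    """Maximum-likelihood phase (radians) of a sinusoid at freq_hz in a 1-D epoch."""
    t = np.arange(epoch.size) / fs_hz                 # sample times in seconds
    in_phase = np.sum(epoch * np.cos(2 * np.pi * freq_hz * t))
    quadrature = np.sum(epoch * np.sin(2 * np.pi * freq_hz * t))
    # arctan2 resolves the quadrant (assumption: a plain arctan would fold pi onto 0)
    return -np.arctan2(quadrature, in_phase)

fs_hz = 1000.0                                        # hypothetical sampling rate
t = np.arange(int(0.5 * fs_hz)) / fs_hz               # one 0.5 s epoch
epoch_zero = np.cos(2 * np.pi * 12.0 * t)             # synthetic 12 Hz, phase 0
epoch_pi = np.cos(2 * np.pi * 12.0 * t + np.pi)       # synthetic 12 Hz, phase pi

phase_zero = ml_phase(epoch_zero, 12.0, fs_hz)
phase_pi = ml_phase(epoch_pi, 12.0, fs_hz)
```

For noise-free epochs spanning an integer number of stimulation periods, the estimate recovers the phase of the synthetic signal; the estimate for the π-phase epoch may come out as either +π or −π, which denote the same angle.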

- Method II:

- Correlation with 0/π phase templates: From the training set, a template for each target was obtained by applying singular value decomposition (SVD) to $X_i$, the matrix containing all $k$ training epochs having target $i$ as label. The template for target $i$ was given by the component corresponding to the largest singular value. For each epoch $x(n)$, two features were then given by the (Pearson) correlation coefficients between $x(n)$ and both templates.
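A minimal sketch of this template procedure follows, under stated assumptions: epochs are stored as rows of a `(k, n_samples)` array, the "component" is taken to be the first right singular vector (a time course), and its arbitrary SVD sign is aligned with the mean epoch. The helper names, the sampling rate, and the synthetic noisy sinusoids are hypothetical and only serve the illustration.

```python
import numpy as np

def svd_template(epochs):
    """First right singular vector of a (k, n_samples) matrix of same-target epochs."""
    _, _, vt = np.linalg.svd(epochs, full_matrices=False)
    template = vt[0]
    # The sign of a singular vector is arbitrary; align it with the mean epoch (assumption)
    if np.dot(template, epochs.mean(axis=0)) < 0:
        template = -template
    return template

def correlation_features(epoch, templates):
    """Pearson correlation of one epoch with each template."""
    return np.array([np.corrcoef(epoch, tpl)[0, 1] for tpl in templates])

rng = np.random.default_rng(0)
fs_hz, freq_hz, k = 1000.0, 12.0, 20                  # hypothetical settings
t = np.arange(int(0.5 * fs_hz)) / fs_hz

def synthetic_epochs(phase, count):
    """Noisy sinusoids standing in for single-channel SSVEP epochs."""
    clean = np.cos(2 * np.pi * freq_hz * t + phase)
    return clean + 0.5 * rng.standard_normal((count, t.size))

templates = [svd_template(synthetic_epochs(p, k)) for p in (0.0, np.pi)]
test_epoch = synthetic_epochs(0.0, 1)[0]              # unseen epoch with phase 0
features = correlation_features(test_epoch, templates)
```

In this synthetic setting, an epoch with phase 0 correlates positively with the phase-0 template and negatively with the phase-π template, which is what makes the two correlation coefficients informative as features.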

- Method III:

- Phases of the 0/π templates: Similar to the previous feature, the training data were first used to obtain a template for each target using the same SVD procedure. However, instead of calculating the correlation, the features were given by estimating the phase (using the ML method, Equation (1)) between an epoch and each of the two templates.
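The text does not spell out how the phase "between" an epoch and a template is computed. One possible reading, sketched below purely as an assumption, is to estimate the ML phase of the epoch and of each template at the stimulation frequency and to take the wrapped difference. The names `ml_phase`, `wrap_angle`, and `template_phase_features`, the sampling rate, and the synthetic templates are hypothetical.

```python
import numpy as np

def ml_phase(signal, freq_hz, fs_hz):
    """Maximum-likelihood phase (radians) of a sinusoid at freq_hz in a 1-D signal."""
    t = np.arange(signal.size) / fs_hz
    in_phase = np.sum(signal * np.cos(2 * np.pi * freq_hz * t))
    quadrature = np.sum(signal * np.sin(2 * np.pi * freq_hz * t))
    return -np.arctan2(quadrature, in_phase)

def wrap_angle(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + np.pi) % (2 * np.pi) - np.pi

def template_phase_features(epoch, templates, freq_hz, fs_hz):
    """Assumed reading: wrapped phase difference between the epoch and each template."""
    epoch_phase = ml_phase(epoch, freq_hz, fs_hz)
    return np.array([wrap_angle(epoch_phase - ml_phase(tpl, freq_hz, fs_hz))
                     for tpl in templates])

fs_hz, freq_hz = 1000.0, 12.0                         # hypothetical settings
t = np.arange(int(0.5 * fs_hz)) / fs_hz
templates = [np.cos(2 * np.pi * freq_hz * t),         # stand-in for the phase-0 template
             np.cos(2 * np.pi * freq_hz * t + np.pi)] # stand-in for the phase-pi template
epoch = np.cos(2 * np.pi * freq_hz * t + 0.3)         # synthetic epoch, phase 0.3 rad
features = template_phase_features(epoch, templates, freq_hz, fs_hz)
```

Under this reading, an epoch close to target 0 yields a small phase difference with the first template and a difference near ±π with the second.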

- Method IV:

- Correlation of one-period segments: In this method, a correlation coefficient with a reference signal was calculated, but unlike before, the reference signal was not a full epoch. The procedure is as follows.

  First, following the time-domain analysis of SSVEP [16,18,28,29], each training epoch was cut into consecutive, non-overlapping segments with a length of one period of the stimulation frequency, after which the segments were averaged to obtain $\bar{s}(x)$, where $x$ is the length of one period of the stimulation frequency. In our case, the segment length ($x$) was equal to 1/12 or 1/15 s and, for each epoch, 6 or 7 segments were extracted and averaged, depending on the dataset under consideration.

  Next, the Pearson correlation coefficient matrix of the averaged segments was calculated as follows:

  $$R = \begin{bmatrix} r_{11} & \cdots & r_{1e} \\ \vdots & \ddots & \vdots \\ r_{e1} & \cdots & r_{ee} \end{bmatrix}$$

  where $r_{ij}$ is the Pearson correlation coefficient between the averaged segments of trials $i$ and $j$, and $e$ is the number of (training) trials. Using this matrix, several segments were selected to serve as references for the correlation with novel data. The following references were selected:

- one segment corresponding to the column of the correlation matrix that maximizes the correlation with the class labels;

- two segments, one for each target, that we selected from the column with the most centered mean of the correlation coefficient values, which indicates the epoch that can be considered a reference for the same target epochs;

- two segments based on data statistics (i.e., standard deviation): the two columns with maximum and minimum standard deviations.

For each epoch $x(n)$, five features were extracted, given by the (Pearson) correlations of its averaged segment with all five references.
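The segment-averaging step and the correlation matrix can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the sampling rate, the rounding of segment boundaries to whole samples, and the synthetic training data are hypothetical, and only the standard-deviation criterion for choosing references is shown (the other two criteria are omitted).

```python
import numpy as np

def average_period_segments(epoch, freq_hz, fs_hz):
    """Cut an epoch into non-overlapping one-period segments and average them."""
    period = fs_hz / freq_hz                          # period length in samples (may be fractional)
    seg_len = int(period)                             # whole samples kept per segment (assumption)
    n_seg = int(epoch.size * freq_hz / fs_hz)         # number of complete periods in the epoch
    starts = [int(round(i * period)) for i in range(n_seg)]
    segments = np.stack([epoch[s:s + seg_len] for s in starts])
    return segments.mean(axis=0), n_seg

rng = np.random.default_rng(0)
fs_hz, freq_hz = 1000.0, 12.0                         # hypothetical settings
t = np.arange(int(0.5 * fs_hz)) / fs_hz
labels = np.array([0, 1] * 10)                        # 0: phase 0, 1: phase pi
train = np.stack([np.cos(2 * np.pi * freq_hz * t + np.pi * lab)
                  + 0.5 * rng.standard_normal(t.size) for lab in labels])

# One averaged segment per training trial, then the e x e Pearson correlation matrix
avg = np.stack([average_period_segments(ep, freq_hz, fs_hz)[0] for ep in train])
R = np.corrcoef(avg)

# References from the columns of R with maximum and minimum standard deviation
col_std = R.std(axis=0)
references = avg[[col_std.argmax(), col_std.argmin()]]

# Features of a new epoch: correlation of its averaged segment with each reference
new_epoch = np.cos(2 * np.pi * freq_hz * t) + 0.5 * rng.standard_normal(t.size)
new_avg, n_seg_12 = average_period_segments(new_epoch, freq_hz, fs_hz)
features = np.array([np.corrcoef(new_avg, ref)[0, 1] for ref in references])
n_seg_15 = average_period_segments(new_epoch, 15.0, fs_hz)[1]
```

With a 0.5 s epoch, the segment counts match the text: 6 complete periods at 12 Hz and 7 at 15 Hz (0.5 × 15 = 7.5, so the trailing half period is discarded).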

2.4. Feature Combination and Signal Alignment

2.5. Classification & Performance Evaluation

2.6. Statistics

3. Results

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Middendorf, M.; McMillan, G.; Calhoun, G.; Jones, K.S. Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Trans. Rehabil. Eng. 2000, 8, 211–214.
2. Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279.
3. Liu, Q.; Chen, K.; Ai, Q.; Xie, S.Q. Review: Recent development of signal processing algorithms for SSVEP-based brain computer interfaces. J. Med. Biol. Eng. 2014, 34, 299–309.
4. Mora-Cortes, A.; Manyakov, N.V.; Chumerin, N.; Van Hulle, M.M. Language model applications to spelling with brain-computer interfaces. Sensors 2014, 14, 5967–5993.
5. Nijholt, A. BCI for games: A ‘state of the art’ survey. In International Conference on Entertainment Computing; Springer: Pittsburgh, PA, USA, 2008; pp. 225–228.
6. Chumerin, N.; Manyakov, N.V.; Combaz, A.; Robben, A.; van Vliet, M.; Van Hulle, M.M. Steady State Visual Evoked Potential Based Computer Gaming—The Maze; INTETAIN; Springer: Genova, Italy, 2011; pp. 28–37.
7. Van Vliet, M.; Robben, A.; Chumerin, N.; Manyakov, N.V.; Combaz, A.; Van Hulle, M.M. Designing a brain-computer interface controlled video-game using consumer grade EEG hardware. In Proceedings of the Biosignals and Biorobotics Conference (BRC), Manaus, Brazil, 9–11 January 2012; pp. 1–6.
8. Chumerin, N.; Manyakov, N.V.; van Vliet, M.; Robben, A.; Combaz, A.; Van Hulle, M.M. Steady-state visual evoked potential-based computer gaming on a consumer-grade EEG device. IEEE Trans. Comput. Intell. AI Games 2013, 5, 100–110.
9. Ahn, M.; Lee, M.; Choi, J.; Jun, S.C. A review of brain-computer interface games and an opinion survey from researchers, developers and users. Sensors 2014, 14, 14601–14633.
10. Cheng, M.; Gao, X.; Gao, S.; Xu, D. Design and implementation of a brain-computer interface with high transfer rates. IEEE Trans. Biomed. Eng. 2002, 49, 1181–1186.
11. Kelly, S.P.; Lalor, E.C.; Reilly, R.B.; Foxe, J.J. Visual spatial attention tracking using high-density SSVEP data for independent brain-computer communication. IEEE Trans. Neural Syst. Rehabil. Eng. 2005, 13, 172–178.
12. Wu, C.H.; Chang, H.C.; Lee, P.L.; Li, K.S.; Sie, J.J.; Sun, C.W.; Yang, C.Y.; Li, P.H.; Deng, H.T.; Shyu, K.K. Frequency recognition in an SSVEP-based brain computer interface using empirical mode decomposition and refined generalized zero-crossing. J. Neurosci. Methods 2011, 196, 170–181.
13. Chen, X.; Wang, Y.; Nakanishi, M.; Jung, T.P.; Gao, X. Hybrid frequency and phase coding for a high-speed SSVEP-based BCI speller. In Proceedings of the 36th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC), Chicago, IL, USA, 26–30 August 2014; pp. 3993–3996.
14. Nakanishi, M.; Wang, Y.; Wang, Y.T.; Mitsukura, Y.; Jung, T.P. A high-speed brain speller using steady-state visual evoked potentials. Int. J. Neural Syst. 2014, 24, 1450019.
15. Chen, X.; Wang, Y.; Nakanishi, M.; Gao, X.; Jung, T.P.; Gao, S. High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, E6058–E6067.
16. Wittevrongel, B.; Van Hulle, M.M. Frequency-and Phase Encoded SSVEP Using Spatiotemporal Beamforming. PLoS ONE 2016, 11, e0159988.
17. Nakanishi, M.; Wang, Y.; Chen, X.; Wang, Y.T.; Gao, X.; Jung, T.P. Enhancing Detection of SSVEPs for a High-Speed Brain Speller Using Task-Related Component Analysis. IEEE Trans. Biomed. Eng. 2018, 65, 104–112.
18. Wittevrongel, B.; Van Hulle, M.M. Spatiotemporal beamforming: A transparent and unified decoding approach to synchronous visual Brain-Computer Interfacing. Front. Neurosci. 2017, 11, 630.
19. Lee, P.L.; Sie, J.J.; Liu, Y.J.; Wu, C.H.; Lee, M.H.; Shu, C.H.; Li, P.H.; Sun, C.W.; Shyu, K.K. An SSVEP-actuated brain computer interface using phase-tagged flickering sequences: A cursor system. Ann. Biomed. Eng. 2010, 38, 2383–2397.
20. Lopez-Gordo, M.; Prieto, A.; Pelayo, F.; Morillas, C. Use of phase in brain–computer interfaces based on steady-state visual evoked potentials. Neural Process. Lett. 2010, 32, 1–9.
21. Manyakov, N.; Chumerin, N.; Combaz, A.; Robben, A.; van Vliet, M.; Van Hulle, M. Decoding Phase-Based Information from Steady-State Visual Evoked Potentials with Use of Complex-Valued Neural Network. In Intelligent Data Engineering and Automated Learning-IDEAL 2011; Lecture Notes in Computer Science; Yin, H., Wang, W., Rayward-Smith, V., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6936, pp. 135–143.
22. Manyakov, N.V.; Chumerin, N.; Van Hulle, M.M. Multichannel decoding for phase-coded SSVEP brain-computer interface. Int. J. Neural Syst. 2012, 22, 1250022.
23. Manyakov, N.V.; Chumerin, N.; Robben, A.; Combaz, A.; van Vliet, M.; Van Hulle, M.M. Sampled sinusoidal stimulation profile and multichannel fuzzy logic classification for monitor-based phase-coded SSVEP brain-computer interfacing. J. Neural Eng. 2013, 10, 036011.
24. Jia, C.; Gao, X.; Hong, B.; Gao, S. Frequency and phase mixed coding in SSVEP-based brain-computer interface. IEEE Trans. Biomed. Eng. 2011, 58, 200–206.
25. Yin, E.; Zhou, Z.; Jiang, J.; Yu, Y.; Hu, D. A dynamically optimized SSVEP brain-computer interface (BCI) speller. IEEE Trans. Biomed. Eng. 2015, 62, 1447–1456.
26. Croft, R.J.; Barry, R.J. Removal of ocular artifact from the EEG: A review. Neurophysiol. Clin. Clin. Neurophysiol. 2000, 30, 5–19.
27. Proakis, J.G. Digital Communications, 4th ed.; McGraw-Hill: New York, NY, USA, 2011.
28. Luo, A.; Sullivan, T.J. A user-friendly SSVEP-based brain–computer interface using a time-domain classifier. J. Neural Eng. 2010, 7, 026010.
29. Manyakov, N.V.; Chumerin, N.; Combaz, A.; Robben, A.; Van Hulle, M.M. Decoding SSVEP Responses using Time Domain Classification. In Proceedings of the International Conference on Fuzzy Computation and International Conference on Neural Computation, Valencia, Spain, 24–26 October 2010; pp. 376–380.
30. Bekara, M.; Van der Baan, M. Local singular value decomposition for signal enhancement of seismic data. Geophysics 2007, 72, V59–V65.
31. Mandelkow, H.; de Zwart, J.A.; Duyn, J.H. Linear Discriminant analysis achieves high classification accuracy for the BOLD fMRI response to naturalistic movie stimuli. Front. Hum. Neurosci. 2016, 10.
32. Yousefnezhad, M.; Zhang, D. Deep Hyperalignment. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2017; pp. 1603–1611.
33. Suykens, J.A.; Van Gestel, T.; De Brabanter, J. Least Squares Support Vector Machines; World Scientific: Singapore, 2002.
34. Wittevrongel, B.; Van Hulle, M.M. Hierarchical online SSVEP spelling achieved with spatiotemporal beamforming. In Proceedings of the 2016 IEEE Statistical Signal Processing Workshop (SSP), Palma de Mallorca, Spain, 26–29 June 2016; pp. 1–5.
35. Wittevrongel, B.; Van Hulle, M. Rapid SSVEP Mindspelling Achieved with Spatiotemporal Beamforming; Opera Medica et Physiologica: Nizhny Novgorod, Russia, 2016; Volume 1, p. 86.
36. Kübler, A.; Neumann, N.; Wilhelm, B.; Hinterberger, T.; Birbaumer, N. Predictability of brain-computer communication. J. Psychophysiol. 2004, 18, 121–129.
37. Kübler, A.; Birbaumer, N. Brain-computer interfaces and communication in paralysis: Extinction of goal directed thinking in completely paralysed patients? Clin. Neurophysiol. 2008, 119, 2658–2666.
38. Brunner, P.; Ritaccio, A.L.; Emrich, J.F.; Bischof, H.; Schalk, G. Rapid communication with a “P300” matrix speller using electrocorticographic signals (ECoG). Front. Neurosci. 2011, 5.
39. Combaz, A.; Chatelle, C.; Robben, A.; Vanhoof, G.; Goeleven, A.; Thijs, V.; Van Hulle, M.M.; Laureys, S. A comparison of two spelling brain-computer interfaces based on visual P3 and SSVEP in Locked-In Syndrome. PLoS ONE 2013, 8, e73691.
40. Wittevrongel, B.; Van Hulle, M.M. Faster p300 classifier training using spatiotemporal beamforming. Int. J. Neural Syst. 2016, 26, 1650014.
41. Wittevrongel, B.; Van Wolputte, E.; Van Hulle, M.M. Code-modulated visual evoked potentials using fast stimulus presentation and spatiotemporal beamformer decoding. Sci. Rep. 2017.

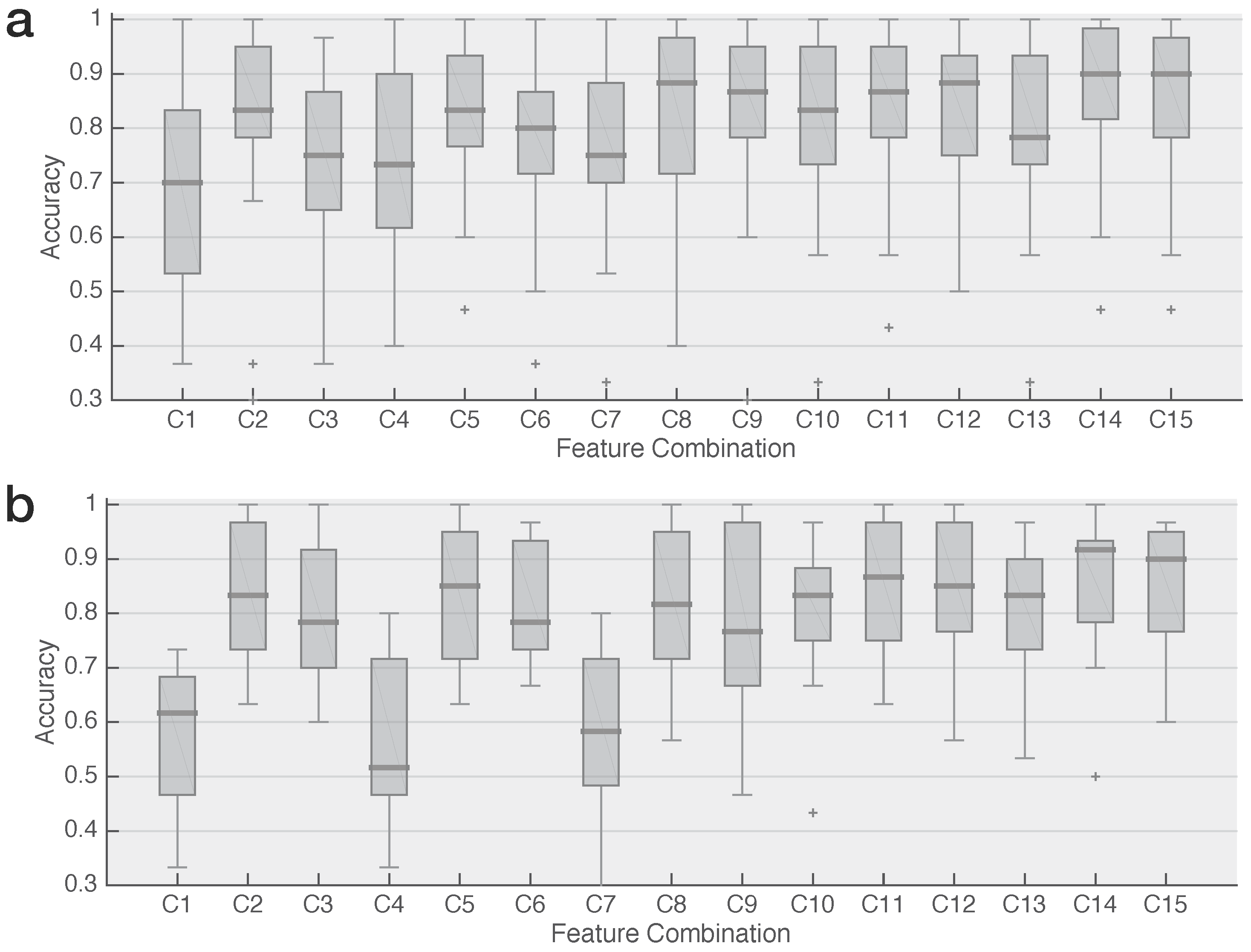

| Feature Set | FS-I | FS-II | FS-III | FS-IV |
|---|---|---|---|---|
| Dimensions per epoch | 4 | 8 | 8 | 15 |

| Combination | Feature Sets (FS) combined |
|---|---|
| C1–C4 | one feature set each |
| C5–C10 | two feature sets each |
| C11–C14 | three feature sets each |
| C15 | all four (FS-I, FS-II, FS-III, FS-IV) |
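The fifteen combinations correspond to all non-empty subsets of the four feature sets (4 + 6 + 4 + 1 = 15). The sketch below enumerates them together with their dimensionality. It rests on two assumptions that the tables above do not confirm: that combining feature sets means concatenating their feature vectors, and that the labels C1 to C15 follow the lexicographic order produced by `itertools.combinations`, which may differ from the paper's labelling for the pairs and triples.

```python
from itertools import combinations

# Dimensions per epoch, as listed in the feature-set table
feature_dims = {"FS-I": 4, "FS-II": 8, "FS-III": 8, "FS-IV": 15}

# All non-empty subsets, ordered by size (singles, pairs, triples, all four)
all_combos = [combo
              for size in range(1, len(feature_dims) + 1)
              for combo in combinations(feature_dims, size)]

# Hypothetical labelling C1..C15; dimensionality assumes plain concatenation
combo_dims = {f"C{i}": (combo, sum(feature_dims[name] for name in combo))
              for i, combo in enumerate(all_combos, start=1)}

for label, (combo, dim) in combo_dims.items():
    print(label, "+".join(combo), dim)
```

Under the concatenation assumption, the largest combination (all four feature sets) would have 4 + 8 + 8 + 15 = 35 dimensions per epoch.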

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Youssef Ali Amer, A.; Wittevrongel, B.; Van Hulle, M.M. Accurate Decoding of Short, Phase-Encoded SSVEPs. Sensors 2018, 18, 794. https://doi.org/10.3390/s18030794
