A Feasibility Study on the Use of a Structured Light Depth-Camera for Three-Dimensional Body Measurements of Dairy Cows in Free-Stall Barns

Abstract

1. Introduction

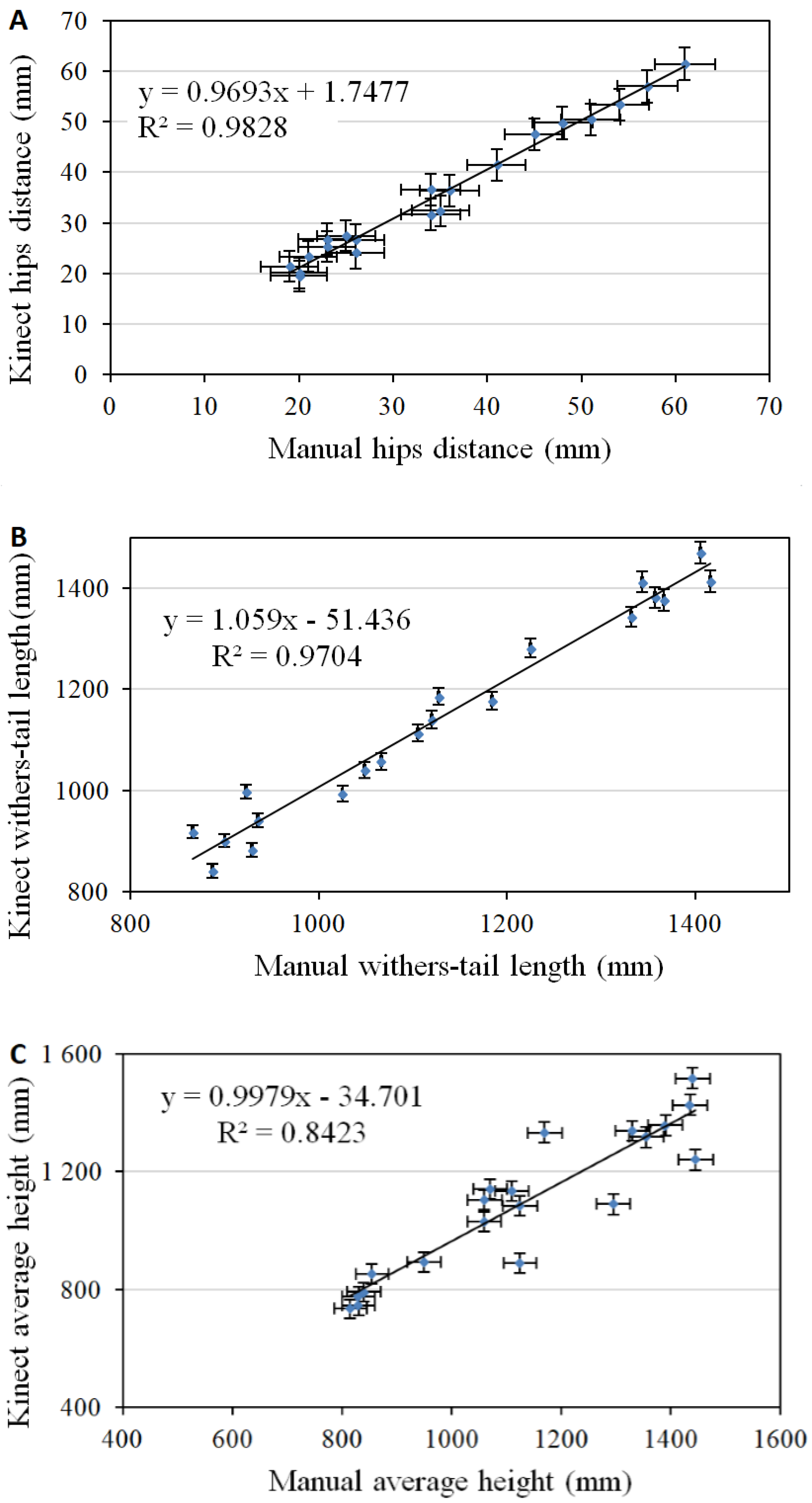

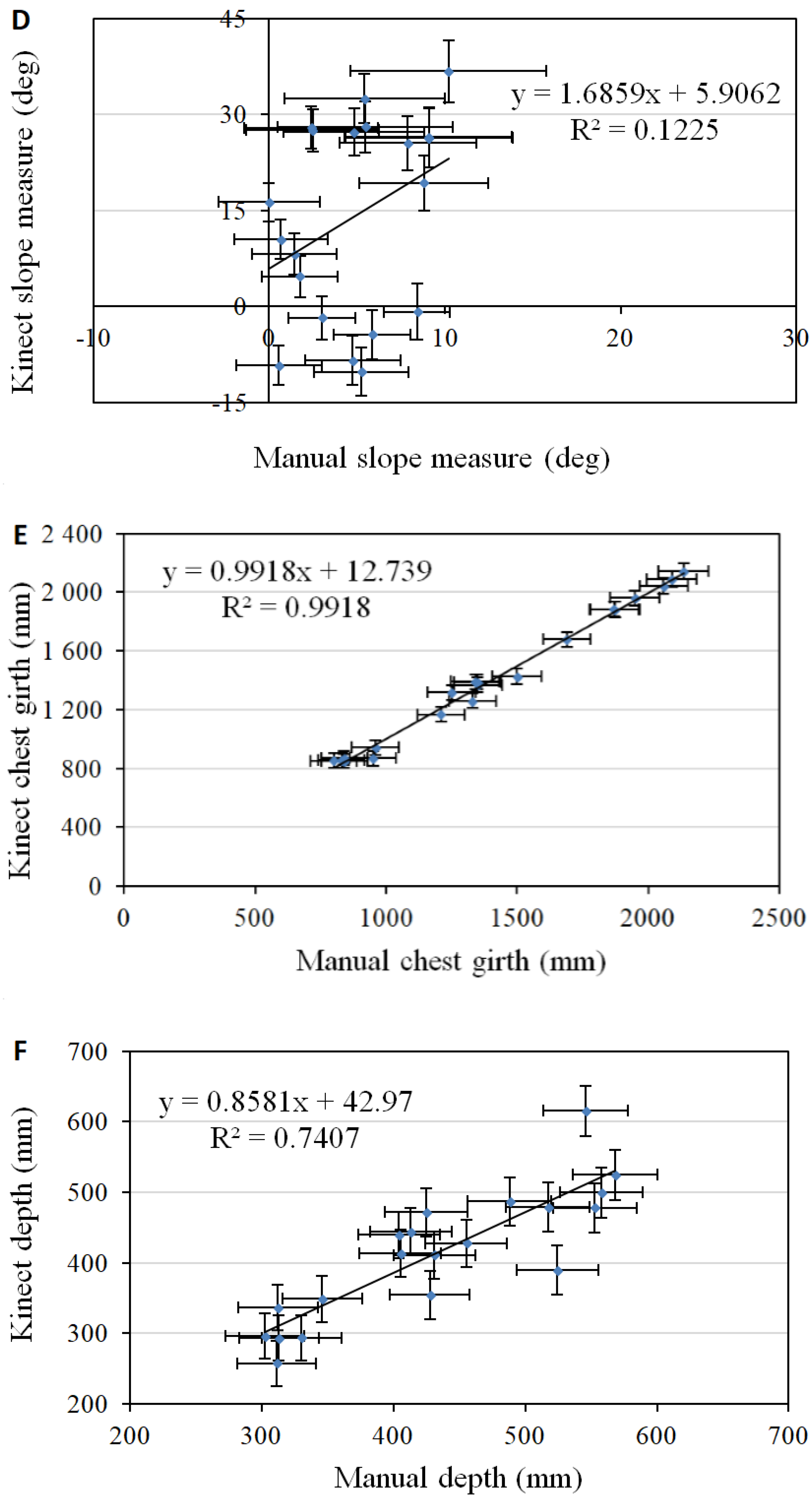

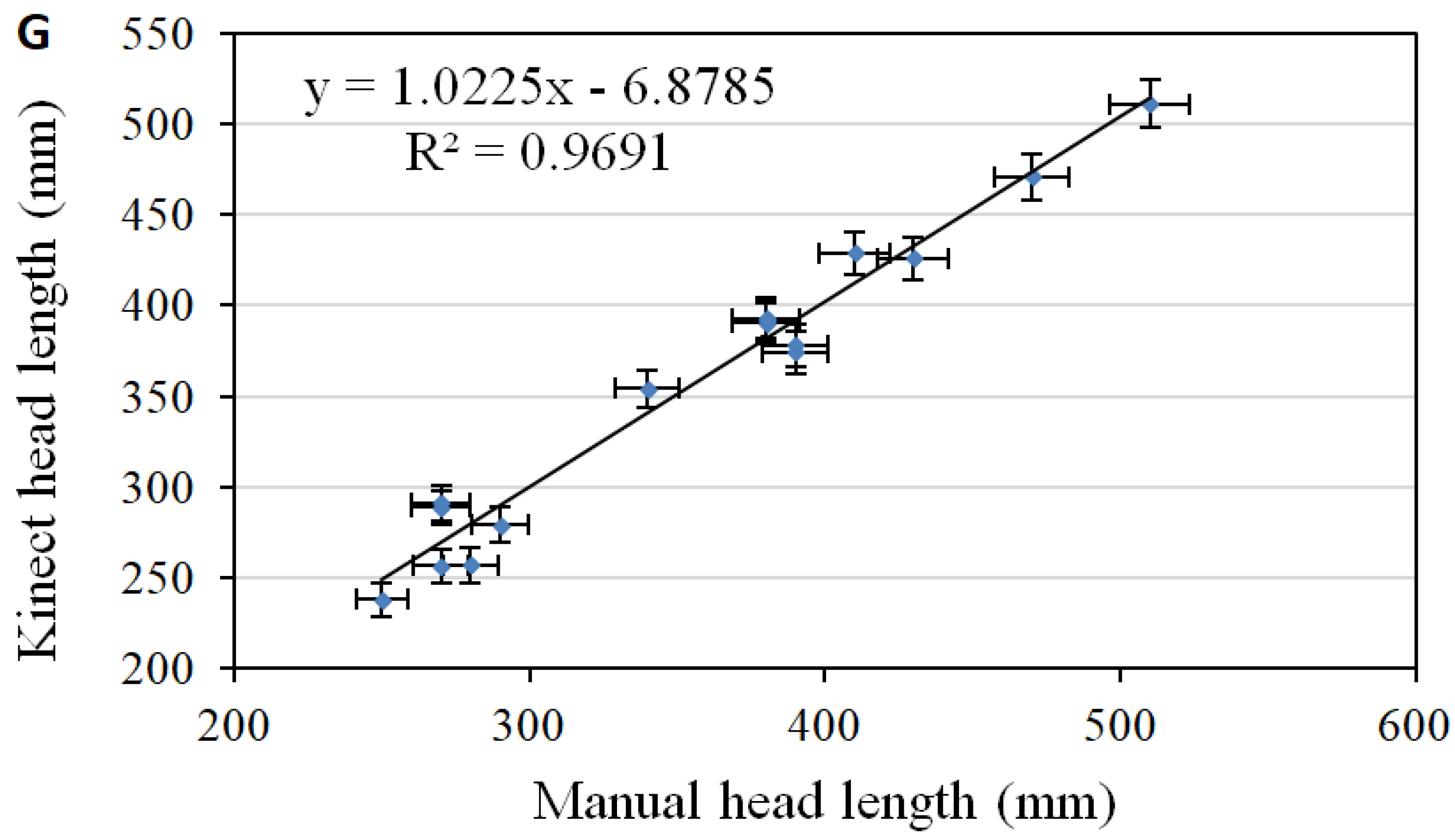

2. Material and Methods

2.1. Microsoft Kinect™ v1 RGB-Depth Camera

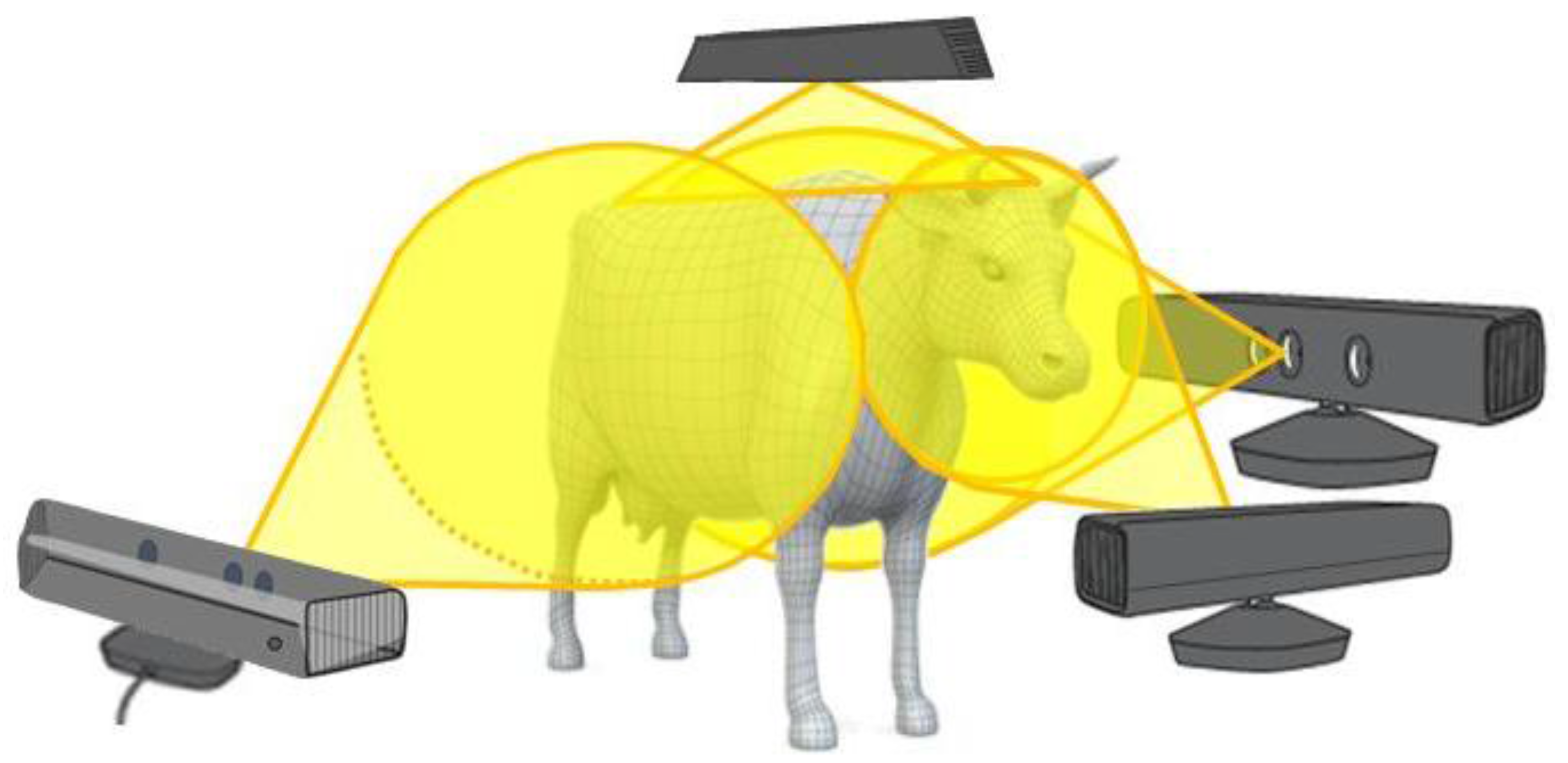

2.2. Sensors Positioning

2.3. Kinect Performance Verification

2.4. Measurement Uncertainty

2.5. Data Acquisition

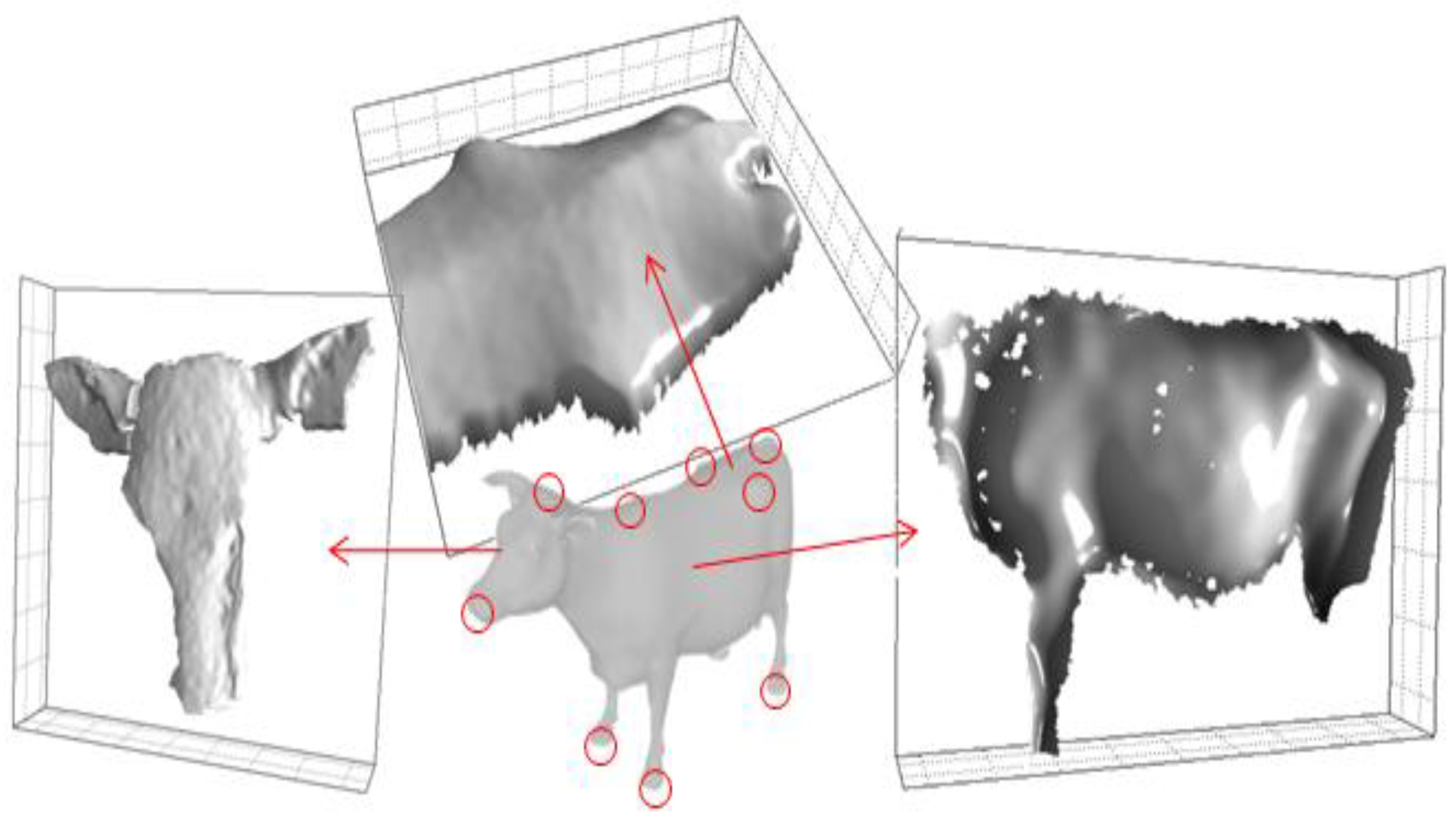

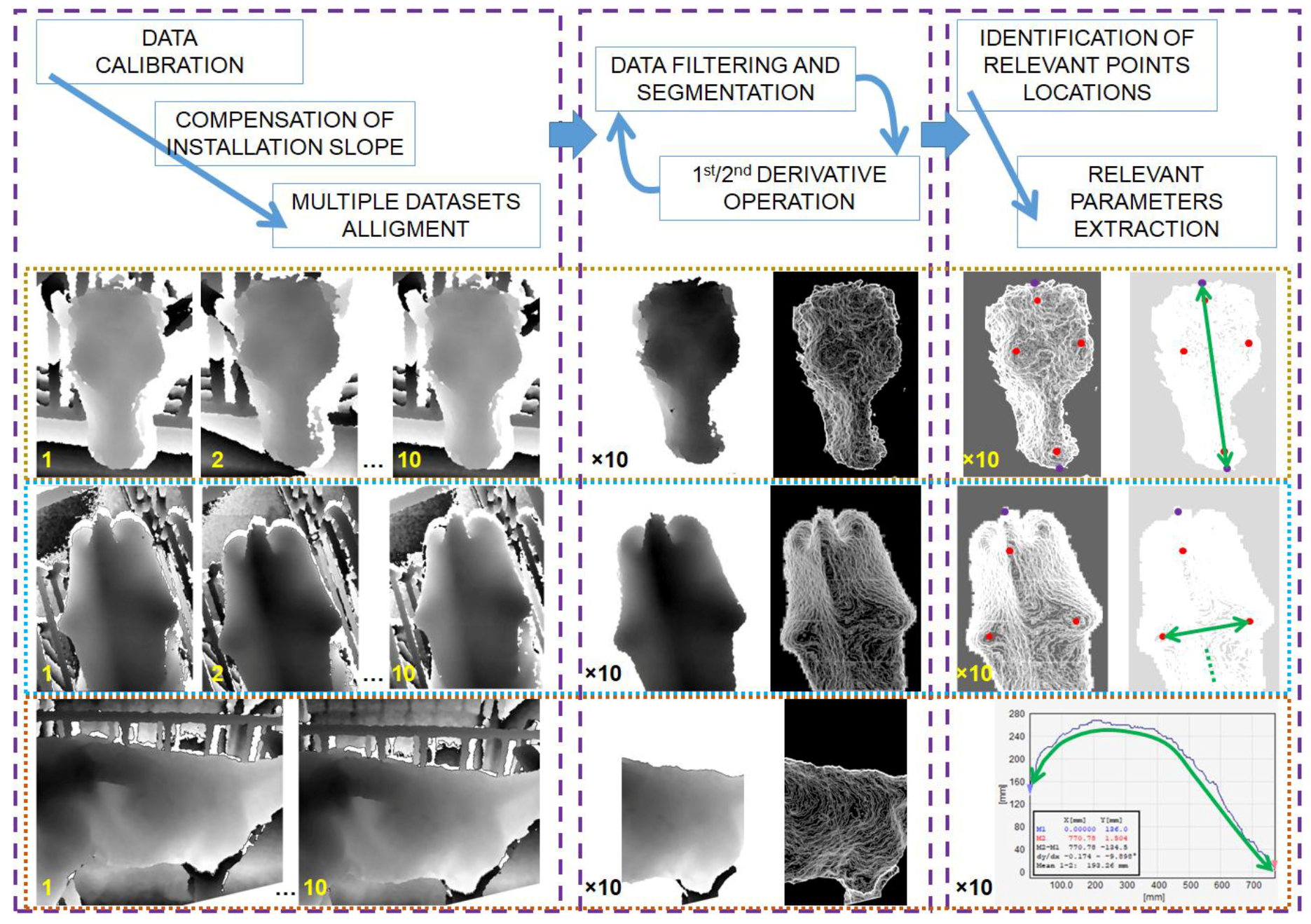

2.6. Data Processing

- (i) data correction;
- (ii) image filtering; and
- (iii) extraction of relevant parameters.
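The three processing steps can be illustrated with a deliberately simple sketch. Every choice below is an assumption made for illustration and is not the processing used in this study: a top-view camera at a known height above the floor, zero or out-of-range depths treated as missing data for step (i), a 3 × 3 median filter as the image filter for step (ii), and maximum body height taken as camera height minus the nearest valid depth for step (iii). The function names (`correct`, `median_filter`, `height_above_floor`) are hypothetical.

```python
from statistics import median
from typing import List, Optional

# Hypothetical input: depth in mm from a top-view camera; 0 marks "no reading".
DepthMap = List[List[Optional[float]]]


def correct(depth: DepthMap, max_range_mm: float = 4000) -> DepthMap:
    """Step (i), illustrative: mark zero or out-of-range readings as missing (None)."""
    return [[d if d is not None and 0 < d <= max_range_mm else None for d in row] for row in depth]


def median_filter(depth: DepthMap, size: int = 3) -> DepthMap:
    """Step (ii), illustrative: size x size median filter that skips missing pixels."""
    h, w = len(depth), len(depth[0])
    r = size // 2
    out: DepthMap = []
    for y in range(h):
        row: List[Optional[float]] = []
        for x in range(w):
            window = [
                depth[j][i]
                for j in range(max(0, y - r), min(h, y + r + 1))
                for i in range(max(0, x - r), min(w, x + r + 1))
                if depth[j][i] is not None
            ]
            row.append(median(window) if window else None)
        out.append(row)
    return out


def height_above_floor(depth: DepthMap, camera_height_mm: float) -> float:
    """Step (iii), illustrative: the nearest valid pixel is taken as the highest body point."""
    nearest = min(d for row in depth for d in row if d is not None)
    return camera_height_mm - nearest


# Toy 5 x 5 frame: floor at 2500 mm, back of the animal at 1100 mm,
# one spurious 300 mm spike in the middle and two missing (0) pixels.
frame = [
    [0, 2500, 2500, 2500, 2500],
    [2500, 1100, 1100, 1100, 2500],
    [2500, 1100, 300, 1100, 2500],
    [2500, 1100, 1100, 1100, 2500],
    [2500, 2500, 2500, 2500, 0],
]

print(height_above_floor(correct(frame), 2500))                 # spike not removed
print(height_above_floor(median_filter(correct(frame)), 2500))  # spike filtered out
```

Without filtering, the single 300 mm spike yields a height of 2200 mm; after the median filter the estimate is 1400 mm, the height of the simulated back. This is why filtering is placed before parameter extraction in the sketch.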

2.7. Body Parameters

3. Results and Discussion

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Distance (mm) | Lateral Range (mm) | Lateral Resolution (mm) | Vertical Resolution (mm) | Background Noise, BGN (mm) |
|---|---|---|---|---|
| 400 | 415 × 310 | 0.65 × 0.65 | 0.7 | 1.1 |
| 1000 | 730 × 550 | 1.14 × 1.14 | 2 | 3.2 |
| 2000 | 1250 × 950 | 1.95 × 1.95 | 4 | 5.5 |
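The lateral resolution column appears to follow from dividing the lateral range by the pixel count of the depth image. A minimal check, assuming a Kinect v1 depth stream of 640 × 480 pixels (a figure not stated in this section), is:

```python
from typing import Dict, Tuple

# Lateral range (width x height, mm) at each distance, copied from the table above.
LATERAL_RANGE_MM: Dict[int, Tuple[int, int]] = {
    400: (415, 310),
    1000: (730, 550),
    2000: (1250, 950),
}

# Assumption: the Kinect v1 depth image is 640 x 480 pixels.
DEPTH_WIDTH_PX = 640
DEPTH_HEIGHT_PX = 480


def lateral_resolution(distance_mm: int) -> Tuple[float, float]:
    """Footprint of one depth pixel (mm) at the given distance."""
    width_mm, height_mm = LATERAL_RANGE_MM[distance_mm]
    return width_mm / DEPTH_WIDTH_PX, height_mm / DEPTH_HEIGHT_PX


for d in sorted(LATERAL_RANGE_MM):
    rx, ry = lateral_resolution(d)
    print(f"{d} mm: {rx:.2f} x {ry:.2f} mm per pixel")
```

Under this assumption the horizontal values agree with the table to within 0.01 mm, while the vertical ones come out slightly larger (about 1.98 mm instead of 1.95 mm at 2000 mm).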

| Uncertainty Source | Manual Meter (1 Measure) | Kinect Sensor (1 Measure) | Kinect Sensor (10 Measures) | Distribution |
|---|---|---|---|---|
| Lateral Resolution (mm) | 1 | 0.65–2.0 | 0.3–0.7 | Rectangular |
| Vertical Resolution (mm) | - | 1.1–5.5 | 0.4–1.8 | Rectangular |
| Background Noise (mm) | - | 2.4 | 0.8 | Triangular |
| Length Calibration Non-Linearity (%) | 0.3 | 0.5 | 0.2 | Normal |
| Abbe Error (%) | 1.6 | 0.2 | 0.2 | Normal |
| Reference Points Localization (mm) | 4–15 | 10–18 | 2–6 | Normal |
| Expanded Uncertainty U (mm) | 7–30 | 16–40 | 3–15 | Normal |
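A common way to combine contributions such as these into an expanded uncertainty is the procedure of the GUM (JCGM 100:2008). The sketch below applies it to the lower bounds of the single-measure Kinect column under several assumptions that this section does not confirm: values for rectangular and triangular sources are read as half-widths and divided by √3 and √6 respectively, values marked Normal are taken as standard uncertainties, percentage contributions are applied to a hypothetical measured length of 1000 mm, all sources are uncorrelated, and the coverage factor is k = 2. The helper names (`Contribution`, `expanded_uncertainty`, `averaged`) are illustrative only, and the result is not meant to reproduce the tabulated U values. The ten-measure column is broadly consistent with the 1/√n reduction expected for random contributions: for example, 2.4 mm/√10 ≈ 0.76 mm against the 0.8 mm listed for background noise.

```python
import math
from dataclasses import dataclass

# Divisors turning a half-width "a" into a standard uncertainty (GUM).
DIVISORS = {
    "rectangular": math.sqrt(3),
    "triangular": math.sqrt(6),
    "normal": 1.0,  # assumption: tabulated value is already a standard uncertainty
}


@dataclass
class Contribution:
    name: str
    value: float            # half-width in mm, or percent of length if relative
    distribution: str
    relative: bool = False  # True when value is given in % of the measured length


def standard_uncertainty(c: Contribution, length_mm: float) -> float:
    a = c.value * length_mm / 100.0 if c.relative else c.value
    return a / DIVISORS[c.distribution]


def expanded_uncertainty(contribs, length_mm: float, k: float = 2.0) -> float:
    # Root-sum-of-squares combination; valid only for uncorrelated contributions.
    u_c = math.sqrt(sum(standard_uncertainty(c, length_mm) ** 2 for c in contribs))
    return k * u_c


def averaged(u: float, n: int) -> float:
    # A random contribution shrinks by sqrt(n) when n independent readings are averaged.
    return u / math.sqrt(n)


# Lower bounds of the "Kinect Sensor (1 Measure)" column.
kinect_single = [
    Contribution("lateral resolution", 0.65, "rectangular"),
    Contribution("vertical resolution", 1.1, "rectangular"),
    Contribution("background noise", 2.4, "triangular"),
    Contribution("calibration non-linearity", 0.5, "normal", relative=True),
    Contribution("Abbe error", 0.2, "normal", relative=True),
    Contribution("reference point localization", 10.0, "normal"),
]

print(f"U = {expanded_uncertainty(kinect_single, length_mm=1000.0):.1f} mm")
print(f"background noise averaged over 10 readings: {averaged(2.4, 10):.2f} mm")
```

With these assumptions the reference point localization term dominates the budget, so improving landmark identification would reduce U more than improving the sensor's resolution.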

| ID | Breed | Animal Category | Age (days) | Number of Lactations |
|---|---|---|---|---|
| 1 | Holstein-Friesian | Calf | 14 | 0 |
| 2 | Holstein-Friesian | Calf | 40 | 0 |
| 3 | Holstein-Friesian | Calf | 56 | 0 |
| 4 | Holstein-Friesian | Calf | 13 | 0 |
| 5 | Holstein-Friesian | Calf | 53 | 0 |
| 6 | Holstein-Friesian | Calf | 32 | 0 |
| 7 | Red & White Holstein | Calf | 172 | 0 |
| 8 | Red & White Holstein | Calf | 168 | 0 |
| 9 | Holstein-Friesian | Calf | 127 | 0 |
| 10 | Holstein-Friesian | Calf | 218 | 0 |
| 11 | Holstein-Friesian | Calf | 193 | 0 |
| 12 | Holstein-Friesian | Cow | 245 | 0 |
| 13 | Holstein-Friesian | Cow | 241 | 0 |
| 14 | Holstein-Friesian | Cow | 561 | 0 |
| 15 | Holstein-Friesian | Cow | 456 | 0 |
| 16 | Holstein-Friesian | Cow | 586 | 0 |
| 17 | Holstein-Friesian | Cow | 800 | 1 |
| 18 | Holstein-Friesian | Cow | 2224 | 3 |
| 19 | Holstein-Friesian | Cow | 1993 | 3 |
| 20 | Holstein-Friesian | Cow | 1386 | 2 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pezzuolo, A.; Guarino, M.; Sartori, L.; Marinello, F. A Feasibility Study on the Use of a Structured Light Depth-Camera for Three-Dimensional Body Measurements of Dairy Cows in Free-Stall Barns. Sensors 2018, 18, 673. https://doi.org/10.3390/s18020673
