1. Introduction

Timing technology is important in modern finance [1], industry and scientific research [2]. High-frequency trading, real-time navigation and the verification of relativistic effects require accurate, high-resolution time and/or frequency information. Timing information is obtained by counting the periodic signals of reference oscillators. Meanwhile, frequencies of the timing signal are multiples of the reference oscillator period. A time-scale is accurate only if the participant oscillators produce frequencies consistent with their nominal values or are stable enough to be predictable. Furthermore, high resolution requires, in turn, a short period of the oscillator output signal. Unfortunately, no high-performance oscillator produces constant and high-resolution signals.

The deviation of an oscillator’s output signal from its nominal value can be divided into deterministic and random parts. The oscillator’s random behavior is well described by a class of noise processes called power-law noise (PLN) [3]. While the random variations are defined in the frequency domain, they are often measured in the time domain by a class of structure functions and referred to as the frequency stability of the oscillator. For example, the Allan (AVAR), modified Allan (MVAR) and Hadamard (HVAR) variances are commonly used. These statistics can be improved by using a ‘total approach’ [4]. Recently, Thêo- [5] and parabolic variances [6] were also proposed. The authors proposed an oscillator noise analysis method called stochastic ONA [7]. The method predicts long-term frequency stabilities using convex optimization techniques. Specifically, the confidence regions of long-term Hadamard variances (HVAR) predicted from 14-day GPS precise clock data include the HVAR estimated from 168-day measured data and are smaller than those estimated from 84-day time deviations.

On the other hand, distinctions between deterministic and random behavior are blurry [8]. It is often difficult to differentiate drift from frequency noise [9]. A main drawback of stochastic ONA is its requirement for drift-free input variances. For example, cesium frequency standards are conventionally believed to be free from drift [10]. However, analysis of historical data and current practice show that the performance of TAI (International Atomic Time) improved when the frequency drifts of participant cesium clocks were taken into account [11].

This paper studies the estimation of oscillator stability under the influence of frequency drift. In

Section 2, the basic concepts and methods of time domain stability have been reviewed. Although time domain stability is related to the frequency domain, discrete sampling has different impacts on the two. The influence of discrete sampling on both domains has also been reviewed in this section. In

Section 3, we introduce a method called stochastic ONA, which extends the oscillator noise analysis problem to the prediction of long-term stability. In the following section, we describe methods to compute coefficient matrices used in stochastic ONA. We also introduce a method that greatly reduces the computational complexity of Walter’s characterization of AVAR and MVAR. From these works, we can then predict long-term frequency contaminated by deterministic linear frequency drift. The proposed model is tested against GPS precise clock data in

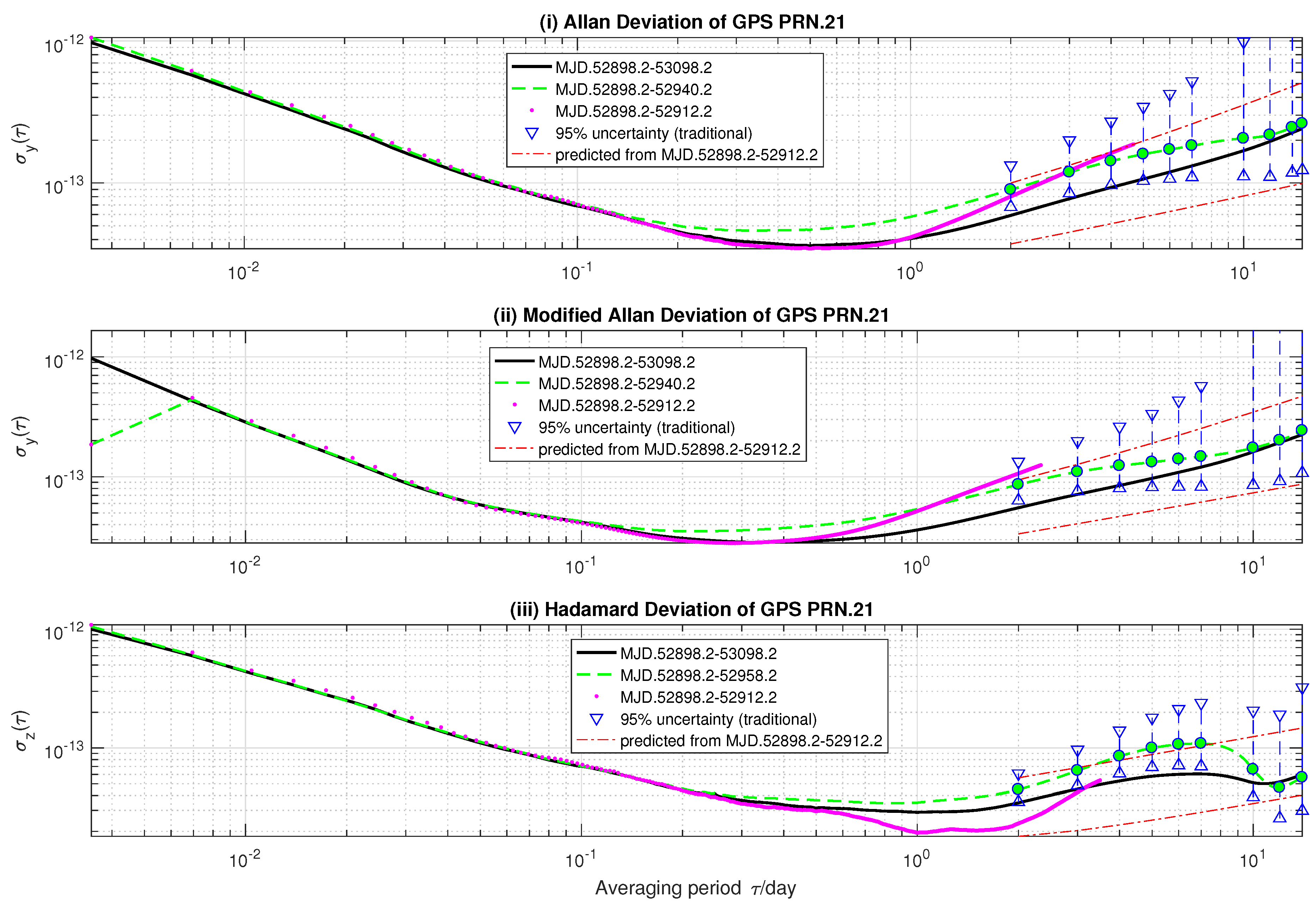

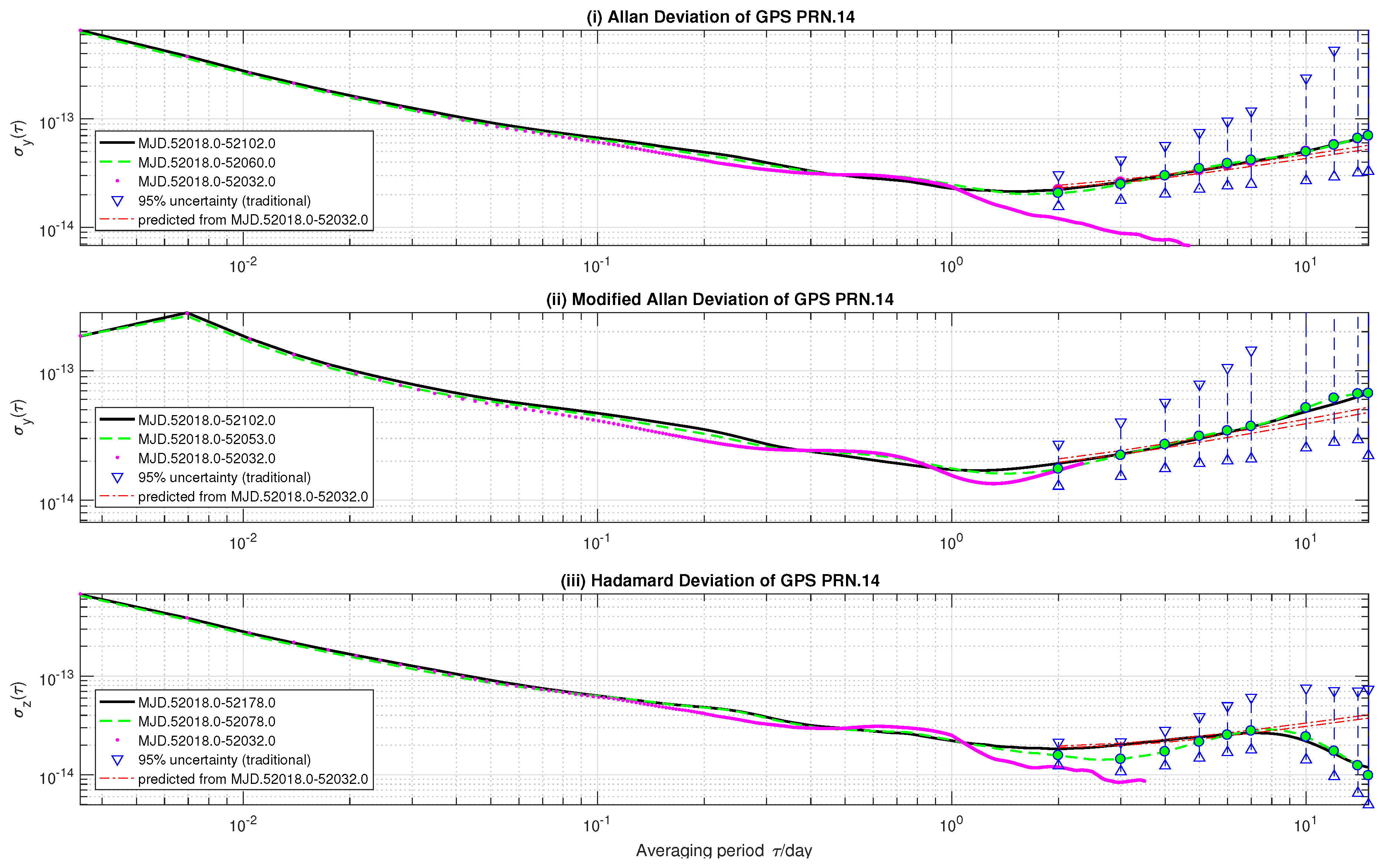

Section 5. The one-week AVAR, MVAR and HVAR predicted by stochastic ONA from 14-day measured data are consistent with those estimated from 84-day data. In addition, the fifteen-day variances predicted by stochastic ONA have more compact confidence regions than those estimated from 42–60-day data.

2. Review of Time Domain Stability

It is well documented that high-performance oscillators are influenced by power-law noise (PLN). PLN processes are conventionally defined by their power spectral densities (PSD):

$$S_y(f) = (2\pi f)^2 S_x(f) = \sum_{\alpha} h_\alpha f^{\alpha}, \qquad (1)$$

where $S_y(f)$ is the PSD of oscillator fractional frequency $y(t)$, $S_x(f)$ the PSD of time deviations $x(t)$, $f$ the (Fourier) frequency and $h_\alpha$ the noise intensity coefficient. Often, $\alpha = 2$ (white phase modulation, WHPM), 1 (flicker PM, FLPM), 0 (white frequency modulation, WHFM), −1 (flicker FM, FLFM), −2 (random walk FM, RWFM) [12]. However, in Global Positioning System (GPS) master control station (MCS) clock prediction, $\alpha$ = 2, 0, −2 and −4 (random run FM, RRFM), and the $h_\alpha$ coefficients are replaced by another set of coefficients [4]:

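However, the PSD measured from an oscillator signal is seldom used on its own in practice. Since PSD estimates are “noisy” [13], time-domain statistics are often used instead. For instance, AVAR [13]:

$$\sigma_y^2(\tau) = \frac{1}{2\tau^2}\,\mathrm{E}\left\{\left[x(t+2\tau) - 2x(t+\tau) + x(t)\right]^2\right\}, \qquad (2)$$

MVAR:

$$\mathrm{Mod}\,\sigma_y^2(\tau) = \frac{1}{2\tau^2}\,\mathrm{E}\left\{\left[\frac{1}{m}\sum_{i=0}^{m-1}\left(x(t+i\tau_0+2\tau) - 2x(t+i\tau_0+\tau) + x(t+i\tau_0)\right)\right]^2\right\}, \qquad (3)$$

and HVAR:

$$\mathrm{H}\sigma_y^2(\tau) = \frac{1}{6\tau^2}\,\mathrm{E}\left\{\left[x(t+3\tau) - 3x(t+2\tau) + 3x(t+\tau) - x(t)\right]^2\right\}, \qquad (4)$$

with $\tau = m\tau_0$.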
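As an illustration of how these three statistics are evaluated in practice, the following minimal Python sketch computes overlapping estimates of AVAR, MVAR and HVAR from evenly sampled time deviations. The function names, the argument order and the choice of overlapping estimators are assumptions made for this example; they follow the textbook second- and third-difference forms rather than any particular implementation used in this paper.

```python
def avar(x, m, tau0):
    """Overlapping Allan variance of time deviations x at tau = m * tau0."""
    tau = m * tau0
    # Second differences of the time deviations with stride m.
    d = [x[i + 2 * m] - 2 * x[i + m] + x[i] for i in range(len(x) - 2 * m)]
    return sum(v * v for v in d) / (2.0 * tau ** 2 * len(d))


def mvar(x, m, tau0):
    """Modified Allan variance: second differences averaged over m samples."""
    tau = m * tau0
    d = [x[i + 2 * m] - 2 * x[i + m] + x[i] for i in range(len(x) - 2 * m)]
    n = len(d) - m + 1              # number of moving sums, N - 3m + 1
    s = sum(d[:m])                  # first moving sum of m second differences
    total = s * s
    for j in range(1, n):
        s += d[j + m - 1] - d[j - 1]    # slide the window by one sample
        total += s * s
    return total / (2.0 * m ** 2 * tau ** 2 * n)


def hvar(x, m, tau0):
    """Overlapping Hadamard variance from third differences with stride m."""
    tau = m * tau0
    d = [x[i + 3 * m] - 3 * x[i + 2 * m] + 3 * x[i + m] - x[i]
         for i in range(len(x) - 3 * m)]
    return sum(v * v for v in d) / (6.0 * tau ** 2 * len(d))
```

For a purely quadratic phase record such as `x = [float(i * i) for i in range(50)]` with `tau0 = 1.0`, both `avar` and `mvar` grow with the square of the averaging time, while `hvar` returns zero because the third difference of a quadratic vanishes.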

A time-domain variance

can be related to its PSD:

Here,

is the averaging time,

sampling period and

the transfer function of

defined in [

12]:

Here, subscript

k is used as a generic form of different variances. The majority of measured data nowadays are digital.

estimated from finite data may have different values for Equation (

5). The former is usually denoted as

, and it can be viewed as a realization of sample variance variable

[

14]. The distribution function

(

for short) of random variable

can be formulated as:

for arbitrary positive real number

, where

e is Euler’s number,

the Gamma function,

the equivalence degrees of freedom (EDF):

variance is estimated from infinite samples and

variance of the random variable

.
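To make the role of the EDF concrete, the sketch below builds a chi-square confidence interval for a variance from its estimate and its EDF. It assumes the usual model in which the EDF times the sample variance, divided by the true variance, follows a chi-square distribution with EDF degrees of freedom. The function names, the series evaluation of the distribution function and the bisection inverse are choices made for this example and are not taken from the paper.

```python
import math


def chi2_cdf(x, nu):
    """Chi-square distribution function via the series of the regularized
    lower incomplete gamma function P(nu/2, x/2). Intended for moderate x."""
    if x <= 0.0:
        return 0.0
    a, z = 0.5 * nu, 0.5 * x
    term = 1.0 / a
    total = term
    for n in range(1, 100000):
        term *= z / (a + n)
        total += term
        if term < 1e-17 * total:
            break
    return total * math.exp(a * math.log(z) - z - math.lgamma(a))


def chi2_ppf(p, nu):
    """Inverse of chi2_cdf by bisection (0 < p < 1)."""
    lo, hi = 0.0, max(1.0, float(nu))
    while chi2_cdf(hi, nu) < p:     # expand the bracket until it contains p
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_cdf(mid, nu) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def variance_ci(s2, edf, level=0.95):
    """Two-sided confidence interval for the true variance given estimate s2."""
    tail = 0.5 * (1.0 - level)
    lower = edf * s2 / chi2_ppf(1.0 - tail, edf)
    upper = edf * s2 / chi2_ppf(tail, edf)
    return lower, upper
```

The interval is asymmetric around the estimate: with few degrees of freedom the upper bound lies much further from `s2` than the lower bound, which is why long averaging times, where the EDF is small, carry wide confidence regions.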

If we denote:

where variance

is estimated from

and

the discrete sampling of time deviations

, then

does not equal Equation (

6). Instead of PSD, Kasdin shows that it is the symmetric two-time autocorrelation function:

which directly samples the continuous-time function [

15]. For example, instead of Equation (

1), Walter shows that PSD measured from discrete sampled time deviations

relates to

in the following way [

16]:

The autocorrelation function of PLN processes has the following asymptotic form when

[

15]:

for

, and:

for

, where:

To derive an expression for the discrete autocorrelation function, the deviation of Brownian motion is often replaced by the discrete Wiener process in time and frequency metrology [

15,

17]. If, in addition, the noise process is wide sense stationary,

can be recast as [

15]:

Here,

. From Equations (

12)–(

14), Walter derives

:

and

:

for Allan variance (AVAR) [16]. It should be noted that variances estimated from discretely sampled data may be distorted when the averaging time $\tau$ is close to the sampling period $\tau_0$. The distortions are caused by aliasing and measurement noise [15]. Equations (15) and (16) do not take these distortions into account. Furthermore, the influence of frequency drift is not included in these equations. As a development of Walter’s work, we will formulate the effect of deterministic linear frequency drift in AVAR estimates and derive

and

for HVAR in

Section 4.

On the other hand, if

is a measurement of the time-domain variance

, we can estimate the

coefficients from

. It can be formed as a least square problem:

and called oscillator noise analysis. Suppose there are

M different input variances. Therefore, the coefficient matrix

can be divided into

M blocks:

whose

k-th block

,

,

,

,

is defined in Equation (

9).

can be formed by using the method we proposed in

Section 4. Similarly, the column vector of input variances

can be partitioned into

M blocks:

the

k-th block:

is comprised of

,

, ⋯,

estimated from time deviations

,

,

,

N.

h is a column vector of noise intensity coefficients and

W the weight matrix. The works in [

18,

19] give different ways to compute

W.
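The least-squares formulation of oscillator noise analysis can be sketched in a few lines. The example below is a simplified stand-in, not the method of this paper: it uses the textbook continuous-time AVAR coefficients for white FM, flicker FM and random walk FM in place of the discrete-time coefficient matrix, takes a diagonal weight matrix supplied by the caller, and does not enforce non-negative coefficients. All function names are hypothetical.

```python
import math


def avar_row(tau):
    """AVAR coefficients multiplying [h_0 (WHFM), h_-1 (FLFM), h_-2 (RWFM)]."""
    return [1.0 / (2.0 * tau), 2.0 * math.log(2.0), 2.0 * math.pi ** 2 * tau / 3.0]


def solve(matrix, rhs):
    """Solve a small linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= f * aug[c][k]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][k] * sol[k] for k in range(r + 1, n))
        sol[r] = (aug[r][n] - tail) / aug[r][r]
    return sol


def fit_noise(taus, variances, weights):
    """Weighted least squares: minimize sum_i w_i (sigma_i^2 - A_i . h)^2."""
    rows = [avar_row(t) for t in taus]
    n = len(rows[0])
    # Normal equations (A^T W A) h = A^T W sigma with diagonal W.
    ata = [[sum(w * a[i] * a[j] for a, w in zip(rows, weights))
            for j in range(n)] for i in range(n)]
    atb = [sum(w * a[i] * s for a, w, s in zip(rows, weights, variances))
           for i in range(n)]
    return solve(ata, atb)
```

A typical call passes the averaging times, the measured variances and weights such as `1 / variance**2`, which makes the fit relative rather than absolute. With real, noisy variances an unconstrained fit can return negative coefficients, which is one reason constrained formulations are preferred.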

3. Stochastic ONA

We extended the oscillator noise analysis problem to the prediction of long-term stability. Since the likelihood (or conditional probability) of:

is zero, we estimate a

confidence region of

instead. This extension is realized by using convex optimization techniques (

Appendix A.2), and we call it stochastic ONA [

7].

The basic idea of stochastic ONA is:

and:

when

, where

is the chi-square distribution function defined in Equation (

7),

,

Clearly,

when

is small enough. The matrices

and

are obtained by substituting

in the coefficient matrix

with

and

. This can be cast into the following optimization problem:

where the

-dimensional column vector of the noise intensity coefficients

h subject to:

In practice, it is not always easy to find such an

. In addition, Equation (21) does not model the uncertainty of input variances completely. For example, neither the correlations among different averaging times nor those among different variances calculated from the same underlying time series are taken into account. We therefore prescribe a lower bound

as a threshold. Equation (

21) will be replaced by an alternative model if stochastic ONA fails to find an

that holds for the inequalities. While different variances estimated from the same time series are correlated, they contain independent pieces of information. It is difficult to formulate the correlations of structure functions precisely. It is even more difficult to solve stochastic programming under complex probabilistic constraints. When unformulated information has a strong impact on only a small fraction of the input variances, the affected variances can be treated as ‘violations’ using the techniques of compressive sensing. The auxiliary variables

and

are used as indicators for the violations. Specifically, the

-dimensional non-negative vector variable

and

are defined such that:

Since

,

for any norm

of

and

. We choose the

-norm (see

Appendix A.1 for details):

where

is the

i-th component of

, and

returns the absolute value of

. The probabilistic fact that only a minority of input variances violate Equation (

21) can be formulated using the property of

: the minimum of the

norm is approximately sparse when we have more variables than problem data. Therein, the optimization problem can be formulated as:

where optimization variables

subject to:

We adjust the values of input variance according to the result of Equation (

23). Consequently, we can find that

h holds for Equation (

21) with the adjusted variances. Suppose

is the optimum of Equation (

23). We label an input variance

as an ‘outlier’ when:

An outlier will be adjusted in the following way:

where

. We set

= 0.5 in this article.

We can then either minimize or maximize the values of:

under the restriction of Equation (

21). Here,

is not necessarily any of

,

,

. Neither

should be smaller than

, where

is the sampling interval and

N number of time deviations. If we denote the minimum and maximum of Equation (

25) as

and

, respectively,

can be approximately considered as an

confidence region of

. This is the predictive model used in stochastic ONA.

4. Models for Discrete-Time Variances

In this section, we introduce a way to compute coefficient matrices

,

and

used in stochastic ONA. Because

and

can be computed from

and the inverse of the chi-square distribution function (

7). Equation (

7) is well-defined if the degrees of freedom

is known.

, in turn, can be determined by

and

. Specifically, we: (i) formulate the influence of deterministic linear frequency drift on Allan (AVAR) and modified Allan (MVAR) variance; (ii) derive expressions for

and

of discrete-time Hadamard variance (HVAR). Computing the values of

and

for discrete-time AVAR, MVAR and HVAR is a daunting task, since we need to compute the gamma functions

(

for MVAR) times. We reduce the computation of gamma functions to three times per

noise at the end of this section.

4.1. Drift Model

To formulate the influence of deterministic linear frequency drift on AVAR and MVAR, we first assume the oscillator output signal to be contaminated by drift. Suppose its time deviations

can be separated as:

where

and

are discrete sampling of the continuous-time signals

and

, respectively. We also denote the AVAR and MVAR estimated from

as

and Mod

, respectively. Apparently,

and:

Since

is unknown, AVAR and MVAR can only be measured from

. We denote them as

and Mod

, respectively. It can be shown, from Equations (

2) and (

3), that:

and:

In other words, the drift-free AVAR and MVAR can, in theory, be separated from the influence of drift.
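The separation of the drift contribution can be checked numerically. The sketch below assumes that linear frequency drift with rate `a` enters the time deviations as a quadratic term `0.5 * a * t**2`, and that the random part is white FM noise simulated as a random walk in phase; both choices, along with the helper names and parameter values, are assumptions made for this illustration. Under these assumptions the measured AVAR splits into the drift-free AVAR, a drift term `a**2 * tau**2 / 2`, and a cross term that has zero expectation for zero-mean noise.

```python
import random


def second_differences(x, m):
    """Second differences of the time deviations with stride m."""
    return [x[i + 2 * m] - 2 * x[i + m] + x[i] for i in range(len(x) - 2 * m)]


def avar(x, m, tau0):
    """Overlapping Allan variance at tau = m * tau0."""
    d = second_differences(x, m)
    return sum(v * v for v in d) / (2.0 * (m * tau0) ** 2 * len(d))


random.seed(1)
N, tau0, a, m = 2000, 1.0, 1e-3, 50      # illustrative values only
tau = m * tau0

# Drift-free time deviations: random walk in phase (white FM noise).
x = [0.0]
for _ in range(N - 1):
    x.append(x[-1] + random.gauss(0.0, 1.0) * tau0)

# Measured time deviations: noise plus quadratic drift term.
z = [xi + 0.5 * a * (i * tau0) ** 2 for i, xi in enumerate(x)]

noise_part = avar(x, m, tau0)
drift_part = 0.5 * a ** 2 * tau ** 2
d = second_differences(x, m)
cross_part = a * sum(d) / len(d)         # vanishes in expectation
measured = avar(z, m, tau0)
```

In a finite record the cross term is small but not exactly zero, so `measured` equals `noise_part + drift_part` only on average; including `cross_part` makes the decomposition exact up to rounding.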

To predict long-term stability, the sign of

a makes no difference. We can therefore treat

as a component of

h. The column vector

h of a rubidium frequency standard, for instance, is:

where

,

, 1, 0,

,

and

are noise intensity coefficients of white and flicker PM, white, flicker, random walk and random run FM, respectively. Accordingly, the

m-th row of

and

can be cast as:

and:

for AVAR, respectively. Here, we use the subscript

, 1, 0,

,

or

to indicate the dominant PLN process; other PLN processes will be ignored in the computation of the inverse chi-square distribution function. While

is given in Equation (

15) and

in Equation (

16) explicitly, it is a daunting task to compute

and

from these equations. As we show at the end of this section, the computation can be greatly shortened by taking the properties of the gamma function into account. In particular, Equation (15) can be simplified in the case of GPS MCS clock prediction:

On the other hand, the simplified expression for

depends on the ratio of

m to

N. When

,

for

,

for

, and:

for

. Simplified expressions for

,

, will be given in

Appendix B.

4.2. Hadamard Variance

To distinguish the influence of frequency drift from the random behavior of an oscillator, for example RWFM or RRFM, we can combine AVAR with a statistic that converges for RRFM and is free from drift. We choose HVAR among such statistics. To form the coefficient matrices used in stochastic ONA, we derive here the corresponding expressions for discrete-time HVAR.
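The reason HVAR is free from linear frequency drift can be seen from a short calculation. The sketch below assumes that the drift enters the time deviations as a quadratic term with rate $a$; the symbols $x_0$ and $y_0$ for the initial time and frequency offsets are introduced here only for illustration.

```latex
\begin{align*}
x_d(t) &= x_0 + y_0 t + \tfrac{1}{2} a t^2, \\
x_d(t+2\tau) - 2x_d(t+\tau) + x_d(t) &= a\tau^2, \\
x_d(t+3\tau) - 3x_d(t+2\tau) + 3x_d(t+\tau) - x_d(t) &= 0.
\end{align*}
```

After squaring and dividing by $2\tau^2$, the second difference contributes $a^2\tau^2/2$ to AVAR, whereas the third difference, and hence the drift contribution to HVAR, vanishes identically.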

Since discrete-time PSD is not a direct sampling of the corresponding continuous-time PSD, the discrete-time HVAR is not a discrete sampling of the continuous function defined by Equation (5). On the other hand, the discrete-time symmetric two-time autocorrelation function is a direct sampling of its continuous counterpart (10). If we can recast the continuous-time HVAR as a combination of autocorrelation functions, the discrete-time HVAR can be derived by directly sampling the autocorrelation function. Equivalently, if the discrete-time HVAR can be expanded as a combination of autocorrelation functions, an explicit expression of the variance can be derived by replacing the autocorrelation functions with Equations (12)–(14).

From Equations (

4) and (

10),

By substituting autocorrelation functions in the equation above with Equations (

12) and (

13), we attain:

Obviously, Equation (

35) converges when

. Because all of the power-law noises (PLN) mentioned before have a power index

, Equation (

35) holds for the problem discussed.

In addition,

, the sampling interval

ranges from several minutes to days, and HVAR is approximately independent of

t (We assume here that the random behavior of the oscillator is unchanged. Otherwise, HVAR is either divergent or changes with time

t). Hence, we replace the symmetric two-time autocorrelation function in Equation (

35) with Equation (

14). The expression for

of discrete-time HVAR is therein derived:


Likewise, we expand

with the symmetric two-time autocorrelation functions:

by assuming the third order differences of

,

m fixed, are normally distributed. It is easy to see that Equation (

37) holds for

after substituting the autocorrelation functions in the above equation with Equations (

12) and (

13). Furthermore,

is approximately independent of the parameter

t. Therefore, we replace the autocorrelation functions with Equation (

14).

of HVAR is therein cast as

With these expressions, the coefficient matrices

,

and

in stochastic ONA can be constructed in the following way:

where

is defined in Equation (

28),

and

in Equation (

29). The

m-th rows of

and

are defined as:

and:

respectively. When the time series contains random run FM,

. While AVAR does not converge for

PLN, the noise process has little influence on short-term AVAR estimated from real data. In such a case, the inconsistency between Equations (

29) and (

40) will be treated as ‘violations’ by the optimization problem (

23). The unformulated influence of

PLN in Equation (

29) will be smoothed out by Equation (

24).

In GPS MCS clock prediction, only PLN of

, 0,

and

are considered. In such a case, the flicker noise components in

,

and

should be removed. Furthermore, the remaining components can be computed using the following simplified expression:

If, in addition,

,

for

,

for

,

for

, and:

for

. Expressions of

for

will be given in

Appendix B.

4.3. Quick Computation of Discrete-Time Variances

Although for Equations (

36) and (

38), Walter’s characterizations of AVAR and HVAR holds for real

values, they produce heavy computational burdens. If their computational complexity is represented by the evaluation of Gamma functions, then, for given

m, the complexity of Equations (

15), (

16), (

36) and (

38) is

and

for Walter’s characterization of MVAR. Here, we describe a method that reduces this cost to three gamma-function evaluations per noise type.

In order to estimate the values of Equations (

15), (

16), (

36) and (

38), we define an

N-dimensional column vector

. The

i-th component of

is:

From the properties of the gamma function, we recast Equations (

15), (

16), (

36) and (

38) as functions of

. For instance,

and:

It is obvious that:

On the other hand, for any

, there exists

ℓ such that:

for some

. Hence, the auxiliary parameter

is both sufficient and necessary in the computation of Equations (

15), (

16), (

36) and (

38).

To calculate the values of

, we start by searching for the least positive

such that:

and:

Then, we compute the value of

:

Other components of

can be estimated recursively: Given the value of

:

if

is unknown,

if

is unknown,

By using the auxiliary vector, we reduce the number of gamma-function evaluations to three per noise type.
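The saving comes from the recurrence Γ(z + 1) = zΓ(z): once one value is known, neighboring values follow from a single multiplication. The sketch below illustrates the idea on a hypothetical sequence of gamma-function ratios, Γ(i + a)/Γ(i + b); this sequence, the function name and the restriction to positive `a` and `b` are assumptions chosen for the example and are not the exact auxiliary vector defined in this section.

```python
import math


def gamma_ratio_sequence(a, b, n):
    """Return [Gamma(i + a) / Gamma(i + b) for i in range(n)], a > 0, b > 0.

    Only the first element needs log-gamma evaluations; every later element
    follows from Gamma(z + 1) = z * Gamma(z) applied to both arguments.
    """
    ratio = math.exp(math.lgamma(a) - math.lgamma(b))
    out = [ratio]
    for i in range(n - 1):
        ratio *= (i + a) / (i + b)      # step from index i to index i + 1
        out.append(ratio)
    return out
```

Working with the ratio rather than with the individual gamma values also avoids overflow, since Γ(i + a) alone exceeds the double-precision range for arguments above roughly 170.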

6. Conclusions

In this article, we discussed the prediction of long-term stability in the presence of deterministic linear frequency drift. The fundamental theory of time-domain stability analysis and the influence of discrete sampling were first revisited. Based on these theories, we constructed a method called stochastic ONA. Stochastic ONA extends the capability of conventional oscillator noise analysis to the prediction of long-term frequency stability. We then introduced methods to model long-term variances contaminated by frequency drift. Specifically, we: (i) formulated the influence of frequency drift on Allan (AVAR) and modified Allan (MVAR) variances; (ii) derived expressions for discrete Hadamard variance (HVAR); (iii) simplified the formulations for the case of GPS MCS (master control station) clock prediction; and (iv) introduced a method that reduces the computational complexity of Walter’s characterization of AVAR and MVAR.

To test stochastic ONA and the model, we predict -day AVAR, MVAR and HVAR based on 14-day GPS precise clock data. Due to limited space, we choose the result of GPS SVN 45 and 41 as representatives:

For the SVN.45 satellite clock, stochastic ONA cannot find a set of noise intensity coefficients for which Equation (21) holds for the input variances. In such a case, stochastic ONA predicts long-term stabilities based on Equation (23). The criterion (23) takes the probability distributions of input variances into account and produces robust results. All the referenced variances are included in the predicted confidence regions. On the other hand,

- and 2-day referenced AVAR and MVAR, and

= 2∼7-day HVAR are not included in the 95%-confidence regions estimated from 42-day clock data.

For the SVN.41 onboard clock, stochastic ONA does find noise intensity coefficient values that satisfy the probabilistic constraints (21). In this case, it predicts long-term stability of the satellite frequency standard based on the least-squares criterion (17). Despite the compactness of predicted confidence intervals, only

-day referenced variances are included in these regions. Specifically,

-day referenced AVAR and MVAR are greater than the predicted ones, while the

-day referenced HVAR is smaller than the predictions. This suggests an overestimation of the RWFM noise level and an underestimation of frequency drift. Nevertheless, the inconsistency may also be attributed to an inappropriate power-law model used in this paper.

In summary, the method introduced in this paper can predict long-term stability superimposed with the influence of frequency drift. Criterion (23) takes the probability distributions of input variances into account by assuming that the majority of input variances lie within their

confidence regions. The predictions made in this way have large uncertainty but are robust. In contrast, the least-squares criterion (17) assumes symmetric distributions of input variances that do not exist, which reduces both the uncertainty and the robustness of the result. We should find an alternative to the least-squares criterion in a future study.