Enhancing Multi-Camera People Detection by Online Automatic Parametrization Using Detection Transfer and Self-Correlation Maximization †

Abstract

1. Introduction

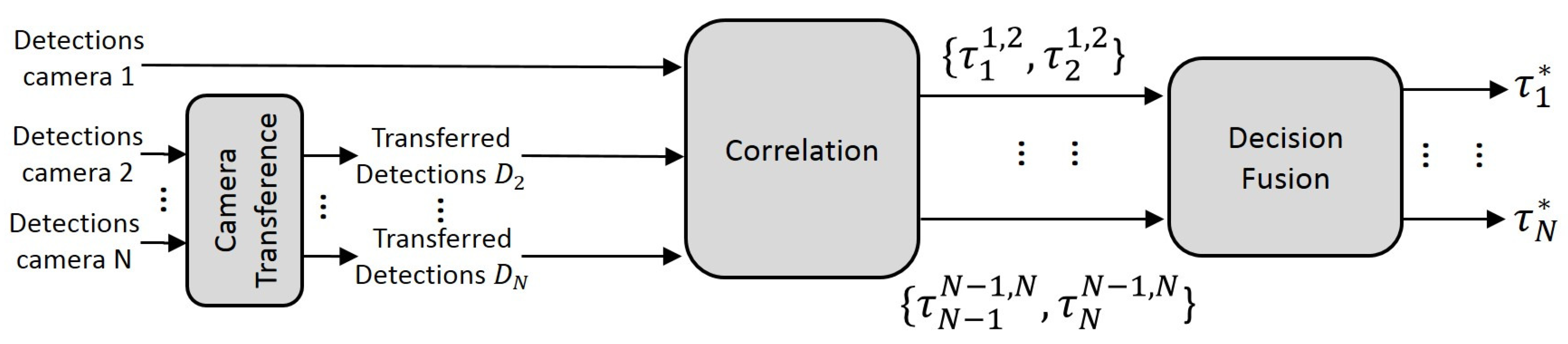

2. Framework Overview

- The frame-by-frame detections of all cameras are extracted, transferred to the desired viewpoint, and homogenized (the position and volume of detections transferred between cameras must be corrected). In this way, the object information is not reduced to a single coordinate, which allows more information (volume, height, aspect ratio) to be transferred and processed for each camera viewpoint.

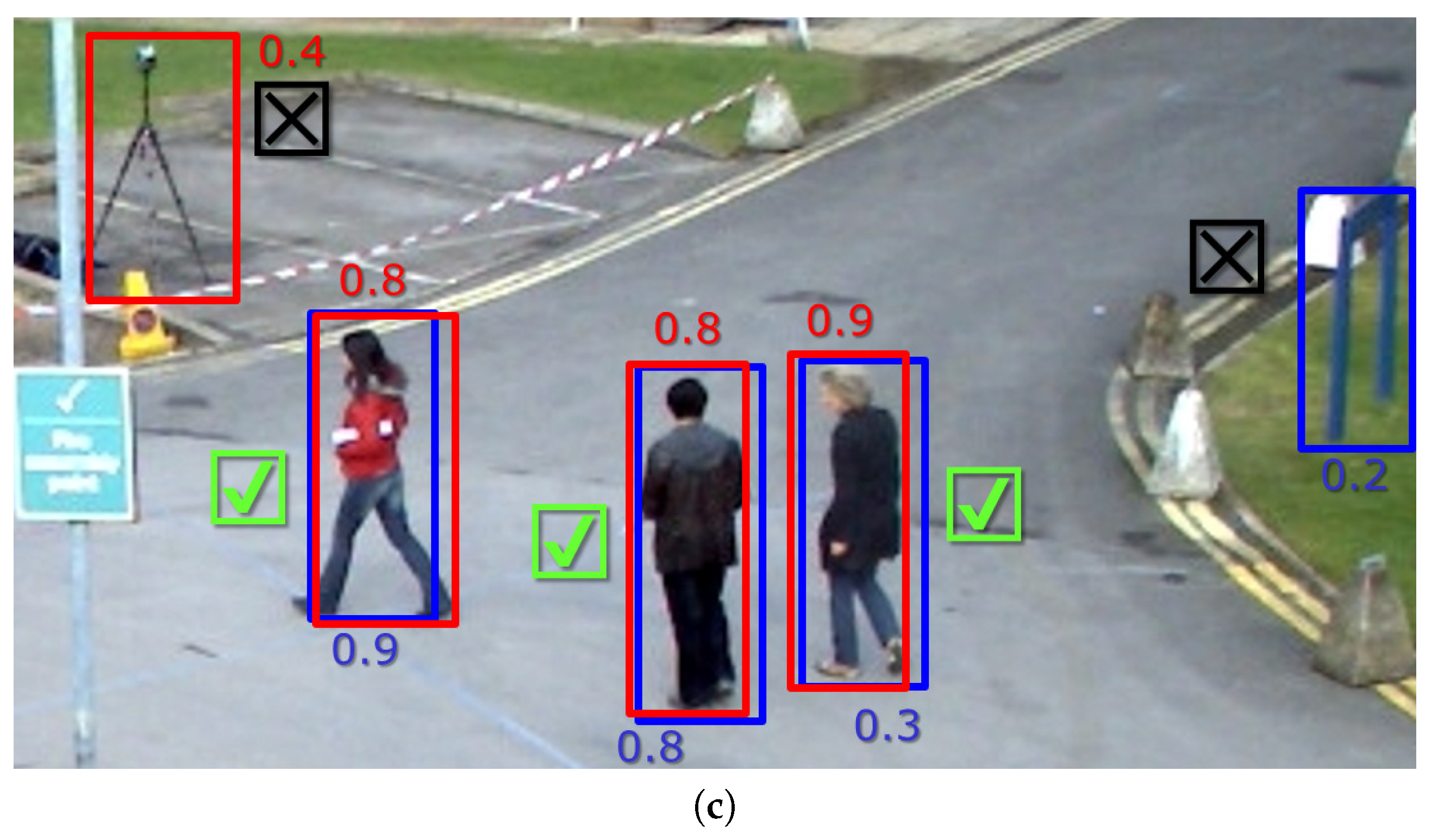

- The homogenized detections from the previous stage are correlated frame by frame, and an optimal decision threshold is selected for each camera and frame. The correlations are computed for each pair of transferred detection results and determine an optimal pair of thresholds for each pair of cameras. Finally, the pair-wise selected thresholds are combined by weighted voting to obtain the best-adapted threshold for each individual camera.
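The pair-wise threshold selection and the weighted voting described above can be illustrated with a small sketch. It is not the authors' implementation and rests on several assumptions: each camera's transferred detections are rasterized into a per-pixel confidence map in the common viewpoint, the similarity between two cameras is a normalized correlation of the thresholded binary masks, and "weighted voting" is taken to be a correlation-weighted average of the pair-wise choices. The names `mask_at`, `correlation`, `best_pair`, and `select_thresholds` are hypothetical.

```python
from itertools import combinations

import numpy as np


def mask_at(conf_map, thr):
    """Binary mask of pixels whose detection confidence reaches `thr`."""
    return (conf_map >= thr).astype(float)


def correlation(a, b):
    """Normalized correlation of two binary masks (0.0 if either is empty)."""
    denom = np.sqrt(a.sum() * b.sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_pair(conf_i, conf_j, thresholds):
    """Exhaustively search the threshold pair that maximizes the correlation."""
    best = (-1.0, None, None)
    for ti in thresholds:
        mi = mask_at(conf_i, ti)
        for tj in thresholds:
            c = correlation(mi, mask_at(conf_j, tj))
            if c > best[0]:  # strict '>' keeps the first (lowest) pair on ties
                best = (c, ti, tj)
    return best


def select_thresholds(conf_maps, thresholds):
    """One threshold per camera from correlation-weighted pair-wise votes."""
    votes = [[] for _ in conf_maps]
    for i, j in combinations(range(len(conf_maps)), 2):
        c, ti, tj = best_pair(conf_maps[i], conf_maps[j], thresholds)
        votes[i].append((ti, c))
        votes[j].append((tj, c))
    selected = []
    for cam_votes in votes:
        values = np.array([v for v, _ in cam_votes])
        weights = np.array([w for _, w in cam_votes])
        if weights.sum() > 0:
            selected.append(float(np.average(values, weights=weights)))
        else:  # no pair agreed on anything: fall back to the middle threshold
            selected.append(float(np.median(thresholds)))
    return selected


# Toy frame: one person seen by all three cameras (confidence 0.9) plus one
# low-confidence false positive (0.4) at a different place in each camera.
maps = [np.zeros((10, 10)) for _ in range(3)]
for k, m in enumerate(maps):
    m[2:5, 2:5] = 0.9
    m[7:9, 3 * k:3 * k + 2] = 0.4

selected = select_thresholds(maps, [0.2, 0.5, 0.8])
```

In this toy frame the lowest threshold keeps the false positives, which lowers the agreement between cameras, so the search prefers a threshold that retains only the shared detection. A real system would run this per frame, as the text describes.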

3. Detection Transfer between Cameras

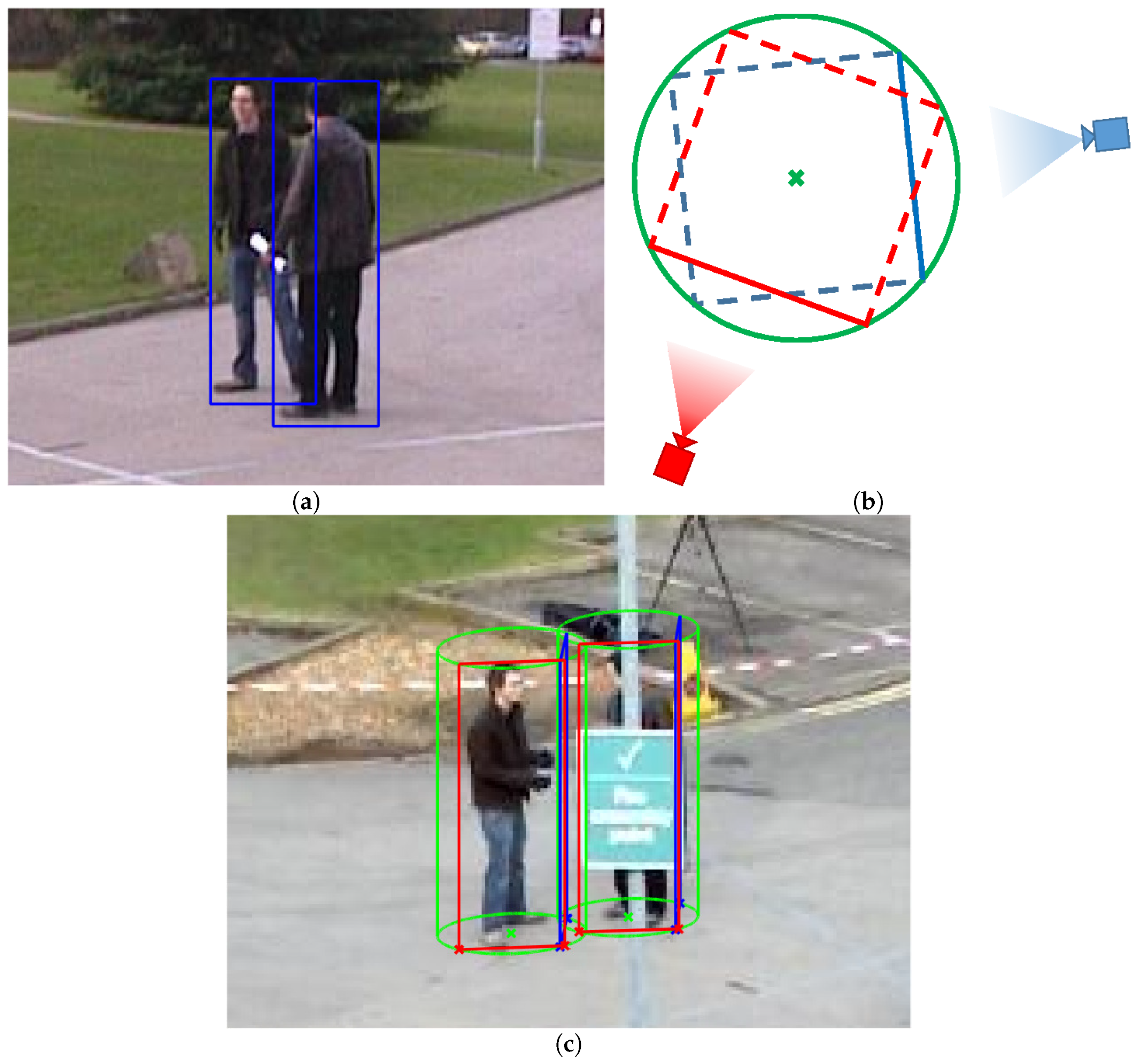

- First, the base (bottom) segment of the detection bounding box is projected onto the common plane, which is the ground plane in our scenario. The mapping to this plane can be obtained using homographic techniques or from the intrinsic and extrinsic parameters of the cameras. We use the base segment because it lies in the common plane (we assume that every person stands on the ground, and therefore so does the base segment of the corresponding bounding box), which allows it to be transferred accurately. Figure 2a shows two bounding boxes that will be transferred.

- Using the projected segment in the common plane, a circle is defined such that the projected segment forms one side of a square inscribed in it. In Figure 2b, the projected segment is drawn as a solid blue line, the square as a dashed blue line, and the circle in green.

- To define the bounding box base segment that will be transferred to the other camera, the inscribed square (blue) is rotated (dashed red line in Figure 2b) by an angle such that its closest side is perpendicular to the line connecting the new camera with the center of the circle (green cross in Figure 2b). This improves on the results of a direct transfer, i.e., one without geometry and volume considerations. This side (solid red line) corresponds to the projection of the transferred bounding box base segment.

- The height of the cylinder is estimated assuming a fixed aspect ratio, taking into account the object's original height and the distances from the cameras to the object.

- Finally, the generated cylinder is transferred to the point of view of the new camera, again using a homography (the inverse matrix) or the intrinsic and extrinsic parameters of the new camera. An example of the resulting cylinders and transferred bounding boxes is shown in Figure 2c.
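The ground-plane geometry of the first three steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the image-to-ground mapping is given as a 3x3 homography, both camera positions are known in ground-plane coordinates, and the inscribed square is assumed to extend away from the source camera (the text does not say on which side of the segment it lies). The function names `project_points` and `transfer_base_segment` are hypothetical.

```python
import numpy as np


def project_points(H, pts):
    """Apply a 3x3 homography H to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ np.asarray(H, dtype=float).T
    return mapped[:, :2] / mapped[:, 2:3]


def transfer_base_segment(H_src_to_ground, base_px, src_cam_ground, new_cam_ground):
    """Return (center, radius, segment) on the ground plane.

    base_px: the two image endpoints of the bounding box base segment.
    segment: base segment as it should face the new camera, shape (2, 2).
    """
    # Step 1: project the base segment onto the ground plane.
    a, b = project_points(H_src_to_ground, base_px)
    side = np.linalg.norm(b - a)
    mid = (a + b) / 2.0

    # Step 2: circle circumscribing the square that has the segment as a side.
    u = (b - a) / side
    n = np.array([-u[1], u[0]])  # unit normal to the segment
    if np.dot(n, mid - np.asarray(src_cam_ground, dtype=float)) < 0:
        n = -n  # assumption: the square extends away from the source camera
    center = mid + (side / 2.0) * n
    radius = side / np.sqrt(2.0)

    # Step 3: rotate the square so that its side closest to the new camera is
    # perpendicular to the line joining that camera and the circle center.
    d = center - np.asarray(new_cam_ground, dtype=float)
    d = d / np.linalg.norm(d)
    perp = np.array([-d[1], d[0]])
    near_mid = center - (side / 2.0) * d
    segment = np.array([near_mid - (side / 2.0) * perp,
                        near_mid + (side / 2.0) * perp])
    return center, radius, segment


# Toy example with an identity homography (image coordinates already on the
# ground plane), source camera at (0, -10) and new camera at (10, 1).
center, radius, segment = transfer_base_segment(
    np.eye(3), [(-1.0, 0.0), (1.0, 0.0)], (0.0, -10.0), (10.0, 1.0)
)
```

The returned segment would then be mapped into the new camera image with that camera's ground-to-image homography, and the cylinder height added as described in the fourth step.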

4. Correlation Stage

5. Experimental Results

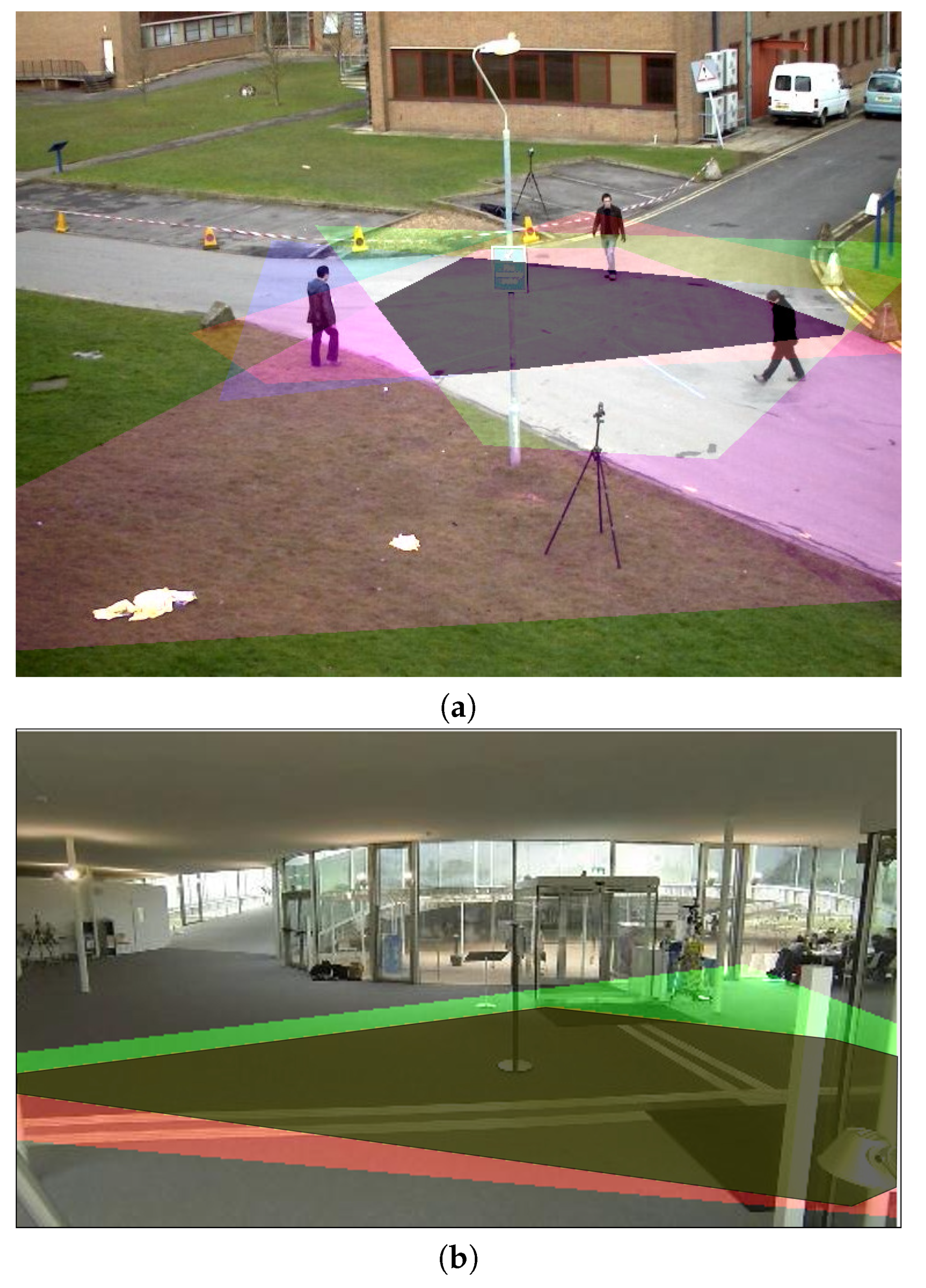

5.1. Experimental Setup

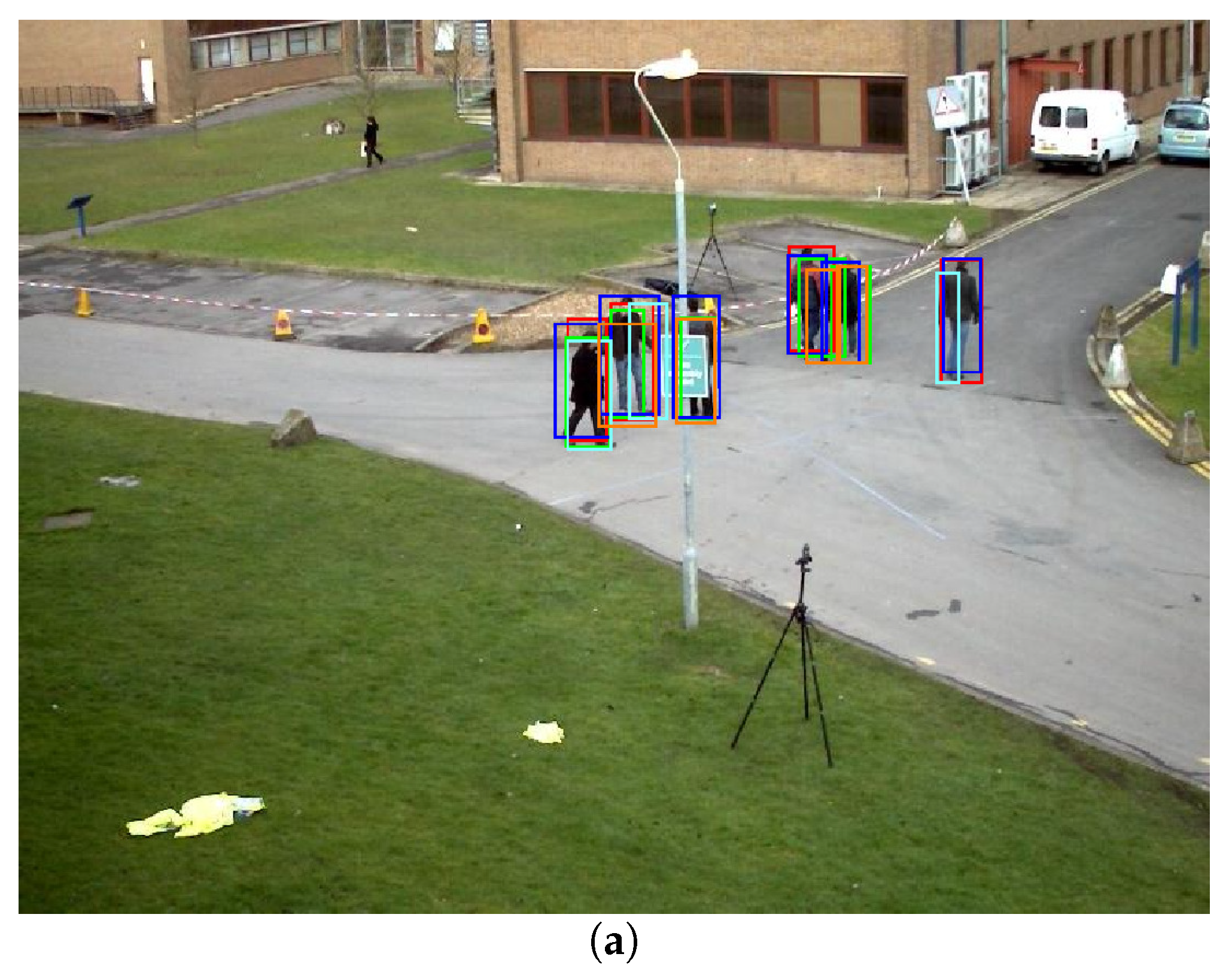

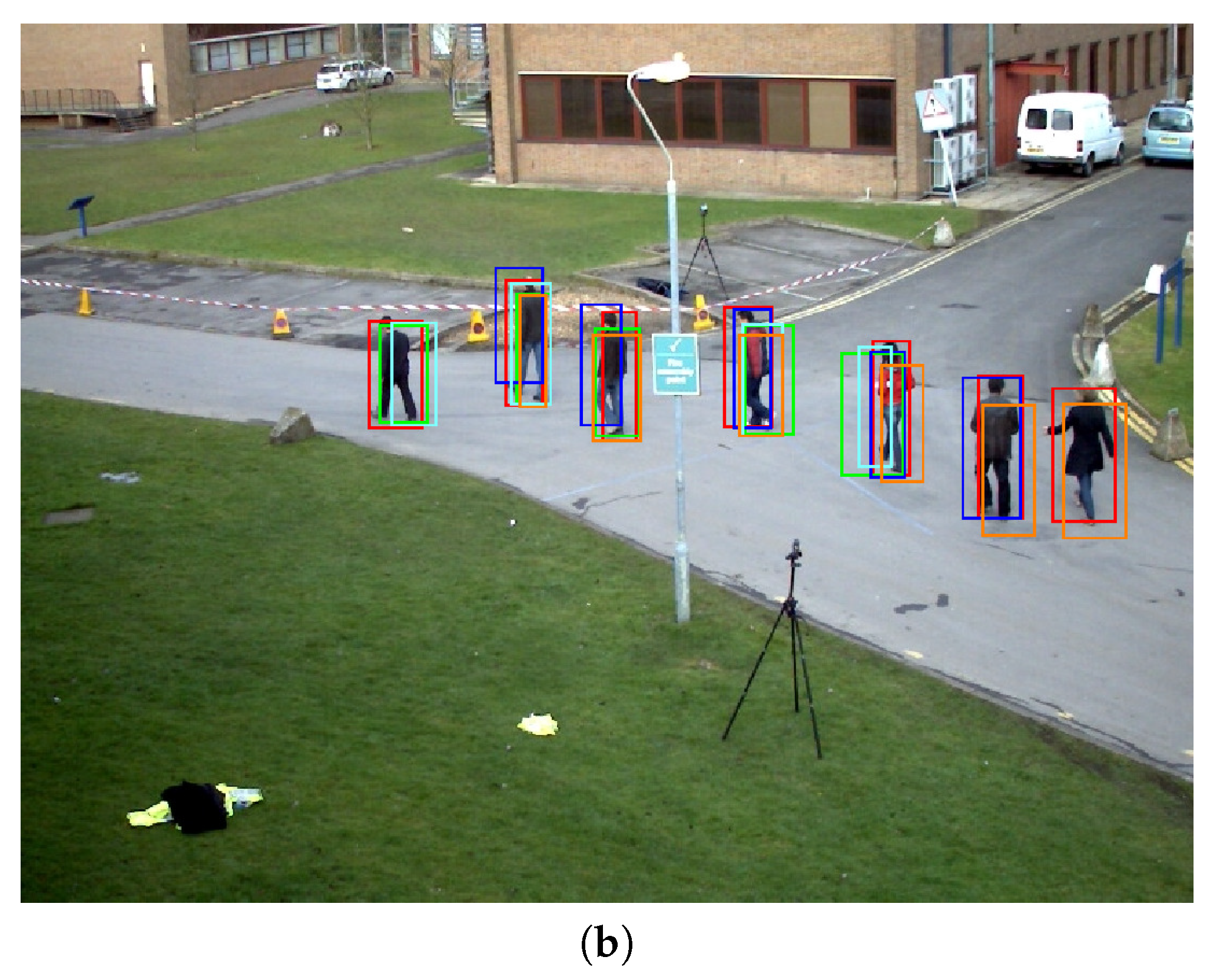

5.2. Proposal Results

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Wang, X.; Wang, M.; Li, W. Scene-Specific Pedestrian Detection for Static Video Surveillance. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 361–374.

- Garcia-Martin, A.; Martinez, J.M. People detection in surveillance: classification and evaluation. IET Comput. Vis. 2015, 9, 779–788.

- Devyatkov, V.V.; Alfimtsev, A.N.; Taranyan, A.R. Multicamera Human Re-Identification based on Covariance Descriptor. Pattern Recognit. Image Anal. 2018, 28, 232–242.

- Nieto, R.M.; Garcia-Martin, A.; Hauptmann, A.G.; Martinez, J.M. Automatic Vacant Parking Places Management System Using Multicamera Vehicle Detection. IEEE Trans. Intell. Transp. Syst. 2018, 1–12.

- Pesce, M.; Galantucci, L.; Percoco, G.; Lavecchia, F. A Low-cost Multi Camera 3D Scanning System for Quality Measurement of Non-static Subjects. Procedia CIRP 2015, 28, 88–93.

- Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A Large Scale Dataset for 3D Human Activity Analysis. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 25–28 July 2016.

- Santos, T.T.; Morimoto, C.H. People Detection under Occlusion in Multiple Camera Views. In Proceedings of the XXI Brazilian Symposium on Computer Graphics and Image Processing, Campo Grande, 12–15 October 2008; pp. 53–60.

- Kim, K.; Davis, L.S. Multi-camera Tracking and Segmentation of Occluded People on Ground Plane Using Search-guided Particle Filtering. In Proceedings of the 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 98–109.

- Santos, T.T.; Morimoto, C.H. Multiple camera people detection and tracking using support integration. Pattern Recognit. Lett. 2011, 32, 47–55.

- Black, J.; Ellis, T.; Rosin, P. Multi view image surveillance and tracking. In Proceedings of the Workshop on Motion and Video Computing, Orlando, FL, USA, 9 December 2002; pp. 169–174.

- Felzenszwalb, P.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part-Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Dollar, P.; Appel, R.; Belongie, S.; Perona, P. Fast Feature Pyramids for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Orlando, FL, USA, 21–26 July 2017; pp. 6517–6525.

- Garcia-Martin, A.; SanMiguel, J.C. Adaptive people detection based on cross-correlation maximization. In Proceedings of the International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 3385–3389.

- Kilambi, P.; Ribnick, E.; Joshi, A.J.; Masoud, O.; Papanikolopoulos, N. Estimating Pedestrian Counts in Groups. Comput. Vis. Image Understand. 2008, 110, 43–59.

- Milan, A.; Roth, S.; Schindler, K. Continuous Energy Minimization for Multitarget Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 58–72.

- Tsai, R.Y. An efficient and accurate camera calibration technique for 3D machine vision. In Proceedings of the Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 22–26 June 1986; pp. 364–374.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. Available online: http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html (accessed on 5 November 2018).

| Sequence | # of Cameras | # of Frames | # of Pedestrians per Frame |
|---|---|---|---|
| PETS S2-L1 | 5 | 795 | 1.8 |
| PETS S3MF1 | 5 | 107 | 1.9 |
| EPFL-RLC | 3 | 2000 | 6.1 |

| Sequence | Cam1 P | Cam1 R | Cam1 F | Cam2 P | Cam2 R | Cam2 F | Cam3 P | Cam3 R | Cam3 F | Cam4 P | Cam4 R | Cam4 F | Cam5 P | Cam5 R | Cam5 F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.78 | 0.74 | 0.74 | 0.63 | 0.56 | 0.58 | 0.56 | 0.55 | 0.54 | 0.56 | 0.55 | 0.53 | 0.56 | 0.53 | 0.53 |
| PETS S3MF1 | 0.68 | 0.67 | 0.66 | 0.60 | 0.53 | 0.55 | 0.50 | 0.50 | 0.49 | 0.51 | 0.47 | 0.48 | 0.48 | 0.44 | 0.46 |
| EPFL-RLC | 0.91 | 0.66 | 0.73 | 0.80 | 0.56 | 0.63 | 0.71 | 0.45 | 0.52 | | | | | | |

| Sequence | Cam1 OFT | Cam1 Ours | Cam1 Δ (%) | Cam2 OFT | Cam2 Ours | Cam2 Δ (%) | Cam3 OFT | Cam3 Ours | Cam3 Δ (%) | Cam4 OFT | Cam4 Ours | Cam4 Δ (%) | Cam5 OFT | Cam5 Ours | Cam5 Δ (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.69 | 0.74 | 7.6 | 0.47 | 0.58 | 23.6 | 0.43 | 0.54 | 25.2 | 0.43 | 0.53 | 25.6 | 0.43 | 0.53 | 24.2 |
| PETS S3MF1 | 0.65 | 0.66 | 2.6 | 0.45 | 0.55 | 23.9 | 0.39 | 0.49 | 26.2 | 0.38 | 0.48 | 26.5 | 0.36 | 0.46 | 28.1 |
| EPFL-RLC | 0.65 | 0.73 | 12.9 | 0.53 | 0.63 | 20.3 | 0.38 | 0.52 | 36.8 | | | | | | |

DPM [11]

| Sequence | OFT P | OFT R | OFT F | Ours P | Ours R | Ours F | Δ (%) P | Δ (%) R | Δ (%) F |
|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.41 | 0.33 | 0.35 | 0.54 | 0.41 | 0.45 | 31.6 | 25.9 | 29.0 |
| PETS S3MF1 | 0.47 | 0.34 | 0.38 | 0.60 | 0.46 | 0.50 | 25.8 | 35.9 | 31.8 |
| EPFL-RLC | 0.70 | 0.22 | 0.32 | 0.80 | 0.44 | 0.49 | 13.7 | 98.9 | 54.6 |

ACF [12]

| Sequence | OFT P | OFT R | OFT F | Ours P | Ours R | Ours F | Δ (%) P | Δ (%) R | Δ (%) F |
|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.76 | 0.66 | 0.69 | 0.81 | 0.71 | 0.74 | 6.6 | 6.3 | 6.5 |
| PETS S3MF1 | 0.61 | 0.43 | 0.49 | 0.68 | 0.58 | 0.61 | 12.6 | 33.9 | 25.6 |
| EPFL-RLC | 0.80 | 0.36 | 0.45 | 0.85 | 0.46 | 0.54 | 6.8 | 27.5 | 20.4 |

Faster R-CNN [13]

| Sequence | OFT P | OFT R | OFT F | Ours P | Ours R | Ours F | Δ (%) P | Δ (%) R | Δ (%) F |
|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.70 | 0.66 | 0.69 | 0.78 | 0.74 | 0.74 | 11.3 | 11.5 | 7.6 |
| PETS S3MF1 | 0.65 | 0.63 | 0.65 | 0.68 | 0.67 | 0.66 | 4.1 | 5.6 | 2.6 |
| EPFL-RLC | 0.83 | 0.55 | 0.65 | 0.91 | 0.66 | 0.73 | 9.5 | 20.2 | 12.9 |

YOLO9000 [14]

| Sequence | OFT P | OFT R | OFT F | Ours P | Ours R | Ours F | Δ (%) P | Δ (%) R | Δ (%) F |
|---|---|---|---|---|---|---|---|---|---|
| PETS S2-L1 | 0.74 | 0.65 | 0.68 | 0.81 | 0.71 | 0.74 | 9.2 | 10.5 | 9.9 |
| PETS S3MF1 | 0.64 | 0.58 | 0.60 | 0.69 | 0.63 | 0.65 | 7.8 | 9.0 | 8.7 |
| EPFL-RLC | 0.81 | 0.59 | 0.67 | 0.92 | 0.60 | 0.74 | 13.4 | 1.9 | 11.2 |
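The third group of values in each OFT/Ours table appears to be the relative change of the proposal with respect to OFT, in percent. The extracted tables lost the corresponding header, so this reading is an assumption; the short check below recomputes it from the rounded table entries. The helper name `relative_gain` is hypothetical, and small differences from the reported figures are expected because the table entries are rounded to two decimals.

```python
def relative_gain(oft, ours):
    """Percentage change of `ours` relative to `oft`."""
    return 100.0 * (ours - oft) / oft


# (OFT, Ours, reported value) taken from the PETS S2-L1 rows above.
samples = {
    "DPM precision": (0.41, 0.54, 31.6),
    "Faster R-CNN F-score": (0.69, 0.74, 7.6),
    "YOLO9000 precision": (0.74, 0.81, 9.2),
}

# Absolute difference between the recomputed and the reported percentage.
deviations = {
    name: abs(relative_gain(oft, ours) - reported)
    for name, (oft, ours, reported) in samples.items()
}
```

For these three samples the recomputed values stay within one percentage point of the reported ones, which is consistent with the assumed definition.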

| Sequence | # of Pedestrians per Frame | Detection Transfer Stage (ms) | Correlation Stage (ms) | Total (ms) |
|---|---|---|---|---|
| PETS S2-L1 | 1.8 | 387.2 | 4.3 | 391.5 |
| PETS S3MF1 | 1.9 | 294.8 | 5.8 | 300.6 |
| EPFL-RLC | 6.1 | 554.1 | 10.1 | 564.2 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Martín-Nieto, R.; García-Martín, Á.; Martínez, J.M.; SanMiguel, J.C. Enhancing Multi-Camera People Detection by Online Automatic Parametrization Using Detection Transfer and Self-Correlation Maximization †. Sensors 2018, 18, 4385. https://doi.org/10.3390/s18124385
