Reliable and Accurate Wheel Size Measurement under Highly Reflective Conditions

Abstract

1. Introduction

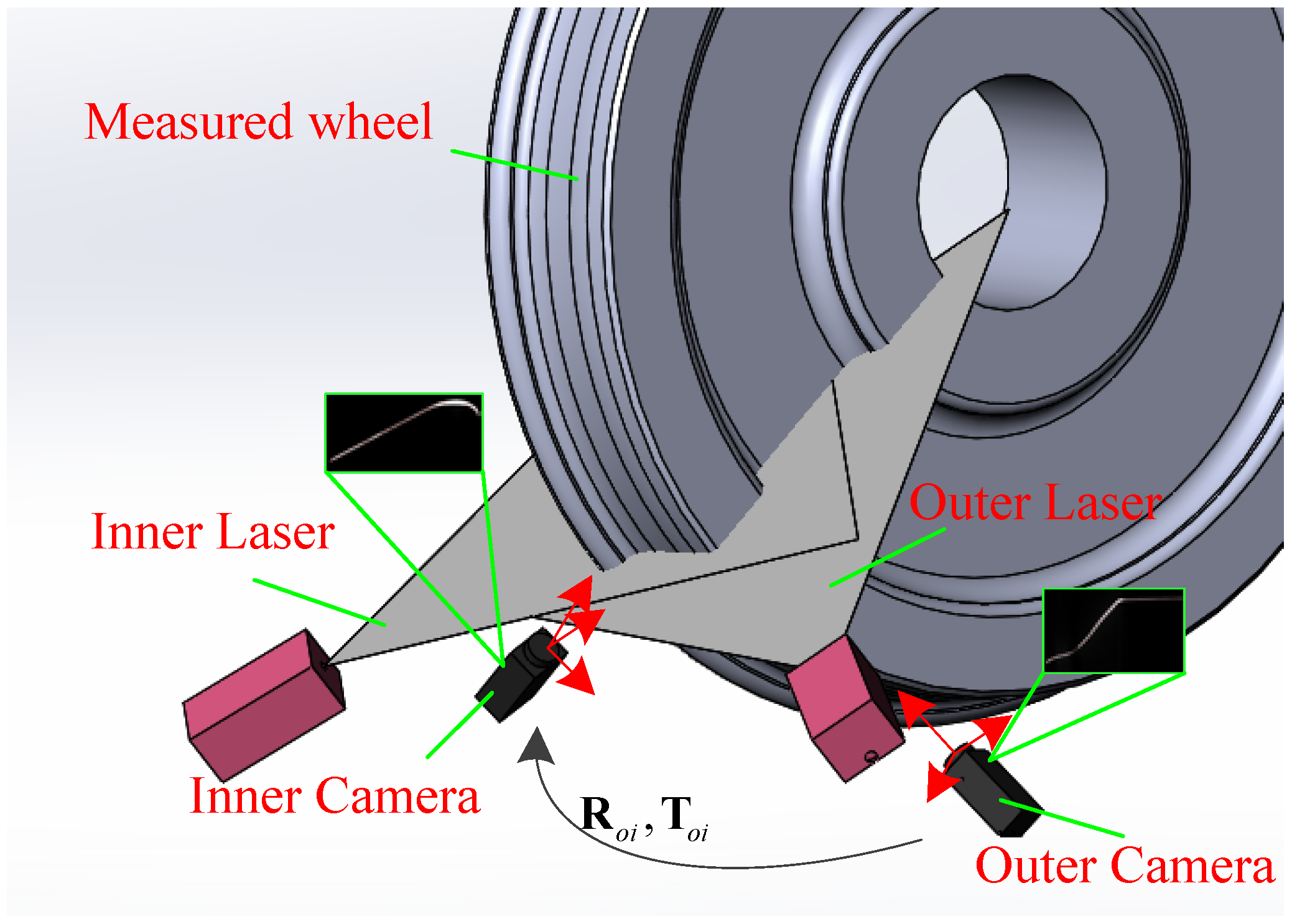

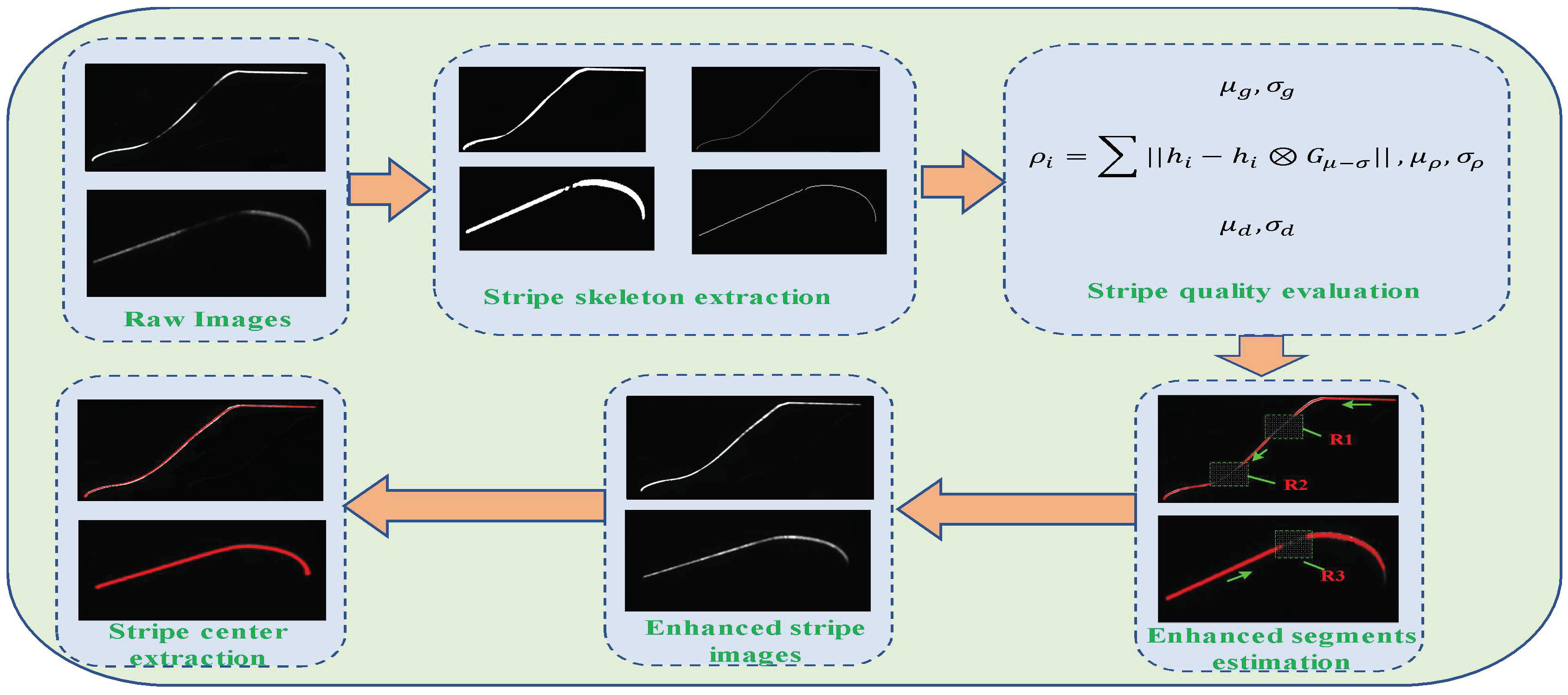

2. System Overview

- Step 1.

- Stripe images are synchronously captured from sensors installed around the measured wheel.

- Step 2.

- Image dilation and low threshold segmentation are adopted to obtain the stripe area in which valid stripes are located and the central skeleton points are extracted.

- Step 3.

- Stripe image quality evaluation criteria are established in accordance with the center extraction principle.

- Step 4.

- Based on the stripe quality criteria, the stripe segments with low brightness are obtained along the stripe skeleton trajectory.

- Step 5.

- MSR brightness enhancement is implemented to the low-quality stripe segments generated in step 4. Moreover, the valid enhanced stripe is segmented accurately based on the reflectivity.

- Step 6.

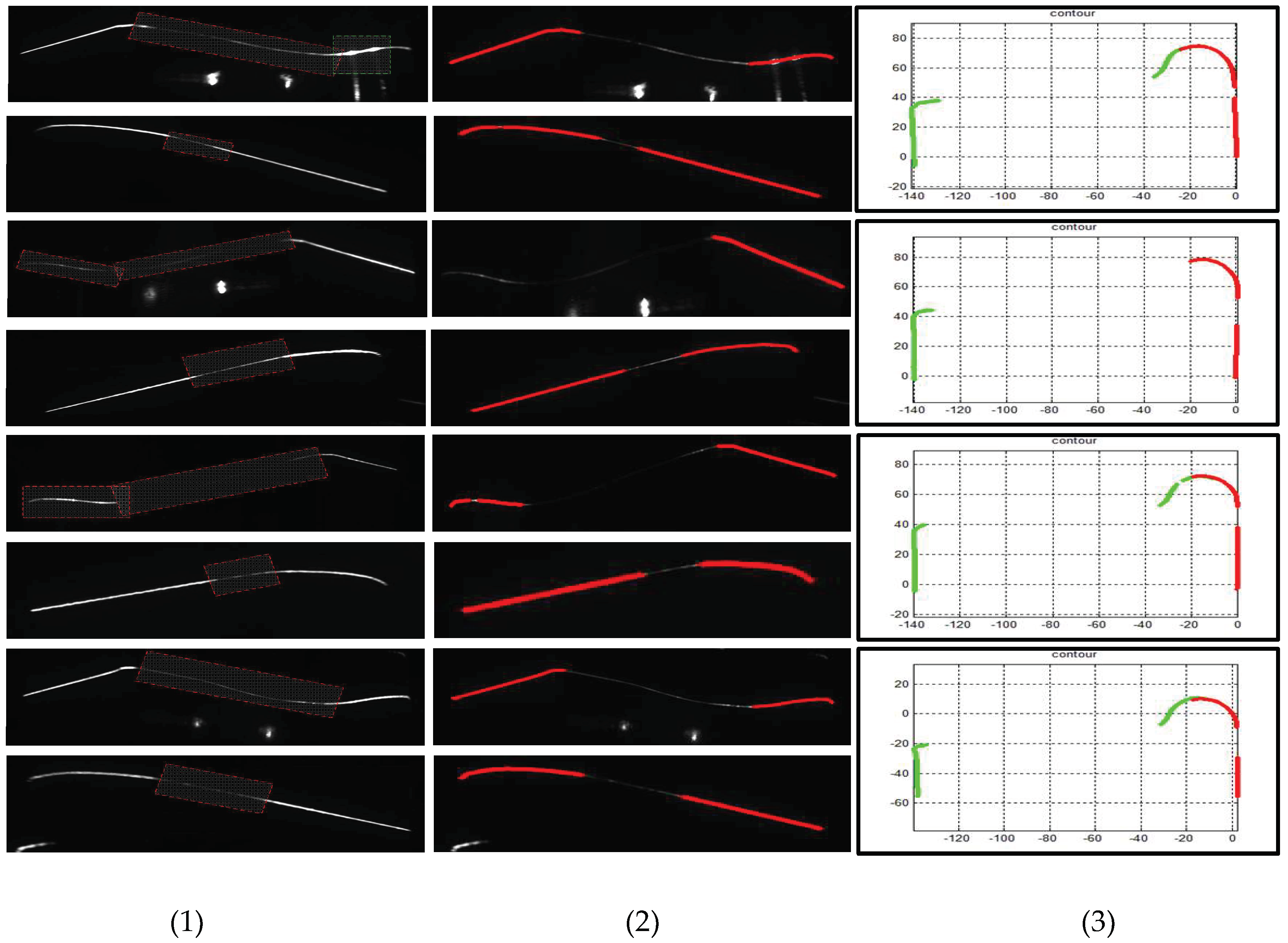

- The center points of the enhanced stripe are extracted and the wheel cross profile is reconstructed. Finally, wheel size parameters are calculated.
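As an illustration of Step 4, the sketch below scans brightness samples taken along the skeleton trajectory and returns the contiguous runs that fall below a brightness threshold. It is only a minimal sketch: the function name `low_brightness_segments`, the `threshold` and `min_length` parameters, and the sample profile are hypothetical and are not taken from the paper, whose actual quality criteria are defined in Section 3.2.1.

```python
from typing import List, Tuple


def low_brightness_segments(
    brightness: List[float],
    threshold: float,
    min_length: int = 1,
) -> List[Tuple[int, int]]:
    """Return (start, end) index pairs (end exclusive) of runs below threshold.

    `brightness` holds one sample per skeleton point, ordered along the stripe.
    `threshold` and `min_length` are illustrative parameters (assumptions).
    """
    segments: List[Tuple[int, int]] = []
    start = None  # index where the current low-brightness run began
    for i, value in enumerate(brightness):
        if value < threshold:
            if start is None:
                start = i
        elif start is not None:
            # The run ended at i; keep it only if it is long enough.
            if i - start >= min_length:
                segments.append((start, i))
            start = None
    # Close a run that reaches the end of the skeleton.
    if start is not None and len(brightness) - start >= min_length:
        segments.append((start, len(brightness)))
    return segments


# Hypothetical brightness profile sampled along a stripe skeleton.
profile = [200, 190, 40, 35, 30, 180, 60, 210, 20, 25]
print(low_brightness_segments(profile, threshold=80, min_length=2))
# prints [(2, 5), (8, 10)]
```

The single dark sample at index 6 is discarded because it is shorter than `min_length`, which is one simple way to avoid enhancing isolated noisy points.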

3. Algorithm Implementation

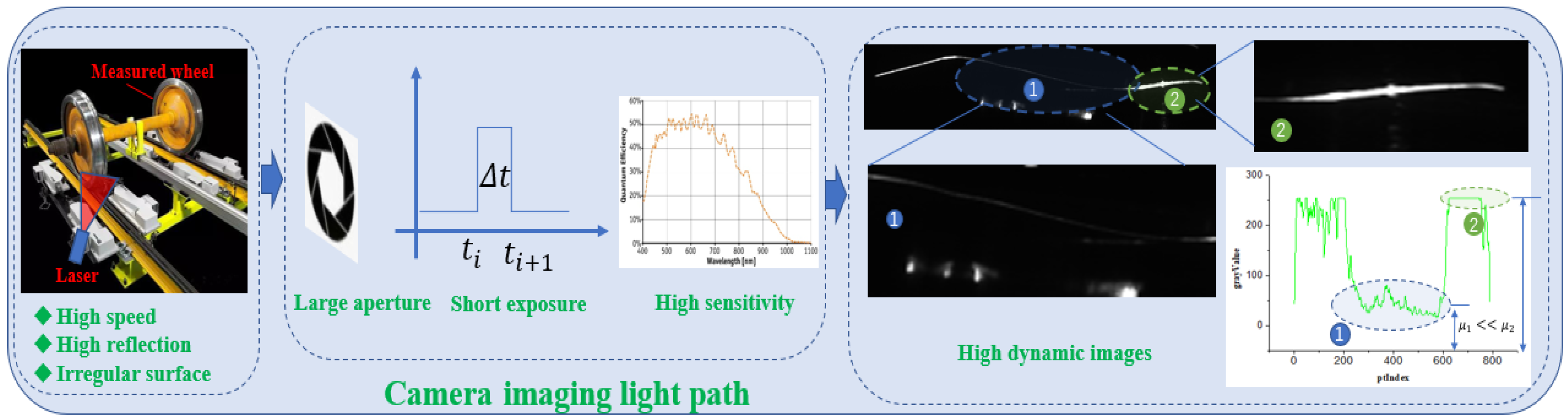

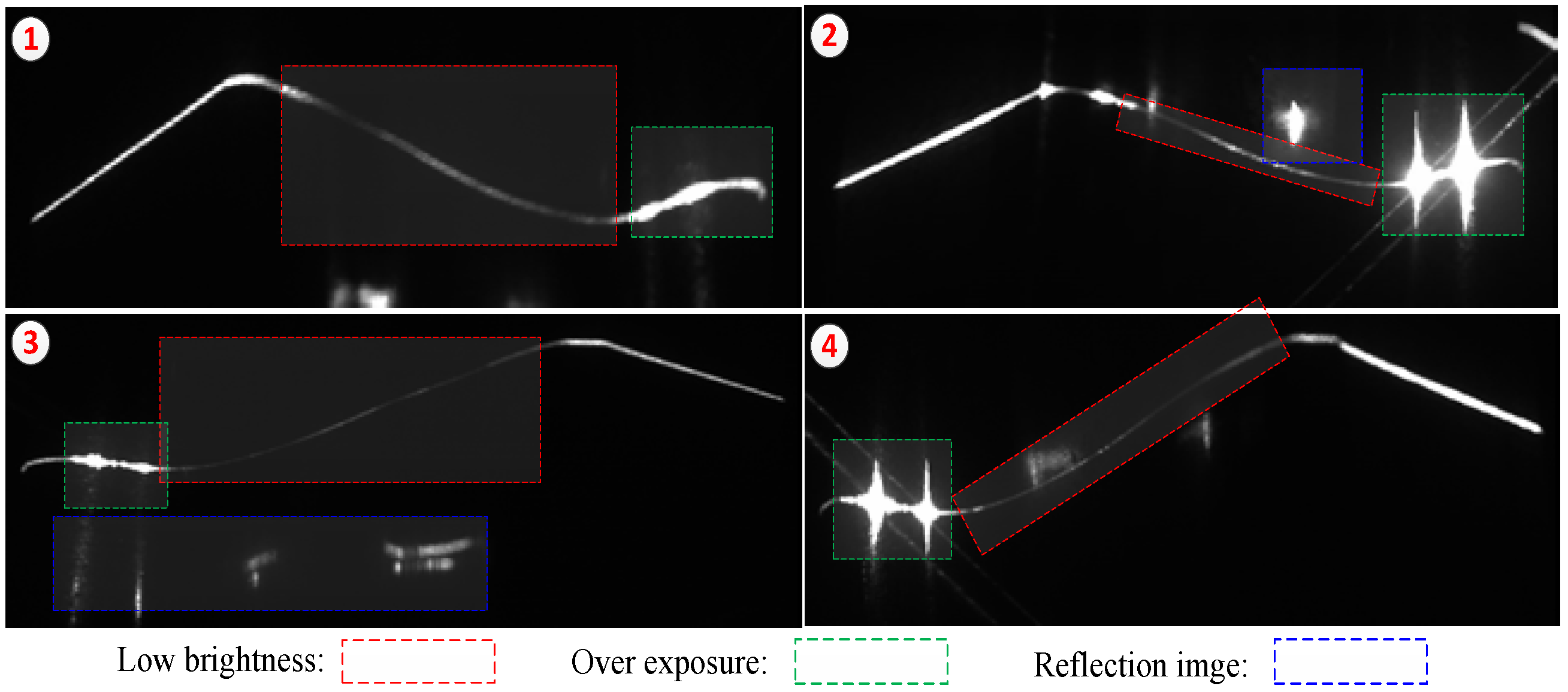

3.1. Stripe Imaging Model and Analysis

3.1.1. Stripe Imaging Description

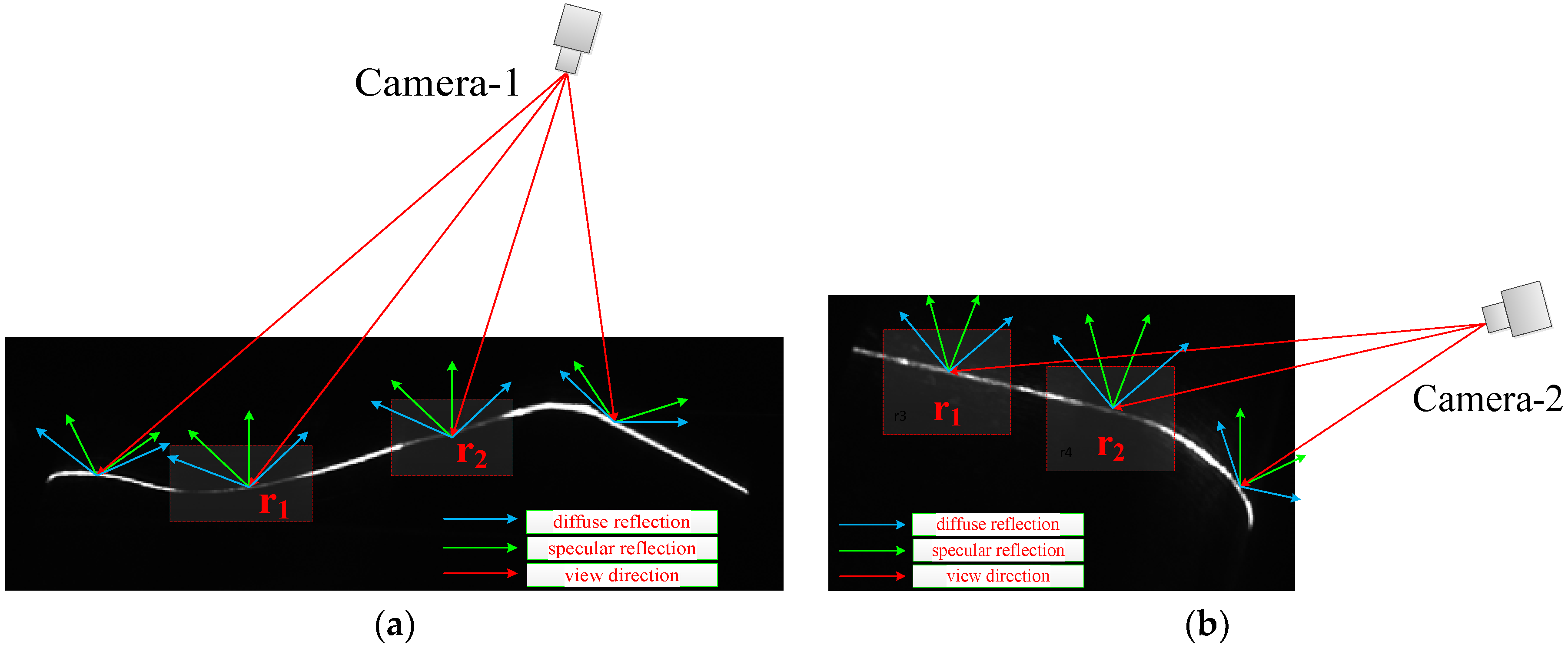

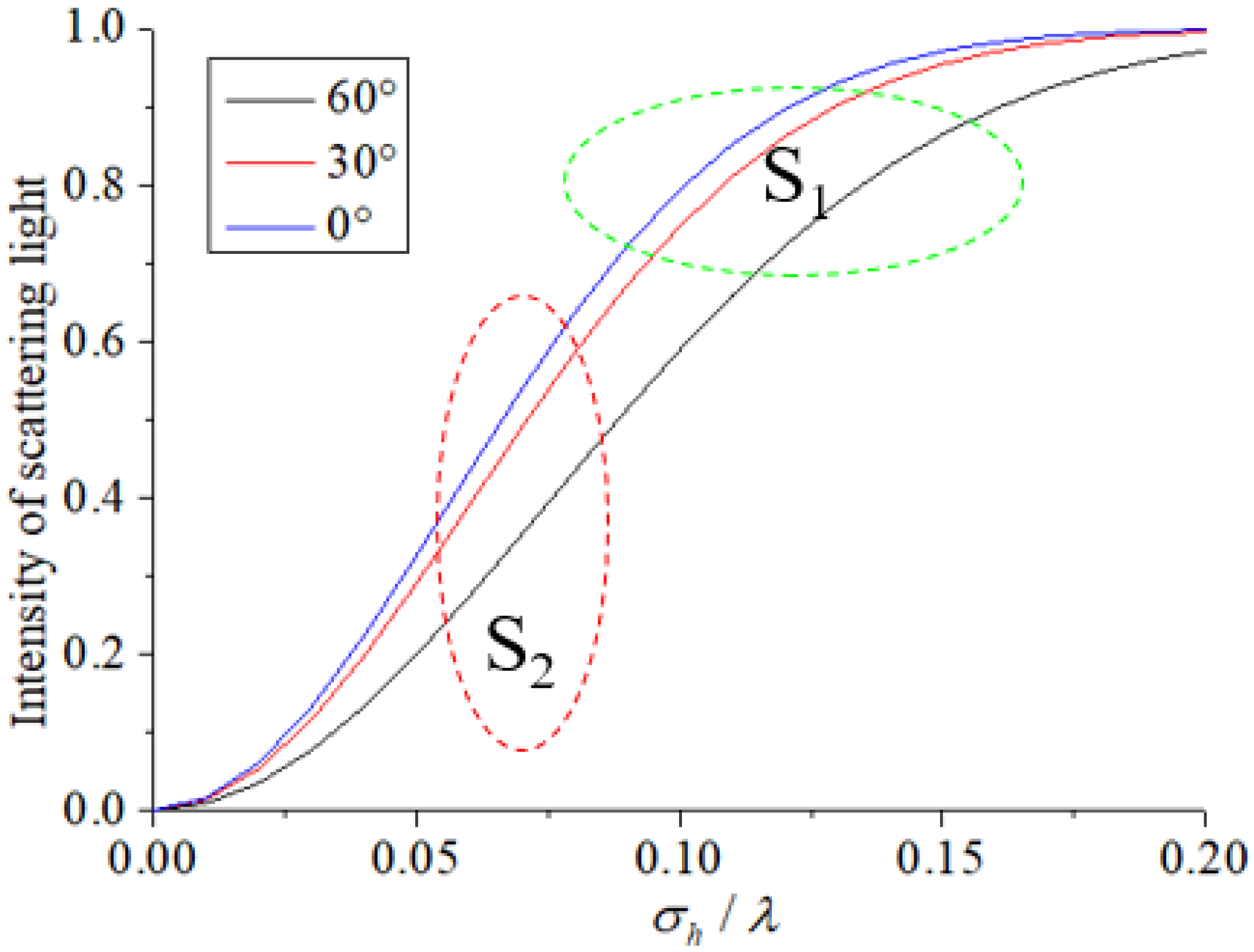

3.1.2. Stripe Brightness Analysis

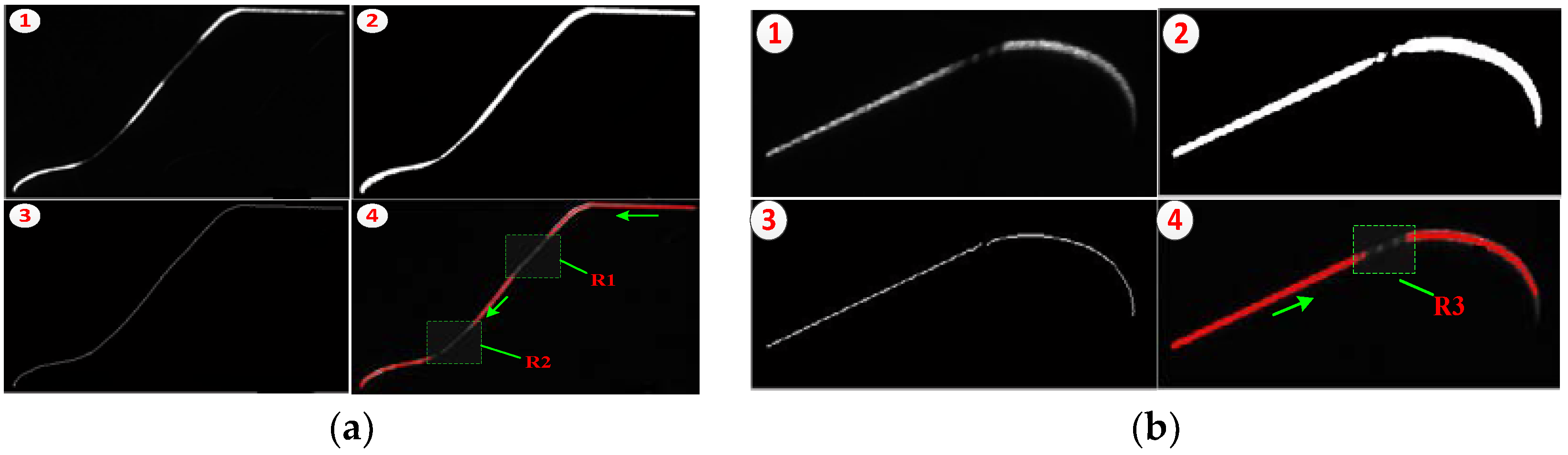

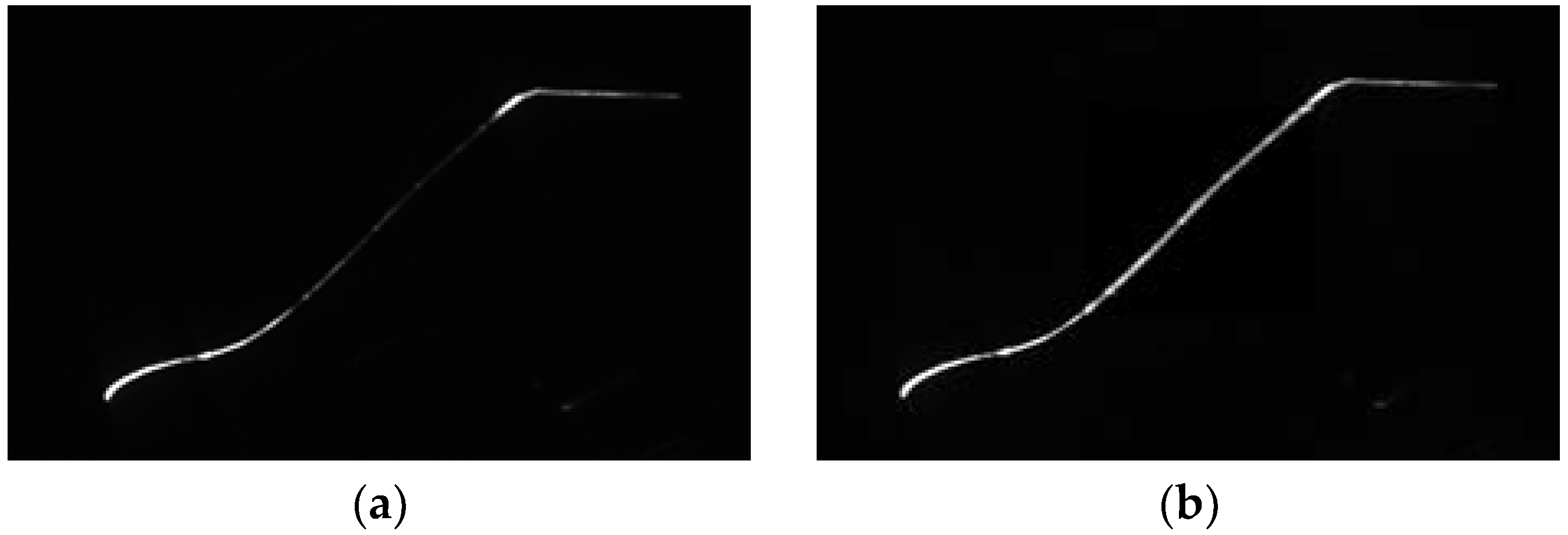

3.2. High-Dynamic Stripe Image Processing

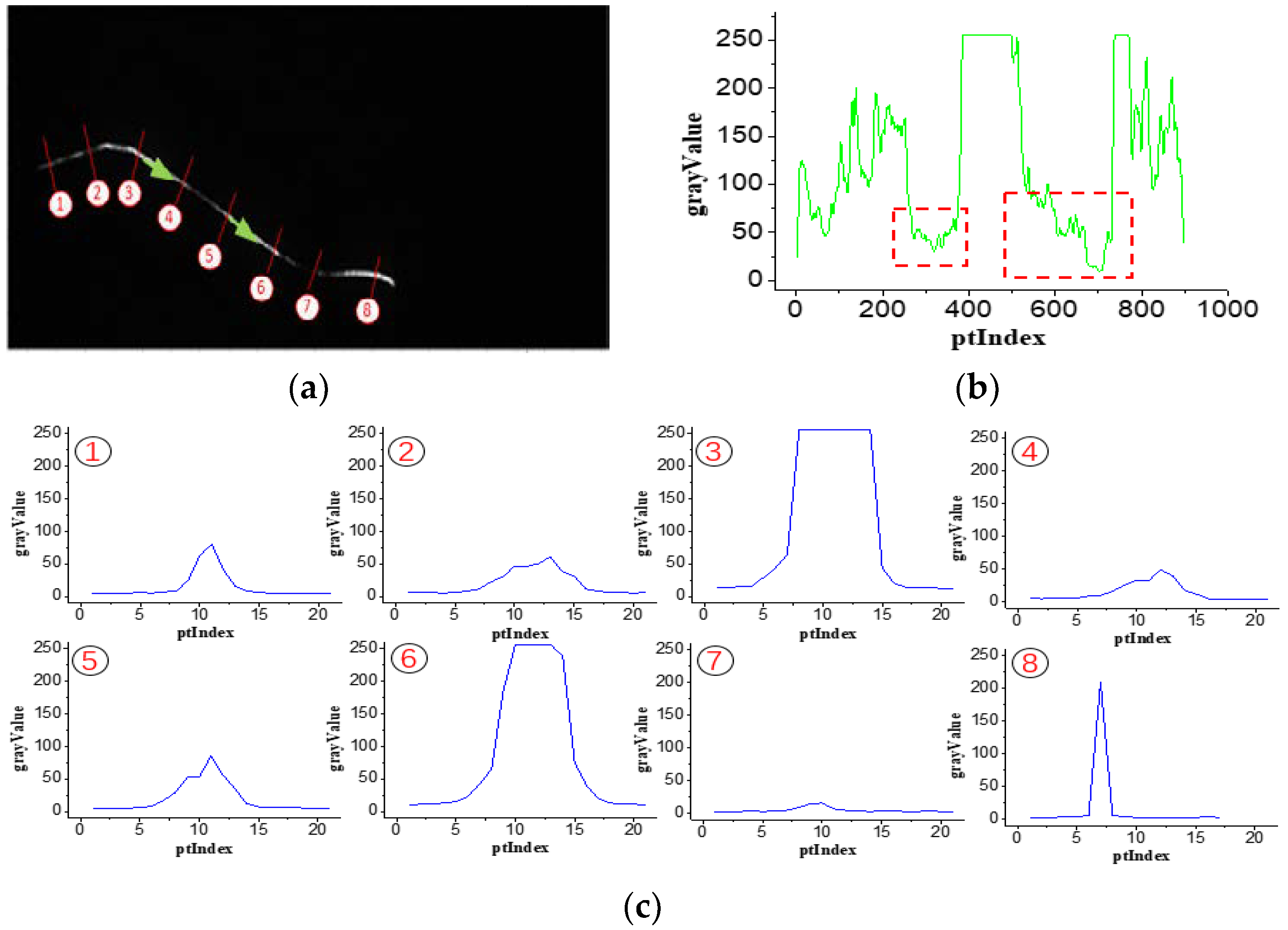

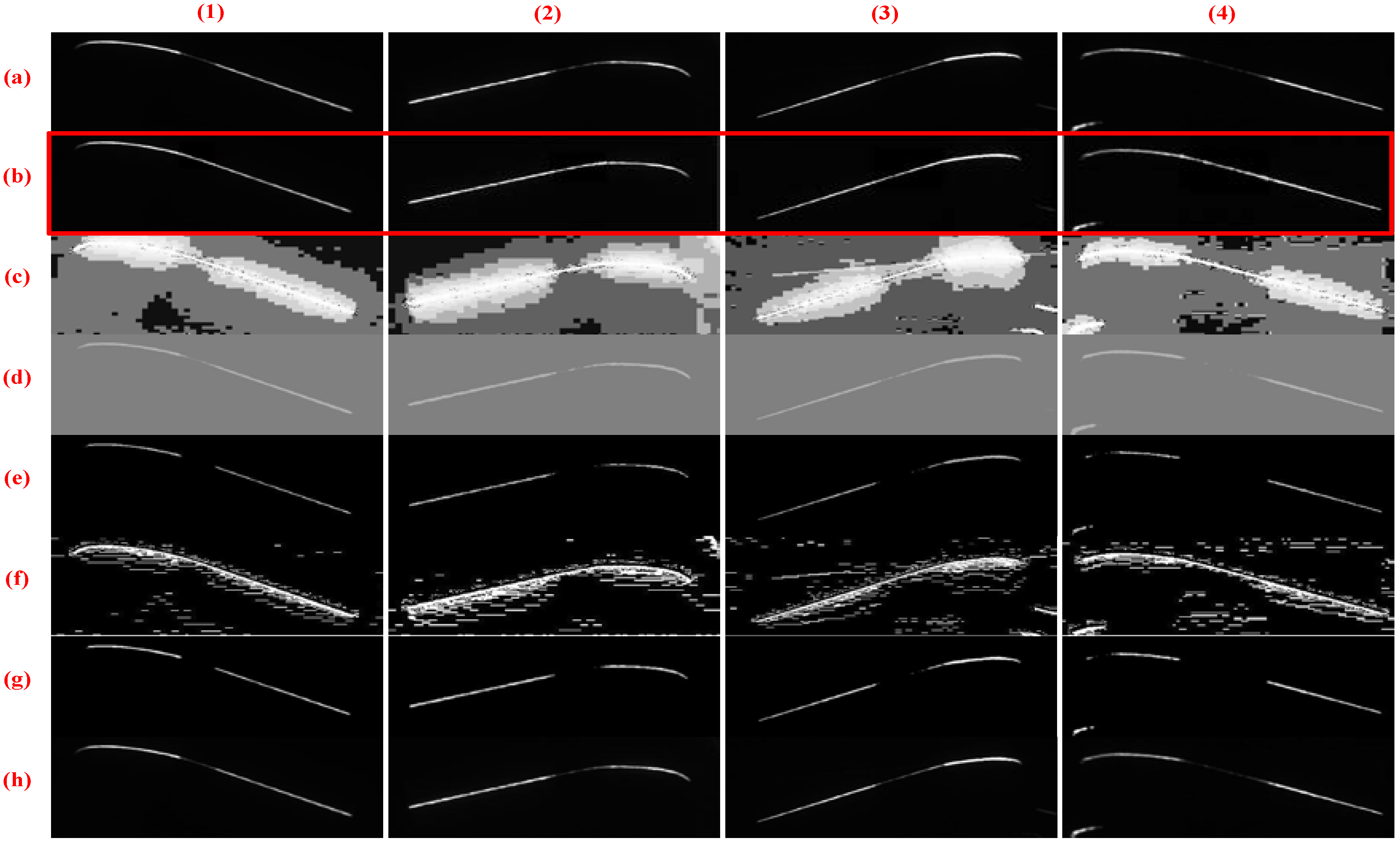

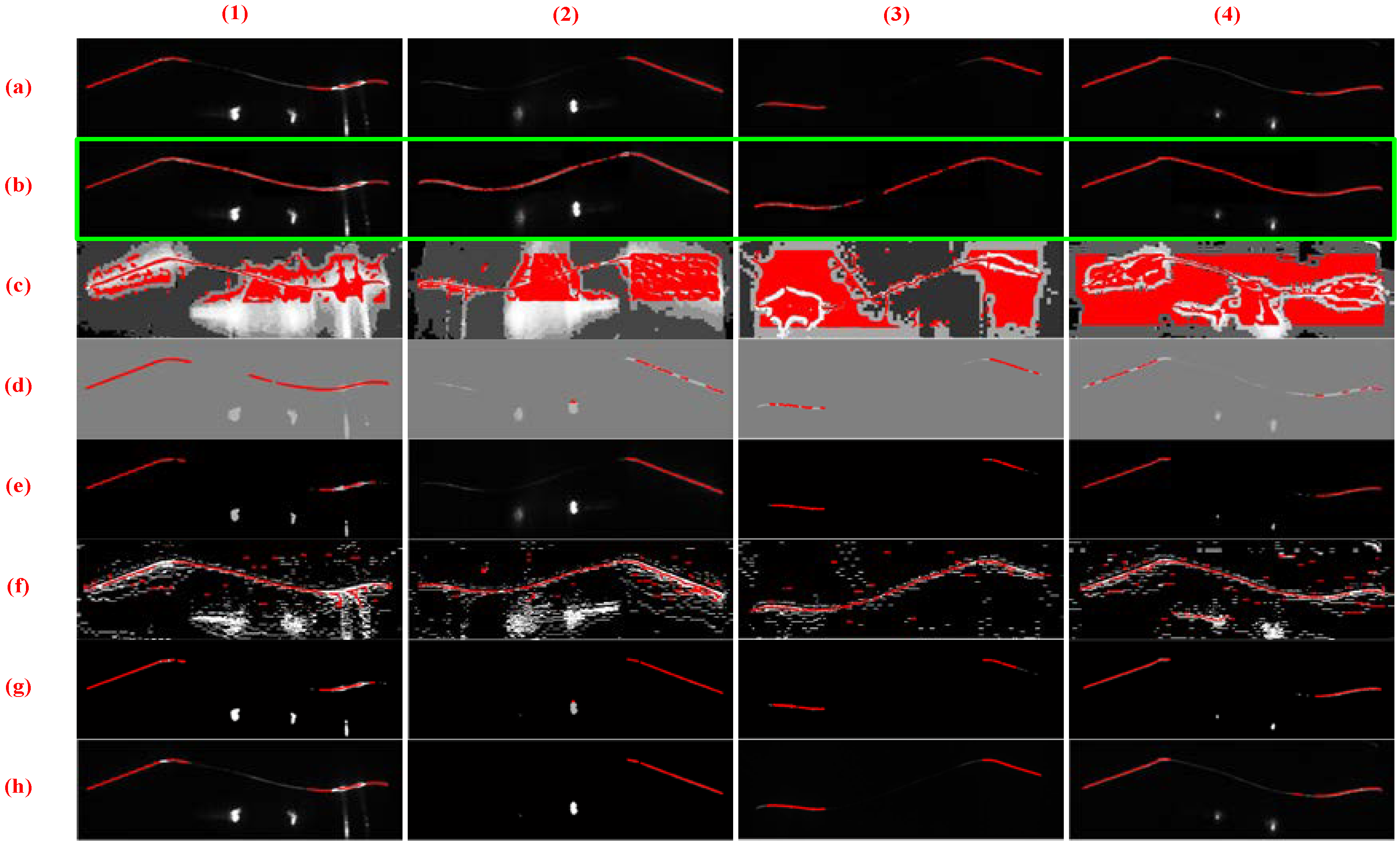

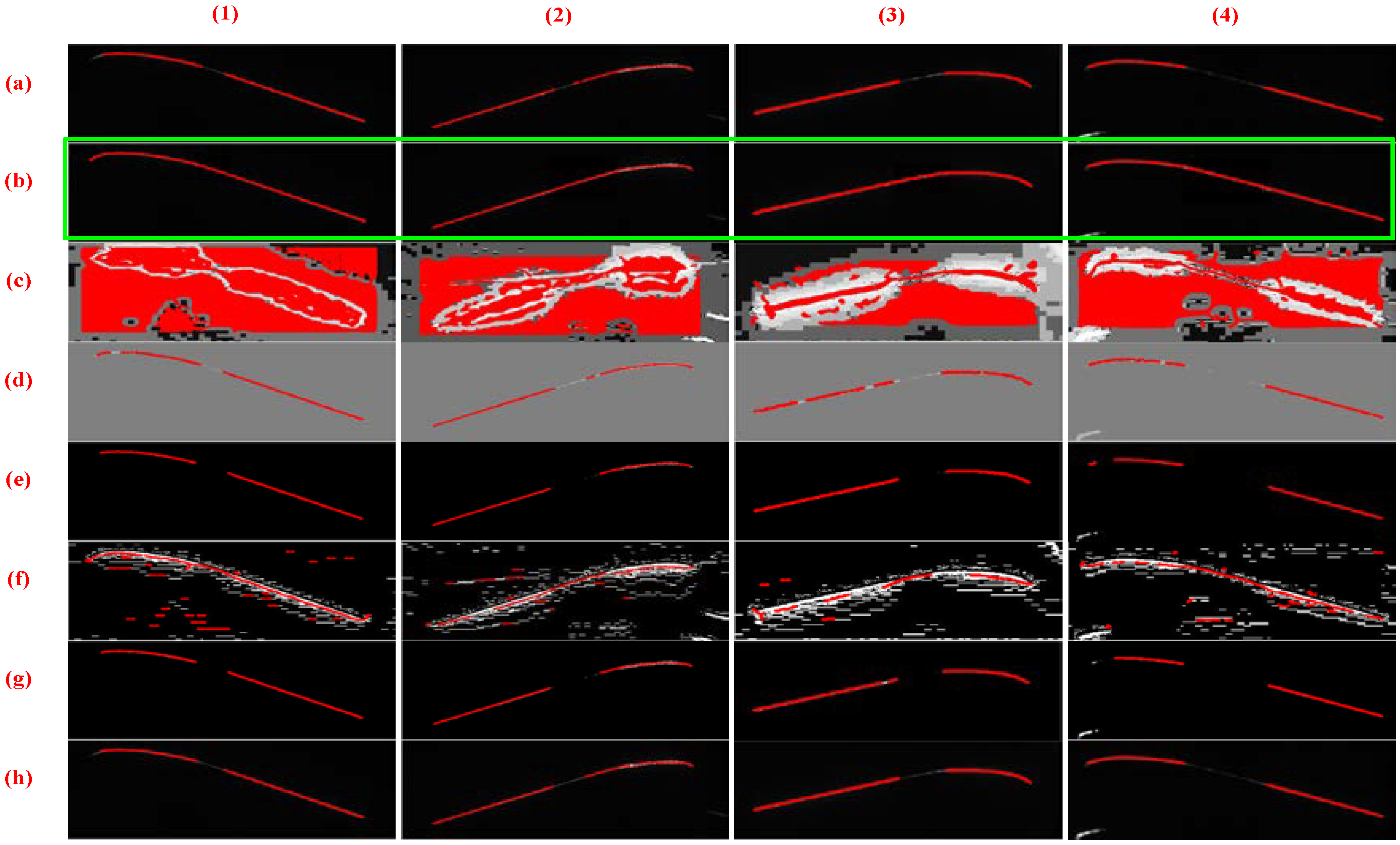

3.2.1. Quality Evaluation of Stripe Image

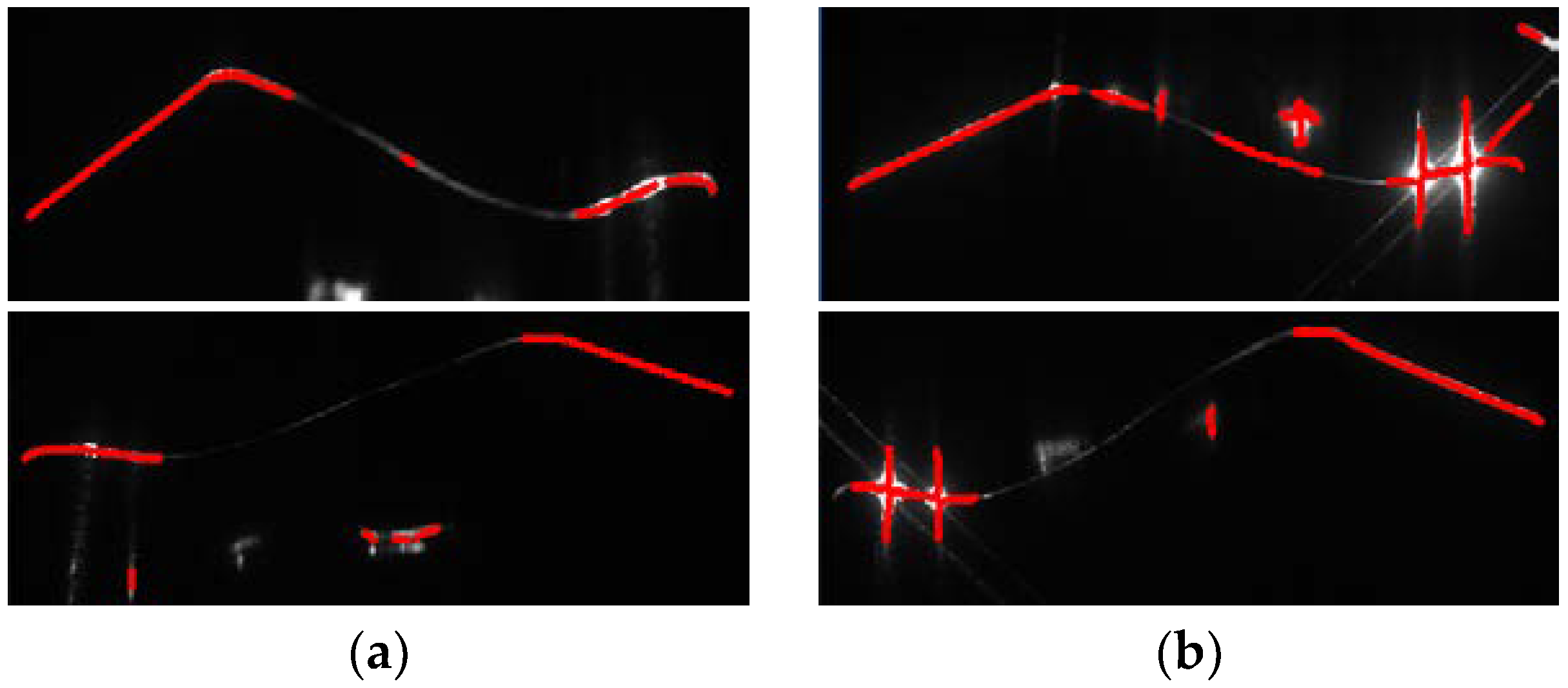

3.2.2. Enhanced Stripe Segments Location

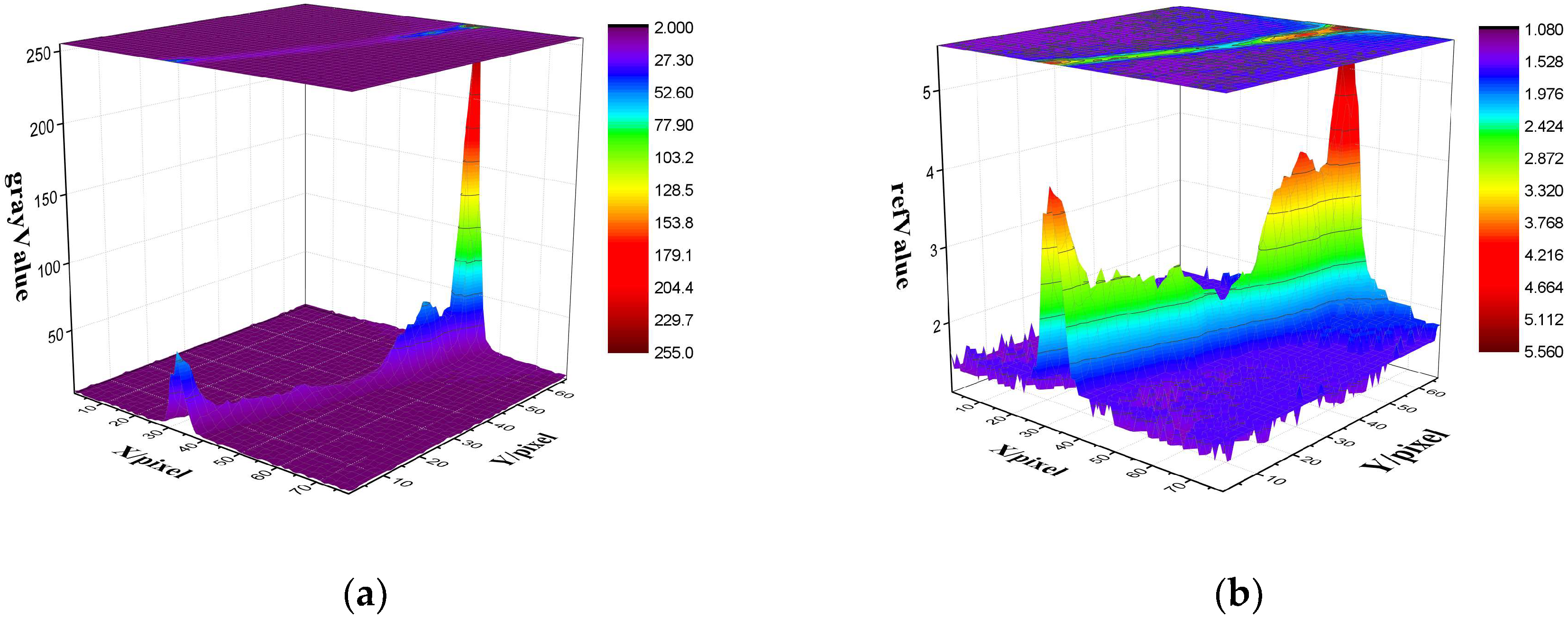

3.2.3. Image Brightness Enhancement

| Algorithm 1. High Dynamic Stripe Image Enhancement |
|---|
| Input: Low brightness stripe image I. |
| Output: High quality stripe image Ie. |
| 1: Function stripeImgHandler |
| 2: Compute surface reflectivity f; |
| 3: Normalize f to gray value and get enhanced image It; |
| 4: Calculate the initial value of segmentation threshold T; |
| 5: T = 0.5 × (min(f) + max(f)) |
| 6: Set segment flag = false; |
| 7: While(!flag) do |
| 8: Find the image index, g = find(f > T); |
| 9: Calculate stripe area segmentation threshold, |
| 10: Tn = 0.5 × (mean(f(g)) + mean(f(~ g))) |
| 11: flag = abs(T − Tn) < 0.1; |
| 12: T = Tn |
| 13: End while |
| 14: Segment It with T to obtain Ie; |
| 15: return Ie; |
| 16: end function. |
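The threshold iteration in lines 4 to 13 of Algorithm 1 can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the reflectivity values are passed as a flat sequence `f`, the initial threshold is taken as the midpoint of the minimum and maximum of `f`, and the `max_iter` guard and the helper name `segment` are additions for illustration only.

```python
from typing import List, Sequence


def iterative_threshold(
    f: Sequence[float], tol: float = 0.1, max_iter: int = 100
) -> float:
    """Iteratively select a two-class threshold over reflectivity values f.

    tol mirrors the 0.1 stopping criterion of Algorithm 1; max_iter is an
    extra safety guard (assumption, not part of the algorithm).
    """
    t = 0.5 * (min(f) + max(f))  # initial threshold (assumed midpoint of f)
    for _ in range(max_iter):
        above = [v for v in f if v > t]    # candidate stripe pixels
        below = [v for v in f if v <= t]   # candidate background pixels
        if not above or not below:
            break  # degenerate input, e.g. all values equal
        t_new = 0.5 * (sum(above) / len(above) + sum(below) / len(below))
        converged = abs(t - t_new) < tol
        t = t_new
        if converged:
            break
    return t


def segment(values: Sequence[float], t: float) -> List[float]:
    """Keep values above the threshold and set the rest to zero."""
    return [v if v > t else 0 for v in values]


# Hypothetical reflectivity samples: dark background and a bright stripe.
f = [10, 12, 11, 200, 210, 205]
t = iterative_threshold(f)
print(t)              # prints 108.0
print(segment(f, t))  # prints [0, 0, 0, 200, 210, 205]
```

For this sample the first pass moves the threshold from 110 to 108, and the second pass reproduces 108, so the loop stops after two iterations.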

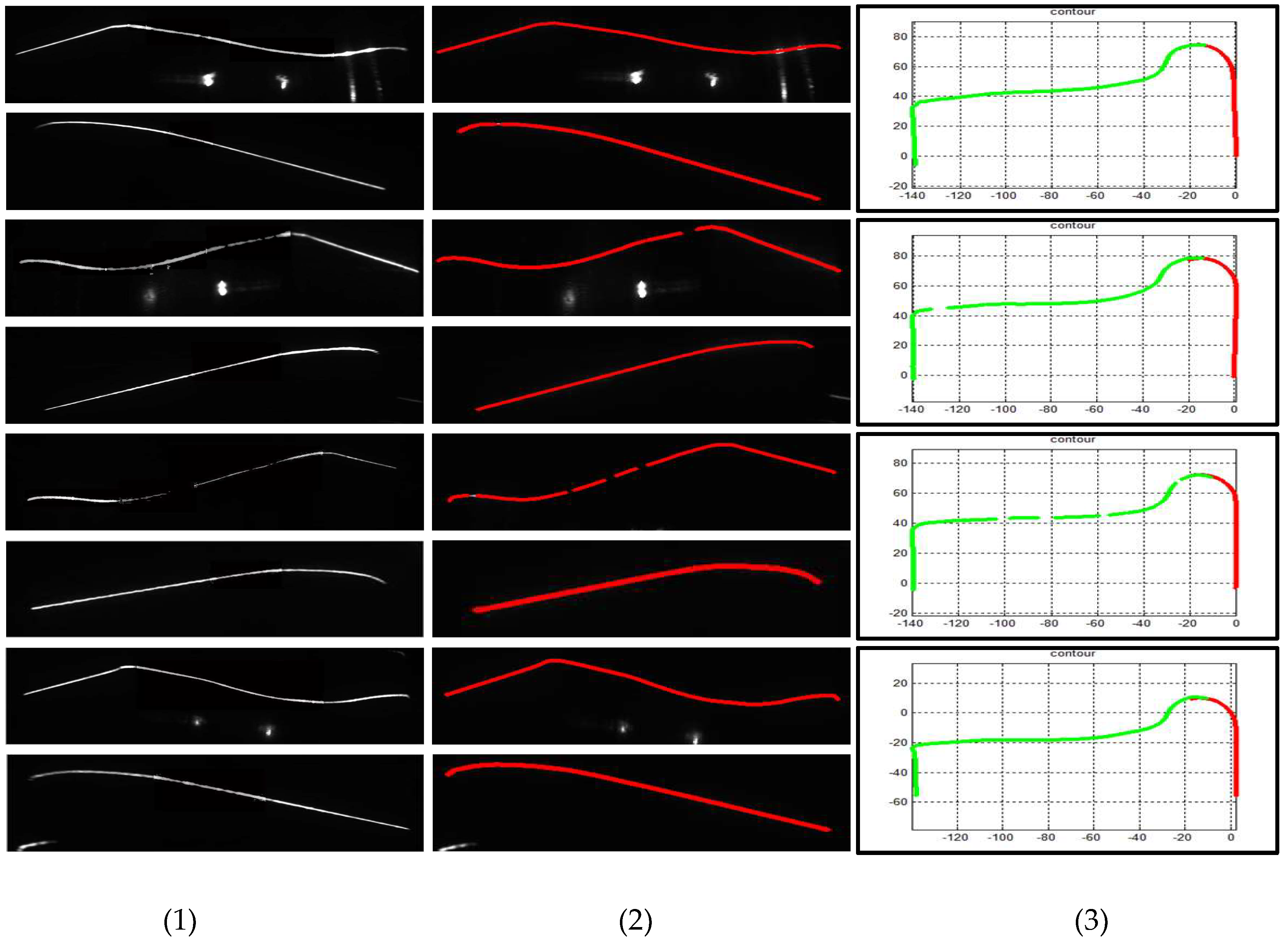

3.2.4. Stripe Segmentation

4. Experiments and Evaluations

4.1. Experimental Setup

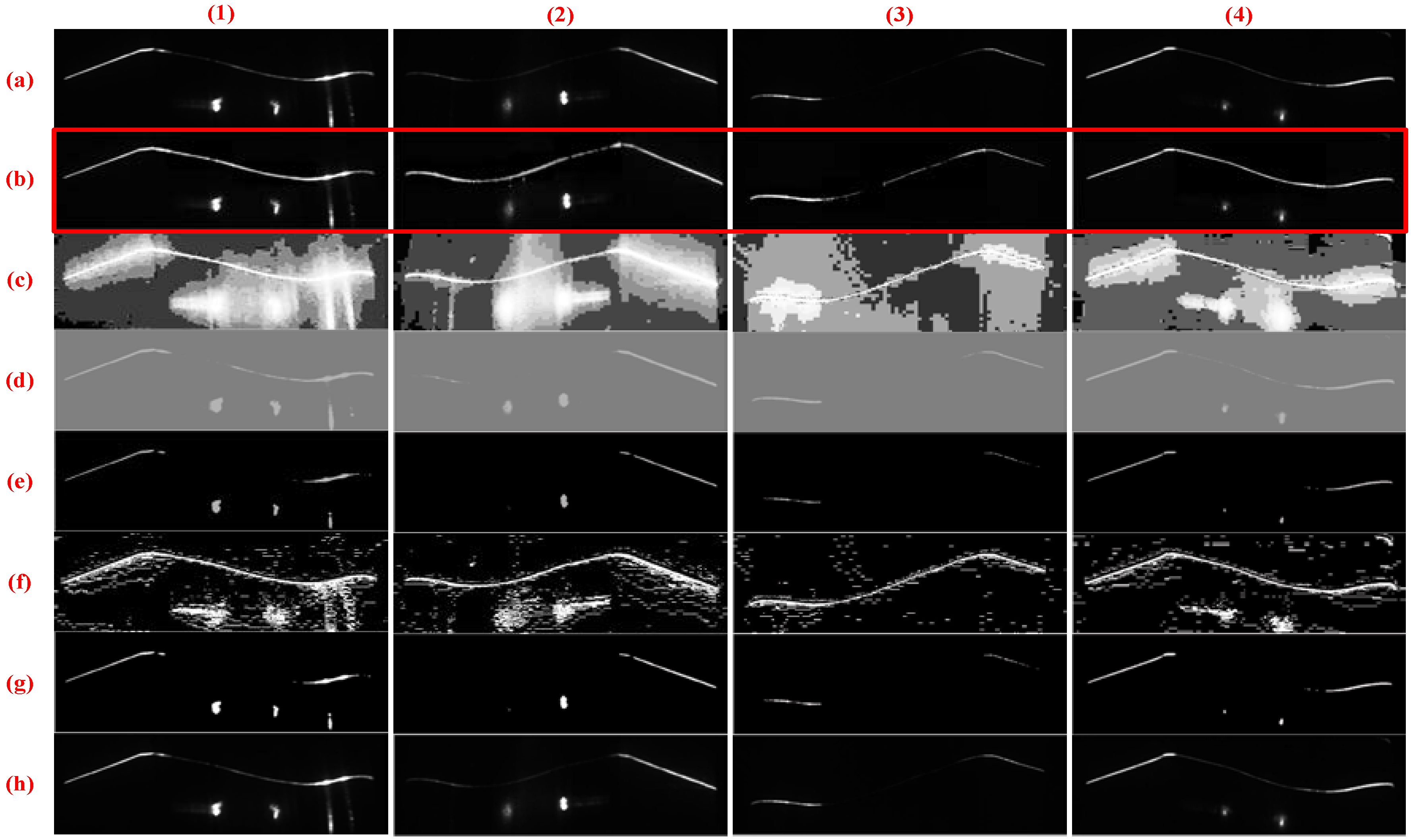

4.2. Comparison of Stripe Image Processing Results

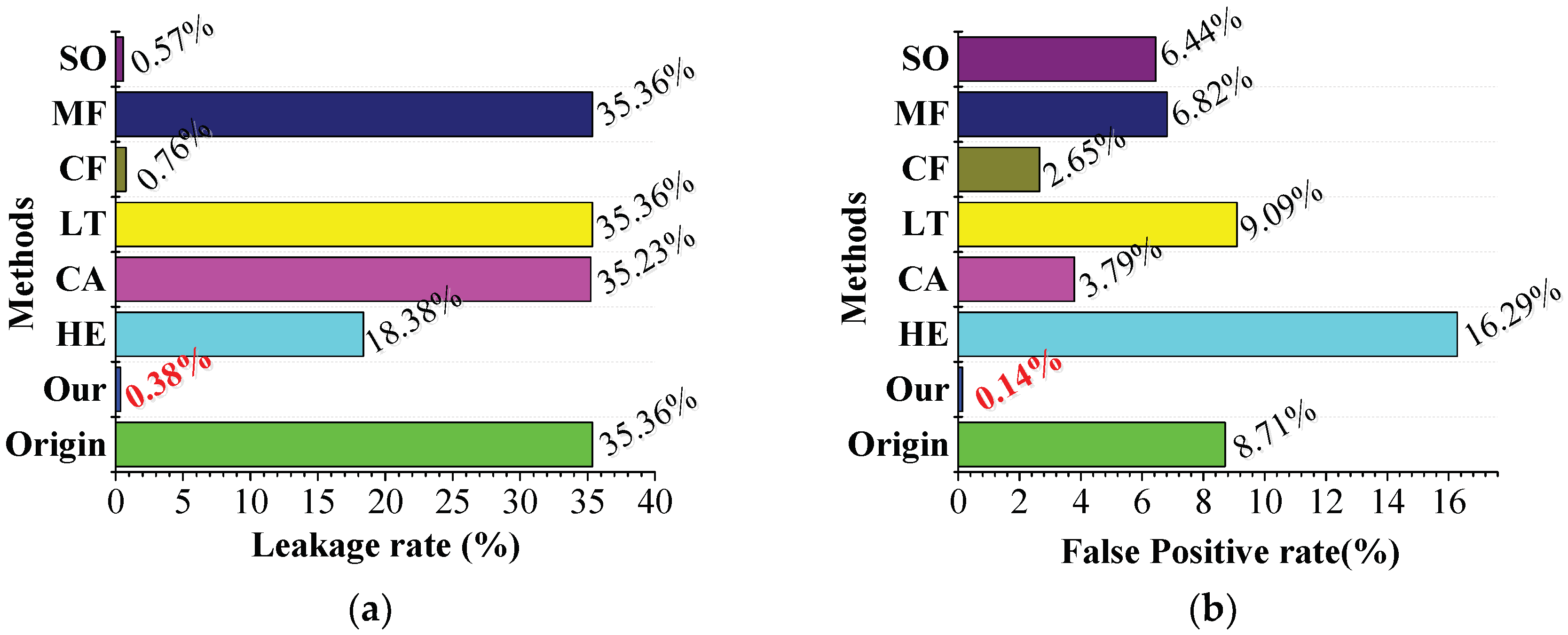

4.2.1. Comparison of Stripe Quality

4.2.2. Time Statistics

4.2.3. Comparison of Various Image Enhancement Methods

4.3. Performance Evaluation of Online Measurement

4.3.1. Measurement Accuracy

4.3.2. Detection Rate Statistics

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Wheel Sensors Parameters | | | | | | |
|---|---|---|---|---|---|---|
| | fx | fy | u0 | v0 | k1 | k2 |
| Camera 1 | 1978.7510 | 1978.6804 | 680.5250 | 537.8380 | −0.1383 | 0.1901 |
| Camera 2 | 1971.5685 | 1972.6837 | 697.6733 | 536.6688 | −0.1328 | 0.1476 |
| | a | b | c | d | | |
| PlaneFunc1 | 0.3190 | 0.8092 | −0.4934 | 204.2166 | | |
| PlaneFunc2 | 0.1931 | −0.8568 | 0.4782 | −196.3384 | | |
| T12 | R = , t = | | | | | |
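To show how calibration parameters of this kind are typically used, the sketch below back-projects a pixel through a pinhole camera and intersects the viewing ray with a light plane. It rests on several assumptions that the table itself does not state: the plane is taken to have the form ax + by + cz + d = 0 in the camera coordinate frame, the lens distortion terms k1 and k2 are ignored, and the function name `pixel_to_point` is hypothetical. The numeric values are those listed for Camera 1 and PlaneFunc1.

```python
from typing import Tuple


def pixel_to_point(
    u: float,
    v: float,
    fx: float,
    fy: float,
    u0: float,
    v0: float,
    plane: Tuple[float, float, float, float],
) -> Tuple[float, float, float]:
    """Intersect the viewing ray of pixel (u, v) with a light plane.

    Assumes an undistorted pinhole model and a plane a*x + b*y + c*z + d = 0
    expressed in the camera frame (both are assumptions of this sketch).
    """
    a, b, c, d = plane
    # Normalized image coordinates: the ray direction is (xn, yn, 1).
    xn = (u - u0) / fx
    yn = (v - v0) / fy
    denom = a * xn + b * yn + c
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the light plane")
    z = -d / denom  # depth at which the ray meets the plane
    return (xn * z, yn * z, z)


# Camera 1 intrinsics and PlaneFunc1 coefficients from the table above.
FX, FY, U0, V0 = 1978.7510, 1978.6804, 680.5250, 537.8380
PLANE1 = (0.3190, 0.8092, -0.4934, 204.2166)

x, y, z = pixel_to_point(700.0, 500.0, FX, FY, U0, V0, PLANE1)
residual = PLANE1[0] * x + PLANE1[1] * y + PLANE1[2] * z + PLANE1[3]
print(x, y, z, residual)  # the residual should be close to zero
```

In a full pipeline the pixel would first be corrected for distortion using k1 and k2, and points from the second sensor would be mapped into a common frame with the transform T12.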

| Data | Methods | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| DATA1 | Origin | 0.767 | 0.230 | 0.060 | 0.176 | 62.016% | 0.142 | 0.214 |
| | LT | 0.671 | 0.130 | 0.053 | 0.154 | 47.651% | 0.221 | 0.294 |
| | MF | 0.829 | 0.179 | 0.064 | 0.179 | 48.756% | 0.233 | 0.271 |
| | SO | 0.749 | 0.220 | 0.058 | 0.170 | 60.221% | 0.215 | 0.250 |
| | Our | 0.769 | 0.153 | 0.063 | 0.170 | 98.480% | 0.024 | 0.009 |
| DATA2 | Origin | 0.820 | 0.184 | 0.007 | 0.023 | 53.280% | 0.116 | 0.172 |
| | LT | 0.673 | 0.090 | 0.007 | 0.027 | 51.181% | 0.076 | 0.181 |
| | MF | 0.882 | 0.140 | 0.007 | 0.025 | 50.524% | 0.091 | 0.198 |
| | SO | 0.794 | 0.171 | 0.007 | 0.024 | 52.624% | 0.116 | 0.179 |
| | Our | 0.807 | 0.131 | 0.008 | 0.027 | 99.868% | 0.008 | 0.0 |
| DATA3 | Origin | 0.838 | 0.180 | 0.008 | 0.020 | 38.523% | ----- | ----- |
| | LT | 0.689 | 0.082 | 0.008 | 0.029 | 34.496% | 0.014 | 0.0 |
| | MF | 0.939 | 0.133 | 0.009 | 0.026 | 35.167% | 0.010 | 0.0 |
| | SO | 0.818 | 0.187 | 0.008 | 0.020 | 37.583% | ----- | ----- |
| | Our | 0.690 | 0.188 | 0.005 | 0.017 | 96.004% | 0.016 | 0.012 |
| DATA4 | Origin | 0.590 | 0.191 | 0.005 | 0.026 | 53.667% | 0.673 | 0.0 |
| | LT | 0.582 | 0.228 | 0.007 | 0.041 | 34.704% | 0.344 | 0.4743 |
| | MF | 0.676 | 0.228 | 0.004 | 0.027 | 35.241% | 0.233 | 0.384 |
| | SO | 0.563 | 0.178 | 0.005 | 0.028 | 53.846% | 0.677 | 0.0 |
| | Our | 0.564 | 0.264 | 0.006 | 0.028 | 90.595% | 0.038 | 0.038 |

| Methods | SD | SE | SS | SCE | TBA | Levels | AC |
|---|---|---|---|---|---|---|---|
| HE | ---- | 28 | ---- | 520 | 548 | 18 | 30 |
| CA | ---- | 15 | ---- | 427 | 442 | 14 | 31 |
| LT | ---- | 29 | ---- | 71 | 100 | 4 | 25 |
| CF | ---- | 90 | ---- | 57 | 147 | 5 | 30 |
| MF | ---- | 14 | ---- | 24 | 38 | 2 | 19 |
| SO | ---- | 24 | ---- | 27 | 51 | 2 | 26 |
| PM | 222 | 137 | 32 | 30 | 421 | 13 | 33 |

| Processing Methods | Flange Thickness | Tread Wear | Rim Thickness |
|---|---|---|---|
| WP | ------ | ------ | ------ |
| PM | 0.17 | 0.14 | 0.56 |
| HE | 5.82 | 5.40 | 8.28 |
| CA | ------ | ------ | ------ |
| LT | ------ | ------ | ------ |
| CF | 6.62 | 2.51 | 2.40 |
| MF | ------ | ------ | ------ |
| SO | 6.20 | 5.16 | 2.00 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Pan, X.; Liu, Z.; Zhang, G. Reliable and Accurate Wheel Size Measurement under Highly Reflective Conditions. Sensors 2018, 18, 4296. https://doi.org/10.3390/s18124296
