1. Introduction

Photogrammetry is a technique for measuring spatial geometric quantities by acquiring, measuring and analyzing images of targeted or featured points. Depending on the sensor configuration, photogrammetric systems are categorized as offline or online systems [1]. Generally, an offline system uses a single camera to take multiple sequential images from different positions and orientations. Its typical relative measurement accuracy lies between 1/50,000 and 1/100,000, which results mainly from a target measurement accuracy of 1/20 to 1/30 pixel and the self-calibrating bundle adjustment algorithm [2].

Unlike offline systems, an online system uses two or more cameras to capture images synchronously and can reconstruct spatial points at any moment. Such systems are generally applied to inspecting movement and deformation over a period of time. Reported industrial applications include foil material deformation investigation [3], crack detection and analysis in concrete specimens [4], aircraft wing and rotor blade deformation measurement [5], wind turbine blade rotation measurement and dynamic strain analysis [6,7], concrete beam deflection measurement [8], bridge deformation measurement [9], vibration and acceleration measurement [10,11], membrane roof structure deformation analysis [12], building structure vibration and collapse measurement [13,14], and steel beam deformation measurement [15]. This noncontact and accurate technique continues to find new applications.

Online systems can also be applied to medical positioning and navigation [16]. These systems generally consist of a rigid two-camera tracking unit, LED or spherical Retro-Reflective Targets (RRTs), and handheld probes. NDI Polaris (NDI, Waterloo, ON, Canada) and Axios CamBar (AXIOS 3D, Oldenburg, Lower Saxony, Germany) are the leading systems. Their measurement distance is generally less than 3 m, with a coordinate measurement accuracy of about 0.3 mm and a length measurement accuracy of about 1.0 mm. To achieve this, the camera pairs are calibrated and oriented in the laboratory, in a separate process before measurement, with the help of a Coordinate Measuring Machine (CMM) [17] or special calibration frames.

Online systems equipped with high-frame-rate cameras are also employed in 3D motion tracking, for example the Vicon (Vicon, Denver, CO, USA) and Qualisys (Qualisys, Gothenburg, Sweden) systems. In these systems, the number and positions of the cameras vary with the measurement volume, which calls for calibration methods that can be performed on-site. Indeed, these 3D tracking systems are oriented by moving a ‘T’- or ‘L’-shaped object through the measurement volume. Generally speaking, motion tracking systems are characterized by real-time 3D data, flexibility and ease of operation rather than by high accuracy.

For multi-camera photogrammetric systems, the interior, distortion and exterior parameters are crucial for 3D reconstruction and must be calibrated accurately and precisely before any measurement. There are two main methods for calibrating and orienting multi-camera systems in large-volume applications: the point array self-calibrating bundle adjustment method and the moving scale bar method. The point array self-calibrating bundle adjustment method is the most accurate and the most widely used. Using convergent and rotated images of well-distributed, stable 3D points, bundle adjustment recovers the camera parameters together with the coordinates of the point array, without any prior information about the 3D point coordinates. For example, a fixed-station triple-camera system has been calibrated in a rotary test field composed of points and scale bars [18,19]. In practice, however, it is sometimes difficult to build a well-distributed and stable 3D point array, and the moving scale bar method was developed to address this.

Originally, the moving scale bar method was developed for determining exterior parameters. Hadem [20] investigated the calibration precision of stereo and triple-camera systems under different geometric configurations. Specifically, he simulated and experimented with camera calibration in a pure length test field, and noted that a moving scale bar can easily be adapted to stereo camera system calibration. Patterson [21,22] determined the relative orientation of a stereo camera light pen system using a moving scale bar. In these studies, the interior orientation and distortion parameters of the cameras were calibrated before measurement. Accuracy was assessed through length measurement performance according to the VDI/VDE CMM norm, and the author also noted that measuring the length of the scale bar provides on-site accuracy verification. Mass [23] investigated the moving scale bar method using a triple-camera system and proposed that the method is able to determine interior orientations and is reliable enough to calibrate multi-camera systems. However, this approach requires the cameras to be fully or partially calibrated in an offline process [24] and the measurement volume to be small, for example 1.5 m × 1.7 m × 1.0 m [23].

This paper proposes a method for calibrating and orienting stereo camera systems that requires only a scale bar and can be performed on-site. Unlike previous moving scale bar methods, it simultaneously obtains the interior, distortion and exterior parameters of the cameras without any prior knowledge of the cameras’ characteristics. Moreover, compared with traditional point array bundle adjustment methods, it does not require the construction of a 3D point array, yet achieves comparable accuracy.

The paper is organized as follows:

Section 1 introduces the background of this research, including the development of online photogrammetry, state-of-the-art techniques for calibrating and orienting online photogrammetric cameras, and their limitations.

Section 2 elaborates the mathematical models, the computational algorithms, and the precision and accuracy assessment theory of the proposed method.

Section 3 and Section 4 report the simulations and experiments designed to test the method. The advantages of the method, advice on improving calibration performance and potential practical applications are summarized in the conclusions.

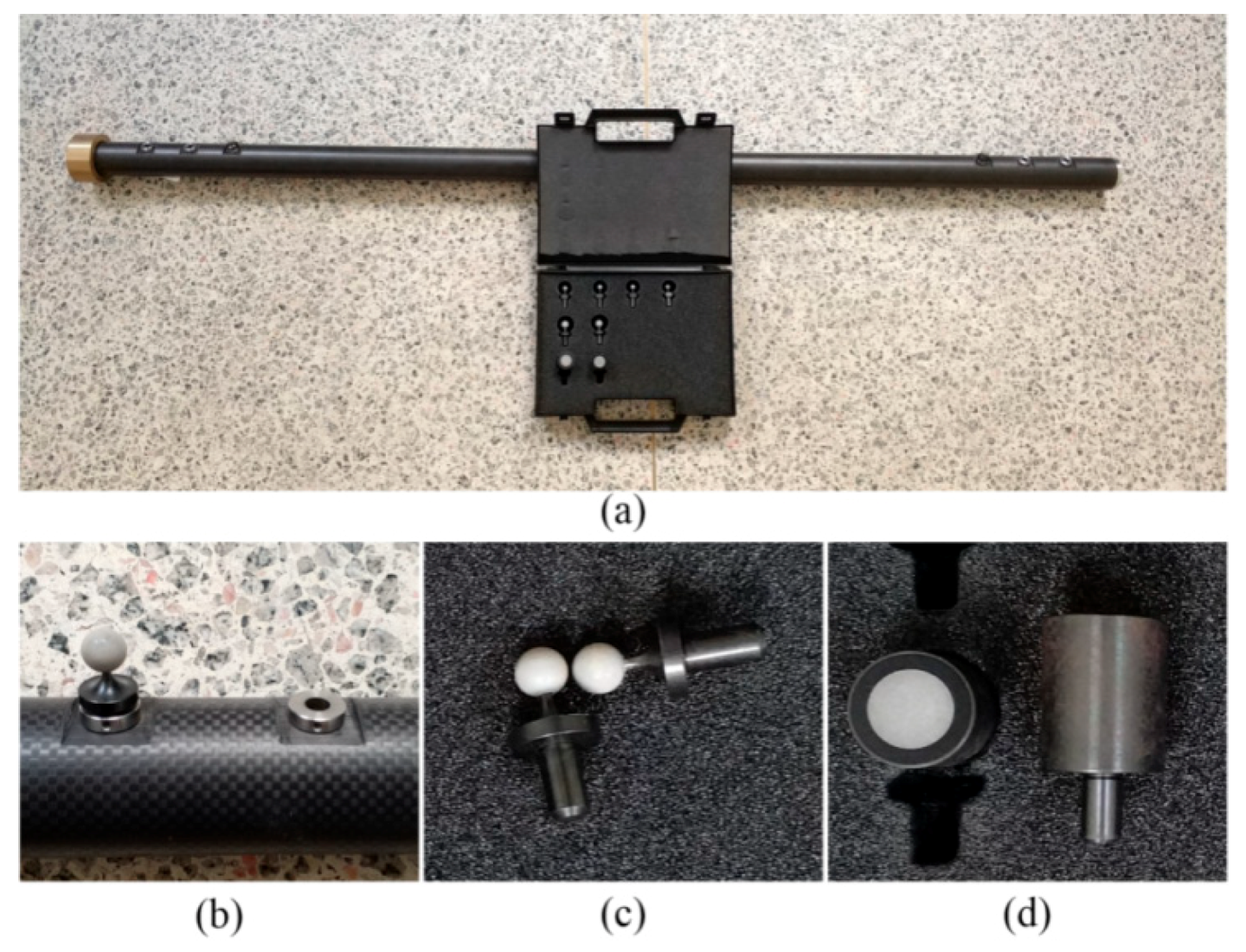

2. Materials and Methods

A scale bar is an alloy or carbon fiber bar with two photogrammetric RRTs, one fixed at each end. The length between the two RRTs is measured or calibrated by instruments with high accuracy (a few micrometers). One such instrument consists of an interferometer, a microscope and a granite rail [25,26]. In photogrammetry, a scale bar generally serves as a reference for true scale, especially in multi-image offline systems. In this paper, the scale bar is instead used as a calibration tool for multi-camera online systems.

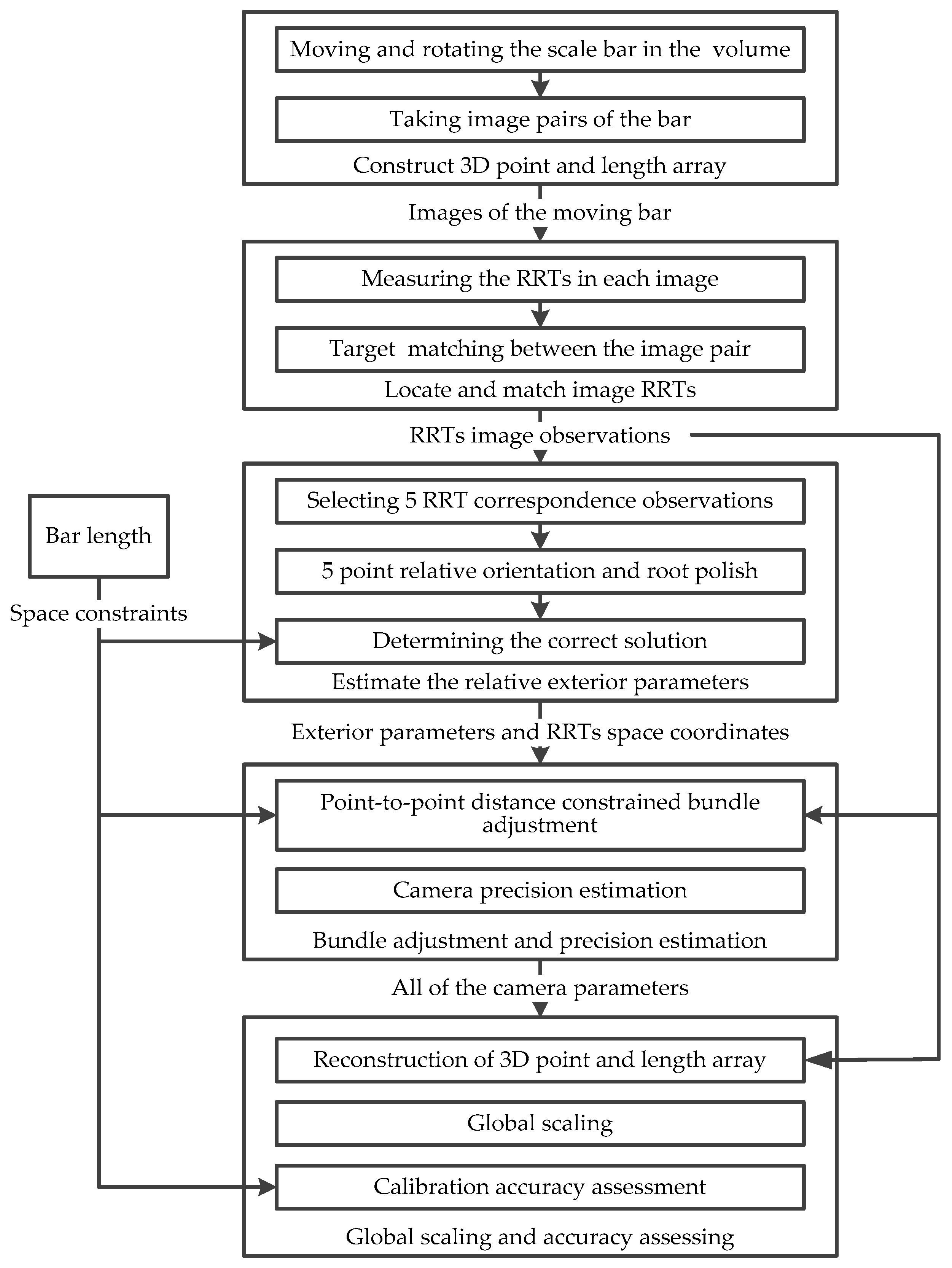

After the cameras have been set up according to the measurement volume and surroundings, the camera pair is calibrated and oriented following the procedure outlined in Figure 1.

2.1. Construction of 3D Point and Length Array

The bar is moved to different locations that are uniformly distributed in the measurement volume, and at each location it is rotated into different orientations. Each combination of position and orientation is called an attitude of the bar. After the moving and rotating process, the RRTs of the bar in all attitudes form a virtual 3D point array. Meanwhile, because the distance between the two RRTs is the length of the scale bar, the bar lengths in all attitudes form a virtual 3D length array. The cameras synchronously capture images of the measurement volume and the bar in each attitude.

2.2. Locating and Matching the RRTs in Images

The 2D coordinates of the two RRTs in every image are determined by computing the grey-value centroid of the pixels in each RRT region. Correspondences of the two RRTs between the images of each pair are determined from the relative positions of the points in the images: the right/left/upper/lower RRT in one image is matched to the right/left/upper/lower RRT in the other image. The 2D coordinates of the matched RRTs in all image pairs are used for exterior parameter estimation and for the all-parameter bundle adjustment.
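A minimal sketch of these two steps, assuming each RRT region has already been segmented into a boolean mask and that each image contains only the two bar targets. The function names `grey_centroid` and `match_bar_ends` are hypothetical, and choosing the ordering axis from the larger coordinate separation in the left image is our own simplification of the right/left/upper/lower rule.

```python
import numpy as np

def grey_centroid(image, mask):
    """Grey-value-weighted centroid (x, y) of the pixels selected by a boolean mask."""
    rows, cols = np.nonzero(mask)
    w = image[rows, cols].astype(float)            # grey values act as weights
    return np.array([np.sum(w * cols), np.sum(w * rows)]) / w.sum()

def match_bar_ends(left_pts, right_pts):
    """Pair the two RRT centroids of one image pair by their relative position.

    The axis (x or y) along which the two targets are farther apart in the left
    image decides whether left/right or upper/lower ordering is used.
    """
    left = np.asarray(left_pts, float)
    right = np.asarray(right_pts, float)
    axis = int(np.argmax(np.abs(left[0] - left[1])))
    li, ri = np.argsort(left[:, axis]), np.argsort(right[:, axis])
    return [(left[li[k]], right[ri[k]]) for k in range(2)]

img = np.zeros((5, 5))
img[2, 1], img[2, 3] = 1.0, 3.0                    # two bright pixels in row 2
print(grey_centroid(img, img > 0).tolist())        # [2.5, 2.0]
pairs = match_bar_ends([(100, 50), (300, 60)], [(280, 62), (90, 48)])
print(pairs[0][1].tolist(), pairs[1][1].tolist())  # [90.0, 48.0] [280.0, 62.0]
```

In practice the weights are often computed after background subtraction so that dark pixels inside the mask do not bias the centroid.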

2.3. Estimating the Relative Exterior Parameters

The five-point method [27] is used to estimate the essential matrix between the two cameras. At this stage, only an initial guess of the principal distance is available; the principal point offsets and distortion parameters of each camera are unknown and are set to zero.

Poorly selected five image point pairs may lead to a degenerate computation and thus to failure of the method. To avoid this problem, an algorithm automatically selects the most suitable five point pairs, taking into account both maximizing the dispersion of their distribution and avoiding collinearity. The strategy is to find five point pairs located near the center and the four corners of the two camera images by minimizing the following five functions:

Figure 2 illustrates all the image RRT points and the selected five point pairs.
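The five selection functions are not reproduced in this text. For illustration only, the sketch below assumes a simple objective: for each anchor (the image center and the four corners), take the matched pair whose summed distance to that anchor in the left and right images is smallest. The helper `select_five_pairs` and this cost are hypothetical; spreading the picks over the center and the corners is what keeps them well dispersed and non-collinear.

```python
import numpy as np

def select_five_pairs(xy_left, xy_right, width, height):
    """Indices of five matched pairs lying near the image centre and the four corners.

    Hypothetical objective: for each anchor, minimise the sum of the distances
    of the pair to that anchor in the left and the right image.
    """
    xy_left = np.asarray(xy_left, float)
    xy_right = np.asarray(xy_right, float)
    anchors = np.array([[width / 2, height / 2], [0, 0], [width, 0],
                        [0, height], [width, height]], float)
    chosen = []
    for a in anchors:
        cost = (np.linalg.norm(xy_left - a, axis=1)
                + np.linalg.norm(xy_right - a, axis=1))
        if chosen:
            cost[chosen] = np.inf          # never pick the same pair twice
        chosen.append(int(np.argmin(cost)))
    return chosen

pts = [(50, 50), (1, 1), (99, 2), (2, 98), (97, 97), (30, 60)]
print(select_five_pairs(pts, pts, width=100, height=100))   # [0, 1, 2, 3, 4]
```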

The computed essential matrices are globally optimized by the root polish algorithm [28] using all the matched RRTs. The optimized essential matrices are then decomposed into rotation matrices and translation vectors, from which the exterior angle and translation parameters are obtained. In general, at least two geometric network structures of the cameras are obtained, and only one of them is physically correct. In this method, the equality of the reconstructed lengths in the 3D length array is used as a spatial constraint to identify the true solution, which is more robust than the widely used image error analysis.
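The sketch below illustrates how the length array can discriminate between decomposed solutions, assuming normalized image coordinates (pixel coordinates already reduced by the principal point and divided by the principal distance), a left camera at [I|0], and bar endpoints stored in consecutive pairs. It uses the standard SVD decomposition of an essential matrix and linear (DLT) triangulation; it is not the authors' code, and all function names are hypothetical. Because a rotation paired with t and with -t reconstructs mirror-image point sets with identical lengths, length consistency alone cannot separate those two, so the sketch breaks that tie with the usual positive-depth check.

```python
import numpy as np

def decompose_essential(E):
    """Return the four (R, t) candidates encoded by an essential matrix."""
    U, _, Vt = np.linalg.svd(E)
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    R1, R2, t = U @ W @ Vt, U @ W.T @ Vt, U[:, 2]
    return [(R1, t), (R1, -t), (R2, t), (R2, -t)]

def triangulate(x1, x2, R, t):
    """Linear (DLT) triangulation of normalised image points; left camera is [I|0]."""
    P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = np.hstack([R, t.reshape(3, 1)])
    X = []
    for a, b in zip(x1, x2):
        A = np.vstack([a[0] * P1[2] - P1[0], a[1] * P1[2] - P1[1],
                       b[0] * P2[2] - P2[0], b[1] * P2[2] - P2[1]])
        h = np.linalg.svd(A)[2][-1]        # right singular vector of the smallest value
        X.append(h[:3] / h[3])
    return np.array(X)

def choose_solution(E, x1, x2):
    """Pick (R, t); rows 2j and 2j+1 of x1/x2 are the two ends of bar j."""
    scored = []
    for R, t in decompose_essential(E):
        X = triangulate(x1, x2, R, t)
        lengths = np.linalg.norm(X[0::2] - X[1::2], axis=1)
        cv = lengths.std() / lengths.mean()   # spread of the reconstructed bar lengths
        if not np.isfinite(cv):
            cv = np.inf
        in_front = np.sum((X[:, 2] > 0) & ((X @ R.T + t)[:, 2] > 0))
        scored.append((cv, in_front, R, t))
    best_cv = min(s[0] for s in scored)
    # (R, t) and (R, -t) give mirrored points with identical lengths, so the
    # number of points in front of both cameras breaks that tie.
    ties = [s for s in scored if s[0] <= best_cv * (1 + 1e-6) + 1e-12]
    _, _, R, t = max(ties, key=lambda s: s[1])
    return R, t

# Usage on noise-free synthetic data (all values hypothetical).
rng = np.random.default_rng(0)
c, s = np.cos(np.deg2rad(-17.0)), np.sin(np.deg2rad(-17.0))
R_true = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])   # rotation about y
t_true = np.array([-5.0, 0.0, 1.5])
centres = rng.uniform([-3, -2, 8], [3, 2, 12], size=(20, 3))
dirs = rng.normal(size=(20, 3))
dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)
X = np.empty((40, 3))
X[0::2], X[1::2] = centres - 0.5 * dirs, centres + 0.5 * dirs      # 1 m bars
x1 = X[:, :2] / X[:, 2:]
Xr = X @ R_true.T + t_true
x2 = Xr[:, :2] / Xr[:, 2:]
tx = np.array([[0, -t_true[2], t_true[1]], [t_true[2], 0, -t_true[0]],
               [-t_true[1], t_true[0], 0]])
R_est, t_est = choose_solution(tx @ R_true, x1, x2)
print(np.allclose(R_est, R_true), np.allclose(t_est, t_true / np.linalg.norm(t_true)))
# True True
```

The recovered translation is only known up to scale at this stage, which is why the later global rescaling step is needed.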

At this stage, the relative exterior parameters are still inaccurate, the principal distance is only a guess, and the principal point offsets and distortions have not yet been dealt with. All of these inaccurate and unknown parameters must be refined through bundle adjustment to achieve high accuracy and precision.

2.4. Self-Calibrating Bundle Adjustment and Precision Estimation

In traditional 3D point array self-calibrating bundle adjustment, a large number of convergent images is essential to handle the severe correlations between the unknown parameters and to achieve reliable and precise results. In theory, therefore, cameras cannot be calibrated by bundle adjustment using only one image pair of a pure 3D point array. Moving a scale bar, however, provides not only 3D points but also point-to-point distances. These distances act as spatial constraints that greatly strengthen the two-camera network, and they can be introduced into the bundle adjustment to enable self-calibration.

The projection of a 3D point into the image pair is expressed by the following implicit collinearity equations [29,30]:

$$\mathbf{xy}_{l,i} = f\left(\mathbf{I}_l, \mathbf{X}_i\right), \qquad \mathbf{xy}_{r,i} = f\left(\mathbf{I}_r, \mathbf{E}_r, \mathbf{X}_i\right) \tag{2}$$

In Equation (2), the subscripts $l$ and $r$ denote the left and right cameras; $\mathbf{xy}$ is the image coordinate vector; $\mathbf{I}$ is the interior parameter vector, including the principal distance, the principal point offset, and the radial and decentering distortion parameters; $\mathbf{E}_r$ is the exterior parameter vector of the right camera relative to the left, including three angles and three translations; and $\mathbf{X}_i$ is the coordinate vector of a 3D point. The linearized correction equations for an image point observation are:

$$\mathbf{v}_i = \mathbf{A}_i\,\boldsymbol{\delta} + \mathbf{B}_i\,\dot{\boldsymbol{\delta}}_i - \mathbf{l}_i \tag{3}$$

In Equation (3), $\mathbf{v}$ is the residual vector of an image point, defined as the difference between the “true” (error-free) image coordinates and the measured image coordinates; $\mathbf{l}$ is the reduced observation vector, defined as the difference between the measured image coordinates and the image coordinates computed from the approximate camera parameters. Figure 3 illustrates the $x$-axis components of $\mathbf{v}$ and $\mathbf{l}$ for an image point $i$. $\mathbf{A}$ is the Jacobian matrix of $f$ with respect to the camera interior, distortion and exterior parameters; $\mathbf{B}$ is the Jacobian matrix of $f$ with respect to the spatial coordinates; and $\boldsymbol{\delta}$ and $\dot{\boldsymbol{\delta}}$ are the corrections of the camera parameters and the spatial coordinates, respectively.

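$n$ scale bars provide $2n$ 3D points and $n$ point-to-point distances. For the $m$-th bar length:

$$L_m = \sqrt{\left(X_{m1}-X_{m2}\right)^2 + \left(Y_{m1}-Y_{m2}\right)^2 + \left(Z_{m1}-Z_{m2}\right)^2} \tag{4}$$

where $m1$ and $m2$ denote the two endpoints of the bar. Because Equation (4) is nonlinear, it must be linearized before it can take part in the bundle adjustment. The linearized correction equation for a spatial point-to-point distance constraint is:

$$v_{L_m} = \mathbf{C}_m\,\dot{\boldsymbol{\delta}}_m - l_{L_m} \tag{5}$$

where $\mathbf{C}_m$ is the Jacobian matrix of Equation (4) with respect to the coordinates of the two endpoints, $\dot{\boldsymbol{\delta}}_m$ stacks the coordinate corrections of both endpoints, and $l_{L_m}$ is the difference between the nominal bar length and the length computed from the approximate endpoint coordinates.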
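Equation (4) has a simple closed-form derivative: with $\mathbf{u}$ the unit vector pointing from endpoint $m2$ to endpoint $m1$, the Jacobian is $\mathbf{C}_m = [\mathbf{u}^T, -\mathbf{u}^T]$. A minimal Python sketch with hypothetical function names; taking the reduced observation as the nominal length minus the length computed from approximate coordinates is our assumption, by analogy with the image observations.

```python
import numpy as np

def bar_length_and_jacobian(X1, X2):
    """Length of bar (X1, X2) and its 1x6 Jacobian w.r.t. (X1, Y1, Z1, X2, Y2, Z2)."""
    d = np.asarray(X1, float) - np.asarray(X2, float)
    L = np.linalg.norm(d)
    u = d / L                                  # unit vector pointing from X2 to X1
    return L, np.concatenate([u, -u]).reshape(1, 6)

def length_reduced_observation(L_nominal, X1, X2):
    """Reduced observation: nominal length minus length from approximate coordinates."""
    return L_nominal - bar_length_and_jacobian(X1, X2)[0]

L, C = bar_length_and_jacobian([3.0, 4.0, 0.0], [0.0, 0.0, 0.0])
print(L)                                       # 5.0
```

Because each row of $\mathbf{C}$ touches only the six coordinates of one bar, the distance constraints couple the two endpoints of the same bar and nothing else.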

Point-to-point distances are incorporated into the bundle adjustment to avoid rank deficiency of the normal equation and to reduce the correlations between the unknown parameters. For a two-camera system imaging $n$ scale bars, the extended correction equation that involves all the image point observations and point-to-point distance constraints can be written as:

where the subscripts $(i, j, k)$ denote the $k$-th ($k = 1, 2$) endpoint of the $j$-th ($j = 1, 2, \ldots, n$) distance in the $i$-th ($i = 1, 2$) image. The normal equation is:

In Equation (7), $\mathbf{P}$ is a diagonal weight matrix of all the image point coordinate and spatial distance observations. The items in Equation (7) are determined by block computation:

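Assuming that the a priori standard deviations of the image point observations and the spatial distance observations are $s_p$ and $s_l$, respectively, and that the a priori standard deviation of unit weight is $s_0$, the weight matrices $\mathbf{P}_p$ and $\mathbf{P}_l$ are determined by:

$$p_p = \frac{s_0^2}{s_p^2}, \qquad p_l = \frac{s_0^2}{s_l^2}$$

where $p_p$ and $p_l$ are the (identical) diagonal elements of $\mathbf{P}_p$ and $\mathbf{P}_l$, respectively.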
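With the values used later in Section 3.2 ($s_p$ = 0.0002 mm, $s_l$ = 0.2 mm, $s_0 = s_p$), the weights can be checked numerically. The sketch assumes the usual form $p = s_0^2/s^2$ for every diagonal element and $8n$ image coordinates for a two-camera system imaging $n$ bars; `weight_matrices` is a hypothetical helper.

```python
import numpy as np

def weight_matrices(n_bars, s_p, s_l, s_0):
    """Diagonal weight matrices for a stereo pair imaging n_bars scale bars.

    8 * n_bars image coordinates (2 ends x 2 images x (x, y)) and n_bars distances.
    """
    P_p = np.eye(8 * n_bars) * (s_0 / s_p) ** 2
    P_l = np.eye(n_bars) * (s_0 / s_l) ** 2
    return P_p, P_l

P_p, P_l = weight_matrices(3, s_p=0.0002, s_l=0.2, s_0=0.0002)
print(P_p.shape, P_l.shape)                 # (24, 24) (3, 3)
print(P_p[0, 0], round(P_l[0, 0], 12))      # 1.0 1e-06
```

Each distance therefore enters the adjustment with a weight of 10⁻⁶ relative to an image coordinate, reflecting the much larger a priori standard deviation assigned to it.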

Solving Equation (7), we obtain the corrections of the camera parameters and endpoint coordinates:

Again, owing to the block-diagonal structure of $\mathbf{N}_{22}$, $\boldsymbol{\delta}$ can be computed camera by camera and the endpoint coordinate corrections can be computed length by length. The estimated camera parameters and 3D point coordinates are updated iteratively with these corrections until the bundle adjustment converges. The iteration is terminated when the maximum absolute coordinate correction of all the 3D points is smaller than 1 μm.
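The block computation is not spelled out in detail here. A common way to exploit a block-diagonal $\mathbf{N}_{22}$ is the Schur complement (reduced normal equations): eliminate the endpoint corrections bar by bar, solve the small system for $\boldsymbol{\delta}$, then back-substitute. The sketch below assumes 6 × 6 blocks per bar (the two endpoints of a bar are coupled only through their own distance constraint) and solves the reduced system for both cameras jointly; `solve_reduced` is a hypothetical name, and the matrices in the demo are random stand-ins rather than a real adjustment.

```python
import numpy as np

def solve_reduced(N11, N12, N22_blocks, W1, W2, block=6):
    """Solve [[N11, N12], [N12^T, N22]] [d; e] = [W1; W2] with N22 block diagonal.

    N22_blocks holds one (block x block) matrix per bar.
    """
    inv = [np.linalg.inv(B) for B in N22_blocks]         # only small inverses needed
    S, r = np.array(N11, float), np.array(W1, float)     # copies that are updated below
    for j, Binv in enumerate(inv):
        cols = slice(block * j, block * (j + 1))
        S -= N12[:, cols] @ Binv @ N12[:, cols].T          # Schur complement of N22
        r -= N12[:, cols] @ Binv @ W2[cols]
    d = np.linalg.solve(S, r)                              # camera parameter corrections
    e = np.concatenate([                                   # endpoint corrections, bar by bar
        Binv @ (W2[block * j:block * (j + 1)] - N12[:, block * j:block * (j + 1)].T @ d)
        for j, Binv in enumerate(inv)])
    return d, e

rng = np.random.default_rng(1)
p, n = 5, 4                                   # toy sizes: 5 camera unknowns, 4 bars
blocks = []
for _ in range(n):
    M = rng.normal(size=(6, 6))
    blocks.append(M @ M.T + 6 * np.eye(6))    # symmetric positive definite 6x6 block
N22 = np.zeros((6 * n, 6 * n))
for j, B in enumerate(blocks):
    N22[6 * j:6 * j + 6, 6 * j:6 * j + 6] = B
N12 = rng.normal(size=(p, 6 * n))
M = rng.normal(size=(p, p))
N11 = N12 @ np.linalg.solve(N22, N12.T) + M @ M.T + p * np.eye(p)   # keeps N positive definite
W1, W2 = rng.normal(size=p), rng.normal(size=6 * n)
d, e = solve_reduced(N11, N12, blocks, W1, W2)
full = np.linalg.solve(np.block([[N11, N12], [N12.T, N22]]), np.concatenate([W1, W2]))
print(np.allclose(np.concatenate([d, e]), full))      # True
```

Only 6 × 6 inverses and one small dense solve are needed, which is why this kind of block elimination scales well with the number of bar attitudes.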

The proposed algorithm is time-efficient because block computations avoid inverting or pseudo-inverting large matrices. In addition, the algorithm is unaffected by invisible (missing) observations and allows gross errors to be detected during the adjustment iterations. The method also allows both internal precision and external accuracy assessment of the calibration results. The internal precision is represented by the variance-covariance matrix of all the adjusted unknowns:

$$\boldsymbol{\Sigma} = \hat{s}_0^2\,\mathbf{N}^{-1} \tag{10}$$

where $\mathbf{N}$ is the normal matrix in Equation (7), and the a posteriori standard deviation of unit weight $\hat{s}_0$ is determined by:

where $n$ is the number of point-to-point distances and the size of $\mathbf{v}$ equals $8n$ for a two-camera system.

2.5. Global Scaling and Accuracy Assessment of the Calibration Results

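After bundle adjustment, the 3D endpoints can be triangulated using the adjusted parameters. Generally, the adjusted results contain systematic errors caused by incorrect scaling, which cannot be eliminated through bundle adjustment. The point-to-point distances are therefore used again to rescale the results. Let the triangulated bar lengths be:

$$\hat{L}_i, \quad i = 1, 2, \ldots, n \tag{12}$$

The rescaling factor is calculated by:

$$\lambda = \frac{L}{\bar{\hat{L}}}, \qquad \bar{\hat{L}} = \frac{1}{n}\sum_{i=1}^{n}\hat{L}_i \tag{13}$$

where $L$ is the nominal length of the scale bar and $\bar{\hat{L}}$ is the average of the triangulated bar lengths in Equation (12). Then the final camera parameters, 3D coordinates and triangulated bar lengths are:

$$\tilde{\mathbf{T}}_r = \lambda\,\mathbf{T}_r, \qquad \tilde{\mathbf{X}}_i = \lambda\,\mathbf{X}_i, \qquad \tilde{L}_i = \lambda\,\hat{L}_i \tag{14}$$

where $\mathbf{T}_r$ denotes the three translation components of $\mathbf{E}_r$; the interior, distortion and rotation parameters are unaffected by the scale.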

Besides internal precision, this method provides an on-site 3D evaluation of the calibration accuracy. The triangulated lengths provide a large number of length measurements distributed over various positions and orientations in the measurement volume, so an evaluation procedure can be carried out following the guidelines of the VDI/VDE 2634 norm. Because all the lengths are physically identical, assessing calibration performance through length measurement errors is much easier.

Since the length of the scale bar is calibrated by other instruments, the nominal length $L$ contains an error. Assuming that the true length of the scale bar is $L_0$, we introduce a factor $K$ to describe the disparity between $L$ and $L_0$:

$$L = K\,L_0 \tag{15}$$

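Essentially, Equation (15) describes the calibration error of the scale bar length in another way. The rescaled bar lengths $\tilde{L}_i$ in Equation (14) can be rewritten as:

$$\tilde{L}_i = \frac{K L_0}{\bar{\hat{L}}}\,\hat{L}_i \tag{16}$$

where $\hat{L}_i$ are the triangulated lengths before rescaling and $\bar{\hat{L}}$ is their average. The absolute error of $\tilde{L}_i$ is: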

It can be derived that the Average (AVG) and Root Mean Square (RMS) values of the error are:

where RMSE($L_i$) is the Root Mean Square Error (RMSE) of the triangulated bar lengths.

Further, we define the relative precision of length measurement by:

$$r = \frac{\mathrm{RMSE}(L_i)}{L} \tag{19}$$

In Equation (19), the relative precision is independent of the factor $K$ (both RMSE($L_i$) and $L$ are proportional to $K$), so it remains unchanged for different values of $K$. For example, in a calibration process using a bar with a nominal length of $L = 1000$ mm ($K = 1$), ten of the triangulated bar lengths are:

whose RMSE is 0.020 mm and whose relative precision $r$ is 1/50,068.

If $K$ of the instrument is 1.000002 (an absolute calibration error of 0.002 mm), the nominal length is 1000.002 mm. According to Equation (16), the rescaled lengths are:

whose relative precision is also 1/50,068.

Further, if $K$ is amplified to 1.2 (an absolute calibration error of 200 mm, which is impossible in practice), the rescaled lengths are:

whose relative precision is again 1/50,068.

The above example illustrates Equation (19): the relative precision of length measurement is invariant under different scale bar nominal lengths (different $K$ values in Equation (15)), which makes it a good measure of the calibration performance of the camera pair.
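The invariance can also be checked numerically. The sketch assumes, consistent with the 1/50,068 example, that the relative precision is the RMSE of the rescaled lengths divided by the nominal length; the ten "triangulated" lengths are randomly generated for illustration and are not the values of the example above. The helpers `rescale` and `relative_precision` are hypothetical.

```python
import numpy as np

def rescale(tri_lengths, nominal):
    """Rescale triangulated lengths so that their mean equals the nominal length."""
    tri = np.asarray(tri_lengths, float)
    lam = nominal / tri.mean()                      # rescaling factor
    return lam * tri, lam

def relative_precision(lengths, nominal):
    """RMSE of the lengths about the nominal length, divided by the nominal length."""
    lengths = np.asarray(lengths, float)
    return np.sqrt(np.mean((lengths - nominal) ** 2)) / nominal

rng = np.random.default_rng(7)
# Hypothetical triangulated lengths in arbitrary (unscaled) units, ~2e-5 relative spread.
tri = 0.97 * (1.0 + 2e-5 * rng.standard_normal(10))
L0 = 1000.0                                         # true bar length in mm
precisions, means = [], []
for K in (1.0, 1.000002, 1.2):
    nominal = K * L0                                # Equation (15): L = K * L0
    rescaled, lam = rescale(tri, nominal)
    means.append(rescaled.mean() / nominal)
    precisions.append(relative_precision(rescaled, nominal))
    print(f"K={K}: 1/{1 / precisions[-1]:,.0f}")    # same value for every K
```

The reason is visible in the code: every rescaled length and the nominal length carry the same factor K, which cancels in the ratio.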

Additionally, the interior and distortion parameters and the relative rotation angles between the two cameras are not affected by the scale factor $K$. These parameters are calibrated with the same accuracy regardless of the instrument measurement error, even if a wrong value is assigned to $L$. The two cameras can therefore be calibrated precisely without knowing the true length $L_0$.

3. Simulations and Results

A simulation system was developed to verify the effectiveness and evaluate the performance of the proposed method. The system consists of a control length array generation module, a camera projective imaging module, a self-calibrating bundle adjustment module and a 3D reconstruction module. The generation module simulates scale bars evenly distributed over the measurement volume; the length, positions and orientations of the bar and the size of the volume can be modified. The imaging module projects the endpoints of the bars into the image pair using the assigned interior, distortion and exterior parameters. The bundle adjustment module implements the proposed method and calibrates all the unknown parameters of the camera pair. The reconstruction module triangulates all the endpoints and lengths by forward intersection using the calibration results.

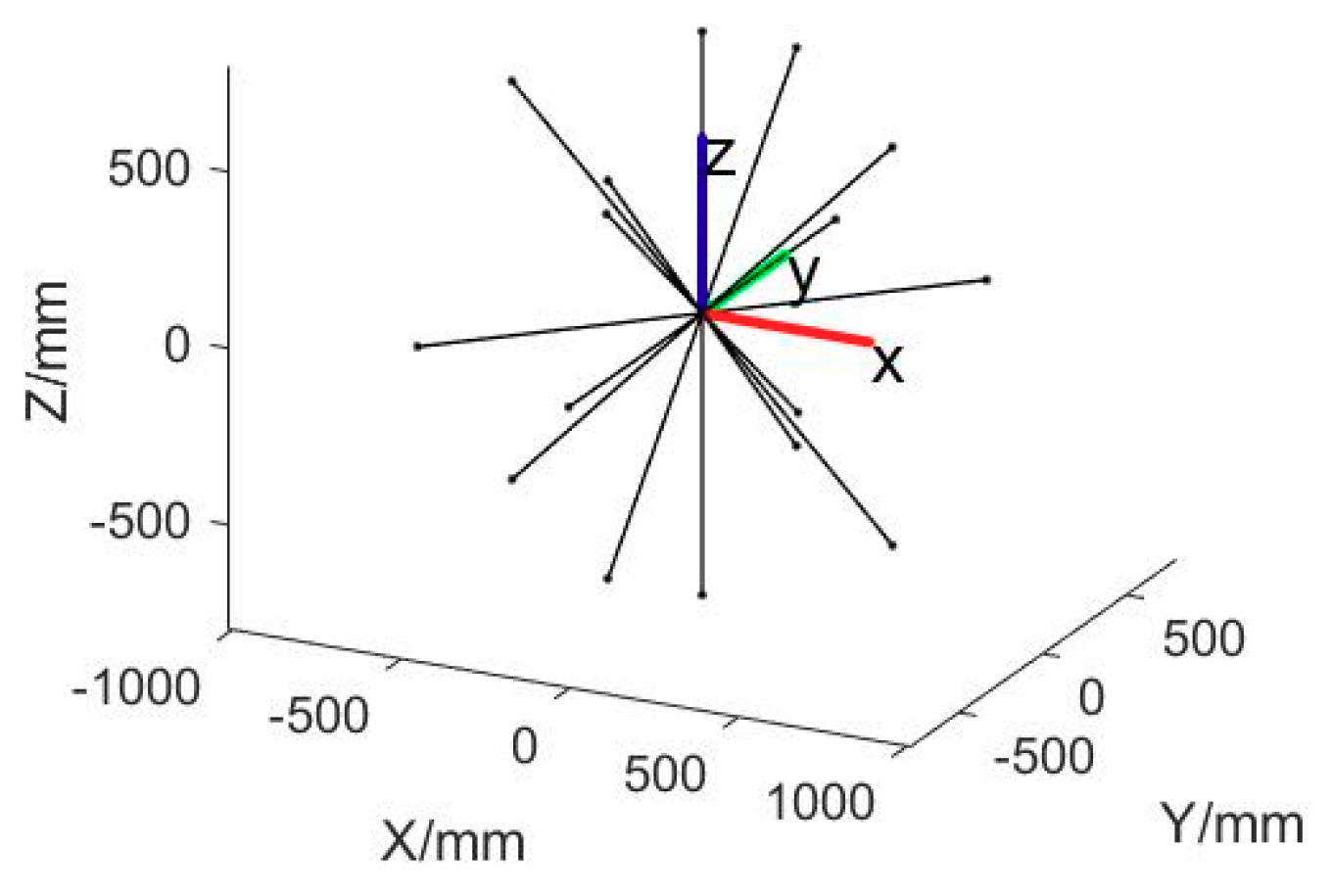

3.1. Point and Length Array Construction and Camera Pair Configurations

The bar is set to be 1 m long. The bar positions are evenly distributed in the volume and are one bar length apart from each other. The scale bar is moved to each position and posed in different orientations. It is worth noting that if the bar is moved and rotated in a single plane, self-calibration of the camera parameters fails. This is because there is a strong correlation between the interior parameters (principal distance, principal point coordinates) and the exterior translation parameters, and planar objects do not provide sufficient information to resolve it. As a result, after bundle adjustment these parameter estimates show very large standard deviations, which means that the calibration results are neither precise nor reliable.

We use multi-plane motion and out-of-plane rotations to provide the bundle adjustment with length constraints in diverse orientations, so that the parameters are optimized for all orientations. As a result, uniform 3D measurement accuracy can be achieved in different orientations.
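A sketch of how such a point and length array might be generated for simulation, assuming bar centers on a grid spaced one bar length apart and a hypothetical set of six directions (three along the axes, three out-of-plane diagonals); the actual orientations of Figure 4 are not reproduced. The `is_planar` helper flags the single-plane configuration that, as noted above, makes self-calibration fail. Endpoints at the grid boundary extend half a bar length beyond the nominal volume, which is a simplification.

```python
import numpy as np
from itertools import product

# Hypothetical six bar directions: three along the axes, three out-of-plane diagonals.
DIRECTIONS = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1],
                       [1, 1, 0], [1, 0, 1], [0, 1, 1]], float)
DIRECTIONS /= np.linalg.norm(DIRECTIONS, axis=1, keepdims=True)

def bar_array(size, bar_length=1.0):
    """Endpoints (2n, 3) and lengths (n,) of bars centred on a grid one bar length apart."""
    axes = [np.arange(0.0, s + 1e-9, bar_length) for s in size]
    ends = []
    for centre in product(*axes):
        c = np.array(centre)
        for d in DIRECTIONS:
            ends += [c - 0.5 * bar_length * d, c + 0.5 * bar_length * d]
    ends = np.array(ends)
    lengths = np.linalg.norm(ends[0::2] - ends[1::2], axis=1)
    return ends, lengths

def is_planar(points, rel_tol=1e-9):
    """True if the points lie in one plane (smallest singular value ~ 0)."""
    s = np.linalg.svd(points - points.mean(axis=0), compute_uv=False)
    return s[-1] <= rel_tol * s[0]

ends, lengths = bar_array((12.0, 8.0, 4.0))        # 12 m x 8 m x 4 m volume
print(len(lengths), np.allclose(lengths, 1.0), is_planar(ends))   # 3510 True False
```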

Figure 4 shows the six orientations of the bar in one position.

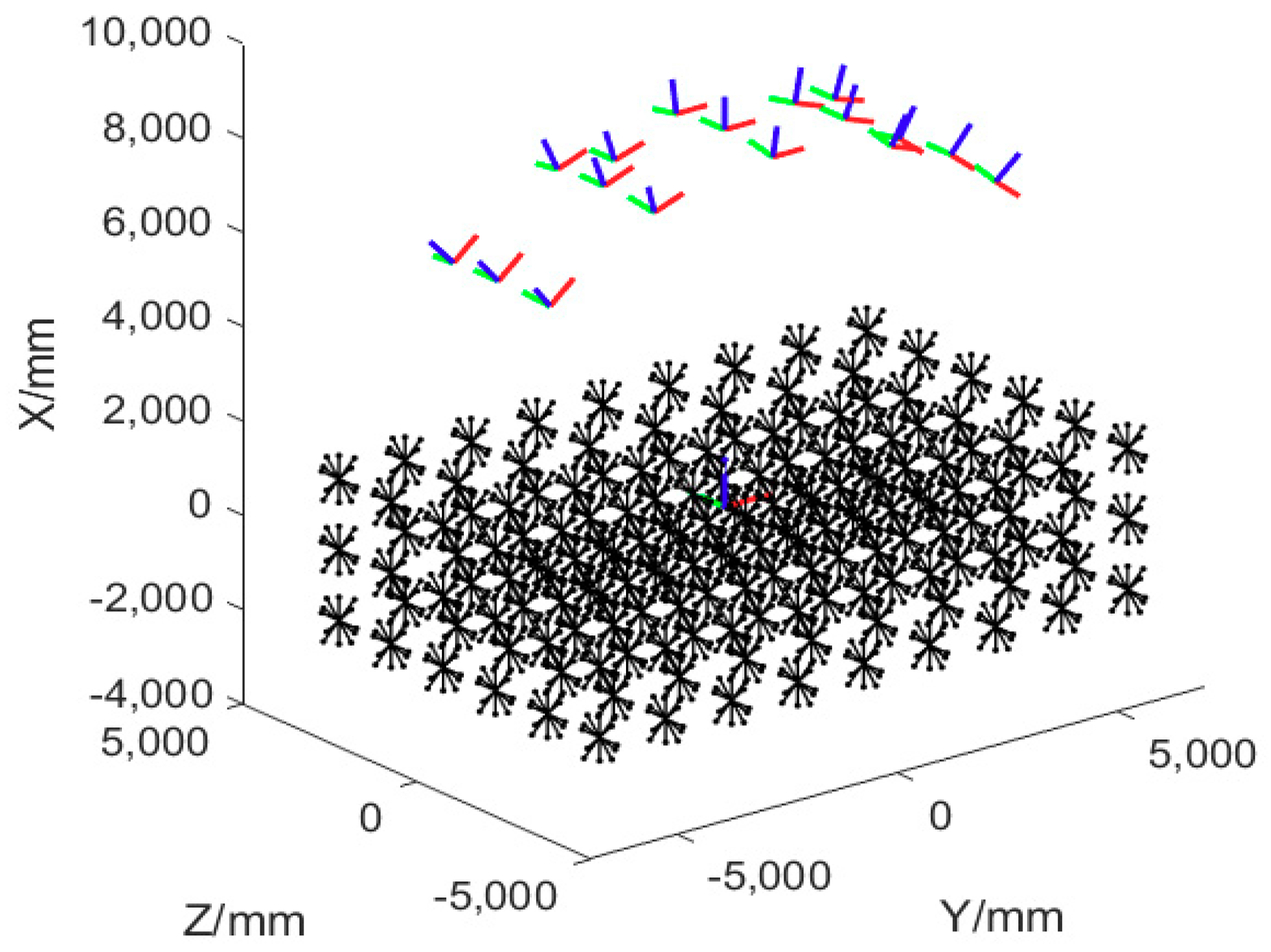

Figure 5 demonstrates the simulated point and length array and the camera pair.

In this simulation, the measurement volume is 12 m (length) × 8 m (height) × 4 m (depth). The resolution of the cameras is 4872 × 3248 pixels and the pixel size is 7.4 µm × 7.4 µm. The interior and distortion parameters are set to the values of real cameras.

The cameras are directed at the center of the measurement volume; they are 8 m away from the volume and 5 m apart from each other, which forms an intersection angle of 34.708 degrees. Normally distributed image errors with σ = 0.2 µm are added to the projected image coordinates of each endpoint. For the simulated cameras, σ = 0.2 µm corresponds to an image measurement precision of 1/37 pixel, which can be achieved with RRTs and an appropriate image measurement algorithm.
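Both figures follow from simple geometry. The quoted 34.708° is reproduced if the 8 m is read as the perpendicular distance from the camera baseline to the center of the volume, with the cameras placed symmetrically; that reading is our assumption.

```python
import math

baseline = 5.0     # m, distance between the two cameras
distance = 8.0     # m, assumed perpendicular distance from the baseline to the volume centre
angle = 2 * math.degrees(math.atan(0.5 * baseline / distance))
print(f"intersection angle: {angle:.3f} deg")                 # intersection angle: 34.708 deg

pixel_size = 7.4   # µm
sigma = 0.2        # µm, simulated image noise
print(f"image precision: 1/{pixel_size / sigma:.0f} pixel")    # image precision: 1/37 pixel
```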

3.2. Accuracy and Precision Analysis

In the simulation, only an initial guess of 20 mm is assigned to the principal distance; the other interior and distortion parameters are set to zero. The five-point and root polish methods provide good estimates of the relative exterior parameters, so the proposed self-calibrating bundle adjustment generally converges within three iterations.

In the simulation, $s_p$ and $s_l$ are set to 0.0002 mm and 0.2 mm, respectively, and $s_0$ is set equal to $s_p$. The self-calibrating bundle adjustment refines and optimizes all the camera parameters and spatial coordinates. An a posteriori standard deviation of unit weight of 0.00018 mm is obtained, indicating good consistency between the a priori and a posteriori standard deviations.

The standard deviations of the interior and distortion parameters of the two cameras, the relative exterior parameters and the 3D coordinates of the endpoints can be computed following Equation (10).

Table 1 lists the interior and distortion parameter estimates and their standard deviations from the bundle adjustment. The undistortion equation of an image point $(x, y)$ is:

in which $x_u$ and $y_u$ are the distortion-free (undistorted) coordinates of the point; $x_0$ and $y_0$ are the offset coordinates of the principal point in the image; and $\Delta x$ and $\Delta y$ are the distortions along the image $x$ and $y$ axes, respectively. $\Delta x$ and $\Delta y$ are calculated by:

in which $r$ is the radial distance of the image point from the principal point; $K_1$, $K_2$ and $K_3$ are the radial distortion parameters; and $P_1$ and $P_2$ are the tangential distortion parameters.
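The undistortion equations are not reproduced in this text. As an illustration, the sketch assumes the widely used Brown model in the form common in close-range photogrammetry (radial terms $K_1$ to $K_3$ and decentering terms $P_1$, $P_2$, all measured from the principal point), with $x_u = x - x_0 + \Delta x$. Sign and normalization conventions differ between software packages, so this form should be checked against the paper's own equation before reuse; `undistort` is a hypothetical helper.

```python
import numpy as np

def undistort(x, y, x0, y0, K1, K2, K3, P1, P2):
    """Correct image coordinates with an assumed Brown radial + decentring model.

    xb = x - x0, yb = y - y0, r^2 = xb^2 + yb^2
    dx = xb (K1 r^2 + K2 r^4 + K3 r^6) + P1 (r^2 + 2 xb^2) + 2 P2 xb yb
    dy = yb (K1 r^2 + K2 r^4 + K3 r^6) + P2 (r^2 + 2 yb^2) + 2 P1 xb yb
    xu = xb + dx, yu = yb + dy
    """
    xb = np.asarray(x, float) - x0
    yb = np.asarray(y, float) - y0
    r2 = xb ** 2 + yb ** 2
    radial = K1 * r2 + K2 * r2 ** 2 + K3 * r2 ** 3
    dx = xb * radial + P1 * (r2 + 2 * xb ** 2) + 2 * P2 * xb * yb
    dy = yb * radial + P2 * (r2 + 2 * yb ** 2) + 2 * P1 * xb * yb
    return xb + dx, yb + dy

xu, yu = undistort(2.0, 0.0, 0.0, 0.0, K1=0.01, K2=0.0, K3=0.0, P1=0.0, P2=0.0)
print(f"{xu:.3f} {yu:.3f}")        # 2.080 0.000
```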

Table 2 lists the relative exterior parameter estimates and their standard deviations from the bundle adjustment, and Table 3 lists the mean standard deviations of the 3D coordinates of all the endpoints from the bundle adjustment.

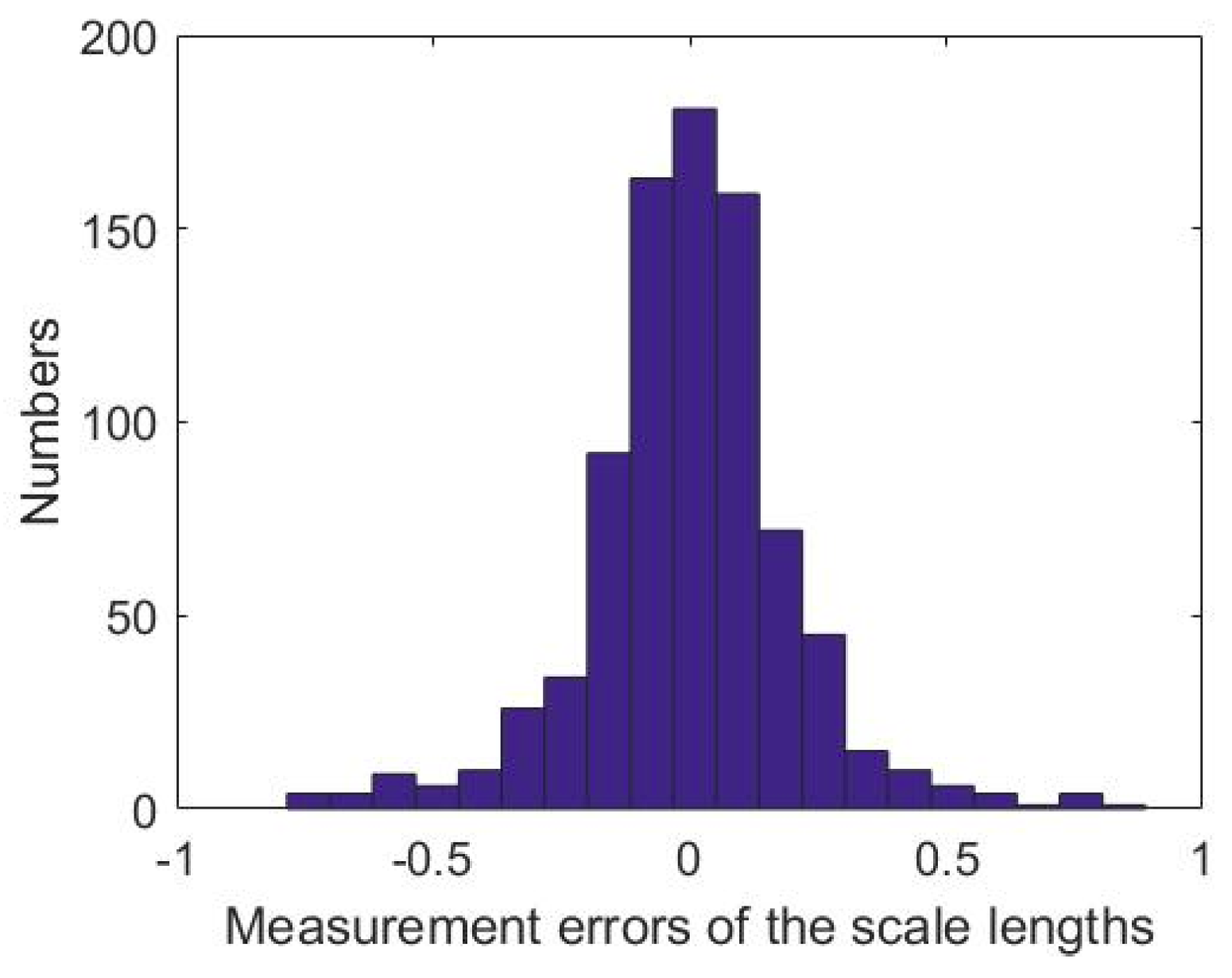

From the above results, it can be seen that the bundle adjustment successfully and precisely determines the interior, distortion, and exterior parameters of both cameras as well as the spatial coordinates of the endpoints. Besides internal standard deviations, the reconstructed lengths of the scale bar provide an external evaluation of the calibration accuracy.

Table 4 exhibits the results of the triangulated distances versus the known bar length.

Figure 6 shows the histogram of the errors of the reconstructed lengths. The errors follow a normal distribution, which indicates that no systematic error components remain and that the functional and stochastic models of the method are correctly and completely formulated.

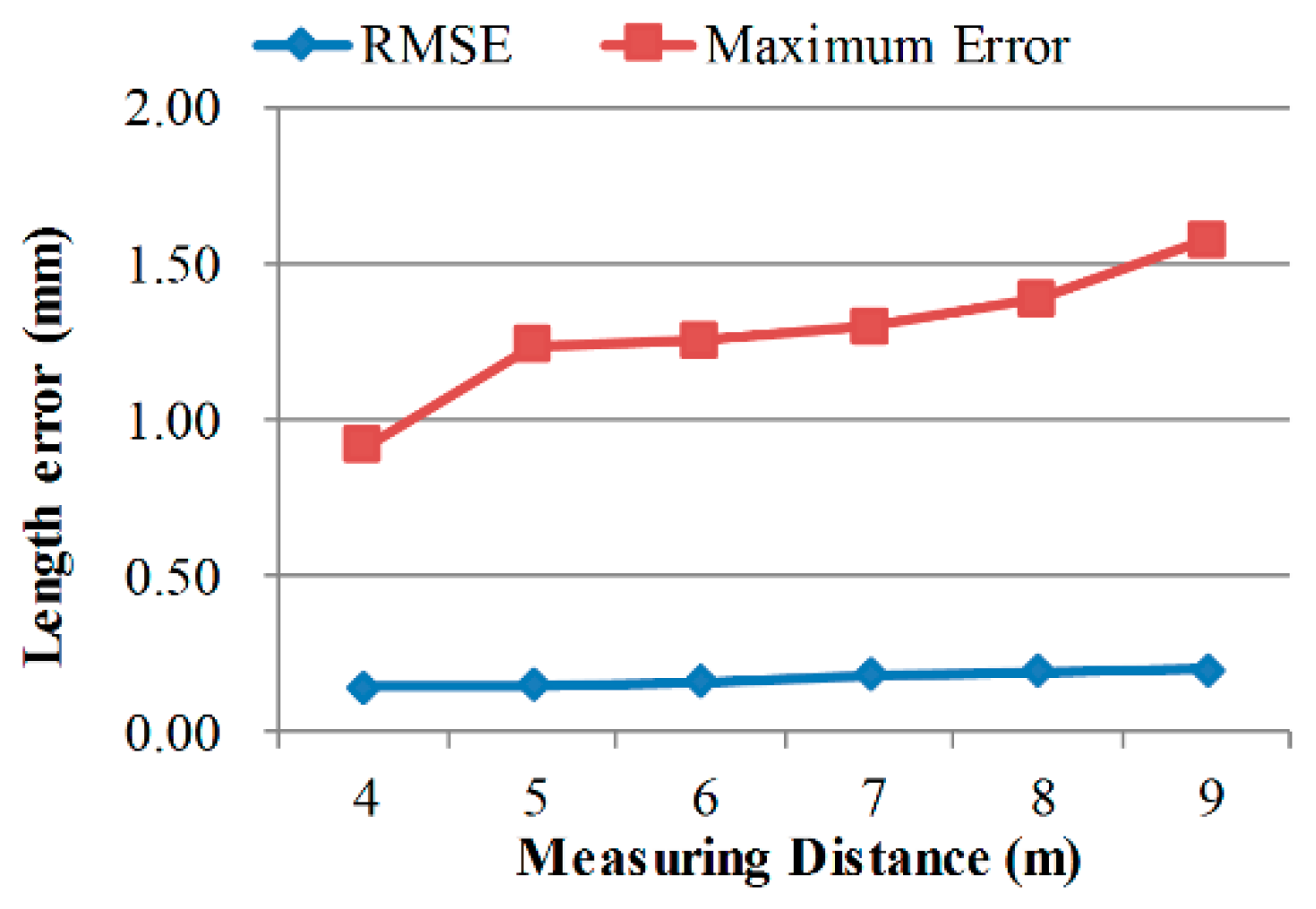

3.3. Performance of the Method under Different Spatial Geometric Configurations

In this part, simulations are carried out to analyze the performance of the proposed method when calibrating camera pairs in measurement volumes of different sizes, with different bar lengths and with different intersection angles.

For a stereo camera system with a given intersection angle, the size of the measurement volume depends on the measuring distance.

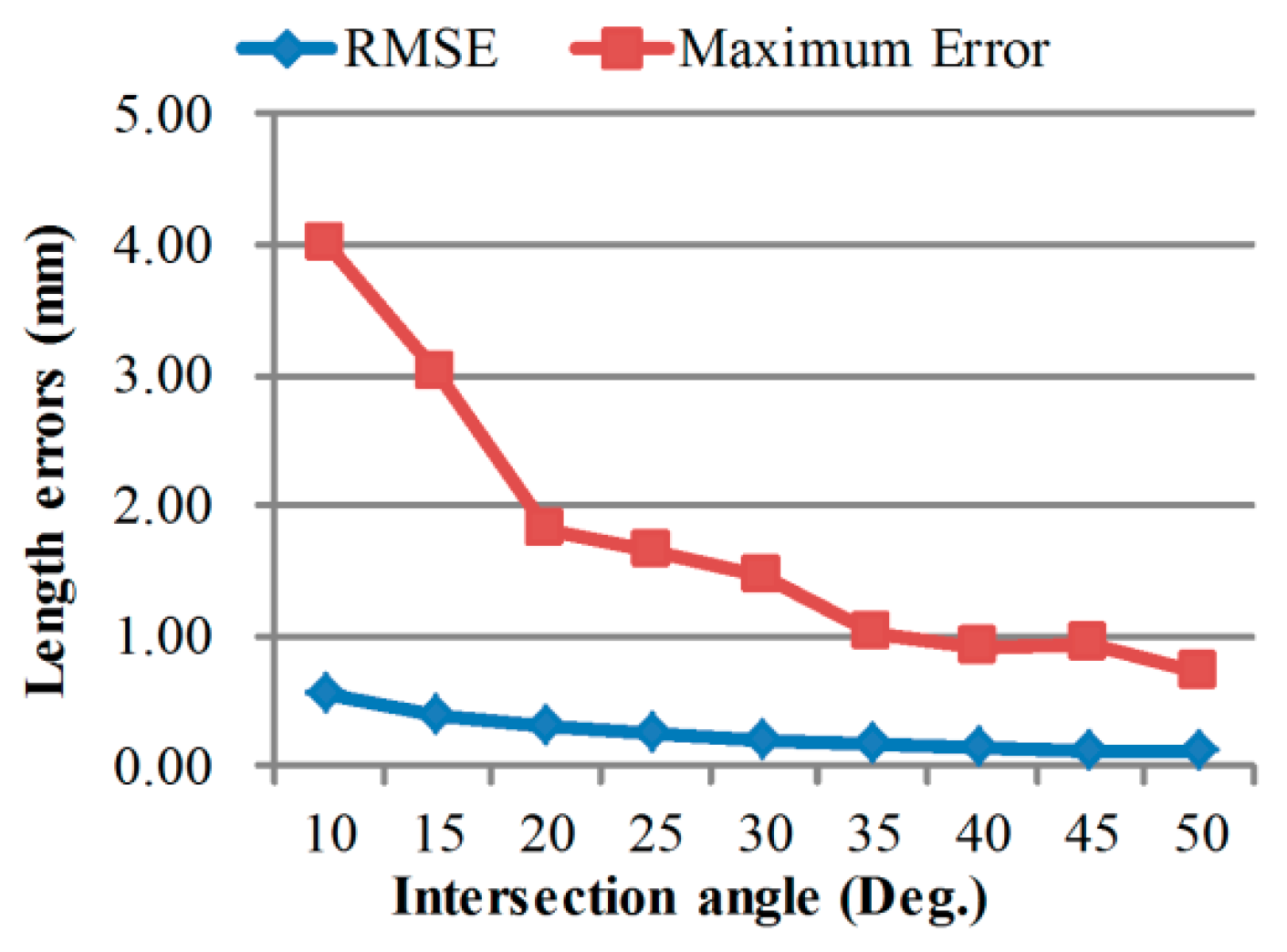

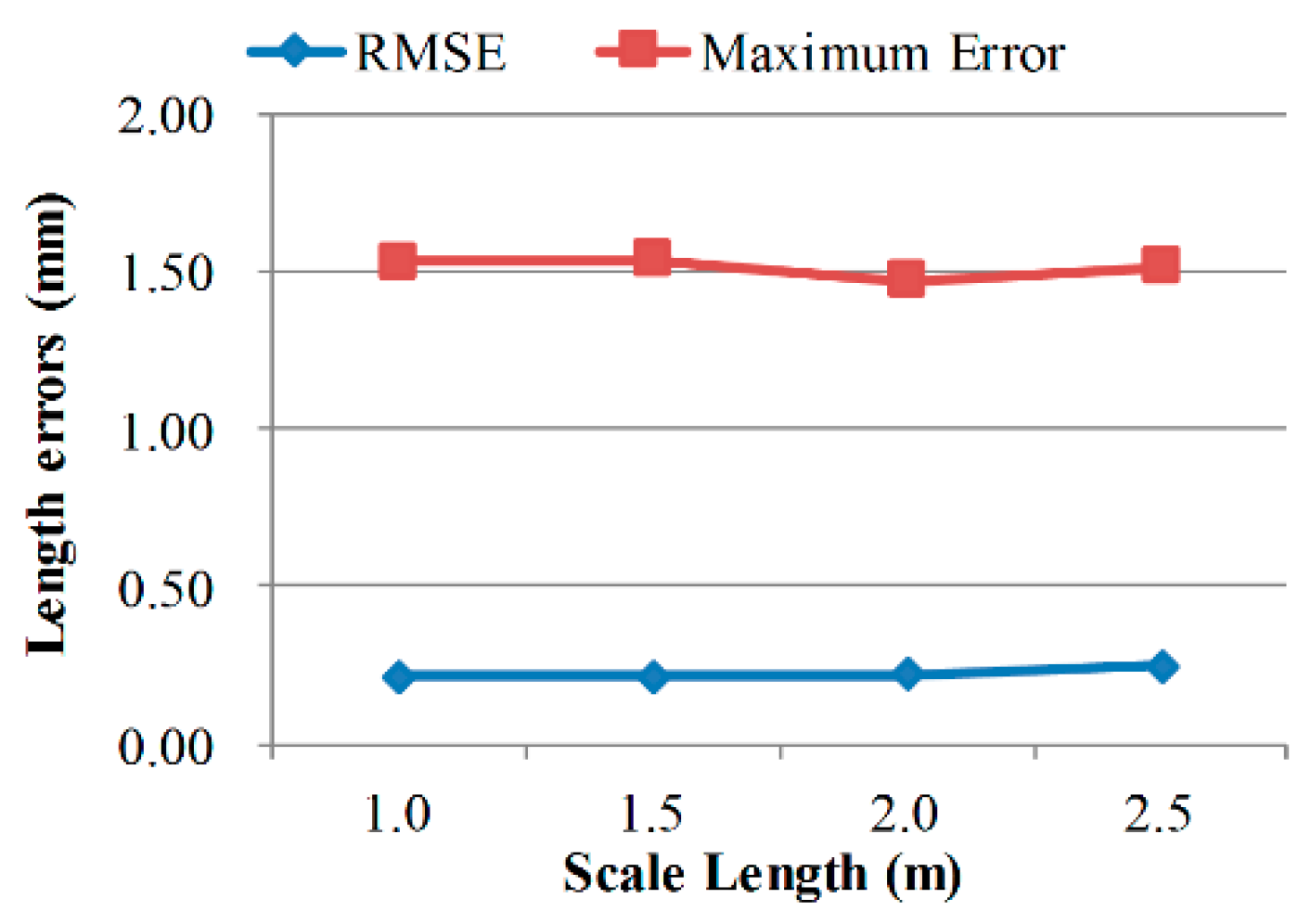

Figure 7, Figure 8 and Figure 9 show the calibration performance, represented by bar length measurement errors, under different geometric configurations.

Calibration accuracy improves in smaller volumes and with larger intersection angles, which is consistent with common knowledge. Interestingly, the calibration accuracy remains almost unchanged when scale bars of different lengths are used. It can therefore be deduced that the error in measuring longer distances in the same volume with the calibrated camera pair will be similar to the scale bar length measurement error. The extended relative precision of length measurement in this volume is then:

$$r_e = \frac{k \cdot \mathrm{RMSE}(L_i)}{D}$$

where $k$ is a confidence interval integer, $D$ is the size of the measurement volume, and RMSE($L_i$) is the RMSE of bar length measurement as in Equation (18). For the calibration results in Table 4, the relative precision is nearly 1/25,000 when $k$ equals 3.

3.4. Accuracy Comparison with the Point Array Self-Calibrating Bundle Adjustment Method

The point array self-calibrating bundle adjustment method is widely used in camera calibration and orientation. This method takes multiple images of an arbitrary but stable 3D point array and then conducts a bundle adjustment to solve the interior, distortion and exterior camera parameters and the 3D point coordinates. Generally, the point array bundle adjustment calibrates only one camera at a time, whereas our method calibrates the two cameras simultaneously.

The scale bar endpoints in Figure 5 are used to calibrate each camera by the point array self-calibrating bundle adjustment method. For each camera, seventeen convergent images of the point array are taken from stations evenly distributed in front of the array. One of the images is taken from the station used for stereo measurement, and at least one image is taken orthogonally.

Figure 10 demonstrates the camera stations and the point array (the scene is rotated for better visualization of the camera stations).

Image errors of σ = 0.2 µm are added to each simulated image point. Point array self-calibrating bundle adjustment is then conducted with these image data to solve the parameters of each of the two cameras. Besides the reconstruction errors of the bar lengths, the measurement errors of a 10 m length along the diagonal of the volume are also used to compare the two methods.

Table 5 lists the results of 200 simulations of each method. The proposed method is more accurate and precise, while the point array bundle adjustment method shows larger systematic errors and maximum errors.

5. Conclusions

This paper proposes a method for simultaneously calibrating and orienting the stereo cameras of 3D vision systems in large measurement volumes. A scale bar is moved through the measurement volume to build a 3D point and length array. After the array is imaged, the two cameras are calibrated through a self-calibrating bundle adjustment constrained by point-to-point distances. The external accuracy can be obtained on-site by analyzing bar length reconstruction errors. Simulations validate the effectiveness of the method for self-calibrating the interior, distortion and exterior camera parameters and test its accuracy and precision. Moreover, simulations and experiments were carried out to test the influence of the scale bar length, measurement volume, target type and intersection angle on calibration performance. The proposed method does not require a stable 3D point array in the measurement volume, and its accuracy is not affected by the scale bar length. Furthermore, the cameras can be accurately calibrated without knowing the true length of the bar. The method achieves better accuracy than the state-of-the-art point array self-calibrating bundle adjustment method.

To calibrate the interior and distortion parameters accurately, many well-distributed image points are needed, so the bar should be moved uniformly to as many positions as possible within the measurement volume. To handle the correlation between interior and exterior parameters in bundle adjustment, and thus to guarantee the reliability of the calibration results, the bar should be moved in a 3D manner, for example in multiple planes and with out-of-plane rotations. Additionally, to achieve uniform triangulation accuracy in different orientations, the bar should be rotated uniformly into diverse orientations.

This method can easily be applied in medium-scale volumes within arm's reach, and can be extended to large-scale measurement applications by using UAVs to carry and operate the scale bar. It can also be used to calibrate small or even micro-scale stereo vision systems such as structured light scanners. Compared with planar calibration patterns, scale bars are easier to calibrate, less restricted by the camera viewing angle, and offer higher image measurement accuracy, which improves calibration accuracy and convenience. Our future work includes studying a rigorous relationship between the motion of the bar and the measurement volume, the relationship between calibration performance and the number and distribution of bar positions in the volume, and the application of this method in practice.