Variables Influencing the Accuracy of 3D Modeling of Existing Roads Using Consumer Cameras in Aerial Photogrammetry

Abstract

1. Introduction

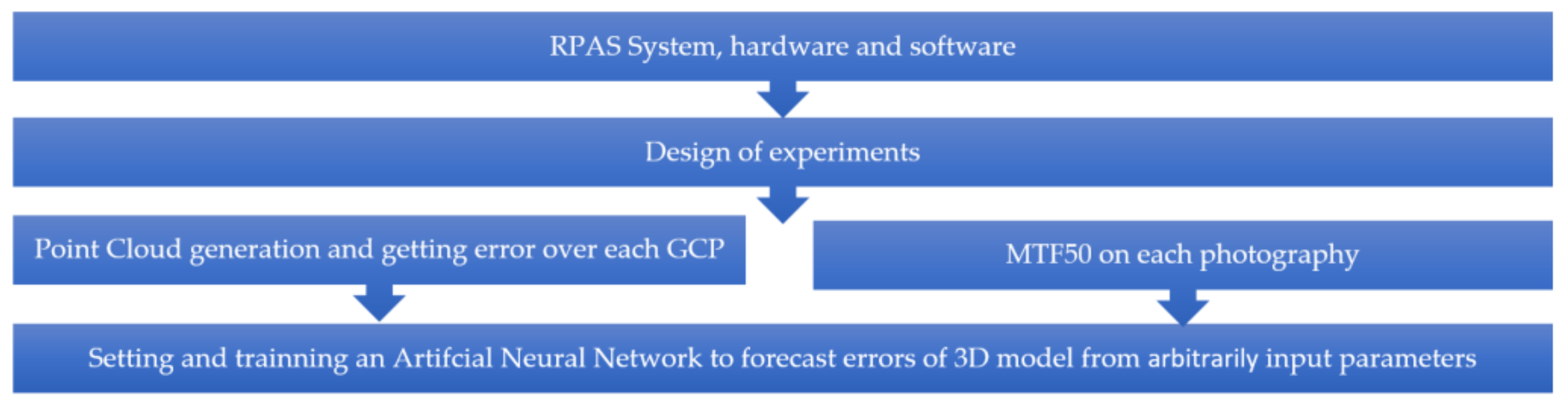

2. Methodology

2.1. RPAS System, Hardware, and Software

2.2. Experiments Design

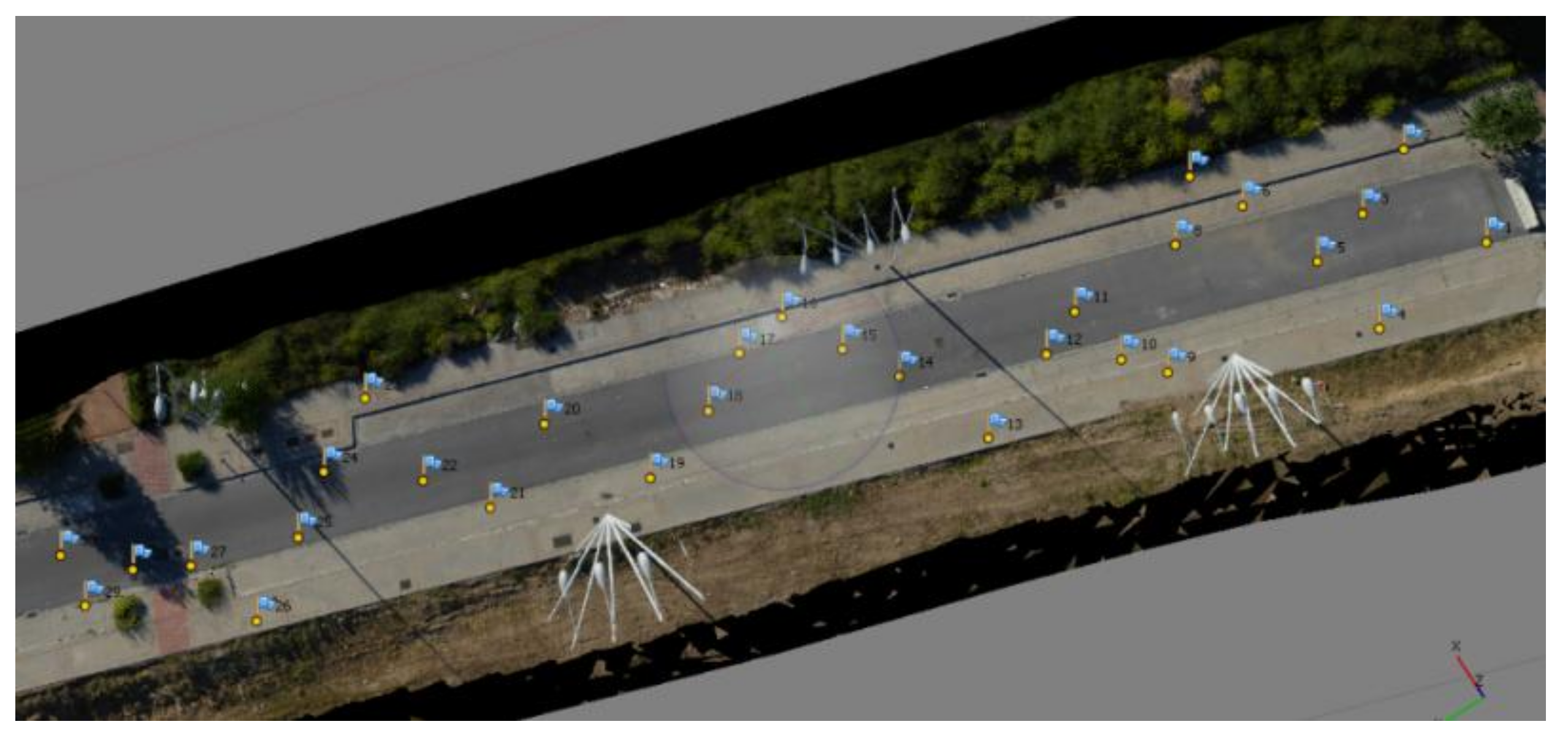

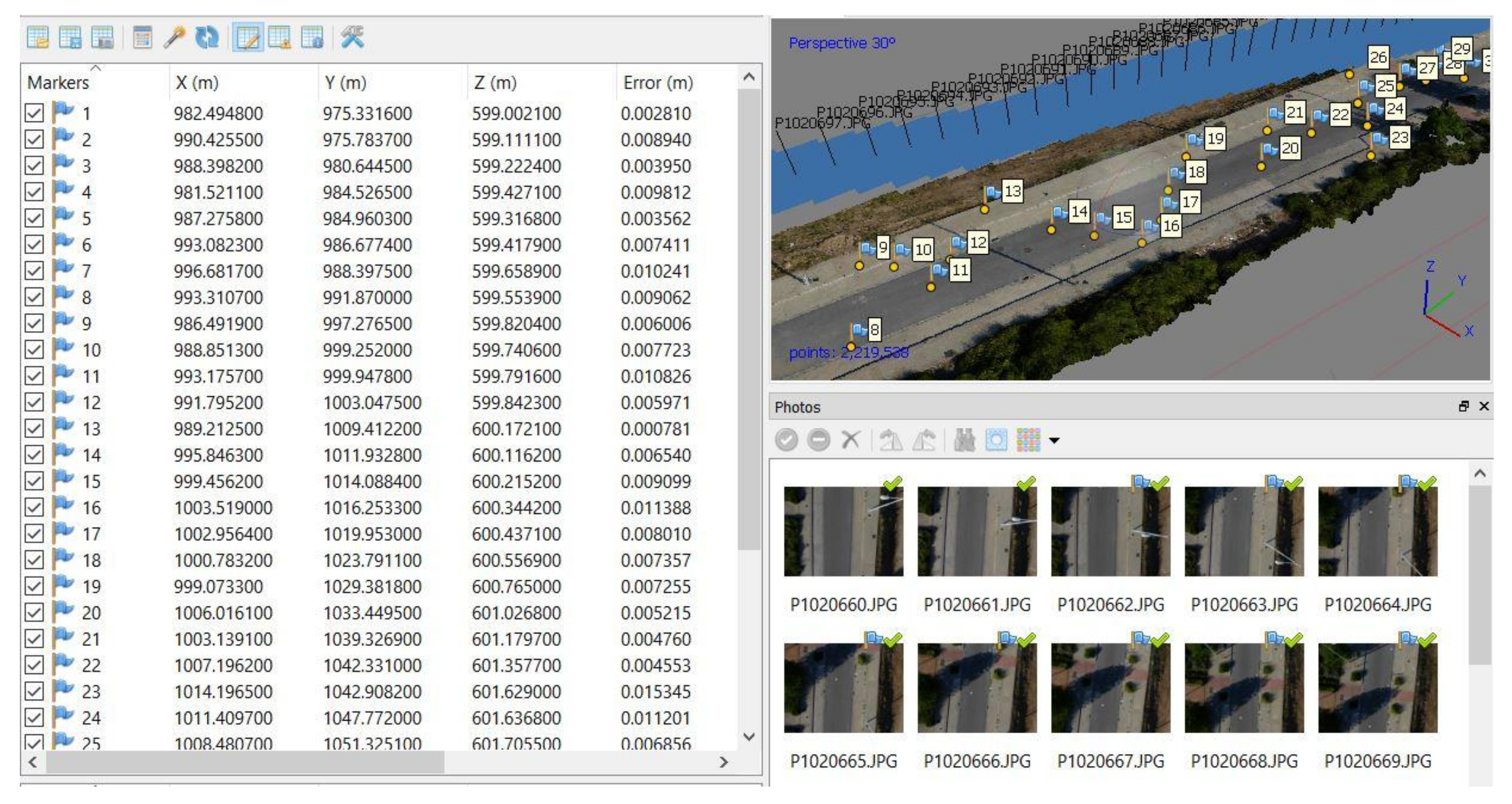

2.3. Development of PC and 3D-RMSE Computation

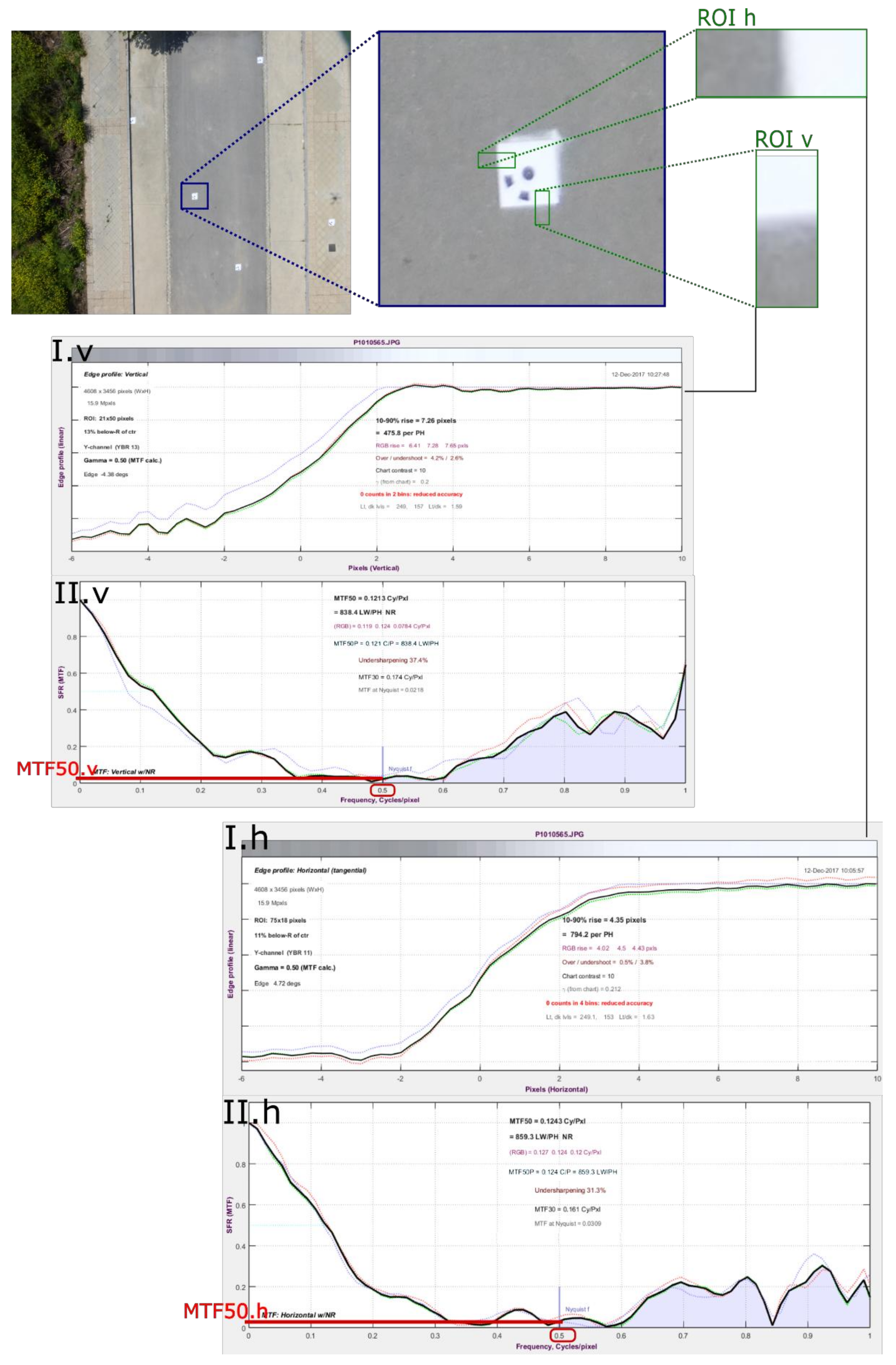

2.4. MTF50

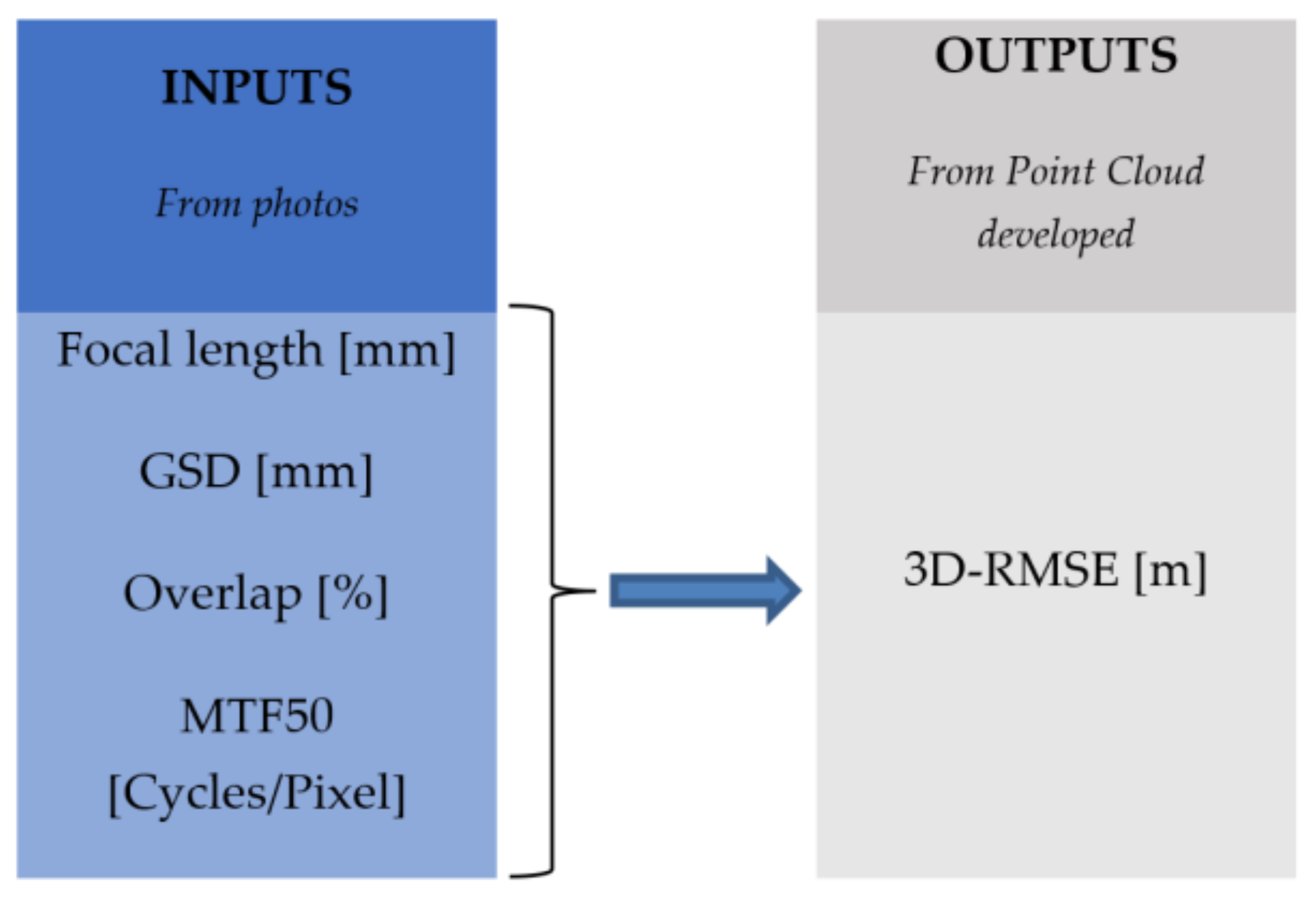

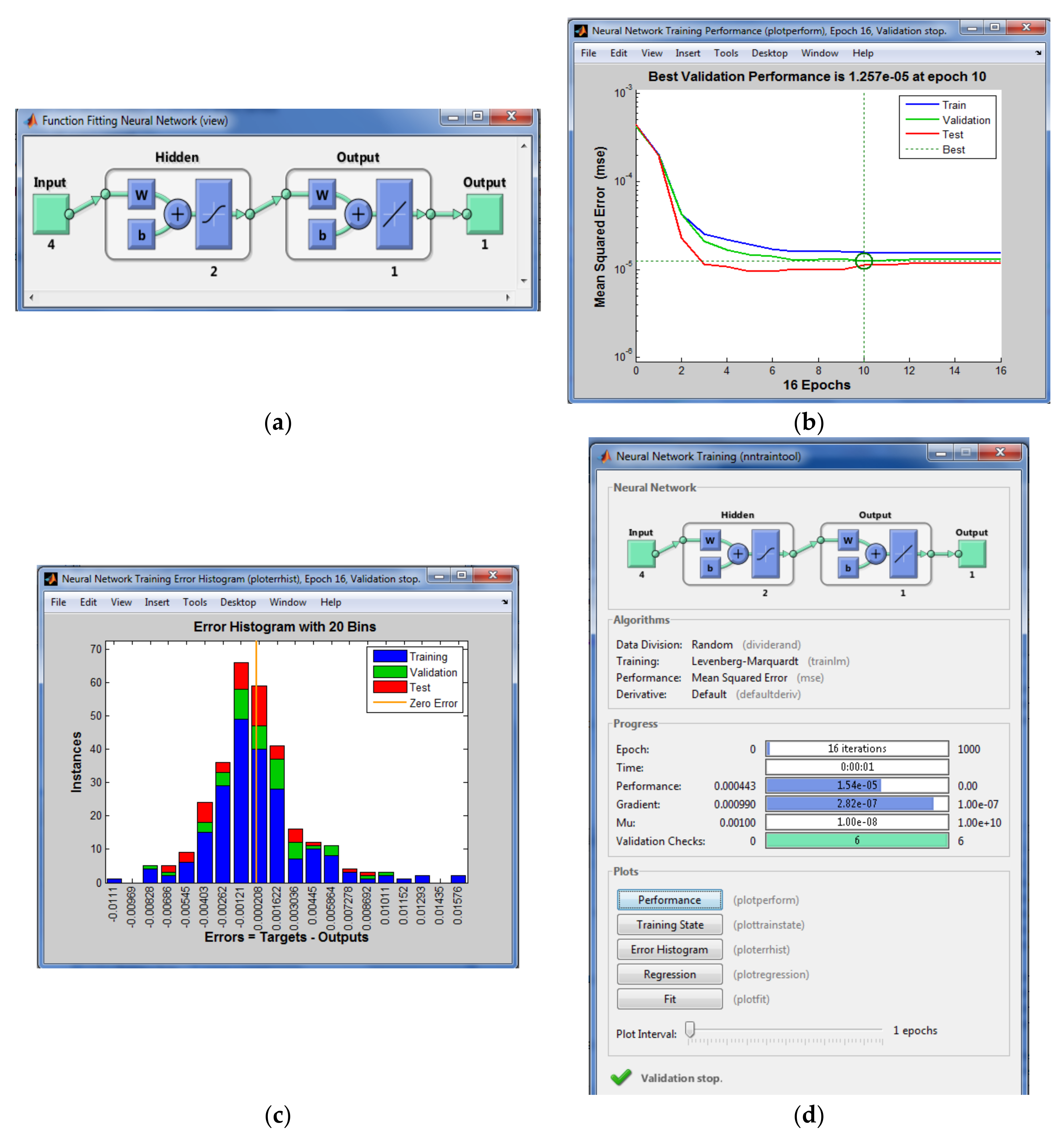

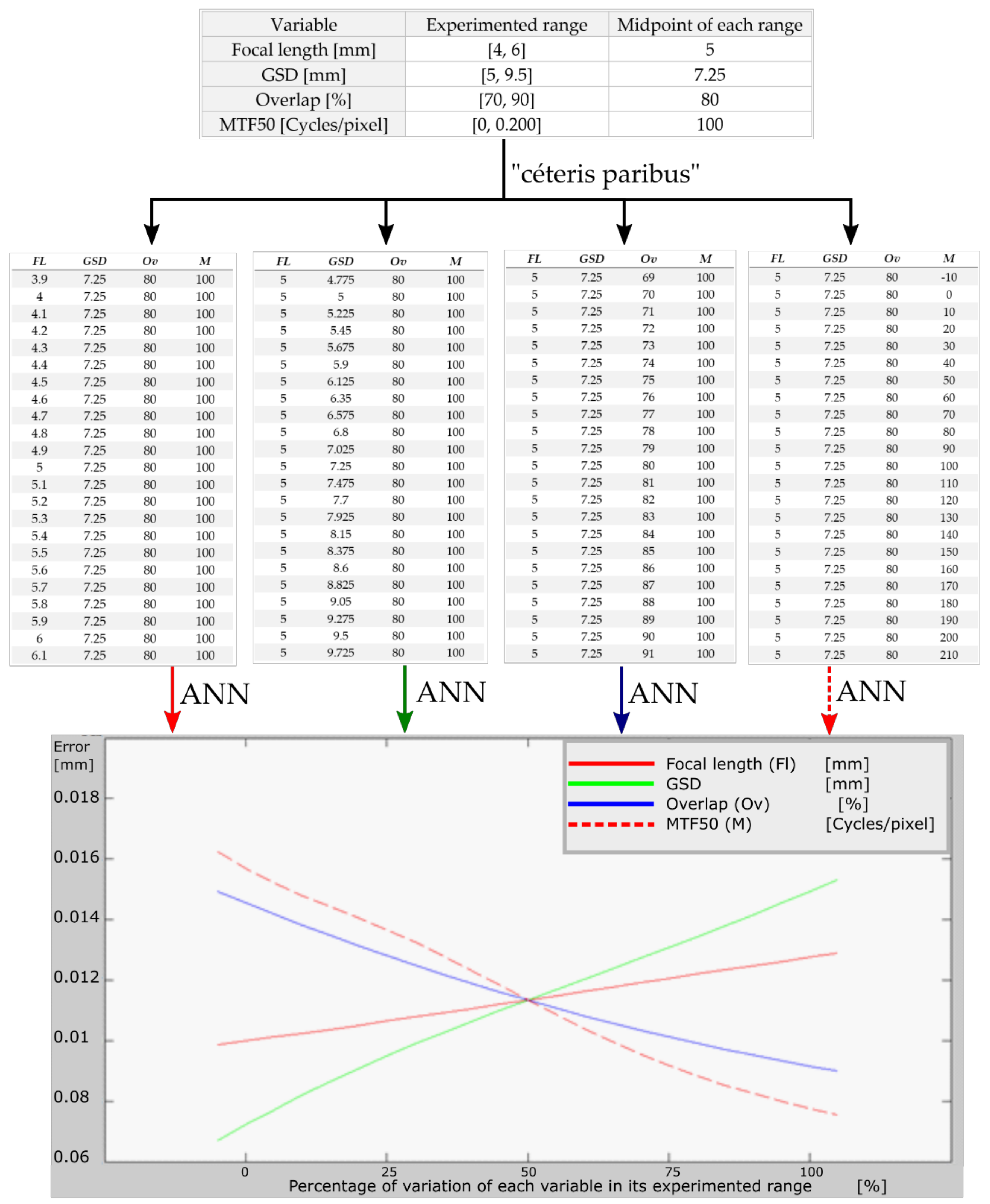

2.5. Setting and Training a Neural Network to Forecast 3D-RMSE of 3D Model from Arbitrary Input Parameters

- Sixty photographic packs were acquired under different conditions, each controlling the variables under study. Each pack generated one PC, giving 60 PCs with 30 GCPs each. The 3D-RMSE of every GCP in every PC was determined and then averaged over groups of 6 GCPs (one group per homogeneous zone), which yielded 5 3D-RMSE samples per flight (that is, per PC).

- The MTF50 of each photo was measured along the x-axis and the y-axis so that their average could be used in the ANN.

- Together, 60 PCs × 5 3D-RMSE samples per PC = 300 samples were available to train, validate, and test the ANN. These were split at random into 210 training samples, 45 validation samples, and 45 testing samples.
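The sample bookkeeping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the per-GCP error values are synthetic placeholders, the GCPs of each PC are assumed to be ordered so that every 6 consecutive GCPs belong to the same homogeneous zone, and the helper names `zone_samples` and `split_indices` are hypothetical.

```python
import numpy as np

N_PC = 60            # point clouds (one per photographic pack)
N_GCP = 30           # ground control points per point cloud
GCPS_PER_ZONE = 6    # GCPs averaged into one sample (homogeneous zone)


def zone_samples(gcp_errors, gcps_per_zone=GCPS_PER_ZONE):
    """Average per-GCP 3D errors over consecutive groups of GCPs.

    Assumes the input is ordered zone by zone (an assumption of this sketch).
    """
    gcp_errors = np.asarray(gcp_errors, dtype=float)
    return gcp_errors.reshape(-1, gcps_per_zone).mean(axis=1)


def split_indices(n_samples, n_train, n_val, rng):
    """Randomly partition sample indices into train / validation / test."""
    order = rng.permutation(n_samples)
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]
    return train, val, test


rng = np.random.default_rng(0)

# Synthetic placeholder errors (metres); real values would come from
# comparing each PC against the surveyed GCP coordinates.
errors = rng.uniform(0.01, 0.05, size=(N_PC, N_GCP))

# 60 PCs x 5 zone averages per PC = 300 samples.
samples = np.concatenate([zone_samples(pc_errors) for pc_errors in errors])

# 210 training, 45 validation, and the remaining 45 testing samples.
train_idx, val_idx, test_idx = split_indices(samples.size, 210, 45, rng)
```

A single random permutation keeps the three subsets disjoint, so no sample can appear in both the training set and the validation or testing sets.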

3. Analysis and Discussion of the Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Flight Mission | Focal Length (mm) | GSD (mm) | Overlap (%) |
|---|---|---|---|
| 1 | 4 | 7 | 90 |
| 2 | 4 | 7 | 80 |
| 3 | 4 | 7 | 70 |
| 4 | 6 | 5 | 90 |
| 5 | 6 | 5 | 80 |
| 6 | 6 | 5 | 70 |
| 7 | 4 | 5 | 90 |
| 8 | 4 | 5 | 80 |
| 9 | 4 | 5 | 70 |
| 10 | 6 | 7 | 90 |
| 11 | 6 | 7 | 80 |
| 12 | 6 | 7 | 70 |
| 13 | 4 | 9.5 | 90 |
| 14 | 4 | 9.5 | 80 |
| 15 | 4 | 9.5 | 70 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

González-Quiñones, J.J.; Reinoso-Gordo, J.F.; León-Robles, C.A.; García-Balboa, J.L.; Ariza-López, F.J. Variables Influencing the Accuracy of 3D Modeling of Existing Roads Using Consumer Cameras in Aerial Photogrammetry. Sensors 2018, 18, 3880. https://doi.org/10.3390/s18113880
