Computerized Ultrasonic Imaging Inspection: From Shallow to Deep Learning

Abstract

1. Introduction

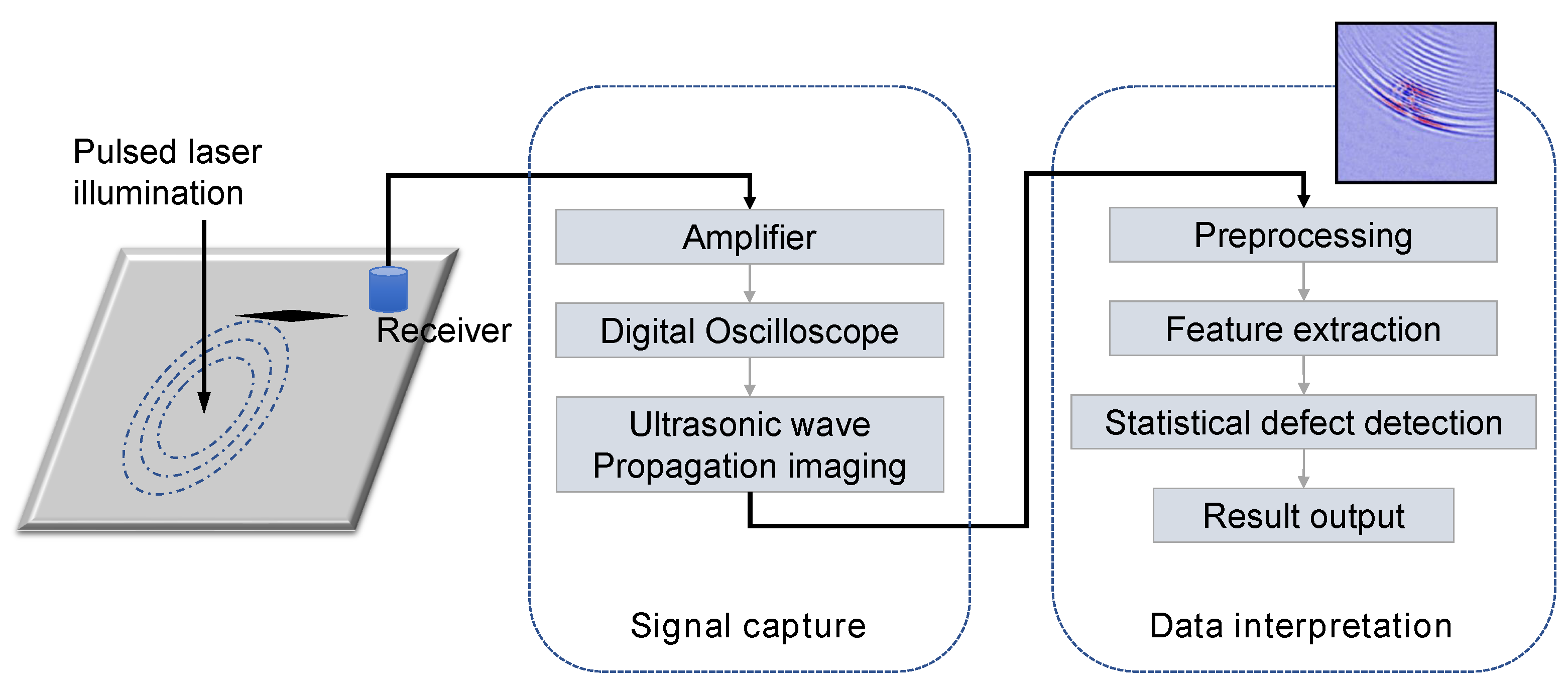

- The objective of this study is to build an efficient automatic ultrasonic image analysis system for non-destructive testing (NDT). To this end, we established an ultrasonic inspection image database consisting of 6849 instances. The whole dataset was carefully annotated with binary labels, 0 denoting normal cases and 1 denoting defective cases. We aim to extract critical visual information with machine learning techniques so as to achieve human-level ultrasonic image understanding.

- Various state-of-the-art computer vision techniques, including efficient visual descriptors [11] and convolutional neural networks (CNNs) [12], have been reviewed and evaluated for ultrasonic echo image pattern classification. Most of these techniques have yet to be applied to ultrasonic image investigation. By presenting side-by-side comparison results, we expect this research to contribute to automatic ultrasonic imaging investigation for NDT by setting a benchmark for future research.

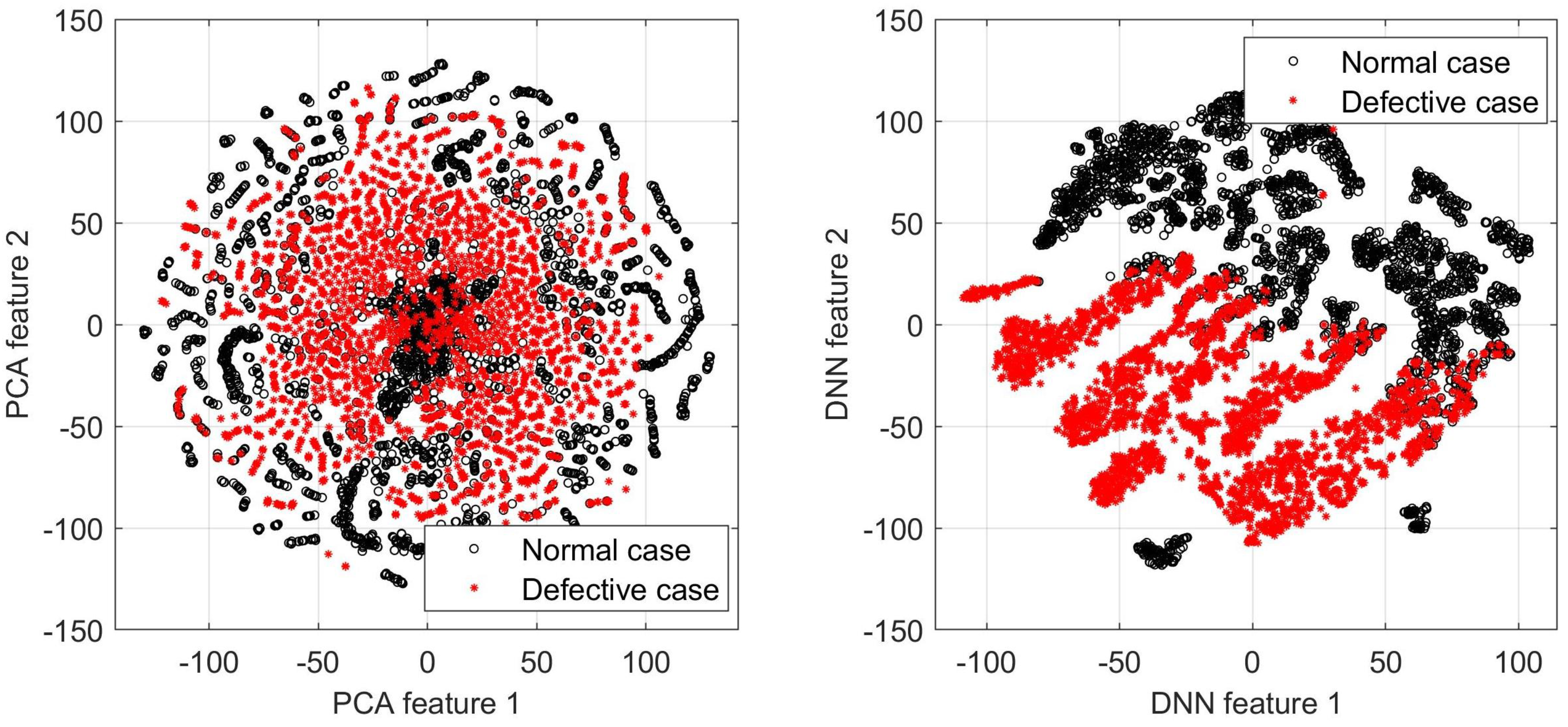

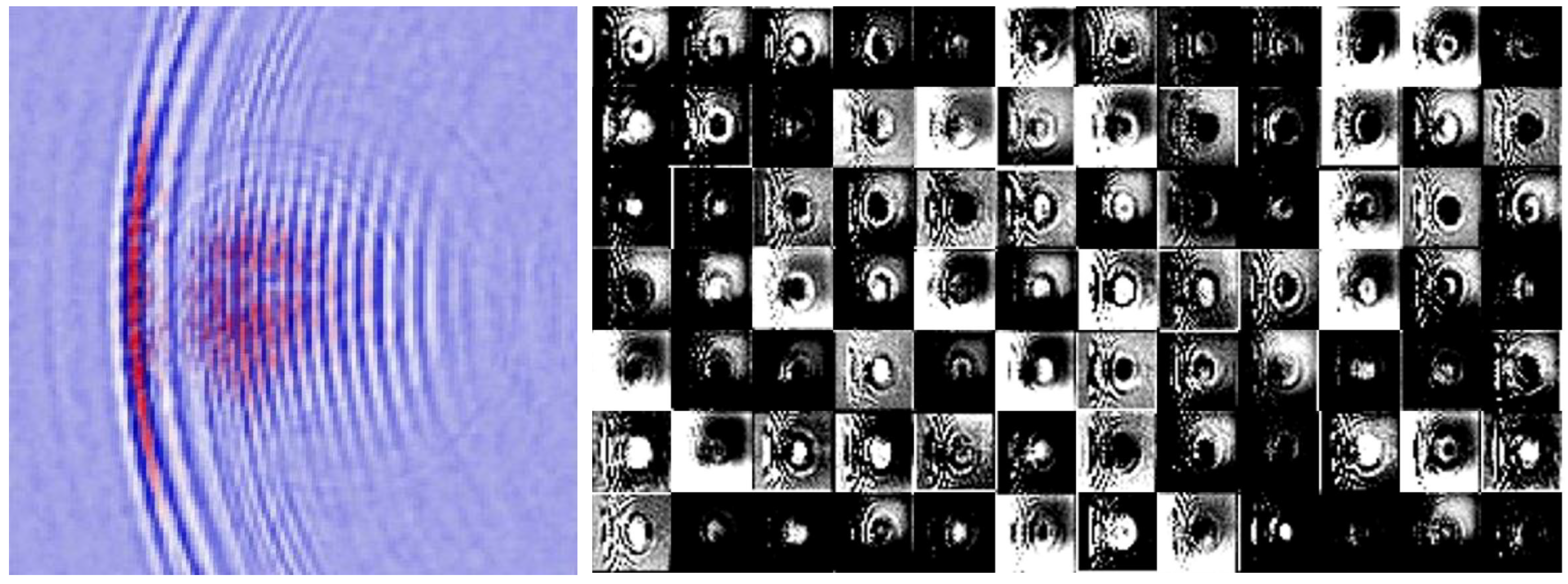

- It is widely acknowledged that the current advance of Artificial Intelligence is largely driven by deep neural networks, which have enabled machines to approach human-level performance on cognitive tasks. However, their weak interpretability is a significant obstacle to applying deep learning in critical applications [13] such as NDT, the focus of this work: it is generally unclear exactly which features a deep network uses to classify an ultrasonic image as healthy or defective. In this study, we conducted extensive experiments to validate CNNs for ultrasonic image analysis. Through visualization of the internal representations learned by the CNNs and an in-depth discussion, we demonstrate that the networks can effectively distill the critical visual patterns that indicate defective ultrasonic images.
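
The "internal representation" of a CNN consists of feature maps, the outputs of its convolutional filters after the activation function. As a toy illustration only (not the networks or the visualization method used in this study), the sketch below applies one hand-set 1 × 2 edge filter to a tiny synthetic image and passes the result through a ReLU. The map is non-zero only where intensity steps up from left to right, which is the kind of localized response that feature-map visualizations display. The helpers `conv2d_valid` and `relu` are hypothetical, and the filter is chosen by hand rather than learned.

```python
from typing import List

Matrix = List[List[float]]

def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding).

    Like CNN libraries, this computes a cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[u][v] * image[i + u][j + v] for u in range(kh) for v in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]

def relu(fmap: Matrix) -> Matrix:
    """Element-wise max(0, x), the usual CNN activation."""
    return [[max(0.0, v) for v in row] for row in fmap]

# Synthetic 3 x 4 image: dark on the left, bright on the right.
image = [[0, 0, 1, 1] for _ in range(3)]
edge_filter = [[-1.0, 1.0]]  # responds to a left-to-right intensity increase

feature_map = relu(conv2d_valid(image, edge_filter))
print(feature_map)  # [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
```

A learned CNN stacks many such filters and layers; visualizing which positions of a feature map respond strongly is what reveals the patterns a network attends to.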

2. Related Work

2.1. Feature Representations for Ultrasonic Signal in NDT

2.2. Ultrasonic Image Classification Using Statistical Machine Learning

3. Computer Vision Frameworks under Evaluation

3.1. Conventional Scheme: Visual Feature Extraction + Statistical Classifier

3.1.1. Feature 1: Local Binary Patterns (LBP)
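
A minimal sketch of the basic 3 × 3 LBP operator, assuming the common textbook formulation: each of the 8 neighbours of a pixel is thresholded against the centre value, the resulting bits form an 8-bit code, and the image is described by the normalized 256-bin histogram of codes. The clockwise neighbour ordering and the `>=` comparison are conventions chosen here; practical variants (circular sampling radii, uniform patterns, rotation invariance, or the exact configuration evaluated in this study) are not shown. `lbp_code` and `lbp_histogram` are hypothetical helper names.

```python
from typing import List

# Neighbour offsets (dy, dx), clockwise from the top-left pixel; bit p corresponds to OFFSETS[p].
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_code(img: List[List[float]], y: int, x: int) -> int:
    """8-bit LBP code of the interior pixel (y, x)."""
    centre = img[y][x]
    code = 0
    for p, (dy, dx) in enumerate(OFFSETS):
        if img[y + dy][x + dx] >= centre:
            code |= 1 << p
    return code

def lbp_histogram(img: List[List[float]]) -> List[float]:
    """Normalized 256-bin histogram of LBP codes over all interior pixels."""
    hist = [0] * 256
    for y in range(1, len(img) - 1):
        for x in range(1, len(img[0]) - 1):
            hist[lbp_code(img, y, x)] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else [0.0] * 256

patch = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
print(lbp_code(patch, 1, 1))  # 120: neighbours 6, 9, 8, 7 are >= 5 (bits 3 to 6)
```

The histogram, rather than the per-pixel codes, is what would be passed to a statistical classifier.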

3.1.2. Feature 2: Histogram of Oriented Gradients (HOG)
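
A simplified HOG sketch, assuming unscaled centred-difference gradients (one-sided at the borders), unsigned orientations in [0°, 180°) split into 9 bins, magnitude-weighted voting into non-overlapping square cells, and a single L2 normalization of the concatenated vector. The standard descriptor additionally interpolates votes between bins and normalizes over overlapping blocks of cells; those steps, and the cell and bin settings actually used in this study, are not reproduced. `hog_descriptor` and its parameters are hypothetical.

```python
import math
from typing import List

def hog_descriptor(img: List[List[float]], cell: int = 8, bins: int = 9) -> List[float]:
    """Simplified HOG: per-cell histograms of unsigned gradient orientation."""
    h, w = len(img), len(img[0])
    bin_width = 180.0 / bins
    n_cy, n_cx = h // cell, w // cell          # pixels beyond the last full cell are ignored
    hist = [[0.0] * bins for _ in range(n_cy * n_cx)]
    for y in range(n_cy * cell):
        for x in range(n_cx * cell):
            # Centred differences, falling back to one-sided differences at the borders.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0   # unsigned orientation
            b = min(int(angle // bin_width), bins - 1)
            hist[(y // cell) * n_cx + (x // cell)][b] += magnitude
    vec = [v for cell_hist in hist for v in cell_hist]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm > 0 else vec

ramp = [[float(x) for x in range(4)] for _ in range(4)]   # intensity grows left to right
print([round(v, 3) for v in hog_descriptor(ramp, cell=4)])  # all weight in the 0-degree bin
```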

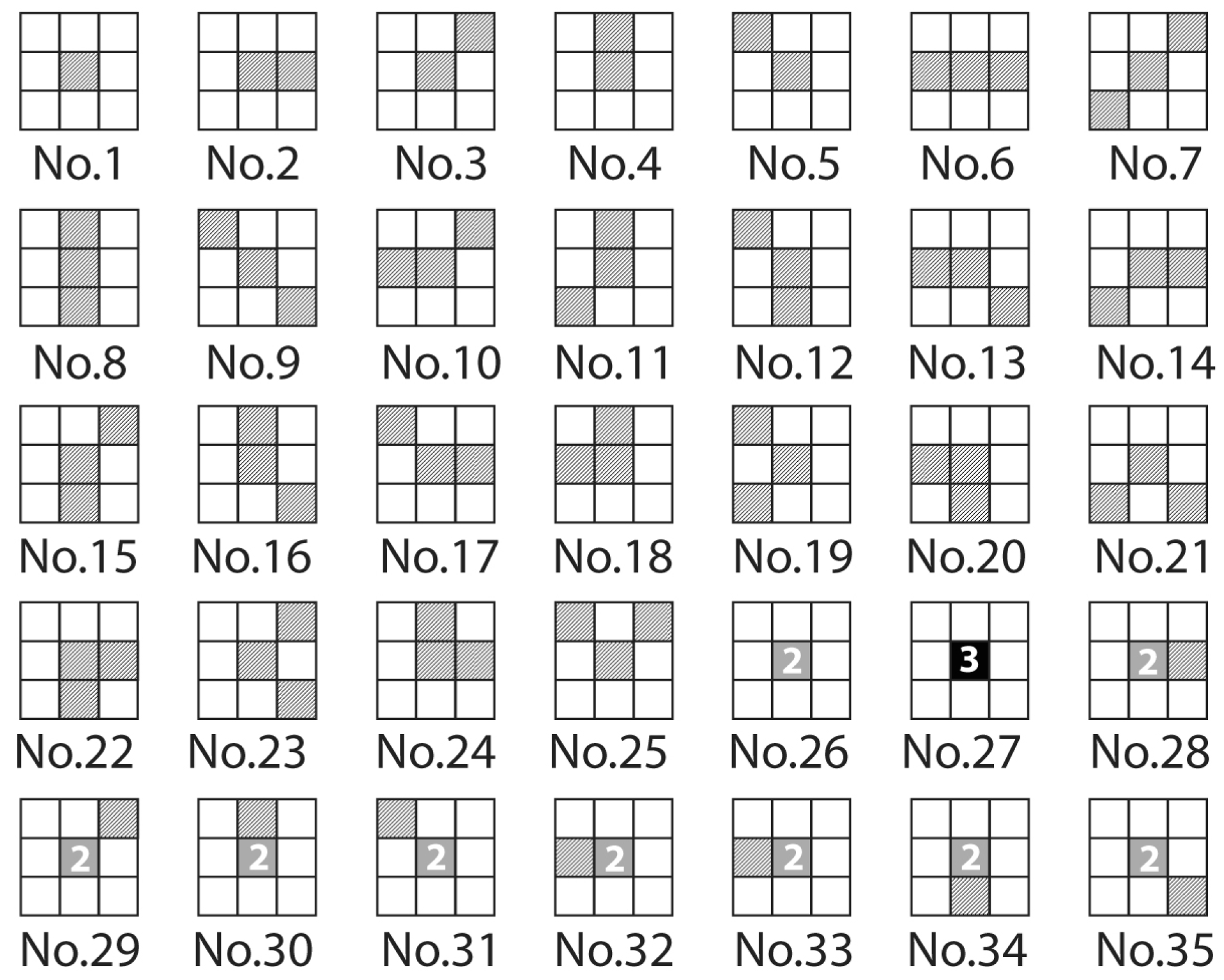

3.1.3. Feature 3: Higher-Order Local Auto-Correlations (HLAC)
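
HLAC features are sums of products of pixel values at fixed displacements, x_N(a_1, …, a_N) = Σ_r f(r) f(r + a_1) ⋯ f(r + a_N), with the displacements restricted to a local 3 × 3 window and the order N at most 2 in the usual formulation. The sketch below computes only the zeroth-order term and the four first-order terms with non-zero displacement (one displacement from each ± pair, because a and −a give the same sum); second-order masks are omitted. The names `hlac_order01` and `FIRST_ORDER` are hypothetical.

```python
from typing import List

# One displacement (dy, dx) from each +/- pair in the 3x3 window:
# sum_r f(r) f(r + a) equals sum_r f(r) f(r - a), so each pair is counted once.
FIRST_ORDER = [(0, 1), (1, -1), (1, 0), (1, 1)]

def hlac_order01(img: List[List[float]]) -> List[float]:
    """Zeroth-order and first-order (non-zero displacement) HLAC terms."""
    h, w = len(img), len(img[0])
    feats = [sum(sum(row) for row in img)]          # N = 0: sum of f(r)
    for dy, dx in FIRST_ORDER:                      # N = 1: sum of f(r) f(r + a)
        s = 0
        for y in range(h):
            for x in range(w):
                yy, xx = y + dy, x + dx
                if 0 <= yy < h and 0 <= xx < w:     # only pairs inside the image
                    s += img[y][x] * img[yy][xx]
        feats.append(s)
    return feats

print(hlac_order01([[1, 1], [1, 1]]))  # [4, 2, 1, 2, 1]
```

Because every term is a sum over all positions r, the features are shift-invariant, which is one reason HLAC is attractive for inspection images where a defect may appear anywhere.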

3.1.4. Feature 4: Gradient Local Auto-Correlations (GLAC)

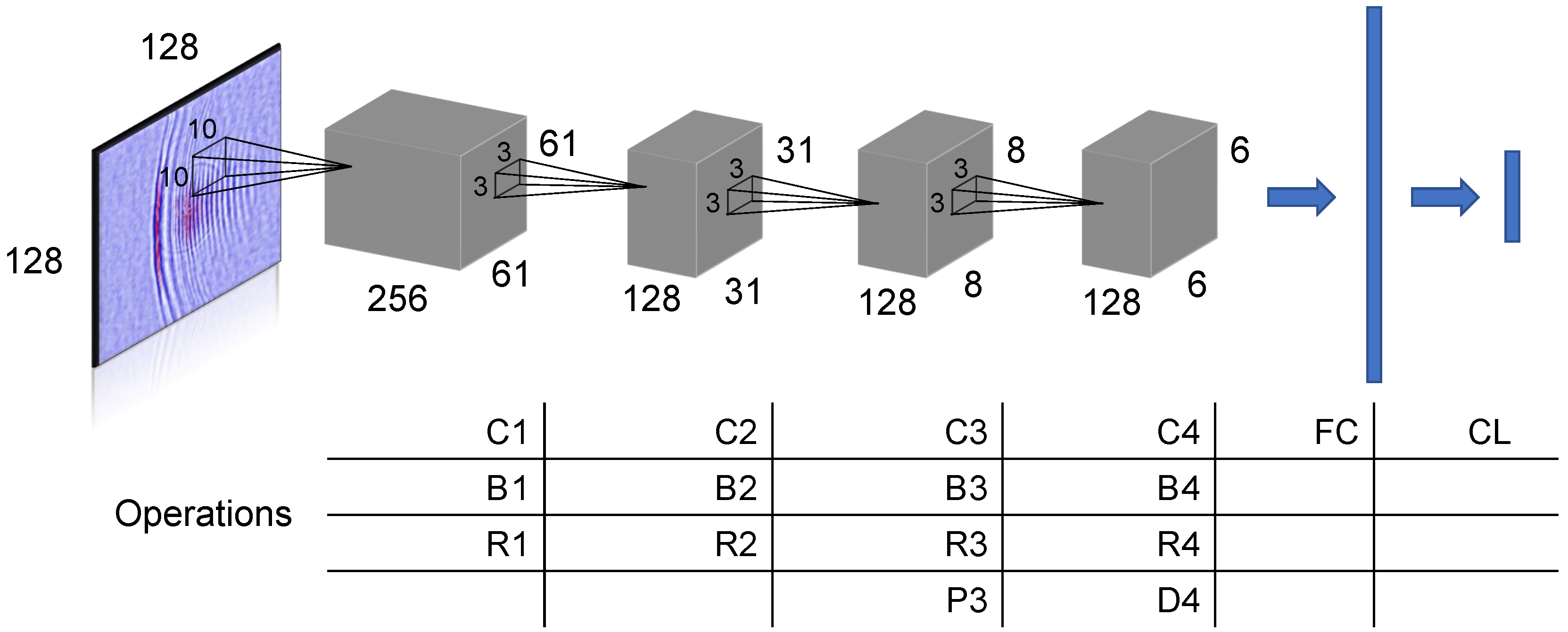

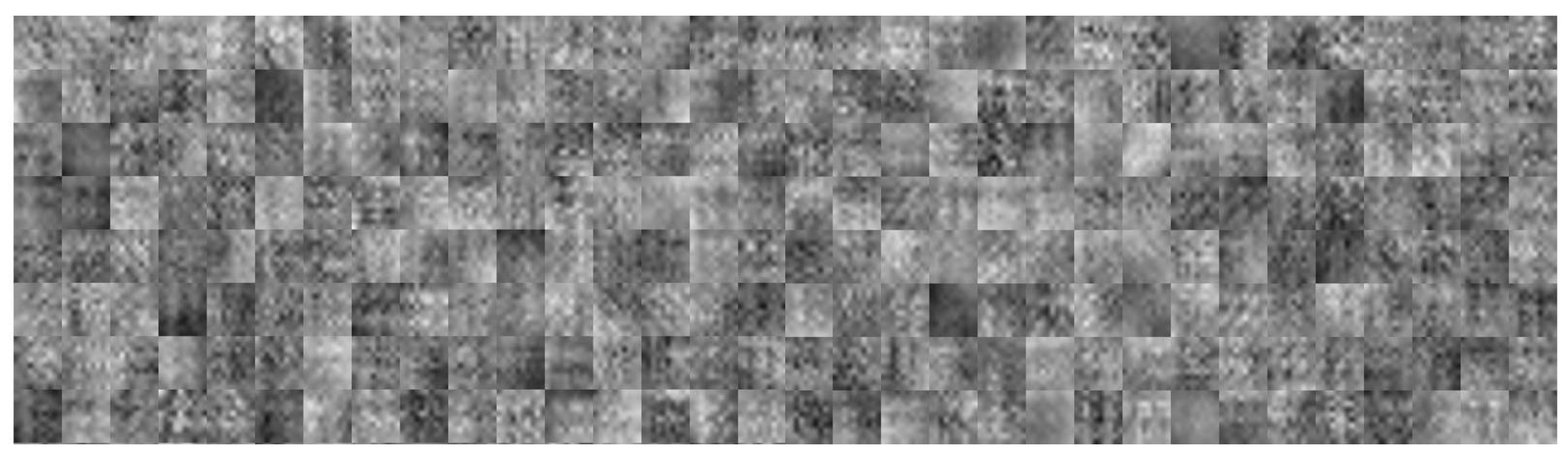

3.2. Ultrasonic Image Investigation with Deep Learning

| Algorithm 1: Train Neural Networks (x,, W) |

| Initialization: W, for return |
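
The training procedure in Algorithm 1 initializes the weights W and then updates them in a loop before returning them. As a hedged, generic sketch of that pattern (not the authors' procedure, and not USseqNet or USresNet), the code below trains a single logistic unit by full-batch gradient descent on binary labels (0 = normal, 1 = defective, matching the dataset annotation). The model, the learning rate `lr`, the epoch count and the helpers `score`, `train` and `predict` are all assumptions; the CNNs in this study have many layers and take images as input, whereas here each sample is a short feature vector.

```python
import math
import random
from typing import List, Sequence

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def score(w: Sequence[float], xi: Sequence[float]) -> float:
    """Linear score w . x + bias, with the bias stored as the last weight."""
    return sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]

def train(x: Sequence[Sequence[float]], y: Sequence[int],
          lr: float = 0.5, epochs: int = 200, seed: int = 0) -> List[float]:
    """Full-batch gradient descent on the mean binary cross-entropy loss."""
    rng = random.Random(seed)
    d, n = len(x[0]), len(x)
    w = [rng.uniform(-0.01, 0.01) for _ in range(d + 1)]   # initialization of W
    for _ in range(epochs):                                 # training loop
        grad = [0.0] * (d + 1)
        for xi, yi in zip(x, y):
            err = sigmoid(score(w, xi)) - yi   # d(loss)/d(score) for cross-entropy
            for j in range(d):
                grad[j] += err * xi[j]
            grad[d] += err                     # bias gradient
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]
    return w                                   # trained W

def predict(w: Sequence[float], xi: Sequence[float]) -> int:
    """1 = defective, 0 = normal (threshold 0.5 on the predicted probability)."""
    return int(sigmoid(score(w, xi)) >= 0.5)

# Toy, linearly separable data with one feature per sample.
x = [[-1.0], [-0.5], [0.5], [1.0]]
y = [0, 0, 1, 1]
w = train(x, y)
print([predict(w, xi) for xi in x])  # [0, 0, 1, 1]
```

In a CNN the same loop applies, but the gradient is obtained by backpropagation through all layers and is usually computed on mini-batches rather than the full dataset.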

3.2.1. Proposed System 1: USseqNet

3.2.2. Proposed System 2: USresNet

4. Experimental Validations

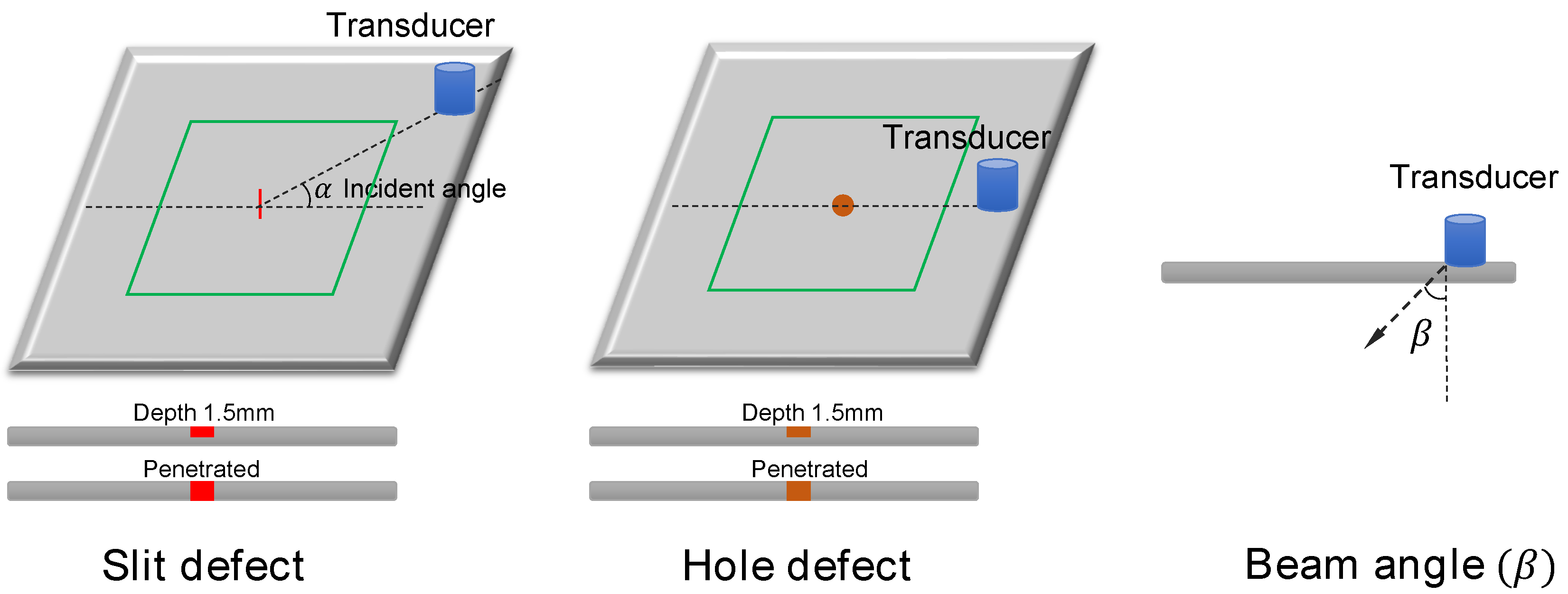

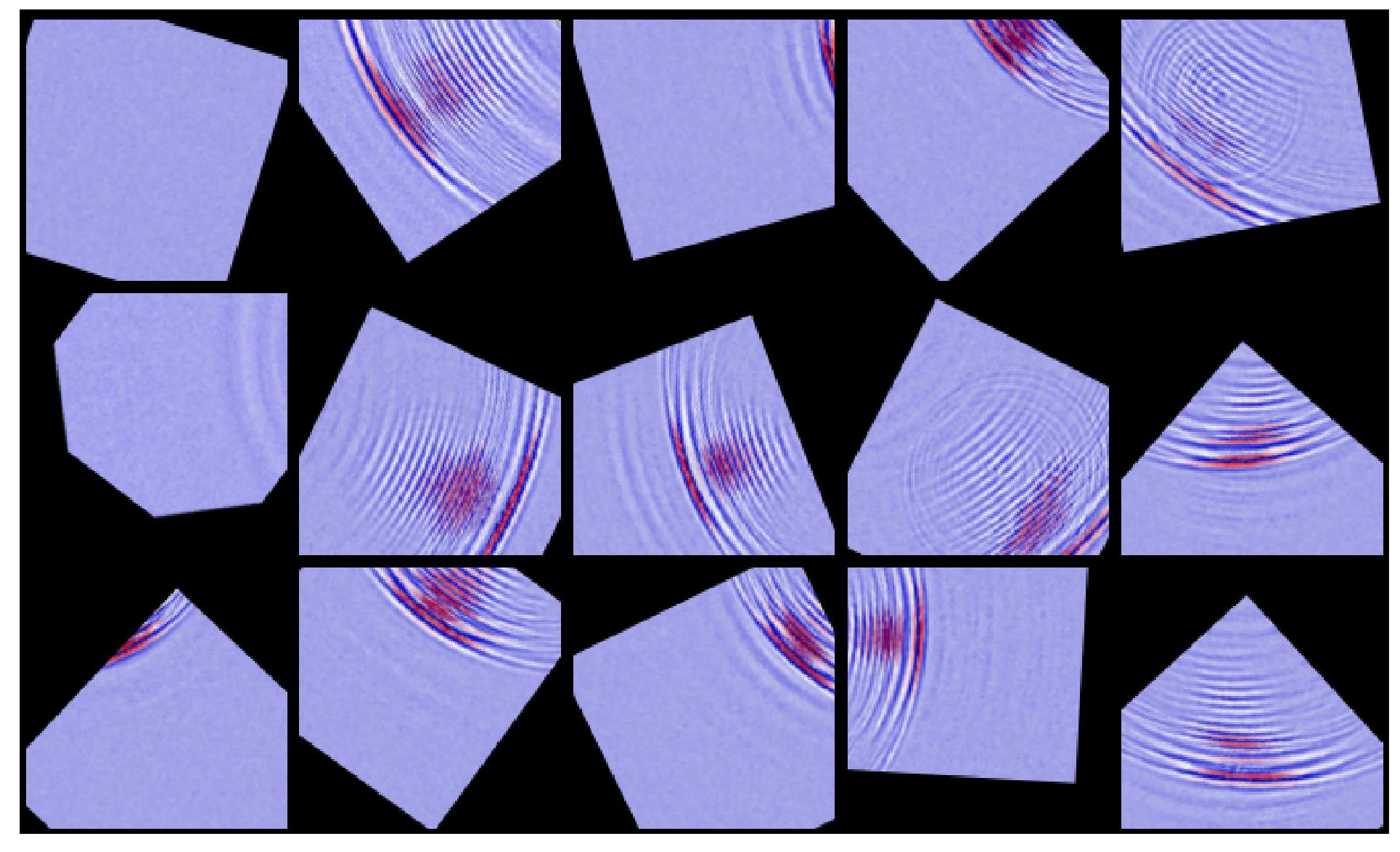

4.1. Ultrasonic Propagation Image Data Collection

4.2. Experimental Settings

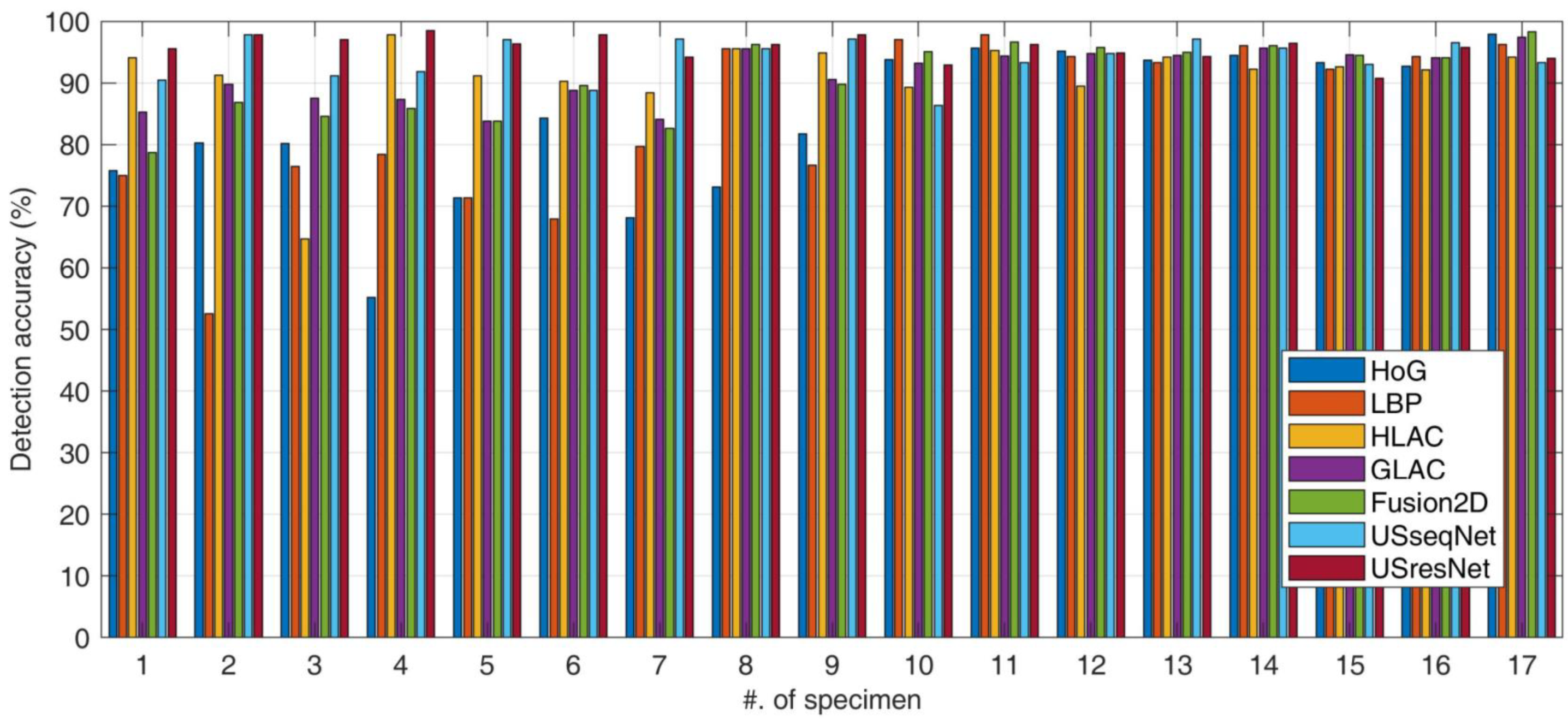

4.3. Empirical Evaluation Results

- True Positive (TP): number of defective images correctly detected as defective.

- True Negative (TN): number of normal images correctly classified as non-defective.

- False Positive (FP): number of normal images incorrectly detected as defective.

- False Negative (FN): number of defective images incorrectly classified as non-defective.
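
These four counts determine the precision, recall, accuracy and F-score reported in the results table. A minimal sketch, assuming the standard definitions and taking the F-score to be F1, the harmonic mean of precision and recall. The function name `classification_scores` is hypothetical, and the counts in the usage line are made-up numbers for illustration, not results from this study.

```python
from typing import Dict

def classification_scores(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Precision, recall, accuracy and F1 from confusion-matrix counts.

    Positive class = defective image, negative class = normal image.
    """
    precision = tp / (tp + fp)           # share of 'defect' detections that are real defects
    recall = tp / (tp + fn)              # share of real defects that are detected
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    f_score = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f_score": f_score}

# Hypothetical counts for a test set of 200 images.
scores = classification_scores(tp=90, tn=85, fp=10, fn=15)
print({k: round(v, 4) for k, v in scores.items()})
# {'precision': 0.9, 'recall': 0.8571, 'accuracy': 0.875, 'f_score': 0.878}
```

In NDT a false negative (a missed defect) is usually the costlier error, so recall is often watched alongside the overall accuracy.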

4.4. Data and Model Visualization

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Chang, P.C.; Flatau, A.; Liu, S.C. Health monitoring of civil infrastructure. Struct. Health Monit. 2003, 2, 257–267.

2. Maierhofer, C.; Reinhardt, H.W.; Dobmann, G. (Eds.) Non-Destructive Evaluation of Reinforced Concrete Structures: Non-Destructive Testing Methods; Elsevier: New York, NY, USA, 2010.

3. Washer, G.A. Developments for the non-destructive evaluation of highway bridges in the USA. NDT E Int. 1998, 31, 245–249.

4. Drinkwater, B.W.; Wilcox, P.D. Ultrasonic arrays for non-destructive evaluation: A review. NDT E Int. 2006, 39, 525–541.

5. Yashiro, S.; Toyama, N.; Takatsubo, J.; Shiraishi, T. Laser-Generation Based Imaging of Ultrasonic Wave Propagation on Welded Steel Plates and Its Application to Defect Detection. Mater. Trans. 2010, 51, 2069–2075.

6. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

7. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

8. Farrar, C.R.; Worden, K. Structural Health Monitoring: A Machine Learning Perspective; John Wiley & Sons: New York, NY, USA, 2012.

9. Margrave, F.W.; Rigas, K.; Bradley, D.A.; Barrowcliffe, P. The use of neural networks in ultrasonic flaw detection. Measurement 1999, 25, 143–154.

10. Ahmed, K.; Redouane, D.; Mohamed, K. 2D Gabor functions and FCMI algorithm for flaws detection in ultrasonic images. World Acad. Sci. Eng. Technol. 2005, 9, 184–188.

11. Nosato, H.; Sakanashi, H.; Murakawa, M.; Higuchi, T.; Otsu, N.; Terai, K.; Hiruta, N.; Kameda, N. Histopathological diagnostic support technology using higher-order local autocorrelation features. In Proceedings of the 2009 Symposium on Bio-Inspired Learning and Intelligent Systems for Security, Edinburgh, UK, 20–21 August 2009; pp. 61–65.

12. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

13. Bengio, Y. Deep Learning of Representations for Unsupervised and Transfer Learning. Available online: http://proceedings.mlr.press/v27/bengio12a/bengio12a.pdf (accessed on 2 November 2018).

14. Ye, J.; Iwata, M.; Takumi, K.; Murakawa, M.; Tetsuya, H.; Kubota, Y.; Yui, T.; Mori, K. Statistical impact-echo analysis based on Grassmann manifold learning: Its preliminary results for concrete condition assessment. In Proceedings of the 7th European Workshop on Structural Health Monitoring (EWSHM), Nantes, France, 8–11 July 2014.

15. Simas Filho, E.F.; Souza, Y.N.; Lopes, J.L.; Farias, C.T.; Albuquerque, M.C. Decision support system for ultrasound inspection of fiber metal laminates using statistical signal processing and neural networks. Ultrasonics 2013, 53, 1104–1111.

16. Simas Filho, E.F.; Silva, M.M., Jr.; Farias, P.C.; Albuquerque, M.C.; Silva, I.C.; Farias, C.T. Flexible decision support system for ultrasound evaluation of fiber–metal laminates implemented in a DSP. NDT E Int. 2016, 79, 38–45.

17. Cruz, F.C.; Simas Filho, E.F.; Albuquerque, M.C.; Silva, I.C.; Farias, C.T.; Gouvêa, L.L. Efficient feature selection for neural network based detection of flaws in steel welded joints using ultrasound testing. Ultrasonics 2017, 73, 1–8.

18. Kesharaju, M.; Nagarajah, R. Feature selection for neural network based defect classification of ceramic components using high frequency ultrasound. Ultrasonics 2015, 62, 271–277.

19. Lawson, S.W.; Parker, G.A. Automatic detection of defects in industrial ultrasound images using a neural network. Available online: https://pdfs.semanticscholar.org/3009/3dc2a6402e14cef4523caa708173d7de1acb.pdf (accessed on 2 November 2018).

20. C’shekhar, N.S.; Zahran, O.; Al-Nuaimy, W. Advanced neural-fuzzy and image processing techniques in the automatic detection and interpretation of weld defects using ultrasonic Time-of-Diffraction. In Proceedings of the 4th International Conference on NDT, Hellenic Society for NDT, Chania, Greece, 11–14 October 2007; pp. 11–14.

21. Kechida, A.; Drai, R.; Guessoum, A. Texture analysis for flaw detection in ultrasonic images. J. Nondestruct. Eval. 2012, 31, 108–116.

22. Liu, C.; Harley, J.B.; Bergés, M.; Greve, D.W.; Oppenheim, I.J. Robust ultrasonic damage detection under complex environmental conditions using singular value decomposition. Ultrasonics 2015, 58, 75–86.

23. Guarneri, G.A.; Junior, F.N.; de Arruda, L.V. Weld Discontinuities Classification Using Principal Component Analysis and Support Vector Machine. Available online: http://www.sbai2013.ufc.br/pdfs/3606.pdf (accessed on 2 November 2018).

24. Harley, J.B. Predictive guided wave models through sparse modal representations. Proc. IEEE 2016, 104, 1604–1619.

25. Chen, C.H.; Lee, G.G. Neural networks for ultrasonic NDE signal classification using time-frequency analysis. In Proceedings of the 1993 IEEE International Conference on Acoustics, Speech, and Signal Processing, Minneapolis, MN, USA, 27–30 April 1993; Volume 1, pp. 493–496.

26. Meng, M.; Chua, Y.J.; Wouterson, E.; Ong, C.P.K. Ultrasonic signal classification and imaging system for composite materials via deep convolutional neural networks. Neurocomputing 2017, 257, 128–135.

27. Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1615–1630.

28. Bishop, C.M. Pattern Recognition and Machine Learning; Springer Science+Business Media: New York, NY, USA, 2006.

29. He, D.C.; Wang, L. Texture unit, texture spectrum, and texture analysis. IEEE Trans. Geosci. Remote Sens. 1990, 28, 509–512.

30. Kobayashi, T.; Ye, J. Acoustic feature extraction by statistics based local binary pattern for environmental sound classification. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 3052–3056.

31. Shinohara, Y.; Otsu, N. Facial expression recognition using fisher weight maps. In Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, Seoul, Korea, 19 May 2004; pp. 499–504.

32. Kobayashi, T.; Otsu, N. Image feature extraction using gradient local auto-correlations. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2008; pp. 346–358.

33. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.E.; Mohamed, A.R.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

34. Hinton, G.E. Neural networks: Tricks of the trade. Lect. Notes Comput. Sci. 2012, 7700, 599–619.

35. Sze, V.; Chen, Y.H.; Yang, T.J.; Emer, J.S. Efficient processing of deep neural networks: A tutorial and survey. Proc. IEEE 2017, 105, 2295–2329.

36. Yashiro, S.; Takatsubo, J.; Miyauchi, H.; Toyama, N. A novel technique for visualizing ultrasonic waves in general solid media by pulsed laser scan. NDT E Int. 2008, 41, 137–144.

37. Yosinski, J.; Clune, J.; Nguyen, A.; Fuchs, T.; Lipson, H. Understanding neural networks through deep visualization. arXiv, 2015; arXiv:1506.06579.

| No. | Item | Setting |
|---|---|---|
| 1 | Probe frequency | 1 MHz |
| 2 | Beam angle | 90° |
| 3 | Pulse repetition frequency | 500 Hz |
| 4 | Incident angle | 0°, 22.5°, 45°, 67.5°, 90° |

| Specimen No. | Flaw Type | Depth | Transducer Side | Defect Size |
|---|---|---|---|---|
| 1–3 | Hole | Penetrated | Front | 1 / 3 / 5 mm |
| 4–6 | Hole | 1.5 mm | Front | 1 / 3 / 5 mm |
| 7–9 | Hole | 1.5 mm | Back | 1 / 3 / 5 mm |
| 10–11 | Slit | Penetrated | Front | 5 / 10 mm |
| 12–14 | Slit | 1.5 mm | Front | 3 / 5 / 10 mm |
| 15–17 | Slit | 1.5 mm | Back | 3 / 5 / 10 mm |

| No. | Type | Parameter Sample Range |
|---|---|---|
| 1 | Reflection | X-axis, Y-axis |
| 2 | Rotation angle | [−20, 20] degrees |
| 3 | Scaling | [0.5, 1] |
| 4 | Translation | X: [−50, 10], Y: [−10, 50] |
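
The ranges in the table above can be turned into a random augmentation sampler. A minimal sketch under several assumptions the table does not state: each reflection is applied independently with probability 0.5; "X-axis" reflection is taken to mean a left-right mirror (negating x) and "Y-axis" an up-down mirror (negating y); the continuous parameters are drawn uniformly; translations are in pixels; and the transforms are composed as reflection, rotation, scaling, then translation, about the coordinate origin (an image pipeline would normally rotate and scale about the image centre). `sample_augmentation`, `affine_matrix` and `transform_point` are hypothetical helpers.

```python
import math
import random
from typing import List, Tuple

# Sampling ranges from the augmentation table (translation units assumed to be pixels).
ROTATION_DEG = (-20.0, 20.0)
SCALE = (0.5, 1.0)
TRANS_X = (-50.0, 10.0)
TRANS_Y = (-10.0, 50.0)

def sample_augmentation(rng: random.Random) -> dict:
    """Draw one random set of augmentation parameters."""
    return {
        "flip_x": rng.random() < 0.5,   # assumed: left-right mirror with probability 0.5
        "flip_y": rng.random() < 0.5,   # assumed: up-down mirror with probability 0.5
        "angle": rng.uniform(*ROTATION_DEG),
        "scale": rng.uniform(*SCALE),
        "tx": rng.uniform(*TRANS_X),
        "ty": rng.uniform(*TRANS_Y),
    }

def affine_matrix(p: dict) -> List[List[float]]:
    """2x3 matrix: reflect, rotate, scale (about the origin), then translate."""
    sx = -1.0 if p["flip_x"] else 1.0
    sy = -1.0 if p["flip_y"] else 1.0
    t = math.radians(p["angle"])
    c, s = math.cos(t), math.sin(t)
    k = p["scale"]
    # Linear part = k * R(t) * diag(sx, sy); last column = translation.
    return [[k * c * sx, -k * s * sy, p["tx"]],
            [k * s * sx, k * c * sy, p["ty"]]]

def transform_point(m: List[List[float]], x: float, y: float) -> Tuple[float, float]:
    """Apply the 2x3 affine matrix to the point (x, y)."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])

rng = random.Random(42)
params = sample_augmentation(rng)
print(params, transform_point(affine_matrix(params), 10.0, 20.0))
```

Returning one affine matrix per sample keeps the sampled parameters separate from the resampling step, so the same matrix could be handed to whatever image-warping routine a pipeline uses.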

| | HOG | LBP | HLAC | GLAC | USseqNet | USresNet | |
|---|---|---|---|---|---|---|---|
| Precision (%) | 89.27 | 89.95 | 91.43 | 96.10 | 95.98 | 95.28 | 93.98 |
| Recall (%) | 92.70 | 92.73 | 92.44 | 90.75 | 91.82 | 91.46 | 95.67 |
| Accuracy (%) | 90.80 | 91.20 | 91.90 | 93.54 | 94.00 | 93.48 | 94.73 |
| F-score (%) | 90.95 | 91.32 | 91.93 | 93.35 | 93.86 | 93.33 | 94.47 |
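
For the four hand-crafted-feature columns (HOG, LBP, HLAC, GLAC), the reported F-score agrees to within 0.01 percentage points with F1 = 2PR/(P + R) computed from the reported precision P and recall R, which suggests the F-score in this table is F1. A short check, with the values copied from the table (the dictionary name `REPORTED` and the helper `f1` are illustrative):

```python
# (precision %, recall %, reported F-score %) copied from the results table.
REPORTED = {
    "HOG": (89.27, 92.70, 90.95),
    "LBP": (89.95, 92.73, 91.32),
    "HLAC": (91.43, 92.44, 91.93),
    "GLAC": (96.10, 90.75, 93.35),
}

def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2 * precision * recall / (precision + recall)

for name, (p, r, reported) in REPORTED.items():
    print(f"{name}: F1 from P and R = {f1(p, r):.2f}, reported = {reported:.2f}")
```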

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ye, J.; Ito, S.; Toyama, N. Computerized Ultrasonic Imaging Inspection: From Shallow to Deep Learning. Sensors 2018, 18, 3820. https://doi.org/10.3390/s18113820
