Abstract

This work considers using camera sensors to detect fire smoke. Static features including texture, wavelet, color, edge orientation histogram, irregularity, and dynamic features including motion direction, change of motion direction and motion speed, are extracted from fire smoke to train and test with different combinations. A robust AdaBoost (RAB) classifier is proposed to improve training and classification accuracy. Extensive experiments on well known challenging datasets and application for fire smoke detection demonstrate that the proposed fire smoke detector leads to a satisfactory performance.

1. Introduction

Detection fire smoke at the early stage has drawn a lot of attentions recently due to its importance to social security and economic development. Conventional point fire smoke detector sensors are effective for indoor applications, but they have difficulties to detect smoke in large outdoor areas, it is because point fire smoke detector typically detect the presence of certain particles generated by smoke and fire by ionization [1], photometry [2], or smoke temperature [3,4]. They require a close proximity to fire and smoke, which are not effective for open spaces. For particles to reach these sensors to activate alarms, many sensors are needed to cover a large area which is not cost effective.

Video based fire smoke detection using cameras is of great interest in large and open spaces [5]. Closed circuit television (CCTV) surveillance systems are widely installed in many public areas to date. These systems can be used to provide early fire smoke detection if a reliable fire detection software is installed in the system. Gottuk et al. [6] test three commercially available video based fire detection systems against conventional spot systems in a shipboard scenario. Video based systems are found to be more effective in flame detection. These systems are economically viable as CCTV cameras are already available for traffic monitoring [7] and surveillance [8] applications. Braovic, M et al. [9] propose an expert system for fast segmentation and classification of regions on natural landscape images that is suitable for real-time automatic wildfire monitoring and surveillance systems. It is noted that smoke is always visible before fire in most outdoor scenarios. This motivates us to research on detecting smoke in the absence or presence of flame from a single frame of video.

There are some technical challenges in video based fire smoke detection. First, it is observed to be inferior to particle-sampling based detectors in terms of false alarm rate. It is mainly due to the variability in smoke density, scene illumination, interfering objects. Second smoke and fire are difficult to be modeled, most of the existing image processing methods do not characterize smoke well [10]. Current fire detection algorithms are based on the use of static and motion information in video to detect flames [11,12,13,14,15]. Many efforts have been made to reduce the false alarm rate and missing detection rate. Table 1 shows some static and dynamic features used in these approaches. As shown, the most used features are color, texture, energy and dynamic features.

Table 1.

Literatures about fire smoke feature extraction.

On the other side, for classification, some researchers [15,16,18,19,20,21,22] use thresholds or parameters from analysis of extracted features, which is less time-consuming but not adaptive in some complex environments. Some other researchers [30,31] use well known AdaBoost to training models and classify. AdaBoost based component classifiers can solve the overfitting problem relatively with high precision, one weak classifier of component classifiers is a learner which can return a hypothesis that roughly outperform random guessing. For the component classifiers, the weights updates of them in every step mainly depend on the last errors,

gives the weights of component classifiers, and is the errors. Definitely, the weights should be positive, so the error is required to be less than 0.5, and AdaBoost also requires the error not much less than 0.5, so that the boosting function can make sense of these cascade classifiers. Therefore, we must select a series of proper base classifiers and set a best parameter for every base classifier prudently and it is also time-consuming.

Deep learning is also considered for smoke fire detection. In [32], a binary classifier is trained using annotated patches from scratch, second, learning and classification using cascade convolutional neural network (CNN) fire detector. Muhammad et al. [33] propose an adaptive prioritization mechanism for fire smoke detection. CNN and the internet of multimedia things (IoMT) for disaster management are used for early fire detection framework. Through deep learning, features can be learned automatically. However, these deep learning methods for fire smoke detection are still limited by learning static features. Wu et al. [34] combine deep learning method and conventional feature extraction method to recognize the fire smoke areas. CNN is used in Caffe framework to achieve a Caffemodel with static features. This deep learning approach can work well to certain extent, but the dynamic feature is not trained directly for most situations.

As for fire smoke classification, thresholds or parameters from extracted features are usually used in classification which is less time-consuming. However, it is not adaptive to deal with complex environments [15,16,18,19,20,21,22]. SVM and AdaBoost have been considered for classifier in [23,24,25,26,27,28,29]. SVM can achieve good accuracy with a sufficiently large training set. Some kernel functions can make it possible to solve the nonlinear problem in high dimensional space [35,36,37,38]. One popular kernel used in SVM is RBF (RBFSVM). RBFSVM determines the number and location of the centers and the weight values automatically [39]. It uses a regularization parameter, C, to balance the model complexity and accuracy. Another parameter known as Gaussian width, , can be used to avoid the over-fitting problem. However, it is difficult to select proper values of C and . AdaBoost based component classifiers can solve the overfitting problem with relatively high precision. One weak classifier of component classifiers is a learner which can return a hypothesis that roughly outperform random guessing.

In this paper, static features, include texture, wavelet, color, hog, irregularity, and dynamic features, include motion direction, change of motion direction and motion speed, are extracted and trained with different combinations. A robust AdaBoost (RAB) classifier is designed for detection. It can overcome the weights update problem and solve the dilemma between high accuracy and over-fitting. RBFSVM (Radial Basic Function—Support Vector Machine) [35] is chosen as the base classifier with some improvements made to guarantee the validity and accuracy of the boosting function. An effective algorithm for locating the original fire position is introduced in this paper for practical fire smoke detection.

The remainder of this article is organized as follows: Video based fire smoke detection using camera are described in Section 2, including static feature in Section 2.1 and dynamic feature extraction in Section 2.2. The proposed Robust AdaBoost classifier for fire smoke detection is presented in Section 3. Performance of the proposed method are evaluated by extensive experiments in Section 4. The paper is concluded in Section 5.

2. Features Extraction

Fire and smoke training data usually come from pre-collected image datasets [40]. Due to the difficulty in collecting this type of data, these sets are limited and most of them include other random information besides fire smoke as shown in Figure 1. In [29,34], image data sets are used for static feature extraction and set threshold values for classifying dynamic features in real-time video detection. Although this approach can work well to some extent, it is difficult to set a single threshold value that works for situation.

Figure 1.

(a–d) show the fire and smoke image data sets with none-smoke objects.

In this paper, different smoke and non-smoke videos taken from camera are used as training samples. Motion regions of all video sequences are used for static feature extraction, and dynamic features are extracted depending on the correlations among successive frames. These training videos are collected and preprocessed so that the videos contain only fire smoke moving objects.

Robust Orthonormal Subspace Learning (ROSL) [41] method is used to segment foregrounds from images.

where M denotes the observed matrix of video, A is the low-rank background matrix, and M is the foreground matrix. This method represents A under the ordinary orthonormal subspace , where coefficients . The dimension k of the subspace is set as ( is a constant and ). E is the sparse matrix which represents foregrounds. Foreground segmentation results are shown in Figure 2. Motion regions are selected frame by frame. In order to choose the exact sample data sets that we need, tiny motion regions which may be created by minute jitter or too big ones should be eliminated.

Figure 2.

Foreground segmentation.

2.1. Static Features Extraction

Color, texture and energy in wavelet domain are three commonly used static features as shown in Table 1. Here we consider three other static features including Edge Orientation Histogram (EOH) [42], irregularity and sparsity, and three dynamic features including motion direction, change of motion direction and motion speed.

2.1.1. Color, Texture and Energy

Color moments are measures that can be used to differentiate images. Stricker and Orengo [43] propose three central moments of an image color distribution such as mean, standard deviation and skewness. Here we use HSV (Hue, Saturation, and Value) to define three moments for each three color channels: mean, standard deviation and skewness of the ith color channel at the jth image pixel :

LBP feature has been used to capture spatial characteristics of an image as reported in [22,44]. The LBP features are extracted as texture descriptor of smoke in this study. When smoke is found in a region, the edge of background will be blurred so that the high frequency energy of this region will decrease. Gabor wavelet is used to get three high frequency components in horizontal (HL), vertical (LH) and diagonal (HH) directions. The energy of image in region is defined as

where is the energy of image in region and is high frequency wavelet coefficients of pixel at in region and is the entire wavelet coefficients of the same pixel. We have

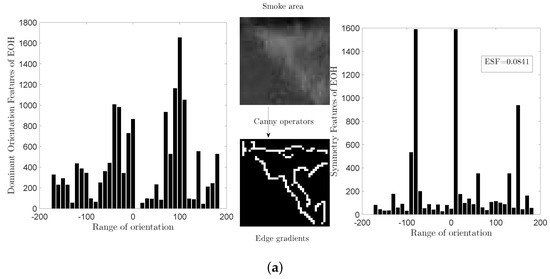

2.1.2. Edge Orientation Histogram

Gradients of an image plays an important role in object detection. The Scale Invariant Feature Transform (SIFT) [45] achieves an impressive performance on image registration and object detection. As a variant of SIFT, Histograms of Oriented Gradients (HOG) [46] are used for human detection. HOG can capture local gradient-orientation structures within an image but it is computed on a dense grid of uniform space over an image. Therefore, a high dimensional feature vector is produced to describe each detection window, which is not suitable for real-time application like fire smoke detection. Levi and Weiss [42] propose the Edge Orientation Histogram (EOH) method which measures the response of linear edge detectors at different subareas of the input image.

In this paper, we use Canny operator to generate image edge and the gradient which contains horizontal component and vertical component . The edge gradient is then converted into polar coordinate. That is

where m is the magnitude and denotes the orientation.

The edge orientations are mapped to the range with an interval of 10°. The numbers of edge orientation in these 36 angle ranges are counted using two angle threshold matrices as and ,

For each angle range , the EOH is obtained by summing all the gradient magnitudes whose orientations belongs to this range,

The EOH features can be extracted with two different methods- Dominant Orientation Features (DOF) and Symmetry Features (SF) [42]. When we try to find the dominant edge orientation in a specific area rather than the ratio between two different orientations, we define a slightly different set of features which measures the ratio between a single orientation and the others. That is

where records the ratio of every single orientation from to , is a tiny positive value to avoid division by zero. According to Equation (7), the domain orientation is located in .

According to [42], we define the symmetry features in as

To normalize this feature, we reset it as

where , and are rectangles of the same size and are positioned at opposite sides of the symmetry axes. Not only the symmetry features can be used to find symmetry, but it can also find places where symmetry is absent. From Equation (9), a small means that the image orients more in compared to its opposite side range.

The symmetry value of the entire detected region is computed as

is small when the detected motion region is symmetric.

Figure 3 shows the two different EOH features ( and ) of smoke and car images. In this paper, we concatenate these two EOH features to characterize the edge features.

Figure 3.

Histogram of Edge Orientation: Left figure of (a,b): Dominant Orientation Features. Right figure of (a,b): Symmetry Features.

2.1.3. Irregularity and Sparsity

Fire smoke have no regular shapes in diffusion, this irregularity feature is defined as

where is the irregularity, c is the edge perimeter of detected region and s is the area.

For the sparsity feature, it is mainly for light smoke as described in [22]. We extract the sparse foreground with ROSL [41], and E in Equation (2) is the foreground matrix. Figure 4 shows the extracted sparse smoke without backgrounds.

Figure 4.

Sparsity of foreground.

The sparsity value is calculated as

where is the matrix of region M, is the size of . Figure 4 shows the foreground motion region. The norm counts the numbers of non-zero pixels in foreground.

2.2. Dynamic Features Extraction

Fire smoke has special dynamic features that provides information for recognition. Motion direction, change of direction and motion speed are extracted as three dynamic features. Before extracting these features, we need to find the locations of the detected motion region in consecutive frames. The centroid of current motion region is first calculated. Because a moving object in two adjacent frames has similar location and shape, these two motion regions have maximum overlap ratio and the shortest distance. Figure 5 shows the mechanism of finding the locations of these two corresponding motion regions. In Figure 5, A, B and C are the centroid of three successive frames, A1 and C1 are the two overlapping regions, region A has the maximum overlap ratio computed with current detected region B for the last frame while region C has the maximum overlapping ratio computed with current detected region B for the next frame, the overlapping ratio is calculated as

where represents the area of each region shown in the figure.

Figure 5.

Overlapping ratio computing.

From Figure 5, motion region B is detected moving from A to C in three consecutive frames. Hence, its motion direction can be calculated with the orientation of and . Define the horizontal component of as and the vertical component of as , the motion direction of region B is calculated as

As smoke moves slowly and its motion is not obvious in adjacent two frames, we use the statistic measurement to extract the motion features by calculating the mean values of corresponding motion region directions from and instead of that in adjacent two frames. The final motion direction feature of one motion region in frame is defined by

We set the frame interval as . Each motion region can be calculated by following the scheme above ( in the first four frames and last four frames cannot be calculated), such that we can also obtain one motion direction change in frame

Another dynamic feature is called motion speed, while we can easily achieve the motion speed with the distance of two corresponding motion regions in consecutive two frames as

where is the frame rate, the motion speed from to is calculated as

Combining different features can enhance the robustness of features. According to [24], features can be concatenated together to form a feature vector in cascade fusion as follows:

where represents every single features of one image.

Figure 6 shows the framework of fire smoke detection in this paper including motion region detection, feature extraction and samples training and fire smoke classification. As for the classifier, we propose a robust AdaBoost method in the following section.

Figure 6.

Framework of fire smoke detector.

3. The Proposed Robust AdaBoost Classifier for Fire Smoke Detection

3.1. AdaBoost

Given a set of samples , , represents the input space, and , is the label. The goal of AdaBoost is to train a series of weak classifiers to be a stronger one. Boosting algorithms improve the performance of a learning algorithm by calling a weak learner in a succession of cycles, in each cycle, it maintains a weight distribution over the weak learner with input set S and returns a hypothesis . The weight distribution is initially set uniform as ,

We use the distribution to train the base classifier with training dataset and get errors ,

The weight of weak classifier can be achieved with error by , when , , and increases with the decrease of . That is, the smaller the classification error is, the more important the role of this weak classifier is of the component classifiers. For the next cycle, the weights of samples are updated as

where is the normalization factor. This distribution shows that the weights of the misclassified samples will be increased and the weight of the correctly classified samples will be reduced. In this way, AdaBoost will be forced to pay its attention to the samples that have been misclassified in the next cycle.

Combine all component weak classifiers,

where M is the total number of cycles. The final classifier is defined as

AdaBoost calls this algorithm repeatedly in a series cycles and can get a lower training error with a higher accuracy.

3.2. Proposed Robust AdaBoost

One important theoretical property of AdaBoost is that component classifiers need to be only slightly better than random guessing, the weight will be negative if and be larger than 1 when , that means we should spend time on selecting moderate parameter so that the error created by this weak classifier is slightly less than 0.5, otherwise, the classifier with classification error slightly more than 0.5 will be discarded. When the weak classifier error is less than 0.1, its weight will be relatively high, it can just take control of errors in a tiny limited range. However, AdaBoost treat this limited controlled errors as the only key for updating weights. Either too strong or too weak a classifier will not satisfy the boosting requirements. The weight calculation of base classifier is modified as

As ,, . Figure 7 shows these two weight computing methods of conventional AdaBoost and robust AdaBoost in this paper. also decrease with an increasing . No matter how weak or strong the base classifier is, it can achieve its corresponding weight. The stronger the base classifier is, the more important it will be. While n is large enough, the weight of the base classifier that slightly outperforms random guessing () will almost equal to zero. When approaches to zero, the weight approaches to zero. Therefore, our proposed method is consistent with boosting theory.

Figure 7.

Base classifier weights update.

AdaBoost can decrease its training errors through its learning. Its error upper bound is given by

when , , . According to Equations (30), (31) and (35), the error upper bound can be calculated as

The error upper bound of the component classifiers is . It means that we can minimize by selecting moderate base classifier in every cycle, and make the training errors decrease moderately.

When it comes to binary classification, Equation (32) can be written as

For the proposed Robust AdaBoost,

Figure 8 shows the different changes with different errors of conventional AdaBoost and the proposed robust AdaBoost (n = 1, 3, 6, 9, 20, 100). Definitely, the goal of boosting is to achieve a series base classifiers with lower errors. When we focus on the with error less than 0.5, it is clear that the proposed robust AdaBoost is consistent with the boosting function, Furthermore, it is also appropriate for the entire domain of definition [0,1], which is more robust than conventional AdaBoost.

Figure 8.

Weights normalization values of AdaBoost.

3.3. Feasibility Analysis for Choosing RBFSVM as Base Classifier

This paper aims at applying RBFSVM as component classifier in boosting. There are two vital parameters, Gaussian width and regularization parameter C, that are relevant to classification performance. The classification performance of RBFSVM can mainly depend on value with a roughly suitable C [47]. We can adjust RBFSVM to be an appropriate base classifier of boosting component classifiers by simply changing over a large range of C.

In [48], uses a large initial value and decreases it slightly to increase the learning capacity of RBFSVM so that its error is less than 50%. In this paper, instead of adjusting errors to be less than 0.5, we focus on how to make these classifiers uncorrelated and improve the performance of every base classifier. Because of the robustness described in Section 3, our weight computing method can keep balance with errors in [0,1].

3.3.1. Diversity

In order to make the boosting function more efficient for the component classifiers, a roughly large is set initially. There is another important factor affecting the generation performance of ensemble methods called Diversity [49,50]. It is also appropriate in AdaBoost, so that these component classifiers have irrelevance and the boost function can make sense of boosting these same classifiers with different properties.

Similar to [48], the diversity is calculated as follows: If is the hypothesis of the mth SVM classifier on the sample , and is the combined prediction label of all the existing component classifiers, the diversity of the mth component classifier on the sample is calculated as

and the diversity value of RAB-RBFSVM in m th cycle for N samples is

Here we do not compare with a preset threshold, as it is hard to find a single threshold for different training data. is decreased step by step to find a proper new that can minimize the error and maximize the diversity simultaneously. The step length of the decreasing is

3.3.2. Samples Processing: Denoising Initial Weights

With the update of , several RBFSVM classifiers using different s are tested on the same samples in one cycle. From Equation (30), we know that the weights of misclassified samples will be increased. However, if a sample is misclassified too many times in one cycle, there is a large possibility that this sample is a noise of this training data, and its weight should be decreased relatively. The misclassified times of the sample should be inversely proportional to its weight, and the weights of misclassified samples as follows:

where is the total times of misclassification in one cycle, and is the max value, Equations (42)–(44) calculate the possibility of classification of each sample . is the final weight of samples in each cycle.

The conventional AdaBoost starts its boost with the same weights of samples, which means it does not take the balance of negative and positive samples into consideration in the first step. In RAB-RBFSVM+, the initial weights are calculated with different scales of negative and positive samples. For binary classification,

is the number of negative samples and positive, and are the initial weights of negative and positive samples. Algorithm 1 shows the entire process of our proposed RAB-RBFSVM+.

| Algorithm 1 Improved Robust AdaBoost classifier with RBFSVM: RAB-RBFSVM+. |

Input: Training datasets with labels .

|

4. Experimental Results

In this section, we evaluate the performance of Robust AdaBoost (RAB) and the improved Robust AdaBoost RBFSVM (RAB-RBFSVM+). We compare the proposed algorithm with the conventional AdaBoost based component classifiers using other weak classifiers. The data sets from UCID Repository [51] are utilized to verify the effectiveness of the proposed robust AdaBoost classifier.

Different feature combinations are also compared using different algorithms we test above. The smoke and non-smoke videos are collected from public video clips (The related video clips can be downloaded from http://signal.ee.bilkent.edu.tr/VisiFire/Demo/SampleClips and http://imagelab.ing.unimore.it/visor). The smoke clips in this data sets cover indoor and outdoor with different illumination, short or long distance surveillance scenes. Seven smoke videos and ten non-smoke videos are used in the experiment. In the training datasets of smoke, we select or reprocess the smoke videos so that they only have smoke motion regions. In this paper, we extracted 5699 motion regions (include 2766 positive samples and 2933 negative samples) for training and test. Finally, we test three videos with smoke, non-smoke or mixed sequences.

4.1. Advantages of the Proposed Classifier

According Equation (34) and Figure 6, the weight parameter has different domain of values with different n in , as shown in Table 2.

Table 2.

changes with different n.

Theoretically, we expect that the well-performed classifier with lower errors could have higher weight while the poor one has roughly lower weight, so that the performance of AdaBoost could depend much more on these well performed classifiers, therefore, we appropriate a large n. However, we also prefer to have a robust algorithm for classifier with large errors rather than discard it directly, when n is too large, will close to zero with large error and its computing complexity will increase too, it is unnecessary and not rational to use an extreme large n. Table 2 shows the range changes of with respect to n, when , the changes from a relatively large value to 1, and when , the value of is also large, it is lack of effectiveness for boosting well-performed classifiers with . Additionally, when , classifier with large errors will be discarded directly since its weight is too small. These ns in Table 2 are tested and the results are shown in Figure 9. When the number of cycles is large, their differences are not very distinct. When n is less than 10 ( ) in left subfigure, the right subfigure of Figure 9 demonstrates that the performance degrades when or . In this paper, we set .

Figure 9.

Classification errors using different with different ns.

The base classifiers are listed as KNN (K-Nearest Neighbor), Fisher Linear Discriminant, Naive Bayes classifier, DTC(decision tree classifier) and SVM (Support Vector Machines), and RBFSVM, there are no further improved methods used except Robust AdaBoost and the initial is set as for RBFSVM in this section. Figure 10 shows the performances of the two AdaBoost methods. AB represents AdaBoost method, and RAB represents Robust AdaBoost method. The results of the 50th, the 100th, the 150th, and the 200th cycles are listed in Table 3.

Figure 10.

Performances of AdaBoost and Robust AdaBoost with different component classifiers. (a) Training errors of AB-KNN and RAB-KNN; (b) Training errors of AB-Fisher and RAB-Fisher; (c) Training errors of AB-Naive Bayes and RAB Naive Bayes; (d) Training errors of AB-Decision Tree and RAB-Decision Tree; (e) Training errors of AB-Linear SVM and RAB-Linear SVM; (f) Training errors of AB-RBFSVM and RAB-RBFSVM.

Table 3.

Errors of AdaBoost and Robust AdaBoost with different component classifiers (Bold numbers show the lowest errors of AdaBoost, Robust AdaBoost and classifier without boosting).

For Fisher Linear Discriminant, Robust AdaBoost performs as well as AdaBoost. For Naive Bayes, KNN, and Decision Tree classifiers, the Robust AdaBoost gives a little better boosting performance than AdaBoost, and both of them perform better than a single classifier. For Linear SVM and RBFSVM, the performance of Robust AdaBoost is much better than AdaBoost with lower errors and more stable changes. It is clear that the normal AdaBoost cannot boost SVM classifiers. The proposed RAB gives a better boosting result. Table 3 presents that Robust AdaBoost based RBFSVM component classifiers has the best result and it is also more efficient than the other classifiers without boosting.

Figure 11 shows the error changes of three different RAB-RBFSVM methods: RAB-RBFSVM without any improvements except the Robust AdaBoost; RAB-RBFSVM with improvements of diversity (RAB-RBFSVM−), and RAB-RBFSVM with both improvements of diversity and sample processing (RAB-RBFSVM+). The results 50th, 100th, 200th, 300th, 400th, 500th cycle are shown in Table 4.

Figure 11.

Performances of RAB-RBFSVM and Performances of RAB-RBFSVM, RAB-RBFSVM− and RAB-RBFSVM+

Table 4.

Errors of RAB-RBFSVM and RAB-RBFSVM+ in different numbers of cycles.

From Figure 10 and Table 4, RAB-RBFSVM− method with diversity performs better than RAB-RBFSVM, and our proposed method, RAB-RBFSVM+ with improvements of diversity and samples processing, achieves the best results with more stable changes and lower errors, which indicates the effectiveness of these improvements for RAB-RBFSVM.

In order to compare the proposed classifier with other different classifiers using common datasets from UCID, we test AdaBoost with KNN (K-Nearest Neighbor)component classifiers, (AB-KNN), AdaBoost with Fisher Linear Discriminant component classifiers, (AB-FLD), AdaBoost with Decision Tree component classifiers, (AB-DT), AdaBoost with Naive Bayes component classifiers, (AB-NB), AdaBoost with Linear SVM component classifiers, (AB-SVM), Diverse AdaBoostSVM, (DAB-SVM), our proposed Robust AdaBoost RBFSVM (RAB-RBFSVM+), in addition to these component classifiers, single RBFSVM classifier and linear SVM classifier are also compared. Table 5 shows the generation errors of different classifiers. Note that the proposed method generates lower errors on the datasets.

Table 5.

Generation errors of different classifiers: AB-KNN, AB-FLD, AB-DT, AB-NB, AB-SVM, DAB-SVM, RAB-RBFSVM+ (For every single dataset, bold number gives the lowest error while underline number gives the second lowest error).

4.2. Performance Advantages on Fire Smoke Detector

In this section, we will compare our proposed RAB-RBFSVM+ with other methods using our extracted fire smoke features in different combinations. Different static and dynamic features are extracted as described in Section 2. Extensive experiments are carried out to test these features in different combinations, and the effectiveness of our proposed method. For brevity, the static features are simply marked with their initials (Color-‘C’, Texture-‘T’, Wavelet energy-‘W’, Edge orientation histogram-‘E’, irregularity-‘I’ and sparsity-‘S’), dynamic features are marked as ‘MD’ (Motion direction), ‘MDC’ (change of motion direction) and ‘MS’ (motion speed), ‘Static’, ‘Dynamic’ and ‘All’ represent all the static features, dynamic features and all features combinations separately. We divide them into six groups according to the combination rules.

The proposed RAB-RBFSVM+ method outperforms all other methods tested as shown in Figure 12. It generates lower errors along with more stable performance for all feature combinations. Moreover, the proposed classifier with single static features gives better performance than single dynamic features in Figure 12a.

Figure 12.

Generation errors of different classifiers with different feature datasets.

For further comparison, we test feature combinations with the proposed RAB-RBFSVM+ as shown in Figure 12b–f to find out which feature combination can achieve more satisfied performance for fire smoke detection. We list the experimental statistics in Table 6. The proposed detector using CES+MD+MS, TCW+MDC and TCW+MDC+MS feature combinations generate the three lowest errors. The feature combinations are chosen for application to fire smoke detection. Different videos with or without fire smoke objects are tested. For comparison, the following measures are considered,

where is the number of truth positives which gives the number of fire regions classified as fire, is the number of truth negatives, i.e., the number of non-fire regions which are classified as non-fire, is the number of false positives, i.e., the number of non-fire regions classified as fire, and is the number of false negatives, i.e., the number of fire regions classified as non-fire. and are the total number of positives and negatives respectively. We compare the proposed method with different feature combinations and other two methods, [29,34], the rates described above are listed in Table 7. Our proposed method can achieve higher accuracy and detection rate along with lower false alarm rate as shown in Table 7.

Table 6.

Errors of RAB-RBFSVM+ with different fire smoke features.

Table 7.

Accuracy of our proposed method with different feature combinations, [29,34] (%).

Figure 13 shows the performance of our proposed detection method with TCW+MDC+MS features. Red boxes give the detected smoke or fire regions while green boxes give the motion regions without smoke or fire. There is no false smoke window detected by the proposed method in waste basket video and cars video, and small number of false smoke windows enclosing shaking trees in the last video due to the small size of these objects and low image quality of the noisy video.

Figure 13.

Detection results in three videos with or without smoke-fire. Smoke video with waste basket at frame (a) 121 (b) 166 and (c) 326. non-smoke video with cars on high way at frame (d) 11 (e) 26 and (f) 106. smoke-fire video with pedestrian at frame (g) 61 (h) 228 and (i) 244. Left image of each sub figure is the original frame image while the right one is the detected result.

5. Conclusions

This paper proposes a new fire smoke detector based on videos with Robust AdaBoost (RAB-RBFSVM) classifier. Features are extracted from different videos take by cameras. Static and dynamic features in different combinations are tested for fire smoke detection. The proposed classifier gives an efficient way of solving dilemma between errors and weights computing of AdaBoost. Further improvements including diversity and samples denoising for keeping balance of positive and negative training datasets are introduced to improve the performance of our detector. Tests with common datasets UCID and the extracted features from public video clips indicate that our proposed classifier outperforms the other classifiers. Extensive experiments on fire smoke detection are conducted and the results indicate effectiveness and performance advantages of our proposed fire smoke detector.

Author Contributions

All author have made significant contributions to this work. X.W. and X.L. proposed the structure of the paper. X.W. realized the algorithm and wrote the draft. H.L. gave important guiding advices to the implementation and the experiment.

Funding

This work was supported by the National Natural Science Foundation of China (No. 61871123), Key Research and Development Program in Jiangsu Province (No. BE2016739) and a Project Funded by the Priority Academic Program Development of Jiangsu Higher Education Institutions.

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers for their valuable and helpful comments, as well as the important guiding significance to our researches.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fonollosa, J.; Solórzano, A.; Marco, S. Chemical Sensor Systems and Associated Algorithms for Fire Detection: A Review. Sensors 2018, 18, 553. [Google Scholar] [CrossRef] [PubMed]

- Adib, M.; Eckstein, R.; Hernandez-Sosa, G. SnO2 Nanowire-Based Aerosol Jet Printed Electronic Nose as Fire Detector. IEEE Sens. J. 2018, 18, 494–500. [Google Scholar] [CrossRef]

- Cheon, J.; Lee, J.; Lee, I.; Chae, Y.; Yoo, Y.; Han, G. A Single-Chip CMOS Smoke and Temperature Sensor for an Intelligent Fire Detector. IEEE Sens. J. 2009, 9, 914–921. [Google Scholar] [CrossRef]

- Soliman, H.; Sudan, K.; Mishra, A. A smart forest-fire early detection sensory system: Another approach of utilizing wireless sensor and neural networks. In Proceedings of the 2010 IEEE Sensors, Pittsburgh, PA, USA, 1–4 November 2010; pp. 1900–1904. [Google Scholar]

- Millangarcia, L.; Sanchezperez, G.; Nakano, M.; Toscanomedina, K.; Perezmeana, H.; Rojascardenas, L. An Early Fire Detection Algorithm Using IP Cameras. Sensors 2012, 12, 5670–5686. [Google Scholar] [CrossRef] [PubMed]

- Gottuk, D.T.; Lynch, J.A.; Rose-Pehrsson, S.L.; Owrutsky, J.; Williams, F.W. Video image fire detection for shipboard use. Fire Saf. J. 2006, 41, 321–326. [Google Scholar] [CrossRef]

- Kastrinaki, V.; Zervakis, M.; Kalaitzakis, K. A survey of video processing techniques for traffic applications. Image Vis. Comput. 2003, 21, 359–381. [Google Scholar] [CrossRef]

- Shah, M.; Javed, O.; Shafique, K. Automated visual surveillance in realistic scenarios. IEEE MultiMedia 2007, 14, 30–39. [Google Scholar] [CrossRef]

- Braovic, M.; Stipanicev, D.; Krstinic, D. Cogent Confabulation based Expert System for Segmentation and Classification of Natural Landscape Images. Adv. Electr. Comput. Eng. 2017, 17, 85–94. [Google Scholar] [CrossRef]

- Xiong, Z.; Caballero, R.; Wang, H.; Finn, A.M.; Lelic, M.A.; Peng, P.Y. Video-based smoke detection: Possibilities, techniques, and challenges. In Proceedings of the IFPA, Fire Suppression and Detection Research and Applications—A Technical Working Conference (SUPDET), Orlando, FL, USA, 5–8 March 2007. [Google Scholar]

- Phillips Iii, W.; Shah, M.; da Vitoria Lobo, N. Flame recognition in video. Pattern Recogn. Lett. 2002, 23, 319–327. [Google Scholar] [CrossRef]

- Straumann, W.; Rizzotti, D.; Schibli, N. Method and Device for Detecting Fires Based on Image Analysis. European Patent EP 1,364,351, 26 February 2002. Available online: https://patents.google.com/patent/EP1364351B1/en (accessed on 2 November 2018).

- Chen, T.H.; Wu, P.H.; Chiou, Y.C. An early fire-detection method based on image processing. In Proceedings of the 2004 International Conference on Image Processing, ICIP’04, Singapore, 24–27 October 2004; Volume 3, pp. 1707–1710. [Google Scholar]

- Toreyin, B.U.; Dedeoglu, Y.; Cetin, A.E. Flame detection in video using hidden markov models. In Proceedings of the IEEE International Conference on Image Processing, ICIP 2005, Genoa, Italy, 11–14 September 2005; Volume 2, p. II-1230. [Google Scholar]

- Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Cetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recogn. Lett. 2006, 27, 49–58. [Google Scholar] [CrossRef]

- Chunyu, Y.; Jun, F.; Jinjun, W.; Yongming, Z. Video fire smoke detection using motion and color features. Fire Technol. 2010, 46, 651–663. [Google Scholar] [CrossRef]

- Calderara, S.; Piccinini, P.; Cucchiara, R. Vision based smoke detection system using image energy and color information. Mach. Vis. Appl. 2011, 22, 705–719. [Google Scholar] [CrossRef]

- Toreyin, B.U.; Dedeoglu, Y.; Cetin, A.E. Contour based smoke detection in video using wavelets. In Proceedings of the 2006 14th European Signal Processing Conference, Florence, Italy, 4–8 September 2006; pp. 1–5. [Google Scholar]

- Çelik, T.; Özkaramanlı, H.; Demirel, H. Fire and smoke detection without sensors: Image processing based approach. In Proceedings of the 2007 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 1794–1798. [Google Scholar]

- Surit, S.; Chatwiriya, W. Forest fire smoke detection in video based on digital image processing approach with static and dynamic characteristic analysis. In Proceedings of the 2011 First ACIS/JNU International Conference on Computers, Networks, Systems and Industrial Engineering (CNSI), Jeju Island, Korea, 23–25 May 2011; pp. 35–39. [Google Scholar]

- Long, C.; Zhao, J.; Han, S.; Xiong, L.; Yuan, Z.; Huang, J.; Gao, W. Transmission: A new feature for computer vision based smoke detection. In Proceedings of the International Conference on Artificial Intelligence and Computational Intelligence, Sanya, China, 23–24 October 2010; pp. 389–396. [Google Scholar]

- Tian, H.; Li, W.; Wang, L.; Ogunbona, P. Smoke detection in video: An image separation approach. Int. J. Comput. Vis. 2014, 106, 192–209. [Google Scholar] [CrossRef]

- Yuan, F. A fast accumulative motion orientation model based on integral image for video smoke detection. Pattern Recogn. Lett. 2008, 29, 925–932. [Google Scholar] [CrossRef]

- Yuan, F. A double mapping framework for extraction of shape-invariant features based on multi-scale partitions with AdaBoost for video smoke detection. Pattern Recogn. 2012, 45, 4326–4336. [Google Scholar] [CrossRef]

- Zhao, Y.; Zhou, Z.; Xu, M. Forest fire smoke video detection using spatiotemporal and dynamic texture features. J. Electr. Comput. Eng. 2015, 2015, 40. [Google Scholar] [CrossRef]

- Ye, W.; Zhao, J.; Wang, S.; Wang, Y.; Zhang, D.; Yuan, Z. Dynamic texture based smoke detection using Surfacelet transform and HMT model. Fire Saf. J. 2015, 73, 91–101. [Google Scholar] [CrossRef]

- Tung, T.X.; Kim, J.M. An effective four-stage smoke-detection algorithm using video images for early fire-alarm systems. Fire Saf. J. 2011, 46, 276–282. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, Z.; Han, S.; Qu, C.; Yuan, Z.; Zhang, D. SVM based forest fire detection using static and dynamic features. Comput. Sci. Inf. Syst. 2011, 8, 821–841. [Google Scholar] [CrossRef]

- Cai, M.; Lu, X.; Wu, X.; Feng, Y. Intelligent video analysis-based forest fires smoke detection algorithms. In Proceedings of the 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 1504–1508. [Google Scholar]

- Zhao, Y.; Li, Q.; Gu, Z. Early smoke detection of forest fire video using CS Adaboost algorithm. Optik-Int. J. Light Electron Opt. 2015, 126, 2121–2124. [Google Scholar] [CrossRef]

- Yuan, F.; Fang, Z.; Wu, S.; Yang, Y. Real-time image smoke detection using staircase searching-based dual threshold AdaBoost and dynamic analysis. Image Process. Iet 2015, 9, 849–856. [Google Scholar] [CrossRef]

- Zhang, Q.; Xu, J.; Xu, L.; Guo, H. Deep convolutional neural networks for forest fire detection. In Proceedings of the 2016 International Forum on Management, Education and Information Technology Application, Guangzhou, China, 30–31 January 2016. [Google Scholar]

- Muhammad, K.; Ahmad, J.; Baik, S.W. Early Fire Detection using Convolutional Neural Networks during Surveillance for Effective Disaster Management. Neurocomputing 2017, 288, 30–42. [Google Scholar] [CrossRef]

- Wu, X.; Lu, X.; Leung, H. An adaptive threshold deep learning method for fire and smoke detection. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 1954–1959. [Google Scholar]

- Steinwart, I. Sparseness of support vector machines. J. Mach. Learn. Res. 2003, 4, 1071–1105. [Google Scholar]

- Maji, S.; Berg, A.C.; Malik, J. Classification using intersection kernel support vector machines is efficient. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2008, CVPR 2008, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8. [Google Scholar]

- Ring, M.; Eskofier, B.M. An approximation of the Gaussian RBF kernel for efficient classification with SVMs. Pattern Recogn. Lett. 2016, 84, 107–113. [Google Scholar] [CrossRef]

- Zheng, S.; Liu, J.; Tian, J. An efficient star acquisition method based on SVM with mixtures of kernels. Pattern Recogn. Lett. 2005, 26, 147–165. [Google Scholar] [CrossRef]

- Scholkopf, B.; Sung, K.K.; Burges, C.J.; Girosi, F.; Niyogi, P.; Poggio, T.; Vapnik, V. Comparing support vector machines with Gaussian kernels to radial basis function classifiers. IEEE Trans. Signal Process. 1997, 45, 2758–2765. [Google Scholar] [CrossRef]

- Bugarić, M.; Jakovčević, T.; Stipaničev, D. Adaptive estimation of visual smoke detection parameters based on spatial data and fire risk index. Comput. Vis. Image Underst. 2014, 118, 184–196. [Google Scholar] [CrossRef]

- Shu, X.; Porikli, F.; Ahuja, N. Robust orthonormal subspace learning: Efficient recovery of corrupted low-rank matrices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3874–3881. [Google Scholar]

- Levi, K.; Weiss, Y. Learning object detection from a small number of examples: The importance of good features. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 2, p. II. [Google Scholar]

- Stricker, M.A.; Orengo, M. Similarity of color images. In Proceedings of the SPIE Conference on Storage and Retrieval for Image and Video Databases III, Birmingham, UK, 11–14 September 1995; Volume 2420, pp. 381–392. [Google Scholar]

- Tian, H.; Li, W.; Wang, L.; Ogunbona, P. A novel video-based smoke detection method using image separation. In Proceedings of the 2012 IEEE International Conference on Multimedia and Expo (Icme), Melbourne, Australia, 9–13 July 2012; pp. 532–537. [Google Scholar]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2005, San Diego, CA, USA, 20–26 June 2005; Volume 1, pp. 886–893. [Google Scholar]

- Valentini, G.; Dietterich, T.G. Bias-variance analysis of support vector machines for the development of SVM-based ensemble methods. J. Mach. Learn. Res. 2004, 5, 725–775. [Google Scholar]

- Li, X.; Wang, L.; Sung, E. AdaBoost with SVM-based component classifiers. Eng. Appl. Artif. Intell. 2008, 21, 785–795. [Google Scholar] [CrossRef]

- Melville, P.; Mooney, R.J. Creating diversity in ensembles using artificial data. Inf. Fusion 2005, 6, 99–111. [Google Scholar] [CrossRef]

- Kuncheva, L.I.; Whitaker, C.J. Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 2003, 51, 181–207. [Google Scholar] [CrossRef]

- Lichman, M. UCI Machine Learning Repository; University of California: Oakland, CA, USA, 2013. [Google Scholar]

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).