1. Introduction

Collecting and generating vehicle information in an urban traffic monitoring system is a fundamental task of intelligent transportation systems (ITSs). Nowadays, as a result of the rapid pace of urbanization, vision-based vehicle detection faces great challenges. In addition to the general challenges of outdoor visual processing, such as illumination changes, poor weather conditions, shadows, and cluttered backgrounds, vehicle detection by a stationary camera faces its own challenges, including varied vehicle appearances and poses, and partial occlusion caused by the loss of depth information or by traffic congestion.

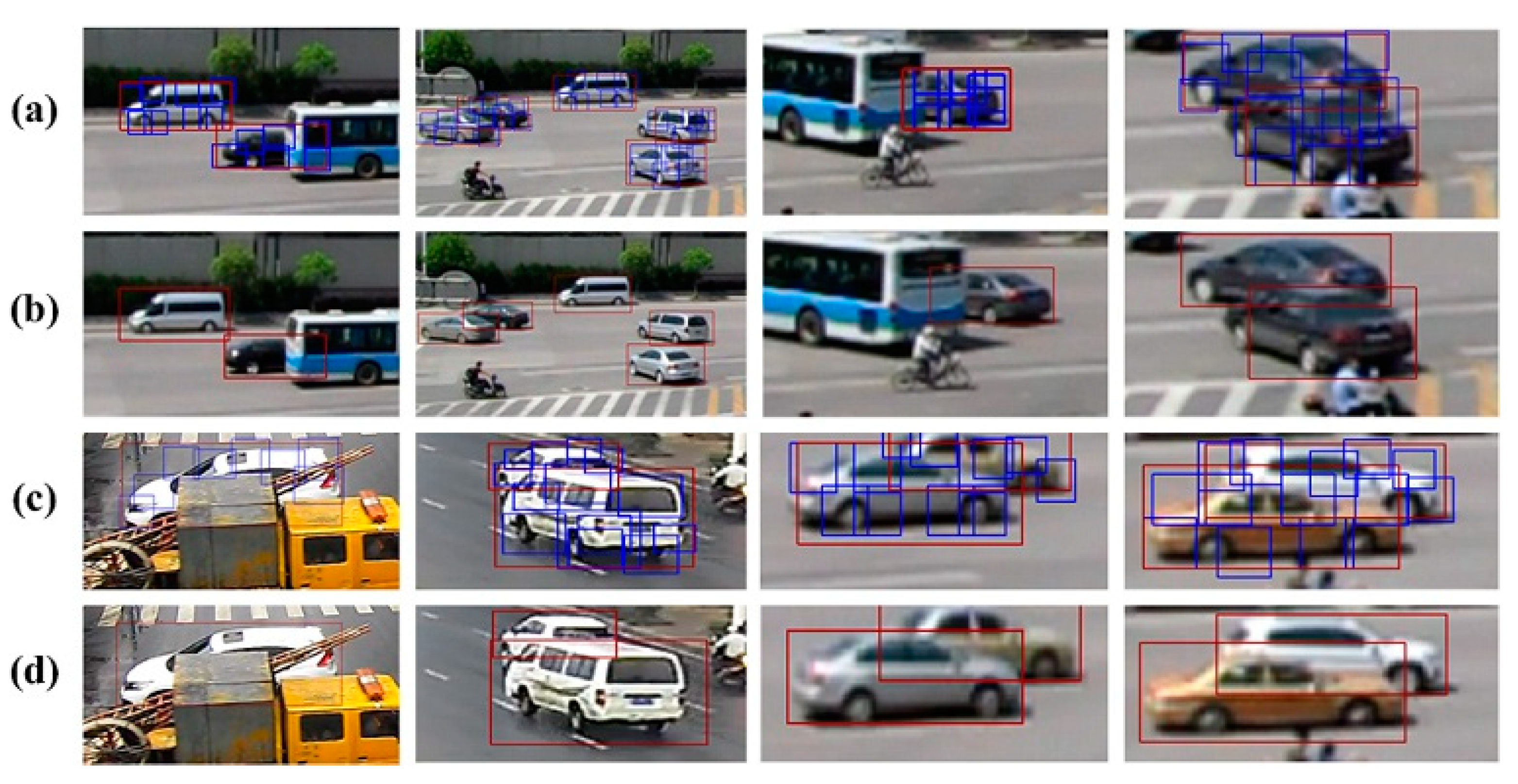

In fact, heavy traffic congestion has become common in many large cities. As a result, many vehicle detection approaches that rely on motion information are unsuitable, because congestion slows vehicles down and reduces their motion (see Figure 1 for an example). During the past decade, vision-based object detection has been formulated as a binary classification problem whose goal is to separate a target object from the background, and many of these algorithms achieve robust detection performance.

Among the various binary classification approaches, the deformable part model (DPM) has attracted increasing interest. Evaluated on the PASCAL Visual Object Classes (VOC) challenge datasets [1], DPM achieved state-of-the-art average precision (AP) for vehicle detection on the 2010 and 2011 benchmarks [2]. Since objects are detected as deformable configurations of parts, DPM should perform better at finding partially occluded objects; however, this advantage has not been fully demonstrated for vehicle detection. Moreover, the fusion of semantic scene information into DPM methods, particularly in congested and complex traffic conditions, needs to be improved.

Motivated by prior work on deformable part models, mixtures of DPMs [3], and scene modeling [4], this paper proposes a probabilistic framework for vehicle detection that fuses the results of structured part models and viewpoint inference. Because the configuration of vehicle parts varies greatly with the monitoring viewpoint, structured part models are learned for each possible viewpoint using the part detection results. Meanwhile, the potential viewpoints of vehicles can be predicted by spatial context–based inference, because vehicle motion is constrained by road structures and traffic signals.

The framework, depicted in Figure 2, consists of two parts: offline learning and online processing. In this research, all possible viewpoints and occlusion states for stationary video cameras are summarized, and the viewpoint-related discriminative part models are learned using the existing approach described in [5]. All possible viewpoints at a given location are generated by spatial context–based inference, where the logical constraints are obtained by trajectory analysis. This research extends DPM-based vehicle detection to multiview DPMs within a single probabilistic framework, which greatly improves the overall efficiency of vehicle detection. The method could also be extended to on-road vehicle detection.

The remainder of this paper is organized as follows. Section 2 gives an overview of related work. Section 3 proposes the online probabilistic framework for vehicle detection, and Section 4 describes the offline discriminative part model learning. Section 5 proposes viewpoint detection based on spatial context inference. Section 6 presents experimental results and analysis, followed by conclusions and recommendations for future work in Section 7.

2. Related Works

Vision-based vehicle detection is widely used in ITSs in many parts of the world. Moving object detection methods are applied in many environments with simple backgrounds, such as bridges, highways, and urban expressways. These methods fall into three categories: background modeling, frame differencing, and optical flow. All of them can handle slight illumination changes; however, they all have performance limitations: they cannot detect stationary vehicles, and they may incorrectly classify other moving objects as vehicles. Moreover, in congested urban scenes, these methods may incorrectly identify several closely spaced vehicles as a single vehicle, or may fail to detect any vehicle because of the lack of motion. Therefore, some research efforts use the visual features of vehicles to detect them.

Simple features such as color, texture, edges, and object corners are usually used to represent vehicles, and these features are then provided to a deterministic classifier to identify the vehicles [6,7,8,9]. Because some classification features are unavailable or unreliable in certain situations, such as partial object occlusion, the utility of these methods can be limited to specific applications.

Many recent studies have used part-based models to recognize objects while maintaining robust performance under occlusion [10,11]. In these methods, a vehicle is considered to be composed of a window, a roof, two rear-view mirrors, wheels, and other parts [12,13]. After part detection, spatial relationships, motion cues, and multiple part models are usually used to detect vehicles [14]. Wang et al. [15] used local features around the roof and the two taillights (or headlights) to detect vehicles and identify partial occlusion. Li et al. proposed an AND-OR graph method [16,17] to detect front-view and rear-view vehicles. Saliency-based object detection has also been proposed [18].

Instead of being identified manually, vehicle parts can be identified and learned automatically with a deformable part-based model. DPM was first proposed by Felzenszwalb et al. [1] and achieved state-of-the-art results in the PASCAL object detection challenges before deep learning frameworks, which had low real-time performance, appeared. Many subsequent studies have proposed variants of this pioneering work. Niknejad et al. [19] employed this model for vehicle detection, decomposing a vehicle into five components: front, back, side, front truncated, and back truncated. Each component contained a root filter and six part filters, which were learned using a latent support vector machine (SVM) and histogram of oriented gradients (HOG) features. In [5], DPM was combined with a conditional random field (CRF) to form a two-layer classifier for vehicle detection. This method can handle vehicle occlusion in the horizontal direction; however, because it was evaluated on artificially occluded samples, its effectiveness in real-world traffic congestion may be limited. To handle partial occlusion, various approaches have been proposed to estimate the degree of visibility of parts so that the unreliable scores of the root and part detectors can be weighted properly [20,21,22,23]. Wang et al. [24] proposed an on-road vehicle detection method based on a probabilistic inference framework, in which the relative locations of vehicle parts are used to overcome the challenges of multiple views and partial observation.

Additionally, some researchers directly built occlusion patterns from annotated training data to detect occluded vehicles. Pepik et al. [25] modeled occlusion patterns in specific street scenes with cars parked on either side of the road, and these patterns were shown to aid object detection under partial occlusion. Wang et al. [26] established eight types of visual models for vehicle occlusion; a suspected occluded vehicle region is fed into a locally connected deep model of the corresponding type to make the final decision. However, it is difficult to build a comprehensive set of all possible occlusion patterns in real-world traffic scenes.

Although the above approaches effectively handle localized vehicle occlusion, more sophisticated methods are still needed for complex urban traffic conditions in which vehicles are severely occluded by other vehicles or by multiple nonvehicle objects. Although deformable part models have become quite popular, their value has not been demonstrated for video surveillance by stationary cameras. Meanwhile, we observe that, for a specific monitoring scene, the vehicle motion pattern corresponding to the road structure can be obtained by offline learning, and this motion pattern can be used in deformable part models to improve vehicle detection performance. In this paper, we summarize all possible viewpoints and occlusion states for stationary video cameras, train structured part models for each viewpoint by combining DPM with CRF as in [5] to handle occlusion, and propose a probabilistic framework that combines the spatial contextual inference of viewpoints with the part model detection results. The pros and cons of the above related work are summarized in Table 1.

3. Online Probabilistic Framework

Given a frame in a traffic video, the online vehicle detection procedure is depicted in Figure 3. For each dominant viewpoint, the DPM generates a feature vector that includes the scores of the root and part filters and the best possible placement of the parts. The viewpoint-related CRF serves as the second-layer classifier, which uses this information from the DPM to detect occluded vehicles. The CRF outputs the detection conditional probability and the vehicle location. Because the viewpoint is a location-specific parameter, this work uses a table lookup to obtain the probability of a certain viewpoint at a given location; these viewpoint probabilities are described in detail in Section 5.

The problem of vehicle detection is represented by the probabilistic framework of Equation (1), in which the unknown is the estimated location of a vehicle center for each viewpoint k, conditioned on the knowledge of scene information. The objective is to find the vehicle location that maximizes the detection probability. Because, for a camera at a fixed location, the viewpoint can be treated as independent of vehicle detection, the estimation can be converted into Equation (2), which combines the CRF detection probability with an adjustment function of the viewpoint probability. The adjustment function is defined piecewise on the viewpoint probability and serves to limit the effect of errors in the viewpoint probability.
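The following sketch illustrates, under stated assumptions, how a per-viewpoint CRF detection probability could be combined with the looked-up viewpoint probability and the best candidate kept. The product form, the clamp-at-a-floor adjustment `adjust`, and its 0.1 floor are hypothetical choices for illustration only; they are not the authors' definition of the adjustment function.

```python
from typing import Dict, Optional, Tuple

Location = Tuple[int, int]


def adjust(p_view: float, floor: float = 0.1) -> float:
    """Hypothetical piecewise adjustment of the viewpoint probability.

    Above `floor` the table value is used as-is; otherwise it is clamped to
    `floor`, so an erroneous viewpoint table cannot fully veto a strong CRF
    detection. The floor value is an illustrative assumption.
    """
    return p_view if p_view > floor else floor


def fuse_viewpoints(
    crf_out: Dict[str, Tuple[float, Location]],
    view_prob: Dict[str, float],
    floor: float = 0.1,
) -> Optional[Tuple[float, str, Location]]:
    """Return (fused score, viewpoint, location) maximizing P_crf * adjust(P_view)."""
    best = None
    for view, (p_det, loc) in crf_out.items():
        # Viewpoints absent from the lookup table get probability 0 before adjustment.
        score = p_det * adjust(view_prob.get(view, 0.0), floor)
        if best is None or score > best[0]:
            best = (score, view, loc)
    return best
```

For example, with CRF outputs `{"up": (0.9, (120, 340)), "left/right": (0.8, (118, 335))}` and viewpoint probabilities `{"up": 0.05, "left/right": 0.7}`, the weakly supported "up" hypothesis is down-weighted and "left/right" is selected. Clamping rather than zeroing is one way to express the stated goal of tolerating errors in the viewpoint table.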

Below, Section 4 describes the training of the structured part-based vehicle models, including the DPM and CRF, and the estimation of the CRF detection term of Equation (2). Section 5 defines typical types of road structures and the method of generating the table of possible viewpoints, and gives the estimation of the viewpoint probability term of Equation (2).

4. Structured Part-Based Vehicle Models

The viewpoints of vehicles are closely related to the spatial contextual information of a traffic scene and to the possible occlusion states. In this paper, all possible vehicle viewpoints from a fixed camera location are summarized (as shown in Figure 4). Since cameras are usually installed at some height above the ground, vehicle images from front and rear viewpoints are similar in visual feature space [17]. The same observation yields four additional pairs of similar viewpoints: up-front and up-rear, up-right-front and up-left-rear, up-left-front and up-right-rear, and right and left. As a result, the training samples are merged from the original 11 categories into five, and models are learned for five viewpoints accordingly, i.e., k = 5. These are labeled up, left/right, up-front/up-rear, up-right-front/up-left-rear, and up-left-front/up-right-rear.

4.1. Deformable Part Model

The vehicle model for the kth viewpoint is based on a star-structured pictorial structure and consists of a root model and several part models. A set of permitted locations for each part relative to the root is combined with a deformation cost for each part. Formally, the model is an (n + 2)-tuple, as defined by Equation (3):

$$(F_0, P_1, \ldots, P_n, b)$$

where $F_0$ is the root filter, $n$ is the number of parts, and $b$ is the bias term. Each part model $P_i$ is defined by a 3-tuple $(F_i, v_i, d_i)$, where $F_i$ is the $i$th part filter, $v_i$ is a two-dimensional vector that specifies the anchor position of part $i$ relative to the root position, and $d_i$ is a four-dimensional vector that specifies the coefficients of a quadratic function defining the deformation cost for each placement of part $i$ relative to $v_i$. The score of a placement $(p_0, \ldots, p_n)$ is given by:

$$\mathrm{score}(p_0, \ldots, p_n) = \sum_{i=0}^{n} F_i \cdot \phi(H, p_i) - \sum_{i=1}^{n} d_i \cdot \phi_d(dx_i, dy_i) + b$$

where $\phi(H, p_i)$ is the feature vector of the subwindow in the space-scale feature pyramid $H$ whose upper-left corner is at $p_i = (x_i, y_i)$; $(dx_i, dy_i) = (x_i, y_i) - (2(x_0, y_0) + v_i)$ gives the displacement of the $i$th part relative to its anchor position (parts are placed at twice the spatial resolution of the root); and $\phi_d(dx, dy) = (dx, dy, dx^2, dy^2)$ are the deformation features.
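As a minimal sketch of the scoring formula, assuming the filter responses $F_i \cdot \phi(H, p_i)$ have already been computed as 2-D arrays (root responses at root resolution, part responses at twice that resolution), the score of one root placement can be evaluated by brute-force search over part placements. The function names are hypothetical; a real detector replaces the brute-force maximization with the generalized distance transform named in step 4 of the pseudocode below.

```python
import numpy as np


def deformation_cost(d, dx, dy):
    """d . phi_d(dx, dy) with phi_d = (dx, dy, dx^2, dy^2); works on arrays."""
    return d[0] * dx + d[1] * dy + d[2] * dx ** 2 + d[3] * dy ** 2


def best_part_placement(resp, anchor, d):
    """Brute-force max over placements (x, y) of resp[y, x] - d . phi_d(dx, dy)."""
    h, w = resp.shape
    ys, xs = np.mgrid[0:h, 0:w]
    total = resp - deformation_cost(d, xs - anchor[0], ys - anchor[1])
    y, x = np.unravel_index(np.argmax(total), total.shape)
    return float(total[y, x]), (int(x), int(y))


def dpm_score(root_resp, part_resps, anchors, defs, bias, x0, y0):
    """Score of a root placed at (x0, y0).

    root_resp: root filter responses at root resolution.
    part_resps: one response array per part, at twice the root resolution.
    anchors: anchor offsets v_i; defs: 4-vectors d_i; bias: b.
    Returns the total score and the best (x, y) placement of each part.
    """
    score = float(root_resp[y0, x0]) + bias
    placements = []
    for resp, v, d in zip(part_resps, anchors, defs):
        anchor = (2 * x0 + v[0], 2 * y0 + v[1])
        s, p = best_part_placement(resp, anchor, d)
        score += s
        placements.append(p)
    return score, placements
```

With a part response of 1.0 one cell to the right of its anchor and $d_i = (0, 0, 0.1, 0.1)$, the part contributes $1.0 - 0.1 = 0.9$, which illustrates how the quadratic cost trades appearance evidence against displacement.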

4.2. CRF Model

Occlusion can be modeled in DPM by a grammar model; however, this is time consuming. Niknejad et al. [5] proposed a method that uses DPM and CRF as a two-layer classifier to detect occluded vehicles. In the first layer, the DPM generates the root and part scores and the relative configuration of the parts. In the second layer, the CRF uses the output of the DPM to detect occluded objects.

4.2.1. Occlusion State

The occlusion states take a finite number of values, depend on the viewpoint, and are defined by the visibility of the root and part filters. We enumerate all possible occlusion states for each viewpoint. Figure 5 shows an example of part clustering for the up-front vehicle model, from top to bottom. Each occlusion state $s_j$ is characterized by which of the clustered root and part filters are visible.
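A small sketch of how occlusion states might be enumerated once parts are clustered into rows from top to bottom, as in Figure 5. It assumes, hypothetically, that occlusion in congested traffic grows from the bottom of the vehicle upward (a nearer vehicle lower in the image covers the lower part of a farther one), so state $s_j$ hides the bottom $j$ rows; the row grouping and the rule that the top row always stays visible are illustrative assumptions.

```python
from typing import Dict, List, Sequence


def occlusion_states(part_rows: Sequence[Sequence[int]]) -> List[Dict[int, bool]]:
    """Enumerate bottom-up occlusion states for one viewpoint.

    part_rows: part ids clustered into rows, ordered from top to bottom.
    State j (j = 0 .. len(part_rows) - 1) marks the bottom j rows as occluded;
    the top row is always kept visible so every state retains some evidence.
    Returns one {part id: visible?} mapping per state.
    """
    n_rows = len(part_rows)
    states = []
    for j in range(n_rows):
        visible_rows = n_rows - j
        states.append(
            {p: r < visible_rows for r, row in enumerate(part_rows) for p in row}
        )
    return states
```

For six parts grouped into three rows, this yields three states: fully visible, bottom row hidden, and only the top row visible.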

4.2.2. CRF Model

The part nodes $\{P_i\}$ form the lowest layer of the CRF, and the occlusion states $\{s_j\}$ form the other nodes, as shown in Figure 6. Given a bounding box $i$ with label $y_i$, the detection conditional probability is calculated by maximizing the probability over all occlusion states $s_j$, given the feature vector of the bounding box and the CRF parameter $\theta$. The feature vector is generated by the DPM and includes the scores of the parts and the best possible placement of the parts. For the $k$th viewpoint, this probability thus becomes the probability of a labeled vehicle $y_i$ seen from the $k$th viewpoint.

In the CRF, maximizing this probability is equivalent to finding the largest value of the energy function over all occlusion states. The energy contains terms from both the parts and their relations: the two components of $\theta$ correspond to the part information and to the relative spatial relation with other parts, respectively. The unary features depend on a single hidden variable in the CRF model, while the pairwise features depend on the correlation between pairs of parts.

A belief propagation algorithm is used to estimate each occlusion state, from which the detection conditional probability, and then the detection probability, are calculated. Using Bayes' formula, the likelihood of each occlusion state is then calculated; the normalization term is constant for all occlusion states and is obtained by maximizing over all possible occlusion states [5]. Finally, the marginal distribution of visibility is calculated for each individual part and for each pair of parts corresponding to an edge in the graph.

For the root, the energy is calculated from the weight vector of the trained root filter and the HOG (histogram of oriented gradients) feature at the given position, i.e., from the root filter response. For the parts, the part scores are summed according to whether each part appears in the given viewing direction.

For the spatial correlation between two parts, a normal distribution is used: the relative positions of the two parts in the x and y directions are modeled with parameters describing their relative spatial relation in each direction, and the corresponding mean and covariance values are extracted from all positive samples in the DPM training data.
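A minimal sketch of the pairwise spatial term, assuming an axis-aligned normal distribution (independent x and y) over the offset between two parts. The means and variances are the maximum-likelihood estimates over offsets measured in positive training samples; the function names and the variance floor `min_var` are hypothetical.

```python
from statistics import fmean, pvariance
from typing import Sequence, Tuple

Params = Tuple[float, float, float, float]  # (mean_x, var_x, mean_y, var_y)


def fit_pair_offsets(offsets: Sequence[Tuple[float, float]],
                     min_var: float = 1e-6) -> Params:
    """MLE of an axis-aligned normal over (dx, dy) offsets between two parts.

    pvariance divides by N, which is the maximum-likelihood variance;
    min_var guards against a degenerate (zero) variance.
    """
    dxs = [o[0] for o in offsets]
    dys = [o[1] for o in offsets]
    return (fmean(dxs), max(pvariance(dxs), min_var),
            fmean(dys), max(pvariance(dys), min_var))


def pairwise_log_potential(dx: float, dy: float, params: Params) -> float:
    """Unnormalized log-density: 0 at the mean offset, more negative farther away."""
    mx, vx, my, vy = params
    return -((dx - mx) ** 2 / (2 * vx) + (dy - my) ** 2 / (2 * vy))
```

Dropping the normalizing constant is harmless when the potential is only compared across candidate configurations; a full covariance could be used instead if x and y offsets are correlated.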

4.2.3. Model Learning

For each viewpoint k, the root filter and the part filters are learned from the image samples of the corresponding category. Here, the number of parts n is set to a preassigned constant, which simplifies the calculation. Maximum likelihood estimation (MLE) is used to estimate the parameters from the training samples.

5. Viewpoint Detection by Spatial Context-Based Inference

The distributions of where, when, and what types of vehicle activity occur are strongly correlated with the road structure, which is defined by the road geometry, the number of traffic lanes, and the traffic rules. Given a type of road structure, the viewpoint of a vehicle at any specific location can be predicted by the scene model.

5.1. Scene Modeling

A scene model can be described manually or extracted automatically from the static scene appearance. Alternatively, a scene model can be extracted from regular vehicle motion patterns, e.g., trajectories, which tends to work better than either of the other two approaches.

5.1.1. Trajectory Coarse Clustering

During uncongested traffic periods, coarse vehicle trajectories can be obtained with a blob tracker based on background subtraction and length–width ratio constraints. Over an observation period, thousands of trajectories can be collected from a scene.

Before clustering, outliers caused by tracking errors must be removed: trajectories with large average distances to their neighbors, as well as those with very short lengths, are rejected. The remaining trajectories are then grouped by their main flow direction (MFD) vectors, each computed from the position vectors of the starting point and the ending point of a trajectory.
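The sketch below computes an MFD vector and rejects very short tracks. It assumes, as one natural reading, that the MFD vector is the end point minus the start point; the path-length threshold `min_length` is hypothetical, and the neighbor-distance outlier test is not shown.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def mfd_vector(traj: Sequence[Point]) -> Point:
    """Main flow direction, assumed here to be end point minus start point."""
    (xs, ys), (xe, ye) = traj[0], traj[-1]
    return (xe - xs, ye - ys)


def path_length(traj: Sequence[Point]) -> float:
    """Sum of Euclidean distances between consecutive trajectory points."""
    return sum(math.dist(a, b) for a, b in zip(traj, traj[1:]))


def drop_short(trajs: Sequence[Sequence[Point]],
               min_length: float) -> List[Sequence[Point]]:
    """Reject trajectories shorter than min_length (hypothetical threshold)."""
    return [t for t in trajs if len(t) >= 2 and path_length(t) >= min_length]
```

Using only the two endpoints makes the MFD insensitive to jitter in the middle of a track, which suits the coarse direction grouping described here.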

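Each cluster is described by a Gaussian function with mean $\boldsymbol{\mu}_k$ and covariance matrix $\boldsymbol{\Sigma}_k$. The overall distribution $p(\mathbf{x})$ of all MFD vectors can be modeled as a mixture of Gaussians (MoG):

$$p(\mathbf{x}) = \sum_{k=1}^{K} \omega_k \, \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$$

Here, $K$ is the number of classes, $\omega_k$ is the prior probability, and $\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$ is the normal distribution for the $k$th class. Each MFD vector $\mathbf{x}$ in the training period is then assigned to a class according to

$$\hat{k} = \arg\max_{k} \, \omega_k \, \mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}_k, \boldsymbol{\Sigma}_k)$$

The number of Gaussians in the mixture is determined by the number of clusters.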
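A minimal sketch of the class-assignment rule, assuming the mixture parameters (weights, means, covariances) have already been estimated; fitting them, for example with EM as provided by scikit-learn's `GaussianMixture`, is not shown. The function names are hypothetical.

```python
import numpy as np


def gaussian_pdf(x, mean, cov) -> float:
    """Multivariate normal density N(x | mean, cov)."""
    d = np.asarray(x, float) - np.asarray(mean, float)
    cov = np.asarray(cov, float)
    k = d.size
    norm = 1.0 / np.sqrt((2.0 * np.pi) ** k * np.linalg.det(cov))
    # solve(cov, d) computes cov^{-1} d without forming the inverse explicitly
    return float(norm * np.exp(-0.5 * d @ np.linalg.solve(cov, d)))


def assign_class(x, weights, means, covs) -> int:
    """Index k maximizing omega_k * N(x | mu_k, Sigma_k)."""
    scores = [w * gaussian_pdf(x, m, c) for w, m, c in zip(weights, means, covs)]
    return int(np.argmax(scores))
```

For two equally weighted unit-covariance clusters centered at (10, 0) and (-10, 0), an MFD vector (9, 1) is assigned to the first class and (-8, 0) to the second.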

5.1.2. Classification Filtering

The detailed filtering procedure was developed in the authors’ previous work [27]. First, clusters that are significantly broader than the remaining clusters are removed; the breadth of the mth cluster is measured by the determinant of its covariance matrix and compared with the corresponding determinant of every other cluster.

Second, clusters with obvious overlaps are merged using the Bhattacharyya distance–based error estimation method [28], in which the expected classification error E (in %) between two classes is estimated from the Bhattacharyya distance b between them. In this paper, the threshold is set to 1.5: if the Bhattacharyya distance between two clusters is below 1.5, the two clusters are merged.
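The first two filtering steps can be sketched as follows. The Bhattacharyya distance between two Gaussians is the standard closed form, and the merge rule uses the stated threshold of 1.5. The broadness test's `ratio` parameter is hypothetical, since the exact criterion on the covariance determinants is not reproduced here.

```python
import numpy as np


def bhattacharyya(m1, c1, m2, c2) -> float:
    """Bhattacharyya distance between N(m1, c1) and N(m2, c2)."""
    m1, m2 = np.asarray(m1, float), np.asarray(m2, float)
    c1, c2 = np.asarray(c1, float), np.asarray(c2, float)
    c = 0.5 * (c1 + c2)
    d = m1 - m2
    term_mean = 0.125 * d @ np.linalg.solve(c, d)
    term_cov = 0.5 * np.log(np.linalg.det(c) /
                            np.sqrt(np.linalg.det(c1) * np.linalg.det(c2)))
    return float(term_mean + term_cov)


def should_merge(m1, c1, m2, c2, threshold: float = 1.5) -> bool:
    """Merge two MFD clusters whose Bhattacharyya distance is below the threshold."""
    return bhattacharyya(m1, c1, m2, c2) < threshold


def is_too_broad(covs, m: int, ratio: float) -> bool:
    """Hypothetical test: det(Sigma_m) exceeds ratio * det(Sigma) of every other cluster."""
    det_m = np.linalg.det(covs[m])
    return all(det_m > ratio * np.linalg.det(c) for i, c in enumerate(covs) if i != m)
```

For two unit-covariance clusters, the distance reduces to one-eighth of the squared distance between the means: means 2 apart give 0.5 (merged), while means 4 apart give 2.0 (kept separate).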

Lastly, isolated outliers are removed. Such outliers result from incorrect tracking caused by occlusion, or are formed when overlapping clusters split. They can be handled with the adaptive mean and variance estimation method based on maximum and minimum peak coefficients [29].

5.1.3. Trajectory Fine Clustering

After coarse clustering, trajectories moving in opposite directions are separated, regardless of their spatial proximity. The road structure of the scene can be represented by path models with entries, exits, and the paths between them. However, trajectories with similar moving directions are grouped together regardless of how far apart they are. During fine clustering, each class of trajectories is therefore further clustered according to its spatial distribution.

Trajectory similarity is measured by the Hausdorff distance, which captures the spatial relationship between trajectories and is simple to compute.

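Consider two trajectories $A = \{a_1, \ldots, a_{N_A}\}$ and $B = \{b_1, \ldots, b_{N_B}\}$, where $a_i = (x_i^a, y_i^a)$ and $b_j = (x_j^b, y_j^b)$ are spatial coordinates. For an observation $a_i$ on $A$, its nearest observation on $B$ is

$$b^{*}(a_i) = \arg\min_{b_j \in B} \lVert a_i - b_j \rVert$$

The Hausdorff distance between $A$ and $B$ is

$$D_H(A, B) = \max\{ d(A, B),\, d(B, A) \}$$

where $d(A, B) = \max_{a_i \in A} \lVert a_i - b^{*}(a_i) \rVert$. A summary of the fine clustering procedure is as follows: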

- (1) The first trajectory of the dataset initializes the first route model.
- (2) Each other trajectory is compared with the existing route models. If the distance calculated by Equation (12) is smaller than a threshold, the route model is updated (as shown in Figure 7); otherwise, a new model is initialized.
- (3) If two route models overlap sufficiently, they are merged.
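The Hausdorff distance and the three-step fine clustering procedure above can be sketched as follows. Several details are hypothetical choices for illustration: a route model is represented simply as the list of its member trajectories, the distance from a trajectory to a model is the Hausdorff distance to the closest member, and "sufficient overlap" in step (3) means some pair of members from the two models is closer than `merge_tau`.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Trajectory = Sequence[Point]


def directed_hausdorff(a: Trajectory, b: Trajectory) -> float:
    """Max over points of a of the distance to the nearest point of b."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff(a: Trajectory, b: Trajectory) -> float:
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


def model_distance(t: Trajectory, model: List[Trajectory]) -> float:
    # Hypothetical choice: distance to the closest member of the route model.
    return min(hausdorff(t, m) for m in model)


def cluster_routes(trajs: Sequence[Trajectory], tau: float,
                   merge_tau: Optional[float] = None) -> List[List[Trajectory]]:
    merge_tau = tau if merge_tau is None else merge_tau
    models: List[List[Trajectory]] = []
    for t in trajs:
        if not models:                       # step (1): first trajectory seeds a model
            models.append([t])
            continue
        dists = [model_distance(t, m) for m in models]
        best = min(range(len(models)), key=dists.__getitem__)
        if dists[best] < tau:                # step (2): update the closest model
            models[best].append(t)
        else:                                # step (2): otherwise start a new model
            models.append([t])
    merged = True                            # step (3): merge overlapping models
    while merged:
        merged = False
        for i in range(len(models)):
            for j in range(i + 1, len(models)):
                if min(model_distance(t, models[j]) for t in models[i]) < merge_tau:
                    models[i].extend(models.pop(j))
                    merged = True
                    break
            if merged:
                break
    return models
```

The merge step matters because assignment is order dependent: a trajectory that arrives late can bridge two models that were initialized separately. SciPy's `scipy.spatial.distance.directed_hausdorff` can replace the pure-Python distance for long trajectories.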

5.2. Viewpoint Inference

5.2.1. Some Phenomena in Imaging

As shown in Figure 8, according to the imaging principle, the plane determined by the camera’s optical axis and the projection point P of the camera center on the ground plane corresponds to the center line of the image plane. Let AB be the line segment formed by the intersection of the camera’s field of view (FOV), this plane, and the ground plane. Then, ab is the corresponding line segment in the image plane.

- (1) When a car moves from point A to point B, its image in the image plane moves from point a to point b, and its viewpoint changes from up-front to up.
- (2) When a car appears in the camera’s far FOV and moves along the corresponding line segment shown in Figure 8, the viewpoint is right.
- (3) When a car is in the camera’s near FOV and moves along the corresponding line segment shown in Figure 8, the viewpoint is up.

In summary, the vehicle viewpoint is determined directly by the vehicle’s location and motion direction, and the motion direction can be described by the trajectory gradient.

5.2.2. Inference of Viewpoint Probability Distribution

In the far FOV, the vehicle area is small, and its viewpoints can be treated as two kinds: left/right and up-front/up-rear. In the near FOV, the vehicle viewpoint is set to up. In the middle FOV, vehicle viewpoints are divided by trajectory gradient into three regions, as shown in Figure 9: one region corresponds to the up-front/up-rear viewpoint, one to the up-left-front/up-right-rear viewpoint, and one to the up-right-front/up-left-rear viewpoint.

For a fixed location r that lies in N path models, the probability of vehicle viewpoint i at r is estimated from trajectory counts: for the tth path model, one count is the total number of trajectories passing through the cross-section containing r, and the other is the number of those trajectories with viewpoint i. An example of the resulting viewpoint probabilities for a stationary camera is shown in Figure 10.
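One natural reading of this count-based estimate pools the counts of all N path models through r, i.e., $P(v_i \mid r) = \sum_t n_t^i / \sum_t n_t$. The sketch below assumes that pooled form (the symbols $n_t$ and $n_t^i$ and the function name are illustrative); precomputing its output for every location gives the kind of lookup table used by the online framework.

```python
from collections import Counter
from typing import Dict, Mapping, Sequence, Tuple


def viewpoint_distribution(
    models_at_r: Sequence[Tuple[int, Mapping[str, int]]]
) -> Dict[str, float]:
    """Pooled estimate P(v_i | r) = sum_t n_t^i / sum_t n_t (an assumed form).

    models_at_r: one (n_t, {viewpoint i: n_t^i}) pair per path model t through r.
    """
    total = sum(n_t for n_t, _ in models_at_r)
    if total == 0:
        return {}
    counts: Counter = Counter()
    for _, per_view in models_at_r:
        counts.update(per_view)  # adds per-viewpoint counts across models
    return {view: c / total for view, c in counts.items()}
```

For instance, if one path model contributes 80 up-front/up-rear and 20 left/right trajectories and another contributes 50 left/right trajectories, the location's table entry is 80/150 for up-front/up-rear and 70/150 for left/right.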

5.2.3. Implementation

The near, middle, and far FOV regions can be determined from the camera parameters and the vehicle size; however, the relevant parameters of a fixed camera can be difficult to obtain. In this paper, a simple division method is used: the top one-sixth of the image is excluded from consideration, the next one-fifth from the top is defined as the far FOV, the bottom one-fifth is defined as the near FOV, and the remaining middle part of the image is defined as the middle FOV.
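The division rule above is simple enough to state as code. The sketch below maps an image row to its FOV band and returns the candidate viewpoint classes listed in Section 5.2.2 for that band. Integer arithmetic is used so that band boundaries such as 1/6 + 1/5 = 11/30 do not suffer from floating-point ties. Narrowing the middle-FOV candidates by trajectory gradient is not shown, because the gradient thresholds are not given in the text.

```python
from typing import Tuple

# Candidate viewpoint classes per FOV band, as listed in Section 5.2.2.
CANDIDATES = {
    "excluded": (),
    "far": ("left/right", "up-front/up-rear"),
    "middle": ("up-front/up-rear", "up-left-front/up-right-rear",
               "up-right-front/up-left-rear"),
    "near": ("up",),
}


def fov_band(y: int, height: int) -> str:
    """Band of image row y (0 = top row) under the 1/6, 1/5, ..., 1/5 split."""
    if not 0 <= y < height:
        raise ValueError("row outside image")
    if 6 * y < height:          # top one-sixth: not considered
        return "excluded"
    if 30 * y < 11 * height:    # next one-fifth: up to 1/6 + 1/5 = 11/30
        return "far"
    if 5 * y >= 4 * height:     # bottom one-fifth
        return "near"
    return "middle"


def candidate_viewpoints(y: int, height: int) -> Tuple[str, ...]:
    return CANDIDATES[fov_band(y, height)]
```

For a 300-row image, rows 0 to 49 are excluded, rows 50 to 109 are far FOV, rows 110 to 239 are middle FOV, and rows 240 to 299 are near FOV, so the middle band covers 13/30 of the image height.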

Pseudocode of the algorithm:

1. Function fusingpartbasedObjectRecognition()
2. Build the image pyramid:
   - for s = 1 to k: smooth I_s with the Gaussian kernel G (I_s * G → I_G), then calculate I_{s+1} by subsampling I_G with the pyramid scale factor.
3. Build the HOG feature pyramid:
   - for s = 1 to k:
     - calculate the gradient orientation image I_Ψ,s and the gradient magnitude image I_Mag,s;
     - for y = 1 to H step 8, for x = 1 to W step 8: set the cell histogram at (x, y) to the gradient orientation histogram of I_Ψ,s, weighted by I_Mag,s;
     - calculate the HOG feature of each cell by normalizing its histogram with respect to the four blocks containing the cell.
4. Calculate the matching score functions:
   - for s = 1 to k, for y = 1 to H step 8, for x = 1 to W step 8, for i = 1 to M:
     - calculate m_i(x, y, s, u_i);
     - if i ≠ 1, calculate P_i(x, y, s) with the generalized distance transform for the subsequent backward dynamic programming (DP) step.
5. Find all local maxima:
   - find all local maxima of the root score above the similarity threshold.
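Step 4 relies on a generalized distance transform to maximize part responses minus a quadratic deformation cost for every location at once. The sketch below implements the one-dimensional lower-envelope algorithm of Felzenszwalb and Huttenlocher for $D(p) = \min_q f(q) + a(p-q)^2$, also returning the minimizing $q$ needed for the backward DP step, and applies it separably in 2-D. It assumes, as a simplification, an isotropic quadratic cost with weight `a > 0` and no linear terms; the full DPM cost has separate x/y coefficients and linear terms. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def dt1d(f: Sequence[float], a: float = 1.0) -> Tuple[List[float], List[int]]:
    """D(p) = min_q f(q) + a * (p - q)^2 in O(n); also returns the argmin q."""
    n = len(f)
    v = [0] * n                  # positions of parabolas in the lower envelope
    z = [0.0] * (n + 1)          # boundaries between consecutive envelope parabolas
    k = 0
    z[0], z[1] = -math.inf, math.inf

    def intersect(q: int, r: int) -> float:
        # Abscissa where the parabolas rooted at q and r intersect.
        return ((f[q] + a * q * q) - (f[r] + a * r * r)) / (2 * a * (q - r))

    for q in range(1, n):
        s = intersect(q, v[k])
        while s <= z[k]:         # parabola v[k] is hidden; drop it from the envelope
            k -= 1
            s = intersect(q, v[k])
        k += 1
        v[k], z[k], z[k + 1] = q, s, math.inf
    d, arg = [0.0] * n, [0] * n
    k = 0
    for p in range(n):
        while z[k + 1] < p:
            k += 1
        arg[p] = v[k]
        d[p] = a * (p - v[k]) ** 2 + f[v[k]]
    return d, arg


def best_deformed_response(resp: List[List[float]], a: float) -> List[List[float]]:
    """max_q resp[q] - a * ||p - q||^2 via the separable 2-D transform of -resp."""
    rows = [dt1d([-x for x in row], a)[0] for row in resp]                  # along x
    cols = [dt1d([r[c] for r in rows], a)[0] for c in range(len(rows[0]))]  # along y
    return [[-cols[c][y] for c in range(len(cols))] for y in range(len(rows))]
```

Negating the responses turns the DPM maximization into the minimization that the transform computes, and separability holds because the squared Euclidean cost splits into independent x and y terms.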

7. Conclusions

In this paper, we propose a vehicle detection method for fixed cameras that combines scene modeling and deformable part models, particularly for use in congested traffic conditions. The proposed method consists of constructing a DPM + CRF model for each viewpoint to represent vehicles, training scene models, inferring viewpoints, and detecting vehicles by addressing both partial observation and varying viewpoints within a single probabilistic framework.

Compared with existing methods, this paper makes two main contributions. First, we use scene context information to constrain the possible location and viewpoint of a vehicle, which is reasonable because the camera is usually at a fixed location in a real traffic scene. In practical applications, judicious use of scene information can potentially eliminate some interference and reduce computation time. Second, based on the combined DPM and CRF model, viewpoint-related vehicle models are built and the occlusion states are defined for each viewpoint individually. Compared with the mixture of DPMs (MDPM), the proposed method provides semisupervised viewpoint direction, which performs well under a variety of traffic surveillance conditions. Experimental results demonstrate the efficiency of the proposed method for vehicle detection by a stationary camera, especially in handling occlusion under congested conditions.