Build a Robust Learning Feature Descriptor by Using a New Image Visualization Method for Indoor Scenario Recognition

Abstract

1. Introduction

2. Related Work

3. Algorithm

3.1. Grey Value

3.2. HOG Feature Descriptor

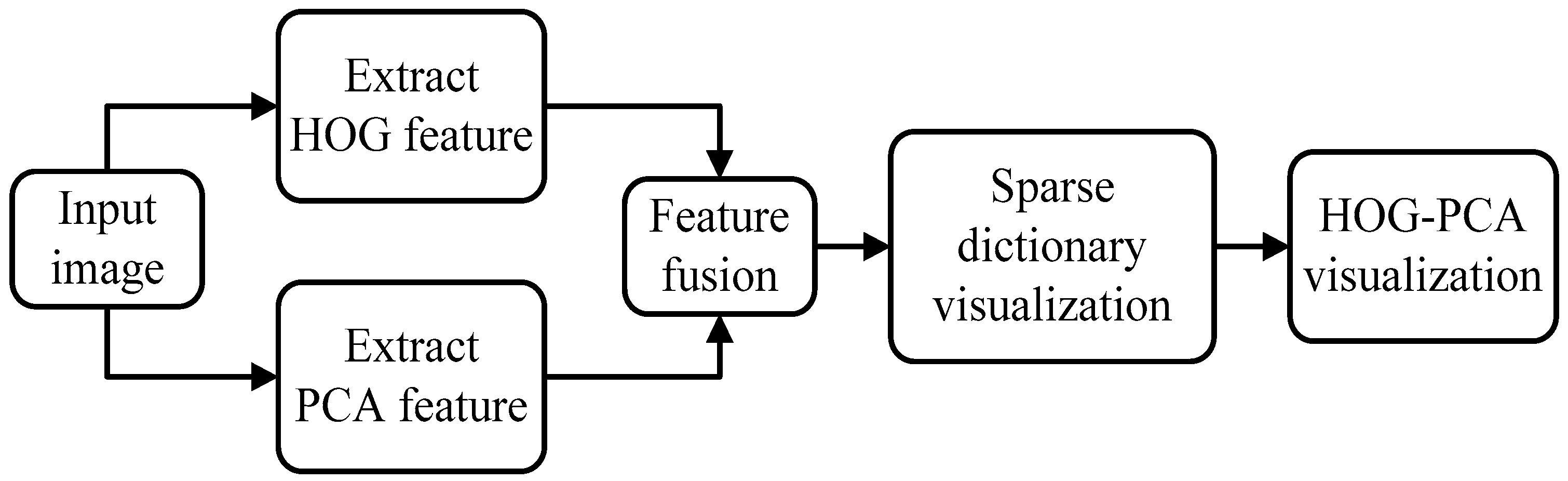

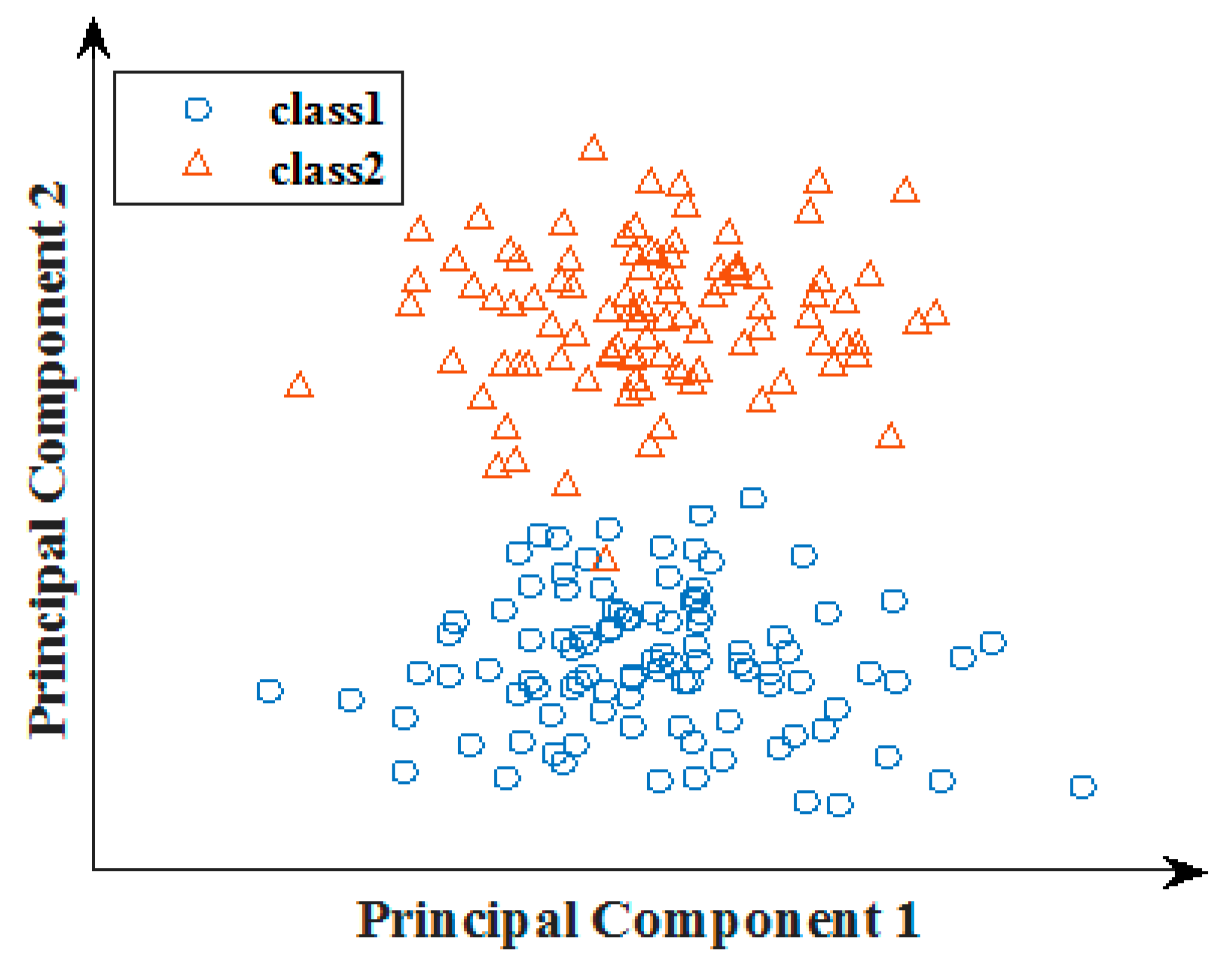

3.3. HOGP Feature Descriptor

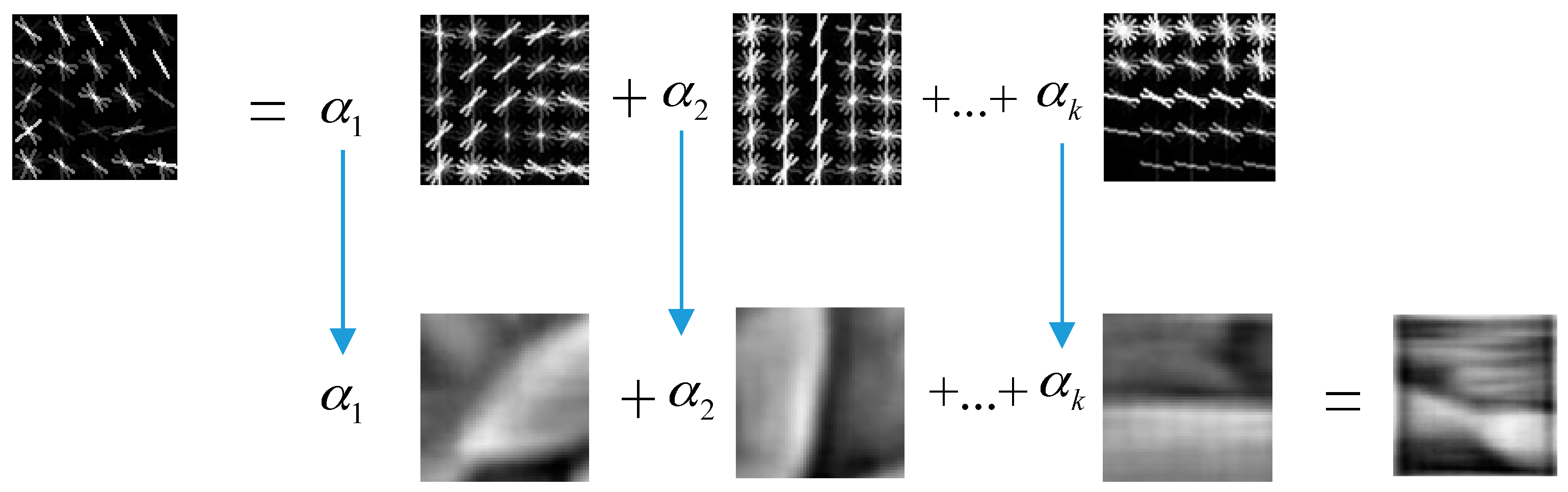

3.4. Visualization Method

4. Results

4.1. Experiment Environment

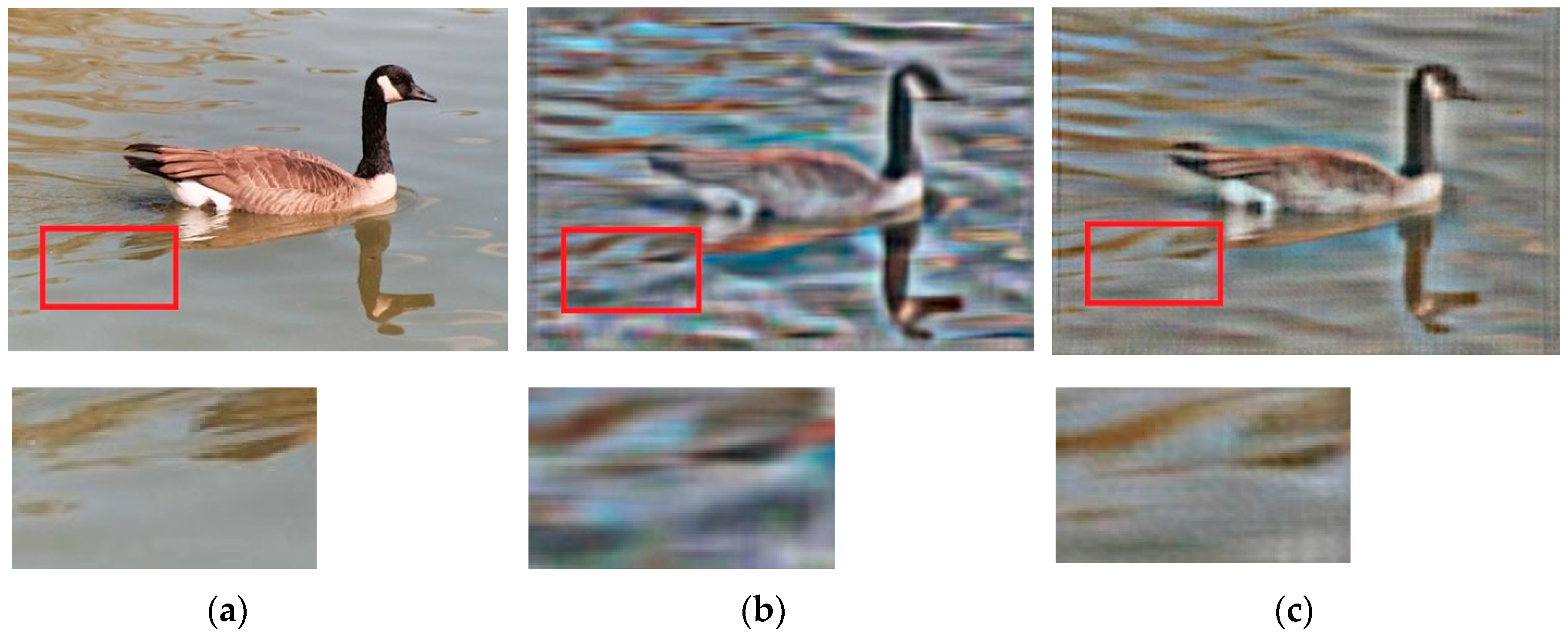

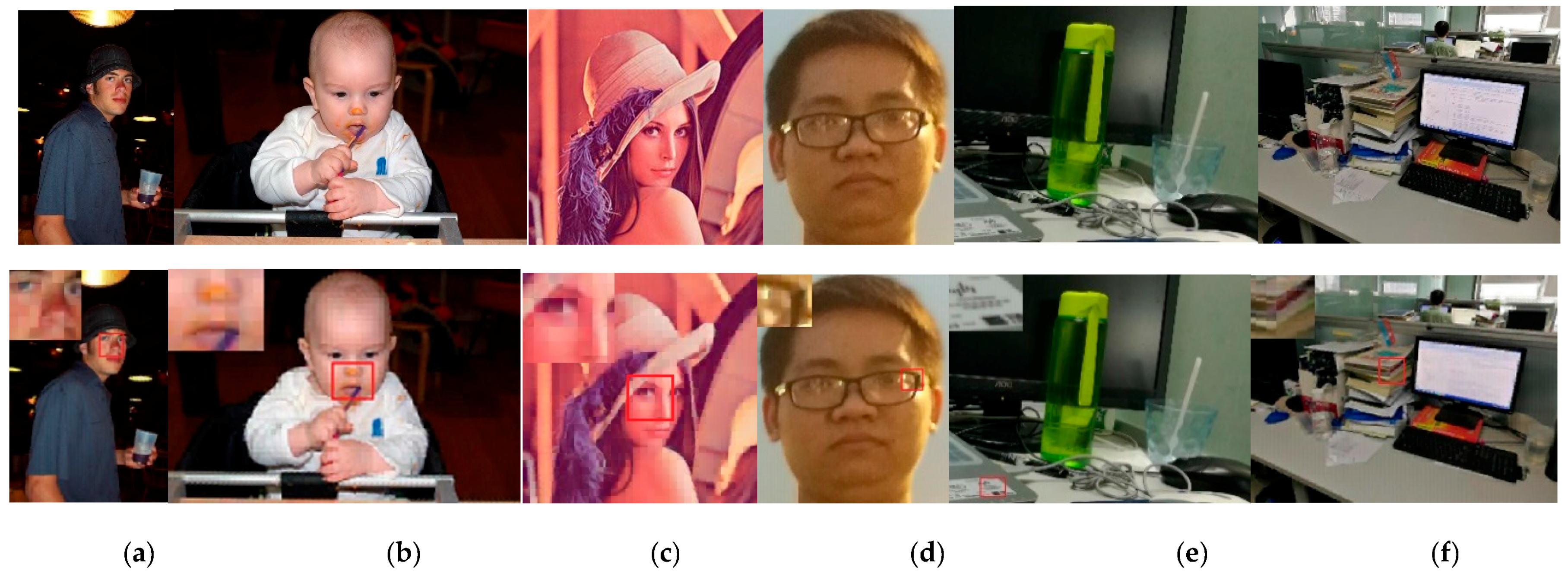

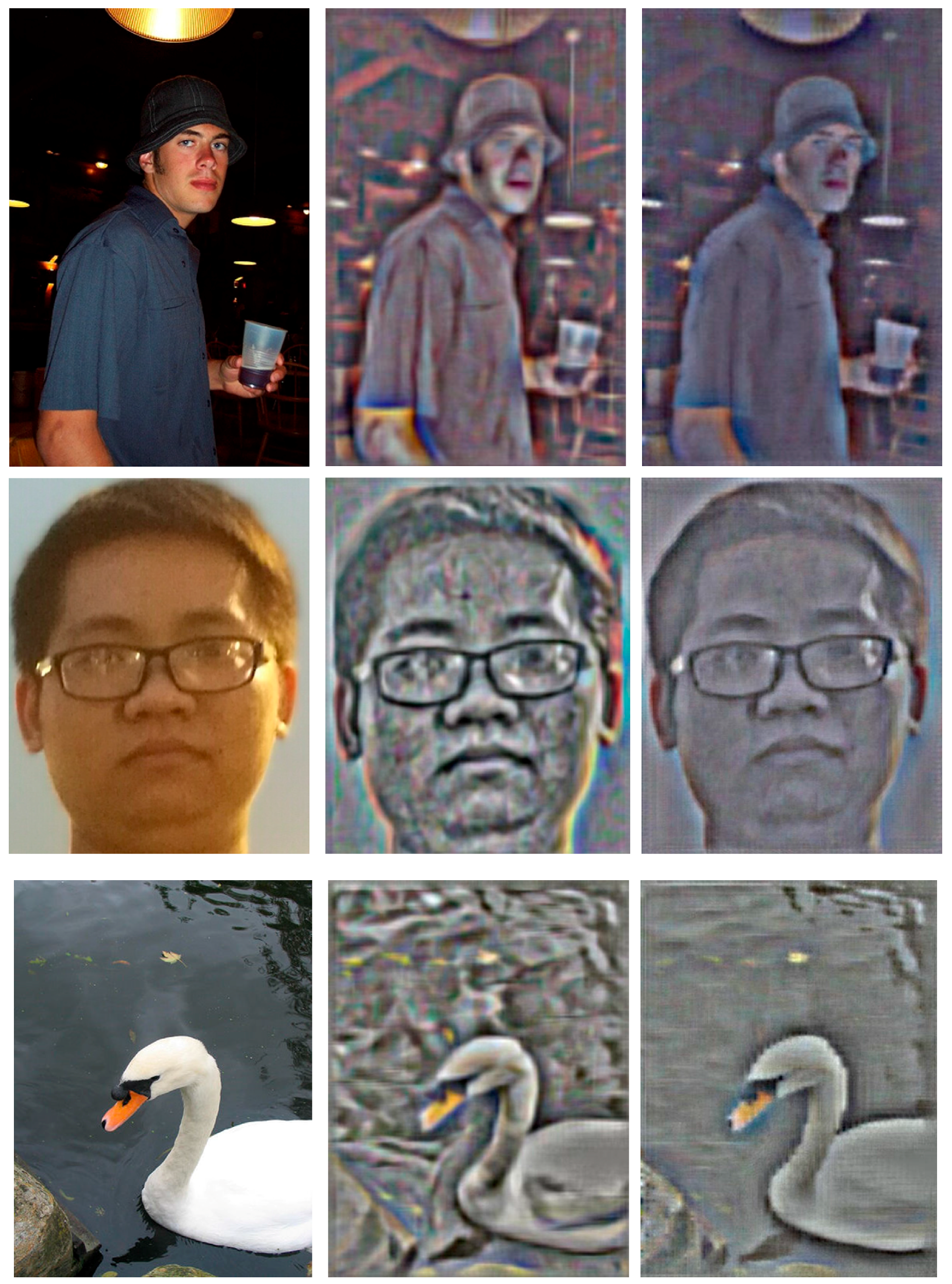

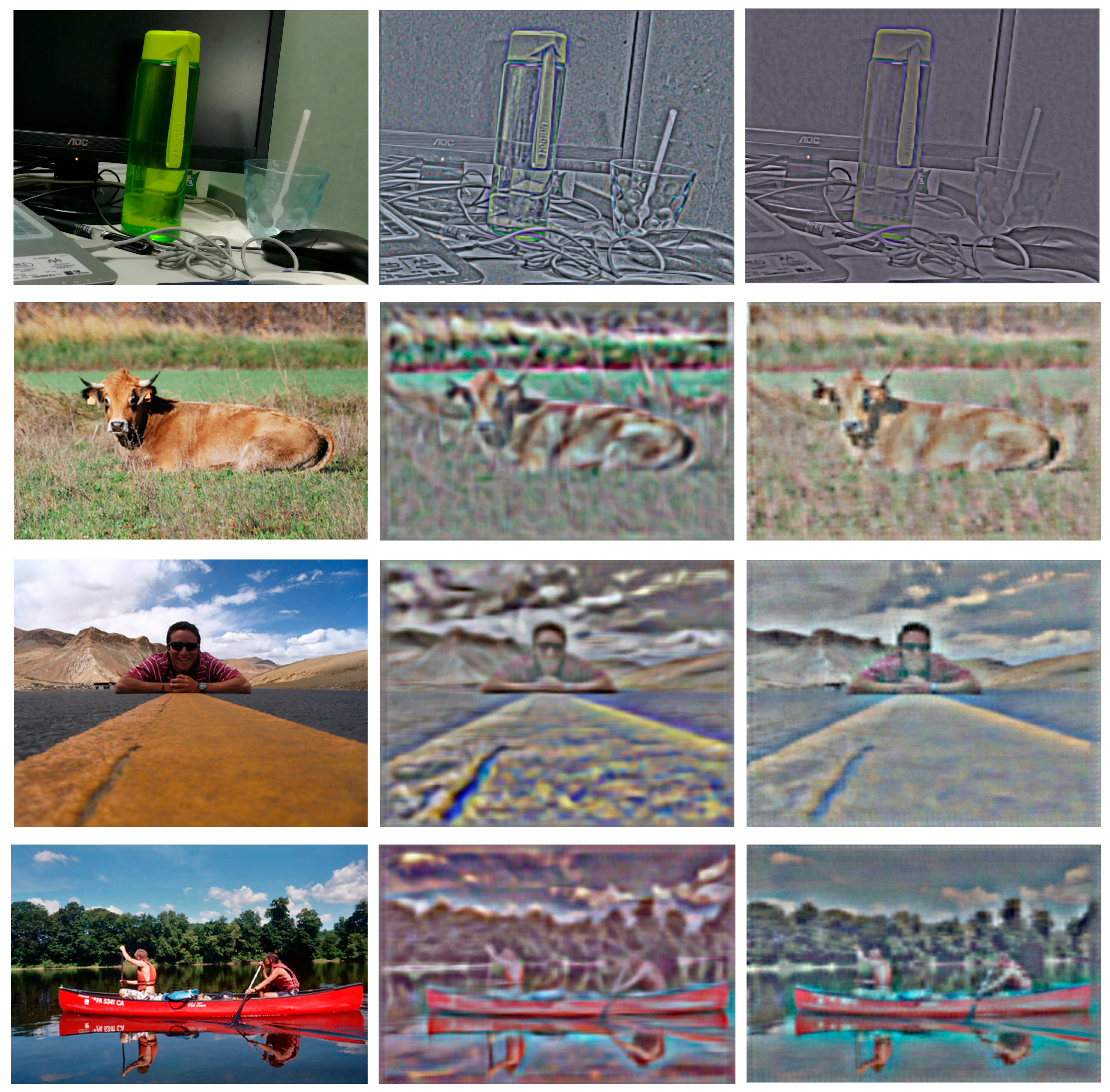

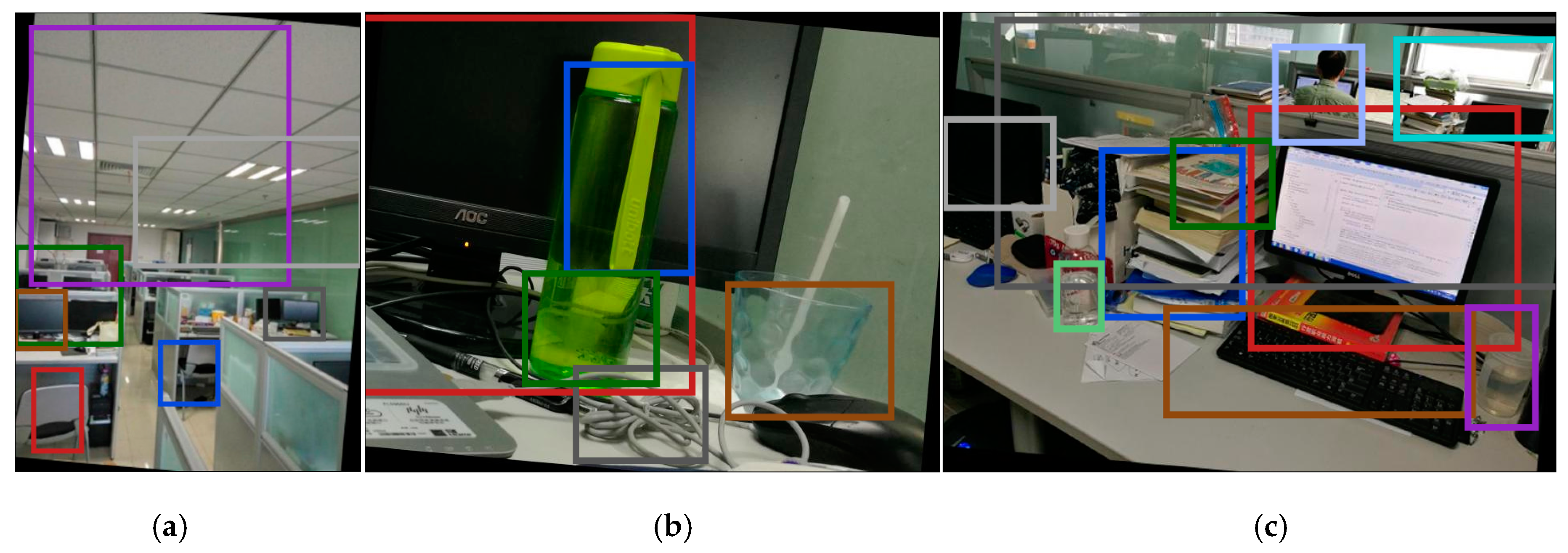

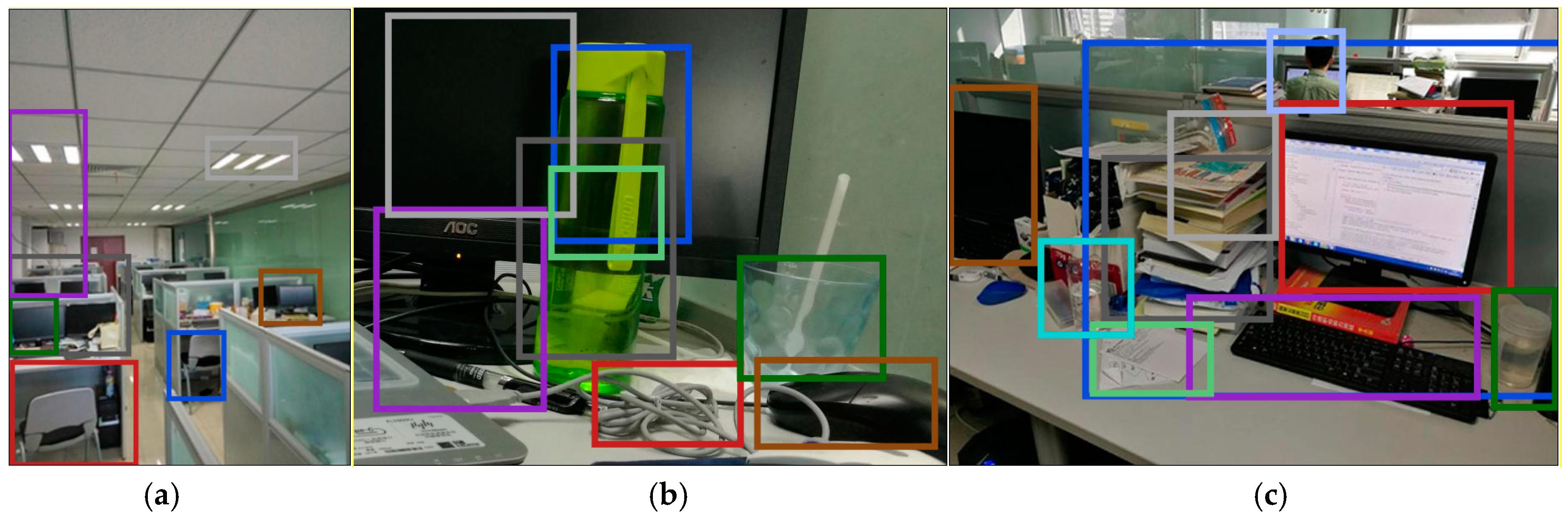

4.2. HOGP Feature Visualization

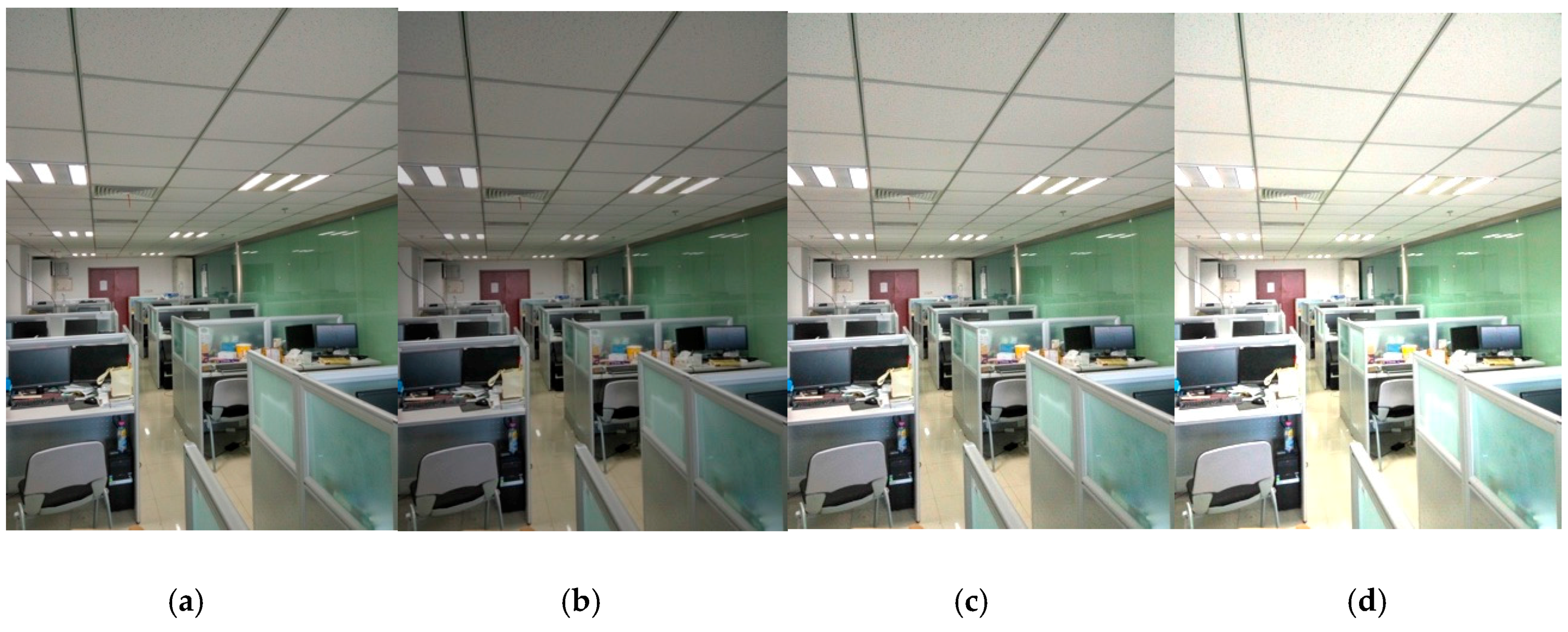

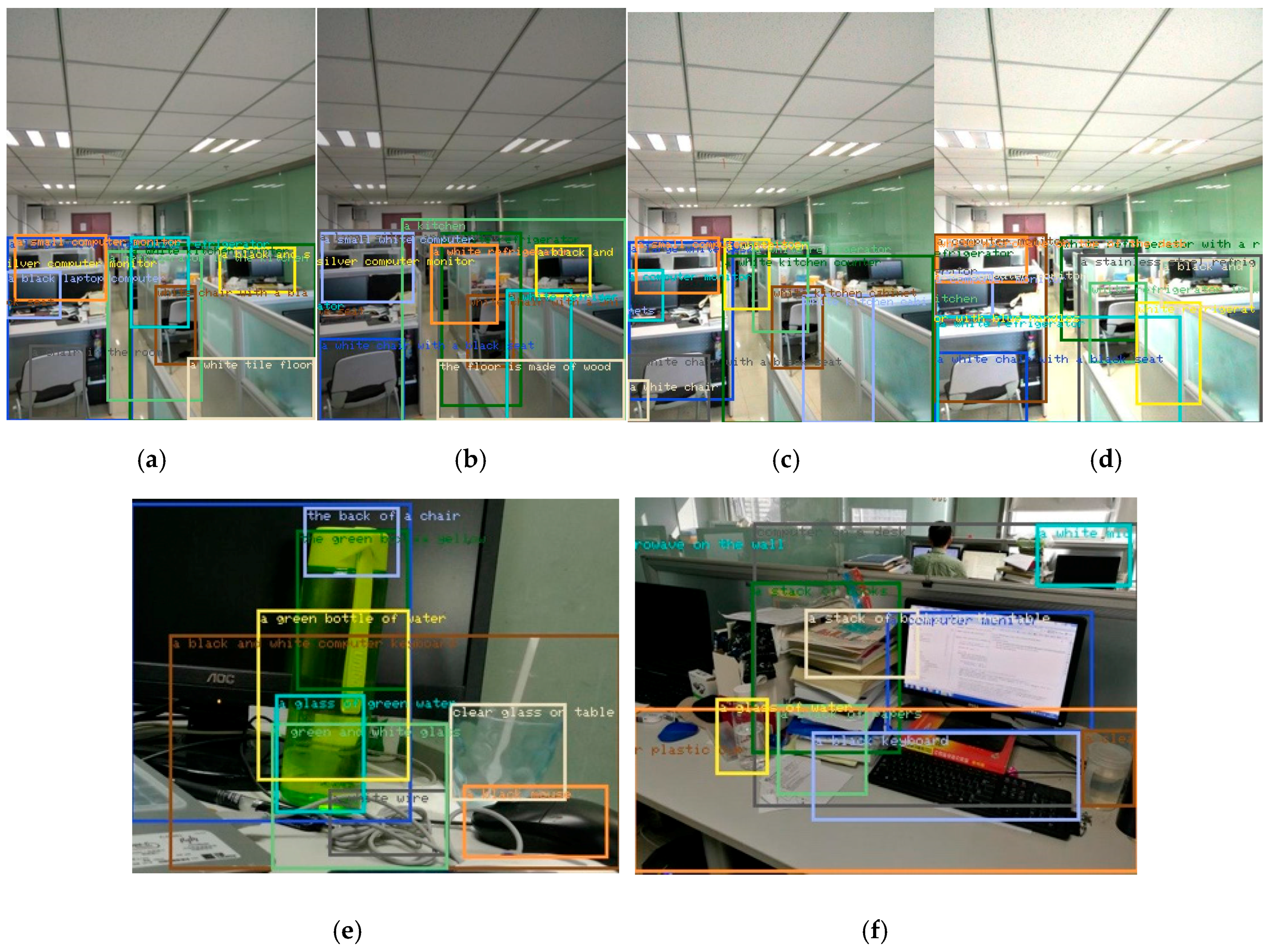

4.3. Qualitative Results Based on Feature Visualization

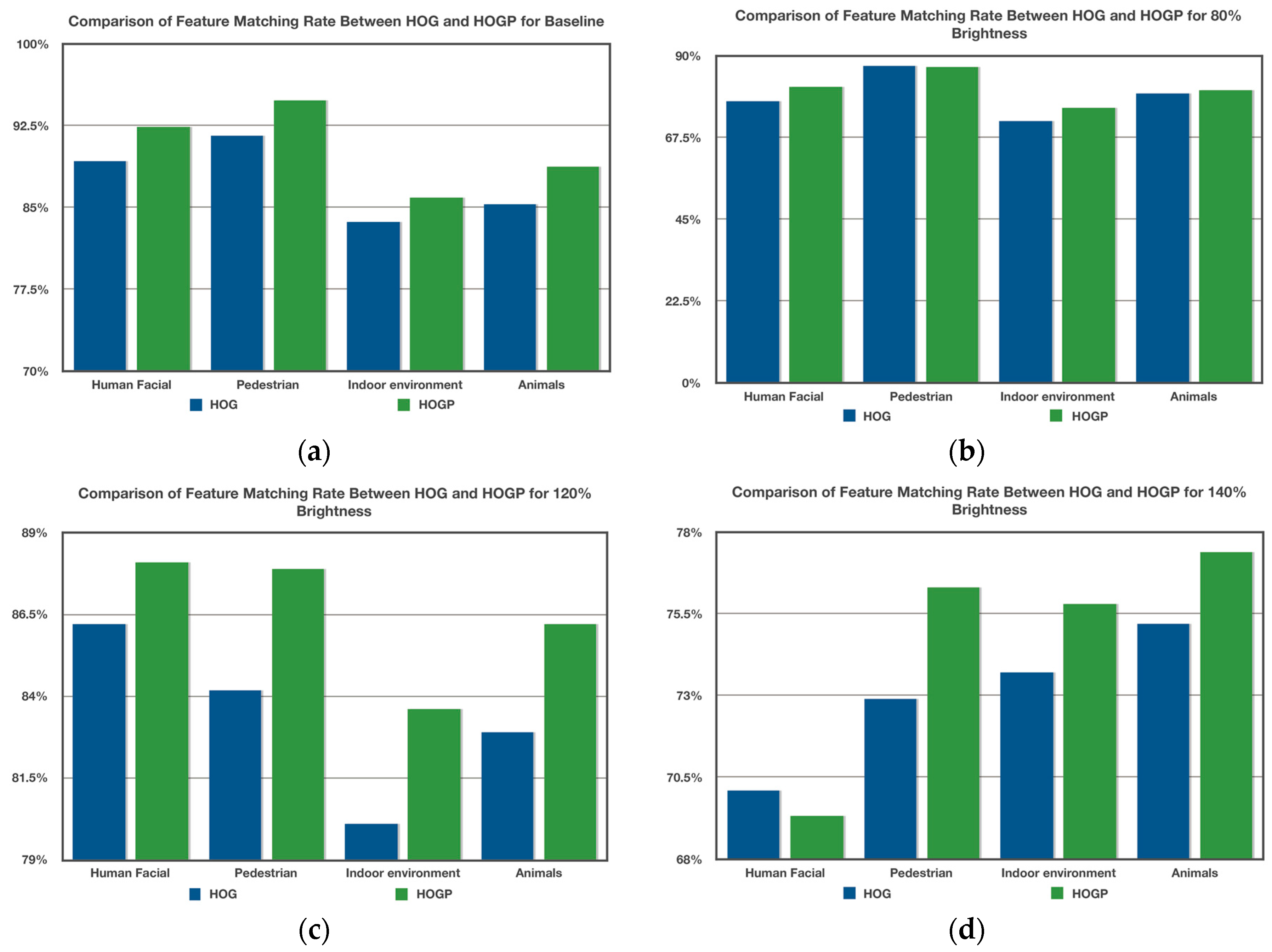

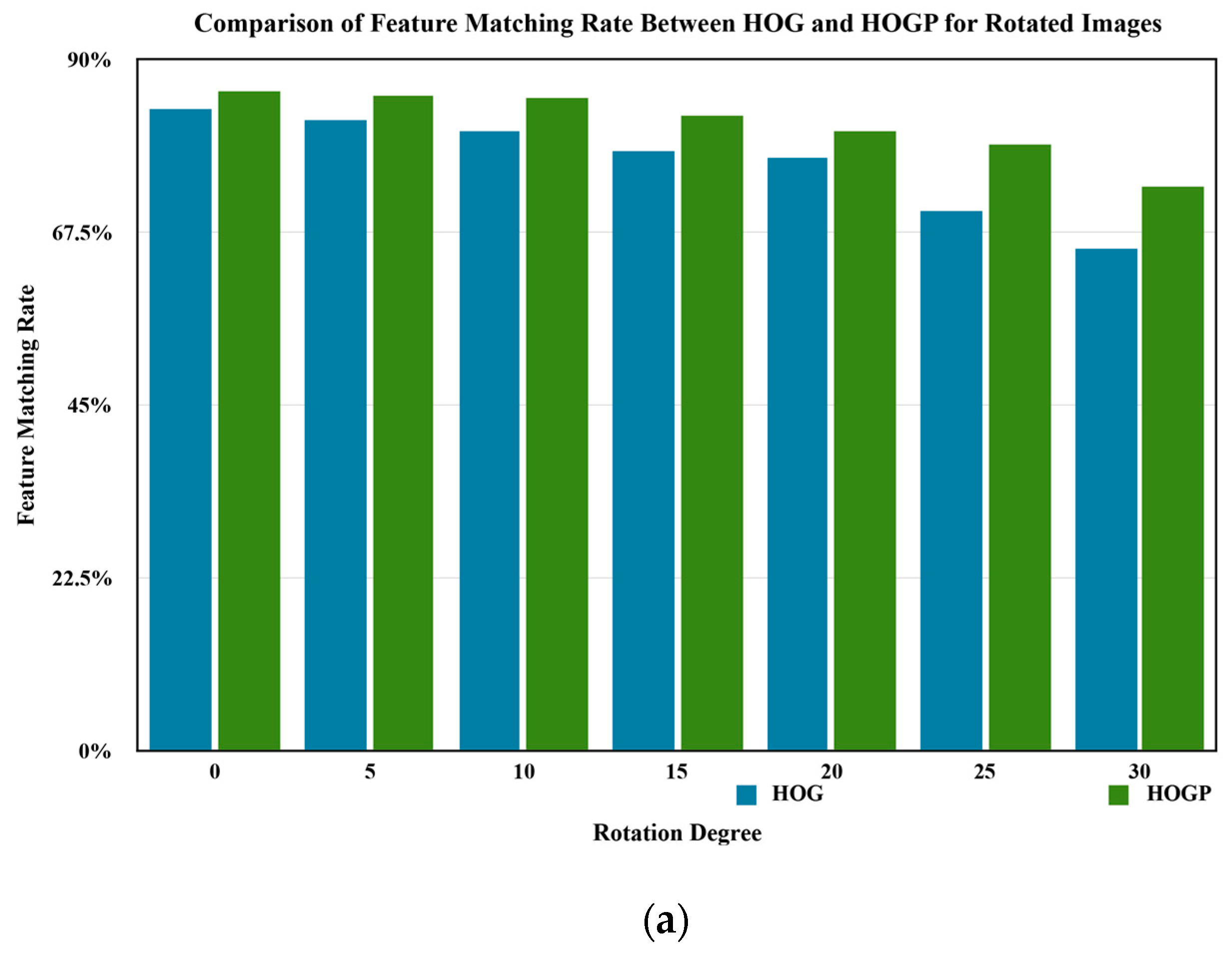

4.4. Quantitative Evaluation of the Image Feature Matching Rate

4.5. Runtime Comparison

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Runtime (s) by image (size in pixels) | Man (333 × 5000) | Desktop (1969 × 1513) | Lena (256 × 256) | Boy (443 × 543) |
|---|---|---|---|---|
| HOG + sparse dictionary | 92.191 | 1423.114 | 36.532 | 123.521 |
| HOGP + sparse dictionary | 91.342 | 1378.683 | 29.563 | 111.049 |
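
The relative runtime saving of HOGP over HOG can be read off the runtime table directly. The Python sketch below only re-derives percentages from the reported times (the names `hog`, `hogp` and `relative_reduction` are illustrative, not from the paper); it does not reproduce the experiment or the descriptors themselves.

```python
# Runtimes in seconds, copied from the runtime table
# (HOG vs. HOGP, both combined with a sparse dictionary).
hog = {"Man": 92.191, "Desktop": 1423.114, "Lena": 36.532, "Boy": 123.521}
hogp = {"Man": 91.342, "Desktop": 1378.683, "Lena": 29.563, "Boy": 111.049}


def relative_reduction(baseline: float, improved: float) -> float:
    """Fraction of the baseline runtime saved by the improved method."""
    return (baseline - improved) / baseline


# Per-image fraction of HOG runtime saved by HOGP.
reduction = {name: relative_reduction(hog[name], hogp[name]) for name in hog}

for name, r in reduction.items():
    print(f"{name:8s} {hog[name]:9.3f} s -> {hogp[name]:9.3f} s  ({r:.2%} less time)")
```

On these numbers the saving is about 19% for Lena and 10% for Boy, the two smallest images, and only about 3% or less for Desktop and Man.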

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jiao, J.; Wang, X.; Deng, Z. Build a Robust Learning Feature Descriptor by Using a New Image Visualization Method for Indoor Scenario Recognition. Sensors 2017, 17, 1569. https://doi.org/10.3390/s17071569