1. Introduction

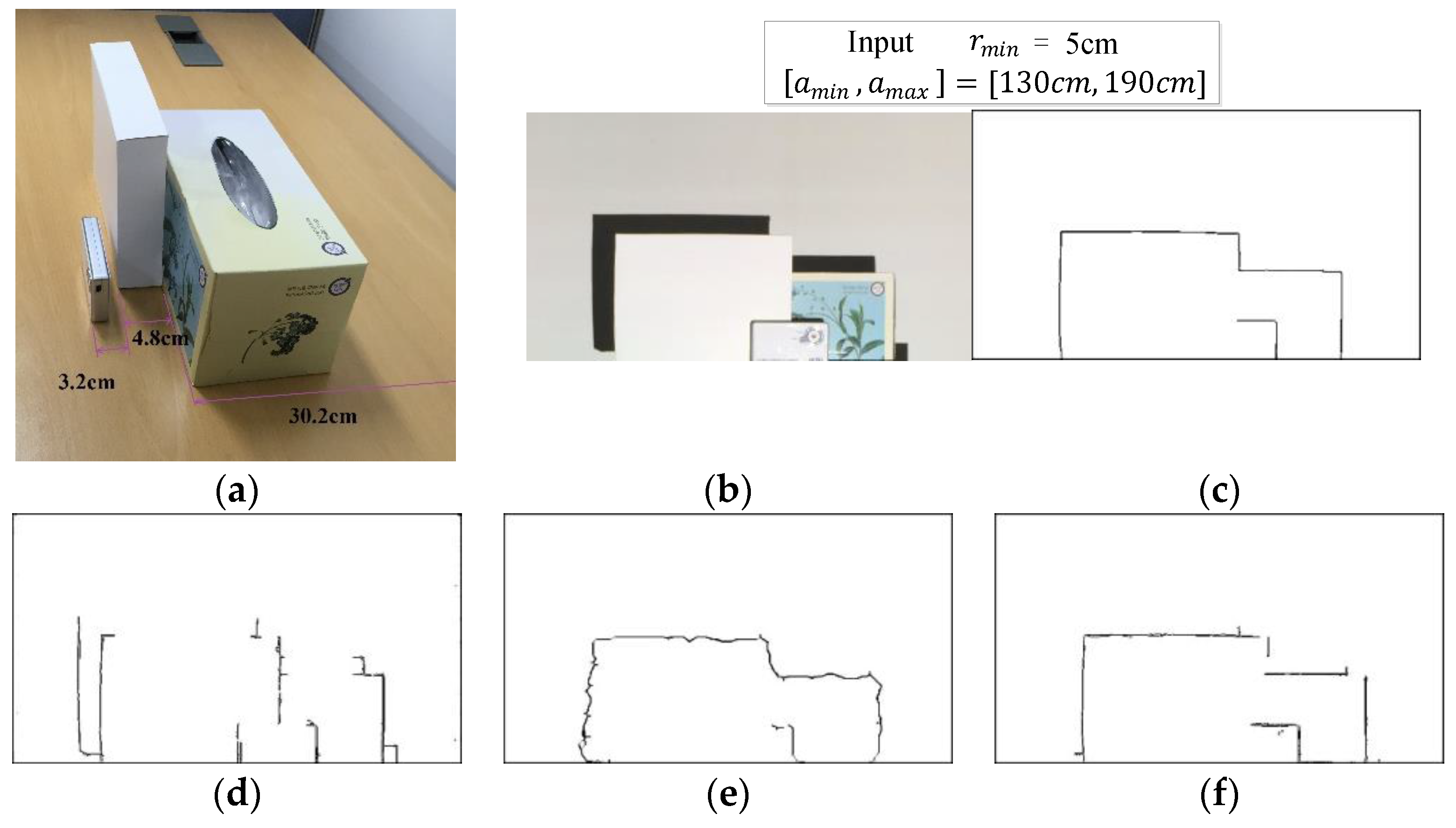

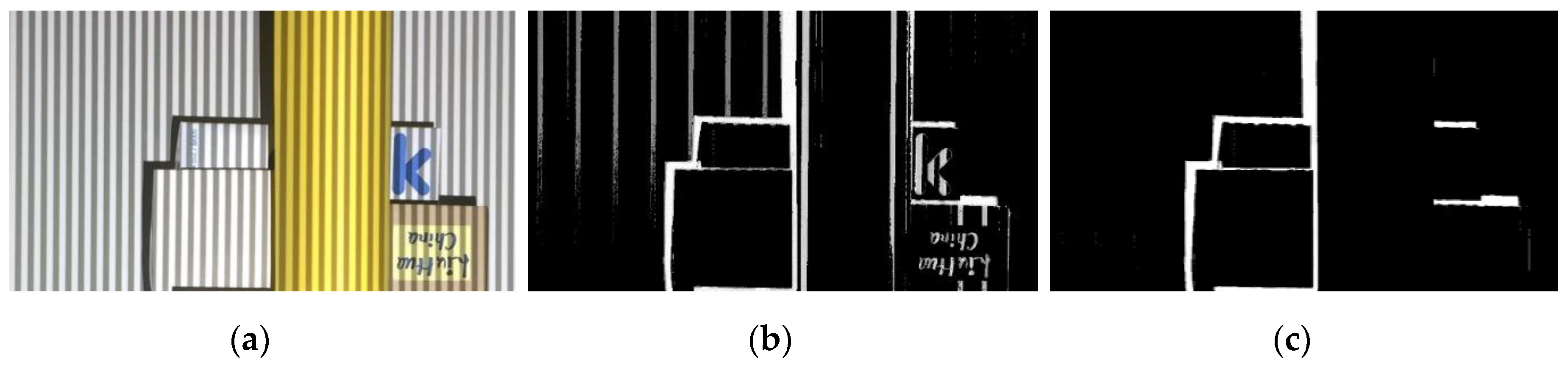

The goal of this work, as illustrated in Figure 1, is to accurately find all depth edges whose depth difference is at least a specified minimum, within a specified range of distances from the camera/projector. We call this “depth edge filtering”. We propose a structured light based framework that employs a single color pattern of vertical stripes with an associated binary pattern. Because the parameters involved are accurately controlled, the proposed method can be employed in applications where detecting object shape is essential, for example, when a robot manipulator needs to grasp an unknown object by identifying inner parts with a certain depth difference.

There has been a considerable amount of research on structured light imaging in the literature [1,2,3,4,5]. Here, we mention only a few recent works. Barone et al. [1] presented a coded structured light technique for surface reconstruction using a small set of stripe patterns. They coded the stripes as a De Bruijn sequence and decomposed the color stripes into binary stripes so that a monochromatic camera could be used. Ramirez et al. [2] provided a method to extract correspondences of static objects through structured light projection based on De Bruijn sequences. To improve the quality of depth maps, Shen et al. [3] presented an approach to depth completion and denoising. Most works have aimed at surface reconstruction, and only a few have addressed depth edge filtering. One notable technique creates a depth edge map for nonphotorealistic rendering [6]: a sequence of images is captured in which light sources at different positions illuminate the scene, and the shadows in each image are used to assemble a depth edge map. However, this technique cannot control parameters such as the range of distances from the camera/projector and the depth difference.

Earlier, a similar parameterized control of structured light imaging was presented in [7,8]. Those methods employed a pattern of black and white horizontal stripes of equal width and detected depth edges with at least a given depth difference in a specified range of distances. Since the exact amount of pattern offset along depth discontinuities in the captured image is related to the distance from the camera, they detected depth edges by finding detectable pattern offsets through thresholding of the Gabor amplitude. They computed the stripe width automatically by relating it to the amount of pattern offset.

A major drawback of the previous methods is that they did not address shadow regions. Regions that the projector light cannot reach appear as shadows in the captured image and result in double edges.

Figure 1d shows the result of the method in [8] for the given parameters; double edges and missing edges appear in the shadow regions. Furthermore, because simple black and white stripes are used, the exact amount of pattern offset may not be measurable, depending on the distance of the object from the camera. This deficiency requires employing several additional structured light patterns with the stripe width doubled, tripled, etc.

In this work, we present an accurate control of depth edge filtering by overcoming the disadvantages of the previous works [

7,

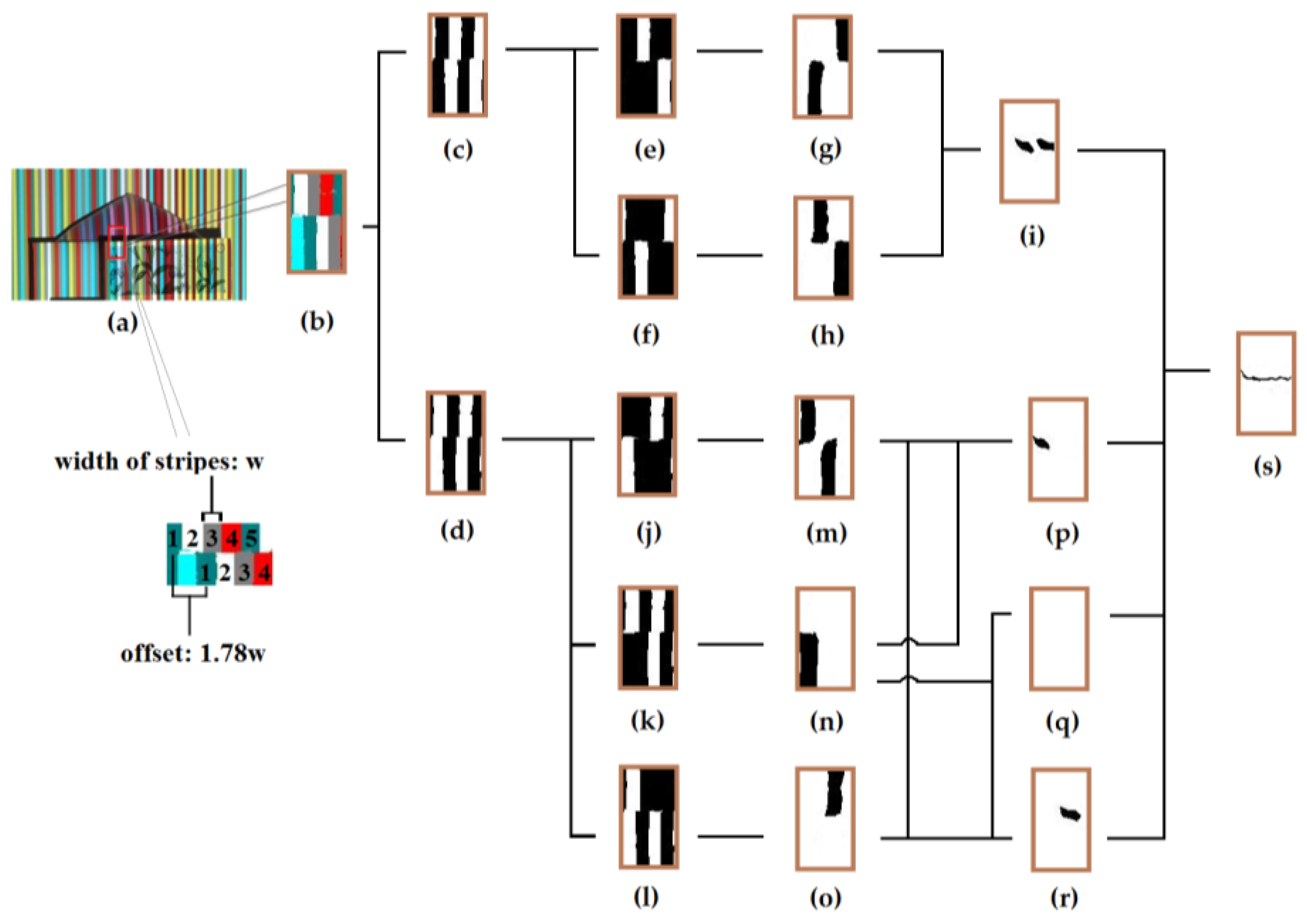

8]. We provide an overview of our method in

Figure 2. We opt to use a single color pattern of vertical stripes with an associated binary pattern as shown in

Section 3. The use of the binary pattern helps with recovering the original color of the color stripes accurately. We give the details in

Section 3. Given the input parameters,

and

, stripe width,

, is automatically computed to create the structured light patterns necessary to detect depth edges having depth difference greater or equal to

. We capture structured light images by projecting the structured light patterns on the scene. We first recover the original color of the color stripes in the structured light images in order not to be affected by the textures on the scene. Then, for each region of homogeneous color, we use a Support Vector Machine (SVM) classifier to decide whether a given region is from shadow or not. After that, we obtain color stripes pattern images by filling in shadow regions using the color stripes that otherwise have been projected there. We finally apply Gabor filtering to the pattern images to produce the depth edges with depth difference greater or equal to

.

We have compared the depth edge filtering performance of our method with that of [8] and of the Kinect sensor. Experimental results show that our method finds the desired depth edges most accurately, whereas the other methods do not. The main contribution of our work is accurate control of depth edge filtering, achieved by a novel method for effectively identifying color stripes and removing shadows in the structured light image.

2. Parameterized Structured Light Imaging

By parameterized structured light imaging, we refer to structured light imaging techniques that can control the associated parameters. To the best of our knowledge, Park et al.’s work [7] was the first of its kind. In our case, the controlled parameters are the minimum depth difference, a target range of distances, and the stripe width. The basic idea in [7] is to detect depth edges by exploiting pattern offsets along depth discontinuities. To detect depth discontinuities, a white light and a structured light are projected onto the scene in turn, and a binary pattern image is extracted by differencing the white light and structured light images. This differencing effectively removes texture edges, after which the locations where pattern offsets occur are detected to produce depth edges. In contrast, we achieve the effect of texture edge removal by recovering the original colors of the stripes in the color structured light image. Details are given in the following sections.
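The differencing idea of [7] can be illustrated with a small synthetic example. This is a minimal sketch, not the implementation of [7]: it assumes a linear camera response in which the surface albedo multiplies both images, and the decision ratio of 0.5 (a pixel counts as lit if it keeps more than half of its white-light intensity) is a hypothetical choice.

```python
import numpy as np


def binary_pattern_from_difference(white: np.ndarray, structured: np.ndarray,
                                   ratio: float = 0.5) -> np.ndarray:
    """Return True where a white stripe lights the pixel, False under black stripes.

    Under a black stripe the pixel loses most of its white-light intensity, so
    (white - structured) is large compared with the white-light image itself.
    Comparing the difference with a fraction of the white image cancels the
    surface albedo, which is why texture edges do not survive.
    """
    white = white.astype(float)
    diff = white - structured.astype(float)
    return diff < ratio * white


# Synthetic scene: two surface albedos (a texture edge) under vertical stripes of width 2.
albedo = np.ones((4, 8))
albedo[:, 4:] = 0.3
stripes = np.array([1, 1, 0, 0, 1, 1, 0, 0])
white_img = 200.0 * albedo
structured_img = 200.0 * albedo * stripes

pattern = binary_pattern_from_difference(white_img, structured_img)
print(pattern[0].astype(int))  # [1 1 0 0 1 1 0 0]: the stripes survive, the albedo step does not
```

The comparison is made relative to the white-light image rather than against a fixed threshold, because the raw difference still scales with the albedo.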

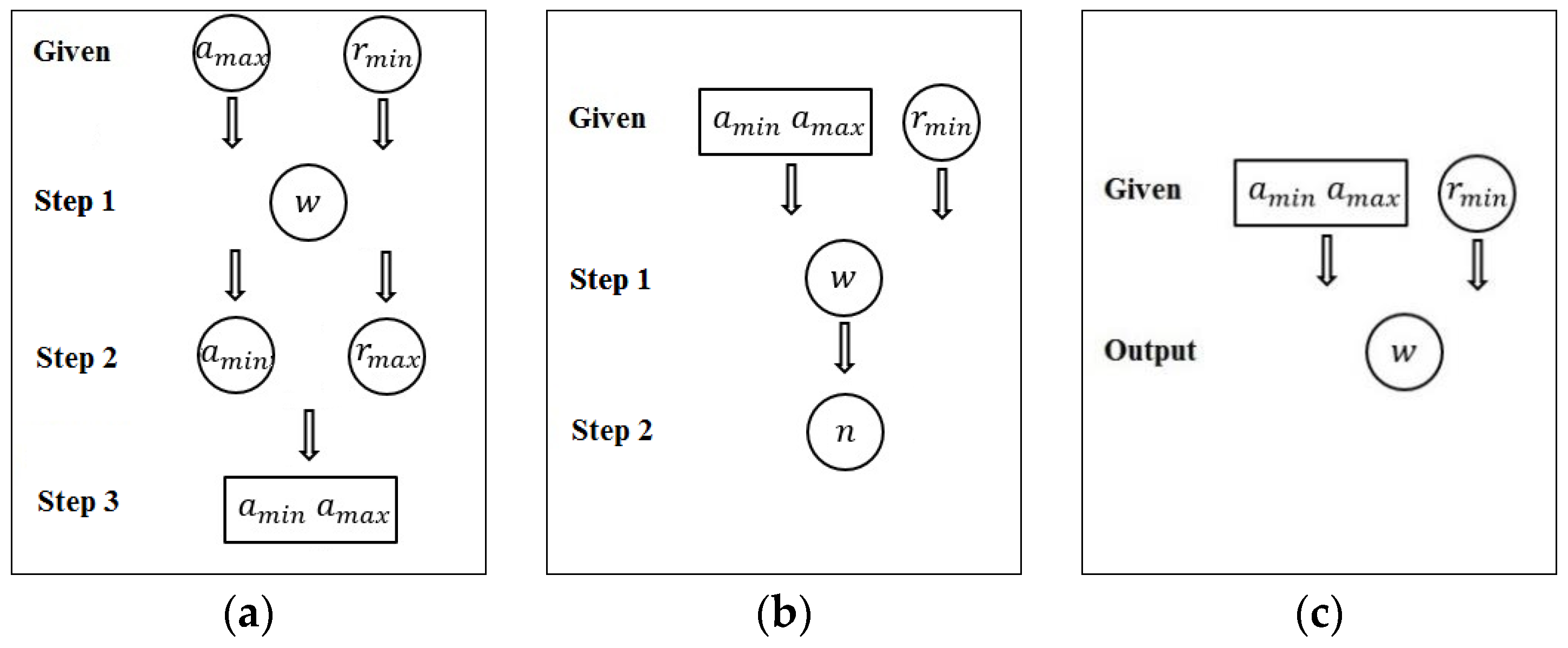

Figure 3a shows the control of parameters developed in [7]: two parameters are given as inputs, and then the stripe width and one further parameter are determined. However, it was awkward that this further parameter is found at a later step from the other parameters. A substantial improvement over this method was made in [8], where the minimum depth difference and the range of distances are given as the input parameters. Given these inputs, the method provides the stripe width and the number of structured light images, as shown in Figure 3b. The authors also showed its application to the detection of silhouette information for visual hull reconstruction [9]. In our work, we achieve much simpler control of the key parameters by employing a color pattern, as can be seen in Figure 3c. While the methods in [7,8] need several structured light images, we use a single color pattern and an associated binary pattern.

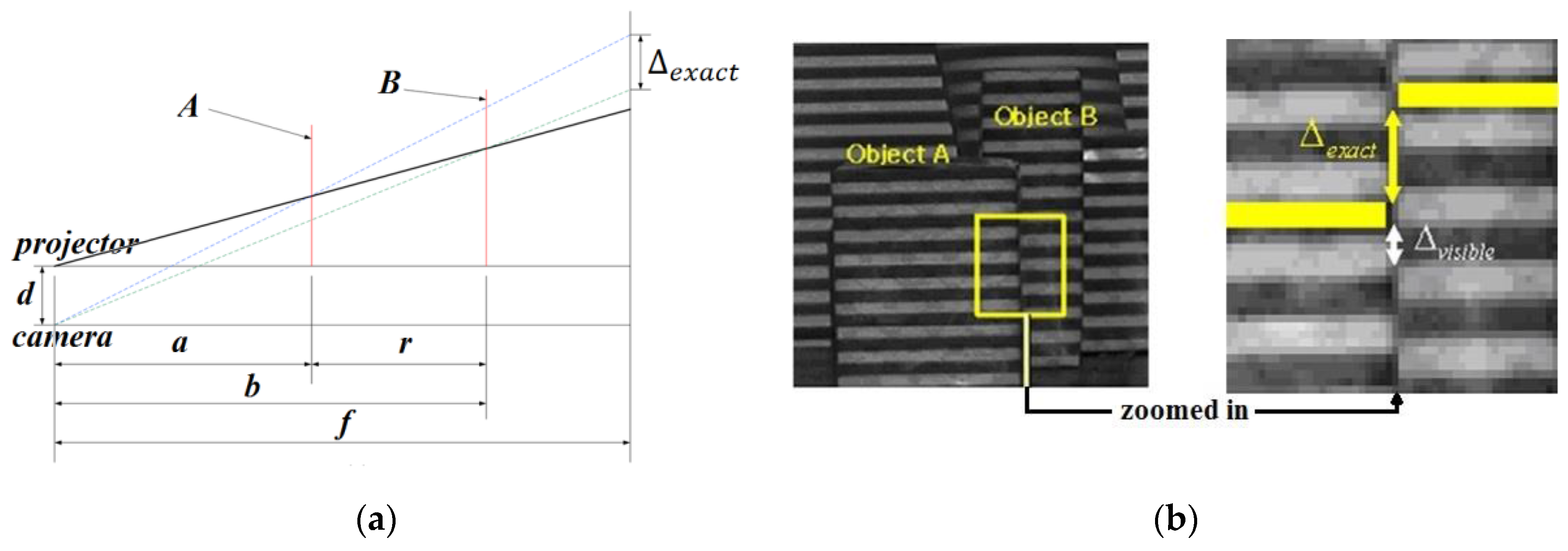

To better describe our method, let us revisit the key Equation (1) in [7,8], which models the imaging geometry of a camera, a projector and an object. This equation is easily derived from the geometry in Figure 4 using similar triangles. It relates the distance a of object location A from the projector/camera, the distance of object location B, and the distance of the virtual image plane from the camera to the exact amount of pattern offset observed when the depth difference between A and B is r.
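For reference, a standard similar-triangles derivation of this kind of relation is sketched below. Beyond what is stated above, it assumes that the projector and camera are separated by a baseline d parallel to the virtual image plane at distance f, that A and B lie on the same projector ray (with direction tangent t), and it writes b = a + r and Δ for the offset; it illustrates the geometry and is not necessarily the exact notation of [7,8].

$$
u(z) = \frac{f\,(t z - d)}{z} = f t - \frac{f d}{z},
\qquad
\Delta = u(b) - u(a) = f d\left(\frac{1}{a} - \frac{1}{b}\right) = \frac{f\,d\,r}{a\,(a + r)}.
$$

Because Δ decreases monotonically as a grows, under this model the smallest offset within a range of distances occurs at its far end.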

Because [7,8] used simple black and white stripes of equal width, the exact amount of pattern offset may not be measurable, depending on the distance of the object from the camera. The observable amount of pattern offset is periodic as the distance of the object from the camera increases or decreases. With r and d fixed, the relation between the observable offset and a shows that there are ranges of distances where depth edges are difficult to detect because of the lack of visible offset, even though the exact offset is significant (see Figure 5). Those methods set a minimum amount of pattern offset needed to reliably detect depth edges. To extend the detectable range, additional structured light patterns with the stripe width doubled, tripled, etc. are employed to fill the gaps in Figure 5, which extends the corresponding range of object locations. In contrast, because we use a color stripe pattern, the observable offset is equivalent to the exact offset. Thus, there is no need to employ several pattern images.
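To make the periodicity concrete, the sketch below compares the exact offset with what a black and white pattern can reveal. It assumes the similar-triangles offset Δ = f·d·r / (a(a + r)) with d the projector-camera baseline, and it models the visible offset of equal-width black and white stripes (period 2w) as the exact offset folded into [0, w]; both the model and the numbers are illustrative assumptions.

```python
def exact_offset(f: float, d: float, r: float, a: float) -> float:
    """Assumed similar-triangles offset for a depth step r at distance a (baseline d)."""
    return f * d * r / (a * (a + r))


def visible_offset_bw(delta: float, w: float) -> float:
    """Offset that equal-width black/white stripes (period 2w) can reveal.

    A shift by a whole period looks like no shift, and a shift of 2w - x looks
    like a shift of x in the opposite direction, so the visible offset folds
    into [0, w].
    """
    m = delta % (2.0 * w)
    return min(m, 2.0 * w - m)


if __name__ == "__main__":
    # Hypothetical values: f and w in pixels; d, r and a in millimetres.
    f, d, r, w = 1000.0, 200.0, 50.0, 4.0
    for a in (800.0, 1000.0, 1500.0, 2000.0, 3000.0):
        delta = exact_offset(f, d, r, a)
        print(f"a={a:6.0f}  exact={delta:6.2f}  visible(b/w)={visible_offset_bw(delta, w):5.2f}")
```

A large exact offset can fold to a small visible one, which is the gap in Figure 5. A color pattern whose three-stripe windows are unique avoids this folding as long as the offset stays within the span over which those windows are unique.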

3. Use of Color Stripes Pattern

We opt to use color stripe patterns, with which we can extend the range of distances by filling in the gaps in Figure 5. We consider a discrete spatial multiplexing method a proper choice [10] because it shows negligible errors and needs only a simple matching algorithm. We employ four colors, red, cyan, yellow and white, each in two versions, bright and dark. That is, their RGB (Red, Green and Blue) values are [L,0,0], [0,L,L], [L,L,0] and [L,L,L], with L = 255 or 128, where L denotes the lightness intensity. To create a color pattern, we exploit De Bruijn sequences [11] with a window length of 3; that is, every run of three consecutive color stripes is unique within a neighborhood. This property helps identify each stripe in the image captured by the camera.
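A De Bruijn sequence of order 3 over the four colors can be generated with the standard recursive (FKM, Lyndon-word) construction. A lookup table from three-stripe windows to stripe positions then identifies a stripe from its neighbors. This is a sketch under assumptions: the text does not say which construction was used, whether adjacent stripes of the same color are excluded, or how the sequence is laid out across the projector image.

```python
COLORS = ("red", "cyan", "yellow", "white")


def de_bruijn(k: int, n: int) -> list[int]:
    """Cyclic De Bruijn sequence B(k, n): every length-n word over k symbols appears once."""
    a = [0] * (k * n)
    sequence: list[int] = []

    def db(t: int, p: int) -> None:
        if t > n:
            if n % p == 0:
                sequence.extend(a[1:p + 1])
        else:
            a[t] = a[t - p]
            db(t + 1, p)
            for j in range(a[t - p] + 1, k):
                a[t] = j
                db(t + 1, t)

    db(1, 1)
    return sequence


def window_index(stripes: list[str], n: int = 3) -> dict[tuple[str, ...], int]:
    """Map every run of n consecutive stripes to the position of its first stripe."""
    index: dict[tuple[str, ...], int] = {}
    for i in range(len(stripes) - n + 1):
        key = tuple(stripes[i:i + n])
        if key in index:
            raise ValueError(f"window {key} is not unique")
        index[key] = i
    return index


stripes = [COLORS[s] for s in de_bruijn(len(COLORS), 3)]
index = window_index(stripes)
print(len(stripes), len(index))   # 64 62: 64 stripes, 62 windows that do not wrap around
observed = tuple(stripes[20:23])  # three consecutive stripes as seen by the camera
print(observed, "-> stripe", index[observed])  # position 20
```

Because the sequence is cyclic, the two windows that wrap around the end are absent from the non-cyclic lookup table; every other window maps to exactly one position.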

Additionally, we use an associated binary stripes of which RGB values can be represented as [

L,

L,

L],

L = 255 or 128. That is, we also make use of bright (

L = 255) and dark (

L = 128) versions for binary stipes. We have designed the stripe patterns so that in both color stripes and binary stripes, bright and dark stripes appear alternately. The color stripes are associated with the binary stripes so that bright stripes in the color pattern correspond to dark stripes in the binary pattern. Refer to

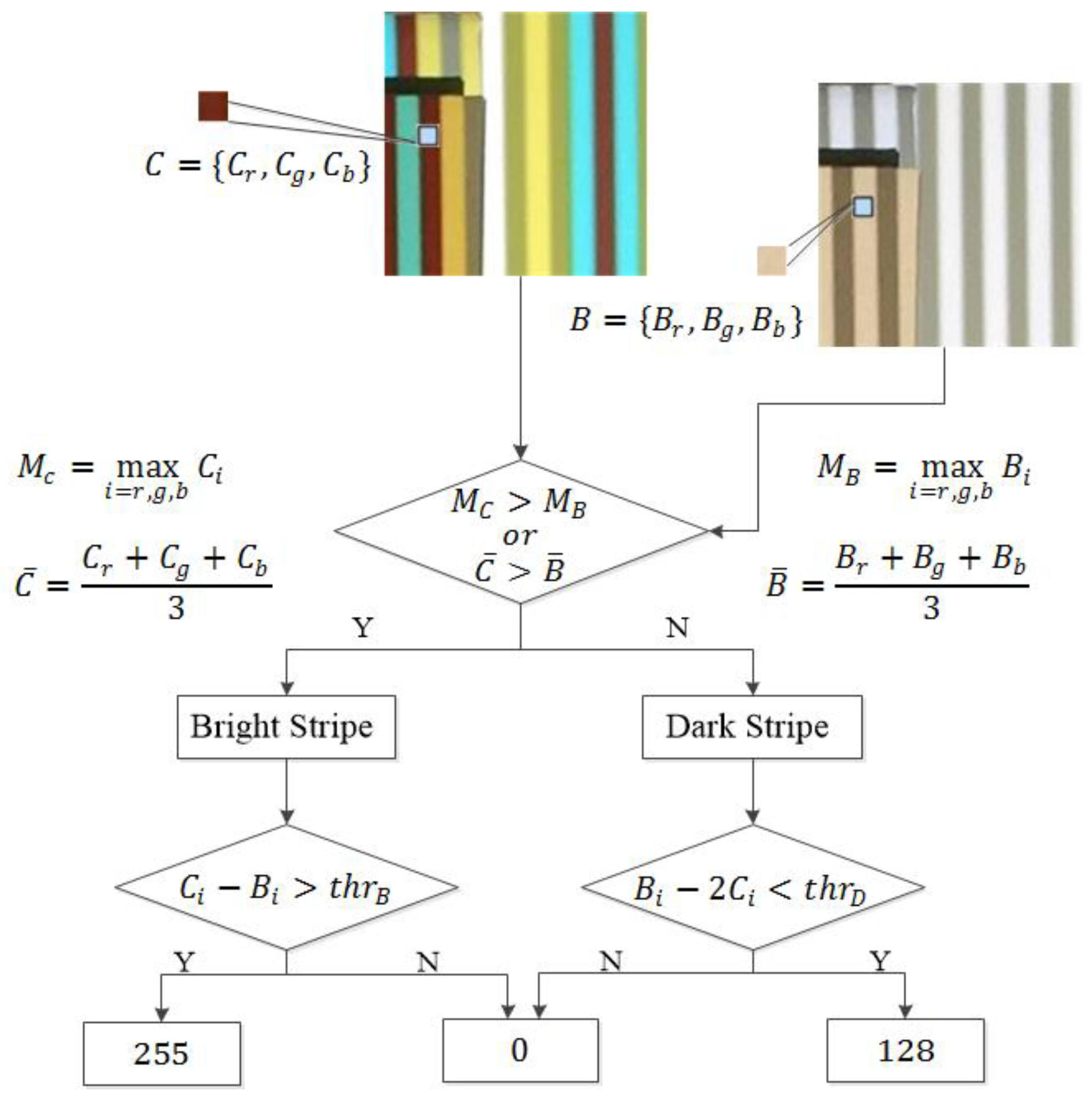

Figure 6. This setting indeed greatly facilitates the solution when recovering the original color of color stripes in the color structured light image by referencing the lightness of binary stripes in the binary structured light image.
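The pairing rule can be written down directly. This sketch assumes that the pattern starts with a bright color stripe and returns one RGB triple per stripe; rendering each stripe to a given pixel width is left out.

```python
BASE = {"red": (1, 0, 0), "cyan": (0, 1, 1), "yellow": (1, 1, 0), "white": (1, 1, 1)}
BRIGHT, DARK = 255, 128


def stripe_pair(colors):
    """Return (color_stripes, binary_stripes) as lists of RGB triples.

    Stripes alternate bright/dark (starting bright, an assumption), and every
    bright color stripe is paired with a dark binary stripe and vice versa.
    """
    color_rgb, binary_rgb = [], []
    for i, name in enumerate(colors):
        lightness = BRIGHT if i % 2 == 0 else DARK
        binary_lightness = DARK if lightness == BRIGHT else BRIGHT
        color_rgb.append(tuple(lightness * c for c in BASE[name]))
        binary_rgb.append((binary_lightness,) * 3)
    return color_rgb, binary_rgb


color, binary = stripe_pair(["red", "cyan", "yellow", "white"])
print(color)   # [(255, 0, 0), (0, 128, 128), (255, 255, 0), (128, 128, 128)]
print(binary)  # [(128, 128, 128), (255, 255, 255), (128, 128, 128), (255, 255, 255)]
```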

The most attractive advantage of employing a color stripe pattern based on a De Bruijn sequence is that the exact amount of pattern offset is the same as the amount of visible pattern offset. We can therefore safely set the minimum amount of pattern offset necessary for detecting depth edges. In addition, we follow the setting used in [7,8]. Thus, the stripe width is computed using Equation (2) [8].

4. Recovery of the Original Color of Stripes

Recovering the original color of the color stripes in the structured light image amounts to determining the lightness L in each color channel. We use the associated binary image as a reference to avoid decision errors.

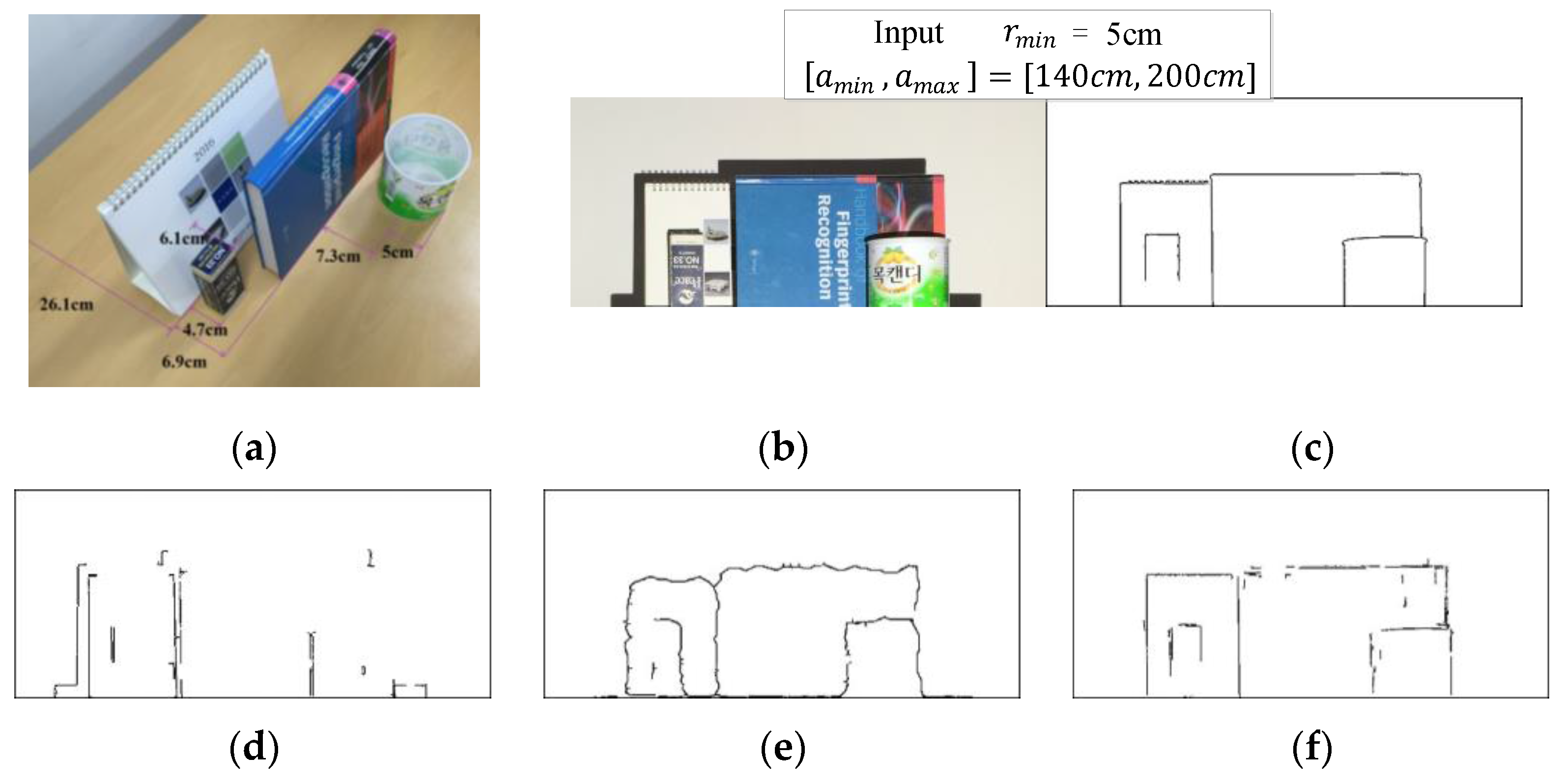

Figure 7 shows the procedure for recovering the original color of the color stripes. The procedure consists of two steps. For every pixel in the color structured light image, we first decide whether it comes from a bright (L = 255) or a dark (L = 128) color stripe. Then, we recover the value of L in each color channel.

Let C and B denote a pixel in the color structured light image and its corresponding pixel in the binary structured light image, respectively, each with three RGB channel values. Since bright stripes in the color pattern correspond to dark stripes in the binary pattern, a pixel from a bright color stripe very likely appears brighter than its corresponding pixel from the binary stripe when both are projected onto the scene. Thus, in most cases, we can make a correct decision simply by comparing the maximum channel value of C with that of B. However, since the RGB values of stripes in the captured images are affected by the object surface color, decision errors may occur, especially for pixels on object surfaces that have high values in one channel. For example, when the object surface color is pure blue [0,0,255] and the color stripe is bright red [255,0,0], the RGB values of a pixel on the object surface in the color and binary structured light images can appear as [200,5,200] and [100,100,205], respectively. In this case, comparing only the maximum channel values gives a wrong answer. Hence, we employ an additional criterion that compares the average value of all three channels. Through numerous experiments, we have confirmed that this simple scheme correctly classifies pixels as bright or dark.
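The two cues can be combined in a few lines. The combination below, an OR of the two comparisons in which a tie in the channel averages counts as bright, is a hypothetical choice for illustration. It matters for the example above, where the channel averages come out equal (135 for both pixels) while the maximum-channel test alone says "dark".

```python
def mean(values):
    """Arithmetic mean of a sequence of channel values."""
    return sum(values) / len(values)


def is_bright_color_stripe(c, b):
    """Decide whether a color-image pixel c comes from a bright color stripe.

    c, b: RGB triples of the same pixel in the color and the binary structured
    light image. The primary cue compares maximum channel values; the secondary
    cue compares channel averages. Combining them with OR, and letting ties in
    the average count as bright, are assumptions made for this sketch.
    """
    return max(c) > max(b) or mean(c) >= mean(b)


print(is_bright_color_stripe((200, 5, 200), (100, 100, 205)))  # True: the example above
print(is_bright_color_stripe((20, 60, 60), (190, 200, 210)))   # False: a dark stripe pixel
```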

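Next, we decide the value of L in each channel. Since luminance and ambient reflected light can vary from situation to situation, we take an adaptive thresholding scheme. For a pixel in a bright color stripe, each channel of the original color is either 255 or 0, and the corresponding binary stripe is dark. A channel value is decided directly when a simple condition on the observed values holds; otherwise, it is decided with the adaptive threshold of Equation (3), whose parameter is computed from training samples. For a pixel in a dark color stripe, each channel of the original color is either 128 or 0, and the corresponding binary stripe is bright. Again, a channel value is decided directly when a simple condition holds; otherwise, it is decided with the threshold of Equation (4), whose two parameters are estimated from training samples. We set a bias to ensure that the threshold term is positive most of the time; this is necessary to handle positive observed values when the unbiased term is negative.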

The relationship between the original color and the captured color is nonlinear. We use a simple statistical method to determine the three parameters of Equations (3) and (4). We collect a series of image sets. Each set comprises three images captured by projecting black [0,0,0], gray [128,128,128] and white [255,255,255] light, respectively; they can be viewed as three image matrices that experimentally simulate the observed black, gray and white colors. Note that every image was taken in the same ambient environment. Usually, the more training samples we collect, the more representative the estimated parameters are; in practice, however, hundreds of samples are sufficient for our estimation. We build the scenes with a variety of objects of different shapes and textures. We estimate the parameter of Equation (3) using Equation (5), where N is the number of sets, the functions involved are applied element-wise, and the index i denotes the ith set. In bright stripes, we already know that the ideal difference is 127 (= 255 - 128) when the channel value is assigned 255 in the pattern. Equation (5) samples the relationship between the maximum and minimum observed lightness when the patterns are projected onto the scene, and gives the smallest observed value of this difference. If the observed difference is greater than this value in a channel, we take the channel value to be 255.

We initially model the threshold by scaling, as in Equation (6). This model shares the idea behind Equation (3): it makes the threshold the smallest value that the observed channel value could take. However, in dark stripes, the scaled quantity is close to zero, and simply scaling it does not change its sign, which might lead to an inappropriate decision. To alleviate external interference, we slightly adjust this threshold model according to an observed reference quantity: we increase the threshold when it is rather large and decrease it otherwise. Since the black, gray and white calibration images give the observed lightness values for L = 0, 128 and 255, respectively, they provide an estimate of the quantity needed for this adjustment, and we adjust the threshold based on the corresponding difference. Hence, we approximate the threshold as in Equation (7); the threshold for dark stripes is altered slightly depending on the sign of this difference. The three parameters were determined empirically in our experiments.
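The exact threshold models are Equations (3) to (7). The sketch below substitutes a simpler, hypothetical ratio rule to show how black/gray/white calibration images can drive per-channel decisions: a scale factor is taken as the smallest (or a low-percentile) observed ratio between the white and gray images, and a channel is declared lit if it reaches that scale times the binary reference pixel. The names `estimate_scales` and `recover_channels`, the linear synthetic camera and the ambient level of 10 are all assumptions.

```python
import numpy as np


def estimate_scales(gray_imgs, white_imgs, q=0.0, eps=1.0):
    """Per-channel scale factors from calibration sets (hypothetical stand-in for Eqs. (3)-(7)).

    gray_imgs, white_imgs: lists of HxWx3 images captured under gray (128) and
    white (255) projector light. q=0 takes the smallest observed ratio; a small
    positive q (a low percentile) is more robust to noise and shadows.
    """
    g = np.stack([np.asarray(x, float) for x in gray_imgs]).reshape(-1, 3)
    w = np.stack([np.asarray(x, float) for x in white_imgs]).reshape(-1, 3)
    s_bright = np.percentile(w / np.maximum(g, eps), q, axis=0)  # 255-channel vs gray reference
    s_dark = np.percentile(g / np.maximum(w, eps), q, axis=0)    # 128-channel vs white reference
    return s_bright, s_dark


def recover_channels(c, b, bright, s_bright, s_dark):
    """Recover the original per-channel lightness of one stripe pixel.

    c, b: observed RGB of the pixel in the color and binary images. In a bright
    color stripe the binary pixel saw gray light, so a 255-channel should reach
    at least s_bright * b; in a dark stripe the binary pixel saw white light, so
    a 128-channel should reach at least s_dark * b.
    """
    c = np.asarray(c, float)
    b = np.asarray(b, float)
    if bright:
        return np.where(c >= s_bright * b, 255, 0)
    return np.where(c >= s_dark * b, 128, 0)


# Synthetic calibration: albedo between 0.3 and 1.0 plus an ambient level of 10.
rho = np.linspace(0.3, 1.0, 8).reshape(1, 8, 1) * np.ones((1, 8, 3))
gray = 10 + 128 * rho
white = 10 + 255 * rho
s_bright, s_dark = estimate_scales([gray], [white])

# Bright red stripe and dark cyan stripe, both on a surface with albedo 0.5.
print(recover_channels((137.5, 10, 10), (74, 74, 74), True, s_bright, s_dark).tolist())        # [255, 0, 0]
print(recover_channels((10, 74, 74), (137.5, 137.5, 137.5), False, s_bright, s_dark).tolist())  # [0, 128, 128]
```

A ratio rule like this adapts to surface albedo through the binary reference pixel, but it does not model ambient light explicitly; the adjustment described for Equation (7) addresses exactly that weakness.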

Lastly, we check whether the recovered color is one of the four colors we adopt. If not, we change it to the most probable of the four colors. We achieve this in two steps: (1) compare the recovered color with each of the four default colors to count how many channels match; (2) among the colors with the most matching channels, choose the one with the minimum threshold difference over the mismatching channels.
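The two-step correction can be sketched as follows. The inputs are assumed to be the per-channel on/off decisions from thresholding and, for each channel, the absolute difference between the observed value and its threshold (a small difference means an uncertain decision); breaking any remaining tie by color name is an arbitrary choice of this sketch.

```python
PALETTE = {"red": (1, 0, 0), "cyan": (0, 1, 1), "yellow": (1, 1, 0), "white": (1, 1, 1)}


def snap_to_palette(on, margin):
    """Map a recovered on/off color to the most probable palette color.

    on:     per-channel decisions (1 = lit, 0 = unlit) from thresholding.
    margin: per-channel |observed value - threshold|.
    Step 1 prefers the fewest mismatching channels (most matches); step 2 breaks
    ties by the smallest total margin over the mismatching channels.
    """
    scores = []
    for name, ref in PALETTE.items():
        mismatched = [k for k in range(3) if on[k] != ref[k]]
        scores.append((len(mismatched), sum(margin[k] for k in mismatched), name))
    return min(scores)[2]


print(snap_to_palette((1, 0, 1), (50, 5, 30)))   # white: flipping the uncertain green channel is cheapest
print(snap_to_palette((1, 0, 1), (50, 40, 30)))  # red: flipping blue is cheaper
print(snap_to_palette((0, 1, 1), (9, 9, 9)))     # cyan: already a palette color
```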

Figure 8 shows an experimental result of recovering the original color of the color stripes. In Figure 8b, the gray and green areas in the lower part and the noisy areas around the main objects correspond to shadow regions. Because shadow regions are colorless, color assignment there is meaningless. As previously stated, we can ignore texture edges on object surfaces by considering the original color of the color stripes.

Although empirically determined parameters are used, the whole procedure works well in non-shadow regions. However, the recovered color is meaningless in shadow regions, where the stripe patterns are totally lost. We detect shadow regions and fill them with the color stripes that would otherwise have been projected there. Details follow in the next section.

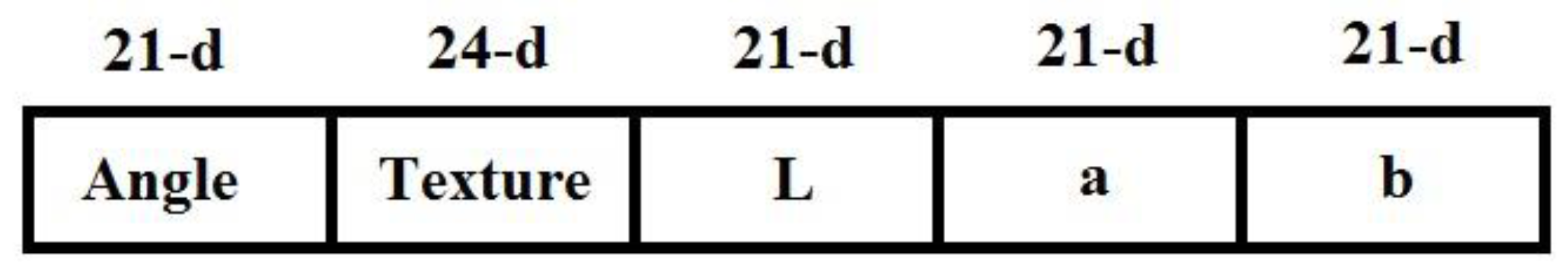

6. Depth Edge Detection

We use Gabor filtering for depth edge detection, as in [7,8], where Gabor filtering was applied to black and white stripe patterns to find where the spatial frequency of the stripe pattern breaks. Because depth edge detection using Gabor filtering can only be applied to a binary pattern, we consider the bright stripe pattern and the dark stripe pattern separately to create binary patterns, as shown in Figure 11c,d, respectively. Along the depth edge in Figure 11, the upper stripe is supposed to have a different color from the lower one. We exploit this color information to detect potential depth edge locations by applying Gabor filtering to binary pattern images in which the binary stripes of each color are deleted in turn. Note that, as long as there are pattern offsets in the original color pattern image, the amount of offset in these binary patterns becomes larger than the original offset. This makes the Gabor response more sensitive to changes in the periodic patterns. Similar to the previous work [7], we additionally use texture edges to improve the localization of depth edges. To obtain texture edges, we synthesize a grayscale image of the scene without stripe patterns simply by averaging, for each pixel, the maximum channel values of the color pattern image and the binary pattern image.
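The averaging works because every palette color has at least one channel at the stripe lightness L, while the paired binary stripe has the complementary lightness (255 + 128 - L). The per-pixel sum of maximum channels is therefore constant before surface reflectance is applied. The sketch below checks this on synthetic data, assuming a linear camera and a neutral (gray) surface; on strongly colored surfaces the cancellation is only approximate.

```python
import numpy as np

# Palette colors (on/off per channel) and the alternating lightness of each stripe.
PALETTE = np.array([[1, 0, 0], [0, 1, 1], [1, 1, 0], [1, 1, 1]], dtype=float)  # red, cyan, yellow, white
LIGHTNESS = np.array([255.0, 128.0, 255.0, 128.0])


def synthesize_gray(color_img: np.ndarray, binary_img: np.ndarray) -> np.ndarray:
    """Stripe-free grayscale image: per-pixel mean of the max channels of both images."""
    return 0.5 * (color_img.max(axis=2) + binary_img.max(axis=2))


# Projected stripes, each 2 pixels wide; binary stripes have the complementary lightness.
color_proj = np.repeat(PALETTE * LIGHTNESS[:, None], 2, axis=0)                     # (8, 3)
binary_proj = np.repeat((383.0 - LIGHTNESS)[:, None] * np.ones((1, 3)), 2, axis=0)  # (8, 3)

# Neutral surface with a horizontal texture edge; the camera is assumed linear.
albedo = np.full((4, 8), 0.9)
albedo[2:, :] = 0.4
color_img = albedo[..., None] * color_proj[None, :, :]
binary_img = albedo[..., None] * binary_proj[None, :, :]

gray = synthesize_gray(color_img, binary_img)
print(np.allclose(gray, 191.5 * albedo))  # True: the stripes cancel, the texture remains
```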

Figure 11 illustrates the process of detecting depth edges with a given offset amount using a Gabor filter of fixed size. Figure 11e,f shows the patterns without the dark cyan and the gray stripes, respectively, and Figure 11g,h shows their binarized Gabor responses. The regions of low Gabor amplitude, shown in black, indicate locations of potential depth edges. We process the bright stripe pattern in the same way. Figure 11i,p,q,r covers all possible combinations of colors along the edges; thus, their union yields the depth edges. We simply apply a thinning operation to the union to obtain the skeleton.
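Why low Gabor amplitude marks a depth edge can be seen on a synthetic binary stripe pattern. In the sketch below, the lower half of the pattern is shifted by one stripe width, and a complex Gabor filter tuned to the stripe period responds strongly inside each half but weakly where its support straddles the shift, because the two phases cancel. The kernel size, sigma and zero-DC correction are illustrative choices, not the filter parameters used in our experiments.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gabor_kernel(period: float, sigma: float, half: int) -> np.ndarray:
    """Complex Gabor kernel tuned to vertical stripes (carrier along x), with zero DC."""
    xs = np.arange(-half, half + 1)
    x, y = np.meshgrid(xs, xs)  # x varies along columns
    envelope = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    kernel = envelope * np.exp(2j * np.pi * x / period)
    # Remove the DC response so that uniform regions produce no output.
    kernel -= envelope * (kernel.sum() / envelope.sum())
    return kernel


def gabor_amplitude(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Magnitude of the Gabor response at all fully covered positions ('valid' mode)."""
    windows = sliding_window_view(image.astype(float), kernel.shape)
    return np.abs(np.einsum("ijkl,kl->ij", windows, kernel))


# Binary vertical stripes of width 4 (period 8); the lower half is shifted by one
# stripe width, as happens across a depth edge with that pattern offset.
size, width = 64, 4
cols = np.arange(size)
pattern = np.empty((size, size))
for row in range(size):
    shift = 0 if row < size // 2 else width
    pattern[row] = ((cols + shift) // width) % 2

amp = gabor_amplitude(pattern, gabor_kernel(period=2 * width, sigma=3.0, half=6))
row_amp = amp.mean(axis=1)  # output row j is centred on image row j + 6
print(round(row_amp[26] / row_amp[5], 2))  # well below 1: the periodic pattern breaks here
```

Binarizing such an amplitude map with a threshold gives the black low-amplitude regions described above; the offsets that make the amplitude drop depend on the stripe period the filter is tuned to.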

7. Experimental Results

We implemented our method in MATLAB (R2015b, MathWorks, Natick, MA, USA); the code has not been optimized. We used a 2.9 GHz Intel Core i5 CPU, 8 GB of 1867 MHz DDR3 memory (Santa Clara, CA, USA) and Intel Iris Graphics 6100 (Santa Clara, CA, USA).

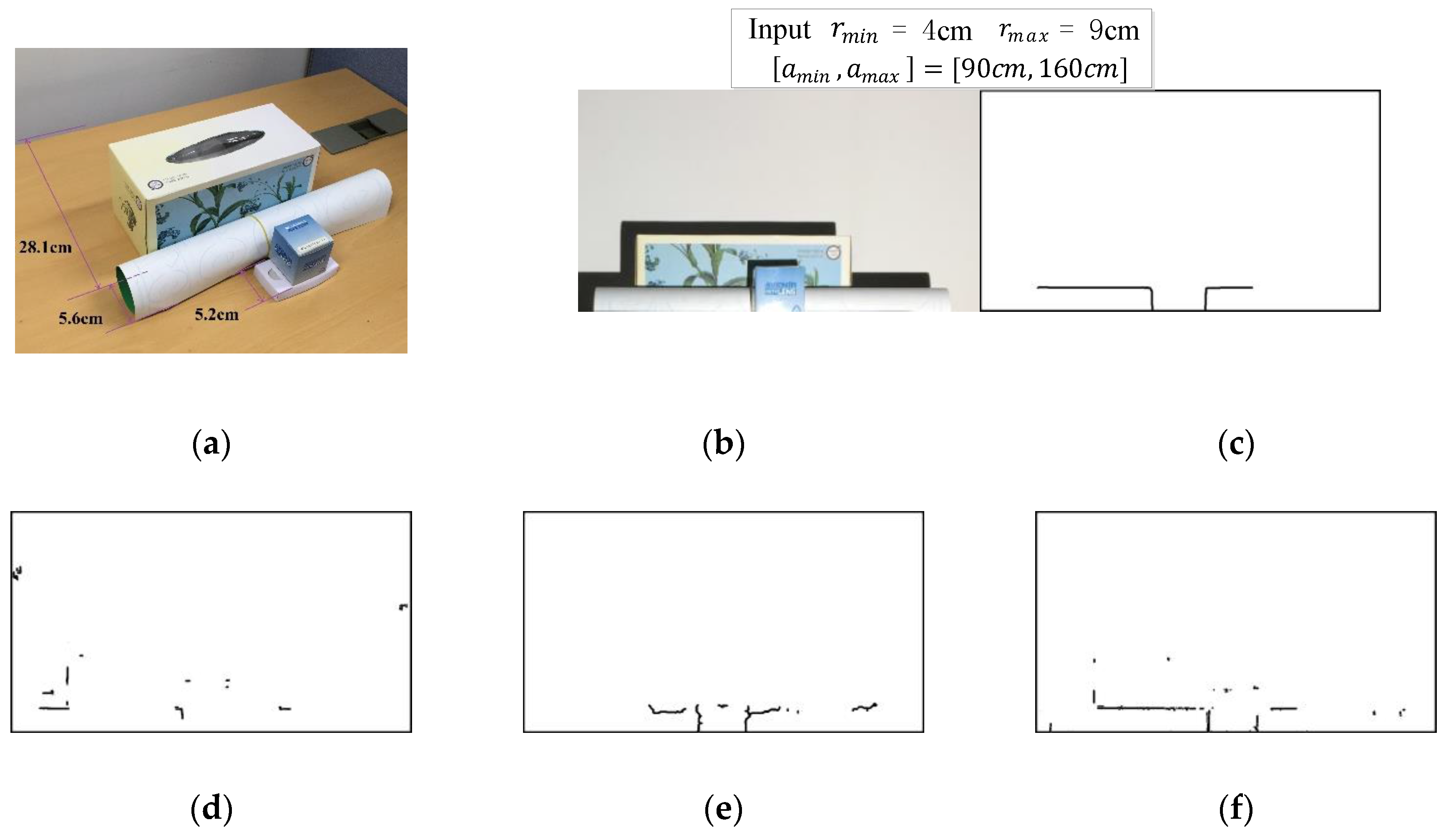

Figure 12 shows an example of experimental results. We compared the performance of our method with that of the previous method [8] and of the Kinect sensor. To produce depth edges from a Kinect depth map, for every pixel we scan the depth values in a circular region of radius 5 pixels around it, and output the pixel if any pixel within the circle differs from it in depth by at least the specified minimum depth difference. The result shows that our method finds the depth edges most accurately for the given parameters, whereas the other methods do not.
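The Kinect baseline described above can be reproduced in a few lines. This sketch assumes a dense depth map in millimetres with no invalid (zero) readings, and it replicates border values so that the image border does not create edges; the radius of 5 pixels follows the description, while the synthetic depth step is illustrative.

```python
import numpy as np


def depth_edges_from_depth_map(depth: np.ndarray, r_min: float, radius: int = 5) -> np.ndarray:
    """Mark pixels whose circular neighbourhood contains a depth difference >= r_min."""
    h, w = depth.shape
    # Replicate border values so that the image border itself never looks like an edge.
    padded = np.pad(depth.astype(float), radius, mode="edge")
    edges = np.zeros((h, w), dtype=bool)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy * dy + dx * dx > radius * radius:
                continue  # outside the circle
            neighbour = padded[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
            edges |= np.abs(neighbour - depth) >= r_min
    return edges


# A 100 mm depth step between columns 9 and 10 (depths in mm).
depth = np.full((20, 20), 1000.0)
depth[:, 10:] = 1100.0
edges = depth_edges_from_depth_map(depth, r_min=50.0)
print(np.flatnonzero(edges[10]).tolist())  # [5, 6, 7, 8, 9, 10, 11, 12, 13, 14]
```

Because both sides of a step are marked, the output is a band roughly twice the radius wide straddling each depth edge.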

Figure 12e shows the result of depth edge detection from the Kinect depth map, in which straight depth edge segments are not detected as straight. This is because the depth values provided by the Kinect sensor along depth edges are inaccurate due to interpolation. For our method, both false positives and false negatives have two main causes, inaccurate color recovery and inaccurate shadow detection, either of which can result in false edges. However, color recovery errors are well contained because we check the De Bruijn constraint when identifying the original color of the stripes. When a shadow region is not detected, false positives occur. On the other hand, when some non-shadow regions near boundaries are treated as shadow, incorrect depth edges are produced.

Table 1 lists the computation time for each step of our method shown in Figure 2.

Figure 13 depicts an additional experimental result in which we find depth edges whose depth difference lies between a lower and an upper bound. We achieve this by two consecutive applications of Gabor filtering to the pattern images: the first and second Gabor filtering yield depth edges whose depth differences are at least the lower and the upper bound, respectively, and we remove the depth edges found by the second from those found by the first. We can see that our method outperforms the others. Although we provide raw experimental results without any postprocessing, the results could easily be enhanced by simple morphological operations.
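The band selection amounts to a set difference of two edge maps. In this sketch, the small dilation tolerance, which absorbs one-pixel misalignment between the two filtering passes, is a hypothetical addition not described in the text.

```python
import numpy as np


def dilate(mask: np.ndarray, radius: int) -> np.ndarray:
    """Binary dilation with a (2*radius+1) x (2*radius+1) square, using array shifts."""
    h, w = mask.shape
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    out = np.zeros_like(mask, dtype=bool)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            out |= padded[radius + dy: radius + dy + h, radius + dx: radius + dx + w]
    return out


def band_edges(edges_lower: np.ndarray, edges_upper: np.ndarray, tolerance: int = 1) -> np.ndarray:
    """Edges found with the lower bound but not with the upper bound (up to a tolerance)."""
    return edges_lower & ~dilate(edges_upper, tolerance)


lower = np.zeros((6, 12), dtype=bool)
lower[:, 3] = True  # small depth step: found only by the first pass
lower[:, 7] = True  # large depth step: found by both passes
upper = np.zeros_like(lower)
upper[:, 8] = True  # the second pass localizes the large step one pixel away

print(np.flatnonzero(band_edges(lower, upper)[0]).tolist())               # [3]
print(np.flatnonzero(band_edges(lower, upper, tolerance=0)[0]).tolist())  # [3, 7]
```

Without the tolerance, the large step leaks into the band because the two passes disagree by one pixel; a tolerance of a pixel or two is a simple safeguard.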