LiDAR-IMU Time Delay Calibration Based on Iterative Closest Point and Iterated Sigma Point Kalman Filter

Abstract

1. Introduction

2. Coordinate Transformation between LiDAR and IMU

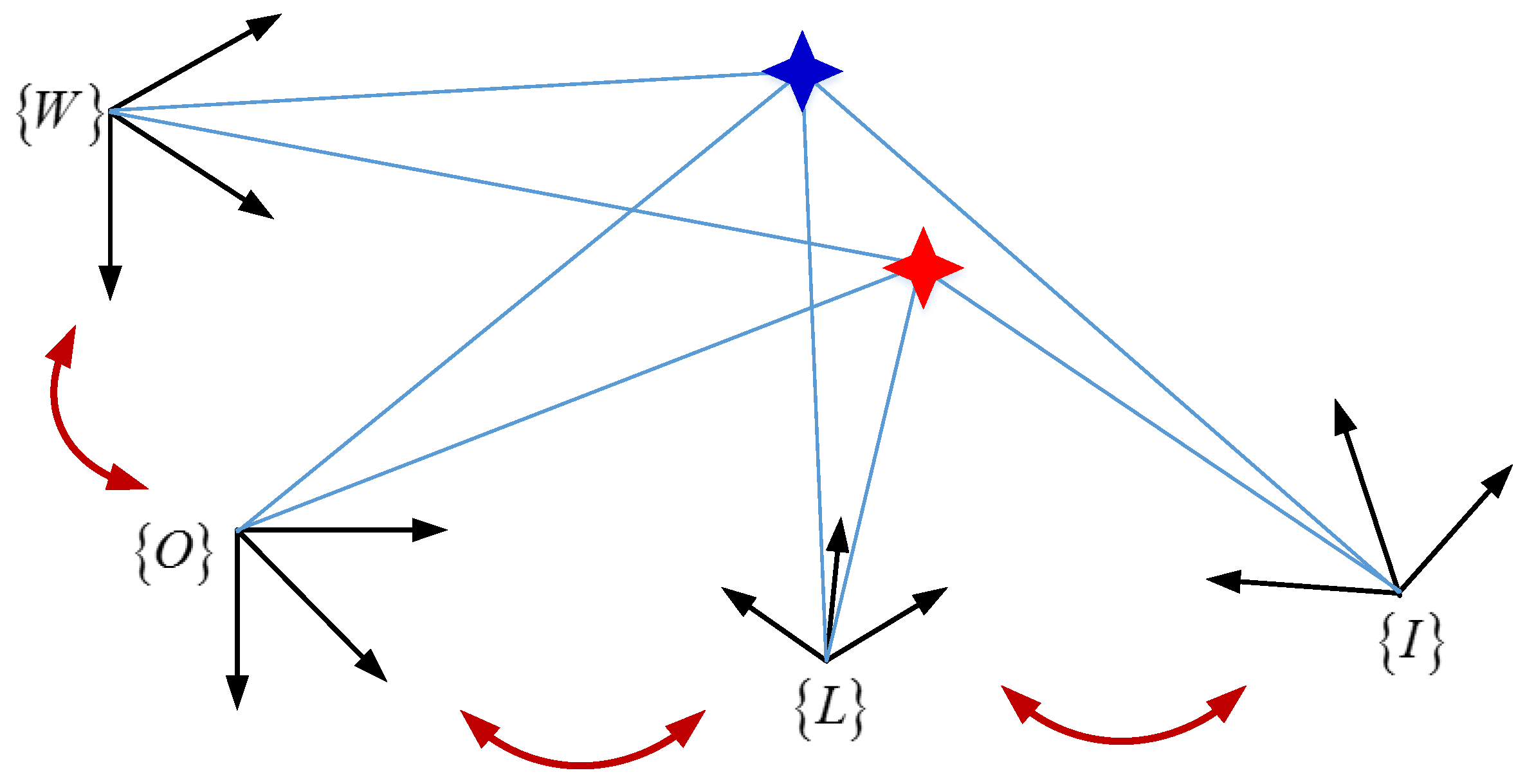

2.1. Coordinate Frame

- (1) LiDAR frame, {L}: the frame in which the LiDAR point measurements are expressed; its axes point right, forward and up.

- (2) IMU frame, {I}: the frame defined by the IMU, in which angular rates and linear accelerations are measured; its origin is a point on the IMU body.

- (3) Object frame, {O}: the frame attached to the moving object; its axes point forward, right and down.

- (4) World frame, {W}: the fundamental coordinate frame, which serves as the absolute reference for both {I} and {L}.
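The axis conventions above fully determine how a vector expressed in {L} is re-expressed along the axes of {O}. The following is a minimal Python/NumPy sketch, assuming the two frames differ only by this axis permutation (any origin offset or mounting misalignment is ignored). The names `R_OL` and `lidar_to_object_axes` are hypothetical and are not taken from the paper.

```python
import numpy as np

# Axis conventions from the frame definitions:
#   {L}: x = right,   y = forward, z = up
#   {O}: x = forward, y = right,   z = down
# Row i of R_OL selects which {L} component becomes the i-th {O} component.
R_OL = np.array([
    [0.0, 1.0, 0.0],   # O forward = L forward
    [1.0, 0.0, 0.0],   # O right   = L right
    [0.0, 0.0, -1.0],  # O down    = -(L up)
])


def lidar_to_object_axes(v_L):
    """Re-express a LiDAR-frame vector along the object-frame axes.

    Only the axis permutation is applied; any translation between the
    two origins is deliberately ignored in this sketch.
    """
    return R_OL @ np.asarray(v_L, dtype=float)


# A point 1 m to the right, 5 m ahead and 2 m above the LiDAR origin.
print(lidar_to_object_axes([1.0, 5.0, 2.0]))  # [ 5.  1. -2.]
```

Both conventions are right-handed (right × forward = up, forward × right = down), so `R_OL` has determinant +1 and is a proper rotation; checking the determinant is a quick sanity test for any hand-written axis mapping.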

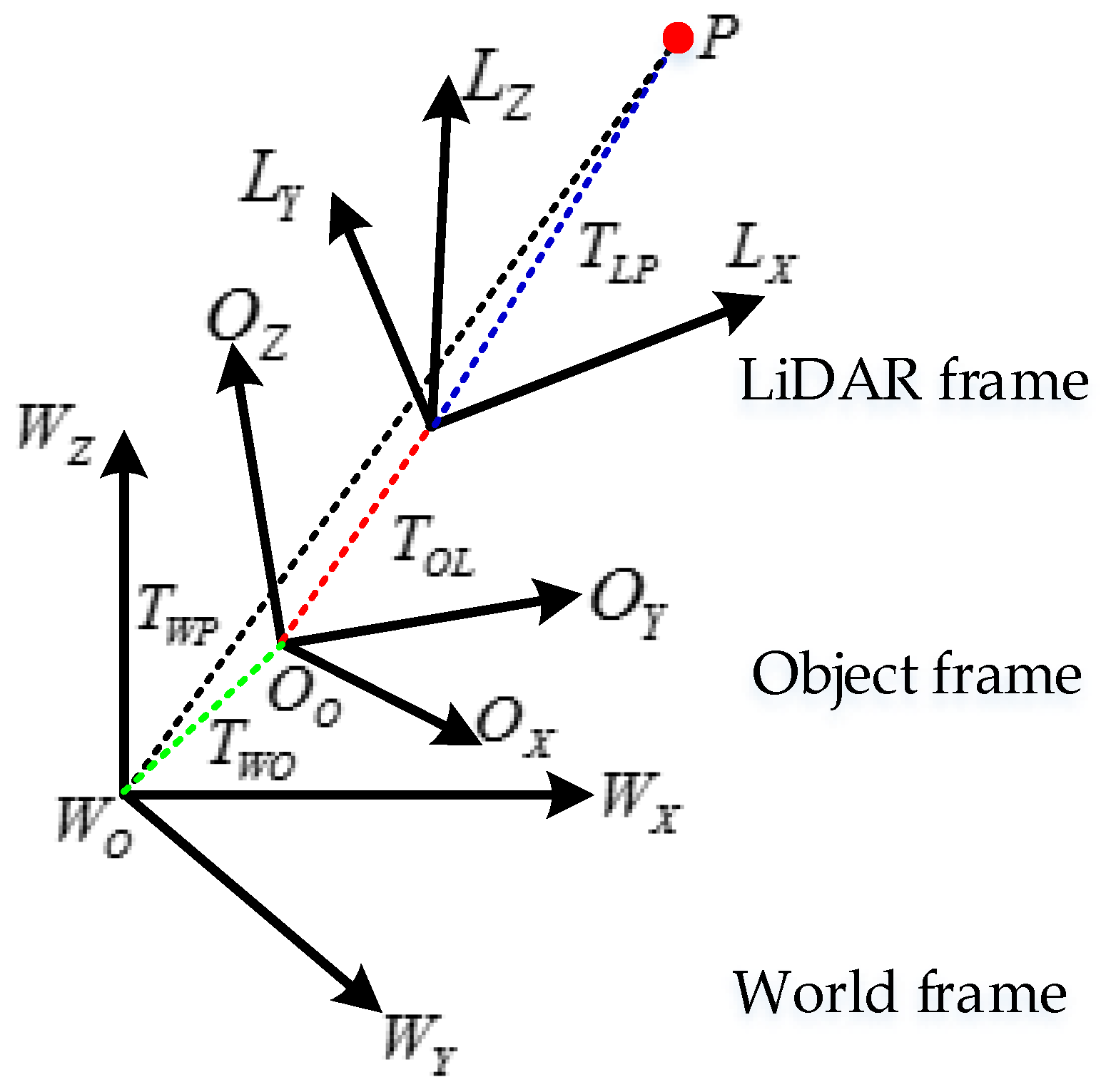

2.2. Transformation from LiDAR Frame to IMU Frame

2.2.1. Transformation from LiDAR Frame to the World Frame

2.2.2. Transformation from LiDAR Frame to IMU Frame
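Because {W} serves as the common reference for both {I} and {L}, a LiDAR-to-IMU transform can be obtained by chaining the LiDAR-to-world transform with the inverse of the IMU-to-world transform. The NumPy sketch below assumes each pose maps frame coordinates into {W} as p_W = R_WX p_X + t_WX; the symbols, helper names and numeric poses are illustrative assumptions, not the paper's notation or data.

```python
import numpy as np


def rot_z(yaw):
    """Rotation matrix for a yaw of `yaw` radians about the z axis."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def lidar_to_imu(R_WL, t_WL, R_WI, t_WI):
    """Compose the {L} -> {I} transform from two poses given in {W}.

    Assumed convention: p_W = R_WX @ p_X + t_WX for X in {L, I}.
    Inverting the IMU pose gives p_I = R_WI.T @ (p_W - t_WI), hence
        R_IL = R_WI.T @ R_WL
        t_IL = R_WI.T @ (t_WL - t_WI)
    """
    R_IL = R_WI.T @ R_WL
    t_IL = R_WI.T @ (t_WL - t_WI)
    return R_IL, t_IL


# Hypothetical poses: IMU yawed 30 deg, LiDAR yawed 90 deg and mounted
# 0.1 m along world x and 0.3 m along world z from the IMU origin.
R_WI, t_WI = rot_z(np.pi / 6), np.array([2.0, 1.0, 0.0])
R_WL, t_WL = rot_z(np.pi / 2), np.array([2.1, 1.0, 0.3])
R_IL, t_IL = lidar_to_imu(R_WL, t_WL, R_WI, t_WI)

# Mapping a LiDAR point directly must match the route through {W}.
p_L = np.array([4.0, -1.0, 0.5])
p_W = R_WL @ p_L + t_WL
assert np.allclose(R_IL @ p_L + t_IL, R_WI.T @ (p_W - t_WI))
```

The final assertion is a cheap consistency check: an error in the inversion (for example, using `R_WI` instead of its transpose) makes the two routes disagree.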

3. LiDAR and IMU Measurement Model

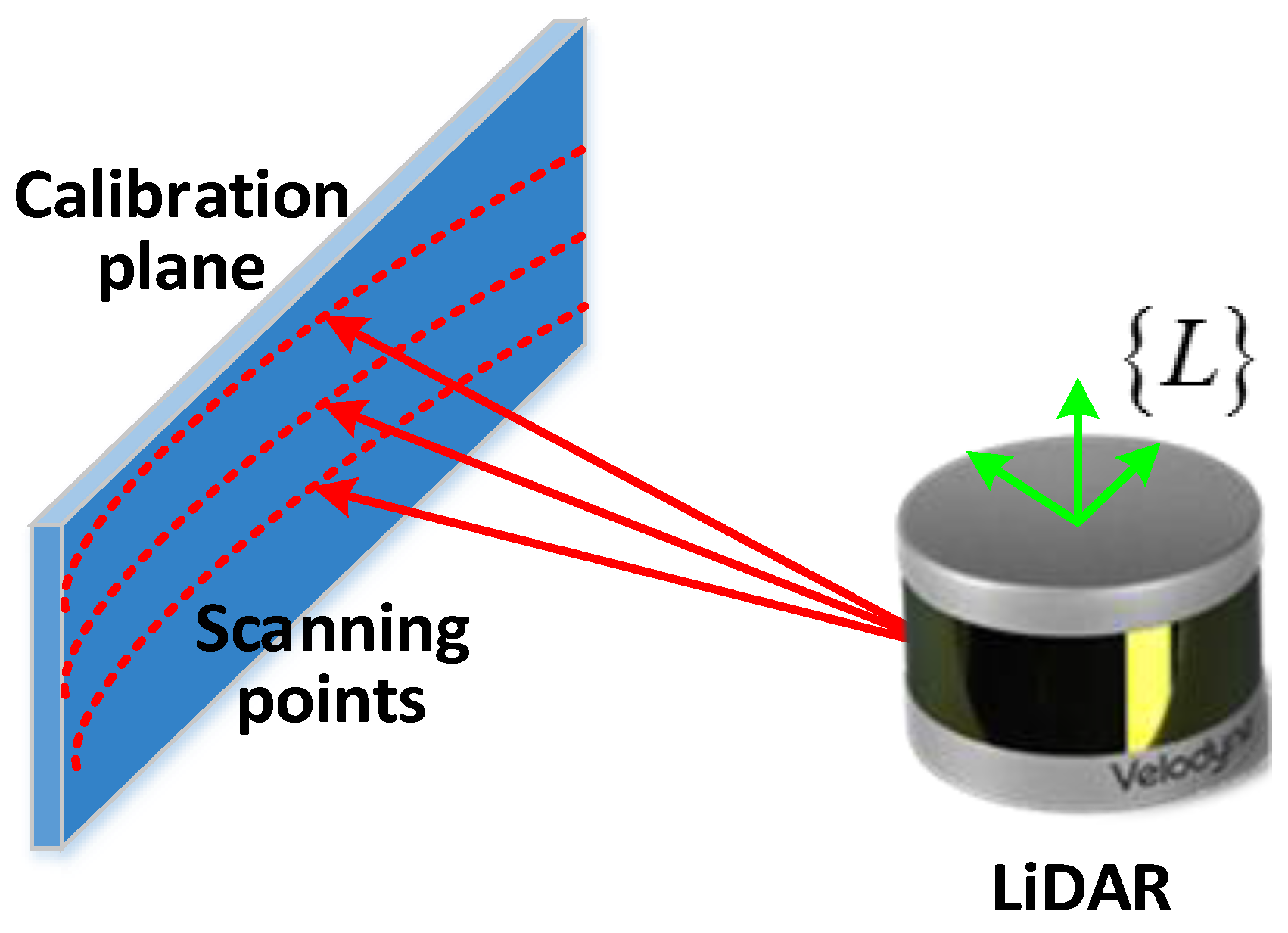

3.1. LiDAR Measurement Model

3.2. IMU Measurement Model

3.3. Time Delay Error Model

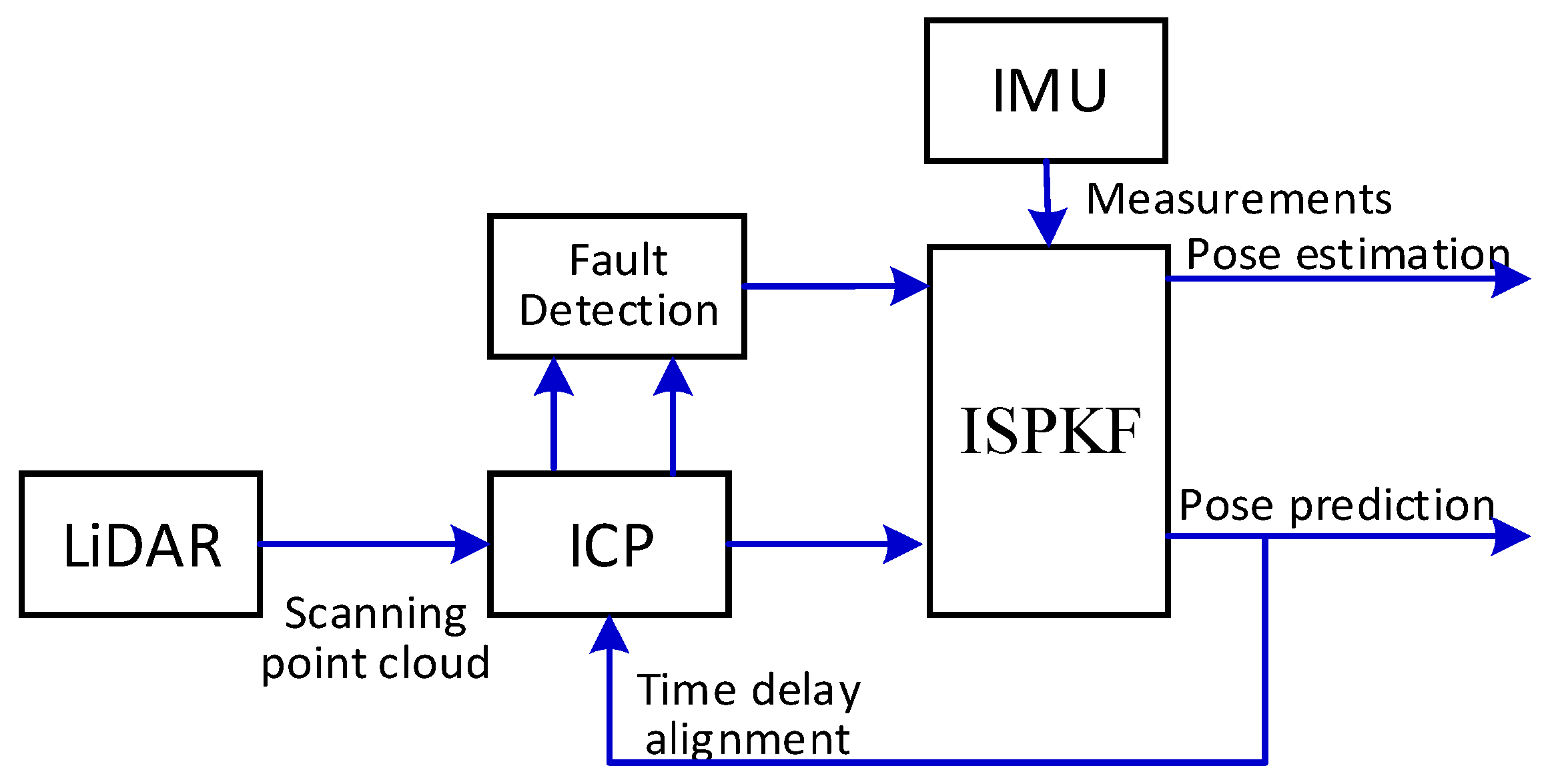

4. Time Delay Calibration Using the ICP-ISPKF Integration Method

4.1. The ICP Algorithm for Estimating the Time Delay and Relative Orientation of LiDAR-IMU

4.2. Iterated Sigma Point Kalman Filter (ISPKF) Algorithm for Compensating the Calibration Parameters

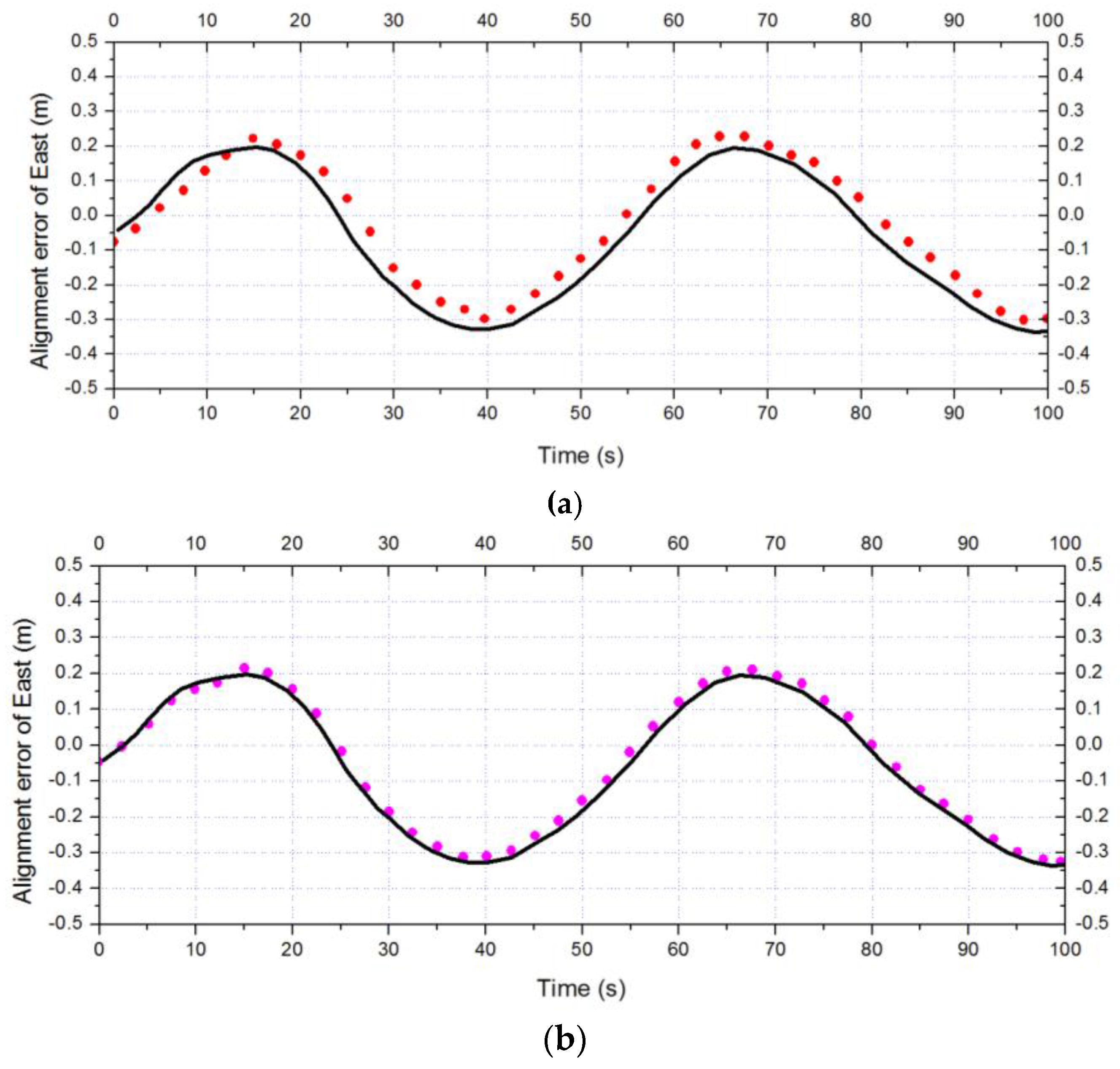

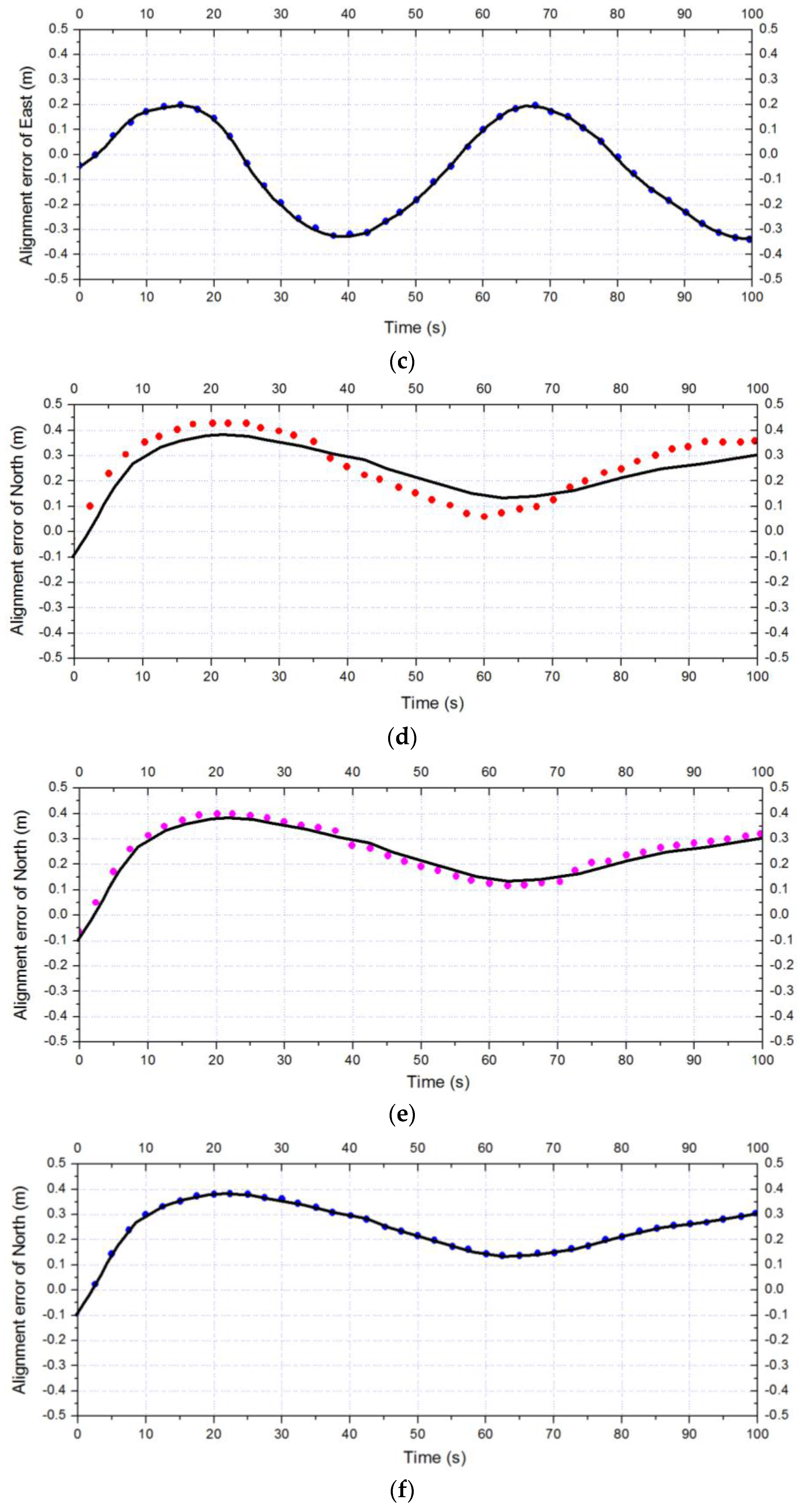

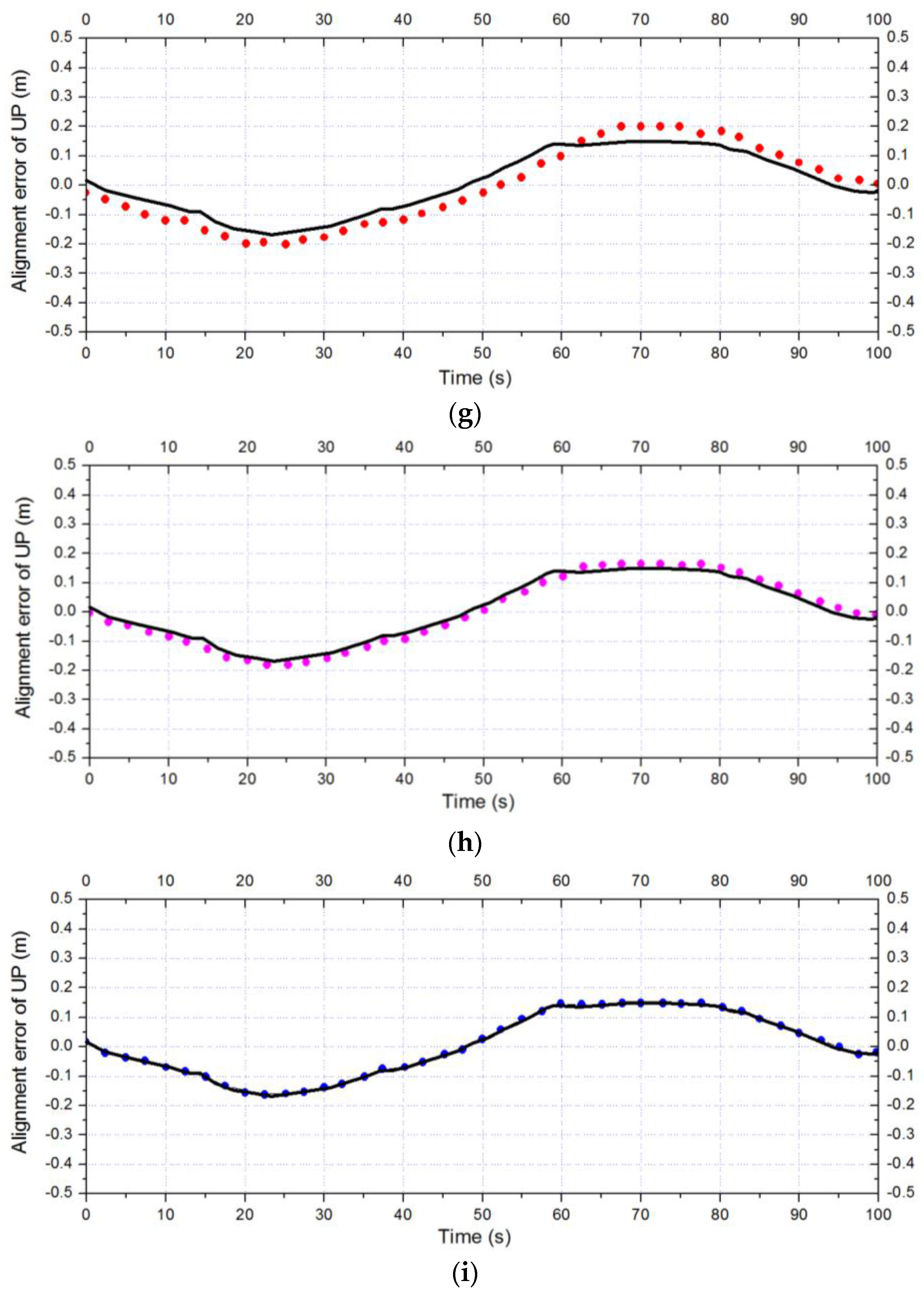

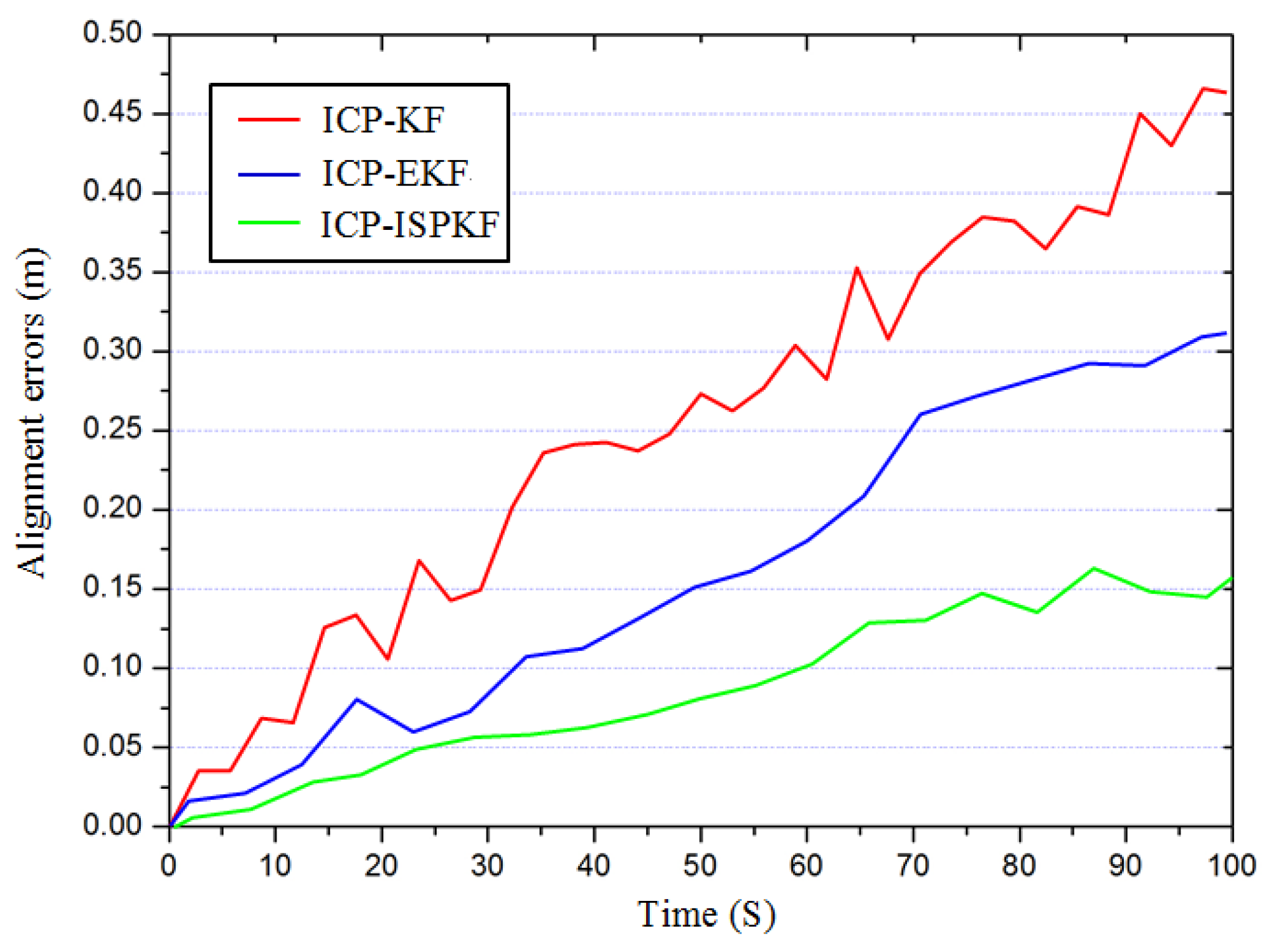

5. Experiments and Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| IMU: Navigation | IMU Sensors: Parameter | Accelerometers | Gyroscopes | LiDAR: Parameter | LiDAR: Value |
|---|---|---|---|---|---|
| Horizontal Position Accuracy: 0.5 m | Range | 10 g | 490°/s | Channels | 16 |
| Vertical Position Accuracy: 0.8 m | Bias Instability | 15 μg | 0.05°/h | Range | 100 m |
| Velocity Accuracy: 0.007 m/s | Initial Bias | <1 mg | <1°/h | Accuracy | ±3 cm |
| Roll & Pitch Accuracy: 0.01° | Scaling Error | <0.03% | <0.01% | Vertical FOV | 30° |
| Heading Accuracy: 0.05° | Scale Stability | <0.04% | <0.02% | Horizontal FOV | 360° |
| Output Data Rate: Up to 1000 Hz | Non-linearity | <0.03% | <0.005% | Output Data Rate | 300,000 pts/s |

| Number of ICP-ISPKF Time Delay Calibrations | Time Delay Error (ms) | Alignment Error, East (m) | Alignment Error, North (m) | Alignment Error, Up (m) |
|---|---|---|---|---|
| 0 | 9.58 | 0.093 | 0.168 | 0.089 |
| 1 | 4.67 | 0.067 | 0.097 | 0.063 |
| 2 | 2.45 | 0.043 | 0.068 | 0.041 |
| 3 | 1.66 | 0.037 | 0.047 | 0.035 |
| 4 | 1.17 | 0.031 | 0.039 | 0.029 |
| 5 | 0.87 | 0.026 | 0.032 | 0.025 |
| 6 | 0.63 | 0.023 | 0.027 | 0.021 |
| 7 | 0.57 | 0.020 | 0.024 | 0.019 |
| 8 | 0.53 | 0.019 | 0.021 | 0.018 |
| 9 | 0.51 | 0.018 | 0.020 | 0.018 |
| 10 | 0.50 | 0.018 | 0.019 | 0.017 |
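The trend in the table can be summarized by the relative reduction from the uncalibrated row (0 calibrations) to the last row (10 calibrations), and by how much each additional calibration lowers the time delay error. The short Python sketch below only recomputes these quantities from the tabulated values; it illustrates the arithmetic and is not part of the ICP-ISPKF method. `percent_reductions` and `delay_steps` are hypothetical helper names.

```python
# Rows from the table: (calibrations, time delay error [ms],
# alignment error East, North, Up [m]).
ROWS = [
    (0, 9.58, 0.093, 0.168, 0.089),
    (1, 4.67, 0.067, 0.097, 0.063),
    (2, 2.45, 0.043, 0.068, 0.041),
    (3, 1.66, 0.037, 0.047, 0.035),
    (4, 1.17, 0.031, 0.039, 0.029),
    (5, 0.87, 0.026, 0.032, 0.025),
    (6, 0.63, 0.023, 0.027, 0.021),
    (7, 0.57, 0.020, 0.024, 0.019),
    (8, 0.53, 0.019, 0.021, 0.018),
    (9, 0.51, 0.018, 0.020, 0.018),
    (10, 0.50, 0.018, 0.019, 0.017),
]
LABELS = ("time delay", "east", "north", "up")


def percent_reductions(rows):
    """Percentage drop of each error column from the first to the last row."""
    first, last = rows[0][1:], rows[-1][1:]
    return {
        label: 100.0 * (before - after) / before
        for label, before, after in zip(LABELS, first, last)
    }


def delay_steps(rows):
    """Decrease in time delay error (ms) contributed by each extra calibration."""
    return [round(prev[1] - cur[1], 2) for prev, cur in zip(rows, rows[1:])]


for label, pct in percent_reductions(ROWS).items():
    print(f"{label:>10}: {pct:.1f}% lower")
print(delay_steps(ROWS))
```

Computed this way, the time delay error falls by about 94.8% over ten calibrations, and the first three calibrations account for roughly 7.9 ms of the 9.08 ms total reduction, while the last step changes it by only 0.01 ms.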

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).


Liu, Wanli. LiDAR-IMU Time Delay Calibration Based on Iterative Closest Point and Iterated Sigma Point Kalman Filter. Sensors 2017, 17(3), 539. https://doi.org/10.3390/s17030539