Local Positioning System Using Flickering Infrared LEDs

Abstract

1. Introduction

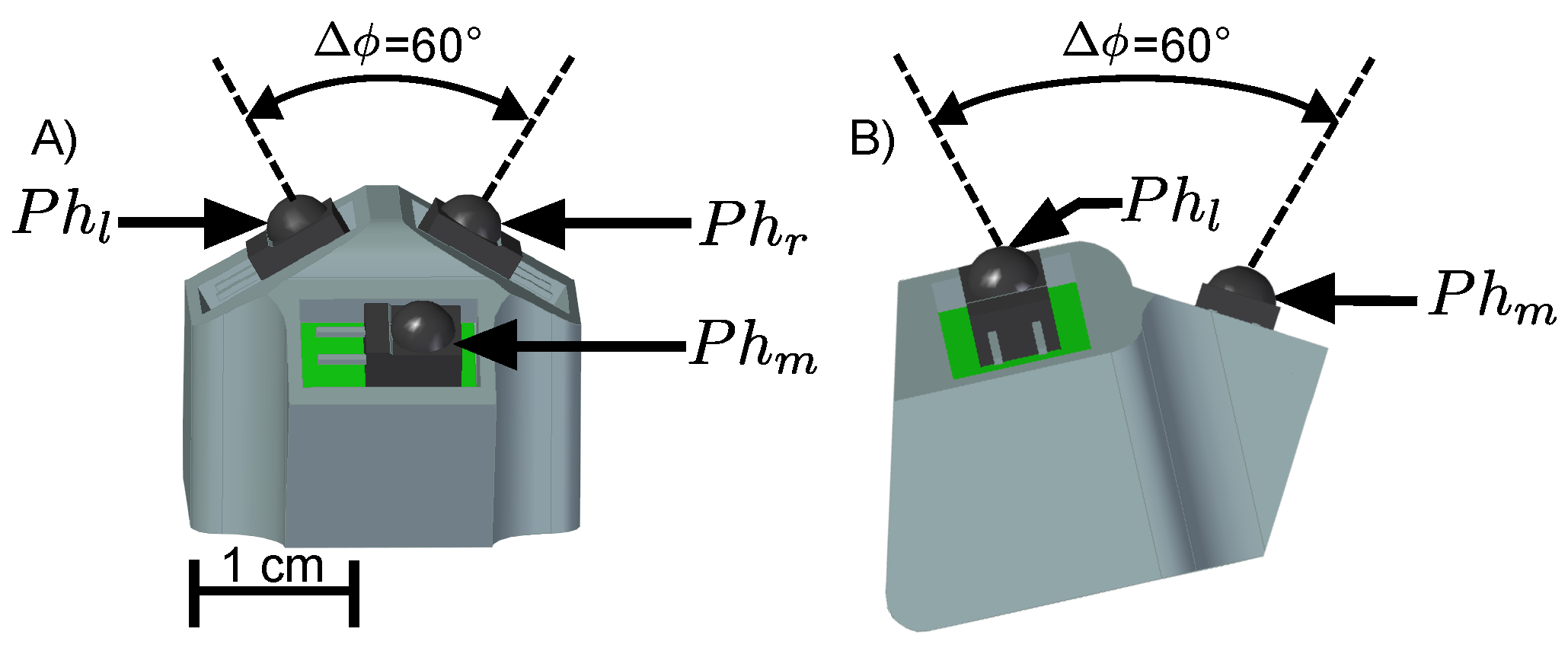

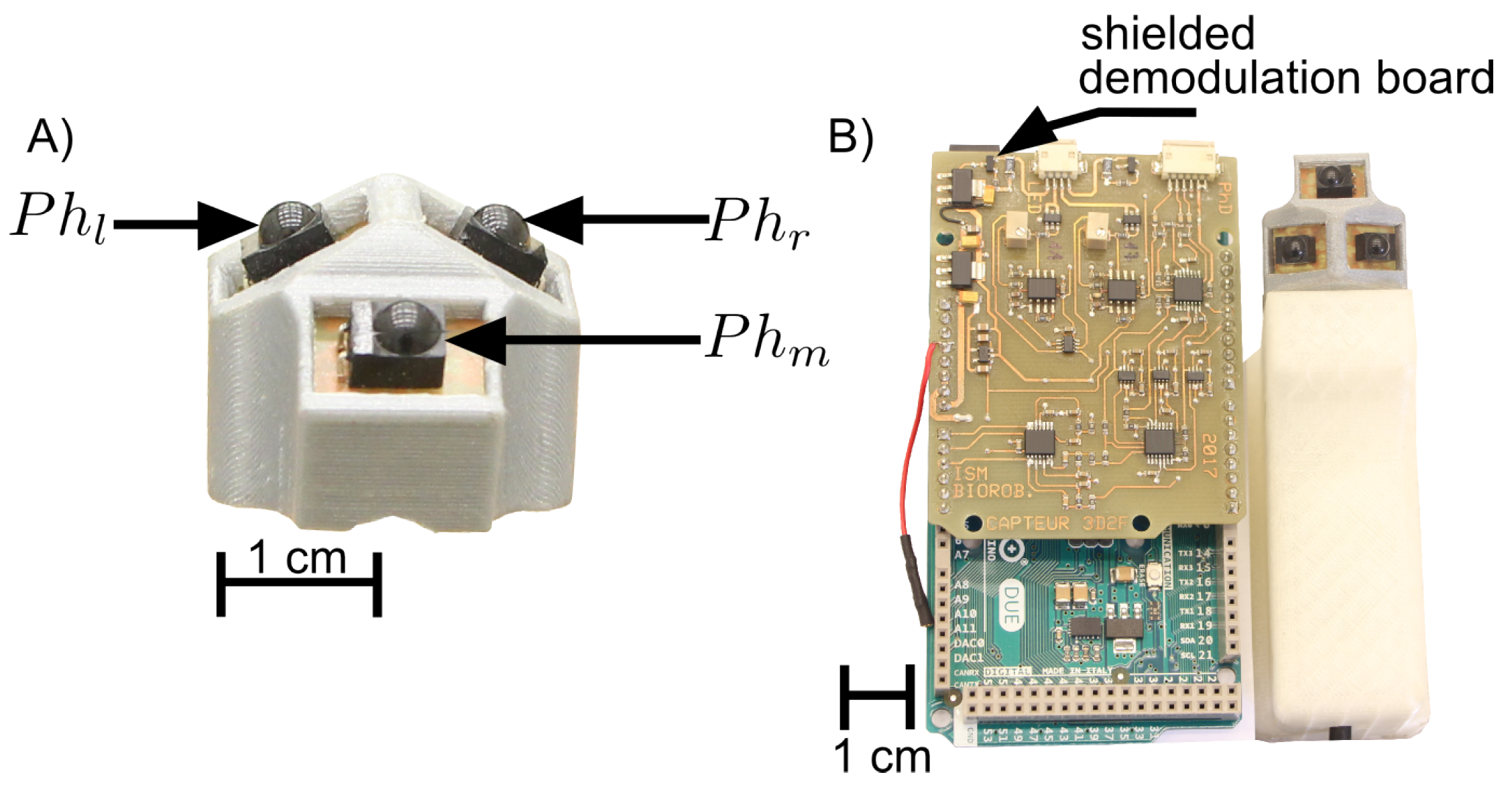

2. Sensor Design

3. Fabrication

4. Modeling

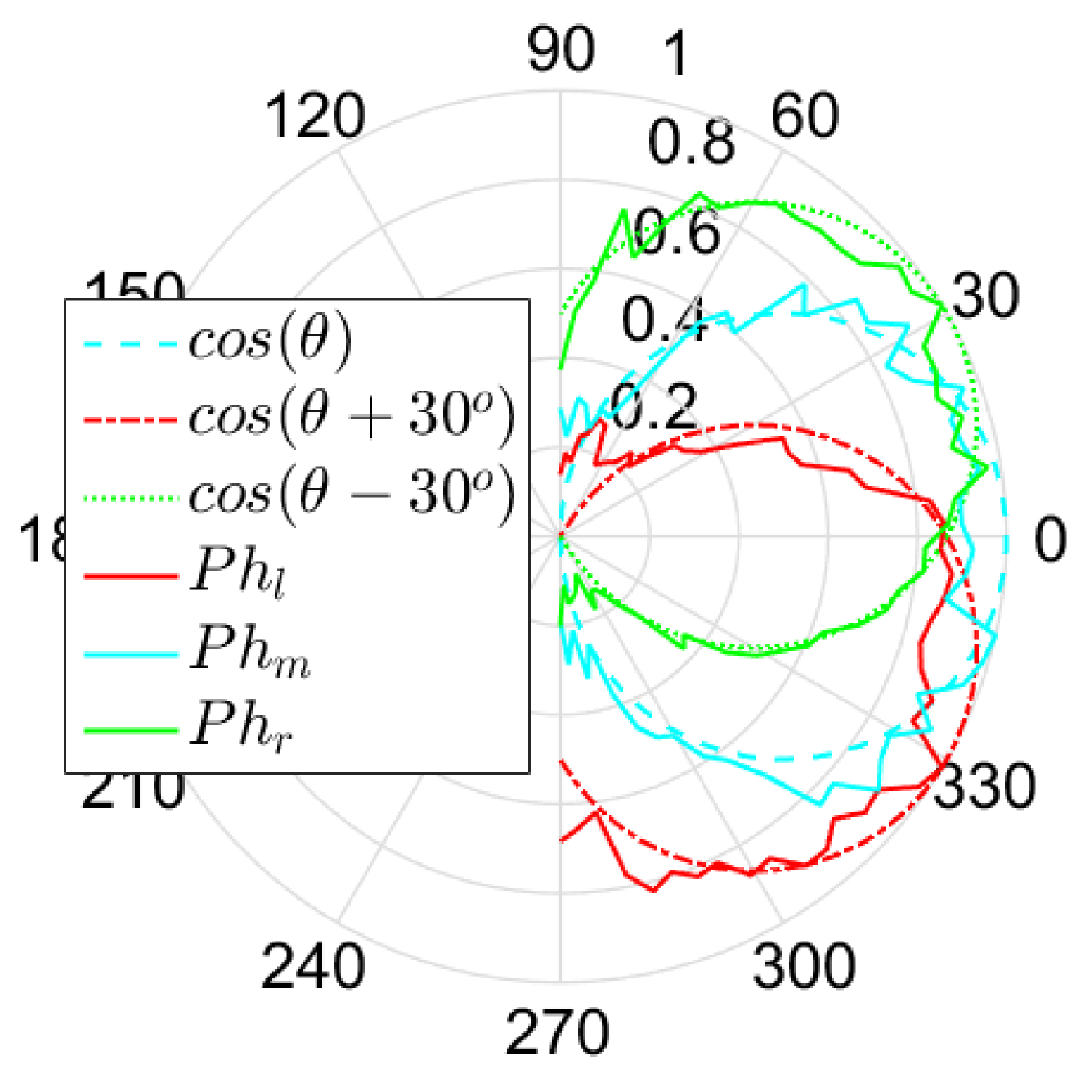

4.1. Angular Sensitivity of the Photosensors

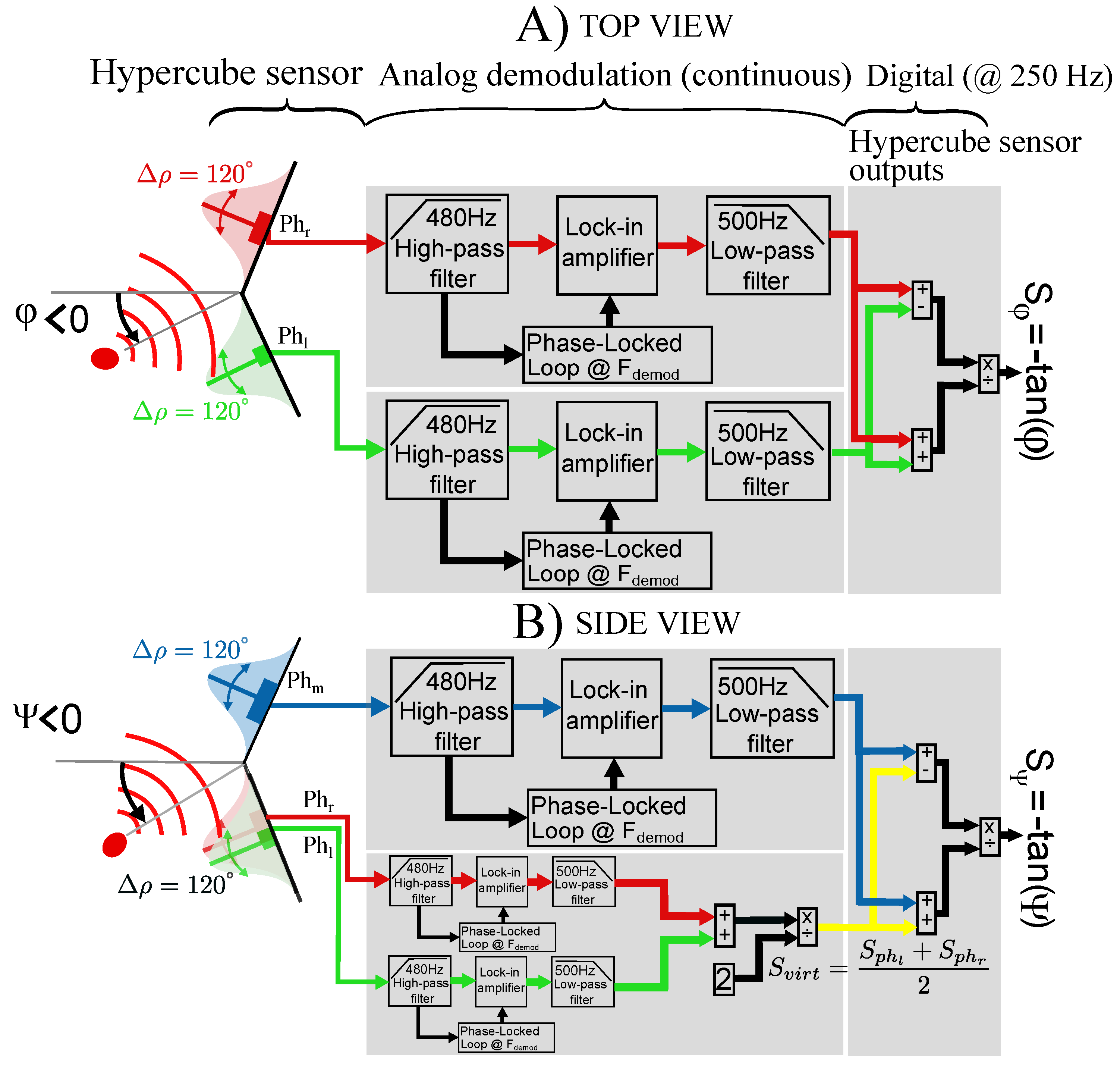

4.2. Principle of the Sensor

5. Experimental Results

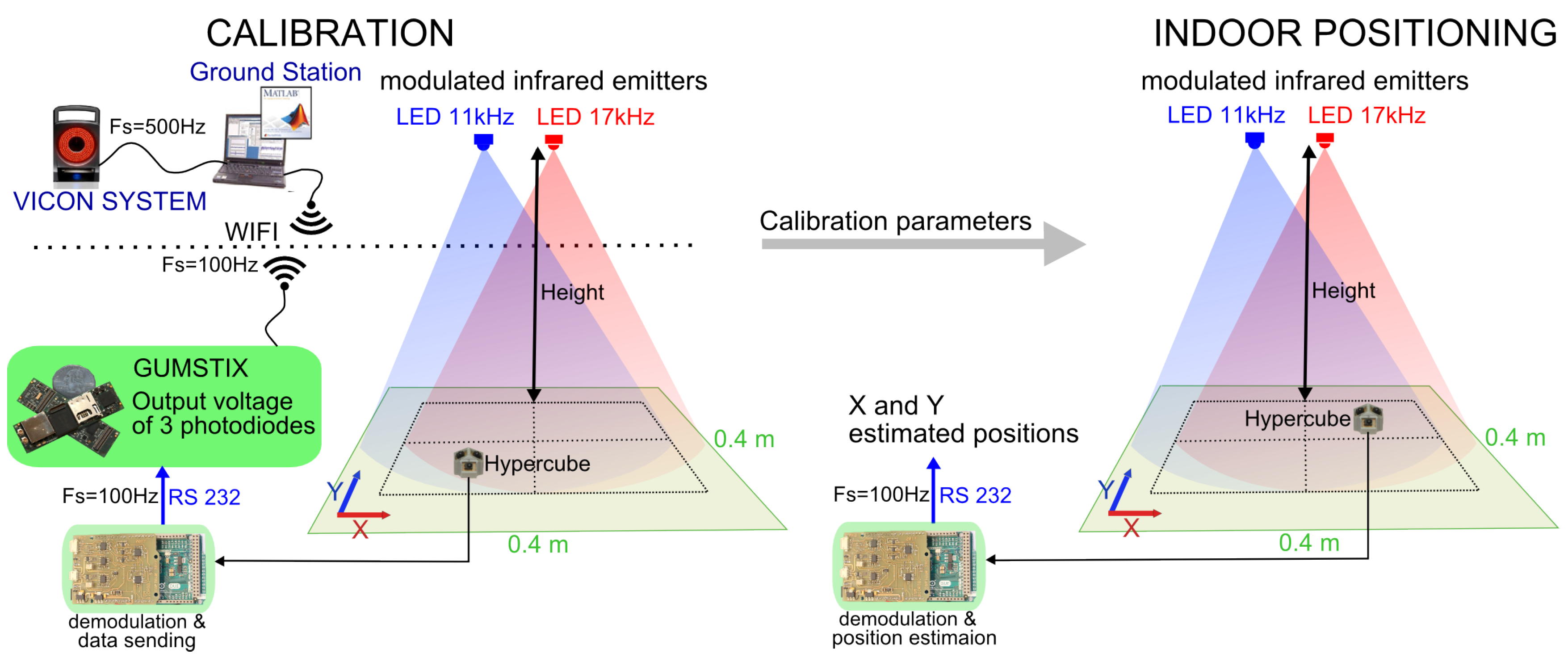

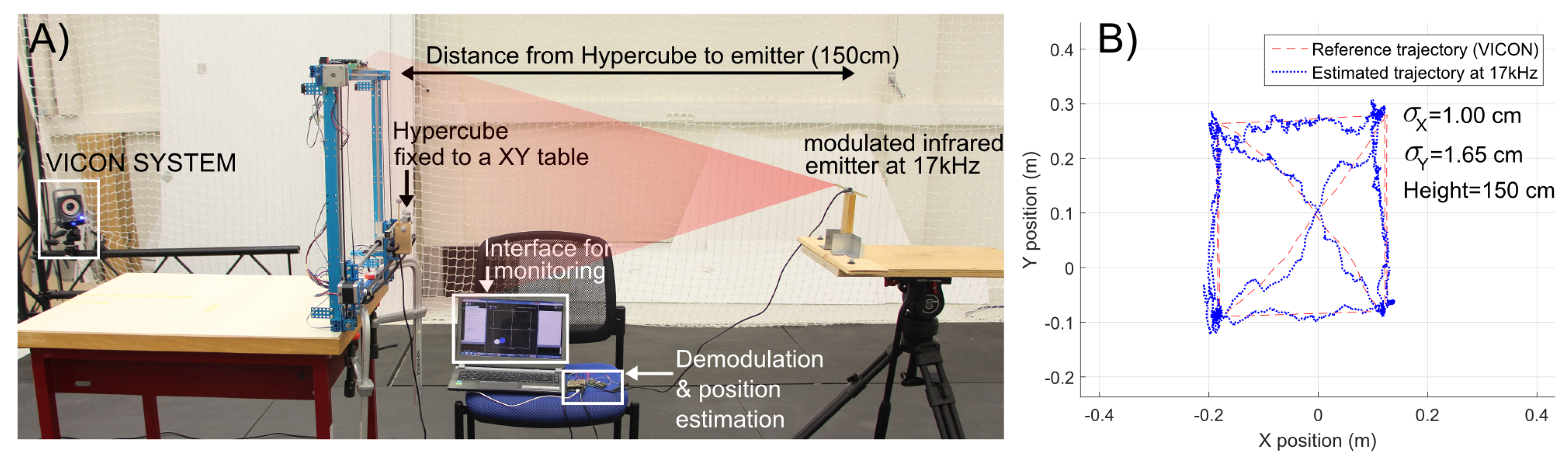

5.1. Position Estimation in 2D

5.2. Localization of a Mobile Robot in 2D

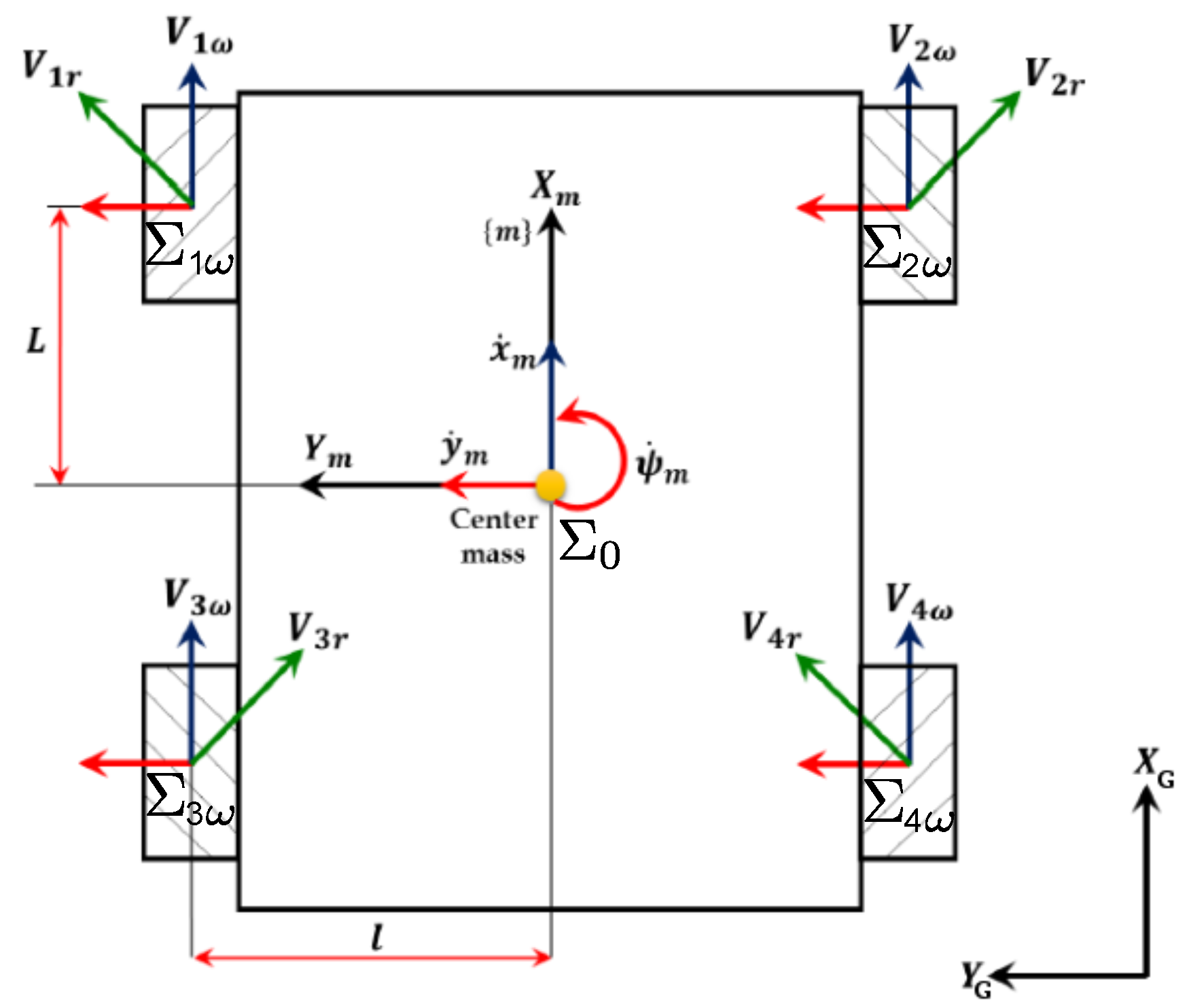

5.2.1. Kinematics and Dynamics Modeling of the Mobile Robot

5.2.2. Design of Position Control

- The nonlinear control block computes the angular reference speed of each wheel i. The control law minimizes the error between the reference position and the position estimate.

- The angular speed of each wheel is controlled in closed loop by a local proportional-integral (PI) controller.

- The estimated position and heading of the mobile robot, collected in a single vector, are provided by either HyperCube or the Vicon motion capture system.
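The inner wheel-speed loop described in the list above can be illustrated with a minimal discrete-time sketch. This is not the authors' implementation: the gains `kp` and `ki`, the sample period `dt`, the saturation limit `u_max`, the anti-windup scheme and the first-order wheel model in `simulate` are all hypothetical values chosen only to show how a local PI controller drives a measured wheel speed toward the reference speed delivered by the outer position controller.

```python
from dataclasses import dataclass


@dataclass
class WheelPI:
    """Discrete-time PI speed controller for one wheel (illustrative only)."""
    kp: float              # proportional gain (hypothetical value)
    ki: float              # integral gain (hypothetical value)
    dt: float              # sample period in seconds (assumed)
    u_max: float           # actuator saturation limit (assumed)
    integral: float = 0.0  # running integral of the speed error

    def step(self, omega_ref: float, omega_meas: float) -> float:
        """Return the motor command for one sample."""
        error = omega_ref - omega_meas
        candidate = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate
        if abs(u) <= self.u_max:
            # Not saturated: accept the updated integral term.
            self.integral = candidate
            return u
        # Saturated: freeze the integral (simple anti-windup) and clamp.
        return max(-self.u_max, min(self.u_max, u))


def simulate(omega_ref: float = 10.0, steps: int = 2000) -> float:
    """Run the PI loop on an assumed first-order wheel model.

    Model (an assumption, not taken from the paper):
        tau * d(omega)/dt = -omega + gain * u
    """
    tau, gain, dt = 0.1, 1.0, 0.001
    pi = WheelPI(kp=2.0, ki=20.0, dt=dt, u_max=24.0)
    omega = 0.0
    for _ in range(steps):
        u = pi.step(omega_ref, omega)
        omega += dt * (-omega + gain * u) / tau  # forward-Euler integration
    return omega


if __name__ == "__main__":
    print(f"final wheel speed: {simulate():.4f} rad/s")
```

In a complete controller, one such loop would run per wheel, with `omega_ref` supplied at each sample by the nonlinear position control block.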

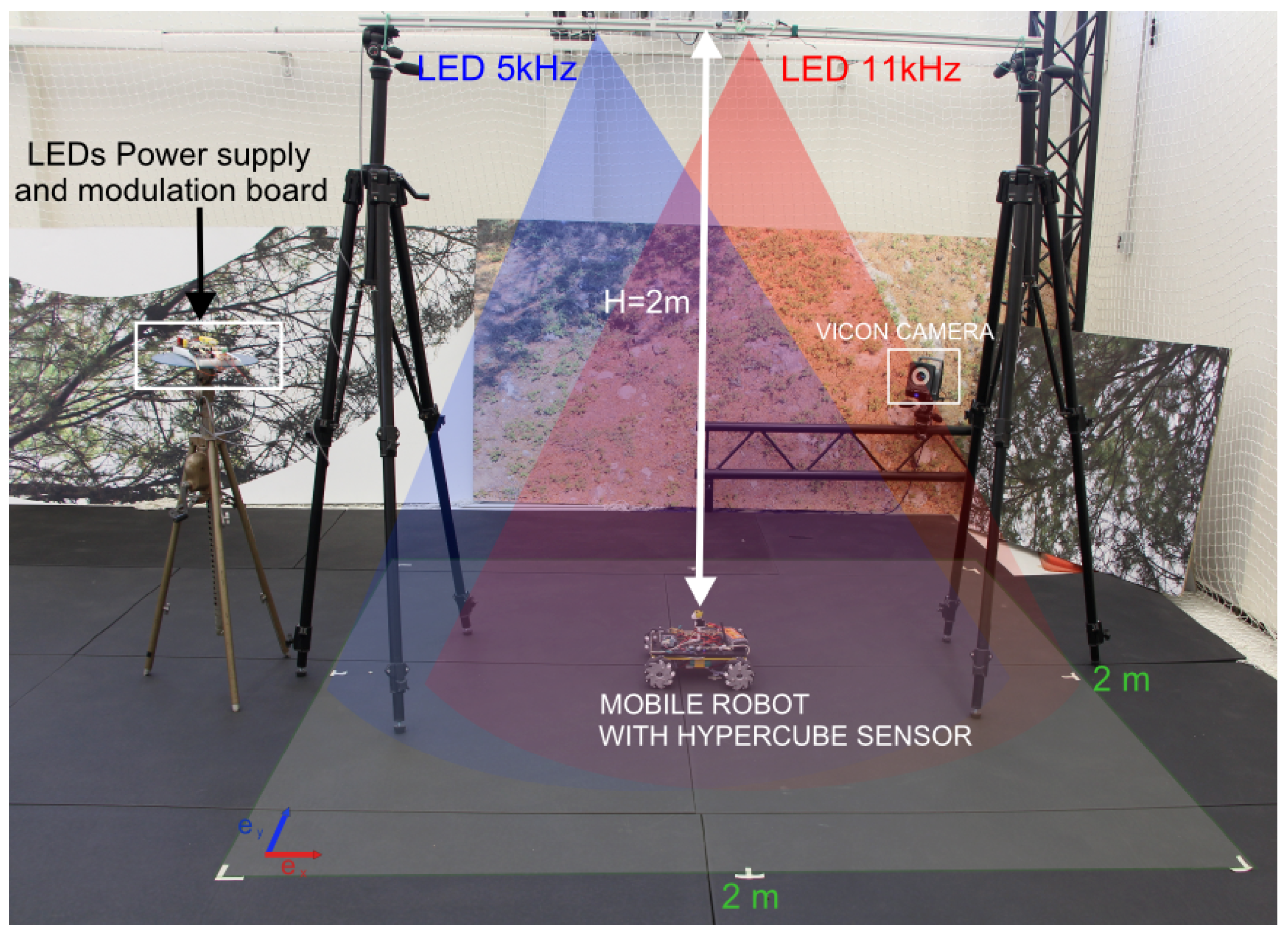

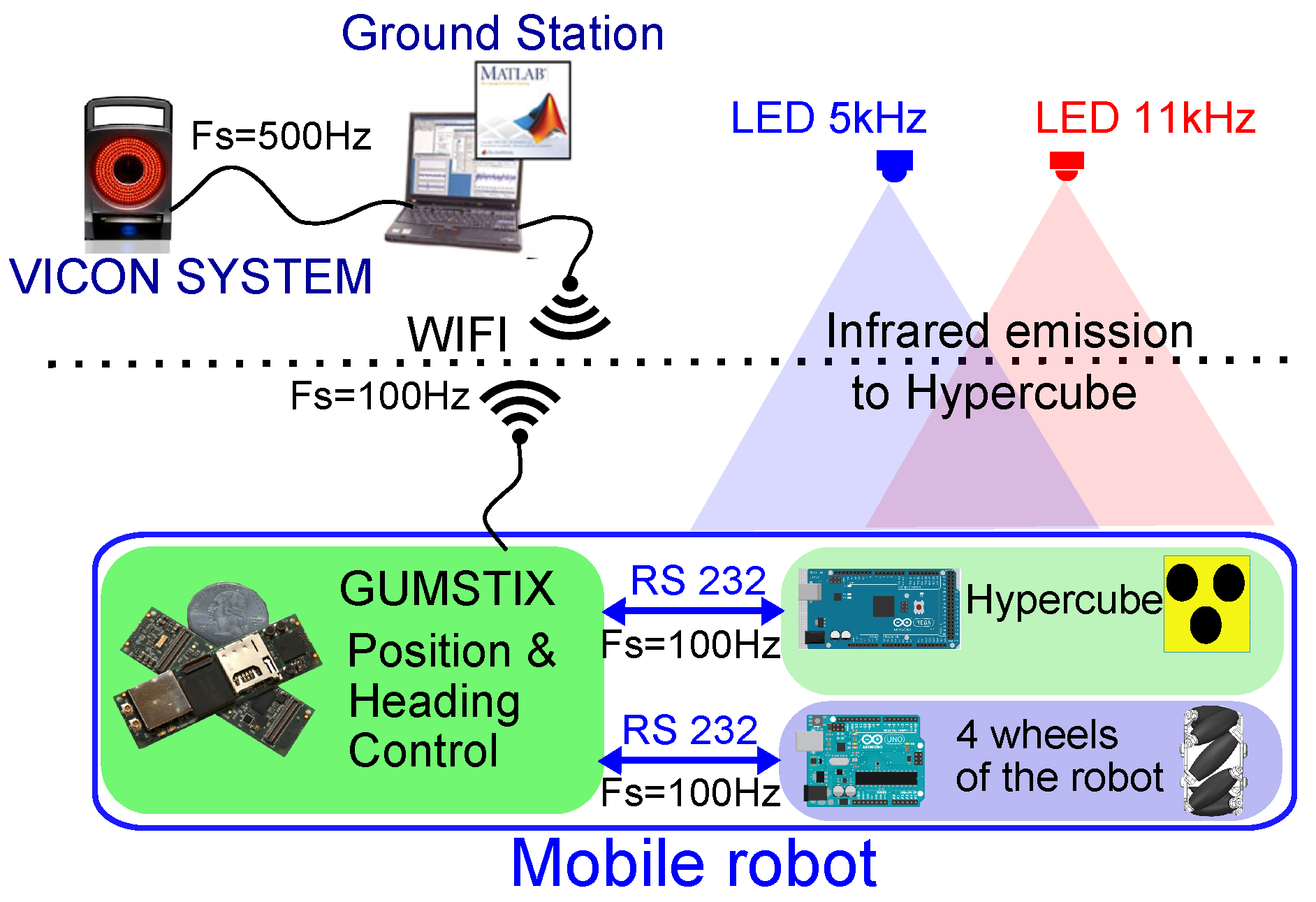

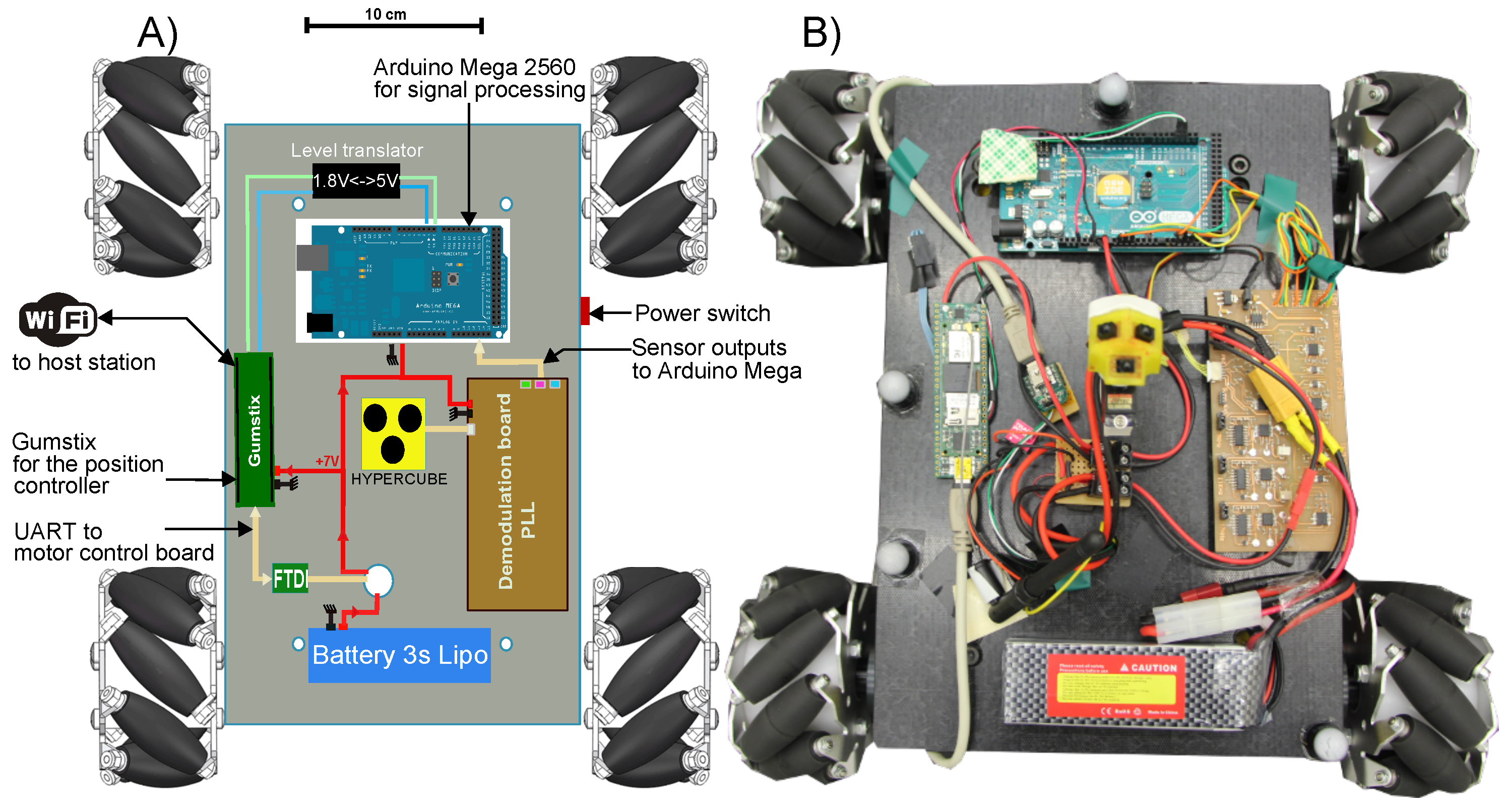

5.2.3. Implementation of the Indoor Localization for the Mobile Robot

- The Vicon motion capture system, which features sub-millimetric accuracy, provides a localization estimate of the mobile robot. The motion capture data are used for comparison purposes.

- The ground station connected to the Vicon system runs the Matlab/Simulink® and QUARC® software. The nonlinear control law of the mobile robot presented in Section 5.2.2 is designed in Matlab/Simulink® and compiled. The resulting program is transferred via a WIFI radio link to the Gumstix microcontroller embedded on the mobile robot, so the control algorithm runs onboard the robot.

5.2.4. Application to the 2D Localization

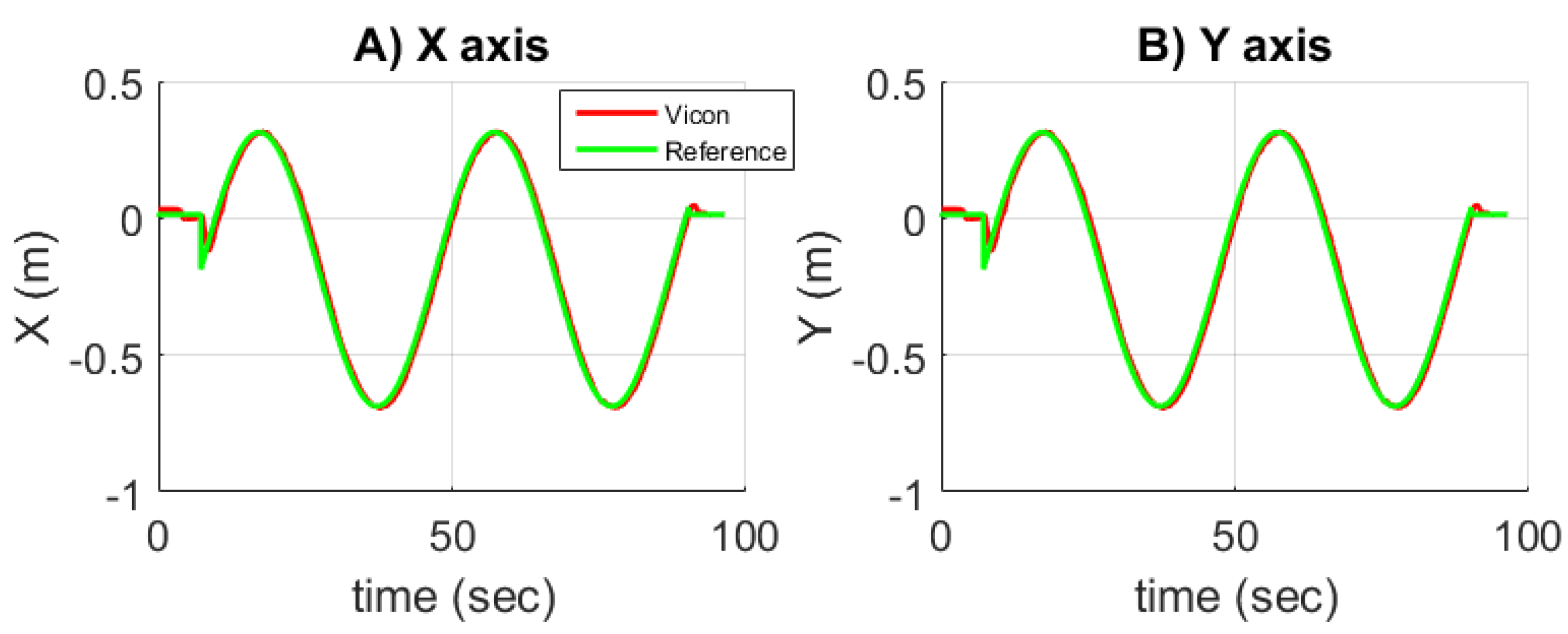

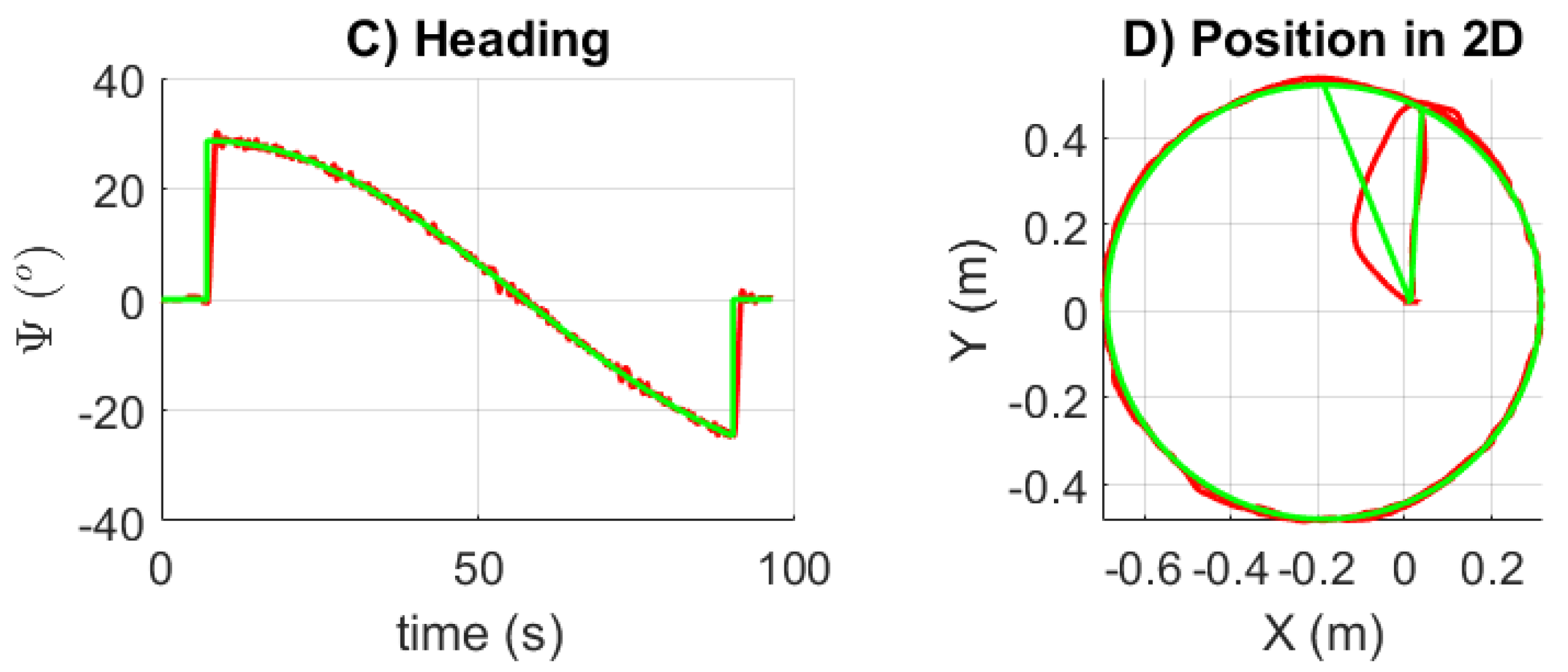

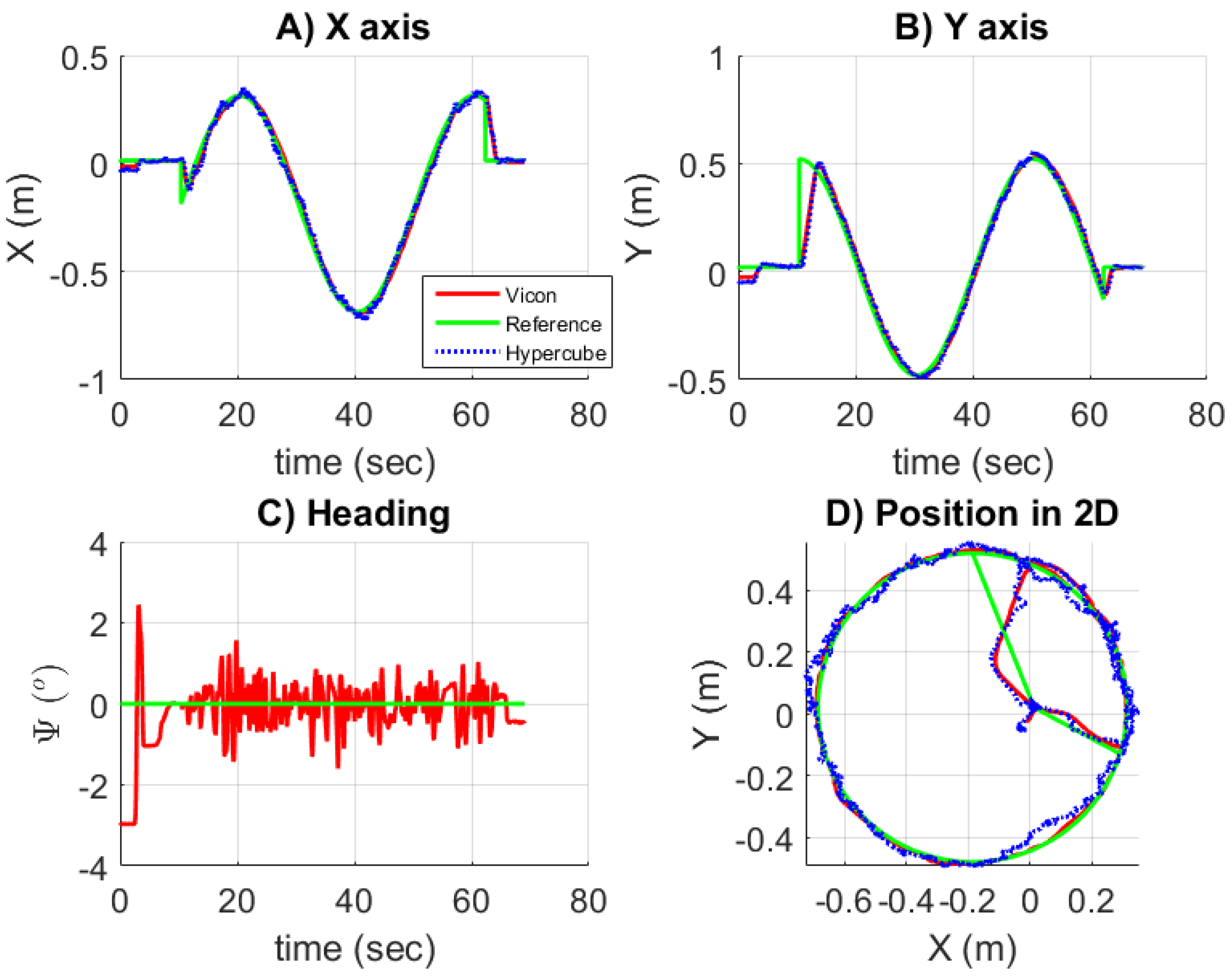

5.2.5. Validation of the Nonlinear Control Law for Trajectory Tracking

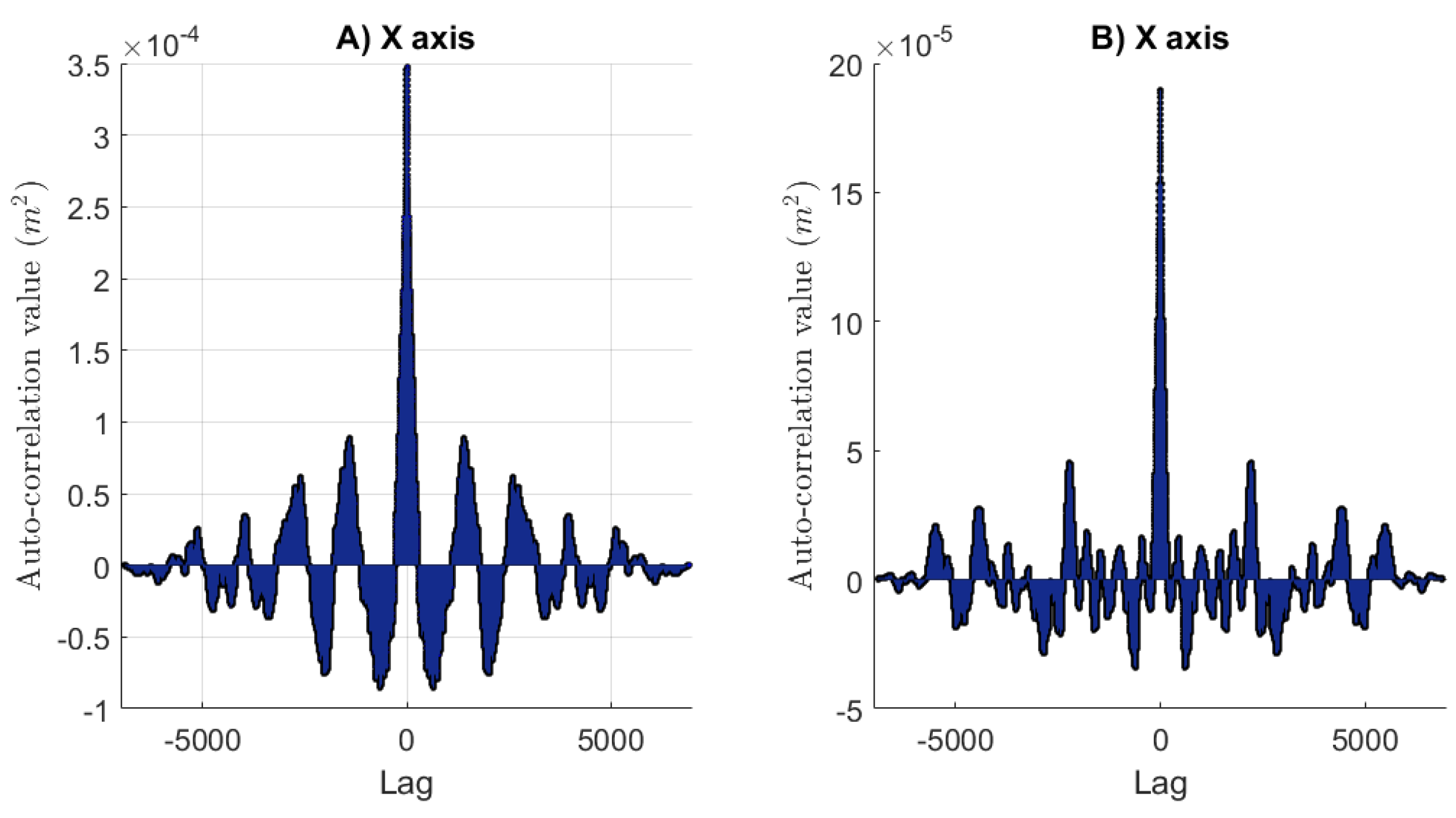

5.2.6. Trajectory Reconstruction Using HyperCube

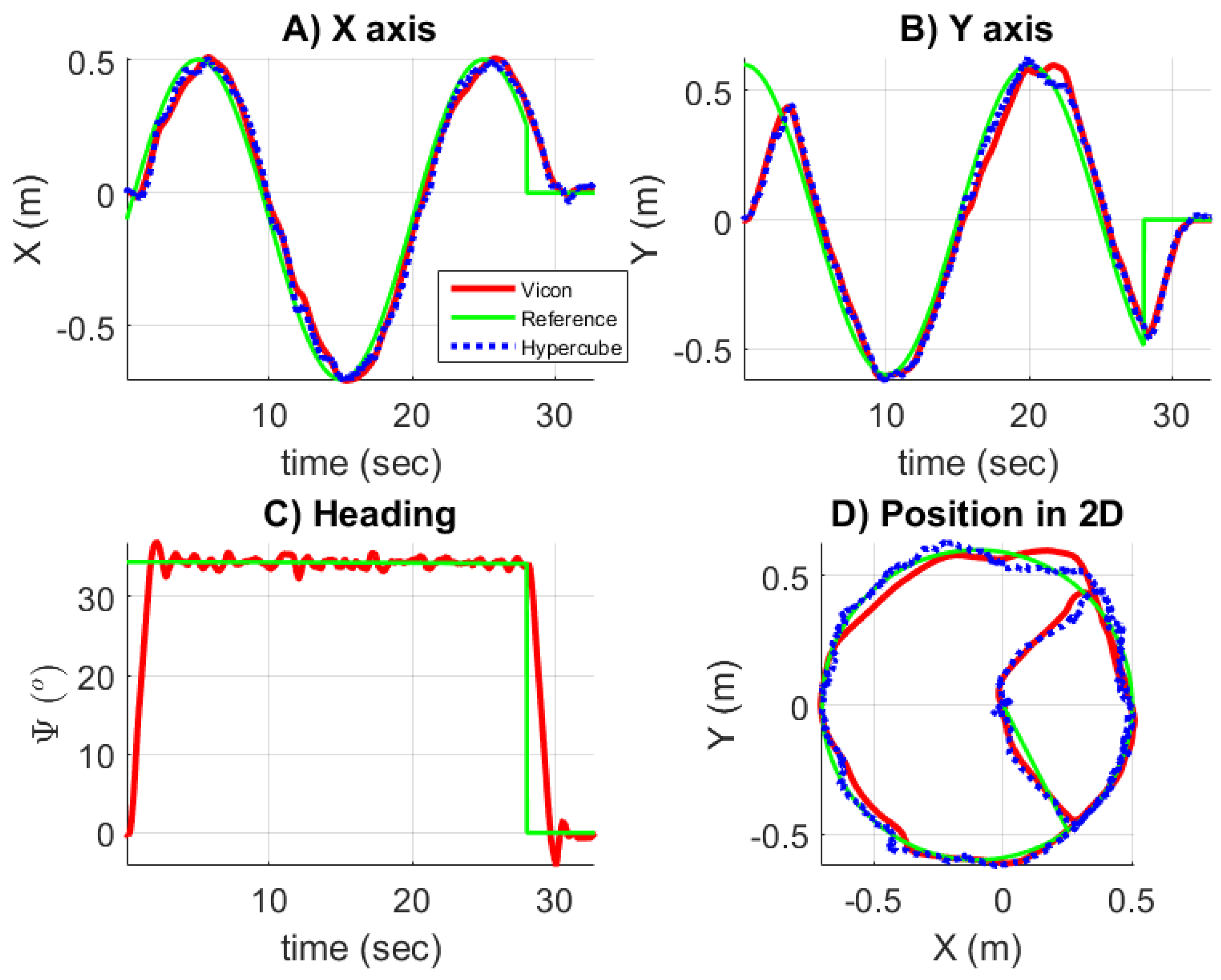

5.2.7. Robot Closed-Loop Control Based on HyperCube

6. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AGV | Automated Guided Vehicle |
| CCD | Charge-Coupled Device |
| CMOS | Complementary Metal Oxide Semiconductor |
| GPS | Global Positioning System |
| IMU | Inertial Measurement Unit |
| IR | Infrared |
| LED | Light Emitting Diode |
| LIDAR | Light Detection and Ranging |
| PD | Photodiode |
| WIFI | Wireless Fidelity |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Raharijaona, T.; Mawonou, R.; Nguyen, T.V.; Colonnier, F.; Boyron, M.; Diperi, J.; Viollet, S. Local Positioning System Using Flickering Infrared LEDs. Sensors 2017, 17, 2518. https://doi.org/10.3390/s17112518