A Fuzzy-Based Approach for Sensing, Coding and Transmission Configuration of Visual Sensors in Smart City Applications

Abstract

1. Introduction

- Sensing: the sensing behavior of visual sensors. For cameras retrieving still images, it may define the sampling frequency, that is, the number of snapshots taken per second. For video monitoring, the sensing behavior may indicate transmission bursts or continuous streaming.

- Coding: source nodes may apply different coding algorithms, with diverse compression ratios and processing costs. Visual data resolution and color patterns are also relevant coding configurations.

- Transmission: visual data may be transmitted in real-time, or transmission latency and jitter may not be a concern. Quality of Service (QoS) policies may also be employed over some traffic, which may be prioritized during transmission.

2. Related Works

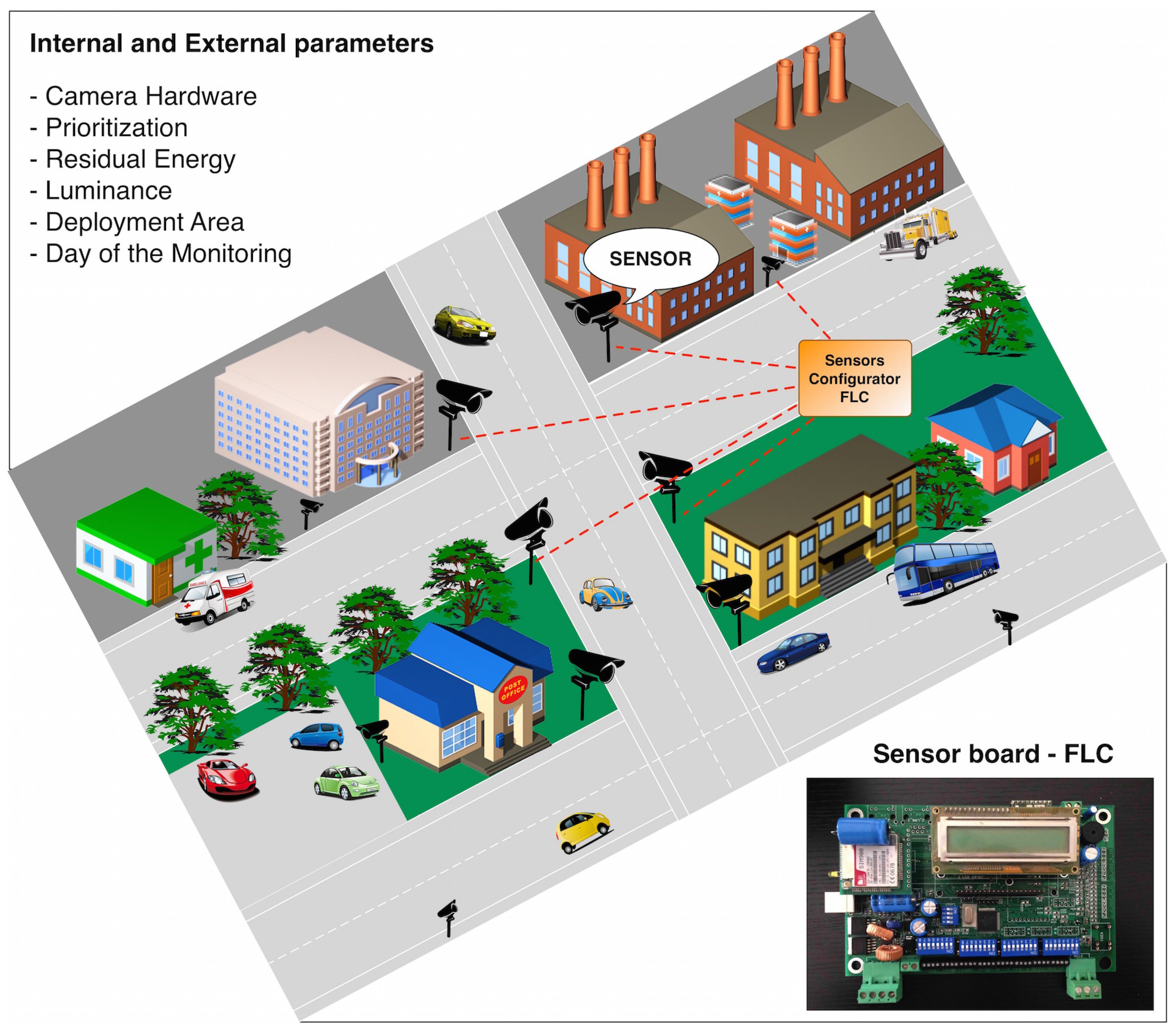

3. Visual Sensing Configuration

3.1. Internal Parameters

- Camera hardware: sensor nodes may be equipped with different types of cameras, which may have different hardware characteristics. Cameras with zooming and rotation capabilities may need to transmit more information for some applications. Lens quality and supported image resolutions are also important parameters that may impact sensor operation.

- Processing power: processing and memory capabilities will determine which multimedia compression algorithms may be executed, affecting sensing quality and energy consumption over the network. This parameter can then guide the configuration of data coding in sensor nodes.

- Event-based prioritization: visual sensors may have different priorities depending on the events being monitored [17,30]. Network services and protocols may consider event-based priorities for optimized transmissions. Moreover, the most relevant nodes may transmit more data than less relevant sensors, depending on the configuration of the considered monitoring application.

- Residual energy: sensor nodes may operate on batteries, which provide finite energy. Therefore, the current energy level of a sensor node may affect the way it retrieves visual information. For example, the sensing frequency of sensor nodes may be reduced when their energy level is below a threshold.

- Security: security concerns may be used to differentiate sensor nodes. As an example, regions with confidentiality requirements may demand the use of robust cryptography algorithms [33], whose use depends on available processing power and efficient energy management.
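The residual-energy rule in the list above can be sketched as a simple threshold check. This is only an illustration: the function name, the halving factor and the numeric values are assumptions, not taken from the paper.

```python
def adjusted_sampling_rate(base_rate_hz: float,
                           energy_j: float,
                           threshold_j: float,
                           reduction_factor: float = 0.5) -> float:
    """Return the sampling rate to use given the node's residual energy.

    reduction_factor is a hypothetical value: the text only says the
    sensing frequency "may be reduced" below a threshold.
    """
    if energy_j < threshold_j:
        # Below the threshold: sample less often to save energy.
        return base_rate_hz * reduction_factor
    return base_rate_hz


# A node sampling at 1 snapshot/s drops to 0.5 snapshot/s once its
# residual energy falls below an (assumed) 1000 J threshold.
low = adjusted_sampling_rate(1.0, energy_j=500.0, threshold_j=1000.0)
ok = adjusted_sampling_rate(1.0, energy_j=1500.0, threshold_j=1000.0)
```

In the fuzzy approach of Section 4, energy is one of several inputs rather than a hard switch; the threshold form is shown here only because it is the example the text gives.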

3.2. External Parameters

- Luminance: some visual sensors may be able to retrieve visual information during the night or in dark places, but this may not be true for other sensors. The luminance intensity (measured in lux) can be considered when defining the sensing frequency of visual sensors.

- Deployment area: depending on the considered application and deployment area, visual sensors may need to transmit more visual data. For instance, in public security applications, visual sensors deployed in areas with high levels of criminality may be required to transmit more data than if they were deployed in other areas (even in the absence of events of interest). Note that the deployment area is significant across a scenario that may comprise many different WVSNs, whereas the (internal) prioritization parameter is significant only within the considered sensor network.

- Day of the monitoring: a city is a complex and dynamic environment in which some recurring patterns can be identified. For example, traffic is affected by the day of the week, since fewer cars may be moving around on weekends or holidays. Thus, depending on the application, visual monitoring may be influenced by the day of the monitoring.

- Relevance of the system: in a smart city scenario, some systems may be more relevant than others. If we have multiple deployed wireless visual sensor networks, a pre-configured relevance level for the different network operations may be considered as an external parameter, impacting the configuration of the sensor nodes.

4. Proposed Approach

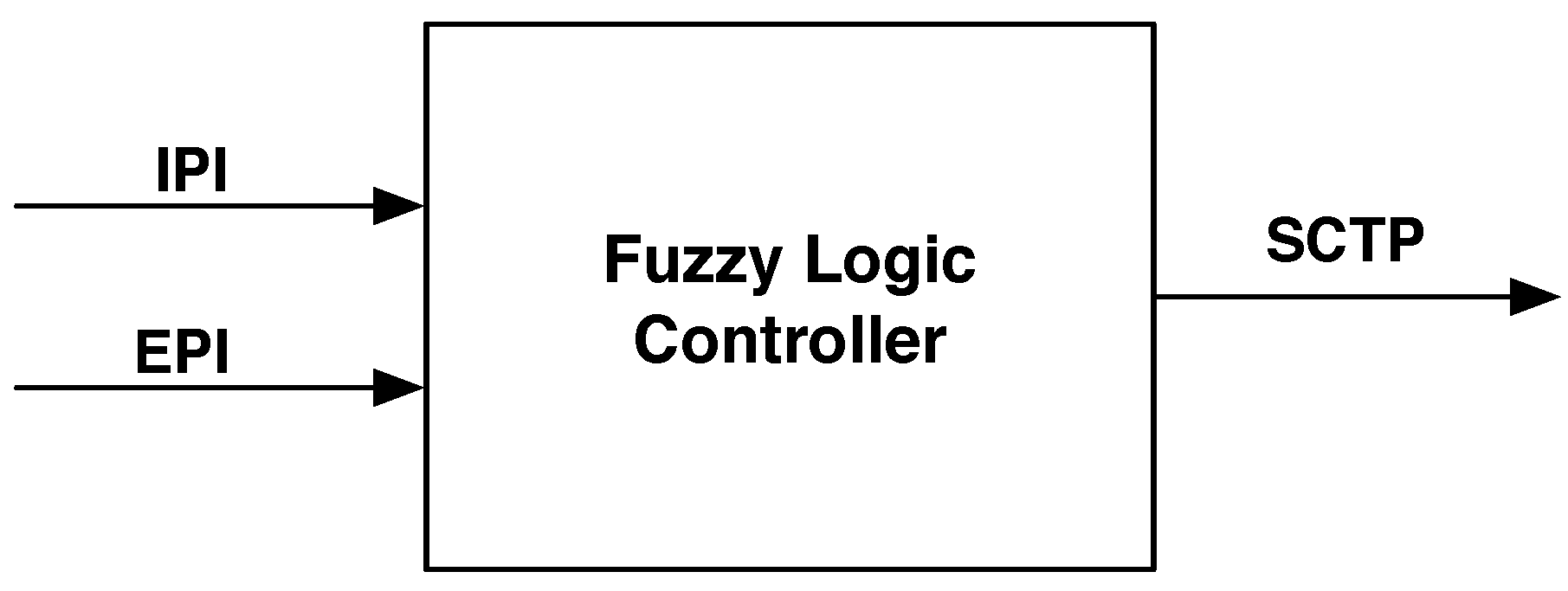

4.1. Fuzzy Logic Controller

4.2. Configuring the FLC

- Very Low (VL);

- Low (L);

- Medium (M);

- High (H);

- Very High (VH).
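To make the five linguistic values concrete, the sketch below fuzzifies a normalized input with triangular membership functions. The intervals are not recoverable from this text, so evenly spaced peaks at 0, 0.25, 0.5, 0.75 and 1 on a [0, 1] scale are assumed purely for illustration; the function names are hypothetical as well.

```python
LEVELS = ["VL", "L", "M", "H", "VH"]
STEP = 0.25  # assumed spacing between peaks on a normalized [0, 1] input


def triangular(x: float, left: float, peak: float, right: float) -> float:
    """Triangular membership: 1 at the peak, falling linearly to 0 at the feet."""
    if x == peak:
        return 1.0
    if x <= left or x >= right:
        return 0.0
    if x < peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify(x: float) -> dict:
    """Map a crisp value in [0, 1] to a membership degree per linguistic value."""
    return {
        name: triangular(x, (i - 1) * STEP, i * STEP, (i + 1) * STEP)
        for i, name in enumerate(LEVELS)
    }


degrees = fuzzify(0.375)  # halfway between the assumed L and M peaks
```

With these assumed shapes, an input of 0.375 belongs equally to L and M (0.5 each) and to no other set. A real controller would use the intervals defined by the authors, and possibly different shapes (for example Gaussian).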

5. Results

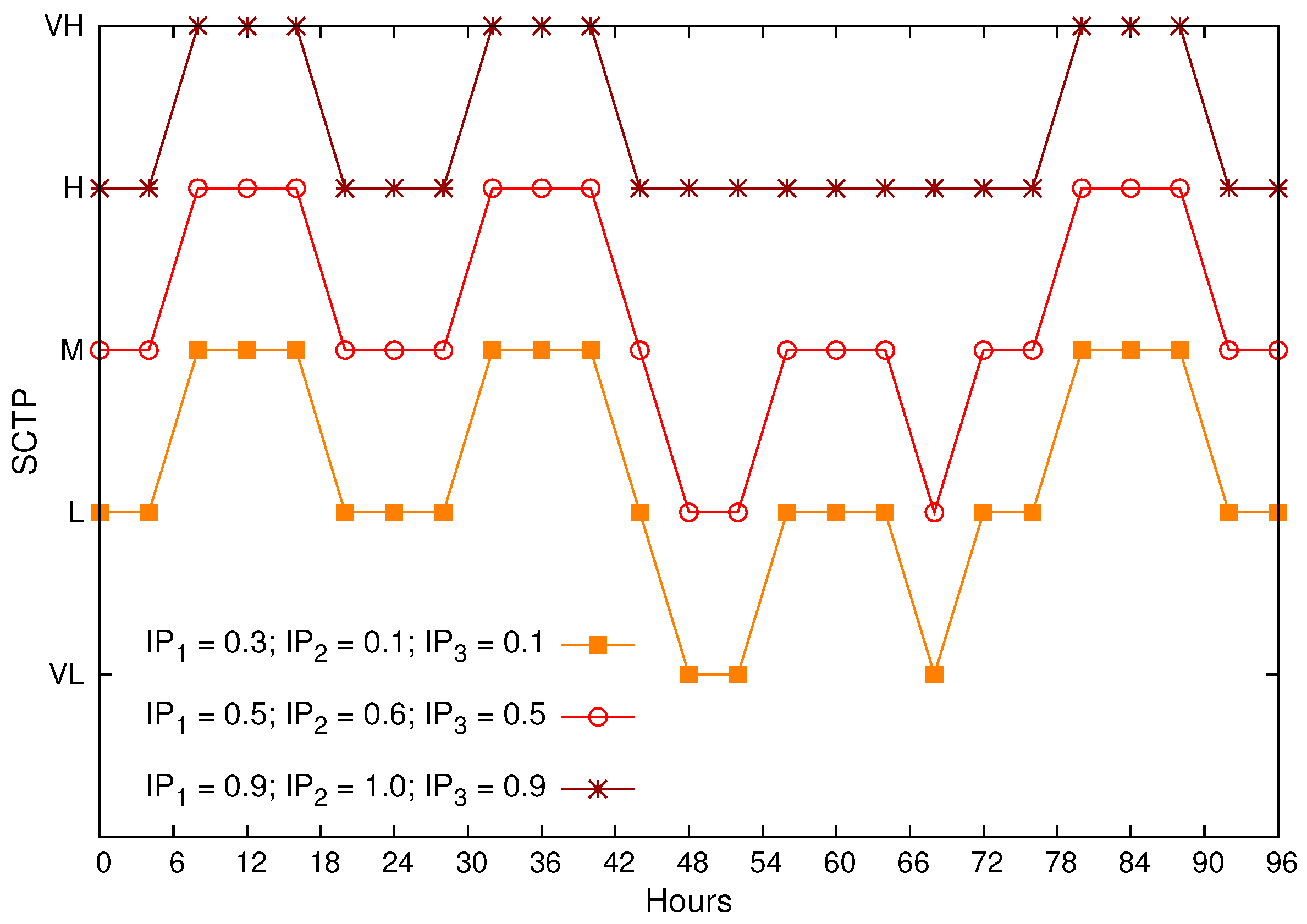

5.1. Computing SCTP for a Public Security Application

5.2. SCTP Computation in Smart City Scenarios

5.3. Relevant Issues When Implementing the Proposed Approach

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Stoianov, I.; Nachman, L.; Madden, S.; Tokmouline, T.; Csail, M. PIPENET: A Wireless Sensor Network for Pipeline Monitoring. In Proceedings of the International Symposium on Information Processing in Sensor Networks, Cambridge, MA, USA, 25–27 April 2007; pp. 264–273.

- Gungor, V.; Lu, B.; Hancke, G. Opportunities and Challenges of Wireless Sensor Networks in Smart Grid. IEEE Trans. Ind. Electron. 2010, 57, 3557–3564.

- Hancke, G.P.; Silva, B.D.C.E.; Hancke, G.P., Jr. The Role of Advanced Sensing in Smart Cities. Sensors 2012, 13, 393–425.

- Collotta, M.; Bello, L.L.; Pau, G. A novel approach for dynamic traffic lights management based on Wireless Sensor Networks and multiple fuzzy logic controllers. Expert Syst. Appl. 2015, 42, 5403–5415.

- Lo, S.W.; Wu, J.H.; Lin, F.P.; Hsu, C.H. Visual Sensing for Urban Flood Monitoring. Sensors 2015, 15, 20006–20029.

- Calavia, L.; Baladrón, C.; Aguiar, J.M.; Carro, B.; Sánchez-Esguevillas, A. A Semantic Autonomous Video Surveillance System for Dense Camera Networks in Smart Cities. Sensors 2012, 12, 10407–10429.

- Almalkawi, I.; Zapata, M.; Al-Karaki, J.; Morillo-Pozo, J. Wireless multimedia sensor networks: Current trends and future directions. Sensors 2010, 10, 6662–6717.

- Chen, W.T.; Chen, P.Y.; Lee, W.S.; Huang, C.-F. Design and Implementation of a Real Time Video Surveillance System with Wireless Sensor Networks. In Proceedings of the IEEE Vehicular Technology Conference, Singapore, 11–14 May 2008; pp. 218–222.

- Baroffio, L.; Bondi, L.; Cesana, M.; Redondi, A.E.; Tagliasacchi, M. A visual sensor network for parking lot occupancy detection in Smart Cities. In Proceedings of the 2015 IEEE 2nd World Forum on Internet of Things (WF-IoT), Milano, Italy, 14–16 December 2015; pp. 745–750.

- Batty, M.; Axhausen, K.; Giannotti, F.; Pozdnoukhov, A.; Bazzani, A.; Wachowicz, M.; Ouzounis, G.; Portugali, Y. Smart cities of the future. Eur. Phys. J. Spec. Top. 2012, 214, 481–518.

- Kruger, C.; Hancke, G.; Bhatt, D. Wireless sensor network for building evacuation. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference, Graz, Austria, 13–16 May 2012; pp. 2572–2577.

- Kim, S.; Pakzad, S.; Culler, D.; Demmel, J.; Fenves, G.; Glaser, S.; Turon, M. Health Monitoring of Civil Infrastructures Using Wireless Sensor Networks. In Proceedings of the International Symposium on Information Processing in Sensor Networks, Cambridge, MA, USA, 22–24 April 2007; pp. 254–263.

- Myung, H.; Lee, S.; Lee, B.J. Structural health monitoring robot using paired structured light. In Proceedings of the IEEE International Symposium on Industrial Electronics, Lausanne, Switzerland, 8–10 July 2009; pp. 396–401.

- Costa, D.G.; Guedes, L.A. A Survey on Multimedia-Based Cross-Layer Optimization in Visual Sensor Networks. Sensors 2011, 11, 5439–5468.

- Lee, S.; Lee, S.; Bovik, A. Optimal image transmission over Visual Sensor Networks. In Proceedings of the IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 161–164.

- Lee, J.H.; Jung, I.B. Adaptive-Compression Based Congestion Control Technique for Wireless Sensor Networks. Sensors 2010, 10, 2919–2945.

- Costa, D.G.; Guedes, L.A. Exploiting the sensing relevancies of source nodes for optimizations in visual sensor networks. Multimed. Tools Appl. 2013, 64, 549–579.

- Costa, D.G.; Guedes, L.A.; Vasques, F.; Portugal, P. Adaptive monitoring relevance in camera networks for critical surveillance applications. Int. J. Distrib. Sens. Netw. 2013, 2013, 836721.

- Rosario, D.; Zhao, Z.; Braun, T.; Cerqueira, E. A Cross-Layer QoE-Based Approach for Event-Based Multi-Tier Wireless Multimedia Sensor Networks. Int. J. Adapt. Resilient Auton. Syst. 2014, 5, 1–18.

- Costa, D.G.; Guedes, L.A.; Vasques, F.; Portugal, P. A routing mechanism based on the sensing relevancies of source nodes for time-critical applications in visual sensor networks. In Proceedings of the IEEE/IFIP Wireless Days, Dublin, Ireland, 21–23 November 2012.

- Zhang, L.; Hauswirth, M.; Shu, L.; Zhou, Z.; Reynolds, V.; Han, G. Multi-priority multi-path selection for video streaming in wireless multimedia sensor networks. Lect. Notes Comput. Sci. 2008, 5061, 439–452.

- Lecuire, V.; Duran-Faundez, C.; Krommenacker, N. Energy-efficient transmission of wavelet-based images in wireless sensor networks. J. Image Video Process. 2007, 2007, 47345.

- Costa, D.G.; Guedes, L.A.; Vasques, F.; Portugal, P. Energy-Efficient Packet Relaying in Wireless Image Sensor Networks Exploiting the Sensing Relevancies of Source Nodes and DWT Coding. J. Sens. Actuator Netw. 2013, 2, 424–448.

- Duran-Faundez, C.; Costa, D.G.; Lecuire, V.; Vasques, F. A Geometrical Approach to Compute Source Prioritization Based on Target Viewing in Wireless Visual Sensor Networks. In Proceedings of the IEEE World Conference on Factory Communication Systems, Aveiro, Portugal, 3–6 May 2016.

- Aghdam, S.M.; Khansari, M.; Rabiee, H.R.; Salehi, M. WCCP: A congestion control protocol for wireless multimedia communication in sensor networks. Ad Hoc Netw. 2014, 13, 516–534.

- Steine, M.; Viet Ngo, C.; Serna Oliver, R.; Geilen, M.; Basten, T.; Fohler, G.; Decotignie, J.D. Proactive Reconfiguration of Wireless Sensor Networks. In Proceedings of the 14th ACM International Conference on Modeling, Analysis and Simulation of Wireless and Mobile Systems (MSWiM ’11), Miami, FL, USA, 31 October–4 November 2011; pp. 31–40.

- Szczodrak, M.; Gnawali, O.; Carloni, L.P. Dynamic Reconfiguration of Wireless Sensor Networks to Support Heterogeneous Applications. In Proceedings of the 2013 IEEE International Conference on Distributed Computing in Sensor Systems, Cambridge, MA, USA, 20 May–23 May 2013; pp. 52–61.

- ElGammal, M.; Eltoweissy, M. Distributed dynamic context-aware task-based configuration of wireless sensor networks. In Proceedings of the 2011 IEEE Wireless Communications and Networking Conference, Cancun, Mexico, 28–31 March 2011; pp. 1191–1196.

- Cecílio, J.; Furtado, P. Configuration and data processing over a heterogeneous wireless sensor networks. In Proceedings of the 2011 International Conference on Distributed Computing in Sensor Systems and Workshops (DCOSS), Barcelona, Spain, 27–29 June 2011; pp. 1–5.

- Costa, D.G.; Guedes, L.A.; Vasques, F.; Portugal, P. Research Trends in Wireless Visual Sensor Networks When Exploiting Prioritization. Sensors 2015, 15, 1760–1784.

- Munishwar, V.; Abu-Ghazaleh, N. Coverage algorithms for visual sensor networks. ACM Trans. Sens. Netw. 2013, 9, 45.

- Osais, Y.; St-Hilaire, M.; Yu, F. Directional sensor placement with optimal sensing ranging, field of view and orientation. Mob. Netw. Appl. 2010, 15, 216–225.

- Gonçalves, D.; Costa, D.G. Energy-Efficient Adaptive Encryption for Wireless Visual Sensor Networks. In Proceedings of the Brazilian Symposium on Computer Networks and Distributed Systems, Salvador, Brazil, 30 May–3 June 2016.

- Zadeh, L.A. The concept of a linguistic variable and its application to approximate reasoning—II. Inf. Sci. 1975, 8, 301–357.

- Patricio, M.; Castanedo, F.; Berlanga, A.; Perez, O.; Garcia, J.; Molina, J. Computational Intelligence in Visual Sensor Networks: Improving Video Processing Systems. In Computational Intelligence in Multimedia Processing: Recent Advances; Studies in Computational Intelligence; Hassanien, A.E., Abraham, A., Kacprzyk, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; Volume 96, pp. 351–377.

- Sonmez, C.; Incel, O.; Isik, S.; Donmez, M.; Ersoy, C. Fuzzy-based congestion control for wireless multimedia sensor networks. EURASIP J. Wirel. Commun. Netw. 2014, 2014.

- Lin, K.; Wang, X.; Cui, S.; Tan, Y. Heterogeneous feature fusion-based optimal face image acquisition in visual sensor network. In Proceedings of the IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Pisa, Italy, 11–14 May 2015; pp. 1078–1083.

- Collotta, M.; Pau, G.; Salerno, V.M.; Scatà, G. A fuzzy based algorithm to manage power consumption in industrial Wireless Sensor Networks. In Proceedings of the 2011 9th IEEE International Conference on Industrial Informatics, Lisbon, Portugal, 26–29 July 2011; pp. 151–156.

- Maurya, S.; Jain, V.K. Fuzzy based energy efficient sensor network protocol for precision agriculture. Comput. Electron. Agric. 2016, 130, 20–37.

- Collotta, M.; Cascio, A.L.; Pau, G.; Scatá, G. A fuzzy controller to improve CSMA/CA performance in IEEE 802.15.4 industrial wireless sensor networks. In Proceedings of the 2013 IEEE 18th Conference on Emerging Technologies Factory Automation (ETFA), Cagliari, Italy, 10–13 September 2013; pp. 1–4.

- Chen, J. Improving Life Time of Wireless Sensor Networks by Using Fuzzy c-means Induced Clustering. In Proceedings of the World Automation Congress (WAC), Puerto Vallarta, Mexico, 24–28 June 2012; pp. 1–4.

- Olunloyo, V.O.S.; Ajofoyinbo, A.M.; Ibidapo-Obee, O. On Development of Fuzzy Controller: The Case of Gaussian and Triangular Membership Functions. J. Signal Inf. Process. 2011, 2, 257–265.

- Rahimi, M.; Baer, R.; Iroezi, O.I.; Garcia, J.C.; Warrior, J.; Estrin, D.; Srivastava, M. Cyclops: In situ image sensing and interpretation in wireless sensor networks. In Proceedings of the International Conference on Embedded Networked Sensor Systems, San Diego, CA, USA, 2–4 November 2005.

- Hengstler, S.; Prashanth, D.; Fong, S.; Aghajan, H. MeshEye: A hybrid-resolution smart camera mote for applications in distributed intelligent surveillance. In Proceedings of the International Symposium on Information Processing in Sensor Networks, Cambridge, MA, USA, 25–27 April 2007.

- Rowe, A.; Rosenberg, C.; Nourbakhsh, I. A Second Generation Low Cost Embedded Color Vision System. In Proceedings of the Computer Vision and Pattern Recognition Conference, San Diego, CA, USA, 20–25 June 2005.

- Microchip. PIC24FJ256GB108—16-Bit PIC and dsPIC Microcontrollers; Microchip Technology: Chandler, AZ, USA, 2009.

- Albino, V.; Berardi, U.; Dangelico, R.M. Smart Cities: Definitions, Dimensions, Performance, and Initiatives. J. Urban Technol. 2015, 22, 3–21.

| Work | Parameter | Description |
|---|---|---|
| [19] | Events | Events of interest are detected and used to trigger transmissions from sensor nodes, using a proposed multi-tier architecture. |
| [18] | Events | Scalar sensors are used to detect events of interest. Different levels of configurations of visual sensors are established based on the priority of detected events. |
| [20] | Events | Source nodes with higher event-based priorities transmit packets through transmission paths with lower latency. |
| [21] | Media type | The original media stream is split into image and audio, giving each resulting sub-stream a particular priority when choosing transmission paths. |
| [22] | Node’s status | Relaying nodes may decide to drop packets according to their residual energy level and the relevance of DWT (Discrete Wavelet Transform) subbands. |
| [23] | Node’s status | The energy level of sensor nodes is considered when processing packets to be relayed. |
| [24] | Data content | The viewed segments of targets’ perimeters are associated with priority levels. The most relevant sources transmit higher quality visual data. |
| [25] | Network QoS | The transmission rate of source nodes is adjusted when facing congestion, silently dropping less relevant packets at source nodes. |

| Range of Degradation | or (%) |

|---|---|

| Linguistic Values | Interval |
|---|---|
| VL | |
| L | |
| M | |
| H | |
| VH | |

| SCTP | VL | L | M | H | VH |
|---|---|---|---|---|---|
| VL | VL | VL | L | M | H |
| L | L | L | M | M | H |
| M | L | M | M | H | H |
| H | M | M | H | H | VH |
| VH | M | H | H | VH | VH |
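The rule matrix above can be read as a two-input lookup: pick the row by the linguistic value of one input, the column by the other, and read off the SCTP level. The sketch below encodes the table as printed. Which input labels the rows and which labels the columns is not recoverable from this text, so `row_level` and `col_level` are placeholder names, and the function name is hypothetical.

```python
LEVELS = ["VL", "L", "M", "H", "VH"]

# One entry per table row; each list follows the column order in LEVELS.
RULES = {
    "VL": ["VL", "VL", "L", "M", "H"],
    "L":  ["L",  "L",  "M", "M", "H"],
    "M":  ["L",  "M",  "M", "H", "H"],
    "H":  ["M",  "M",  "H", "H", "VH"],
    "VH": ["M",  "H",  "H", "VH", "VH"],
}


def sctp_level(row_level: str, col_level: str) -> str:
    """Look up the SCTP linguistic value for a pair of input levels."""
    return RULES[row_level][LEVELS.index(col_level)]


example = sctp_level("H", "VH")  # row H, column VH
```

Note that the matrix is not symmetric (row VL with column L gives VL, while row L with column VL gives L), so the order of the two arguments matters.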

| Parameter | D | | |
|---|---|---|---|
| Internal (Camera’s hardware) | | | |
| Cyclops [43] | 0 | 0 | 10 |
| MeshEye [44] | 5 | 0 | 10 |
| CMUCam [45] | 10 | 0 | 10 |
| Internal (Prioritization) | | | |
| Sensing priority | 0–15 | 0 | 15 |
| Internal (Energy) | | | |
| Energy level | 0–20,000 J | 20,000 J | 0 J |
| External (Luminance) | | | |
| Luminance | 10–100,000 lux | 100,000 lux | 10 lux |
| External (Day) | | | |
| Monday-Friday | 10 | 0 | 10 |
| Saturday | 5 | 0 | 10 |
| Sunday | 0 | 0 | 10 |
| External (Deployment area) | | | |
| Avenues | 0 | 0 | 10 |
| Streets | 5 | 0 | 10 |
| Public parks | 8 | 0 | 10 |
| Crowded areas | 10 | 0 | 10 |

| SCTP | Sensing | Coding | Transmission |
|---|---|---|---|
| VL | 0.1 snapshot/s | SQCIF | No guarantees |
| L | 0.2 snapshot/s | QCIF | No guarantees |
| M | 0.5 snapshot/s | SCIF | Reliable |
| H | 1 snapshot/s | CIF | Reliable and real-time |
| VH | 2 snapshots/s | 4CIF | Reliable and real-time |
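Once an SCTP level is computed, the table above maps it to a concrete sensing, coding and transmission configuration. A minimal sketch of that mapping follows; the table values are copied as printed, while the class, field and function names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorConfig:
    snapshots_per_second: float  # sensing
    resolution: str              # coding
    transmission: str            # transmission service


# Values copied from the SCTP configuration table.
SCTP_CONFIG = {
    "VL": SensorConfig(0.1, "SQCIF", "No guarantees"),
    "L":  SensorConfig(0.2, "QCIF", "No guarantees"),
    "M":  SensorConfig(0.5, "SCIF", "Reliable"),
    "H":  SensorConfig(1.0, "CIF", "Reliable and real-time"),
    "VH": SensorConfig(2.0, "4CIF", "Reliable and real-time"),
}


def configure(sctp: str) -> SensorConfig:
    """Return the configuration associated with an SCTP linguistic value."""
    return SCTP_CONFIG[sctp]


def sampling_interval_s(config: SensorConfig) -> float:
    """Seconds between consecutive snapshots for a given configuration."""
    return 1.0 / config.snapshots_per_second


cfg = configure("H")
```

For example, a sensor assigned H takes one CIF snapshot per second over a reliable, real-time service, while a sensor assigned VL takes one SQCIF snapshot roughly every 10 s with no delivery guarantees.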

| Case | SCTP | ||||||||||||
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | = 0.38 | = 0.17 | VL | ||||||||||
| 0.34 | 0.32 | 0.45 | 0.35 | 0.21 | 0.54 | 0.58 | 0.12 | 0.26 | 0.21 | 0.16 | 0.28 | ||
| 2 | = 0.19 | = 0.56 | L | ||||||||||
| 0.63 | 0.12 | 0.21 | 0.24 | 0.16 | 0.40 | 0.38 | 0.64 | 0.45 | 0.62 | 0.17 | 0.21 | ||
| 3 | = 0.37 | = 0.73 | M | ||||||||||
| 0.43 | 0.35 | 0.13 | 0.55 | 0.44 | 0.34 | 0.51 | 0.71 | 0.25 | 0.83 | 0.24 | 0.67 | ||
| 4 | = 0.79 | = 0.63 | H | ||||||||||
| 0.25 | 0.88 | 0.08 | 0.97 | 0.67 | 0.73 | 0.19 | 0.54 | 0.69 | 0.69 | 0.12 | 0.43 | ||
| 5 | = 0.95 | = 0.74 | VH | ||||||||||
| 0.48 | 0.93 | 0.34 | 0.98 | 0.18 | 0.94 | 0.38 | 0.67 | 0.37 | 0.97 | 0.25 | 0.51 | ||

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Costa, D.G.; Collotta, M.; Pau, G.; Duran-Faundez, C. A Fuzzy-Based Approach for Sensing, Coding and Transmission Configuration of Visual Sensors in Smart City Applications. Sensors 2017, 17, 93. https://doi.org/10.3390/s17010093