Vertical Corner Feature Based Precise Vehicle Localization Using 3D LIDAR in Urban Area

Abstract

1. Introduction

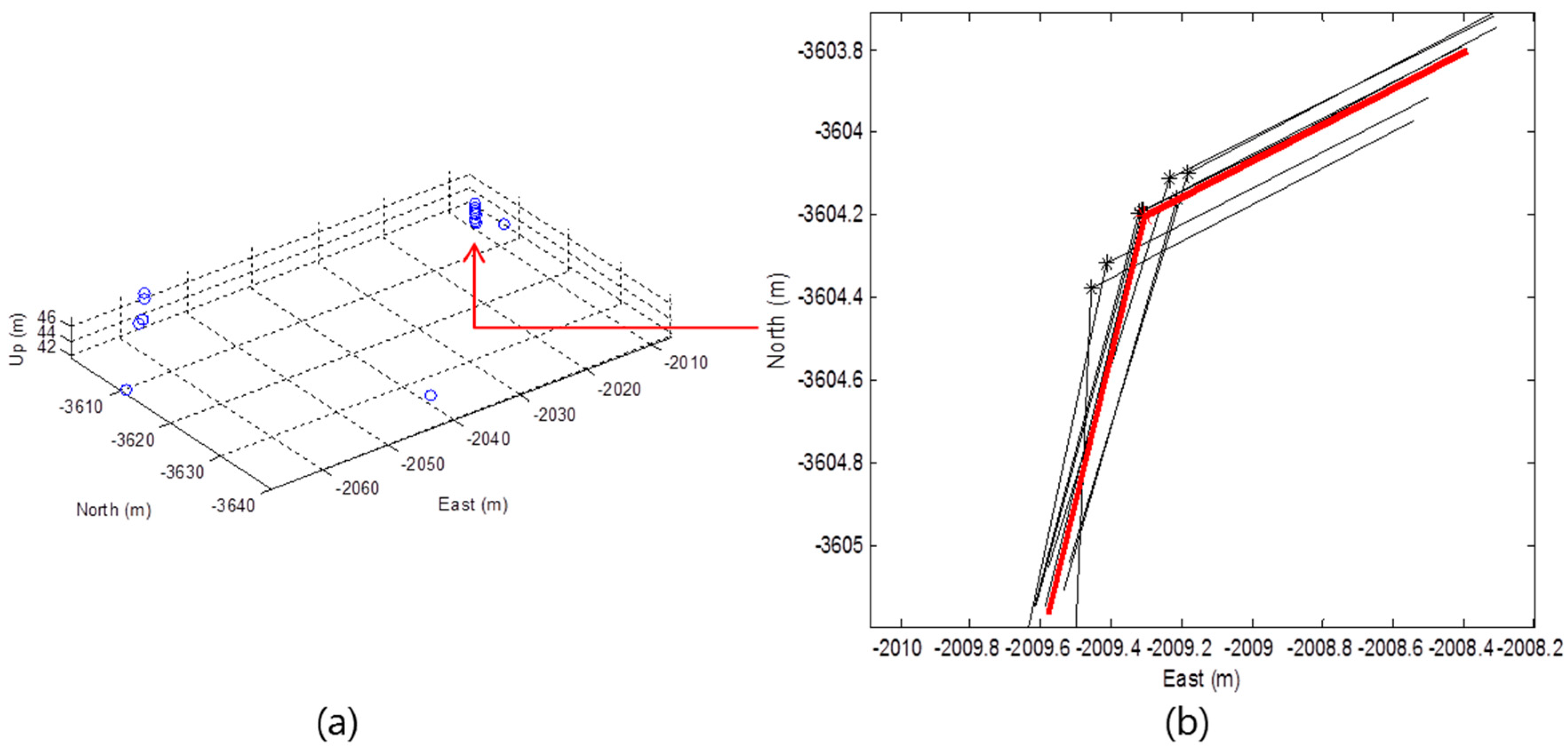

2. Corner Extraction

2.1. Corner Definition

2.2. Corner Extraction

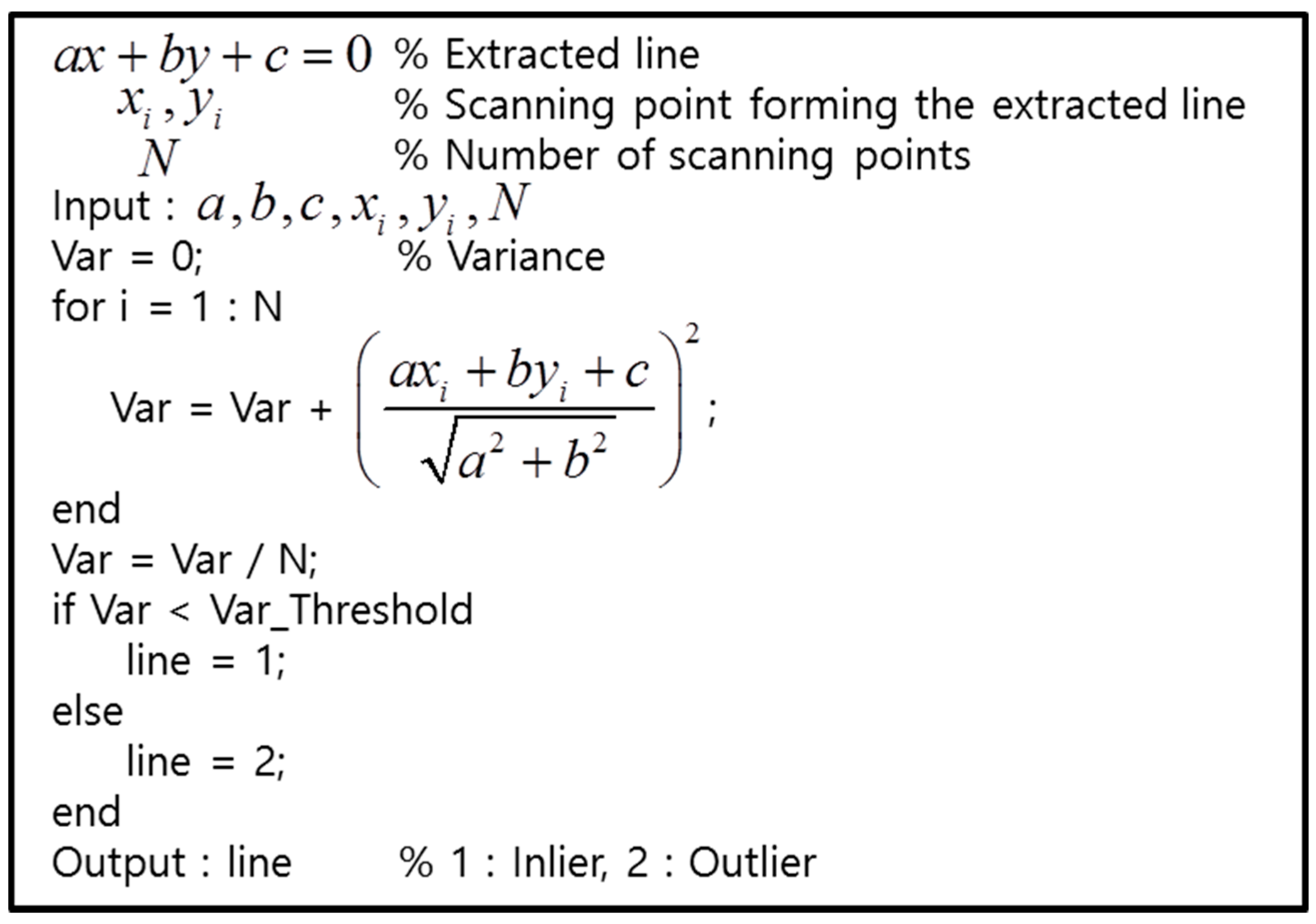

2.2.1. Line Extraction

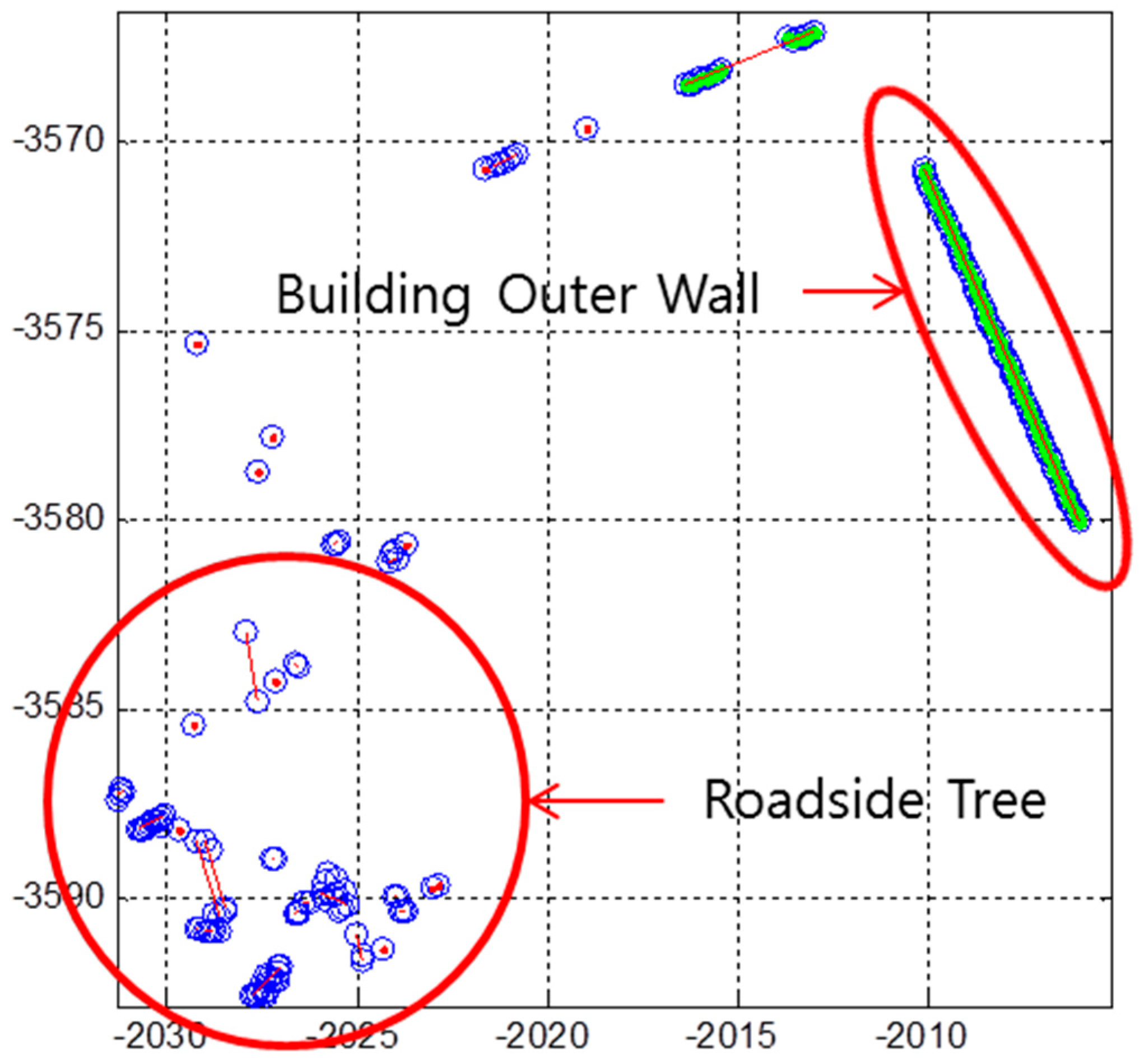

2.2.2. Outlier Removal

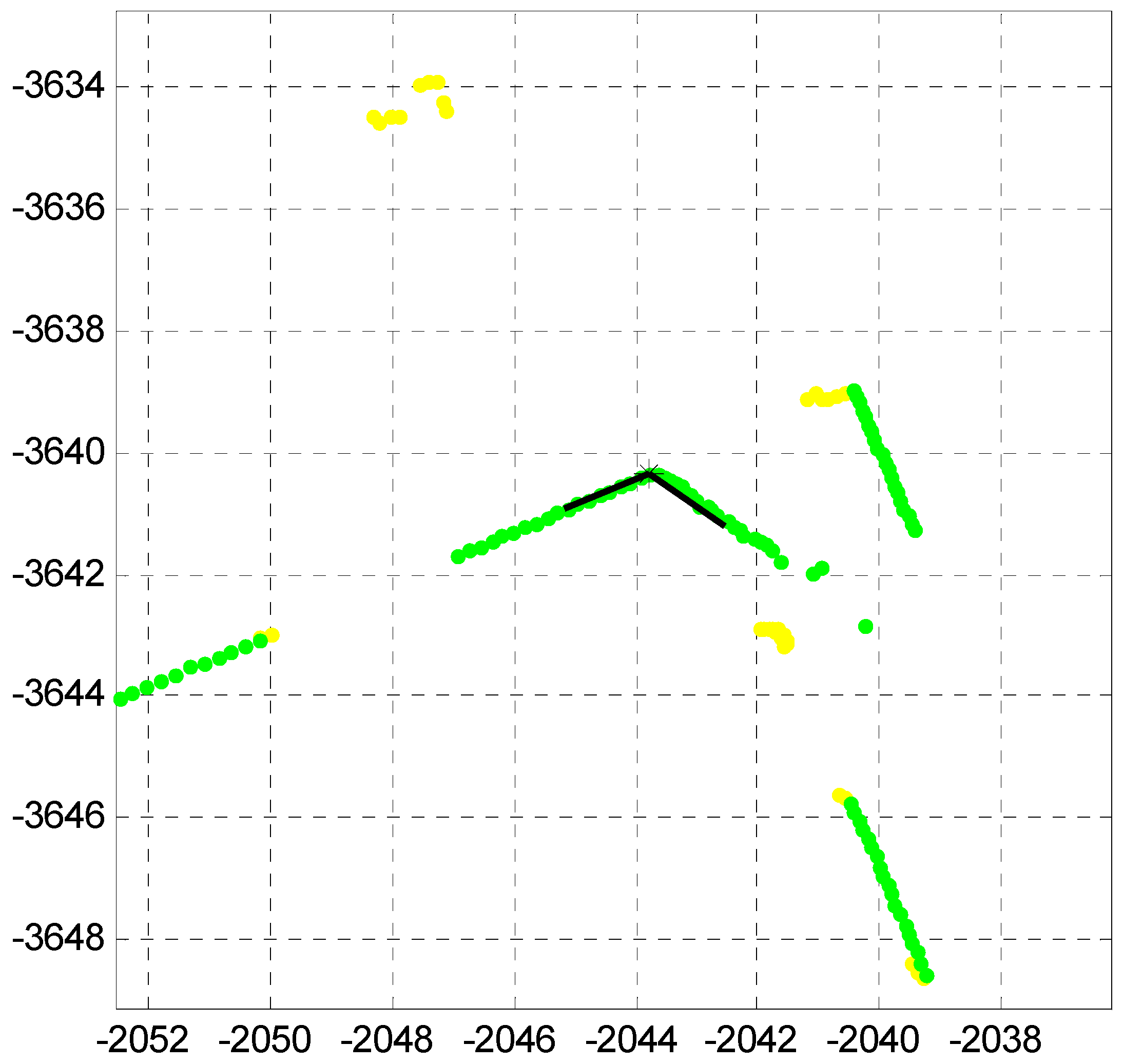

2.2.3. Corner Candidate Extraction

2.2.4. Corner Determination

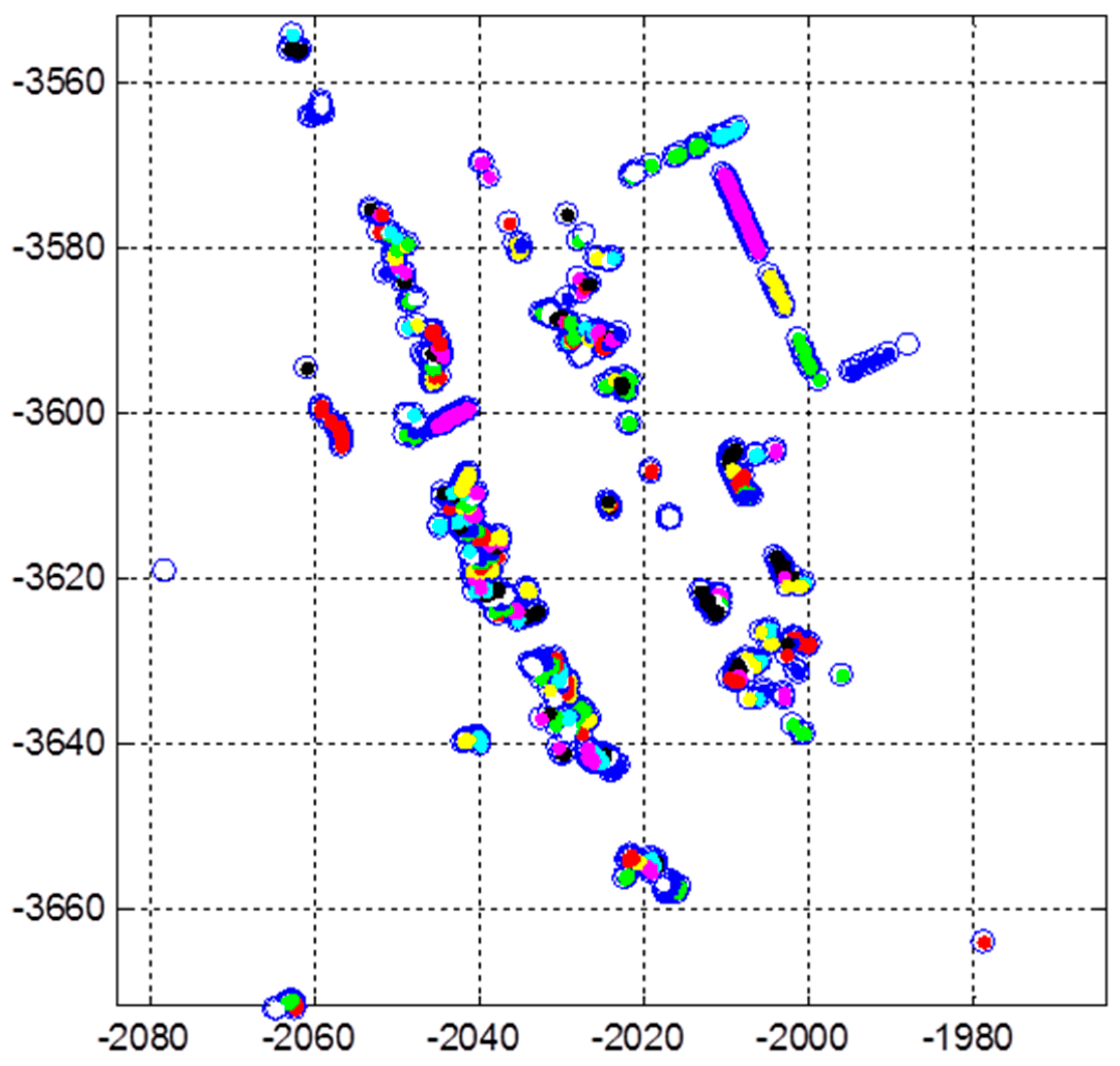

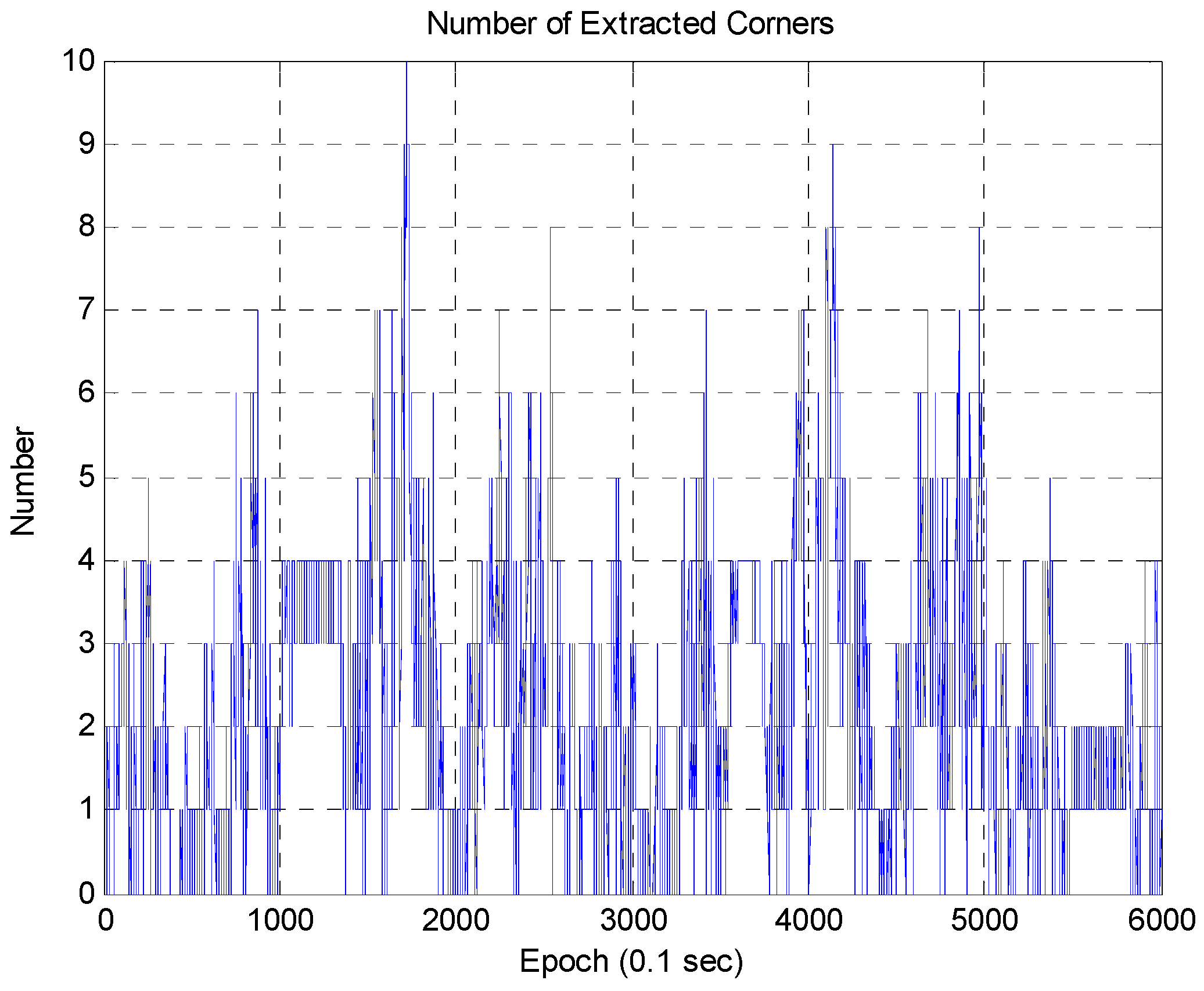

2.2.5. Corner Extraction Result

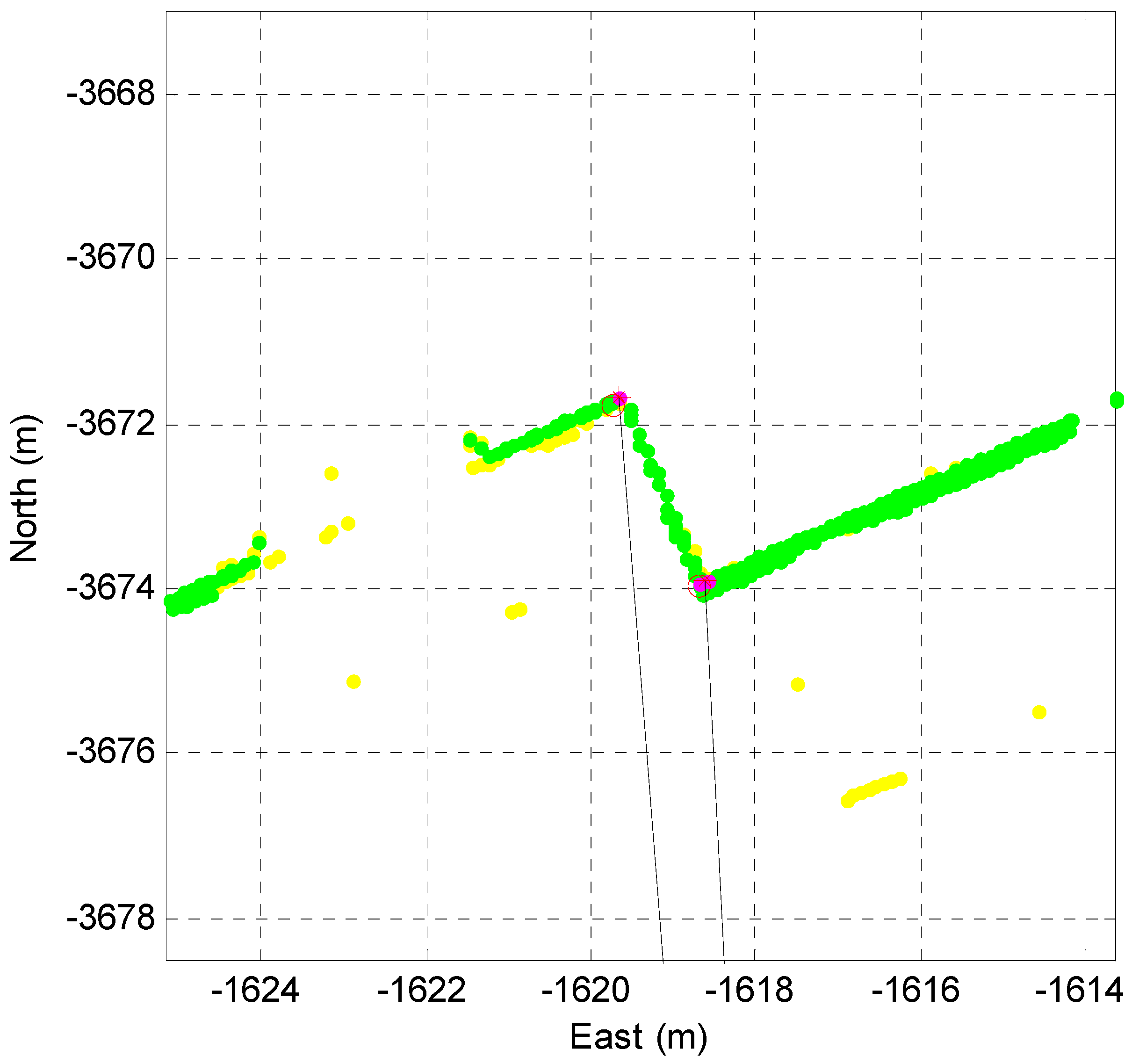

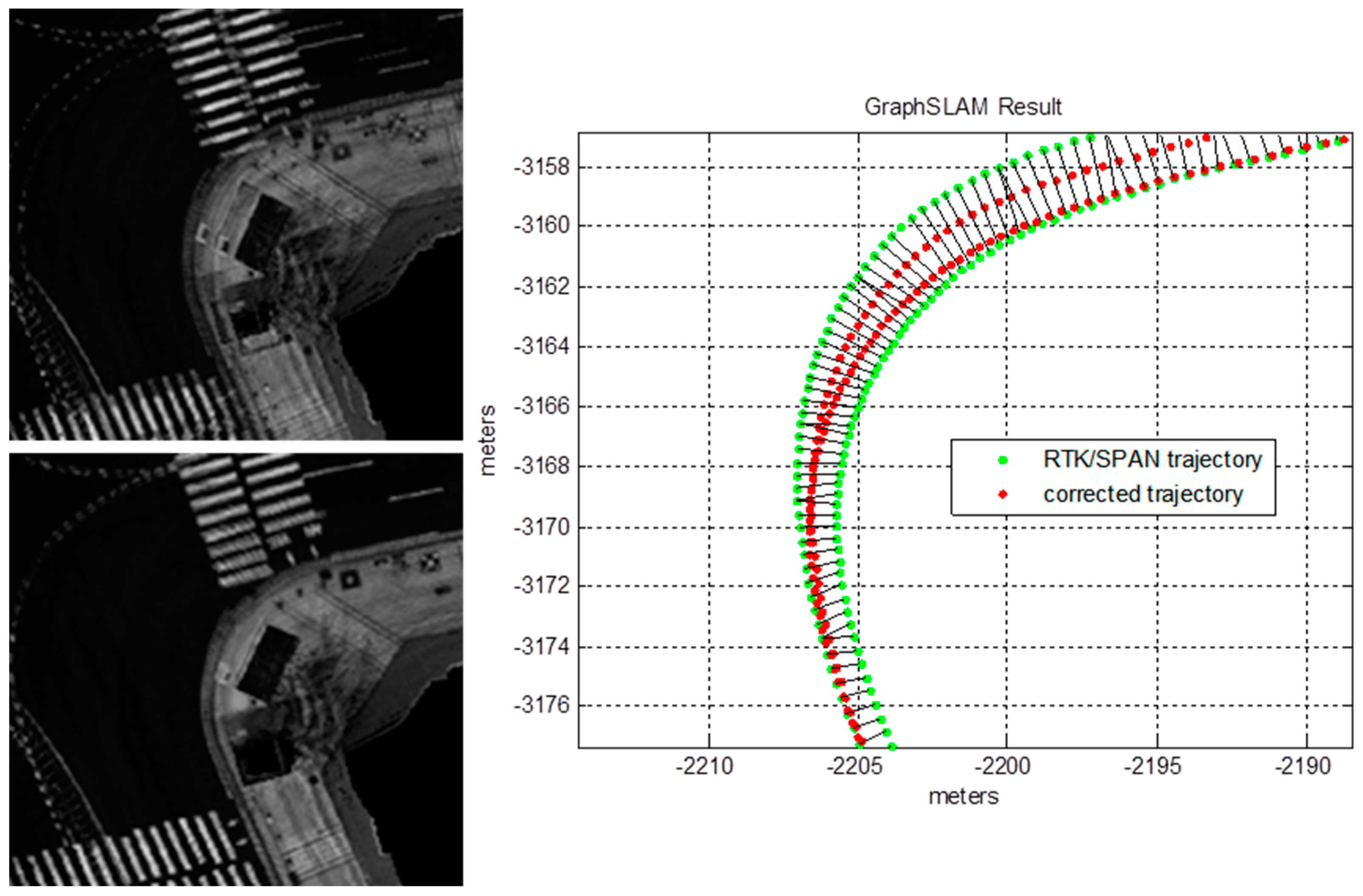

3. Corner Map Generation

3.1. Corner Map Definition

3.2. Corner Map Generation

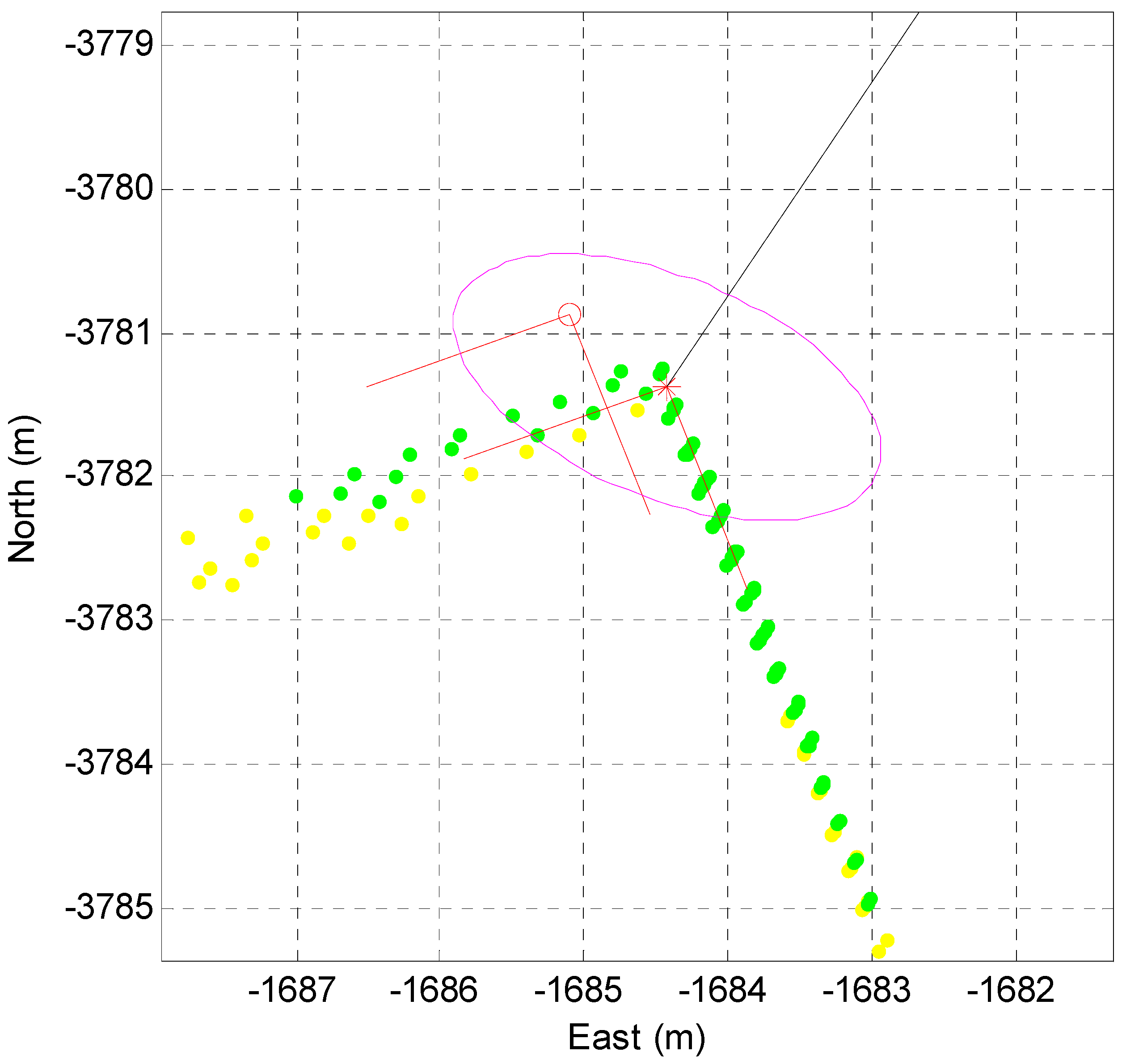

3.3. Data Association with the Corner Map

4. Kalman Filter Configuration and Observability Analysis

4.1. Motion Update

4.2. Measurement Update

4.3. Observability Analysis

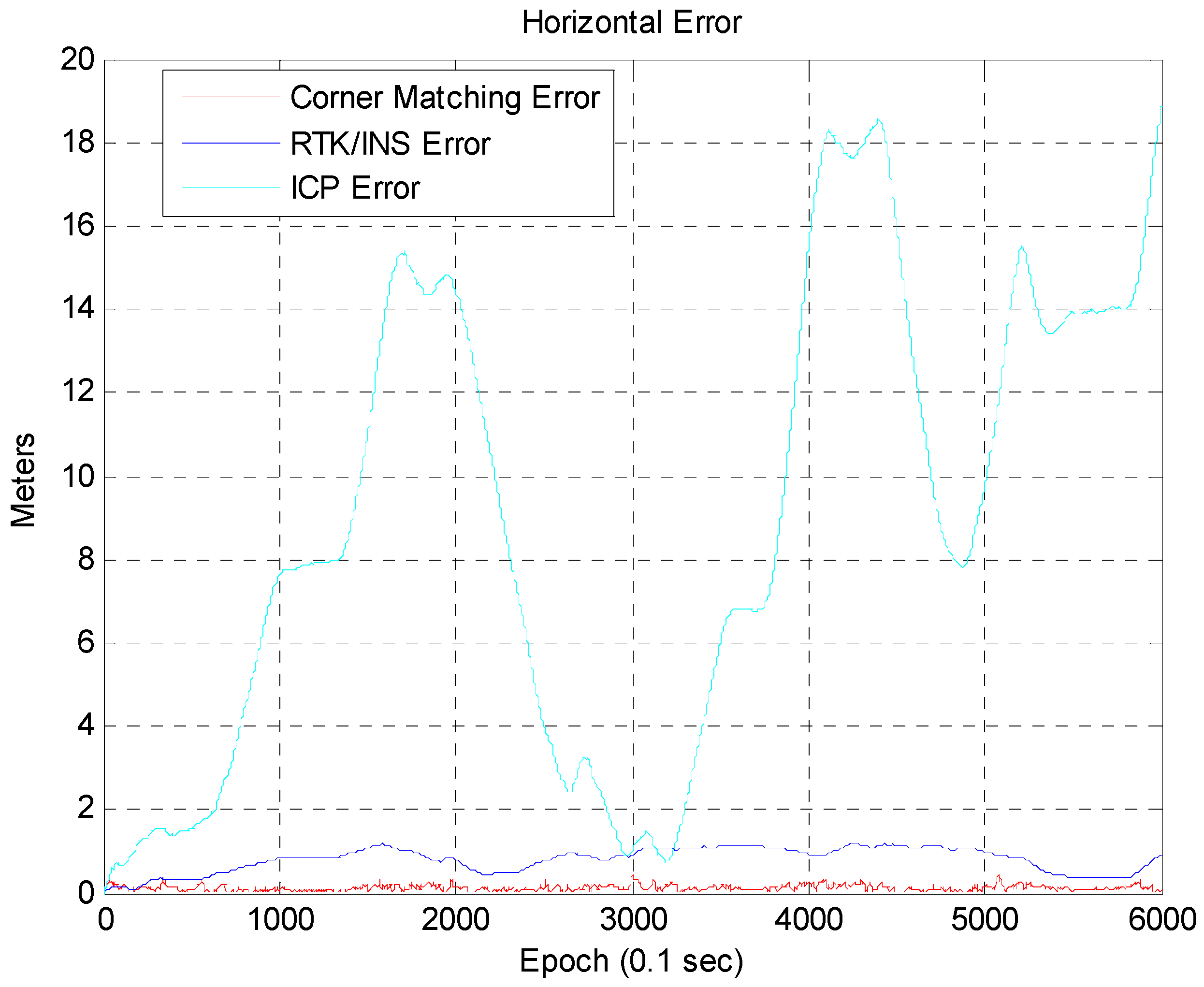

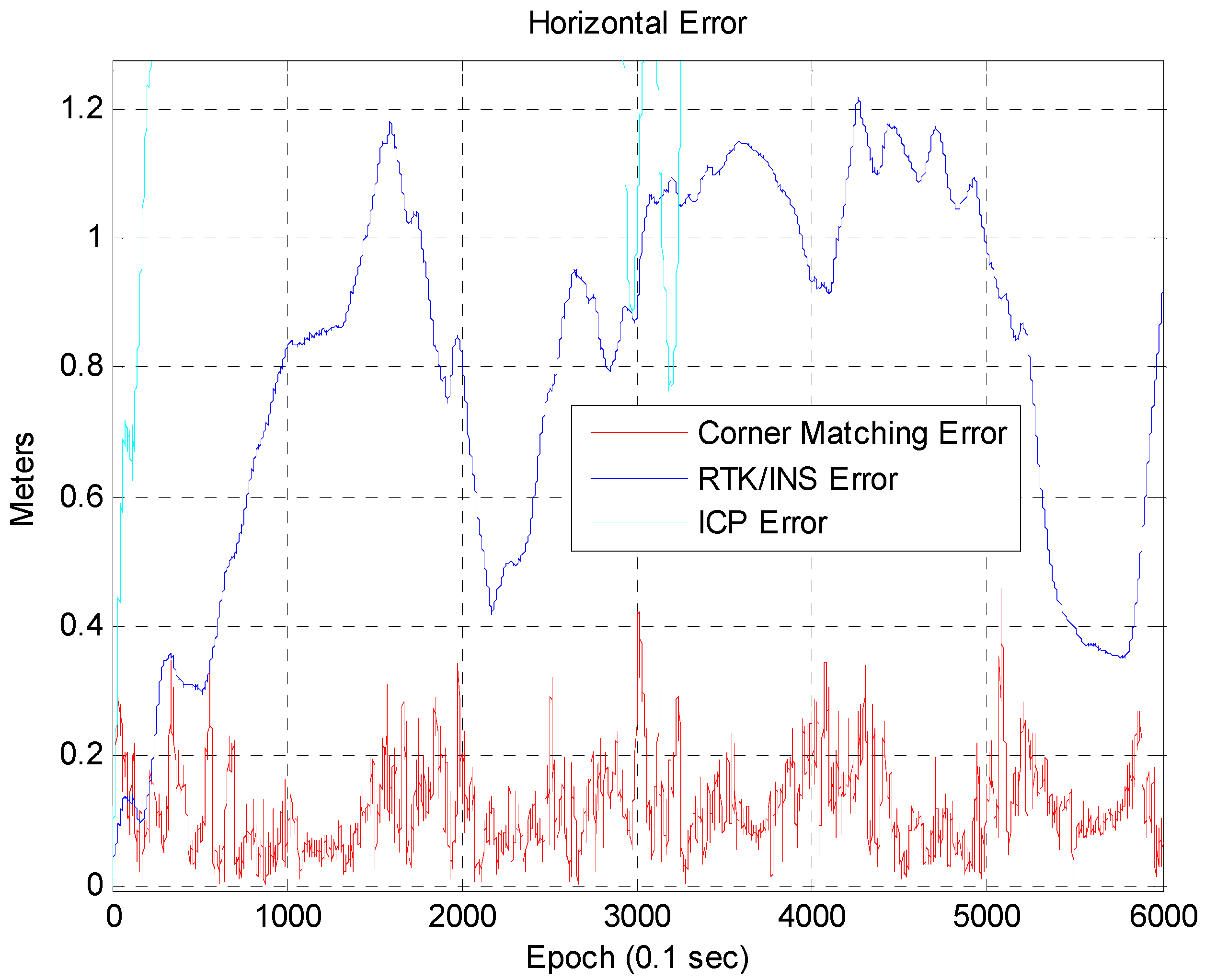

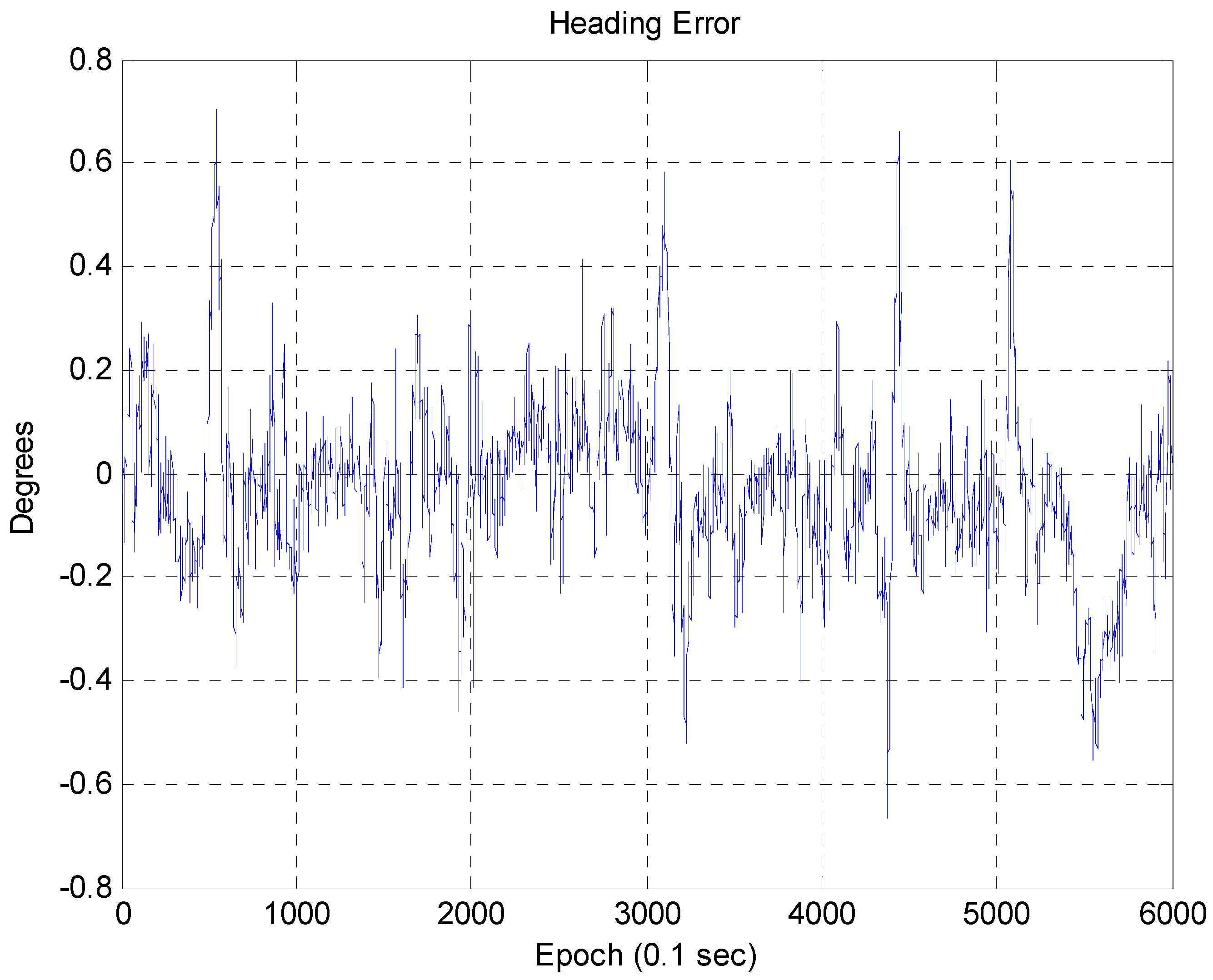

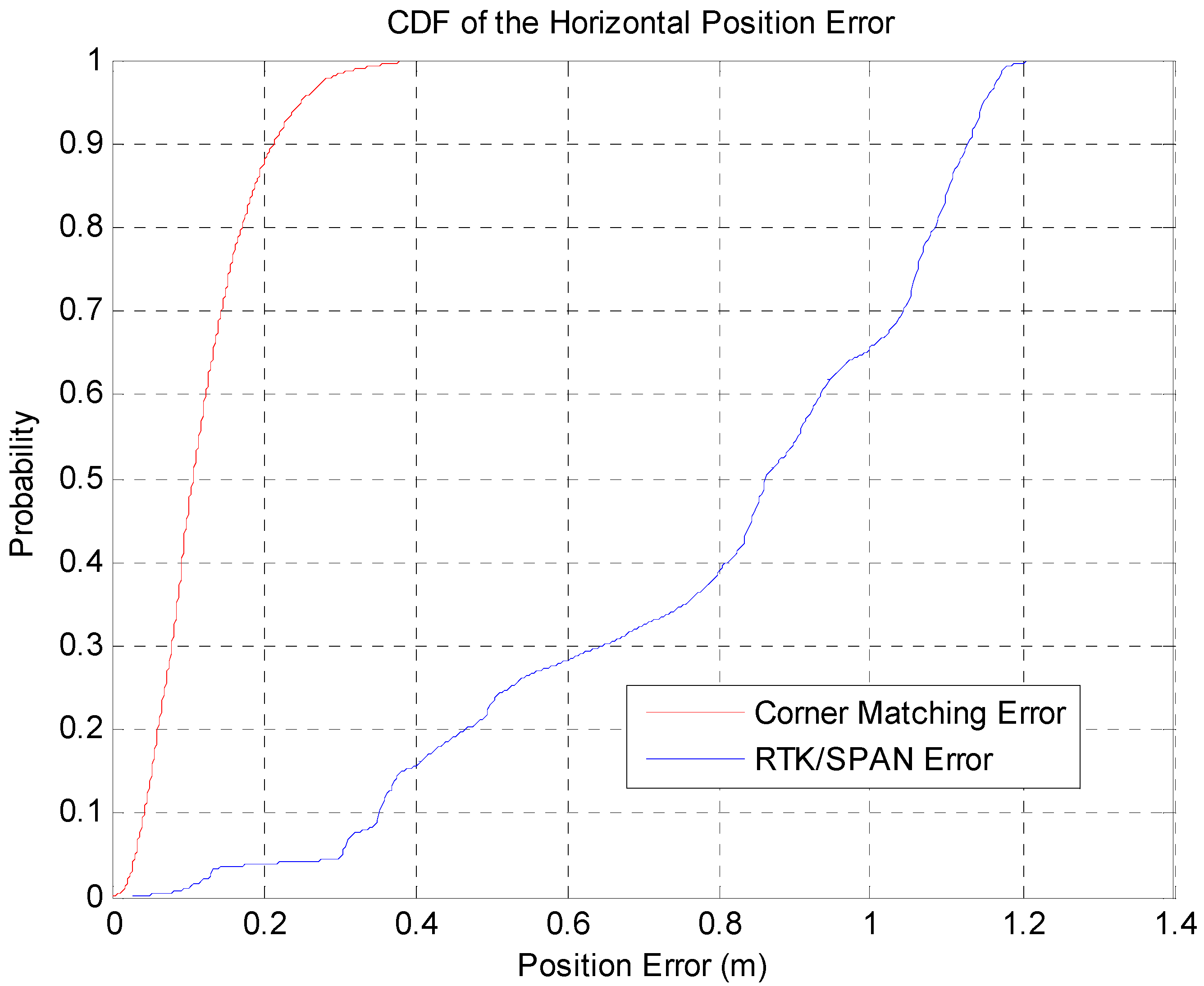

5. Experimental Results

6. Conclusions

Author Contributions

Conflicts of Interest


| Index | East (m) | North (m) | Direction Angle 1 (deg) | Direction Angle 2 (deg) | Covariance (1,1) | Covariance (1,2) | Covariance (2,1) | Covariance (2,2) |
|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 10 | 132 | 45 | 0.0021 | 0.0009 | 0.0009 | 0.0029 |
| 2 | 20 | 30 | −13 | −13 | 0.0042 | −0.001 | −0.001 | 0.0015 |
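The table above lists example corner map entries: each corner stores an East/North position, two direction angles, and a 2 × 2 covariance matrix. The Python sketch below shows one possible way to hold such entries and to check that each stored covariance is a valid (symmetric, positive-definite) matrix. The class and field names, the assumption that the covariance describes the (East, North) position, and the Mahalanobis gating in `associate` with its hypothetical threshold of 3.0 are illustrative assumptions, not the authors' data association procedure.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CornerEntry:
    """One row of the corner map table (field names are hypothetical)."""
    index: int
    east_m: float
    north_m: float
    direction_angle_1_deg: float
    direction_angle_2_deg: float
    cov: tuple  # ((c11, c12), (c21, c22)), row-major as listed in the table

    def covariance_is_valid(self) -> bool:
        (a, b), (c, d) = self.cov
        # A symmetric 2x2 matrix is positive definite iff a > 0 and det > 0.
        return math.isclose(b, c) and a > 0 and (a * d - b * c) > 0

    def mahalanobis(self, east_m: float, north_m: float) -> float:
        """Distance of a point from this corner, ASSUMING the covariance
        refers to the (East, North) position of the corner."""
        (a, b), (c, d) = self.cov
        det = a * d - b * c
        de, dn = east_m - self.east_m, north_m - self.north_m
        # Inverse of [[a, b], [c, d]] is (1/det) * [[d, -b], [-c, a]].
        q = (d * de * de - (b + c) * de * dn + a * dn * dn) / det
        return math.sqrt(q)


# The two example rows from the table.
CORNER_MAP = [
    CornerEntry(1, 10.0, 10.0, 132.0, 45.0, ((0.0021, 0.0009), (0.0009, 0.0029))),
    CornerEntry(2, 20.0, 30.0, -13.0, -13.0, ((0.0042, -0.001), (-0.001, 0.0015))),
]


def associate(east_m: float, north_m: float, gate: float = 3.0):
    """Return the map corner closest in Mahalanobis distance, or None if
    no corner falls inside the hypothetical gate."""
    best = min(CORNER_MAP, key=lambda e: e.mahalanobis(east_m, north_m))
    return best if best.mahalanobis(east_m, north_m) <= gate else None
```

For example, `associate(10.01, 10.0)` returns the entry with index 1, while a point far from both corners, such as `associate(15.0, 20.0)`, returns `None`. A Mahalanobis gate is used here only because it makes direct use of the stored covariance; the method of Section 3.3 may associate corners differently.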

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Im, J.-H.; Im, S.-H.; Jee, G.-I. Vertical Corner Feature Based Precise Vehicle Localization Using 3D LIDAR in Urban Area. Sensors 2016, 16, 1268. https://doi.org/10.3390/s16081268
