Mobility-Aware Caching and Computation Offloading in 5G Ultra-Dense Cellular Networks

Abstract

1. Introduction

- We propose a novel caching placement strategy named MS caching, and we discuss how user mobility and SBS density affect content caching.

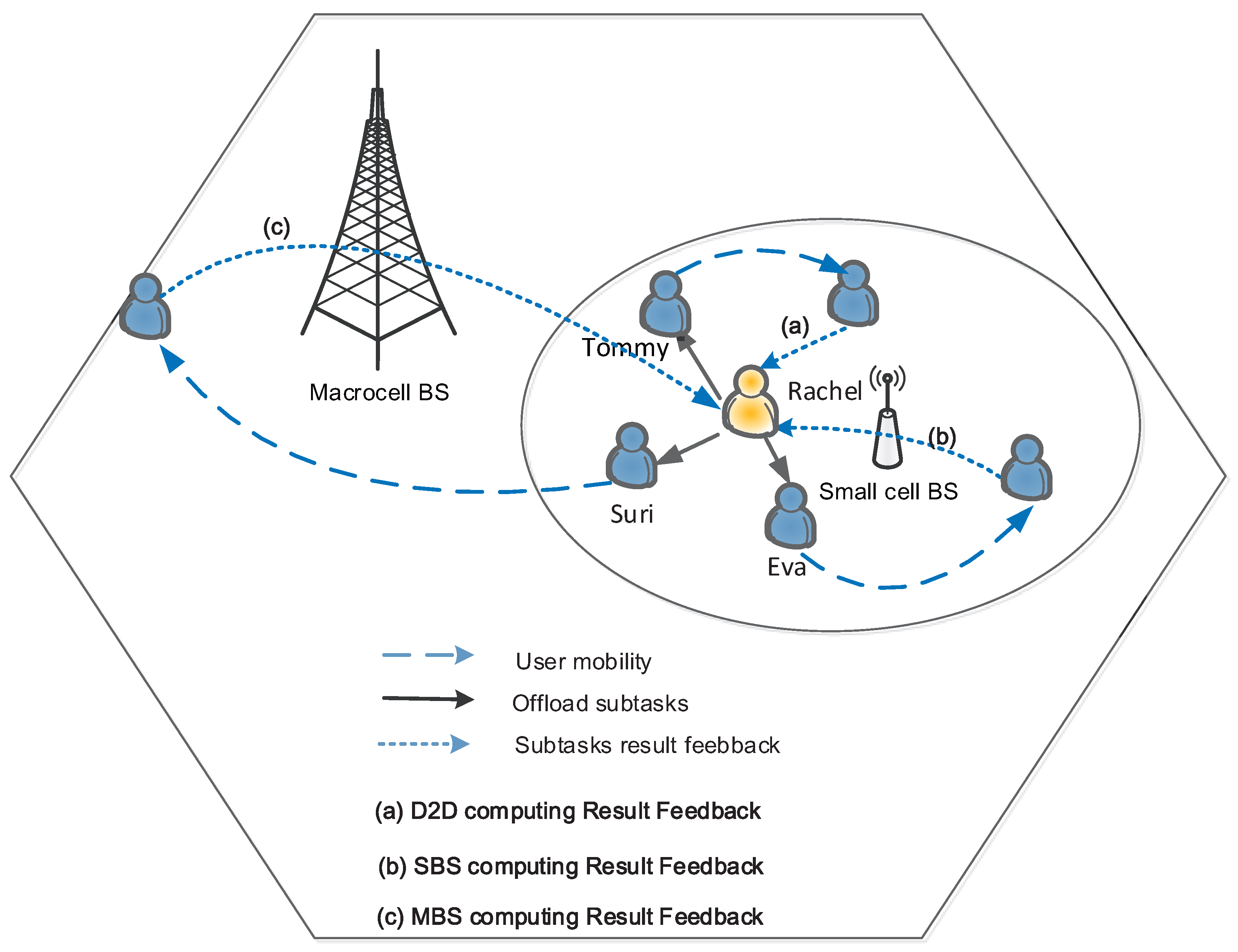

- We discuss the differences and relationships between caching and computation offloading, and we present a hybrid computation offloading scheme that combines MBS computation offloading, SBS computation offloading, and D2D computation offloading.

- Considering the selfishness of mobile users, we propose an incentive design that accounts for network dynamics, differentiated user QoE, and the heterogeneity of user terminals in terms of caching and computing.

2. Caching in 5G Ultra-Dense Cellular Networks

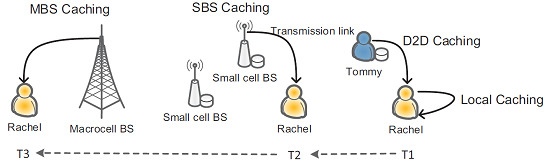

- Local caching: When a user requests content, the device first checks whether the content is cached locally. If it is, the user accesses it immediately, without any transmission delay.

- D2D caching: If the requested content is not cached locally, the user searches for it among the devices within D2D communication range. If one of these devices has cached the content, the content is transmitted to the requesting user via D2D communication.

- SBS caching: Besides D2D caching, if the requested content is cached at an SBS, the SBS transmits it to the user.

- MBS caching: If the requested content cannot be obtained in any of the above ways, the request is forwarded to the MBS, which delivers the content to the user over the cellular network.
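The four-tier lookup above behaves like a fallback chain. The sketch below is a minimal illustration, not the authors' implementation: the `Node` class, the `fetch` function, and the assumption that the peer and SBS lists passed in already contain only nodes currently within communication range are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical cache holder: the user's own device, a D2D peer, or an SBS."""
    name: str
    cache: set = field(default_factory=set)


def fetch(content_id, local, d2d_peers, sbss):
    """Return (tier, node name) that serves `content_id`, trying
    local -> D2D -> SBS -> MBS in that order.

    Assumption: `d2d_peers` and `sbss` hold only nodes currently in range.
    """
    if content_id in local.cache:           # local hit, no transmission needed
        return ("local", local.name)
    for peer in d2d_peers:                  # nearby devices via D2D links
        if content_id in peer.cache:
            return ("D2D", peer.name)
    for sbs in sbss:                        # small base stations in range
        if content_id in sbs.cache:
            return ("SBS", sbs.name)
    # Assumed: the MBS can always retrieve the content over the cellular network.
    return ("MBS", "MBS")


if __name__ == "__main__":
    me = Node("UE0", {"a"})
    peers = [Node("UE1", {"b"}), Node("UE2", {"c"})]
    cells = [Node("SBS1", {"c", "d"})]
    for f in ["a", "b", "c", "d", "e"]:
        print(f, fetch(f, me, peers, cells))
```

Because D2D peers are checked before SBSs, a file cached both on a nearby device and at an SBS (file `c` above) is served over D2D.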

2.1. System Model

2.2. Simulation Results and Discussions

- Popular caching: The popular caching strategies for SBSs and for user devices are as follows: (1) caching strategy on SBSs: each SBS stores the most popular contents; (2) caching strategy on mobile devices: each mobile device caches the most popular contents.

- Random caching: The random caching strategies for SBSs and for user devices are as follows: (1) caching strategy on SBSs: each SBS stores contents chosen at random; (2) caching strategy on mobile devices: each mobile device caches contents chosen at random.
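To make the two baselines concrete, the sketch below compares them for a single cache. It assumes a Zipf request distribution with exponent `gamma` and a cache holding `capacity` files; these parameters and all function names are illustrative assumptions, not values taken from the paper. The hit probability of a placement is the total request probability of the files it stores.

```python
import random


def zipf_popularity(n_files, gamma):
    """Assumed Zipf request probabilities: p_i proportional to 1 / i**gamma (i = 1..n)."""
    weights = [1.0 / (i ** gamma) for i in range(1, n_files + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def popular_placement(n_files, capacity):
    """Popular caching: keep the `capacity` most popular files (indices 0..capacity-1)."""
    return set(range(min(capacity, n_files)))


def random_placement(n_files, capacity, rng):
    """Random caching: keep `capacity` distinct files chosen uniformly at random."""
    return set(rng.sample(range(n_files), min(capacity, n_files)))


def hit_probability(placement, popularity):
    """Probability that one request is served from this cache."""
    return sum(popularity[i] for i in placement)


if __name__ == "__main__":
    N, C = 100, 10
    pop = zipf_popularity(N, gamma=0.8)
    rng = random.Random(1)
    print("popular:", round(hit_probability(popular_placement(N, C), pop), 3))
    trials = [hit_probability(random_placement(N, C, rng), pop) for _ in range(1000)]
    print("random (mean):", round(sum(trials) / len(trials), 3))
```

For a single cache, popular placement is the best possible choice of `capacity` files, while random placement averages `capacity / n_files`. With several overlapping caches, however, storing the same popular files everywhere is redundant, which is one reason placements that account for SBS density and mobility can do better.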

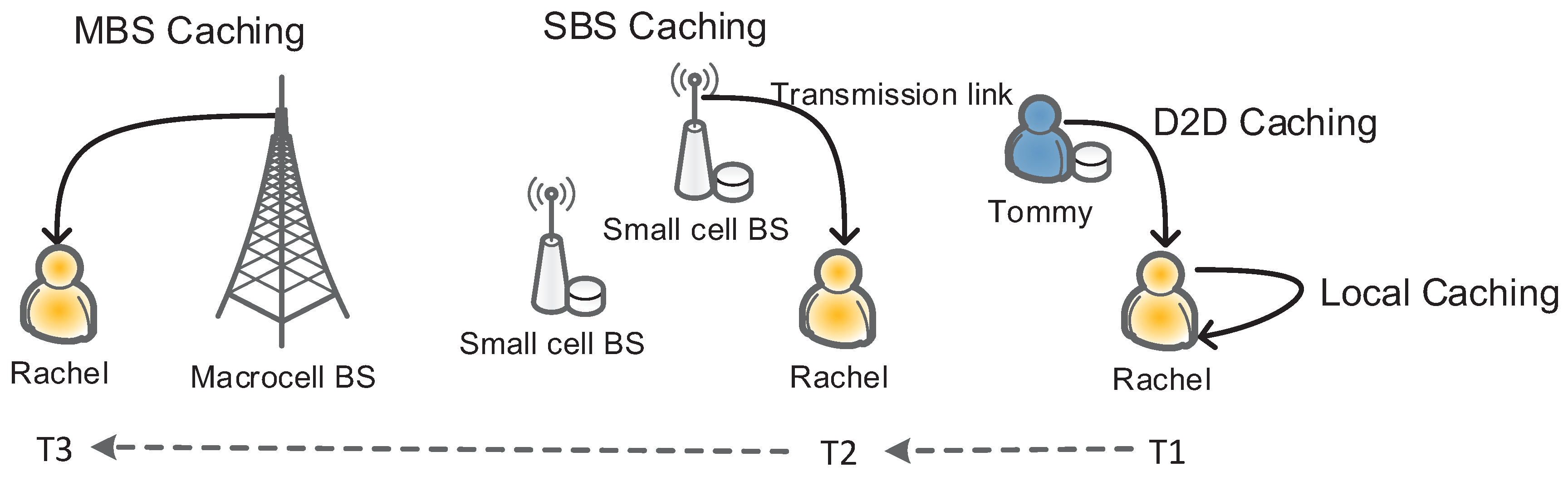

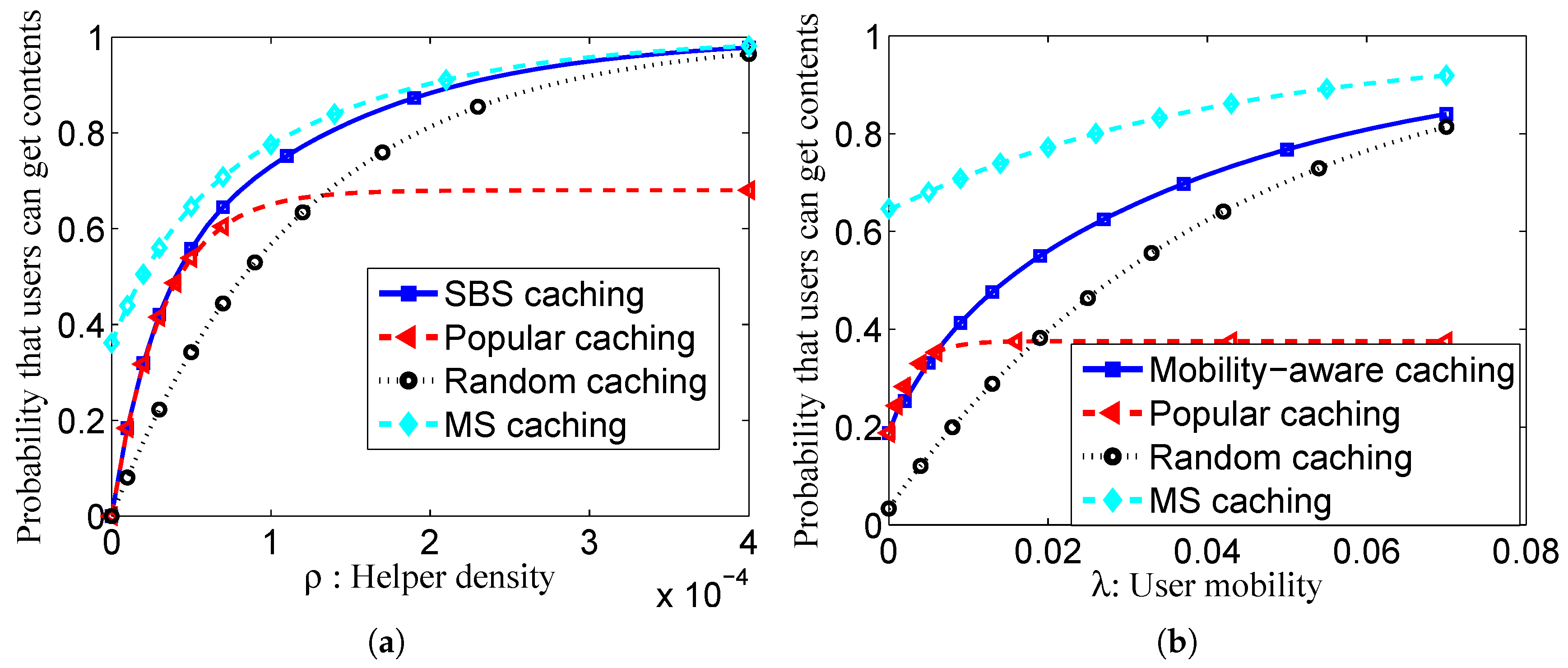

- SBS density-aware caching placement: We characterize the relationship between the SBS density and the probability that a user obtains the requested content. The SBS density-aware caching placement is compared with the popular caching and random caching strategies in Figure 2a. When only SBS caching is considered, the SBS density-aware placement achieves a higher offloading probability than both popular caching and random caching.

- Mobility-aware caching placement: User mobility strongly affects the probability that a user can access the requested content. Let λ denote the average contact rate between user devices. Analogous to the SBS density-aware case, Figure 2b compares mobility-aware caching with popular caching and random caching. As shown in Figure 2b, the mobility-aware placement outperforms both random caching placement and popular caching placement.

- MS caching placement: Taking both user mobility and SBS density into account yields a more advanced caching strategy, named MS caching placement. Figure 2a,b compares the proposed MS caching placement with the other strategies. Because it considers both the SBS density and user mobility, MS caching placement achieves the highest probability that users obtain the requested contents.
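This section does not state the underlying model, so the following is only an illustrative sketch under an assumed model, not the authors' MS caching algorithm. Assume that (i) a user meets other devices as a Poisson process with rate λ (`contact_rate`) during a window of length `T`; (ii) SBSs form a Poisson point process with density λ_s (`sbs_density`), and a user can reach every SBS within radius `r`; and (iii) each device and each SBS independently caches file i with probability p_i and q_i. By Poisson thinning, the numbers of reachable devices and SBSs holding file i are Poisson with means λT·p_i and λ_s·π·r²·q_i, so the user obtains file i with probability P_i = 1 - exp(-(λT·p_i + λ_s·π·r²·q_i)), and a request drawn with popularity f_i succeeds with probability Σ_i f_i·P_i. The `greedy_placement` function is a hypothetical heuristic that spends each tier's cache budget in small steps on the (tier, file) pair with the largest marginal gain, so its placement reacts to both λ and λ_s.

```python
import math


def offload_prob(f, p, q, contact_rate, T, sbs_density, r):
    """ASSUMED model: sum_i f_i * (1 - exp(-(lam*T*p_i + lam_s*pi*r^2*q_i)))."""
    a = contact_rate * T               # mean number of D2D contacts in the window
    b = sbs_density * math.pi * r * r  # mean number of SBSs within range r
    return sum(fi * (1.0 - math.exp(-(a * pv + b * qv)))
               for fi, pv, qv in zip(f, p, q))


def greedy_placement(f, cap_dev, cap_sbs, contact_rate, T, sbs_density, r, step=0.05):
    """Hypothetical heuristic: repeatedly add `step` of caching probability to the
    (tier, file) pair with the largest marginal gain. sum(p) <= cap_dev,
    sum(q) <= cap_sbs, and every p_i, q_i stays in [0, 1]."""
    n = len(f)
    rate = {"dev": contact_rate * T, "sbs": sbs_density * math.pi * r * r}
    budget = {"dev": round(cap_dev / step), "sbs": round(cap_sbs / step)}
    per_file = round(1 / step)             # step count that makes a probability 1
    k = {"dev": [0] * n, "sbs": [0] * n}   # steps allocated per tier and file
    while True:
        best, best_gain = None, 0.0
        for tier in ("dev", "sbs"):
            if budget[tier] == 0:
                continue
            for i in range(n):
                if k[tier][i] == per_file:
                    continue
                x = step * (rate["dev"] * k["dev"][i] + rate["sbs"] * k["sbs"][i])
                # exact gain: f_i * (exp(-x) - exp(-x - rate[tier] * step))
                gain = f[i] * math.exp(-x) * (1.0 - math.exp(-rate[tier] * step))
                if gain > best_gain:
                    best, best_gain = (tier, i), gain
        if best is None:  # budgets exhausted or no positive gain left
            break
        tier, i = best
        k[tier][i] += 1
        budget[tier] -= 1
    return [s * step for s in k["dev"]], [s * step for s in k["sbs"]]


if __name__ == "__main__":
    N, C_DEV, C_SBS = 20, 2, 4
    w = [1 / i ** 0.8 for i in range(1, N + 1)]   # assumed Zipf(0.8) popularity
    total = sum(w)
    f = [x / total for x in w]
    env = dict(contact_rate=0.5, T=2.0, sbs_density=0.3, r=1.0)
    placements = {
        "popular": ([1.0] * C_DEV + [0.0] * (N - C_DEV),
                    [1.0] * C_SBS + [0.0] * (N - C_SBS)),
        # random caching represented by uniform caching probabilities
        "random": ([C_DEV / N] * N, [C_SBS / N] * N),
        "greedy": greedy_placement(f, C_DEV, C_SBS, **env),
    }
    for name, (p, q) in placements.items():
        print(name, round(offload_prob(f, p, q, **env), 3))
```

In this toy model, a larger `contact_rate` or `sbs_density` never lowers the success probability of a fixed placement. When `sbs_density` is 0, the objective is a sum of concave per-file terms under one budget, and step-wise greedy allocation is then optimal among placements on the `step` grid; with both tiers active it is only a heuristic.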

3. Computation Offloading in 5G Ultra-Dense Cellular Networks

3.1. Caching vs. Computation Offloading

3.2. Computation Offloading

- MBS computation offloading [39]: A user offloads the computation task to an MBS over a cellular link. In mobile cloud computing, where the computation is performed in the cloud, the results are returned from the cloud to the user via the MBS.

- SBS computation offloading [37]: The computation task is offloaded to an SBS. After the SBS completes the computation, it returns the results to the user.

- D2D computation offloading [38]: A user terminal offloads the computation task over a D2D link to other mobile devices within D2D range. When the task is completed, the results can be transmitted back to the user terminal, provided that those devices are still within D2D communication range.

- D2D computing result feedback: After the service node finishes processing the task, it returns the results directly to the computation node (the user that offloaded the task) if the two nodes are still within D2D communication range.

- SBS computing result feedback: If the computation node has moved out of D2D range when the task is completed, the service node uploads the results to the SBS. The SBS then transmits the results to the user if the computation node is within its communication range.

- MBS computing result feedback: If the results still have not reached the user by the deadline, i.e., the user has not come within the communication range of the SBS, the SBS uploads the results to the MBS, which then delivers them to the user.
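The three-stage result feedback described above can be sketched as a decision over a discretized mobility trace of the computation node. The trace format, the fixed positions of the service node and the SBS, the circular ranges, the slot-based `deadline`, and the assumption that the service node can always reach the SBS when uploading are illustrative choices, not details from the paper.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def feedback_path(user_track: Sequence[Point], service_node: Point, sbs: Point,
                  d2d_range: float, sbs_range: float,
                  deadline: int) -> Tuple[str, Optional[int]]:
    """Decide how results reach the computation node (the offloading user).

    user_track[t] is the user's position in slot t; slot 0 is the slot in which
    the service node finishes the task. Returns (path, delivery slot or None).
    """
    # Stage 1: direct D2D feedback while the two nodes are still within D2D range.
    if math.dist(user_track[0], service_node) <= d2d_range:
        return ("D2D", 0)
    # Stage 2: the service node uploads the results to the SBS (assumed reachable);
    # the SBS delivers them once the user is inside its coverage, up to the deadline.
    last_slot = min(deadline, len(user_track) - 1)
    for t in range(last_slot + 1):
        if math.dist(user_track[t], sbs) <= sbs_range:
            return ("SBS", t)
    # Stage 3: deadline missed, so the SBS forwards the results to the MBS,
    # which delivers them over the cellular network.
    return ("MBS", None)


if __name__ == "__main__":
    track = [(0, 0), (30, 0), (60, 0), (90, 0)]  # user moving along the x-axis
    print(feedback_path(track, (10, 0), (100, 0), 20, 15, deadline=3))  # ('D2D', 0)
    print(feedback_path(track, (50, 0), (100, 0), 20, 15, deadline=3))  # ('SBS', 3)
    print(feedback_path(track, (50, 0), (100, 0), 20, 15, deadline=2))  # ('MBS', None)
```

The MBS acts as the last resort: it is used only when neither a direct D2D link nor an SBS encounter delivers the results in time.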

4. Incentive Design for Caching and Computation Offloading

- Caching incentive: When user B obtains content from user A, B pays A an incentive (e.g., virtual money). This incentive covers three aspects: the cost of D2D communication between A and B, the cost of storing the content, and the value of the content from user A's perspective. User A receives this incentive. Consequently, the caching incentive depends on the popularity of the content, the number of times users download it, and the caching time, all of which relate to these three aspects.

- Computing incentive: When user A offloads a computing task to user B and B performs the computation on A's behalf, A pays B an incentive covering the communication cost and the computing cost, which B receives. These costs are relatively high because the computation result is equivalent to content whose popularity is zero: it serves only user A, so its cost cannot be shared among other requesters.
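The argument that computing incentives are relatively expensive can be illustrated with a hypothetical linear pricing rule: each requester pays its own D2D communication cost plus an equal share of the helper's one-off cost (storing a cached file for the caching time, or executing an offloaded task). The expected number of requesters for a cached file is taken as popularity × request rate × caching time. The pricing rule, all parameter names, and the omission of the content-value term (whose exact role the text does not specify) are assumptions for illustration only.

```python
def expected_requesters(popularity, requests_per_slot, caching_slots):
    """Expected users who fetch a cached item while it is stored (hypothetical);
    at least the one user who triggered the transaction."""
    return max(1.0, popularity * requests_per_slot * caching_slots)


def caching_incentive(comm_cost, storage_cost_per_slot, caching_slots,
                      popularity, requests_per_slot):
    """Per-requester payment for cached content: own D2D cost plus a share
    of the storage cost, split among the expected requesters."""
    shared = storage_cost_per_slot * caching_slots
    n = expected_requesters(popularity, requests_per_slot, caching_slots)
    return comm_cost + shared / n


def computing_incentive(comm_cost, compute_cost):
    """Per-requester payment for an offloaded task: the result has popularity 0,
    so the single requester bears the whole computing cost."""
    return comm_cost + compute_cost


if __name__ == "__main__":
    print(caching_incentive(1.0, 0.5, 10, popularity=0.2, requests_per_slot=5))  # 1.5
    print(computing_incentive(1.0, 5.0))                                         # 6.0
```

With the same one-off cost of 5 units, a popular cached file with 10 expected requesters costs each of them 1.5 units, while a computation result leaves its single requester paying 6 units; setting `popularity=0` in `caching_incentive` reproduces the computing case.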

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Zhang, Y.; Chen, M.; Mao, S.; Hu, L.; Leung, V.C. CAP: Crowd Activity Prediction Based on Big Data Analysis. IEEE Netw. 2014, 28, 52–57.

- Peng, L.; Youn, C.H.; Tang, W.; Qiao, C. A Novel Approach to Optical Switching for Intra-Datacenter Networking. J. Lightwave Technol. 2012, 30, 252–266.

- Fortino, G.; Russo, W.; Vaccaro, M. An agent-based approach for the design and analysis of content delivery networks. J. Netw. Comput. Appl. 2014, 37, 127–145.

- Fortino, G.; Russo, W. Using P2P, GRID and Agent technologies for the development of content distribution networks. Future Gener. Comp. Syst. 2008, 24, 180–190.

- Li, J.; Qiu, M.; Ming, Z.; Quan, G.; Qin, X.; Gu, Z. Online Optimization for Scheduling Preemptable tasks on IaaS Cloud systems. J. Parallel Distrib. Comput. 2012, 72, 666–677.

- Li, J.; Ming, Z.; Qiu, M.; Quan, G.; Qin, X.; Chen, T. Resource Allocation Robustness in Multi-Core Embedded Systems with Inaccurate Information. J. Syst. Archit. 2011, 57, 840–849.

- Ge, X.; Tu, S.; Mao, G.; Wang, C.X.; Han, T. 5G ultra-dense cellular networks. IEEE Wirel. Commun. 2016, 23, 72–79.

- Volk, M.; Sterle, J.; Sedlar, U.; Kos, A. An approach to modeling and control of QoE in next generation networks. IEEE Commun. Mag. 2010, 48, 126–135.

- Lin, K.; Wang, W.; Wang, X.; Ji, W.; Wan, J. QoE-Driven Spectrum Assignment for 5G Wireless Networks using SDR. IEEE Wirel. Commun. 2015, 22, 48–55.

- Hossain, M.S.; Muhammad, G.; Alhamid, M.F.; Song, B.; Almutib, K. Audio-visual emotion recognition using big data towards 5G. Mob. Netw. Appl. 2016, 1–11.

- Zheng, K.; Zhang, X.; Zheng, Q.; Xiang, W.; Hanzo, L. Quality-of-experience assessment and its application to video services in LTE networks. IEEE Wirel. Commun. 2015, 1, 70–78.

- Sterle, J.; Sedlar, U.; Rugelj, M.; Kos, A.; Volk, M. Application-driven OAM framework for heterogeneous IoT environments. Int. J. Distrib. Sens. Netw. 2016, 2016.

- Sedlar, U.; Rugelj, M.; Volk, M.; Sterle, J. Deploying and managing a network of autonomous internet measurement probes: Lessons learned. Int. J. Distrib. Sens. Netw. 2015, 2015.

- Zhang, Y.; Qiu, M.; Tsai, C.; Hassan, M.M.; Alamri, A. Health-CPS: Healthcare Cyber-Physical System Assisted by Cloud and Big Data. IEEE Syst. J. 2015, 1–8.

- Qiu, M.; Sha, E.H.-M. Cost Minimization while Satisfying Hard/Soft Timing Constraints for Heterogeneous Embedded Systems. ACM Trans. Des. Autom. Electron. Syst. 2009, 14.

- Wang, X.; Chen, M.; Taleb, T.; Ksentini, A.; Leung, V.C.M. Cache in the Air: Exploiting Content Caching and Delivery Techniques for 5G Systems. IEEE Commun. Mag. 2014, 52, 131–139.

- Zheng, K.; Hou, L.; Meng, H.; Zheng, Q.; Lu, N.; Lei, L. Soft-Defined Heterogeneous Vehicular Network: Architecture and Challenges. IEEE Netw. 2015. arXiv:1510.06579.

- Lin, K.; Xu, T.; Song, J.; Qian, Y.; Sun, Y. Node Scheduling for All-directional Intrusion Detection in SDR-based 3D WSNs. IEEE Sens. J. 2016.

- Shanmugam, K.; Golrezaei, N.; Dimakis, A.; Molisch, A.; Caire, G. Femtocaching: Wireless content delivery through distributed caching helpers. IEEE Trans. Inf. Theory 2013, 59, 8402–8413.

- Golrezaei, N.; Mansourifard, P.; Molisch, A.F.; Dimakis, A.G. Base-station assisted device-to-device communications for high-throughput wireless video networks. IEEE Trans. Wirel. Commun. 2014, 13, 3665–3676.

- Song, J.; Song, H.; Choi, W. Optimal caching placement of caching system with helpers. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015.

- Lei, L.; Kuang, Y.; Cheng, N.; Shen, X.; Zhong, Z.; Lin, C. Delay-Optimal Dynamic Mode Selection and Resource Allocation in Device-to-Device Communications. IEEE Trans. Veh. Technol. 2015, 65, 3474–3490.

- Zheng, K.; Meng, H.; Chatzimisios, P.; Lei, L.; Shen, X. An SMDP-Based Resource Allocation in Vehicular Cloud Computing Systems. IEEE Trans. Ind. Electron. 2015, 12, 7920–7928.

- Lin, K.; Chen, M.; Deng, J.; Hassan, M.; Fortino, G. Enhanced Fingerprinting and Trajectory Prediction for IoT Localization in Smart Buildings. IEEE Trans. Autom. Sci. Eng. 2016, 1–14.

- Ji, M.; Caire, G.; Molisch, A.F. Wireless device-to-device caching networks: Basic principles and system performance. IEEE J. Sel. Areas Commun. 2016, 34, 176–189.

- Lin, K.; Song, J.; Luo, J.; Ji, W.; Hossain, M.; Ghoneim, A. GVT: Green Video Transmission in the Mobile Cloud Networks. IEEE Trans. Circuits Syst. Video Technol. 2016.

- Malak, D.; Al-Shalash, M. Optimal caching for device-to-device content distribution in 5G networks. In Proceedings of the 2014 IEEE Globecom Workshops (GC Wkshps), Austin, TX, USA, 8–12 December 2014; pp. 863–868.

- Ji, M.; Caire, G.; Molisch, A. The throughput-outage tradeoff of wireless one-hop caching networks. IEEE Trans. Inf. Theory 2015, 61, 6833–6859.

- Ge, X.; Ye, J.; Yang, Y.; Li, Q. User Mobility Evaluation for 5G Small Cell Networks Based on Individual Mobility Model. IEEE J. Sel. Areas Commun. 2016, 34, 528–541.

- Chae, S.H.; Ryu, J.Y.; Quek, T.Q.S.; Choi, W. Cooperative transmission via caching helpers. In Proceedings of the 2015 IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015.

- Ge, X.; Yang, B.; Ye, J.; Mao, G.; Wang, C.-X.; Han, T. Spatial Spectrum and Energy Efficiency of Random Cellular Networks. IEEE Trans. Commun. 2015, 63, 1019–1030.

- Li, Y.; Wang, W. Can mobile cloudlets support mobile applications? In Proceedings of the 33rd Annual IEEE International Conference on Computer Communications (INFOCOM’14), Toronto, ON, Canada, 27 April–2 May 2014; pp. 1060–1068.

- Ahlehagh, H.; Dey, S. Video-aware scheduling and caching in the radio access network. IEEE/ACM Trans. Netw. 2014, 22, 1444–1462.

- Ge, X.; Tu, S.; Han, T.; Li, Q.; Mao, G. Energy Efficiency of Small Cell Backhaul Networks Based on Gauss-Markov Mobile Models. IET Netw. 2015, 4, 158–167.

- Passarella, A.; Conti, M. Analysis of Individual Pair and Aggregate Intercontact Times in Heterogeneous Opportunistic Networks. IEEE Trans. Mob. Comput. 2013, 12, 2483–2495.

- Zhang, Y.; Zhang, D.; Hassan, M.M.; Alamri, A.; Peng, L. CADRE: Cloud-Assisted Drug REcommendation Service for Online Pharmacies. ACM/Springer Mob. Netw. Appl. 2015, 20, 348–355.

- Chen, X.; Jiao, L.; Li, W.; Fu, X. Efficient Multi-User Computation Offloading for Mobile-Edge Cloud Computing. IEEE/ACM Trans. Netw. 2015.

- Chen, M.; Hao, Y.; Li, Y.; Lai, C.; Wu, D. On The Computation Offloading at Ad Hoc Cloudlet: Architecture and Service Models. IEEE Commun. Mag. 2015, 53, 18–24.

- Tong, L.; Li, Y.; Gao, W. A Hierarchical Edge Cloud Architecture for Mobile Computing. In Proceedings of the IEEE INFOCOM 2016—The 35th Annual IEEE International Conference on Computer Communications, San Francisco, CA, USA, 10–15 April 2016.

- Liu, Q.; Ma, Y.; Alhussein, M.; Zhang, Y.; Peng, L. Green Data Center with IoT Sensing and Cloud-assisted Smart Temperature Controlling System. Comput. Netw. 2016, 101, 104–112.

- Ge, X.; Huang, X.; Wang, Y.; Chen, M.; Li, Q.; Han, T.; Wang, C.-X. Energy Efficiency Optimization for MIMO-OFDM Mobile Multimedia Communication Systems with QoS Constraints. IEEE Trans. Veh. Technol. 2014, 63, 2127–2138.

| Caching | Computation Offloading |
|---|---|
| No feedback; content is cached and fetched one way. | The computation result must be fed back to the user. |
| The popularity of cached content is typically high. | The popularity of a cached computation result can be regarded as 0, since it usually serves only one particular user. |
| The shared storage space is relatively large. | The shared space for storing computation results is relatively small. |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, M.; Hao, Y.; Qiu, M.; Song, J.; Wu, D.; Humar, I. Mobility-Aware Caching and Computation Offloading in 5G Ultra-Dense Cellular Networks. Sensors 2016, 16, 974. https://doi.org/10.3390/s16070974
