Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors

Abstract

1. Introduction

- Using three classifiers, we evaluated three motion sensors at the wrist and pocket positions in various scenarios and showed how these sensors perform in recognizing simple and complex activities, when used at either position alone or at both positions together. We also showed how the recognition performance for each activity relates to the choice of sensor and position (pocket or wrist).

- Using three classifiers, we evaluated the effect of increasing the window size for each activity in various scenarios and showed that increasing the window size from 2 to 30 s affects the recognition of complex and simple activities differently.

- We proposed optimizations to improve recognition in the scenarios where performance was low. Moreover, we made our dataset publicly available for reproducibility.
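The contributions above revolve around two processing choices: the window length used to segment the sensor stream, and whether features from the wrist and pocket positions are used alone or together. The following is a minimal sketch of both, assuming a 50 Hz sampling rate, non-overlapping windows, mean and standard deviation as the only features, and simple feature concatenation for the combined wrist-plus-pocket case. None of these specifics are stated in this section, and the names `segment`, `features`, `fuse`, and `SAMPLING_RATE_HZ` are hypothetical.

```python
import math
import statistics
from typing import List, Sequence

# Assumption: sampling rate in Hz (not stated in this section).
SAMPLING_RATE_HZ = 50


def segment(signal: Sequence[float], window_s: float,
            rate_hz: int = SAMPLING_RATE_HZ) -> List[List[float]]:
    """Split a 1-D signal into non-overlapping windows of window_s seconds.

    A trailing partial window is dropped, so a window longer than the
    recording yields no windows at all.
    """
    size = int(window_s * rate_hz)
    if size <= 0:
        raise ValueError("window must contain at least one sample")
    return [list(signal[i:i + size])
            for i in range(0, len(signal) - size + 1, size)]


def features(window: Sequence[float]) -> List[float]:
    """Two illustrative time-domain features: mean and population std."""
    return [statistics.fmean(window), statistics.pstdev(window)]


def fuse(wrist: Sequence[float], pocket: Sequence[float],
         window_s: float) -> List[List[float]]:
    """Feature-level fusion: concatenate per-window features of both positions."""
    w = segment(wrist, window_s)
    p = segment(pocket, window_s)
    return [features(a) + features(b) for a, b in zip(w, p)]


# Synthetic 20 s recording (1000 samples at the assumed 50 Hz).
wrist = [math.sin(i / 10) for i in range(1000)]
pocket = [0.5] * 1000
for win_s in (2, 5, 10, 30):
    # prints 10, 4, 2 and 0 fused windows respectively
    print(win_s, "s ->", len(fuse(wrist, pocket, win_s)), "fused windows")
```

Longer windows give each feature vector more context, which can help activities whose patterns unfold slowly, but they also produce fewer training examples from the same recording and delay each decision.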

2. Related Work

3. Data Collection and Experimental Setup

- With shuffling: In this method, we shuffle the data before dividing them into ten equal parts. As a result, for each participant, part of his or her data is used for training and the remaining part for testing, although no individual sample appears in both sets. In this case, the classification performance is slightly higher, and the evaluation is closer to a person-dependent validation method.

- Without shuffling: In this method, the whole dataset is divided into ten equal parts without shuffling, so the order of the data is preserved. The classification performance is therefore slightly lower than with shuffling. In our case, this resembles a person-independent validation for the seven activities that were performed by all ten participants. For the remaining activities, however, it is not person independent: because fewer than ten participants performed them, each participant's data spans more than one of the ten parts, and a part may contain data from more than one participant. Data from one participant can then be used in both training and testing, although the training and testing sets still share no samples. Because the temporal order of the time-series data is preserved, the results are closer to real-life situations.
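The difference between the two schemes can be illustrated with a minimal, standard-library sketch of ten-fold index splitting, similar in spirit to scikit-learn's `KFold` with `shuffle=True` or `shuffle=False`. The function name `ten_fold_splits` and the synthetic layout of ten participants with 100 time-ordered windows each are hypothetical, chosen only to show the effect; they are not the authors' code or data.

```python
import random
from typing import List, Tuple


def ten_fold_splits(n_samples: int, shuffle: bool, seed: int = 0,
                    k: int = 10) -> List[Tuple[List[int], List[int]]]:
    """Return (train_idx, test_idx) pairs for k-fold cross-validation.

    Without shuffling, each test fold is a contiguous block of the
    time-ordered data; with shuffling, indices are permuted first.
    Train and test indices never overlap within a fold.
    """
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    splits, start = [], 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = indices[start:start + size]
        test_set = set(test)
        train = [i for i in indices if i not in test_set]
        splits.append((train, test))
        start += size
    return splits


# Hypothetical layout: 10 participants, 100 time-ordered windows each.
participant_of = [i // 100 for i in range(1000)]
for shuffle in (False, True):
    train, test = ten_fold_splits(1000, shuffle)[0]
    shared = ({participant_of[i] for i in test}
              & {participant_of[i] for i in train})
    print("shuffle =", shuffle, "-> participants in both sets:", len(shared))
```

With this synthetic layout and no shuffling, the first test fold contains only the first participant's windows, so no participant appears in both training and testing; after shuffling, a test fold typically mixes windows from all participants, which is why that setting behaves more like person-dependent validation.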

4. Results and Discussion

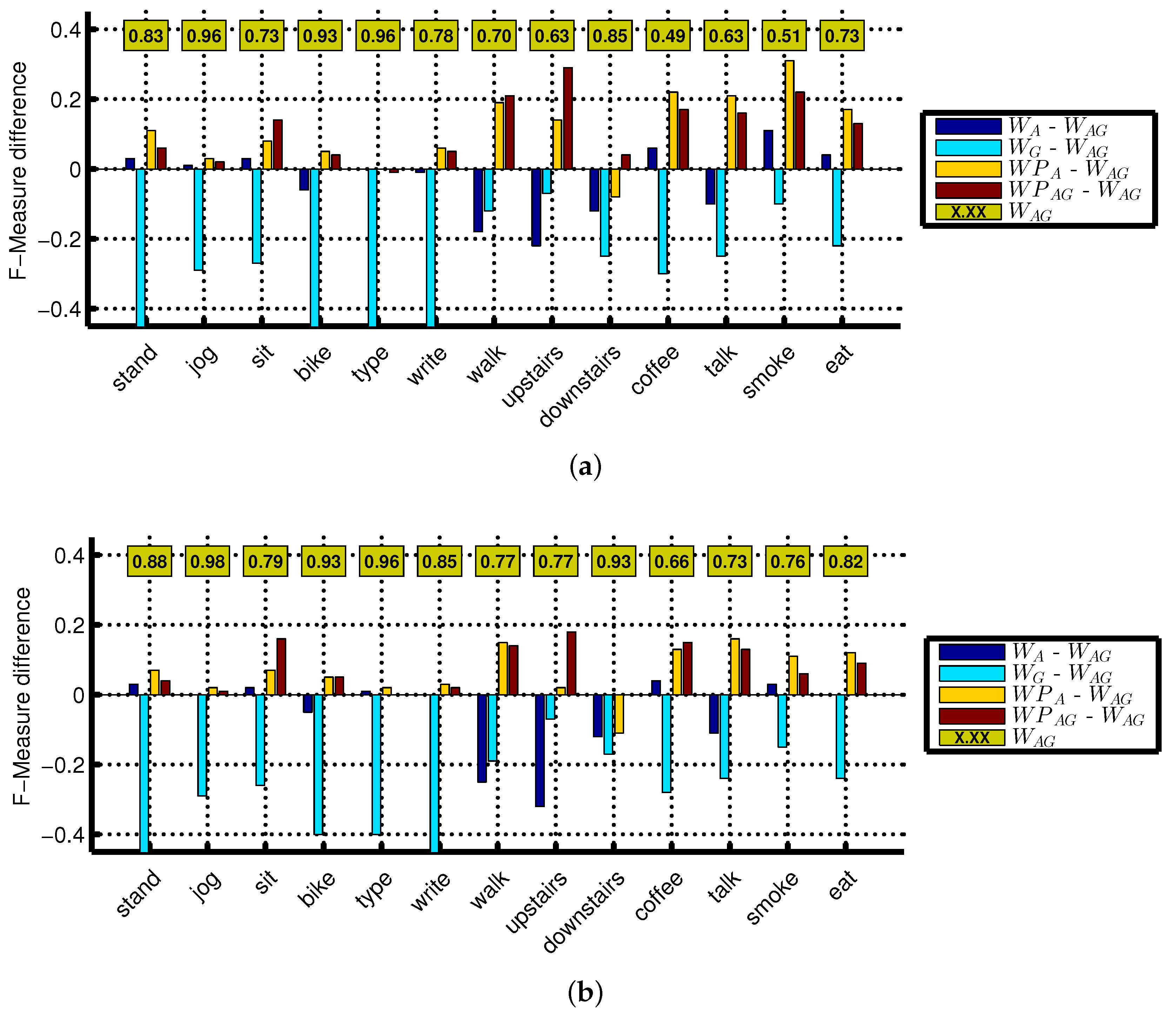

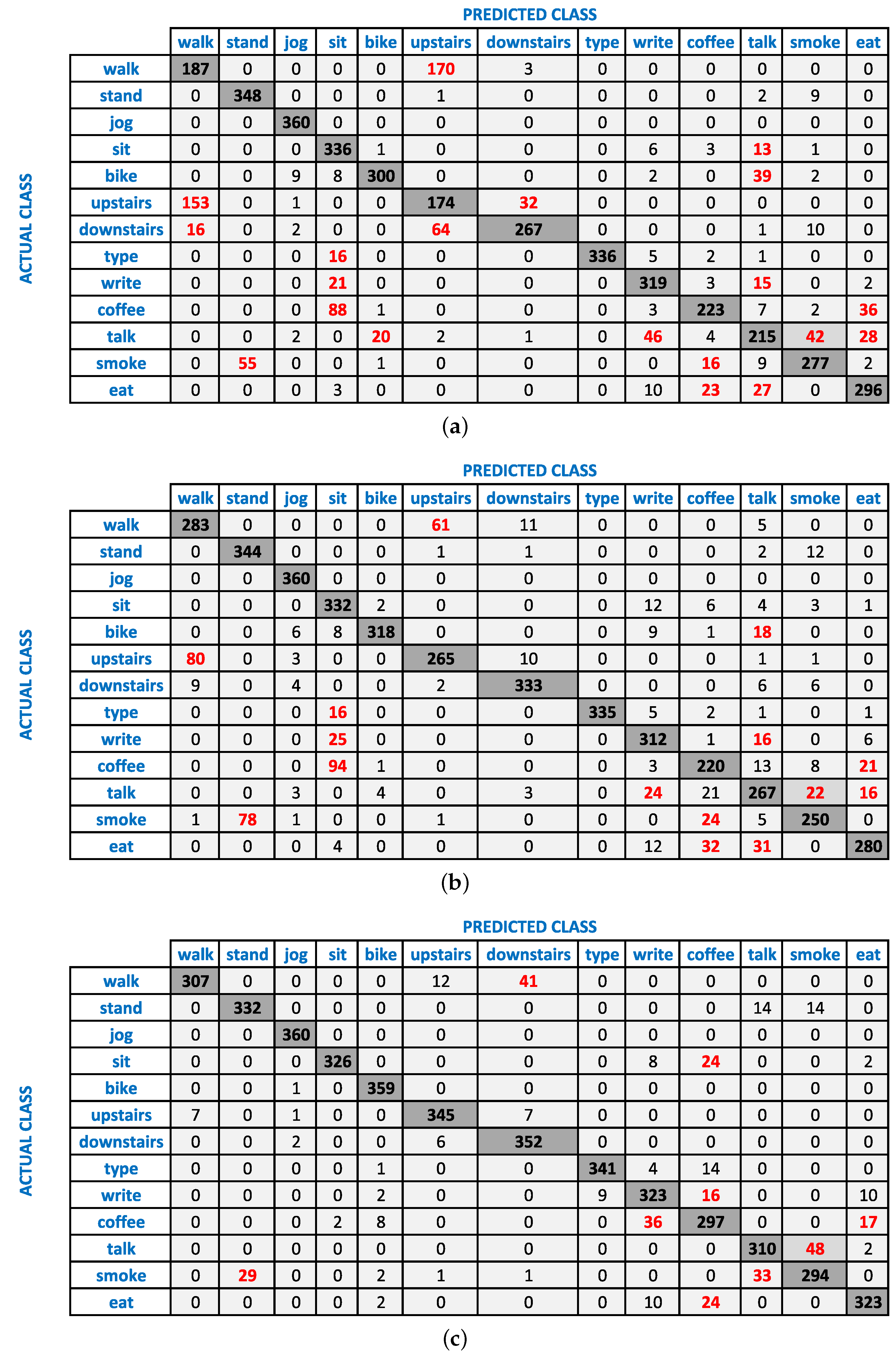

4.1. The Effect of Wrist and Pocket Combination on Recognition Performance

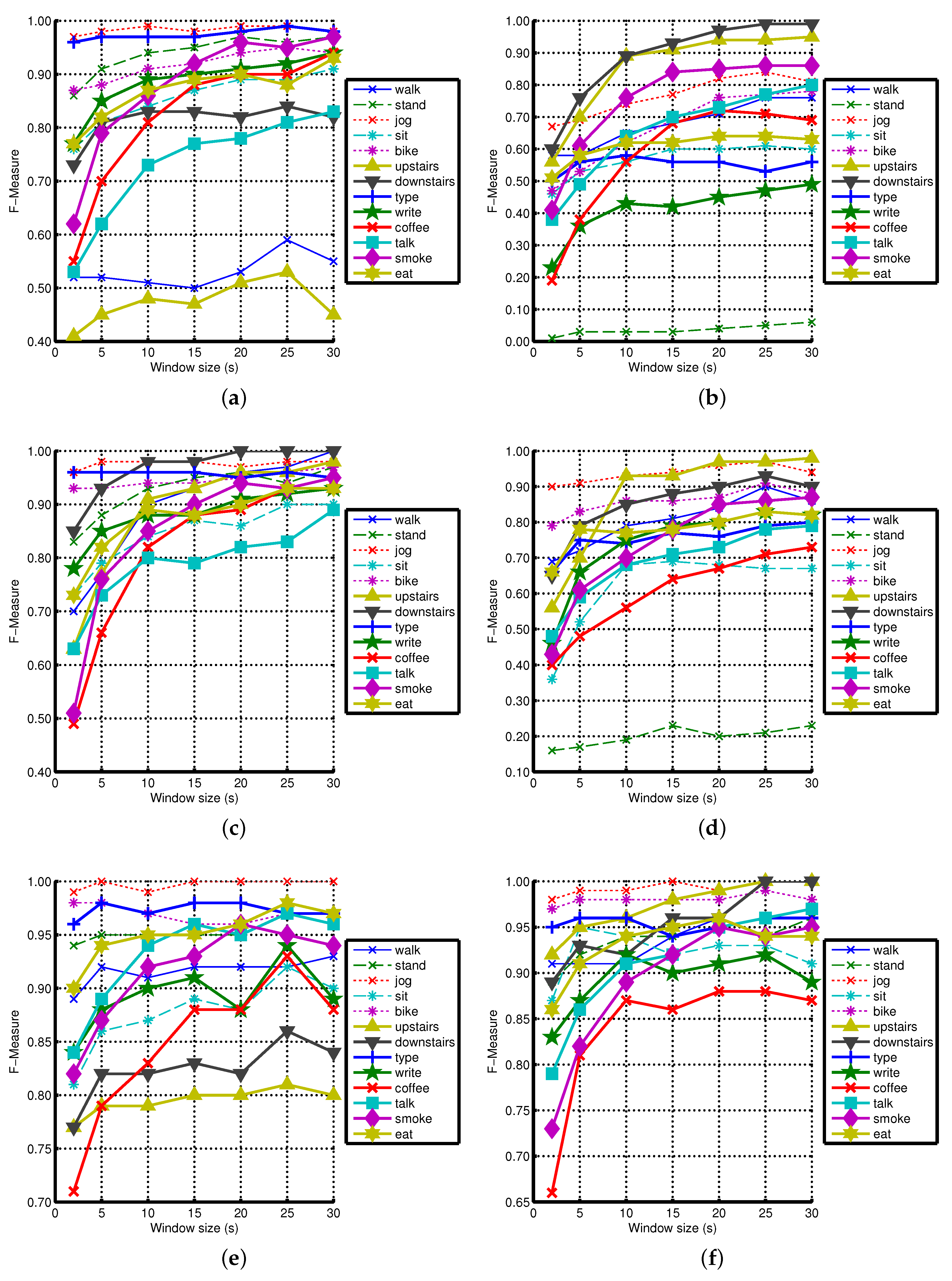

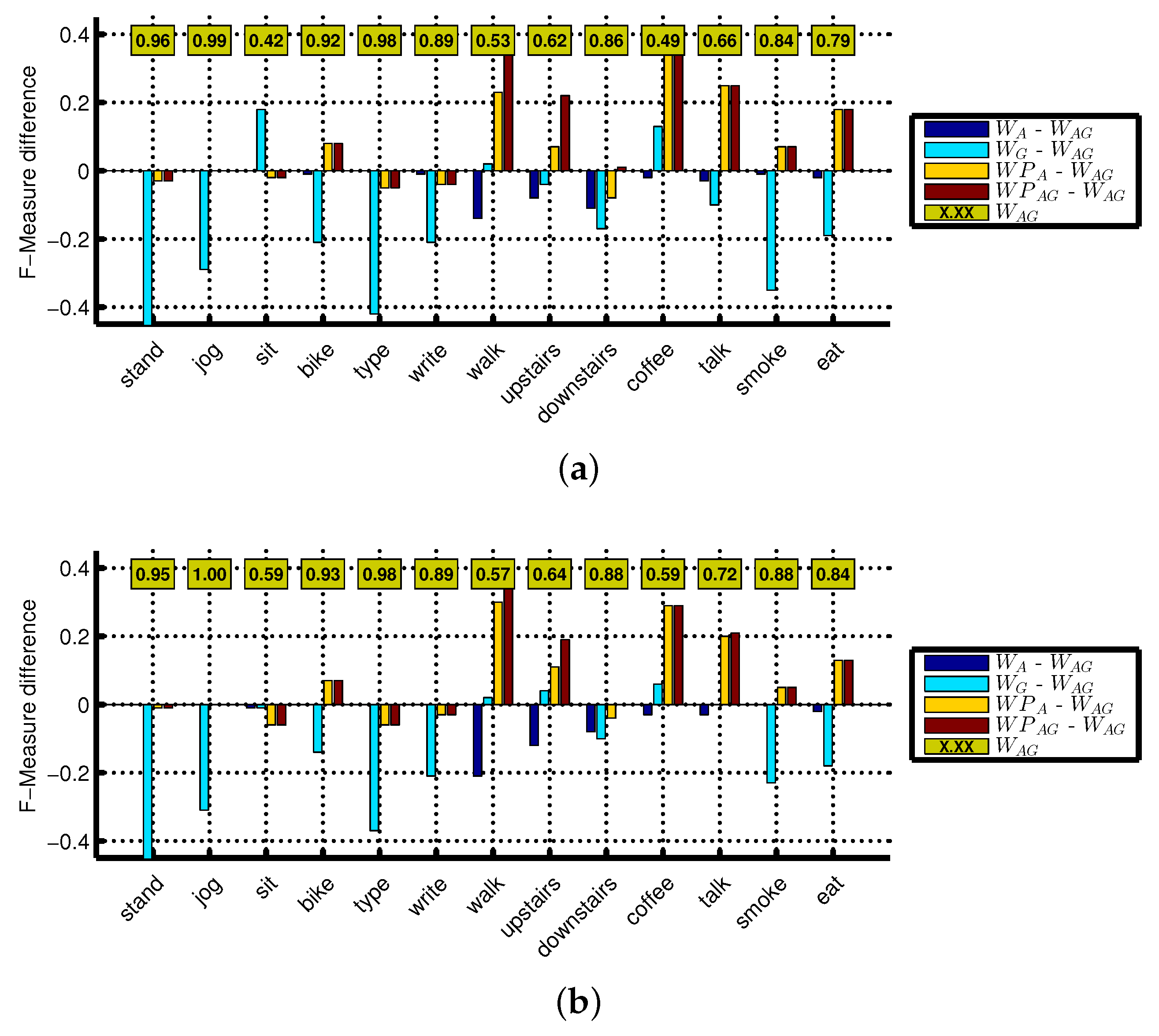

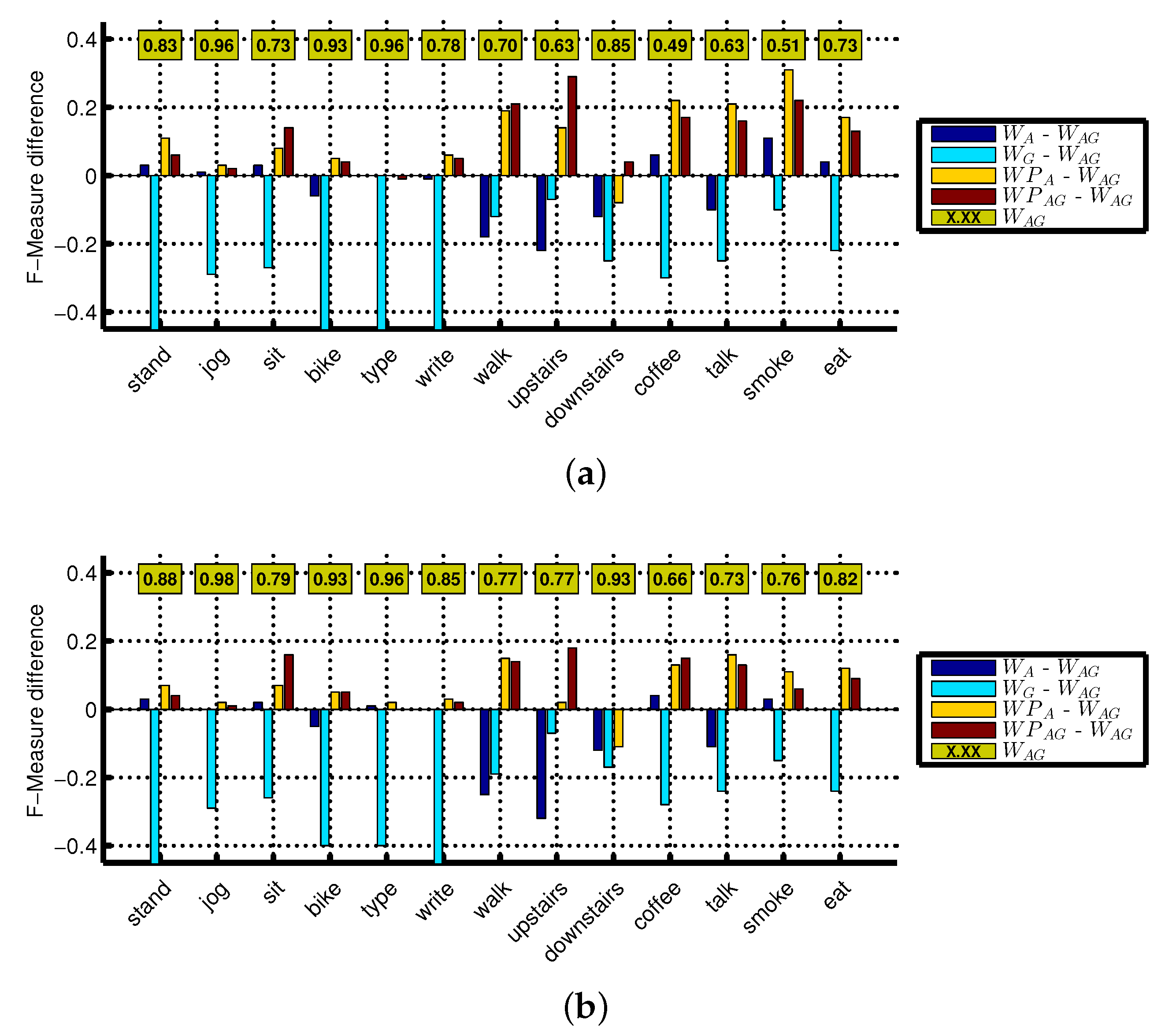

4.2. The Effect of Window Size on Recognition Performance

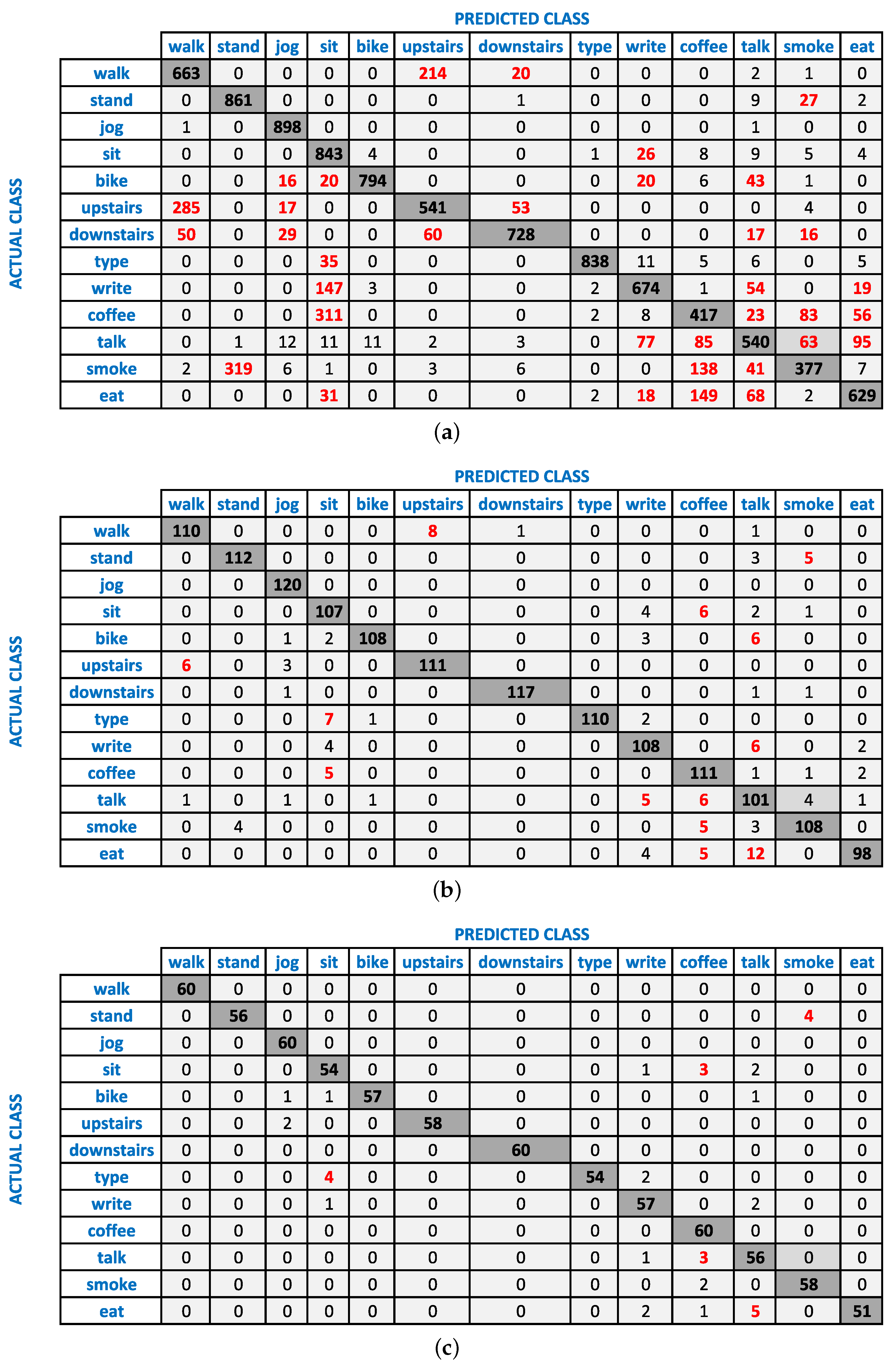

4.3. Analysis Using Cross-Validation with Shuffling Data

4.4. Optimizations for Recognizing Complex Activities

Algorithm 1. Simple rule-based algorithm.

4.5. Limitations and Future Work

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Classification Results of KNN and Decision Tree

Appendix A.1. Classifiers Settings for Its Parameters

Appendix A.2. The Effect of Sensors Combination on Recognition Performance

Appendix A.3. The Effect of Window Size on Recognition Performance

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426. https://doi.org/10.3390/s16040426