Shape Reconstruction Based on a New Blurring Model at the Micro/Nanometer Scale

Abstract

1. Introduction

2. Heat Diffusion and Blurring Imaging

2.1. Heat Diffusion in Physics

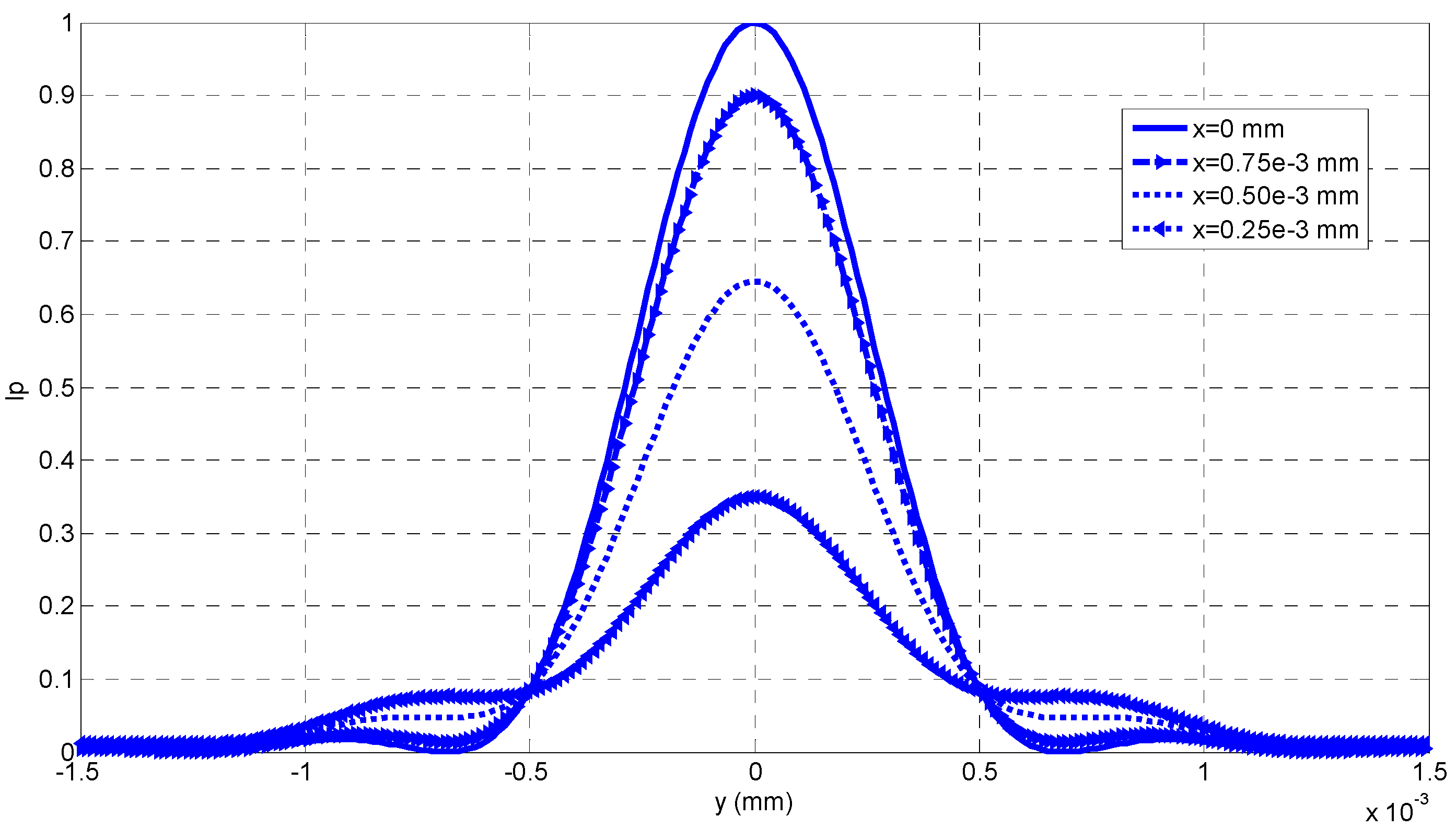

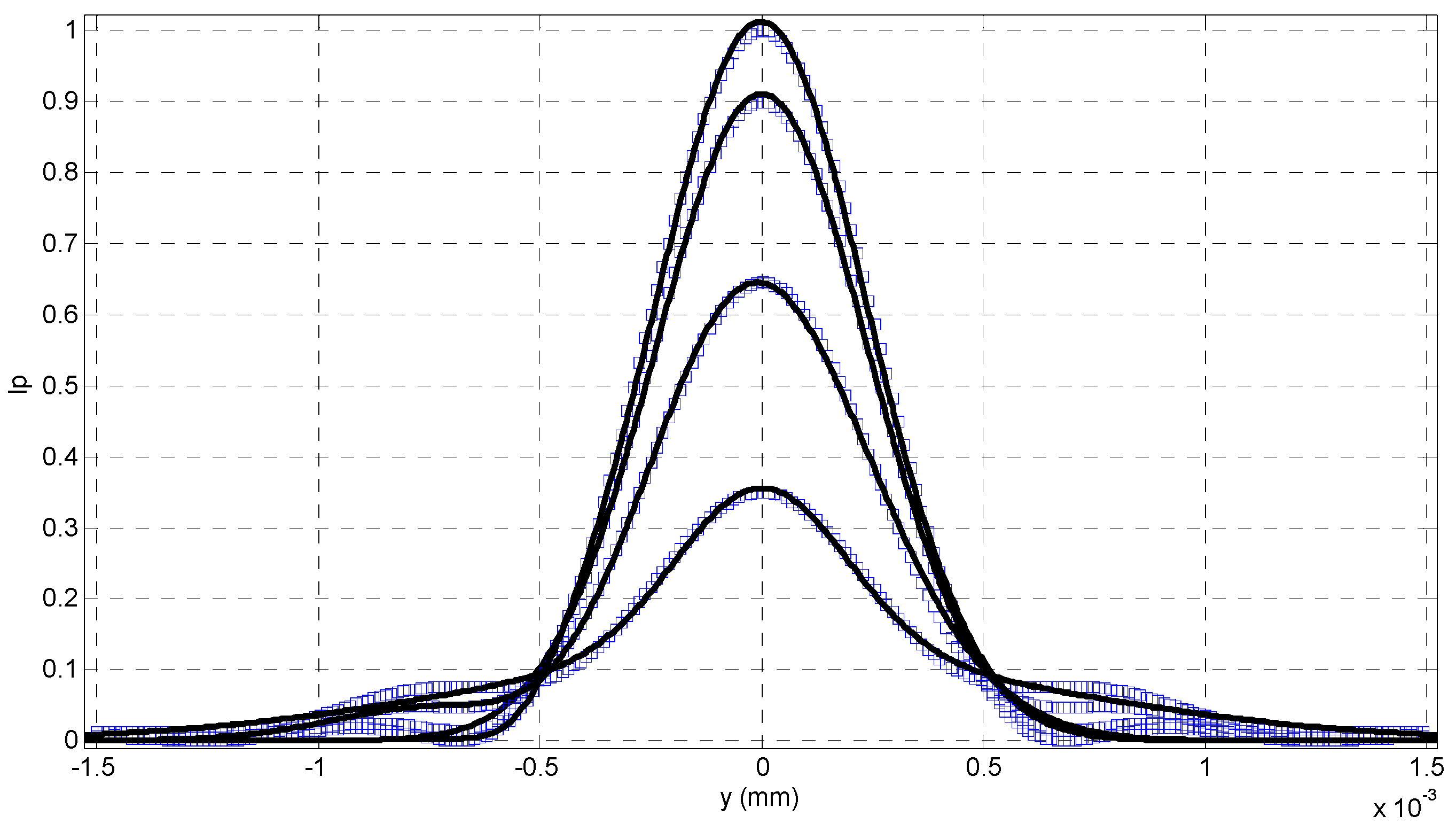

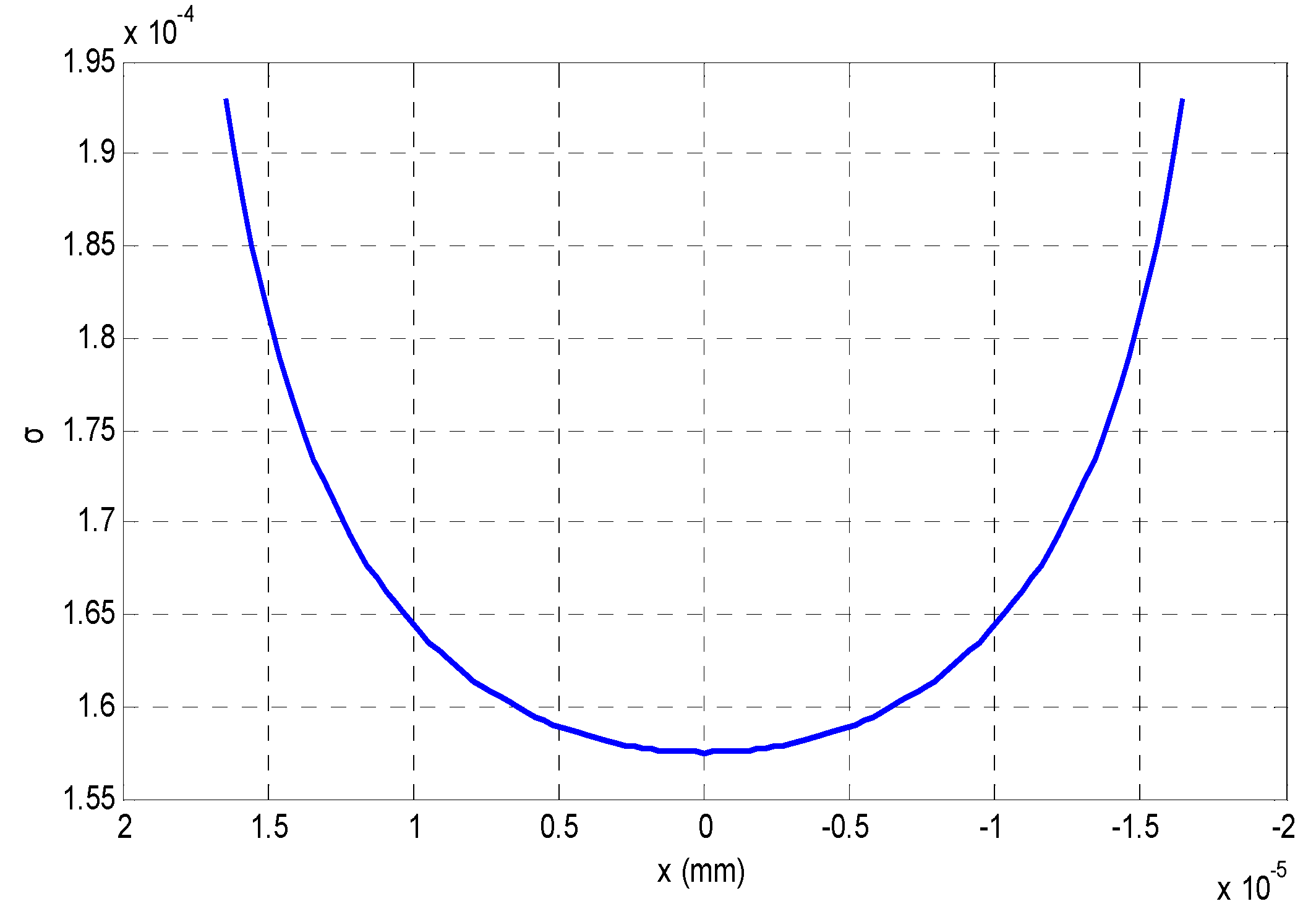

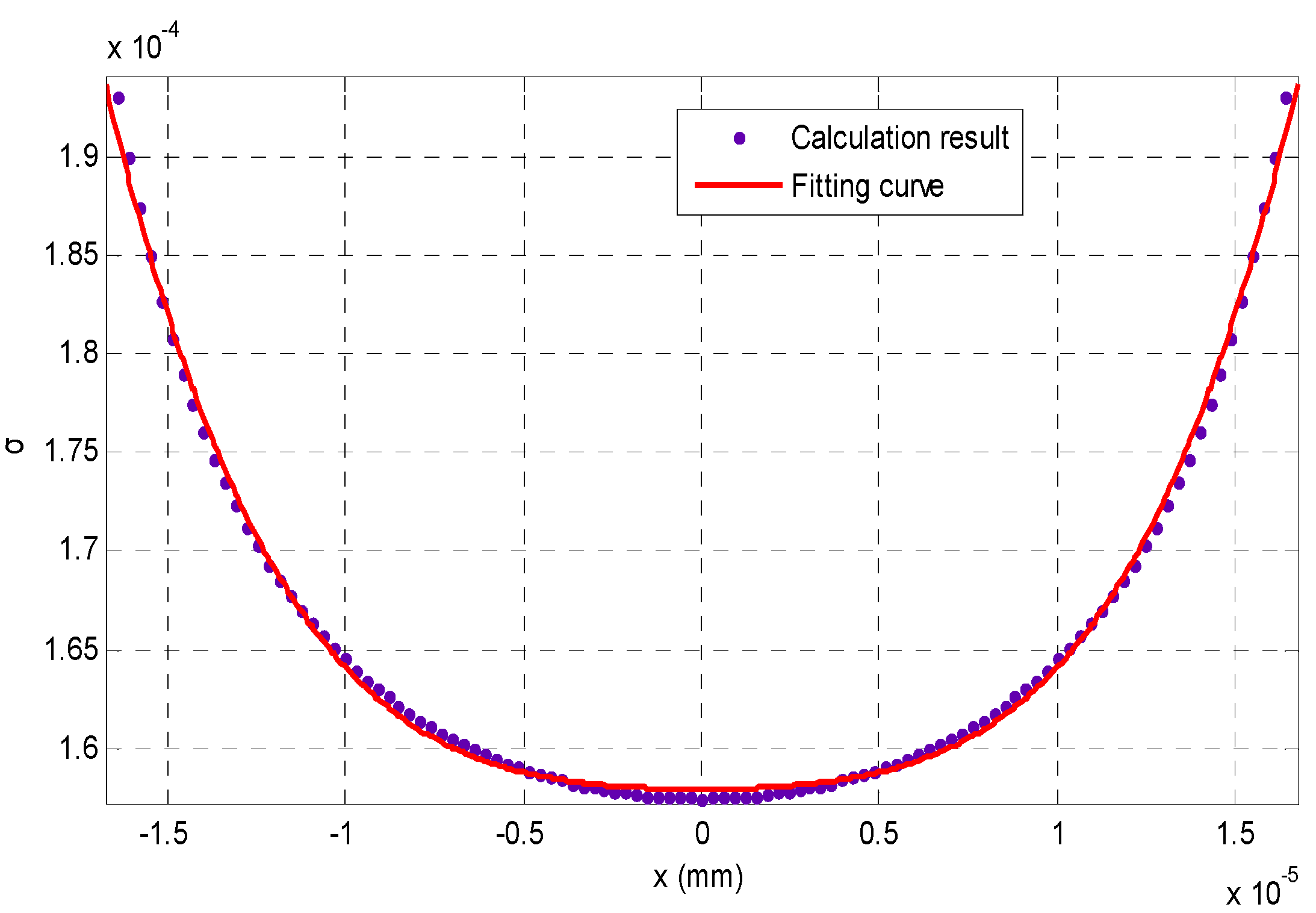

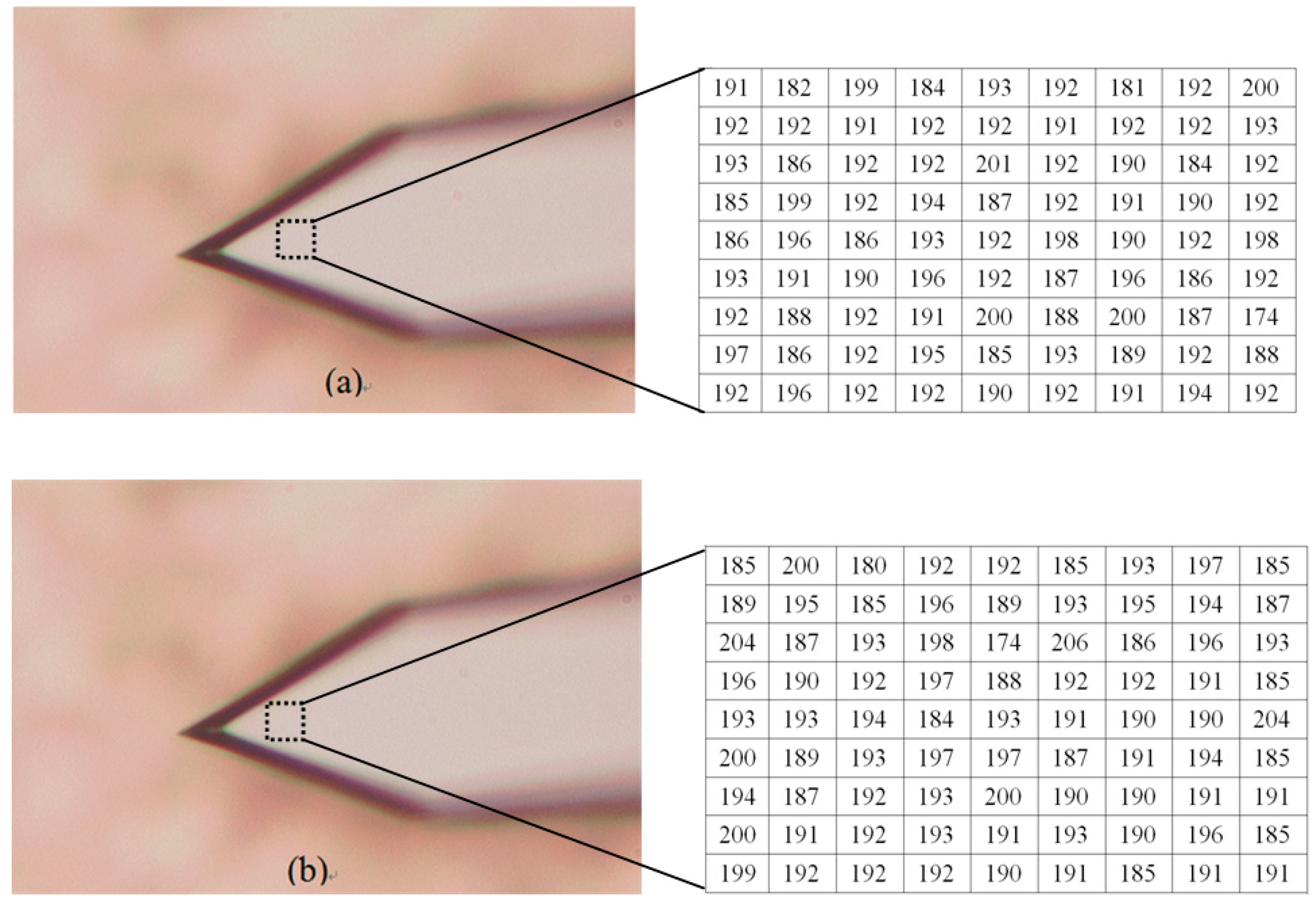

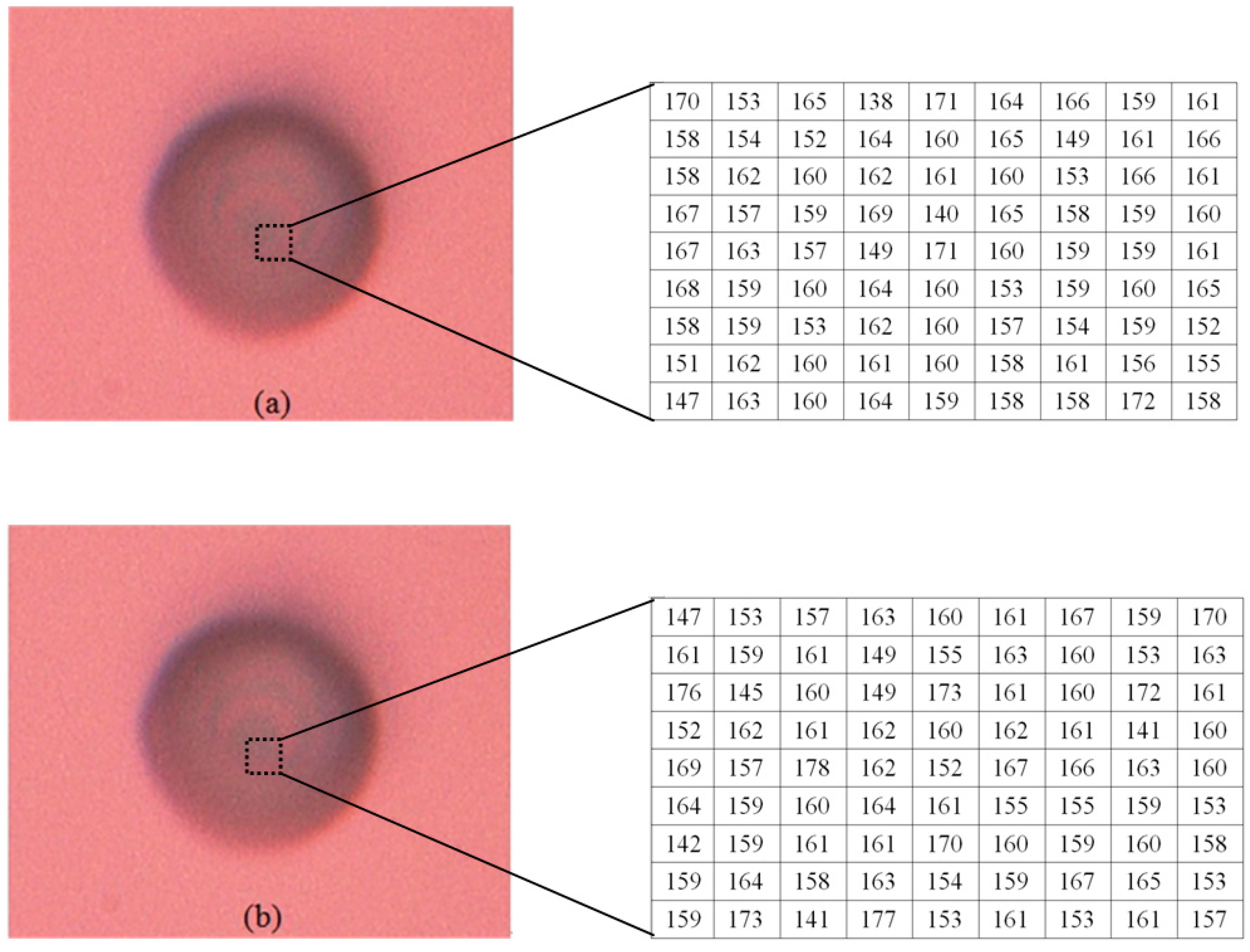

2.2. Blurring Imaging Model

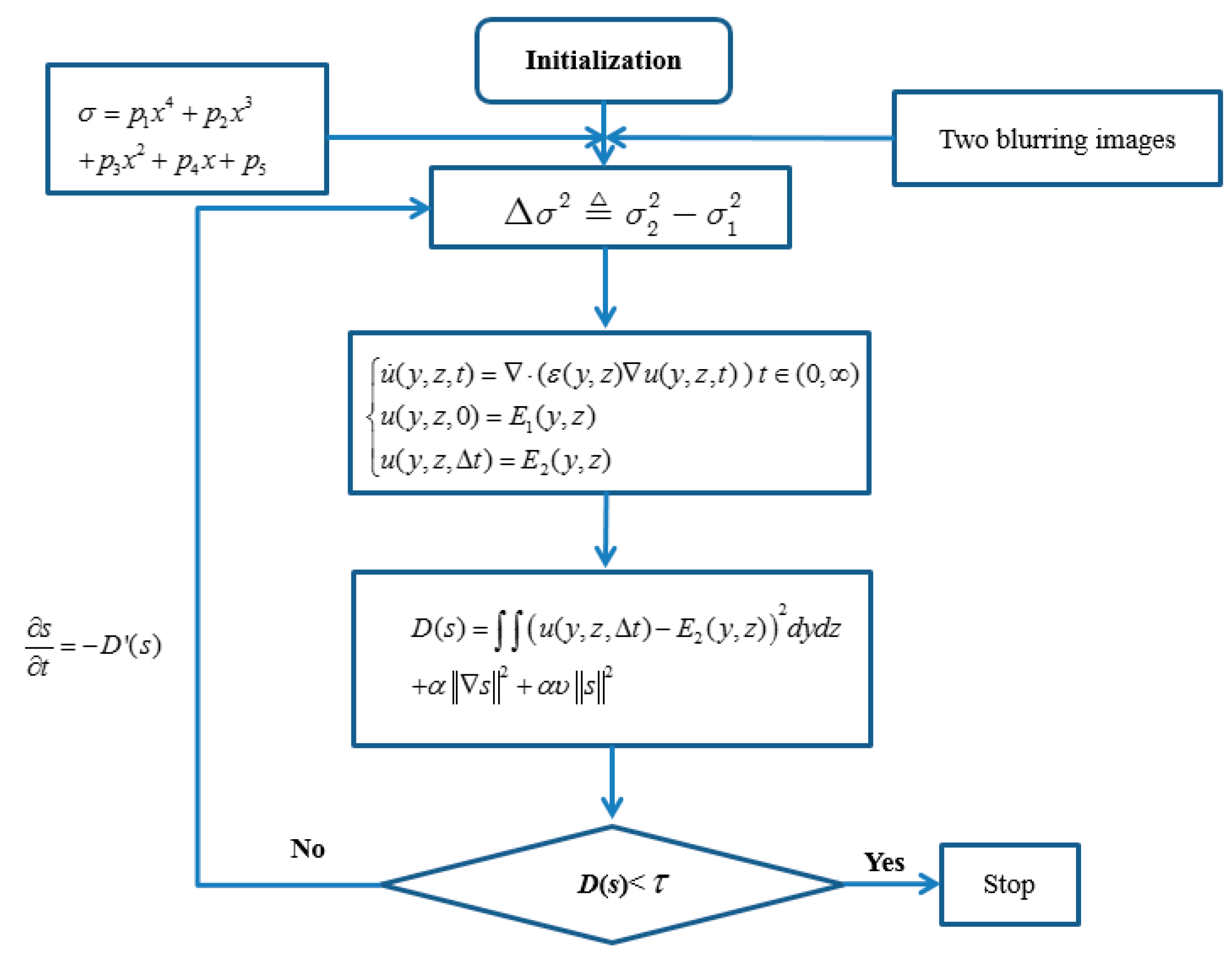

3. Depth Reconstruction with the Blurring Imaging Model

- (1) Provide the camera parameters R, ζ, and s0; two blurred images E1 and E2; a threshold τ; the parameters α and v; and the step size β of each step.

- (2) Initialize the depth map as an equifocal plane.

- (3) Evaluate Equation (26) to obtain the relative blurring.

- (4) Solve the diffusion equations in Equation (25) to obtain the solution.

- (5) Using this solution, evaluate Equation (30). If the cost energy in Equation (30) is less than the threshold τ, the algorithm stops and the final depth map is obtained; otherwise, calculate the following equation with step β:

- (6) Calculate Equation (27), update the global depth information, and return to Step (3).
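The control flow of Steps (1) to (6) can be sketched as a short loop. This is only a minimal sketch under stated assumptions: Equations (25), (26), (27), and (30) are not reproduced here, so they are passed in as hypothetical callables (`relative_blur`, `solve_diffusion`, `cost_energy`, `update_depth`), and the camera parameters R, ζ, and s0 are assumed to be captured inside those callables. The toy callables in the demo are placeholders chosen only so the loop converges; they are not the paper's equations.

```python
import numpy as np

def reconstruct_depth(e1, e2, s_init, relative_blur, solve_diffusion,
                      cost_energy, update_depth, tau, beta, max_iter=1000):
    """Iterate Steps (3)-(6) until the cost energy drops below tau.

    All four callables are hypothetical stand-ins:
      relative_blur(s)             -> plays the role of Equation (26)
      solve_diffusion(e1, e2, b)   -> plays the role of Equation (25)
      cost_energy(u, e2, s)        -> plays the role of Equation (30)
      update_depth(s, u, beta)     -> plays the role of the step-beta
                                      update and Equation (27)
    """
    s = np.array(s_init, dtype=float)        # Step (2): initial depth map
    energy = float("inf")
    for _ in range(max_iter):
        blur = relative_blur(s)              # Step (3)
        u = solve_diffusion(e1, e2, blur)    # Step (4)
        energy = cost_energy(u, e2, s)       # Step (5): stopping test
        if energy < tau:
            break
        s = update_depth(s, u, beta)         # Steps (5)-(6): depth update
    return s, energy

# Toy demo (assumed placeholders, not the paper's model): the "solution" is
# simply the residual between the current depth map and a known target.
target = np.full((4, 4), 2.0)
depth, energy = reconstruct_depth(
    e1=None, e2=None,
    s_init=np.zeros((4, 4)),                 # flat (equifocal) start
    relative_blur=lambda s: s - target,
    solve_diffusion=lambda e1, e2, b: b,
    cost_energy=lambda u, e2, s: float(np.sum(u ** 2)),
    update_depth=lambda s, u, beta: s - beta * 2.0 * u,
    tau=1e-6, beta=0.25,
)
```

Passing the equations as callables keeps the loop structure separate from the model itself, so a different blurring model or update rule can be swapped in without touching the iteration logic.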

4. Experiment

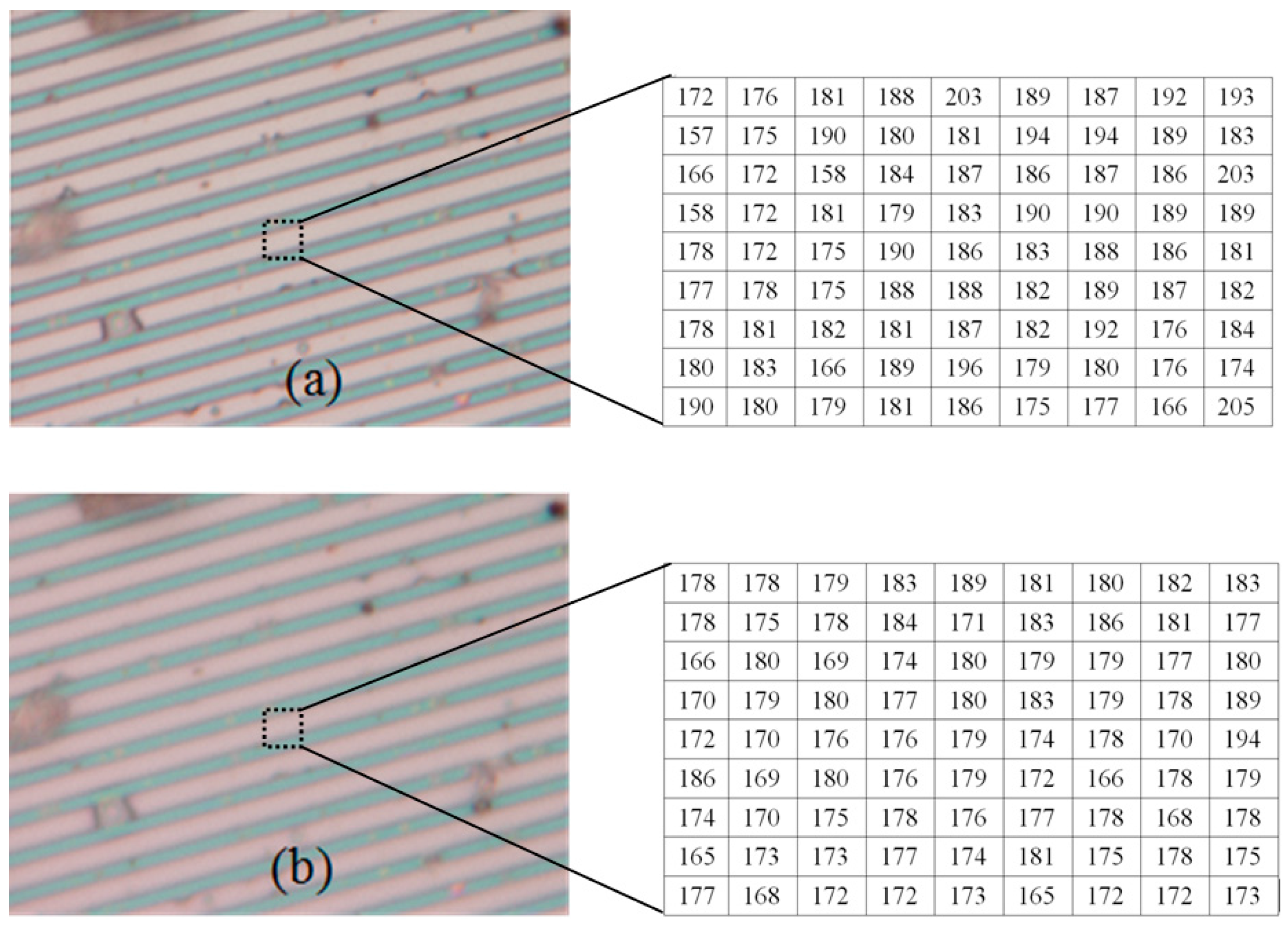

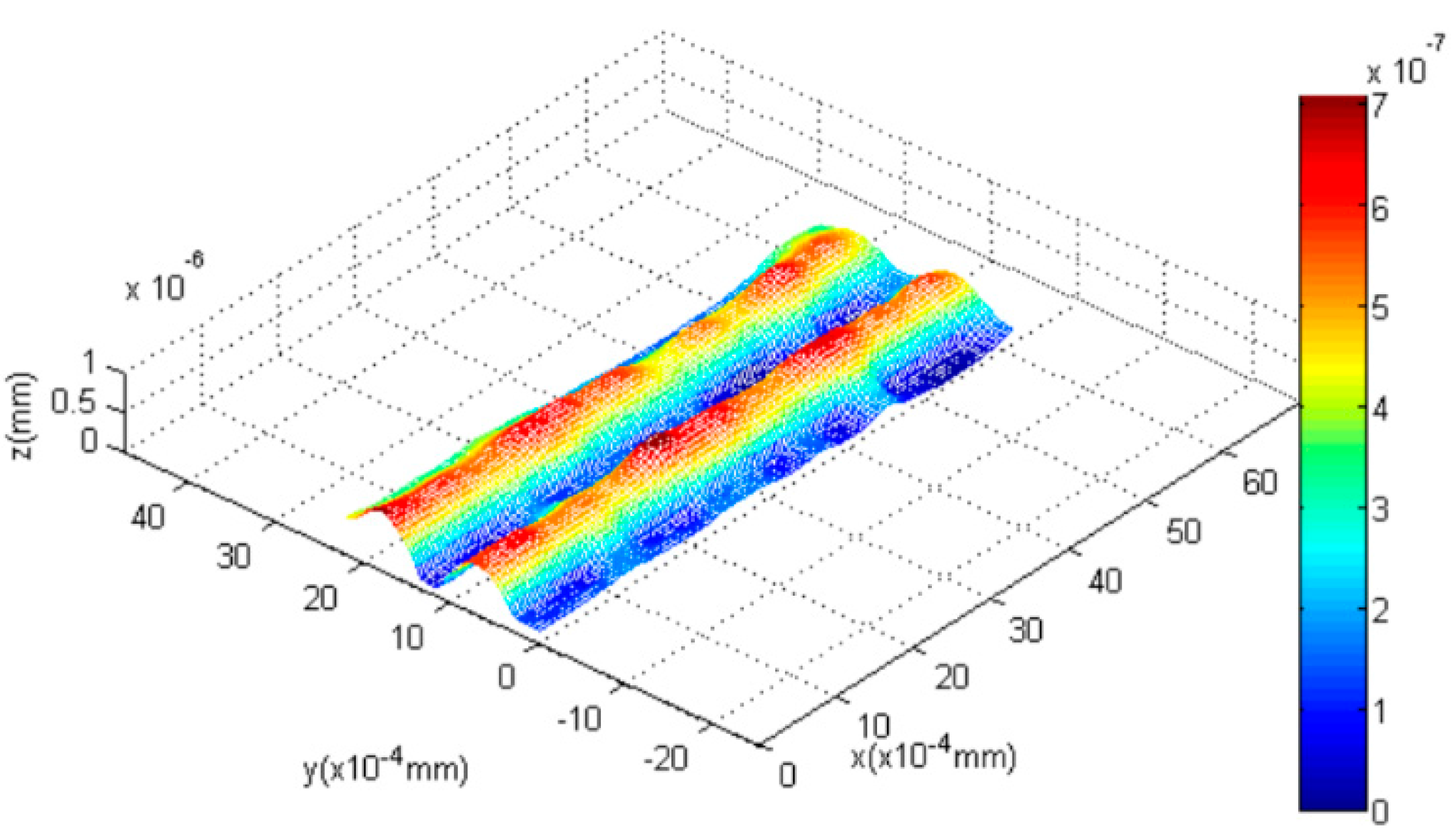

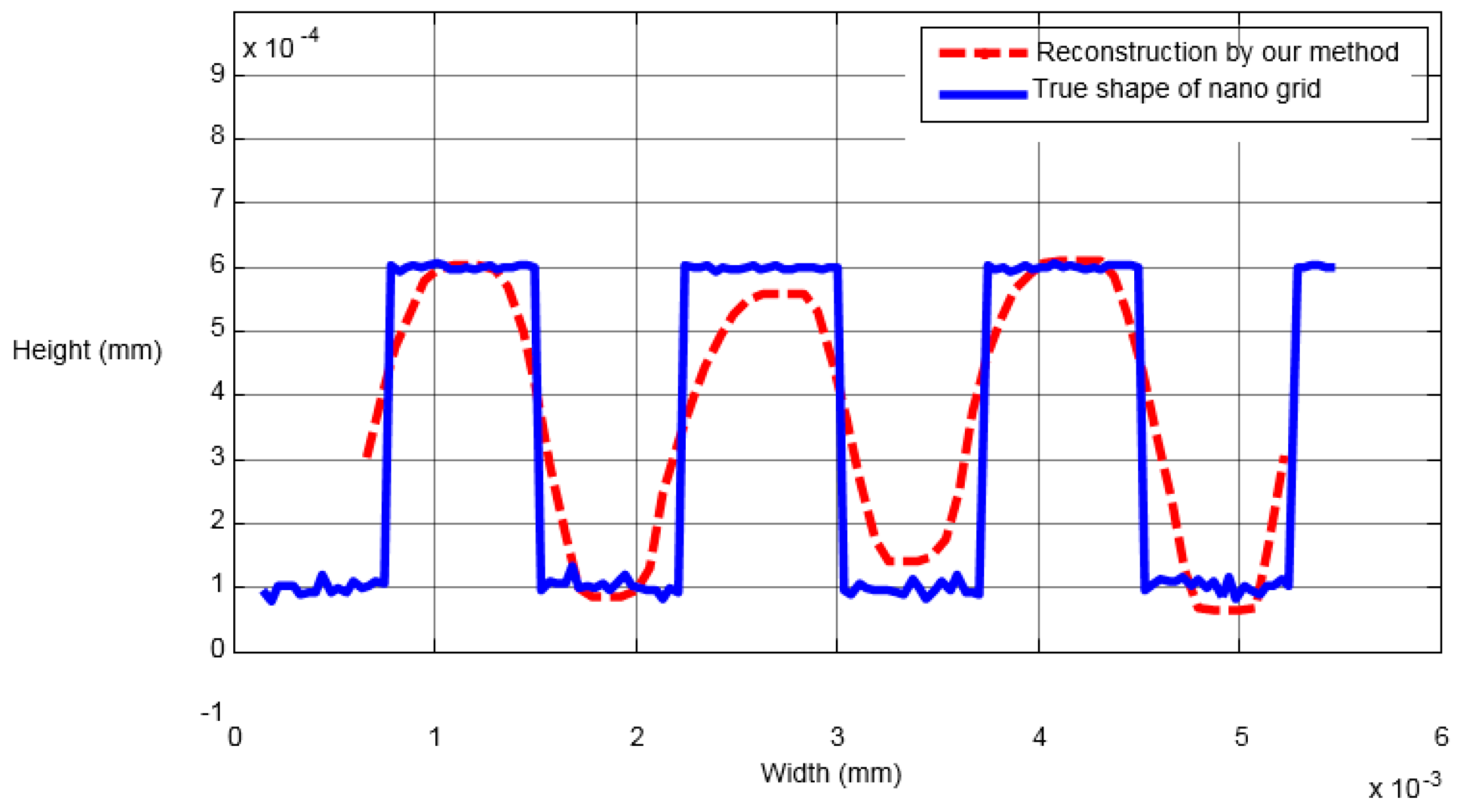

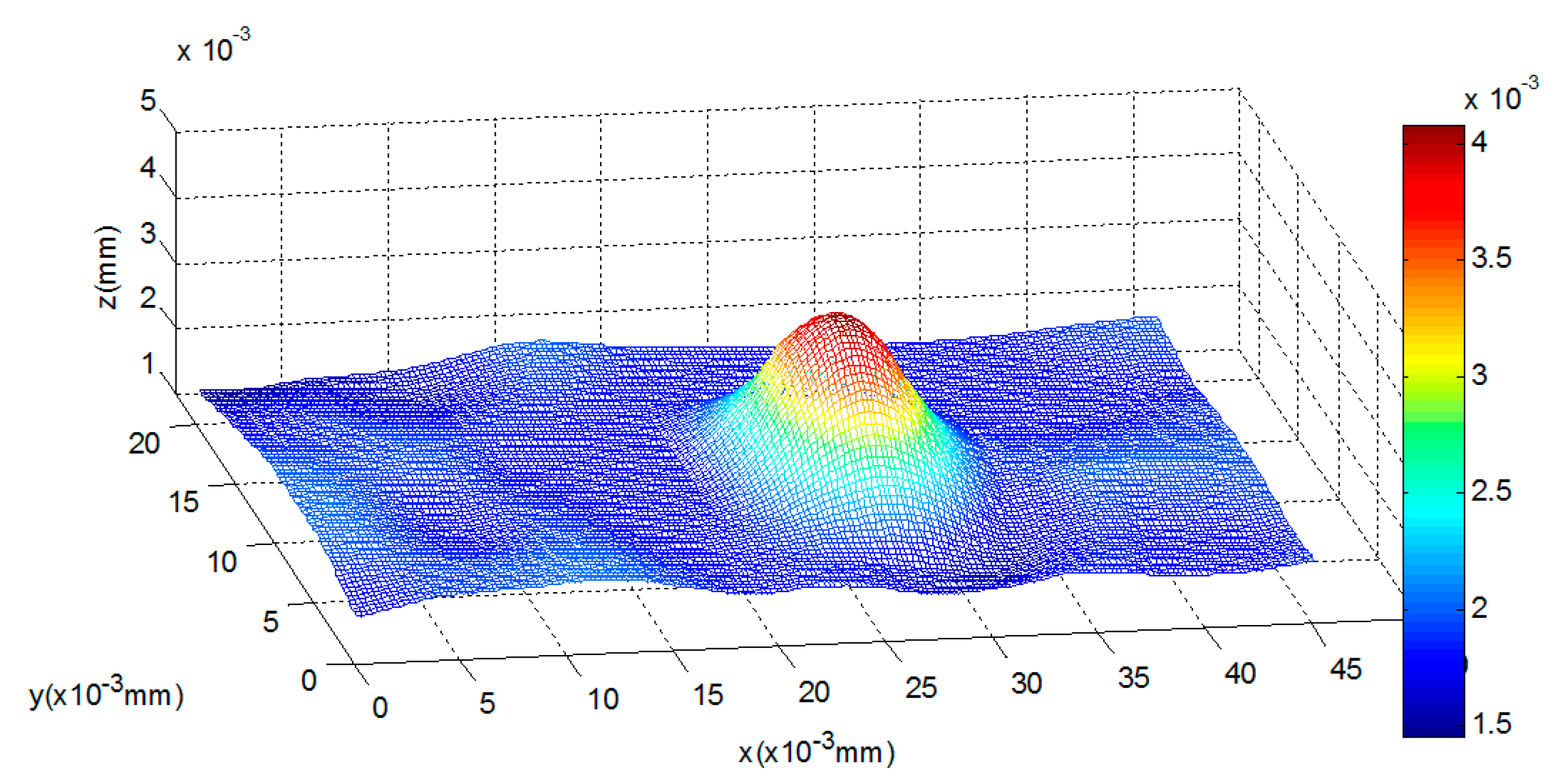

4.1. Nano Grid

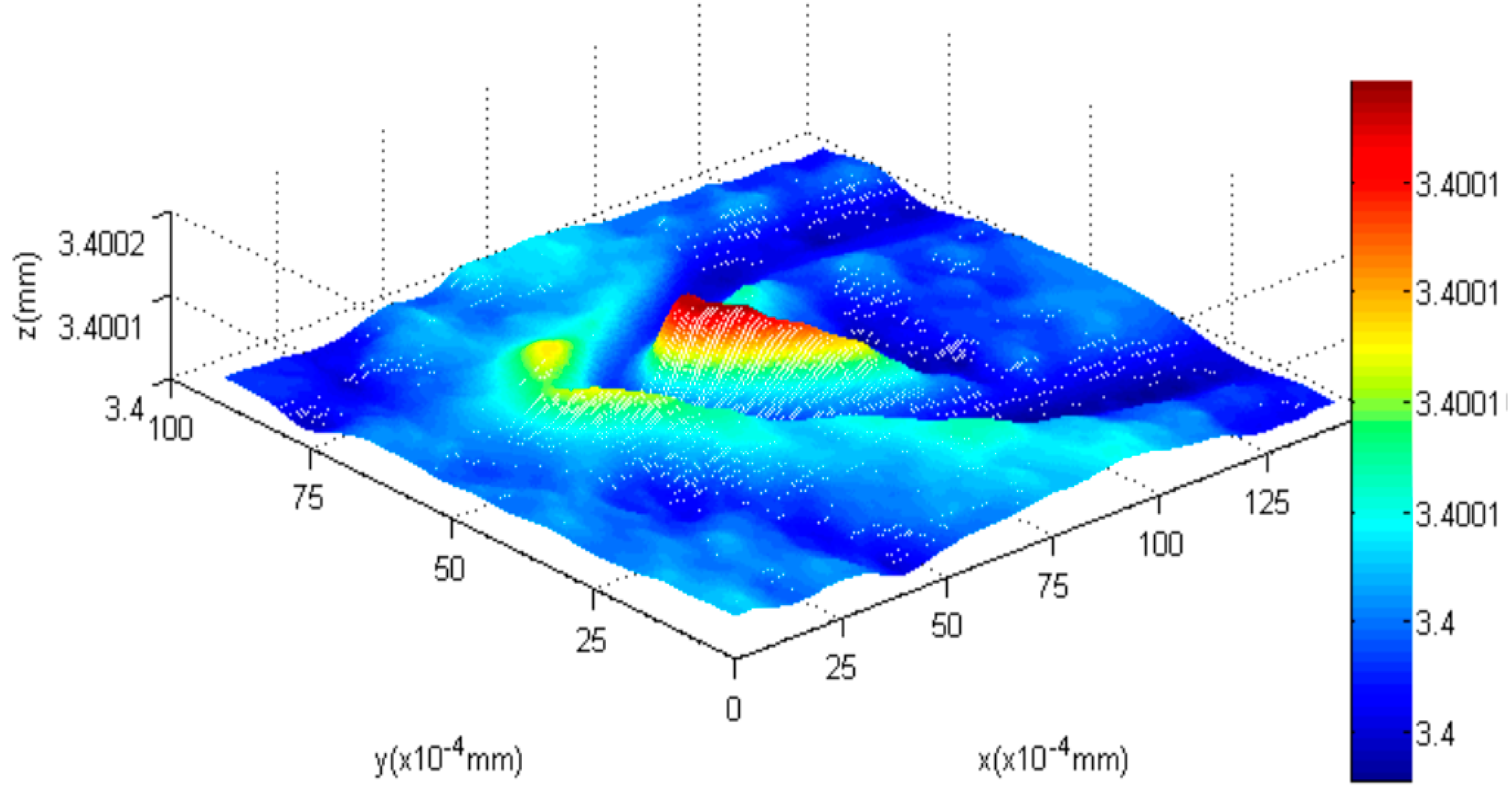

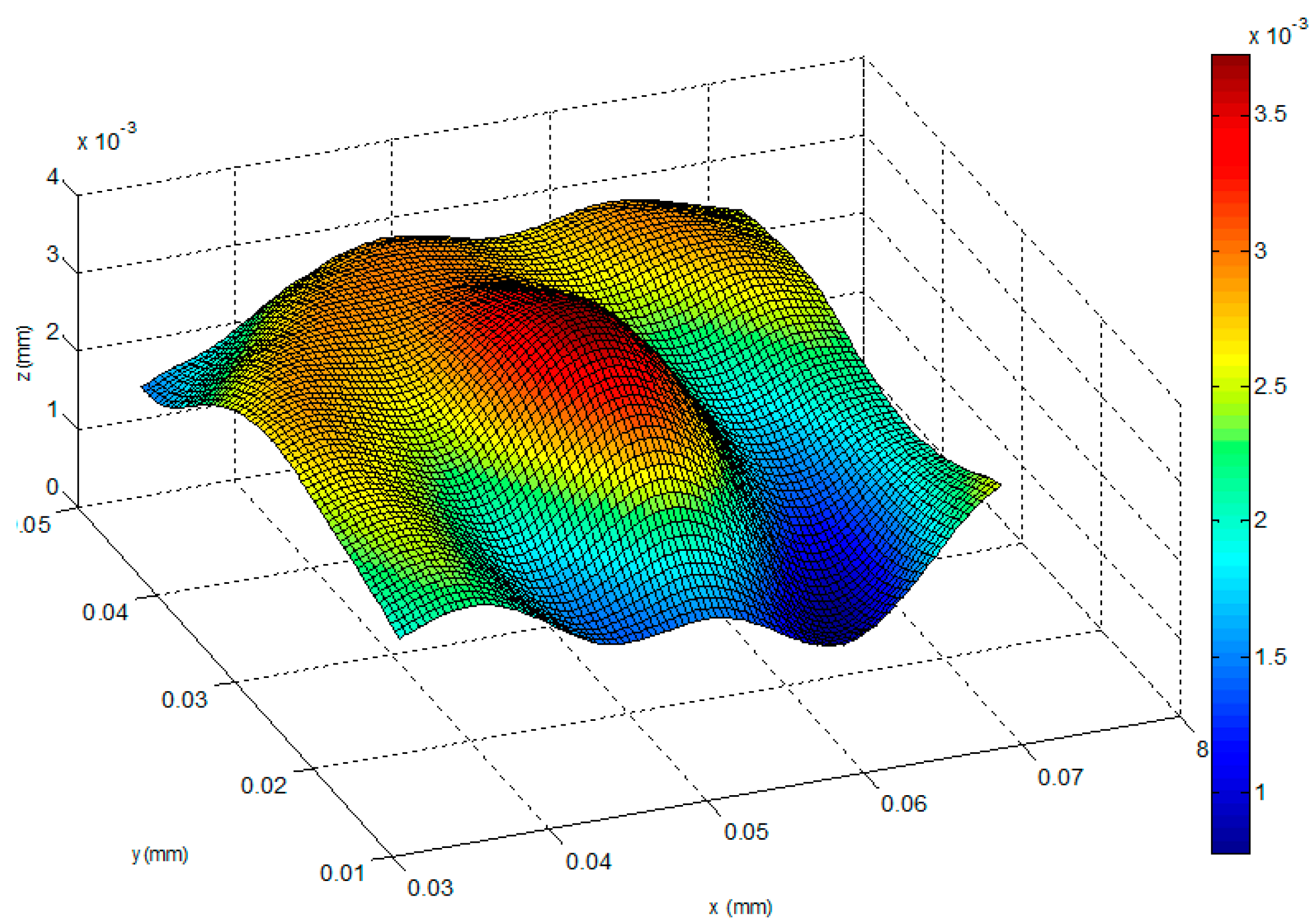

4.2. AFM Cantilever

- (1) The highest bend of the AFM cantilever is at the end carrying the tip, and the bend height between the highest and lowest points is 97 nm (1.36 × 10⁻⁴ mm − 0.39 × 10⁻⁴ mm = 0.97 × 10⁻⁴ mm) using our method. Therefore, the error at the highest bent point is 3 nm.

- (2) The reconstructed shape of the platform is flat compared with the bent cantilever, which agrees with the experimental facts.

- (3) On both sides of the bent tip, distinct troughs appear next to the spike. This occurs because the diffusion equations in this paper are solved with a global optimization method, which requires a gradual change until an optimal value is reached. One of our future tasks is therefore to modify the solution method used during shape reconstruction; the research direction is to design an adaptive scheme that finds an optimal threshold and an iterative step for different surfaces.
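The unit conversion behind the 97 nm figure in item (1) can be checked with a few lines. This is a small worked sketch; the 100 nm reference height is an assumption inferred from the stated 3 nm error and is not a value given in this section.

```python
MM_TO_NM = 1e6  # 1 mm = 1,000,000 nm

highest_mm = 1.36e-4  # highest reconstructed point, from item (1)
lowest_mm = 0.39e-4   # lowest reconstructed point, from item (1)

# Bend height in nanometers: (1.36e-4 - 0.39e-4) mm = 0.97e-4 mm = 97 nm
bend_nm = (highest_mm - lowest_mm) * MM_TO_NM

# ASSUMPTION: reference bend height of 100 nm, inferred from the
# reported 3 nm error; replace with the actual reference value.
reference_nm = 100.0
error_nm = abs(reference_nm - bend_nm)

print(f"bend height: {bend_nm:.1f} nm, error: {error_nm:.1f} nm")
```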

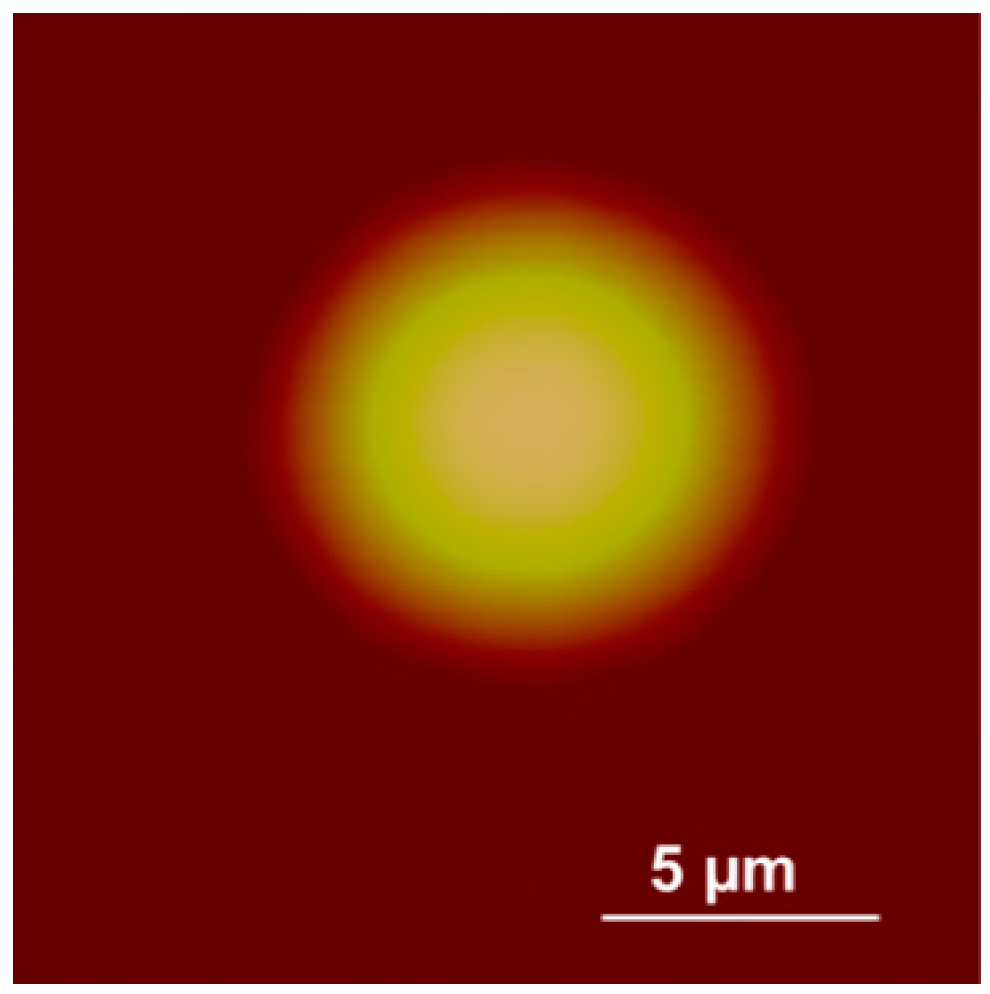

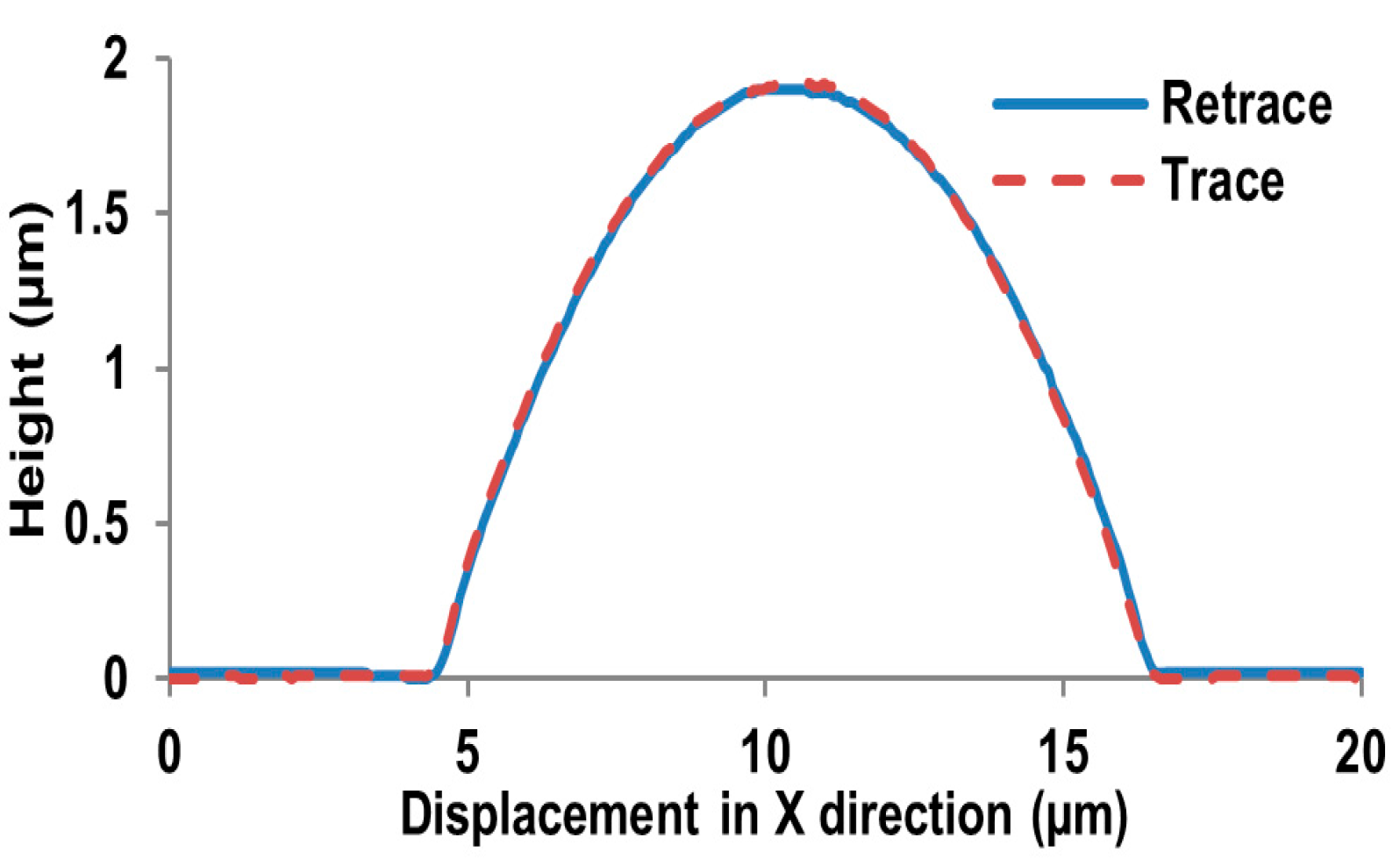

4.3. Microlens

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Parameter | AFM | Our Method | Traditional SFD |
|---|---|---|---|
| Vertical measurement (mm) | 2.10 × 10⁻³ | 2.103 × 10⁻³ | 1.98 × 10⁻³ |
| Horizontal measurement (mm) | 13.5 × 10⁻³ | 13.7 × 10⁻³ | 14.6 × 10⁻³ |
| Running time (s, 256 × 256 pixels) | 240 | 27 | 25 |
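To make the comparison in the table easier to read, the relative deviation of each method from the AFM values can be computed directly. This is a small sketch that assumes the AFM column serves as the reference measurement; the variable names are illustrative only.

```python
# Values copied from the comparison table (lengths in mm, times in s).
afm = {"vertical": 2.10e-3, "horizontal": 13.5e-3}
methods = {
    "ours": {"vertical": 2.103e-3, "horizontal": 13.7e-3},
    "traditional_sfd": {"vertical": 1.98e-3, "horizontal": 14.6e-3},
}

def relative_error(measured, reference):
    """Absolute deviation from the reference, as a fraction of the reference."""
    return abs(measured - reference) / reference

# ASSUMPTION: AFM is treated as the reference for both axes.
errors = {
    name: {axis: relative_error(vals[axis], afm[axis]) for axis in afm}
    for name, vals in methods.items()
}

# Running-time ratio of AFM scanning to the proposed method (240 s vs 27 s).
speedup_vs_afm = 240 / 27

for name, per_axis in errors.items():
    for axis, err in per_axis.items():
        print(f"{name:16s} {axis:10s} {100 * err:.2f} %")
print(f"speed-up over AFM: {speedup_vs_afm:.1f}x")
```

Under this assumption, the proposed method deviates from AFM by well under 2% on both axes, whereas traditional SFD deviates by several percent, at a similar running time.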

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Wei, Y.; Wu, C.; Wang, W. Shape Reconstruction Based on a New Blurring Model at the Micro/Nanometer Scale. Sensors 2016, 16, 302. https://doi.org/10.3390/s16030302
