Abstract

Vision-based pose estimation is an important application of machine vision. Object pose is currently solved with either analytical or iterative methods. Analytical solutions generally take less computation time, but they are extremely susceptible to noise. Iterative solutions minimize the distance error between feature points based on 2D image pixel coordinates. However, the non-linear optimization needs a good initial estimate of the true solution; otherwise, it is more time consuming than the analytical solutions. Moreover, the image processing error grows rapidly as the measurement range increases, which leads to pose estimation errors. All of these factors reduce accuracy. To solve this problem, a novel pose estimation method based on four coplanar points is proposed. Firstly, the coordinates of the feature points are determined according to the linear constraints formed by the four points. The initial coordinates acquired through the linear method are then optimized through an iterative method. Finally, the coordinate system of object motion is established and a method is introduced to solve the object pose. Through this coordinate system, the pose estimation errors that grow with the measurement range can be decreased. The proposed method is compared with two other existing methods through experiments. Experimental results demonstrate that the proposed method works efficiently and stably.

1. Introduction

With the development of modern industrial technology, quickly and accurately determining the position and orientation between objects is becoming more and more important. This process is called pose estimation. Vision-based pose measurement, also known as the perspective-n-point (PNP) problem, determines the position and orientation between a camera and a target with n feature points, given their world coordinates and 2D image pixel coordinates. It has the advantages of being non-contact and highly efficient. It can be widely applied in robotics [1], autonomous aerial refueling [2], electro-optic aiming systems [3,4], virtual reality [5], etc.

The existing approaches to solving object poses can be divided into two categories: analytical algorithms and iterative algorithms. Analytical algorithms apply linear methods to obtain algebraic solutions. Hu et al. [6] used four points to achieve a linear solution. Lepetit et al. [7] proposed a non-iterative solution named the EPnP algorithm. Tang et al. [8] presented a linear algorithm for the case of five points. Ansar et al. [9] presented a general framework to directly recover the rotation and translation. Duan et al. [10] introduced a new affine invariant of the trapezium to realize pose estimation and plane measurement.

In iterative algorithms, pose estimation is formulated as a constrained nonlinear problem and then solved using nonlinear optimization algorithms, most typically the Levenberg-Marquardt method. DeMenthon et al. [11,12,13] proposed the POSIT algorithm, which obtains the initial value of the solution by using a scaled orthographic model to approximate the perspective projection model. Zhang et al. [14,15] proposed a two-stage iterative algorithm that is divided into a depth recovery stage and an absolute orientation stage. Wang et al. [16] solved the object pose non-linearly on the basis of five points using a least squares approach. Liu et al. [17] obtained the object pose from the geometrical constraints formed by four non-coplanar points. Zhang et al. [18] proposed an efficient solution for vision-based pose determination of a parallel manipulator. Fan et al. [19] proposed a robust and highly accurate pose estimation method to solve the PNP problem in real time.

The analytical solutions generally take less computation time. However, they are sensitive to observation noise and usually obtain lower accuracy. As for iterative solutions, the pose estimation is translated into a nonlinear least squares problem through the distance constraints. Then the distance error between feature points is minimized based on 2D image pixel coordinates. However, the iterative solutions also have drawbacks: (1) non-linear optimization needs a good initial estimate of the true solution, (2) they are more time consuming than analytical solutions.

During the pose estimation process, the image processing errors emerge mainly because the perspective projection point of the feature marker center and the perspective projection image center of feature markers do not coincide. The current papers focus on the reduction of image processing errors through better extraction of the image center [20,21,22]. However, those methods are not powerful enough to eliminate the inconsistency between the perspective projection point of the feature marker center and the center of the corresponding perspective projection image, especially when the measurement range increases.

Based on the discussion above, a robust and accurate pose estimation method based on four coplanar points is proposed in this paper. In the first step, the coordinates of the points in the camera coordinate system are solved analytically by utilizing the linear constraints formed by the points. These results are then set as the initial values of an iterative solving process to ensure the accuracy and convergence rate of the non-linear algorithm, and the Levenberg-Marquardt optimization method is utilized to refine them. In the second step, the coordinate system of object motion is established and the object pose is finally solved. The growing image processing error causes greater pose estimation errors as the measurement range increases; through this coordinate system, these pose estimation errors can be decreased.

2. The Solving of Feature Point Coordinates in the Camera Coordinate System

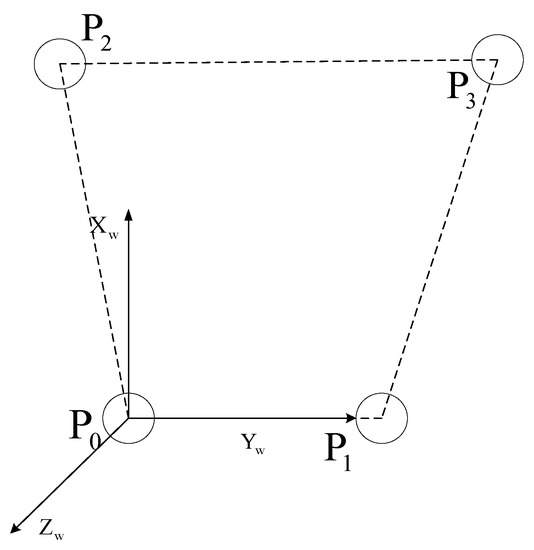

The target pattern with four coplanar points is designed for pose estimation as shown in Figure 1. P0, P1, P2 and P3 form a trapezium. P0P1 is parallel to P2P3.

Figure 1.

The measurement target with four points.

P0 is set as the origin of coordinate system. The connecting line of P0 and P1 is Y axis. is Z axis. Then X axis is . In this way, the world coordinate system (target coordinate system) is constructed.

To achieve the solution of target position, the coordinates of points in the camera coordinate system need to be solved first. is the camera coordinate system. is the world coordinate system. The coordinates of each point in the camera coordinate system is . The coordinates of each point in the world coordinate system are . is known before solving the pose. The corresponding ideal image coordinates are . The relationship of and could be described as

where (u0, v0) is the center pixel of the computer image, sx is the uncertainty image factor, dx and dy are center to center distances between pixels in the row and column directions respectively, f is the focal length, and is the projection depth of Pi.
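As an illustration of the projection relation in Equation (1), the following is a minimal Python sketch that assumes an ideal, distortion-free pinhole model. The function name `project_point` and the way sx scales the horizontal axis are assumptions made for this sketch, not details confirmed by the text.

```python
def project_point(pc, f, dx, dy, sx, u0, v0):
    """Project a camera-frame point (xc, yc, zc) to pixel coordinates (u, v).

    Assumes an ideal pinhole model without lens distortion. Applying sx to
    the horizontal scale is an assumption of this sketch.
    """
    xc, yc, zc = pc
    if zc <= 0:
        raise ValueError("the point must lie in front of the camera (zc > 0)")
    u = sx * f / dx * xc / zc + u0  # column coordinate in pixels
    v = f / dy * yc / zc + v0       # row coordinate in pixels
    return u, v
```

With f = 25 mm and dx = dy = 0.006 mm (the lens and pixel size reported in Section 4), a point on the optical axis maps to the principal point (u0, v0), whatever its depth.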

The coordinates of points in the camera coordinate system could be obtained by solving . Since P0P1 is parallel to P2P3, two linear constraints are introduced. Then is solved (The solving process is in the Appendix).

The coordinates of points in the camera coordinate system are obtained by . So, the vector from the optical center Oc to each point can be calculated through Equation (3).

The length between the feature points could be calculated through Equation (4).

In order to maintain the planarity of the four points, the coplanar constraint (CO) expressed as Equation (5) should also be considered.
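The quantities behind Equations (3) to (5) can be illustrated with a short Python sketch. It assumes the same ideal pinhole model as Equation (1), and it expresses coplanarity as a vanishing scalar triple product, which is one common formulation and may differ from the exact form of Equation (5). All function names are hypothetical.

```python
import math

def back_project(u, v, depth, f, dx, dy, sx, u0, v0):
    """Vector from the optical center to the point seen at pixel (u, v)
    with the given projection depth (assumed pinhole model, no distortion)."""
    x = (u - u0) * dx / (sx * f) * depth
    y = (v - v0) * dy / f * depth
    return (x, y, depth)

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def length(p, q):
    """Euclidean distance between two feature points."""
    d = sub(p, q)
    return math.sqrt(dot(d, d))

def coplanarity_residual(p0, p1, p2, p3):
    """Scalar triple product of the edges from p0; zero when coplanar."""
    return dot(sub(p1, p0), cross(sub(p2, p0), sub(p3, p0)))
```

For a flat trapezium the residual is zero, and it grows as soon as one vertex leaves the plane, which is why it can serve as a penalty term during refinement.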

Through Equation (6), the Levenberg-Marquardt optimization method is then used to solve . The value of obtained in Equation (2) is used as the initial value to ensure the accuracy and convergence speed of the algorithm.
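A minimal, dependency-free sketch of this refinement step follows. The cost function of Equation (6) is not reproduced in this text, so the residuals used here (differences between computed and known inter-point distances, plus a scaled scalar triple product for coplanarity) are an assumption, as are the function names, the damping schedule, and the finite-difference Jacobian. The unknowns are the four projection depths along known viewing rays.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            k = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= k * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = m[i][n] - sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = s / m[i][i]
    return x

def make_residuals(rays, dists):
    """rays: unit-depth viewing rays (x/z, y/z, 1); dists: {(i, j): length}."""
    scale = sum(dists.values()) / len(dists)

    def residuals(depths):
        pts = [[z * c for c in ray] for z, ray in zip(depths, rays)]
        res = [math.dist(pts[i], pts[j]) - d for (i, j), d in dists.items()]
        a, b, c = ([p[k] - pts[0][k] for k in range(3)] for p in pts[1:])
        triple = (a[0] * (b[1] * c[2] - b[2] * c[1])
                  - a[1] * (b[0] * c[2] - b[2] * c[0])
                  + a[2] * (b[0] * c[1] - b[1] * c[0]))
        res.append(triple / scale ** 2)  # coplanarity term, scaled to a length
        return res

    return residuals

def levenberg_marquardt(fun, x0, iters=50, lam=1e-3, eps=1e-4):
    """Basic Levenberg-Marquardt loop with a forward-difference Jacobian."""
    x = list(x0)
    r = fun(x)
    cost = sum(v * v for v in r)
    n = len(x)
    for _ in range(iters):
        jac = []  # jac[k][i] = d r_i / d x_k
        for k in range(n):
            xp = x[:]
            xp[k] += eps
            jac.append([(a - b) / eps for a, b in zip(fun(xp), r)])
        jtj = [[sum(p * q for p, q in zip(jac[a], jac[b])) for b in range(n)]
               for a in range(n)]
        jtr = [sum(p * q for p, q in zip(jac[a], r)) for a in range(n)]
        damped = [[jtj[a][b] + (lam * jtj[a][a] if a == b else 0.0)
                   for b in range(n)] for a in range(n)]
        step = solve_linear(damped, [-g for g in jtr])
        x_new = [xi + si for xi, si in zip(x, step)]
        r_new = fun(x_new)
        cost_new = sum(v * v for v in r_new)
        if cost_new < cost:   # accept the step and trust the model more
            x, r, cost = x_new, r_new, cost_new
            lam *= 0.1
        else:                 # reject the step and increase the damping
            lam *= 10.0
    return x
```

Starting the loop from the analytical depths, as the text describes, keeps the first iterate close to the true solution, so only a few accepted steps should be needed. In practice a tested optimizer from a numerical library would normally replace this hand-written loop.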

3. The Solving of Object Pose

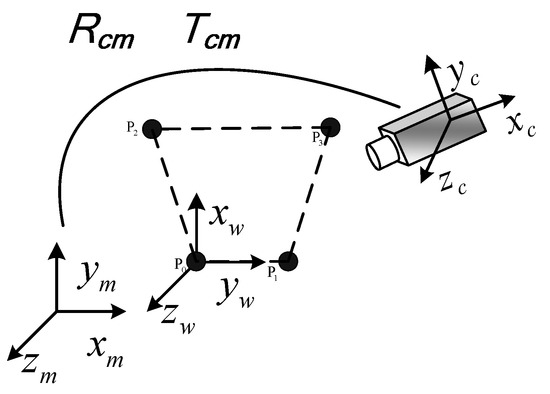

The feature point coordinates in the camera coordinate system , , , are calculated through . The next step is to solve the object pose. As shown in Figure 2, is the rotation and translation coordinate system of the target. The three rotational degrees of freedom are yaw (ym axis, angle α), pitch (xm axis, angle β), and roll (zm axis, angle γ).

Figure 2.

The rotation and translation coordinate system.

is established according to the following steps: (1) The target rotates around ym axis. The images of the target are captured in the locations of different rotation angles. N1 groups of spatial coordinates can be obtained. With the least squares method, the N1 groups of spatial coordinates are used to fit a plane: Ax + By + Cz + D = 0. The vector of the ym axis is,

(2) The target rotates around xm axis. The images of target are captured in the locations of different rotation angles. N2 groups of spatial coordinates can be obtained. With the least squares method, the N2 groups of spatial coordinates are used to fit a plane: Ex + Fy + Gz + H = 0. The vector of the xm axis is,

(3) The point sets obtained in steps (1) and (2) share one rotation center. A sphere-fitting is adopted to describe the center. According to the following sphere-fitting equation, the sphere center could be calculated. The sphere center is the rotation center (the origin of ).

(xom, yom, zom) is the sphere center and r is the sphere radius.

(4) The vector of the zm axis is . The vector of the ym axis is then adjusted by .
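Steps (1), (2), and (4) can be sketched in Python as follows. The plane normal is taken as the direction that minimizes the sum of squared orthogonal distances (a total least squares fit, which may differ from the least squares variant used by the authors), applied to the trajectory of one feature point. The frame construction assumes zm = xm × ym followed by ym = zm × xm; the exact expressions are not reproduced in this text, so this ordering is an assumption. All function names are hypothetical, and the sign of the fitted normal is arbitrary.

```python
import math

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

def fit_plane_normal(points, iters=50):
    """Unit normal (A, B, C) of the best-fit plane Ax + By + Cz + D = 0."""
    n = len(points)
    c = [sum(p[k] for p in points) / n for k in range(3)]
    q = [[p[k] - c[k] for k in range(3)] for p in points]
    # 3x3 scatter matrix of the centered points
    s = [[sum(v[a] * v[b] for v in q) for b in range(3)] for a in range(3)]
    # Cofactor matrix of s: its dominant eigenvector is the eigenvector of s
    # with the smallest eigenvalue, i.e. the plane normal.
    cof = [cross(s[1], s[2]), cross(s[2], s[0]), cross(s[0], s[1])]
    v = [0.3, 0.5, 0.8]  # arbitrary start vector for the power iteration
    for _ in range(iters):
        v = unit([dot(row, v) for row in cof])
    return v

def build_frame(x_axis, y_axis):
    """Orthonormal axes (xm, ym, zm) from two measured, nearly
    perpendicular axis directions."""
    x = unit(x_axis)
    z = unit(cross(x, y_axis))
    y = cross(z, x)  # re-derived so that ym is exactly perpendicular to xm
    return x, y, z
```

Re-deriving ym from zm and xm removes the small non-orthogonality that two independently fitted plane normals will generally have.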

As is established, the rotation and translation matrix from to is obtained. The coordinates of feature points in are shown below.

The coordinates of feature points in are represented with .
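The sphere fitting of step (3) and the change of coordinates into the rotation and translation coordinate system can be sketched as below. The sphere equation is linearized as x² + y² + z² = 2ax + 2by + 2cz + k with k = r² − a² − b² − c², which is a standard algebraic fit and not necessarily the authors' solver. The transformation assumes that the three axis vectors are expressed in the camera coordinate system and that the sphere center is the origin. Function names are hypothetical.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            k = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= k * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = m[i][n] - sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = s / m[i][i]
    return x

def fit_sphere(points):
    """Return ((xom, yom, zom), r) of the algebraic least-squares sphere."""
    n = len(points)
    mean = [sum(p[k] for p in points) / n for k in range(3)]
    rows, rhs = [], []
    for p in points:
        q = [p[k] - mean[k] for k in range(3)]  # centering improves conditioning
        rows.append([2.0 * q[0], 2.0 * q[1], 2.0 * q[2], 1.0])
        rhs.append(q[0] ** 2 + q[1] ** 2 + q[2] ** 2)
    ata = [[sum(r[a] * r[b] for r in rows) for b in range(4)] for a in range(4)]
    atb = [sum(r[a] * y for r, y in zip(rows, rhs)) for a in range(4)]
    a, b, c, k = solve_linear(ata, atb)
    radius = math.sqrt(k + a * a + b * b + c * c)
    return (a + mean[0], b + mean[1], c + mean[2]), radius

def to_motion_frame(p, origin, axes):
    """Coordinates of camera-frame point p in the frame with the given
    origin and orthonormal axes (xm, ym, zm)."""
    d = [p[k] - origin[k] for k in range(3)]
    return [sum(axis[k] * d[k] for k in range(3)) for axis in axes]
```

The input to `fit_sphere` would be the positions of one feature point collected in steps (1) and (2); the points must not all lie in one plane, otherwise the normal equations become singular.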

The positioning accuracy of the feature points during image processing has a direct impact on the pose estimation accuracy. Circular markers are convenient to identify automatically, and many algorithms are available for locating their centers. In this paper, the center point of each circular marker is therefore selected as the feature point. Imaging is a perspective projection, under which an object appears larger when near and smaller when far. As a result, the perspective projection of the circular marker’s center and the center of the marker’s projected image (usually an ellipse) do not coincide, especially when the measurement range is greater [23]. This inconsistency causes image processing errors, which may in turn result in pose estimation errors (the ideal image coordinates in Equation (1) are obtained through these image processing steps). In order to improve the measurement accuracy, the following method is introduced.

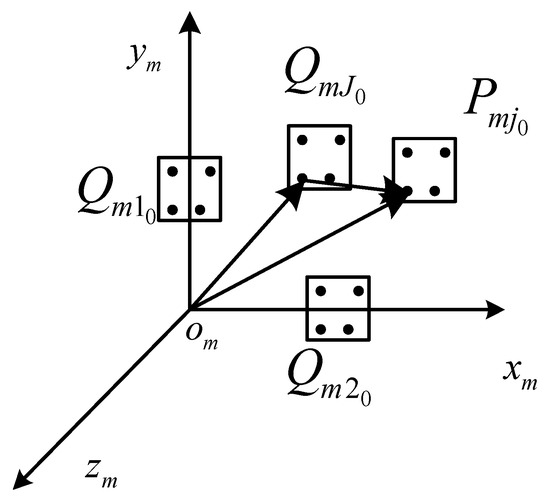

The point sets obtained in Section 2 represent the known typical position of a target in the moving space. It means N typical positions of a target. The pose of a target in the typical position is known. According to Equation (10), N are obtained. Then the coordinates of in in the typical position j are represented with . represents the coordinates of feature points in in the location k. The nearest to is as shown in Figure 3. is calculated as
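The lookup of the nearest typical position can be sketched as follows. The selection criterion of Equation (11) is not reproduced in this text, so the sketch assumes that "nearest" means the smallest summed squared Euclidean distance over the four feature points. The function name and data layout are hypothetical.

```python
import math

def nearest_typical_position(current, typical):
    """Index j of the typical position whose feature points are closest.

    current: list of four (x, y, z) feature points at the current location.
    typical: list of N such four-point lists, one per typical position.
    """
    def cost(candidate):
        return sum(math.dist(p, q) ** 2 for p, q in zip(current, candidate))

    return min(range(len(typical)), key=lambda j: cost(typical[j]))
```

Because the pose at each typical position is known, the current pose only needs to be expressed relative to the selected neighbor.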

Figure 3.

The solving of the object pose.

Each pose vector in the moving space corresponds to three angles. They are α (yaw angle), β (pitch angle), and γ (roll angle). They could be calculated as shown in Equation (12).

, and are known quantities. , and could be calculated according to Equation (13). In this way, image processing error caused by the inconsistency mentioned above is significantly reduced, especially when the measurement range is greater. The pose measurement accuracy is then improved. The target pose could be represented with , , and .

4. Experiment Results

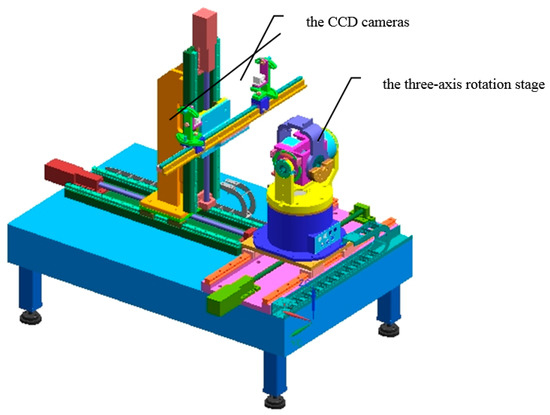

The experimental system, which consists of a target, a three-axis rotation stage, and two CCD cameras, is shown in Figure 4. The rotation ranges of the stage about the yaw, pitch, and roll axes are ±160°, ±90°, and ±60°, respectively.

Figure 4.

The measurement system.

The camera used in this paper is a Teli CSB4000F-20 (Teli, Tokyo, Japan) with a resolution of 2008 (h) × 2044 (v) and a pixel size of 0.006 × 0.006 mm². The lens is a 25 mm Pentax (Tokyo, Japan). The positioning accuracy of the rotation stage is within 20″. The calibration results of the camera intrinsic parameters are shown in Table 1 [24]. k1 and k2 are the radial distortion coefficients; p1 and p2 are the tangential distortion coefficients.

Table 1.

Calibration results.

The images are captured with a single CCD camera in different locations. The feature point coordinates in the camera coordinate system are obtained in the current location. Then the rotation and translation coordinate system of target is established. , and which could be used to represent the target pose are solved.

The target pose measurement experiment includes three parts: computer simulation experiments, real image experiments for accuracy, and real image comparative experiments with other methods.

4.1. Computer Simulation Experiments

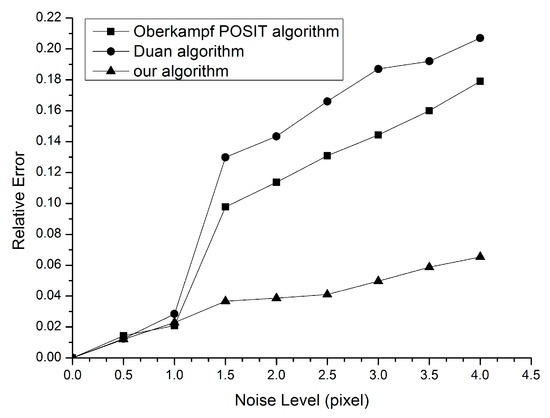

To validate the accuracy and noise immunity of the proposed algorithm, it is compared with the Oberkampf POSIT algorithm [11,12,13] and the Duan algorithm [10] in computer simulation experiments. In these experiments, the pinhole imaging model of a camera is simulated: the points are transformed with perspective projection and the simulated image coordinates of the points are acquired. Gaussian noise with a level from 0 to 4 pixels is added to the image coordinates of the points. The relative errors of the poses estimated using the proposed algorithm, the Duan algorithm, and the Oberkampf POSIT algorithm are shown in Figure 5.
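The noise injection used in such a simulation can be sketched as below. The sketch assumes that the noise level denotes the standard deviation (in pixels) of zero-mean Gaussian noise added independently to each image coordinate, and that the relative error is the absolute error divided by the magnitude of the true value. Neither definition is spelled out in the text, and the function names are hypothetical.

```python
import random

def add_gaussian_noise(image_points, sigma, rng=None):
    """Return a copy of the (u, v) image points with zero-mean Gaussian
    noise of standard deviation sigma (pixels) added to each coordinate."""
    if rng is None:
        rng = random.Random()
    return [(u + rng.gauss(0.0, sigma), v + rng.gauss(0.0, sigma))
            for u, v in image_points]

def relative_error(estimated, true_value):
    """Absolute error divided by the magnitude of the true value."""
    return abs(estimated - true_value) / abs(true_value)
```

Passing a seeded `random.Random` instance makes a simulation run repeatable, which helps when comparing several algorithms on identical noisy inputs.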

Figure 5.

The simulation experiment results.

It can be seen that the errors are reduced after optimization and that they increase with the noise level. The proposed method produces better results than the other two methods, especially when the noise level is greater than one pixel. In addition, the errors stay at almost the same level under lower noise disturbance. This phenomenon can be explained as follows. The Duan algorithm has no iterative solving process, which leads to a relatively high error. The Oberkampf POSIT method takes account of neither the coplanarity constraint nor the initial value of the iteration process. As the noise level increases, the dominant error source shifts from the initial value of the iteration process to coplanarity. This results in a higher error than that of the proposed algorithm.

4.2. The Measurement Experiments for Accuracy

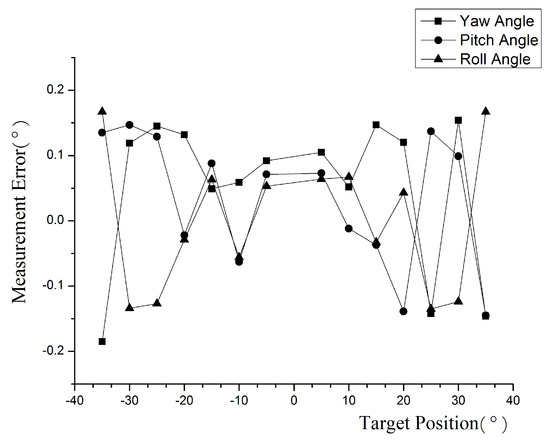

The measurement range of the yaw, pitch, and roll angles is set to −35°~35°. The measurement errors are shown in Figure 6. The measurement error is the absolute difference between the estimated angle and the true angle.

Figure 6.

The measurement error of yaw, pitch, and roll angle.

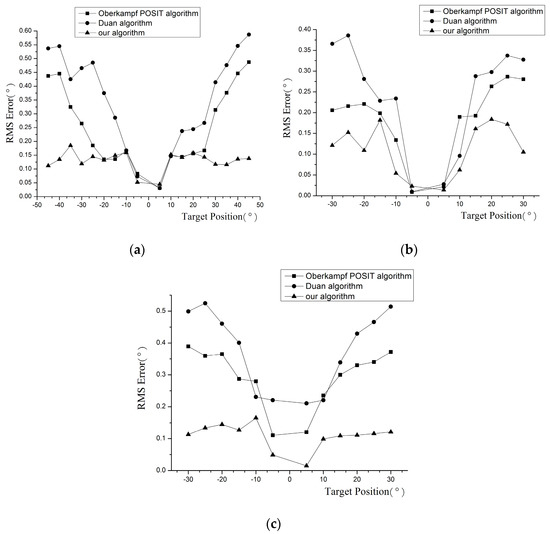

4.3. The Comparative Experiments for Accuracy

The algorithm proposed in this paper, the Duan algorithm, and the Oberkampf POSIT algorithm are used to calculate target poses. The root mean square (RMS) errors are displayed in Figure 7. Each point in the figure is the RMS error over multiple measurements. Compared with the Duan algorithm, our method yields a smaller RMS error at larger rotation angles. The experimental results demonstrate that the analytical pose measurement process is sensitive to observation noise. This error is reduced by the iterative process in our method, which is also given a good initial value at the start of the iteration to ensure pose estimation accuracy. As for the Oberkampf POSIT algorithm, the experimental results demonstrate that the image processing error mentioned in Section 3 is present in the pose measurement process. With the method in Section 3, this error can be significantly reduced, especially when the measurement range is greater.

Figure 7.

The RMS error of rotation angles: (a) Yaw angle; (b) Pitch angle; (c) Roll angle.

Comparing the results of our method with those of the Oberkampf POSIT and Duan algorithms shows that the measurement accuracy of our method is higher throughout the whole moving space.
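The two error measures used in Sections 4.2 and 4.3 can be written compactly. The sketch below assumes that the estimated and true angles are given as equally long lists in the same unit; the function names are hypothetical.

```python
import math

def absolute_errors(estimated, true_values):
    """Absolute difference between each estimated and true value."""
    return [abs(e - t) for e, t in zip(estimated, true_values)]

def rms_error(estimated, true_values):
    """Root mean square of the differences over repeated measurements."""
    errs = absolute_errors(estimated, true_values)
    return math.sqrt(sum(err * err for err in errs) / len(errs))
```

The RMS value weights large deviations more heavily than the mean absolute error does, so a single poor measurement is clearly visible in it.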

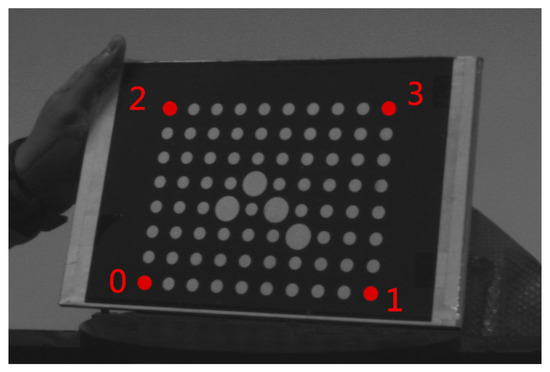

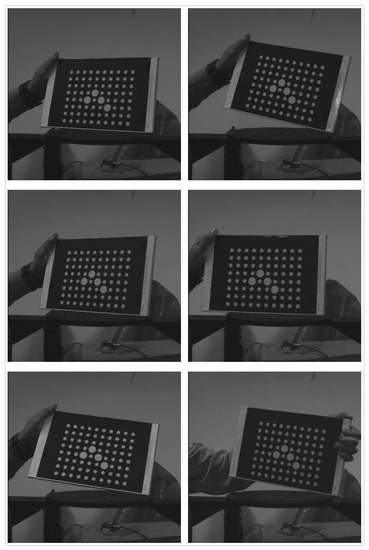

For real applications, we first captured images of the camera calibration board, as shown in Figure 8. The four corner circular markers (marked in red) of the calibration board are used to form a trapezium. The center points of the four circular markers are extracted from the images. Then the lengths of the four sides of the trapezium are calculated with the algorithm proposed in this paper, the Duan algorithm, and the Oberkampf POSIT algorithm. The measurement errors are shown in Table 2. Figure 9 shows the image sets of the calibration board. D01 is the distance between P0 and P1, D02 is the distance between P0 and P2, D23 is the distance between P2 and P3, and D13 is the distance between P1 and P3. The measurement error is the absolute difference between the estimated length and the true length. It can be seen from Table 2 that the results of our algorithm are closer to the real values, and the measurement error of our method is less than 0.2 mm, which indicates that the proposed algorithm is more effective.

Figure 8.

The image of P0, P1, P2, and P3 on the calibration board.

Table 2.

The measurement errors of the calibration board.

Figure 9.

The image sets of the calibration board.

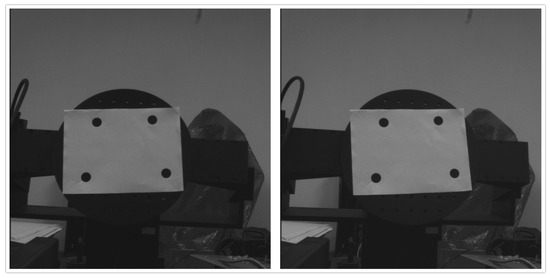

We then tested the proposed algorithm by experimentally measuring the yaw, pitch, and roll angles of a rotation stage. Figure 10 shows the image sets of the rotation stage with feature points. Using the target on the rotation stage shown in Figure 10, its pose is calculated with the algorithm proposed in this paper, the Duan algorithm, and the Oberkampf POSIT algorithm. The measurement errors are shown in Table 3. The measurement error is the absolute difference between the estimated angle and the true angle. It can be seen from Table 3 that the results of our algorithm are closer to the real values, and the measurement error of our method is less than 0.2°. Using the same information extracted from the images, the proposed algorithm has an advantage in identifying the pose of the rotation stage.

Figure 10.

The image of the rotation stage with feature points.

Table 3.

The measurement errors of the rotation stage with feature points.

Finally, we estimated the head pose. A pose measurement system based on inertial technology is mounted on a helmet to calculate the head pose, as shown in Figure 11. Its results are taken as the real values. The corresponding images of the head are captured and shown in Figure 12. The vertices of the trapezium are the outer corners of the two eyes and the mouth. The image points of the four vertices in each image are located manually. The head pose is calculated using the algorithm proposed in this paper, the Duan algorithm, and the Oberkampf POSIT algorithm. The first image (Frame 0) in Figure 12 is set as the initial position (zero position). The angles between the current positions (Frame 1, Frame 2, Frame 3, and Frame 4) and the initial position (Frame 0) are calculated. The measurement errors are shown in Table 4. The measurement error is the absolute difference between the estimated angle and the real angle.

Figure 11.

The motion capture system based on inertial technology.

Figure 12.

The image sets of head pose.

Table 4.

The measurement errors of head pose.

It can be seen from Table 4 that the results of our algorithm are closer to the real values. The rough localization of the control points (the vertices of the trapezium) in the image leads to an increase in the measurement errors. However, our method decreases these measurement errors compared with the other two methods. The errors of yaw, pitch, and roll are not independent: they influence each other because the three angles together constitute the rotation matrix. Consequently, when the head rotates about all the axes, the measurement errors increase.

The distance between the object and the camera is limited by the field of view. The pose of the target is calculated with the camera in different locations, and the corresponding experimental results (RMS errors) are shown in Table 5. In Position 1 and Position 2, the camera is near the target and the target is out of the field of view of the camera. In Position 5 and Position 6, the camera is far from the target and the target is out of the field of view of the camera. In Position 3 and Position 4, the target is within the field of view of the camera. The results show that if the object is out of the field of view, the measurement accuracy is lower. If the object is within the field of view, the measurement accuracy is higher and is little influenced by the distance between the object and the camera. So, in order to obtain a higher accuracy, it is necessary to ensure that the object is within the field of view of the camera.

Table 5.

The RMS error of cameras in different locations.

5. Conclusions

A robust and accurate vision-based pose estimation algorithm based on four coplanar feature points is presented in this paper. By combining the advantages of the analytical methods and the iterative methods, the iterative process is given a preferable initial value acquired independently by an analytical method. At the same time, the anti-noise ability is strengthened and the result is more stable. The pose estimation errors depend on the feature extraction accuracy. When the measurement range is greater, the image processing errors become greater. In the algorithm proposed, the coordinate system of object motion is established to solve the object pose. In this way, the image processing error which may result in the pose estimation errors could be reduced.

Acknowledgments

This research was supported by the National Natural Science Foundation of China (Nos. 51605332 and 11302149), the Innovation Team Training Plan of Tianjin Universities and Colleges (Grant No. TD12-5043), and the Tianjin application foundation and advanced technology research program (the youth fund project), No. 15JCQNJC02400.

Author Contributions

Zimiao Zhang conceived and designed the experiments. Shihai Zhang and Qiu Li analyzed the data.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of the Solving of

ε is the distance ratio of P1P0 and P3P2.

The transformation matrix from the world coordinates system to the camera coordinates system is R and T. could be expressed as , and then could be represented with , , . The first linear constraint is shown in Equation (A2).

Given , according to Equations (1) and (A2), Equation (A3) is deduced.

Both sides of Equation (A3) are multiplied by . Given , Equation (A4) is finally obtained, which represents the second linear constraint.

could also be expressed as Equation (A5) according to Equation (A2).

According to both Equations (A4) and (A5), is solved as shown in Equation (2) in the paper.

References

- Kim, S.J.; Kim, B.K. Dynamic ultrasonic hybrid localization system for indoor mobile robots. IEEE Trans. Ind. Electron. 2013, 60, 4562–4573.

- Mao, W.; Eke, F. A survey of the dynamics and control of aircraft during aerial refueling. Nonlinear Dyn. Syst. Theory 2008, 8, 375–388.

- Valenti, R.; Sebe, N.; Gevers, T. Combining head pose and eye location information for gaze estimation. IEEE Trans. Image Process. 2012, 21, 802–815.

- Murphy-Chutorian, E.; Trivedi, M.M. Head pose estimation in computer vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 31, 607–626.

- Schall, G.; Wagner, D.; Reitmayr, G.; Taichmann, E.; Wieser, M.; Schmalstieg, D.; Hofmann-Wellenhof, B. Global pose estimation using multi-sensor fusion for outdoor augmented reality. In Proceedings of the 8th International Symposium on Mixed and Augmented Reality (ISMAR 2009), Santa Barbara, CA, USA, 19–22 October 2009; pp. 153–162.

- Hu, Z.Y.; Wu, F.C. A note on the number solution of the non-coplanar P4P problem. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 550–555.

- Lepetit, V.; Moreno-Noguer, F.; Fua, P. EPnP: An accurate O(n) solution to the PnP problem. Int. J. Comput. Vis. 2009, 81, 155–166.

- Tang, J.; Chen, W.; Wang, J. A novel linear algorithm for P5P problem. Appl. Math. Comput. 2008, 205, 628–634.

- Ansar, A.; Daniilidis, K. Linear pose estimation from points or lines. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 578–589.

- Duan, F.Q.; Wu, F.C.; Hu, Z.Y. Pose determination and plane measurement using a trapezium. Pattern Recognit. Lett. 2008, 29, 223–231.

- DeMenthon, D.F.; Davis, L.S. Model-based object pose in 25 lines of code. Int. J. Comput. Vis. 1995, 15, 123–141.

- Gramegna, T.; Venturino, L.; Cicirelli, G.; Attolico, G.; Distante, A. Optimization of the POSIT algorithm for indoor autonomous navigation. Robot. Auton. Syst. 2004, 48, 145–162.

- Oberkampf, D.; DeMenthon, D.F.; Davis, L.S. Iterative pose estimation using coplanar points. In Proceedings of the 1993 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’93), New York, NY, USA, 15–17 June 1993; pp. 626–627.

- Zhang, S.; Liu, F.; Cao, B.; He, L. Monocular vision-based two-stage iterative algorithm for relative position and attitude estimation of docking spacecraft. Chin. J. Aeronaut. 2010, 23, 204–210.

- Zhang, S.; Cao, X.; Zhang, F.; He, L. Monocular vision-based iterative pose estimation algorithm from corresponding feature points. Sci. Chin. Inf. Sci. 2010, 53, 1682–1696.

- Wang, P.; Xiao, X.; Zhang, Z.; Sun, C. Study on the position and orientation measurement method with monocular vision system. Chin. Opt. Lett. 2010, 8, 55–58.

- Liu, M.L.; Wong, K.H. Pose estimation using four corresponding points. Pattern Recognit. Lett. 1999, 20, 69–74.

- Zhang, S.; Ding, Y.; Hao, K.; Zhang, D. An efficient two-step solution for vision-based pose determination of a parallel manipulator. Robot. Comput. Integr. Manuf. 2012, 28, 182–189.

- Fan, B.; Du, Y.; Cong, Y. Robust and accurate online pose estimation algorithm via efficient three-dimensional collinearity model. IET Comput. Vis. 2013, 7, 382–393.

- Pan, H.; Huang, J.; Qin, S. High accurate estimation of relative pose of cooperative space targets based on measurement of monocular vision imaging. Optik 2014, 125, 3127–3133.

- Hmam, H.; Kim, J. Optimal non-iterative pose estimation via convex relaxation. Image Vis. Comput. 2010, 28, 1515–1523.

- Ons, B.; Verstraelen, L.; Wagemans, J. A computational model of visual anisotropy. PLoS ONE 2011, 6, e21091.

- Heikkila, J.; Silvén, O. A four-step camera calibration procedure with implicit image correction. In Proceedings of the 1997 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Juan, PR, USA, 17–19 June 1997; pp. 1106–1112.

- Zhang, Z. Flexible camera calibration by viewing a plane from unknown orientations. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 1, pp. 666–673.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).