Abstract

To recognize the user’s motion intention, brain-machine interfaces (BMI) usually decode movements from cortical activity to control exoskeletons and neuroprostheses for daily activities. The aim of this paper is to investigate whether self-induced variations of the electroencephalogram (EEG) can be useful as control signals for an upper-limb exoskeleton developed by us. A BMI based on event-related desynchronization/synchronization (ERD/ERS) is proposed. In the decoder-training phase, we investigate the offline classification performance of left versus right hand and left hand versus both feet by using motor execution (ME) or motor imagery (MI). The results indicate that the accuracies of ME sessions are higher than those of MI sessions, and that the left hand versus both feet paradigm achieves better classification performance; this paradigm is therefore used in the online-control phase. In the online-control phase, the trained decoder is tested in two scenarios (with and without wearing the exoskeleton). The MI and ME sessions wearing the exoskeleton achieve mean classification accuracies of 84.29% ± 2.11% and 87.37% ± 3.06%, respectively. The present study demonstrates that the proposed BMI can effectively control the upper-limb exoskeleton, and provides a practical method based on non-invasive EEG signals associated with natural human behavior for clinical applications.

1. Background

The upper-limb exoskeleton is designed with the goal of restoring functions and assisting activities of daily living (ADL) for elderly, disabled and injured individuals [1,2,3,4]. Electromyography (EMG) and force sensors are two widely used methods for controlling such exoskeletons based on the user’s motion intention [5,6,7,8,9,10,11,12]. However, people with severe amputation or paralysis cannot generate sufficient muscle signals or movements, so they cannot provide complete EMG and force signals, which impairs the estimation of motion intention. Brain-machine interfaces (BMI) based on the electroencephalogram (EEG) have received considerable interest due to their potential [13]. A non-invasive recording procedure is safe and easy to apply, and it is potentially applicable to almost all people, including those with severe amputation or paralysis.

Studies over many years have found that motor execution (ME) and motor imagery (MI) can change the neuronal activity in the primary sensorimotor areas [14,15,16]. When humans execute or imagine the movement of a unilateral limb, the power of mu and beta rhythms decreases in the sensorimotor area of the contralateral hemisphere and increases in that of the ipsilateral hemisphere [17]. The former case is called event-related desynchronization (ERD), and the latter event-related synchronization (ERS) [18,19]. The ERD/ERS patterns can be utilized as important features in the discrimination between right hand and left hand movement, and between hand and foot movement [17]. In the frequency bands between 9 and 14 Hz and between 18 and 26 Hz of EEG signals, the ERD/ERS patterns provide the best discrimination between left and right hand movement imagination, and the accuracy of online classification is more than 80% [20]. For discrimination between hand and foot movement, the online classification accuracy is as high as 93% [21]. More recently, researchers have focused on using ERD/ERS patterns to control EEG-based BMI applications [22,23,24]. Ang et al. [25] classified the ERD/ERS patterns as “go” and “rest” using the Common Spatial Pattern algorithm to trigger a 2 degree-of-freedom MIT-Manus robot developed by an MIT research group for reaching tasks. Sarac et al. [26] presented a systematic approach that enables online modification/adaptation of robot-assisted rehabilitation exercises by continuously monitoring intention levels of patients utilizing an EEG-based BCI. Linear Discriminant Analysis (LDA) was used to classify ERS/ERD patterns associated with MI. Pfurtscheller et al. [27] used brain oscillations (ERS) to control an electrically driven hand orthosis (open or close) for restoring the hand grasp function. The subjects imagined left versus right hand movement, left and right hand versus no specific imagination, and both feet versus right hand, and achieved an average classification accuracy of approximately 65%, 75% and 95%, respectively. Bai et al. [28] proposed a new ERD/ERS-based BCI method to control a two-dimensional cursor movement, and found the online classification accuracies of the EEG activity with ME/MI were >90%/∼80% for healthy volunteers and >80%/∼80% for the stroke patient. These studies mainly show the feasibility of BMI-integrated robots and orthoses. However, few studies have focused on upper-limb exoskeleton control using ERD/ERS patterns.

This paper aims to investigate whether self-induced variations of the EEG can be useful as control signals for an upper-limb exoskeleton developed by us. We proposed a BMI based on ERD/ERS, and tested this approach in a closed-loop experiment with increasing real-life applicability. The experiment consists of two phases: the decoder-training phase and the online-control phase. In the decoder-training phase, subjects performed ME or MI under two paradigms, i.e., left versus right hand movement and left hand versus both feet movement. We investigated nine classification strategies (three classifiers × three train–test ratios) in order to select the classifier and train–test ratio that gave the best classification performance. Then, based on the best classification strategy, the paradigm achieving the higher classification accuracy was chosen for the online-control phase. In the online-control phase, the trained decoder was tested in two scenarios with visual feedback. In the first scenario, subjects not wearing the exoskeleton (it was hung up beside the subject) controlled it by using ME or MI; in the second scenario, subjects wearing the exoskeleton on the right arm controlled it by using ME or MI.

2. Method

2.1. Subjects and Data Acquisition

Four able-bodied subjects (three males and one female, age range: 27–31 years old) participated in this study. They were all right handed according to the Edinburgh inventory [29], and they all had a good MI ability according to the testing method from [30]. The experiment was carried out in accordance with the approved guidelines. The experimental protocol was reviewed and approved by the human ethical clearance committee of Zhejiang University. Informed written consent was obtained from all subjects that volunteered to perform this experiment.

EEG data were recorded from 28 active electrodes (FC5, FC3, FC1, FCz, FC2, FC4, FC6, C5, C3, C1, Cz, C2, C4, C6, CP5, CP3, CP1, CPz, CP2, CP4, CP6, P5, P3, P1, Pz, P2, P4, P6) attached to an EEG cap in conjunction with the ActiveTwo 64-channel EEG system (BioSemi B.V., Amsterdam, The Netherlands). This system replaces the ground electrodes in conventional systems with two separate electrodes (CMS and DRL) [31]. The reference electrode was placed on the left mastoid. EEG was digitized at 512 Hz, power-line notch filtered at 50 Hz, and band-pass filtered between 0.5 and 100 Hz. Before electrode attachment, alcohol was used to clean the skin, and conductive gel was used to reduce electrode resistance.

2.2. Experiment Procedure

The experiment consists of two phases: the decoder-training phase and the online-control phase. During recording, a quiet environment with dim light was provided to maintain the subjects’ attention level. They were asked to keep all muscles relaxed; in addition, they were also instructed to avoid eye movements, blinks, body adjustments, swallowing or other movements during the visual cue onset. Cues were presented on a computer screen, and occupied about of the visual angle.

2.2.1. Decoder-Training Phase

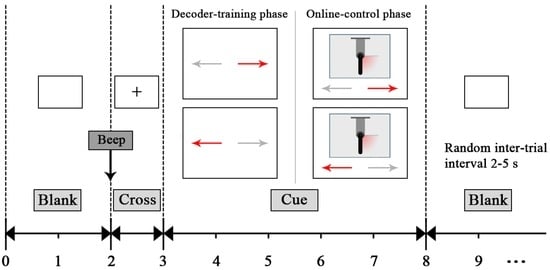

During the decoder-training phase, subjects were seated in a comfortable chair with their forearms semi-extended on the desk, as shown in Figure 1a. Subjects were instructed to perform ME or MI under two paradigms, i.e., left versus right hand movement and left hand versus both feet movement. Each subject completed 480 trials in total, i.e., four sessions of 120 randomly ordered trials each (see Table 1). As shown in Figure 2, each trial started with the presentation of an acoustical warning tone and a cross (second 2). One second later, one of two cues (“←” and “→”) was chosen at random to indicate the movement to be executed or imagined: the left arrow indicated left wrist extension, and the right arrow indicated right wrist extension for Sessions 1 and 2 or both feet dorsiflexion for Sessions 3 and 4. The cue was presented visually as a left/right arrow in the middle of the computer screen. Subjects had to perform the ME or MI for 5 s, until the screen content was erased (second 8). After a short pause (random duration between 2 s and 5 s), the next trial started. We asked the subjects to relax their muscles and pay attention to the visual cue. We investigated the two paradigms and chose the one achieving the better classification performance for the online-control phase.

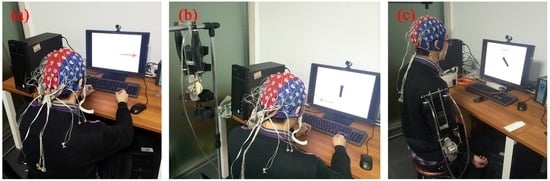

Figure 1.

Experimental setup. (a) in the decoder-training phase, subjects were instructed to perform ME or MI under two paradigms, i.e., left versus right hand movement and left hand versus both feet movement. The left arrow indicated left wrist extension, and the right arrow indicated right wrist extension for Sessions 1 and 2 or both feet dorsiflexion for Sessions 3 and 4. (b,c) in the online-control phase, the trained decoder using the selected paradigm was tested in two scenarios with a visual feedback. In the first scenario, subjects without wearing the exoskeleton (it was hung up beside the subject) controlled it by using ME or MI; in the second scenario, subjects wearing the exoskeleton on the right arm controlled it by using ME or MI.

Table 1.

The descriptions of four sessions in the decoder-training phase.

Figure 2.

Timing of one trial used in the experiment. Each trial started with the presentation of an acoustical warning tone and a cross (second 2). One second later, one of two cues (“←” and “→”) was chosen at random to indicate the movement to be executed or imagined. The cue was presented visually as a left/right arrow in the middle of the computer screen. Subjects had to perform the ME or MI for five seconds, until the screen content was erased (second 8). After a short pause (random duration between two and five seconds), the next trial started.

2.2.2. Online-Control Phase

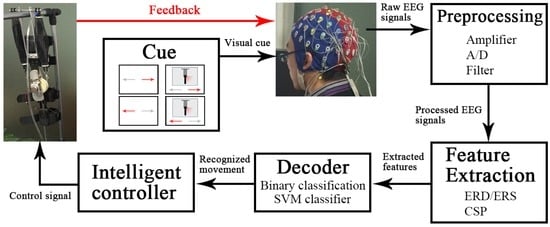

During the online-control phase, the trained decoder using the selected paradigm was tested in two scenarios. In the first scenario, subjects not wearing the exoskeleton (it was hung up beside the subject) were seated in a comfortable chair with their forearms semi-extended on the desk, as shown in Figure 1b. In the second scenario, subjects wearing the exoskeleton on the right arm were seated in a comfortable chair with their left forearm semi-extended on the desk and right arm hanging vertically to the sagittal plane, as shown in Figure 1c. The upper-limb exoskeleton has one degree of freedom and can perform two actions: flexion and extension. It is used to assist the user’s elbow movement in daily activities. In both scenarios, the exoskeleton was moved by binary control while subjects performed ME or MI under the selected paradigm. The control strategy is shown in Figure 3. Subjects performed ME or MI according to the cue. The presentation of the cue was the same as in the decoder-training phase. After the preprocessing and feature extraction for the raw EEG signals, the extracted features were input to the trained decoder and were classified to recognize which movement the subjects were executing or imagining. Depending on the classification result, the intelligent controller sent control signals to the exoskeleton to make it perform the corresponding action. Feedback was given by a vertical bar simulating the active part of the exoskeleton on the computer screen (Scenarios 1 and 2) or by the exoskeleton mounted on the right arm (Scenario 2). The feedback bar moved through the same angle as the exoskeleton in real time. There were two sessions in each scenario, and each session consisted of 60 exoskeleton actions.

Figure 3.

Control strategy of the upper-limb exoskeleton. Subjects performed ME or MI according to the cue. The presentation of the cue was the same as in the decoder-training phase. After the preprocessing and feature extraction for the raw EEG signals, the extracted features were input into the trained decoder and were classified to recognize which movement the subjects were executing or imagining. Depending on the classification result, the intelligent controller sent control signals to the exoskeleton to make it perform the corresponding action. Feedback was given by a vertical bar simulating the active part of the exoskeleton on the computer screen (Scenarios 1 and 2) or by the exoskeleton mounted on the right arm (Scenario 2). The feedback bar moved through the same angle as the exoskeleton in real time.

During ME sessions, subjects were instructed to perform the physical movement immediately upon cue appearance, and not before it. During MI sessions, subjects were instructed to perform motor imagery immediately upon cue appearance, and not before it. Tongue and eye movements were detectable through the EEG electrodes. Additionally, subject self-reports indicated that subjects were not performing ME or MI before cue appearance. A closed-circuit video was used to ensure that subjects performed ME or MI correctly and were awake and attentive. There was a 10 min rest period between sessions to prevent fatigue. Each subject was enrolled in the study for 2–2.5 h.

2.3. Trial Exclusion

During the experiment, according to the closed-circuit video, trials with wrong movements during ME were excluded; trials with any movements during MI were excluded. After the experiment, trials that contained muscle tension, tongue movement, face movement, eye movement, or eye blink artifacts in the 2 s preceding cue appearance and during cue were identified offline and excluded from all subsequent visualizations and analyses.

2.4. Feature Extraction

The raw EEG signal was filtered in an 8–30 Hz band. The frequency band was chosen because it includes the alpha and beta frequency bands, which have been shown to be most important for movement classification [20]. To show the ERD/ERS of each class (left hand movement, right hand movement and both feet movement), the EEG signals of C3, Cz and C4 channels were averaged offline over the 3 s preceding and 5 s following cue appearance across all trials and all subjects. ERD/ERS is defined as the percentage of power decrease or power increase in relation to a reference period (in this study 0–1 s) [17], according to the expression

$$\mathrm{ERD/ERS}\,(\%) = \frac{A - R}{R} \times 100$$

The power within the frequency band of interest in the period after the event is given by $A$, whereas that of the reference period is given by $R$; negative values therefore indicate ERD and positive values indicate ERS. ERD/ERS visualizations were created by generating time-frequency maps using a fast Fourier transformation (FFT) with Hanning windows, a 100 ms segment length, and no overlap between consecutive segments [32].
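As an illustration of the ERD/ERS definition (percentage change of the band power $A$ after the event relative to the reference power $R$), the following Python sketch computes this quantity for a single channel. It is a minimal sketch under stated assumptions, not the analysis code used in this study: the function names `band_power` and `erd_ers_percent`, the Hann-windowed FFT power estimate, and the synthetic test signal are illustrative choices.

```python
import numpy as np


def band_power(segment, fs, band):
    """Mean power of a 1-D signal inside `band` (Hz) from a Hann-windowed FFT."""
    window = np.hanning(len(segment))
    # Normalize by the window energy so segments of similar length are comparable.
    spectrum = np.abs(np.fft.rfft(segment * window)) ** 2 / np.sum(window ** 2)
    freqs = np.fft.rfftfreq(len(segment), d=1.0 / fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return spectrum[in_band].mean()


def erd_ers_percent(signal, fs, band, ref_window, act_window):
    """Percentage power change (A - R) / R * 100; negative = ERD, positive = ERS."""
    def cut(window):
        start, stop = int(window[0] * fs), int(window[1] * fs)
        return signal[start:stop]

    r = band_power(cut(ref_window), fs, band)  # reference power R
    a = band_power(cut(act_window), fs, band)  # power A after the event
    return (a - r) / r * 100.0


# Synthetic example (assumed data): a 10 Hz rhythm whose amplitude halves at 3 s.
fs = 512
t = np.arange(0, 8, 1 / fs)
eeg = np.where(t < 3, 2.0, 1.0) * np.sin(2 * np.pi * 10 * t)
erd = erd_ers_percent(eeg, fs, band=(8, 12), ref_window=(0, 1), act_window=(4, 5))
```

In this synthetic example the amplitude halves after the cue, so the band power falls to one quarter of the reference value and the sketch returns an ERD of about −75%.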

According to the visual inspection of ERD and ERS (see Figures 4 and 5 in the Results section), the time period 1–4 s after the cue appearance (seconds 4–7) and the best frequency band for each subject were selected in order to obtain the strongest ERD/ERS to extract features. Each selected time period was analyzed using time segments of 200 ms. The common spatial pattern (CSP) algorithm, which is widely used and highly successful in the binary case [33], was applied in this study to perform feature extraction. Given two distributions in a high-dimensional space, the CSP algorithm calculates spatial filters that maximize the variance of one class while minimizing the variance of the other [34]. For the analysis, let $V$ denote the raw data of a single trial, an $N \times T$ matrix with $N$ the number of channels (28 channels in this study) and $T$ the number of samples in time. The normalized spatial covariance of the EEG can be calculated as

$$C = \frac{V V^{T}}{\mathrm{trace}(V V^{T})}$$

where $^{T}$ denotes the transpose operator and trace(a) is the sum of the diagonal elements of a. For each of the two distributions to be separated (i.e., left hand and right hand movement, or left hand movement and both feet movement), the spatial covariance $\bar{C}_d$, $d \in \{1, 2\}$, is calculated by averaging over the trials of each class. The composite spatial covariance is given as $C_c = \bar{C}_1 + \bar{C}_2$. $C_c$ can be factored as $C_c = U_c \Lambda_c U_c^{T}$, where $U_c$ is the matrix of eigenvectors and $\Lambda_c$ is the diagonal matrix of eigenvalues. The whitening transformation $P = \Lambda_c^{-1/2} U_c^{T}$ equalizes the variances in the space spanned by $U_c$, i.e., all eigenvalues of $P C_c P^{T}$ are equal to one. If $\bar{C}_1$ and $\bar{C}_2$ are transformed as $S_1 = P \bar{C}_1 P^{T}$ and $S_2 = P \bar{C}_2 P^{T}$, then $S_1$ and $S_2$ share common eigenvectors $U$, i.e., if $S_1 = U \Lambda_1 U^{T}$ and $S_2 = U \Lambda_2 U^{T}$, then $\Lambda_1 + \Lambda_2 = I$, where $I$ is the identity matrix. With the projection matrix $W = U^{T} P$, the decomposition (mapping) of a trial $V$ is given as $Z = W V$. The features we use for classification are obtained from the variances of the first and last $m$ rows of the expansion coefficients $Z$, since, by construction, they are the best suited to distinguish between the two conditions. Let $\mathrm{var}_j^{i}$ be the variance of the $j$-th retained row of $Z$ for trial $i$, $j = 1, \ldots, 2m$. The feature vector for trial $i$ is composed of the variances $\mathrm{var}_j^{i}$, normalized by the total variance of the projections retained, and log-transformed as

$$f_j^{i} = \log\left(\frac{\mathrm{var}_j^{i}}{\sum_{k=1}^{2m} \mathrm{var}_k^{i}}\right)$$

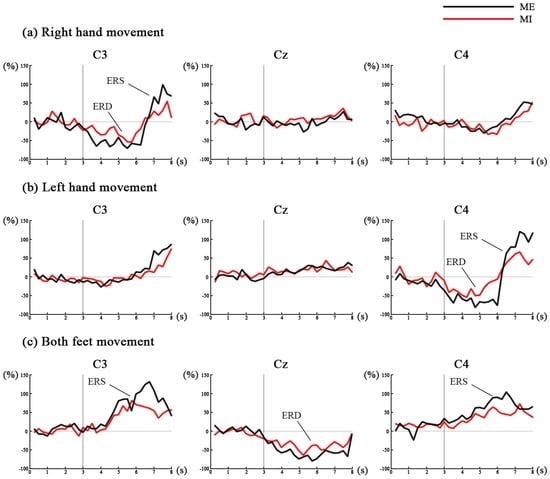

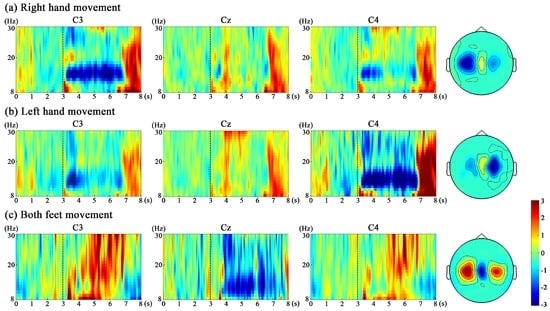

Figure 4.

Time course of ERD/ERS. For each movement (right hand, left hand or both feet movement), the EEG power of C3, Cz and C4 within 8–12 Hz frequency band is averaged offline over the 3 s preceding and 5 s following cue appearance across all trials and all subjects, and is displayed relative (as percentage) to the power of the same EEG derivations recorded during the reference period: (a) shows the ERD/ERS patterns happening in the right hand movement; (b) shows the ERD/ERS patterns happening in the left hand movement; and (c) shows the ERD/ERS patterns happening in both feet movement.

Figure 5.

A representative example of time-frequency maps for ME of three movements from subject 3. (a) time-frequency maps of right hand movement; (b) time-frequency maps of left hand movement; (c) time-frequency maps of both feet movement. For each movement, time-frequency maps of channel C3 over the left sensorimotor cortex, C4 over the right sensorimotor cortex and Cz are illustrated. In the time-frequency map, 3 s means the cue occurrence. The blue color stands for ERD (power decrease), and the red color stands for ERS (power increase). The head topographies corresponding to the best frequency band (12–16 Hz for subject 3) from seconds 4–7 are provided next to the time-frequency maps.

The feature vectors are fed into the classifier.
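The CSP feature extraction described in this section can be sketched in a few lines of NumPy. This is an illustrative sketch rather than the implementation used in this study: the function names (`normalized_cov`, `fit_csp`, `csp_features`), the array layout (trials × channels × samples), and the synthetic four-channel data are assumptions, and the composite covariance is assumed to be full rank.

```python
import numpy as np


def normalized_cov(trial):
    """Normalized spatial covariance V V^T / trace(V V^T) of one (channels x samples) trial."""
    c = trial @ trial.T
    return c / np.trace(c)


def fit_csp(trials_1, trials_2, m=2):
    """Return the 2m CSP filters (rows) for two classes of shape (trials, channels, samples)."""
    c1 = np.mean([normalized_cov(v) for v in trials_1], axis=0)
    c2 = np.mean([normalized_cov(v) for v in trials_2], axis=0)
    # Eigendecomposition of the composite covariance and whitening transform P.
    evals, evecs = np.linalg.eigh(c1 + c2)
    p = np.diag(evals ** -0.5) @ evecs.T
    # Eigenvectors of the whitened class-1 covariance are shared with class 2.
    lam, u = np.linalg.eigh(p @ c1 @ p.T)
    u = u[:, np.argsort(lam)[::-1]]          # sort by descending class-1 variance
    w = u.T @ p                              # full projection matrix W
    n = w.shape[0]
    return w[np.r_[0:m, n - m:n]]            # keep the first and last m rows


def csp_features(w, trial):
    """Log of the normalized variances of the CSP-projected trial."""
    var = (w @ trial).var(axis=1)
    return np.log(var / var.sum())


# Synthetic example (assumed data): class 1 is strong on channel 0, class 2 on channel 3.
rng = np.random.default_rng(0)
class_1 = rng.standard_normal((20, 4, 200)) * np.array([3, 1, 1, 1])[None, :, None]
class_2 = rng.standard_normal((20, 4, 200)) * np.array([1, 1, 1, 3])[None, :, None]
w = fit_csp(class_1, class_2, m=1)
f1 = csp_features(w, class_1[0])
f2 = csp_features(w, class_2[0])
```

With `m=1`, the first feature dominates for class 1 trials and the second for class 2 trials, which is the separation the classifier then exploits.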

2.5. Classification

In the decoder-training phase, we applied linear discriminant analysis (LDA), support vector machine (SVM) and backpropagation neural network (BPNN) classifiers because of their robustness and high performance in BMI applications [35,36]. The input was the feature vector from CSP, and the output was one class (left hand movement, right hand movement or both feet movement). We separated the whole data set into a training set and a testing set, and classified the data from the testing set with the model obtained from the training set. To make the best use of the available data, we performed 10-fold cross-validation in the model training phase, i.e., in each fold, nine tenths of the training set were used for training and the remaining tenth was used for validation. Three different ratios between the training set and testing set were used, i.e., 50%–50%, 60%–40% and 80%–20%. Different classifiers and different train–test ratios can lead to different classification performance. Thus, we compared nine classification strategies in total (three classifiers × three train–test ratios) in order to select the best classifier and train–test ratio. Then, based on the best classification strategy, the better paradigm was selected. In the online-control phase, all data were used as testing data to test the classification models of the selected paradigm.
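The evaluation scheme (a train–test split plus 10-fold cross-validation) can be illustrated for the LDA case with the following sketch. It assumes a plain two-class Fisher LDA with a pooled covariance and equal class priors, written directly in NumPy; the function names, the random (non-stratified) splitting, and the synthetic feature data are hypothetical and are not taken from this study.

```python
import numpy as np


def fit_lda(x, y):
    """Two-class LDA with pooled covariance; labels are 0 and 1, equal priors assumed."""
    x0, x1 = x[y == 0], x[y == 1]
    mu0, mu1 = x0.mean(axis=0), x1.mean(axis=0)
    centered = np.vstack([x0 - mu0, x1 - mu1])
    cov = centered.T @ centered / (len(x) - 2)
    w = np.linalg.solve(cov, mu1 - mu0)
    b = -0.5 * w @ (mu0 + mu1)
    return w, b


def predict_lda(model, x):
    w, b = model
    return (x @ w + b > 0).astype(int)


def holdout_accuracy(x, y, train_fraction=0.8, seed=0):
    """Accuracy on a random hold-out set, e.g. 0.8 for the 80%-20% ratio."""
    idx = np.random.default_rng(seed).permutation(len(x))
    n_train = int(round(train_fraction * len(x)))
    train, test = idx[:n_train], idx[n_train:]
    model = fit_lda(x[train], y[train])
    return float(np.mean(predict_lda(model, x[test]) == y[test]))


def cross_val_accuracy(x, y, k=10, seed=0):
    """Mean validation accuracy over k folds (train on k-1 folds, validate on one)."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(x)), k)
    scores = []
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        model = fit_lda(x[train], y[train])
        scores.append(np.mean(predict_lda(model, x[folds[i]]) == y[folds[i]]))
    return float(np.mean(scores))


# Synthetic example (assumed data): two well-separated 2-D feature clusters.
rng = np.random.default_rng(1)
features = np.vstack([rng.normal(0, 1, (100, 2)), rng.normal(4, 1, (100, 2))])
labels = np.repeat([0, 1], 100)
acc_holdout = holdout_accuracy(features, labels, train_fraction=0.8)
acc_cv = cross_val_accuracy(features, labels, k=10)
```

Changing `train_fraction` to 0.5 or 0.6 reproduces the other two train–test ratios; in practice, the CSP filters would also have to be fitted on the training portion only to avoid leaking test information.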

2.6. Data Analysis

To evaluate the proposed BMI performance, four measurements, i.e., accuracy, precision, recall and F-score, were used in this study. Let TP, TN, FP and FN denote the numbers of true positives, true negatives, false positives and false negatives, respectively. Accuracy is the proportion of inputs that the model classifies correctly. It can be calculated using the equation $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$. Precision is the fraction of the predictions of a class that are correct, while recall is the fraction of the true instances of a class that the model detects. Precision and recall can be obtained using the equations $\mathrm{Precision} = \frac{TP}{TP + FP}$ and $\mathrm{Recall} = \frac{TP}{TP + FN}$, respectively. F-score is an extended measurement of accuracy, and equally combines precision and recall. F-score can be computed using the equation $F = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$. In the decoder-training phase, to evaluate the motor mode × paradigm interaction and the motor mode and paradigm main effects, a 2 (ME or MI) × 2 (left versus right hand movement or left hand versus both feet movement) within-subjects ANOVA was applied. In the online-control phase, to evaluate the motor mode × scenario interaction and the motor mode and scenario main effects, a 2 (ME or MI) × 2 (wearing or not wearing the exoskeleton) within-subjects ANOVA was applied. All statistical tests were carried out at the 95% confidence level.
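As a small worked example of the four measurements, the sketch below computes them from the entries of a binary confusion matrix. The function name `binary_metrics` and the counts are hypothetical and chosen only for illustration; they are not results from this study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F-score from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)   # correct fraction of predicted positives
    recall = tp / (tp + fn)      # detected fraction of actual positives
    f_score = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f_score


# Hypothetical counts: 45 true positives, 5 false positives,
# 40 true negatives, 10 false negatives.
accuracy, precision, recall, f_score = binary_metrics(tp=45, fp=5, tn=40, fn=10)
```

For these counts the accuracy is 0.85 and the precision is 0.90, while the recall (45/55) and the F-score (90/105) are slightly lower. The sketch does not guard against empty classes, where a denominator would be zero.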

3. Results

After trial exclusion, all remaining data were processed and analyzed from the four subjects in the experiment.

3.1. Results of the Decoder-Training Phase

3.1.1. Neurophysiological Analysis of ERD/ERS

To show the time course of ERD/ERS, for each movement (right hand, left hand or both feet movement), the EEG power of C3, Cz and C4 within the 8–12 Hz frequency band is averaged offline over the 3 s preceding and 5 s following cue appearance across all trials and all subjects, and is displayed relative (as percentage) to the power of the same EEG derivations recorded during the reference period, as shown in Figure 4. During the ME and MI of right hand and left hand (the time period 1–5 s after the cue appearance (seconds 4–8)), the EEG data reveal a significant ERD and post-movement ERS over the contralateral side, while only a weak ERS can be seen over the ipsilateral side and at the Cz electrode. During the ME and MI of both feet, the EEG data reveal a significant ERD at the Cz electrode and a significant ERS at the C3 and C4 electrodes. Hand movement can thus result in a hand area (C3 and C4) ERD and simultaneously in a foot area (Cz) ERS, and foot movement can result in the opposite pattern. In addition, for all three movements, the ERD and ERS are more pronounced during ME than during MI.

Figure 5 shows a representative example of time-frequency maps for ME of three movements from subject 3. For each movement, time-frequency maps of channel C3 over the left sensorimotor cortex, C4 over the right sensorimotor cortex and Cz are illustrated. In the time-frequency map, 3 s marks the cue onset. The blue color stands for ERD (power decrease), and the red color stands for ERS (power increase). For the right hand and left hand movement, ERD is observed from around 1–4 s after the cue onset (seconds 4–7) due to the response delay; ERD in both alpha and beta bands is observed over motor areas contralateral to the moving hand. The ERS is mainly observed around seconds 7–8 in the beta band over the contralateral motor areas. For the both feet movement, the ERD in both alpha and beta bands is observed at the Cz electrode and the ERS in both alpha and beta bands is observed at the hand area (C3 and C4) from seconds 4–7. For subject 3, the time-frequency maps show that 12–16 Hz is the best frequency band to obtain the strongest ERD/ERS to extract features. The head topographies corresponding to this frequency band from seconds 4–7 are provided next to the time-frequency maps. The best frequency bands for the other three subjects are: 8–12 Hz for subject 1, 18–22 Hz for subject 2, and 14–18 Hz for subject 4.

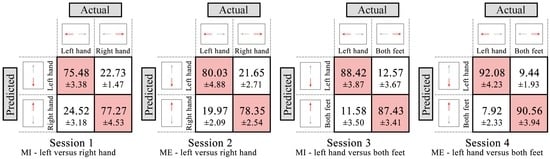

3.1.2. Classification Results

According to Figure 4 and Figure 5, the time period and the best frequency band for each subject were selected for feature extraction and classification. For each session, nine classification models based on different classification strategies (S1–S9) were trained to distinguish between two movements (left versus right hand movement, or left hand versus both feet movement). Table 2 shows the classification accuracies of the nine classification models for each session across all subjects. The LDA (S1–S3) and SVM (S4–S6) achieve similar classification accuracies, which are higher than those of BPNN (S7–S9). For the different train–test ratios, the classification accuracies of 80%–20% (S3, S6 and S9) are higher than those of 50%–50% (S1, S4 and S7) and 60%–40% (S2, S5 and S8). S3 has the highest average classification accuracy of 83.71% ± 7.07%. These results indicate that using the LDA classifier and the 80%–20% train–test ratio (S3) achieves the best classification performance; S3 is therefore analysed further to select the better paradigm. The confusion matrices of the four sessions using the S3 classification strategy are shown in Figure 6. The numbers in each entry of the matrix represent the mean value and standard deviation across all subjects. The main diagonal entries stand for correct classification, and the off-diagonal entries stand for misclassification. The four sessions achieve mean classification accuracies of 76.38% ± 4.25%, 79.19% ± 3.41%, 87.93% ± 4.83%, and 91.32% ± 2.89%, respectively. The accuracies of ME sessions (Sessions 2 and 4) are higher than those of MI sessions (Sessions 1 and 3); the accuracies of left hand versus both feet movement sessions (Sessions 3 and 4) are higher than those of left versus right hand movement sessions (Sessions 1 and 2). Table 3 shows the mean precision, recall and F-score of the two classes of the four sessions using the S3 classification strategy across all subjects. 
In general, the results show high precision, recall and F-score indicating that the model has a high performance (Sessions 3 and 4). ANOVA shows no significant interaction between motor mode and paradigm (), while there is a significant main effect of motor mode (F = 17.473, p = 0.004) and paradigm (F = 15.880, p = 0.016) for the classification accuracy. These results indicate that left hand versus both feet movement paradigm achieves a better classification performance, which would be used in the online-control phase.

Table 2.

The classification accuracies of nine classification models based on different classification strategies for each session across all subjects (in %).

Figure 6.

Confusion matrices (in %) for four sessions using S3 classification strategy in the decoder-training phase, averaged across all subjects. The numbers of each entry in the matrix represent the mean value and standard deviation across all subjects. The main diagonal entries stand for the correct classification, and the off-diagonal entries stand for the misclassification.

Table 3.

The mean precision, recall and F-score of the different two classes of four sessions using S3 classification strategy in the decoder-training phase across all subjects (in %).

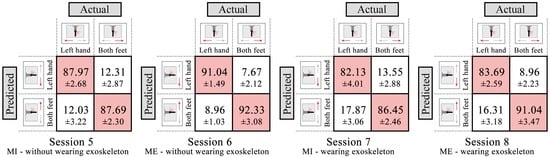

3.2. Results of Online-Control Phase

The trained decoder using the selected paradigm was tested in the online-control phase. In the two scenarios, subjects performed ME or MI to control the exoskeleton, resulting in four sessions (Session 5: MI, without wearing exoskeleton; Session 6: ME, without wearing exoskeleton; Session 7: MI, wearing exoskeleton; Session 8: ME, wearing exoskeleton). The trained model (LDA classifier) of Session 3 was used in Sessions 5 and 7; the trained model of Session 4 was used in Sessions 6 and 8. The confusion matrices of Sessions 5–8 are shown in Figure 7. The numbers in each entry of the matrix represent the mean value and standard deviation across all subjects. The main diagonal entries stand for correct classification, and the off-diagonal entries stand for misclassification. The four sessions achieve mean classification accuracies of 87.83% ± 2.79%, 91.69% ± 2.95%, 84.29% ± 2.11%, and 87.37% ± 3.06%, respectively. The accuracies of ME sessions (Sessions 6 and 8) are higher than those of MI sessions (Sessions 5 and 7); the accuracies of sessions without the exoskeleton (Sessions 5 and 6) are higher than those of sessions wearing the exoskeleton (Sessions 7 and 8). Table 4 shows the mean precision, recall and F-score of the two classes of Sessions 5–8 across all subjects. The precision, recall and F-score of Sessions 5 and 6 are higher than those of Sessions 7 and 8. ANOVA shows no significant interaction between motor mode and scenario (), while there is a significant main effect of motor mode (F = 30.327, p < 0.001) and scenario (F = 24.502, p < 0.001) for the classification accuracy. These results indicate that the scenario without the exoskeleton achieves better classification performance than the scenario wearing the exoskeleton.

Figure 7.

Confusion matrices (in %) for four sessions in the online-control phase, averaged across all subjects. The numbers of each entry in the matrix represent the mean value and standard deviation across all subjects. The main diagonal entries stand for the correct classification, and the off-diagonal entries stand for the misclassification.

Table 4.

The mean precision, recall and F-score of the two classes of four sessions in the online-control phase across all subjects (in %).

4. Discussion

By using physiological signals associated with natural behavior, users can interact with the environment through ME or MI. In this study, we proposed a BMI, and investigated whether self-induced variations of the EEG can be useful as control signals for an upper-limb exoskeleton.

In the decoder-training phase, subjects performed ME or MI under two paradigms: left versus right hand movement, and left hand versus both feet movement. During ME and MI of the right and left hand, we observed contralateral ERD and ERS in the alpha and beta bands during sustained movement and post-movement, respectively, in all subjects, while only a weak ERS could be seen over the ipsilateral side and at the Cz electrode, as shown in Figure 4 and Figure 5. This pattern arises because the synchrony of the underlying neuronal populations decreases during ME or MI (ERD), whereas ERS reflects a somatotopically specific, short-lived brain state associated with deactivation (inhibition) and/or resetting of motor cortex networks after movement [17,37]. In contrast to hand movement, during movement of both feet a significant ERD was found close to electrode Cz, which overlies the primary foot representation area, while a significant ERS appeared at electrodes C3 and C4, which overlie the primary hand representation area, as shown in Figure 4 and Figure 5. These results give evidence of the existence of not only a “hand area mu rhythm” but also a “foot area mu rhythm” [38]. Each primary sensorimotor area may have its own intrinsic rhythm, which is blocked or desynchronized when the corresponding area becomes activated. Although the ERD/ERS patterns were expected to be similar between ME and MI, the amplitudes of ERD/ERS for MI sessions (Sessions 1 and 3) were smaller than those for ME sessions (Sessions 2 and 4). MI may perform less robustly than ME because MI is not a natural behavior and thus requires more effort than ME [39]. In addition, unlike ME, MI involves no neural feedback, which may lead to less activity (ERS) in the motor cortex and a lower signal-to-noise ratio. However, because MI is more meaningful for BMI applications, subjects should receive more MI training.
Regarding paradigm selection, the accuracies of the left hand versus both feet movement paradigm (Sessions 3 and 4) were higher than those of the left versus right hand movement paradigm (Sessions 1 and 2). This indicates that ERD and ERS patterns are highly differentiable between left hand and both feet movement, whereas they are less separable between left hand and right hand movement. Therefore, we chose the left hand versus both feet movement paradigm for the online-control phase.
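As a minimal sketch of how ERD/ERS magnitudes such as those discussed above are commonly quantified, the band power in an activity interval can be expressed as a percentage change relative to a reference interval. The sign convention (negative for ERD, positive for ERS), the function names, and the sample values below are illustrative assumptions and not necessarily the exact procedure used in this study.

```python
from statistics import fmean


def band_power(samples):
    """Mean squared amplitude of samples that are already band-pass filtered."""
    return fmean(x * x for x in samples)


def erd_ers_percent(reference, activity):
    """Relative band-power change (%) of the activity interval.

    Assumed convention: negative values indicate ERD (power decrease),
    positive values indicate ERS (power increase).
    """
    ref_power = band_power(reference)
    if ref_power == 0:
        raise ValueError("reference interval has zero power")
    return (band_power(activity) - ref_power) / ref_power * 100.0


# Hypothetical filtered amplitudes in arbitrary units (not data from the study).
reference = [2.0, -2.0, 2.0, -2.0]  # resting baseline, power 4
movement = [1.0, -1.0, 1.0, -1.0]   # during movement, power 1
rebound = [3.0, -3.0, 3.0, -3.0]    # after movement, power 9

print(erd_ers_percent(reference, movement))  # -75.0 (ERD)
print(erd_ers_percent(reference, rebound))   # 125.0 (ERS)
```

Under this convention, a smaller absolute percentage for MI than for ME at the same electrode would correspond to the weaker ERD/ERS amplitudes reported for the MI sessions.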

In the online-control phase, the trained decoder using the selected paradigm was tested in two scenarios. The accuracies of ME sessions (Sessions 6 and 8) were higher than those of MI sessions (Sessions 5 and 7), consistent with the decoder-training phase. In addition, the accuracies of the sessions without the exoskeleton (Sessions 5 and 6) were higher than those of the sessions with the exoskeleton (Sessions 7 and 8). One reason is that, when the exoskeleton is worn, the right arm moves with it; this movement produces ERD/ERS patterns over the hand area, which can cause false decisions by the system [27] and especially affects the classification of left hand movement. Even passive right arm movement can produce ERD/ERS patterns similar to those of voluntary movement [40]. A related study of passive movement [41] found that the ERD/ERS patterns associated with upper limb movements are not significantly changed by periodic passive lower limb movements, which is consistent with our results (the right hand movement mainly affects the classification of left hand movement, not foot movement, as shown in Figure 7). Therefore, one possible solution is to avoid using ERD/ERS patterns over the hand area for feature extraction and classification in practical use, for example by using another paradigm such as face versus foot movement, which mainly produces ERD/ERS patterns over the face and foot areas. This solution is specific to an upper-limb exoskeleton; for a lower-limb exoskeleton or orthosis, ERD/ERS patterns over the hand area could still be used, but not those over the foot area.
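One simple way to keep hand-area activity out of feature extraction is to drop the corresponding electrodes before any features are computed. The sketch below assumes that C3 and C4 represent the hand area, as in the discussion above; the montage, the data layout (one list of samples per channel), and the function names are hypothetical and only illustrate the idea.

```python
# Electrodes that the discussion associates with the primary hand area.
HAND_AREA = frozenset({"C3", "C4"})


def keep_indices(channel_names, excluded=HAND_AREA):
    """Return the indices of channels that are not in the excluded set."""
    return [i for i, name in enumerate(channel_names) if name not in excluded]


def drop_channels(trial, indices):
    """Keep only the selected channels of one trial.

    A trial is assumed to be a list with one list of samples per channel.
    """
    return [trial[i] for i in indices]


# Hypothetical montage and a hypothetical two-sample trial (not study data).
montage = ["F3", "C3", "Cz", "C4", "Pz"]
trial = [[0.1, 0.2], [1.0, 1.1], [2.0, 2.1], [3.0, 3.1], [4.0, 4.1]]

indices = keep_indices(montage)
print([montage[i] for i in indices])   # ['F3', 'Cz', 'Pz']
print(drop_channels(trial, indices))   # [[0.1, 0.2], [2.0, 2.1], [4.0, 4.1]]
```

In practice, electrodes neighboring C3 and C4 also pick up hand-area activity, so the excluded set would need to be chosen for the actual montage.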

Artifact contamination (tongue and eye movements, and EMG from facial muscles) in EEG recordings can cause serious problems in BMI applications [40]. Throughout the experiment, tongue and eye movements and EMG signals were monitored for all subjects to make sure that no artifacts occurred while ME and MI were performed. Furthermore, after the experiments, contaminated trials were identified offline and excluded from all subsequent visualizations and analyses. Artifact contamination is therefore unlikely to have affected the results of this study.
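The exclusion of contaminated trials can be illustrated with a simple amplitude criterion. The sketch below rejects a trial when any channel exceeds a peak-to-peak threshold; the criterion itself, the 100 µV default, the data layout, and the function names are assumptions for illustration, since the rejection rule used in this study is not specified here.

```python
def peak_to_peak(samples):
    """Difference between the largest and smallest sample of one channel."""
    return max(samples) - min(samples)


def split_trials(trials, threshold_uv=100.0):
    """Separate clean trials from contaminated ones.

    A trial (list of channels, each a list of samples in microvolts) is
    rejected if any channel's peak-to-peak amplitude exceeds threshold_uv.
    Returns the clean trials and the indices of the rejected trials.
    """
    clean, rejected = [], []
    for index, trial in enumerate(trials):
        if any(peak_to_peak(channel) > threshold_uv for channel in trial):
            rejected.append(index)
        else:
            clean.append(trial)
    return clean, rejected


# Hypothetical two-channel trials in microvolts (not data from the study).
trials = [
    [[5.0, -4.0, 6.0], [3.0, -2.0, 1.0]],      # small amplitudes: kept
    [[5.0, -140.0, 90.0], [3.0, -2.0, 1.0]],   # 230 uV swing: rejected
    [[-8.0, 7.0, 2.0], [40.0, -30.0, 10.0]],   # 70 uV swing: kept
]

clean, rejected = split_trials(trials)
print(len(clean), rejected)  # 2 [1]
```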

Future work will cover two aspects. (1) The present results show that the proposed BMI is applicable to controlling an upper-limb exoskeleton for able-bodied subjects. However, BMI applications should also target users affected by motor disabilities, so the proposed method should be applied to these users to validate the results. (2) In this paper, we investigated whether self-induced variations of the EEG can serve as control signals for an upper-limb exoskeleton, and explored how to classify binary movements. However, controlling an exoskeleton involves other important aspects, such as actuator control, control feedback, and mechanical structure, which remain to be explored.

5. Conclusions

In this paper, we investigated whether self-induced variations of the EEG can be useful as control signals for an upper-limb exoskeleton that we developed. A brain-machine interface based on ERD/ERS was proposed. In summary, ERD/ERS under the left versus right hand movement paradigm and the left hand versus both feet movement paradigm showed distinguishable patterns, as expected, in both MI and ME; self-induced variations of EEG can be useful as control signals for an upper-limb exoskeleton in two scenarios (with and without wearing the exoskeleton). Although the mean classification accuracy during the online-control phase was lower when subjects wore the exoskeleton than when they did not, the proposed method still gave satisfactory and robust results, with mean accuracies of 84.29% ± 2.11% (MI) and 87.37% ± 3.06% (ME) while wearing the exoskeleton. The present study demonstrates that the proposed BMI is effective for controlling the upper-limb exoskeleton, and provides a practical method for clinical applications based on a non-invasive EEG signal associated with natural human behavior. This system is worth pursuing because an EEG-based BMI can support daily activities in many ways and improve the quality of life of elderly, disabled and injured individuals.

Acknowledgments

This research was supported in part by the China Postdoctoral Science Foundation funded project 2015M581935, the Zhejiang Province Postdoctoral Science Foundation under Project BSH1502116, the National Natural Science Foundation of China 61272304, and the Science and Technology Project of Zhejiang Province 2015C31051.

Author Contributions

Z.T. conceived and designed the experiments; Z.T., C.L. and S.C. performed the experiments; S.S. and S.Z. analyzed the data; S.Z. and Y.C. contributed reagents/materials/analysis tools; Z.T. wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| BMI | brain-machine interface |
| EEG | electroencephalogram |
| ERD | event-related desynchronization |
| ERS | event-related synchronization |
| ME | motor execution |
| MI | motor imagery |
| ADL | activities of daily living |
| EMG | electromyography |
| LDA | linear discriminant analysis |
| CSP | common spatial pattern |

References

1. Lo, H.S.; Xie, S.Q. Exoskeleton robots for upper-limb rehabilitation: State of the art and future prospects. Med. Eng. Phys. 2012, 34, 261–268.

2. Lo, A.C.; Guarino, P.D.; Richards, L.G.; Haselkorn, J.K.; Wittenberg, G.F.; Federman, D.G.; Ringer, R.J.; Wagner, T.H.; Krebs, H.I.; Volpe, B.T.; et al. Robot-assisted therapy for long-term upper-limb impairment after stroke. N. Engl. J. Med. 2010, 362, 1772–1783.

3. Gopura, R.; Bandara, D.; Kiguchi, K.; Mann, G. Developments in hardware systems of active upper-limb exoskeleton robots: A review. Robot. Auton. Syst. 2016, 75, 203–220.

4. Ren, Y.; Kang, S.H.; Park, H.S.; Wu, Y.N.; Zhang, L.Q. Developing a multi-joint upper limb exoskeleton robot for diagnosis, therapy, and outcome evaluation in neurorehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 490–499.

5. Naik, G.; Al-Timemy, A.; Nguyen, H. Transradial amputee gesture classification using an optimal number of sEMG sensors: An approach using ICA clustering. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 24, 837–846.

6. Khokhar, Z.O.; Xiao, Z.G.; Menon, C. Surface EMG pattern recognition for real-time control of a wrist exoskeleton. Biomed. Eng. Online 2010, 9, 1.

7. Naik, G.R.; Kumar, D.K. Identification of hand and finger movements using multi run ICA of surface electromyogram. J. Med. Syst. 2012, 36, 841–851.

8. Herr, H. Exoskeletons and orthoses: Classification, design challenges and future directions. J. Neuroeng. Rehabil. 2009, 6, 1.

9. Naik, G.R.; Nguyen, H.T. Nonnegative matrix factorization for the identification of EMG finger movements: Evaluation using matrix analysis. IEEE J. Biomed. Health Inform. 2015, 19, 478–485.

10. Yin, Y.H.; Fan, Y.J.; Xu, L.D. EMG and EPP-integrated human-machine interface between the paralyzed and rehabilitation exoskeleton. IEEE Trans. Inform. Technol. Biomed. 2012, 16, 542–549.

11. Naik, G.R.; Kumar, D.K.; Jayadeva. Twin SVM for gesture classification using the surface electromyogram. IEEE Trans. Inform. Technol. Biomed. 2010, 14, 301–308.

12. Yang, Z.; Chen, Y.; Wang, J. Surface EMG-based sketching recognition using two analysis windows and gene expression programming. Front. Neurosci. 2016, 10, 445.

13. Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain-computer interfaces for communication and control. Clin. Neurophysiol. 2002, 113, 767–791.

14. Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134.

15. Pregenzer, M.; Pfurtscheller, G. Frequency component selection for an EEG-based brain to computer interface. IEEE Trans. Rehabil. Eng. 1999, 7, 413–419.

16. Pfurtscheller, G.; Aranibar, A. Event-related cortical desynchronization detected by power measurements of scalp EEG. Electroencephalogr. Clin. Neurophysiol. 1977, 42, 817–826.

17. Pfurtscheller, G.; Da Silva, F.L. Event-related EEG/MEG synchronization and desynchronization: Basic principles. Clin. Neurophysiol. 1999, 110, 1842–1857.

18. Pfurtscheller, G. Graphical display and statistical evaluation of event-related desynchronization (ERD). Electroencephalogr. Clin. Neurophysiol. 1977, 43, 757–760.

19. Pfurtscheller, G. Event-related synchronization (ERS): An electrophysiological correlate of cortical areas at rest. Electroencephalogr. Clin. Neurophysiol. 1992, 83, 62–69.

20. Pfurtscheller, G.; Neuper, C.; Flotzinger, D.; Pregenzer, M. EEG-based discrimination between imagination of right and left hand movement. Electroencephalogr. Clin. Neurophysiol. 1997, 103, 642–651.

21. Müller-Putz, G.R.; Kaiser, V.; Solis-Escalante, T.; Pfurtscheller, G. Fast set-up asynchronous brain-switch based on detection of foot motor imagery in 1-channel EEG. Med. Biol. Eng. Comput. 2010, 48, 229–233.

22. Broetz, D.; Braun, C.; Weber, C.; Soekadar, S.R.; Caria, A.; Birbaumer, N. Combination of brain-computer interface training and goal-directed physical therapy in chronic stroke: A case report. Neurorehabil. Neural Repair 2010, 6, 1–6.

23. Caria, A.; Weber, C.; Brötz, D.; Ramos, A.; Ticini, L.F.; Gharabaghi, A.; Braun, C.; Birbaumer, N. Chronic stroke recovery after combined BCI training and physiotherapy: A case report. Psychophysiology 2011, 48, 578–582.

24. Ramos-Murguialday, A.; Broetz, D.; Rea, M.; Läer, L.; Yilmaz, Ö.; Brasil, F.L.; Liberati, G.; Curado, M.R.; Garcia-Cossio, E.; Vyziotis, A.; et al. Brain-machine interface in chronic stroke rehabilitation: A controlled study. Ann. Neurol. 2013, 74, 100–108.

25. Ang, K.K.; Guan, C.; Chua, K.S.G.; Ang, B.T.; Kuah, C.; Wang, C.; Phua, K.S.; Chin, Z.Y.; Zhang, H. A clinical study of motor imagery-based brain-computer interface for upper limb robotic rehabilitation. In Proceedings of the 31st Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Minneapolis, MN, USA, 3–6 September 2009; pp. 5981–5984.

26. Sarac, M.; Koyas, E.; Erdogan, A.; Cetin, M.; Patoglu, V. Brain computer interface based robotic rehabilitation with online modification of task speed. In Proceedings of the 2013 IEEE International Conference on Rehabilitation Robotics (ICORR), Seattle, WA, USA, 24–26 June 2013; pp. 1–7.

27. Pfurtscheller, G.; Guger, C.; Müller, G.; Krausz, G.; Neuper, C. Brain oscillations control hand orthosis in a tetraplegic. Neurosci. Lett. 2000, 292, 211–214.

28. Bai, O.; Lin, P.; Vorbach, S.; Floeter, M.K.; Hattori, N.; Hallett, M. A high performance sensorimotor beta rhythm-based brain-computer interface associated with human natural motor behavior. J. Neural Eng. 2008, 5, 24–35.

29. Oldfield, R.C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 1971, 9, 97–113.

30. Vidaurre, C.; Blankertz, B. Towards a cure for BCI illiteracy. Brain Topogr. 2010, 23, 194–198.

31. Nolan, H.; Whelan, R.; Reilly, R. FASTER: Fully automated statistical thresholding for EEG artifact rejection. J. Neurosci. Methods 2010, 192, 152–162.

32. Bai, O.; Mari, Z.; Vorbach, S.; Hallett, M. Asymmetric spatiotemporal patterns of event-related desynchronization preceding voluntary sequential finger movements: A high-resolution EEG study. Clin. Neurophysiol. 2005, 116, 1213–1221.

33. Onose, G.; Grozea, C.; Anghelescu, A.; Daia, C.; Sinescu, C.; Ciurea, A.; Spircu, T.; Mirea, A.; Andone, I.; Spânu, A.; et al. On the feasibility of using motor imagery EEG-based brain-computer interface in chronic tetraplegics for assistive robotic arm control: A clinical test and long-term post-trial follow-up. Spinal Cord 2012, 50, 599–608.

34. Qaraqe, M.; Ismail, M.; Serpedin, E. Band-sensitive seizure onset detection via CSP-enhanced EEG features. Epilepsy Behav. 2015, 50, 77–87.

35. Subasi, A.; Gursoy, M.I. EEG signal classification using PCA, ICA, LDA and support vector machines. Expert Syst. Appl. 2010, 37, 8659–8666.

36. Subasi, A. Automatic recognition of alertness level from EEG by using neural network and wavelet coefficients. Expert Syst. Appl. 2005, 28, 701–711.

37. Pfurtscheller, G.; Solis-Escalante, T. Could the beta rebound in the EEG be suitable to realize a “brain switch”? Clin. Neurophysiol. 2009, 120, 24–29.

38. Pfurtscheller, G.; Neuper, C.; Andrew, C.; Edlinger, G. Foot and hand area mu rhythms. Int. J. Psychophysiol. 1997, 26, 121–135.

39. Huang, D.; Lin, P.; Fei, D.Y.; Chen, X.; Bai, O. Decoding human motor activity from EEG single trials for a discrete two-dimensional cursor control. J. Neural Eng. 2009, 6, 1–12.

40. Formaggio, E.; Storti, S.F.; Galazzo, I.B.; Gandolfi, M.; Geroin, C.; Smania, N.; Spezia, L.; Waldner, A.; Fiaschi, A.; Manganotti, P. Modulation of event-related desynchronization in robot-assisted hand performance: Brain oscillatory changes in active, passive and imagined movements. J. Neuroeng. Rehabil. 2013, 10, 1–10.

41. Lisi, G.; Noda, T.; Morimoto, J. Decoding the ERD/ERS: Influence of afferent input induced by a leg assistive robot. Front. Syst. Neurosci. 2014, 8, 1–12.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).