Robust Approach for Nonuniformity Correction in Infrared Focal Plane Array

Abstract

1. Introduction

2. Method Description

2.1. The Correction Process

2.2. Registration Algorithm

2.3. Anti-Ghosting Measures

2.4. Bad Pixel Replacement

3. Results

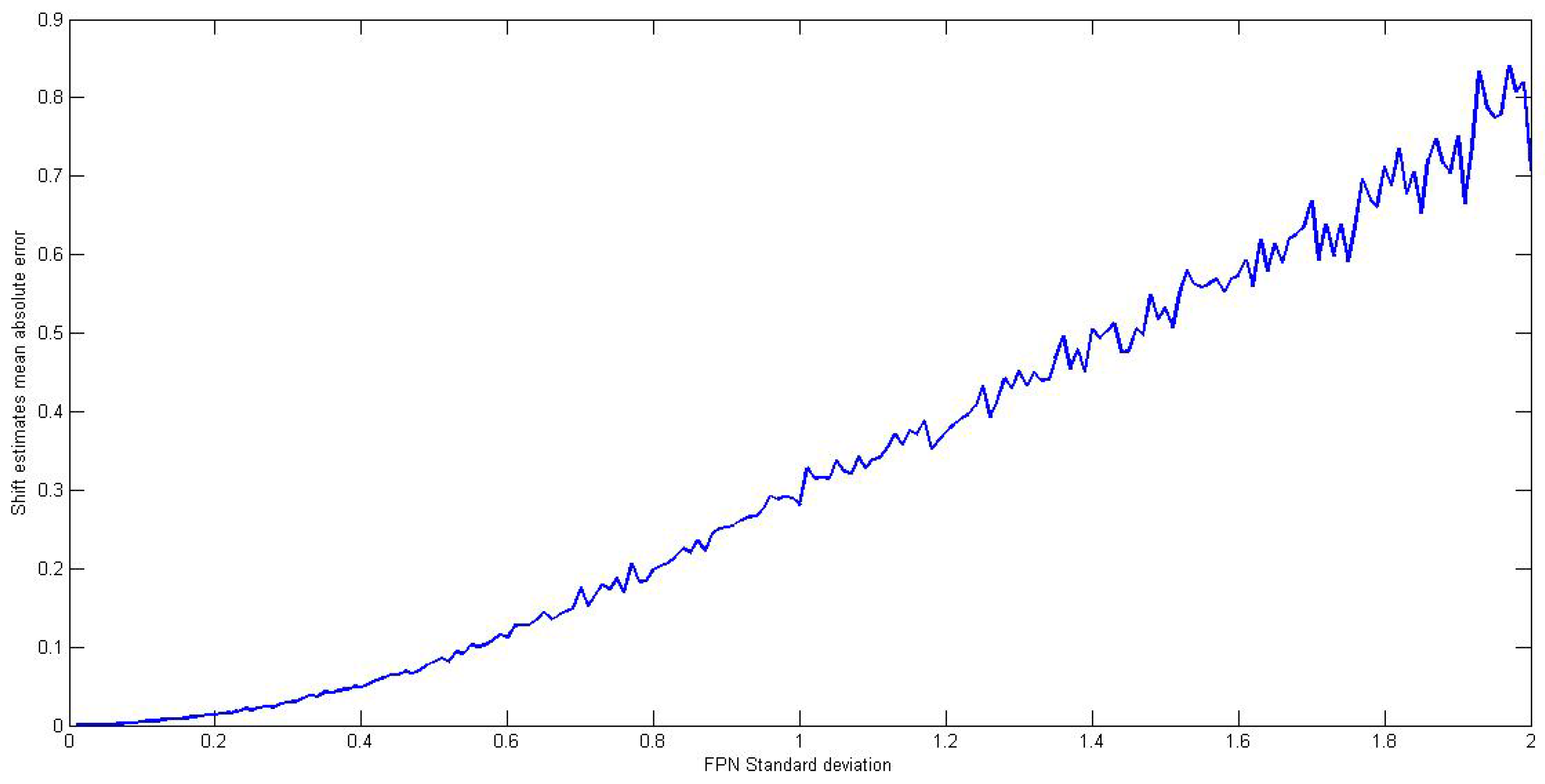

3.1. Registration Accuracy

3.2. Parameters and Experimental Setting

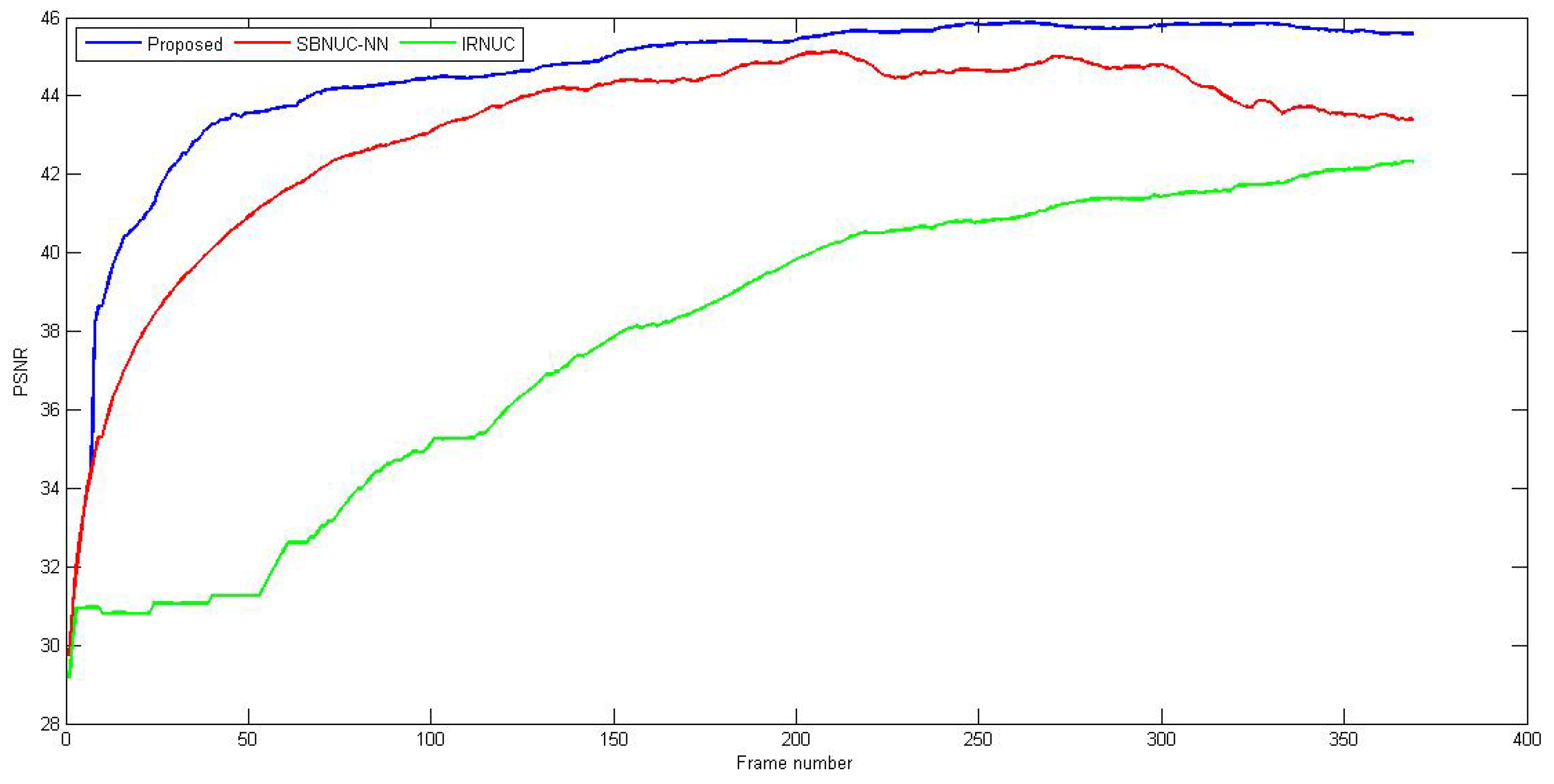

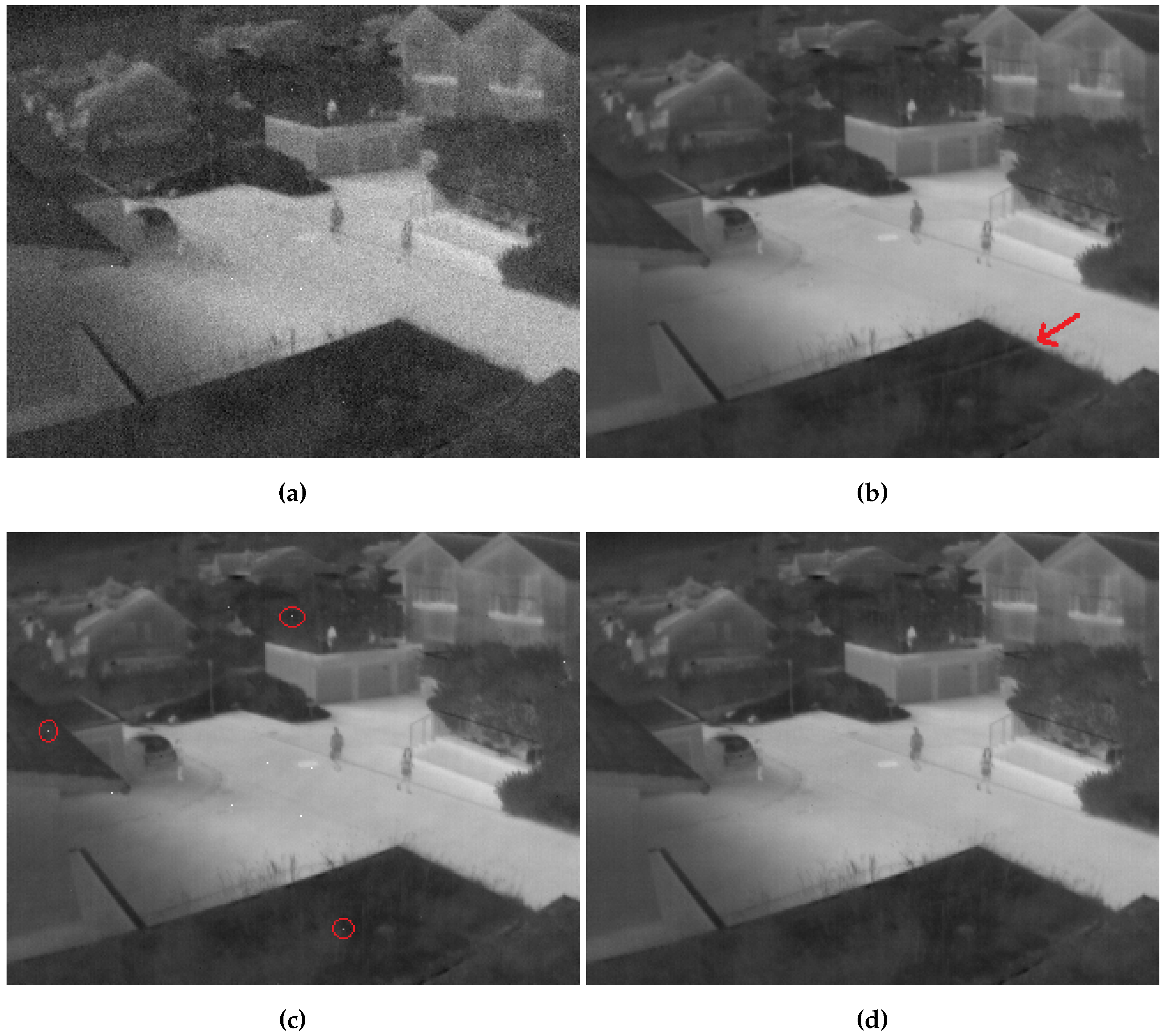

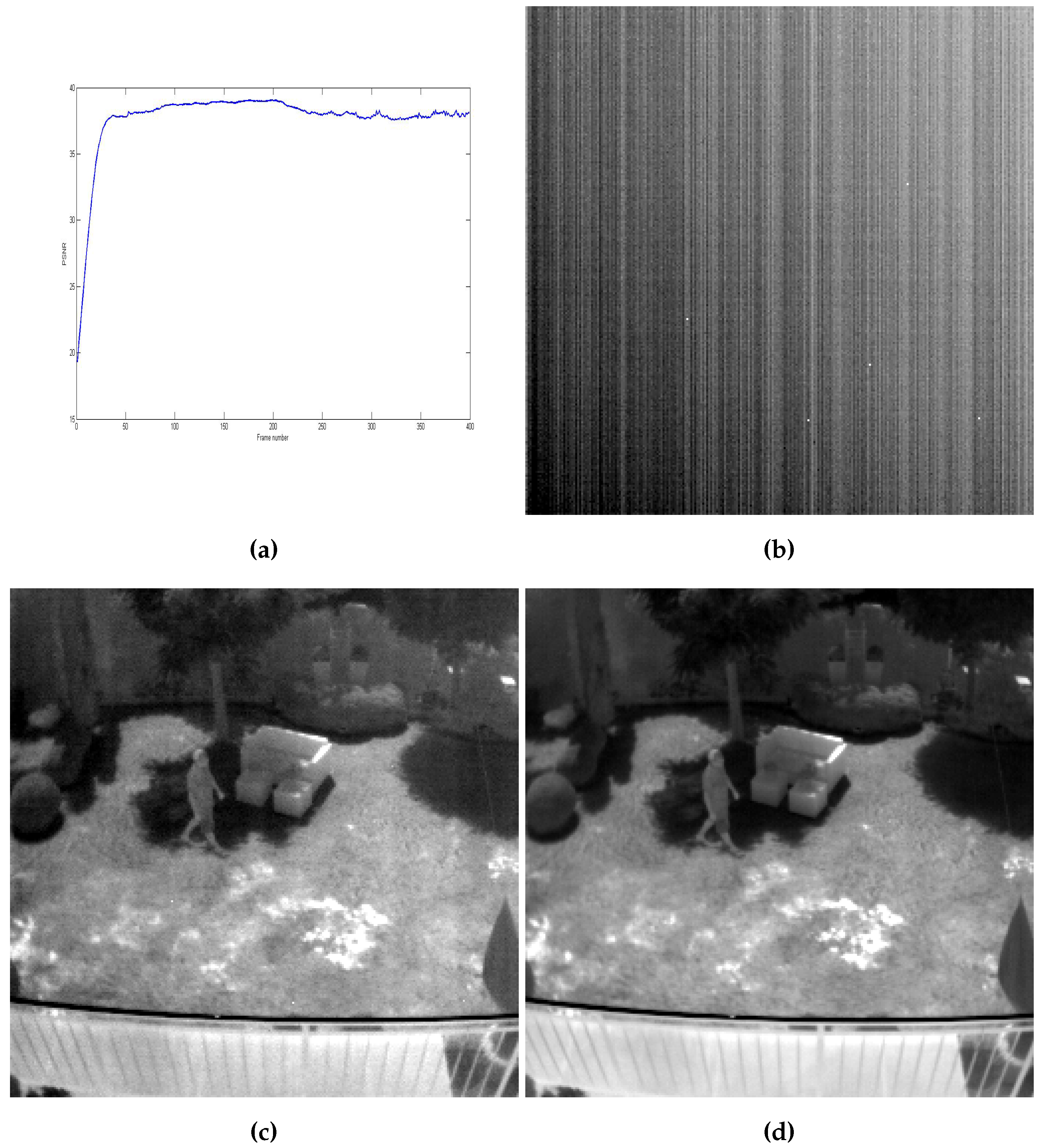

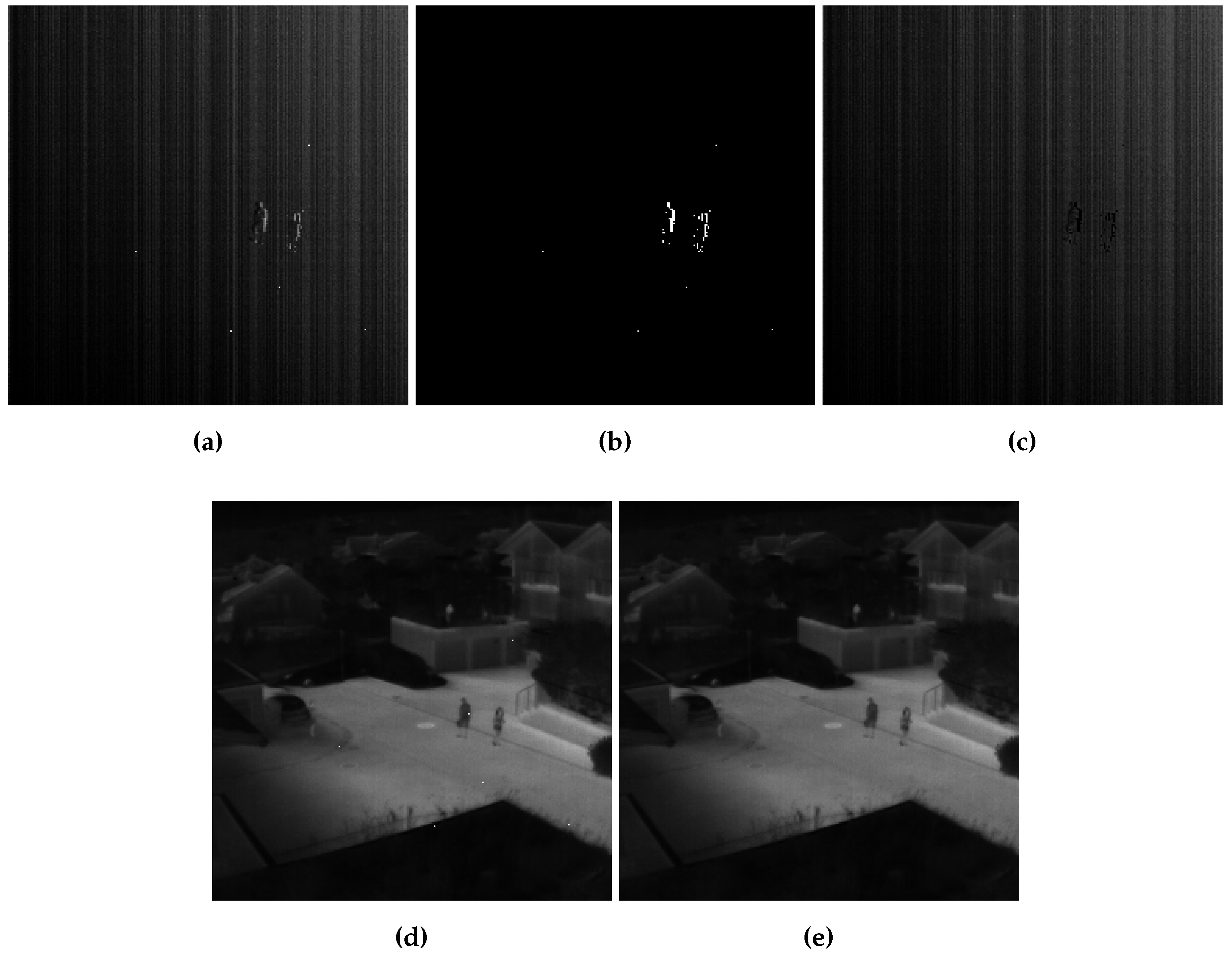

3.3. Correction Efficiency

3.4. Bad Pixel Replacement

4. Discussion

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| FPA | Focal Plane Array |
| IRFPA | Infrared Focal Plane Array |
| FPN | Fixed Pattern Noise |
| NU | Nonuniformity |
| NUC | Nonuniformity Correction |
| SBNUC | Scene-Based Nonuniformity Correction |
| LMS | Least Mean Square |
| IRNUC | Interframe Registration-based Nonuniformity Correction |
| SBNUC-NN | Scene-Based Nonuniformity Correction Based on Neural Network |
| DFT | Discrete Fourier Transform |
| PSNR | Peak Signal-to-Noise Ratio |
| RMSE | Root Mean Square Error |
| MIRE | Midway Infrared Equalization |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Boutemedjet, A.; Deng, C.; Zhao, B. Robust Approach for Nonuniformity Correction in Infrared Focal Plane Array. Sensors 2016, 16, 1890. https://doi.org/10.3390/s16111890
