1. Introduction

Multi-angle remote sensing, providing multi-angle observations of the same scene, enhances human’s ability to monitor and identify the Earth’s surface [

1]. However, to successful use multi-angle images, the distortions caused by imaging from multiple viewing angles must be removed [

2]. Geometric correction, especially in the case of imaging with large viewing angles, is an indispensable preprocessing step for the processing and applications of remote sensing images [

3]. There are two key factors that can affect correction accuracy. One is how to effectively detect and accurately match the feature points between the reference image and the distorted image. The other is how to precisely estimate the model parameters using control points (CPs, i.e., matched feature points).

Previous studies of feature point extraction methods can be divided into three categories. The first category is based on the image gray that uses the gray differences around a pixel of an image [

4]. The second category is based on the boundary. Detecting objects include invariant corners at the maximum curvature of the boundary chain code and intersection points after polygon approximation [

5]. The third category is based on the parameter model that models each pixel of an image [

6]. Since the same target in the distorted image often exhibits local distortions and gray differences compared to in the reference image, the second category method that depends on the edge quality, and the third category method that is restricted to the parametric model are unsuitable. The first category method is comparatively simple and easy to combine with other methods. According to the evaluation of various feature point extraction algorithms in [

7], Scale-invariant feature transform (SIFT) [

8] can provide high stability of image translation, rotation, and scaling. However, its modeling principle does not include complete affine invariance, which is only robust to a certain degree of angle changes in practice. Afterwards, Morel [

9] achieved an increasing number of extracted points by simulating scale and parameters related to the optical axis of the imaging sensor and normalizing parameters related to translation and rotation. However, the algorithm is more complex to perform the simulation and normalization for each image pairs, and it is time consuming in practice. In this paper, a new CPs extraction algorithm is proposed by compensating the perspective difference for large angle (LA) images. A reference feature point set is formed by simulating the multi-views of the reference image and performing feature point detection on simulated images. The proposed algorithm ensures effective matching of feature points between the reference image and LA images.

Moreover, how to precisely estimate model parameters is another important aspect for correction accuracy [

10,

11]. Goncalves [

12] experimentally proved the influence of the CP distribution on solving model parameters. The correction accuracy achieved by a uniform distribution of CPs is better than it obtained with a non-uniform set [

13]. In addition, traditional methods usually employ the same correction model for a whole image [

14] while few have considered the serious local distortions and resolution changes causing by LA imaging. Also, the large dimensions of an image would obviously magnify the error correction model parameters. Assuming that a 1000 × 1000 image is processed using the second-order polynomial model, the order of magnitude will reach 106. The piecewise linear functions [

15,

16,

17], which divide the images into triangular elements by Delaunay’s triangulation method, and take affine transformation as the mapping function, are suitable to reduce local geometric distortions. However, the correction accuracy possible using the affine transformation model is lower than that of using a projection transformation model for LA images. The projection transformation model additionally requires at least five CP pairs. Consequently, the proposed piecewise correction algorithm is indispensable for LA image correction. Firstly, the image is separated into grids according to the variability of spatial resolution. Severely distorted are as have relatively intensive grid distributions. Based on these grids, the overall CP distribution is controlled and the piecewise location is determined. Then, CPs are optimized by joining the CPs distribution measurement (DM) to select the representative CPs in each grid. Finally, subparts deduced from the piecewise location are corrected by different correction formulae using the optimized CPs.

The rest of this paper is organized as follows: In

Section 2, CP extraction based on multi-view simulation (MVS) is introduced. The piecewise correction algorithm is advanced with the optimized CPs by DM are described in

Section 3. In

Section 4, experimental results are presented to quantify the effectiveness of the new method. The conclusions of this paper with a summary of our results are provided in the final section.

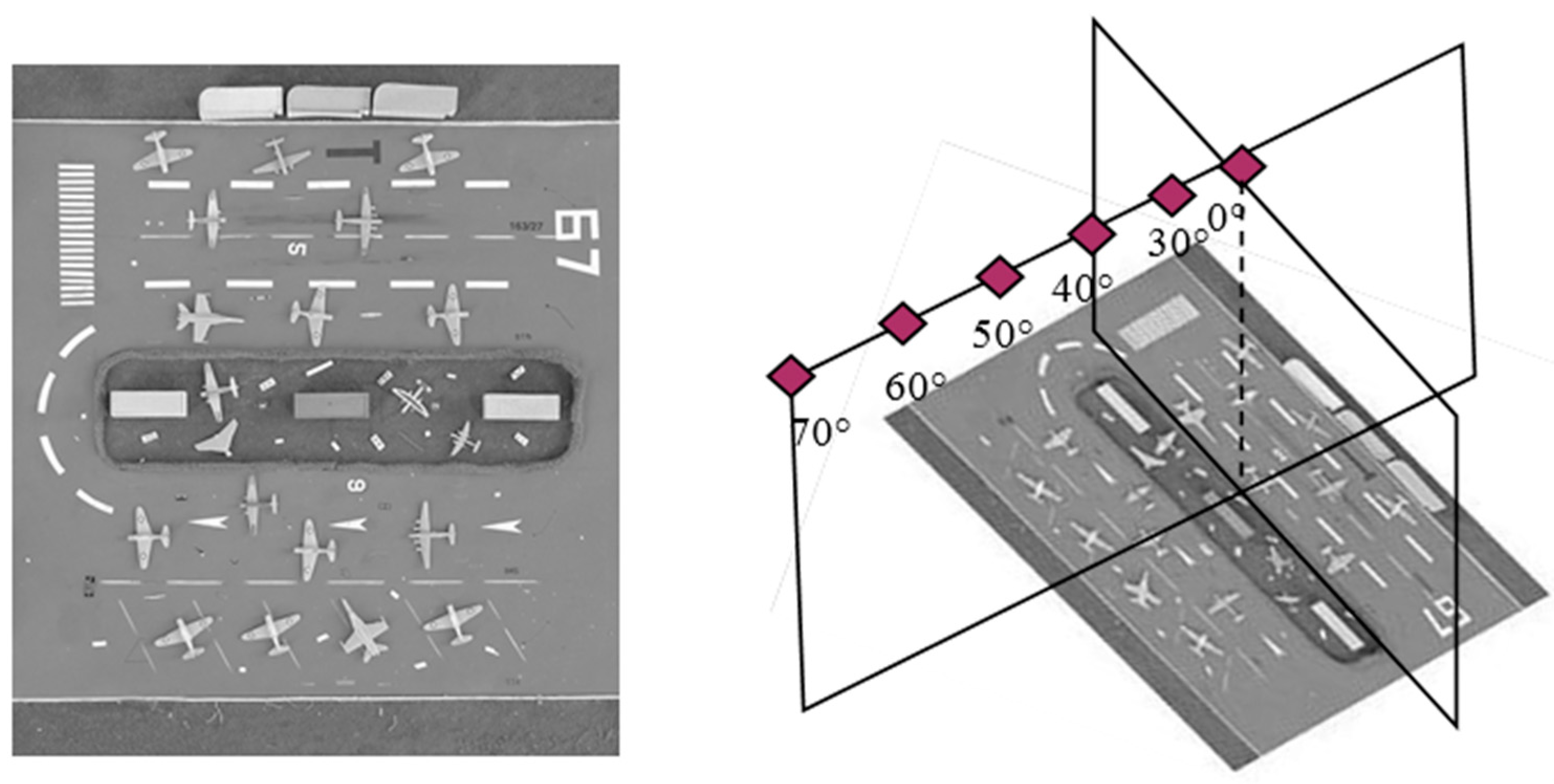

2. CPs Extraction Based on Multi-View Simulation

SIFT, having excellent performance against scaling, rotation, noise and illumination changes, has been applied widely in the feature detection field. However, the modeling process does not consider the imaging oblique angle which results in the change of neighborhood pixels as shown in

Figure 1. For example, in the process of the scale space extremum detection, determining whether the current pixel is a maximum value or minimum value requires 26 pixels in the neighborhood as a reference. If the reference pixels are changed, such as resolution reduction and gray distortion caused by LA imaging, the result of the extreme value would be changed. As another example, the process of the key point description also uses the gradient and direction of neighboring pixels as a reference. If the reference pixels were changed, the description vector would be changed which can reduce the matching probability. In the [

18], the impact of LA imaging on spatial resolution is analyzed. For LA images, along the seriously oblique direction, the spatial resolution is decreasing with the increasing of imaging angles as shown in

Figure 2. The off-nadir ground resolution

is given by:

where

is the nadir’s resolution,

θ is the angle from nadir. The off-nadir resolution is reduced by a factor of cosine squared of

θ. Equation (1) demonstrates that the resolution compression is nonlinear when the imaging angle is larger than 45°. The performance of SIFT is stable within 50° while it is obviously ineffective beyond 50°, which is shown in the following experimental

Section 4.1. The experimental results are consistent with the theoretical analysis.

Based on the above analysis, in order to compensate viewing angle differences between the reference image and LA images, SIFT is extended to the multi-views space in this paper. The transformation model employed in MVS is described in

Section 2.1. The detailed procedure of CPs extraction based on MVS is introduced in

Section 2.2.

2.1. Multi-View Transformation Model

The projection relation of ground scene

I0 to digital image

I can be described as:

where

A and

T are projection and translation, respectively.

According to the affine approximation theory [

19], the above model can be expressed as:

If any determinant of

A is strictly positive, following the Singular Value Decomposition (SVD) principle,

A has a unique decomposition:

where

> 0,

is a zoom parameter,

R1 and

R2 are two rotation matrices, and

t expresses a tilt, namely a diagonal matrix with first eigenvalue

t > 1 and the second eigenvalue equal to 1.

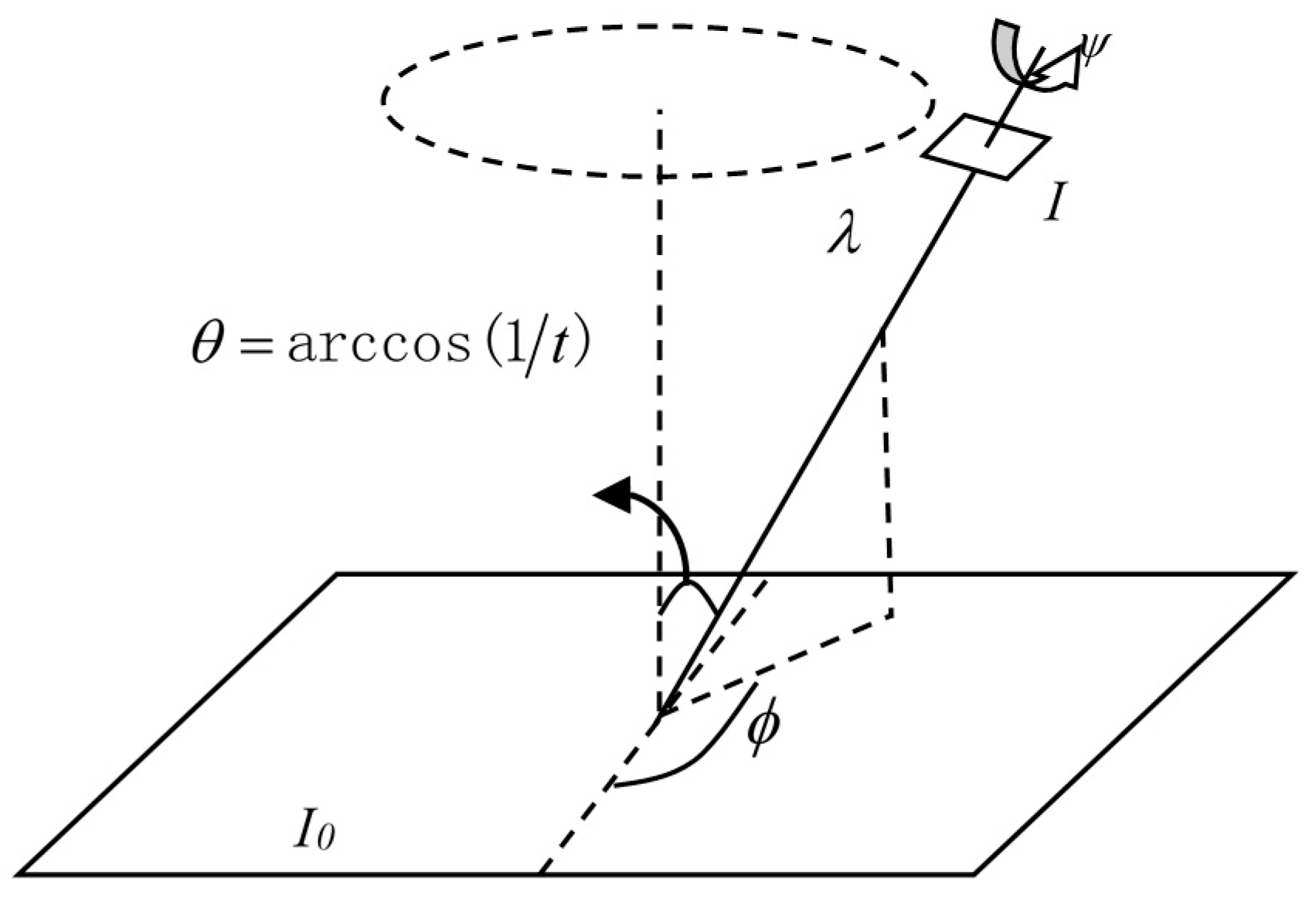

The geometric explanation for the matrix decomposition of transformation is shown in

Figure 3. The angle

φ is called longitude, which is formed by the plane containing the normal and the optical axis with a fixed vertical plane, where

φ = ∊[0,

π). The angle

θ = arccos(1/

t) is called latitude, which is formed by the optical axis with the normal to the image plane

I0, where

θ = ∊[0,

π/2).

ψ is the rotation angle of the imaging sensor around the optical axis. As seen in

Figure 3, the motion of the imaging sensor leads to a parameter change from the positive perspective (

λ0 = 1,

t0 = 1,

φ0 =

ψ0 = 0) to the oblique angle (

λ,

t,

φ,

ψ), which represents the transformation

I(

x,y) →

I(

A(

x,y)), where the longitude

φ and the latitude

θ determine the amount of perspective changes. The MVS is mainly based on two factors: the longitude

φ and the latitude

θ.

θ is represented by the tilt parameter

t = |1/cosθ| which measures the oblique amount between the reference image and LA images. The oblique distortion causes a directional subsampling in the direction given by the longitude

φ.

The Affine SIFT (ASIFT) approach [

9] operates on each image to simulate all distortions caused by a variation of the camera optical axis direction, and then it applies the SIFT method. ASIFT provides robust image matching between the two images due to the viewpoint change. However, ASIFT is time consuming. Assuming that the tilt and angle ranges are [

tmin,

tmax] = [1,4

] and [

φmin,

φmax] = [0°, 180°], and the sampling steps are Δ

t =

Δ

φ = 72°/

t. The complexity of the ASIFT approach is 1 + (|

Γt|−1)(180°/72°) = 1 + 5 × 2.5 = 13.5 times as much as that of a single SIFT routine, where

. Even if the two-resolution scheme is applied, the ASIFT complexity is still more than twice that of SIFT. On the other hand, multiple matching of ASIFT results in matching point redundancy which demands huge calculating quantity and long computing time during the CPs optimization process. Even so, ASIFT provides a good idea for extracting CPs between the two images of viewing angle differences.

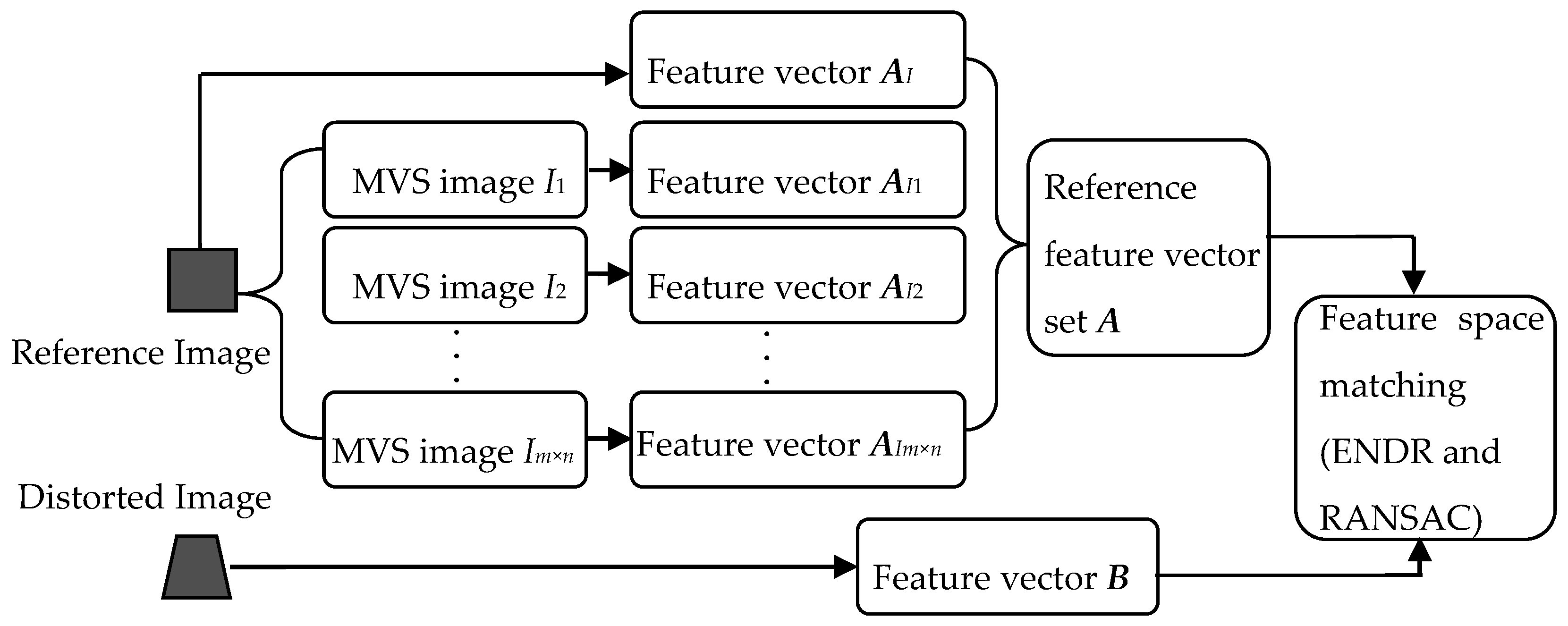

Inspired by ASIFT, the new CPs extraction algorithm is proposed by simulating multi-views of the reference image to compensate the viewing angle difference for LA images. And then the new algorithm applies the SIFT to simulated images to construct a reference feature points database. Finally, feature matching is conducted between the reference feature point database and the feature points set detected from the LA image. The overview of the proposed algorithm is shown as

Figure 4.

2.2. Procedure of CPs Extraction Based on Multi-View Simulation

The proposed algorithm proceeds by the following steps:

The reference image I is transformed by simulating all possible affine distortions by changing the camera optical axis orientation. In this process, the oblique transform is simulated by the tilt parameter t. Assuming that the y direction is the oblique direction, it represents I(x,y)→I((x,ty), which is equivalent to perform a y directional t-subsampling. Therefore, an antialiasing filter is previously applied, namely the convolution by a Gaussian with standard deviation This ensures a very small aliasing error.

The simulated images of the reference image are calculated by the tilt parameter

t and the longitude

φ with sampling step lengths. When the latitude

θ increases at a fixed degree, the image distortion is growing. Hence, the sampling density of

θ should increase with the increasing of

θ. As a result, the corresponding tilt parameter

t follows a geometric series

tk1 = 1,

u,

u2,…,

um(

k1 = 0,1,…,

m), with

u > 1. Meanwhile, the image distortion is more drastic by a fixed longitude displacement Δ

φ at the larger latitude

θ = arccos(1/

t). Hence, the longitudes

φ are an arithmetic series for each tilt

(

k2 = 0,1,…,

n), where the integer

k2 satisfies the condition

< 180°). The calculation of simulated images can be described as:

where the

Ik expresses a set of simulated images of the reference image

I, namely

I1,

I2,

…,

Im×n.

The feature point detection and description (SIFT) are applied to the reference image

I and the simulated images

I1,

I2,

…,

Im×n. The feature vector sets

AI,

AI1,

AI2,…,

AIm×n are established which are combined together to form the reference feature vector set

A:

The feature vector set

B, obtained from the distorted LA image, is matched with the reference feature vector set

A by employing the European neighbor distance ratio criterion (ENDR) [

20] and Random Sample Consensus (RANSAC) [

21].

The two-resolution scheme [

9] is applied to the proposed algorithm to reduce the computation complexity. With this, matched feature points, namely CPs, are extracted.

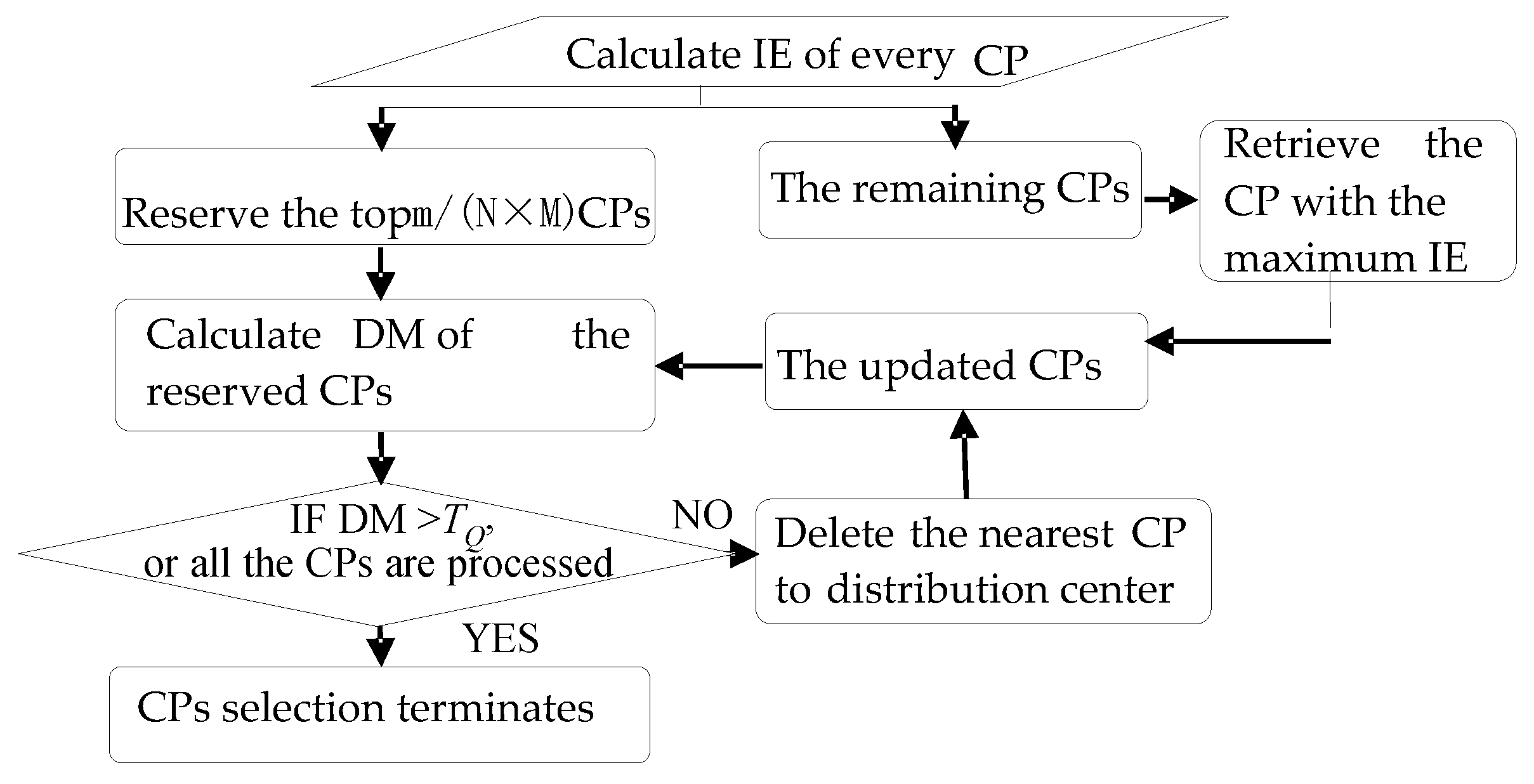

3. Piecewise Correction with Optimized CPs Based on Distribution Measurement

For different correction models, the required minimum number of CPs is different. However, a larger number of CPs doesn’t achieve a better root mean square error (RMSE) when CPs are concentrated in a local area. The CPs which are uniformly distributed in the image can achieve accurate model parameter estimation. To quantify the uniformity of CPs, the concept of DM is introduced based on information entropy. Then the proposed piecewise correction algorithm with the optimized CPs is presented.

3.1. The Distribution Measurement of CPs

Zhu [

22] indicated that the probability of correct matching and information content enjoy strong correlation. This means that the probability of correct matching increases with increasing information entropy. The information entropy of every CP can be obtained by defining the second order differential invariants as the descriptor. The second order differential invariants

v are [

23]:

where

Lx and

Ly are the convolution by the one-dimensional Gauss kernel, and

Lxy is the convolution by the two-dimensional Gauss kernel.

In order to calculate the probability of entropy, the descriptor

v is partitioned into multi-dimensional vector space. The partitioning is dependent on the distance between descriptors which is measured by Mahalanobis distance. The distance between descriptors

v1 and

v2 is:

where

Λ is the covariance matrix.

Λ can be SVD decomposed into

Λ−1 =

GTPG, where

P is a diagonal matrix and

G is an orthogonal matrix. Then,

v can be normalized by

P, which represents

vnorm =

P1/2Gv. After the normalization,

D(

v1,

v2) can be rewritten as:

The descriptor

v can be partitioned into many cells of equal size. Then the probability of each cell is used to calculate the entropy of

v:

where

pi =

nv/

n is the probability of

v.

nv and

n are the number of CPs in each cell and the total number of CPs, respectively.

The CP distribution can be regarded as the spatial dispersion of points, which can be measured by the normalized mean distance between CP and the distribution center of CPs. The distribution center of CPs is:

and DM is computed as:

where

Ix and

Iy are the row and column of the region. The parameter

wi is the weight of the CP

i, which is obtained by

H(

v) as Equation (10).

The bigger the DM is, the better the CPs distribution is. The smaller the DM is, the more concentrated the CPs is.

3.2. Piecewise Correction Strategy with Optimized CPs

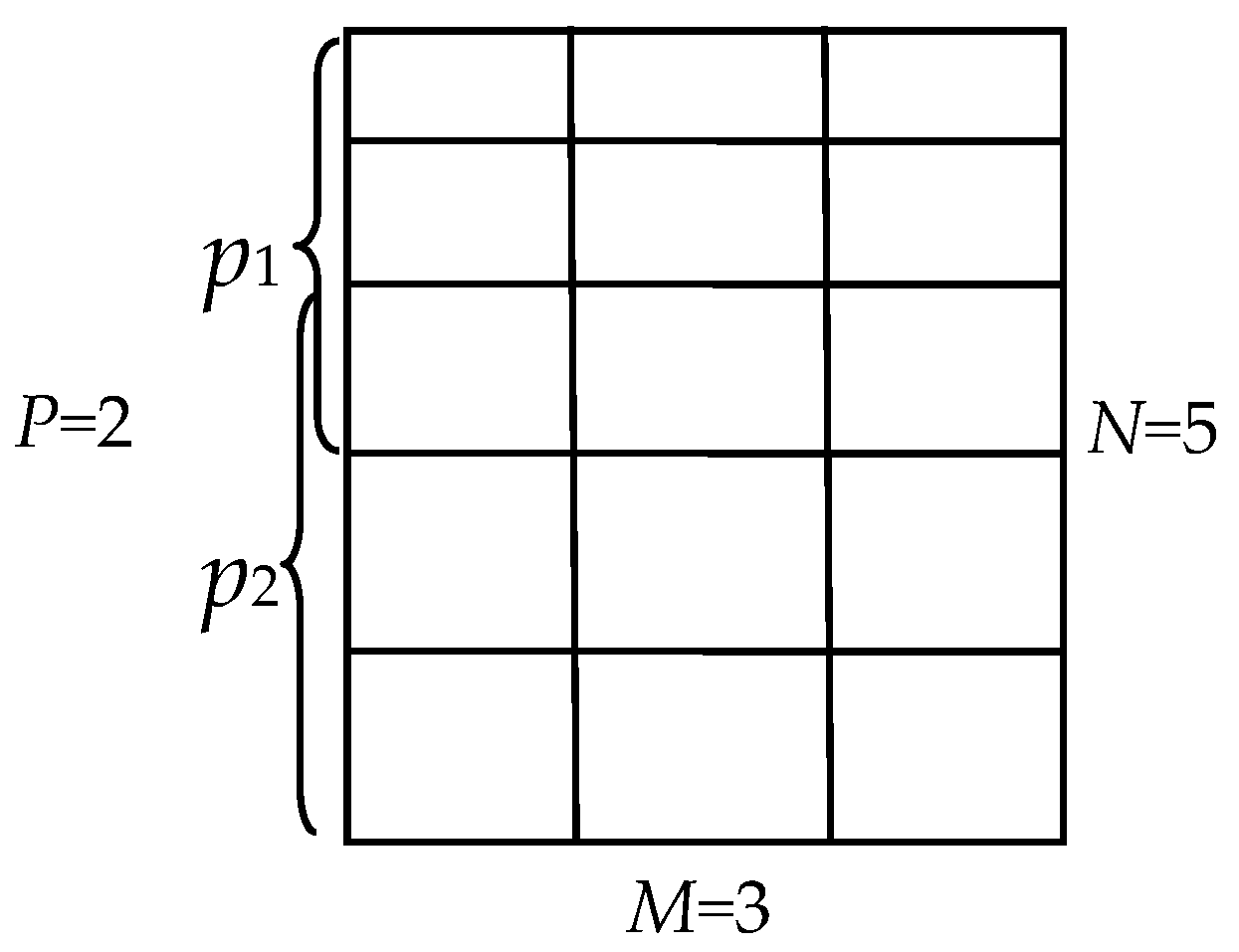

According to the previous analysis, piecewise correction is indispensable for LA images, and the distribution quality of CPs is important to RMSE. Consequently, the image is gridded to determine the piecewise location and simultaneously control the overall CPs distribution. In each grid, DM is employed to constrain the CP quality. By using these procedures, the distribution uniformity of CPs is guaranteed from global to local.

Since the resolution of LA images along the oblique direction (assuming the

y direction) is nonlinear loss, the gridded strategies of the

x direction and the

y direction are different. For the

x direction, the image is averagely divided into

M subparts. For the

y direction, the resolution variability is used to measure the distortion. Our aim is enable each grid to own approximately changing rate to the resolution. As a result, the gridded strategy of the

y direction is shown as in

Figure 5.

It can be seen that the larger the angle is, the smaller the division interval is. The gridded strategy for the

y direction is:

where

θ0 and

θN are boundaries of the viewing angles.

N is the subpart amount in the

y direction

.The detail of the proposed piecewise correction algorithm goes as follows:

4. Experimental Results and Analysis

In order to comprehensively illustrate the performance of the proposed method, two groups of datasets are employed. One group is taken from our semi-physical platform by imaging nadir images for the reference image and off-nadir images for distortion images. The viewing angles are from 30° to 70° for an interval of 10° as shown in

Figure 8.

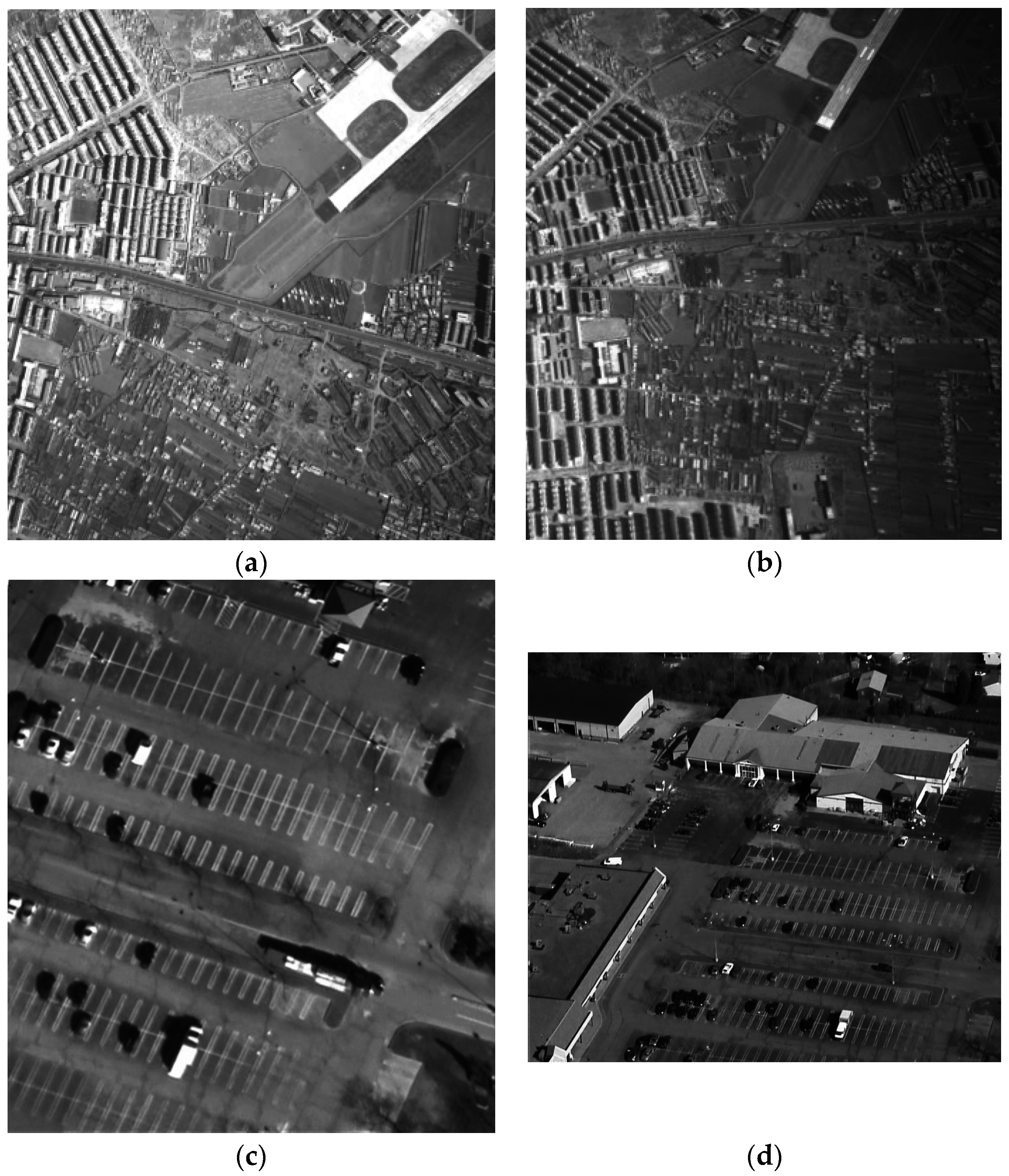

Another is aerial remote sensing images as shown in

Figure 9, and their details are listed in

Table 1. Our programs are implemented with MATLAB 2012a and the experiments are conducted on a Windows 7 computer with a Xeon 2.66 GHz CPU, and 8 GB memory.

4.1. Performance of CPs Extraction Based on Multi-View Simulation

This experiment is to examine the adaptability of the CPs extraction based on MVS for LA images. MVS of the reference image takes into account all distortions with enough accuracy which are caused by the variation of the imaging sensor optical axis. Therefore, the MVS is applied with very large parameter sampling ranges and small sampling steps. With the increase of the latitude

θ, the distortions are increasingly larger. Especially near 90°, a little change of latitude

θ can give rise to severe distortion. As a result, the sampling density would grow. Setting

satisfies this requirement. Similarly, since the image distortion in the high latitude is larger than that in the low latitude, the sampling step of the longitude

φ should be reduced with the increasing of the latitude

θ. We set

= 72°/

tk1. The corresponding sampling parameters for MVS are demonstrated in

Table 2. In our experiment, we set

m = 4 and

n = 2.

Table 3 lists the correct matching number which is conducted by ASIFT, SIFT, Harris-Affine [

24], Hessian-Affine [

7] and the proposed algorithm respectively. The computation costs of ASIFT, SIFT and the proposed algorithm are shown in

Table 4.

It can be inferred that the performances of all the algorithms are deduced with the increasing of the imaging angle. The ASIFT and the proposed algorithm which can obtain more CPs than the other algorithms are more robust for LA images. For the distorted image with 70° as example, SIFT gets 0 CPs, the proposed algorithm gets 52 CPs, and the ASIFT gets 120 CPs. Compared with SIFT algorithm, the proposed algorithm successfully achieves the LA image correction, so it is worth spending a little bit more time. However, the ASIFT is time consuming compared with the proposed algorithm. The computation cost of ASIFT is nearly twice that of the proposed algorithm. Multiple matching of ASIFT results in the matching point redundancy which demands the huge calculating quantity and long computing time in the following process. This is indicative of the fact that the proposed algorithm is indispensable and more efficient than the other algorithms, especially for LA images. In addition, for the proposed algorithm, the situation whereby the result of 40° is more than the 30° one is related the sampling parameters u.

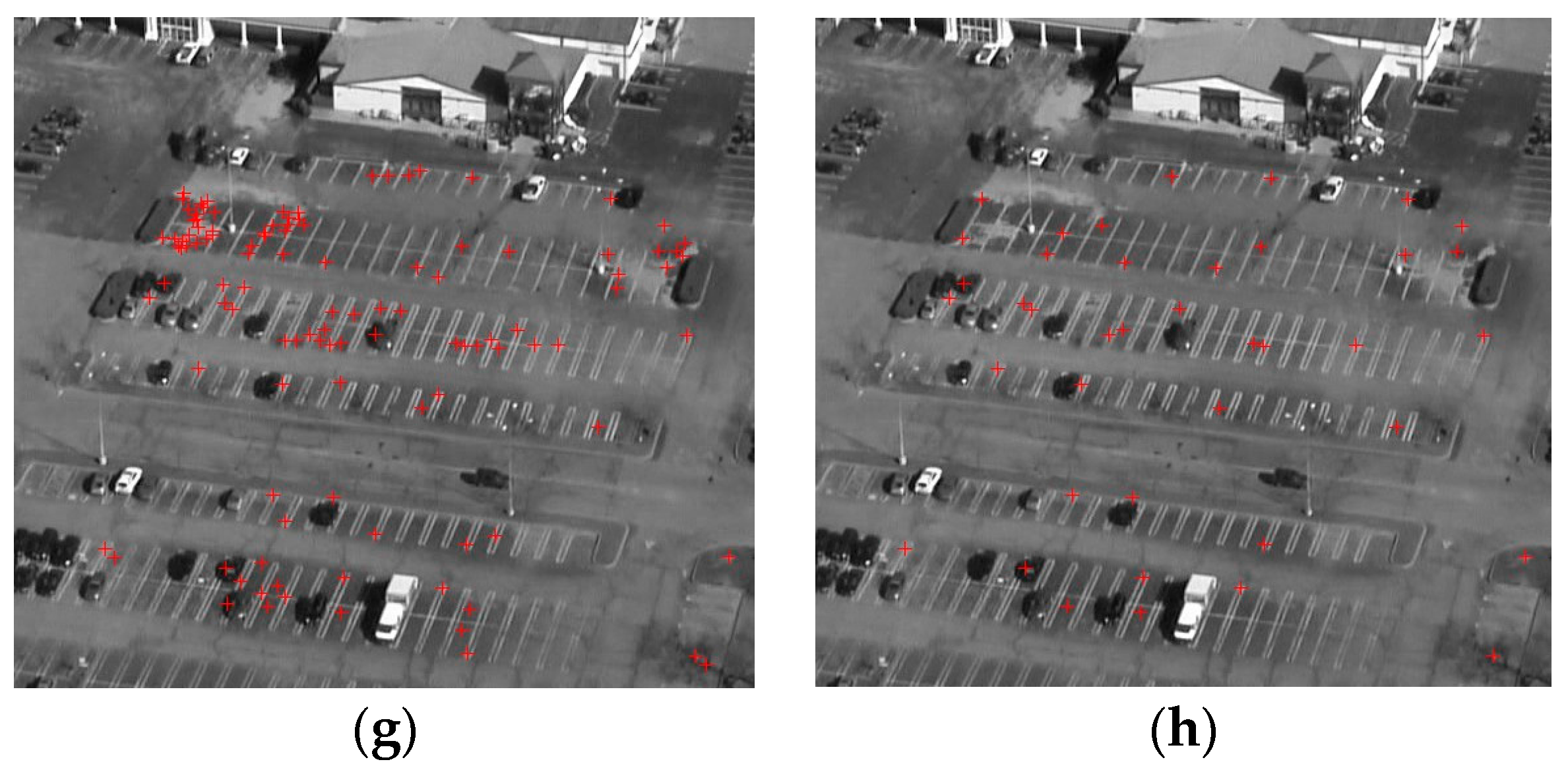

4.2. Performance of the Piecewise Correction with Optimized CPs

The total CPs of the whole image is adjustable. The number normally is 30~100. We set the total number

m = 45. Considering the CPs number and computational complexity, we set

M = 3,

N = 5 and

p = 2, just as shown in

Figure 7. If

Ix equals to

Iy, the threshold

TQ should be 0.25. Considering that

Ix and

Iy usually are different,

TQ is adjusted to 0.35. For the CPs optimization process, the computation efficiencies of two CPs sets, which are obtained by the ASIFT and the proposed algorithm, are listed in

Table 5. It is illustrated that the ASIFT is too time consuming to apply in practice. This is another proof that the proposed algorithm is indispensable. The CPs before and after optimization are shown in

Figure 10.

It is obvious that the CPs gather around some objects before optimization. After the optimization, dense CPs are reduced. To examine the behavior of the proposed method, this part presents the correction results conducted by the total CPs from

Section 2.2, the optimized CPs by using DM, the piecewise linear functions [

15] by using the optimized CPs, and the piecewise strategy from

Section 3.2, respectively. Except for the piecewise linear functions, the projective transformation is employed for the correction model. The test points in all the experiments have the same quantity and quality to ensure the equality of experiments, the number is set to 12. Here, RMSE is used to scale the correction accuracy. It can be given as:

where

n is the number of CPs, Δ

xi and Δ

yi are the CPs’ offset between the reference coordinates and the corrected coordinates. We also compute the correction errors of the

x and

y directions based on

and

.

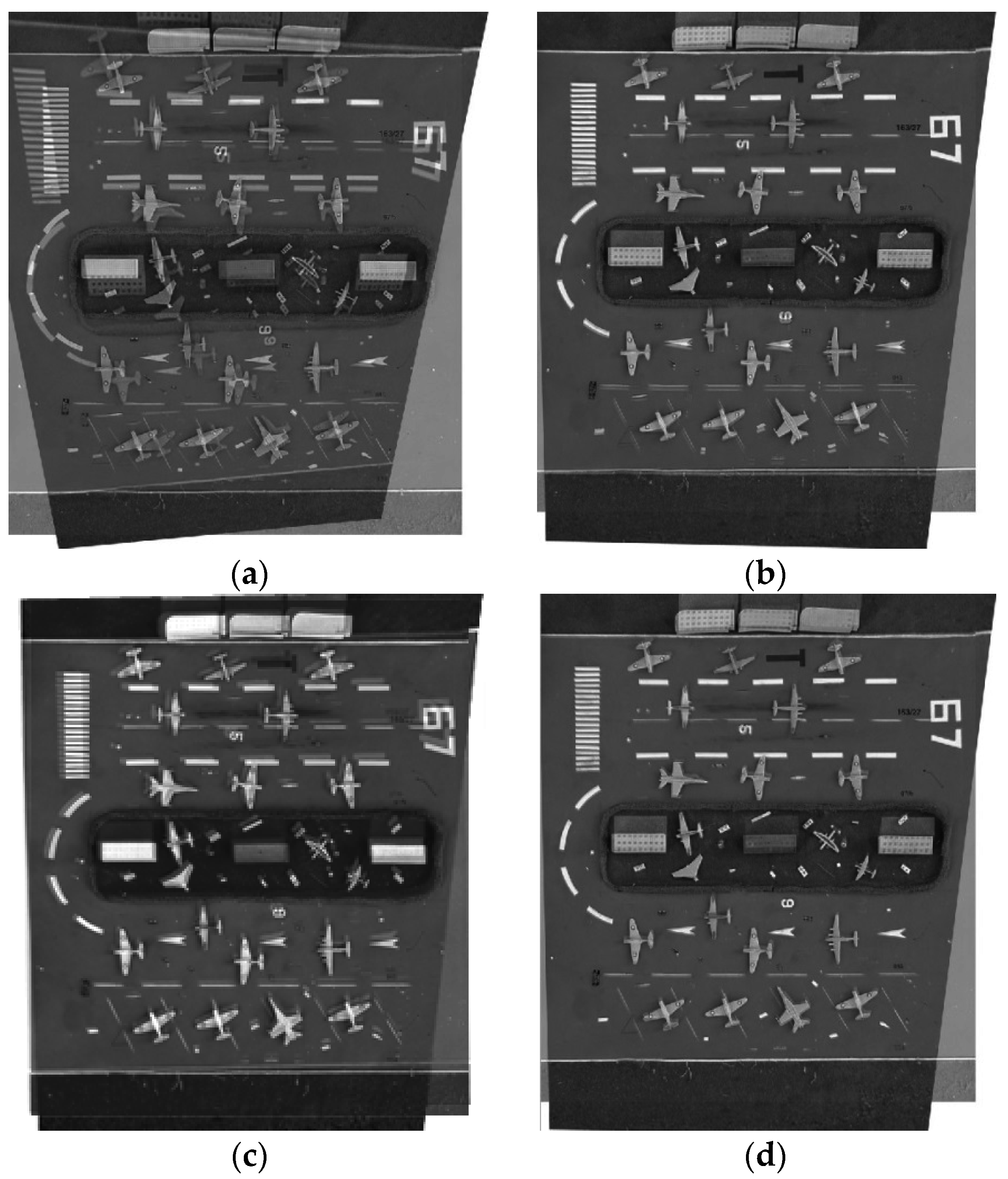

Comparison of correction errors for the

x and

y direction is listed in

Table 6, and the comparison of RMSEs is listed in

Table 7.The mosaic results by different correction methods for 60° as an example are shown in

Figure 11.

Figure 12 shows the mosaic results of the aerial images 1 and 2 by different correction methods.

Visual inspection suggests that

Figure 11a and the

Figure 12b possess obvious double shadows. It means that correcting using the total CPs gives rise to a larger correction error. Similarly,

Figure 11c and the

Figure 12f also possess double shadows. This demonstrates the piecewise linear functions are not suitable for correcting LA images. A comparison of correction errors for the

x and

y direction is given in

Table 6, and comparisons of RMSEs are listed in

Table 7. It is observed that the optimized distribution of CPs is effective at improving the correction accuracy. After CP optimization, the reduction of the number of CPs doesn’t lead to a reduction of the correction accuracy, instead, the correction accuracy in the

x and

y direction is advanced, and the final RMSE is also improved. For the LA image with 60°, RMSE increases 12.03 pixels.

Compared with the model estimation based on the whole image, the proposed piecewise strategy improves the correction accuracy in both the x and y direction, and the final RMSE accordingly increases. The improvement of the correction accuracy increases with the increasing of the oblique angle especially for the y direction. For the LA image with 70°, RMSE of the proposed piecewise correction advances 0.45 pixels compared to the whole image correction. We can conclude that the proposed algorithm is effective and adaptable to correct LA distorted images.