Ontology-Based High-Level Context Inference for Human Behavior Identification

Abstract

1. Introduction

2. Related Work

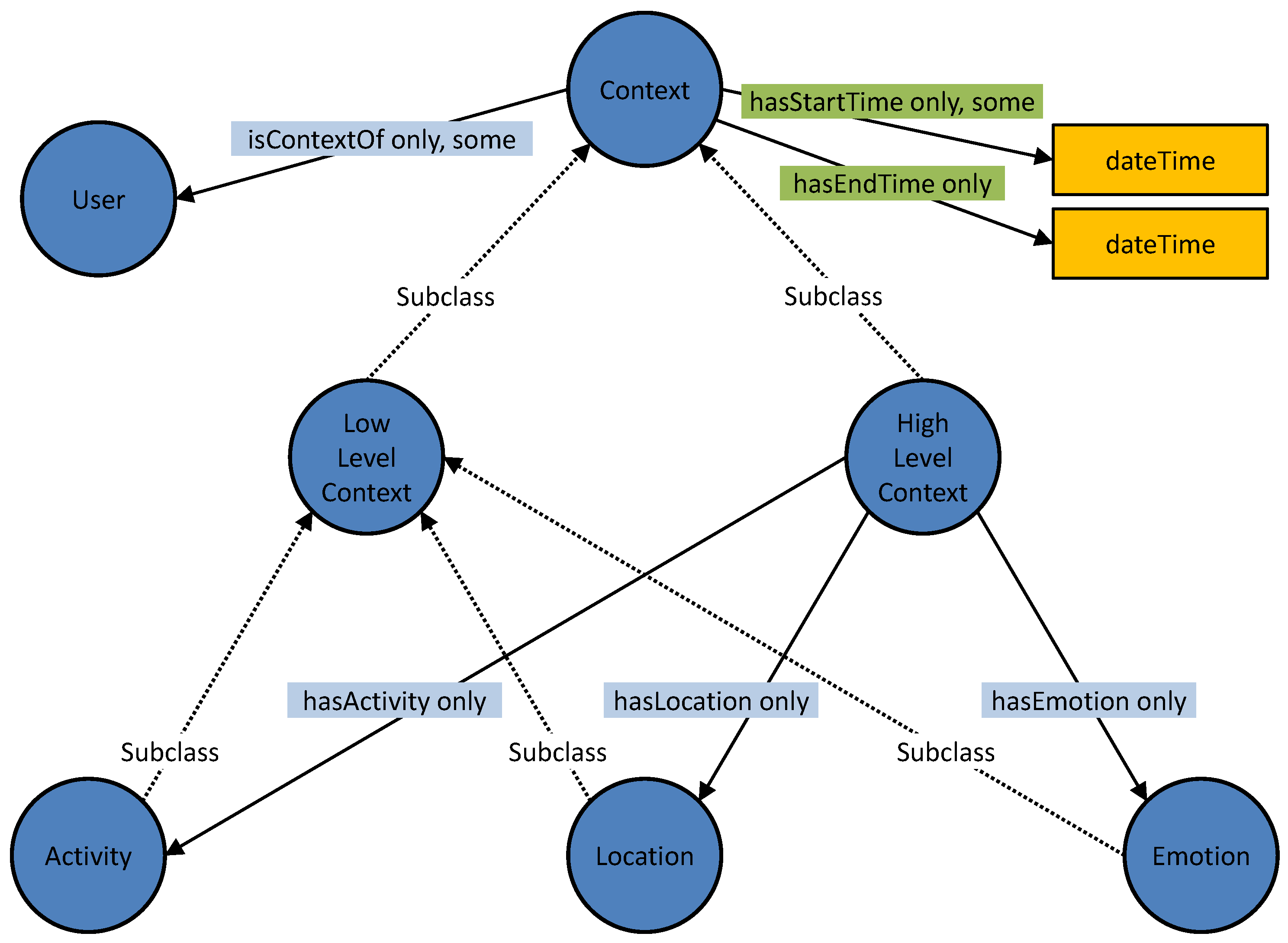

3. Mining Minds Context Ontology

3.1. Terminology for the Definition of Context

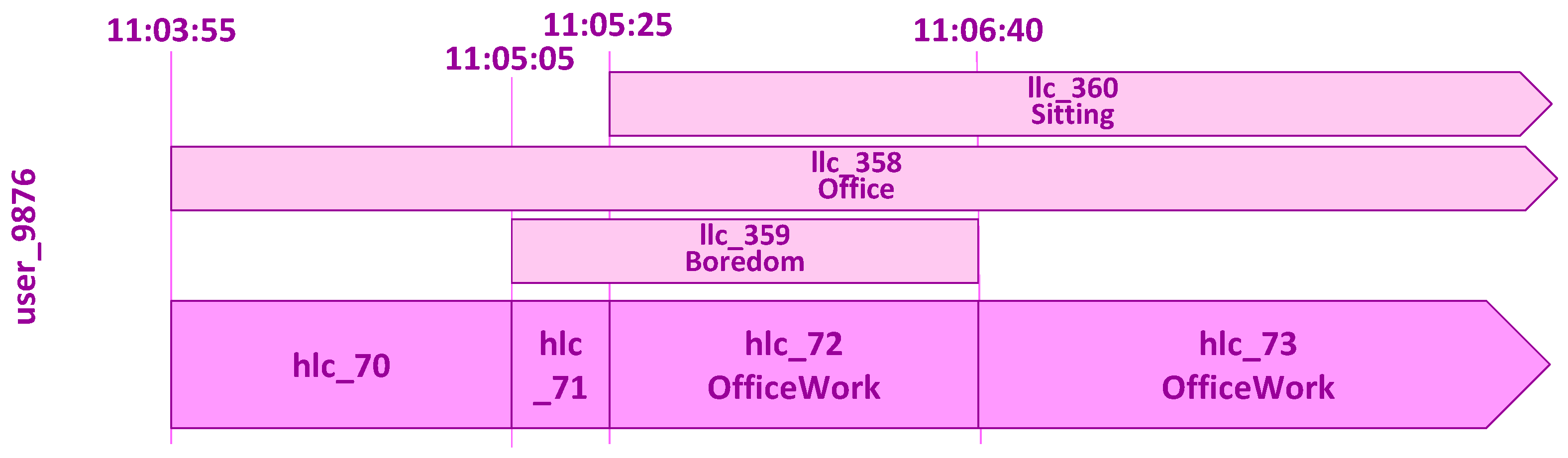

3.2. Instances of Context

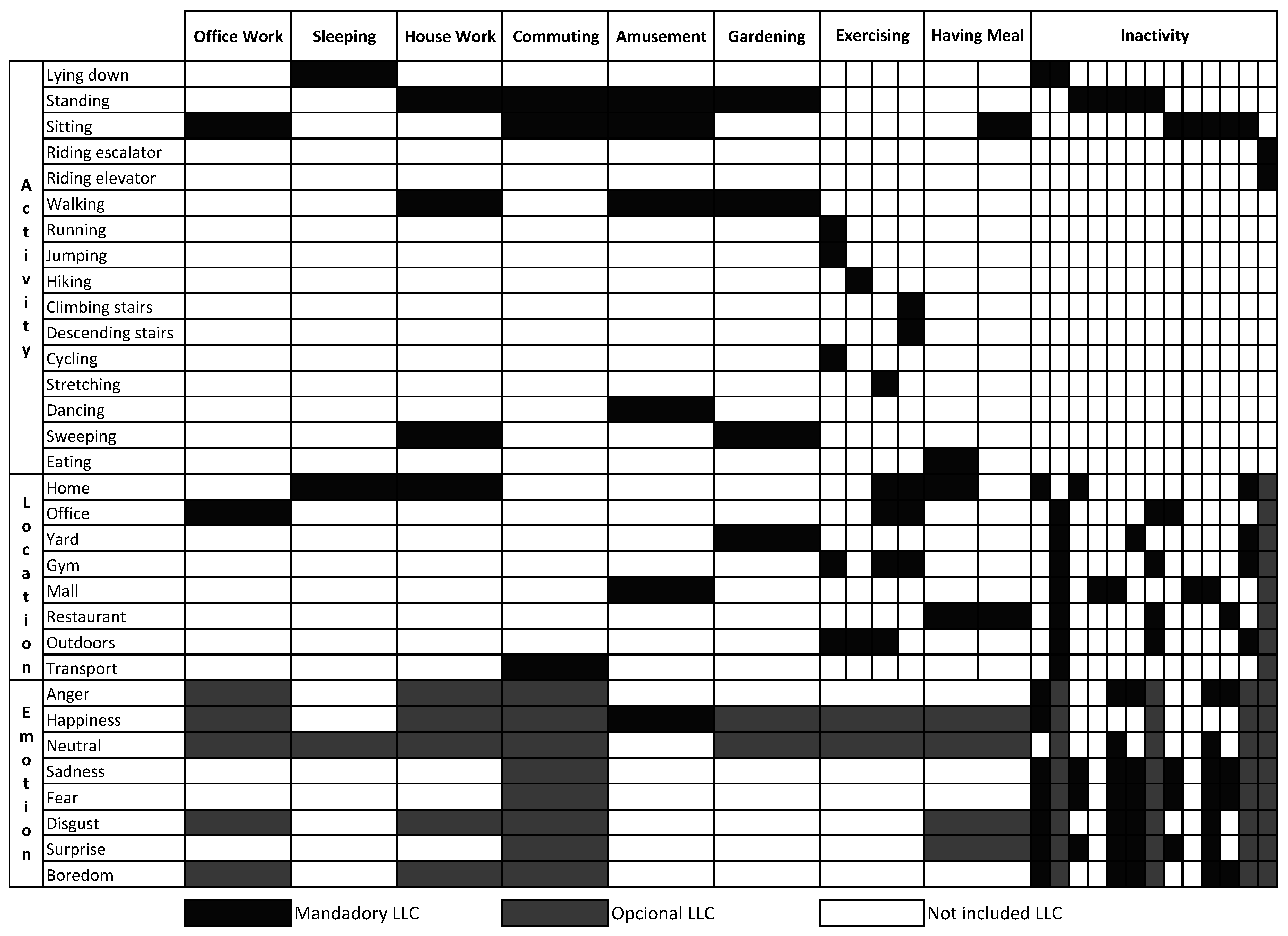

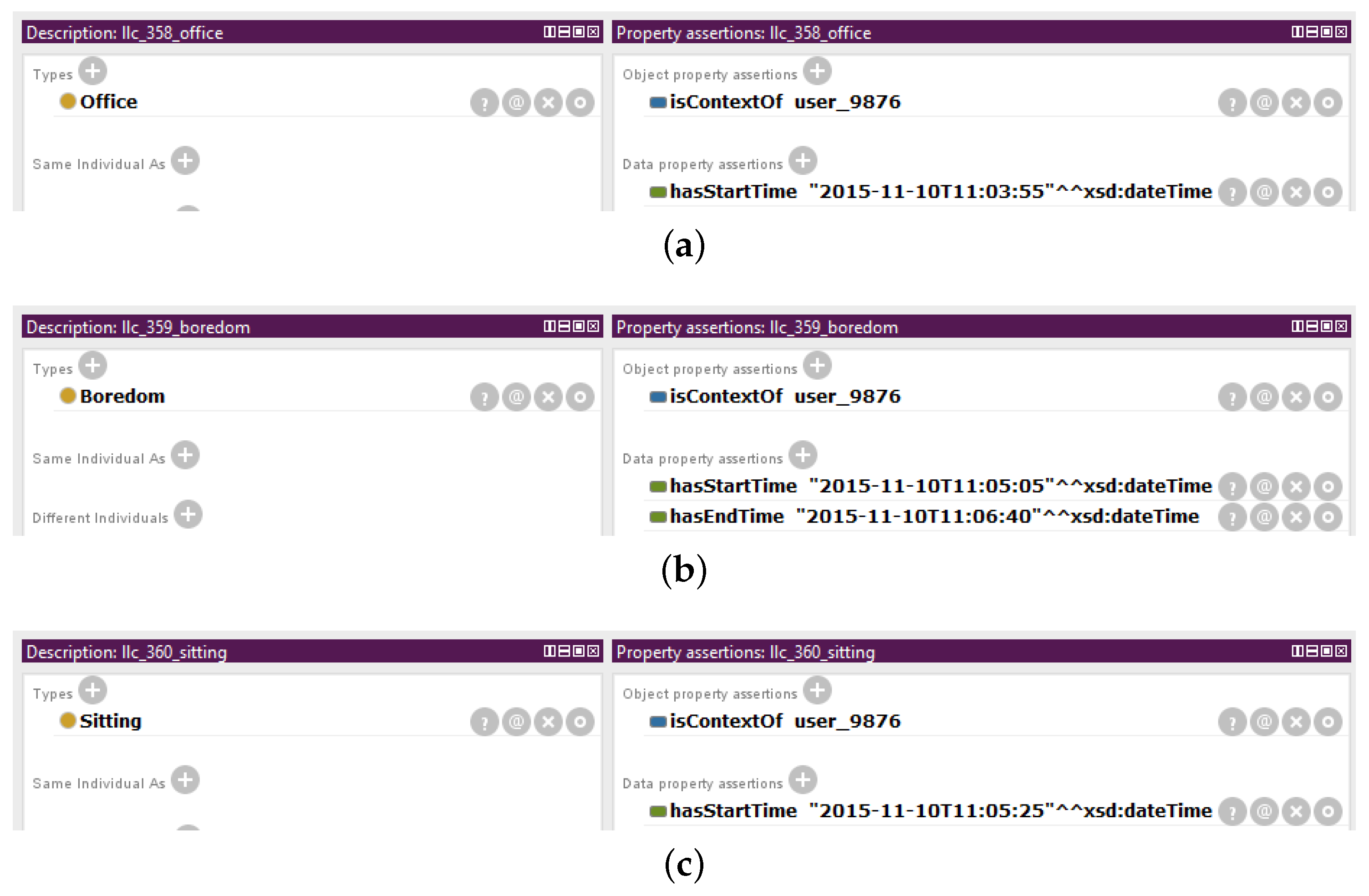

3.2.1. Instances of Low-Level Context

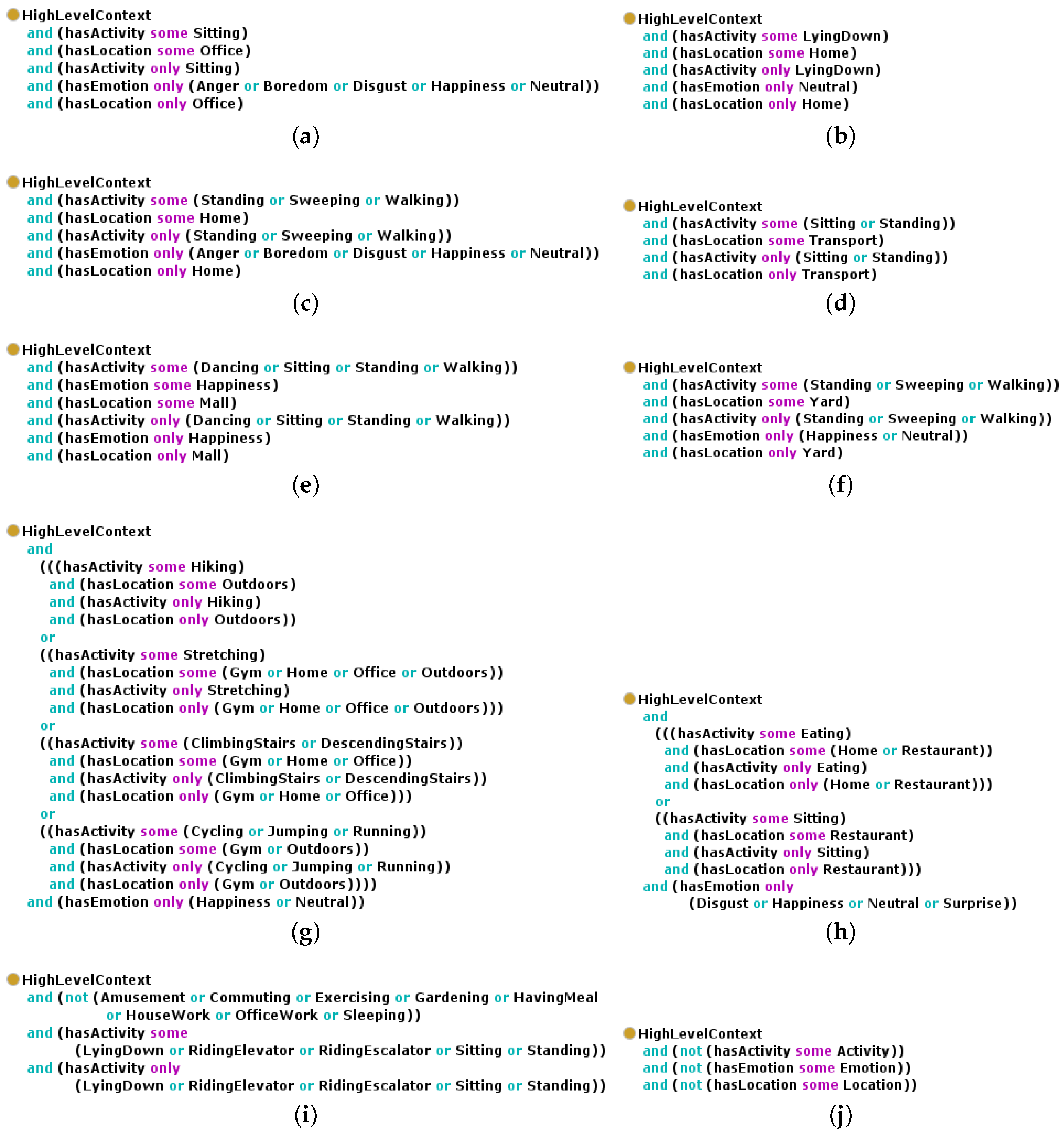

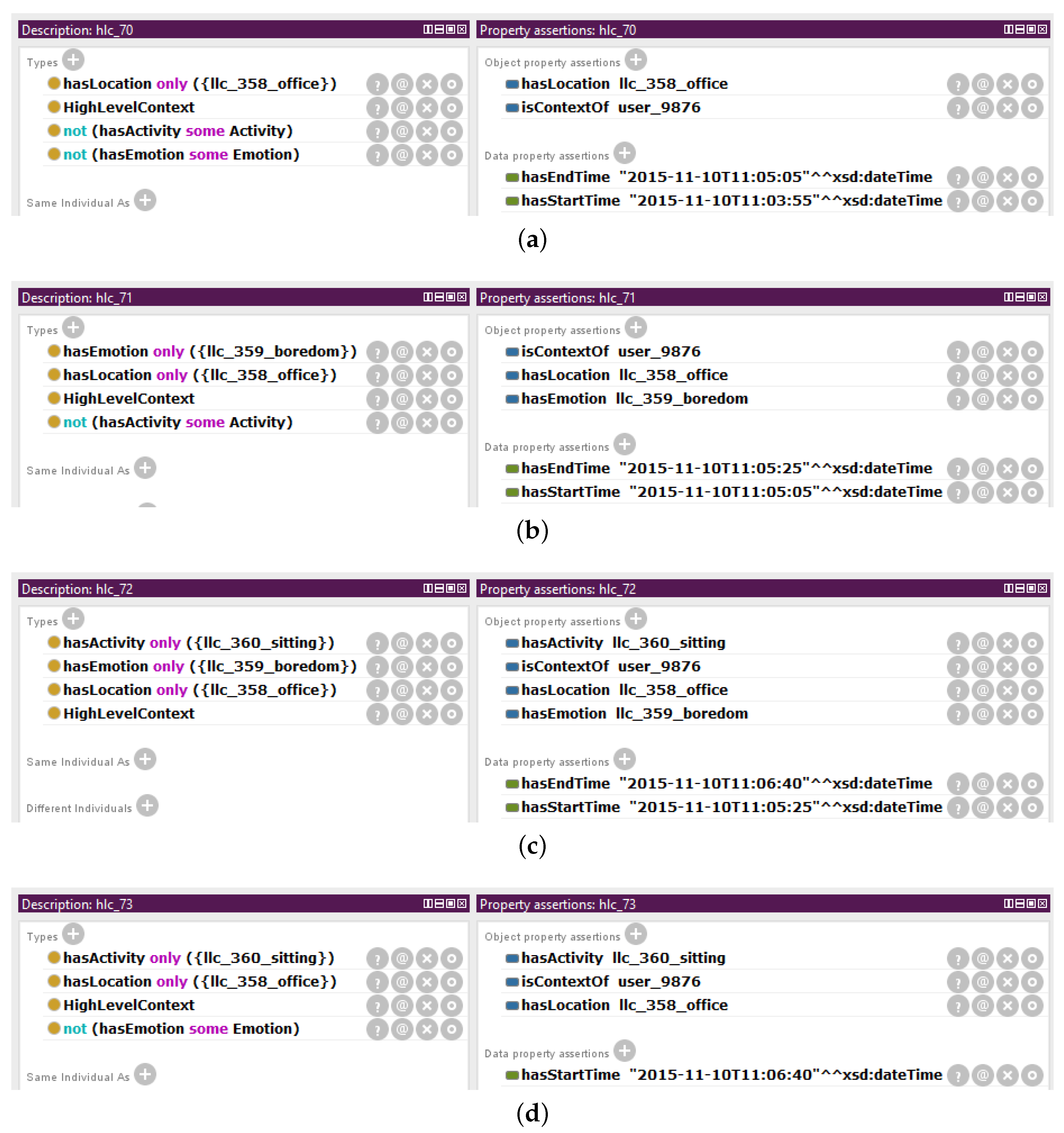

3.2.2. Instances of Unclassified High-Level Context

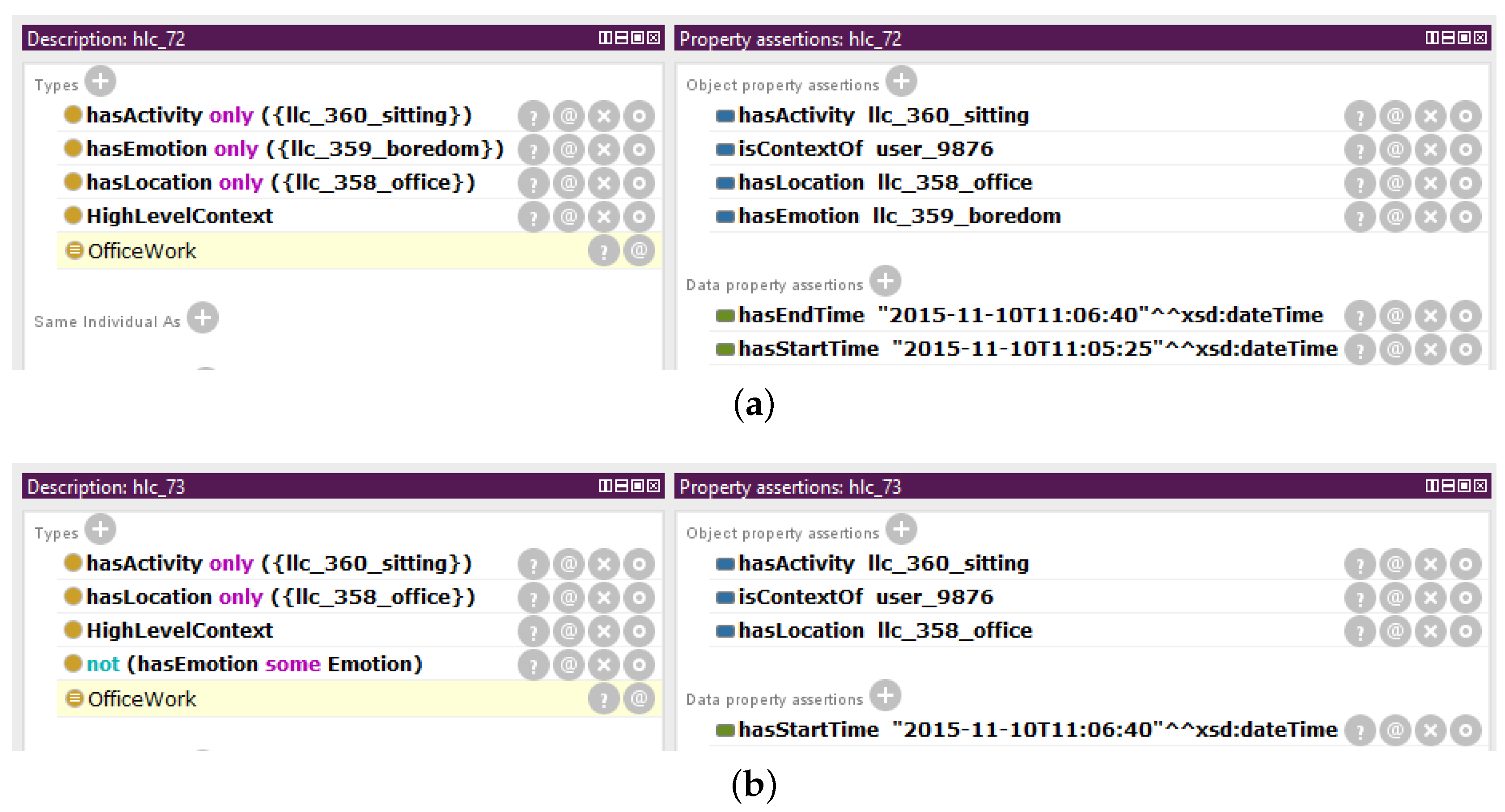

3.2.3. Instances of Classified High-Level Context

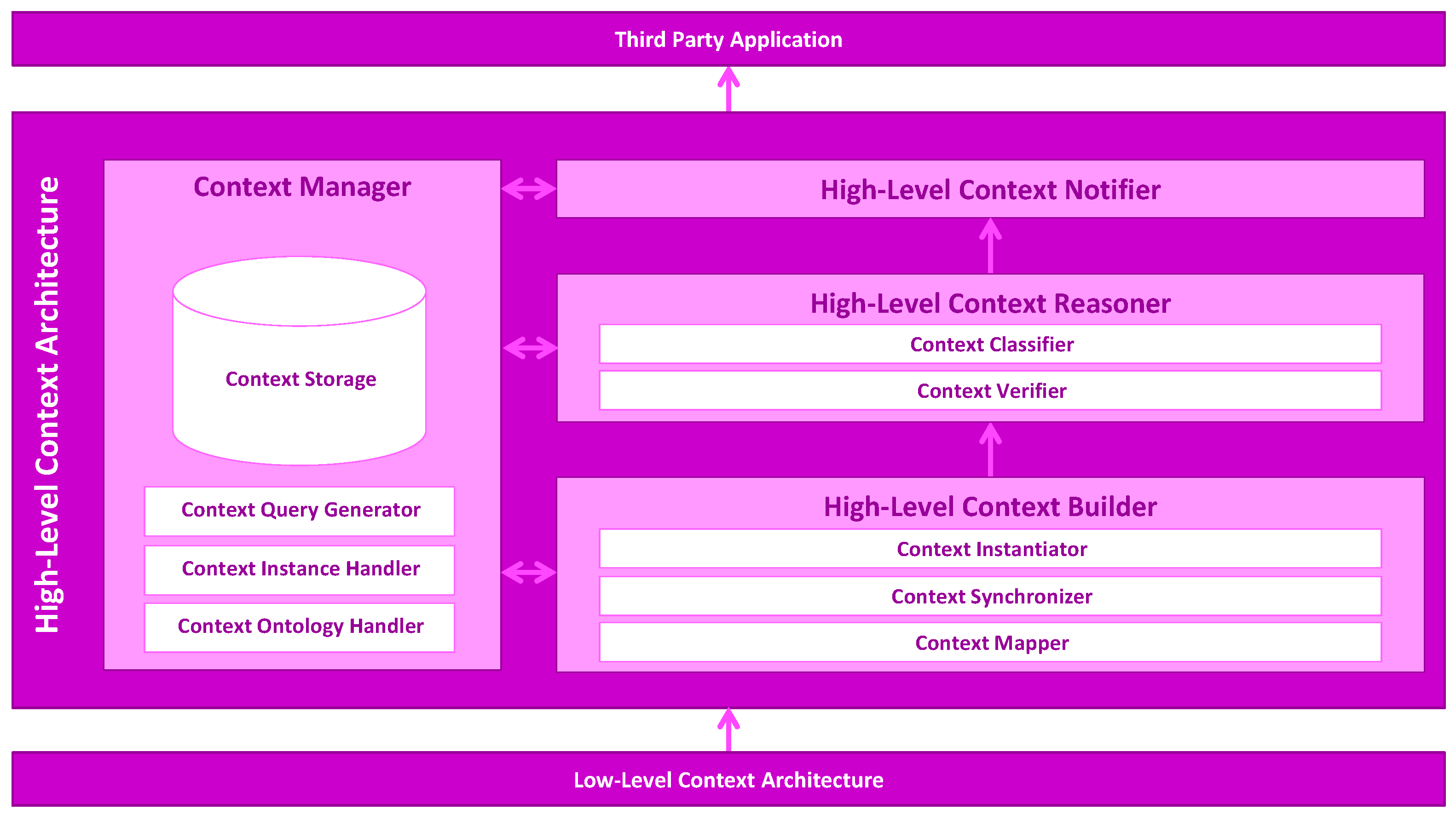

4. Mining Minds High-Level Context Architecture

4.1. High-Level Context Builder

4.1.1. Context Mapper

4.1.2. Context Synchronizer

4.1.3. Context Instantiator

4.2. High-Level Context Reasoner

4.2.1. Context Verifier

4.2.2. Context Classifier

4.3. High-Level Context Notifier

4.4. Context Manager

4.4.1. Context Storage

4.4.2. Context Ontology Handler

4.4.3. Context Instance Handler

4.4.4. Context Query Generator

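```sparql
PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
# Assumed namespace for the Mining Minds Context Ontology terms
PREFIX mmc: <http://www.miningminds.re.kr/icl/context/context-v2.owl#>

# High-level contexts of user_9876 that started at or before the given
# instant and for which no end time has been recorded
SELECT ?hlc
WHERE {
  ?hlc rdf:type mmc:HighLevelContext ;
       mmc:isContextOf mmc:user_9876 ;
       mmc:hasStartTime ?starttime .
  FILTER NOT EXISTS { ?hlc mmc:hasEndTime ?endtime }
  FILTER ( ?starttime <= "2015-11-10T11:05:25"^^xsd:dateTime )
}
```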
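A query like the one above can be generated programmatically for any user and instant. The following Python sketch shows one way to do it; the function name `build_open_hlc_query`, the `mmc:` prefix, and its namespace IRI (derived here from the published ontology URL, http://www.miningminds.re.kr/icl/context/context-v2.owl) are assumptions for illustration, not the Mining Minds implementation. The emitted query declares the `rdf:` and `xsd:` prefixes and wraps the `FILTER NOT EXISTS` pattern in braces, as SPARQL 1.1 requires.

```python
from datetime import datetime

# Assumed namespace IRI; the exact one used by Mining Minds is not stated.
MMC_NS = "http://www.miningminds.re.kr/icl/context/context-v2.owl#"

# Doubled braces are literal braces after str.format().
QUERY_TEMPLATE = """PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>
PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>
PREFIX mmc: <{ns}>
SELECT ?hlc
WHERE {{
  ?hlc rdf:type mmc:HighLevelContext ;
       mmc:isContextOf mmc:{user} ;
       mmc:hasStartTime ?starttime .
  FILTER NOT EXISTS {{ ?hlc mmc:hasEndTime ?endtime }}
  FILTER ( ?starttime <= "{time}"^^xsd:dateTime )
}}"""


def build_open_hlc_query(user_id: str, at: datetime) -> str:
    """Hypothetical helper: SPARQL query selecting the high-level contexts
    of `user_id` that started at or before `at` and have no end time."""
    # Accept only ASCII letters, digits and underscores so the identifier
    # stays a plain local name and cannot inject extra SPARQL syntax.
    if not user_id or not all(c.isascii() and (c.isalnum() or c == "_")
                              for c in user_id):
        raise ValueError(f"invalid user identifier: {user_id!r}")
    # xsd:dateTime lexical form without fractional seconds or timezone,
    # matching the literal in the example query.
    time_literal = at.strftime("%Y-%m-%dT%H:%M:%S")
    return QUERY_TEMPLATE.format(ns=MMC_NS, user=user_id, time=time_literal)


if __name__ == "__main__":
    print(build_open_hlc_query("user_9876", datetime(2015, 11, 10, 11, 5, 25)))
```

Restricting the identifier to `[A-Za-z0-9_]` is only the simplest way to keep user input from changing the query structure; an RDF library's parameterized-query support (for example, Apache Jena's `ParameterizedSparqlString`) would be the more robust choice.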

5. Evaluation

5.1. Robustness of the Mining Minds Context Ontology

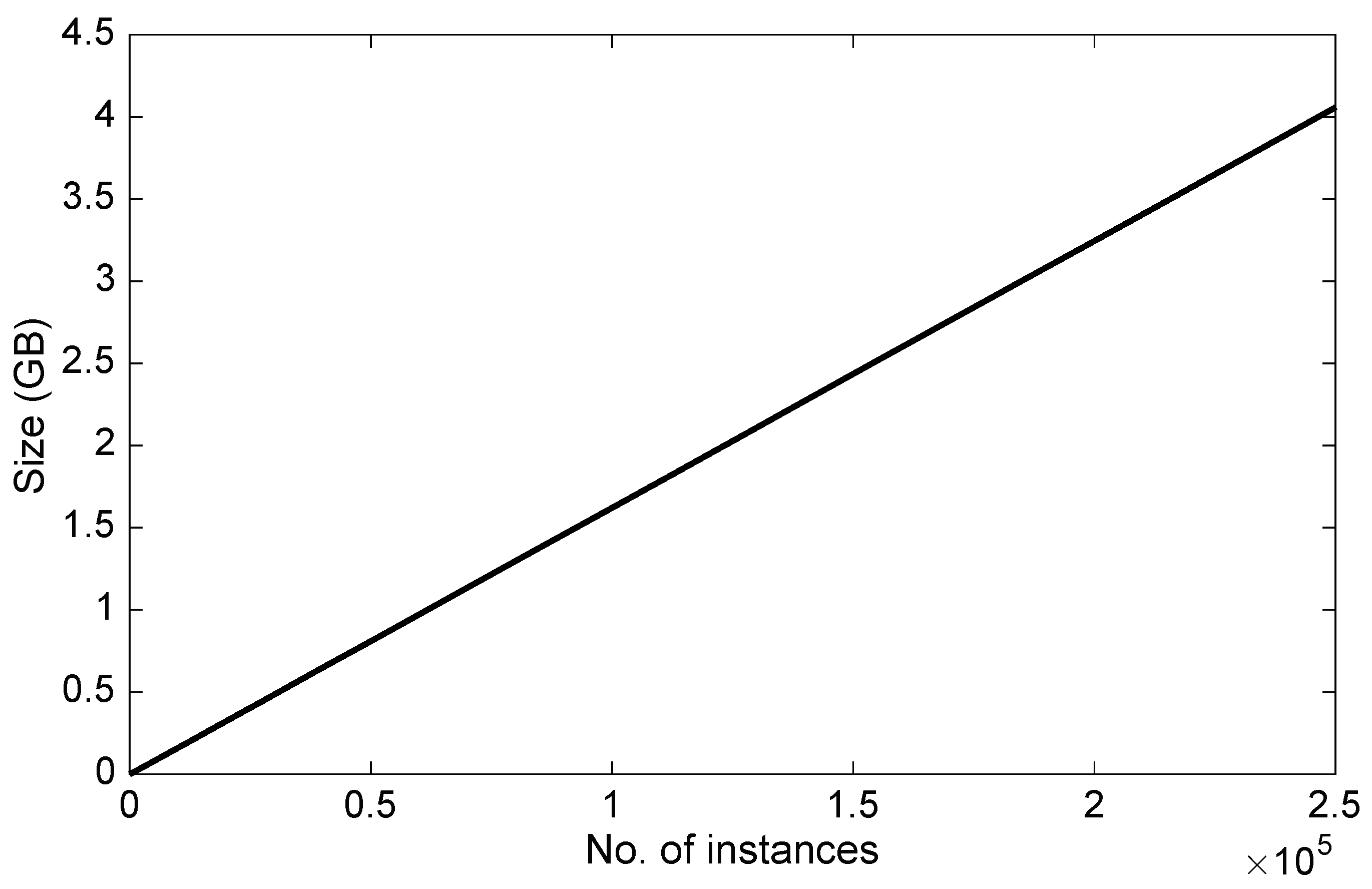

5.2. Performance of the Mining Minds High-Level Context Architecture

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Low-level context | 5% | 10% | 20% | 50% |
|---|---|---|---|---|
| Activity | 97.60 ± 0.05 | 95.13 ± 0.05 | 90.39 ± 0.04 | 75.32 ± 0.20 |
| Location | 99.45 ± 0.02 | 98.82 ± 0.05 | 97.61 ± 0.15 | 93.93 ± 0.02 |
| Emotion | 99.63 ± 0.02 | 99.18 ± 0.05 | 98.32 ± 0.05 | 96.04 ± 0.07 |
| Activity & Location | 97.08 ± 0.10 | 94.27 ± 0.16 | 88.48 ± 0.11 | 72.63 ± 0.10 |
| Activity & Emotion | 97.16 ± 0.12 | 94.22 ± 0.06 | 89.60 ± 0.10 | 73.53 ± 0.30 |
| Location & Emotion | 99.00 ± 0.05 | 98.02 ± 0.09 | 96.24 ± 0.05 | 91.25 ± 0.09 |
| Activity & Location & Emotion | 96.56 ± 0.06 | 93.10 ± 0.30 | 87.52 ± 0.11 | 71.60 ± 0.13 |

| Measure | Context Mapper | Context Synchronizer | Context Instantiator | Context Verifier | Context Classifier | Context Notifier |
|---|---|---|---|---|---|---|
| Mean (s) | 0.986 | 2.188 | 0.001 | 0.032 | 0.046 | 1.012 |
| Standard Deviation (s) | 0.348 | 1.670 | 0.000 | 0.014 | 0.019 | 0.268 |
| Time in Context Manager (%) | 99.53 | 99.97 | 0.00 | 0.00 | 0.00 | 99.99 |
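As a rough check on these figures, the sketch below sums the mean times and weights each one by its Context Manager percentage. It assumes that the six components run one after another for each identification and that the last row gives the share of each component's time spent in the Context Manager; neither assumption is stated in the table itself, and `COMPONENTS` and `summarize` are hypothetical names.

```python
# Mean processing time per component (s) and share of that time spent in
# the Context Manager (%), copied from the table above.
COMPONENTS = {
    "Context Mapper": (0.986, 99.53),
    "Context Synchronizer": (2.188, 99.97),
    "Context Instantiator": (0.001, 0.00),
    "Context Verifier": (0.032, 0.00),
    "Context Classifier": (0.046, 0.00),
    "Context Notifier": (1.012, 99.99),
}


def summarize(components):
    """Return (total mean time, time in Context Manager, its share in %),
    assuming the components run sequentially for each identification."""
    total = sum(mean for mean, _ in components.values())
    in_manager = sum(mean * pct / 100 for mean, pct in components.values())
    return total, in_manager, 100 * in_manager / total


total, in_manager, share = summarize(COMPONENTS)
print(f"total ~ {total:.3f} s, Context Manager ~ {in_manager:.3f} s ({share:.1f}%)")
# prints: total ~ 4.265 s, Context Manager ~ 4.181 s (98.0%)
```

Under these assumptions an identification takes about 4.3 s on average, roughly 98% of which is spent in the Context Manager, while the two reasoning steps (Context Verifier and Context Classifier) together account for under 0.1 s.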

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Villalonga, C.; Razzaq, M.A.; Khan, W.A.; Pomares, H.; Rojas, I.; Lee, S.; Banos, O. Ontology-Based High-Level Context Inference for Human Behavior Identification. Sensors 2016, 16, 1617. https://doi.org/10.3390/s16101617
