Exploring Direct 3D Interaction for Full Horizontal Parallax Light Field Displays Using Leap Motion Controller

Abstract

1. Introduction

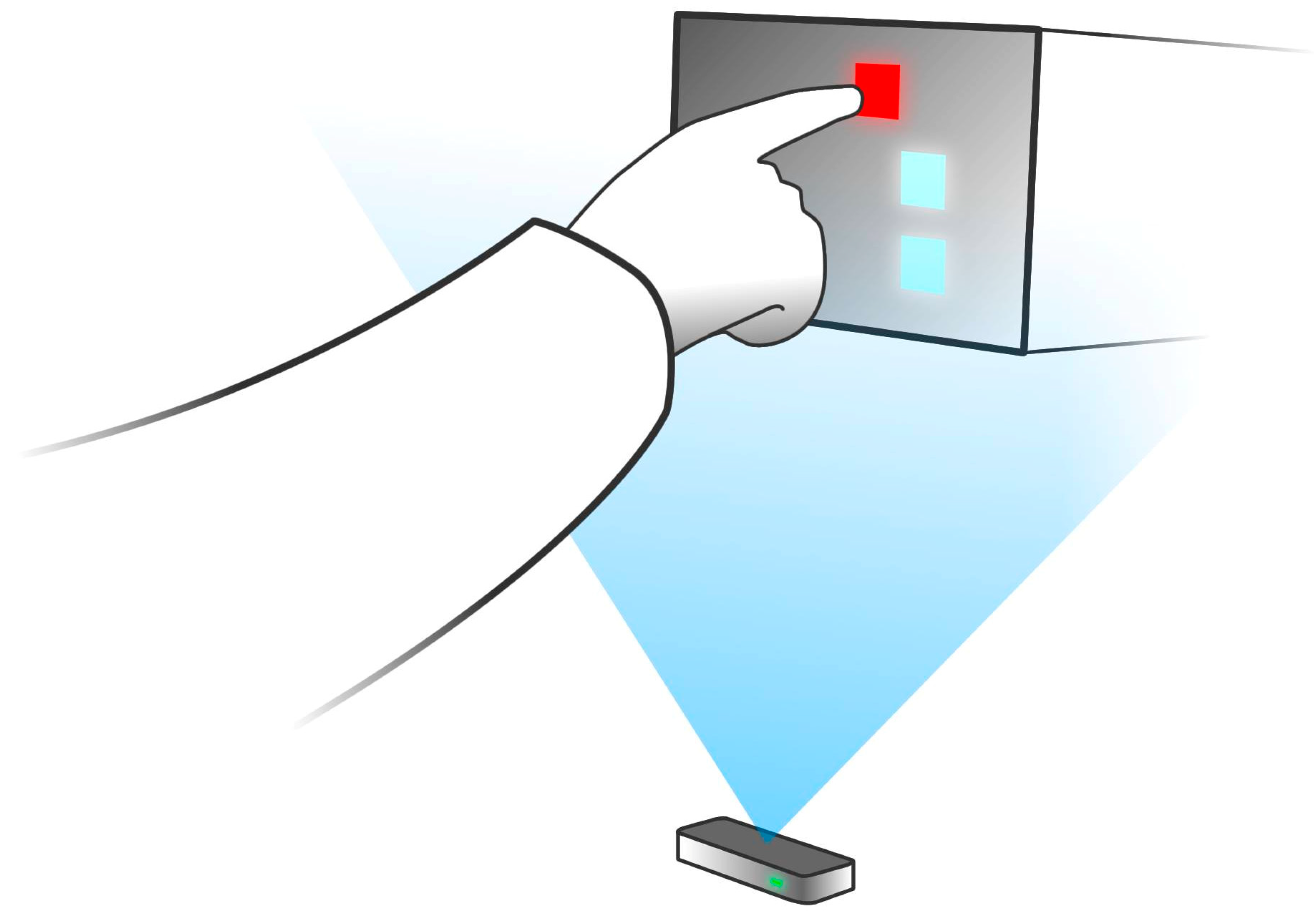

- We propose the first framework that provides realistic, direct haptic interaction with virtual 3D objects rendered on a light field display. Our solution consists of a calibration procedure that leverages the available depth of field and the finger tracking accuracy, and a real-time interactive rendering pipeline that modifies and renders the light field according to the 3D light field geometry and the input gestures captured by the Leap Motion Controller.

- We evaluate the implemented interaction framework and report the results of a first user study on interaction with a light field display. The study aimed to evaluate, both subjectively and objectively, the proposed interaction setup and the user experience of interacting with content rendered on light field displays.
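To make the idea of calibrating the tracker to the display more concrete, the following is a minimal sketch, not the procedure used in this work. It assumes that a set of reference points is available in both coordinate systems and fits a least-squares affine map from Leap Motion coordinates to display coordinates. The function names (`solve_linear`, `fit_affine`, `leap_to_display`) and the sample point values are hypothetical and chosen for illustration only.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def solve_linear(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate reference points (e.g. all coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_affine(leap: Sequence[Point], display: Sequence[Point]) -> List[List[float]]:
    """Fit display ~ A * leap + t in the least-squares sense.

    Returns three rows of four coefficients [a1, a2, a3, t], one per output axis.
    Assumption: an affine model is adequate; the real mapping may need more.
    """
    if len(leap) != len(display) or len(leap) < 4:
        raise ValueError("need at least four matched reference points")
    h = [[x, y, z, 1.0] for x, y, z in leap]  # homogeneous Leap coordinates
    # Normal equations: (H^T H) row = H^T d, solved once per display axis.
    hth = [[sum(r[i] * r[j] for r in h) for j in range(4)] for i in range(4)]
    rows = []
    for axis in range(3):
        htd = [sum(r[i] * d[axis] for r, d in zip(h, display)) for i in range(4)]
        rows.append(solve_linear(hth, htd))
    return rows


def leap_to_display(rows: Sequence[Sequence[float]], p: Point) -> Point:
    """Apply the fitted map to one tracked fingertip position."""
    x, y, z = p
    return tuple(r[0] * x + r[1] * y + r[2] * z + r[3] for r in rows)


# Hypothetical reference points: Leap coordinates in mm, display coordinates in cm.
leap_pts = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 100.0, 0.0),
            (0.0, 0.0, 100.0), (100.0, 100.0, 100.0)]
display_pts = [(0.1 * x - 5.0, 0.1 * y + 2.0, -0.1 * z + 7.0) for x, y, z in leap_pts]

rows = fit_affine(leap_pts, display_pts)
print(leap_to_display(rows, (50.0, 20.0, 30.0)))
```

The reference points must not all lie in one plane, otherwise the fit is underdetermined and the sketch raises an error. In practice more than the minimum of four points would typically be collected so that tracking noise averages out.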

2. Related Work

2.1. Wearable Devices

2.2. Marker-Based Optical Tracking Systems

2.3. Hands-Free Tracking

2.4. Leap Motion Controller

2.5. Freehand Interaction with Projection-Based Light Field Displays

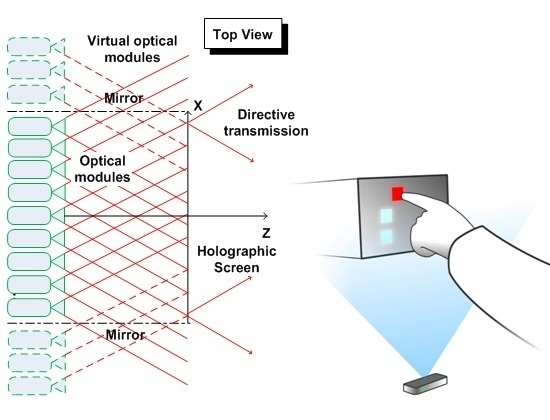

3. System Design

3.1. Leap Motion Controller

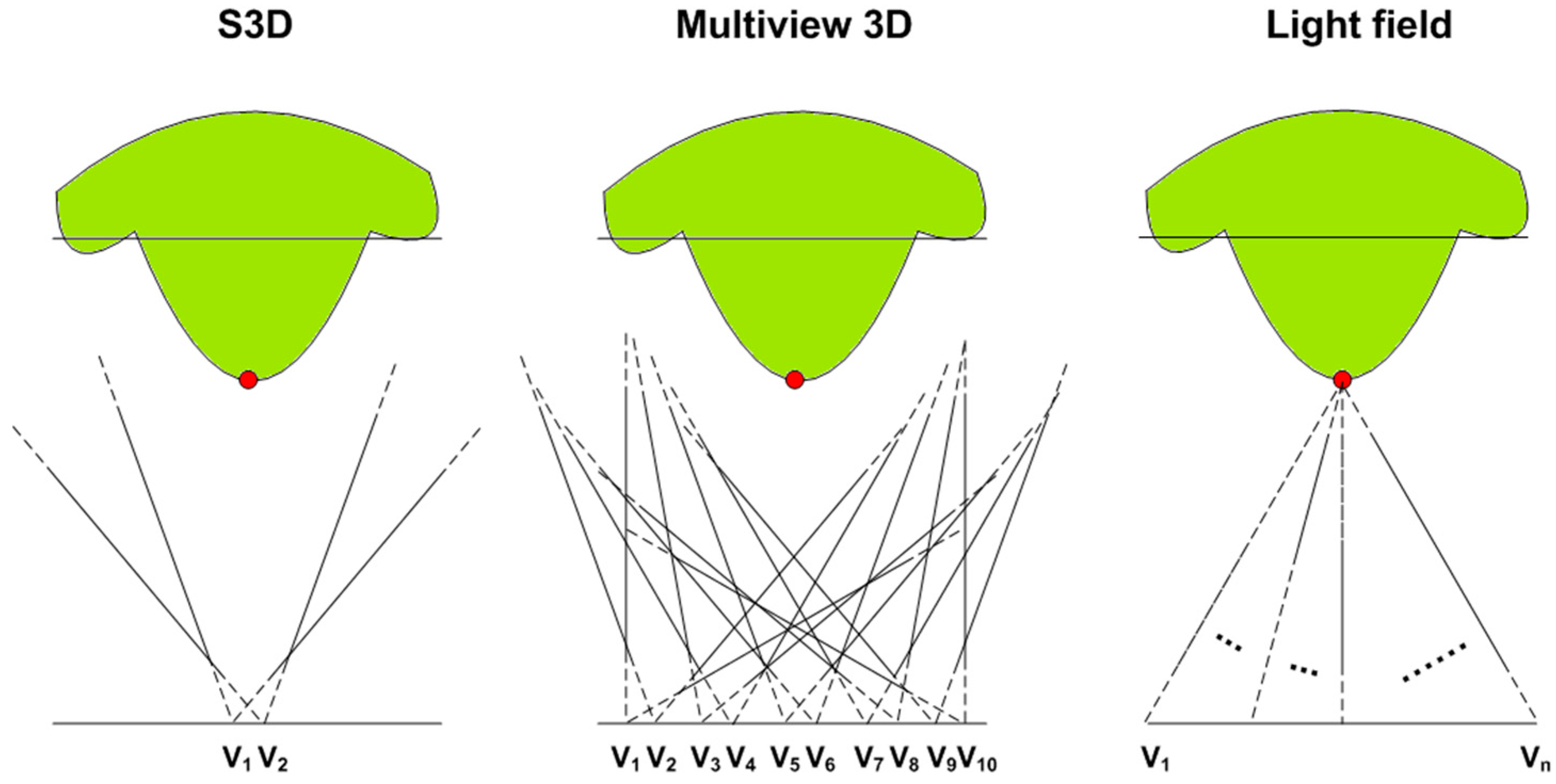

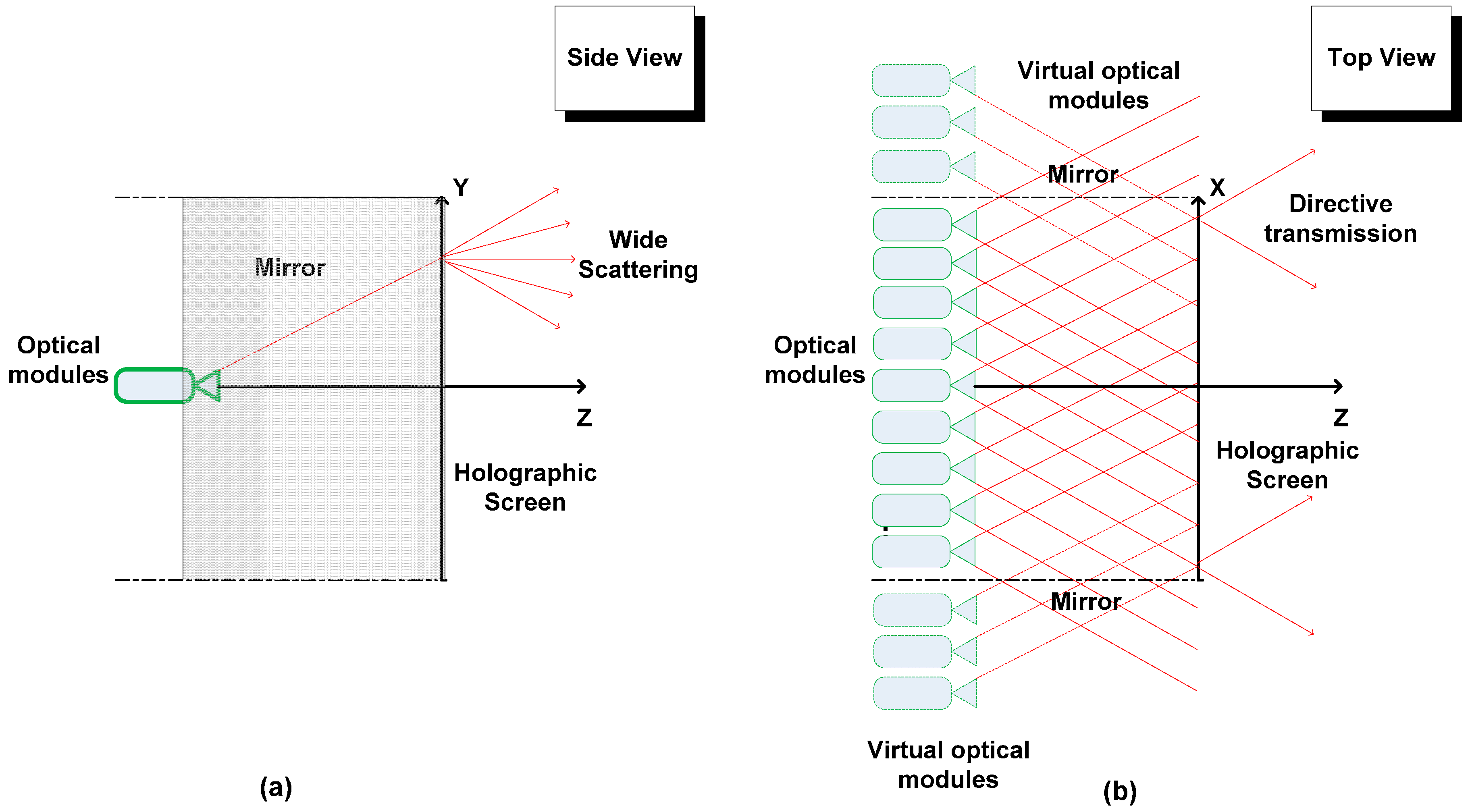

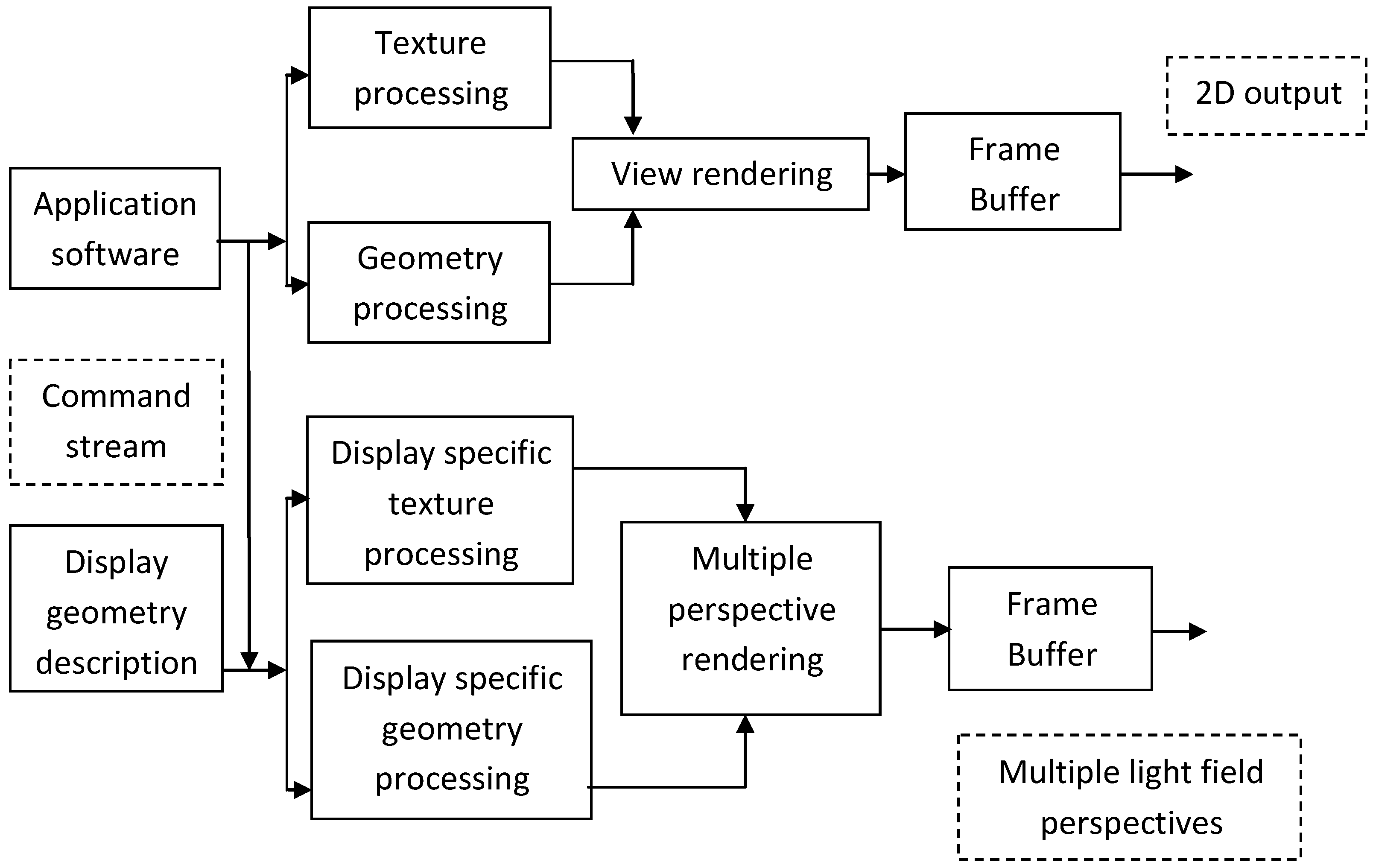

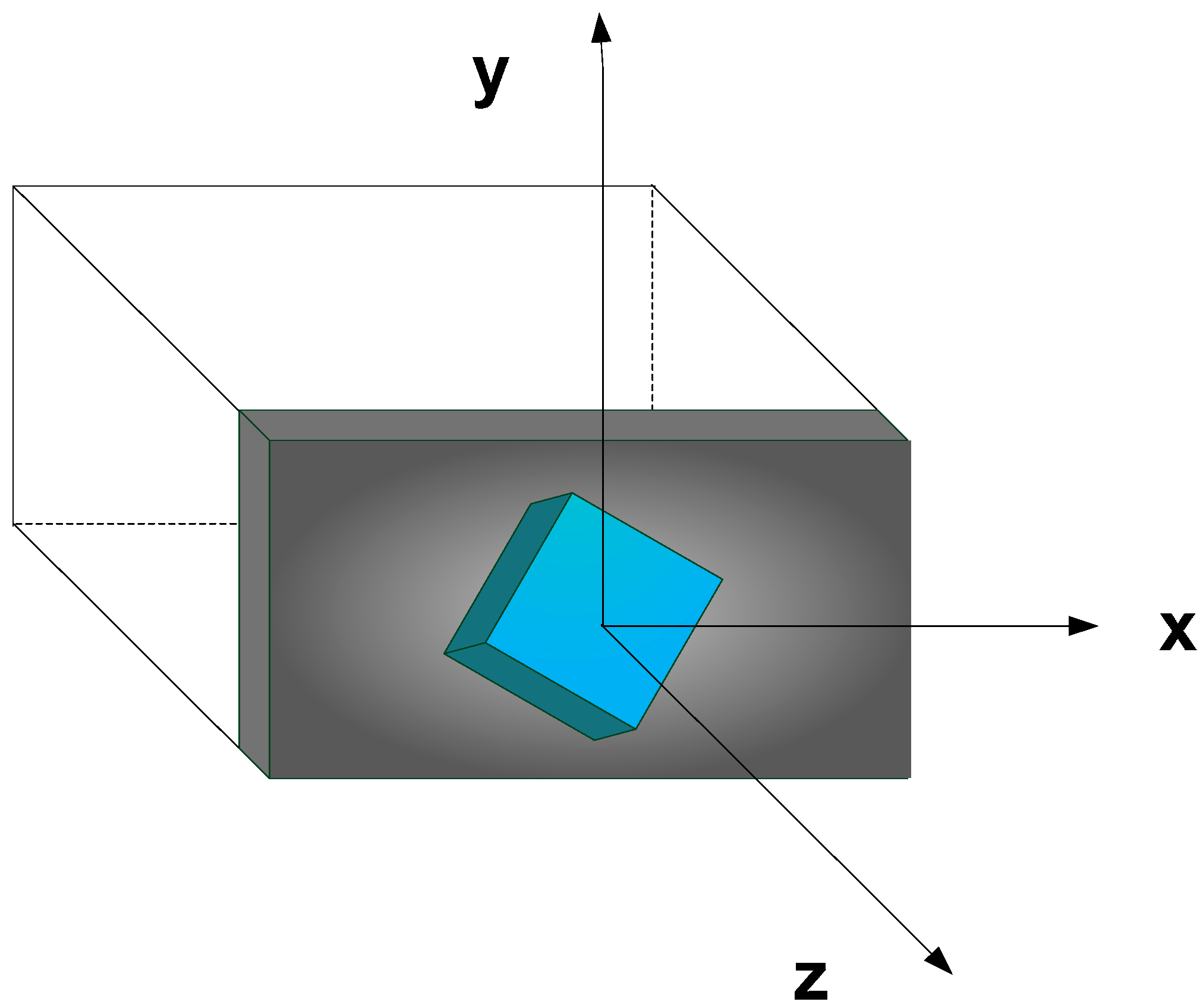

3.2. 3D Rendering on Light Field Displays

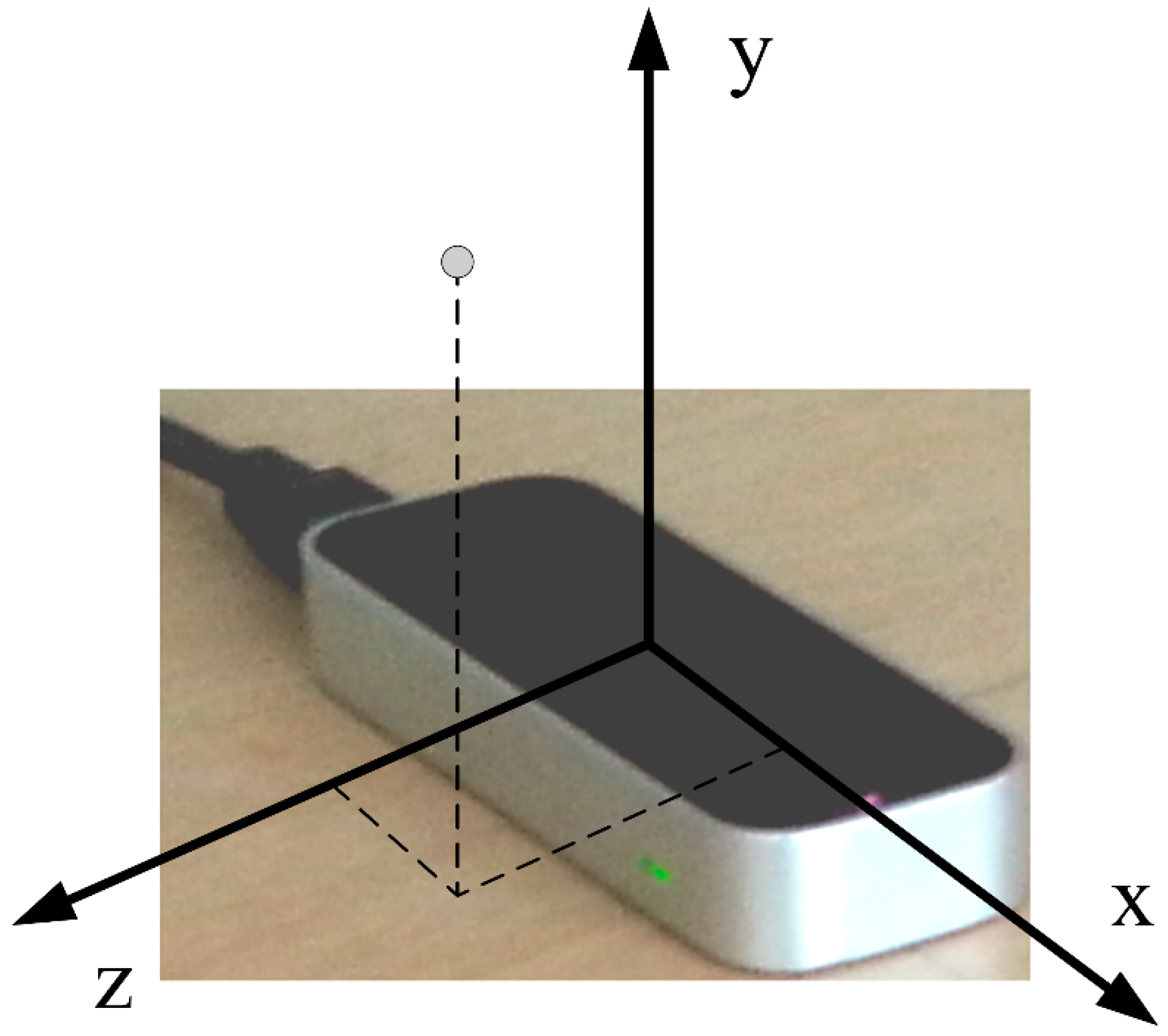

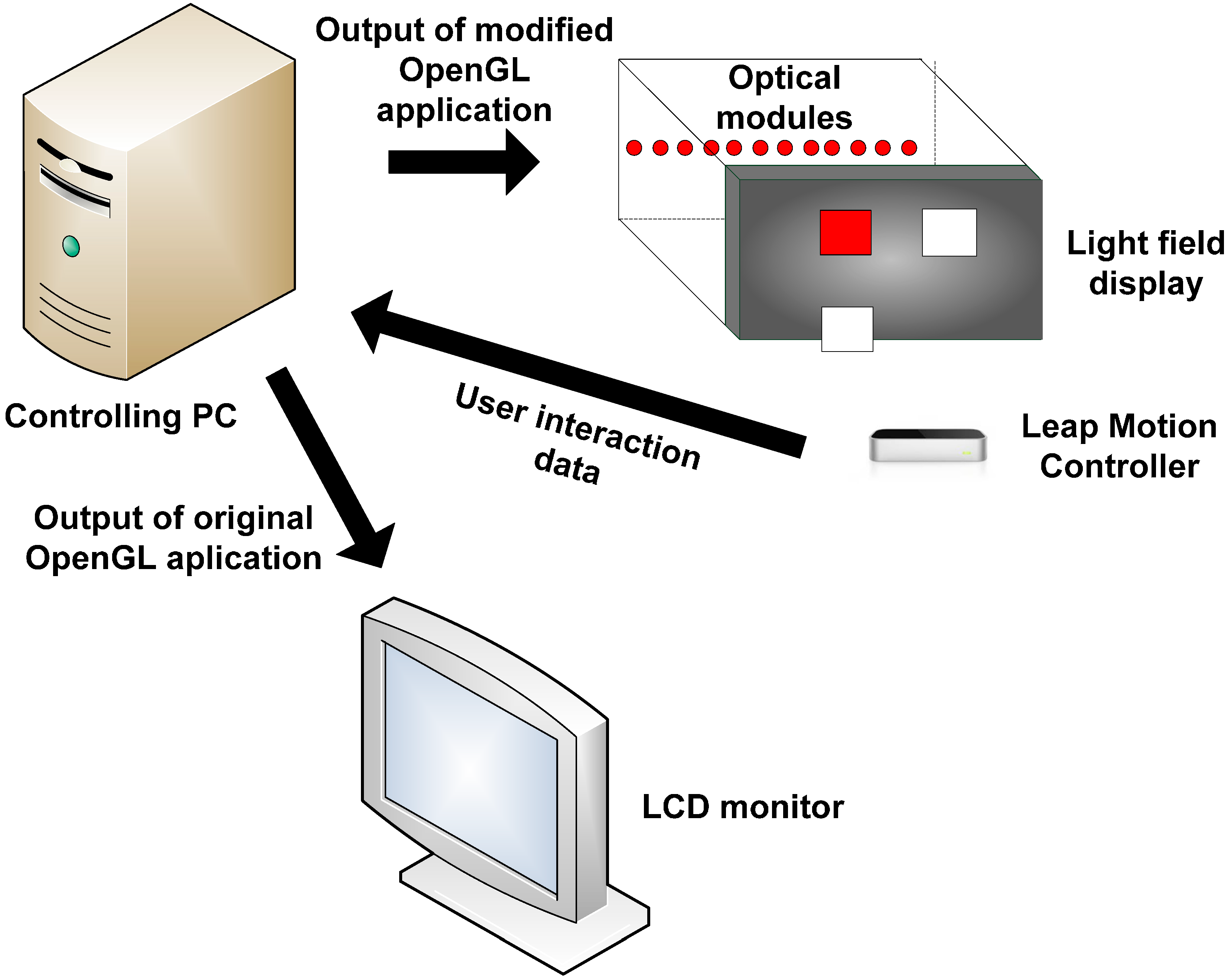

3.3. Experimental Setup

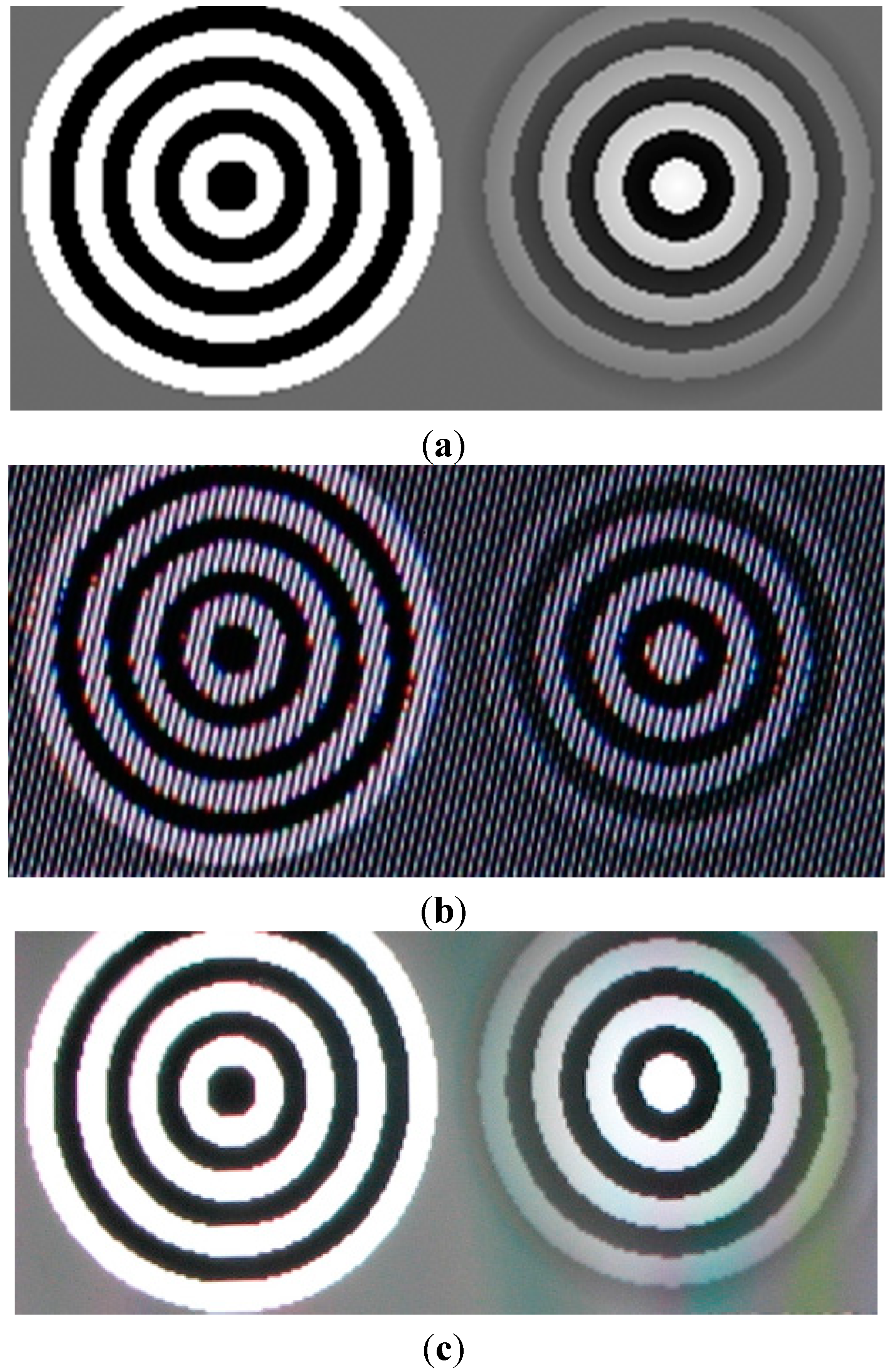

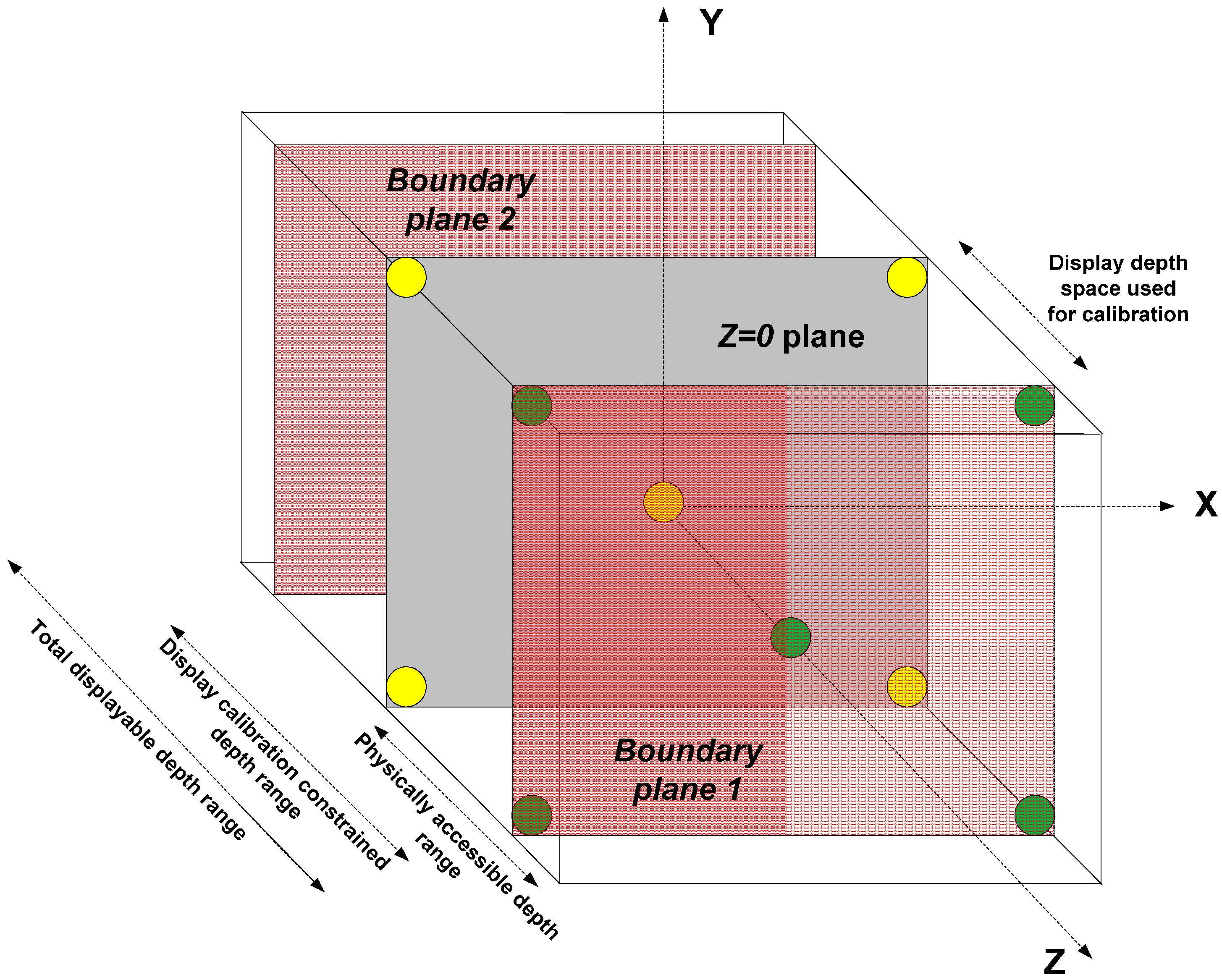

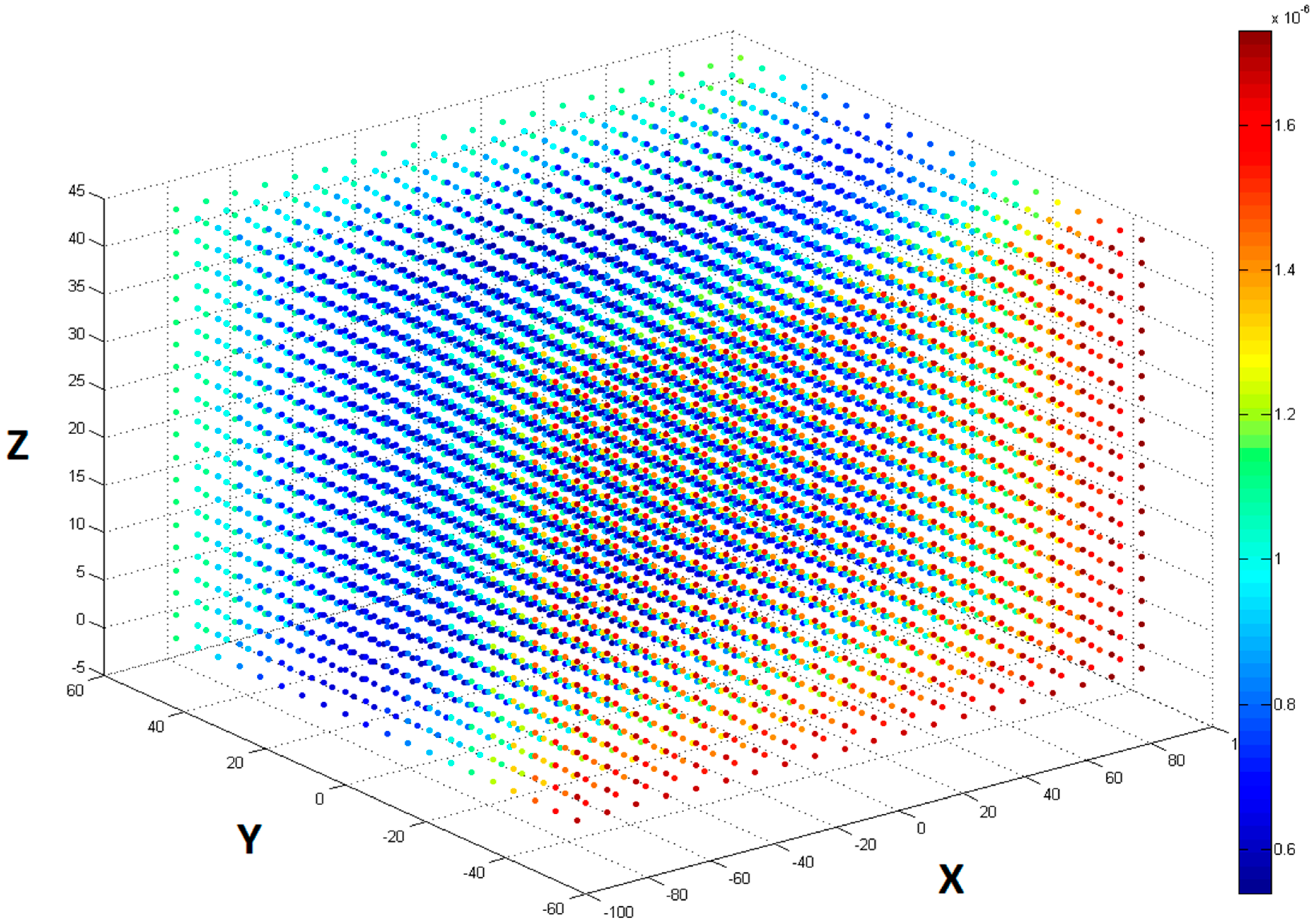

3.4. Calibrating Light Field Display to Leap Motion Controller

4. Evaluation

4.1. Design

- in 2D mode, the displayed objects were distributed on a plane in close proximity to the display surface; and

- in 3D mode, the objects were distributed in space at distances ranging from 0 to 7 cm from the display.
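The difference between the two modes can be illustrated with a short sketch of how target positions might be generated. This is an assumption-laden illustration rather than the study software: the function name `place_objects`, the workspace size, the uniform random distribution, and the choice of exactly zero depth for the 2D plane are all hypothetical. Only the 0 to 7 cm depth range comes from the description above.

```python
import random
from typing import List, Optional, Tuple

MAX_DEPTH_CM = 7.0  # depth range of the 3D mode, as stated in the text


def place_objects(
    n: int,
    mode: str,
    width_cm: float = 30.0,   # hypothetical workspace width
    height_cm: float = 20.0,  # hypothetical workspace height
    seed: Optional[int] = None,
) -> List[Tuple[float, float, float]]:
    """Return n (x, y, z) positions in cm; z is the distance from the display."""
    if mode not in ("2D", "3D"):
        raise ValueError("mode must be '2D' or '3D'")
    rng = random.Random(seed)
    positions = []
    for _ in range(n):
        x = rng.uniform(-width_cm / 2, width_cm / 2)
        y = rng.uniform(-height_cm / 2, height_cm / 2)
        # Assumption: the 2D plane is modelled at zero depth; the text only
        # says it lies in close proximity to the display surface.
        z = 0.0 if mode == "2D" else rng.uniform(0.0, MAX_DEPTH_CM)
        positions.append((x, y, z))
    return positions


flat_layout = place_objects(5, "2D", seed=1)
deep_layout = place_objects(5, "3D", seed=1)
```

Using the same seed for both modes keeps the lateral positions comparable, so that depth is the only factor that changes between the two layouts.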

- task completion times,

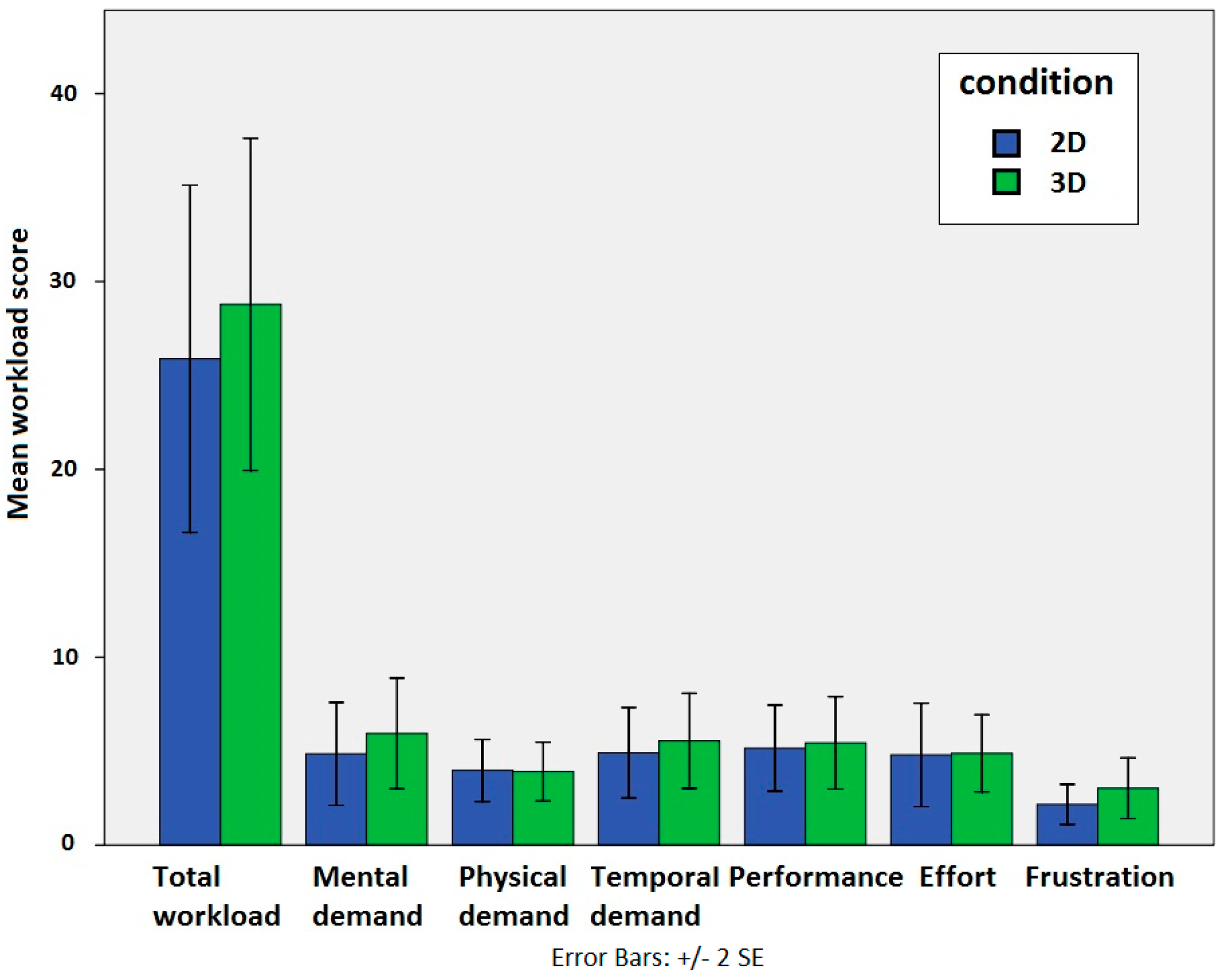

- cognitive workload, and

- perceived user experience.

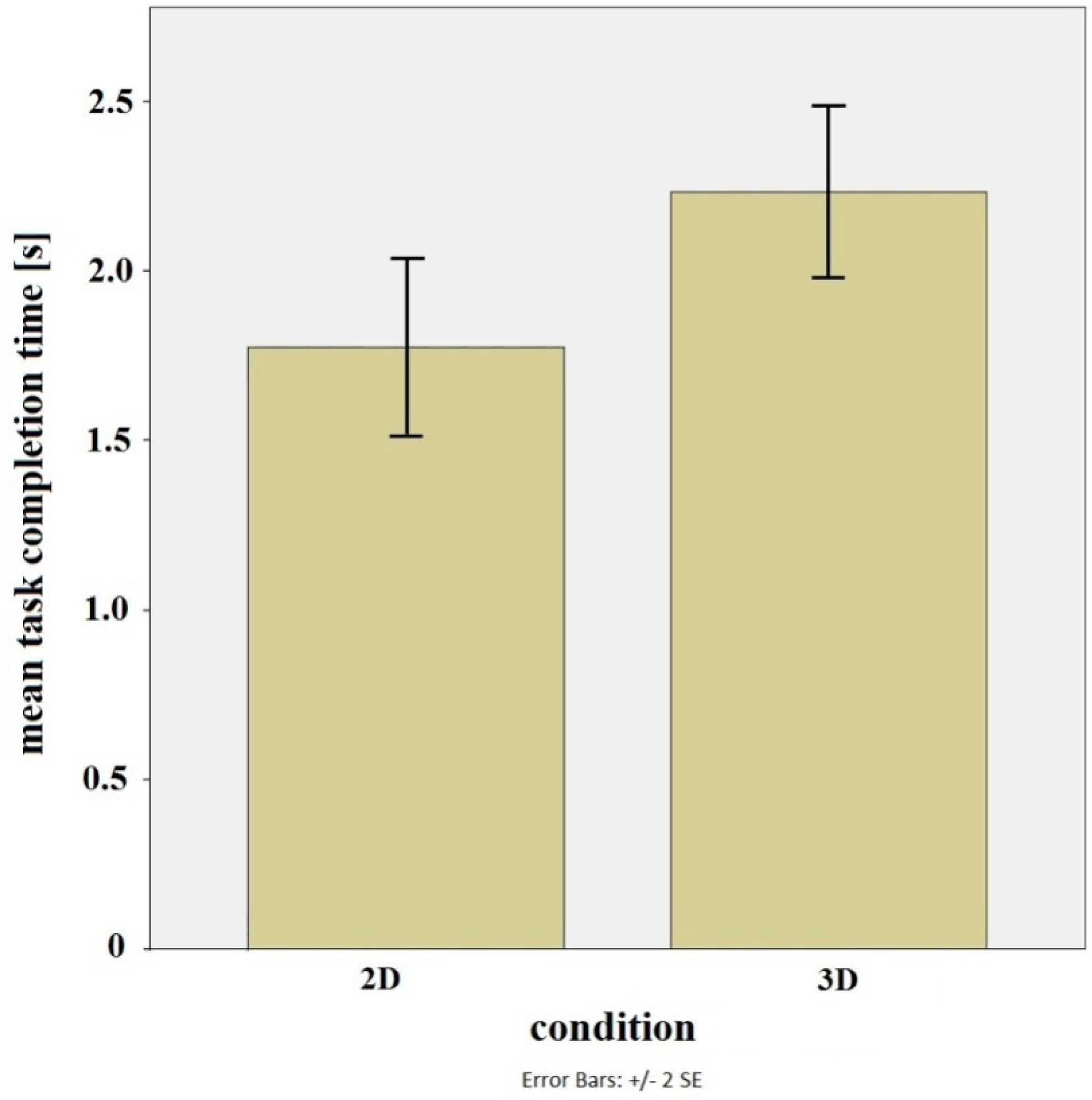

4.2. Results

5. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Adhikarla, V.K.; Sodnik, J.; Szolgay, P.; Jakus, G. Exploring Direct 3D Interaction for Full Horizontal Parallax Light Field Displays Using Leap Motion Controller. Sensors 2015, 15, 8642-8663. https://doi.org/10.3390/s150408642