1. Introduction

The early and accurate classification of cancer remains one of the most critical challenges in precision medicine [1]. With the emergence of microarray technologies, researchers have been able to monitor the expression levels of thousands of genes simultaneously, offering valuable insights into the molecular signatures of various cancers [2]. However, this abundance of information comes with a significant drawback: the curse of dimensionality. Gene expression datasets typically contain far more features (genes) than samples, making traditional machine learning models prone to overfitting and limiting their generalizability.

To address this, researchers have increasingly turned to metaheuristic optimization algorithms for gene selection [3]. These algorithms aim to identify compact subsets of informative genes that maximize classification accuracy while reducing redundancy. Among the many metaheuristic approaches proposed, Nuclear Reaction Optimization (NRO) [4] has gained attention for its ability to balance exploration and exploitation. NRO simulates the natural phenomena of nuclear fission and fusion to guide its search: the fission phase generates diverse candidate solutions through mutation and Lévy flight, while the fusion phase refines them toward optimality.

Despite its strengths, the standard NRO algorithm may encounter premature convergence, especially during the later stages of optimization, where diversity in the population can diminish. To overcome this limitation, we propose a novel extension called Genetic-Embedded Nuclear Reaction Optimization (GNR). GNR maintains the core structure of NRO but enhances its fusion phase with a Genetic Algorithm-inspired uniform crossover. With a 30% probability, the crossover operator recombines the best-known solution with a randomly selected peer, allowing each gene to be inherited from the best parent with 80% probability. This design preserves the mutation-driven exploration of fission while improving the fusion phase’s ability to refine solutions and avoid stagnation.

To evaluate the effectiveness of GNR, we apply it to six well-known microarray cancer datasets. A lightweight statistical filter is used as a preprocessing step to reduce the initial dimensionality, after which GNR is employed to identify optimal gene subsets. Classification performance is assessed using Support Vector Machines (SVMs) and Leave-One-Out Cross-Validation (LOOCV), ensuring a rigorous evaluation of the selected gene panels.

The rest of this paper is structured as follows:

Section 2 outlines the background and foundations of the proposed method.

Section 3 reviews related hybrid optimization approaches.

Section 4 presents experimental results and comparisons, followed by a brief discussion of biological relevance.

Section 5 details the methodology, including preprocessing, feature filtering, and the GNR algorithm, and is followed by the conclusions.

2. Background

High-throughput gene expression data present significant challenges due to their high dimensionality and limited sample sizes, often leading to overfitting and high computational burden. Dimensionality reduction is commonly applied to address this, either through feature extraction [5], which transforms the original feature space (e.g., PCA [6], LDA [7]) at the cost of interpretability, or through feature selection, which preserves the original gene features. Feature selection techniques are typically categorized into filter, wrapper, and hybrid methods. Filter methods evaluate each feature independently using statistical criteria [8], wrapper methods rely on classifier performance to assess feature subsets [9], and hybrid methods combine both for improved balance between accuracy and efficiency [10].

In this work, we adopt a hybrid selection strategy, first applying the F-score filter to reduce dimensionality, followed by a metaheuristic optimization stage. The optimization is conducted using an enhanced version of Nuclear Reaction Optimization (NRO), which we refer to as GNR. In GNR, we introduce an embedded genetic operator by incorporating uniform crossover into the algorithm’s fusion phase. This algorithm-level hybridization enhances exploitation, maintains diversity, and reduces the likelihood of convergence stagnation.

2.1. F-Score

The F-score is a straightforward and effective statistical filter technique, commonly used in feature selection for binary classification tasks. It evaluates how well a feature differentiates between two classes by comparing inter-class variance (differences between class means) to intra-class variance (variability within each class). Features with higher F-scores are deemed more significant because they offer better separation between the positive and negative classes [11].

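Let a dataset contain $n$ samples and $m$ features, where each sample belongs to one of two classes: positive ($+$) and negative ($-$). For a given feature $i$, let:

$\bar{x}_i^{(+)}$ be the mean of $i$ in the positive class,

$\bar{x}_i^{(-)}$ be the mean of $i$ in the negative class,

$\bar{x}_i$ be the global mean of $i$ across all samples,

and $n_+$ and $n_-$ be the number of samples in the positive and negative classes, respectively.

The F-score of feature $i$ is computed as:

$$F(i) = \frac{\left(\bar{x}_i^{(+)} - \bar{x}_i\right)^2 + \left(\bar{x}_i^{(-)} - \bar{x}_i\right)^2}{\frac{1}{n_+ - 1}\sum_{k=1}^{n_+}\left(x_{k,i}^{(+)} - \bar{x}_i^{(+)}\right)^2 + \frac{1}{n_- - 1}\sum_{k=1}^{n_-}\left(x_{k,i}^{(-)} - \bar{x}_i^{(-)}\right)^2} \qquad (1)$$

where $x_{k,i}^{(+)}$ and $x_{k,i}^{(-)}$ denote the value of feature $i$ in the $k$-th positive and $k$-th negative sample, respectively.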

Here, the numerator measures between-class variance and the denominator represents within-class variance. A higher F-score indicates that the feature has a stronger ability to distinguish between the two classes.
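The ratio described in this section can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: it assumes the widely used two-class form in which the within-class terms are unbiased sample variances, and the function name `f_score` and the toy data are hypothetical.

```python
import numpy as np

def f_score(X, y):
    """Two-class F-score per feature (between-class over within-class variance).

    X: (n_samples, n_features) array; y: binary labels, 1 = positive, 0 = negative.
    Assumption: within-class variances use the unbiased (n - 1) denominator.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    pos, neg = X[y == 1], X[y == 0]
    mean_all = X.mean(axis=0)
    mean_pos, mean_neg = pos.mean(axis=0), neg.mean(axis=0)
    between = (mean_pos - mean_all) ** 2 + (mean_neg - mean_all) ** 2
    within = pos.var(axis=0, ddof=1) + neg.var(axis=0, ddof=1)
    # Guard against constant features (zero within-class variance).
    return between / np.maximum(within, 1e-12)

# Toy data: feature 0 separates the classes, feature 1 does not.
X_toy = np.array([[1.0, 5.0], [1.2, 3.0], [0.8, 4.0],
                  [3.0, 4.5], [3.2, 3.5], [2.8, 4.0]])
y_toy = np.array([0, 0, 0, 1, 1, 1])
scores = f_score(X_toy, y_toy)
ranking = np.argsort(scores)[::-1]  # most discriminative feature first
```

On the toy data, the first feature receives a large score and the second a score of zero, so the ranking places the separating feature first.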

Unlike wrapper methods that rely on classifiers, F-score is model-independent and computationally efficient, making it suitable for high-dimensional data like microarray gene expressions [12]. In this research, the F-score is used to preselect informative genes before applying the Nuclear Reaction Optimization (NRO) algorithm. This two-step process improves classification accuracy and reduces computational cost by narrowing the search space early on.

2.2. Nuclear Reaction Optimization (NRO)

Nuclear Reaction Optimization (NRO) is a metaheuristic optimization algorithm that draws inspiration from the physical processes of nuclear reactions, specifically fission and fusion [13]. In nature, fission involves splitting a heavy atomic nucleus into smaller fragments, while fusion refers to the merging of lighter nuclei to form a heavier one. Both reactions release a considerable amount of energy.

NRO uses these phenomena as metaphors for the optimization process. Fission encourages the exploration of the solution space by creating diverse candidate solutions, while fusion refines existing solutions to improve accuracy. This mechanism enables a dynamic balance between global exploration and local exploitation during the search for optimal results.

2.2.1. Nuclear Fission Phase

In the fission phase, the algorithm generates new candidate solutions by breaking an existing one into smaller elements. This step allows the search process to cover a broader portion of the solution space, reducing the risk of becoming trapped in a local optimum. The formulation for producing a fission-based solution is as follows:

Here,

the novel solution produced during fission.

the optimal solution identified to date.

a random variable produced around , with determining the dispersion.

: parameters regulating the extent of exploration (see Equations (3) and (4) below).

a random variable introducing variability in the solution.

mutation factors determining the scale of adjustments for subaltern and essential fission products, respectively.

heated neutron, calculated as where are two random solutions.

the probability governing whether subaltern or essential fission products are produced.

This method ensures a balanced search by generating some solutions near the best one for local refinement, while others are positioned farther away to promote broader exploration.

The fission process efficiency is affected by the step size, which regulates the degree of divergence of new solutions from existing ones. The step sizes are calculated with the subsequent formula:

Here,

: current generation number; the term guarantees a reduction in step sizes as iterations advance.

the distance between current solution and best-known solution.

the distance between random solution and best-known solution.

Initially, substantial step sizes facilitate the exploration of the solution space. Subsequently, the step sizes diminish, enabling the algorithm to narrow down the most effective solutions and achieve an optimal outcome.

Mutation factors are embedded in the fission equation to either intensify or broaden the search, helping prevent premature convergence and promoting efficient optimization. The factors are defined as follows:

Here,

2.2.2. Nuclear Fusion Phase

The fusion phase focuses on enhancing solutions that show promise. It consists of two components: ionization, which perturbs candidate solutions using population diversity, and fusion, which merges beneficial information from top solutions.

In the ionization step, the solution is modified by differences between randomly chosen individuals:

Here,

: components of two randomly selected fission solutions.

current solution.

random value for diversity.

If the difference term becomes very small, the search may stagnate. To avoid this, the algorithm applies Lévy flight [14] to provide significant changes:

Here,

: a scaling factor controlling the magnitude of jumps.

: heavy-tailed random step size, introducing both small and large adjustments.

indicates element-wise multiplication.

: best-known solution in the dimension.
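A Lévy-flight perturbation of this kind can be sketched as follows. The source does not state how the heavy-tailed steps are drawn, so this sketch assumes Mantegna's algorithm, a common choice. The update form (the current solution plus a scaled Lévy step multiplied element-wise by its offset from the best-known solution), the default values `alpha=0.01` and `beta=1.5`, and the function names are likewise assumptions for illustration.

```python
import math
import numpy as np

def levy_steps(size, beta=1.5, rng=None):
    """Draw heavy-tailed step sizes with Mantegna's algorithm (an assumed generator)."""
    rng = np.random.default_rng() if rng is None else rng
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.normal(0.0, sigma_u, size)
    v = rng.normal(0.0, 1.0, size)
    return u / np.abs(v) ** (1 / beta)

def levy_jump(x, x_best, alpha=0.01, beta=1.5, rng=None):
    """Perturb x by a Levy-scaled multiple of its element-wise offset from x_best."""
    x = np.asarray(x, dtype=float)
    step = alpha * levy_steps(x.shape, beta, rng)
    return x + step * (x - np.asarray(x_best, dtype=float))
```

Most draws are small, but occasional large steps let a stagnating solution leave its neighborhood, which is the behavior the text attributes to the heavy-tailed step size.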

After ionization, the fusion step combines the ionized solution with guidance from high-quality solutions. There are two options for this phase:

Here,

: refined solution after fusion.

best-known solution guiding the search.

: ionized solutions selected for comparison.

: random value for diversity.

Or, if diversity is insufficient:

These fusion mechanisms help the algorithm either intensify the search around elite solutions or leap toward unexplored areas when progress slows.

In summary, the NRO algorithm leverages the interplay between nuclear fission and fusion processes to navigate the search space efficiently. By mimicking principles from nuclear physics, it adapts its search behavior to explore diverse areas and steadily move toward optimal solutions. This makes it a robust and dependable method for addressing complex optimization problems.

3. Related Works

Gene selection for cancer classification has been widely addressed using metaheuristic optimization algorithms due to their ability to navigate high-dimensional search spaces effectively. Among these, a growing body of work has focused on enhancing traditional metaheuristics by embedding genetic operators, such as crossover and mutation, to improve convergence and maintain diversity. This embedded strategy allows algorithms to balance exploration and exploitation more efficiently, particularly in gene expression datasets where sample sizes are limited and feature redundancy is common. The following studies illustrate various approaches that incorporate genetic mechanisms into metaheuristic frameworks for gene selection.

Li et al. [15] integrated genetic-algorithm operators into particle swarm optimization (PSO) to create a hybrid PSO/GA for gene-subset selection. PSO supplies the positional updates, while GA’s uniform crossover (rate = 0.985) and mutation (rate = 0.05) inject diversity and curb premature convergence. Using SVM with ten-fold cross-validation on leukemia, colon, and breast-cancer microarrays, the hybrid consistently outperformed standalone PSO or GA, achieving, for example, 97.2% accuracy on leukemia with only ~19 genes. The study shows that embedding GA operators within PSO improves exploration–exploitation balance and yields compact, highly discriminative gene sets.

Chuang et al. [16] proposed a hybrid gene selection method that integrates a Combat Genetic Algorithm (CGA) into Binary Particle Swarm Optimization (BPSO) for microarray classification. The embedded CGA includes uniform crossover and a mutation operator applied with a rate of 0.05, enhancing local refinement within the global PSO framework. The method was evaluated on ten public microarray datasets using 1-NN with Leave-One-Out Cross-Validation and demonstrated strong performance, achieving the lowest classification error in 9 out of 10 datasets while significantly reducing the number of selected genes. This study confirms the benefit of embedding genetic mechanisms into swarm-based algorithms to improve convergence and selection efficiency in high-dimensional biomedical data.

Alshamlan et al. [17] proposed the Genetic Bee Colony (GBC) algorithm, a hybrid method that incorporates Genetic Algorithm (GA) operators into the Artificial Bee Colony (ABC) model for gene selection from microarray data. The approach integrates uniform crossover and mutation within the ABC structure: crossover is embedded during the onlooker bee phase, while mutation is applied in the scout bee phase. The crossover probability rate (CPR) is set to 0.8 and the mutation probability rate (MPR) is 0.01, allowing controlled genetic variation to enhance convergence and maintain diversity. GBC was evaluated on six public microarray datasets and achieved superior classification accuracy and gene subset compactness compared to standard ABC and mRMR-ABC methods. This study reinforces the effectiveness of embedding GA operators into swarm-based optimizers for high-dimensional gene selection tasks.

Motieghader et al. [18] introduced a hybrid gene selection method known as GALA, which combines a Genetic Algorithm (GA) with Learning Automata (LA) to enhance search efficiency in microarray-based cancer classification. LA is a reinforcement-based technique rather than a metaheuristic; it is used here to guide GA-based evolution through probabilistic feedback and adaptive updates. The genetic component employs single-point crossover and order-based mutation with rates of 0.70 and 0.30, respectively. GALA was evaluated on six public microarray datasets, including Colon, ALL_AML, SRBCT, and Tumors_9, and achieved high classification accuracy (e.g., 99.81% on Colon and 100% on ALL_AML) with compact gene subsets. The method demonstrates how GA, when coupled with adaptive learning mechanisms, can effectively balance exploration and exploitation in high-dimensional gene selection tasks.

Collectively, these studies demonstrate the effectiveness of embedding genetic operators into metaheuristic optimization frameworks for gene selection. By integrating crossover and mutation within swarm-based or evolutionary algorithms, these approaches achieve improved classification accuracy, reduced gene subsets, and enhanced convergence behavior. Building on this foundation, the present study introduces a new GA-embedded variant of Nuclear Reaction Optimization (GNR), designed to further strengthen exploitation while preserving the exploration capabilities essential in high-dimensional biomedical search spaces.

4. Results and Analysis

4.1. Dimensionality Reduction

In the preprocessing phase, four filter methods (F-score, Information Gain (IG), ReliefF, and mRMR) were independently applied to rank genes based on their relevance to the target classes. The performance of each filter was initially assessed across multiple subset sizes (50, 100, 200, 300, 400, and 500 genes) using classification accuracy as the evaluation metric. F-score achieved the highest classification accuracy across most subset sizes, closely followed by Information Gain. ReliefF and mRMR generally produced lower classification results. To further validate these observations, the gene subsets generated by each filter were also optimized using the NRO algorithm and evaluated for final classification performance. The results confirmed that IG-, ReliefF-, and mRMR-based preprocessing led to lower accuracies after optimization than F-score-based preprocessing. Based on this evaluation, only F-score was selected for final integration with the NRO algorithm, while the other three were excluded.

4.2. GNR Algorithm Results

This section reports the performance of the Genetic-Embedded Nuclear Reaction Optimization (GNR) algorithm across the six microarray cancer datasets. The evaluation lists best, average, and worst accuracies, each accompanied by precision, recall, F1-score, and 95% confidence intervals to capture both peak performance and run-to-run variability. We tested fixed subset sizes from 2 to 25 genes; to keep the tables concise, we include only those sizes that altered at least one metric and we stop reporting once the model achieved perfect accuracy for the dataset in question.
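A run-level summary of this kind could be computed along the following lines. The paper does not state how its 95% confidence intervals were obtained, so the normal-approximation interval on the mean used here is an assumption, and `summarize_runs` is a hypothetical helper name.

```python
import math
import statistics

def summarize_runs(accuracies, z=1.96):
    """Best, mean, and worst accuracy with a normal-approximation 95% CI on the mean.

    Assumption: the interval is mean +/- z * s / sqrt(n), where s is the sample
    standard deviation over independent runs.
    """
    n = len(accuracies)
    mean = statistics.fmean(accuracies)
    sd = statistics.stdev(accuracies) if n > 1 else 0.0
    half_width = z * sd / math.sqrt(n)
    return {
        "best": max(accuracies),
        "mean": mean,
        "worst": min(accuracies),
        "ci95": (mean - half_width, mean + half_width),
    }
```

When every run reaches the same accuracy, the interval collapses to a single point, which matches the reading that zero run-to-run variability means a stable gene panel.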

Across subsets ranging from two to twenty-two genes in the Colon dataset, every additional marker incrementally improved the Support-Vector-Machine classifier. A turning point occurred at twenty-two genes: accuracy, precision, recall, and the F1-score all reached their highest observed values and remained unchanged thereafter. The result indicates that complete discrimination between tumor and normal tissue in this binary dataset requires a comparatively broad transcript panel, with each added gene delivering complementary information until class boundaries are fully resolved (Table 1).

For the acute lymphoblastic versus acute myeloid leukemia data, a three-gene subset already secured perfect accuracy, precision, recall, and F1-score; enlarging the subset to four or five genes produced identical outcomes. The rapid convergence shows that the decisive expression differences between the two leukemia subtypes are captured by a minimal signature and that further expansion simply adds redundant features (Table 2).

In the three-class Leukemia 2 dataset, performance rose step-by-step as the subset grew from two to four genes. At four genes every evaluation metric reached its ceiling, after which larger subsets offered no improvement. The monotonic gains imply that each additional gene contributed a non-overlapping class-specific signal and that four genes constitute the smallest subset able to resolve all three disease phenotypes (Table 3).

Remarkably, the Lung adenocarcinoma dataset achieved optimal values for all four metrics with only two genes and the results remained identical when extra genes were introduced. This outcome suggests that a very limited number of transcripts dominate the discriminative structure of the data, allowing the optimization process to attain flawless classification without risk of over-parameterization (Table 4).

In the Lymphoma dataset, a two-gene subset was already capable of yielding perfect accuracy in the best trial, yet the average and worst-case accuracies remained slightly lower, indicating run-to-run variability. When a third gene was added, this variance disappeared: every repeat achieved 100% accuracy, precision, recall, and F1-score, and a fourth gene offered no further improvement. Investigators therefore may select either the ultra-compact two-gene panel, accepting minor stability loss, or the three-gene panel, which guarantees reproducible, maximized performance across all evaluations (Table 5).

The four-class Small Round Blue Cell Tumor dataset exhibited the most pronounced incremental pattern. A two-gene subset yielded moderate performance; adding a third and fourth gene produced substantial gains and a five-gene subset finally delivered perfect scores across all metrics. The orderly improvements confirm that the embedded crossover in the GNR algorithm can continue to integrate informative genes until every residual misclassification is eliminated (Table 6).

4.3. Comparative Analysis

To assess the effectiveness of the GNR algorithm, we compare its performance with the original NRO algorithm and several existing hybrid gene selection methods. The comparison focuses on classification accuracy and the number of selected genes.

As shown in Table 7, which compares GNR with its predecessor NRO across all six microarray benchmarks, GNR achieved 100% classification accuracy with gene panels ranging from two genes (Lung, Lymphoma) to twenty-two (Colon). When the same datasets were evaluated with the original NRO, perfect accuracy persisted only on the three easier tasks (Leukemia 1, Lung, and Lymphoma), where the decision surfaces are already sharply defined. In the remaining, more heterogeneous datasets, the shortfall was modest but systematic: NRO lagged by 1.6 percentage points on Colon, by 1.4 points on Leukemia 2, and by 2.4 points on SRBCT.

Table 8 compares GNR with nine other two-metaheuristic hybrids (SIW-APSO, HHO-GWO, iBABC-CGO, HHO-GRASP, GBC, QMFOA, PSO-GA, GALA, and PCC-GA). Accuracy is the common metric and the number of genes each method keeps appears in parentheses, so the table shows at a glance how well every algorithm balances prediction quality with subset compactness across the six public microarray cancer datasets.

GNR is the only method that reaches 100 percent on every dataset. It does so with exceptionally small panels on five tasks (3 genes for Leukemia 1, 4 for Leukemia 2, 2 for Lung, 2 for Lymphoma, and 5 for SRBCT). No competitor delivers the same mix of flawless accuracy and parsimony. HHO-GWO and GBC also report perfect scores on five datasets but require more genes across the board and both fall short on Colon. QMFOA achieves 100 percent wherever it is evaluated, yet its panels range from 20 to 32 genes. Algorithms that produce the leanest signatures, such as iBABC-CGO (≈2–8 genes) and SIW-APSO (5–13 genes), do so at the cost of missing perfect accuracy on one or more datasets.

Taken together, the comparison positions GNR as the only algorithm that attains perfect accuracy everywhere, and it keeps the gene lists extremely short on five of the six datasets. The lone outlier is the Colon set, where GNR still needs 22 genes to stay flawless. Shrinking that count is inherently difficult: the Colon matrix contains just 62 samples but 2000 expression features, and the tumor-versus-normal signal is spread thinly across many moderately informative genes instead of being dominated by a few highly discriminative ones. Because each gene contributes only a fragment of the separating pattern, removing even a single marker can expose borderline cases and break the perfect decision boundary. Achieving the same accuracy with fewer genes will therefore require an exceptionally precise pruning step that can eliminate only those genes whose information is fully redundant while retaining the collective signal needed to distinguish the classes.

4.4. Biological Relevance

Table 9 presents the predictive genes identified by the GNR algorithm that achieved 100% classification accuracy across the six microarray datasets. The biological relevance of these genes was not explored in depth in this study. However, a preliminary check using the “GeneCards” database [26] indicated that many of the selected genes are associated with cancer-related functions or processes. Because this research focuses primarily on the design and evaluation of a computational gene selection method, detailed functional analysis and interpretation of individual genes are considered beyond its current scope and are better suited for future work by domain experts in biomedical research.

5. Materials and Methods

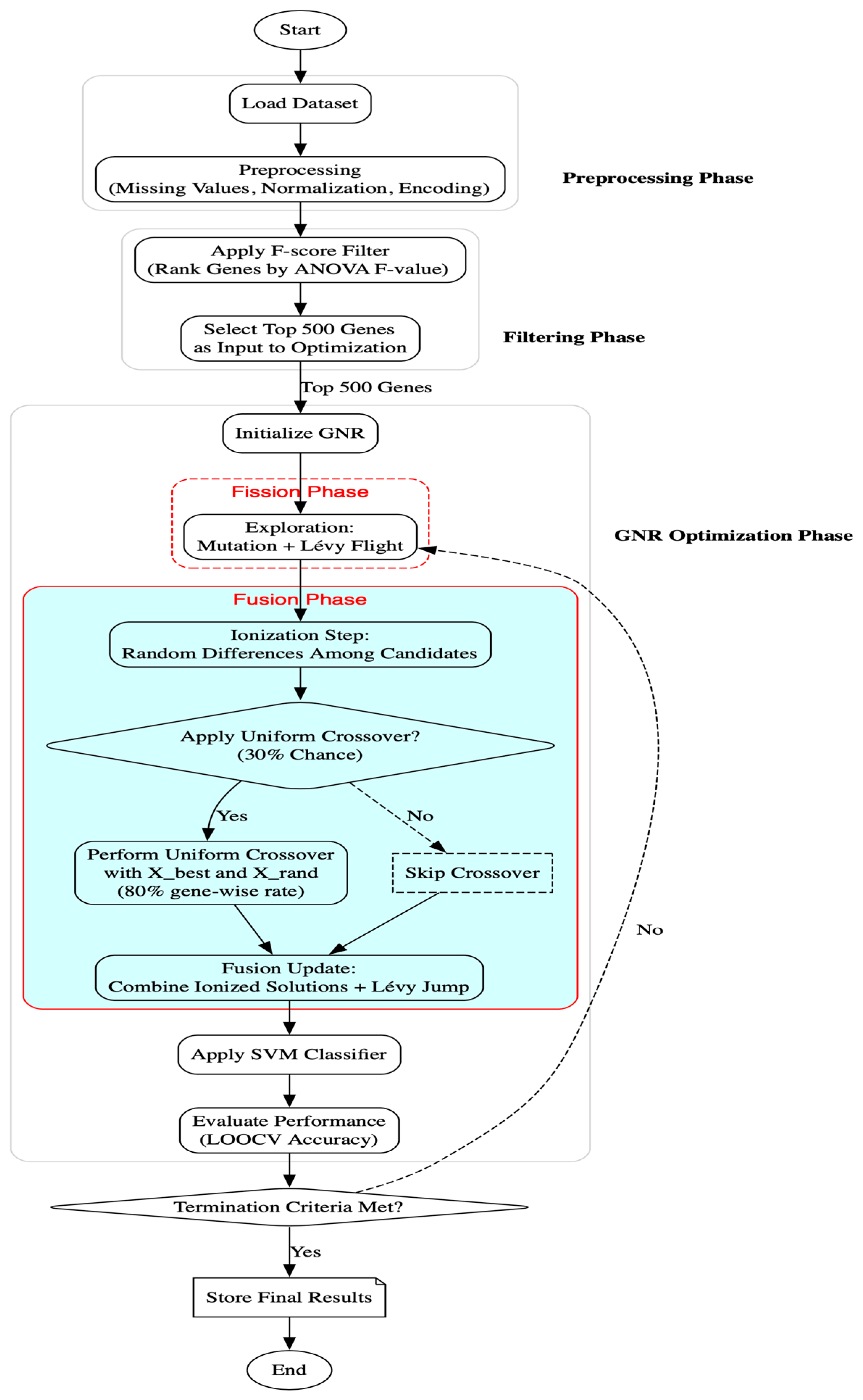

This study presents an enhanced hybrid approach for gene selection in cancer classification that combines statistical dimensionality reduction with a modified version of the Nuclear Reaction Optimization (NRO) algorithm. The proposed method introduces two key improvements over previous work: (1) dimensionality reduction is streamlined by using only the F-score filter, based on prior findings that demonstrated its superior compatibility with NRO; and (2) the optimization stage is extended by embedding a genetic crossover operator within the nuclear fusion phase of NRO, resulting in the proposed Genetic-Embedded Nuclear Reaction Optimization (GNR) algorithm. This modification is designed to enhance exploitation and prevent stagnation during the search process.

The overall methodology involves dataset preprocessing, F-score-based filtering, optimization using GNR, classification using Support Vector Machines (SVMs), and performance evaluation via cross-validation. All experiments were implemented in Python (version 3.x), using numpy and pandas for data manipulation, scipy.io.arff for dataset loading, and scikit-learn for filtering, normalization, and classification. The GNR algorithm was developed from scratch based on the mathematical foundations of NRO, including nuclear fission, fusion, Lévy flights, and mutation operators, with the new addition of a probabilistic uniform crossover mechanism embedded in the fusion phase.

Figure 1 illustrates the complete workflow, from preprocessing to optimization and evaluation.

5.1. Dataset and Preprocessing

To evaluate the effectiveness of the proposed hybrid gene selection method, we selected six benchmark microarray cancer datasets that are widely used in bioinformatics research. These datasets include both binary and multiclass classification tasks, providing a diverse and realistic testbed for assessing model performance and generalizability. All datasets were originally published in peer-reviewed studies and are frequently used in comparative evaluations. For transparency and reproducibility, all datasets used in this study have been compiled and made publicly available in our GitHub repository:

https://github.com/ShahadAlkamli/GNR.git (accessed on 1 August 2025). Version 1.0 (GitHub release tag v1.0) of the software was used for all experiments reported in this paper.

Each dataset comes with class labels that were defined in the original studies, usually based on clinical diagnosis or pathology results. For instance, the Colon dataset [27] contains samples labeled as either tumor (40 samples) or normal (22 samples). In Leukemia 1 [28], the labels distinguish between acute lymphoblastic leukemia (ALL, 47 samples) and acute myeloid leukemia (AML, 25 samples). The Lung dataset [29] separates tumor cases (86) from normal ones (10). Among the multiclass datasets, Leukemia 2 [30] includes B-cell (38), AML (25), and T-cell (9) samples. Lymphoma [31] consists of three subtypes: diffuse large B-cell lymphoma (DLBCL, 46), chronic lymphocytic leukemia (CLL, 11), and follicular lymphoma (FL, 9). In small round blue cell tumor (SRBCT) [32], there are four tumor types, represented by numerical labels (Classes 1 through 4) with 29, 25, 18, and 11 samples.

An overview of these datasets, including the number of genes and classes, is provided in Table 10. Their variety makes them useful for evaluating how well the method performs across different types of cancer classification problems.

All datasets underwent preprocessing to ensure they were suitable for use with machine learning models. Among the datasets, only the Lymphoma dataset contained missing values, representing approximately 4.91% of its full data matrix (12,264 missing entries out of a total of 249,612). These missing values were spread across 2796 out of 4026 genes. Due to the low percentage and broad distribution of the missing data, their effect on the overall quality of the dataset was considered minimal. To maintain the structure of the data without introducing notable bias, mean imputation was employed; this involved substituting each missing value with the average expression level of the respective gene.

To address differences in gene expression scales, Z-score normalization was applied, transforming each gene’s values to have a mean of zero and a standard deviation of one. Furthermore, for multiclass classification tasks, the categorical class labels were converted to numeric form using label encoding to ensure compatibility with the classification algorithms. These preprocessing measures ensured that all datasets were fully prepared for the subsequent gene selection and classification processes.
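The three preprocessing steps described above (per-gene mean imputation, Z-score normalization, and label encoding) can be sketched with NumPy alone. This is an illustrative sketch, not the authors' pipeline: the function name `preprocess` and the toy inputs are hypothetical, and the paper's own implementation relies on scikit-learn, where `SimpleImputer`, `StandardScaler`, and `LabelEncoder` provide equivalent behavior.

```python
import numpy as np

def preprocess(X, labels):
    """Mean-impute missing values per gene, z-score each gene, and label-encode classes."""
    X = np.array(X, dtype=float)          # copy so the caller's data is untouched
    gene_means = np.nanmean(X, axis=0)    # per-gene mean, ignoring NaNs
    rows, cols = np.where(np.isnan(X))
    X[rows, cols] = gene_means[cols]      # replace each NaN with its gene's mean
    std = X.std(axis=0)
    std[std == 0] = 1.0                   # constant genes stay at zero instead of dividing by 0
    X = (X - X.mean(axis=0)) / std
    classes, y = np.unique(np.asarray(labels), return_inverse=True)
    return X, y, classes

# Toy matrix: 3 samples x 2 genes, one missing entry.
X_raw = [[1.0, np.nan], [3.0, 4.0], [5.0, 8.0]]
labels_raw = ["DLBCL", "FL", "DLBCL"]
X_clean, y_encoded, class_names = preprocess(X_raw, labels_raw)
```

Imputation runs before normalization so that the imputed entries sit at the gene's mean and therefore map to zero after scaling.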

5.2. F-Score-Based Dimensionality Reduction

Dimensionality reduction was performed using the F-score filter, a univariate statistical method that evaluates each gene’s ability to distinguish between classes based on ANOVA F-values. This method was selected for its computational simplicity and established effectiveness in high-dimensional data scenarios. For each dataset, F-scores were computed using the f_classif function from scikit-learn and genes were ranked accordingly. The top 500 ranked genes (k = 500) were selected as input to the optimization algorithm. This threshold was empirically chosen to ensure a strong balance between reducing dimensionality and retaining class-discriminative information.
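The ranking step can be sketched with scikit-learn's `f_classif`, which returns one ANOVA F-value and one p-value per feature. The helper name `top_k_genes` and the synthetic demo data are hypothetical; only the use of `f_classif` and the value k = 500 come from the text.

```python
import numpy as np
from sklearn.feature_selection import f_classif

def top_k_genes(X, y, k=500):
    """Return indices of the k genes with the largest ANOVA F-values, best first."""
    f_values, _ = f_classif(X, y)
    f_values = np.nan_to_num(f_values, nan=0.0)  # constant genes yield NaN; rank them last
    k = min(k, X.shape[1])
    return np.argsort(f_values)[::-1][:k]

# Synthetic demo: 40 samples, 50 genes, with gene 7 made strongly class-dependent.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(40, 50))
y_demo = np.repeat([0, 1], 20)
X_demo[y_demo == 1, 7] += 5.0
selected = top_k_genes(X_demo, y_demo, k=10)
X_reduced = X_demo[:, selected]
```

Note that `f_classif` computes multiclass ANOVA F-values, so the same call covers both the binary and the multiclass datasets.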

5.3. Genetic-Embedded Nuclear Reaction Optimization (GNR)

To perform feature selection, we adopted the Genetic-Embedded Nuclear Reaction Optimization (GNR) algorithm, an enhanced variant of the Nuclear Reaction Optimization (NRO) algorithm. NRO is a physics-inspired metaheuristic that simulates nuclear fission and fusion processes to explore and exploit complex search spaces [13]. In our previous work [4], NRO demonstrated strong performance in selecting informative gene subsets from high-dimensional microarray data, outperforming several other metaheuristics in terms of classification accuracy and subset compactness.

However, during extended experimentation with NRO, we observed that the algorithm frequently plateaued in later generations. In many runs, the best fitness value remained unchanged across multiple consecutive generations, even though the algorithm continued iterating. This stagnation during the exploitation phase was evident from convergence curves and triggered early stopping in a significant number of trials. These findings suggested that while NRO was effective at exploration through its mutation-based fission mechanism, it lacked a strong local refinement component.

To address this limitation, we introduced a genetic operator into the fusion phase of NRO, resulting in the proposed GNR algorithm. Genetic Algorithms (GAs) are well-known for their effective exploitation strategies, particularly through crossover operations that allow the exchange of information between high-quality solutions. By embedding a uniform crossover within the fusion stage, GNR strengthens the exploitation capacity of NRO while preserving its robust exploratory behavior during fission.

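In the proposed GNR algorithm, the fusion mechanism is selectively enhanced by a genetic crossover operator. After the ionization step and before applying Equation (9) or (10), a uniform crossover may be triggered. This operator is applied only if a value drawn from the uniform distribution on [0, 1] is less than a predefined crossover probability $p_c$ (0.3 in this study). If this condition is met, the fusion step is replaced with a genetic recombination between the current best solution $X_{best}$ and a randomly selected solution $X_r$ from the population. Each component $d$ of the offspring is inherited from the best solution with probability 0.8 and from the random peer otherwise, so the offspring solution is computed as:

$$X_{i,d}^{Fu} = \begin{cases} X_{best,d}, & \text{if } rand_d < 0.8 \\ X_{r,d}, & \text{otherwise} \end{cases}$$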

If the crossover condition is not satisfied, the algorithm proceeds with the original fusion mechanism using Equations (9) or (10), depending on the stagnation status. This probabilistic integration of genetic crossover improves the algorithm’s exploitation capability, promotes useful gene mixing, and reduces the likelihood of premature convergence without interfering with the fission-driven exploration process.
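The probabilistic switch between crossover and the original fusion update can be sketched as below. The probabilities 0.3 and 0.8 are the values stated in the paper; the function name, its signature, and the `standard_fusion` callable (a stand-in for the original NRO fusion update, which is not implemented here) are hypothetical.

```python
import numpy as np

def fuse_with_crossover(x_ion, x_best, population, standard_fusion,
                        p_crossover=0.3, p_best=0.8, rng=None):
    """With probability p_crossover, replace fusion by uniform crossover with x_best.

    standard_fusion is a hypothetical callable standing in for the original
    NRO fusion update; it is not implemented here.
    """
    rng = np.random.default_rng() if rng is None else rng
    if rng.random() < p_crossover:
        peer = population[rng.integers(len(population))]   # randomly selected solution
        take_best = rng.random(x_best.shape) < p_best      # per-gene inheritance mask
        return np.where(take_best, x_best, peer)
    return standard_fusion(x_ion)
```

Because the mask is drawn independently for each component, an offspring typically copies most positions from the best solution while retaining a few from the peer, which keeps some diversity in an otherwise elitist step.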

In the GNR algorithm, each candidate solution is encoded as a continuous vector in $[0, 1]^D$, where $D$ is the number of features (genes) after F-score filtering. The population is initialized with 500 such vectors. Optimization proceeds over a maximum of 30 generations, with early stopping enabled if no improvement is observed in the best solution for five consecutive generations. The full set of parameters used for GNR is summarized in Table 11.
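The initialization and the patience rule described above can be sketched as a generic loop. The names `run_with_patience`, `fitness`, and `step` are hypothetical: `step` stands in for one full fission and fusion pass, which is not reproduced here, and `fitness` for the SVM-based evaluation. Only the values 500, 30, and 5 are taken from the text.

```python
import numpy as np

def run_with_patience(fitness, step, dim, pop_size=500, max_gen=30, patience=5, seed=0):
    """Generic optimization loop with generation-level early stopping (sketch)."""
    rng = np.random.default_rng(seed)
    # Continuous encoding: NumPy samples from the half-open interval [0, 1).
    population = rng.random((pop_size, dim))
    scores = np.array([fitness(x) for x in population])
    best_idx = int(np.argmax(scores))
    best_x, best_f = population[best_idx].copy(), float(scores[best_idx])

    no_improve = 0
    generations_run = 0
    for _ in range(max_gen):
        generations_run += 1
        # Placeholder for one fission + fusion pass over the population.
        population = step(population, best_x, rng)
        scores = np.array([fitness(x) for x in population])
        gen_idx = int(np.argmax(scores))
        if scores[gen_idx] > best_f:
            best_x, best_f = population[gen_idx].copy(), float(scores[gen_idx])
            no_improve = 0
        else:
            no_improve += 1
            if no_improve >= patience:
                break  # no improvement for `patience` consecutive generations
    return best_x, best_f, generations_run
```

The counter is updated once per generation, so a run stops only after five full generations without a better solution, which matches the stopping rule stated in the text.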

To determine the optimal number of genes, we tested subsets ranging from 2 to 25 genes. For each dataset, we report the smallest number of genes that achieved 100% accuracy. This strategy highlights the minimal subset required to reach perfect classification, ensuring both performance and compactness in the selected gene panels.
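The reporting rule above amounts to a sweep over subset sizes that stops at the first size reaching the target accuracy. The sketch below assumes a hypothetical `evaluate(k)` callback that runs the optimizer for subset size `k` and returns the best accuracy found; that callback is not part of the original text.

```python
def smallest_subset_size(evaluate, sizes=range(2, 26), target=1.0):
    """Return (k, results) for the smallest k whose accuracy reaches target.

    `sizes` must be in ascending order so that the first hit is the smallest.
    Returns (None, results) if no size reaches the target.
    """
    results = {}
    for k in sizes:
        results[k] = evaluate(k)
        if results[k] >= target:
            return k, results
    return None, results
```

For example, with a toy callback `lambda k: 1.0 if k >= 7 else 0.9`, the function returns 7 together with the accuracies recorded for sizes 2 through 7.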

This embedded genetic mechanism allows GNR to maintain NRO’s balance between exploration and exploitation while enhancing its ability to fine-tune gene subsets in later generations. The overall structure of the GNR algorithm, including initialization, dimensionality reduction, fission, fusion with crossover, fitness evaluation, and stopping conditions, is summarized in Algorithm 1.

Algorithm 1. Genetic-Embedded Nuclear Reaction Optimization (GNR)

    Require: Dataset D, population size N = 500, max generations T = 30, early-stopping patience P = 5
    Ensure: Optimized subset of genes

    ▷ Preprocessing and filtering
    Handle missing values (mean imputation)
    Normalize features using Z-score
    Encode categorical labels (if any)
    Compute F-score for each gene
    Select top 500 genes based on F-score

    ▷ Initialization
    for each subset size k ∈ {5, 10, 15} do
        Randomly initialize a population of N continuous vectors x_i ∈ [0, 1]^D
        Evaluate fitness f(x_i) using SVM with LOOCV
        Set x_best ← best solution, no_improve ← 0

        for g = 1 to T do
            ▷ Fission phase: exploration via perturbation
            for each solution x_i do
                Generate new solution using Equation (2)
                Compute dynamic step sizes using Equations (3) and (4)
                Apply mutation factors using Equations (5) and (6)
            end for

            ▷ Fusion phase: exploitation with embedded crossover
            for each solution x_i do
                Apply ionization via Equation (7)
                if solutions are similar then
                    Apply Lévy flight adjustment using Equation (8)
                end if
                Generate x_new as follows:
                if rand < 0.3 then
                    Select random solution x_r
                    for each gene d ∈ {1, ..., D} do
                        x_new[d] ← x_best[d] if rand < 0.8; else x_r[d]
                    end for
                else
                    Apply fusion using Equation (9) or (10) depending on similarity
                end if
            end for

            ▷ Fitness evaluation and best-solution update
            improved ← false
            for each solution x_i do
                Evaluate fitness f(x_i) via SVM + LOOCV
                if f(x_i) > f(x_best) then
                    Update x_best ← x_i, improved ← true
                end if
            end for
            if improved then no_improve ← 0 else no_improve ← no_improve + 1
            if no_improve ≥ P then
                break
            end if
        end for
    end for

    ▷ Final output
    return best gene subset
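The preprocessing and filtering steps of Algorithm 1 can be illustrated with a short NumPy sketch. It assumes the F-score of Chen and Lin [11], extended to more than two classes by summing over classes; the section does not state which variant was used, so this choice, like the function names `f_scores` and `preprocess_and_filter`, is an assumption.

```python
import numpy as np

def f_scores(X, y):
    """Per-gene F-score: between-class spread over within-class variance.

    Assumed form (Chen-Lin style, summed over classes). Each class needs at
    least two samples because sample variances use ddof=1.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for label in np.unique(y):
        Xc = X[y == label]
        between += (Xc.mean(axis=0) - overall_mean) ** 2
        within += Xc.var(axis=0, ddof=1)
    return between / (within + 1e-12)  # small constant avoids division by zero

def preprocess_and_filter(X, y, top=500):
    """Mean-impute, z-score, and keep the `top` genes ranked by F-score."""
    X = np.asarray(X, dtype=float)
    col_mean = np.nanmean(X, axis=0)
    X = np.where(np.isnan(X), col_mean, X)           # mean imputation
    std = X.std(axis=0)
    Xz = (X - X.mean(axis=0)) / np.where(std == 0, 1.0, std)  # z-score
    keep = np.argsort(f_scores(Xz, y))[::-1][:top]   # highest scores first
    return Xz[:, keep], keep
```

The returned `keep` array holds the column indices of the retained genes in descending F-score order, so the filtered matrix can be mapped back to the original gene identifiers.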

5.4. Classification and Fitness Evaluation

Support Vector Machines (SVMs) were adopted as the classification model to evaluate the effectiveness of the gene subsets selected by the optimization algorithm. SVMs are widely recognized for their strong performance on high-dimensional gene expression data, as shown in both our previous work and in numerous studies across the bioinformatics literature. Their ability to define optimal hyperplanes in sparse feature spaces makes them particularly suitable for microarray-based cancer classification tasks.

In this study, SVMs were configured with a linear kernel and a regularization parameter of C = 1. This configuration was chosen to maintain a good balance between maximizing the decision margin and maintaining sensitivity to misclassifications. The same settings were applied across all datasets to ensure consistent model evaluation.

The fitness of the selected gene subsets was measured based on classification accuracy using Leave-One-Out Cross-Validation (LOOCV). LOOCV is considered a robust evaluation method, especially when dealing with datasets that have a small number of samples. It works by treating each sample in turn as a test instance while the remaining samples are used for training. This process continues until every sample has been used once as a test case, providing a thorough and unbiased estimate of prediction accuracy.
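A fitness function of this kind can be sketched with scikit-learn, using the linear kernel and C = 1 stated above. The function name `loocv_fitness` and the `gene_idx` argument (column indices of the selected genes) are assumptions; how a continuous solution vector is decoded into gene indices is not specified in this section and is left out.

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut, cross_val_score
from sklearn.svm import SVC

def loocv_fitness(X, y, gene_idx):
    """LOOCV accuracy of a linear SVM (C = 1) restricted to the selected genes."""
    clf = SVC(kernel="linear", C=1.0)
    # Each LOOCV fold scores a single held-out sample as 0 or 1,
    # so the mean over folds is the classification accuracy.
    scores = cross_val_score(clf, X[:, gene_idx], y, cv=LeaveOneOut())
    return float(scores.mean())
```

Since one model is trained per sample, the cost of a single fitness evaluation grows with the number of samples in the dataset.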

To compare performance, 5-fold and 10-fold cross-validation techniques were also applied to each dataset. These methods produced classification accuracies that were consistently 1 to 2 percent lower than those obtained using LOOCV. Although LOOCV was more computationally demanding, often requiring between two and ten times more time depending on the dataset, it delivered more reliable results. Since each sample in small datasets has a significant influence on learning, LOOCV was selected to minimize bias and enhance the model’s stability. Previous research in bioinformatics has also confirmed that LOOCV improves generalization and lowers the risk of overfitting in high-dimensional gene expression classification problems [20,21,22,23,33].
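One way to see where the extra cost of LOOCV comes from is to count model fits: k-fold cross-validation trains k models, whereas LOOCV is the special case k = n and trains one model per sample. The helper below is a plain-Python sketch with a hypothetical name, `kfold_indices`; it uses contiguous folds without shuffling or stratification, which a real experiment would normally add.

```python
def kfold_indices(n_samples, k):
    """Split range(n_samples) into k contiguous (train, test) folds.

    Setting k == n_samples yields leave-one-out cross-validation.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)  # spread the remainder over the first folds
        test = list(range(start, start + size))
        train = [j for j in range(n_samples) if j < start or j >= start + size]
        folds.append((train, test))
        start += size
    return folds
```

For a hypothetical dataset of 62 samples, `kfold_indices(62, 5)` and `kfold_indices(62, 10)` produce 5 and 10 folds, while `kfold_indices(62, 62)` produces 62 folds, each holding out a single sample.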

6. Conclusions

This study presented Genetic-Embedded Nuclear Reaction Optimization (GNR), a hybrid gene selection algorithm designed to improve cancer classification accuracy by enhancing the exploitation capabilities of Nuclear Reaction Optimization (NRO) through the integration of a genetic uniform crossover mechanism. By coupling this metaheuristic with a statistical F-score filter, GNR achieves a balanced trade-off between exploration and refinement in high-dimensional gene expression datasets. Experimental validation on six benchmark microarray datasets demonstrated that GNR consistently achieved perfect classification accuracy using compact gene subsets, outperforming both the original NRO and a range of competitive hybrid algorithms in terms of both accuracy and parsimony. The inclusion of the genetic operator significantly mitigated stagnation during the later optimization stages, a common limitation observed in traditional NRO implementations. These results underscore the value of hybridizing bio-inspired optimization techniques with genetic components, especially in bioinformatics tasks characterized by high feature dimensionality and limited samples.

Future work may explore further enhancements to the GNR framework by integrating biological pathway information, expanding its application to other omics data types, and conducting comprehensive biological validation of the selected gene panels. Although this study focused on microarray datasets, the GNR algorithm is compatible with RNA-seq data when appropriately normalized. Researchers interested in applying GNR to RNA-seq or other modern high-throughput data types are encouraged to adapt the framework accordingly and assess its performance in those settings.

Author Contributions

Conceptualization, H.A.; data curation, S.A.; formal analysis, H.A. and S.A.; investigation, S.A.; methodology, H.A.; supervision, H.A.; validation, S.A.; writing—original draft, S.A.; writing—review and editing, S.A. and H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ongoing Research Funding Program (ORF-2025-904), King Saud University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

The authors gratefully acknowledge the support of the Ongoing Research Funding Program (ORF-2025-904), King Saud University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Fitzgerald, R.C.; Antoniou, A.C.; Fruk, L.; Rosenfeld, N. The future of early cancer detection. Nat. Med. 2022, 28, 666–677.

2. Krzyszczyk, P.; Acevedo, A.; Davidoff, E.J.; Timmins, L.M.; Marrero-Berrios, I.; Patel, M.; White, C.; Lowe, C.; Sherba, J.J.; Hartmanshenn, C.; et al. The growing role of precision and personalized medicine for cancer treatment. Technology 2018, 6, 79–100.

3. Alkamli, S.S.; Alshamlan, H.M. Performance Evaluation of Hybrid Bio-Inspired and Deep Learning Algorithms in Gene Selection and Cancer Classification. IEEE Access 2025, 13, 59977–59990.

4. Alkamli, S.; Alshamlan, H. Evaluating the Nuclear Reaction Optimization (NRO) Algorithm for Gene Selection in Cancer Classification. Diagnostics 2025, 15, 927.

5. Hira, Z.M.; Gillies, D.F. A Review of Feature Selection and Feature Extraction Methods Applied on Microarray Data. Adv. Bioinform. 2015, 2015, 198363.

6. Maćkiewicz, A.; Ratajczak, W. Principal components analysis (PCA). Comput. Geosci. 1993, 19, 303–342.

7. Xanthopoulos, P.; Pardalos, P.M.; Trafalis, T.B. Linear Discriminant Analysis. In Robust Data Mining; Xanthopoulos, P., Pardalos, P.M., Trafalis, T.B., Eds.; Springer: New York, NY, USA, 2013; pp. 27–33.

8. Sánchez-Maroño, N.; Alonso-Betanzos, A.; Tombilla-Sanromán, M. Filter Methods for Feature Selection – A Comparative Study. In Intelligent Data Engineering and Automated Learning – IDEAL 2007; Yin, H., Tino, P., Corchado, E., Byrne, W., Yao, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 178–187.

9. Kohavi, R.; John, G.H. Wrappers for feature subset selection. Artif. Intell. 1997, 97, 273–324.

10. Hsu, H.-H.; Hsieh, C.-W.; Lu, M.-D. Hybrid feature selection by combining filters and wrappers. Expert Syst. Appl. 2011, 38, 8144–8150.

11. Chen, Y.-W.; Lin, C.-J. Combining SVMs with Various Feature Selection Strategies. In Feature Extraction: Foundations and Applications; Guyon, I., Nikravesh, M., Gunn, S., Zadeh, L.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 315–324.

12. Liu, H.; Yu, L. Toward integrating feature selection algorithms for classification and clustering. IEEE Trans. Knowl. Data Eng. 2005, 17, 491–502.

13. Wei, Z.; Huang, C.; Wang, X.; Han, T.; Li, Y. Nuclear Reaction Optimization: A Novel and Powerful Physics-Based Algorithm for Global Optimization. IEEE Access 2019, 7, 66084–66109.

14. Zhuoran, Z.; Changqiang, H.; Hanqiao, H.; Shangqin, T.; Kangsheng, D. An optimization method: Hummingbirds optimization algorithm. J. Syst. Eng. Electron. 2018, 29, 386–404.

15. Li, S.; Wu, X.; Tan, M. Gene selection using hybrid particle swarm optimization and genetic algorithm. Soft Comput. 2008, 12, 1039–1048.

16. Chuang, L.-Y.; Yang, C.-H.; Li, J.-C.; Yang, C.-H. A Hybrid BPSO-CGA Approach for Gene Selection and Classification of Microarray Data. Available online: https://www.liebertpub.com/doi/10.1089/cmb.2010.0064 (accessed on 10 May 2025).

17. Alshamlan, H.M.; Badr, G.H.; Alohali, Y.A. Genetic Bee Colony (GBC) algorithm: A new gene selection method for microarray cancer classification. Comput. Biol. Chem. 2015, 56, 49–60.

18. A Hybrid Gene Selection Algorithm for Microarray Cancer Classification Using Genetic Algorithm and Learning Automata. ScienceDirect. Available online: https://www.sciencedirect.com/science/article/pii/S2352914817301284 (accessed on 10 May 2025).

19. Nagra, A.A.; Khan, A.H.; Abubakar, M.; Faheem, M.; Rasool, A.; Masood, K.; Hussain, M. A gene selection algorithm for microarray cancer classification using an improved particle swarm optimization. Sci. Rep. 2024, 14, 19613.

20. AlShamlan, H.; AlMazrua, H. Enhancing Cancer Classification through a Hybrid Bio-Inspired Evolutionary Algorithm for Biomarker Gene Selection. Comput. Mater. Contin. 2024, 79, 675–694.

21. Nssibi, M.; Manita, G.; Chhabra, A.; Mirjalili, S.; Korbaa, O. Gene selection for high dimensional biological datasets using hybrid island binary artificial bee colony with chaos game optimization. Artif. Intell. Rev. 2024, 57, 51.

22. Pirgazi, J.; Kallehbasti, M.M.P.; Sorkhi, A.G.; Kermani, A. An efficient hybrid filter-wrapper method based on improved Harris Hawks optimization for feature selection. BioImpacts 2024, 15, 30340.

23. Dabba, A.; Tari, A.; Meftali, S. Hybridization of Moth flame optimization algorithm and quantum computing for gene selection in microarray data. J. Ambient Intell. Humaniz. Comput. 2021, 12, 2731–2750.

24. Motieghader, H.; Najafi, A.; Sadeghi, B.; Masoudi-Nejad, A. A hybrid gene selection algorithm for microarray cancer classification using genetic algorithm and learning automata. Inform. Med. Unlocked 2017, 9, 246–254.

25. Hameed, S.S.; Muhammad, F.F.; Hassan, R.; Saeed, F. Gene Selection and Classification in Microarray Datasets using a Hybrid Approach of PCC-BPSO/GA with Multi Classifiers. J. Comput. Sci. 2018, 14, 868–880.

26. GeneCards – Human Genes | Gene Database | Gene Search. Available online: https://www.genecards.org/ (accessed on 13 May 2025).

27. Alon, U.; Barkai, N.; Notterman, D.A.; Gish, K.; Ybarra, S.; Mack, D.; Levine, A.J. Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. USA 1999, 96, 6745–6750.

28. Beer, D.G.; Kardia, S.L.R.; Huang, C.-C.; Giordano, T.J.; Levin, A.M.; Misek, D.E.; Lin, L.; Chen, G.; Gharib, T.G.; Thomas, D.G.; et al. Gene-expression profiles predict survival of patients with lung adenocarcinoma. Nat. Med. 2002, 8, 816–824.

29. Armstrong, S.A.; Staunton, J.E.; Silverman, L.B.; Pieters, R.; den Boer, M.L.; Minden, M.D.; Sallan, S.E.; Lander, E.S.; Golub, T.R.; Korsmeyer, S.J. MLL translocations specify a distinct gene expression profile that distinguishes a unique leukemia. Nat. Genet. 2002, 30, 41–47.

30. Alizadeh, A.A.; Eisen, M.B.; Davis, R.E.; Ma, C.; Lossos, I.S.; Rosenwald, A.; Boldrick, J.C.; Sabet, H.; Tran, T.; Yu, X.; et al. Distinct types of diffuse large B-cell lymphoma identified by gene expression profiling. Nature 2000, 403, 503–511.

31. Khan, J.; Wei, J.S.; Ringnér, M.; Saal, L.H.; Ladanyi, M.; Westermann, F.; Berthold, F.; Schwab, M.; Antonescu, C.R.; Peterson, C.; et al. Classification and diagnostic prediction of cancers using gene expression profiling and artificial neural networks. Nat. Med. 2001, 7, 673–679.

32. Golub, T.R.; Slonim, D.K.; Tamayo, P.; Huard, C.; Gaasenbeek, M.; Mesirov, J.P.; Coller, H.; Loh, M.L.; Downing, J.R.; Caligiuri, M.A.; et al. Molecular Classification of Cancer: Class Discovery and Class Prediction by Gene Expression Monitoring. Science 1999, 286, 531–537.

33. Lumumba, V.; Sang, D.; Mpaine, M.; Makena, N.; Daniel Kavita, M. Comparative Analysis of Cross-Validation Techniques: LOOCV, K-folds Cross-Validation, and Repeated K-folds Cross-Validation in Machine Learning Models. Am. J. Theor. Appl. Stat. 2024, 13, 127–137.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).