AI-Powered Mice Behavior Tracking and Its Application for Neuronal Manifold Analysis Based on Hippocampal Ensemble Activity in an Alzheimer’s Disease Mice Model

Abstract

1. Introduction

2. Results

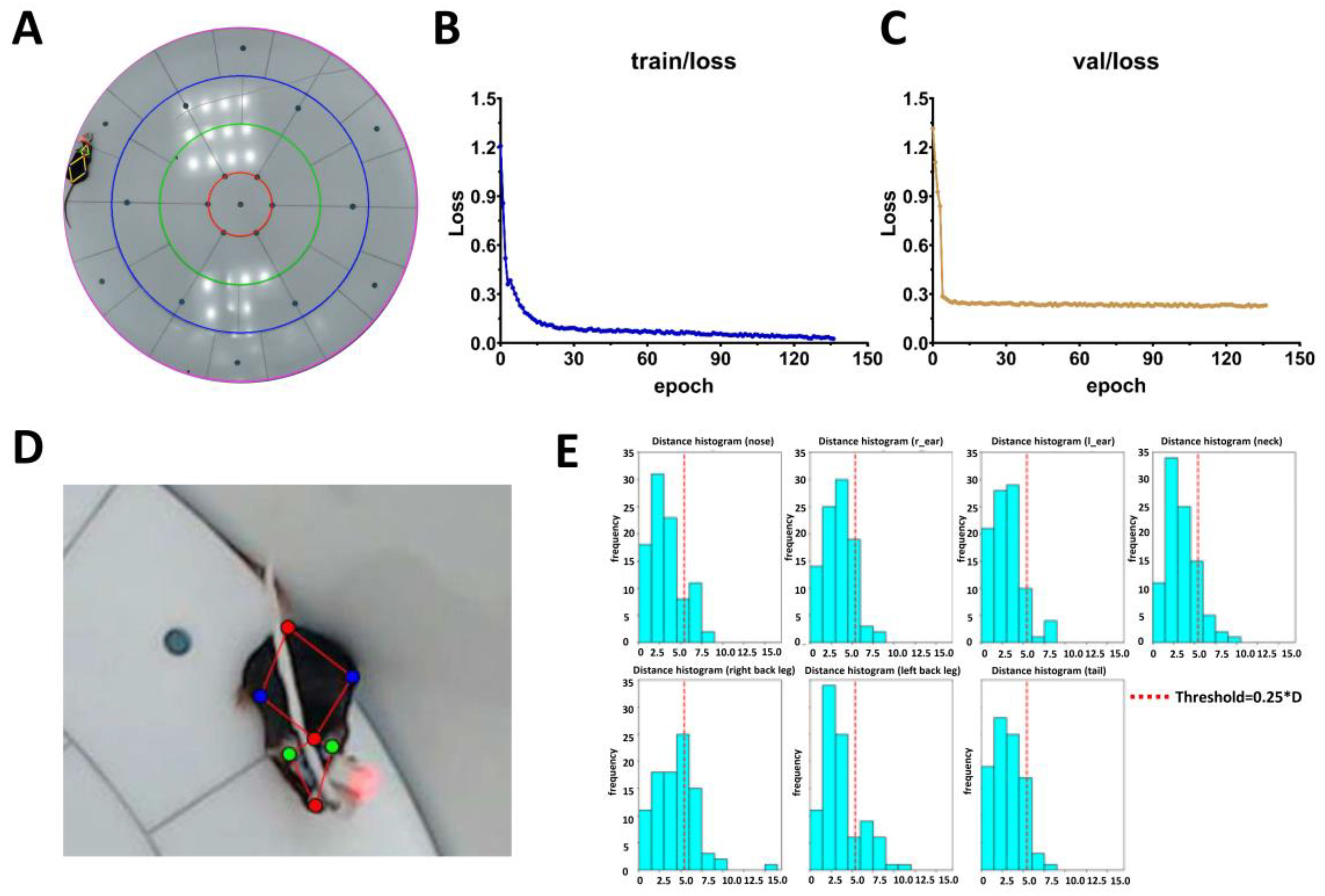

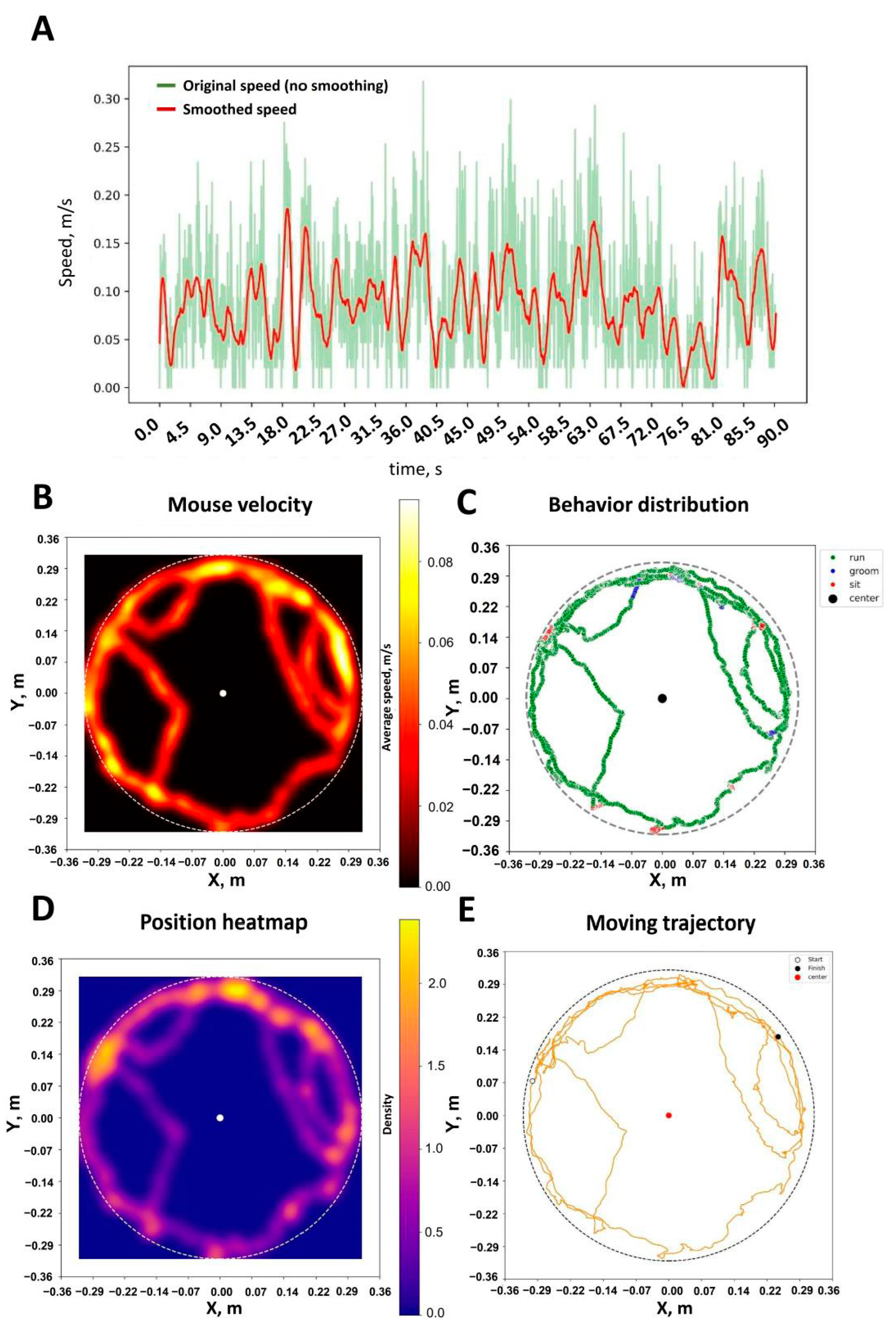

2.1. Artificial Intelligence-Powered Mice Tracking Using Pretrained YOLO-Pose-v8

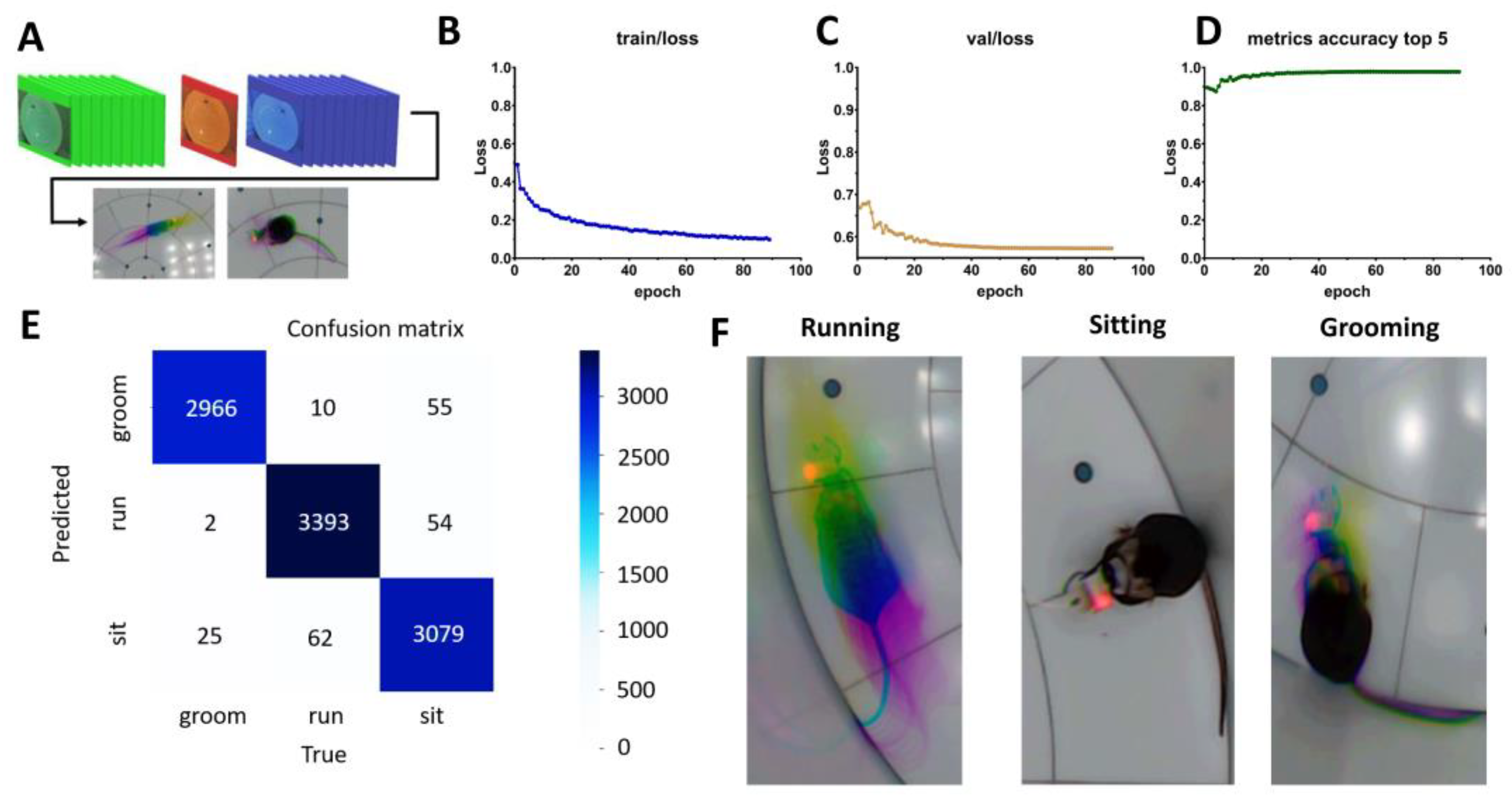

2.2. Artificial Intelligence-Powered Mice Behavior Scoring Using Pretrained YOLO-Pose-v11

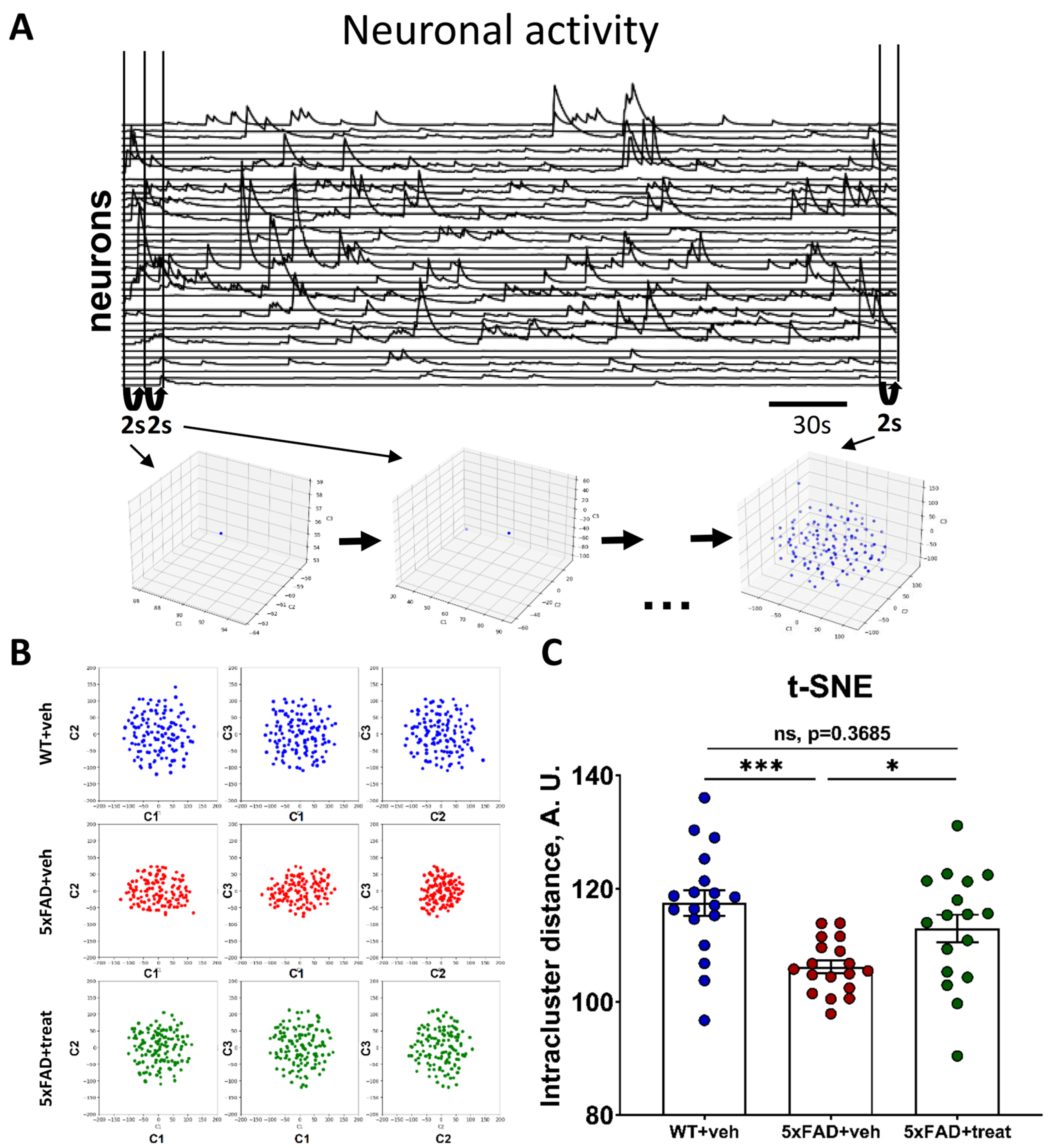

2.3. Neuronal Manifold Construction in Normal and Pathological Conditions Based on Miniature Fluorescence Calcium Imaging

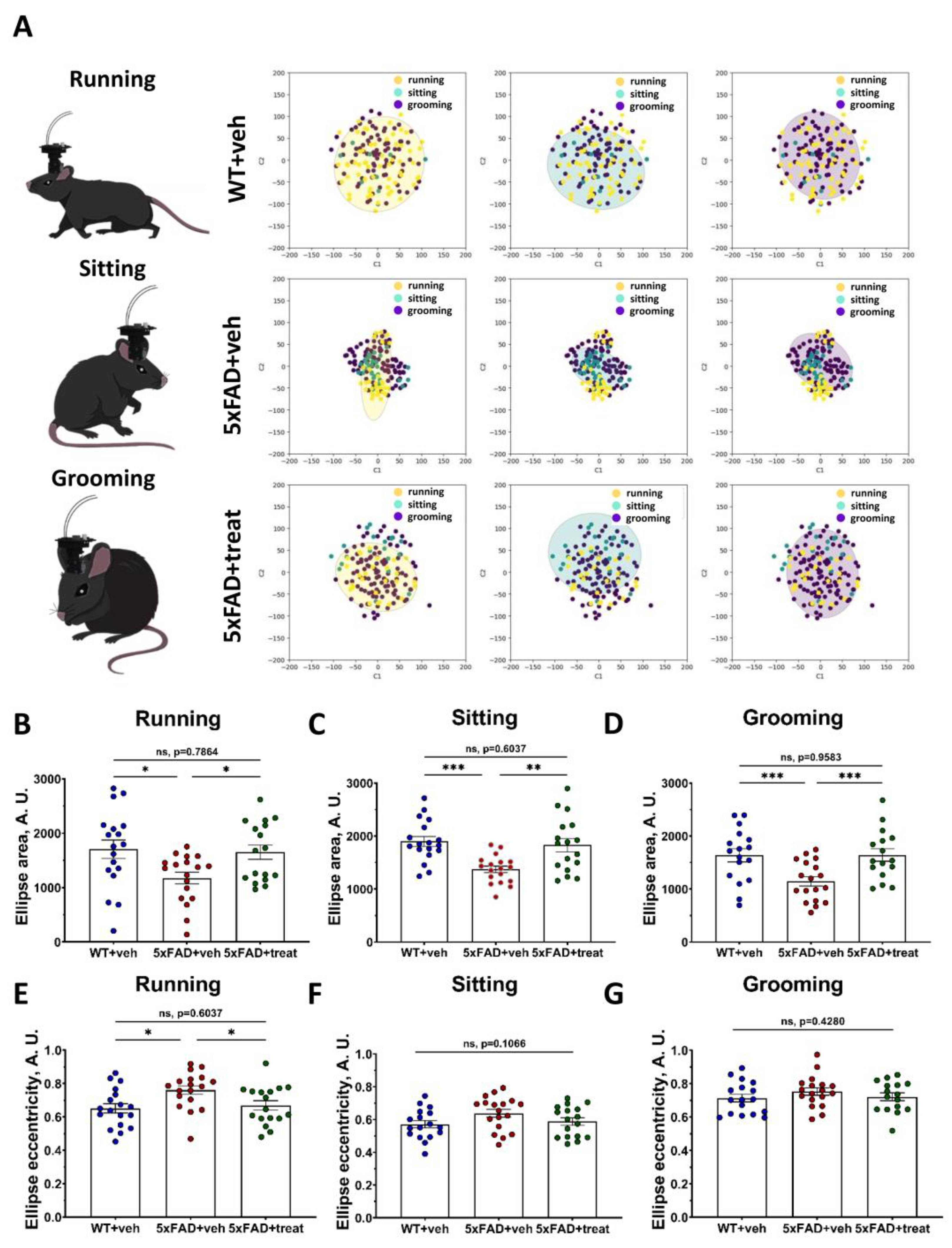

2.4. Altered Neuronal Manifold Composition in the Transgenic 5xFAD Mice During Different Behavioral Types

3. Discussion

4. Materials and Methods

4.1. Animals

4.2. Mice Treatment

4.3. Neuronal Network Training for Mice Tracking and Behavioral Scoring

4.4. Viral Constructs Delivery and GRIN-Lens Implantation

4.5. Hippocampal Neuronal Activity Recordings Under Freely Behaving Conditions

4.6. Processing of Miniscope Recordings

4.7. Datasets

4.8. Neuronal Manifold Construction Based on the Neuronal Calcium Traces

4.9. Preprocessing and Temporal Aggregation of Neural Activity

4.10. Methods for Dimensionality Reduction

4.11. Error Ellipse Estimation
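
An error ellipse for a two-dimensional point cloud (for example, manifold coordinates after dimensionality reduction) is commonly derived from the sample covariance matrix. The sketch below illustrates that general technique only; it is not taken from the authors' procedure. The function name `error_ellipse`, the 95% confidence level, and the Gaussian assumption behind the chi-square scaling are all assumptions made for illustration.

```python
import numpy as np


def error_ellipse(points, confidence=0.95):
    """Covariance-based confidence ellipse for 2D points (hypothetical helper).

    Returns the center, the semi-major and semi-minor axis lengths, and the
    orientation of the major axis in degrees.
    """
    points = np.asarray(points, dtype=float)
    center = points.mean(axis=0)
    cov = np.cov(points, rowvar=False)  # 2 x 2 sample covariance

    # eigh returns eigenvalues in ascending order for symmetric matrices.
    eigvals, eigvecs = np.linalg.eigh(cov)
    eigvals = np.clip(eigvals, 0.0, None)  # guard against tiny negatives

    # For 2 degrees of freedom the chi-square quantile has a closed form:
    # q = -2 * ln(1 - confidence). Assumes roughly Gaussian scatter.
    scale = -2.0 * np.log(1.0 - confidence)
    minor, major = np.sqrt(scale * eigvals)

    major_axis = eigvecs[:, 1]
    angle = np.degrees(np.arctan2(major_axis[1], major_axis[0]))
    return center, float(major), float(minor), float(angle)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cloud = rng.normal(size=(500, 2)) * [3.0, 1.0]
    print(error_ellipse(cloud))
```

The orientation is defined only up to 180 degrees, because an eigenvector and its negation describe the same axis.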

4.12. Control Analysis with Phase-Randomized Data
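
Phase randomization is a standard way to build surrogate signals that keep the amplitude spectrum (and hence the autocorrelation) of a trace while destroying its temporal alignment with other signals. The sketch below shows the general idea for a single calcium trace; whether the authors used exactly this scheme is an assumption, and `phase_randomize` is a hypothetical helper name.

```python
import numpy as np


def phase_randomize(trace, rng):
    """Return a surrogate trace with the same amplitude spectrum.

    Each Fourier component is rotated by an independent random phase.
    The DC term (and the Nyquist term for even lengths) is left untouched
    so that the surrogate stays real-valued and keeps the original mean.
    """
    trace = np.asarray(trace, dtype=float)
    n = trace.size
    spectrum = np.fft.rfft(trace)

    shifts = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.size)
    shifts[0] = 0.0  # keep the mean unchanged
    if n % 2 == 0:
        shifts[-1] = 0.0  # Nyquist bin must remain real

    return np.fft.irfft(spectrum * np.exp(1j * shifts), n=n)


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    t = np.linspace(0.0, 20.0, 200)
    trace = np.sin(t) + 0.3 * rng.normal(size=t.size)
    surrogate = phase_randomize(trace, rng)
    print(np.allclose(np.abs(np.fft.rfft(trace)),
                      np.abs(np.fft.rfft(surrogate))))
```

For a population recording, the function would be applied to each neuron's trace separately with independent random phases, which removes between-neuron timing relationships while leaving single-neuron spectra intact.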

4.13. Statistics

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Key Point/Neuronal Network Approach | YOLO-Pose-v8 | DeepLabCut |
|---|---|---|
| Nose | 81.72 | 3.9 |
| Left ear | 88.17 | 26.0 |
| Right ear | 88.17 | 2.4 |
| Tail | 86.02 | 9.6 |

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Encoder performance based on the neuronal manifolds | | | | |
| WT+veh vs. 5xFAD+veh | 0.750 ± 0.016 ** | 0.764 ± 0.017 ** | 0.834 ± 0.019 * | 0.814 ± 0.017 ** |
| 5xFAD+veh vs. 5xFAD+treat | 0.673 ± 0.014 ** | 0.701 ± 0.010 ** | 0.7265 ± 0.020 ** | 0.700 ± 0.015 ** |
| WT+veh vs. 5xFAD+treat | 0.446 ± 0.014 ns | 0.395 ± 0.016 ns | 0.436 ± 0.013 ns | 0.402 ± 0.014 ns |
| Shuffled | | | | |
| WT+veh vs. 5xFAD+veh | 0.612 ± 0.038 | 0.608 ± 0.043 | 0.642 ± 0.047 | 0.598 ± 0.048 |
| 5xFAD+veh vs. 5xFAD+treat | 0.570 ± 0.024 | 0.611 ± 0.024 | 0.613 ± 0.031 | 0.596 ± 0.026 |
| WT+veh vs. 5xFAD+treat | 0.479 ± 0.022 | 0.429 ± 0.024 | 0.493 ± 0.028 | 0.448 ± 0.025 |

| Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Encoder performance based on the neuronal manifolds (running epochs) | | | | |
| WT+veh vs. 5xFAD+veh | 0.684 ± 0.007 **** | 0.704 ± 0.009 **** | 0.683 ± 0.010 *** | 0.673 ± 0.008 **** |
| 5xFAD+veh vs. 5xFAD+treat | 0.623 ± 0.012 **** | 0.663 ± 0.015 **** | 0.664 ± 0.011 *** | 0.648 ± 0.011 *** |
| WT+veh vs. 5xFAD+treat | 0.420 ± 0.010 ## | 0.363 ± 0.013 ## | 0.408 ± 0.018 # | 0.374 ± 0.014 # |
| Shuffled | | | | |
| WT+veh vs. 5xFAD+veh | 0.494 ± 0.023 | 0.500 ± 0.028 | 0.511 ± 0.035 | 0.475 ± 0.031 |
| 5xFAD+veh vs. 5xFAD+treat | 0.470 ± 0.024 | 0.500 ± 0.028 | 0.471 ± 0.044 | 0.466 ± 0.035 |
| WT+veh vs. 5xFAD+treat | 0.498 ± 0.020 | 0.447 ± 0.025 | 0.503 ± 0.035 | 0.458 ± 0.028 |
| Encoder performance based on the neuronal manifolds (sitting epochs) | | | | |
| WT+veh vs. 5xFAD+veh | 0.743 ± 0.012 **** | 0.758 ± 0.013 **** | 0.769 ± 0.014 *** | 0.743 ± 0.012 **** |
| 5xFAD+veh vs. 5xFAD+treat | 0.634 ± 0.009 ** | 0.666 ± 0.006 ** | 0.692 ± 0.015 * | 0.663 ± 0.011 ** |
| WT+veh vs. 5xFAD+treat | 0.495 ± 0.010 ns | 0.450 ± 0.012 ns | 0.504 ± 0.008 ns | 0.461 ± 0.009 ns |
| Shuffled | | | | |
| WT+veh vs. 5xFAD+veh | 0.539 ± 0.031 | 0.534 ± 0.033 | 0.576 ± 0.042 | 0.529 ± 0.038 |
| 5xFAD+veh vs. 5xFAD+treat | 0.525 ± 0.029 | 0.553 ± 0.029 | 0.573 ± 0.042 | 0.549 ± 0.035 |
| WT+veh vs. 5xFAD+treat | 0.490 ± 0.023 | 0.433 ± 0.028 | 0.489 ± 0.041 | 0.445 ± 0.033 |
| Encoder performance based on the neuronal manifolds (grooming epochs) | | | | |
| WT+veh vs. 5xFAD+veh | 0.666 ± 0.014 *** | 0.678 ± 0.017 *** | 0.675 ± 0.012 *** | 0.658 ± 0.014 *** |
| 5xFAD+veh vs. 5xFAD+treat | 0.665 ± 0.013 ** | 0.692 ± 0.014 **** | 0.702 ± 0.013 * | 0.681 ± 0.012 ** |
| WT+veh vs. 5xFAD+treat | 0.467 ± 0.015 ns | 0.379 ± 0.026 ns | 0.339 ± 0.025 ns | 0.339 ± 0.024 ns |
| Shuffled | | | | |
| WT+veh vs. 5xFAD+veh | 0.523 ± 0.026 | 0.528 ± 0.032 | 0.542 ± 0.028 | 0.502 ± 0.026 |
| 5xFAD+veh vs. 5xFAD+treat | 0.513 ± 0.029 | 0.534 ± 0.025 | 0.648 ± 0.036 | 0.575 ± 0.030 |
| WT+veh vs. 5xFAD+treat | 0.502 ± 0.026 | 0.425 ± 0.040 | 0.385 ± 0.041 | 0.384 ± 0.038 |
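
The classifier tables report accuracy, precision, recall and F1-score next to a "Shuffled" control. As a reminder of how these four quantities relate to each other, the sketch below computes them from binary labels and estimates a chance-level baseline by repeatedly permuting the true labels. It assumes that "Shuffled" means label permutation, which the tables do not state; the function names `binary_metrics` and `shuffled_control` and the toy labels are hypothetical.

```python
import random


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for a binary classification."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def shuffled_control(y_true, y_pred, n_shuffles=1000, seed=0):
    """Mean metrics after permuting the true labels (assumed control)."""
    rng = random.Random(seed)
    labels = list(y_true)
    totals = {"accuracy": 0.0, "precision": 0.0, "recall": 0.0, "f1": 0.0}
    for _ in range(n_shuffles):
        rng.shuffle(labels)
        metrics = binary_metrics(labels, y_pred)
        for key in totals:
            totals[key] += metrics[key]
    return {key: value / n_shuffles for key, value in totals.items()}


if __name__ == "__main__":
    # Toy labels: 1 = first group, 0 = second group (illustrative only).
    y_true = [1, 1, 1, 0, 0, 0, 1, 0]
    y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
    print(binary_metrics(y_true, y_pred))
    print(shuffled_control(y_true, y_pred))
```

With balanced groups, a classifier that carries no group information should score near 0.5 on accuracy after shuffling, which is the reference level against which the unshuffled rows are read.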

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gerasimov, E.; Karasev, V.; Umnov, S.; Chukanov, V.; Pchitskaya, E. AI-Powered Mice Behavior Tracking and Its Application for Neuronal Manifold Analysis Based on Hippocampal Ensemble Activity in an Alzheimer’s Disease Mice Model. Int. J. Mol. Sci. 2025, 26, 7180. https://doi.org/10.3390/ijms26157180
