Abstract

Protein function prediction plays a crucial role in uncovering the molecular mechanisms underlying life processes in the post-genomic era. However, with the widespread adoption of high-throughput sequencing technologies, the pace of protein function annotation significantly lags behind that of sequence discovery, highlighting the urgent need for more efficient and reliable predictive methods. To address the problem of existing methods ignoring the hierarchical structure of gene ontology terms and making it challenging to dynamically associate protein features with functional contexts, we propose a novel protein function prediction framework, termed Partial Order-Based Self-Attention for Gene Ontology (POSA-GO). This cross-modal collaborative modelling approach fuses GO terms with protein sequences. The model leverages the pre-trained language model ESM-2 to extract deep semantic features from protein sequences. Meanwhile, it transforms the partial order relationships among Gene Ontology (GO) terms into topological embeddings to capture their biological hierarchical dependencies. Furthermore, a multi-head self-attention mechanism is employed to dynamically model the association weights between proteins and GO terms, thereby enabling context-aware functional annotation. Comparative experiments on the CAFA3 and SwissProt datasets demonstrate that POSA-GO outperforms existing state-of-the-art methods in terms of Fmax and AUPR metrics, offering a promising solution for protein functional studies.

1. Introduction

Proteins are at the core of life activities and are involved in key processes such as signaling, metabolic regulation, and maintenance of the cellular structure. Defining their functions reveals disease mechanisms and drug targets and is the key to understanding the operation of biomolecules [1]. However, traditional biochemical experiments are costly, lengthy, and low-throughput [2], resulting in reliable functional annotation of only approximately 0.23% of protein sequences [3]. With the rapid development of high-throughput sequencing technology, the number of unannotated proteins has proliferated, and it is difficult to match the experimental validation capability; so there is an urgent need for efficient and accurate protein function annotation methods to break through the experimental limitations [4].

Traditional protein function prediction methods mainly rely on homology-based transfer [5]. For example, BLAST (version 2.13.0) [6] (BLAST: Basic Local Alignment Search Tool) and Diamond [7] (Diamond-Crystal and Molecular Structure Visualization) technologies sequence unknown proteins against known proteins and transfer known protein functions to similar unknown proteins by utilizing the biological principle that homologous proteins tend to have similar functions due to their common evolutionary origins. However, studies have shown that this approach has limited predictive reliability for distant homologous proteins [8].

In recent years, deep learning has shown great potential in protein function prediction, and many computational methods have been developed to solve the protein function prediction problem [9,10]. Graph2GO [11], DeepFMB [12], and Struct2GO [13] integrate multi-source data, such as protein structural domains and amino acid sequences, through graph convolutional networks to infer protein function. At the same time, DeepText2Go [14] utilizes the abstracts of protein-related publications to mine functional information. Despite the progress made by these methods, most of them rely on external experimental data and suffer from high data acquisition costs and poor scalability [15,16]. There are still two significant challenges in deep learning: first, extracting compelling features from amino acid sequences. Transformer-based pre-trained language models (e.g., ProtT5 [17], ESM [18], ProLLaMA [19]) provide efficient solutions. The second is how to map the low-dimensional embeddings of proteins into a large-scale hierarchical functional labelling space. Early approaches used flat classifiers, ignoring semantic associations and hierarchical constraints between labels [20]. In recent studies, DeepGOA [21] captures the topological relationships between labels via a two-layer graph convolutional network (GCN), or TALE [22] learns the low-dimensional representations of labels via matrix decomposition. However, modelling complex semantic relationships between labels still needs to be further optimized to improve the prediction performance [23].

Gene Ontology (GO) [15], as a standard system for protein function annotation, covers three major domains: molecular function (MF), biological process (BP), and cellular component (CC) [24]. GO terms are constructed in the hierarchical directed acyclic graph (DAG), with shallow terms summarizing abstract, generalized semantics and more profound terms focusing on concrete precise semantics [25]. In protein function annotation tasks, each protein is usually associated with multiple GO terms, which makes protein function prediction a large-scale, multi-label classification problem. In addition, the hierarchical structure of GO terms requires consistency in the prediction results. If a protein is annotated as a specific GO term, all the ancestral terms of the term (up to the root node) need to be annotated as well; at the same time, the prediction probability of a specific GO term has to be greater than or equal to the probability of all of its sub-terms [26,27,28]. This hierarchical constraint requires that the model accurately predicts multiple labels and ensures that the prediction results conform to the semantic hierarchy of GO terms.

In order to overcome the limitations of existing methods and solve the problem of multi-label classification for protein function prediction, we conducted an in-depth study. We proposed the end-to-end protein function prediction model, Partial Order-Based Self-Attention for Gene Ontology (POSA-GO). This new protein function prediction method incorporates the GO term topology with the cross-modal attention mechanism. First, protein-level sequence features are extracted using a pre-trained model, which can effectively capture functionally relevant semantic information in individual protein sequences. Second, topological embeddings of GO terms with partial order relations are generated by combining the PO2Vec algorithm [25], explicitly modelling the shortest path dependency between terms to capture the biological information of GO terms better. Finally, the multi-attention mechanism dynamically calculates the association weights of protein features with GO term embeddings to realize context-aware functional annotation and significantly improve prediction accuracy. Comparative experiments on the CAFA3 dataset show that the improved prediction performance is attributed to the combination of high-quality biased-order relation-based feature extraction of GO terms and the joint prediction module of attention, demonstrating the effectiveness of our approach.

2. Results

2.1. Experimental Setup

We used the same hyperparameter settings to evaluate the POSA-GO model for the three branches of gene ontology: BPO, CCO, and MFO. Among them, GO term embedding is obtained by learning through the GO term encoder: the model is trained for 400 epochs with a batch size of 3000, and the comparative learning objective is optimized using the Adam optimizer (learning rate of 5 × 10−2) [29], with the temperature parameter τ fixed at 0.1. Negative sampling is set to a total number of samples of k = 80, of which 25% are derived from the ancestor terms of the target term (u = 0.25) to balance semantic differentiation with hierarchical relationship maintenance. The POSA-GO model was trained using a uniform base configuration: 25 training cycles, batch size 32, Adam optimizer (learning rate 1 × 10−4, weight decay 0.1) with Step-learning rate scheduling (gamma = 0.9) and Dropout (p = 0.2) regularization, and the number of multi-attention heads was set to 8. All experiments were executed under randomized seeds of 42, and the hardware platform was NVIDIA RTX 3090 GPUs(NVIDIA Corporation, based in Santa Clara, California, USA.). The model structural parameters were differentially set to address the characteristics of the different GO branches: the BPO branch had a potential dimension of 768 (with an MFO/CCO of 512), and the predicted interim dimensions were 1280 (BPO), 768 (MFO), and 896 (CCO).

Here, POSA-GO is compared with six methods: the label transfer method Naive [30] based on sequence similarity, the homology function prediction Diamond [7] based on homology, the graph convolutional neural network (GCN)-based method DeepGOA [21], the deep learning and sequence comparison method DeepGOPlus [20] based on CNNs, the method based on Transformer TALE [22], and PO2GO [25], a deep-learning method based on improved partial order relations; and in our experiments we use the hyperparameters provided in the original paper.

2.2. POSA-GO Outperforms Competing State-of-the-Art Methods

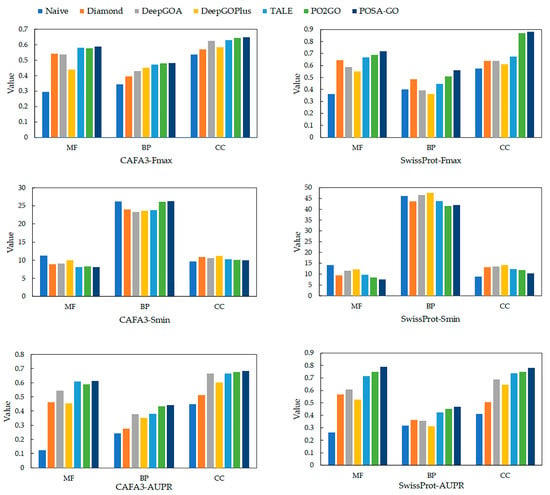

Figure 1 illustrates the performance of different methods in testing the three GO aspects of the CAFA3 and SwissProt datasets [31]. The GO terms in the biological process (BP) ontology are inherently characterized by a large number of terms, a highly hierarchical structure, a long-tailed distribution of informative terms, and complex cross-branch semantic associations, making the prediction of BP terms significantly more challenging compared with those in the cellular component (CC) and molecular function (MF) ontologies. Traditional and deep-learning methods for BP prediction generally suffer from modeling bias for low-frequency specificity terms and semantic propagation attenuation, leading to difficulties in optimizing information-weighted semantic distance metrics. Thus, Smin usually performs worse in BP prediction than structurally insensitive statistical metrics. Regarding Fmax and AUPR, POSA-GO outperforms other competing methods in all three GOs.

Figure 1.

The performance of POSA-GO in predicting protein functions was evaluated against other models using the CAFA3 dataset and the SwissProt dataset across three Gene Ontology (GO) categories: Biological Process (BP), Molecular Function (MF), and Cellular Component (CC). The methods compared include Naive, Diamond, and TALE, which are standalone approaches. DeepGOPlus, PO2GO, and POSA-GO are composite methods. Performance was assessed using three metrics: the area under the precision–recall curve (AUPR), where a higher value is better; minimum semantic distance (Smin), where a lower value is better; and maximum F1-score (Fmax), where a higher value is better.

Specifically, in terms of Fmax, POSA-GO outperforms the competing methods in MFO, BPO, and CCO for the CAFA3 and SwissProt datasets by (1.2%, 4.3%), (0.6%, 9.8%), and (0.9%, 1.1%), respectively. AUPR on MFO, BPO, and CCO on the CAFA3 and SwissProt datasets are higher than competing methods by (0.1%, 5.3%), (1.6%, 4.4%), and (1.1%, 4%), respectively. The experimental results of POSA-GO demonstrate that, compared with CNN or GCN-based multi-source fusion methods, features based on the mechanism of multi-head self-attention cross-fertilization exhibit significant advantages.

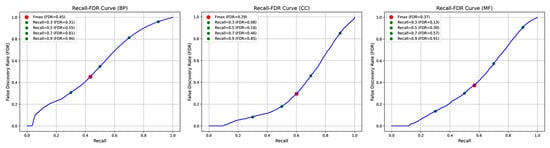

In addition, we further analyze the false discovery rate (FDR) of POSA-GO on various ontologies using the CAFA3 dataset and present the Recall–FDR curves in Figure 2 to evaluate the model’s reliability in practical applications. For the BPO model, at maximum recall (approximately 0.9), the false discovery rate reaches as high as 0.959, resulting in a significant number of false positives. In the CCO model, there is improved control of the FDR. At the Fmax threshold of 0.499, the FDR is only 0.295. Even with a high recall of approximately 0.9, the FDR remains below 0.85. In the MFO model, similar to the BPO model, the FDR sharply increases at high recall levels. However, at the optimal threshold of 0.395, the FDR is 0.374, indicating acceptable reliability when predictions are made at the Fmax point. In summary, FDR tends to rise with high recall, but a good balance between recall ability and prediction reliability can be achieved by selecting an appropriate prediction threshold.

Figure 2.

Trends in Recall–FDR for model POSA-GO on the three GO ontologies. As the prediction threshold decreases, the recall increases, accompanied by a significant rise in false discovery rate (FDR), which is evident in the BP and MF ontologies. In the CC ontology, the FDR growth is relatively flat.

2.3. The Influence of the Number of Attention Heads on Prediction Performance

We investigated the effect of the number of attention heads (h) on the performance of protein function prediction models in the multi-head self-attention mechanism on the CAFA3 dataset. Four different values of h = 1, 2, 4, and 8 were set for the experiment, and a comprehensive performance evaluation was carried out on three protein function ontologies: MFO, BPO, and CCO. The results are presented in Table 1, which shows that when the value of h ranges from 1 to 4, Fmax exhibits a trend of steady improvement. This indicates that as the number of attention heads increases, the model’s feature extraction ability also steadily improves. It can learn feature representations of different subspaces in parallel, capturing complex information in protein sequences more comprehensively. However, when h increases to 8, the model shows a significant decrease in the Fmax metric on all three tasks and an increase in the Smin metric. This performance degradation can be explained from several perspectives: too many attentional heads can lead to a dimensional explosion of the feature space, increasing the computational overhead of the model, which may produce redundant feature representations and noise interference; the model may also build up too large a sensory field as a result, so that some irrelevant distant dependencies are incorrectly reinforced, interfering with the identification of key local features; too many attentional heads may also lead to the model overfitting, especially in the case of limited training data. Therefore, when applying the multi-head self-attention mechanism, it is necessary to carefully weigh the model’s expressive ability and computational efficiency and set an appropriate h-value.

Table 1.

The performance of POSA-GO with different h on the CAFA3 dataset.

2.4. Model Ablation Study

We conducted ablation experiments on the CAFA3 dataset to evaluate the effectiveness of the GO item embedding module and the joint prediction module based on multi-head self-attention in POSA-GO. Table 2 of the experimental results shows that removing the attention mechanism significantly degrades the model’s performance on all feature categories (MF, BP, CC), as reflected in the overall degradation of the Fmax and AUPR metrics. This phenomenon suggests that the attention mechanism plays a key role in feature interaction and weight assignment to effectively capture the complex association between protein sequences and GO terms. For the GO term embedding module, the performance of the removed model is similarly degraded in terms of the Fmax and AUPR metrics. Note that in the BP task, the AUPR metrics of POSA-GO are slightly lower than those of the versions with attention removed or PO2Vec removed, which may be attributed to the small size of the training data for the BP task under the CAFA3 dataset, which is prone to overfitting when too many parameters are introduced, and the dependence of some of the biological processes on the information of localized regions, which results in the model’s ineffectiveness in capturing the long-distance dependence. In summary, the experimental results verified the advantages of the multi-head self-attention mechanism in feature fusion, which can model long-range dependencies more effectively in cellular components and molecular functions compared with simple feature splicing. Meanwhile, the embeddings obtained through the GO term embedding module training significantly enhance the model’s generalization ability, but the training data size may limit its effect.

Table 2.

Ablation analysis: Effect evaluation on the CAFA3 dataset.

3. Discussion

Currently, many proteins in existing databases lack functional annotations, and traditional homology-based methods have limited accuracy when predicting the functions of distantly related proteins with low sequence similarity. For proteins with completely unknown functions, prediction methods primarily rely on the intrinsic features of the proteins. Potential functional tags are indirectly inferred by calculating associations between the target protein and annotated proteins that share similar features.

In this study, we propose an innovative protein function prediction model called POSA-GO, which does not require sequence homology comparisons. First, leverage the powerful pre-training capabilities of the protein language model ESM-2 to identify structural features that are evolutionarily conserved and functionally relevant in protein sequences. Next, we employ the PO2Vec algorithm to generate topological embeddings of GO terms with partial order relations, effectively modeling the hierarchical structure and semantic associations between Gene Ontology terms. This enables the model to perform functional inference through the is_a and part_of relationships. Finally, even in the absence of homologous sequences, the cross-modal multi-head self-attention mechanism dynamically establishes the precise associations between protein local features and GO terms, enabling multi-level protein function annotation.

This fusion of sequence features and topological embeddings improves the model’s performance in Fmax and AUPR indexes. It significantly enhances the specificity of functional annotation and the ability to learn with fewer samples. The model’s modular design makes it scalable. It supports the flexible substitution of different protein encoders and its GO term embedding method, which can be extended to other gene ontology learning tasks. It offers a novel technical approach for predicting protein function.

A significant breakthrough in protein structure prediction has been achieved with the introduction of the AlphaFold2 model [32]. This algorithm predicts the 3D structure of proteins based on their amino acid sequences, achieving prediction accuracy at the atomic level [32]. However, this method has limitations. First, its effectiveness is constrained by the availability of 3D structural data. For proteins lacking experimental structural data, prediction errors can impact the accuracy of functional inferences. Second, the relationship between a protein’s structure and its function is closer than that of its sequence; therefore, precise structure-to-function mapping still relies on additional annotation tools. To overcome the limitations mentioned, we can utilize the structural features predicted by AlphaFold2 as complementary inputs, combining them with the sequence features from POSA-GO. A self-attention mechanism is then employed to dynamically establish correlation weights between the feature embeddings and the Gene Ontology (GO) terms. This approach enhances the performance of protein function prediction.

We plan to explore multimodal data fusion and model architectures to optimize POSA-GO in future work. First, inspired by HNetGO [33], introducing a fast and accurate MSA algorithm, diamond, to compute the similarity between protein sequences and protein interaction network data, combined with a dynamic weighting mechanism, may generate a more comprehensive functional characterization. Second, while combining graph neural networks (GNNs) with structural prediction features such as AlphaFold2 [32], literature-derived a priori knowledge maps are introduced to design a cross-graph information transfer strategy based on the attention mechanism, which enables the model to learn both the underlying features from sequence to structure and the high-level functional associations in the literature knowledge. For example, Prot2Text [34] demonstrated that integrating graph and sequence information improves the understanding of protein function. Third, hierarchical-aware contrastive learning strategies can be developed to enhance the semantic differentiation of GO term embedding and design hierarchical attention mechanisms to optimize the interaction process between sequence features and ontology terms, respectively, in addition to focusing on model interpretability enhancement by visualizing the decision basis and constructing memory-enhancing modules to capture rare functional patterns better.

Mass spectrometry (MS)-based proteomics directly identifies and quantifies amino acid sequences, providing an unbiased and systematic analysis of protein expression, modifications, and interactions with high quantitative accuracy, specificity, and cross-species applicability [35]. Notably, MS-based interaction proteomics, such as affinity purification MS (AP-MS), can effectively capture dynamic and condition-specific protein–protein interactions, localize interactions to specific domains, or identify interactions dependent on post-translational modifications (PTMs) [35]. In future work, we propose to use the high-confidence interaction data obtained from AP-MS to improve protein function prediction. We intend to encode the corresponding protein–protein interaction (PPI) network and annotate it with Gene Ontology (GO) terms, which will facilitate transfer learning using graph neural networks (GNNs).

4. Materials and Methods

4.1. Overview

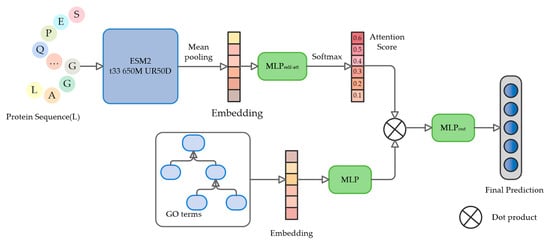

The POSA-GO model is designed with a modular architecture, which consists of three core components to form a complete prediction process: first, the protein feature extraction module encodes the deep semantic encoding of the input sequences through a pre-trained language model; second, the GO term embedding module specializes in the hierarchical topological features of the gene ontology terms; and lastly, the joint prediction module based on self-attention dynamically integrates the output features from the first two modules that realize end-to-end protein function prediction. The whole system constructs standardized inputs through the input data generation module and outputs the final functional annotation results after being trained by the joint predictor. The framework of the proposed POSA-GO is shown in Figure 3.

Figure 3.

The architecture of POSA-GO. First, the pre-trained language model ESM2 is used to extract the embedded representation of the initial protein, which is then aggregated into the global representation of the protein using a mean pooling strategy. Meanwhile, the GO term embedding module handles the hierarchical topological characterization of gene ontology terms. Then, the global representation of protein sequences and the feature matrices of gene ontology terms are sent in parallel with two MLPs to learn the attention-weight vectors and semantic alignment mappings. Finally, the dot product between protein features and GO term embeddings is dynamically computed through multi-head self-attention mechanisms to derive association weights, and the weighted features are then fed into the MLP output layer to generate the final annotation results.

4.2. Dataset

Gene Ontology (GO) data were obtained from the official GO website, where isolated terms were removed, obsolete term IDs were replaced with their primary IDs, and only the is_a and part_of relationships were retained. Finally, 27,709 terms were obtained in the BPO domain, 11,256 in the MFO domain, and 4043 in the CCO domain. In this paper, we collected, reviewed, and manually annotated proteome sequences from SwissProt [36], which contains 546,651 protein sequences. To demonstrate the generalizability of POSA-GO, this paper uses the same training sequences, experimental annotations, and test benchmarks as the CAFA3 dataset in the literature [25]. It compares it with other protein function prediction methods. The statistics of training and testing sets used in this study are shown in Table 3.

Table 3.

The number of protein sequences with experimental annotations in CAFA3 and SwissProt datasets grouped by sub-ontologies.

4.3. The Architecture of POSA-GO

4.3.1. Pretrained Protein Language Model

In this study, POSA-GO utilizes the protein language model esm2_t33_650M_UR50D (ESM-2 for short, facebookresearch/esm: Evolutionary Scale Modeling (esm): Pretrained language models for proteins) [37] to extract high-quality protein feature representations from amino acid sequences, thereby bypassing computationally intensive multiple sequence alignment (MSA) or structural templates. ESM-2 is a pre-trained protein language model (PLM) based on the Transformer architecture, whose model structure draws on RoBERTa’s [38] improvement strategy and contains a multilayer Self-Attention mechanism and feed-forward neural networks. ESM-2 is designed to provide a high-quality representation of protein features. It is trained through Masked Language Modeling (MLM) combined with Span Masking, which enables it to capture local structure more effectively. During training, the model must predict randomly masked amino acids to learn the contextual dependencies and evolutionary patterns of protein sequences [39]. The training data for ESM-2 come from UniRef50(UniRef | UniProt help | UniProt) [40], which covers over 250 million protein sequences. Compared with the training strategy of ESM-1b [18], ESM-2 employs dynamic mask scaling and gradient accumulation in training to alleviate the overfitting problem in low-complexity regions in protein sequences. The pre-trained ESM-2 can be directly used for downstream tasks without fine-tuning. Specifically, for a protein p of length L amino acids, the embedding representation of each amino acid is first extracted using ESM-2 and combined into a feature matrix Z∈ℝd×L, where d is the embedding dimension. In order to aggregate the global representation of the protein from the amino acid level embeddings, the same mean pooling strategy as Unsal et al. is used secondly, and the final embedding representation of protein p is obtained as f(p) = mean(Z)∈ℝd×1 [41].

4.3.2. Multilayer Perceptron

MLP is a fully connected feed-forward artificial neural network. Its core computation can be expressed as , where is the input of the lth layer of the MLP, is the output of the lth layer, is the weight matrix of the lth layer, denotes the number of neurons in the lth layer, and is the bias term of the lth layer [42].

4.3.3. Multi-Head Attention Layer

The multi-head attention mechanism in this paper is based on the Transformer architecture, which has been enhanced for protein sequence feature extraction tasks based on previous research [43]. The multi-head attention layer in the model consists of three parts: input projection, parallel attention head computation, and multi-head fusion output. The specific structure is as follows: Unlike the classical Transformer, the query (), key (), and value () in POSA-GO use separate input sources to enhance flexibility. The query () is generated from the MLP module (q) by linear transformation and combined with batch normalization, ReLU activation, and Dropout for nonlinear feature extraction to generate a high-latitude semantic representation [44,45,46]. Independent linear layer mapping obtains keys () and values () to realize the model’s fusion of sequential and non-sequential modal information. Each attention head is aggregated by computing the similarity scores of and and weighting [43]:

where the scaling factor is used to prevent the gradient from being destabilized by too large a dot product result. The model uses multiple parallel attention heads, and the outputs of each head are spliced and fused through a non-dynamic quantized linear layer, omitting the feed-forward network module in classical multi-head attention and plugging the attention outputs directly into the downstream prediction task [43]. Multi-head attention is defined as [43]

where is the number of attention heads, and denotes the output projection matrix for fusing the outputs of all heads. is defined as [43]

where , , and are the th header query, key projection, and value projection matrices, respectively, where is the bit size of the hidden embedding vector and [43].

4.3.4. GO Term Embedding with PO2Vec

Protein function prediction is a multi-label classification problem. In most approaches, the classes (GO terms) are constructed as a directed acyclic graph (DAG), and this structure has consistency requirements for the final prediction results [47,48,49]. Here, the model applies PO2Vec proposed by [25] to thoroughly learn the structural information between GO terms using a biased ordering relation within/outside the paths. This learning strategy defines two constraints—(1) in-path: for a given term , its ancestor term , and another in-path term , if is closer to than , then , where returns the number of edges of the shortest path between the terms, i.e., the similarity between and is more significant than that between and . (2) out-path: For a given term , its ancestor term , and out-of-path term , there exists a similarity between and that is much greater than between and . Note that given two terms and , if there is a direct or indirect ancestor or descendant relationship between and , then is an in/out-of-path term for . Otherwise, is an out-of-path term for , i.e., .

For GO term feature embedding, PO2Vec employs an unsupervised representation learning method, contrast learning, to maximize the consistency between similar instances and minimize the consistency between different instances. The commonly used loss function InfoNCE is as follows [50]:

where is the within-batch training sample, is a positive sample, and is the similarity between and .

Traditional stochastic negative sampling in modelling results in models biased toward learning simple, irrelevant terms and ignoring important but difficult term associations because there are far more out-of-path terms than in-path terms. For this reason, an improved harmful sampling method is employed to increase the proportion of in-path terms, prompting the model to learn to distinguish between semantically similar terms and thus learn hierarchical, biased order relations more accurately. The method is divided into three stages: index construction, hierarchical sampling, and comparison learning.

First, three types of candidate sets for each term are constructed: in-path term list , out-of-path term list , and cross-domain out-of-path term list . contains terms that are on the same path as (e.g., ancestor or descendant nodes), contains terms that belong to the same domain as but are not on its path, and contains terms that belong to different domains and are not path-associated with , where the terms in are sorted in ascending order of the shortest path length.

Second, high-quality negative samples are proportionally drawn from the three types of lists to balance semantic similarity and difference. Specifically, an ancestor term from is randomly selected as a positive sample , and the total number of negative samples is fixed as . The proportion of negative samples within a path is controlled by the hyperparameter . The in-path negative samples are obtained by sampling terms from , whose path lengths are all larger than , to help the model distinguish fine-grained hierarchical relationships. The out-of-path negative samples are sampled from and by to enhance the model’s judgment of cross-domain and irrelevant terms.

Finally, the sampled positive and negative samples are used to train the contrast loss, pulling closer to the representation of the positive samples and pushing farther away from the representation of the negative sample set . We apply the balanced InfoNCE loss function to learn the embedding representation of GO terms:

where is a temperature hyperparameter to control the function, is smaller so that similarity differences are amplified, is larger so that the sample distribution is smoother, denotes the embedding vector of the samples, and balances the effect of the number of negative samples in different categories.

4.3.5. Protein-Term Link Prediction

For any given protein sequence and GO term, we generate initial embeddings of the sequence and GO term, respectively, by pre-training, project both into the same semantic space using two completely independent MLPs to eliminate distributional differences, subsequently compute the dot product of the projection vectors of the protein with all the terms as the base similarity, and output the final prediction by a third MLP with a nonlinear transformation of the similarity vectors probability (Figure 1).

Specifically, for a given protein , its original embedding representation is obtained through the protein feature extractor ESM-2. For a given Gene Ontology (GO) term , its original embedding is obtained through the terms encoder. In order to map the embeddings of proteins and Gene Ontology (GO) terms into the same semantic space, two independent multilayer perceptron machines (MLPs) are used to perform the nonlinear transformation, respectively:

where the original protein embedding has dimension , the projected protein embedding has dimension , and the projected Gene Ontology embedding is consistent with the protein embedding dimension. We do this by computing the similarity vector of protein with all GO terms :

which reacts to the similarity between the two, where is the number of Gene Ontology terms. Unlike traditional methods that directly use the similarity as the prediction result, we invoke another MLP to optimize the similarity vector further:

where the predicted probability vector , and each element represents the probability that the protein is annotated as . Since a protein may correspond to multiple Gene Ontology terms, a binary cross-entropy loss is used here:

where indicates whether the protein is authentically annotated with the Gene Ontology term .

4.4. Evaluation Metrics

Three metrics are used in this study to evaluate the predictive performance of the model, namely Fmax, Smin, and AUPR. Fmax is the maximum of all F1 scores computed at different prediction thresholds , as defined below [51,52]:

where and are the precision and recall of the prediction results for all proteins under the threshold , respectively defined as follows:

where is the number of proteins with annotations whose prediction score is greater than or equal to . and are the set of accurate annotations for protein and predicted annotations for the th protein at threshold , respectively. denotes the number of elements. is the total number of proteins used for evaluation.

Smin is used to measure the minimum semantic distance between the predicted annotations and the accurate annotations, reacting to the degree of functional semantic proximity between the predicted results and the accurate annotations, as defined below [24]:

where is the information content of Gene Ontology term , measures the average information content of terms that are true but not predicted, measures the average information content of terms that are incorrectly predicted, is the conditional probability of Gene Ontology term appearing under the condition of a given parent term, which is used to reflect the rarity of the term, and is the set of parent terms of Gene Ontology term [24]. AUPR is the area enclosed by the Precision–Recall curve with the area of the region enclosed by the coordinate axes, which is more comprehensive than the metrics under a single threshold and reflects the overall performance of the model under all thresholds.

5. Conclusions

In summary, the model POSA-GO proposed in this study significantly improves the accuracy of functional annotation on the benchmark test set. The core innovations of the model are reflected in the following: firstly, the cutting-edge protein language model ESM-2 is used to deeply mine the functional information in sequences; secondly, the discrete GO terms are modelled as continuous topological embeddings based on the bias–order relationship [52]; and lastly, the precise alignment of sequence features and functional semantics is achieved by the multimodal fusion mechanism. This study provides a reliable research solution for the functional annotation of proteins.

Author Contributions

Conceptualization, Y.L. and B.W.; methodology, Y.D.; validation, B.Y.; data acquisition and processing, H.J. and B.W.; drafting of the manuscript, B.W. and Y.L.; review and editing, Y.L., B.W., Y.D., B.Y. and H.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets analyzed in this study are from the SwissProt dataset: ‘http://www.uniprot.org/uniprot/’ accessed on 10 May 2023. and the CAFA3 dataset: ‘https://github.com/xbiome/protein-annotation’ accessed on 16 May 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Eisenberg, D.; Marcotte, E.M.; Xenarios, I.; Yeates, T.O. Protein function in the post-genomic era. Nature 2000, 405, 823–826. [Google Scholar] [CrossRef] [PubMed]

- Costanzo, M.; VanderSluis, B.; Koch, E.N.; Baryshnikova, A.; Pons, C.; Tan, G.; Wang, W.; Usaj, M.; Hanchard, J.; Lee, S.D.; et al. A global genetic interaction network maps a wiring diagram of cellular function. Science 2016, 353, aaf1420. [Google Scholar] [CrossRef]

- The UniProt Consortium. UniProt: The universal protein knowledgebase in 2023. Nucleic Acids Res. 2022, 51, D523–D531. [Google Scholar]

- Cruz, L.M.; Trefflich, S.; Weiss, V.A.; Castro, M.A.A. Protein Function Prediction. Methods Mol. Biol. 2017, 1654, 55–75. [Google Scholar] [PubMed]

- Clark, W.T.; Radivojac, P. Analysis of protein function and its prediction from amino acid sequence. Proteins 2011, 79, 2086–2096. [Google Scholar] [CrossRef] [PubMed]

- Altschul, S.F.; Madden, T.L.; Schäffer, A.A.; Zhang, J.; Zhang, Z.; Miller, W.; Lipman, D.J. Gapped BLAST and PSI-BLAST: A new generation of protein database search programs. Nucleic Acids Res. 1997, 25, 3389–3402. [Google Scholar] [CrossRef]

- Buchfink, B.; Xie, C.; Huson, D.H. Fast and sensitive protein alignment using DIAMOND. Nat. Methods 2015, 12, 59–60. [Google Scholar] [CrossRef]

- Friedberg, I. Automated protein function prediction–the genomic challenge. Brief. Bioinform. 2006, 7, 225–242. [Google Scholar] [CrossRef]

- Lin, B.; Luo, X.; Liu, Y.; Jin, X. A comprehensive review and comparison of existing computational methods for protein function prediction. Brief. Bioinform. 2024, 25, bbae289. [Google Scholar] [CrossRef]

- Szklarczyk, D.; Nastou, K.; Koutrouli, M.; Kirsch, R.; Mehryary, F.; Hachilif, R.; Hu, D.; Peluso, M.E.; Huang, Q.; Fang, T.; et al. The STRING database in 2025: Protein networks with directionality of regulation. Nucleic Acids Res. 2025, 53, D730–D737. [Google Scholar] [CrossRef]

- Fan, K.; Guan, Y.; Zhang, Y. Graph2GO: A multi-modal attributed network embedding method for inferring protein functions. Gigascience 2020, 9, giaa081. [Google Scholar] [CrossRef] [PubMed]

- Wang, W.; Shuai, Y.; Li, Y.; Zeng, M.; Li, M. Enhancing Protein Function Prediction Through the Fusion of Multi-Type Biological Knowledge with Protein Language Model and Graph Neural Network. IEEE Trans. Comput. Biol. Bioinform. 2025, 22, 581–590. [Google Scholar] [CrossRef]

- Jiao, P.; Wang, B.; Wang, X.; Liu, B.; Wang, Y.; Li, J. Struct2GO: Protein function prediction based on graph pooling algorithm and AlphaFold2 structure information. Bioinformatics 2023, 39, btad637. [Google Scholar] [CrossRef]

- You, R.; Huang, X.; Zhu, S. DeepText2GO: Improving large-scale protein function prediction with deep semantic text representation. Methods 2018, 145, 82–90. [Google Scholar] [CrossRef] [PubMed]

- Yan, N.; Lv, Z.; Hong, W.; Xu, X. Editorial: Feature representation and learning methods with applications in protein secondary structure. Front. Bioeng. Biotechnol. 2021, 9, 748722. [Google Scholar] [CrossRef] [PubMed]

- Zhou, H.; Yin, M.; Wu, W.; Li, M.; Fu, K.; Chen, J.; Wu, J.; Wang, Z. ProtCLIP: Function-Informed Protein Multi-Modal Learning. arXiv 2024, arXiv:2412.20014v1. [Google Scholar] [CrossRef]

- Elnaggar, A.; Heinzinger, M.; Dallago, C.; Rehawi, G.; Wang, Y.; Jones, L.; Gibbs, T.; Feher, T.; Angerer, C.; Steinegger, M.; et al. ProtTrans: Towards cracking the language of lifes code through self-supervised deep learning and high performance computing. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7112–7127. [Google Scholar] [CrossRef]

- Rives, A.; Meier, J.; Sercu, T.; Goyal, S.; Lin, Z.; Liu, J.; Guo, D.; Ott, M.; Zitnick, C.L.; Ma, J.; et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl. Acad. Sci. USA 2021, 118, e2016239118. [Google Scholar] [CrossRef]

- Lv, L.; Lin, Z.; Li, H.; Liu, Y.; Cui, J.; Chen, C.Y.-C.; Yuan, L.; Tian, Y. ProLLaMA: A Protein Language Model for Multi-Task Protein Language Processing. arXiv 2024, arXiv:2402.16445v2. [Google Scholar] [CrossRef]

- Kulmanov, M.; Hoehndorf, R. DeepGOPlus: Improved protein function prediction from sequence. Bioinformatics 2019, 36, 422–429. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, J.; Zhang, X.; Yu, G. Deepgoa: Predicting gene ontology annotations of proteins via graph convolutional network. In Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), San Diego, CA, USA, 18–21 November 2019; IEEE: New York, NY, USA; pp. 1836–1841. [Google Scholar]

- Cao, Y.; Shen, Y. TALE: Transformer-based protein function annotation with joint sequence–label embedding. Bioinformatics 2021, 37, 2825–2833. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.Y.; Wang, J.F.; Hu, Y.; Li, X.H.; Qian, Y.R.; Song, C.L. Evaluating the advancements in protein language models for encoding strategies in protein function prediction: A comprehensive review. Front. Bioeng. Biotechnol. 2025, 13, 1506508. [Google Scholar] [CrossRef]

- Wu, K.; Wang, L.; Liu, B.; Liu, Y.; Wang, Y.; Li, J. PSPGO: Cross-Species Heterogeneous Network Propagation for Protein Function Prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2023, 20, 1713–1724. [Google Scholar] [CrossRef]

- Li, W.; Wang, B.; Dai, J.; Kou, Y.; Chen, X.; Pan, Y.; Hu, S.; Xu, Z.Z. Partial order relation-based gene ontology embedding improves protein function prediction. Brief. Bioinform. 2024, 25, bbae077. [Google Scholar] [CrossRef] [PubMed]

- Valentini, G. True path rule hierarchical ensembles for genomewide gene function prediction. IEEE/ACM Trans. Comput. Biol. Bioinform. 2010, 8, 832–847. [Google Scholar] [CrossRef] [PubMed]

- Abbass, J.; Nebel, J.-C. Rosetta and the journey to predict proteins’ structures, 20 years on. Curr. Bioinform. 2020, 15, 611–628. [Google Scholar] [CrossRef]

- Cheng, L.; Hu, Y.; Sun, J.; Zhou, M.; Jiang, Q.; Sahinalp, C. DincRNA: A comprehensive webbased bioinformatics toolkit for exploring disease associations and ncRNA function. Bioinformatics 2018, 34, 1953–1956. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980v9. [Google Scholar] [CrossRef]

- Yang, F.-J. An Implementation of Naive Bayes Classifier. In Proceedings of the 2018 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 13–15 December 2018; pp. 301–306. [Google Scholar]

- Zhou, N.; Jiang, Y.; Bergquist, T.R.; Lee, A.J.; Kacsoh, B.Z.; Crocker, A.W.; Lewis, K.A.; Georghiou, G.; Nguyen, H.N.; Hamid, N.; et al. The CAFA challenge reports improved protein function prediction and new functional annotations for hundreds of genes through experimental screens. Genome Biol. 2019, 20, 244. [Google Scholar] [CrossRef]

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar] [CrossRef]

- Zhang, X.; Guo, H.; Zhang, F.; Wang, X.; Wu, K.; Qiu, S.; Liu, B.; Wang, Y.; Hu, Y.; Li, J. HNetGO: Protein function prediction via heterogeneous network transformer. Brief. Bioinform. 2023, 24, bbab556. [Google Scholar] [CrossRef] [PubMed]

- Abdine, H.; Chatzianastasis, M.; Bouyioukos, C.; Vazirgiannis, M. Prot2Text: Multimodal Protein’s Function Generation with GNNs and Transformers. arXiv 2024, arXiv:2307.14367v3. [Google Scholar] [CrossRef]

- Guo, T.; Steen, J.A.; Mann, M. Mass-spectrometry-based proteomics: From single cells to clinical applications. Nature 2025, 638, 901–911. [Google Scholar] [CrossRef] [PubMed]

- Marcotte, E.M.; Pellegrini, M.; Ng, H.-L.; Rice, D.W.; Yeates, T.O.; Eisenberg, D. Detecting protein function and protein-protein interactions from genome sequences. Science 1999, 285, 751–753. [Google Scholar] [CrossRef] [PubMed]

- Lin, Z.; Akin, H.; Rao, R.; Hie, B.; Zhu, Z.; Lu, W.; Smetanin, N.; Verkuil, R.; Kabeli, O.; Shmueli, Y.; et al. Evolutionary-scale prediction of atomic-level protein structure with a language model. Science 2023, 379, 1123–1130. [Google Scholar] [CrossRef]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A robustly optimized BERT Pretraining approach. arXiv 2019, arXiv:1907.11692v1. [Google Scholar] [CrossRef]

- Jang, Y.J.; Qin, Q.Q.; Huang, S.Y.; Peter, A.T.J.; Ding, X.M.; Kornmann, B. Accurate prediction of protein function using statistics-informed graph networks. Nat. Commun. 2024, 15, 6601. [Google Scholar] [CrossRef]

- Suzek, B.E.; Wang, Y.; Huang, H.; McGarvey, P.B.; Wu, C.H.; UniProt Consortium. UniRef clusters: A comprehensive and scalable alternative for improving sequence similarity searches. Bioinformatics 2015, 31, 926–932. [Google Scholar] [CrossRef]

- Unsal, S.; Atas, H.; Albayrak, M.; Turhan, K.; Acar, A.C.; Doğan, T. Learning functional properties of proteins with language models. Nat. Mach. Intell. 2022, 4, 227–245. [Google Scholar] [CrossRef]

- Yuan, Q.; Xie, J.; Xie, J.; Zhao, H.; Yang, Y. Fast and accurate protein function prediction from sequence through pretrained language model and homology-based label diffusion. Brief. Bioinform. 2023, 24, bbad117. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010. [Google Scholar]

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167v3. [Google Scholar] [CrossRef]

- Zaremba, W.; Sutskever, I.; Vinyals, O. Recurrent Neural Network Regularization. arXiv 2014, arXiv:1409.2329v5. [Google Scholar] [CrossRef]

- Agarap, A.F. Deep Learning using Rectified Linear Units (ReLU). arXiv 2019, arXiv:1803.08375v2. [Google Scholar] [CrossRef]

- Ibtehaz, N.; Kagaya, Y.; Kihara, D. Domain-PFP allows protein function prediction using function-aware domain embedding representations. Commun. Biol. 2023, 6, 1103. [Google Scholar] [CrossRef] [PubMed]

- Gu, Z.; Luo, X.; Chen, J.; Deng, M.; Lai, L. Hierarchical graph transformer with contrastive learning for protein function prediction. Bioinformatics 2023, 39, btad410. [Google Scholar] [CrossRef]

- Ashburner, M.; Ball, C.A.; Blake, J.A.; Botstein, D.; Butler, H.; Cherry, J.M.; Davis, A.P.; Dolinski, K.; Dwight, S.S.; Eppig, J.T.; et al. Gene ontology: Tool for the unification of biology. Nat. Genet. 2000, 25, 25–29. [Google Scholar] [CrossRef]

- van den Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2019, arXiv:1807.03748v2. [Google Scholar] [CrossRef]

- You, R.; Yao, S.; Mamitsuka, H.; Zhu, S. DeepGraphGO: Graph neural network for large-scale, multispecies protein function prediction. Bioinformatics 2021, 37, i262–i271. [Google Scholar] [CrossRef]

- Wu, Z.; Guo, M.; Jin, X.; Chen, J.; Liu, B. CFAGO: Cross-fusion of network and attributes based on attention mechanism for protein function prediction. Bioinformatics 2023, 39, btad123. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).