Abstract

Alpha-helical transmembrane proteins (αTMPs) play essential roles in drug targeting and disease treatment. Because their structures are difficult to determine experimentally, αTMPs have far fewer known structures than soluble proteins. The topology of transmembrane proteins (TMPs) determines their spatial conformation relative to the membrane, while the secondary structure helps to identify their functional domains. The two are highly correlated in αTMP sequences, and predicting them jointly (merge prediction) is instructive for further understanding the structure and function of αTMPs. In this study, we implemented a hybrid model, HDNNtopss, that combines Deep Learning Neural Networks (DNNs) with a Class Hidden Markov Model (CHMM). The DNNs extract rich contextual features through stacked attention-enhanced Bidirectional Long Short-Term Memory (BiLSTM) networks and Convolutional Neural Networks (CNNs), and the CHMM captures state-associative temporal features. The hybrid model accounts for the probability of the state path while retaining the fitting and feature-extraction capability of deep learning, which enables flexible prediction and makes the resulting label sequence more biologically meaningful. It outperforms current advanced merge-prediction methods with a Q4 of 0.779 and an MCC of 0.673 on the independent test dataset. Compared with advanced prediction methods for topology and secondary structure, it achieves the highest topology-prediction accuracy, with a Q2 of 0.884, and shows strong overall performance. We also implemented a joint training method, Co-HDNNtopss, whose results provide a reference for training similar hybrid models.

1. Introduction

Alpha-helical transmembrane proteins (αTMPs) play an important role in physiological processes and biochemical pathways, particularly in drug targeting and disease therapy [1]. Because of their structural specificity, the 3D structures of αTMPs are challenging to determine using nuclear magnetic resonance (NMR) and X-ray crystal diffraction [2,3]. Given the physiological significance of αTMPs and the lack of known structures [4,5], many bioinformatics approaches have explored their structure and function based on amino acid sequences.

The protein’s secondary structure helps to identify functional domains [6,7,8]. The topological structure generally refers to the position of transmembrane proteins (TMPs) relative to the biological membrane and the number of transmembrane segments. The secondary structure of the transmembrane region of αTMPs is the α-helix. Predicting the secondary and topological structures jointly avoids the conflicting predictions of alpha-helical transmembrane structures that arise when different structural predictors are used. Moreover, it provides a simpler and more effective method for the local primary screening of drug-target binding and helps subsequent studies focus on the appropriate region of action in advance. Although AlphaFold2 has been shown to perform well in predicting transmembrane proteins [9], the study of the combined structure can provide an important reference for related applications.

Machine Learning (ML) improved the performance of structural predictors, replacing earlier empirical, statistical, and principle approaches [10,11,12]. Neural Networks (NNs) were successfully applied to the secondary structure-prediction methods JPred, PSIPRED, and PredictProtein [13,14,15]. PHDhtm [7] and MEMSAT3 [16] used NNs to improve the topology prediction performance. TMHMM [17] and HMMTOP [18] applied Hidden Markov Models (HMMs) to solve topology-prediction problems. However, HMMs lack the ability to extract long-term correlations for complex patterns. OCTOPUS [19] and SPOCTOPUS [20] further improved topology-prediction performance by combining HMMs and NNs. Deep Learning (DL) offers the possibility of learning more implicit features from biological sequences. MUFOLD-SS and DeepTMHMM use DL to predict the protein’s secondary structure and topology of TMPs, respectively [21,22]. However, there are only a few methods for the merge prediction of the topological and secondary structures of TMPs. TMPSS [23] and MASSP [24] achieved simultaneous prediction by using the DL of Convolutional Neural Networks (CNNs) or Long Short-Term Memory (LSTM) layers, and such studies usually employed multi-task learning to predict structures separately, creating conflicting prediction results. Furthermore, DL is not well suited for modeling temporal phenomena.

Hidden Neural Networks (HNNs) provide new insight by reasonably combining the Class Hidden Markov Model (CHMM) and NNs [25,26]. Owing to their excellent performance, similar hybrid models have been applied in many fields, such as speech recognition and time-aware recommendation systems [27,28,29]. HNNs have also been explored in computational biology [30,31,32]. The prediction performance of HNNs can be further improved by choosing more complex Deep Learning Neural Networks (DNNs) instead of a simple multilayer perceptron. In this study, we implemented topology and secondary structure merge-prediction methods for αTMPs that construct hybrid models by combining CHMM and LSTM. The hybrid models not only have the ability of general DNNs to learn complex patterns but also constrain the corresponding state paths using statistical transition probabilities, making the results more biologically meaningful in practice. A deep learning module consisting mainly of LSTM captures the context dependencies of the sequence. The CHMM module extracts temporal features and correlations of states, and the final output of the deep learning module assigned to each state is used by the CHMM as the emission probability. Topology prediction is evaluated with the Q2 metric, the accuracy of classifying residues as Transmembrane (T) or Non-transmembrane (N). Secondary-structure prediction is evaluated with the Q3 metric over helix, strand, and coil. Merge prediction is evaluated with Q4 over T-helix (M), N-helix (H), N-strand (E), and N-coil (C). HDNNtopss, the merged predictor trained with the separate training method, achieved a Q4 of 0.779 in merge prediction and a Q2 of 0.884 in topology prediction on an independently generated dataset. It has strong practical significance and all-around performance and serves as the final predictor of this study.
At the same time, we put forward a joint training idea, Co-HDNNtopss, which combined DNNs and CHMM and attempted to mutually promote both to achieve the best convergence effect in training. Although the current result is not the best, potentially due to the unbalanced ratio of structural label categories, it can provide some reference for similar hybrid-model training. Our method, pre-trained models, and supporting materials can be accessed through https://github.com/NENUBioCompute/HDNNtopss (accessed on 10 March 2023).

2. Results and Discussion

2.1. Evaluations Criteria

Drawing on the residue-level evaluation criteria used for secondary-structure and topology prediction of transmembrane proteins, we evaluated the combined prediction of these two structures with the following metrics, where TN, TP, FN, and FP denote true negative, true positive, false negative, and false positive samples, respectively.

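ACC is the fraction of residues whose state is predicted correctly; for two, three, and four states it corresponds to Q2, Q3, and Q4, respectively:

$$\mathrm{ACC} = \frac{\text{number of correctly predicted residues}}{\text{total number of residues}}$$

To evaluate the performance of the model, we also used the Matthews Correlation Coefficient (MCC), Segment Overlap Measure (SOV) [33], Recall, Precision, Specificity, and F1-score [34,35,36]. The confusion-count-based formulae are as follows, and SOV follows the definition in [33]:

$$\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Specificity} = \frac{TN}{TN + FP}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Correct TM indicates the number of proteins for which the number of transmembrane segments is predicted correctly.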
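As an illustration of how these residue-level metrics can be computed, the following is a minimal sketch using the standard textbook definitions. The function names `binary_metrics` and `q_accuracy` are hypothetical and are not taken from the HDNNtopss code base; for a multi-class task such as Q4, the confusion counts are assumed to be taken for one class against the rest.

```python
import math


def _safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, tn, fp, fn):
    """Standard metrics from confusion counts (one class vs. the rest)."""
    recall = _safe_div(tp, tp + fn)
    precision = _safe_div(tp, tp + fp)
    specificity = _safe_div(tn, tn + fp)
    f1 = _safe_div(2 * precision * recall, precision + recall)
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = _safe_div(tp * tn - fp * fn, mcc_denominator)
    return {
        "recall": recall,
        "precision": precision,
        "specificity": specificity,
        "f1": f1,
        "mcc": mcc,
    }


def q_accuracy(true_labels, pred_labels):
    """Fraction of residues with the correct state (Q2, Q3, or Q4)."""
    if len(true_labels) != len(pred_labels):
        raise ValueError("label strings must have the same length")
    correct = sum(t == p for t, p in zip(true_labels, pred_labels))
    return _safe_div(correct, len(true_labels))
```

The same `q_accuracy` function yields Q2, Q3, or Q4 depending only on which label alphabet the two strings use.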

2.2. Window Size Exploration Experiment of HDNNtopss

The selection of the optimal network input size is the key to deep learning networks. Based on the above accuracy measurements, the optimal network input size of HDNNtopss was determined, and the results are shown in Table 1. Considering the accuracy of transmembrane fragment identification, the Q4, and the MCC of merge-structure prediction, a network input with a window size of 19 yields the best network-prediction results for αTMPs on HDNNtopss.

Table 1.

Results of HDNNtopss for different window sizes on Test50.

2.3. Prediction Performance Analysis at the Residue Level

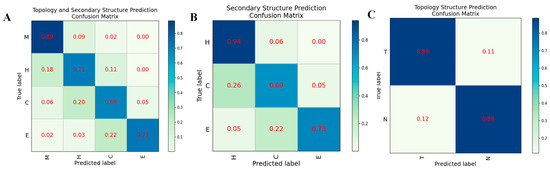

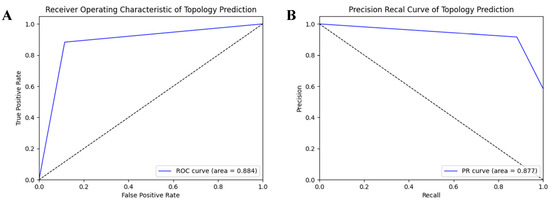

To evaluate the prediction performance at the residue level, we used a confusion matrix, Receiver Operating Characteristic (ROC) curve, and Precision-Recall (PR) curve to display the prediction results of HDNNtopss on the blind-test set (see Figure 1 and Figure 2). Figure 1A shows the confusion matrix of the merge-structure prediction, while Figure 1B,C show the confusion matrices of the secondary-structure prediction and topology prediction, respectively. The topology prediction of αTMPs is good, while some classes are confused in the secondary-structure prediction. Figure 2A,B show the ROC and PR curves for topology prediction, respectively, and they support the same conclusion.

Figure 1.

Confusion matrix on different structural predictions of HDNNtopss. (A) Structure merge prediction. (B) Secondary structure prediction. (C) Topology structure prediction.

Figure 2.

ROC and PR on topology prediction. (A) ROC on topology prediction. (B) PR on topology prediction.

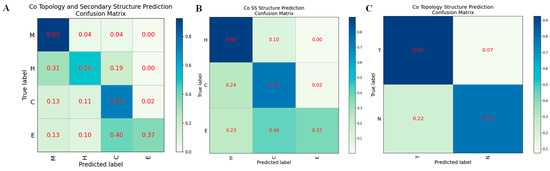

For Co-HDNNtopss, we analyzed the performance of each structure category using the confusion matrix. Figure 3C shows that, under the topology classification criteria, the transmembrane (T) and non-transmembrane (N) regions are well separated. There is little confusion between the two categories, and only a few residues originally in the N region are predicted to be in the T region. In contrast, Figure 3B, which corresponds to the secondary-structure classification criteria, shows that class ‘E’ (strand) is poorly predicted and is confused with both ‘H’ (helix) and ‘C’ (coil): residues originally labeled ‘E’ are predicted as ‘H’ or ‘C’, and the diagonal value for ‘E’ in the confusion matrix is only 0.37. This result suggests that the uneven distribution of the structural classes of αTMPs leads to biased predictions. The phenomenon was not evident in the predictions of the separate training approach, suggesting that the joint training approach amplifies the effect of class imbalance. Figure 3A, which corresponds to the merge-prediction classification criteria, shows that the T-helix (M) and N-coil (C) are clearly delineated, whereas the N-helix (H) and N-strand (E) are predicted slightly less well.

Figure 3.

Confusion matrix on different structural predictions of Co-HDNNtopss. (A) Structure merge prediction. (B) Secondary structure prediction. (C) Topology structure prediction.

We hypothesize that this is caused by the joint training process, in which the deep learning module uses a sigmoid output for each category as its probability. Unlike the softmax output used in separate training, the sigmoid outputs do not force the categories to adjust against one another, which disadvantages categories with a smaller proportion. Furthermore, because each per-category output sees few positive and many negative samples, the classification model becomes biased.

2.4. Overall Prediction Performance

To compare the accuracy of the four-class merge prediction of transmembrane-protein topology and secondary structure, we compared our results with TMPSS, which predicts both structures simultaneously. Since TMPSS produces two simultaneous predictions rather than a combined one, we converted its topology prediction and its secondary-structure prediction into the four merged classes. The BioChemical Library (BCL), a tool required by MASSP, could not be downloaded when we tested it, and the web server provided by MASSP was not available [24]. Therefore, we could not include MASSP, another multi-task method that predicts the two structures, in the comparison.
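The conversion of two separate predictions into the four merged classes can be sketched as follows. This is an illustrative sketch rather than the exact procedure used in the comparison: the function name `merge_labels` is hypothetical, and the conflict rule (a residue predicted as transmembrane is labeled M regardless of its secondary-structure call) is an assumption based on the statement that the transmembrane region of αTMPs is α-helical.

```python
def merge_labels(topology, secondary):
    """Combine per-residue topology (T/N) and secondary structure (H/E/C)
    strings into the four merged classes M, H, E, and C."""
    if len(topology) != len(secondary):
        raise ValueError("both predictions must cover the same residues")
    merged = []
    for topo, ss in zip(topology, secondary):
        if topo == "T":
            # Assumption: the topology call wins, so any transmembrane
            # residue becomes T-helix (M) even if the secondary-structure
            # predictor disagrees.
            merged.append("M")
        elif topo == "N":
            # Non-transmembrane residues keep their secondary-structure label.
            merged.append(ss)
        else:
            raise ValueError(f"unexpected topology label: {topo!r}")
    return "".join(merged)
```

For example, `merge_labels("NNTTTN", "CHHHEC")` resolves the conflicting strand call inside the membrane and returns `"CHMMMC"`.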

The results in Table 2 show that our separately trained predictor performs better: HDNNtopss achieved a Q4 of 0.779 and an MCC of 0.673, and it also performed well under the other classification criteria. The time indicator reports the prediction time on the test set excluding the generation of HHblits profiles, and HDNNtopss consumes the least time. Whether this is due to the structural relevance exploited by the combined prediction of the two structures or to the sensitivity of the hybrid model, HDNNtopss showed the best performance. HDNNtopss therefore has practical significance, and the combined prediction of structures may also serve as a reference for local primary screening for drug-target binding. Table 2 also shows the per-category metrics of the joint training model: M and C are well predicted, while E is slightly worse, with lower recall. The results indicate that the non-transmembrane strand, which makes up a smaller proportion of residues, is poorly predicted because of the unbalanced sample categories, and that the joint training method is less suitable as the final prediction model than the separate training method. However, it can provide a reference for similar hybrid-model training, especially for data with a balanced proportion of categories.

Table 2.

Comparative benchmark of different methods in topology and secondary structure merge prediction.

2.5. Topological Structure Prediction Performance

To compare the expressiveness of topology prediction with current advanced methods, we transformed the output’s quadruple classification results into two classifications for transmembrane and non-transmembrane regions. Table 3 reports the comparative analysis of different methods in terms of topology-prediction performance. In addition to the separate training method, HDNNtopss, and joint training, Co-HDNNtopss, the state-of-the-art tested methods include TOPCONS [37], CCTOP [38], PolyPhobius [39], OCTOPUS [19], SPOCTOPUS [20], SCAMPI_MSA [40], DeepTMHMM [22], Phobius [41], Philius [42], and TMPSS [23]. For topology prediction, the methods were compared on the Test50 defined in this paper.
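The reduction of the four merged classes to coarser label sets can be expressed as a simple lookup. The sketch below uses the class definitions given in the Introduction (M is a transmembrane helix; H, E, and C are non-transmembrane helix, strand, and coil); the names `TO_TOPOLOGY`, `TO_SECONDARY`, and `project` are hypothetical. The same mechanism yields the three-class secondary-structure labels, where M maps to helix.

```python
# Four merged classes -> two topology classes (T = transmembrane, N = not).
TO_TOPOLOGY = {"M": "T", "H": "N", "E": "N", "C": "N"}

# Four merged classes -> three secondary-structure classes.
# M is a transmembrane helix, so it projects onto helix (H).
TO_SECONDARY = {"M": "H", "H": "H", "E": "E", "C": "C"}


def project(labels, mapping):
    """Map each per-residue merged label through the given lookup table."""
    return "".join(mapping[label] for label in labels)
```

Applying `project` to both the predicted and the reference label strings before scoring gives Q2 or Q3 from the same four-class output.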

Table 3.

Comparative benchmark of different methods in topology prediction.

As seen from Table 3, on the independent test set HDNNtopss reached a Q2 of 0.884 and an MCC of 0.763. Its Q2 tied with that of CCTOP for the highest value, and its MCC was slightly better. Although its Correct TM count was slightly lower than that of DeepTMHMM, it is worth mentioning that up to six transmembrane proteins were predicted as non-transmembrane proteins by that method. Overall, our method had the best prediction ability for the topology.

2.6. Secondary Structure Prediction Performance

To compare secondary-structure prediction performance with current advanced methods, we transformed the four-class output into three classes: helix, coil, and strand. Table 4 reports the comparative analysis of different methods in terms of secondary-structure prediction performance. In addition to the separate training method, HDNNtopss, and the joint training method, Co-HDNNtopss, the state-of-the-art methods tested included JPred4 [13], SSpro5 [43], Spider3 [44], PSIPRED4.0 [45], MUFOLD-SS [21], DeepCNF [46], Porter 5 [47], and TMPSS [23]. The methods were compared on the Test50 defined in this paper.

Table 4.

Comparative benchmark of different methods for secondary-structure prediction.

In secondary-structure prediction, SSpro5 is far ahead of the other methods. Our separate training method, HDNNtopss, is slightly worse than some current advanced methods for secondary structure, but it still reaches a competitive prediction level.

In summary, HDNNtopss performs well under both the topology and the secondary-structure classification criteria and shows strong overall capability.

2.7. Case Studies

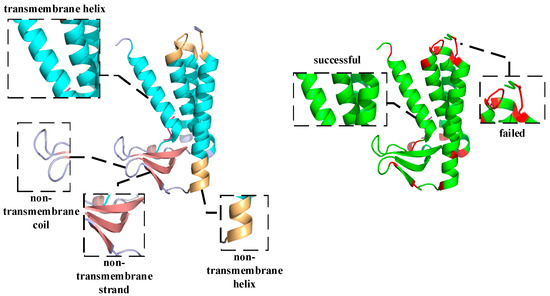

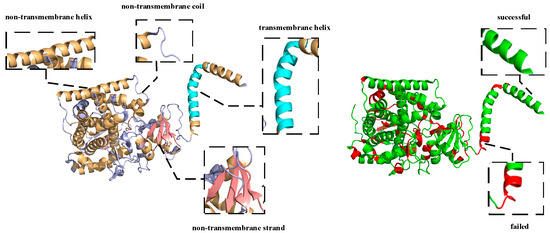

To further discuss the validity of HDNNtopss in predicting the topology and secondary structure of αTMPs, we performed a case study of several alpha-helical transmembrane proteins. By comparing the predicted labels with the real labels on the 3D view, it was found that, although most of the structures were accurately predicted by the predictor, there were still some issues that needed to be improved. The details are discussed below, where the results are visualized using PyMOL [48].

The predicted topology of 6ryo_A is shown on the left side of Figure 4. 6ryo is a lipoprotein signal peptidase from Staphylococcus aureus [49], and it is important for the study of antibiotic resistance. The cyan part indicates residues predicted as M, the light orange part residues predicted as H, the light blue part residues predicted as C, and the salmon part residues predicted as E. The right side shows the difference between the predicted structure and the real structure: the green part indicates a successful prediction, and the red part indicates a failed prediction. Compared with the real structural labels, the prediction model essentially recovers the structure of 6ryo_A, except for one to several residues at the junctions that were incorrectly predicted.

Figure 4.

Schematic diagram of the structure of the alpha-helical transmembrane protein, 6ryo_A, and a schematic diagram of the difference between the predicted and real structures.

The vast majority of αTMPs behave like 6ryo_A: their topology and secondary structure are largely recovered by the predictor, with only a few errors at the junctions between different structures. This may be caused by the different labeling criteria of the training set and the dependence of the deep learning method on the data. Analysis of a large number of results revealed that, in addition to boundary errors, a very small number of αTMPs showed minor confusion between different structures, as occurred in 4wmz_A. 4wmz comes from Saccharomyces cerevisiae YJM789 and is a kind of oxidoreductase inhibitor [50]; it provides an in-depth understanding of a drug-resistance mechanism. Figure 5 shows 4wmz_A with the same color-labeling and meaning as in Figure 4. Although the transmembrane fragments were correctly identified, a small segment of residues that should have been H was predicted as C, and some residues that should have been C were predicted as E. This indicates that, because of the unbalanced proportion of samples, some under-represented classes could not be accurately identified, and a small number of unreasonable structures were not corrected by the model. However, the protein has only one transmembrane segment, and the predictor identified it accurately. Although our predictor still has some small problems, it has quite good prediction ability.

Figure 5.

Schematic diagram of the structure of the alpha-helical transmembrane protein, 4wmz_A, and a schematic diagram of the difference between the predicted and real structures.

3. Materials and Methods

3.1. Datasets

TMPs are usually large. Moreover, some parts are buried in biological membranes, and most of them are difficult to dissolve in appropriate detergents, which inhibits their purification and crystallization. Therefore, fewer TMPs with known structures are available than for proteins in general. The number of known structures of transmembrane proteins is increasing only slowly, which is why a predictor that can provide a reference for local primary screening is useful. We built our dataset from the Protein Data Bank of transmembrane proteins (PDBTM) database [51]. Approximately 5721 αTMPs were downloaded from the 13 August 2021 version of the PDBTM database. Chains containing unknown residues (“X”) and chains shorter than 30 residues were removed. CD-HIT [52,53] was used to remove redundancy at a 30% sequence-similarity threshold, leaving 1242 chains. The data were randomly divided into a training set of 1142 protein chains, a test set of 50 chains named Test50, and a validation set of 50 chains. Regarding the division into the 4 classes of combined structures, for the transmembrane helix structure we took the more accurate structure annotation provided by PDBTM [51] as the standard. For the 3 classes of secondary structures in the non-transmembrane region, we took the output of the DSSP [54] program on the Protein Data Bank (PDB) file as the standard.
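The filtering and splitting steps described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function names and the random seed are hypothetical, chains are assumed to be available as a dictionary from chain identifier to sequence, and the CD-HIT redundancy-removal step is assumed to have been run separately between the two functions.

```python
import random


def filter_chains(chains, min_length=30):
    """Drop chains with unknown residues ('X') or fewer than min_length residues."""
    return {
        chain_id: sequence
        for chain_id, sequence in chains.items()
        if "X" not in sequence and len(sequence) >= min_length
    }


def split_chains(chain_ids, n_test=50, n_valid=50, seed=0):
    """Randomly split chain identifiers into training, validation, and test sets.

    The seed is an illustrative choice; the paper does not report one.
    """
    ids = sorted(chain_ids)            # sort first so the split is reproducible
    random.Random(seed).shuffle(ids)
    test = ids[:n_test]
    valid = ids[n_test:n_test + n_valid]
    train = ids[n_test + n_valid:]
    return train, valid, test
```

With the 1242 non-redundant chains reported here, the default sizes leave 1142 chains for training.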

3.2. Inputs Encoding

Based on the special structure and function of αTMPs, we chose two input-encoding features of amino acids for the deep learning module: the One-hot code and the HHblits [55] evolutionary profile. In our experiments, HHblits used the database uniprot20_2016_02. The profile values were scaled by the sigmoid function into the range (0, 1), making the distribution more uniform and reasonable (see Equation (10)). In the profile encoding, each amino acid in the protein sequence was represented as a vector of 30 HHblits profile values and 1 NoSeq label (representing a gap). The One-hot encoding, which indicates the amino acid type of the residue, consisted of a 20-dimensional One-hot vector and a 1-dimensional NoSeq label. In total, each residue was encoded as a 52-dimensional feature vector.
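To make the 52-dimensional encoding concrete, the following is a minimal sketch of how one residue and its context window might be encoded. Several details are assumptions made for illustration: the standard logistic function is used for the scaling, the amino acid ordering in `AMINO_ACIDS` is arbitrary, positions beyond the sequence ends are padded with a vector whose two NoSeq flags are set, and the window length of 19 is the value selected in Section 2.2.

```python
import math

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"   # assumed ordering of the 20 residue types
WINDOW = 19                            # window size selected in Section 2.2

# Padding vector for positions outside the sequence (assumption):
# 30 zero profile values + NoSeq = 1, then 20 zero one-hot values + NoSeq = 1.
PAD = [0.0] * 30 + [1.0] + [0.0] * 20 + [1.0]


def sigmoid(value):
    """Standard logistic function, mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def encode_residue(amino_acid, profile_row):
    """Return the 52-dimensional feature vector of one residue."""
    if len(profile_row) != 30:
        raise ValueError("expected 30 HHblits profile values per residue")
    profile = [sigmoid(v) for v in profile_row] + [0.0]                  # 30 + NoSeq
    one_hot = [1.0 if amino_acid == a else 0.0 for a in AMINO_ACIDS] + [0.0]  # 20 + NoSeq
    return profile + one_hot


def sliding_windows(sequence, profile):
    """Return one WINDOW x 52 feature block per residue, centered on it."""
    features = [encode_residue(a, row) for a, row in zip(sequence, profile)]
    half = WINDOW // 2
    padded = [PAD] * half + features + [PAD] * half
    return [padded[i:i + WINDOW] for i in range(len(features))]
```

Each residue then contributes a 19 × 52 block in which row 9 (zero-based) holds the features of the central residue.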

The CHMM itself takes as input protein sequences encoded as integers from 0 to 19, one for each amino acid type, which correspond to the observation sequence of a general HMM. Sequences are decoded using the trained transition probabilities and the emission probabilities obtained from the deep learning module.

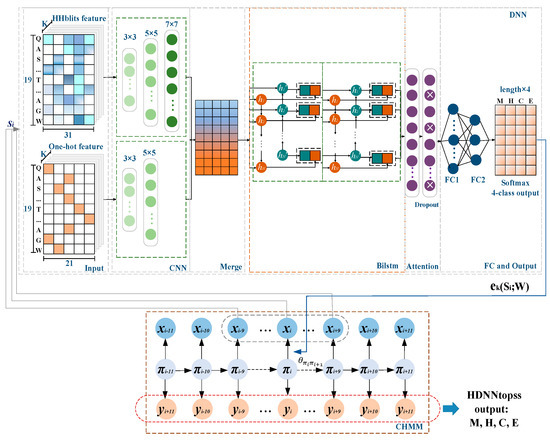

3.3. Models Framework

We implemented a separate training method, HDNNtopss, and a joint training method, Co-HDNNtopss. Both use a hybrid model consisting of a deep learning module and a CHMM.

For HDNNtopss (see Figure 6), the DNN part comprises the deep learning module. Each observation in the protein sequence is encoded by contextual amino acid as input to the DNNs. The output of the deep learning module is the probability matrix corresponding to each state of the sequence observations. The emission probability of CHMM is replaced by the output to realize the combination of the two modules. CHMM uses the Viterbi algorithm [56] for optimizing the segmentation of state predictions generated by the deep learning module. The 4-category predicted merge structures are the final output.

Figure 6.

Diagram of HDNNtopss. Each amino acid in the observed sequence of αTMPs in the CHMM module is encoded with a contextual sliding window and used as input to the deep learning module. The emission probabilities in the CHMM are replaced with the output of the deep learning module. Finally, the model decodes the output based on the trained parameters.

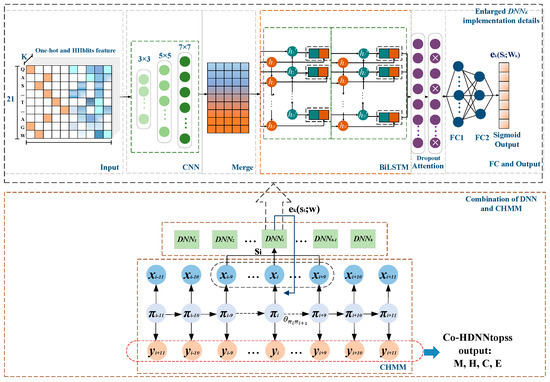

For Co-HDNNtopss (see Figure 7), DNNs and CHMM are combined as follows. The context of each observation from the CHMM is input to DNNk, which outputs the value of the emission network for state k. The enlarged view in Figure 7 shows the implementation details of the DNNk emission network. To reduce model complexity and training time in joint training, this simplified deep learning module was selected after many experiments.

Figure 7.

Diagram of Co-HDNNtopss. As shown in the combination of DNN and CHMM, the context of CHMM is the input for DNNk, and the initial value of each training round of the emission probability of a state in CHMM is replaced by DNNk output. The enlarged implementation details of DNNk are shown in the figure. Finally, the model decodes outputs based on the trained parameters.

3.4. Deep Learning Module

The deep learning module in HDNNtopss is mainly composed of CNNs and attention-enhanced Bidirectional Long Short-Term Memory (BiLSTM) layers. As shown in the DNN part of Figure 6, it includes feature-integration layers, CNNs, attention-enhanced BiLSTM layers, and fully connected layers. The input to the model is the HHblits profile and One-hot code generated from the sequence, with a sliding window of 19 residues providing the features of the central residue. The input is passed to stacked CNN layers, which extract effective features from each input separately, and the BiLSTM layers then capture long-range dependencies and global information. We also added an attention mechanism to the BiLSTM layers to help the model focus on critical regions. The BiLSTM is followed by two fully connected layers. Unlike the original HNN work, and to avoid training a separate emission network for each group of states, we used softmax rather than sigmoid as the activation function of the last layer.

The deep learning module in Co-HDNNtopss is similar to that of HDNNtopss but simpler. The two input features are combined and passed to stacked CNNs, an attention-enhanced BiLSTM with fewer units, and fully connected layers. To facilitate rewriting the loss function for joint training, the sigmoid output probability of each state is treated as the emission probability in CHMM.
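The difference between the two output layers can be illustrated with a small numerical sketch. It is not part of either model; the function names are hypothetical, and the scores stand for the pre-activation values of the last layer for each state. Softmax couples the states, so raising one probability lowers the others, whereas each sigmoid output is computed independently.

```python
import math


def softmax(scores):
    """Coupled outputs: the probabilities of all states sum to 1."""
    highest = max(scores)                       # subtract the max for stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid_each(scores):
    """Independent outputs: each state gets its own value in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-s)) for s in scores]
```

With softmax, under-represented states still compete directly with the others at every residue, which is consistent with the observation that class imbalance is more visible under the per-state sigmoid outputs of joint training.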

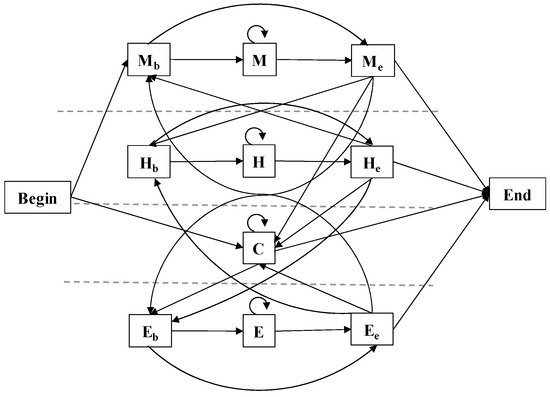

3.5. CHMM Module

CHMM is more suitable than the standard HMM for complex protein-structure prediction problems with labeled data [26]. The CHMM module is the same in separate training and joint training. For predicting the structure of αTMPs, assume that the protein sequence is $x = x_1, \dots, x_L$, with a state sequence $\pi = \pi_1, \dots, \pi_L$ and a sequence of labels $y = y_1, \dots, y_L$.

Our model consisted of 12 states, including Begin and End states, and conceptually combines the previous ideas of αTMPs topology and protein secondary-structure prediction [31,57,58]. The model consists of 4 sub-models corresponding to the 4 labels to be predicted, namely, sub-models M, H, C, and E. Except for C, each sub-model contains a beginning and end state, and the state transition of the model is shown in Figure 8.

Figure 8.

State model diagram. There are 12 states including Beginning and End states, divided into 4 sub-models, and the figure shows the possible transitions between states.

The transition probability of CHMM from state $i$ to state $j$ is denoted by $a_{ij}$. The emission probability of state $k$ generating the observation $x_t$ is denoted by $e_k(x_t)$. The output value of the DNNs for the corresponding state is used in place of the emission probability between each observation and state. We obtained the transition probabilities through supervised training of the CHMM. A protein sequence is decoded into the corresponding structural labels using these probability parameters.

3.6. HDNNtopss Module Training

HDNNtopss is a separate training method: the deep learning module uses supervised learning, and CHMM uses Conditional Maximum Likelihood (CML) estimation.

For the deep learning module, we used the labels for supervised training to obtain the emission value $e_k(s_t; w)$ between observation $x_t$ and state $k$, where $s_t$ refers to the contextual encoding of each $x_t$, and $w$ refers to the DNN weights. An early-stopping strategy and a save-best strategy were adopted: when the loss on the validation set had not decreased for 10 consecutive epochs, training was stopped and the best model weights were retained. The parameters were trained using the Adam optimizer [59], which adapts the learning rate dynamically during training. Batch normalization layers were used to reduce overfitting and improve training speed.
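The early-stopping and save-best strategy described above can be sketched independently of any deep learning framework. In this illustration, `run_epoch` is a hypothetical callback that trains for one epoch and returns the validation loss together with a snapshot of the weights; the default of 200 maximum epochs is an assumption, while the patience of 10 epochs comes from the text.

```python
def train_with_early_stopping(run_epoch, max_epochs=200, patience=10):
    """Train until the validation loss has not improved for `patience` epochs.

    run_epoch(epoch) -> (validation_loss, weights_snapshot)   [hypothetical]
    Returns the best loss, the weights saved at that epoch, and the number
    of epochs actually run.
    """
    best_loss = float("inf")
    best_weights = None
    stale_epochs = 0
    epochs_run = 0
    for epoch in range(max_epochs):
        val_loss, weights = run_epoch(epoch)
        epochs_run += 1
        if val_loss < best_loss:
            # Save-best: keep the snapshot with the lowest validation loss.
            best_loss, best_weights, stale_epochs = val_loss, weights, 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break                   # early stopping
    return best_loss, best_weights, epochs_run
```

Deep learning frameworks typically offer equivalent callbacks; the explicit loop is shown only to make the stopping rule unambiguous.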

For CHMM, the parameters to be trained are the transition probabilities. Each update involves two runs of the forward and backward algorithms: one in the free-running phase (f), which considers all paths regardless of the labels, and one in the clamped phase (c), which computes the joint probability with the labels. We used CML estimation to update the parameters. CML adjusts the model parameters so that the probability of the free-running phase is as close as possible to that of the clamped phase. To facilitate calculations such as gradient descent, the likelihood was converted to a negative log-likelihood as follows:

$$\mathcal{L}(\theta) = -\log P(y \mid x, \theta) = -\log \frac{P(x, y \mid \theta)}{P(x \mid \theta)} = \mathcal{L}_c(\theta) - \mathcal{L}_f(\theta)$$

In the above formula, $P(x \mid \theta)$ refers to the probability of the observation sequence $x$ under the model with parameters $\theta$, with $\mathcal{L}_f = -\log P(x \mid \theta)$, and $P(x, y \mid \theta)$ is the joint probability of $x$ and the label sequence $y$ under the model, i.e., the probability of $x$ occurring when the state path is consistent with the label sequence, with $\mathcal{L}_c = -\log P(x, y \mid \theta)$. We performed gradient descent on the transition probabilities using $\mathcal{L}$ in each iteration, thereby updating the transition probabilities. Note that this CHMM training differs from the Baum-Welch learning method of the general HMM.
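The relationship between the free-running and clamped phases can be illustrated with a small forward-algorithm sketch. It is a toy example under stated assumptions, not the 12-state model of this study: the function names are hypothetical, emission scores are supplied per position (as they would be when taken from a network output), and no scaling is applied, so it is only suitable for short sequences. The clamped pass differs from the free-running pass only in that states whose label disagrees with the reference label are excluded.

```python
import math


def forward_likelihood(init, trans, emis, allowed=None):
    """Sum the probability of all state paths with the forward algorithm.

    init[k]     initial probability of state k
    trans[i][k] transition probability from state i to state k
    emis[t][k]  emission score of state k at position t
    allowed[t]  set of states permitted at position t (None = all states)
    """
    n = len(init)

    def permitted(t, k):
        return allowed is None or k in allowed[t]

    alpha = [init[k] * emis[0][k] if permitted(0, k) else 0.0 for k in range(n)]
    for t in range(1, len(emis)):
        alpha = [
            sum(alpha[i] * trans[i][k] for i in range(n)) * emis[t][k]
            if permitted(t, k) else 0.0
            for k in range(n)
        ]
    return sum(alpha)


def cml_loss(init, trans, emis, state_labels, labels):
    """Negative log conditional likelihood: clamped minus free-running."""
    # Clamped phase: only states carrying the reference label are allowed.
    allowed = [
        {k for k, state_label in enumerate(state_labels) if state_label == y}
        for y in labels
    ]
    p_free = forward_likelihood(init, trans, emis)
    p_clamped = forward_likelihood(init, trans, emis, allowed)
    return -math.log(p_clamped) + math.log(p_free)
```

Because the clamped paths are a subset of all paths, the loss is never negative, and it reaches zero only when every path with non-zero probability agrees with the labels.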

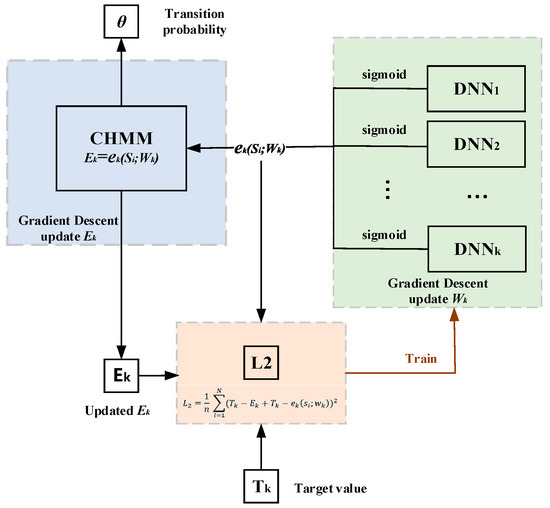

3.7. Co-HDNNtopss Module Training

Here we propose Co-HDNNtopss training, in which the deep learning module and the CHMM are trained jointly. The flow diagram of the joint training is shown in Figure 9.

Figure 9.

Joint training diagram. Training the DNN weights produces an output for each state. This output is used as the initial emission probability of the CHMM and is trained together with the transition probability for one epoch. The updated emission probabilities and the other parameters form a new error function used to train a new epoch of the DNNs, and this process is repeated until convergence.

After training the deep learning weights for one epoch, we passed the output probability of each emission network DNNk into the CHMM for one training iteration together with the transition probability parameters. In this iteration, the output of the emission network is treated as the emission probability parameter of the CHMM and is updated by gradient descent in the same way as the transition probability (see Equation (15)). The updated emission probability obtained after one iteration in CHMM, the target value, and the network output together form a new error term (see Equation (16)). Here, we regarded each state as a regression problem: each state corresponds to one deep-learning emission network DNNk whose final activation function is sigmoid. We then used the common mean squared error (MSE) to establish a new loss function for each state (see Equation (17)). The gradient used for descent was calculated from this loss function (see Equation (18)).
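The per-state regression loss can be sketched as follows. This is a generic illustration of an MSE loss on a sigmoid output and its gradient, not a reproduction of Equations (16) to (18), which are not shown here; the function names are hypothetical, and the targets stand for the emission values passed back from the CHMM iteration.

```python
import math


def sigmoid(z):
    """Logistic activation of the final layer of one emission network."""
    return 1.0 / (1.0 + math.exp(-z))


def mse_loss_and_grad(pre_activations, targets):
    """MSE between sigmoid outputs and targets for one state.

    Returns the loss and its gradient with respect to each pre-activation,
    which is what would be backpropagated into the emission network.
    """
    outputs = [sigmoid(z) for z in pre_activations]
    n = len(outputs)
    loss = sum((o - t) ** 2 for o, t in zip(outputs, targets)) / n
    # Chain rule: d(loss)/dz = 2 (o - t) / n * o (1 - o)
    grad = [2.0 * (o - t) * o * (1.0 - o) / n for o, t in zip(outputs, targets)]
    return loss, grad
```

The factor `o * (1 - o)` shrinks the gradient when an output saturates near 0 or 1, which is one generic reason why sigmoid regression outputs can converge slowly for rarely active states.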

This process was repeated, with the outputs passed back into CHMM training, until the accuracy on the validation dataset stopped rising. At this point, the trained transition probability parameters and emission probabilities were obtained. We hoped that, with this approach, the two modules would promote each other during training so that the expected loss decreased. However, because the deep learning module is highly expressive, its weights reached reasonable values after a few iterations, whereas the transition probability parameters required many iterations for the expected value to converge, so the convergence speeds of the two modules were not consistent. Hence, the joint training method did not perform as well as the separate training method. In a subsequent version, we will consider more suitable ways of training the two modules together to further improve prediction accuracy.

3.8. Decoding

The decoder used the Viterbi algorithm, which is widely used to find the optimal path through the states, here the 12 states of the model, by initialization, recursion, termination, and optimal-path backtracking [56]. The optimal path for each sequence was obtained by decoding with the probabilistic parameters obtained from training. Finally, four classes of predicted structure were obtained: M, H, C, and E.
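The four steps named above can be illustrated with a minimal log-space Viterbi sketch. It uses a toy two-state model rather than the 12-state CHMM: the observation symbols ("h" for hydrophobic, "p" for polar) and all probabilities are hypothetical, and the observation sequence is assumed to be non-empty.

```python
import math


def viterbi(observations, states, log_start, log_trans, log_emit):
    """Return the most probable state path and its log-probability."""
    # Initialization
    score = [{s: log_start[s] + log_emit[s][observations[0]] for s in states}]
    back = [{}]
    # Recursion
    for t in range(1, len(observations)):
        score.append({})
        back.append({})
        for s in states:
            prev, best = max(
                ((p, score[t - 1][p] + log_trans[p][s]) for p in states),
                key=lambda item: item[1],
            )
            score[t][s] = best + log_emit[s][observations[t]]
            back[t][s] = prev
    # Termination
    last = max(states, key=lambda s: score[-1][s])
    # Optimal-path backtracking
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, score[-1][last]


def log_table(table):
    """Convert a nested dict of probabilities to log-probabilities."""
    return {k: {j: math.log(p) for j, p in row.items()} for k, row in table.items()}


# Toy model (hypothetical numbers): M = membrane, C = coil.
states = ["M", "C"]
log_start = {"M": math.log(0.5), "C": math.log(0.5)}
log_trans = log_table({"M": {"M": 0.9, "C": 0.1}, "C": {"M": 0.1, "C": 0.9}})
log_emit = log_table({"M": {"h": 0.8, "p": 0.2}, "C": {"h": 0.2, "p": 0.8}})

path, log_prob = viterbi("hhhppp", states, log_start, log_trans, log_emit)
print("".join(path))  # MMMCCC
```

Working in log space avoids numerical underflow on long sequences. In the setting described above, the state set would be the 12 states of the model, and each decoded state would then be mapped to one of the four structure classes.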

4. Conclusions

In this study, we proposed hybrid models of CHMM and DNN to predict the merged topology and secondary structure of αTMPs from the primary sequence. The DNN mainly consists of stacked CNNs and an attention-enhanced BiLSTM, which capture the dependencies between contextual residues. The statistical model CHMM extracts the correlation of labels through trained state-transition probabilities. This hybrid model not only improves the accuracy of topology and secondary-structure prediction but also constrains the predicted structural label strings to respect the state-label correlations, which makes the predictions more biologically meaningful.

Our separate training method, HDNNtopss, shows strong utility, with a Q4 of 0.779 and an MCC of 0.673 on the blind-test set. Additionally, HDNNtopss achieved the best performance in the topology-prediction task compared to other state-of-the-art methods: it reached a high Q2 of 0.884 and had a strong comprehensive performance. We also proposed Co-HDNNtopss, a new method for jointly training the DNN and CHMM. Although its accuracy was not the highest, the idea of combining the two training procedures so that they promote each other can provide a reference for similar hybrid-model training. After discussion and analysis, the unbalanced ratio of structural label categories has been resolved.

In the future, we will choose newer datasets or reasonable sampling methods to solve the sample imbalance problem so that the joint training method can perform better. We will also add beta-barrel transmembrane proteins to expand the scope of our data and present the tool as a web server to make our predictor more widely applicable and useful.

Furthermore, we implemented HDNNtopss as a publicly available predictor for the research community. The pre-trained model and the datasets we used in this paper can be downloaded at https://github.com/NENUBioCompute/HDNNtopss.git (accessed on 10 March 2023). Finally, we sincerely hope that the predictor and the support materials we released in this study will help the researchers who need them.

Author Contributions

Conceptualization, T.G., Y.Z., and H.W.; methodology, T.G., Y.Z., and H.W.; Software, T.G., Y.Z., and L.Z.; validation, T.G., Y.Z., and L.Z.; formal analysis, T.G., Y.Z., and L.Z.; investigation, T.G., Y.Z., and L.Z.; writing—original draft preparation, Y.Z. and H.W.; writing—review and editing, T.G., Y.Z., L.Z., and H.W.; All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Jilin Scientific and Technological Development Program (No. 20230201090GX, 20210101175JC), the Capital Construction Funds within the Jilin Province budget (grant 2022C043-2), the Science and Technology Research Project of the Education Department of Jilin Province (No. JJKH20191309KJ), and the Ministry of Science and Technology Experts Project (No. G2021130003L).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Acknowledgments

We would like to thank Mingjun Cheng from the Science and Technology Innovation Platform Management Center of Jilin Province and other teachers and students from the Institute of Computational Biology, Northeast Normal University for their help.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Li, F.; Egea, P.F.; Vecchio, A.J.; Asial, I.; Gupta, M.; Paulino, J.; Bajaj, R.; Dickinson, M.S.; Ferguson-Miller, S.; Monk, B.C.; et al. Highlighting membrane protein structure and function: A celebration of the Protein Data Bank. J. Biol. Chem. 2021, 296, 100557.

- Arora, A.; Tamm, L.K. Biophysical approaches to membrane protein structure determination. Curr. Opin. Struct. Biol. 2001, 11, 540–547.

- Doerr, A. Membrane protein structures. Nat. Methods 2008, 6, 35.

- Almen, M.S.; Nordstrom, K.J.; Fredriksson, R.; Schioth, H.B. Mapping the human membrane proteome: A majority of the human membrane proteins can be classified according to function and evolutionary origin. BMC Biol. 2009, 7, 50.

- Westbrook, J.; Feng, Z.; Chen, L.; Yang, H.; Berman, H.M. The Protein Data Bank and structural genomics. Nucleic Acids Res. 2003, 31, 489–491.

- Hopf, T.A.; Colwell, L.J.; Sheridan, R.; Rost, B.; Sander, C.; Marks, D.S. Three-dimensional structures of membrane proteins from genomic sequencing. Cell 2012, 149, 1607–1621.

- Tan, C.W.; Jones, D.T. Using neural networks and evolutionary information in decoy discrimination for protein tertiary structure prediction. BMC Bioinform. 2008, 9, 94.

- Marks, D.S.; Colwell, L.J.; Sheridan, R.; Hopf, T.A.; Pagnani, A.; Zecchina, R.; Sander, C. Protein 3D structure computed from evolutionary sequence variation. PLoS ONE 2011, 6, e28766.

- Hegedus, T.; Geisler, M.; Lukacs, G.L.; Farkas, B. Ins and outs of AlphaFold2 transmembrane protein structure predictions. Cell Mol. Life Sci. 2022, 79, 73.

- Finkelstein, A.V.; Ptitsyn, O.B. Statistical analysis of the correlation among amino acid residues in helical, β-structural and non-regular regions of globular proteins. J. Mol. Biol. 1971, 62, 613–624.

- Scheraga, H.A. Prediction of protein conformation. Curr. Top. Biochem. 1974, 13, 222–245.

- Heijne, G.V. Membrane protein structure prediction. Hydrophobicity analysis and the positive-inside rule. J. Mol. Biol. 1992, 225, 487–494.

- Drozdetskiy, A.; Cole, C.; Procter, J.; Barton, G.J. JPred4: A protein secondary structure prediction server. Nucleic Acids Res. 2015, 43, W389–W394.

- Buchan, D.W.; Ward, S.M.; Lobley, A.E.; Nugent, T.C.; Bryson, K.; Jones, D.T. Protein annotation and modelling servers at University College London. Nucleic Acids Res. 2010, 38, W563–W568.

- Yachdav, G.; Kloppmann, E.; Kajan, L.; Hecht, M.; Goldberg, T.; Hamp, T.; Honigschmid, P.; Schafferhans, A.; Roos, M.; Bernhofer, M.; et al. PredictProtein--an open resource for online prediction of protein structural and functional features. Nucleic Acids Res. 2014, 42, W337–W343.

- Senior, A.W.; Evans, R.; Jumper, J.; Kirkpatrick, J.; Sifre, L.; Green, T.; Qin, C.; Zidek, A.; Nelson, A.W.R.; Bridgland, A.; et al. Improved protein structure prediction using potentials from deep learning. Nature 2020, 577, 706–710.

- Sonnhammer, E.; Heijne, G.V.; Krogh, A.V. A Hidden Markov Model for Predicting Transmembrane Helices in Protein Sequences. Proc. Int. Conf. Intell. Syst. Mol. Biol. 1998, 6, 175–182.

- Tusnády, G.E.; Simon, I. Principles governing amino acid composition of integral membrane proteins: Application to topology prediction. J. Mol. Biol. 1998, 283, 489–506.

- Viklund, H.; Elofsson, A. OCTOPUS: Improving topology prediction by two-track ANN-based preference scores and an extended topological grammar. Bioinformatics 2008, 24, 1662–1668.

- Viklund, H.; Bernsel, A.; Skwark, M.; Elofsson, A. SPOCTOPUS: A combined predictor of signal peptides and membrane protein topology. Bioinformatics 2008, 24, 2928–2929.

- Fang, C.; Shang, Y.; Xu, D. MUFOLD-SS: New deep inception-inside-inception networks for protein secondary structure prediction. Proteins 2018, 86, 592–598.

- Hallgren, J.; Tsirigos, K.D.; Pedersen, M.D.; Almagro Armenteros, J.J.; Marcatili, P.; Nielsen, H.; Krogh, A.; Winther, O. DeepTMHMM predicts alpha and beta transmembrane proteins using deep neural networks. bioRxiv 2022.

- Liu, Z.; Gong, Y.; Bao, Y.; Guo, Y.; Lin, G.N. TMPSS: A Deep Learning-Based Predictor for Secondary Structure and Topology Structure Prediction of Alpha-Helical Transmembrane Proteins. Front. Bioeng. Biotechnol. 2021, 8, 629937.

- Li, B.; Mendenhall, J.; Capra, J.A.; Meiler, J. A Multitask Deep-Learning Method for Predicting Membrane Associations and Secondary Structures of Proteins. J. Proteome Res. 2021, 20, 4089–4100.

- Krogh, A.; Riis, S.K. Hidden neural networks. Neural Comput. 1999, 11, 541–563.

- Krogh, A.S. Hidden Markov models for labeled sequences. In Proceedings of the 12th IAPR International Conference on Pattern Recognition, Jerusalem, Israel, 9–13 October 1994.

- Rogozan, A.; Deléglise, P. Visible speech modelling and hybrid hidden Markov models/neural networks based learning for lipreading. In Proceedings of the IEEE International Joint Symposia on Intelligence and Systems, Rockville, MD, USA, 23–23 May 1998; pp. 336–342.

- Zheng, Y.; Jia, S.; Yu, Z.; Huang, T.; Liu, J.K.; Tian, Y. Probabilistic inference of binary Markov random fields in spiking neural networks through mean-field approximation. Neural Netw. 2020, 126, 42–51.

- Lin, Z.; Chen, H. A Hybrid Neural Network and Hidden Markov Model for Time-aware Recommender Systems. In Proceedings of the 11th International Conference on Agents and Artificial Intelligence, Prague, Czech Republic, 19–21 February 2019; pp. 204–213.

- Tamposis, I.A.; Sarantopoulou, D.; Theodoropoulou, M.C.; Stasi, E.A.; Kontou, P.I.; Tsirigos, K.D.; Bagos, P.G. Hidden neural networks for transmembrane protein topology prediction. Comput. Struct. Biotechnol. J. 2021, 19, 6090–6097.

- Lin, K.; Simossis, V.A.; Taylor, W.R.; Heringa, J. A simple and fast secondary structure prediction method using hidden neural networks. Bioinformatics 2005, 21, 152–159.

- Luigi, M.P.; Piero, F.; Luca, M.; Rita, C. Prediction of the disulfide bonding state of cysteines in proteins with hidden neural networks. Protein Eng. 2002, 15, 951–953.

- Zemla, A.; Venclovas, E.; Fidelis, K.; Rost, B. A modified definition of Sov, a segment-based measure for protein secondary structure prediction assessment. Proteins Struct. Funct. Bioinform. 1999, 34, 220–223.

- Tan, J.X.; Li, S.H.; Zhang, Z.M.; Chen, C.X.; Lin, H. Identification of hormone binding proteins based on machine learning methods. Math. Biosci. Eng. MBE 2019, 16, 2466–2480.

- Yang, W.; Zhu, X.J.; Huang, J.; Ding, H.; Lin, H. A Brief Survey of Machine Learning Methods in Protein Sub-Golgi Localization. Curr. Bioinform. 2019, 14, 234–240.

- Xjz, A.; Cqf, A.; Hyl, A.; Wei, C.; Lin, H.A. Predicting protein structural classes for low-similarity sequences by evaluating different features. Knowl.-Based Syst. 2019, 163, 787–793.

- Tsirigos, K.D.; Peters, C.; Shu, N.; Kall, L.; Elofsson, A. The TOPCONS web server for consensus prediction of membrane protein topology and signal peptides. Nucleic Acids Res. 2015, 43, W401–W407.

- Dobson, L.; Remenyi, I.; Tusnady, G.E. CCTOP: A Consensus Constrained TOPology prediction web server. Nucleic Acids Res. 2015, 43, W408–W412.

- Kall, L.; Krogh, A.; Sonnhammer, E.L. An HMM posterior decoder for sequence feature prediction that includes homology information. Bioinformatics 2005, 21 (Suppl. 1), i251–i257.

- Bernsel, A.; Viklund, H.; Falk, J.; Lindahl, E.; von Heijne, G.; Elofsson, A. Prediction of membrane-protein topology from first principles. Proc. Natl. Acad. Sci. USA 2008, 105, 7177–7181.

- Kall, L.; Krogh, A.; Sonnhammer, E.L. A combined transmembrane topology and signal peptide prediction method. J. Mol. Biol. 2004, 338, 1027–1036.

- Reynolds, S.M.; Kall, L.; Riffle, M.E.; Bilmes, J.A.; Noble, W.S. Transmembrane topology and signal peptide prediction using dynamic bayesian networks. PLoS Comput. Biol. 2008, 4, e1000213.

- Magnan, C.N.; Pierre, B. SSpro/ACCpro 5: Almost perfect prediction of protein secondary structure and relative solvent accessibility using profiles, machine learning and structural similarity. Bioinformatics 2014, 30, 2592–2597.

- Rhys, H.; Yuedong, Y.; Kuldip, P.; Yaoqi, Z. Capturing Non-Local Interactions by Long Short Term Memory Bidirectional Recurrent Neural Networks for Improving Prediction of Protein Secondary Structure, Backbone Angles, Contact Numbers, and Solvent Accessibility. Bioinformatics 2017, 33, 2842–2849.

- Buchan, D.W.A.; Jones, D.T. The PSIPRED Protein Analysis Workbench: 20 years on. Nucleic Acids Res. 2019, 47, W402–W407.

- Wang, S.; Peng, J.; Ma, J.; Xu, J. Protein Secondary Structure Prediction Using Deep Convolutional Neural Fields. Sci. Rep. 2016, 6, 18962.

- Torrisi, M.; Kaleel, M.; Pollastri, G. Deeper Profiles and Cascaded Recurrent and Convolutional Neural Networks for state-of-the-art Protein Secondary Structure Prediction. Sci. Rep. 2019, 9, 12374.

- Delano, W.L. PyMOL: An Open-Source Molecular Graphics Tool. Protein Crystallogr. 2002, 40, 82–92.

- Olatunji, S.; Yu, X.; Bailey, J.; Huang, C.Y.; Zapotoczna, M.; Bowen, K.; Remskar, M.; Muller, R.; Scanlan, E.M.; Geoghegan, J.A.; et al. Structures of lipoprotein signal peptidase II from Staphylococcus aureus complexed with antibiotics globomycin and myxovirescin. Nat. Commun. 2020, 11, 140.

- Sagatova, A.A.; Keniya, M.V.; Wilson, R.K.; Monk, B.C.; Tyndall, J.D. Structural Insights into Binding of the Antifungal Drug Fluconazole to Saccharomyces cerevisiae Lanosterol 14 alpha-Demethylase. Antimicrob. Agents Chemother. 2015, 59, 4982–4989.

- Kozma, D.; Simon, I.; Tusnady, G.E. PDBTM: Protein Data Bank of transmembrane proteins after 8 years. Nucleic Acids Res. 2013, 41, D524–D529.

- Huang, Y.; Niu, B.; Gao, Y.; Fu, L.; Li, W. CD-HIT Suite: A web server for clustering and comparing biological sequences. Bioinformatics 2010, 26, 680–682.

- Wu, S.; Zhu, Z.; Fu, L.; Niu, B.; Li, W. WebMGA: A customizable web server for fast metagenomic sequence analysis. BMC Genom. 2011, 12, 444.

- Kabsch, W.; Sander, C. Dictionary of Secondary structure in Proteins: Pattern Recognition of Hydrogen-bonded and Geometrical Features. Biopolymers 2004, 22, 2577–2637.

- Remmert, M.; Biegert, A.; Hauser, A.; Soding, J. HHblits: Lightning-fast iterative protein sequence searching by HMM-HMM alignment. Nat. Methods 2011, 9, 173–175.

- Durbin, R.; Eddy, S.; Krogh, A.; Mitchison, G. Biological Sequence Analysis: Probabilistic Models of Proteins and Nucleic Acids; Biological Sequence Analysis. Protein Sci. 1998, 8, 695.

- Lee, S.Y.; Lee, J.Y.; Jung, K.S.; Ryu, K.H. A 9-state hidden Markov model using protein secondary structure information for protein fold recognition. Comput. Biol. Med. 2009, 39, 527–534.

- Bagos, P.G.; Liakopoulos, T.D.; Hamodrakas, S.J. Algorithms for incorporating prior topological information in HMMs: Application to transmembrane proteins. BMC Bioinform. 2006, 7, 189.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).