A Comprehensive Evaluation of the Performance of Prediction Algorithms on Clinically Relevant Missense Variants

Abstract

1. Introduction

2. Results

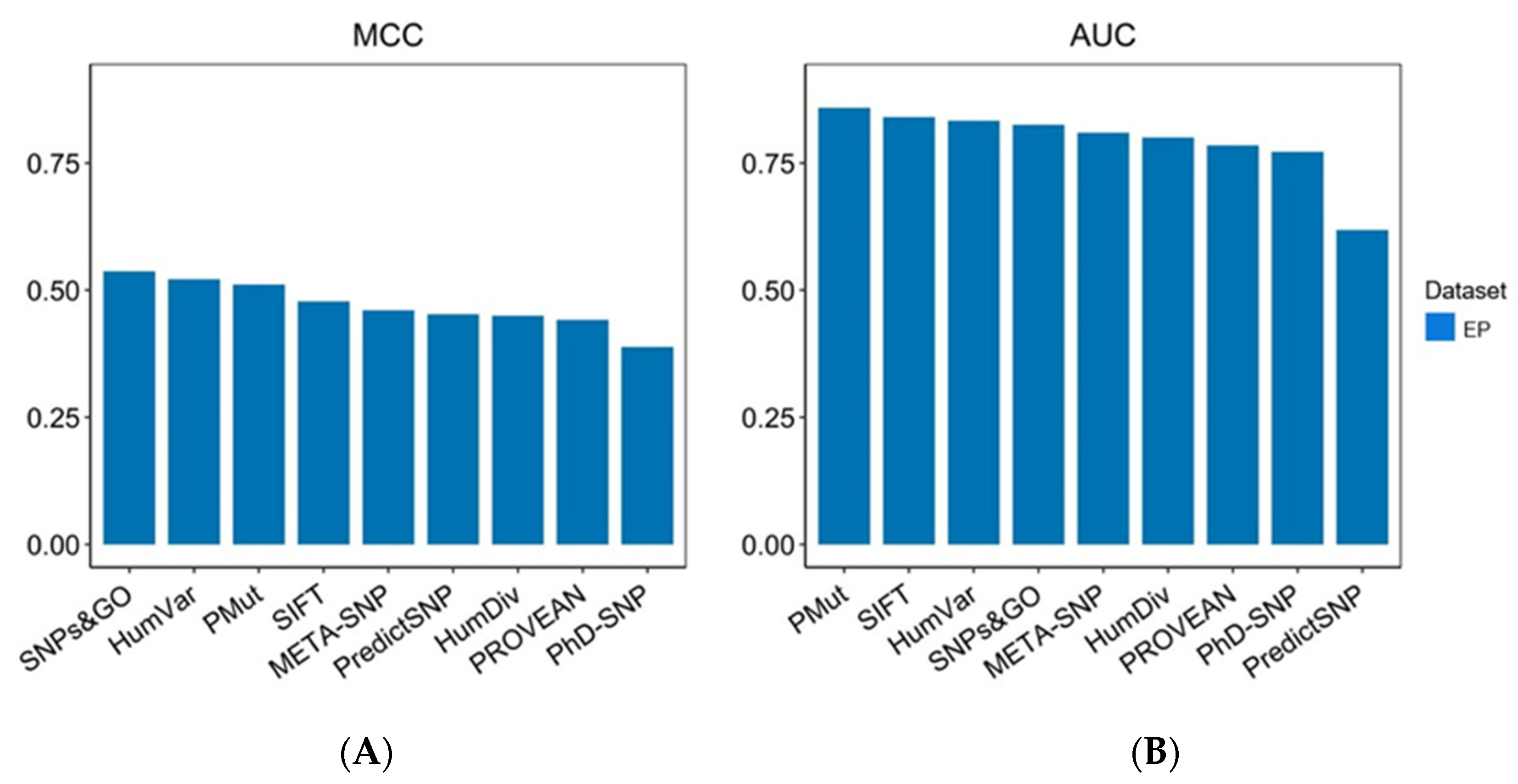

2.1. Benchmarking Datasets and Evaluation on the Expert Panel Dataset

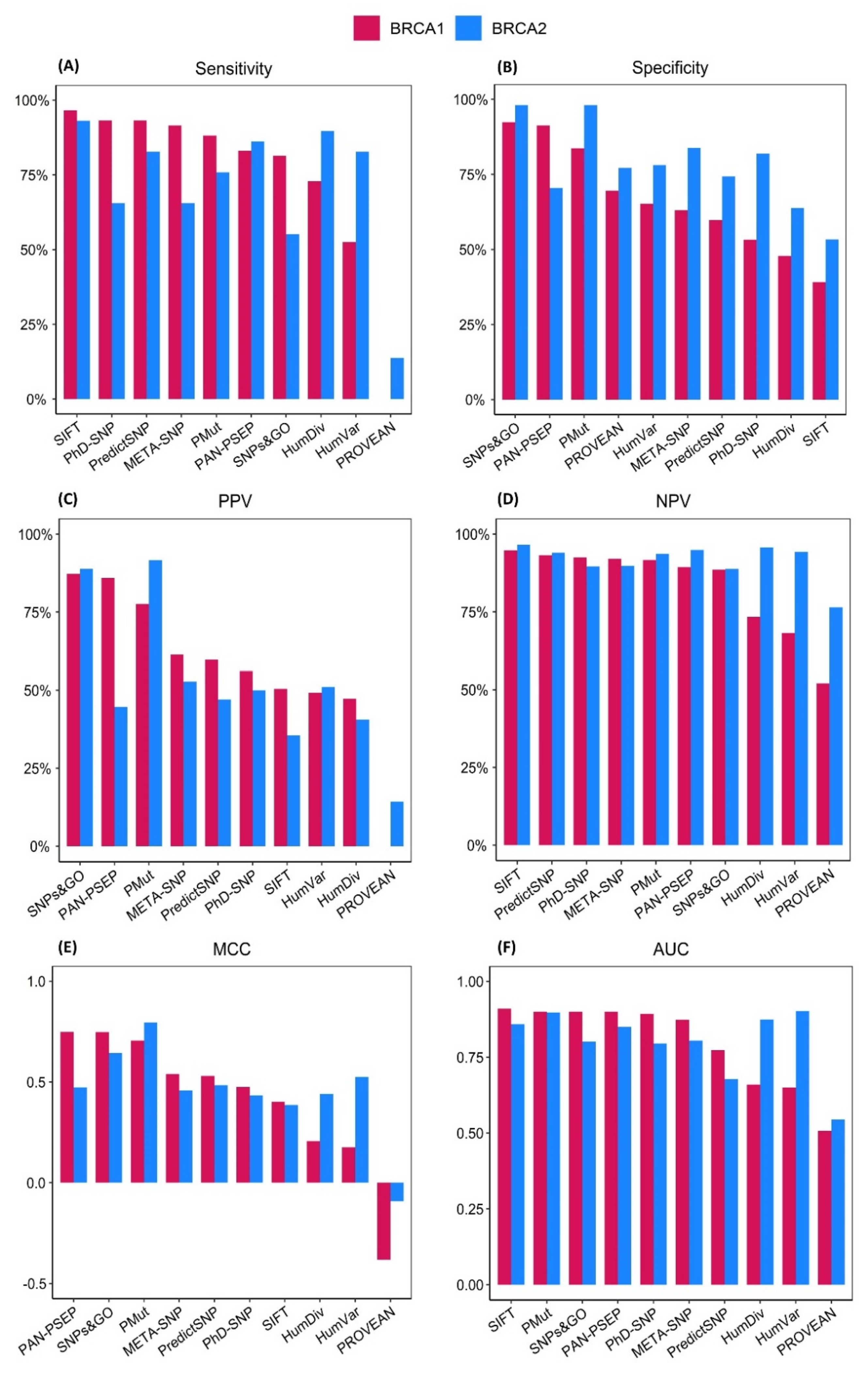

2.2. Evaluation on the BRCA1 Dataset

2.3. Evaluation on the BRCA2 Dataset

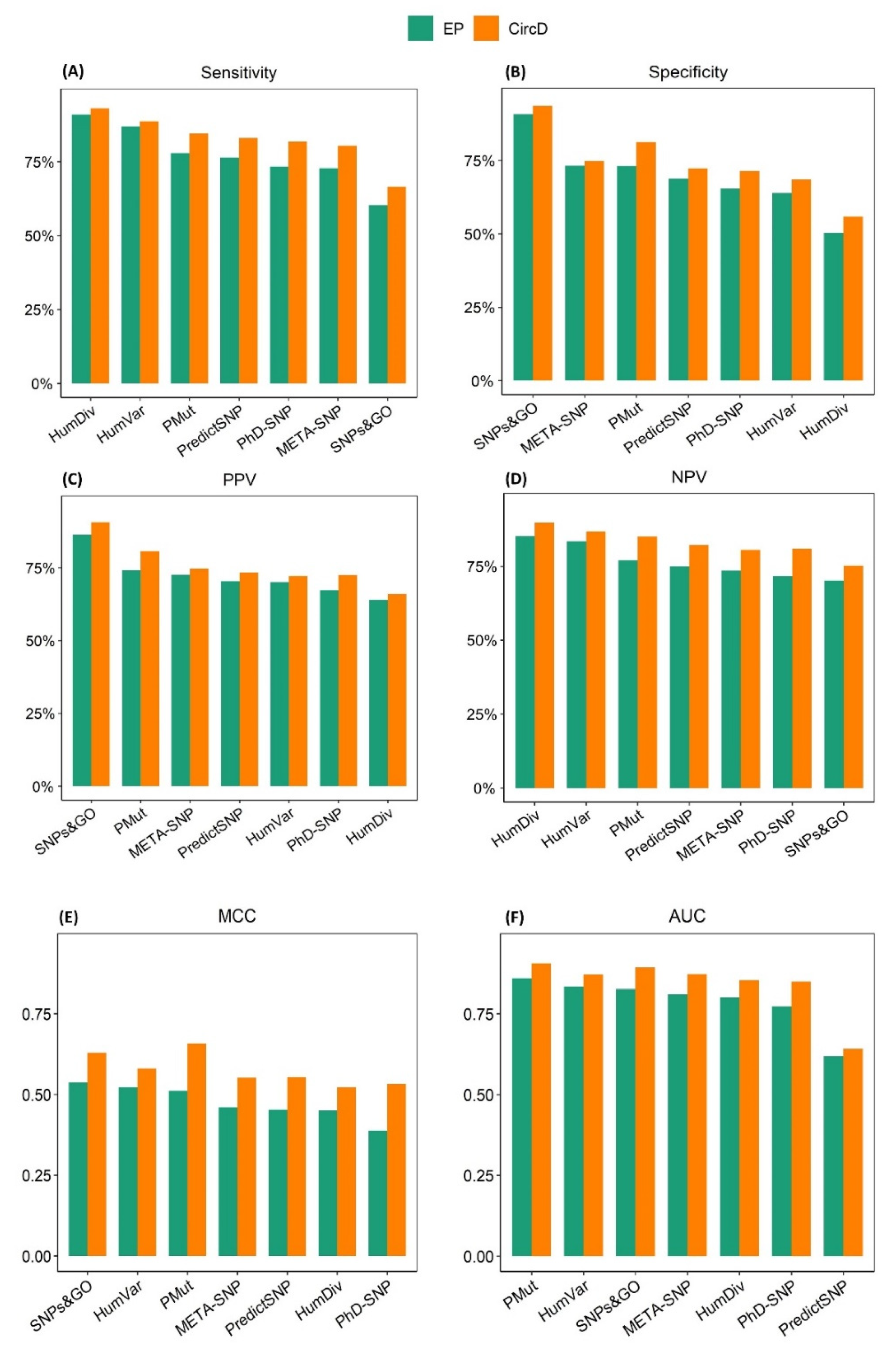

2.4. Assessing the Effect of Type 1 Circularity on the Performance of the Prediction Algorithms

3. Discussion

4. Materials and Methods

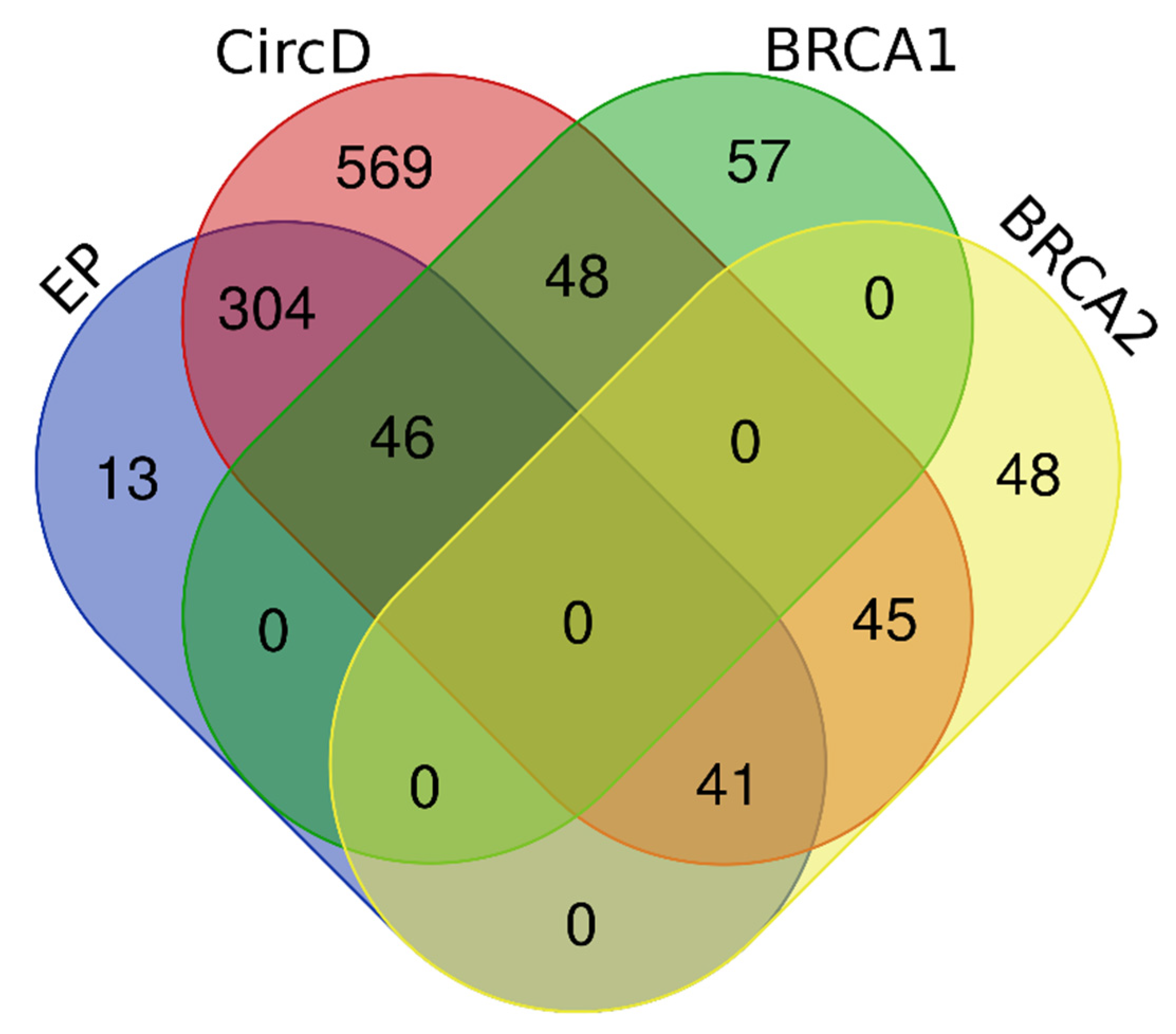

4.1. Variant Acquisition and Dataset Generation

4.2. Dataset Composition

4.3. Prediction Algorithm Selection

4.4. Benchmarking

4.5. Variant Classification

4.6. Performance Evaluation Metrics
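
The result tables report accuracy (ACC), sensitivity (SEN), specificity (SPE), positive and negative predictive value (PPV, NPV), and the Matthews correlation coefficient (MCC). As an illustrative sketch, and not the authors' own code, the function below computes these values from confusion-matrix counts using the standard textbook definitions. The function name `confusion_metrics` is hypothetical, and the counts in the usage example (52 TP, 7 FN, 77 TN, 15 FP) are an assumption: they were back-calculated to be consistent with the PMut row of the BRCA1 table and are not stated in the source. AUC is left out because it needs the continuous prediction scores rather than the binary calls.

```python
from math import sqrt


def confusion_metrics(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Return ACC, SEN, SPE, PPV, NPV (as fractions) and MCC.

    Pathogenic variants are treated as the positive class.
    Any ratio with a zero denominator is reported as 0.0.
    """

    def ratio(num: float, den: float) -> float:
        return num / den if den else 0.0

    total = tp + fn + tn + fp
    # The MCC denominator is the square root of the product of the four marginals.
    mcc_den = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "ACC": ratio(tp + tn, total),
        "SEN": ratio(tp, tp + fn),
        "SPE": ratio(tn, tn + fp),
        "PPV": ratio(tp, tp + fp),
        "NPV": ratio(tn, tn + fn),
        "MCC": ratio(tp * tn - fp * fn, mcc_den),
    }


if __name__ == "__main__":
    # Assumed counts (not given in the source), chosen to match one table row.
    metrics = confusion_metrics(tp=52, fn=7, tn=77, fp=15)
    for name, value in metrics.items():
        if name == "MCC":
            print(f"{name}: {value:.3f}")
        else:
            print(f"{name}: {value * 100:.2f} %")
```

With these assumed counts the function gives 85.43, 88.14, 83.70, 77.61, and 91.67 percent for ACC, SEN, SPE, PPV, and NPV, and an MCC of 0.705, which agrees with the reported PMut values for BRCA1.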

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Software | ACC (%) | SEN (%) | SPE (%) | PPV (%) | NPV (%) | MCC | AUC |
|---|---|---|---|---|---|---|---|
| (A) BRCA1 | | | | | | | |
| PMut | 85.43 | 88.14 | 83.70 | 77.61 | 91.67 | 0.705 | 0.932 |
| PROVEAN | 42.38 | 0.00 | 69.57 | 0.00 | 52.03 | −0.382 | 0.507 |
| SIFT | 61.59 | 96.61 | 39.13 | 50.44 | 94.74 | 0.402 | 0.910 |
| SNPs&GO | 88.08 | 81.36 | 92.39 | 87.27 | 88.54 | 0.748 | 0.925 |
| PhD-SNP | 68.87 | 93.22 | 53.26 | 56.12 | 92.45 | 0.475 | 0.893 |
| PredictSNP | 72.85 | 93.22 | 59.78 | 59.78 | 93.22 | 0.530 | 0.773 |
| META-SNP | 74.17 | 91.53 | 63.04 | 61.36 | 92.06 | 0.540 | 0.873 |
| PAN-PSEP | 88.08 | 83.05 | 91.30 | 85.96 | 89.36 | 0.748 | 0.895 |
| HumDiv | 57.62 | 72.88 | 47.83 | 47.25 | 73.33 | 0.206 | 0.660 |
| HumVar | 60.26 | 52.54 | 65.22 | 49.21 | 68.18 | 0.176 | 0.650 |
| (B) BRCA2 | | | | | | | |
| PMut | 93.28 | 75.86 | 98.10 | 91.67 | 93.64 | 0.794 | 0.898 |
| PROVEAN | 63.43 | 13.79 | 77.14 | 14.29 | 76.42 | −0.092 | 0.544 |
| SIFT | 61.94 | 93.10 | 53.33 | 35.53 | 96.55 | 0.386 | 0.859 |
| SNPs&GO | 88.81 | 55.17 | 98.10 | 88.89 | 88.79 | 0.643 | 0.801 |
| PhD-SNP | 78.36 | 65.52 | 81.90 | 50.00 | 89.58 | 0.433 | 0.795 |
| PredictSNP | 76.12 | 82.76 | 74.29 | 47.06 | 93.98 | 0.484 | 0.678 |
| META-SNP | 79.85 | 65.52 | 83.81 | 52.78 | 89.80 | 0.458 | 0.805 |
| PAN-PSEP | 73.88 | 86.21 | 70.48 | 44.64 | 94.87 | 0.473 | 0.850 |
| HumDiv | 69.40 | 89.66 | 63.81 | 40.63 | 95.71 | 0.441 | 0.874 |
| HumVar | 79.10 | 82.76 | 78.10 | 51.06 | 94.25 | 0.525 | 0.902 |

| Software | ACC (%) | SEN (%) | SPE (%) | PPV (%) | NPV (%) | MCC | AUC | M/V |
|---|---|---|---|---|---|---|---|---|
| PMut | 82.84 | 84.54 | 81.26 | 80.65 | 85.05 | 0.657 | 0.905 | 16 |
| SNPs&GO | 80.63 | 66.53 | 93.61 | 90.57 | 75.22 | 0.629 | 0.892 | 0 |
| PhD-SNP | 76.35 | 81.78 | 71.35 | 72.46 | 80.95 | 0.533 | 0.848 | 0 |
| PredictSNP | 77.40 | 82.97 | 72.26 | 73.38 | 82.16 | 0.554 | 0.641 | 0 |
| META-SNP | 77.49 | 80.40 | 74.82 | 74.63 | 80.55 | 0.552 | 0.871 | 0 |
| HumDiv | 73.69 | 93.04 | 55.86 | 66.01 | 89.71 | 0.522 | 0.853 | 0 |
| HumVar | 78.17 | 88.67 | 68.50 | 72.17 | 86.77 | 0.580 | 0.870 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qorri, E.; Takács, B.; Gráf, A.; Enyedi, M.Z.; Pintér, L.; Kiss, E.; Haracska, L. A Comprehensive Evaluation of the Performance of Prediction Algorithms on Clinically Relevant Missense Variants. Int. J. Mol. Sci. 2022, 23, 7946. https://doi.org/10.3390/ijms23147946
