1. Background

Gene expression comprises gene transcription, splicing, translation, and conversion into biologically active protein molecules in vivo. Transcriptional regulation of genes is carried out by transcription factors (TFs) binding to specific sites of these genes. These sites are called Transcription Factor Binding Sites (TFBSs). Thus, TFBS prediction is crucial for understanding transcriptional regulatory networks and gene expression regulation [1,2,3].

Both experimental and computational methods have been proposed to identify TFBSs. ChIP-chip [4,5] and ChIP-seq are two representative experimental methods. ChIP-chip combines ChIP technology with gene chip technology. It first obtains DNA fragments by ChIP experiments and then uses gene chips to identify the DNA fragments that can bind to target TFs. ChIP-seq [5,6,7], which combines ChIP technology with high-throughput sequencing, was proposed to identify TFBSs at genome scale. Although these experimental methods can identify TFBSs accurately, they are labor-intensive and costly to run. Therefore, computational methods for TFBS prediction are urgently needed.

Many computational methods have been proposed to predict TFBSs. As TFBSs are degenerate sequence motifs [8], many computational methods use position weight matrices (PWMs) [9] to represent them. A PWM is derived from a set of aligned sequences that have related biological functions. The elements of a TF's PWM are the binding preferences of the TF at each position. As a PWM captures the base variation at each position of a TFBS, it is a popular representation for DNA motifs [9]. As the PWMs of many TFs can be retrieved from two transcription factor databases, JASPAR [10] and TRANSFAC [11], previous works attempted to predict TFBSs for those TFs by simply scanning the genome with their PWMs. For example, Comet [12] and ModuleMiner [13] used PWMs documented in these two databases to identify TFBSs. However, these prediction methods tend to generate many false positives because a PWM treats each position independently and cannot capture dependencies between positions.
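To make the scanning procedure concrete, the following minimal sketch scores every window of a sequence with a log-odds PWM and reports the windows above a threshold. The count matrix, the pseudocount, the uniform background, the threshold, and the function names are all illustrative assumptions; none of them is taken from JASPAR, TRANSFAC, Comet, or ModuleMiner.

```python
import math

# Hypothetical 4-position count matrix (one dict per motif position).
# The counts are made up for illustration and come from no database.
COUNTS = [
    {"A": 8, "C": 1, "G": 0, "T": 1},
    {"A": 0, "C": 9, "G": 1, "T": 0},
    {"A": 1, "C": 0, "G": 9, "T": 0},
    {"A": 0, "C": 1, "G": 0, "T": 9},
]


def counts_to_pwm(counts, pseudocount=1.0, background=0.25):
    """Convert per-position base counts into log-odds weights."""
    pwm = []
    for column in counts:
        total = sum(column.values()) + 4 * pseudocount
        pwm.append({
            base: math.log2(((column[base] + pseudocount) / total) / background)
            for base in "ACGT"
        })
    return pwm


def scan(sequence, pwm, threshold):
    """Return (start, score) for every window scoring at or above threshold."""
    width = len(pwm)
    hits = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        # Each position contributes independently; the scores are summed.
        score = sum(pwm[i][base] for i, base in enumerate(window))
        if score >= threshold:
            hits.append((start, score))
    return hits


PWM = counts_to_pwm(COUNTS)
HITS = scan("TTACGTGGACGTAA", PWM, threshold=4.0)
```

Because the window score is a plain sum over positions, the model has no way to express that a base at one position changes the preference at another, which is the limitation described above.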

In addition to PWMs, many methods use machine learning to predict TFBSs. Talebzadeh and Zare-Mirakabad [14] developed a method that combines two types of features: the distance to the nearest histone and the total number of histone modifications. Won et al. [15] developed an integrated method (called Chromia) using PWM and histone modification features. Their results showed that Chromia significantly outperforms many available methods on 13 TFs in mouse embryonic stem (mES) cells. As the Support Vector Machine (SVM) has been successfully applied to many prediction problems in bioinformatics, some works also used SVMs to predict TFBSs. For example, Kumar et al. [16] used an SVM to predict site occupancy and binding strength. In addition to SVMs, random forests [17] have also been used to predict TFBSs. For example, Tsai et al. [18] used a random forest to examine the relative importance of sequence features, histone modification features, and DNA structure features in prediction, and indicated that all three feature types are significant for TFBS prediction.

Recent works suggest that deep learning methods are useful for TFBS prediction. DeepBind [19], DeepSEA [20] and DanQ [21] are three representative deep learning methods. DeepBind [19], proposed by Alipanahi et al., used a Convolutional Neural Network (CNN) to learn representations for TFBSs from their DNA sequences. DeepSEA [20], proposed by Zhou and Troyanskaya, combined a CNN with multi-task learning to learn representations for DNA sequences. DanQ [21], an improved model of DeepSEA proposed by Quang and Xie, combined a CNN with a Recurrent Neural Network (RNN). Both DeepSEA and DanQ used multi-task learning to learn representations for TFBSs. They include 690 TFBS prediction tasks, 104 histone modification prediction tasks, and 125 prediction tasks for DNase I-hypersensitive sites (DHSs). These three deep learning methods achieve very good performance and are considered state-of-the-art.

When sufficient labeled data exist, most existing methods can achieve very good performance. However, labeled data for most TFs in many cell types can only be obtained by ChIP-chip or ChIP-seq, which are labor-intensive and costly to run. Thus, TFs often do not have sufficient labeled data in many cell types, and some do not have any labeled data. It is quite challenging to predict TFBSs for TFs in cell types that lack labeled data. Nevertheless, several studies have shown that the TFBSs of a TF in different cell types share common histone modifications and common binding motifs. Therefore, computational methods can leverage labeled data of target TFs available in other cell types to predict TFBSs in cell types that lack labeled data. In this paper, we propose a cross-cell-type method, referred to as DANN_TF (see Materials and Methods), which combines a CNN and an Adversarial Network [22] to learn common features among multiple cell types with available labeled data. The learned common features are then used to predict TFBSs for cell types that have insufficient or no labeled data. The resource and executable code are freely available at http://hlt.hitsz.edu.cn/DANNTF/ and http://www.hitsz-hlt.com:8080/DANNTF/.

2. Experiments and Results

2.1. Experimental Settings

To evaluate DANN_TF at genomic scale, we predict TFBSs for five TFs in five cell types (see Materials and Methods, Table 5), for a total of 25 prediction tasks. When the target cell type has labeled data, DANN_TF amounts to a data augmentation method because the labeled data in the target cell type are augmented by labeled data in source cell types. To evaluate the influence of the amount of labeled data in the target cell type on DANN_TF, we evaluate the performance of semi-supervised prediction by DANN_TF. In semi-supervised prediction, we conduct three experiments, in which 50%, 20% and 10% of the training data in the target cell type are labeled, respectively. When the target cell type has no labeled data, DANN_TF amounts to a cross-cell-type method because labeled data in source cell types are used to predict TFBSs for the target cell type. In each evaluation, DANN_TF is compared with a baseline method, which is similar to DANN_TF except that it does not use the Adversarial Network in prediction. To further evaluate how useful the labeled data available in source cell types are for prediction in the target cell type, we compare DANN_TF with supervised prediction by the baseline method. Finally, we compare DANN_TF with existing state-of-the-art methods. In these evaluations, the labeled data of each TF in each cell type are divided into 10 separate folds of equal size: 8 folds for training, 1 fold for validation, and 1 fold for testing. This process is repeated ten times so that each fold is tested once, and the performance on the 10 test folds is averaged.
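The 8/1/1 fold rotation can be sketched as follows. The text states only that each fold is tested once; the rule used here to pick the validation fold (the fold that cyclically follows the test fold) and the function names are assumptions made for illustration.

```python
def split_into_folds(items, n_folds=10):
    """Partition items into n_folds folds of (nearly) equal size."""
    return [items[i::n_folds] for i in range(n_folds)]


def fold_assignments(n_folds=10):
    """Yield (train_folds, validation_fold, test_fold) for each round.

    In round r, fold r is the test fold. The validation fold is assumed to
    be the next fold in cyclic order; the remaining folds are for training.
    """
    for r in range(n_folds):
        test = r
        validation = (r + 1) % n_folds
        train = [f for f in range(n_folds) if f not in (test, validation)]
        yield train, validation, test


ROUNDS = list(fold_assignments())
```

The reported score for a cell-type TF pair would then be the mean of the ten per-round test scores.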

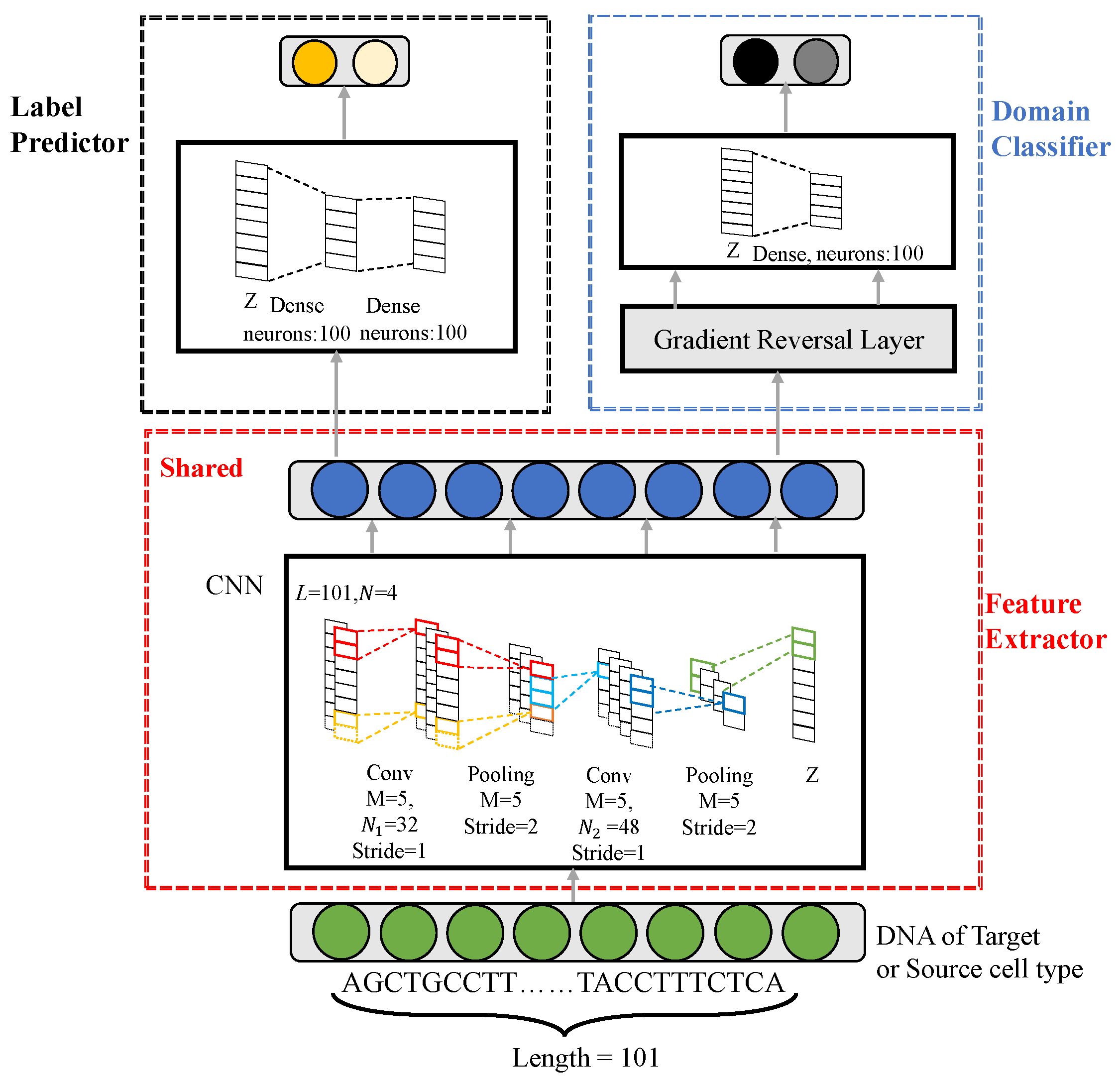

The inputs of the feature extractor (see Materials and Methods, Figure 6) are feature matrices with dimension of

. The feature extractor in DANN_TF uses two convolution layers with

and

kernels, respectively, and each is followed by a max pooling layer. The kernel size of the two convolution layers and the pooling size of the two pooling layers are

. The stride of the two convolution layers and the two pooling layers are 1 and 2, respectively. A dropout regularization layer with dropout probability of 0.7 is used to avoid overfitting. The label predictor has two fully connected layers of 100 neurons followed by a softmax classifier. The domain classifier has a fully connected layer with 100 neurons followed by a softmax classifier. The domain adaptation parameter

is set to 1. Our DANN_TF model was trained using the Momentum algorithm [23] with a batch size of 128 instances. The momentum and the learning rate are set to 0.9 and 0.001, respectively. DANN_TF was implemented using the tensorflow-gpu 1.0 library.
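For readers unfamiliar with the optimizer, the sketch below shows one common formulation of the momentum update with the momentum (0.9) and learning rate (0.001) stated above. It is a plain-Python illustration on a toy objective, not the training code of DANN_TF; the function name and the toy loss are assumptions.

```python
def momentum_step(params, grads, velocity, learning_rate=0.001, momentum=0.9):
    """Apply one momentum update and return (new_params, new_velocity).

    The velocity accumulates an exponentially decaying sum of past
    gradients, and the parameters move against the accumulated velocity.
    """
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [p - learning_rate * v for p, v in zip(params, new_velocity)]
    return new_params, new_velocity


# Toy example: minimise f(w) = w^2, whose gradient is 2w.
w, v = [1.0], [0.0]
for _ in range(3):
    w, v = momentum_step(w, [2.0 * w[0]], v)
```

In actual training, the gradients would be computed on mini-batches of 128 instances rather than on a closed-form toy loss.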

2.2. Evaluation Metrics

We evaluate our proposed DANN_TF and compare it with the baseline method using AUC. AUC [24] is defined as the area under the Receiver Operating Characteristic (ROC) curve. The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) at different thresholds on the prediction score. AUC measures how well the predictions agree with the known gold standard, and its value is equivalent to the probability that a randomly chosen positive instance is ranked higher than a randomly chosen negative instance. AUC values of 1.0 and 0.5 indicate perfect and random performance, respectively.
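The probabilistic interpretation of AUC can be checked directly by comparing every positive instance with every negative instance. The sketch below does this in quadratic time for clarity; the function name and the convention of counting ties as one half are choices made here, not details given in the text.

```python
def auc(scores, labels):
    """AUC as the probability that a positive outranks a negative.

    A tie between a positive and a negative score counts as one half.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

For example, with scores `[0.9, 0.4, 0.6, 0.1]` and labels `[1, 1, 0, 0]`, three of the four positive-negative pairs are ordered correctly, so the AUC is 0.75.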

A recent work [25] suggested that the F1 score is another useful evaluation metric for the TFBS prediction problem. It is defined as

$$F_1 = \frac{2\,TP}{2\,TP + FP + FN}$$

where $TP$ denotes the number of true positives, $FP$ denotes the number of false positives and $FN$ denotes the number of false negatives. Moreover, we used the p value, calculated by the Wilcoxon signed-rank test [26], to evaluate whether the performance differences in this paper are significant.
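As a small worked example, the F1 score is the harmonic mean of precision and recall, which in terms of true positives (TP), false positives (FP) and false negatives (FN) equals 2·TP / (2·TP + FP + FN). The helper names below and the value returned in the degenerate case are assumptions for illustration.

```python
def confusion_counts(predicted, actual):
    """Count true positives, false positives and false negatives (labels 0/1)."""
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    return tp, fp, fn


def f1_score(tp, fp, fn):
    """F1 = 2*TP / (2*TP + FP + FN)."""
    denominator = 2 * tp + fp + fn
    if denominator == 0:
        return 0.0  # convention chosen here when there are no positives at all
    return 2 * tp / denominator
```

With 8 true positives, 2 false positives and 2 false negatives, precision and recall are both 0.8, and so is the F1 score.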

2.3. The Impact of Negative Samples on Experiment

In general, there are two methods for generating negative sequences: the random method and the shuffle method. In the shuffle method, a non-TFBS is constructed for every TFBS by shuffling the dinucleotides in that TFBS so that the dinucleotide distribution is unchanged. In the random method, non-TFBSs are random genomic loci that are not annotated as TFBSs. To evaluate the impact of the two methods on the performance of DANN_TF, we evaluate cross-cell-type prediction by DANN_TF on JunD in the five cell types using negative sequences generated by each method. For JunD in each cell type, we first used the shuffle method and the random method to generate 15 sets of non-TFBSs each. All 30 sets contain an equal number of non-TFBSs. Next, we obtained 30 data sets by combining each of the 30 sets of non-TFBSs with all the TFBSs. Finally, we trained DANN_TF on the unlabeled training data of the target cell type together with the labeled data of source cell types, and trained the baseline method on the labeled training data of source cell types. The performance of DANN_TF trained with the different sets of non-TFBSs is listed in Supplementary Table S1. The results show that the performance with the shuffle method and with the random method is almost the same, which indicates that the choice between the two methods has little effect on the performance of DANN_TF.
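The random method can be illustrated with a simple rejection-sampling sketch: draw window starts uniformly and keep those that overlap no annotated TFBS. The coordinate convention (0-based starts on a single sequence), the retry limit, and the function name are assumptions; the text does not describe how the loci were actually drawn, and this sketch does not prevent two sampled negatives from overlapping each other.

```python
import random


def sample_random_negatives(genome_length, tfbs_starts, n, width=101, seed=0):
    """Sample n window starts (0-based) that overlap no annotated TFBS window.

    tfbs_starts holds the 0-based start of each annotated width-bp TFBS.
    """
    rng = random.Random(seed)
    negatives = []
    attempts = 0
    max_attempts = 1000 * n  # guard against an endless loop on dense annotations
    while len(negatives) < n and attempts < max_attempts:
        attempts += 1
        start = rng.randrange(0, genome_length - width + 1)
        # Two width-bp windows overlap when their starts differ by less than width.
        if all(abs(start - t) >= width for t in tfbs_starts):
            negatives.append(start)
    return negatives
```

Changing `seed` yields a different negative set, which is one way to produce several independent sets of non-TFBSs of equal size.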

2.4. Results of Data Augmentation by DANN_TF

To evaluate the performance of data augmentation by DANN_TF, our proposed DANN_TF and the baseline method are trained by the training data of the target cell type and all labeled data of source cell types. They are validated and tested by the validation data and the test data of the target cell type, respectively.

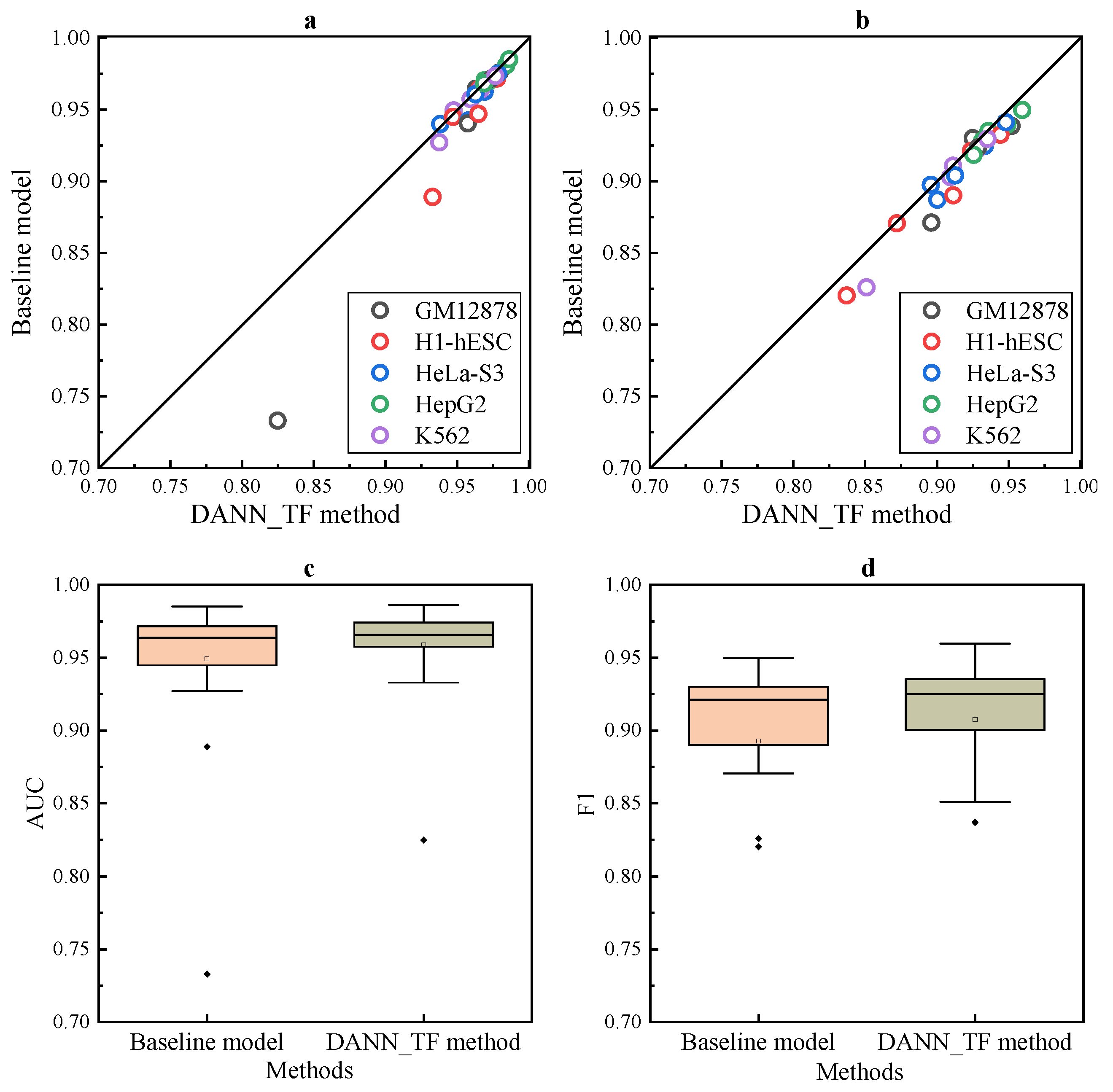

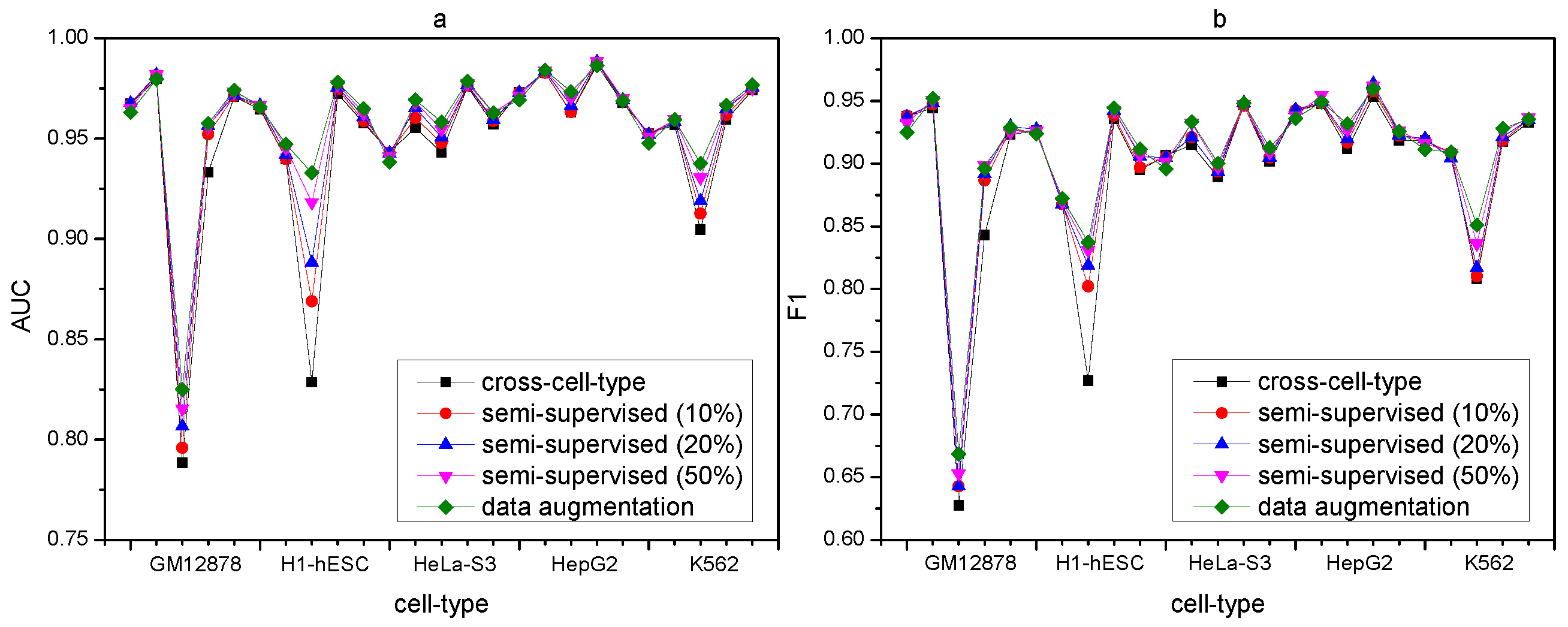

Results in Figure 1a,b show that DANN_TF achieves higher AUC and F1 score than the baseline method for most cell-type TF pairs, where a cell-type TF pair denotes a prediction task.

Results in Figure 1c,d show that the first quartile, the median and the third quartile of the AUC and F1 score of DANN_TF are higher than those of the baseline method. Details of the AUC and F1 score of DANN_TF and the baseline method on the 25 cell-type TF pairs are shown in Supplementary Table S2, where the best performers are marked in bold. The results show that DANN_TF achieves significantly higher AUC than the baseline method for 21 of the 25 cell-type TF pairs. The maximum and average improvements are 9.19% and 0.95%, respectively. DANN_TF also achieves significantly higher F1 score than the baseline method for 23 of the 25 cell-type TF pairs. The maximum and average improvements are 16.29% and 1.47%, respectively. DANN_TF achieves the maximum improvement when predicting TFBSs for JunD in GM12878. One possible reason is that JunD in GM12878 has less labeled training data than the other cell-type TF pairs, so DANN_TF can leverage the labeled data available in the source cell types to greatly improve the performance for GM12878.

Most noticeably, DANN_TF outperforms the baseline method by a larger margin for JunD in three cell types. The improvements in GM12878, H1-hESC and K562 are 9% in AUC, 4% in AUC, and 2.5% in F1 score, respectively. For REST, the improvement is more than 1.5% in AUC for GM12878 and more than 1% in F1 score for three cell types. For GABPA and USF2, although the improvements are smaller than those of the other three TFs, the improvements in F1 score for some cell types are also more than 1%. This is a strong indication that the Adversarial Network indeed plays an important role in learning common features among the target cell type and source cell types. As an exception, DANN_TF achieves lower AUC than the baseline method for CTCF in four cell types. One possible reason is that the labeled training data of CTCF in these four cell types are already sufficient, so augmenting them with labeled data from other cell types may introduce considerable noise.

2.5. Results of Semi-Supervised Prediction by DANN_TF

To evaluate the performance of semi-supervised prediction by DANN_TF, we suppose that only a portion of the training data of the target cell type is labeled and the remaining training data is unlabeled. To evaluate the influence of the amount of labeled training data in the target cell type on DANN_TF, three experiments are conducted, in which (1) 50%, (2) 20% and (3) 10% of the training data are labeled.

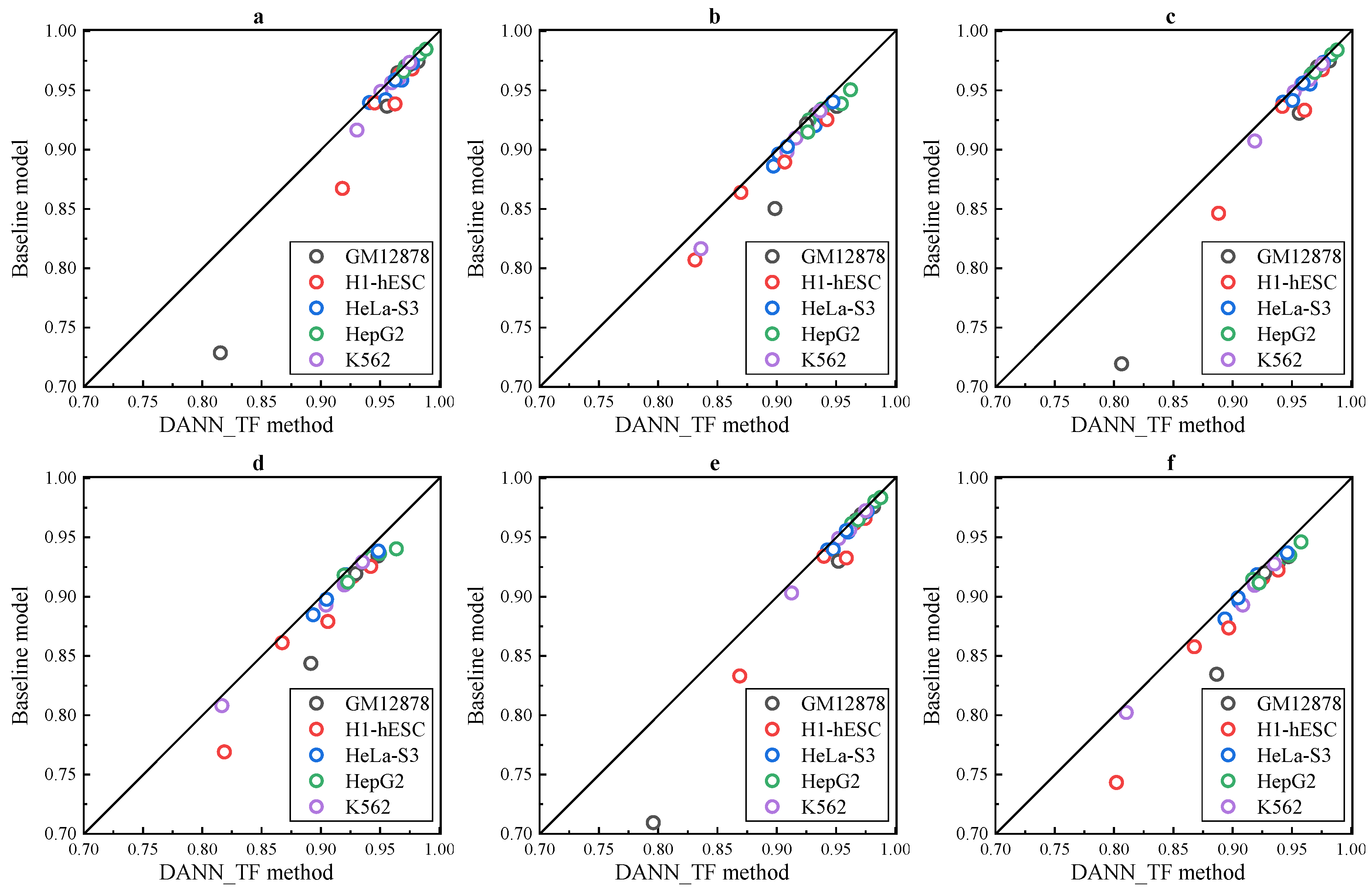

Results of the three experiments in Figure 2a–f show that DANN_TF performs better than the baseline method in both AUC and F1 score for all 25 cell-type TF pairs in all three experiments. Details of the AUC and F1 score of DANN_TF and the baseline method for each cell-type TF pair in the three experiments are listed in Supplementary Tables S3–S5, respectively. For the experiment with 50% labeled training data, the maximum and average improvements in AUC are 8.69% and 1.14%, respectively, and the maximum and average improvements in F1 score are 12.32% and 1.35%, respectively. The improvements in AUC on JunD in GM12878 and H1-hESC as well as USF2 in H1-hESC are 8.69%, 5.1% and 2.41%, respectively. For the experiment with 20% labeled training data, the maximum and average improvements in AUC are 8.71% and 1.5%, respectively, and the maximum and average improvements in F1 score are 10.46% and 1.75%, respectively. The improvements in AUC on JunD in GM12878 and H1-hESC are 8.71% and 4.19%, respectively, and those on REST in GM12878 and USF2 in H1-hESC are 2.56% and 2.76%, respectively. For the experiment with 10% labeled training data, the maximum and average improvements in AUC are 8.66% and 1.06%, respectively, and the maximum and average improvements in F1 score are 10.64% and 1.77%, respectively. The improvements in AUC on JunD in GM12878 and H1-hESC are 9.06% and 3.59%, respectively, and those on REST in GM12878 and USF2 in H1-hESC are 2.22% and 2.61%, respectively.

The analysis of the three experiments shows that DANN_TF maintains high performance when the amount of labeled training data is reduced. In contrast, the performance of the baseline method drops noticeably when the amount of labeled training data is reduced. This is a strong indication that the Adversarial Network can indeed help to learn common features among the target cell type and source cell types when the amount of labeled training data in the target cell type is limited.

2.6. Results of Cross-Cell-Type Prediction by DANN_TF

To evaluate the performance of cross-cell-type prediction by DANN_TF, we suppose that all the training data of the target cell type are unlabeled while the validation data and the test data are labeled. Thus, DANN_TF is trained on the unlabeled training data of the target cell type together with the labeled data of source cell types. As the baseline method cannot use unlabeled data, it is trained on the labeled data of source cell types only. Both are validated and tested on the validation data and the test data of the target cell type, respectively. As our goal is to predict TFBSs of TFs in the target cell type, and DANN_TF uses no labeled training data from the target cell type, DANN_TF is an unsupervised method with respect to the target cell type. For example, when we use DANN_TF to predict TFBSs for CTCF in GM12878, the training data of DANN_TF are the combination of the unlabeled training data of CTCF in GM12878 and the labeled data of CTCF in the other four cell types. As DANN_TF does not use any labeled training data of CTCF in GM12878, its predictions for CTCF in GM12878 are unsupervised predictions.

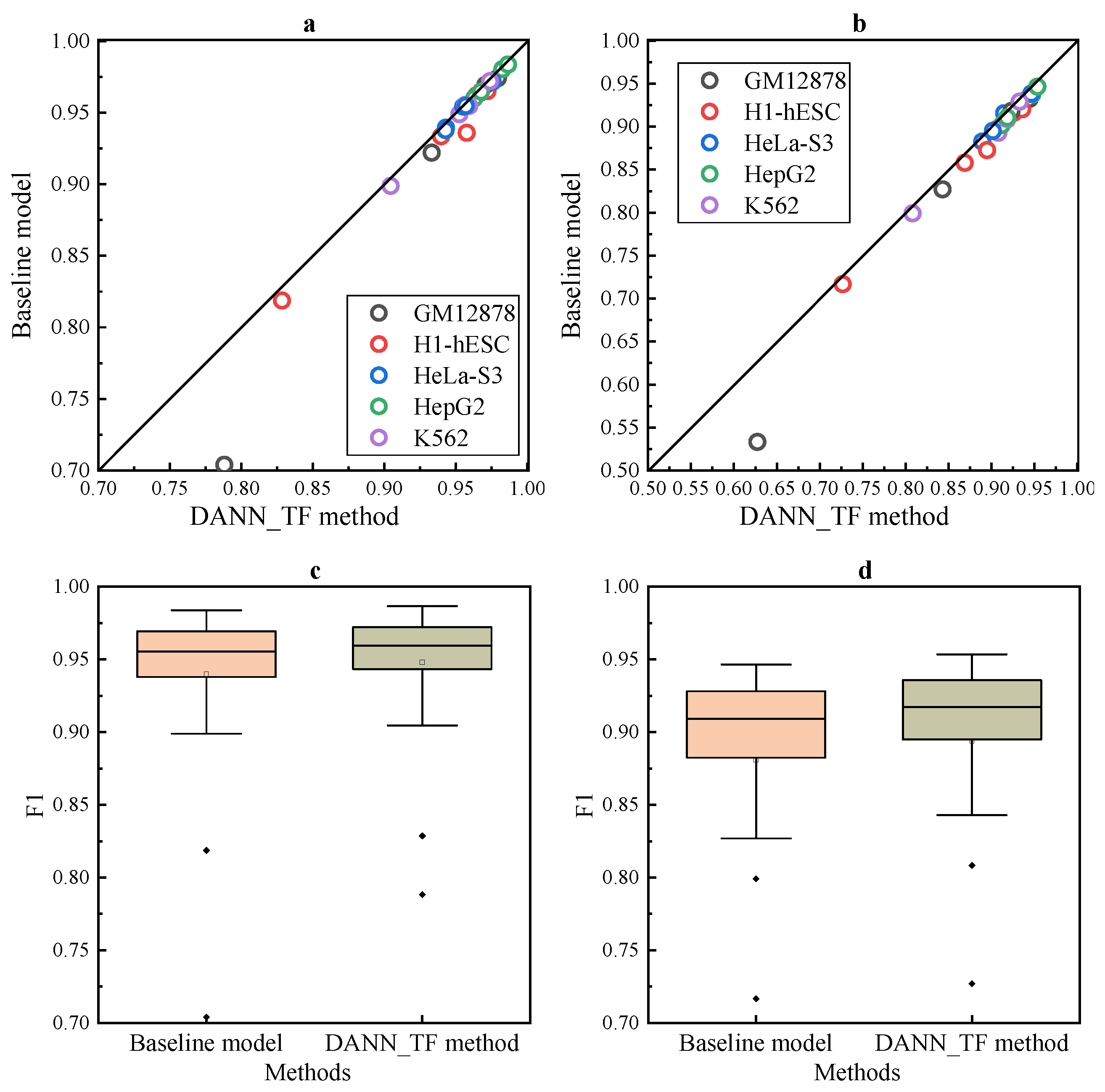

The comparison in Figure 3a,b shows that DANN_TF performs better than the baseline method for most cell-type TF pairs. Figure 3c,d show that the first quartile, the median and the third quartile of the performance of DANN_TF are higher than those of the baseline method. Details of the AUC and F1 score of DANN_TF and the baseline method for each cell-type TF pair are listed in Supplementary Table S6, where the best performers are marked in bold. The table shows that DANN_TF performs significantly better than the baseline method on all 25 cell-type TF pairs in both AUC and F1 score. More specifically, for JunD in GM12878 and H1-hESC, REST in GM12878, and USF2 in H1-hESC, the improvements in AUC are significant and exceed 1%. In terms of F1 score, DANN_TF performs significantly better than the baseline method for most cell-type TF pairs. These results indicate that DANN_TF can achieve better performance than the baseline method for cross-cell-type prediction.

2.7. Comparison between Cross-Cell-Type Prediction by DANN_TF and Supervised Prediction by the Baseline Method

To further evaluate the performance of cross-cell-type prediction by our proposed DANN_TF, we compare the performance of cross-cell-type prediction by DANN_TF to that of supervised prediction by the baseline method. Our proposed DANN_TF is trained by the combined use of unlabeled training data of the target cell type and labeled data of source cell types. The baseline method is trained by the training data of the target cell type, which are labeled.

The performance of cross-cell-type prediction and supervised prediction is listed in Table 1. The table shows that DANN_TF performs significantly better than the baseline method in AUC on 24 of the 25 cell-type TF pairs and in F1 score on 23 pairs. The average improvement is more than 5% in AUC and more than 8% in F1 score, which is a substantial improvement. More specifically, for JunD, REST, and GABPA, DANN_TF outperforms the baseline method by a larger margin for almost all five cell types. DANN_TF outperforms the baseline method by more than 17% in AUC for REST in GM12878 and by more than 9% in AUC for REST in H1-hESC. Meanwhile, for CTCF and USF2, the F1 score and AUC in some cell types are also improved by more than 1%. These comparisons show that cross-cell-type prediction by DANN_TF achieves better performance than supervised prediction by the baseline method.

2.8. Results for Cross-Cell-Type Prediction by DANN_TF on 13 TFs of the Five Cell Types

We further evaluate DANN_TF for cross-cell-type prediction on 13 additional TFs in the five cell types (see Materials and Methods, Table 6). We suppose that all the training data of the target cell type are unlabeled while the validation data and the test data are labeled. Thus, DANN_TF is trained on the unlabeled training data of the target cell type together with the labeled data of all the source cell types. As the baseline method cannot be trained on unlabeled data, it is trained only on the labeled data of the source cell types. We also compare cross-cell-type prediction by DANN_TF with supervised prediction by the baseline method, where the baseline method is trained on the labeled data of the target cell type.

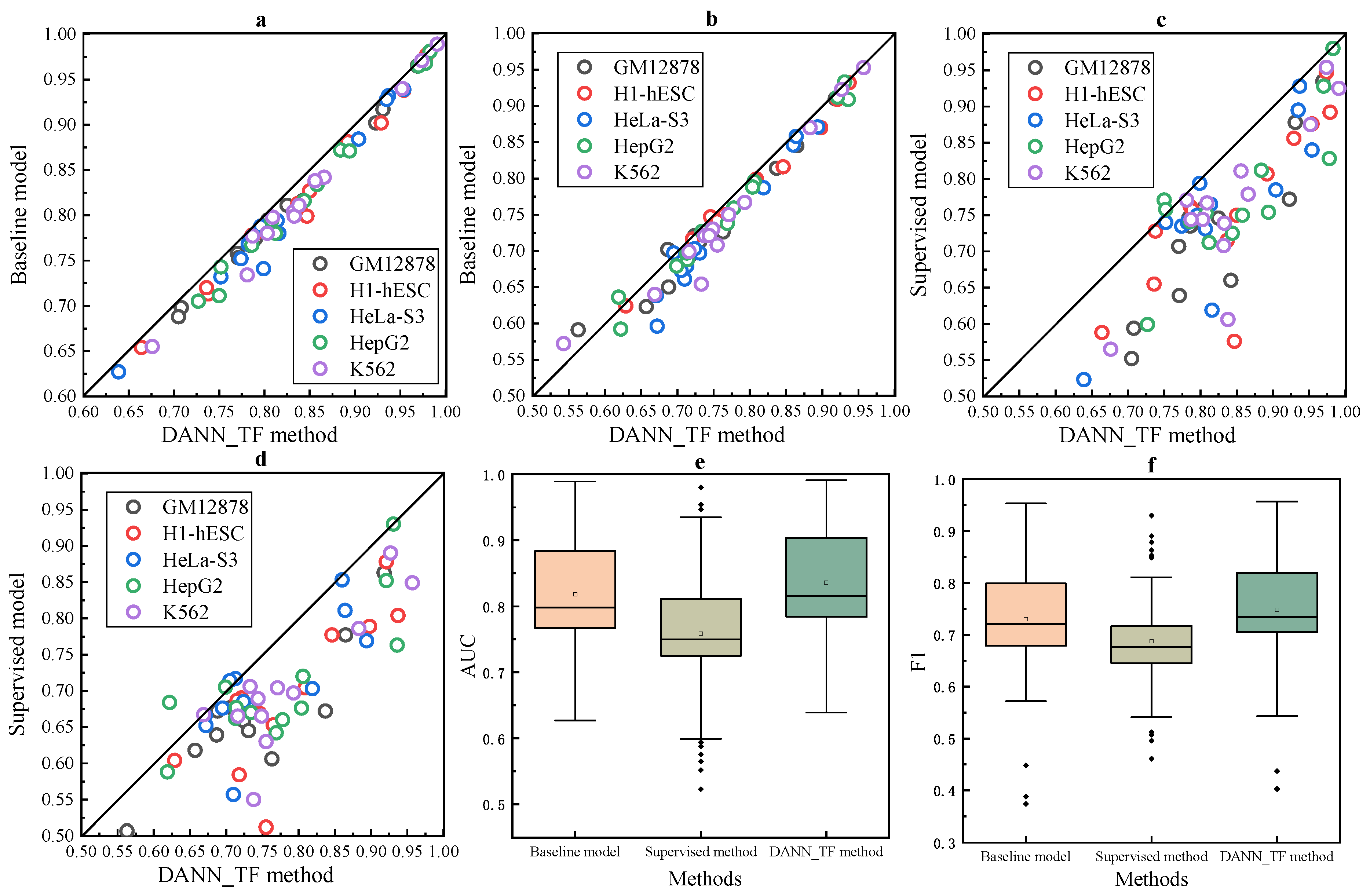

The comparison among cross-cell-type prediction by the baseline method, cross-cell-type prediction by DANN_TF, and supervised prediction by the baseline method is shown in Figure 4. Figure 4a–d show that DANN_TF achieves higher AUC and F1 score than the baseline method for cross-cell-type prediction, and performs better than supervised prediction for most cell-type TF pairs. Figure 4e,f show that the first quartile, the median and the third quartile of both AUC and F1 score are highest for DANN_TF. Details of the AUC and F1 score for these three models on the additional 13 TFs are listed in Supplementary Table S7. The AUCs and F1 scores of cross-cell-type prediction by DANN_TF and the baseline method and of supervised prediction by the baseline method for the 13 TFs in the five cell types are shown in Supplementary Figure S1.

Table 2 and Table 3 summarize the AUC gains of DANN_TF compared to the baseline method and supervised prediction, respectively. For the five cell types, DANN_TF outperforms the baseline method on at least 86.4% of the TFs in all the cell types. The maximum improvement and the average improvement are at least 2.6% and at least 1.5%, respectively. The micro averages of the maximum improvements and the average improvements are 4.4% and 1.8%, respectively. Moreover, DANN_TF outperforms supervised prediction on at least 86.4% of the TFs in all the cell types. The maximum improvement and the average improvement are at least 15.0% and at least 6.6%, respectively. The micro averages of the maximum improvements and the average improvements are 20.6% and 7.7%, respectively. The F1 score gains of DANN_TF compared to the baseline method and supervised prediction are listed in Supplementary Tables S8 and S9, respectively.

Of the 13 TFs, five do not have specific binding motifs in any of the three common databases (JASPAR [10], TRANSFAC [11] and Uniprobe [27]). As these TFs lack specific binding motifs that a CNN can learn from labeled data in other cell types, they may achieve lower improvements than the sequence-specific TFs. Results listed in Supplementary Table S7 show that DANN_TF achieves higher AUC than the baseline method and supervised prediction by 1.6% and 7.2% on average, respectively, for the TFs without specific binding motifs. For the sequence-specific TFs, DANN_TF achieves higher AUC than the baseline method and supervised prediction by 2.0% and 8.0% on average, respectively. Although the improvements for the TFs without specific binding motifs are lower than those for the sequence-specific TFs, they are still substantial. This indicates that DANN_TF can learn binding features from labeled data in other cell types even for TFs that do not have specific binding motifs.

2.9. Comparison between Data Augmentation, Semi-Supervised, and Cross-Cell-Type Prediction

We further compare the performance of cross-cell-type prediction by DANN_TF with that of data augmentation by the baseline method. The results show that for CTCF in all five cell types, GABPA in GM12878 and K562, JunD in GM12878 and HeLa-S3, REST in H1-hESC and HepG2, and USF2 in H1-hESC, cross-cell-type prediction by DANN_TF performs better than data augmentation by the baseline method. Most noticeably, cross-cell-type prediction by DANN_TF for JunD in GM12878 outperforms data augmentation by the baseline method by more than 12%. This indicates that DANN_TF trained on labeled data from source cell types can achieve better performance than the baseline method trained on labeled data from both the target cell type and source cell types.

We also compare the performance of data augmentation, semi-supervised prediction, and cross-cell-type prediction by DANN_TF, which is shown in Supplementary Tables S10 and S11 and Figure 5. In Figure 5, the horizontal axis denotes the 25 cell-type TF pairs. Figure 5a,b show the AUC comparison and the F1 score comparison, respectively.

The results show that the differences in both AUC and F1 score among data augmentation, semi-supervised prediction, and cross-cell-type prediction are very small for most cell-type TF pairs. This indicates that DANN_TF is applicable to TFs in cell types that have insufficient labeled data or no labeled data at all.

2.10. Comparison with the State-of-the-Art Methods

Several deep learning methods, including DeepSEA [20] and DanQ [21], have achieved state-of-the-art performance. Quang and Xie (2016) also proposed an alternative model of DanQ, called DanQ-JASPAR (DanQ-J), in which half of the CNN kernels are initialized with motifs from JASPAR [28]. We also consider an alternative model of DeepSEA, referred to as DeepSEA-JASPAR (DeepSEA-J), which uses the same kernel initialization method as DanQ-JASPAR. We downloaded the Torch implementation of DeepSEA [20] from the software’s webpage (http://DeepSEA.princeton.edu/) and the Keras implementation of DanQ and DanQ-JASPAR [21] from the software’s webpage (http://github.com/uci-cbcl/DanQ). In this section, we compare DANN_TF with DeepSEA, DeepSEA-JASPAR, DanQ, and DanQ-JASPAR on the 25 cell-type TF pairs by ten-fold cross-validation. All four of these methods are multi-task learning methods. As the comparison with DANN_TF is conducted on the 25 cell-type TF pairs, each of them is trained with 25 TFBS prediction tasks.

The performance of the four state-of-the-art methods and DANN_TF is listed in Table 4, where the best performers and the second-best performers are marked in bold and underlined, respectively. Please note that DANN_TF is trained on the unlabeled training data of the target cell type and the labeled data of source cell types, while the four state-of-the-art methods are trained on the labeled training data of the target cell type and the labeled data of source cell types using their default setup and parameters. The table shows that DANN_TF performs better than the four state-of-the-art methods on 24 of the 25 cell-type TF pairs. The maximum and minimum improvements in AUC are 29.7% and 1.7%, respectively. The average improvement in AUC is 14.8%, which is a substantial improvement. This shows that DANN_TF performs better than the four state-of-the-art methods.

To evaluate the efficiency of DANN_TF, we compare its training time per epoch with that of the baseline method and the four state-of-the-art methods, taking JunD in the five cell types as an example. All methods are trained on an NVIDIA GeForce RTX 2080Ti. The results show that DANN_TF takes 45 s, 42 s, 44 s, 42 s, and 45 s for GM12878, H1-hESC, HeLa-S3, HepG2, and K562, respectively, while the baseline method takes 33 s, 34 s, 32 s, 28 s, and 29 s, respectively. The training times of DanQ, DanQ-JASPAR, DeepSEA, and DeepSEA-JASPAR are 401 s, 355 s, 96 s, and 85 s, respectively. As each of these methods makes predictions for all five cell types with a single model, their average training times per cell type are 80 s, 71 s, 19 s, and 17 s, respectively. In summary, DANN_TF takes less time than DanQ and DanQ-JASPAR but more time than the baseline method, DeepSEA, and DeepSEA-JASPAR.
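The per-cell-type averages above follow from dividing each multi-task model's epoch time by the five cell types it covers. The short calculation below reproduces them from the reported numbers and also computes the mean epoch time of DANN_TF and the baseline; the variable names are illustrative.

```python
# Reported per-epoch training times in seconds (JunD, five cell types).
DANN_TF_SECONDS = [45, 42, 44, 42, 45]
BASELINE_SECONDS = [33, 34, 32, 28, 29]

# Multi-task models train once for all five cell types.
MULTI_TASK_TOTAL = {
    "DanQ": 401,
    "DanQ-JASPAR": 355,
    "DeepSEA": 96,
    "DeepSEA-JASPAR": 85,
}
N_CELL_TYPES = 5


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


PER_CELL_TYPE = {name: total / N_CELL_TYPES
                 for name, total in MULTI_TASK_TOTAL.items()}
DANN_TF_MEAN = mean(DANN_TF_SECONDS)    # 43.6 s
BASELINE_MEAN = mean(BASELINE_SECONDS)  # 31.2 s
```

On these numbers, DANN_TF averages 43.6 s per epoch, between the per-cell-type costs of the DeepSEA variants (about 17 to 19 s) and those of the DanQ variants (about 71 to 80 s).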

4. Materials and Methods

As shown by many recently published works [29,30,31,32], a complete prediction model in bioinformatics should contain the following five components: validation benchmark dataset(s), an effective feature extraction procedure, an efficient prediction algorithm, a set of fair evaluation criteria, and comparisons with state-of-the-art methods. The latter two components have been introduced in Experiments and Results. In this section, we first define TFBSs for our prediction task and then describe the first three components of DANN_TF in sequence.

4.1. TF-Binding Site (TFBS)

Recently, most studies have used ChIP-seq to obtain TFBSs and non-TFBSs. ChIP-seq first provides a signal for each fragment in a genome. Then a peak calling method is applied to identify peaks in the genome according to these signals. Following Alipanahi et al. [19] and Zeng et al. [33], the TFBS at each peak is defined as a 101 bp sequence centered on the midpoint of the peak. For example, consider a genome $G$ of length $L$,

$$G = g_1 g_2 \cdots g_L,$$

where $g_1$ represents the first nucleotide, $g_2$ represents the second nucleotide, and so forth. For a peak with its midpoint at position $i$ in $G$, its TFBS can be represented as

$$g_{i-50}\, g_{i-49} \cdots g_i \cdots g_{i+49}\, g_{i+50}.$$

Apart from TFBSs, all other nonoverlapping 101-bp DNA fragments in the genome are defined as non-TFBSs.
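The window definition can be written as a short helper that takes a 1-based peak midpoint and returns the 101 bp centered on it (50 bp on each side). The function name and the choice to return `None` for peaks too close to a sequence end are assumptions for illustration.

```python
def tfbs_window(genome, midpoint, flank=50):
    """Return the (2*flank + 1)-bp sequence centred on a 1-based midpoint.

    Returns None when the window would run past either end of the genome.
    """
    start = midpoint - flank      # 1-based, inclusive
    end = midpoint + flank        # 1-based, inclusive
    if start < 1 or end > len(genome):
        return None
    return genome[start - 1:end]  # convert to 0-based, end-exclusive slicing
```

For a midpoint at position 200, the helper returns positions 150 to 250, which is 101 nucleotides with the midpoint in the middle.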

4.2. Datasets

Two data sets are used to evaluate the performance of our proposed DANN_TF. The first one consists of five TFs and the other one contains 13 TFs.

In the first data set, five different cell types are selected: (1) GM12878, a human lymphocyte cell line, (2) H1-hESC, human embryonic stem cells, (3) HeLa-S3, human cervical cancer cells, (4) HepG2, which is derived from the liver tissue of a 15-year-old Caucasian, and (5) K562, the first human myeloid leukemia cell line to be established in culture. The five TFs in this data set are (1) CTCF, (2) JunD, (3) GABPA, (4) REST, and (5) USF2. Peaks of these five TFs in the five cell types can be downloaded freely from ENCODE [34]. Peaks are provided in one of two formats: narrow peak and broad peak. Some TFs have well-defined binding sites and can be modeled by narrow peaks, while the binding sites of other TFs are less well localized and are better modeled by broad peaks. Thus, the narrow peak format is used if available; otherwise, the broad peak format is used. The number of TFBSs for the five TFs in the five cell types is listed in Table 5. In the second data set, a total of 13 TFs are used to evaluate the generalization ability of DANN_TF. These 13 TFs have available TFBSs in all five cell types. The TFBSs of these TFs in the five cell types were identified by TF ChIP-seq experiments, and their peaks can be downloaded from ENCODE. Among the 13 TFs, eight are sequence-specific and have specific binding motifs [10,11,27]. The remaining five TFs (CHD2, EZH2, NRSF, RAD21 and TAF1) do not have specific binding motifs in any of the three common databases (JASPAR [10], TRANSFAC [11] and Uniprobe [27]). The number of TFBSs for the 13 TFs in the five cell types is listed in Table 6.

In contrast to TFBSs, non-TFBSs of a TF are defined as DNA regions that cannot be bound by the TF. Previous work [16] has used a shuffle method to construct non-TFBSs. In the shuffle method, a non-TFBS is constructed for every TFBS by shuffling the dinucleotides in that TFBS so that the dinucleotide distribution is unchanged. In this way, we construct the same number of non-TFBSs as TFBSs for each TF. The TFBSs and non-TFBSs for all these cell-type TF pairs are freely available at our webserver.

4.3. Feature Representation

One-hot encoding is a commonly used embedding method [35,36]. This method represents each word as a feature vector whose dimension equals the vocabulary size. In each vector, only one element is 1, which indicates the current word, and all the remaining elements are 0. DNA is composed of four nucleotide types (A, G, C, T), so the one-hot vectors of the four nucleotide types have dimension four. They can be encoded as follows

$$\mathrm{A} = (1, 0, 0, 0), \quad \mathrm{G} = (0, 1, 0, 0), \quad \mathrm{C} = (0, 0, 1, 0), \quad \mathrm{T} = (0, 0, 0, 1)$$

As both TFBSs and non-TFBSs are composed of 101 nucleotides, they can be encoded by matrices of dimension $4 \times 101$. Thus, for a TFBS $s$, denoted by $s = s_1 s_2 \cdots s_{101}$, its encoded matrix can be denoted as

$$X = [e(s_1), e(s_2), \ldots, e(s_{101})]$$

where $e(s_i)$ is the one-hot vector of nucleotide $s_i$.
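A minimal sketch of this encoding is given below. The row order A, G, C, T is an assumption taken from the order in which the nucleotides are listed in the text, and the handling of ambiguous bases (left as all-zero columns) is likewise an assumption. The function name `one_hot_encode` is hypothetical.

```python
NUCLEOTIDES = "AGCT"  # assumed row order, following the order listed in the text


def one_hot_encode(seq):
    """Encode a DNA sequence as a 4 x len(seq) one-hot matrix (list of rows)."""
    seq = seq.upper()
    matrix = [[0] * len(seq) for _ in NUCLEOTIDES]
    for pos, base in enumerate(seq):
        row = NUCLEOTIDES.find(base)
        if row >= 0:  # assumption: unknown bases such as N stay all-zero
            matrix[row][pos] = 1
    return matrix
```

For a 101-nucleotide sequence, the result has 4 rows and 101 columns, and every column of a sequence without ambiguous bases contains exactly one 1.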

4.4. Transfer Learning

Before introducing our proposed method, we first introduce several related concepts of transfer learning.

Transfer learning [37] is a common problem in machine learning. It aims to address one problem by using knowledge learned from a different but related problem. The mathematical definition of transfer learning is formulated as follows: given a source domain $D_S$ and its learning task $T_S$, as well as a target domain $D_T$ and its learning task $T_T$, transfer learning aims to learn the task $T_T$ of $D_T$ by using the knowledge in $D_S$ and $T_S$, where $D_S \neq D_T$ or $T_S \neq T_T$. In TFBS prediction for a TF in a target cell type, the target cell type is the target domain, while the other cell types, called source cell types, form the source domain. Thus, transfer learning aims to address the lack of labeled data in the target cell type by using labeled data available in source cell types. In our study, we use an Adversarial Network to address the transfer learning problem. The Adversarial Network was proposed by Goodfellow et al. [

38] and has achieved great success in deep learning. This model contains two components: a discriminator and a generator. In our setting, the discriminator is used to distinguish the target cell type from source cell types, while the generator aims to extract features common to the target cell type and source cell types. As an Adversarial Network can help to learn common features for multiple cell types, it is suitable for addressing transfer learning problems in TFBS prediction.

4.5. DANN_TF

In this work, we propose a novel prediction method, referred to as Domain-Adversarial Neural Networks for TF-binding site prediction (DANN_TF), for TFs in cell types that lack labeled data. DANN_TF combines a CNN and an Adversarial Network to learn features common to the target cell type and source cell types. The learned common features are then used to predict TFBSs for TFs in the target cell type. The framework in

Figure 6 shows that our proposed DANN_TF contains three components: a feature extractor, a label predictor, and a domain classifier. The green circles denote an input sequence, the blue circles denote features learned by the feature extractor, the dark yellow circle and the pale yellow circle denote TFBS and non-TFBS, respectively, and the dark black circle and the pale black circle denote the target cell type and source cell types, respectively. The input to DANN_TF is a sample from the target cell type or the source cell types. For example, when we use DANN_TF to predict TFBSs of CTCF in GM12878, GM12878 is the target cell type while the other four cell types are the source cell types. The input to DANN_TF is then a TFBS of CTCF in GM12878 or in the other four cell types. The output contains two components: the Label Predictor classifies the input sequence as a TFBS or a non-TFBS, while the Domain Classifier predicts whether the input sequence belongs to the target cell type or the source cell types.

The feature extractor is used to learn common features for the target cell type and source cell types. It consists of two convolution layers, each followed by a max pooling layer. The label predictor outputs prediction results based on features learned by the feature extractor. It consists of two fully connected layers followed by a softmax classifier. The domain classifier distinguishes whether an input sequence belongs to the target cell type or source cell types based on features learned by the feature extractor. It consists of a Gradient Reversal Layer (GRL) followed by a fully connected layer and a softmax classifier. The feature extractor acts as a generator while the domain classifier acts as a discriminator, so they constitute an Adversarial Network. DANN_TF provides a real-valued score for a given DNA sequence $x$ as follows

$$f(x) = G_y(G_f(x))$$

where $G_f$ and $G_y$ denote the feature extractor and the label predictor in DANN_TF, respectively. The domain classifier $G_d$ influences the real-valued score indirectly: during training, it constitutes an Adversarial Network with the feature extractor $G_f$. Details of these three modules are given in the following sections.

4.5.1. Feature Extractor

The feature extractor acts as the generator in DANN_TF. It alternates two convolution layers with two pooling layers to learn sequence features at different spatial scales.

For the convolution layers, the input is a matrix $X$ of dimension $N \times L$, where $N$ is the dimension of each element and $L$ is the length of input sequences. The output of the convolution layers is

$$Y_{i,k} = \mathrm{ReLU}\left(\sum_{m=0}^{M-1} \sum_{n=0}^{N-1} W^{k}_{m,n}\, X_{n,\,i+m}\right)$$

where $i$ is the index of an output position and $k$ is the index of a kernel. Each convolution kernel $W^{k}$ is an $M \times N$ weight matrix with $M$ being the window size and $N$ being the number of input channels (for the first convolution layer $N$ equals 4; for higher-level convolution layers $N$ equals the number of kernels in the previous convolution layer). ReLU represents the rectified linear function, $\mathrm{ReLU}(x) = \max(0, x)$. The pooling layers are fed with matrices $Y$ output by the convolution layers and output vectors $Z$ with dimension of $d$. An element $Z_{i,k}$ of vector $Z$ is computed as

$$Z_{i,k} = \max\left(Y_{iM,\,k},\; Y_{iM+1,\,k},\; \ldots,\; Y_{iM+M-1,\,k}\right)$$

where $Y$ is the input, $i$ is the index for an output position, $k$ is the index of a kernel and $M$ is the pooling window size.

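In summary, the output of the feature extractor is

$$Z = G_f(X) = f_{pool}\left(f_{conv}\left(f_{pool}\left(f_{conv}(X)\right)\right)\right)$$

where $f_{conv}$ and $f_{pool}$ denote a convolution layer and a pooling layer, respectively.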
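The convolution and pooling steps described above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the implementation used in this work: bias terms are omitted, the stride is 1, no padding is applied, and the function names `conv1d_relu` and `max_pool` are hypothetical.

```python
def conv1d_relu(X, kernels):
    """Convolve an N x L input with K kernels (each M x N) and apply ReLU.

    Returns Y as a list of L - M + 1 positions, each holding K values.
    """
    N, L = len(X), len(X[0])
    M = len(kernels[0])
    Y = []
    for i in range(L - M + 1):
        row = []
        for W in kernels:
            # Weighted sum over the window of M positions and N channels.
            total = sum(W[m][n] * X[n][i + m] for m in range(M) for n in range(N))
            row.append(max(0.0, total))  # ReLU
        Y.append(row)
    return Y


def max_pool(Y, M):
    """Non-overlapping max pooling with window size M along the position axis."""
    K = len(Y[0])
    return [
        [max(Y[i * M + j][k] for j in range(M)) for k in range(K)]
        for i in range(len(Y) // M)
    ]
```

To stack a second convolution layer on the pooled output, the pooled matrix would first be transposed so that kernels index the channel axis, since the $K$ outputs of one layer become the $N$ input channels of the next.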

4.5.2. Label Predictor

The label predictor aims to predict whether input DNA sequences are TFBSs or not based on features learned by the feature extractor. This predictor consists of two fully connected layers followed by a softmax classifier. The label predictor is fed feature vectors $Z$ and computes

$$G_y(Z) = \mathrm{softmax}\left(f_{fc}\left(f_{fc}(Z)\right)\right)$$

where $f_{fc}$ denotes a fully connected layer and $\mathrm{softmax}$ denotes the softmax classifier. The softmax classifier contains two output units denoting TFBS and non-TFBS, respectively. To avoid overfitting, a dropout layer is added before the first fully connected layer. With dropout, each entry is randomly set to 0 with a probability equal to the dropout rate.

4.5.3. Domain Classifier

The domain classifier aims to classify DNA sequences as belonging to the target cell type or the source cell types. This classifier consists of a GRL followed by a fully connected layer and a softmax classifier. In forward propagation, the GRL acts as an identity transformation. In back propagation, the GRL changes the sign of the gradient by multiplying it by $-\lambda$. $\lambda$ can be computed by the following formula

$$\lambda = \frac{2}{1 + \exp(-10\,p)} - 1$$

where $p$ is a hyperparameter with value from 0 to 1. This strategy allows the domain classifier to be less sensitive to noise at the early stages of the training procedure. The domain classifier takes feature vectors $Z$ as input and outputs

$$G_d(Z) = \mathrm{softmax}\left(f_{fc}(Z)\right)$$

where $f_{fc}$ denotes the fully connected layer. The softmax classifier contains two output units to denote the target cell type and source cell types, respectively.
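The behavior of the GRL can be sketched as a pair of functions, one for each direction of propagation. The schedule below follows the form used in the original domain-adversarial training work of Ganin et al., where the steepness constant is 10; treating that constant as the one used here is an assumption, as are the function names.

```python
import math


def grl_lambda(p, gamma=10.0):
    """Schedule for lambda; gamma = 10 is an assumed steepness constant."""
    return 2.0 / (1.0 + math.exp(-gamma * p)) - 1.0


def grl_forward(z):
    """Forward pass: the GRL leaves the features unchanged."""
    return list(z)


def grl_backward(grad, lam):
    """Backward pass: the incoming gradient is multiplied by -lambda."""
    return [-lam * g for g in grad]
```

With this schedule, $\lambda$ is 0 at $p = 0$ and approaches 1 as $p$ approaches 1, so the reversed gradient from the domain classifier has little effect on the feature extractor early in training.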

4.5.4. Objective Function

We use cross entropy as our loss function. Formally, we use $G_f(\cdot;\theta_f)$, $G_y(\cdot;\theta_y)$ and $G_d(\cdot;\theta_d)$ to denote the feature extractor, the label predictor, and the domain classifier, respectively, where $\theta_f$, $\theta_y$ and $\theta_d$ are their parameters. The prediction loss and the domain loss can be expressed as follows

$$\mathcal{L}_y(\theta_f, \theta_y) = \mathrm{CE}\big(G_y(G_f(x;\theta_f);\theta_y),\; L\big)$$

$$\mathcal{L}_d(\theta_f, \theta_d) = -\lambda\,\mathrm{CE}\big(G_d(G_f(x;\theta_f);\theta_d),\; D\big)$$

where $\mathrm{CE}$ denotes the cross-entropy loss, $x$ denotes an input DNA sequence, $L$ and $D$ denote the true label and the true domain of the input DNA sequence $x$, respectively, and $\lambda$ is calculated by Formula (10). The domain loss is multiplied by a negative value because decreasing the performance of the domain classifier drives DANN_TF to learn features shared by the target cell type and source cell types. The learned features can then be used to make predictions for TFs in the target cell type.

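The total loss $\mathcal{L}$ consists of two parts: the prediction loss and the domain loss. Thus, the total loss function $\mathcal{L}$ can be formulated as

$$\mathcal{L}(\theta_f, \theta_y, \theta_d) = \mathcal{L}_y(\theta_f, \theta_y) + \mathcal{L}_d(\theta_f, \theta_d)$$

where $\lambda$, which scales the domain loss, is the domain adaptation parameter.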
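A numeric sketch of how the two losses might be combined for a single sample is given below. The exact form of the combination is an assumption based on the description above (a prediction cross-entropy plus a domain cross-entropy scaled by a negative factor), and the names `cross_entropy` and `total_loss` as well as the parameter `lam` are hypothetical.

```python
import math


def cross_entropy(probs, true_index):
    """Cross-entropy of one sample given predicted class probabilities."""
    return -math.log(probs[true_index])


def total_loss(label_probs, label, domain_probs, domain, lam):
    """Prediction loss plus the negatively scaled domain loss (assumed form)."""
    prediction_loss = cross_entropy(label_probs, label)
    domain_loss = -lam * cross_entropy(domain_probs, domain)
    return prediction_loss + domain_loss
```

In gradient-based training, this sign flip is usually realized by the GRL rather than by a single scalar objective: the domain classifier's own parameters are still updated to reduce the domain cross-entropy, while the feature extractor receives the reversed gradient.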