Pairwise Performance Comparison of Docking Scoring Functions: Computational Approach Using InterCriteria Analysis

Abstract

1. Introduction

2. Results and Discussion

- (i) the best docking score (a lower score suggests better protein–ligand binding), hereinafter referred to as BestDS;

- (ii) the lowest root mean square deviation (RMSD) between the predicted poses and the ligand in the co-crystallized complex, hereinafter referred to as BestRMSD;

- (iii) the RMSD between the pose with the best docking score and the ligand in the co-crystallized complex, hereinafter referred to as RMSD_BestDS;

- (iv) the docking score of the pose with the lowest RMSD to the ligand in the co-crystallized complex, hereinafter referred to as DS_BestRMSD.
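The four docking outputs listed above can be illustrated with a minimal Python sketch. It assumes that each pose of a complex is available as a (docking score, RMSD) pair for one scoring function; the `Pose` class, the `docking_outputs` function, and the example values are hypothetical and are not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    score: float  # docking score; a lower score suggests better binding
    rmsd: float   # RMSD to the ligand in the co-crystallized complex


def docking_outputs(poses):
    """Return the four docking outputs for one complex and one scoring function."""
    if not poses:
        raise ValueError("at least one pose is required")
    best_scored = min(poses, key=lambda p: p.score)  # pose with the best (lowest) score
    closest = min(poses, key=lambda p: p.rmsd)       # pose closest to the crystal ligand
    return {
        "BestDS": best_scored.score,
        "BestRMSD": closest.rmsd,
        "RMSD_BestDS": best_scored.rmsd,
        "DS_BestRMSD": closest.score,
    }


# Hypothetical poses, for illustration only.
poses = [Pose(-7.2, 3.1), Pose(-6.8, 0.9), Pose(-5.5, 1.7)]
outputs = docking_outputs(poses)
```

In this toy case the best-scored pose is not the one closest to the crystal ligand, so BestDS and DS_BestRMSD differ, as do BestRMSD and RMSD_BestDS.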

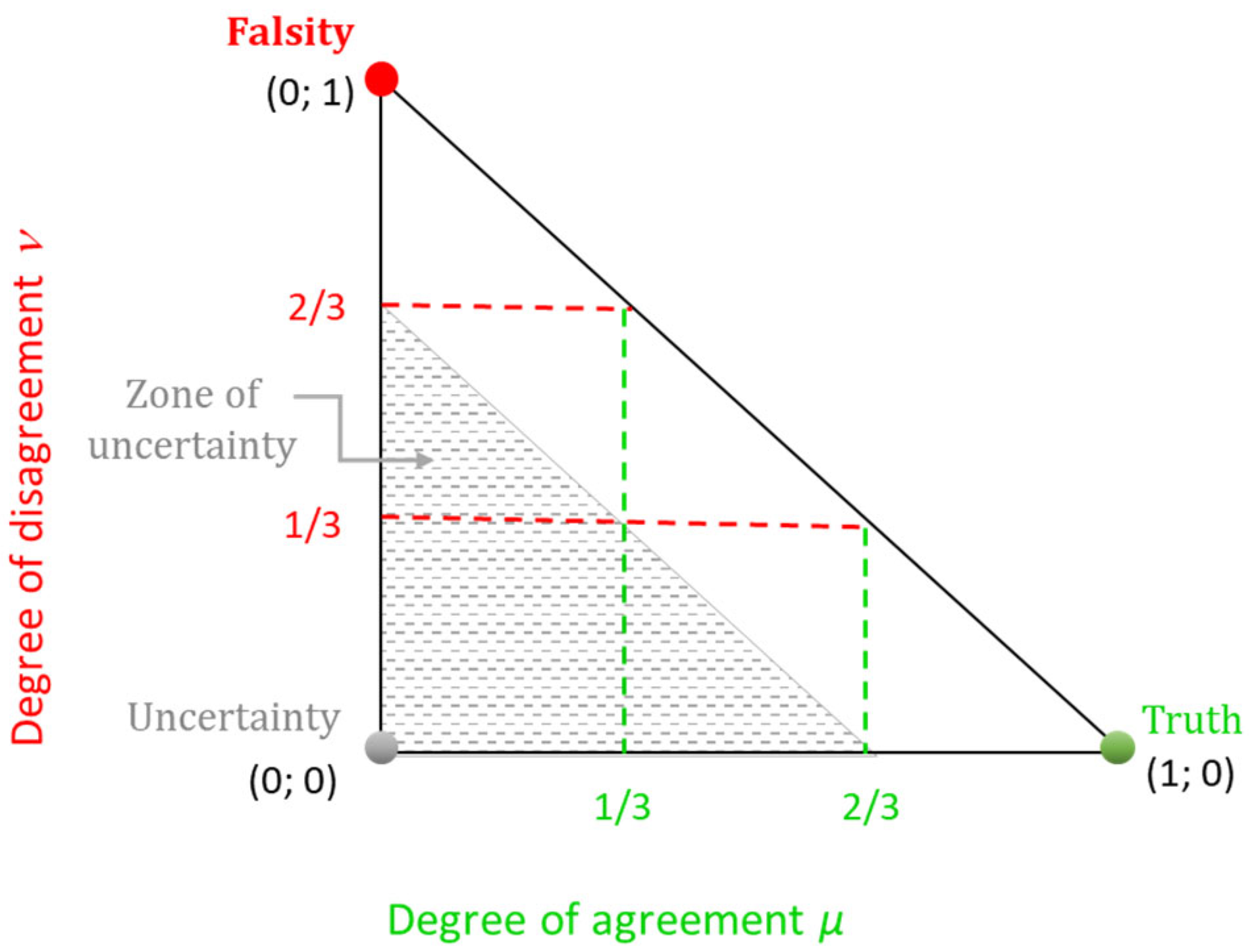

- positive consonance, whenever μ ≥ 2/3 and ν < 1/3;

- negative consonance, whenever μ < 1/3 and ν ≥ 2/3;

- dissonance, whenever 0 ≤ μ < 2/3, 0 ≤ ν < 2/3, and 2/3 ≤ μ + ν ≤ 1;

- uncertainty, whenever 0 ≤ μ < 2/3, 0 ≤ ν < 2/3, and 0 ≤ μ + ν < 2/3.
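The scale above can be read as a simple decision procedure over the degree of agreement μ and the degree of disagreement ν. The following Python sketch is one possible reading; the function name `classify_pair` and the example values are hypothetical, and the handling of the exact boundary points is an assumption of the sketch.

```python
def classify_pair(mu, nu):
    """Classify a pair of criteria from its degrees of agreement and disagreement."""
    if not (0 <= mu <= 1 and 0 <= nu <= 1 and mu + nu <= 1 + 1e-12):
        raise ValueError("expected 0 <= mu, nu <= 1 and mu + nu <= 1")
    high, low = 2 / 3, 1 / 3
    if mu >= high and nu < low:
        return "positive consonance"
    if mu < low and nu >= high:
        return "negative consonance"
    if mu < high and nu < high:
        return "dissonance" if mu + nu >= high else "uncertainty"
    # Boundary points such as (2/3, 1/3) match none of the four cases as stated;
    # treating them as dissonance is an assumption of this sketch.
    return "dissonance"


# Hypothetical values, for illustration only.
labels = [
    classify_pair(0.72, 0.20),
    classify_pair(0.10, 0.80),
    classify_pair(0.45, 0.40),
    classify_pair(0.30, 0.25),
]
```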

- (1)

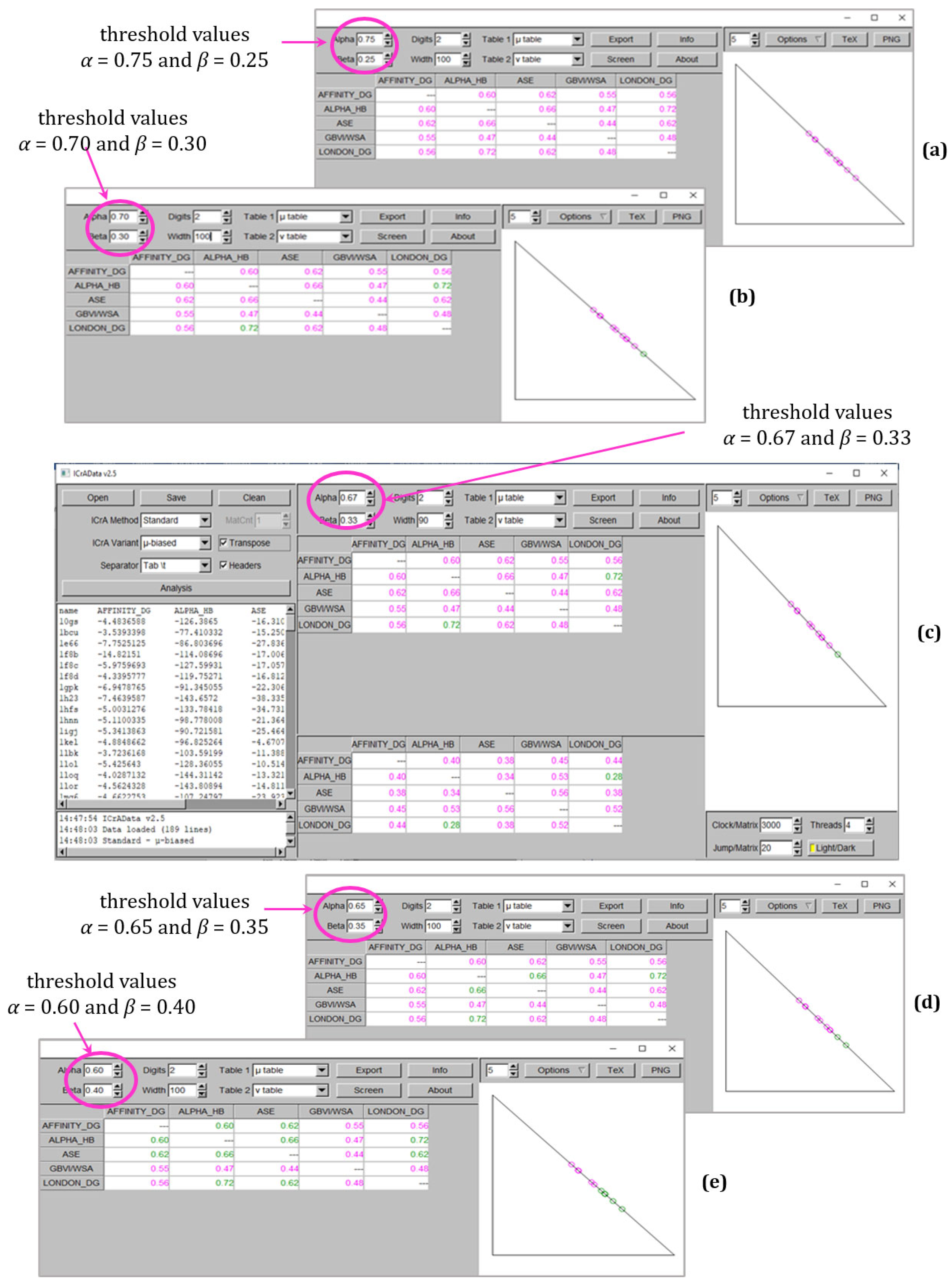

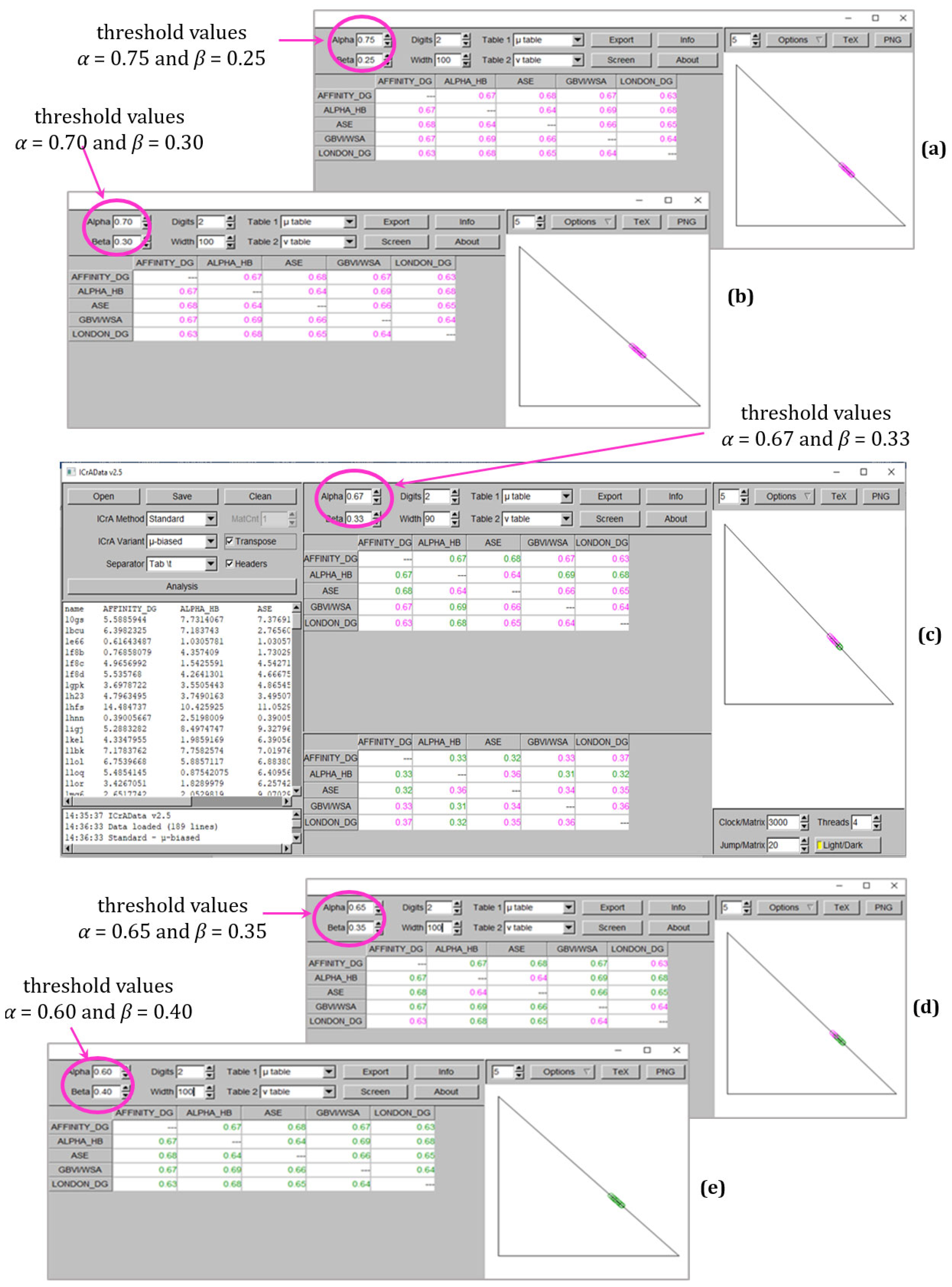

- an absence of any kind of agreement in all explored values of α and β with the experimental data (except one, between GBVI/WSA dG and (−logKd) or (−logKi) at α = 0.60 and β = 0.40, rows highlighted in grey in Table 3). This result is in accordance with our previous studies [19]. The lack of any agreement might also be explained by the fact that, even when implementing a variety of scoring terms and becoming more sophisticated, the scoring functions are still a computational approximation mostly aimed at assisting in the prediction of ligand binding poses. This is confirmed by the results of the BestRMSD docking output (Table 2).

- (2) a positive consonance between two scoring functions, Alpha HB and London dG: in particular, at the 0.67/0.33 threshold values they are comparable in all four docking outputs (the row in bold in Table 3). This result suggests that these scoring functions might be used interchangeably. At the same time, some pairs show low comparability (Affinity dG–London dG and GBVI/WSA dG–London dG), suggesting that they can complement each other in consensus docking studies.

3. Materials and Methods

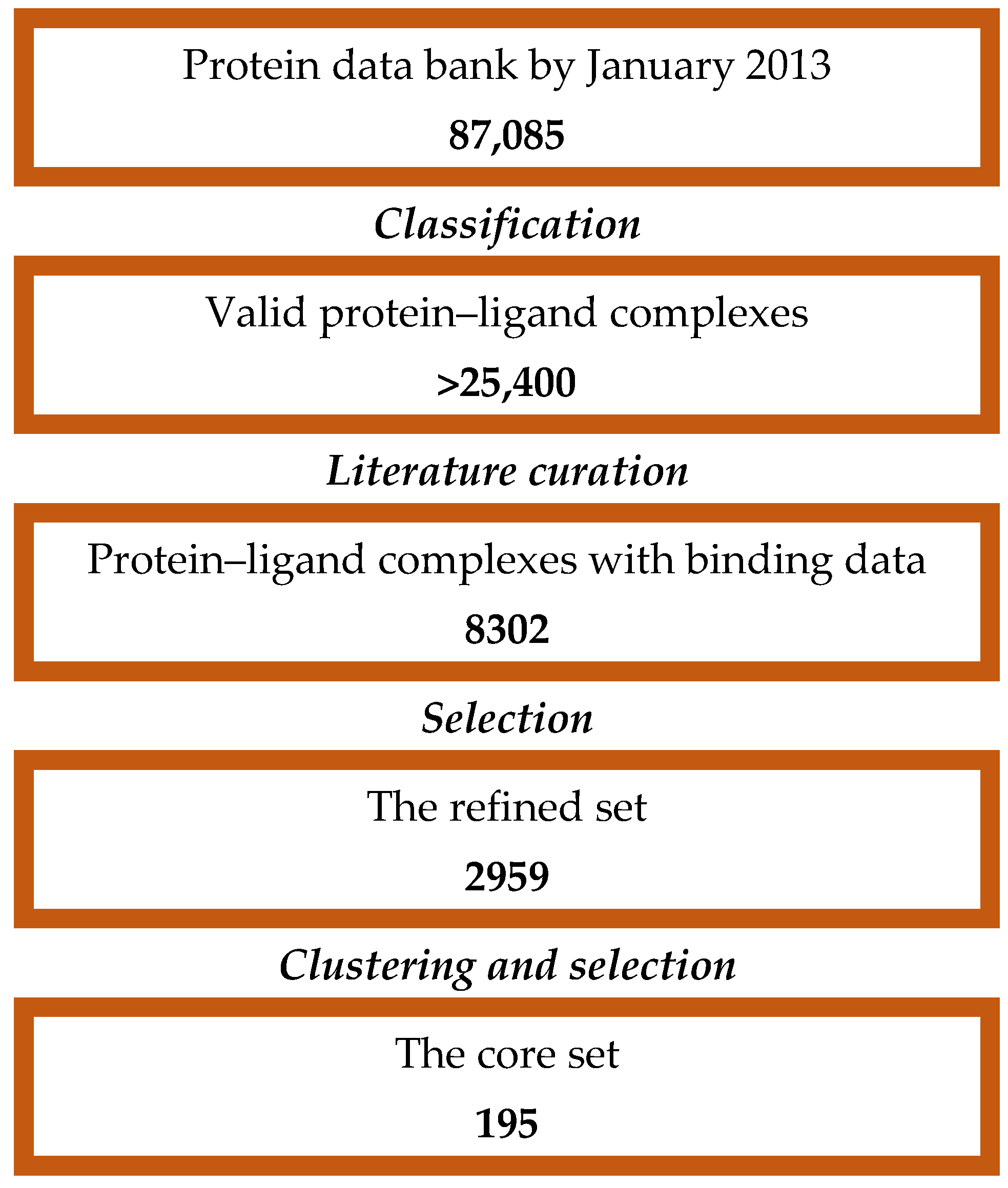

3.1. Dataset

3.2. Molecular Docking

- ASE is based on the Gaussian approximation and depends on the radii of the atoms and the distances between ligand atom–receptor atom pairs. ASE is proportional to the sum of the Gaussians over all ligand atom–receptor atom pairs.

- Affinity dG is a linear function that calculates the enthalpy contribution to the binding free energy, including terms based on interactions between H-bond donor and acceptor pairs, ionic interactions, metal ligation, hydrophobic interactions, unfavorable interactions (between hydrophobic and polar atoms), and favorable interactions (between any two atoms).

- Alpha HB is a linear combination of two terms: (i) the geometric fit of the ligand to the binding site with regard to the attraction and repulsion depending on the distance between the atoms and (ii) H-bonding effects.

- London dG estimates the binding free energy of the ligand, accounting for the average gain or loss of rotational and translational entropy, the loss of flexibility of the ligand, the geometric imperfections of H-bonds and metal ligations compared to the ideal ones, and the desolvation energy of atoms.

- GBVI/WSA dG estimates the free energy of ligand binding from weighted terms for the Coulomb energy, solvation energy, and van der Waals contributions.

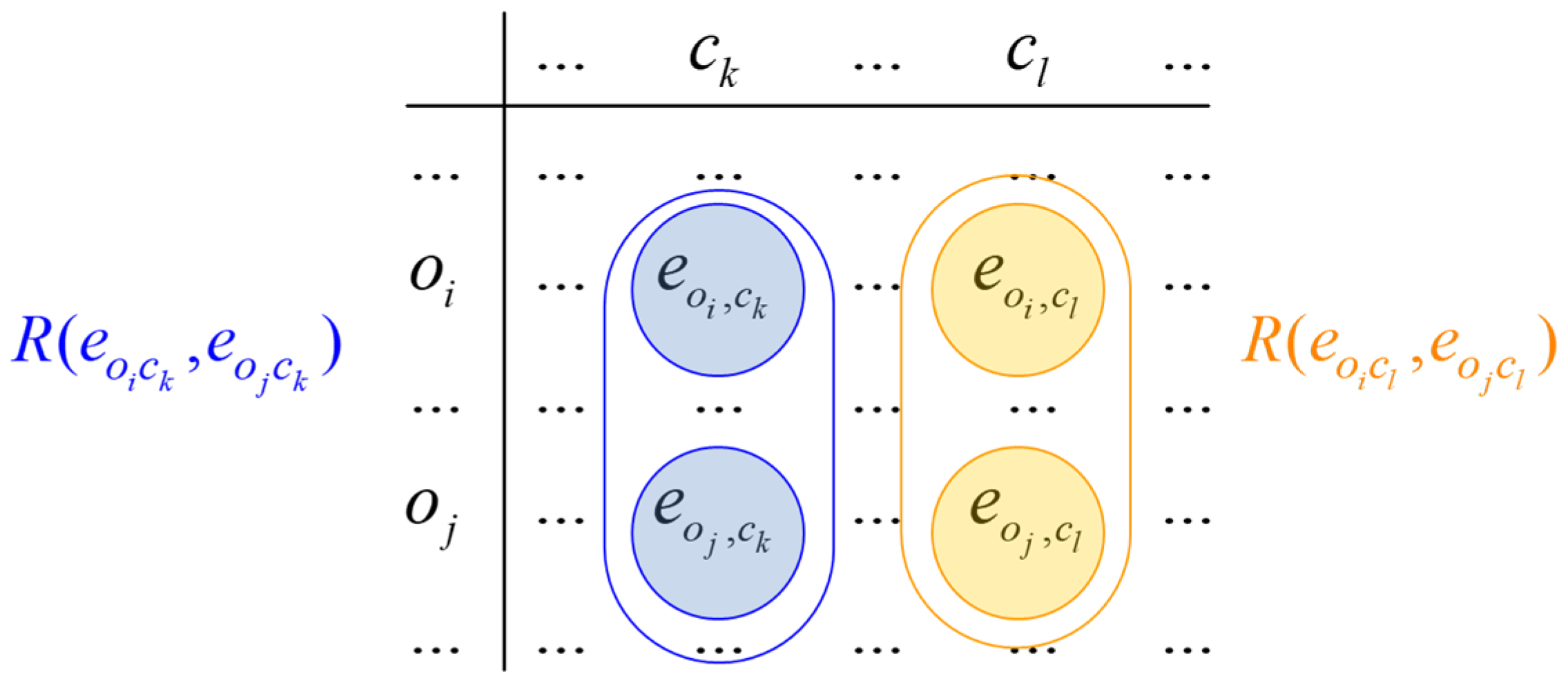

3.3. InterCriteria Analysis Approach

| | C1 | … | Ck | … | Cn |
|---|---|---|---|---|---|
| O1 | | … | | … | |
| … | … | … | … | … | … |
| Oi | | … | | … | |
| … | … | … | … | … | … |
| Om | | … | | … | |

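- $S^{\mu}_{k,l}$ is the number of cases in which the relations $R$ for criterion $C_k$ and $R$ for criterion $C_l$ (or the dual relations $\overline{R}$ for $C_k$ and $\overline{R}$ for $C_l$) are simultaneously satisfied for a pair of objects, where $R$ is an ordering relation between the evaluations of two objects against one criterion and $\overline{R}$ is its dual.

- $S^{\nu}_{k,l}$ is the number of cases in which the relations $R$ for criterion $C_k$ and $\overline{R}$ for criterion $C_l$ (or the relations $\overline{R}$ for $C_k$ and $R$ for $C_l$) are simultaneously satisfied for a pair of objects.

- $\mu_{C_k,C_l} = \dfrac{2 S^{\mu}_{k,l}}{m(m-1)}$, called the degree of agreement in terms of ICrA, and

- $\nu_{C_k,C_l} = \dfrac{2 S^{\nu}_{k,l}}{m(m-1)}$, called the degree of disagreement in terms of ICrA.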
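As a rough illustration of how the degrees of agreement and disagreement can be obtained for one pair of criteria, the sketch below counts, over all pairs of objects, how often two criteria order the objects in the same way or in opposite ways, and normalizes by the total number of object pairs, m(m − 1)/2. It assumes that the ordering relation is the strict ">" with "<" as its dual, and that ties count toward neither degree; the function names and example values are hypothetical, and this is not the ICrAData implementation.

```python
from itertools import combinations


def sign(x):
    """Return -1, 0, or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def icra_pair(values_k, values_l):
    """Degrees of agreement (mu) and disagreement (nu) between two criteria.

    values_k and values_l hold the evaluations of the same m objects
    against criterion C_k and criterion C_l, respectively.
    """
    if len(values_k) != len(values_l):
        raise ValueError("both criteria must evaluate the same objects")
    m = len(values_k)
    if m < 2:
        raise ValueError("at least two objects are required")
    s_mu = s_nu = 0
    for i, j in combinations(range(m), 2):
        dk = sign(values_k[i] - values_k[j])
        dl = sign(values_l[i] - values_l[j])
        if dk != 0 and dk == dl:
            s_mu += 1  # same strict ordering under both criteria
        elif dk * dl == -1:
            s_nu += 1  # opposite strict orderings
        # Assumption: ties count toward neither degree and remain as uncertainty.
    total = m * (m - 1) // 2  # number of object pairs
    return s_mu / total, s_nu / total


# Hypothetical evaluations of four objects against two criteria.
mu, nu = icra_pair([1.0, 2.0, 3.0, 4.0], [2.0, 1.0, 3.5, 5.0])
```

Here five of the six object pairs are ordered identically by both criteria and one is ordered oppositely, so μ = 5/6 and ν = 1/6.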

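| | C1 | … | Ck | … | Cn |
|---|---|---|---|---|---|
| C1 | ⟨1, 0⟩ | … | $\langle \mu_{C_1,C_k}, \nu_{C_1,C_k} \rangle$ | … | $\langle \mu_{C_1,C_n}, \nu_{C_1,C_n} \rangle$ |
| … | … | … | … | … | … |
| Ck | $\langle \mu_{C_k,C_1}, \nu_{C_k,C_1} \rangle$ | … | ⟨1, 0⟩ | … | $\langle \mu_{C_k,C_n}, \nu_{C_k,C_n} \rangle$ |
| … | … | … | … | … | … |
| Cn | $\langle \mu_{C_n,C_1}, \nu_{C_n,C_1} \rangle$ | … | $\langle \mu_{C_n,C_k}, \nu_{C_n,C_k} \rangle$ | … | ⟨1, 0⟩ |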

- positive consonance, whenever μ > α and ν < β;

- negative consonance, whenever μ < β and ν > α;

- dissonance, otherwise.
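The threshold-based rules above lend themselves to a sweep over several α/β settings. The sketch below applies them to a few hypothetical (μ, ν) pairs and counts the positive consonances at each of the five α/β combinations reported in the tables; the pair labels, the values, and the helper names are assumptions made for illustration and do not reproduce the study's data.

```python
def consonance(mu, nu, alpha, beta):
    """Apply the alpha/beta rules to one pair of criteria."""
    if mu > alpha and nu < beta:
        return "positive consonance"
    if mu < beta and nu > alpha:
        return "negative consonance"
    return "dissonance"


# The alpha/beta combinations explored in the tables.
THRESHOLDS = [(0.75, 0.25), (0.70, 0.30), (0.67, 0.33), (0.65, 0.35), (0.60, 0.40)]

# Hypothetical (mu, nu) values for three pairs of criteria.
pairs = {"A-B": (0.72, 0.26), "A-C": (0.62, 0.36), "B-C": (0.30, 0.68)}


def count_positive(pairs, alpha, beta):
    """Number of pairs in positive consonance at the given thresholds."""
    return sum(
        consonance(mu, nu, alpha, beta) == "positive consonance"
        for mu, nu in pairs.values()
    )


counts = {f"{a:.2f}/{b:.2f}": count_positive(pairs, a, b) for a, b in THRESHOLDS}
```

Relaxing the thresholds from 0.75/0.25 to 0.60/0.40 can only keep or increase the number of pairs in positive consonance, which is the trend one would expect to see when reading the threshold columns of such a table from strict to relaxed.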

3.4. Software Implementation of ICrA

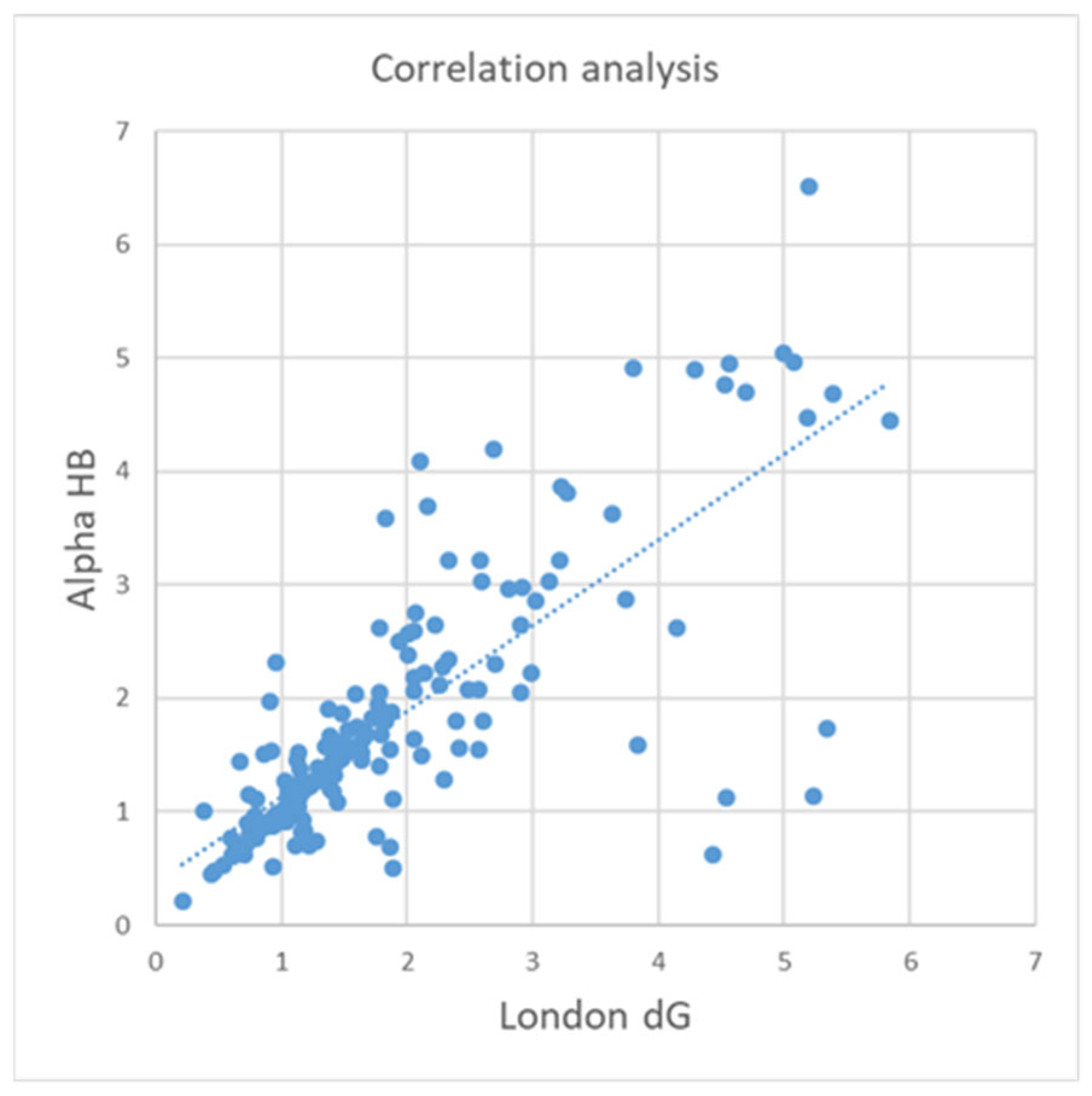

3.5. Correlation Analysis

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Höltje, H.-D.; Sippl, W.; Rognan, D.; Folkers, G. Molecular Modeling: Basic Principles and Applications, 3rd rev. expanded ed.; Wiley-VCH: Weinheim, Germany, 2008.

2. Cheng, T.; Li, X.; Li, Y.; Liu, Z.; Wang, R. Comparative Assessment of Scoring Functions on a Diverse Test Set. J. Chem. Inf. Model. 2009, 49, 1079–1093.

3. Li, Y.; Liu, Z.H.; Han, L.; Li, J.; Liu, J.; Zhao, Z.X.; Li, C.K.; Wang, R.X. Comparative Assessment of Scoring Functions on an Updated Benchmark: I. Compilation of the Test Set. J. Chem. Inf. Model. 2014, 54, 1700–1716.

4. Xu, W.; Lucke, A.J.; Fairlie, D.P. Comparing Sixteen Scoring Functions for Predicting Biological Activities of Ligands for Protein Targets. J. Mol. Graph. Model. 2015, 57, 76–88.

5. Su, M.; Yang, Q.; Du, Y.; Feng, G.; Liu, Z.; Li, Y.; Wang, R. Comparative Assessment of Scoring Functions: The CASF-2016 Update. J. Chem. Inf. Model. 2019, 59, 895–913.

6. Wang, R.; Fang, X.; Lu, Y.; Wang, S. The PDBbind Database: Collection of Binding Affinities for Protein-Ligand Complexes with Known Three-Dimensional Structures. J. Med. Chem. 2004, 47, 2977–2980.

7. Yan, Z.; Wang, J. Incorporating specificity into optimization: Evaluation of SPA using CSAR 2014 and CASF 2013 benchmarks. J. Comput. Aided Mol. Des. 2016, 30, 219–227.

8. Kalinowsky, L.; Weber, J.; Balasupramaniam, S.; Baumann, K.; Proschak, E. A Diverse Benchmark Based on 3D Matched Molecular Pairs for Validating Scoring Functions. ACS Omega 2018, 3, 5704–5714.

9. Molecular Operating Environment. The Chemical Computing Group, v. 2016.08. Available online: http://www.chemcomp.com (accessed on 22 May 2025).

10. Gaillard, T. Evaluation of AutoDock and AutoDock Vina on the CASF-2013 Benchmark. J. Chem. Inf. Model. 2018, 58, 1697–1706.

11. Khamis, M.A.; Gomaa, W. Comparative assessment of machine-learning scoring functions on PDBbind 2013. Eng. Appl. Artif. Intell. 2015, 45, 136–151.

12. Li, Y.; Su, M.; Han, L.; Liu, Z.; Wang, R. Comparative Assessment of Scoring Functions on an Updated Benchmark: 2. Evaluation Methods and General Results. J. Chem. Inf. Model. 2014, 54, 1717–1736.

13. Wang, Y.; Qiu, Z.; Jiao, Q.; Chen, C.; Meng, Z.; Cui, X. Structure-based Protein-drug Affinity Prediction with Spatial Attention Mechanisms. In Proceedings of the 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Houston, TX, USA, 9–12 December 2021; pp. 92–97.

14. Wang, Y.; Wei, Z.; Xi, L. Sfcnn: A novel scoring function based on 3D convolutional neural network for accurate and stable protein-ligand affinity prediction. BMC Bioinform. 2022, 23, 222.

15. Rana, M.M.; Nguyen, D.D. Geometric graph learning with extended atom-types features for protein-ligand binding affinity prediction. Comput. Biol. Med. 2023, 164, 107250.

16. Atanassov, K.; Mavrov, D.; Atanassova, V. Intercriteria Decision making: A new approach for multicriteria decision making, based on index matrices and intuitionistic fuzzy sets. Issues IFSs GNs 2014, 11, 1–8.

17. Chorukova, E.; Marinov, P.; Umlenski, I. Survey on Theory and Applications of InterCriteria Analysis Approach. In Research in Computer Science in the Bulgarian Academy of Sciences. Studies in Computational Intelligence, 1st ed.; Atanassov, K., Ed.; Springer: Cham, Switzerland, 2021; Volume 934, pp. 453–469.

18. Jereva, D.; Angelova, M.; Tsakovska, I.; Alov, P.; Pajeva, I.; Miteva, M.; Pencheva, T. InterCriteria Analysis Approach for Decision-making in Virtual Screening: Comparative Study of Various Scoring Functions. Lect. Notes Netw. Syst. 2022, 374, 67–78.

19. Jereva, D.; Pencheva, T.; Tsakovska, I.; Alov, P.; Pajeva, I. Exploring Applicability of InterCriteria Analysis to Evaluate the Performance of MOE and GOLD Scoring Functions. Stud. Comput. Intell. 2021, 961, 198–208.

20. Tsakovska, I.; Alov, P.; Ikonomov, N.; Atanassova, V.; Vassilev, P.; Roeva, O.; Jereva, D.; Atanassov, K.; Pajeva, I.; Pencheva, T. Intercriteria analysis implementation for exploration of the performance of various docking scoring functions. Stud. Comput. Intell. 2021, 902, 88–98.

21. Atanassov, K.; Atanassova, V.; Gluhchev, G. Intercriteria analysis: Ideas and problems. Notes Intuit. Fuzzy Sets 2015, 21, 81–88.

22. Medina-Franco, J.L. Grand Challenges of Computer-Aided Drug Design: The Road Ahead. Front. Drug Discov. 2021, 1, 728551.

23. Atanassov, K. Index Matrices: Towards an Augmented Matrix Calculus, Studies in Computational Intelligence, 1st ed.; Springer International Publishing: Cham, Switzerland, 2014; Volume 573.

24. Atanassov, K. Intuitionistic Fuzzy Logics; Springer: Cham, Switzerland, 2017.

25. Zadeh, L.A. Fuzzy Sets. Inf. Control. 1965, 8, 338–353.

26. Correlation Analysis. Available online: https://www.questionpro.com/features/correlation-analysis.html (accessed on 9 January 2025).

| Pairs of Scoring Functions | BestDS | BestRMSD | RMSD_BestDS | DS_BestRMSD |
|---|---|---|---|---|
| Affinity dG–Alpha HB | 0.60 | 0.81 | 0.67 | 0.59 |
| Affinity dG–ASE | 0.62 | 0.77 | 0.68 | 0.57 |
| Affinity dG–GBVI/WSA dG | 0.55 | 0.83 | 0.67 | 0.61 |
| Affinity dG–London dG | 0.56 | 0.78 | 0.63 | 0.56 |
| Alpha HB–ASE | 0.66 | 0.79 | 0.64 | 0.62 |
| Alpha HB–GBVI/WSA dG | 0.47 | 0.76 | 0.69 | 0.45 |
| Alpha HB–London dG | 0.72 | 0.84 | 0.68 | 0.70 |
| ASE–GBVI/WSA dG | 0.44 | 0.73 | 0.66 | 0.36 |
| ASE–London dG | 0.62 | 0.77 | 0.65 | 0.60 |
| GBVI/WSA dG–London dG | 0.48 | 0.73 | 0.64 | 0.46 |
| Affinity dG–(−logKd) or (−logKi) | 0.45 | 0.57 | 0.50 | 0.53 |
| Alpha HB–(−logKd) or (−logKi) | 0.40 | 0.53 | 0.44 | 0.49 |
| ASE–(−logKd) or (−logKi) | 0.35 | 0.56 | 0.37 | 0.57 |
| GBVI/WSA dG–(−logKd) or (−logKi) | 0.58 | 0.56 | 0.60 | 0.55 |
| London dG–(−logKd) or (−logKi) | 0.45 | 0.53 | 0.45 | 0.48 |

| α/β | BestDS | BestRMSD | RMSD_BestDS | DS_BestRMSD |
|---|---|---|---|---|
| 0.75/0.25 | 0 | 8 | 0 | 0 |
| 0.70/0.30 | 1 | 10 | 0 | 1 |
| 0.67/0.33 | 1 | 10 | 5 | 1 |
| 0.65/0.35 | 2 | 10 | 7 | 1 |
| 0.60/0.40 | 5 | 10 | 11 | 4 |

| Pairs of Scoring Functions | α/β = 0.75/0.25 | α/β = 0.70/0.30 | α/β = 0.67/0.33 | α/β = 0.65/0.35 | α/β = 0.60/0.40 |
|---|---|---|---|---|---|
| Affinity dG–Alpha HB | 1 | 1 | 2 | 2 | 3 |
| Affinity dG–ASE | 1 | 1 | 2 | 2 | 3 |
| Affinity dG–GBVI/WSA dG | 1 | 1 | 2 | 2 | 3 |
| Affinity dG–London dG | 1 | 1 | 1 | 1 | 2 |
| Alpha HB–ASE | 1 | 1 | 1 | 2 | 4 |
| Alpha HB–GBVI/WSA dG | 1 | 1 | 2 | 2 | 2 |
| Alpha HB–London dG | 1 | 3 | 4 | 4 | 4 |
| ASE–GBVI/WSA dG | | 1 | 1 | 2 | 2 |
| ASE–London dG | 1 | 1 | 1 | 2 | 4 |
| GBVI/WSA dG–London dG | | 1 | 1 | 1 | 2 |
| Affinity dG–(−logKd) or (−logKi) | 0 | 0 | 0 | 0 | 0 |
| Alpha HB–(−logKd) or (−logKi) | 0 | 0 | 0 | 0 | 0 |
| ASE–(−logKd) or (−logKi) | 0 | 0 | 0 | 0 | 0 |
| GBVI/WSA dG–(−logKd) or (−logKi) | 0 | 0 | 0 | 0 | 1 |
| London dG–(−logKd) or (−logKi) | 0 | 0 | 0 | 0 | 0 |

| Pairs of Scoring Functions | BestDS, ICrA μ | BestDS, CA R | BestRMSD, ICrA μ | BestRMSD, CA R | RMSD_BestDS, ICrA μ | RMSD_BestDS, CA R | DS_BestRMSD, ICrA μ | DS_BestRMSD, CA R |
|---|---|---|---|---|---|---|---|---|
| Affinity dG–Alpha HB | 0.60 | 0.20 | 0.81 | 0.74 | 0.67 | 0.55 | 0.59 | 0.19 |
| Affinity dG–ASE | 0.62 | 0.23 | 0.77 | 0.68 | 0.68 | 0.53 | 0.57 | 0.03 |
| Affinity dG–GBVI/WSA dG | 0.55 | 0.12 | 0.83 | 0.81 | 0.67 | 0.55 | 0.61 | 0.22 |
| Affinity dG–London dG | 0.56 | 0.14 | 0.78 | 0.66 | 0.63 | 0.35 | 0.56 | 0.06 |
| Alpha HB–ASE | 0.66 | 0.54 | 0.79 | 0.77 | 0.64 | 0.45 | 0.62 | 0.42 |
| Alpha HB–GBVI/WSA dG | 0.47 | −0.10 | 0.76 | 0.63 | 0.69 | 0.53 | 0.45 | −0.05 |
| Alpha HB–London dG | 0.72 | 0.55 | 0.84 | 0.79 | 0.68 | 0.52 | 0.70 | 0.47 |
| ASE–GBVI/WSA dG | 0.44 | −0.19 | 0.73 | 0.57 | 0.66 | 0.48 | 0.36 | −0.16 |
| ASE–London dG | 0.62 | 0.29 | 0.77 | 0.68 | 0.65 | 0.42 | 0.60 | 0.24 |
| GBVI/WSA dG–London dG | 0.48 | −0.16 | 0.73 | 0.57 | 0.64 | 0.36 | 0.46 | −0.06 |
| Affinity dG–(−logKd) or (−logKi) | 0.45 | −0.08 | 0.57 | 0.21 | 0.50 | 0.19 | 0.53 | 0.06 |
| Alpha HB–(−logKd) or (−logKi) | 0.40 | −0.29 | 0.53 | 0.10 | 0.44 | 0.03 | 0.49 | −0.18 |
| ASE–(−logKd) or (−logKi) | 0.35 | −0.42 | 0.56 | 0.12 | 0.37 | 0.24 | 0.57 | −0.37 |
| GBVI/WSA dG–(−logKd) or (−logKi) | 0.58 | 0.13 | 0.56 | 0.23 | 0.60 | 0.19 | 0.55 | 0.09 |
| London dG–(−logKd) or (−logKi) | 0.45 | −0.13 | 0.53 | 0.06 | 0.45 | −0.02 | 0.48 | −0.11 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Angelova, M.; Alov, P.; Tsakovska, I.; Jereva, D.; Lessigiarska, I.; Atanassov, K.; Pajeva, I.; Pencheva, T. Pairwise Performance Comparison of Docking Scoring Functions: Computational Approach Using InterCriteria Analysis. Molecules 2025, 30, 2777. https://doi.org/10.3390/molecules30132777
