Abstract

Accurately predicting drug–target interactions is a critical yet challenging task in drug discovery. Traditionally, pocket detection and drug–target affinity prediction have been treated as separate aspects of drug–target interaction, with few methods combining these tasks within a unified deep learning system to accelerate drug development. In this study, we propose EMPDTA, an end-to-end framework that integrates protein pocket prediction and drug–target affinity prediction to provide a comprehensive understanding of drug–target interactions. The EMPDTA framework consists of three main modules: pocket online detection, multimodal representation learning for affinity prediction, and multi-task joint training. The performance and potential of the proposed framework have been validated across diverse benchmark datasets, achieving robust results in both tasks. Furthermore, the visualization results of the predicted pockets demonstrate accurate pocket detection, confirming the effectiveness of our framework.

1. Introduction

Proteins are the workhorses of biological organisms, orchestrating virtually all biological processes. To fulfill their specialized functions, they often must interact with other molecules, termed ligands. Drug-like small molecules that bind to proteins are widely studied because they can facilitate drug discovery [1]. In most drug design projects, the initial goal is to find ligands that bind to a specific protein target with high affinity and specificity [2]. However, drug development is expensive, and failed trials contribute substantially to that cost: for new drugs approved by the US Food and Drug Administration (FDA) between 2009 and 2018, the median capitalized research and development investment needed to bring a drug to market was estimated at $985.3 million [3]. Given these challenges, there is an urgent need for rapid computational screening of promising drug candidates. Binding pocket detection and drug–target affinity (DTA) prediction are therefore pivotal downstream tasks that offer valuable insights for drug discovery.

Binding pocket detection plays a critical role in the early stages of drug discovery. Traditional template-based and energy-based methods rely on high-quality templates or expensive energy simulations, which limits them when data are scarce and forces trade-offs in efficiency. More recently, machine learning methods that leverage protein geometric features have demonstrated remarkable performance and generalization [4]. Among early geometry-based methods, Fpocket [5] builds on alpha spheres, that is, spheres that contact four atoms and contain no atom in their interior, and identifies pockets by clustering and filtering adjacent alpha spheres. In contrast, P2Rank [6], another widely used tool, represents the solvent-accessible protein surface with Connolly points [7]. A random forest classifier scores each point as ligandable or unligandable, and high-scoring points are then clustered into predicted pockets. These detection tools are frequently used as standalone procedures in the drug development process [8].

Once binding pockets are identified, assessing drug–target affinity becomes pivotal for determining the strength of binding. Methods for predicting drug–target affinity can be broadly categorized into similarity-based, sequence-based, and structure-based approaches. Similarity-based methods such as KronRLS [9] and SimBoost [10] follow the guilt-by-association principle, assuming that similar drugs interact with similar targets and vice versa. However, these methods depend on prior similarity knowledge and struggle to generalize to novel drugs or targets. Sequence-based methods such as DeepDTA [11] and GraphDTA [12] follow the conventional approach of encoding drug and target inputs separately. The main difference between them is the drug representation: DeepDTA encodes SMILES strings, whereas GraphDTA converts compounds into molecular graphs, a more natural format for molecules. With the growing number of resolved protein structures and the advent of structure prediction models such as AlphaFold2 [13], numerous structure-based methods have emerged that overcome the limitations of sequence-only information. Molecular docking, a traditional yet effective approach, offers good interpretability but is computationally inefficient because of its sampling–scoring paradigm [14,15,16,17]. To address this, E(3)-equivariant graph neural networks have been developed that can directly generate molecular conformations without extensive sampling [18,19].

Although binding pockets strongly influence affinity, few approaches effectively integrate pocket information into DTA prediction. DeepPS, for instance, explicitly leverages functional motifs extracted from protein amino acid sequences [20]. TANKBind [8] uses P2Rank as a preprocessing step to narrow the interaction scope. However, none of these methods achieves end-to-end affinity prediction with online binding pocket identification, and relying on external pocket detection modules increases training and inference complexity.

To overcome these constraints, we introduce EMPDTA, an end-to-end multimodal representation learning framework with pocket online detection for drug–target affinity prediction. EMPDTA combines pocket detection and affinity prediction through three main components: a pocket online detection (POD) module, a multimodal representation learning (MRL) module, and a joint training (JT) module. The POD module samples residue-level point clouds on the protein surface and extracts features with fast quasi-geodesic convolution layers. The MRL module encodes multimodal features from the sequence, structure, and surface information of drug–target pairs. In the JT module, fine-tuning from a pretrained POD module improves affinity prediction on small datasets. We compare our method with state-of-the-art (SOTA) methods on benchmark datasets. Our framework demonstrates strong predictive performance on both tasks, and visualizations of the predicted pockets further confirm its effectiveness.

2. Results

2.1. Pocket Detection Performance

In our first experiment, we evaluate pocket detection in isolation, setting aside the contributions of the other modules in our framework, and compare the POD module with pocket predictors built on different protein modalities. We evaluate on three benchmark datasets with pocket labels: Davis, KIBA, and PDBbind (the proteins in Filtered Davis are a subset of those in Davis). The results in Table 1 show that all online methods outperform the commonly used offline method, P2Rank, by a significant margin.

Table 1.

Predictive performance of different models on pocket prediction task.

On the smaller datasets (Davis and KIBA), the CNN model, which uses sequence information, performs well on both AUROC and AUPRC with only 0.4 M parameters. Interestingly, the graph-based GCN model performs worse than CNN despite having twice as many parameters. Its AUPRC drops particularly sharply, suggesting that GCN predicts pocket residues with markedly lower precision. This gap may stem from the conventional GCN architecture's inability to distinguish edge types in relational graph inputs. GearNet, which is specifically designed for protein relational graphs, does not suffer from this limitation; however, its strong classification performance comes at the cost of nearly ten times as many parameters as CNN. Notably, our surface-based POD module has the fewest parameters while achieving AUROC close to that of GearNet, albeit with slightly lower AUPRC.
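Both pocket metrics are computed per residue from binary pocket labels and predicted scores. The plain-Python sketch below is an illustration, not the evaluation code behind Table 1: AUROC is computed as the probability that a random pocket residue outscores a random non-pocket residue, and AUPRC as step-wise average precision (the same estimator scikit-learn's `average_precision_score` uses), assuming untied scores. Because a random classifier's AUPRC is roughly the fraction of pocket residues, AUPRC reacts more strongly than AUROC when pocket residues are ranked poorly.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random pocket residue (label 1) outscores a random
    non-pocket residue (label 0); ties count as half a win."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Step-wise AUPRC: mean precision at the rank of each pocket residue.
    Assumes the scores contain no ties."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits, total = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            total += hits / rank  # precision at this recall step
    return total / hits


# Toy protein with 4 residues, 2 of which are pocket residues.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auroc(labels, scores), round(average_precision(labels, scores), 4))
```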

On the larger PDBbind dataset, the models can be compared in a more realistic setting. Our POD module leads on both metrics. Given its robust performance across datasets and minimal parameter count, the POD module shows clear potential as an online plug-in module in the drug discovery pipeline.

2.2. Multimodal Models Achieve Better Performance Than Single Modal Models

Our experiments show that integrating multiple modalities with the DNN predictor enhances affinity prediction. To isolate the impact of the POD module, we bypass pocket detection and extract pockets directly from the ground-truth pocket labels. We then compare the performance of different modalities on the Filtered Davis dataset (Table 2).

Table 2.

Affinity performance of single-modal and multimodal models on Filtered Davis dataset.

The sequence modality performs well on its own: features from pretrained language models (PLMs) are effective for affinity prediction and come close to the performance of the multimodal models. Concatenating features from multiple modalities keeps performance consistently high, and incorporating protein surface information yields a slight further improvement, underscoring the value of diverse modalities for affinity prediction.

2.3. Joint Training with Fine-Tuning Demonstrates Superior Performance

Because our model consists mainly of the POD and MRL modules, the JT module admits several training strategies. The experiments in this section examine two choices: whether to fine-tune from a pretrained POD module and whether to add the BCE loss for pocket prediction. The first choice is motivated by the limited number of proteins in some datasets. Pretraining the POD module on the PDBbind dataset, which contains a large number of protein structures, allows it to acquire more generalizable knowledge of protein structure. Fine-tuning the DNN predictor on the smaller datasets then reduces memory requirements and accelerates training. For instance, on an RTX 4070 Ti (12 GB) graphics card, fine-tuning mode uses a batch size of 64 and trains in 105 s (first line in Table 3), whereas normal mode halves the batch size and doubles the training time (second line in Table 3).
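In fine-tuning mode, a POD module pretrained on PDBbind is reused while the downstream predictor is trained on the small dataset. The text does not say whether the pretrained weights are frozen or updated with a small learning rate, so the PyTorch sketch below assumes the frozen variant; `ToyEMPDTA`, its layer sizes, and the checkpoint path are hypothetical stand-ins, not the released code. When the encoder's parameters are excluded from autograd, no activations are stored for its backward pass, which is one plausible source of the larger batch size and shorter training time described above.

```python
import torch
from torch import nn


class ToyEMPDTA(nn.Module):
    """Hypothetical stand-in: `pod` plays the role of the pretrained POD encoder;
    the two heads are trained on the small downstream dataset."""

    def __init__(self, in_dim: int = 16, hid: int = 32):
        super().__init__()
        self.pod = nn.Sequential(nn.Linear(in_dim, hid), nn.ReLU(), nn.Linear(hid, hid))
        self.pocket_head = nn.Linear(hid, 1)    # per-residue pocket logit
        self.affinity_head = nn.Linear(hid, 1)  # one affinity value per protein

    def forward(self, x: torch.Tensor):
        h = self.pod(x)
        return self.pocket_head(h).squeeze(-1), self.affinity_head(h.mean(dim=0))


def freeze(module: nn.Module) -> None:
    """Exclude a pretrained module from gradient updates."""
    for p in module.parameters():
        p.requires_grad_(False)
    module.eval()  # fixes dropout/batch-norm behavior; re-apply after any model.train()


model = ToyEMPDTA()
# model.pod.load_state_dict(torch.load("pod_pretrained_pdbbind.pt"))  # hypothetical checkpoint
freeze(model.pod)
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.AdamW(trainable, lr=1e-4)  # only the heads are optimized

pocket_logits, affinity = model(torch.randn(50, 16))  # 50 residues of one protein
print(pocket_logits.shape, affinity.shape)
```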

Table 3.

Training method comparison on Filtered Davis.

For the second choice, we compare single-task training (affinity values only) with multi-task training (affinity values and pocket labels). The results in Table 3 show that adding pocket labels not only enhances affinity prediction but also yields high-quality pocket predictions. Compared with single-task training, EMPDTA in fine-tuning mode improves AUROC and AUPRC by 5.8% and 155.7%, respectively (first and third lines). The same trend holds in normal mode, showing that with pocket labels the POD module learns more realistic pocket information and provides more accurate guidance for affinity prediction. Overall, combining multiple labels with fine-tuning of the POD module yields the best training outcomes, and we adopt this training mode in all subsequent experiments.

2.4. Comparison with State-of-the-Art Methods

Our proposed EMPDTA achieves leading performance on small datasets such as Filtered Davis. As shown in Table 4, our method achieves an RMSE of 0.663, clearly outperforming the previous state-of-the-art method, MDeePred. EMPDTA also excels in CI and Spearman's rank correlation, improving on the second-best method by 1.6% and 5.9%, respectively.

Table 4.

Performance comparison on Filtered Davis dataset.
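The concordance index (CI) reported in Tables 4 and 5 measures how often the predicted affinities order two drug–target pairs the same way as the measured affinities. The pure-Python sketch below follows the definition commonly used in DTA benchmarks, where tied predictions count as 0.5; it is O(n²) and meant for illustration rather than for evaluating full test sets.

```python
from typing import Sequence


def concordance_index(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Fraction of comparable pairs (y_true[i] > y_true[j]) whose predictions
    are ordered the same way; tied predictions count as 0.5."""
    concordant, comparable = 0.0, 0
    for i in range(len(y_true)):
        for j in range(len(y_true)):
            if y_true[i] > y_true[j]:
                comparable += 1
                if y_pred[i] > y_pred[j]:
                    concordant += 1.0
                elif y_pred[i] == y_pred[j]:
                    concordant += 0.5
    return concordant / comparable


# Three pairs with measured affinities 5.0, 6.2, 7.8: two of the three
# comparable pairs are ranked correctly by the predictions.
print(concordance_index([5.0, 6.2, 7.8], [5.3, 6.9, 6.4]))
```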

EMPDTA also performs well on the Davis and KIBA benchmark datasets. Table 5 compares EMPDTA with existing baseline models. On Davis, our model achieves the lowest MSE (0.218) of all methods, although its CI of 0.891 and $r_m^2$ of 0.689 are slightly below those of the best-performing models. On KIBA, which contains far fewer proteins (229 versus 442 in Davis), our framework performs worse, because the POD and JT modules rely on patterns learned from protein pockets. These results indicate that improving affinity prediction when protein data are limited remains a challenge for our framework, and future work will focus on improving its effectiveness in such settings.

Table 5.

Performance comparison on Davis and KIBA datasets.

Our framework achieves leading performance on the PDBbind dataset, which includes a larger number of proteins. We attribute this to the richness of protein structures, which enables more effective extraction of pocket information for affinity prediction. Compared with the current state-of-the-art method, TANKBind, our model is slightly better in RMSE and MAE and almost identical on the other two metrics (Table 6).

Table 6.

A comparison of different methods on PDBbind v2020.

2.5. Model Interpretability on Both Affinity and Pocket Prediction

A major feature of our framework is its ability to output high-quality pocket predictions while simultaneously predicting affinity, enabled by the tight integration of the POD, MRL, and JT modules. Table 7 reports both pocket and affinity metrics for the four benchmark datasets. The results show that our framework achieves high pocket detection performance while keeping affinity prediction on par with SOTA methods.

Table 7.

Our EMPDTA performance on benchmark datasets.

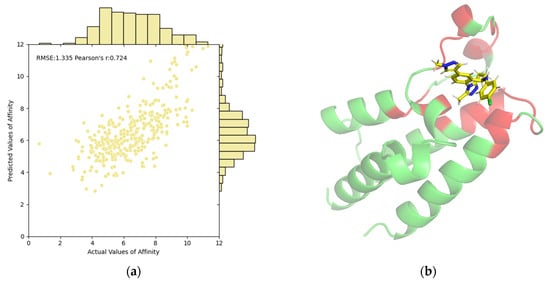

Figure 1a shows the correlation between predicted and ground-truth affinity values on the PDBbind test set. The scatter points are concentrated in a narrow, positively correlated band, further validating the predictive capability of EMPDTA. We also visualize the predicted pocket (red) and non-pocket (green) regions of the protein. For instance, Figure 1b shows the pocket detection results for the first complex in the test set (PDB ID: 6K04), where the predicted pocket lies close to the bound ligand (ligand ID: CQF). By learning both prediction tasks, the POD module accurately targets potential interaction areas. The multi-task framework thus improves the interpretability of the results and combines pocket and affinity prediction into a single efficient step, reducing the need for separate traditional predictions.

Figure 1.

Visualization and interpretability of EMPDTA on the PDBbind test set. (a) Correlation between predicted and actual affinity values. (b) The predicted pocket (red) of complex 6K04 lies close to the binding ligand (yellow); rendered with PyMOL. The non-pocket parts of the complex are shown in green.

3. Materials and Methods

3.1. Dataset Construction

Four benchmark datasets are compiled for model training and evaluation (Table 8). Because our framework integrates pocket detection and affinity prediction, we use these affinity benchmark datasets as the foundation and augment them with pocket labels for pocket detection.

Table 8.

Summary of the benchmark datasets.

- The Davis and Filtered Davis datasets. The Davis dataset comprises 30,056 drug–target pairs with affinity values ($K_d$) among 68 drugs and 442 targets (68 × 442 = 30,056) [24]. The Filtered Davis dataset is derived from Davis by excluding pairs with no observed binding [21]; it contains 68 drugs and 379 unique targets, forming 9125 interactions.

- KIBA dataset. KIBA integrates the inhibition constant ($K_i$), dissociation constant ($K_d$), and half-maximal inhibitory concentration (IC50) into a single affinity score [25]. It consists of 2111 drugs and 229 targets, forming 118,254 interactions.

- PDBbind dataset. PDBbind (v2020) comprises experimentally measured structures of 19,443 protein–ligand complexes with binding affinities [26].
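Davis reports $K_d$ in nM, and many DTA models, DeepDTA [11] among them, train on the log-transformed value pKd = −log10(Kd / 1e9). This section does not state which scale EMPDTA uses, so the helper below is an assumption shown for orientation. In the Davis release distributed with DeepDTA, pairs without measurable binding are recorded at Kd = 10,000 nM, that is, pKd = 5; as far as we understand, these are the "no observed binding" pairs that Filtered Davis excludes.

```python
import math


def kd_nm_to_pkd(kd_nm: float) -> float:
    """pKd = -log10(Kd / 1e9) for a dissociation constant given in nM."""
    # Algebraically identical to -log10(kd_nm * 1e-9), without the extra multiplication.
    return 9.0 - math.log10(kd_nm)


for kd in (1.0, 100.0, 10_000.0):
    print(f"Kd = {kd:>8.1f} nM  ->  pKd = {kd_nm_to_pkd(kd):.2f}")
```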

Regarding pocket labels, the sequence-based datasets above (Davis, Filtered Davis, and KIBA) lack binding pocket information. We therefore collect pocket labels for the corresponding proteins from the UniProt website (https://www.uniprot.org/, accessed on 1 May 2024). In contrast, the structure-based PDBbind dataset provides structure files for protein pockets, which can be used as labels through straightforward index correspondence.

3.2. Problem Formulation

Given a drug–target pair with pocket labels and an affinity value, our objective is twofold. First, we classify each residue of the protein as either “pocket” or “non-pocket”, treating this as a binary classification task [27]. Second, we encode the drug and the online-extracted pocket with multimodal encoders, concatenate the resulting features, and predict the affinity value, framing this as a regression task [11].

3.3. Notation and Preprocessing

Consistent with our prior work, we represent the drug as an atom-level molecular graph $\mathcal{G}_d = (\mathcal{V}_d, \mathcal{E}_d)$, where edges denote chemical bonds. Using the TorchDrug [28] implementation, we compute the drug node features, denoted by $X_d$. The protein is represented as a residue-level graph $\mathcal{G}_p = (\mathcal{V}_p, \mathcal{E}_p)$, in which each node carries 3D coordinates (the position of the residue's alpha carbon). Edges of seven relation types, covering sequential, radius, and KNN edges, are then built following GearNet [29]. The protein node features are computed with ESM-2b_650M to incorporate biological knowledge learned from large-scale protein sequences [30].
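To illustrate the seven relation types, the simplified NumPy sketch below builds edges from alpha-carbon coordinates, assuming GearNet-style relations: five sequential relations for chain offsets −2 to 2 (offset 0 acting as a self loop), one radius relation, and one KNN relation. The cutoffs (10 Å radius, k = 10, and a minimum sequence separation of 5 for spatial edges) are assumed values. TorchDrug provides its own graph-construction layers, and this is not that implementation.

```python
import numpy as np
from typing import List, Tuple

SEQ_OFFSETS = (-2, -1, 0, 1, 2)  # relations 0-4: relative position in the chain
RADIUS_REL, KNN_REL = 5, 6       # relations 5 and 6: spatial edges


def build_residue_graph(ca: np.ndarray, radius: float = 10.0, k: int = 10,
                        min_seq_sep: int = 5) -> List[Tuple[int, int, int]]:
    """Return (i, j, relation) edges for a residue graph with 7 relation types.

    ca: (N, 3) alpha-carbon coordinates in Angstrom. Spatial edges between
    residues closer than `min_seq_sep` positions in sequence are dropped,
    because sequential edges already cover them.
    """
    n = len(ca)
    dist = np.linalg.norm(ca[:, None, :] - ca[None, :, :], axis=-1)
    idx = np.arange(n)
    seq_sep = np.abs(idx[:, None] - idx[None, :])
    edges: List[Tuple[int, int, int]] = []

    # Sequential edges: one relation per relative chain offset.
    for i in range(n):
        for rel, off in enumerate(SEQ_OFFSETS):
            j = i + off
            if 0 <= j < n:
                edges.append((i, j, rel))

    # Radius edges: spatially close but sequentially distant residues.
    ii, jj = np.nonzero((dist < radius) & (seq_sep >= min_seq_sep))
    edges += [(int(i), int(j), RADIUS_REL) for i, j in zip(ii, jj)]

    # KNN edges: k spatially nearest residues, excluding the residue itself.
    d = dist + np.diag(np.full(n, np.inf))
    nbrs = np.argsort(d, axis=1)[:, :min(k, n - 1)]
    for i in range(n):
        for j in nbrs[i]:
            if seq_sep[i, j] >= min_seq_sep:
                edges.append((i, int(j), KNN_REL))
    return edges


# Six residues on a straight line, 3.8 Angstrom apart.
ca = np.arange(6)[:, None] * np.array([3.8, 0.0, 0.0])
edges = build_residue_graph(ca)
print(len(edges))
```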

3.4. Model Architecture of EMPDTA

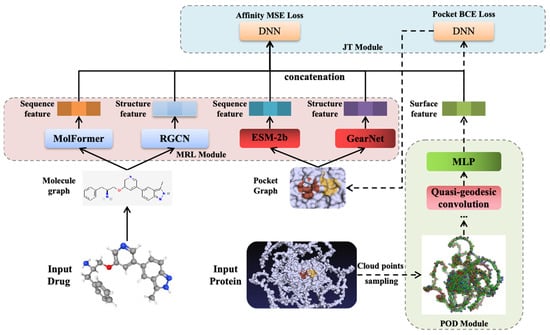

The proposed EMPDTA model is illustrated in Figure 2. Our end-to-end model comprises three main modules applied in sequence. First, the pocket online detection module samples the protein surface and detects binding pockets online as the preprocessing stage (POD Module). Next, multimodal features are extracted from drugs and pockets by multimodal encoders and fused through simple concatenation (MRL Module). Finally, the pocket detection BCE loss (classification task) and the affinity prediction MSE loss (regression task) are optimized jointly during training (JT Module). In addition, fine-tuning from a pretrained POD module is proposed for accurate and fast training on datasets with few proteins. Each component is briefly described in the following sections.

Figure 2.

The proposed EMPDTA model with three modules. A. Pocket Online Detection Module. B. Multimodal Representation Learning Module. C. Joint Training Module.
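To make the concatenation-based fusion concrete, the NumPy sketch below concatenates per-modality embeddings for a batch of drug–pocket pairs and passes them through a small ReLU MLP that outputs one affinity value per pair. The embedding sizes, hidden width, and random weights are hypothetical placeholders; in EMPDTA the inputs would come from the sequence, structure, and surface encoders described in Section 3.6, and the predictor would be trained.

```python
import numpy as np
from typing import List, Tuple

Layer = Tuple[np.ndarray, np.ndarray]


def init_mlp(sizes: List[int], rng: np.random.Generator) -> List[Layer]:
    """Random (weight, bias) pairs for an MLP with the given layer sizes."""
    return [(rng.normal(0.0, 0.1, (a, b)), np.zeros(b)) for a, b in zip(sizes[:-1], sizes[1:])]


def fuse_and_predict(drug_feats: List[np.ndarray], pocket_feats: List[np.ndarray],
                     layers: List[Layer]) -> np.ndarray:
    """Concatenate per-modality embeddings and regress one affinity per pair."""
    h = np.concatenate(drug_feats + pocket_feats, axis=-1)  # (batch, total_dim)
    for w, b in layers[:-1]:
        h = np.maximum(h @ w + b, 0.0)  # hidden layers with ReLU
    w, b = layers[-1]
    return (h @ w + b)[:, 0]  # (batch,)


rng = np.random.default_rng(0)
batch = 4
# Hypothetical sizes: drug sequence/structure; pocket sequence/structure/surface.
drug = [rng.normal(size=(batch, 8)), rng.normal(size=(batch, 6))]
pocket = [rng.normal(size=(batch, 10)), rng.normal(size=(batch, 6)), rng.normal(size=(batch, 4))]
layers = init_mlp([8 + 6 + 10 + 6 + 4, 16, 1], rng)
print(fuse_and_predict(drug, pocket, layers).shape)
```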

3.5. Pocket Online Detection Module

Our pocket detection relies on protein structures. For the sequence-based datasets (Davis, Filtered Davis, and KIBA), all protein structures are obtained from the AlphaFold Protein Structure Database (https://alphafold.ebi.ac.uk/, accessed on 1 May 2024) using PDB ID mapping from the amino acid sequences. The PDBbind dataset, in contrast, provides experimentally resolved protein structures as PDB files that can be used directly.

3.5.1. Point Cloud Sampling

To enable online detection, we introduce a protein surface sampling method based on residue-level point clouds, which eliminates the need for offline mesh generation. Inspired by dMaSIF [31], our sampling method extends the sampled objects from 6 atom types (C, H, O, N, S, Se) to the 20 residue types. Moving from atom-level to residue-level sampling reduces the number of sampled points by nearly 20 times (each residue contains about 20 atoms on average). As in dMaSIF, the protein surface is defined through a smooth distance function (SDF), here computed over residues rather than atoms (Formula (1)).

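$$\mathrm{SDF}(x) = -\sigma(x)\,\log\sum_{k=1}^{A}\exp\!\left(-\frac{\lVert x - a_k\rVert}{\sigma_k}\right), \qquad \sigma(x) = \frac{\sum_{k=1}^{A}\exp\left(-\lVert x - a_k\rVert\right)\sigma_k}{\sum_{k=1}^{A}\exp\left(-\lVert x - a_k\rVert\right)} \quad (1)$$

The input is provided as a cloud of residues $\{a_1, \dots, a_A\}$ with radii $\sigma_k$, and $\lVert x - a_k\rVert$ is the Euclidean distance between the current point $x$ and residue $a_k$. The numerically stable log-sum-exp reduction acts as a smooth minimum over these distances, and the locally averaged radius $\sigma(x)$ smooths the result into a plausible protein surface. The radii of all residues are listed in Table 9.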

Table 9.

Radius statistics of 20 different protein residues.
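The smooth distance function can be evaluated directly with NumPy. The sketch below assumes the dMaSIF form SDF(x) = −σ(x) log Σ_k exp(−‖x − a_k‖ / σ_k), where σ(x) is the radius average weighted by exp(−‖x − a_k‖), applied to residue centers with per-residue radii; the radius values in the example are placeholders, not the Table 9 values. With equal radii the SDF is a smooth lower bound on the distance to the nearest residue, and with a single residue it reduces to the plain Euclidean distance.

```python
import numpy as np


def smooth_distance(x: np.ndarray, centers: np.ndarray, radii: np.ndarray) -> np.ndarray:
    """Smooth distance of query points x (N, 3) to residue centers (A, 3)
    with per-residue radii (A,)."""
    d = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=-1)  # (N, A)

    # sigma(x): radii averaged with weights exp(-d); shifting by the row
    # minimum avoids underflow and cancels in the ratio.
    w = np.exp(-(d - d.min(axis=1, keepdims=True)))
    sigma_x = (w * radii).sum(axis=1) / w.sum(axis=1)

    # Numerically stable log-sum-exp of -d / sigma_k.
    z = -d / radii
    z_max = z.max(axis=1, keepdims=True)
    lse = z_max[:, 0] + np.log(np.exp(z - z_max).sum(axis=1))
    return -sigma_x * lse


# A single residue (placeholder radius 1.8): the SDF equals the distance, here 5.
print(smooth_distance(np.array([[3.0, 4.0, 0.0]]), np.zeros((1, 3)), np.array([1.8])))
```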

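We sample the level set surface at radius $r = 1.05$ Å by minimizing the following loss over the sampled points $x_1, \dots, x_B$ with gradient descent:

$$\mathcal{L}(x_1, \dots, x_B) = \frac{1}{2}\sum_{i=1}^{B}\left(\mathrm{SDF}(x_i) - r\right)^2 \quad (2)$$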

After several iterations, the sampled points lie on the protein surface, and grid clustering is applied to keep the density of the point cloud uniform. Because these computations involve a large number of pairwise distance evaluations, PyKeOps is used to avoid excessive GPU memory usage when computing the distance matrix [32].
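The sampling loop can be sketched in NumPy as gradient descent on ½ Σ_i (SDF(x_i) − r)², followed by grid clustering that replaces all points in the same voxel with their mean. This is a minimal sketch under stated assumptions: gradients are taken by central finite differences instead of autograd, the step size, iteration count, and 1 Å voxel size are assumed values, and dMaSIF's removal of points trapped inside the protein and the PyKeOps acceleration are omitted. The toy check uses a single residue at the origin, whose SDF is simply ‖x‖.

```python
import numpy as np
from typing import Callable

ScalarField = Callable[[np.ndarray], np.ndarray]  # maps (N, 3) points to (N,) values


def finite_diff_grad(f: ScalarField, x: np.ndarray, h: float = 1e-4) -> np.ndarray:
    """Central-difference gradient of a scalar field at each row of x."""
    grad = np.zeros_like(x)
    for axis in range(x.shape[1]):
        step = np.zeros(x.shape[1])
        step[axis] = h
        grad[:, axis] = (f(x + step) - f(x - step)) / (2.0 * h)
    return grad


def sample_level_set(f: ScalarField, x0: np.ndarray, r: float = 1.05,
                     lr: float = 0.5, n_iter: int = 50) -> np.ndarray:
    """Gradient descent on 0.5 * sum_i (f(x_i) - r)^2, moving points onto f = r."""
    x = x0.copy()
    for _ in range(n_iter):
        residual = f(x) - r  # (N,)
        x = x - lr * residual[:, None] * finite_diff_grad(f, x)
    return x


def grid_cluster(points: np.ndarray, voxel: float = 1.0) -> np.ndarray:
    """Replace all points that fall in the same cubic voxel by their mean."""
    keys = np.floor(points / voxel).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # the returned shape differs across NumPy versions
    sums = np.zeros((inverse.max() + 1, points.shape[1]))
    counts = np.zeros(inverse.max() + 1)
    np.add.at(sums, inverse, points)
    np.add.at(counts, inverse, 1.0)
    return sums / counts[:, None]


rng = np.random.default_rng(0)
start = rng.normal(size=(200, 3)) * 3.0
surface = sample_level_set(lambda p: np.linalg.norm(p, axis=1), start)
print(np.abs(np.linalg.norm(surface, axis=1) - 1.05).max())  # distance from the level set
print(len(surface), "->", len(grid_cluster(surface)))
```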

3.5.2. Quasi-Geodesic Convolution

Unlike convolutions on images (CNNs) and graphs (GCNs), convolutions on curved surfaces are difficult to define accurately, because the Euclidean distance in three-dimensional space does not reflect the distance between points along the surface. To keep computational costs low, the approximate geodesic distance between two surface points $x_i$ and $x_j$, with unit normal vectors $n_i$ and $n_j$, is defined following dMaSIF [31] as:

$$d_{ij} = \lVert x_j - x_i \rVert \left(2 - \langle n_i, n_j \rangle\right) \quad (3)$$

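The approximate geodesic distance therefore depends not only on the Euclidean distance between the two points but also on the orientation of their normal vectors, which define their local coordinate systems [31]: points that are close in space but lie on opposite faces of the surface are pushed apart. Features can then propagate along the surface through convolutions with a local Gaussian window:

$$f_i' = \sum_{j} \exp\!\left(-\frac{d_{ij}^2}{2\sigma^2}\right) h\!\left(\mathcal{F}_i\,(x_j - x_i)\right) \odot f_j \quad (4)$$

where $f_j$ is the feature of point $x_j$, $\sigma$ is the window scale, $\mathcal{F}_i$ is the local coordinate frame at $x_i$ built from $n_i$, and $h$ is a learnable filter.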
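The effect of the normal vectors on the quasi-geodesic distance of dMaSIF [31], d_ij = ‖x_j − x_i‖ (2 − ⟨n_i, n_j⟩), is easy to check numerically. The NumPy sketch below computes this distance and the Gaussian-window aggregation of neighbor features; as a simplification, the learnable filter applied to local coordinates is omitted, so only the windowing step is shown, and the window scale σ = 1 is an assumed value.

```python
import numpy as np


def quasi_geodesic_distance(x: np.ndarray, n: np.ndarray) -> np.ndarray:
    """d_ij = |x_j - x_i| * (2 - <n_i, n_j>) for points x (N, 3) with unit normals n (N, 3)."""
    euclid = np.linalg.norm(x[None, :, :] - x[:, None, :], axis=-1)  # (N, N)
    return euclid * (2.0 - n @ n.T)


def gaussian_window_aggregate(x: np.ndarray, n: np.ndarray, f: np.ndarray,
                              sigma: float = 1.0) -> np.ndarray:
    """Sum neighbor features f (N, C) weighted by exp(-d_ij^2 / (2 sigma^2)).

    Simplification: the learnable filter on local coordinates is omitted.
    """
    d = quasi_geodesic_distance(x, n)
    window = np.exp(-d ** 2 / (2.0 * sigma ** 2))  # (N, N)
    return window @ f  # (N, C)


x = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
same = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]])
opposite = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0]])
print(quasi_geodesic_distance(x, same)[0, 1])      # aligned normals: Euclidean distance kept
print(quasi_geodesic_distance(x, opposite)[0, 1])  # opposite normals: distance tripled
```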

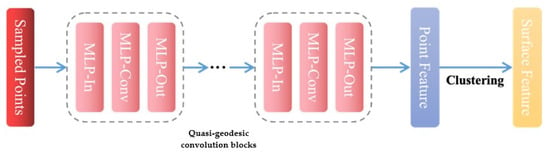

Finally, a three-layer MLP with shared, learnable weights serves as the quasi-geodesic convolution block, learning features in the local geodesic neighborhood of point $x_i$. The convolution blocks update the feature of each point as shown in Formula (5). The structure of the pocket detection module is illustrated in Figure 3.

Figure 3.

The structure of pocket online detection module.

The resulting surface features serve two purposes. They are fed into a pocket classification DNN that predicts the label of each residue (1 for pocket, 0 for non-pocket), and they are also used for affinity prediction as the surface modality.

3.6. Multimodal Representation Learning Module

As shown in previous work, fusing multimodal information provides a more comprehensive understanding of drug–target interactions.

3.6.1. Sequence Modality

SMILES strings and amino acid sequences serve as the primary representations of drugs and proteins, respectively, capturing their fundamental components and functional modules. Sequence data are also abundant and easy to store. With the advance of transformer-based pretrained language models (PLMs), numerous approaches now aim to decipher this biological language: through extensive pretraining on massive datasets, PLMs acquire broad knowledge of the functional regions within sequences. In our MRL module, two widely used PLMs, MolFormer [33] and ESM-2b [30], extract features from the sequence modality. Although the benchmark datasets may not contain many drugs and proteins, these pretrained features still provide rich information.

3.6.2. Structure Modality

Structural information, however, offers a finer-grained perspective on drugs and proteins. A protein's structure dictates its function and provides crucial geometric features such as folding states and binding sites, making it essential for understanding interactions and widely used in drug design. After preprocessing, relational graphs with multimodal information are constructed for both drugs and pockets. Graph neural network (GNN) models have proven effective in extracting topological representations of molecules [34,35]. We select a relational graph convolutional network (RGCN) [36] as the structure encoder for drugs so that the four types of chemical bonds are handled separately during message passing. For pockets, GearNet [29] is used at the residue level of representation.
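An RGCN layer [36] keeps a separate weight matrix for each relation, here each bond type, so single and double bonds pass messages differently. The NumPy sketch below implements one such layer with mean normalization over each relation's neighbors plus a self-connection; the bond-type list, feature sizes, and normalization are assumptions for illustration, and the actual encoder is presumably built from TorchDrug layers.

```python
import numpy as np

BOND_TYPES = ("single", "double", "triple", "aromatic")  # assumed 4 relation types


def rgcn_layer(h: np.ndarray, adj: np.ndarray, w_rel: np.ndarray, w_self: np.ndarray) -> np.ndarray:
    """One RGCN layer: h_i' = ReLU(W_0 h_i + sum_r mean_{j in N_r(i)} W_r h_j).

    h: (N, F) atom features; adj: (R, N, N) with adj[r, i, j] = 1 if atom j is
    bonded to atom i by bond type r; w_rel: (R, F, F_out); w_self: (F, F_out).
    """
    out = h @ w_self
    for r in range(adj.shape[0]):
        deg = adj[r].sum(axis=1, keepdims=True)  # number of type-r neighbors
        norm_adj = np.divide(adj[r], deg, out=np.zeros_like(adj[r]), where=deg > 0)
        out = out + norm_adj @ h @ w_rel[r]
    return np.maximum(out, 0.0)  # ReLU


# Three atoms: a single bond 0-1 and a double bond 1-2.
adj = np.zeros((len(BOND_TYPES), 3, 3))
adj[0, 0, 1] = adj[0, 1, 0] = 1.0
adj[1, 1, 2] = adj[1, 2, 1] = 1.0
h = np.array([[1.0], [2.0], [3.0]])
w_rel = np.ones((len(BOND_TYPES), 1, 1))
print(rgcn_layer(h, adj, w_rel, np.ones((1, 1))).ravel())
```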

3.6.3. Surface Modality

The surface features of proteins, which act as fingerprints of interactions, play a crucial role in understanding interaction dynamics. We therefore directly reuse the protein surface features extracted by our POD module instead of complex hand-crafted features. The effectiveness of this modality is supported by the ablation results in Section 2.2.

3.7. Joint Training Module

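In our framework, we treat pocket prediction as a binary classification task for residues and affinity prediction as a regression task for drug–target pairs. The JT module couples the predicted residue labels (pocket or non-pocket) with pocket feature extraction. Specifically, we employ the binary cross-entropy loss for the pocket detection classifier:

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N}\sum_{i=1}^{N}\left[t_i \log p_i + (1 - t_i)\log(1 - p_i)\right] \quad (6)$$

where $N$ is the number of residues, $t_i \in \{0, 1\}$ is the pocket label of residue $i$, and $p_i$ is its predicted pocket probability.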

For affinity, we employ the mean squared error (MSE), a common choice for regression tasks:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{n}\sum_{i=1}^{n}\left(\hat{y}_i - y_i\right)^2 \quad (7)$$

Here, $\hat{y}_i$ is the predicted affinity, $y_i$ is the measured affinity, and $n$ is the number of samples.

The total loss combines the pocket classification loss and the affinity regression loss through a weight factor $\lambda$, which is set to 0.5 in our experiments.
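This section does not spell out how the weight factor enters the total loss, so the NumPy sketch below assumes L = L_MSE + λ·L_BCE with λ = 0.5, using the standard mean BCE over residues and mean squared error over drug–target pairs; other weightings are possible, and the function names are illustrative. In a PyTorch implementation, the BCE term would typically be computed from logits with `torch.nn.BCEWithLogitsLoss` for numerical stability.

```python
import numpy as np


def bce(prob: np.ndarray, label: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over residues (label 1 = pocket)."""
    p = np.clip(prob, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(label * np.log(p) + (1.0 - label) * np.log(1.0 - p)))


def mse(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error over drug-target pairs."""
    return float(np.mean((pred - target) ** 2))


def joint_loss(aff_pred: np.ndarray, aff_true: np.ndarray, pocket_prob: np.ndarray,
               pocket_label: np.ndarray, lam: float = 0.5) -> float:
    """Assumed combination: L = L_MSE + lam * L_BCE."""
    return mse(aff_pred, aff_true) + lam * bce(pocket_prob, pocket_label)


loss = joint_loss(np.array([7.1, 5.2]), np.array([7.0, 5.0]),
                  np.array([0.9, 0.2, 0.6]), np.array([1.0, 0.0, 1.0]))
print(round(loss, 4))
```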

3.8. Model Training and Evaluation

EMPDTA is implemented with the PyTorch framework (https://pytorch.org/, accessed on 1 May 2024) and the TorchDrug platform (https://torchdrug.ai/, accessed on 1 May 2024). Model parameters are updated with AdamW [37]. The hyperparameters of EMPDTA are determined through a grid search using Weights & Biases (https://wandb.ai/, accessed on 1 May 2024). Experiments are conducted on a Linux workstation with two Intel Xeon Silver 4314 processors @ 2.40 GHz and two NVIDIA RTX 4090 GPUs.

We compare EMPDTA with eight baseline methods for DTA prediction on the sequence-based benchmark datasets, spanning similarity-based and deep learning approaches:

- KronRLS [9] utilizes two independent kernel functions to process molecular fingerprint similarities and the Smith–Waterman [38] score of targets.

- SimBoost [10] leverages features of drugs, targets, and drug–target pairs, using gradient-boosting regression trees as the prediction model.

- CGKronRLS [39,40] is a similarity-based method employing similarity matrices of drugs and targets with a kernel method for affinity prediction.

- DeepDTA [11] uses two CNN branches to encode drug SMILES strings and protein sequences.

- MDeePred [21] feeds multi-channel protein features into a CNN and fingerprint-based molecule vectors into a fully connected neural network (FNN).

- GraphDTA [12] was among the first methods to represent drugs as molecular graphs for DTA prediction.

- DeepGLSTM [22] employs three blocks of graph convolutional networks (GCN) for drug molecules and bidirectional LSTM for protein sequences.

- MFR-DTA [23] proposes a novel architecture that includes BioMLP/CNN blocks, an Elem-feature fusion block, and a Mix–Decoder block to extract drug–target interaction (DTI) information and predict binding regions simultaneously.

Results for the baseline methods above are taken from their respective papers. For the Davis and KIBA datasets, we use the split indexes provided by DeepDTA, so the train/validation/test sets are identical. The split indexes for the Filtered Davis dataset come from MDeePred, and the PDBbind split is taken from TANKBind.

4. Conclusions

Understanding and identifying the binding pockets and affinities of drug–target interactions plays a crucial role in drug development. Early virtual screening methods could quickly identify drugs with high affinity values, but, given the diversity of pockets, affinity values alone cannot reveal where a drug binds. In this paper, we propose a comprehensive prediction framework that incorporates pocket online detection, enabling the simultaneous prediction of drug–target affinity and binding pocket regions.

Our model seamlessly integrates pocket online detection, multimodal representation learning, and joint training modules. The strong performance of our multi-task, multimodal framework on both downstream tasks has been validated across benchmark datasets. Visualization of the predicted binding pockets shows close agreement with the actual binding sites. By coupling accurate affinity prediction with precise binding pocket identification, the framework offers a robust solution for future drug screening and opens new avenues for exploration in drug development.

Author Contributions

D.H. conducted the experiments and wrote the paper. J.X. helped revise this paper and conceived the experiments. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The source data and code repository can be accessed at https://github.com/BioCenter-SHU/EMPDTA, accessed on 1 May 2024.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Pei, Q.; Gao, K.; Wu, L.; Zhu, J.; Xia, Y.; Xie, S.; Qin, T.; He, K.; Liu, T.-Y.; Yan, R. FABind: Fast and Accurate Protein-Ligand Binding. Adv. Neural Inf. Process. Syst. 2023, 36, 55963–55980. [Google Scholar]

- Dhakal, A.; McKay, C.; Tanner, J.J.; Cheng, J. Artificial Intelligence in the Prediction of Protein–Ligand Interactions: Recent Advances and Future Directions. Brief. Bioinform. 2022, 23, bbab476. [Google Scholar] [CrossRef]

- Wouters, O.J.; McKee, M.; Luyten, J. Estimated Research and Development Investment Needed to Bring a New Medicine to Market, 2009–2018. JAMA 2020, 323, 844–853. [Google Scholar] [CrossRef]

- Stank, A.; Kokh, D.B.; Fuller, J.C.; Wade, R.C. Protein Binding Pocket Dynamics. Acc. Chem. Res. 2016, 49, 809–815. [Google Scholar] [CrossRef]

- Le Guilloux, V.; Schmidtke, P.; Tuffery, P. Fpocket: An Open Source Platform for Ligand Pocket Detection. BMC Bioinform. 2009, 10, 168. [Google Scholar] [CrossRef]

- Krivák, R.; Hoksza, D. P2Rank: Machine Learning Based Tool for Rapid and Accurate Prediction of Ligand Binding Sites from Protein Structure. J. Cheminform. 2018, 10, 39. [Google Scholar] [CrossRef]

- Huang, B.; Schroeder, M. LIGSITEcsc: Predicting Ligand Binding Sites Using the Connolly Surface and Degree of Conservation. BMC Struct. Biol. 2006, 6, 19. [Google Scholar] [CrossRef]

- Lu, W.; Wu, Q.; Zhang, J.; Rao, J.; Li, C.; Zheng, S. TANKBind: Trigonometry-Aware Neural NetworKs for Drug-Protein Binding Structure Prediction. Adv. Neural Inf. Process. Syst. 2022, 35, 7236–7249. [Google Scholar]

- Pahikkala, T.; Airola, A.; Pietilä, S.; Shakyawar, S.; Szwajda, A.; Tang, J.; Aittokallio, T. Toward More Realistic Drug-Target Interaction Predictions. Brief Bioinform. 2015, 16, 325–337. [Google Scholar] [CrossRef]

- He, T.; Heidemeyer, M.; Ban, F.; Cherkasov, A.; Ester, M. SimBoost: A Read-across Approach for Predicting Drug–Target Binding Affinities Using Gradient Boosting Machines. J. Cheminformatics 2017, 9, 24. [Google Scholar] [CrossRef]

- Öztürk, H.; Olmez, E.; Özgür, A. DeepDTA: Deep Drug–Target Binding Affinity Prediction. Bioinformatics 2018, 34, i821–i829.

- Nguyen, T.; Le, H.; Quinn, T.P.; Nguyen, T.; Le, T.D.; Venkatesh, S. GraphDTA: Predicting Drug–Target Binding Affinity with Graph Neural Networks. Bioinformatics 2021, 37, 1140–1147.

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly Accurate Protein Structure Prediction with AlphaFold. Nature 2021, 596, 583–589.

- Trott, O.; Olson, A.J. AutoDock Vina: Improving the Speed and Accuracy of Docking with a New Scoring Function, Efficient Optimization, and Multithreading. J. Comput. Chem. 2010, 31, 455–461.

- Ackloo, S.; Al-awar, R.; Amaro, R.E.; Arrowsmith, C.H.; Azevedo, H.; Batey, R.A.; Bengio, Y.; Betz, U.A.K.; Bologa, C.G.; Chodera, J.D.; et al. CACHE (Critical Assessment of Computational Hit-Finding Experiments): A Public–Private Partnership Benchmarking Initiative to Enable the Development of Computational Methods for Hit-Finding. Nat. Rev. Chem. 2022, 6, 287–295.

- Li, X.; Jacobson, M.P.; Friesner, R.A. High-Resolution Prediction of Protein Helix Positions and Orientations. Proteins: Struct. Funct. Bioinform. 2004, 55, 368–382.

- Gentile, F.; Agrawal, V.; Hsing, M.; Ton, A.-T.; Ban, F.; Norinder, U.; Gleave, M.E.; Cherkasov, A. Deep Docking: A Deep Learning Platform for Augmentation of Structure Based Drug Discovery. ACS Cent. Sci. 2020, 6, 939–949.

- Batzner, S.; Musaelian, A.; Sun, L.; Geiger, M.; Mailoa, J.P.; Kornbluth, M.; Molinari, N.; Smidt, T.E.; Kozinsky, B. E(3)-Equivariant Graph Neural Networks for Data-Efficient and Accurate Interatomic Potentials. Nat. Commun. 2022, 13, 2453.

- Roche, R.; Moussad, B.; Shuvo, M.H.; Bhattacharya, D. E(3) Equivariant Graph Neural Networks for Robust and Accurate Protein-Protein Interaction Site Prediction. PLoS Comput. Biol. 2023, 19, e1011435.

- D’Souza, S.; Prema, K.V.; Balaji, S.; Shah, R. Deep Learning-Based Modeling of Drug–Target Interaction Prediction Incorporating Binding Site Information of Proteins. Interdiscip. Sci. Comput. Life Sci. 2023, 15, 306–315.

- Rifaioglu, A.S.; Cetin Atalay, R.; Cansen Kahraman, D.; Doğan, T.; Martin, M.; Atalay, V. MDeePred: Novel Multi-Channel Protein Featurization for Deep Learning-Based Binding Affinity Prediction in Drug Discovery. Bioinformatics 2021, 37, 693–704.

- Mukherjee, S.; Ghosh, M.; Basuchowdhuri, P. DeepGLSTM: Deep Graph Convolutional Network and LSTM Based Approach for Predicting Drug-Target Binding Affinity. In Proceedings of the 2022 SIAM International Conference on Data Mining (SDM); Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2022; pp. 729–737.

- Hua, Y.; Song, X.; Feng, Z.; Wu, X. MFR-DTA: A Multi-Functional and Robust Model for Predicting Drug-Target Binding Affinity and Region. Bioinformatics 2023, 39, btad056.

- Davis, M.I.; Hunt, J.P.; Herrgård, S.; Ciceri, P.; Wodicka, L.; Pallares, G.; Hocker, M.; Treiber, D.K.; Zarrinkar, P. Comprehensive Analysis of Kinase Inhibitor Selectivity. Nat. Biotechnol. 2011, 29, 1046–1051.

- Tang, J.; Szwajda, A.; Shakyawar, S.; Xu, T.; Hintsanen, P.; Wennerberg, K.; Aittokallio, T. Making Sense of Large-Scale Kinase Inhibitor Bioactivity Data Sets: A Comparative and Integrative Analysis. J. Chem. Inf. Model. 2014, 54, 735–743.

- Liu, Z.; Su, M.; Han, L.; Liu, J.; Yang, Q.; Li, Y.; Wang, R. Forging the Basis for Developing Protein-Ligand Interaction Scoring Functions. Acc. Chem. Res. 2017, 50, 302–309.

- Abdollahi, N.; Tonekaboni, S.; Huang, J.J.C.; Wang, B.; MacKinnon, S. NodeCoder: A Graph-Based Machine Learning Platform to Predict Active Sites of Modeled Protein Structures. arXiv 2023, arXiv:2302.03590.

- Zhu, Z.; Shi, C.; Zhang, Z.; Liu, S.; Xu, M.; Yuan, X.; Zhang, Y.; Chen, J.; Cai, H.; Lu, J.; et al. TorchDrug: A Powerful and Flexible Machine Learning Platform for Drug Discovery. arXiv 2022, arXiv:2202.08320.

- Zhang, Z.; Xu, M.; Jamasb, A.; Chenthamarakshan, V.; Lozano, A.; Das, P.; Tang, J. Protein Representation Learning by Geometric Structure Pretraining. arXiv 2022, arXiv:2203.06125.

- Madani, A.; Krause, B.; Greene, E.R.; Subramanian, S.; Mohr, B.P.; Holton, J.M.; Olmos, J.L.; Xiong, C.; Sun, Z.Z.; Socher, R.; et al. Large Language Models Generate Functional Protein Sequences across Diverse Families. Nat. Biotechnol. 2023, 41, 1099–1106.

- Sverrisson, F.; Feydy, J.; Correia, B.E.; Bronstein, M.M. Fast End-to-End Learning on Protein Surfaces. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 15267–15276.

- Charlier, B.; Feydy, J.; Glaunès, J.; Collin, F.-D.; Durif, G. Kernel Operations on the GPU, with Autodiff, without Memory Overflows. J. Mach. Learn. Res. 2020, 22, 1–6.

- Ross, J.; Belgodere, B.; Chenthamarakshan, V.; Padhi, I.; Mroueh, Y.; Das, P. Large-Scale Chemical Language Representations Capture Molecular Structure and Properties. Nat. Mach. Intell. 2022, 4, 1256–1264.

- Klicpera, J.; Groß, J.; Günnemann, S. Directional Message Passing for Molecular Graphs. arXiv 2020, arXiv:2003.03123.

- Li, S.; Zhou, J.; Xu, T.; Dou, D.; Xiong, H. GeomGCL: Geometric Graph Contrastive Learning for Molecular Property Prediction. Proc. AAAI Conf. Artif. Intell. 2021, 36, 4541–4549.

- Schlichtkrull, M.; Kipf, T.; Bloem, P.; van den Berg, R.; Titov, I.; Welling, M. Modeling Relational Data with Graph Convolutional Networks. In Proceedings of The Semantic Web: 15th International Conference, ESWC 2018, Heraklion, Crete, Greece, 3–7 June 2018; Springer International Publishing: New York, NY, USA, 2018.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. In Proceedings of the 7th International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Smith, T.F.; Waterman, M.S. Identification of Common Molecular Subsequences. J. Mol. Biol. 1981, 147, 195–197.

- Airola, A.; Pahikkala, T. Fast Kronecker Product Kernel Methods via Generalized Vec Trick. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 3374–3387.

- Cichońska, A.; Ravikumar, B.; Allaway, R.J.; Wan, F.; Park, S.; Isayev, O.; Li, S.; Mason, M.; Lamb, A.; Tanoli, Z.; et al. Crowdsourced Mapping of Unexplored Target Space of Kinase Inhibitors. Nat. Commun. 2021, 12, 3307.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).