Setting Boundaries for Statistical Mechanics

Abstract

1. Introduction

- The magnetic field is inextricably coupled to the electric field by the theory of (special) relativity. As Einstein put it (p. 57, [25]), “The special theory of relativity… was simply a systematic development of the electrodynamics of Clerk Maxwell and Lorentz.” The Feynman lectures [4] (e.g., Section 13-6 of Volume 2, on electrodynamics) and many other texts on electrodynamics and/or special relativity [26] elaborate on Einstein’s statement.

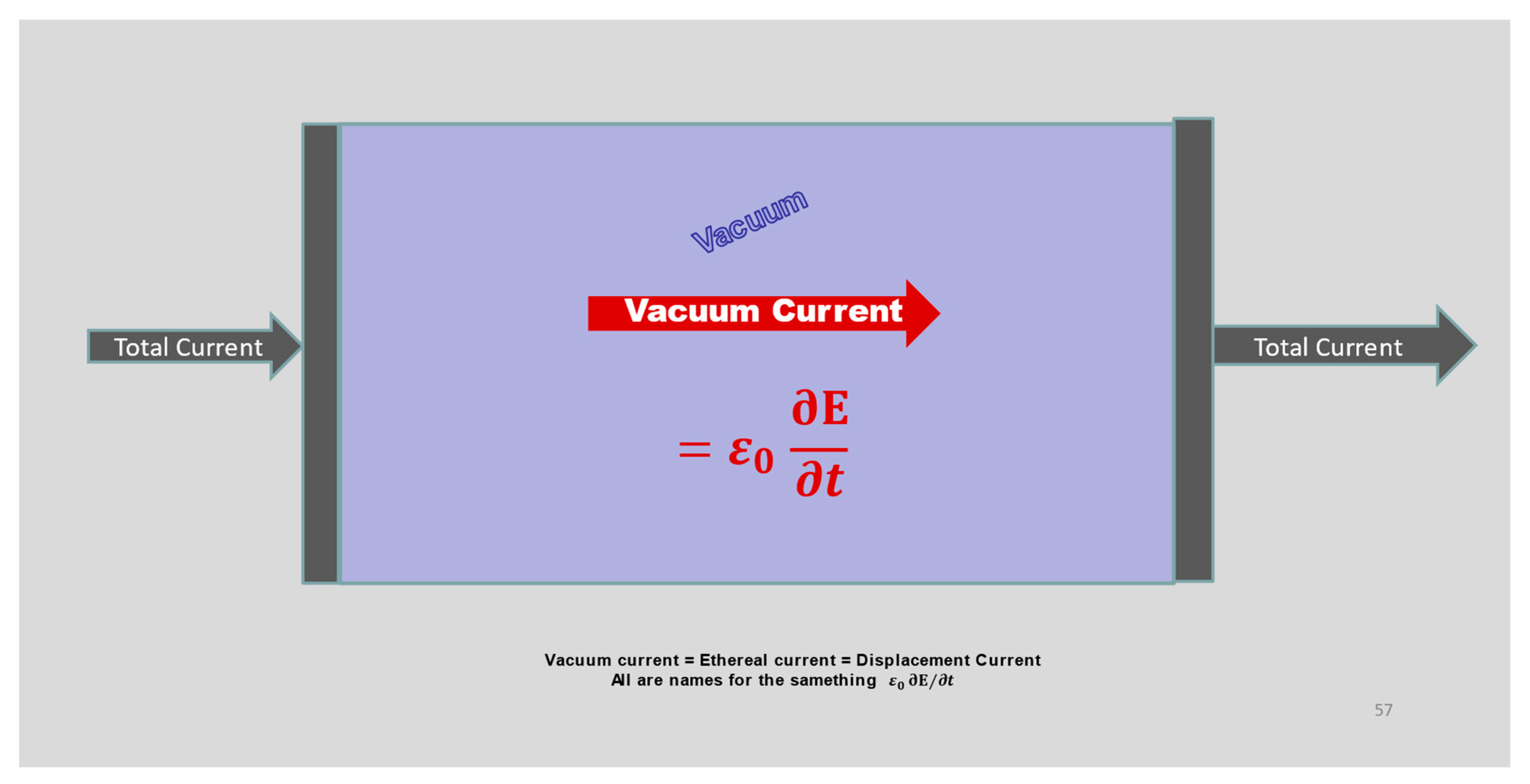

- The Maxwell-Ampere law allows electrical phenomena to couple with magnetic phenomena to produce radiation (like light) that propagates through a vacuum containing zero matter and zero charge.

- The Maxwell-Ampere law implies that the divergence of the right hand side of Equation (5) is zero, because the left hand side is a curl. The divergence of the curl is zero for any sufficiently smooth vector field, and so for any field that satisfies the Maxwell equations, as is proven in the first pages of textbooks on vector calculus. The reader uncomfortable with vector calculus can simply substitute the definitions of curl and divergence [27,28] into the relevant Equation (11) below and note the cancellation that occurs.

- A field with zero divergence is by definition a field that is perfectly conserved: it can neither accumulate nor dissipate nor disappear. Thus, the right hand side of the Maxwell-Ampere law is a perfectly conserved quantity, an incompressible fluid whose flow might be called ‘total current’ [29,30]. Because the right hand side of the Maxwell-Ampere law always includes a term that is present everywhere, even where charge and its flux are zero, this term can provide the coupling needed to create radiation. The derivation of the radiation law (e.g., Equation (13) below) can be found in most textbooks of electrodynamics.

- The conservation of total current is of great practical importance [31,32] because it can be computed in situations involving large numbers (e.g., 10^19) of charges, where computation of Equation (2) is impossible because of the extraordinary number of charges and interactions (that are not just pairwise, see [33] and references therein). The continuity equation so important in fluid mechanics is thus more or less useless in studying the electrodynamics of material (and chemical) systems on an atomic scale.
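The cancellation that makes the divergence of a curl vanish can also be checked numerically. The following is a minimal sketch, not the author's method. It assumes that the Maxwell-Ampere law has its usual form, with the curl of B/μ0 on the left hand side and the total current (flux of charge plus ε0 ∂E/∂t) on the right hand side, so that the divergence of the total current equals the divergence of a curl. The test field `F`, the evaluation point, the step size `H`, and the helper names `partial`, `curl`, and `divergence` are all hypothetical choices made for illustration.

```python
import math

H = 1e-3  # finite-difference step (an illustrative choice, not from the paper)


def partial(f, axis, h=H):
    """Return the central-difference partial derivative of f(x, y, z) along axis 0, 1, or 2."""
    def df(x, y, z):
        plus = [x, y, z]
        minus = [x, y, z]
        plus[axis] += h
        minus[axis] -= h
        return (f(*plus) - f(*minus)) / (2.0 * h)
    return df


def curl(F):
    """Curl of a vector field F = (Fx, Fy, Fz); each component is a function of (x, y, z)."""
    Fx, Fy, Fz = F
    dFz_dy, dFy_dz = partial(Fz, 1), partial(Fy, 2)
    dFx_dz, dFz_dx = partial(Fx, 2), partial(Fz, 0)
    dFy_dx, dFx_dy = partial(Fy, 0), partial(Fx, 1)
    return (
        lambda x, y, z: dFz_dy(x, y, z) - dFy_dz(x, y, z),
        lambda x, y, z: dFx_dz(x, y, z) - dFz_dx(x, y, z),
        lambda x, y, z: dFy_dx(x, y, z) - dFx_dy(x, y, z),
    )


def divergence(F):
    """Divergence of a vector field F = (Fx, Fy, Fz)."""
    Fx, Fy, Fz = F
    dFx_dx, dFy_dy, dFz_dz = partial(Fx, 0), partial(Fy, 1), partial(Fz, 2)
    return lambda x, y, z: dFx_dx(x, y, z) + dFy_dy(x, y, z) + dFz_dz(x, y, z)


# Arbitrary smooth test field (hypothetical). It stands in for B / mu0 only in the
# sense that its curl plays the role of the total current; its own divergence is
# deliberately nonzero so that the check is not trivial.
F = (
    lambda x, y, z: math.sin(y * z) + x ** 2,
    lambda x, y, z: math.exp(-x) * math.cos(z),
    lambda x, y, z: x * y * z + math.sin(x),
)

point = (0.3, -0.7, 1.1)
div_F = divergence(F)(*point)               # analytically 2x + xy at this point
div_curl_F = divergence(curl(F))(*point)    # vanishes up to rounding error

print(f"div F       = {div_F:.6f}")
print(f"div(curl F) = {div_curl_F:.2e}")
```

The nested central differences commute, so the mixed second differences cancel in pairs, just as the mixed partial derivatives do in the analytic proof. Only floating-point rounding error remains in `div_curl_F`, whereas `div_F` is clearly nonzero.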

2. Theory

3. Results

4. Discussion

- (1) the structure of the system

- (2) the boundary conditions on the confining structure that bounds the system

- (3) the change in shape of the structure as it moves ‘to infinity’

- (4) the change in boundary conditions as the structure moves ‘to infinity’

- The boundary treatments must be compatible with electrodynamics because the equations of electrodynamics are universal and exact when written in the form of the Core Maxwell Equations, Equations (2)–(5).

- Structures and boundaries that describe the system and the specific experimental setup used for measurement must be involved, albeit in an approximate way.

- Systems of known structure and known function should be studied first. These often dramatically simplify problems, as they were designed to do by engineers or evolution, once we know how to describe and exploit the simplifications using mathematics.

- Systems that are devices, with well-defined inputs, outputs, and input-output relations, should be identified because their properties are so much easier to deal with than those of systems and machines in general. Fortunately, devices are found throughout living systems, albeit not always as universally (or as clearly defined) as in engineering systems [132,133,134].

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Eisenberg, B. Setting Boundaries for Statistical Mechanics. arXiv 2021, arXiv:2112.12550.

- Politzer, P.; Murray, J.S. The Hellmann-Feynman theorem: A perspective. J. Mol. Model. 2018, 24, 266.

- Feynman, R.P. Forces in molecules. Phys. Rev. 1939, 56, 340–343.

- Feynman, R.P.; Leighton, R.B.; Sands, M. The Feynman Lectures on Physics, Volumes 1, 2, 3; Addison-Wesley Publishing Co.: New York, NY, USA, 1963; Available online: http://www.feynmanlectures.caltech.edu/II_toc.html (accessed on 2 October 2022).

- Oriols, X.; Ferry, D.K. Why engineers are right to avoid the quantum reality offered by the orthodox theory? [Point of View]. Proc. IEEE 2021, 109, 955–961.

- Benseny, A.; Tena, D.; Oriols, X. On the Classical Schrödinger Equation. Fluct. Noise Lett. 2016, 15, 1640011.

- Albareda, G.; Marian, D.; Benali, A.; Alarcón, A.; Moises, S.; Oriols, X. BITLLES: Electron Transport Simulation with Quantum Trajectories. arXiv 2016, arXiv:1609.06534.

- Benseny, A.; Albareda, G.; Sanz, Á.S.; Mompart, J.; Oriols, X. Applied bohmian mechanics. Eur. Phys. J. D 2014, 68, 286.

- Ferry, D.K. Quantum Mechanics: An Introduction for Device Physicists and Electrical Engineers; Taylor & Francis Group: New York, NY, USA, 2020.

- Ferry, D.K. An Introduction to Quantum Transport in Semiconductors; Jenny Stanford Publishing: Singapore, 2017.

- Brunner, R.; Ferry, D.; Akis, R.; Meisels, R.; Kuchar, F.; Burke, A.; Bird, J. Open quantum dots: II. Probing the classical to quantum transition. J. Phys. Condens. Matter 2012, 24, 343202.

- Ferry, D.; Burke, A.; Akis, R.; Brunner, R.; Day, T.; Meisels, R.; Kuchar, F.; Bird, J.; Bennett, B. Open quantum dots—Probing the quantum to classical transition. Semicond. Sci. Technol. 2011, 26, 043001.

- Hirschfelder, J.O.; Curtiss, C.F.; Bird, R.B. Molecular Theory of Gases and Liquids; John Wiley: New York, NY, USA, 1964; ISBN 9780471400653.

- Ferry, D.K.; Weinbub, J.; Nedjalkov, M.; Selberherr, S. A review of quantum transport in field-effect transistors. Semicond. Sci. Technol. 2021, 37, 4.

- Ferry, D.K.; Nedjalkov, M. Wigner Function and Its Application; Institute of Physics Publishing: Philadelphia, PA, USA, 2019.

- Ferry, D.K. Transport in Semiconductor Mesoscopic Devices; Institute of Physics Publishing: Philadelphia, PA, USA, 2015.

- Albareda, G.; Marian, D.; Benali, A.; Alarcón, A.; Moises, S.; Oriols, X. Electron Devices Simulation with Bohmian Trajectories. Simul. Transp. Nanodevices 2016, 261–318.

- Oriols, X.; Ferry, D. Quantum transport beyond DC. J. Comput. Electron. 2013, 12, 317–330.

- Colomés, E.; Zhan, Z.; Marian, D.; Oriols, X. Quantum dissipation with conditional wave functions: Application to the realistic simulation of nanoscale electron devices. arXiv 2017, arXiv:1707.05990.

- Devashish, P.; Xavier, O.; Guillermo, A. From micro- to macrorealism: Addressing experimental clumsiness with semi-weak measurements. New J. Phys. 2020, 22, 073047.

- Marian, D.; Colomés, E.; Zhan, Z.; Oriols, X. Quantum noise from a Bohmian perspective: Fundamental understanding and practical computation in electron devices. J. Comput. Electron. 2015, 14, 114–128.

- Ferry, D.; Akis, R.; Brunner, R. Probing the quantum–classical connection with open quantum dots. Phys. Scr. 2015, 2015, 014010.

- Ferry, D.K. Ohm’s Law in a Quantum World. Science 2012, 335, 45–46.

- Ferry, D.K. Nanowires in nanoelectronics. Science 2008, 319, 579–580.

- Einstein, A. Essays in Science, Originally Published as Mein Weltbild 1933, Translated from the German by Alan Harris; Open Road Media: New York, NY, USA, 1934.

- Feynman, R.P.; Leighton, R.B.; Sands, M. Six Not-So-Easy Pieces: Einstein’s Relativity, Symmetry, and Space-Time; Basic Books: New York, NY, USA, 2011.

- Schey, H.M. Div, Grad, Curl, and All That: An Informal Text on Vector Calculus; W. W. Norton & Company, Inc.: New York, NY, USA, 2005.

- Arfken, G.B.; Weber, H.J.; Harris, F.E. Mathematical Methods for Physicists: A Comprehensive Guide; Elsevier Science: Amsterdam, The Netherlands, 2013.

- Eisenberg, R.S. Maxwell Equations Without a Polarization Field, Using a Paradigm from Biophysics. Entropy 2021, 23, 172.

- Eisenberg, R. A Necessary Addition to Kirchhoff’s Current Law of Circuits, Version 2. Eng. Arch. 2022.

- Eisenberg, R.; Oriols, X.; Ferry, D.K. Kirchhoff’s Current Law with Displacement Current. arXiv 2022, arXiv:2207.08277.

- Eisenberg, B.; Gold, N.; Song, Z.; Huang, H. What Current Flows Through a Resistor? arXiv 2018, arXiv:1805.04814.

- Xu, Z. Electrostatic interaction in the presence of dielectric interfaces and polarization-induced like-charge attraction. Phys. Rev. E 2013, 87, 013307.

- Gielen, G.; Sansen, W.M. Symbolic Analysis for Automated Design of Analog Integrated Circuits; Springer Science & Business Media: New York, NY, USA, 2012; Volume 137.

- Ayers, J.E. Digital Integrated Circuits: Analysis and Design, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2018.

- Gray, P.R.; Hurst, P.J.; Lewis, S.H.; Meyer, R.G. Analysis and Design of Analog Integrated Circuits; Wiley: Hoboken, NJ, USA, 2009.

- Sedra, A.S.; Smith, K.C.; Chan, T.; Carusone, T.C.; Gaudet, V. Microelectronic Circuits; Oxford University Press: Oxford, UK, 2020.

- Lienig, J.; Scheible, J. Fundamentals of Layout Design for Electronic Circuits; Springer: New York, NY, USA, 2020.

- Hall, S.H.; Heck, H.L. Advanced Signal Integrity for High-Speed Digital Designs; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Horowitz, P.; Hill, W. The Art of Electronics, 3rd ed.; Cambridge University Press: New York, NY, USA, 2015; p. 1224.

- Berry, S.R.; Rice, S.A.; Ross, J. Physical Chemistry, 2nd ed.; Oxford: New York, NY, USA, 2000; p. 1064.

- Arthur, J.W. The Evolution of Maxwell’s Equations from 1862 to the Present Day. IEEE Antennas Propag. Mag. 2013, 55, 61–81.

- Heaviside, O. Electromagnetic Theory; Cosimo, Inc.: New York, NY, USA, 2008; Volume 3.

- Nahin, P.J. Oliver Heaviside: The Life, Work, and Times of an Electrical Genius of the Victorian Age; Johns Hopkins University Press: Baltimore, MD, USA, 2002.

- Yavetz, I. From Obscurity to Enigma: The Work of Oliver Heaviside; Springer Science & Business Media: New York, NY, USA, 1995; Volume 16, pp. 1872–1889.

- Buchwald, J.Z. Oliver Heaviside, Maxwell’s Apostle and Maxwellian Apostate. Centaurus 1985, 28, 288–330.

- Jackson, J.D. Classical Electrodynamics, 3rd ed.; Wiley: New York, NY, USA, 1999; p. 832.

- Whittaker, E. A History of the Theories of Aether & Electricity; Harper: New York, NY, USA, 1951.

- Abraham, M.; Becker, R. The Classical Theory of Electricity and Magnetism; Blackie and Subsequent Dover Reprints: Glasgow, UK, 1932; p. 303.

- Abraham, M.; Föppl, A. Theorie der Elektrizität: Bd. Elektromagnetische Theorie der Strahlung; BG Teubner: Berlin, Germany, 1905; Volume 2.

- Villani, M.; Oriols, X.; Clochiatti, S.; Weimann, N.; Prost, W. The accurate predictions of THz quantum currents requires a new displacement current coefficient instead of the traditional transmission one. In Proceedings of the 2020 Third International Workshop on Mobile Terahertz Systems (IWMTS), Essen, Germany, 1–2 July 2020.

- Cheng, L.; Ming, Y.; Ding, Z. Bohmian trajectory-bloch wave approach to dynamical simulation of electron diffraction in crystal. New J. Phys. 2018, 20, 113004.

- Xu, S.; Sheng, P.; Liu, C. An energetic variational approach for ion transport. arXiv 2014, arXiv:1408.4114.

- Eisenberg, B.; Hyon, Y.; Liu, C. Energy Variational Analysis EnVarA of Ions in Water and Channels: Field Theory for Primitive Models of Complex Ionic Fluids. J. Chem. Phys. 2010, 133, 104104.

- Xu, S.; Eisenberg, B.; Song, Z.; Huang, H. Osmosis through a Semi-permeable Membrane: A Consistent Approach to Interactions. arXiv 2018, arXiv:1806.00646.

- Giga, M.-H.; Kirshtein, A.; Liu, C. Variational Modeling and Complex Fluids. In Handbook of Mathematical Analysis in Mechanics of Viscous Fluids; Giga, Y., Novotny, A., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 1–41.

- Debye, P.J.W. Polar Molecules; Chemical Catalog Company, Incorporated and Francis Mills Turner Publishing: New York, NY, USA, 1929.

- Debye, P.; Falkenhagen, H. Dispersion of the Conductivity and Dielectric Constants of Strong Electrolytes. Phys. Z. 1928, 29, 401–426.

- Barsoukov, E.; Macdonald, J.R. Impedance Spectroscopy: Theory, Experiment, and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Kremer, F.; Schönhals, A. Broadband Dielectric Spectroscopy; Springer: Berlin/Heidelberg, Germany, 2003; p. 729.

- Eisenberg, B.; Oriols, X.; Ferry, D. Dynamics of Current, Charge, and Mass. Mol. Based Math. Biol. 2017, 5, 78–115.

- Buchner, R.; Barthel, J. Dielectric Relaxation in Solutions. Annu. Rep. Prog. Chem. Sect. C Phys. Chem. 2001, 97, 349–382.

- Barthel, J.; Buchner, R.; Münsterer, M. Electrolyte Data Collection Vol. 12, Part 2: Dielectric Properties of Water and Aqueous Electrolyte Solutions; DECHEMA: Frankfurt am Main, Germany, 1995.

- Kraus, C.A.; Fuoss, R.M. Properties of Electrolytic Solutions. I. Conductance as Influenced by the Dielectric Constant of the Solvent Medium. J. Am. Chem. Soc. 1933, 55, 21–36.

- Oncley, J. The Investigation of Proteins by Dielectric Measurements. Chem. Rev. 1942, 30, 433–450.

- Fuoss, R.M. Theory of dielectrics. J. Chem. Educ. 1949, 26, 683.

- Von Hippel, A.R. Dielectric Materials and Applications; Artech House on Demand: Norwood, MA, USA, 1954; Volume 2.

- Fröhlich, H. Theory of Dielectrics: Dielectric Constant and Dielectric Loss; Clarendon Press: Oxford, UK, 1958.

- Nee, T.-w.; Zwanzig, R. Theory of Dielectric Relaxation in Polar Liquids. J. Chem. Phys. 1970, 52, 6353–6363.

- Scaife, B.K.P. Principles of Dielectrics; Oxford University Press: New York, NY, USA, 1989; p. 384.

- Ritschel, U.; Wilets, L.; Rehr, J.J.; Grabiak, M. Non-local dielectric functions in classical electrostatics and QCD models. J. Phys. G Nucl. Part. Phys. 1992, 18, 1889.

- Kurnikova, M.G.; Waldeck, D.H.; Coalson, R.D. A molecular dynamics study of the dielectric friction. J. Chem. Phys. 1996, 105, 628–638.

- Heinz, T.N.; Van Gunsteren, W.F.; Hunenberger, P.H. Comparison of four methods to compute the dielectric permittivity of liquids from molecular dynamics simulations. J. Chem. Phys. 2001, 115, 1125–1136.

- Pitera, J.W.; Falta, M.; Van Gunsteren, W.F. Dielectric properties of proteins from simulation: The effects of solvent, ligands, pH, and temperature. Biophys. J. 2001, 80, 2546–2555.

- Schutz, C.N.; Warshel, A. What are the dielectric “constants” of proteins and how to validate electrostatic models? Proteins 2001, 44, 400–417.

- Fiedziuszko, S.J.; Hunter, I.C.; Itoh, T.; Kobayashi, Y.; Nishikawa, T.; Stitzer, S.N.; Wakino, K. Dielectric materials, devices, and circuits. IEEE Trans. Microw. Theory Tech. 2002, 50, 706–720.

- Doerr, T.P.; Yu, Y.-K. Electrostatics in the presence of dielectrics: The benefits of treating the induced surface charge density directly. Am. J. Phys. 2004, 72, 190–196.

- Rotenberg, B.; Cadene, A.; Dufreche, J.F.; Durand-Vidal, S.; Badot, J.C.; Turq, P. An analytical model for probing ion dynamics in clays with broadband dielectric spectroscopy. J. Phys. Chem. B 2005, 109, 15548–15557.

- Kuehn, S.; Marohn, J.A.; Loring, R.F. Noncontact dielectric friction. J. Phys. Chem. B 2006, 110, 14525–14528.

- Dyer, K.M.; Perkyns, J.S.; Stell, G.; Pettitt, B.M. A molecular site-site integral equation that yields the dielectric constant. J. Chem. Phys. 2008, 129, 104512.

- Fulton, R.L. The nonlinear dielectric behavior of water: Comparisons of various approaches to the nonlinear dielectric increment. J. Chem. Phys. 2009, 130, 204503–204510.

- Angulo-Sherman, A.; Mercado-Uribe, H. Dielectric spectroscopy of water at low frequencies: The existence of an isopermitive point. Chem. Phys. Lett. 2011, 503, 327–330.

- Ben-Yaakov, D.; Andelman, D.; Podgornik, R. Dielectric decrement as a source of ion-specific effects. J. Chem. Phys. 2011, 134, 074705.

- Riniker, S.; Kunz, A.-P.E.; Van Gunsteren, W.F. On the Calculation of the Dielectric Permittivity and Relaxation of Molecular Models in the Liquid Phase. J. Chem. Theory Comput. 2011, 7, 1469–1475.

- Zarubin, G.; Bier, M. Static dielectric properties of dense ionic fluids. J. Chem. Phys. 2015, 142, 184502.

- Eisenberg, B.; Liu, W. Relative dielectric constants and selectivity ratios in open ionic channels. Mol. Based Math. Biol. 2017, 5, 125–137.

- Böttcher, C.J.F.; Van Belle, O.C.; Bordewijk, P.; Rip, A. Theory of Electric Polarization; Elsevier Science Ltd.: Amsterdam, The Netherlands, 1978; Volume 2.

- Parsegian, V.A. Van der Waals Forces: A Handbook for Biologists, Chemists, Engineers, and Physicists; Cambridge University Press: New York, NY, USA, 2006; p. 396.

- Israelachvili, J. Intermolecular and Surface Forces; Academic Press: New York, NY, USA, 1992; p. 450.

- Banwell, C.N.; McCash, E.M. Fundamentals of Molecular Spectroscopy; McGraw-Hill: New York, NY, USA, 1994; Volume 851.

- Demchenko, A.P. Ultraviolet Spectroscopy of Proteins; Springer Science & Business Media: New York, NY, USA, 2013.

- Jaffé, H.H.; Orchin, M. Theory and Applications of Ultraviolet Spectroscopy; John Wiley & Sons, Inc: Hoboken, NJ, USA, 1972.

- Rao, K.N. Molecular Spectroscopy: Modern Research; Elsevier: Amsterdam, The Netherlands, 2012.

- Sindhu, P. Fundamentals of Molecular Spectroscopy; New Age International: New Delhi, India, 2006.

- Stuart, B. Infrared Spectroscopy; Wiley Online Library John Wiley & Sons, Inc: Hoboken, NJ, USA, 2005.

- Asmis, K.R.; Neumark, D.M. Vibrational Spectroscopy of Microhydrated Conjugate Base Anions. Acc. Chem. Res. 2011, 45, 43–52.

- Faubel, M.; Siefermann, K.R.; Liu, Y.; Abel, B. Ultrafast Soft X-ray Photoelectron Spectroscopy at Liquid Water Microjets. Acc. Chem. Res. 2011, 45, 120–130.

- Jeon, J.; Yang, S.; Choi, J.-H.; Cho, M. Computational Vibrational Spectroscopy of Peptides and Proteins in One and Two Dimensions. Acc. Chem. Res. 2009, 42, 1280–1289.

- Gudarzi, M.M.; Aboutalebi, S.H. Self-consistent dielectric functions of materials: Toward accurate computation of Casimir–van der Waals forces. Sci. Adv. 2021, 7, eabg2272.

- Wegener, M. Extreme Nonlinear Optics: An Introduction; Springer Science & Business Media: New York, NY, USA, 2005.

- Sutherland, R.L. Handbook of Nonlinear Optics; CRC Press: Boca Raton, FL, USA, 2003.

- Boyd, R.W. Nonlinear Optics, 3rd ed.; Academic Press: Cambridge, MA, USA, 2008; p. 640.

- Hill, W.T.; Lee, C.H. Light-Matter Interaction; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Lodahl, P.; Mahmoodian, S.; Stobbe, S.; Rauschenbeutel, A.; Schneeweiss, P.; Volz, J.; Pichler, H.; Zoller, P. Chiral quantum optics. Nature 2017, 541, 473–480.

- Zheng, B.; Madni, H.A.; Hao, R.; Zhang, X.; Liu, X.; Li, E.; Chen, H. Concealing arbitrary objects remotely with multi-folded transformation optics. Light Sci. Appl. 2016, 5, e16177.

- Robinson, F.N.H. Macroscopic Electromagnetism; Pergamon Press: Oxford, UK, 1973; Volume 57.

- Eisenberg, R.S. Dielectric Dilemma. arXiv 2019, arXiv:1901.10805.

- Barsoukov, E.; Macdonald, J.R. Impedance Spectroscopy: Theory, Experiment, and Applications, 2nd ed.; Wiley-Interscience: Hoboken, NJ, USA, 2005; p. 616.

- Barthel, J.; Krienke, H.; Kunz, W. Physical Chemistry of Electrolyte Solutions: Modern Aspects; Springer: New York, NY, USA, 1998.

- Jeans, J.H. The Mathematical Theory of Electricity and Magnetism; Cambridge University Press: New York, NY, USA, 1908.

- Smythe, W.R. Static and Dynamic Electricity; McGraw-Hill: New York, NY, USA, 1950; p. 616.

- Purcell, E.M.; Morin, D.J. Electricity and Magnetism; Cambridge University Press: New York, NY, USA, 2013.

- Griffiths, D.J. Introduction to Electrodynamics, 3rd ed.; Cambridge University Press: New York, NY, USA, 2017.

- Buchwald, J.Z. From Maxwell to Microphysics. In Aspects of Electromagnetic Theory in the Last Quarter of the Nineteenth Century; University of Chicago: Chicago, IL, USA, 1985.

- Simpson, T.K. Maxwell on the Electromagnetic Field: A Guided Study; Rutgers University Press: Chicago, IL, USA, 1998; p. 441.

- Arthur, J.W. The fundamentals of electromagnetic theory revisited. IEEE Antennas Propag. Mag. 2008, 50, 19–65.

- Karle, C.A.; Kiehn, J. An ion channel ‘addicted’ to ether, alcohol and cocaine: The HERG potassium channel. Cardiovasc. Res. 2002, 53, 6–8.

- El Harchi, A.; Brincourt, O. Pharmacological activation of the hERG K+ channel for the management of the long QT syndrome: A review. J. Arrhythmia 2022, 38, 554–569.

- Zhang, X.; Mao, J.; Wei, M.; Qi, Y.; Zhang, J.Z. HergSPred: Accurate Classification of hERG Blockers/Nonblockers with Machine-Learning Models. J. Chem. Inf. Model. 2022, 62, 1830–1839.

- Heaviside, O. Electromagnetic Theory: By Oliver Heaviside; “The Electrician” Printing and Publishing Company: London, UK, 1893.

- Eisenberg, R.S. Maxwell Equations for Material Systems. Preprints 2020.

- Eisenberg, R.S. Updating Maxwell with Electrons, Charge, and More Realistic Polarization. arXiv 2019, arXiv:1904.09695.

- Eisenberg, B. Maxwell Matters. arXiv 2016, arXiv:1607.06691.

- Eisenberg, R.S. Mass Action and Conservation of Current. Hung. J. Ind. Chem. 2016, 44, 1–28.

- Eisenberg, B. Conservation of Current and Conservation of Charge. arXiv 2016, arXiv:1609.09175.

- Nielsen, C.P.; Bruus, H. Concentration polarization, surface currents, and bulk advection in a microchannel. Phys. Rev. E 2014, 90, 043020.

- Tanaka, Y. 6-Concentration Polarization. In Ion Exchange Membranes, 2nd ed.; Elsevier: Amsterdam, The Netherlands, 2015; pp. 101–121.

- Rubinstein, I.; Zaltzman, B. Convective diffusive mixing in concentration polarization: From Taylor dispersion to surface convection. J. Fluid Mech. 2013, 728, 239–278.

- Abu-Rjal, R.; Chinaryan, V.; Bazant, M.Z.; Rubinstein, I.; Zaltzman, B. Effect of concentration polarization on permselectivity. Phys. Rev. E 2014, 89, 012302.

- Hodgkin, A.L.; Huxley, A.F.; Katz, B. Measurement of current-voltage relations in the membrane of the giant axon of Loligo. J. Physiol. 1952, 116, 424–448.

- Hunt, B.J. The Maxwellians; Cornell University Press: Ithaca, NY, USA, 2005.

- Eisenberg, B. Ion Channels as Devices. J. Comput. Electron. 2003, 2, 245–249.

- Eisenberg, B. Living Devices: The Physiological Point of View. arXiv 2012, arXiv:1206.6490.

- Eisenberg, B. Asking biological questions of physical systems: The device approach to emergent properties. J. Mol. Liq. 2018, 270, 212–217.

- Bird, R.B.; Armstrong, R.C.; Hassager, O. Dynamics of Polymeric Fluids, Fluid Mechanics; Wiley: New York, NY, USA, 1977; Volume 1, p. 672.

- Chen, G.-Q.; Li, T.-T.; Liu, C. Nonlinear Conservation Laws, Fluid Systems and Related Topics; World Scientific: Singapore, 2009; p. 400.

- Doi, M.; Edwards, S.F. The Theory of Polymer Dynamics; Oxford University Press: New York, NY, USA, 1988.

- Gennes, P.-G.d.; Prost, J. The Physics of Liquid Crystals; Oxford University Press: New York, NY, USA, 1993; p. 616.

- Hou, T.Y.; Liu, C.; Liu, J.-G. Multi-Scale Phenomena in Complex Fluids: Modeling, Analysis and Numerical Simulations; World Scientific Publishing Company: Singapore, 2009.

- Larson, R.G. The Structure and Rheology of Complex Fluids; Oxford: New York, NY, USA, 1995; p. 688.

- Liu, C. An Introduction of Elastic Complex Fluids: An Energetic Variational Approach; World Scientific: Singapore, 2009.

- Wu, H.; Lin, T.-C.; Liu, C. On transport of ionic solutions: From kinetic laws to continuum descriptions. arXiv 2013, arXiv:1306.3053v2.

- Ryham, R.J. An Energetic Variational Approach to Mathematical Modeling of Charged Fluids, Charge Phases, Simulation and Well Posedness. Ph.D. Thesis, The Pennsylvania State University, State College, PA, USA, 2006.

- Wang, Y.; Liu, C.; Eisenberg, B. On variational principles for polarization in electromechanical systems. arXiv 2021, arXiv:2108.11512.

- Mason, E.; McDaniel, E. Transport Properties of Ions in Gases; John Wiley and Sons: Hoboken, NJ, USA, 1988; p. 560.

- Boyd, T.J.M.; Sanderson, J.J. The Physics of Plasmas; Cambridge University Press: New York, NY, USA, 2003; p. 544.

- Ichimura, S. Statistical Plasma Physics. In Condensed Plasmas; Addison-Wesley: New York, NY, USA, 1994; Volume 2, p. 289.

- Kulsrud, R.M. Plasma Physics for Astrophysics; Princeton: Princeton, NJ, USA, 2005; p. 468.

- Shockley, W. Electrons and Holes in Semiconductors to Applications in Transistor Electronics; van Nostrand: New York, NY, USA, 1950; p. 558.

- Blotekjaer, K. Transport equations for electrons in two-valley semiconductors. Electron Devices IEEE Trans. 1970, 17, 38–47.

- Selberherr, S. Analysis and Simulation of Semiconductor Devices; Springer: New York, NY, USA, 1984; pp. 1–293.

- Ferry, D.K. Semiconductor Transport; Taylor and Francis: New York, NY, USA, 2000; p. 384.

- Vasileska, D.; Goodnick, S.M.; Klimeck, G. Computational Electronics: Semiclassical and Quantum Device Modeling and Simulation; CRC Press: New York, NY, USA, 2010; p. 764.

- Morse, P.M.C.; Feshbach, H. Methods of Theoretical Physics; McGraw-Hill: New York, NY, USA, 1953.

- Courant, R.; Hilbert, D. Methods of Mathematical Physics Vol. 1; Interscience Publishers: New York, NY, USA, 1953.

- Kevorkian, J.; Cole, J.D. Multiple Scale and Singular Perturbation Methods; Springer: New York, NY, USA, 1996; pp. 1–632.

- He, Y.; Gillespie, D.; Boda, D.; Vlassiouk, I.; Eisenberg, R.S.; Siwy, Z.S. Tuning Transport Properties of Nanofluidic Devices with Local Charge Inversion. J. Am. Chem. Soc. 2009, 131, 5194–5202.

- Hyon, Y.; Du, Q.; Liu, C. On Some Probability Density Function Based Moment Closure Approximations of Micro-Macro Models for Viscoelastic Polymeric Fluids. J. Comput. Theor. Nanosci. 2010, 7, 756–765.

- Hu, X.; Lin, F.; Liu, C. Equations for viscoelastic fluids. In Handbook of Mathematical Analysis in Mechanics of Viscous Fluids; Giga, Y., Novotny, A., Eds.; Springer: New York, NY, USA, 2018.

- Hyon, Y.; Carrillo, J.A.; Du, Q.; Liu, C. A Maximum Entropy Principle Based Closure Method for Macro-Micro Models of Polymeric Materials. Kinet. Relat. Model. 2008, 1, 171–184.

- Liu, C.; Liu, H. Boundary Conditions for the Microscopic FENE Models. SIAM J. Appl. Math. 2008, 68, 1304–1315. [Google Scholar] [CrossRef]

- Du, Q.; Hyon, Y.; Liu, C. An Enhanced Macroscopic Closure Approximation to the Micro-macro FENE Models for Polymeric Materials. J. Multiscale Model. Simul. 2008, 2, 978–1002. [Google Scholar]

- Lin, F.-H.; Liu, C.; Zhang, P. On a Micro-Macro Model for Polymeric Fluids near Equilibrium. Commun. Pure Appl. Math. 2007, 60, 838–866. [Google Scholar] [CrossRef]

- Yu, P.; Du, Q.; Liu, C. From Micro to Macro Dynamics via a New Closure Approximation to the FENE Model of Polymeric Fluids. Multiscale Model. Simul. 2005, 3, 895–917. [Google Scholar] [CrossRef]

- De Vries, H. The Simplest, and the Full Derivation of Mgnetism as a Relativistic Side Effect of Electrostatics. 2008. Available online: http://www.flooved.com/reader/3196 (accessed on 2 October 2022).

- Einstein, A. On the electrodynamics of moving bodies. Ann. Phys. 1905, 17, 50.

- Karlin, S.; Taylor, H.M. A First Course in Stochastic Processes, 2nd ed.; Academic Press: New York, NY, USA, 1975; p. 557.

- Schuss, Z. Theory and Applications of Stochastic Processes: An Analytical Approach; Springer: New York, NY, USA, 2009; p. 470.

- Muldowney, P. A Modern Theory of Random Variation; Wiley: Hoboken, NJ, USA, 2012; p. 527.

- Eisenberg, R.S. Electrodynamics Correlates Knock-on and Knock-off: Current is Spatially Uniform in Ion Channels. arXiv 2020, arXiv:2002.09012.

- Bork, A.M. Maxwell and the Electromagnetic Wave Equation. Am. J. Phys. 1967, 35, 844–849.

- Blumenfeld, R.; Amitai, S.; Jordan, J.F.; Hihinashvili, R. Failure of the Volume Function in Granular Statistical Mechanics and an Alternative Formulation. Phys. Rev. Lett. 2016, 116, 148001.

- Bideau, D.; Hansen, A. Disorder and Granular Media; Elsevier Science: Amsterdam, The Netherlands, 1993.

- Amitai, S. Statistical Mechanics, Entropy and Macroscopic Properties of Granular and Porous Materials. Ph.D. Thesis, Imperial College, London, UK, 2017.

- Hinrichsen, H.; Wolf, D.E. The Physics of Granular Media; Wiley: Hoboken, NJ, USA, 2006.

- Ristow, G.H. Pattern Formation in Granular Materials; Springer Science & Business Media: New York, NY, USA, 2000; Volume 164.

- Eisenberg, B. Life’s Solutions: A Mathematical Challenge. arXiv 2012, arXiv:1207.4737.

- Abbott, E.A. Flatland: A Romance of Many Dimensions; Roberts Brothers; John Wilson Son University Press: Boston, MA, USA, 1885.

- Abbott, E.A.; Lindgren, W.F.; Banchoff, T.F. Flatland: An Edition with Notes and Commentary; Cambridge University Press: New York, NY, USA, 2010.

- To, K.; Lai, P.-Y.; Pak, H. Jamming of granular flow in a two-dimensional hopper. Phys. Rev. Lett. 2001, 86, 71.

- De Gennes, P.-G. Granular matter: A tentative view. Rev. Mod. Phys. 1999, 71, S374.

- Edwards, S.F.; Grinev, D.V. Granular materials: Towards the statistical mechanics of jammed configurations. Adv. Phys. 2002, 51, 1669–1684.

- Jimenez-Morales, D.; Liang, J.; Eisenberg, B. Ionizable side chains at catalytic active sites of enzymes. Eur. Biophys. J. 2012, 41, 449–460.

- Eisenberg, B. Crowded Charges in Ion Channels. In Advances in Chemical Physics; Rice, S.A., Ed.; John Wiley & Sons, Inc.: New York, NY, USA, 2011; pp. 77–223. Available online: http://arxiv.org/abs/1009.1786v1001 (accessed on 2 October 2022).

- Eisenberg, R. Meeting Doug Henderson. J. Mol. Liq. 2022, 361, 119574.

- Liu, J.L.; Eisenberg, B. Molecular Mean-Field Theory of Ionic Solutions: A Poisson-Nernst-Planck-Bikerman Model. Entropy 2020, 22, 550.

- Eisenberg, R. Structural Analysis of Fluid Flow in Complex Biological Systems. Preprints 2022, 2022050365.

- Bezanilla, F. The voltage sensor in voltage-dependent ion channels. Physiol. Rev. 2000, 80, 555–592.

- Eisenberg, R.; Catacuzzeno, L.; Franciolini, F. Conformations and Currents Make the Nerve Signal. ScienceOpen Prepr. 2022.

- Catacuzzeno, L.; Franciolini, F. The 70-year search for the voltage sensing mechanism of ion channels. J. Physiol. 2022, 600, 3227–3247.

- Catacuzzeno, L.; Franciolini, F.; Bezanilla, F.; Eisenberg, R.S. Gating current noise produced by Brownian models of a voltage sensor. Biophys. J. 2021, 120, 3983–4001.

- Catacuzzeno, L.; Sforna, L.; Franciolini, F.; Eisenberg, R.S. Multiscale modeling shows that dielectric differences make NaV channels faster than KV channels. J. Gen. Physiol. 2021, 153, e202012706.

- Bezanilla, F. Gating currents. J. Gen. Physiol. 2018, 150, 911–932.

- Catacuzzeno, L.; Franciolini, F. Simulation of gating currents of the Shaker K channel using a Brownian model of the voltage sensor. arXiv 2018, arXiv:1809.05464.

- Horng, T.-L.; Eisenberg, R.S.; Liu, C.; Bezanilla, F. Continuum Gating Current Models Computed with Consistent Interactions. Biophys. J. 2019, 116, 270–282.

- Zhu, Y.; Xu, S.; Eisenberg, R.S.; Huang, H. A Bidomain Model for Lens Microcirculation. Biophys. J. 2019, 116, 1171–1184.

- Zhu, Y.; Xu, S.; Eisenberg, R.S.; Huang, H. Optic nerve microcirculation: Fluid flow and electrodiffusion. Phys. Fluids 2021, 33, 041906.

- Zhu, Y.; Xu, S.; Eisenberg, R.S.; Huang, H. A tridomain model for potassium clearance in optic nerve of Necturus. Biophys. J. 2021, 120, 3008–3027.

- Xu, S.; Eisenberg, R.; Song, Z.; Huang, H. Mathematical Model for Chemical Reactions in Electrolyte Applied to Cytochrome c Oxidase: An Electro-osmotic Approach. arXiv 2022, arXiv:2207.02215.

- Aristotle. Parts of Animals; Movement of Animals; Progression of Animals; Harvard University Press: Cambridge, MA, USA, 1961.

- Boron, W.; Boulpaep, E. Medical Physiology; Saunders: New York, NY, USA, 2008; p. 1352.

- Prosser, C.L.; Curtis, B.A.; Meisami, E. A History of Nerve, Muscle and Synapse Physiology; Stipes Publishing L.L.C.: Champaign, IL, USA, 2009; p. 572.

- Sherwood, L.; Klandorf, H.; Yancey, P. Animal Physiology: From Genes to Organisms; Cengage Learning: Boston, MA, USA, 2012.

- Silverthorn, D.U.; Johnson, B.R.; Ober, W.C.; Ober, C.E.; Impagliazzo, A.; Silverthorn, A.C. Human Physiology: An Integrated Approach; Pearson Education, Incorporated: Upper Saddle River, NJ, USA, 2019.

- Hodgkin, A.L. Chance and Design; Cambridge University Press: New York, NY, USA, 1992; p. 401.

- Eisenberg, B. Multiple Scales in the Simulation of Ion Channels and Proteins. J. Phys. Chem. C 2010, 114, 20719–20733.

- Eisenberg, B. Ion channels allow atomic control of macroscopic transport. Phys. Status Solidi C 2008, 5, 708–713.

- Eisenberg, B. Engineering channels: Atomic biology. Proc. Natl. Acad. Sci. USA 2008, 105, 6211–6212.

- Eisenberg, R.S. Look at biological systems through an engineer’s eyes. Nature 2007, 447, 376.

- Eisenberg, B. Living Transistors: A Physicist’s View of Ion Channels (version 2). arXiv 2005, arXiv:q-bio/0506016v2.

- Eisenberg, R.S. From Structure to Function in Open Ionic Channels. J. Membr. Biol. 1999, 171, 1–24.

- Eisenberg, B. Ionic Channels in Biological Membranes: Natural Nanotubes described by the Drift-Diffusion Equations. In Proceedings of the VLSI Design: Special Issue on Computational Electronics. Papers Presented at the Fifth International Workshop on Computational Electronics (IWCE-5), Notre Dame, IN, USA, 28–30 May 1997; Volume 8, pp. 75–78.

- Eisenberg, R.S. Atomic Biology, Electrostatics and Ionic Channels. In New Developments and Theoretical Studies of Proteins; Elber, R., Ed.; World Scientific: Philadelphia, PA, USA, 1996; Volume 7, pp. 269–357.

- Eisenberg, R. From Structure to Permeation in Open Ionic Channels. Biophys. J. 1993, 64, A22.

- Katchalsky, A.; Curran, P.F. Nonequilibrium Thermodynamics; Harvard: Cambridge, MA, USA, 1965; p. 248.

- Rayleigh, L. Previously John Strutt. In Theory of Sound, 2nd ed.; Dover Reprint 1976: New York, NY, USA, 1896.

- Rayleigh, L. Previously John Strutt: Some General Theorems Relating to Vibrations. Proc. Lond. Math. Soc. 1871, IV, 357–368.

- Rayleigh, L. Previously John Strutt: No title: About Dissipation Principle. Philos. Mag. 1892, 33, 209.

- Onsager, L. Reciprocal Relations in Irreversible Processes: II. Phys. Rev. 1931, 38, 2265–2279.

- Onsager, L. Reciprocal Relations in Irreversible Processes: I. Phys. Rev. 1931, 37, 405.

- Machlup, S.; Onsager, L. Fluctuations and Irreversible Process: II. Systems with Kinetic Energy. Phys. Rev. 1953, 91, 1512.

- Onsager, L.; Machlup, S. Fluctuations and irreversible processes. Phys. Rev. 1953, 91, 1505–1512.

- Finlayson, B.A.; Scriven, L.E. On the search for variational principles. Int. J. Heat Mass Transf. 1967, 10, 799–821.

- Biot, M.A. Variational Principles in Heat Transfer: A Unified Lagrangian Analysis of Dissipative Phenomena; Oxford University Press: New York, NY, USA, 1970.

- Finlayson, B.A. The Method of Weighted Residuals and Variational Principles: With Application in Fluid Mechanics, Heat and Mass Transfer; Academic Press: New York, NY, USA, 1972; p. 412.

- Liu, C.; Walkington, N.J. An Eulerian description of fluids containing visco-hyperelastic particles. Arch. Ration. Mech. Anal. 2001, 159, 229–252.

- Sciubba, E. Flow Exergy as a Lagrangian for the Navier-Stokes Equations for Incompressible Flow. Int. J. Thermodyn. 2004, 7, 115–122.

- Sciubba, E. Do the Navier-Stokes Equations Admit of a Variational Formulation? In Variational and Extremum Principles in Macroscopic Systems; Sieniutycz, S., Farkas, H., Eds.; Elsevier: Amsterdam, The Netherlands, 2005; pp. 561–575.

- Sieniutycz, S.; Farkas, H. (Eds.) Variational and Extremum Principles in Macroscopic Systems; Elsevier: Amsterdam, The Netherlands, 2005; p. 770.

- Forster, J. Mathematical Modeling of Complex Fluids. Master’s Thesis, University of Wurzburg, Wurzburg, Germany, 2013.

- Wang, Y.; Liu, C. Some Recent Advances in Energetic Variational Approaches. Entropy 2022, 24, 721.

- Ryham, R.; Liu, C.; Wang, Z.Q. On electro-kinetic fluids: One dimensional configurations. Discret. Contin. Dyn. Syst.-Ser. B 2006, 6, 357–371.

- Ryham, R.; Liu, C.; Zikatanov, L. Mathematical models for the deformation of electrolyte droplets. Discret. Contin. Dyn. Syst.-Ser. B 2007, 8, 649–661.

- Xu, S.; Chen, M.; Majd, S.; Yue, X.; Liu, C. Modeling and simulating asymmetrical conductance changes in Gramicidin pores. Mol. Based Math. Biol. 2014, 2, 34–55.

- Wang, Y.; Liu, C.; Liu, P.; Eisenberg, B. Field theory of reaction-diffusion: Law of mass action with an energetic variational approach. Phys. Rev. E 2020, 102, 062147.

- Eisenberg, R.S. Computing the field in proteins and channels. J. Membr. Biol. 1996, 150, 1–25.

- Eisenberg, B. Shouldn’t we make biochemistry an exact science? ASBMB Today 2014, 13, 36–38.

- Hodgkin, A.L.; Rushton, W.A.H. The electrical constants of a crustacean nerve fiber. Proc. R. Soc. Ser. B 1946, 133, 444–479.

- Davis, L.D., Jr.; De No, R.L. Contribution to the Mathematical Theory of the electrotonus. Stud. Rockefeller Inst. Med. Res. 1947, 131, 442–496.

- Barcilon, V.; Cole, J.; Eisenberg, R.S. A singular perturbation analysis of induced electric fields in nerve cells. SIAM J. Appl. Math. 1971, 21, 339–354.

- Jack, J.J.B.; Noble, D.; Tsien, R.W. Electric Current Flow in Excitable Cells; Oxford, Clarendon Press: New York, NY, USA, 1975.

- Zhu, Y.; Xu, S.; Eisenberg, R.S.; Huang, H. Membranes in Optic Nerve Models. arXiv 2021, arXiv:2105.14411.

- Mori, Y.; Liu, C.; Eisenberg, R.S. A model of electrodiffusion and osmotic water flow and its energetic structure. Phys. D Nonlinear Phenom. 2011, 240, 1835–1852.

- Vera, J.H.; Wilczek-Vera, G. Classical Thermodynamics of Fluid Systems: Principles and Applications; CRC Press: Boca Raton, FL, USA, 2016.

- Kunz, W. Specific Ion Effects; World Scientific: Singapore, 2009; p. 348.

- Eisenberg, B. Ionic Interactions Are Everywhere. Physiology 2013, 28, 28–38.

- Eisenberg, B. Interacting ions in Biophysics: Real is not ideal. Biophys. J. 2013, 104, 1849–1866.

- Warshel, A. Energetics of enzyme catalysis. Proc. Natl. Acad. Sci. USA 1978, 75, 5250–5254.

- Warshel, A. Electrostatic origin of the catalytic power of enzymes and the role of preorganized active sites. J. Biol. Chem. 1998, 273, 27035–27038.

- Warshel, A.; Sharma, P.K.; Kato, M.; Xiang, Y.; Liu, H.; Olsson, M.H.M. Electrostatic Basis for Enzyme Catalysis. Chem. Rev. 2006, 106, 3210–3235.

- Warshel, A. Multiscale modeling of biological functions: From enzymes to molecular machines (nobel lecture). Angew. Chem. Int. Ed. Engl. 2014, 53, 10020–10031.

- Suydam, I.T.; Snow, C.D.; Pande, V.S.; Boxer, S.G. Electric fields at the active site of an enzyme: Direct comparison of experiment with theory. Science 2006, 313, 200–204.

- Fried, S.D.; Bagchi, S.; Boxer, S.G. Extreme electric fields power catalysis in the active site of ketosteroid isomerase. Science 2014, 346, 1510–1514.

- Wu, Y.; Boxer, S.G. A Critical Test of the Electrostatic Contribution to Catalysis with Noncanonical Amino Acids in Ketosteroid Isomerase. J. Am. Chem. Soc. 2016, 138, 11890–11895.

- Fried, S.D.; Boxer, S.G. Electric Fields and Enzyme Catalysis. Ann. Rev. Biochem. 2017, 86, 387–415.

- Wu, Y.; Fried, S.D.; Boxer, S.G. A Preorganized Electric Field Leads to Minimal Geometrical Reorientation in the Catalytic Reaction of Ketosteroid Isomerase. J. Am. Chem. Soc. 2020, 142, 9993–9998.

- Boda, D. Monte Carlo Simulation of Electrolyte Solutions in biology: In and out of equilibrium. Annu. Rev. Computational Chem. 2014, 10, 127–164.

- Gillespie, D. A review of steric interactions of ions: Why some theories succeed and others fail to account for ion size. Microfluid. Nanofluidics 2015, 18, 717–738.

- Eisenberg, B. Proteins, Channels, and Crowded Ions. Biophys. Chem. 2003, 100, 507–517.

- Boda, D.; Busath, D.; Eisenberg, B.; Henderson, D.; Nonner, W. Monte Carlo simulations of ion selectivity in a biological Na+ channel: Charge-space competition. Phys. Chem. Chem. Phys. 2002, 4, 5154–5160.

- Nonner, W.; Catacuzzeno, L.; Eisenberg, B. Binding and Selectivity in L-type Ca Channels: A Mean Spherical Approximation. Biophys. J. 2000, 79, 1976–1992.

- Miedema, H.; Vrouenraets, M.; Wierenga, J.; Gillespie, D.; Eisenberg, B.; Meijberg, W.; Nonner, W. Ca2+ selectivity of a chemically modified OmpF with reduced pore volume. Biophys. J. 2006, 91, 4392–4400.

- Boda, D.; Nonner, W.; Henderson, D.; Eisenberg, B.; Gillespie, D. Volume Exclusion in Calcium Selective Channels. Biophys. J. 2008, 94, 3486–3496.

- Boda, D.; Valisko, M.; Henderson, D.; Eisenberg, B.; Gillespie, D.; Nonner, W. Ionic selectivity in L-type calcium channels by electrostatics and hard-core repulsion. J. Gen. Physiol. 2009, 133, 497–509.

- Boda, D.; Nonner, W.; Valisko, M.; Henderson, D.; Eisenberg, B.; Gillespie, D. Steric selectivity in Na channels arising from protein polarization and mobile side chains. Biophys. J. 2007, 93, 1960–1980.

- Liu, J.-L.; Eisenberg, B. Numerical Methods for Poisson-Nernst-Planck-Fermi Model. Phys. Rev. E 2015, 92, 012711.

- Liu, J.-L.; Li, C.-L. A generalized Debye-Hückel theory of electrolyte solutions. AIP Adv. 2019, 9, 015214.

- De Souza, J.P.; Goodwin, Z.A.H.; McEldrew, M.; Kornyshev, A.A.; Bazant, M.Z. Interfacial Layering in the Electric Double Layer of Ionic Liquids. Phys. Rev. Lett. 2020, 125, 116001.

- De Souza, J.P.; Pivnic, K.; Bazant, M.Z.; Urbakh, M.; Kornyshev, A.A. Structural Forces in Ionic Liquids: The Role of Ionic Size Asymmetry. J. Phys. Chem. 2022, 126, 1242–1253.

- Groda, Y.; Dudka, M.; Oshanin, G.; Kornyshev, A.A.; Kondrat, S. Ionic liquids in conducting nanoslits: How important is the range of the screened electrostatic interactions? J. Phys. Condens. Matter 2022, 34, 26LT01.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Eisenberg, B. Setting Boundaries for Statistical Mechanics. Molecules 2022, 27, 8017. https://doi.org/10.3390/molecules27228017
